Abstract

The notion of statistical order derives from the disequilibrium concept introduced by López-Ruiz, Mancini, and Calbet nearly thirty years ago. In this effort, it is shown that the disequilibrium is intimately linked to the celebrated Rényi entropy. One also explores this link in connection with the van der Waals gas description.

1. Introduction

In this work, one wishes to link the notion of Rényi’s entropy to that of statistical order. We start by recalling some essential features of this kind of order.

1.1. Statistical Disorder

Statistically, maximum or total disorder is associated with a uniform probability distribution (UPD). Thus, the more dissimilar a given probability distribution (PD) is to the UPD, the more statistical order it represents. Recall also that the Shannon entropy S adequately grasps disorder and is maximized by the UPD, as extensively discussed in Refs. [1,2]. López-Ruiz, Mancini, and Calbet (LMC) [1,2] defined an appropriate tool, called the disequilibrium D, to measure statistical order: it is the distance in probability space between the extant PD and the UPD. Furthermore, they named the product of S and D the statistical complexity,

$$C=S\,D. \tag{1}$$

Since one is interested here in the notion of statistical order represented by D, from here on, we call this quantity the statistical order index (SOI).

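For an $N$-microstate system, each element of the UPD equals $1/N$. Thus, if the elements of the extant PD are called $p_i$, then D is given by [1]

$$D=\sum_{i=1}^{N}\left(p_i-\frac{1}{N}\right)^{2}, \tag{2}$$

and the Shannon entropy is

$$S=-\sum_{i=1}^{N}p_i\ln p_i, \tag{3}$$

where the $p_i$ are the corresponding probabilities, with the condition $\sum_{i=1}^{N}p_i=1$ [1].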
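A minimal Python sketch of Equations (1)–(3) for a discrete PD follows. The helper names are hypothetical and the three test distributions are arbitrary; the point is the familiar LMC property that C vanishes both for the UPD (where D = 0) and for a fully peaked PD (where S = 0).

```python
import math


def disequilibrium(p):
    """LMC disequilibrium D = sum_i (p_i - 1/N)^2, the squared Euclidean distance to the UPD."""
    n = len(p)
    return sum((pi - 1.0 / n) ** 2 for pi in p)


def shannon(p):
    """Shannon entropy S = -sum_i p_i ln p_i; states with p_i = 0 contribute nothing."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def lmc_complexity(p):
    """LMC statistical complexity C = S * D."""
    return shannon(p) * disequilibrium(p)


uniform = [0.25, 0.25, 0.25, 0.25]   # maximal disorder: D = 0
peaked = [1.0, 0.0, 0.0, 0.0]        # maximal order: S = 0
mixed = [0.7, 0.1, 0.1, 0.1]         # in between: S > 0 and D > 0

for name, p in (("uniform", uniform), ("peaked", peaked), ("mixed", mixed)):
    print(f"{name:8s} D={disequilibrium(p):.4f} S={shannon(p):.4f} C={lmc_complexity(p):.4f}")
```

Only the intermediate distribution yields a nonzero complexity, which is the behavior the product S·D was designed to capture.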

In this effort, one will focus attention, within a classical canonical-ensemble environment, on the LMC statistical order index concept and show that it is intimately linked to Rényi’s entropy. One focuses attention upon classical integrable systems and will associate them with an SOI. As a matter of fact, it will be seen that the SOI (given by D) can entirely replace the partition functions in thermal relationships.

1.2. Rényi’s Entropy

Rényi’s entropy plays a significant role in Information Theory [3]. For example, it generalizes Hartley’s and Shannon–Boltzmann’s entropies and the concept of dimension. It is relevant in ecology and statistics as a diversity index [4,5], as well as a measure of quantum entanglement and much more. It is thus an entropic functional of immense relevance (for more details, see the other articles of this Special Issue).

Rényi’s entropy of order q, with $q\ge 0$ and $q\neq 1$, is defined as

$$R_q=\frac{1}{1-q}\ln\sum_{i=1}^{N}p_i^{\,q}, \tag{4}$$

where the $p_i$ are the corresponding probabilities [6]. Appealing to L’Hôpital’s rule, one can show that, in the limit $q\to 1$, Rényi’s entropy approaches Shannon’s entropy S (given by Equation (3)), i.e.,

$$\lim_{q\to 1}R_q=S. \tag{5}$$

Moreover, the max-entropy or Hartley entropy is the special case of Rényi’s entropy for $q=0$, i.e.,

$$R_0=\ln N. \tag{6}$$
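Equations (4)–(6) can be checked numerically with a short Python sketch (natural logarithms; `renyi` is a hypothetical helper name and the PD is an arbitrary example). Zero-probability states are dropped before evaluating the sum, because in Python `0.0 ** 0` equals 1 and would otherwise inflate the Hartley count.

```python
import math


def renyi(p, q):
    """Rényi entropy R_q = ln(sum_i p_i^q) / (1 - q), natural logarithm.

    Zero-probability states are dropped first, so q = 0 returns the Hartley
    entropy ln(number of states with p_i > 0); q = 1 returns the Shannon limit.
    """
    support = [pi for pi in p if pi > 0]
    if q == 1:
        return -sum(pi * math.log(pi) for pi in support)
    return math.log(sum(pi ** q for pi in support)) / (1.0 - q)


p = [0.5, 0.25, 0.125, 0.125]
print(renyi(p, 0), math.log(len(p)))                        # Hartley entropy, Eq. (6)
print(renyi(p, 1 - 1e-6), renyi(p, 1), renyi(p, 1 + 1e-6))  # Shannon limit, Eq. (5)
values = [renyi(p, q) for q in (0, 0.5, 1, 2, 5)]
print(values)                                               # non-increasing in q
```

The monotonic decrease of $R_q$ with q is a general property of Rényi’s entropy; the printed list merely illustrates it for one PD.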

1.3. Review of Properties of the Statistical Order Index

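Consider a classical ideal system of N identical particles contained in a volume V, with Hamiltonian $H(\mathbf{r},\mathbf{p})$, where $(\mathbf{r},\mathbf{p})$ are the concomitant phase-space variables. Furthermore, the system is in equilibrium at temperature T. The ensuing distribution becomes [7]

$$\rho(\mathbf{r},\mathbf{p})=\frac{e^{-\beta H(\mathbf{r},\mathbf{p})}}{Z}, \tag{7}$$

with $\beta=1/(k_BT)$ and $k_B$ the Boltzmann constant.

The canonical partition function is

$$Z=\int e^{-\beta H(\mathbf{r},\mathbf{p})}\,d\Omega, \tag{8}$$

with $d\Omega=d^{3N}r\,d^{3N}p/(N!\,h^{3N})$ (h being Planck’s constant), while Helmholtz’ free energy reads [7]

$$F=-k_BT\ln Z. \tag{9}$$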

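López-Ruiz showed in Ref. [8] that the statistical order index can be cast as

$$D=\int\rho^{2}\,d\Omega, \tag{10}$$

a form valid only for continuous PDs. Now, change T to T/2 (that is, β to 2β) in the partition function (8), and insert the ensuing expression, together with Equation (7), into Equation (10). Thus,

$$D=\frac{Z(T/2)}{[Z(T)]^{2}}. \tag{11}$$

Therefore, writing both partition functions in terms of the free energy (9), one has, for the classical ideal gas,

$$D_0=\exp\left\{2\beta\left[F_0(T)-F_0(T/2)\right]\right\}, \tag{12}$$

an often used expression (see Ref. [10]). Note that one adds the subscript 0 (or superscript (0)) to indicate that the system under consideration is a classical ideal gas. This notation is important to avoid confusion in later sections.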
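To see the three routes to D at work (the integral $D=\int\rho^2\,d\Omega$, the ratio $Z(T/2)/[Z(T)]^2$, and the free-energy form $\exp\{2\beta[F(T)-F(T/2)]\}$), the sketch below integrates on a phase-space grid for a toy system that is not treated in the text: a single classical one-dimensional harmonic oscillator, in hypothetical units $m=\omega=k_B=1$ and $h=2\pi$. For this toy model $Z(\beta)=1/\beta$, so all three routes should give $D=\beta/2$.

```python
import numpy as np

H_PLANCK = 2.0 * np.pi   # hypothetical units with hbar = 1


def hamiltonian(x, p):
    """1D classical harmonic oscillator with m = omega = 1 (toy model)."""
    return 0.5 * p**2 + 0.5 * x**2


def make_grid(half_width=12.0, n=801):
    """Square phase-space grid and the area dx*dp of one cell."""
    axis = np.linspace(-half_width, half_width, n)
    x, p = np.meshgrid(axis, axis)
    step = axis[1] - axis[0]
    return x, p, step * step


def partition_function(beta, grid):
    """Z(beta) = integral of exp(-beta H) dOmega, dOmega = dx dp / h (Riemann sum)."""
    x, p, cell = grid
    return np.sum(np.exp(-beta * hamiltonian(x, p))) * cell / H_PLANCK


def free_energy(beta, grid):
    """F = -k_B T ln Z = -(1/beta) ln Z, with k_B = 1."""
    return -np.log(partition_function(beta, grid)) / beta


beta = 0.7
grid = make_grid()
z = partition_function(beta, grid)
rho = np.exp(-beta * hamiltonian(grid[0], grid[1])) / z

d_direct = np.sum(rho**2) * grid[2] / H_PLANCK                  # D = integral of rho^2 dOmega
d_ratio = partition_function(2.0 * beta, grid) / z**2            # D = Z(T/2) / Z(T)^2
d_free = np.exp(2.0 * beta * (free_energy(beta, grid) - free_energy(2.0 * beta, grid)))
print(d_direct, d_ratio, d_free, beta / 2.0)                     # all approximately 0.35
print(-np.log(d_direct))                                         # continuous order-2 Rényi entropy
```

The grid must be wide enough for $e^{-\beta H}$ to be negligible at its edges; with that proviso, any other Hamiltonian can be substituted for `hamiltonian` to repeat the check.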

2. Statistical Order and Thermodynamic Relations

Recall that D expresses statistical order, an essential concept. In Ref. [9], it was shown for the classical ideal gas in a canonical ensemble that the basic classical thermodynamic relations (TR) can be expressed in statistical-order terms via the statistical order index, which we now call $D_0$. The relevant TR, in addition to Helmholtz’ free energy $F_0$, read [7]

$$U_0=-\frac{\partial\ln Z_0}{\partial\beta},\qquad \mu_0=\left(\frac{\partial F_0}{\partial N}\right)_{V,T},\qquad P_0=-\left(\frac{\partial F_0}{\partial V}\right)_{N,T},\qquad S_0=-\left(\frac{\partial F_0}{\partial T}\right)_{N,V}.$$

One refers above, respectively, to the mean energy $U_0$, the chemical potential $\mu_0$, the pressure $P_0$, and the entropy $S_0$, which in turn depend on the number of particles N, the volume V, and the temperature T. As stated in Ref. [9], it is possible to recast these TR in terms of the statistical order index $D_0$.

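Differentiating the logarithm of Equation (11) with respect to β, one finds

$$\frac{\partial\ln D}{\partial\beta}=2\left[U(T)-U(T/2)\right]. \tag{19}$$

The equipartition theorem for the energy states that

$$U=\frac{f}{2}\,k_BT, \tag{20}$$

with f the number of degrees of freedom [11,12]. Thus, from (20), one has $U(T/2)=U(T)/2$. Inserting Equation (20) into Equation (19), one finds

$$U_0=\frac{\partial\ln D_0}{\partial\beta}. \tag{21}$$

One emphasizes that (19) is of a more general character than (21), because the latter holds only for systems that satisfy the equipartition of energy. Now, differentiating the logarithm of (11) with respect to V, one sees that

$$\frac{\partial\ln D}{\partial V}=2\beta\left[P(T/2)-P(T)\right]. \tag{22}$$

Given that U is linked to T via Equation (20), and that for the ideal gas $P_0V=Nk_BT$, one also has $P_0(T/2)=P_0(T)/2$.

Then, assuming once more equipartition, one finds

$$P_0=-\frac{1}{\beta}\,\frac{\partial\ln D_0}{\partial V}. \tag{23}$$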

The pair of relations (21) and (23) constitutes a basic theoretical set; they are called reciprocity relations. According to Jaynes, they yield a complete description of the thermodynamic properties (here, in statistical-order language) of a system described via Gibbs’ canonical ensemble [13]. One sees, then, that the canonical-ensemble description of the ideal gas can be entirely recast in terms of temperature and statistical order, a significant result indeed.
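The reciprocity relations (21) and (23) can be checked by finite differences for the classical ideal gas, using $\ln D_0=\ln Z_0(2\beta)-2\ln Z_0(\beta)$ with $Z_0=V^N/(N!\,\lambda^{3N})$ and $\lambda=h\sqrt{\beta/(2\pi m)}$. This is a sketch in hypothetical units $k_B=h=m=1$; the values of N, V, and β are arbitrary.

```python
import math


def ln_z0(beta, V, N, m=1.0, h=1.0):
    """ln Z_0 for the classical ideal gas, Z_0 = V^N / (N! lambda^(3N)), k_B = 1."""
    lam = h * math.sqrt(beta / (2.0 * math.pi * m))   # thermal wavelength
    return N * math.log(V) - math.lgamma(N + 1) - 3 * N * math.log(lam)


def ln_d0(beta, V, N):
    """ln D_0 = ln Z_0(2 beta) - 2 ln Z_0(beta), i.e. D_0 = Z_0(T/2) / Z_0(T)^2."""
    return ln_z0(2.0 * beta, V, N) - 2.0 * ln_z0(beta, V, N)


def central_diff(f, x, rel_step=1e-5):
    """Second-order central finite difference of f at x."""
    step = rel_step * x
    return (f(x + step) - f(x - step)) / (2.0 * step)


N, V, beta = 100, 50.0, 0.8
U_from_D = central_diff(lambda b: ln_d0(b, V, N), beta)          # U_0 = d ln D_0 / d beta
P_from_D = -central_diff(lambda v: ln_d0(beta, v, N), V) / beta  # P_0 = -(1/beta) d ln D_0 / dV
print(U_from_D, 1.5 * N / beta)   # equipartition value, U_0 = (3N/2) k_B T
print(P_from_D, N / (beta * V))   # ideal-gas law, P_0 = N k_B T / V
```

Note the sign pattern: D plays the role that 1/Z plays in the usual relations $U=-\partial\ln Z/\partial\beta$ and $\beta P=\partial\ln Z/\partial V$.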

In addition, one recalls that Baez pointed out that, while Shannon’s entropy has a strong thermodynamic flavor, Rényi’s entropy has not quite been integrated into the thermal discourse [3]. Here, one wishes to find a natural role for Rényi’s entropy in physics via the notion of statistical order as represented by the statistical order index D. This role is related to the free energy, using a parameter $q=T_0/T$ (for an arbitrary fixed temperature $T_0$), defined as a ratio of temperatures [9]. It was argued in Ref. [3] that, physically, $T_0$ is the temperature at which the system automatically has zero free energy.
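The free-energy route can be made concrete for a discrete Gibbs state. Following Baez’s construction [3], one chooses the energies so that the system is in equilibrium at $T_0$ with $Z(T_0)=1$, hence $F(T_0)=0$; with $T=T_0/q$, the Rényi entropy then equals $-[F(T)-F(T_0)]/(T-T_0)$ in units $k_B=1$. The sketch below, with an arbitrary four-state PD and hypothetical helper names, compares this expression with the direct definition (4).

```python
import math


def renyi(p, q):
    """Rényi entropy of order q (q != 1), natural logarithm."""
    return math.log(sum(pi ** q for pi in p)) / (1.0 - q)


def free_energy(T, energies):
    """F(T) = -T ln sum_i exp(-E_i / T), in units k_B = 1."""
    return -T * math.log(sum(math.exp(-E / T) for E in energies))


p = [0.6, 0.25, 0.1, 0.05]                   # arbitrary Gibbs state at T0
T0 = 1.0
energies = [-T0 * math.log(pi) for pi in p]  # E_i chosen so that Z(T0) = 1, i.e. F(T0) = 0

results = []
for q in (0.5, 2.0, 3.0):
    T = T0 / q                               # Baez's parameter q = T0 / T
    from_free_energy = -(free_energy(T, energies) - free_energy(T0, energies)) / (T - T0)
    results.append((q, renyi(p, q), from_free_energy))
    print(q, renyi(p, q), from_free_energy)
```

The agreement does not actually require $F(T_0)=0$: writing $\sum_i p_i^q=Z(T)/Z(T_0)^q$ shows the identity holds for any energies, and the zero-free-energy choice is only a convenient normalization.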

Setting now $q=2$ and employing Equation (10), one finds

$$R_2=-\ln D,\qquad\text{i.e.,}\qquad D=e^{-R_2}, \tag{25}$$

which is the wished-for direct relation between Rényi’s entropy of order 2 and the statistical order index D. To summarize, Rényi’s entropy has thereby been connected with statistical order [9].
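Equation (25) rests on the continuous form (10). For a discrete PD with N states, expanding Equation (2) gives $\sum_i(p_i-1/N)^2=\sum_i p_i^2-1/N$, so the discrete disequilibrium and the order-2 Rényi entropy are related by $D=e^{-R_2}-1/N$ instead. A short check with a randomly generated PD (helper names hypothetical):

```python
import math
import random


def disequilibrium(p):
    """Discrete LMC disequilibrium, Eq. (2)."""
    n = len(p)
    return sum((pi - 1.0 / n) ** 2 for pi in p)


def renyi2(p):
    """Order-2 (collision) Rényi entropy R_2 = -ln sum_i p_i^2."""
    return -math.log(sum(pi * pi for pi in p))


random.seed(0)
weights = [random.random() for _ in range(10)]
total = sum(weights)
p = [w / total for w in weights]

lhs = disequilibrium(p)
rhs = math.exp(-renyi2(p)) - 1.0 / len(p)   # discrete analogue of D = exp(-R_2)
print(lhs, rhs)
```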

Indeed, a possible generalized LMC-like complexity family that involves Rényi’s entropy instead of Shannon’s is given by

$$C_q=R_q\,D, \tag{26}$$

as pointed out in Ref. [9]. One sees that $C_2$ can also be expressed solely in Rényi terms, $C_2=R_2\,e^{-R_2}$. To sum up: statistical order is represented by $D=e^{-R_2}$.

3. Real Gases Application

3.1. Notions about Real Gases

Consider now intermolecular interactions in a classical gas of N identical particles, confined to a volume V and in equilibrium at temperature T. The pertinent Hamiltonian is a sum over the N particles [7]. Denoting by $u_{ij}$ the interaction between particles i and j, one has

$$H=H_0+\sum_{i<j}u_{ij},\qquad H_0=\sum_{i=1}^{N}\frac{\mathbf{p}_i^{2}}{2m}, \tag{27}$$

where $H_0$ is the free Hamiltonian and m is the mass of each particle. Moreover, $u_{ij}=u(|\mathbf{r}_i-\mathbf{r}_j|)$ is the energy of interaction between the ith and jth particles, a function of the interparticle distance only. The second sum on the right-hand side of the Hamiltonian runs over all pairs of particles [7]. For such a system, the canonical partition function is the product of the ideal-gas partition function and a configuration integral [7],

$$Z=Z_0\,Q_N, \tag{28}$$

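where, as just stated, the canonical partition function of the ideal gas is

$$Z_0=\frac{V^{N}}{N!\,\lambda^{3N}}, \tag{29}$$

with $\lambda=h/\sqrt{2\pi m k_BT}$ the particles’ mean thermal wavelength and h Planck’s constant [7]. The quantity $Q_N$ is the well-known configuration integral, given by [7,14]

$$Q_N=\frac{1}{V^{N}}\int\prod_{i<j}e^{-\beta u_{ij}}\,d^{3}r_1\cdots d^{3}r_N. \tag{30}$$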
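The classical form of $Z_0$ in Equation (29) is trustworthy only when the number density n satisfies $n\lambda^3\ll 1$. The sketch below evaluates λ and $n\lambda^3$ for helium at 300 K and 1 atm; these inputs are illustrative choices, not values taken from this article.

```python
import math

H = 6.62607015e-34        # Planck constant, J s (exact SI value)
K_B = 1.380649e-23        # Boltzmann constant, J/K (exact SI value)
AMU = 1.66053906660e-27   # atomic mass unit, kg (CODATA 2018)


def thermal_wavelength(mass_kg, T):
    """Mean thermal wavelength lambda = h / sqrt(2 pi m k_B T), in metres."""
    return H / math.sqrt(2.0 * math.pi * mass_kg * K_B * T)


m_he = 4.002602 * AMU     # helium-4 atomic mass (illustrative input)
T, P = 300.0, 101325.0    # illustrative state: 300 K and 1 atm
lam = thermal_wavelength(m_he, T)
n = P / (K_B * T)         # number density of an ideal gas at (T, P)
print(lam, n * lam**3)    # about 5.0e-11 m and 3e-6
```

Since λ grows as $T^{-1/2}$, $n\lambda^3$ increases on cooling, which is why the classical treatment eventually breaks down at low temperatures.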

3.2. Rényi Entropy for a Real Gas

In this article, one focuses attention on the relation of Rényi’s entropy to statistical order via its connection with the free energy, as explained by Baez in Ref. [3]. For this purpose, one first defines Rényi’s entropy of order q for a classical system as

$$R_q=\frac{k_B}{1-q}\ln\int\rho^{\,q}\,d\Omega, \tag{31}$$

where $d\Omega$ is the phase-space differential and ρ the probability distribution of the system. With the aim of connecting with thermodynamics, one includes the Boltzmann constant in definition (31). Second, one considers the ρ associated with the Hamiltonian (27) of the vdW gas. Therefore, setting

$$\rho=\frac{e^{-\beta H}}{Z}, \tag{32}$$

one obtains,

Let us single out the following two terms

and

Then, replacing Equations (34) and (35) in Equation (33), one is immediately led to Rényi’s entropy, given by

Via Equation (28), one then rewrites Equation (36) as

where one has considered the canonical partition function of the ideal gas given by Equation (29). Note that we have changed T by . In addition, one has taken into account the transformation , with .

Using Stirling’s approximation ($\ln N!\approx N\ln N-N$) and conveniently rearranging terms in Equation (37), one arrives at

Recall now that the classical entropy of the ideal gas is given by the Sackur–Tetrode formula [14]

$$S_0=Nk_B\left[\ln\left(\frac{V}{N\lambda^{3}}\right)+\frac{5}{2}\right].$$
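The Sackur–Tetrode form follows from $S=k_B(\ln Z_0+\beta U_0)$ with $\beta U_0=3N/2$ once Stirling’s approximation is applied to $\ln N!$. The sketch below compares the exact expression (with $\ln N!$ from `math.lgamma`) against the Sackur–Tetrode formula for $10^6$ helium atoms at 300 K and 1 atm, inputs chosen purely for illustration.

```python
import math

H = 6.62607015e-34        # Planck constant, J s (exact SI value)
K_B = 1.380649e-23        # Boltzmann constant, J/K (exact SI value)
AMU = 1.66053906660e-27   # atomic mass unit, kg


def thermal_wavelength(mass_kg, T):
    """lambda = h / sqrt(2 pi m k_B T), in metres."""
    return H / math.sqrt(2.0 * math.pi * mass_kg * K_B * T)


def entropy_exact(N, V, lam):
    """S/k_B = ln Z_0 + beta U_0, with ln N! evaluated exactly via lgamma."""
    ln_z0 = N * math.log(V) - math.lgamma(N + 1) - 3 * N * math.log(lam)
    return ln_z0 + 1.5 * N


def sackur_tetrode(N, V, lam):
    """S/k_B = N [ln(V / (N lam^3)) + 5/2], i.e. Stirling's approximation applied."""
    return N * (math.log(V / (N * lam**3)) + 2.5)


T, P = 300.0, 101325.0                    # illustrative state of helium gas
lam = thermal_wavelength(4.002602 * AMU, T)
N = 10**6
V = N * K_B * T / P                       # ideal-gas volume holding N atoms
s_exact = entropy_exact(N, V, lam) / N
s_st = sackur_tetrode(N, V, lam) / N
print(s_exact, s_st)                      # both approximately 15.2 per particle
```

The per-particle gap, of order $\ln(2\pi N)/(2N)$, is the error of Stirling’s approximation. The value of about $15.2\,k_B$ per atom is close to the tabulated standard molar entropy of helium gas, roughly 126 J mol⁻¹ K⁻¹ ≈ 15.2 R.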

3.3. Dilute Gas

If the gas density is low enough, the configuration integral can be suitably approximated, as shown in Ref. [14]. One usually writes this approximation in terms of a suitable function of the interaction above [14].

where $B_2$ is the second virial coefficient, given by [14]

$$B_2=2\pi\int_0^{\infty}\left(1-e^{-\beta u(R)}\right)R^{2}\,dR. \tag{42}$$

In this case, Equation (40) becomes

3.4. The Van Der Waals Instance

One appeals now to the van der Waals assumptions [9]: $u(R)=\infty$ for $R<R_0$, and $u(R)<0$, weak compared with $k_BT$, for $R>R_0$, where $R_0$ denotes here the minimum possible separation between molecules [14]. It is then easy to compute the second virial coefficient by recourse to Equation (42); in this case, it becomes

$$B_2=b-\frac{a}{k_BT}, \tag{44}$$

where $b=2\pi R_0^{3}/3$ is related to the volume of a hard-sphere molecular gas, and

is the mean potential energy, which measures the intermolecular interaction strength. One also sees that

Therefore, the Rényi entropy of the van der Waals gas, given by Equation (43), becomes

where

is Shannon’s entropy for the van der Waals gas [15]. Notice that, in the limit $q\to 1$, the q-dependent term vanishes, so that $R_q$ reduces to this Shannon entropy, in agreement with Equation (5).
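The vdW form (44) is the leading high-temperature approximation to the virial integral (42). The sketch below assumes a hard core of radius $R_0$ plus an attractive tail $u(R)=-u_0(R_0/R)^6$, a common textbook choice that is not necessarily the potential behind the figures. For this tail, $b=2\pi R_0^3/3$ and $a=-2\pi\int_{R_0}^{\infty}u\,R^2\,dR=b\,u_0$. The code compares a numerical $B_2$ with $b-a/(k_BT)$ in hypothetical units $k_B=R_0=u_0=1$.

```python
import math


def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b] with an even number n of intervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * f(a + k * h)
    return total * h / 3.0


def b2_numeric(R0, u0, kT):
    """B_2 = 2 pi int (1 - exp(-u/kT)) R^2 dR for the hypothetical potential
    u = +inf (R < R0), u = -u0 (R0/R)^6 (R > R0). The tail uses x = R0/R."""
    eps = u0 / kT

    def tail(x):
        # (1 - exp(eps x^6)) R^2 dR  ->  R0^3 (1 - exp(eps x^6)) x^-4 dx
        return 0.0 if x == 0.0 else -math.expm1(eps * x**6) / x**4

    hard_core = 1.0 / 3.0                   # int_0^R0 R^2 dR in units of R0^3
    return 2.0 * math.pi * R0**3 * (hard_core + simpson(tail, 0.0, 1.0))


def b2_vdw(R0, u0, kT):
    """High-temperature (u0 << kT) form B_2 = b - a/kT for the same potential."""
    b = 2.0 * math.pi * R0**3 / 3.0
    a = b * u0                              # a = -2 pi int_R0^inf u R^2 dR
    return b - a / kT


R0, u0 = 1.0, 1.0
b = 2.0 * math.pi * R0**3 / 3.0
for kT in (100.0, 10.0, 1.0):
    num, approx = b2_numeric(R0, u0, kT), b2_vdw(R0, u0, kT)
    print(kT, num, approx, abs(num - approx) / b)
```

The relative discrepancy grows roughly as $(u_0/k_BT)^2$, so the vdW form is reliable only when the attraction is weak compared with $k_BT$.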

In addition, one calculates the statistical complexity according to definition (26) and using Equation (48). Thus, one has

which is independent of the temperature T. Note that as N grows, the system becomes less complex.

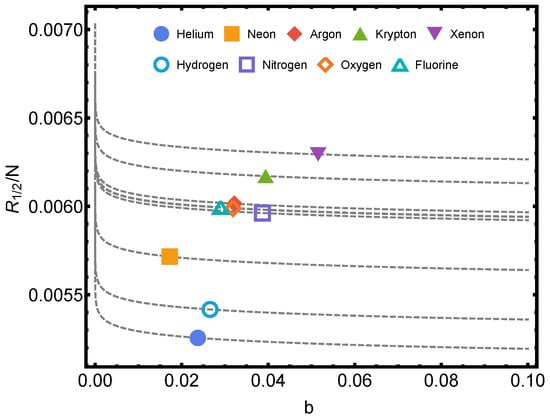

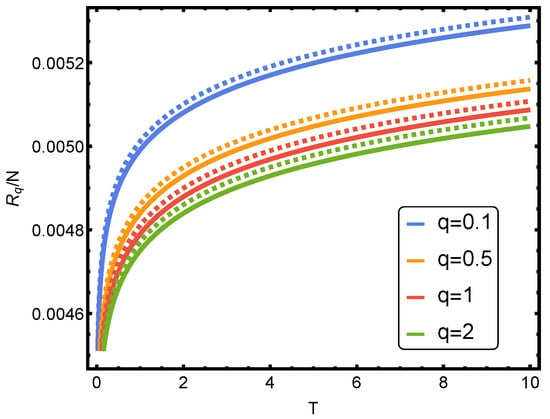

In Figure 1, Rényi’s entropy is plotted versus the parameter b of Equation (44) for several gases, including some noble gases. Here, one evaluates things at the van der Waals critical temperature, $T_c=8a/(27k_Bb)$, and critical volume per particle, $v_c=3b$ [12,15]. Rényi’s entropy is also plotted against the temperature T for several values of q in Figure 2.

Figure 1.

Rényi’s entropy per particle, evaluated at the typical van der Waals values $T_c$ and $v_c$, versus b. One considers the noble gases helium, neon, argon, krypton, and xenon, as well as hydrogen, nitrogen, oxygen, and fluorine. The numerical values of a and b are taken from the table in Ref. [16]. The lines are visual aids representing virtual trajectories as b varies.

Figure 2.

Rényi’s entropy per particle versus temperature T for several values of q. The solid curve corresponds to the vdW case and the dashed curve to the ideal case. The numerical value of b is that of helium, taken from the table in Ref. [16].
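Figure 1 uses the vdW critical temperature $T_c=8a/(27k_Bb)$ and critical volume per particle $v_c=3b$. A quick sketch, in hypothetical reduced units $a=b=k_B=1$, checks that the vdW equation of state per particle, $P=k_BT/(v-b)-a/v^2$, has a zero-slope inflection point there, with critical pressure $a/(27b^2)$.

```python
def vdw_pressure(v, T, a, b):
    """van der Waals equation of state per particle (k_B = 1): P = T/(v - b) - a/v^2."""
    return T / (v - b) - a / v**2


def vdw_critical(a, b):
    """Critical point of the vdW equation: T_c = 8a/(27b), v_c = 3b, P_c = a/(27b^2)."""
    return 8.0 * a / (27.0 * b), 3.0 * b, a / (27.0 * b**2)


a, b = 1.0, 1.0                # hypothetical reduced units
Tc, vc, Pc = vdw_critical(a, b)

h = 1e-3                       # finite-difference step in v
p_minus, p_0, p_plus = (vdw_pressure(v, Tc, a, b) for v in (vc - h, vc, vc + h))
slope = (p_plus - p_minus) / (2.0 * h)              # dP/dv at (T_c, v_c)
curvature = (p_plus - 2.0 * p_0 + p_minus) / h**2   # d^2P/dv^2 at (T_c, v_c)
print(Tc, vc, Pc, p_0, slope, curvature)            # slope and curvature approximately 0
```

For real gases, a and b (per particle) would be taken from a tabulation such as that of Ref. [16]; the reduced units here only test the algebra.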

4. Some Relations for the Statistical Order in the vdW Approximation

In this section, one applies some ideas developed in Section 2. To that end, one needs to consider the statistical order index D in the vdW instance.

In order to repeat the procedure developed in Section 2, one uses the general definition of D, which in this case is

$$D=\frac{Z(T/2)}{[Z(T)]^{2}}=\frac{Z_0(T/2)\,Q_N(T/2)}{\left[Z_0(T)\,Q_N(T)\right]^{2}}.$$

Taking first the logarithm of D and then differentiating with respect to β, one obtains

Considering Equation (41), one arrives at

Now, taking into account the virial coefficient given by Equation (46), one finally obtains

where the last equality is due to Equation (21).

The result (53) can also be obtained via the statistical order index derived in Ref. [15], which reads

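One must emphasize that the derivative of $\ln D$ for the vdW gas with respect to β does not yield the vdW energy: it reproduces the energy of the ideal gas. This is because the interaction contribution to the vdW energy, $-aN^2/V$, does not depend on T, and therefore cancels in the difference $U(T)-U(T/2)$. Note also that the vdW Hamiltonian is not quadratic, unlike that of the ideal gas.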
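A finite-difference sketch of this cancellation follows. It assumes the leading dilute-gas form $\ln Q_N\approx -N^2B_2/V$ with $B_2=b-a/(k_BT)$ (the paper’s own expression for the configuration integral may be arranged differently) and hypothetical units $k_B=h=m=1$. It compares $\partial\ln D/\partial\beta$, with $\ln D=\ln Z(2\beta)-2\ln Z(\beta)$, against $U_0=3N/(2\beta)$ and against $U_{\rm vdW}=-\partial\ln Z/\partial\beta$.

```python
import math


def ln_z_vdw(beta, V, N, a, b, m=1.0, h=1.0):
    """Dilute-gas sketch: ln Z = ln Z_0 + ln Q_N with ln Q_N ~ -N^2 B_2 / V, B_2 = b - a*beta."""
    lam = h * math.sqrt(beta / (2.0 * math.pi * m))
    ln_z0 = N * math.log(V) - math.lgamma(N + 1) - 3 * N * math.log(lam)
    return ln_z0 - N**2 * (b - a * beta) / V


def ln_d(beta, V, N, a, b):
    """ln D = ln Z(2 beta) - 2 ln Z(beta); its interaction part reduces to N^2 b / V."""
    return ln_z_vdw(2.0 * beta, V, N, a, b) - 2.0 * ln_z_vdw(beta, V, N, a, b)


def d_dbeta(f, beta, step=1e-6):
    """Central finite difference with respect to beta."""
    return (f(beta + step) - f(beta - step)) / (2.0 * step)


N, V, beta, a, b = 50, 1.0e4, 0.9, 2.0, 0.5
dlnD = d_dbeta(lambda x: ln_d(x, V, N, a, b), beta)
U_vdw = -d_dbeta(lambda x: ln_z_vdw(x, V, N, a, b), beta)   # U = -d ln Z / d beta
U_0 = 1.5 * N / beta
print(dlnD, U_0, U_vdw, U_0 - N**2 * a / V)
```

Within this approximation the attractive constant a drops out of $\ln D$ entirely, which is why $\partial\ln D/\partial\beta$ sees only the kinetic (ideal-gas) part of the energy.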

5. $R_q$-Related Statistical Complexity via Fisher’s Information Measure

The statistical complexity can be thought of as the product of a measure of order and a measure of disorder (an entropy). Accordingly, one now considers, for the sake of completeness, an alternative, Rényi-related definition of the statistical complexity (26) for the ideal-gas case, as follows

where $I$ is the Fisher information measure [17]. Like D, this quantity is a measure of order [17], so it can legitimately replace D in the definition. In phase space, it reads

where ρ is the probability distribution of the ideal gas and $R_q$ is Rényi’s entropy obtained in Equation (47). Performing the above integral, one can obtain the complexity analytically (this is the main advantage of this definition)

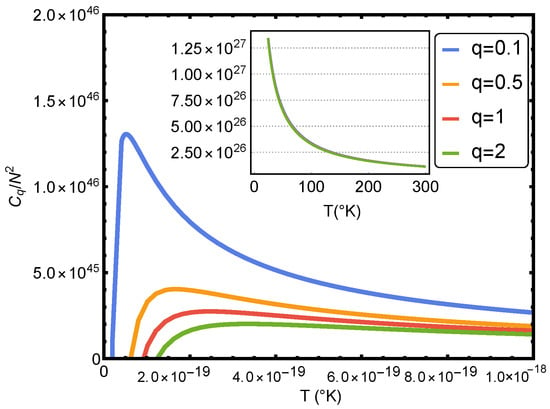

The shape of the complexity is shown in Figure 3. The complexity reaches its maximum at low temperatures and approaches zero as T tends to infinity. Beyond its maximum, it diminishes as T increases; it also grows when the particle number increases and when q decreases. Its maximum corresponds to temperatures for which the classical treatment is no longer valid; this last point is carefully investigated in Ref. [15].

Figure 3.

Statistical complexity versus the temperature T for several values of q (ideal case).

6. Conclusions

In this paper, one has connected Rényi’s entropy (RE) with the statistical order–disorder disjunction. Disorder is represented by several types of entropies, Rényi’s being one of them. Order is represented by Fisher’s information measure and also by high values of different types of distances in probability space from the incumbent probability distribution to the uniform one (UPD). The Euclidean distance is called the disequilibrium D. The Kullback–Leibler divergences involving the UPD are another possibility.

Equation (25) directly links D with Rényi’s entropy, which gives this special entropy a privileged role in the order–disorder disjunction. This equation states that $R_2$ and D express, at the same time, the same degree of order/disorder (O/D). One has chosen the van der Waals gas to illustrate some O/D instances in actual systems, namely several real gases.

Accordingly, one has shown in this review note that, in a classical phase space context, with continuous probability distributions, the LMC notion of statistical order index D has a suitable role in statistical thermodynamics by virtue of Equation (25), plus a host of other thermal relations that have been discussed above.

Indeed, for the classical ideal gas, the partition function does not need to appear at all in the new thermal relations, so that one may be tempted to suggest that it has been replaced by the statistical order index. All important thermodynamic relations can indeed be expressed in terms of D. One might argue that the logarithm of D exhibits properties analogous to those of Massieu potentials. Finally, D itself can be simply expressed in terms of a particular Rényi entropy, namely $R_2$. This is not exactly so for the vdW gas, because its Hamiltonian is not quadratic.

Interesting future work and open problems would be to extend the ideas exposed here to (1) other information quantifiers, for example, Tsallis entropy, (2) equations of state different from the van der Waals one, or (3) alternative statistical quantifiers.

Author Contributions

Investigation, F.P. and A.P.; project administration, F.P. and A.P.; writing—original draft, F.P. and A.P. All authors have read and agreed to the published version of the manuscript.

Funding

Research was partially supported by FONDECYT, grant 1181558 and by CONICET (Argentine Agency) Grant PIP0728.

Data Availability Statement

The data presented in this study are available in the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- López-Ruiz, R.; Mancini, H.L.; Calbet, X. A statistical measure of complexity. Phys. Lett. A 1995, 209, 321–326.

- López-Ruiz, R. A Statistical Measure of Complexity. In Concepts and Recent Advances in Generalized Information Measures and Statistics; Kowalski, A., Rossignoli, R., Curado, E.M.C., Eds.; Bentham Science Books: New York, NY, USA, 2013; pp. 147–168.

- Baez, J.C. Rényi Entropy and Free Energy. Entropy 2022, 24, 706.

- Mora, T.; Walczak, A.M. Rényi entropy, abundance distribution, and the equivalence of ensembles. Phys. Rev. E 2016, 93, 052418.

- Mayoral, M.M. Rényi’s entropy as an index of diversity in simple-stage cluster sampling. Inf. Sci. 1998, 105, 101–114.

- Rényi, A. On measures of entropy and information. In Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 20 June–30 July 1960; Neyman, J., Ed.; UC Press: Berkeley, CA, USA, 1961; Volume 1, pp. 547–561.

- Pathria, R.K. Statistical Mechanics, 2nd ed.; Butterworth-Heinemann: Oxford, UK, 1996.

- López-Ruiz, R. Complexity in some physical systems. Int. J. Bifurc. Chaos 2001, 11, 2669–2673.

- Pennini, F.; Plastino, A. Disequilibrium, thermodynamic relations, and Rényi’s entropy. Phys. Lett. A 2017, 381, 212–215.

- Sañudo, J.; López-Ruiz, R. Calculation of statistical entropic measures in a model of solids. Phys. Lett. A 2012, 376, 2288–2291.

- Lima, J.A.S.; Plastino, A.R. On the Classical Energy Equipartition Theorem. Braz. J. Phys. 2000, 30, 176–180.

- Tolman, R.C. The Principles of Statistical Mechanics; Oxford University Press: Oxford, UK, 2010; p. 95.

- Jaynes, E.T. Information Theory and Statistical Mechanics. Phys. Rev. 1957, 106, 620–630.

- Reif, F. Fundamentals of Statistical and Thermal Physics, 1st ed.; Waveland Press: Long Grove, IL, USA, 2009.

- Pennini, F.; Plastino, A. Peculiarities of the Van der Waals classical-quantum phase transition. Entropy 2022, 24, 182.

- Johnston, D.C. Advances in Thermodynamics of the van der Waals Fluid; Morgan and Claypool Publishers: San Rafael, CA, USA, 2014.

- Sañudo, J.; López-Ruiz, R. Statistical complexity and Fisher-Shannon information in the H-atom. Phys. Lett. A 2008, 372, 5283–5286.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).