Abstract

Caching is a promising approach to reducing the heavy traffic load and improving the latency experienced by users of the Internet of Things (IoT). In this paper, by exploiting edge cache resources and communication opportunities in device-to-device (D2D) networks and broadcast networks, we propose two novel coded caching schemes that greatly reduce the transmission latency for the centralized and decentralized caching settings, respectively. In addition to the multicast gain, both schemes obtain an additional cooperation gain offered by user cooperation and an additional parallel gain offered by parallel transmission between the server and the users. With a newly established lower bound on the transmission delay, we prove that the centralized coded caching scheme is order-optimal, i.e., it achieves the minimum transmission delay within a constant multiplicative gap. The decentralized coded caching scheme is also order-optimal if each user’s cache size is larger than a threshold that approaches zero as the total number of users tends to infinity. Moreover, theoretical analysis shows that, to reduce the transmission delay, the number of users sending signals simultaneously should be chosen according to the users’ cache size, and that always letting more users send information in parallel can cause a high transmission delay.

1. Introduction

With the rapid development of Internet of Things (IoT) technologies, IoT data traffic, such as live streaming and on-demand video streaming, has grown dramatically over the past few years. To reduce the traffic load and improve the user latency experience, the caching technique has been viewed as a promising approach that shifts the network traffic to low congestion periods. In the seminal paper [1], Maddah-Ali and Niesen proposed a coded caching scheme based on centralized file placement and coded multicast delivery that achieves a significantly larger global multicast gain compared to the conventional uncoded caching scheme.

The coded caching scheme has attracted wide interest. It was extended to a setup with decentralized file placement, where no coordination is required for the file placement [2]. For the cache-aided broadcast network, ref. [3] characterized the rate–memory tradeoff of the above caching system within a factor of 2.00884. For the setting with uncoded file placement, where each user stores uncoded content from the library, refs. [4,5] proved that Maddah-Ali and Niesen’s scheme is optimal. In [6], both the placement and delivery phases of coded caching are depicted using a placement delivery array (PDA), and an upper bound for all possible regular PDAs was established. In [7], the authors studied a cache-aided network with a heterogeneous setting where the user cache memories are unequal. More asymmetric network settings have been discussed, such as coded caching with heterogeneous user profiles [8], with distinct file sizes [9], with asymmetric cache sizes [10,11,12] and with distinct link qualities [13]. Settings with varying file popularities have been discussed in [14,15,16]. Coded caching that jointly considers various heterogeneous aspects was studied in [17]. Other works on coded caching include, e.g., the cache-aided noiseless multi-server network [18], cache-aided wireless/noisy broadcast networks [19,20,21,22], cache-aided relay networks [23,24,25], cache-aided interference management [26,27], coded caching with random demands [28], caching in combination networks [29], coded caching under secrecy constraints [30], coded caching with reduced subpacketization [31,32], the coded caching problem where each user requests multiple files [33], and a cache-aided broadcast network for correlated content [34].

A different line of work studies cache-aided networks without a server, e.g., the device-to-device (D2D) cache-aided network. In [35], the authors investigated coded caching for wireless D2D networks in which users are located in a fixed mesh topology. A D2D system with selfish users who do not participate in delivering the missing subfiles to all users was studied in [36]. Wang et al. applied the PDA to characterize cache-aided D2D wireless networks in [37]. In [38], the authors studied spatial D2D networks in which the user locations are modeled by a Poisson point process. For heterogeneous cache-aided D2D networks where users are equipped with cache memories of distinct sizes, ref. [39] minimized the delivery load by optimizing over the file partition during the placement phase and the size and structure of the D2D transmissions during the delivery phase. A highly dense wireless network with device mobility was investigated in [40].

In fact, combining the cache-aided broadcast network with the cache-aided D2D network can potentially reduce the transmission latency. This hybrid network is common in many practical distributed systems such as cloud networks [41], where a central cloud server broadcasts messages to multiple users through the cellular network while the users communicate with each other through a fiber local area network (LAN). A potential scenario is that users in a moderately dense area, such as a university, want to download files, such as movies, from a data library, such as a video service provider. Note that user demands are highly redundant, and files need not be stored only at the central server; they can also be partially cached by other users. A user can then obtain the desired content by communicating with both the central server and other users, so that communication and storage resources are used efficiently. Unfortunately, very little research has investigated the coded caching problem for this hybrid network. In this paper, we consider such a hybrid cache-aided network, where a server storing a library of files connects with users through a broadcast network, while the users can exchange information via a D2D network. Unlike the settings of [35,38], in which each user can only communicate with its neighboring users via spatial multiplexing, we consider the D2D network to be either an error-free shared link or a flexible routing network [18]. In particular, in the case of the shared link, all users exchange information via a single shared link. In the flexible routing network, a routing strategy adaptively partitions all users into multiple groups, in each of which one user sends data packets error-free to the remaining users in that group. Let α be the number of groups that send signals at the same time. The following fundamental questions then arise for this hybrid cache-aided network:

- How does α affect the system performance?

- What is the (approximately) optimal value of α to minimize the transmission latency?

- How can communication loads be allocated between the server and users to achieve the minimum transmission latency?

In this paper, we try to address these questions, and our main contributions are summarized as follows:

- We propose novel coded caching schemes for this hybrid network under centralized and decentralized data placement. Both schemes efficiently exploit communication opportunities in the D2D and broadcast networks, and appropriately allocate communication loads between the server and users. As a result, our schemes achieve much smaller transmission latency than both Maddah-Ali and Niesen’s schemes for a broadcast network [1,2] and the D2D coded caching scheme [35]. In addition to the multicast gain, we characterize a cooperation gain and a parallel gain achieved by our schemes, where the cooperation gain is obtained through cooperation among users in the D2D network, and the parallel gain is obtained through parallel transmission between the server and users.

- We prove that the centralized scheme is order-optimal, i.e., it achieves the optimal transmission delay within a constant multiplicative gap in all regimes. Moreover, the decentralized scheme is also order-optimal when the cache size M of each user is larger than a threshold that approaches zero as K → ∞.

- For the centralized data placement case, theoretical analysis shows that α should decrease as the user cache size increases. In particular, when each user’s cache size is sufficiently large, only one user should be allowed to send information, indicating that the D2D network can simply be a shared link connecting all users. For the decentralized data placement case, α should change dynamically according to the sizes of the subfiles created in the placement phase. In other words, always letting more users send information in parallel can cause a high transmission delay.

Please note that the decentralized scenario is much more complicated than the centralized one, since each subfile can be stored by a varying number of users, which leads to a dynamic file-splitting and communication strategy in the D2D network. Our schemes, in particular the decentralized coded caching scheme, differ greatly from the D2D coded caching scheme in [35]. Specifically, ref. [35] considered a fixed network topology where each user connects with a fixed set of users, and the total user cache size must be large enough to store all files in the library. In our schemes, by contrast, the user group partition changes dynamically, and each user can communicate with any set of users via network routing. Moreover, in our model the server shares communication loads with the users, resulting in a load-allocation problem between the broadcast network and the D2D network. Finally, our schemes achieve a tradeoff between the cooperation gain, parallel gain and multicast gain, while the schemes in [1,2,35] only achieve the multicast gain.

The remainder of this paper is organized as follows. Section 2 presents the system model and defines the main problem studied in this paper. We summarize the main results in Section 3. Section 4 then gives a detailed description of the centralized coded caching scheme with user cooperation. Section 5 extends the techniques developed for the centralized caching problem to the setting of decentralized random caching. Section 6 concludes this paper.

2. System Model and Problem Definition

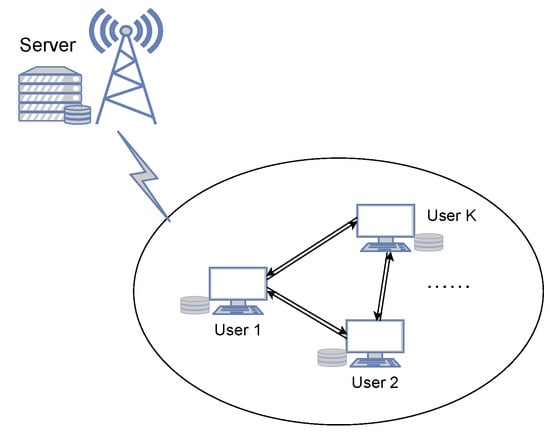

Consider a cache-aided network consisting of a single server and K users, as depicted in Figure 1. The server has a library of N independent files $W_1, \ldots, W_N$. Each file $W_n$, $n \in \{1, \ldots, N\}$, is uniformly distributed over $\{1, 2, \ldots, 2^F\}$ for some positive integer F. The server connects with the K users through a noiseless shared link that is rate-limited to a network speed of bits per second (bits/s). Each user is equipped with a cache memory of size MF bits, for some $M \in [0, N]$, and the users can communicate with each other via a D2D network.

Figure 1.

Caching system considered in this paper. A server connects with K cache-enabled users and the users can cooperate through a flexible network.

We mainly focus on two types of D2D networks: a shared link as in [1,2] and a flexible routing network introduced in [18]. In the case of a shared link, all users connect with each other through a shared error-free link that is rate-limited to bits/s. In the flexible routing network, the K users can arbitrarily form multiple groups via network routing, in each of which at most one user can send error-free data packets at a network speed of bits/s to the remaining users within the group. To unify these two types of D2D networks, we introduce an integer , which denotes the maximum number of groups allowed to send data in parallel in the D2D network. For example, when , the D2D network degenerates into a shared link, and when , it becomes the flexible network.

The system works in two phases: a placement phase and a delivery phase. In the placement phase, all users access the entire library and fill their cache memories. More specifically, each user k, for $k \in \{1, \ldots, K\}$, maps the files $W_1, \ldots, W_N$ to its cache content:

for some caching function

In the delivery phase, each user requests one of the N files from the library. We denote the demand of user k by $d_k$, and its desired file by $W_{d_k}$. Let $\mathbf{d} \triangleq (d_1, \ldots, d_K)$ denote the request vector. In this paper, we investigate the worst-case request, where all users request distinct files.

Once the request vector has been made known to the server and all users, the server produces the symbol

and broadcasts it to all users through the broadcast network. Meanwhile, each user k produces the symbol (In general, user k could produce its symbol as a function of its cache content and the signals received from the server; however, since the server broadcasts its signals to the whole network and every user therefore has access to them, it is equivalent to generating the symbol without using the server’s signal.)

and sends it to a set of intended users through the D2D network. Here, represents the set of destination users served by node k, and are some encoding functions

where and denote the transmission rates of the server in the broadcast network and of each user in the D2D network, respectively. Here we focus on the symmetric case where all users have the same transmission rate. Due to the constraint of , at most users can send signals in parallel in each channel use. The set of users who send signals in parallel can be adaptively changed during the delivery phase.

At the end of the delivery phase, due to the error-free transmission in the broadcast and D2D networks, user k observes the symbols sent to it, i.e., , and decodes its desired file as , where is a decoding function.

We define the worst-case probability of error as

A coded caching scheme consists of caching functions , encoding functions and decoding functions . We say that the rate region is achievable if, for every ε > 0 and every sufficiently large file size F, there exists a coded caching scheme such that the worst-case probability of error is less than ε.

Since the server and the users send signals in parallel, the total transmission delay, denoted by T, can be defined as

When , e.g., , one small adjustment that allows our scheme to continue to work is to multiply by , where is a design parameter introduced later.

Our goal is to design a coded caching scheme to minimize the transmission delay. Finally, in this paper we assume and . Extending the results to other scenarios is straightforward, as mentioned in [1].

3. Main Results

We first establish a general lower bound on the transmission delay for the system model described in Section 2, and then present two upper bounds on the optimal transmission delay, achieved by our centralized and decentralized coded caching schemes, respectively. Finally, we present the optimality results for these two schemes.

Theorem 1

(Lower Bound). For memory size , the optimal transmission delay is lower bounded by

Proof.

See the proof in Appendix A. □

3.1. Centralized Coded Caching

In the following theorem, we present an upper bound on the transmission delay for the centralized caching setup.

Theorem 2

(Upper Bound for the Centralized Scenario). Let , and . For memory size , the optimal transmission delay is upper bounded by , where

For general , the lower convex envelope of these points is achievable.

Proof.

See scheme in Section 4. □

The following simple example shows that the proposed upper bound can greatly reduce the transmission delay.

Example 1.

Consider a network described in Section 2 with . The coded caching scheme without D2D communication [1] has the server multicast an XOR message useful to all K users, achieving the transmission delay . The D2D coded caching scheme [35] achieves the transmission delay . The transmission delay achievable by Theorem 2 equals by letting , which is almost half the transmission delay of the previous schemes when K is sufficiently large.
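To make the comparison with the two baselines concrete, the sketch below evaluates their delays for integer t = KM/N using the well-known expressions from the literature: the broadcast scheme of [1] achieves K(1 − M/N)/(1 + KM/N) = (K − t)/(1 + t), and the centralized D2D scheme of [35] achieves (N/M)(1 − M/N) = (K − t)/t. These formulas come from [1,35], not from Theorem 2, and the function names are illustrative.

```python
from fractions import Fraction


def delay_broadcast_man(K: int, t: int) -> Fraction:
    """Centralized broadcast scheme of [1]: K(1 - M/N) / (1 + KM/N) = (K - t) / (1 + t)."""
    if not 0 <= t <= K:
        raise ValueError("t = KM/N must lie in {0, ..., K}")
    return Fraction(K - t, 1 + t)


def delay_d2d_ji(K: int, t: int) -> Fraction:
    """Centralized D2D scheme of [35]: (N/M)(1 - M/N) = (K - t) / t, for t >= 1."""
    if not 1 <= t <= K:
        raise ValueError("the D2D scheme needs t = KM/N in {1, ..., K}")
    return Fraction(K - t, t)


if __name__ == "__main__":
    K = 20
    # Evaluate both baselines for a few integer values of t.
    for t in (1, 5, K - 1):
        print(f"t = {t}: broadcast {delay_broadcast_man(K, t)}, D2D {delay_d2d_ji(K, t)}")
```

Exact fractions are used so that the comparison with a closed-form delay does not suffer from rounding.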

From (10), the optimal value of α, denoted by , equals 1 if and equals if . Ignoring all integer constraints, we obtain . We rewrite this choice as follows:

Remark 1.

From (11), we observe that when M is small such that , we have . As M increases, the optimal α becomes , which is smaller than . When M is sufficiently large such that , only one user should be allowed to send information, i.e., the optimal α equals 1. This indicates that letting more users send information in parallel can be harmful. The main reason for this phenomenon is the tradeoff between the multicast gain, cooperation gain and parallel gain, which is discussed below.

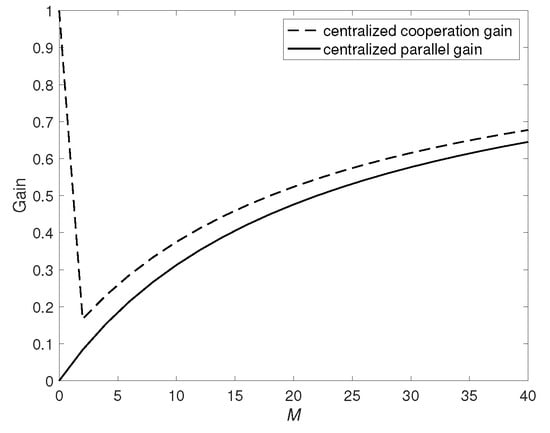

Compared with the transmission delay achieved by Maddah-Ali and Niesen’s scheme for the broadcast network [1], i.e., , the delay in Theorem 2 contains an additional factor

referred to as the centralized cooperation gain, as it arises from user cooperation. Compared with the transmission delay achieved by the D2D coded caching scheme [35], i.e., , the delay in Theorem 2 contains an additional factor

referred to as the centralized parallel gain, as it arises from parallel transmission between the server and users. Both gains depend on K, and .

Substituting the optimal α into (12), we have

When fixing , the centralized cooperation gain is in general not a monotonic function of M. More specifically, when M is small enough such that , the function is monotonically decreasing, indicating a growing improvement from introducing D2D communication. This is mainly because a relatively larger M allows users to share more common data with each other, providing more opportunities for user cooperation. However, when M grows larger such that , the local and global caching gains become dominant, less improvement can be obtained from user cooperation, and the function becomes monotonically increasing in M.

Similarly, substituting the optimal α into (13), we obtain

Equation (15) shows that the centralized parallel gain is monotonically increasing in t, mainly because, as M increases, more content can be sent through the D2D network without the help of the central server, which decreases the improvement from parallel transmission between the server and users.

Figure 2.

Centralized cooperation gain and parallel gain when , and .

Remark 2.

A larger α can lead to better parallel and cooperation gains (more users can concurrently multicast signals to other users), but results in a worse multicast gain (signals are multicast to fewer users in each group). The choice of α in (11) is in fact a tradeoff between the multicast gain, parallel gain and cooperation gain.

The proposed scheme achieving the upper bound in Theorem 2 is order-optimal.

Theorem 3.

For memory size ,

Proof.

See the proof in Appendix B. □

The exact gap could be much smaller than the constant in Theorem 3. One could apply the method proposed in [3] to obtain a tighter lower bound and shrink the gap. In this paper, we only prove the order optimality of the proposed scheme and leave finding a smaller gap as future work.

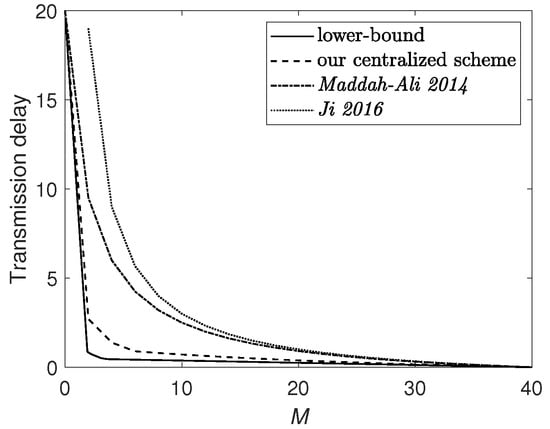

Figure 3 plots the lower bound (9) and the upper bounds achieved by various schemes, including the proposed scheme, the scheme Maddah-Ali 2014 in [1], which considers the broadcast network without D2D communication, and the scheme Ji 2016 in [35], which considers the D2D network without a server. Our scheme outperforms the previous schemes and closely approaches the lower bound.

Figure 3.

Transmission delay when , and . The upper bounds are achieved under the centralized caching scenario.

3.2. Decentralized Coded Caching

By jointly exploiting the multicast gain of coded caching, D2D communication among users, and parallel transmission between the server and users, we obtain the following upper bound.

Theorem 4

(Upper Bound for the Decentralized Scenario). Define . For memory size , the optimal transmission delay is upper bounded by

where

with

Proof.

Here, represents the transmission rate of sending contents that are not cached by any user, and represent the transmission rate sent by the server via the broadcast network, and the transmission rate sent by users via the D2D network, respectively. Equation (17) balances the communication loads assigned to the server and users. See more detailed proof in Section 5. □

The key idea of the scheme achieving (17) is to partition the K users into groups for each communication round , and to let each group perform the D2D coded caching scheme [35] to exchange information. The main challenge is that, among all groups, there are groups of the same size s and an abnormal group of size if , leading to an asymmetric caching setup. One may apply the scheme in [35] separately to the groups of size s and to the group of size , but how to exploit the caching resources and communication capability of all groups, while balancing the communication loads between the two types of groups to minimize the transmission delay, is nontrivial and needs careful design. Moreover, this challenge complicates both the derivation of the upper bound and the optimality proof.

Remark 3.

The upper bound in Theorem 4 is achieved by setting the number of users that actually send signals in parallel as follows:

If , the number of users who send data in parallel is smaller than , indicating that always letting more users send messages in parallel can cause a higher transmission delay. For example, when , and , we have .

Remark 4.

From the definitions of , , and , it is easy to obtain that ,

decreases as increases, and increases as increases if .

Due to the complex term , in Theorem 4 is hard to evaluate. Since is increasing as increases (see Remark 4), substituting the following upper bound of into (17) provides an efficient way to evaluate .

Corollary 1.

For memory size , the upper bound of is given below:

- (a shared link):

- (a flexible network):

Proof.

See the proof in Appendix C. □

Recall that the transmission delay achieved by the decentralized scheme without D2D communication [2] is equal to given in (19). We define the ratio between and as the decentralized cooperation gain:

with because of . Similar to the centralized scenario, this gain arises from cooperation among users in the D2D network. Moreover, we also compare with the transmission delay , achieved by the D2D decentralized coded caching scheme [35], and define the ratio between and as the decentralized parallel gain:

where arises from the parallel transmission between the server and the users.

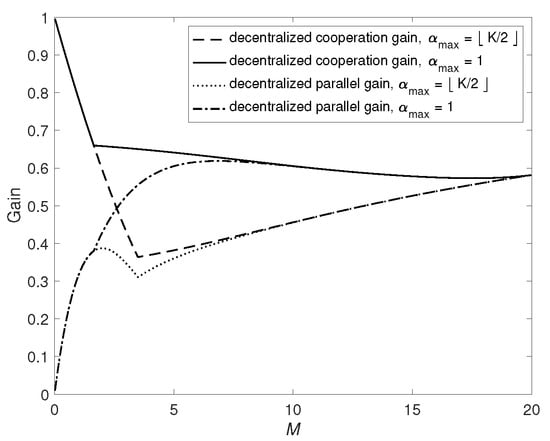

We plot the decentralized cooperation gain and parallel gain for the two types of D2D networks in Figure 4 when and . It can be seen that and are in general not monotonic functions of M. Here, behaves similarly to . When M is small, the function decreases monotonically from the value 1 until it reaches its minimum. For larger M, the function increases monotonically with M. The reason for this phenomenon is that, in the decentralized scenario, as M increases, the proportion of subfiles that are not cached by any user and must be sent by the server decreases. Thus, more subfiles can be sent in parallel via the D2D network as M increases. Meanwhile, the decentralized scheme in [2] offers an additional multicast gain. Therefore, we need to balance these two gains to reduce the transmission delay.

Figure 4.

Decentralized cooperation gain and parallel gain when and .

The function behaves differently: it increases monotonically when M is small. After reaching its maximal value, the function decreases monotonically until it meets a local minimum (the abnormal bend in the parallel gain when comes from a balance effect between and in (27)), and then becomes a monotonically increasing function for large M. Similar to the centralized case, as M increases, the impact of parallel transmission between the server and users becomes smaller, since more data can be transmitted by the users.

Theorem 5.

Define and , which tends to 0 as K tends to infinity. For memory size ,

- if (shared link), then

- if , then

Proof.

See the proof in Appendix D. □

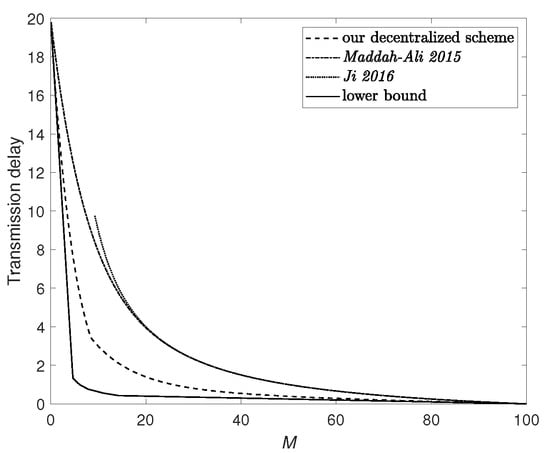

Figure 5 plots the lower bound in (9) and upper bounds achieved by various decentralized coded caching schemes, including our scheme, the scheme Maddah-Ali 2015 in [2] which considers the case without D2D communication, and the scheme Ji 2016 in [35] which considers the case without server.

Figure 5.

Transmission delay when , and . The upper bounds are achieved under the decentralized random caching scenario.

4. Coding Scheme under Centralized Data Placement

In this section, we describe a novel centralized coded caching scheme for arbitrary K, N and M such that KM/N is a positive integer. The scheme can be extended to the general case by following the same approach as in [1].

We first use an illustrative example to show how we form D2D communication groups, split files and deliver data, and then present our generalized centralized coded caching scheme.

4.1. An Illustrative Example

Consider a network consisting of K = 6 users with cache size , and a library of files. Thus, . Divide all six users into two groups of equal size, and choose an integer that guarantees to be an integer. (According to (11) and (29), one optimal choice is (, , ); here we choose (, , ) for simplicity, and also to demonstrate that even with a suboptimal choice, our scheme still outperforms those in [1,35].) Split each file , for , into subfiles:

We list all the requested subfiles uncached by all users as follows: for ,

The users can finish the transmission in different partitions. Table 1 shows the transmission in four different partitions over the D2D network.

Table 1.

Subfiles sent by users in different partitions, .

In Table 1, all users first send XOR symbols with superscript . Please note that the subfiles and are not delivered at the beginning, since is not an integer. Similarly, for the subfiles with , and remain to be sent to users 3 and 4. In the last transmission, user 1 delivers the XOR message to users 2 and 3, and user 6 multicasts to users 4 and 5.

For the remaining subfiles with superscript , the server delivers them in the same way as in [1]. Specifically, it sends the symbols , for all . Thus, the rate of the server is , and the transmission delay is , which is smaller than the delays achieved by the coded caching schemes for the broadcast network [1] and for D2D communication [35].

4.2. The Generalized Centralized Coded Caching Scheme

In the placement phase, each file is first split into subfiles of equal size. More specifically, split into subfiles as follows: . User k caches all the subfiles if for all , occupying the cache memory of bits. Then split each subfile into two mini-files as , where

with

Here, the mini-file and will be sent by the server and users, respectively. For each mini-file , split it into pico-files of equal size , i.e., where satisfies

As we will see later, condition (29) ensures that communication loads can be optimally allocated between the server and the users, and (30) ensures that the number of subfiles is large enough to maximize multicast gain for the transmission in the D2D network.
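The placement described above appears to follow the rule of [1]: each file is split into C(K, t) subfiles indexed by t-subsets of users, with t = KM/N, and user k stores every subfile whose index contains k. The sketch below assumes that indexing (the paper's own symbols are not reproduced) and checks that each user stores a fraction t/K of every file, i.e., M files or MF bits in total; it ignores the later mini-file and pico-file splits, which do not change what is cached. The function name is illustrative.

```python
from itertools import combinations


def centralized_placement(K: int, t: int, N: int):
    """Placement of [1]: subfile (n, T) for every file n and t-subset T of users.

    User k caches subfile (n, T) exactly when k is in T.
    Returns the list of t-subsets and a dict user -> set of cached labels.
    """
    subsets = [frozenset(T) for T in combinations(range(K), t)]
    caches = {
        k: {(n, T) for n in range(N) for T in subsets if k in T}
        for k in range(K)
    }
    return subsets, caches


if __name__ == "__main__":
    K, t, N = 6, 2, 6                      # t = KM/N, so M = tN/K = 2 files
    subsets, caches = centralized_placement(K, t, N)
    per_file = len(subsets)                # C(6, 2) = 15 subfiles per file
    stored = len(caches[0])                # 5 subsets contain user 0, times 6 files
    print(per_file, stored, stored / per_file)  # 15 30 2.0 (in units of files = M)
```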

In the delivery phase, each user k requests file . The request vector is made known to the server and all users. Please note that different parts of file have already been stored in the user cache memories, and thus the uncached parts of can be sent by both the server and the users. Subfiles

are requested by user k and will be sent by the users via the D2D network. Subfiles

are requested by user k and will be sent by the server via the broadcast network.

First consider the subfiles sent by the users. Partition the K users into groups of equal size:

where for , , and , if . In each group , one of the users plays the role of a server and sends symbols based on its cached content to the remaining users within the group.
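Changing the group partition over time is what lets every requested subfile eventually be exchanged. Assuming the K users are split into a given number of groups of equal size (all of which transmit in parallel), the sketch below enumerates every unordered partition; for six users and two groups, as in the example of Section 4.1, there are 6!/((3!)^2 · 2!) = 10 of them. The helper names are hypothetical.

```python
from itertools import combinations
from math import factorial


def equal_partitions(users, num_groups):
    """Yield every unordered partition of `users` into `num_groups` groups of equal size."""
    users = list(users)
    if len(users) % num_groups != 0:
        raise ValueError("the number of users must be divisible by the number of groups")
    size = len(users) // num_groups

    def helper(remaining):
        if not remaining:
            yield []
            return
        first, rest = remaining[0], remaining[1:]
        # Always putting the first remaining user into the next group avoids
        # listing the same partition once per ordering of its groups.
        for others in combinations(rest, size - 1):
            group = (first,) + others
            left = [u for u in rest if u not in others]
            for tail in helper(left):
                yield [group] + tail

    yield from helper(users)


def count_equal_partitions(K, num_groups):
    """Closed form K! / ((K/g)!^g * g!) for g = num_groups equal groups."""
    size = K // num_groups
    return factorial(K) // (factorial(size) ** num_groups * factorial(num_groups))


if __name__ == "__main__":
    parts = list(equal_partitions(range(1, 7), 2))
    print(len(parts), count_equal_partitions(6, 2))  # 10 10
    print(parts[0])                                   # [(1, 2, 3), (4, 5, 6)]
```

The scheme itself does not necessarily visit every partition; the enumeration only shows the space from which the delivery rounds are drawn.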

Focus on a group and a set . If , then all nodes in share subfiles

In this case, user sends XOR symbols that contain the requested subfiles useful to all remaining users in , i.e., , where is a function of that avoids redundant transmission of any fragments.

If , then the nodes in share subfiles

In this case, user sends an XOR symbol that contains the requested subfiles for all remaining users in , i.e., . The other groups perform similar steps and concurrently deliver the remaining requested subfiles to other users.
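The inner-group step reuses the D2D delivery idea of [35]. The byte-level sketch below is one concrete instance under simplifying assumptions: the users in a set S of size t + 1 request distinct files, and for every j in S the subfile requested by user j and indexed by S \ {j} (hence cached by all other members of S) is split into t fragments, one per sender. Each sender k in S XORs, over j in S \ {k}, the fragment of user j's subfile assigned to k, and every receiver cancels the terms it has cached. The data layout and function names are illustrative, not the paper's notation.

```python
import os
from functools import reduce
from itertools import combinations


def xor_bytes(*chunks: bytes) -> bytes:
    """Bitwise XOR of equal-length byte strings."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def d2d_multicast_round(S, demand, subfile):
    """One D2D coded-multicast round among the users in S, in the style of [35].

    subfile[(n, T)] holds the bytes of file n's subfile indexed by frozenset T.
    User j in S needs subfile (demand[j], S - {j}), cached by every other user
    in S. Returns the bytes each user in S decodes.
    """
    S = frozenset(S)
    t = len(S) - 1

    def fragment(j, k):
        # Split user j's requested subfile into t equal fragments, one per
        # sender in S - {j}; return the fragment assigned to sender k.
        data = subfile[(demand[j], S - {j})]
        size = len(data) // t
        i = sorted(S - {j}).index(k)
        return data[i * size:(i + 1) * size]

    # Each user k in S broadcasts a single XOR of the fragments it owes the others.
    sent = {k: xor_bytes(*(fragment(j, k) for j in S if j != k)) for k in S}

    decoded = {}
    for j in S:
        pieces = []
        for k in sorted(S - {j}):
            # The other terms of sent[k] belong to subfiles whose index contains j,
            # so user j has them cached and can cancel them.
            known = [fragment(i, k) for i in S if i not in (j, k)]
            pieces.append(xor_bytes(sent[k], *known))
        decoded[j] = b"".join(pieces)
    return decoded


if __name__ == "__main__":
    K, t = 4, 2                              # one group of 4 users, t = KM/N = 2
    demand = {k: k for k in range(K)}        # distinct demands: user k wants file k
    subfile = {(n, frozenset(T)): os.urandom(12)   # 12 bytes, divisible by t
               for n in range(K) for T in combinations(range(K), t)}
    S = {0, 1, 2}                            # a set of t + 1 users
    out = d2d_multicast_round(S, demand, subfile)
    assert all(out[j] == subfile[(demand[j], frozenset(S) - {j})] for j in S)
    print("every user in S recovered its subfile")
```

Each of the t + 1 senders transmits one fragment-sized XOR (1/t of a subfile), so a round delivers t + 1 subfiles using (t + 1)/t subfile-sizes of D2D traffic.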

By changing group partition and performing the delivery strategy described above, we can send all the requested subfiles

to the users.

Since groups send signals in parallel ( users can concurrently deliver content), and each user in a group delivers a symbol containing non-repeating pico-files requested by other users, in order to send all requested subfiles in (31), we need to send a total of

XOR symbols, each of size bits. Notice that is chosen according to (30), which ensures that (32) is an integer. Thus, we obtain as

where the last equality holds by (29).

Now consider the delivery of the subfiles sent by the server. Apply the delivery strategy as in [1], i.e., the server broadcasts

to all users, for all . We obtain the transmission rate of the server

5. Coding Scheme under Decentralized Data Placement

In this section, we present a novel decentralized coded caching scheme for the joint broadcast and D2D network. The decentralized scenario is much more complicated than the centralized one, since each subfile can be stored by a varying number of users, which leads to a dynamic file-splitting and communication strategy in the D2D network. We first use an illustrative example to demonstrate how we form D2D communication groups, split data and deliver data, and then present our generalized coded caching scheme.

5.1. An Illustrative Example

Consider a joint broadcast and D2D network consisting of users. When using the decentralized data placement strategy, the subfiles cached by user k can be written as

We focus on the delivery of subfiles , i.e., each subfile is stored by users. A similar process can be applied to deliver other subfiles with respect to .

To allocate communication loads between the server and users, we divide each subfile into two mini-files , where mini-files and will be sent by the server and users, respectively. To reduce the transmission delay, the sizes of and need to be chosen such that , i.e., the transmission rates of the server and users are equal; see (37) and (39) ahead.

Divide all the users into two non-intersecting groups , for which satisfies

There are kinds of partitions in total, thus . Please note that for any user , of its requested mini-files are already cached by the remaining users in , for .

To avoid repetitive transmission of any mini-file, each mini-file in

is divided into non-overlapping pico-files and , i.e.,

The sizes of and need to be chosen properly so that groups and have equal transmission rates; see (51) and (52) ahead.

To allocate communication loads between the two different types of groups, split each and into three and two equal fragments, respectively, e.g.,

During the delivery phase, in each round, one user in each group produces and multicasts an XOR symbol to all other users in the same group, as shown in Table 2.

Table 2.

Parallel user delivery when , , and , .

Please note that in this example, each group appears only once among all partitions. However, for other values of s, a group could appear multiple times in different partitions. For example, when , group appears in both partitions and . To reduce the transmission delay, one should balance communication loads among all groups, as well as between the server and users.

5.2. The Generalized Decentralized Coded Caching Scheme

In the placement phase, each user k applies the caching function to map a random subset of bits of file into its cache memory. The subfiles cached by user k can be written as . When the file size F is sufficiently large, by the law of large numbers, the subfile size can, with high probability, be written as
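The law-of-large-numbers statement can be checked numerically. The sketch below assumes the random placement of [2]: each user independently caches a uniformly random set of MF/N of the F bits of a file. It measures, for each set S of users, the fraction of bits stored by exactly the users in S, and compares it with (M/N)^|S| (1 − M/N)^(K − |S|), the normalized subfile size from [2]. Parameter values and function names are arbitrary choices for illustration.

```python
import random
from collections import Counter


def random_placement_fractions(K, F, q, seed=0):
    """Empirical fraction of a file's F bits cached by exactly each user set.

    Each user independently stores round(q * F) distinct bits chosen uniformly
    at random (q = M/N). User sets are encoded as bitmasks over the K users.
    """
    rng = random.Random(seed)
    m = round(q * F)
    caches = [set(rng.sample(range(F), m)) for _ in range(K)]
    counts = Counter(
        sum(1 << k for k in range(K) if bit in caches[k]) for bit in range(F)
    )
    return {mask: counts[mask] / F for mask in range(1 << K)}


def predicted_fraction(mask, K, q):
    """Subfile size q^s (1 - q)^(K - s), normalized by F, for a set of s users."""
    s = bin(mask).count("1")
    return q ** s * (1 - q) ** (K - s)


if __name__ == "__main__":
    K, F, q = 4, 100_000, 0.25
    empirical = random_placement_fractions(K, F, q)
    worst = max(abs(empirical[m] - predicted_fraction(m, K, q)) for m in range(1 << K))
    print(f"largest deviation from the prediction: {worst:.4f}")
```

The deviation shrinks roughly like 1/√F, which is why the subfile sizes can be treated as deterministic for large F.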

The delivery procedure can be divided into three levels: allocating communication loads between the server and users, inner-group coding (i.e., transmission within each group), and parallel delivery among groups.

5.2.1. Allocating Communication Loads between the Server and User

To allocate communication loads between the server and users, split each subfile , for , into two non-overlapping mini-files

where

and is a design parameter whose value is determined in Remark 5.

Mini-files will be sent by the server using the decentralized coded caching scheme for the broadcast network [2], leading to the transmission delay

where is defined in (19).
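For reference, the coded delivery of [2] can be checked numerically. The Python sketch below (with p = M/N and hypothetical helper names) adds up the per-level server loads, where for each user set S with |S| = t the server broadcasts one XOR of pieces of expected size p^{t-1}(1-p)^{K-t+1}F, and compares the sum with the first (coded) term of the rate in [2], K(1-M/N)·N/(KM)·(1-(1-M/N)^K). It is not claimed to reproduce (19) exactly; in particular, the t = 1 level carries the pieces cached by no user, which the scheme above sends separately from the server.

```python
from math import comb


def level_loads(p: float, K: int) -> list:
    """Normalized server load of each level t = 1..K of the coded delivery in [2] (0 < p <= 1).

    For every user set S with |S| = t, one XOR of the pieces W_{d_k, S minus {k}}
    (k in S) is broadcast; each piece has expected size p^(t-1) (1-p)^(K-t+1) F.
    """
    return [comb(K, t) * p ** (t - 1) * (1 - p) ** (K - t + 1) for t in range(1, K + 1)]


def coded_rate_closed_form(p: float, K: int) -> float:
    """First term of the rate in [2]: K (1-p) * 1/(K p) * (1 - (1-p)^K), with p = M/N."""
    return K * (1 - p) * (1 - (1 - p) ** K) / (K * p)


if __name__ == "__main__":
    K, p = 10, 0.2
    loads = level_loads(p, K)
    print("t = 1 level (pieces cached by no user):", loads[0])
    print("sum over levels:", sum(loads), "closed form:", coded_rate_closed_form(p, K))
```

The per-level form makes explicit which part of the broadcast load comes from uncached pieces and which from pieces already cached by at least one user.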

Mini-files will be sent by users using the parallel user delivery described in Section 5.2.3. The corresponding transmission rate is

where represents the number of bits sent by each user, normalized by F.

Since subfile is not cached by any user, it must be sent exclusively by the server, and the corresponding transmission delay for sending is

where coincides with the definition in (18).

According to (8), we have .

Remark 5

(Choice of λ). The parameter λ is chosen such that is minimized. If , then the inequality always holds, and reaches its minimum at . If , solving yields and
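The choice of λ in Remark 5 is a min-max balancing between two links that operate in parallel. The Python sketch below illustrates it under a simplified, hypothetical linear model: the server needs `t_empty` to send the uncached subfile plus `lam * r_server` for its share of the mini-files, while the users need `(1 - lam) * r_users` for theirs. These three quantities are placeholders, not the paper's exact expressions.

```python
def total_delay(lam: float, t_empty: float, r_server: float, r_users: float) -> float:
    """Server and users transmit in parallel, so the delay is the slower of the two."""
    return max(t_empty + lam * r_server, (1 - lam) * r_users)


def choose_lambda(t_empty: float, r_server: float, r_users: float) -> tuple:
    """Return (lam, delay) minimizing total_delay over lam in [0, 1].

    Hypothetical linear model: the server spends t_empty on the uncached subfile
    plus lam * r_server on its share of the mini-files; the users spend
    (1 - lam) * r_users on the remaining share.
    """
    if t_empty >= r_users:
        # Even with lam = 0 the server is the bottleneck: give all mini-files to the users.
        return 0.0, t_empty
    # Otherwise equalize the two delays: t_empty + lam*r_server = (1 - lam)*r_users.
    lam = (r_users - t_empty) / (r_server + r_users)
    return lam, t_empty + lam * r_server


if __name__ == "__main__":
    lam, delay = choose_lambda(t_empty=0.1, r_server=1.0, r_users=2.0)
    print(f"lambda = {lam:.4f}, delay = {delay:.4f}")  # prints lambda = 0.6333, delay = 0.7333
```

If `t_empty >= r_users`, the user delay can never exceed the server delay, so shifting load to the server only hurts and λ = 0 is optimal; otherwise the optimum equalizes the two delays, mirroring the case split in Remark 5.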

5.2.2. Inner-Group Coding

Given parameters where , , with indicators described in (37) and (51), and , we present how to successfully deliver

to every user via D2D communication.

Split each into non-overlapping fragments of equal size, i.e.,

and each user takes turns broadcasting the XOR symbol

where is a function of that avoids redundant transmission of any fragment. The XOR symbol will be received and decoded by the remaining users in .

For each group , inner-group coding encodes a total of , and each XOR symbol in (43) contains fragments required by users in .
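The inner-group exchange follows the D2D coded caching idea of [35]. The Python sketch below assumes a group of s users in which each user k requests one mini-file that is cached by all other s-1 members; every mini-file is split into s-1 equal fragments, one per other member, and each member broadcasts the XOR of the fragments it is responsible for. The fragment-to-sender mapping in the hypothetical helper `fragment_of` is a stand-in for the indexing function in (43), not the paper's exact rule.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def fragment_of(data: bytes, owner: int, sender: int, s: int) -> bytes:
    """Fragment of `owner`'s requested mini-file that `sender` is responsible for.

    The mini-file is split into s-1 equal fragments, one per other group member.
    """
    assert len(data) % (s - 1) == 0, "mini-file length must be divisible by s-1"
    others = [u for u in range(s) if u != owner]
    size = len(data) // (s - 1)
    i = others.index(sender)
    return data[i * size:(i + 1) * size]


def encode(minifiles: list, sender: int) -> bytes:
    """XOR symbol broadcast by `sender`: one fragment for every other member (s >= 2)."""
    s = len(minifiles)
    return reduce(xor_bytes, (fragment_of(minifiles[k], k, sender, s)
                              for k in range(s) if k != sender))


def decode(cache: dict, broadcasts: dict, k: int, s: int) -> bytes:
    """User k rebuilds its mini-file from the s-1 symbols it hears and its cache."""
    pieces = []
    for sender in (u for u in range(s) if u != k):  # ascending order = fragment order
        sym = broadcasts[sender]
        for other in range(s):
            if other not in (sender, k):
                # User k caches every other member's requested mini-file, so it can cancel it.
                sym = xor_bytes(sym, fragment_of(cache[other], other, sender, s))
        pieces.append(sym)
    return b"".join(pieces)


if __name__ == "__main__":
    files = [b"AAAA", b"BBBB", b"CCCC"]  # s = 3 users, one requested mini-file each
    sent = {j: encode(files, j) for j in range(3)}
    cache_of_0 = {1: files[1], 2: files[2]}  # user 0 caches the others' mini-files
    print(decode(cache_of_0, sent, k=0, s=3))  # prints b'AAAA'
```

Each broadcast carries s-1 fragments, and the group transmits s symbols of 1/(s-1) mini-file each, i.e., s/(s-1) mini-files in total; every receiver cancels the fragments it already caches and is left with one fragment of its own request.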

5.2.3. Parallel Delivery among Groups

The parallel user delivery consists of rounds characterized by . In each round s, mini-files

are recovered through D2D communication.

The key idea is to partition the K users into groups in each communication round , and let each group perform the D2D coded caching scheme [35] to exchange information. If , there will be groups of the same size s and an abnormal group of size , leading to an asymmetric caching setup. We optimally allocate the communication loads between the two types of groups, as well as between the broadcast network and the D2D network.

Based on K, s and , the delivery strategy in the D2D network is divided into three cases:

- Case 1: . In this case, users are allowed to send data simultaneously. Select users from all users and divide them into groups of equal size s. The total number of such partitions is . In each partition, users, selected from groups, respectively, send data in parallel via the D2D network.

- Case 2: and . In this case, choose users from all users and partition them into groups of equal size s. The total number of such partitions is . In each partition, users selected from groups of equal size s, respectively, together with an extra user selected from the abnormal group of size , send data in parallel via the D2D network.

- Case 3: and . In this case, every s users form a group, resulting in groups consisting of users. The remaining users form an abnormal group. The total number of such partitions is . In each partition, users selected from groups of equal size s, respectively, together with an extra user selected from the abnormal group of size , send data in parallel via the D2D network.

Thus, the exact number of users who send signals in parallel can be written as follows:

Please note that each group re-appears

times among partitions.
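The partition counts can be sanity-checked by brute force. The Python sketch below assumes our reading of Case 1: a partition is a set of α disjoint, unordered groups of size s drawn from the K users. Under that assumption the number of partitions is C(K, αs)(αs)!/((s!)^α α!), and a fixed group appears in as many partitions as there are ways to place the other α-1 groups among the remaining K-s users. The function names are hypothetical, and the exact expressions in the text are not reproduced here.

```python
from itertools import combinations
from math import comb, factorial


def count_partitions(K: int, s: int, alpha: int) -> int:
    """Ways to pick alpha*s of K users and split them into alpha unordered groups of size s."""
    n = alpha * s
    if n > K:
        return 0
    return comb(K, n) * factorial(n) // (factorial(s) ** alpha * factorial(alpha))


def enumerate_partitions(users, s: int, alpha: int) -> set:
    """Brute force: all sets of alpha disjoint s-subsets of `users` (small K only)."""
    if alpha == 0:
        return {frozenset()}
    result = set()
    for group in combinations(sorted(users), s):
        rest = set(users) - set(group)
        for tail in enumerate_partitions(rest, s, alpha - 1):
            result.add(tail | {frozenset(group)})  # set of groups; order is irrelevant
    return result


def reappearances(K: int, s: int, alpha: int) -> int:
    """Number of partitions that contain one fixed group of size s."""
    return count_partitions(K - s, s, alpha - 1)


if __name__ == "__main__":
    print(count_partitions(6, 2, 2), reappearances(6, 2, 2))  # prints 45 6
```

By symmetry every group of size s re-appears equally often, and counting (partition, group) pairs in two ways gives (number of partitions) × α = C(K, s) × (re-appearances per group).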

We now present the decentralized scheme for each of the three cases.

Case 1 (): Consider a partition , denoted by

where and , and .

Since each group re-appears times among the partitions and the users in each group take turns broadcasting the XOR symbols (43), we split each mini-file into fragments of equal size, which guarantees that each group sends a unique fragment every time it appears, without repetition.

Each group , for and , performs inner-group coding (see Section 5.2.2) with parameters

for all s satisfying . In each round r, all groups send, in parallel, XOR symbols containing fragments required by the other users in their group. Since the partitioned groups traverse every set , i.e.,

and since inner-group coding enables each group to recover

we can recover all required mini-files

Case 2 ( and ): We apply the same delivery procedure as Case 1, except that is replaced by and . Thus, the transmission delay in round s is

Case 3 ( and ): Consider a partition , denoted as

where , , and and with

Since groups and have different sizes, we further split each mini-file into two non-overlapping fragments such that

where is a design parameter satisfying (52).

Split each mini-file and into fragments of equal size:

Following an encoding operation similar to (43), group and group send the following XOR symbols, respectively:

For each , the transmission delay for sending the XOR symbols above by group and group can be written as

respectively. Since and group can send signals in parallel, by letting

we eliminate the parameter and obtain the balanced transmission delay at users for Case 3:
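The elimination step has a simple closed form whenever both delays are linear in the splitting parameter. As an illustration only, write β for that parameter and let A and B be hypothetical shorthands for the delays that the groups of size s and the abnormal group would incur if each carried the whole load, so that the two delays become βA and (1-β)B (a simplifying assumption, not the paper's exact expressions):

```latex
\begin{aligned}
&T_{\mathrm{s}}(\beta) = \beta A, \qquad T_{\mathrm{ab}}(\beta) = (1-\beta) B, \qquad 0 \le \beta \le 1,\\
&T_{\mathrm{s}}(\beta^{\ast}) = T_{\mathrm{ab}}(\beta^{\ast}) \;\Longrightarrow\; \beta^{\ast} = \frac{B}{A+B},\\
&\min_{0 \le \beta \le 1} \max\{T_{\mathrm{s}}(\beta), T_{\mathrm{ab}}(\beta)\} = \frac{AB}{A+B} = \Big(\frac{1}{A} + \frac{1}{B}\Big)^{-1}, \qquad A, B > 0.
\end{aligned}
```

Because one delay increases and the other decreases in β, the crossing point β* is also the min-max point, so the two group types behave like parallel links whose delays combine harmonically.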

Remark 6.

In each round , all requested mini-files can be recovered by the delivery strategies above. By Remark 6, the transmission delay in the D2D network is

where is defined in (20) and

6. Conclusions

In this paper, we considered cache-aided communication over a broadcast network jointly with a D2D network. Two novel coded caching schemes were proposed for the centralized and decentralized data placement settings, respectively. Both schemes achieve a parallel gain and a cooperation gain by efficiently exploiting communication opportunities in the broadcast and D2D networks and by optimally allocating communication loads between the server and users. Furthermore, we showed that in the centralized case, letting too many users send information in parallel could be harmful. Information-theoretic converse bounds were established, with which we proved that the centralized scheme achieves the optimal transmission delay within a constant multiplicative gap in all regimes, and that the decentralized scheme is also order-optimal when the cache size of each user is larger than a small threshold that tends to zero as the number of users tends to infinity. Our work indicates that combining the cache-aided broadcast network with the cache-aided D2D network can greatly reduce the transmission latency.

Author Contributions

Project administration, Y.W.; Writing—original draft, Z.H., J.C. and X.Y.; Writing—review & editing, S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under grant number 61901267.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of the Converse

Let and denote the optimal rates sent by the server and by each user, respectively. We first consider an enhanced system in which every user is served by an exclusive server and an exclusive user, both of which store all files in the database; for this system, the following lower bound is easily obtained:

Another lower bound follows an idea similar to that of [1]. However, due to the flexibility of the D2D network, the connection and partitioning status among users can change during the delivery phase, which prevents the proof in [1] from being applied directly to the hybrid network considered in this paper. Moreover, the parallel transmission of the server and many users creates many different signals in the network, making the scenario more complicated.

Consider the first s users with cache contents . Define as the signal sent by the server, and as the signals sent by the users, respectively, where for and . Assume that are determined by , and . Additionally, define , as the signals which enable the users to decode . Continue the same process such that , are the signals which enable the users to decode . We then have , , and

to determine . Let

Consider the cut separating , , and from the corresponding s users. By the cut-set bound and (A2), we have

Since we have and from the above definition, we obtain

Appendix B

We prove that is within a constant multiplicative gap of the minimum transmission delay for all values of M. To prove the result, we compare them in the following regimes.

- If , from Theorem 1, we have where (a) follows from [1] [Theorem 3]. Then we have where the last inequality holds by setting .

- If , we have where holds by choosing .

- If , setting , we have where holds since for any . Please note that for all values of M, the transmission delay

Appendix C

Appendix C.1. Case αmax =

When , we have

where denotes the user’s transmission rate for a flexible D2D network with . In the flexible D2D network, at most users are allowed to transmit messages simultaneously, in which case each user transmission becomes a unicast.

Please note that in each term of the summation:

where the last inequality holds by and

Therefore, by (A15), can be rewritten as

Appendix C.2. Case αmax =1

When , the cooperation network degenerates into a shared link where only one user acts as the server and broadcasts messages to the remaining users. A similar derivation is given in [35]. In this case, can be rewritten as

where the inequality holds by the fact that .

Appendix D

Appendix D.1. When αmax =

Recall that , which tends to zero as K goes to infinity. We first introduce the following three lemmas.

Lemma A1.

Given arbitrary convex function and arbitrary concave function , if they intersect at two points with , then for all .

We omit the proof of Lemma A1 as it is straightforward.
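For completeness, the omitted argument is one line. Writing f for the convex function, g for the concave function, and a < b for the two intersection points (our own symbols, since the lemma's notation is not visible here), every x in [a, b] can be written as x = θa + (1-θ)b with θ in [0, 1], and

```latex
f(x) \le \theta f(a) + (1-\theta) f(b) = \theta g(a) + (1-\theta) g(b) \le g(x),
```

where the first inequality is the convexity of f, the equality uses f(a) = g(a) and f(b) = g(b), and the last inequality is the concavity of g.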

Lemma A2.

For memory size and , we have

Proof.

When , from Equation (20), we have

where the first inequality holds by letting and . It is easy to show that is a concave function of p by verifying .

On the other hand, one can easily show that

is a convex function of p by showing . Since the two functions and intersect at and with , from Lemma A1 and (A16), we have

for all . From Remark 4, we know that if . □

Lemma A3.

For memory size and , we have

From Theorem 1, we have . Thus, we obtain

Next, we use Lemmas A2 and A3 to prove that when ,

Appendix D.1.1. Case αmax = and p ≥ pth

In this case, from Lemma A2, we have

Thus, from Lemma A3,

Appendix D.1.2. Case αmax = and p < pth

From the definition of in (17), we have

From Lemma A3, we know that

and thus we only need to focus on the upper bound of .

According to Theorem 1, has the following two lower bounds: , and

Let and , then we have

Here, both and are monotonic functions of p, according to the following properties:

Additionally, notice that if , then , and if , then . Therefore, the maximum value of is attained at satisfying , implying that

Appendix D.2. When αmax = 1

From Equation (24), we obtain that

where the second inequality holds by (A19) and the last equality holds by the definition in (19). On the other hand, we rewrite the second lower bound of :

If , by (A27) and since (see Remark 4), we have

where the last equality holds by the fact that .

If , from Lemma A2, we have and

where the second inequality holds by (A27) and the last equality is from the fact in this case.

References

- Maddah-Ali, M.A.; Niesen, U. Fundamental limits of caching. IEEE Trans. Inf. Theory 2014, 60, 2856–2867.

- Maddah-Ali, M.A.; Niesen, U. Decentralized coded caching attains order-optimal memory-rate tradeoff. IEEE/ACM Trans. Netw. 2015, 23, 1029–1040.

- Yu, Q.; Maddah-Ali, M.A.; Avestimehr, A.S. Characterizing the Rate-Memory Tradeoff in Cache Networks within a Factor of 2. IEEE Trans. Inf. Theory 2019, 65, 647–663.

- Wan, K.; Tuninetti, D.; Piantanida, P. On the optimality of uncoded cache placement. In Proceedings of the IEEE Information Theory Workshop (ITW), Cambridge, UK, 11–14 September 2016; pp. 161–165.

- Yu, Q.; Maddah-Ali, M.A.; Avestimehr, A.S. The exact rate-memory tradeoff for caching with uncoded prefetching. IEEE Trans. Inf. Theory 2018, 64, 1281–1296.

- Yan, Q.; Cheng, M.; Tang, X.; Chen, Q. On the placement delivery array design for centralized coded caching scheme. IEEE Trans. Inf. Theory 2017, 63, 5821–5833.

- Zhang, D.; Liu, N. Coded cache placement for heterogeneous cache sizes. In Proceedings of the IEEE Information Theory Workshop (ITW), Guangzhou, China, 25–29 November 2018; pp. 1–5.

- Wang, S.; Peleato, B. Coded caching with heterogeneous user profiles. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Paris, France, 7–12 July 2019; pp. 2619–2623.

- Zhang, J.; Lin, X.; Wang, C.C. Coded caching for files with distinct file sizes. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Hong Kong, China, 14–19 June 2015; pp. 1686–1690.

- Ibrahim, A.M.; Zewail, A.A.; Yener, A. Centralized coded caching with heterogeneous cache sizes. In Proceedings of the IEEE Wireless Communications and Networking Conference (WCNC), San Francisco, CA, USA, 19–22 March 2017; pp. 1–6.

- Ibrahim, A.M.; Zewail, A.A.; Yener, A. Coded caching for heterogeneous systems: An Optimization Perspective. IEEE Trans. Commun. 2019, 67, 5321–5335.

- Amiri, M.M.; Yang, Q.; Gündüz, D. Decentralized caching and coded delivery with distinct cache capacities. IEEE Trans. Commun. 2017, 65, 4657–4669.

- Cao, D.; Zhang, D.; Chen, P.; Liu, N.; Kang, W.; Gündüz, D. Coded caching with asymmetric cache sizes and link qualities: The two-user case. IEEE Trans. Commun. 2019, 67, 6112–6126.

- Niesen, U.; Maddah-Ali, M.A. Coded caching with nonuniform demands. IEEE Trans. Inf. Theory 2017, 63, 1146–1158.

- Zhang, J.; Lin, X.; Wang, X. Coded caching under arbitrary popularity distributions. IEEE Trans. Inf. Theory 2018, 64, 349–366.

- Pedarsani, R.; Maddah-Ali, M.A.; Niesen, U. Online coded caching. IEEE/ACM Trans. Netw. 2016, 24, 836–845.

- Daniel, A.M.; Yu, W. Optimization of heterogeneous coded caching. IEEE Trans. Inf. Theory 2020, 66, 1893–1919.

- Shariatpanahi, S.P.; Motahari, S.A.; Khalaj, B.H. Multi-server coded caching. IEEE Trans. Inf. Theory 2016, 62, 7253–7271.

- Zhang, J.; Elia, P. Fundamental limits of cache-aided wireless BC: Interplay of coded-caching and CSIT feedback. IEEE Trans. Inf. Theory 2017, 63, 3142–3160.

- Bidokhti, S.S.; Wigger, M.; Timo, R. Noisy broadcast networks with receiver caching. IEEE Trans. Inf. Theory 2018, 64, 6996–7016.

- Sengupta, A.; Tandon, R.; Simeone, O. Cache aided wireless networks: Tradeoffs between storage and latency. In Proceedings of the 2016 Annual Conference on Information Science and Systems (CISS), Princeton, NJ, USA, 15–18 March 2016; pp. 320–325.

- Tandon, R.; Simeone, O. Cloud-aided wireless networks with edge caching: Fundamental latency trade-offs in fog radio access networks. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Barcelona, Spain, 10–15 July 2016; pp. 2029–2033.

- Karamchandani, N.; Niesen, U.; Maddah-Ali, M.A.; Diggavi, S.N. Hierarchical coded caching. IEEE Trans. Inf. Theory 2016, 62, 3212–3229.

- Wang, K.; Wu, Y.; Chen, J.; Yin, H. Reduce transmission delay for caching-aided two-layer networks. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Paris, France, 7–12 July 2019; pp. 2019–2023.

- Wan, K.; Ji, M.; Piantanida, P.; Tuninetti, D. Caching in combination networks: Novel multicast message generation and delivery by leveraging the network topology. In Proceedings of the IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- Naderializadeh, N.; Maddah-Ali, M.A.; Avestimehr, A.S. Fundamental limits of cache-aided interference management. IEEE Trans. Inf. Theory 2017, 63, 3092–3107.

- Xu, F.; Tao, M.; Liu, K. Fundamental tradeoff between storage and latency in cache-aided wireless interference Networks. IEEE Trans. Inf. Theory 2017, 63, 7464–7491.

- Ji, M.; Tulino, A.M.; Llorca, J.; Caire, G. Order-optimal rate of caching and coded multicasting with random demands. IEEE Trans. Inf. Theory 2017, 63, 3923–3949.

- Ji, M.; Tulino, A.M.; Llorca, J.; Caire, G. Caching in combination networks. In Proceedings of the 2015 49th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 8–11 November 2015.

- Ravindrakumar, V.; Panda, P.; Karamchandani, N.; Prabhakaran, V. Fundamental limits of secretive coded caching. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Barcelona, Spain, 10–15 July 2016; pp. 425–429.

- Tang, L.; Ramamoorthy, A. Coded caching schemes with reduced subpacketization from linear block codes. IEEE Trans. Inf. Theory 2018, 64, 3099–3120.

- Cheng, M.; Li, J.; Tang, X.; Wei, R. Linear coded caching scheme for centralized networks. IEEE Trans. Inf. Theory 2021, 67, 1732–1742.

- Wan, K.; Caire, G. On coded caching with private demands. IEEE Trans. Inf. Theory 2021, 67, 358–372.

- Hassanzadeh, P.; Tulino, A.M.; Llorca, J.; Erkip, E. Rate-memory trade-off for caching and delivery of correlated sources. IEEE Trans. Inf. Theory 2020, 66, 2219–2251.

- Ji, M.; Caire, G.; Molisch, A.F. Fundamental limits of caching in wireless D2D networks. IEEE Trans. Inf. Theory 2016, 62, 849–869.

- Tebbi, A.; Sung, C.W. Coded caching in partially cooperative D2D communication networks. In Proceedings of the 9th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), Munich, Germany, 6–8 November 2017; pp. 148–153.

- Wang, J.; Cheng, M.; Yan, Q.; Tang, X. Placement delivery array design for coded caching scheme in D2D Networks. IEEE Trans. Commun. 2019, 67, 3388–3395.

- Malak, D.; Al-Shalash, M.; Andrews, J.G. Spatially correlated content caching for device-to-device communications. IEEE Trans. Wirel. Commun. 2018, 17, 56–70.

- Ibrahim, A.M.; Zewail, A.A.; Yener, A. Device-to-Device coded caching with distinct cache sizes. arXiv 2019, arXiv:1903.08142.

- Pedersen, J.; Amat, A.G.; Andriyanova, I.; Brännström, F. Optimizing MDS coded caching in wireless networks with device-to-device communication. IEEE Trans. Wirel. Commun. 2019, 18, 286–295.

- Chiang, M.; Zhang, T. Fog and IoT: An overview of research opportunities. IEEE Internet Things J. 2016, 3, 854–864.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).