Abstract

In statistical inference, the information-theoretic performance limits can often be expressed in terms of a statistical divergence between the underlying statistical models (e.g., in binary hypothesis testing, the error probability is related to the total variation distance between the statistical models). As the data dimension grows, computing the statistics involved in decision-making and the attendant performance limits (divergence measures) faces complexity and stability challenges. Dimensionality reduction addresses these challenges at the expense of compromising the performance (the divergence reduces by the data-processing inequality). This paper considers linear dimensionality reduction such that the divergence between the models is maximally preserved. Specifically, this paper focuses on Gaussian models, for which we investigate discriminant analysis under five f-divergence measures (Kullback–Leibler, symmetrized Kullback–Leibler, Hellinger, total variation, and χ²). We characterize the optimal design of the linear transformation of the data onto a lower-dimensional subspace for zero-mean Gaussian models and employ numerical algorithms to find the design for general Gaussian models with non-zero means. There are two key observations for zero-mean Gaussian models. First, projections are not necessarily along the largest modes of the covariance matrix of the data, and, in some situations, they can even be along the smallest modes. Second, under specific regimes, the optimal design of subspace projection is identical under all the f-divergence measures considered, rendering a degree of universality to the design, independent of the inference problem of interest.

1. Introduction

1.1. Motivation

Consider a simple binary hypothesis testing problem in which we observe an n-dimensional sample X and aim to discern the underlying model according to:

The optimal decision rule (in the Neyman-Pearson sense) involves computing the likelihood ratio, and the performance limit (sum of type I and type II errors) is related to the total variation distance between the two models. We emphasize that our focus is on the settings in which the n elements of X are not statistically independent, in which case the likelihood ratio cannot be decomposed into the product of the coordinate-level likelihood ratios. One of the key practical obstacles to solving such problems pertains to the computational cost of finding and performing the statistical tests. This creates a gap between the performance that is information-theoretically viable (unbounded complexity) and the performance possible under bounded computational complexity [1,2].

Dimensionality reduction techniques have become an integral part of statistical analysis in high dimensions [3,4,5,6]. In particular, linear dimensionality reduction methods have been developed and used for over a century for various reasons, such as their low computational complexity and simple geometric interpretation, as well as for a multitude of applications, such as data compression, storage, and visualization, to name only a few. These methods linearly map the high-dimensional data to lower dimensions while ensuring that the desired features of the data are preserved. There exist two broad sets of approaches to linear dimensionality reduction in one dataset X, which we review next.

1.2. Related Literature

(1) Feature extraction: In one set of approaches, the objective is to select and extract informative and non-redundant features in the dataset X. These approaches are generally unsupervised. Widely used examples are principal component analysis (PCA) and its variations [7,8,9], multidimensional scaling (MDS) [10,11,12,13], and sufficient dimensionality reduction (SDR) [14]. The objective of PCA is to retain as much variation in the data as possible in a lower dimension by minimizing the reconstruction error. In contrast, MDS aims to maximize an aggregate scatter metric of the projection. Finally, the objective of SDR is to design an orthogonal mapping of the data that makes the data X and the responses conditionally independent (given the projected data). There exist extensive variations of the three approaches, and we refer the reader to Reference [6] for more discussion.

(2) Class separation: In another set of approaches, the objective is to perform classification in the lower-dimensional space. These approaches are supervised. Depending on the problem formulation and the underlying assumptions, the resulting decision boundaries between the models can be linear or non-linear. One approach pertinent to this paper’s scope is discriminant analysis (DA), which leverages the distinction between given models and designs a mapping such that its lower-dimensional output exhibits maximum separation across different models [15,16,17,18,19,20]. In general, this approach generates two matrices: the within-class and between-class scatter matrices. The within-class scatter matrix shows the scatter of the samples around their respective class means, whereas the between-class scatter matrix captures the scatter of the samples around the mixture mean of all the models. Subsequently, a univariate function of these matrices is formed such that it increases when the between-class scatter becomes larger or when the within-class scatter becomes smaller. Examples of such functions of the between-class and within-class matrices are classification indices such as the ratio of their determinants, the difference of their determinants, and the ratio of their traces [17]. These approaches focus on reducing the dimension to one and maximizing the separability between the two classes. There exist, however, studies that consider reducing to dimensions higher than one and separation across more than two classes. Finally, depending on the structure of the class-conditional densities, the resulting shape of the decision boundaries gives rise to linear and quadratic DA.

An f-divergence between a pair of probability measures quantifies the dissimilarity between them. Shannon [21] introduced the mutual information as a divergence measure, which was later studied comprehensively by Kullback and Leibler [22] and Kolmogorov [23], establishing the importance of such measures in information theory, probability theory, and related disciplines. The family of f-divergences, independently introduced by Csiszár [24], Ali and Silvey [25], and Morimoto [26], generalizes the Kullback–Leibler divergence and enables characterizing the information-theoretic performance limits of a wide range of inference, learning, source coding, and channel coding problems. For instance, References [27,28,29,30] consider their application to various statistical decision-making problems [31,32,33,34]. More recent developments on the properties of f-divergence measures can be found in References [31,35,36,37].

1.3. Contributions

The contribution of this paper has two main distinctions from the existing literature on DA. First, DA generally focuses on the classification problem for determining the underlying model of the data. Secondly, motivated by the complexities of finding the optimal decision rules for classification (e.g., density estimation), the existing criteria used for separation are selected heuristically. In this paper, we study this problem by referring to the family of f-divergences as measures of the distinction between a pair of probability distributions. Such a choice has three main features: (i) it enables designing linear mappings for a wider range of inference problems (beyond classification); (ii) it provides the designs that are optimal for the inference problem at hand; and (iii) it enables characterizing the information-theoretic performance limits after linear mapping. Our analyses are focused on Gaussian models. Even though we observe that the design of the linear mapping has differences under different f-divergence measures, we have two main observations in the case of zero-mean Gaussian models: (i) the optimal design of the linear mapping is not necessarily along the most dominant components of the data matrix; and (ii) in certain regimes, irrespective of the choice of the f-divergence measure, the design of the linear map that retains the maximal divergence between the two models is robust. In such cases, this makes the optimal design of the linear map independent of the inference problem at hand rendering a degree of universality (in the considered space of the Gaussian probability measures).

The remainder of the paper is organized as follows. Section 2 provides the linear dimensionality reduction model, and it provides an overview of the f-divergence measures considered in this paper. Section 3 formulates the problem, and it helps to facilitate the mathematical analysis in subsequent sections. In Section 4, we provide a motivating operational interpretation for each f-divergence measure and then characterize an optimal design of the linear mapping for zero-mean Gaussian models. Section 5 considers numerical simulations for inference problems associated with the f-divergence measure of interest for zero-mean Gaussian models. Section 6 generalizes the theory to non-zero mean Gaussian models and discusses numerical algorithms that help characterize the design of the linear map, and Section 7 concludes the paper. A list of abbreviations used in this paper is provided on page 22.

2. Preliminaries

Consider a pair of n-dimensional Gaussian models:

where and are two distinct mean vectors and covariance matrices, respectively, and and denote their associated probability measures. The nature selects one model and generates a random variable . We perform linear dimensionality reduction on X via matrix , where , rendering

After linear mapping, the two possible distributions of Y induced by matrix are denoted by and , where

Motivated by inference problems that we discuss in Section 3, our objective is to design the linear mapping parameterized by matrix that ensures that the two possible distributions of Y, i.e., and , are maximally distinguishable. That is, to design as a function of the statistical models (i.e., , , and ) such that relevant notions of f-divergences between and are maximized. We use a number of f-divergence measures for capturing the distinction between and , each with a distinct operational meaning under specific inference problems. For this purpose, we denote the f-divergence of from by , where

We use the shorthand for the canonical notation to emphasize the dependence on and for notational simplicity. denotes the expectation with respect to , and f is a convex function that is strictly convex at 1 and satisfies f(1) = 0. Strict convexity at 1 ensures that the f-divergence between a pair of probability measures is zero if and only if the probability measures are identical. Given the linear dimensionality reduction model in (3), the objective is to solve

for the following choices of the f-divergence measures.

- Kullback–Leibler (KL) divergence for : We also denote the KL divergence from to by .

- Symmetric KL divergence for :

- Squared Hellinger distance for :

- Total variation distance for :

- χ²-divergence for : We also denote the χ²-divergence from to by .
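To make the five measures concrete, the following sketch evaluates each of them on a pair of discrete distributions. It assumes the standard generator functions f(t) = t log t, (t − 1) log t, (1 − √t)², ½|t − 1|, and (t − 1)², together with the convention D_f = Σ p_i f(q_i / p_i). The normalization constants and the direction of the ratio are assumptions and may differ from those in (7)–(11); the names `GENERATORS` and `f_divergence` are illustrative only.

```python
import math

# Standard generator functions (assumed normalizations).
GENERATORS = {
    "kl": lambda t: t * math.log(t),
    "symmetric_kl": lambda t: (t - 1.0) * math.log(t),
    "squared_hellinger": lambda t: (1.0 - math.sqrt(t)) ** 2,
    "total_variation": lambda t: 0.5 * abs(t - 1.0),
    "chi_squared": lambda t: (t - 1.0) ** 2,
}


def f_divergence(name, p, q):
    """Return sum_i p_i * f(q_i / p_i) for strictly positive pmfs p and q."""
    f = GENERATORS[name]
    return sum(pi * f(qi / pi) for pi, qi in zip(p, q))


p = [0.5, 0.5]
q = [0.9, 0.1]
for name in GENERATORS:
    # Second value is the divergence of p from itself, which is zero since f(1) = 0.
    print(name, f_divergence(name, p, q), f_divergence(name, p, p))
```

Every generator vanishes at t = 1, so each measure is zero when the two distributions coincide. Under this convention, the symmetric KL value equals the sum of the two directed KL values.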

3. Problem Formulation

In this section, without loss of generality, we focus on the setting where one of the covariance matrices is the identity matrix, and the other one has a covariance matrix in order to avoid complex representations. One key observation is that the design of under different measures has strong similarities. We first note that, by defining , , and , designing for maximally distinguishing

is equivalent to designing for maximally distinguishing

Hence, without loss of generality, we focus on the setting where , , and . Next, we show that determining an optimal design for can be confined to the class of semi-orthogonal matrices.

Theorem 1.

For every , there exists a semi-orthogonal matrix such that .

Proof.

See Appendix A. □

This observation indicates that we can reduce the unconstrained problem in (6) to the following constrained problem:

We show that the design of in the case of , under the considered f-divergence measures, directly relates to analyzing the eigenspace of matrix . For this purpose, we denote the non-negative eigenvalues of ordered in the descending order by , where for an integer m we have defined . For an arbitrary permutation function , we denote the permutation of with respect to by . We also denote the eigenvalues of ordered in the descending order by . Throughout the analysis, we frequently use Poincaré separation theorem [38] for finding the row space of matrix with respect to the eigenvalues of .

Theorem 2

(Poincaré Separation Theorem). Let Σ be a real symmetric matrix and be a semi-orthogonal matrix. The eigenvalues of Σ denoted by (sorted in the descending order) and the eigenvalues of denoted by (sorted in the descending order) satisfy
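In its standard form, the interlacing property reads λ_{n−r+i} ≤ γ_i ≤ λ_i for i = 1, …, r, where λ_i and γ_i are used here as illustrative names for the sorted eigenvalues of Σ and of the compressed matrix. The sketch below checks this numerically for n = 3 and r = 2. It assumes, without loss of generality, a diagonal Σ, and uses the closed-form eigenvalues of a 2×2 symmetric matrix so that only the standard library is needed. All function names are hypothetical.

```python
import math
import random


def semi_orthogonal_2x3(rng):
    """Build a 2x3 matrix with orthonormal rows via Gram-Schmidt."""
    u = [rng.gauss(0, 1) for _ in range(3)]
    v = [rng.gauss(0, 1) for _ in range(3)]
    nu = math.sqrt(sum(x * x for x in u))
    u = [x / nu for x in u]
    dot = sum(a * b for a, b in zip(u, v))
    v = [b - dot * a for a, b in zip(u, v)]
    nv = math.sqrt(sum(x * x for x in v))
    v = [x / nv for x in v]
    return [u, v]


def compressed_eigenvalues(A, lam):
    """Eigenvalues (descending) of A diag(lam) A^T for a 2x3 matrix A."""
    b11 = sum(l * a * a for l, a in zip(lam, A[0]))
    b22 = sum(l * a * a for l, a in zip(lam, A[1]))
    b12 = sum(l * a * b for l, a, b in zip(lam, A[0], A[1]))
    mean = 0.5 * (b11 + b22)
    radius = math.sqrt((0.5 * (b11 - b22)) ** 2 + b12 ** 2)
    return [mean + radius, mean - radius]


def interlaces(lam, gam, tol=1e-9):
    """Check lam[n-r+i] <= gam[i] <= lam[i] (0-based indices)."""
    n, r = len(lam), len(gam)
    return all(lam[n - r + i] - tol <= gam[i] <= lam[i] + tol for i in range(r))


rng = random.Random(0)
lam = [3.0, 1.5, 0.2]  # eigenvalues of Sigma, sorted in descending order
checks = [
    interlaces(lam, compressed_eigenvalues(semi_orthogonal_2x3(rng), lam))
    for _ in range(1000)
]
print(all(checks))
```

The bounds are attained when the rows of the semi-orthogonal matrix are eigenvectors of Σ, which is why the designs in later sections select subsets of eigenvectors.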

Finally, we define the following functions, which we will refer to frequently throughout the paper:

In the next sections, we analyze the design of under different f-divergence measures. In particular, in Section 4 and Section 5, we focus on zero-mean Gaussian models for and where we provide an operational interpretation of the measure in the dichotomous model in (4). Subsequently, we discuss the generalization to non-zero mean Gaussian models in Section 6.

4. Main Results for Zero-Mean Gaussian Models

In this section, we analyze the problem defined in (14) for each of the f-divergence measures separately. Specifically, for each case, we briefly provide an inference problem as a motivating example, in the context of which we relate the optimal performance limit of that inference problem to the f-divergence of interest. These analyses are provided in Section 4.1, Section 4.2, Section 4.3, Section 4.4 and Section 4.5. Subsequently, we provide the main results on the optimal design of the linear mapping matrix in Section 4.6.

4.1. Kullback–Leibler Divergence

4.1.1. Motivation

The KL divergence, being the expected value of the log-likelihood ratio, captures, at least partially, the performance of a wide range of inference problems. One specific problem whose performance is completely captured by is the quickest change-point detection. Consider an observation process (time-series) in which the observations are generated by a distribution with probability measure specified in (2). This distribution changes to at an unknown (random or deterministic) time , i.e.,

Change-point detection algorithms sample the observation process sequentially and aim to detect the change point with the minimal delay after it occurs subject to a false alarm constraint. Hence, the two key figures of merit capturing the performance of a sequential change-point detection algorithm are the average detection delay () and the rate of false alarms. Whether the change-point is random or deterministic gives rise to two broad classes of quickest change-point detection problems, namely the Bayesian setting ( is random) and minimax setting ( is deterministic). Irrespective of their discrepancies in settings and the nature of performance guarantees, the for the (asymptotically) optimal algorithms are in the form [39]:

Hence, after the linear mapping induced by matrix , for the , we have

where and are constants specified by the false alarm constraints. Clearly, the design of that minimizes the will be maximizing the disparity between the pre- and post-change distributions and , respectively.

4.1.2. Connection between and

By noting that is a semi-orthogonal matrix and recalling that the eigenvalues of are denoted by , simple algebraic manipulations simplify to:

By setting , and leveraging Theorem 2, an optimal design for that solves (14) can be found as the solution to:

where we have defined

Likewise, finding the optimal design for that optimizes when can be found by replacing by in (23). In either case, the optimal design of is constructed by choosing r eigenvectors of as the rows of . The results and observations are formalized in Section 4.6.
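As a minimal sketch of how the KL divergence depends only on the eigenvalues after projection, the functions below use the standard closed form for zero-mean Gaussians when one covariance is the identity. The name `gammas` (eigenvalues of the projected covariance) and both function names are assumptions made for illustration, and which direction corresponds to (22) is not asserted here.

```python
import math


def kl_q_from_p(gammas):
    """D_KL(N(0, diag(gammas)) || N(0, I)) = 0.5 * sum(g - 1 - log g)."""
    return 0.5 * sum(g - 1.0 - math.log(g) for g in gammas)


def kl_p_from_q(gammas):
    """D_KL(N(0, I) || N(0, diag(gammas))) = 0.5 * sum(1/g - 1 + log g)."""
    return 0.5 * sum(1.0 / g - 1.0 + math.log(g) for g in gammas)


gammas = [4.0, 0.25]
print(kl_q_from_p(gammas), kl_p_from_q(gammas))
```

The reverse direction is obtained from the forward one by replacing each eigenvalue with its reciprocal, and each summand is a convex function of the eigenvalue that vanishes at 1.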

4.2. Symmetric KL Divergence

4.2.1. Motivation

The KL divergence discussed in Section 4.1 is an asymmetric measure of separation between two probability measures. It is symmetrized by adding the two directed divergences taken in opposite directions. The symmetric KL divergence has applications in model selection problems in which the model selection criterion is based on a measure of disparity between the true model and the approximating models. As shown in Reference [40], using the symmetric KL divergence outperforms the individual directed KL divergences since it better reflects the risks associated with underfitting and overfitting of the models.

4.2.2. Connection between and

For a given , the symmetric KL divergence of interest specified in (8) is given by

By setting , and leveraging Theorem 2, the problem of finding an optimal design for that solves (14) can be found as the solution to:

where we have defined

4.3. Squared Hellinger Distance

4.3.1. Motivation

Squared Hellinger distance facilitates analysis in high dimensions, especially when other measures fail to take closed-form expressions. We will discuss an important instance of this in the next subsection in the analysis of . Squared Hellinger distance is symmetric, and it is confined in the range .

4.3.2. Connection between and

For a given matrix , we have the following closed-form expression:

By setting , and leveraging Theorem 2, the problem of finding an optimal design for that solves (14) can be found as the solution to:

where we have defined

4.4. Total Variation Distance

4.4.1. Motivation

The total variation distance appears as the key performance metric in binary hypothesis testing and in high-dimensional inference, e.g., Le Cam’s method for the binary quantization and testing of the individual dimensions (which is in essence binary hypothesis testing). In particular, for the simple binary hypothesis testing model in (65), the minimum total probability of error (sum of type-I and type-II error probabilities) is related to the total variation . Specifically, for a decision rule , the following holds:

The total variation between two Gaussian distributions does not have a closed-form expression. Hence, unlike the other settings, an optimal solution to (6) in this context cannot be obtained analytically. Alternatively, in order to gain intuition into the structure of a near optimal matrix , we design such that it optimizes known bounds on . In particular, we use two sets of bounds on . One set is due to bounding it via the Hellinger distance, and another set is due to a recent study that established upper and lower bounds that are identical up to a constant factor [41].

4.4.2. Connection between and

(1) Bounding by Hellinger Distance: The total variation distance can be bounded by the Hellinger distance according to

It can be readily verified that these bounds are monotonically increasing with in the interval . Hence, they are maximized simultaneously by maximizing the squared Hellinger distance as discussed in Section 4.3. We refer to this bound as the Hellinger bound.
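The following sketch checks bounds of this type on discrete distributions. It assumes the unnormalized convention in which the squared Hellinger distance H² takes values in [0, 2], for which the standard inequalities read ½H² ≤ d_TV ≤ H·√(1 − H²/4). The exact constants used in (31) may differ, and all names are illustrative.

```python
import math
import random


def total_variation(p, q):
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def squared_hellinger(p, q):
    # Unnormalized convention: values lie in [0, 2].
    return sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))


def hellinger_bounds(h2):
    """Lower and upper bounds on total variation implied by H^2 = h2."""
    lower = 0.5 * h2
    upper = math.sqrt(max(h2 * (1.0 - 0.25 * h2), 0.0))
    return lower, upper


def random_pmf(rng, k):
    """Draw a strictly positive probability vector of length k."""
    w = [rng.random() + 1e-3 for _ in range(k)]
    s = sum(w)
    return [x / s for x in w]


rng = random.Random(1)
for _ in range(5):
    p, q = random_pmf(rng, 4), random_pmf(rng, 4)
    lower, upper = hellinger_bounds(squared_hellinger(p, q))
    print(round(lower, 4), round(total_variation(p, q), 4), round(upper, 4))
```

Both bounds are non-decreasing in H² on [0, 2], so a design that increases the squared Hellinger distance raises the lower and upper bounds together.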

(2) Matching Bounds up to a Constant: The second set of bounds that we used are provided in Reference [41]. These bounds relate the total variation between two Gaussian models to the Frobenius norm (FB) of a matrix related to their covariance matrices. Specifically, these FB-based bounds on the total variation are given by

where we have defined

Since the lower and upper bounds on are identical up to a constant, they will be maximized by the same design of .

4.5. χ²-Divergence

4.5.1. Motivation

The χ²-divergence appears in a wide range of statistical estimation problems for the purpose of finding a lower bound on the estimation noise variance. For instance, consider the canonical problem of estimating a latent variable from the observed data X, and denote two candidate estimates by and . Define and as the probability measures of and , respectively. According to the Hammersley-Chapman-Robbins (HCR) bound on the quadratic loss function, for any estimator , we have

which, for unbiased estimators p and q, simplifies to the Cramér-Rao lower bound

depending on and through their χ²-divergence. Besides its applications to estimation problems, the χ²-divergence is easier to compute than some of the other f-divergence measures (e.g., total variation). Specifically, for product distributions, the χ²-divergence tensorizes and can be expressed in terms of its one-dimensional components, which are easier to compute than the KL divergence and the total variation distance. Hence, a combination of bounding other measures with the χ²-divergence and then analyzing it appears in a wide range of inference problems.

4.5.2. Connection between and

By setting , for a given matrix , from (11), we have the following closed-form expression:

where we have defined

As we show in Appendix C, for to exist (i.e., be finite), all the eigenvalues should fall in the interval . Subsequently, finding the optimal design for that optimizes when can be done by replacing in (38) by , which is given by

Based on this, and by following a similar line of argument as in the case of the KL divergence, designing an optimal reduces to identifying a subset of the eigenvalues of and assigning their associated eigenvectors as the rows of matrix . These observations are formalized in Section 4.6.
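The product structure and the finiteness condition can be illustrated with a short sketch. It assumes P = N(0, I) and Q = N(0, diag(gammas)) in the reduced space and uses the one-dimensional identity E_P[(dQ/dP)²] = 1/√(γ(2 − γ)), which is finite only for 0 < γ < 2. Whether this direction or the reverse one corresponds to (38) is an assumption, and the function name is hypothetical.

```python
import math


def chi_squared_zero_mean(gammas):
    """chi^2 divergence E_P[(dQ/dP - 1)^2] for P = N(0, I), Q = N(0, diag(gammas)).

    The second moment E_P[(dQ/dP)^2] factorizes over coordinates as
    prod_i 1 / sqrt(g_i * (2 - g_i)) and is finite only when 0 < g_i < 2.
    """
    if any(g <= 0.0 or g >= 2.0 for g in gammas):
        return math.inf
    second_moment = 1.0
    for g in gammas:
        second_moment /= math.sqrt(g * (2.0 - g))
    return second_moment - 1.0


print(chi_squared_zero_mean([0.5, 1.5]))
print(chi_squared_zero_mean([0.5, 2.5]))  # outside the admissible interval
```

Replacing each eigenvalue by its reciprocal gives the reverse direction, whose per-coordinate factor γ/√(2γ − 1) is finite for γ > 1/2.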

4.6. Main Results

In this section, we provide analytical closed-form solutions to design optimal matrices for the following f-divergence measures: the KL divergence, the symmetric KL divergence, the squared Hellinger distance, and the χ²-divergence. The total variation measure does not admit a closed form for Gaussian models. In this case, we provide a design for that optimizes the bound we have provided for in Section 4.4. Due to the structural similarities of the results, we group and treat , , and in Theorem 3. Similarly, we group and treat and in Theorem 4.

Theorem 3

(, , ). For a given function , define the permutations:

Then, for and functions :

- For maximizing , set and select the eigenvalues of as

- Row of matrix is the eigenvector of Σ associated with the eigenvalue .

Proof.

See Appendix B. □

By further leveraging the structures of functions , and , we can simplify approaches for designing the matrix . Specifically, note that the functions are all strictly convex functions taking their global minima at . Based on this, we have the following observations.

Corollary 1

(, , ). For maximizing , when , we have for all , and the rows of are eigenvectors of Σ associated with its r largest eigenvalues, i.e., .

Corollary 2

(, , ). For maximizing , when , we have for all , and the rows of are eigenvectors of Σ associated with its r smallest eigenvalues, i.e., .

Remark 1.

In order to maximize when , finding the best permutation of eigenvalues involves sorting all the n eigenvalues ’s and subsequently performing r comparisons as illustrated in Algorithm 1. This amounts to time complexity instead of time complexity involved in determining the design for in the case of Corollaries 1 and 2, which require finding the r extreme eigenvalues in determining the design for .
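The sort-then-compare procedure described in this remark can be sketched as a two-pointer selection. The sketch assumes that the per-eigenvalue objective h is convex with its minimum at 1, and uses h(λ) = ½(λ − 1 − log λ) as an assumed example. The names `h_kl` and `select_eigenvalues` are hypothetical, and this is not a transcription of Algorithm 1.

```python
import math


def h_kl(lam):
    """Assumed per-eigenvalue KL contribution: 0.5 * (lam - 1 - log lam)."""
    return 0.5 * (lam - 1.0 - math.log(lam))


def select_eigenvalues(eigenvalues, r, h):
    """Pick r eigenvalues maximizing sum(h) for h convex with its minimum at 1.

    Sort once, then compare the largest and smallest remaining eigenvalue
    r times, taking whichever has the larger h value.
    """
    lam = sorted(eigenvalues, reverse=True)
    lo, hi = 0, len(lam) - 1  # lo: largest remaining, hi: smallest remaining
    chosen = []
    for _ in range(r):
        if h(lam[lo]) >= h(lam[hi]):
            chosen.append(lam[lo])
            lo += 1
        else:
            chosen.append(lam[hi])
            hi -= 1
    return chosen


print(select_eigenvalues([3.0, 1.2, 0.9, 0.1], 2, h_kl))  # mixed regime
print(select_eigenvalues([5.0, 3.0, 2.0, 1.5], 2, h_kl))  # all eigenvalues >= 1
print(select_eigenvalues([0.9, 0.5, 0.2, 0.1], 2, h_kl))  # all eigenvalues <= 1
```

When all eigenvalues lie on one side of 1, the comparison always resolves the same way, so the selection reduces to the r largest or the r smallest eigenvalues, in line with Corollaries 1 and 2.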

Remark 2.

The optimal design of often does not involve being aligned with the largest eigenvalues of the covariance matrix Σ, which is in contrast to some of the key approaches to linear dimensionality reduction that generally perform linear mapping along the eigenvectors associated with the largest eigenvalues of the covariance matrix. When the eigenvalues of Σ are all smaller than 1, in particular, will be designed by choosing eigenvectors associated with the smallest eigenvalues of Σ in order to preserve the largest separability.

Next, we provide the counterpart results for the squared Hellinger distance and the χ²-divergence. Their major distinction from the previous three measures is that, for these two, can be decomposed into a product of individual functions of the eigenvalues . Specifically, we provide the counterparts of Theorem 3 and Corollaries 1 and 2 for and .

Theorem 4

(, ). For a given function , define the permutations:

Then, for and functions :

- For maximizing , set and select the eigenvalues of as

- Row of matrix is the eigenvector of Σ associated with the eigenvalue .

Proof.

See Appendix C. □

Next, note that is a strictly convex function taking its global minimum at . Furthermore, for are strictly convex over and take their global minimum at .

Corollary 3

(, ). For maximizing , when , we have for all , and the rows of are eigenvectors of Σ associated with its r largest eigenvalues, i.e., .

Corollary 4

(, ). For maximizing , when , we have for all , and the rows of are eigenvectors of Σ associated with its r smallest eigenvalues, i.e., .

| Algorithm 1: Optimal Permutation When |

|

Finally, we remark that, unlike the other measures, total variation does not admit a closed-form, and we used two sets of tractable bounds to analyze this case of total variations. By comparing the design of based on different bounds, we have the following observation.

Remark 3.

We note that both sets of bounds lead to the same design of when either or . Otherwise, each will be selecting a different set of the eigenvectors of Σ to construct according to the functions

5. Zero-Mean Gaussian Models–Simulations

5.1. KL Divergence

In this section, we show gains of the above analysis for the KL divergence measure through simulations on a change-point detection problem. We focus on the minimax setting in which the change-point is deterministic. The objective is to detect a change in the stochastic process with minimal delay after the change in the probability measure occurs at and define as the time that we can form a confident decision. A canonical model to quantify the decision delay is the conditional average detection delay () due to Pollak [42]

where is the expectation with respect to the probability distribution when the change happens at time . The objective of this formulation is to optimize the decision delay for the worst-case realization of the random change-point (that is, the change-point realization that leads to the maximum decision delay), while the constraints on the false alarm rate are satisfied. In this formulation, this worst-case realization is , in which case all the data points are generated from the post-change distribution. In the minimax setting, a reasonable measure of false alarms is the mean-time to false alarm, or its reciprocal, which is the false alarm rate () defined as

where is the expectation with respect to the distribution when a change never occurs, i.e., . A standard approach to balance the trade-off between decision delay and false alarm rates involves solving [42]

where controls the rate of false alarms. For the quickest change-point detection formulation in (48), the popular cumulative sum (CuSum) test generates the optimal solutions, involving computing the following test statistic:

Computing follows a convenient recursion given by

where . The CuSum statistic declares a change at a stopping time given by

where C is chosen such that the constraint on in (48) is satisfied.
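A minimal simulation sketch of the CuSum test follows. It assumes that the reduced observations are expressed in the eigenbasis, so the pre-change model is N(0, I) and the post-change model is N(0, diag(gammas)), and it uses the common recursion W[t] = max(W[t−1] + ℓ_t, 0) with W[0] = 0, where ℓ_t is the log-likelihood ratio of sample t. The recursion form, the threshold value, and all names are assumptions made for illustration.

```python
import math
import random


def log_likelihood_ratio(y, gammas):
    """log q(y)/p(y) for p = N(0, I) and q = N(0, diag(gammas))."""
    return 0.5 * sum(
        yi * yi * (1.0 - 1.0 / g) - math.log(g) for yi, g in zip(y, gammas)
    )


def cusum_stopping_time(samples, gammas, threshold):
    """Return the first t (1-based) with W[t] >= threshold, or None."""
    w = 0.0
    for t, y in enumerate(samples, start=1):
        w = max(w + log_likelihood_ratio(y, gammas), 0.0)
        if w >= threshold:
            return t
    return None


def average_delay(gammas, threshold, runs=200, horizon=10_000, seed=0):
    """Monte Carlo delay when every sample is post-change (change at t = 1)."""
    rng = random.Random(seed)
    total = 0
    for _ in range(runs):
        stream = (
            [rng.gauss(0.0, math.sqrt(g)) for g in gammas] for _ in range(horizon)
        )
        t = cusum_stopping_time(stream, gammas, threshold)
        total += horizon if t is None else t  # cap runs that never cross
    return total / runs


print(average_delay([4.0, 0.25], threshold=5.0))
```

Under the post-change model, the expected value of ℓ_t equals the KL divergence ½Σ(γ_i − 1 − log γ_i), so projections that retain a larger divergence lead to a faster-growing statistic and a shorter delay.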

In this setting, we consider two zero-mean Gaussian models with the following pre- and post-linear dimensionality reduction structures:

where the covariance matrix is generated randomly, and its eigenvalues are sampled from a uniform distribution. In particular, for the original data dimension n, eigenvalues are sampled such that , and the remaining eigenvalues are sampled such that . We note that this is done since the objective function lies in the same range for the eigenvalues within the range and . In order to consider the worst case detection delay, we set and generate stochastic observations according to the model described in (52) that follows the change-point detection model in (19). For every random realization of covariance matrix , we run the CuSum statistic (50), where we generate according to the following two schemes:

(1) Largest eigen modes: In this scheme, the linear map is designed such that its rows are eigenvectors associated with the r largest eigenvalues of .

(2) Optimal design: In this scheme, the linear map is designed such that its rows are eigenvectors associated with r eigenvalues of that maximize according to Theorem 3.

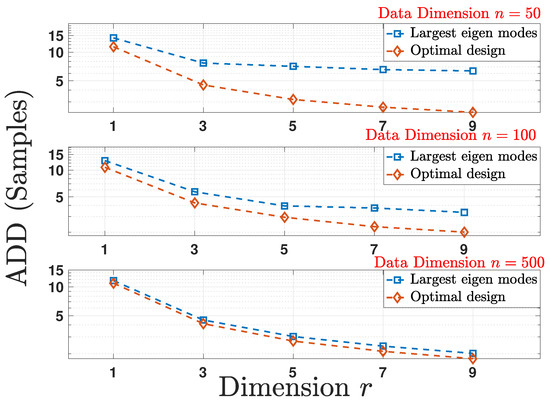

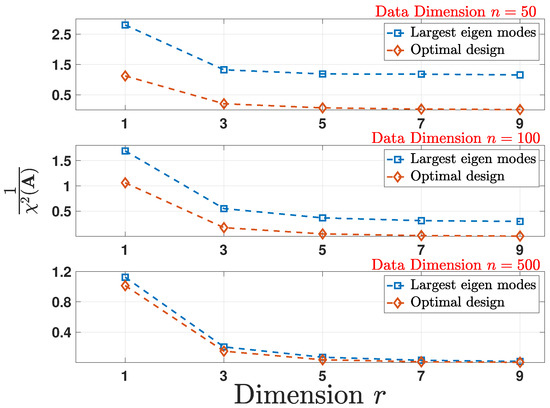

In order to evaluate and compare the performance of the two schemes, we compute the obtained by running a Monte-Carlo simulation over 5000 random realizations of the stochastic process following the change-point detection model in (19) for every random realization of and for each reduced dimension . The detection delays obtained are then averaged again over 100 random realizations of covariance matrices for each reduced dimension r. Figure 1 shows the plot for versus r for multiple initial data dimension n and for a fixed . Owing to the dependence on given in (21), the delay associated with the optimal linear mapping in Theorem 3 achieves better performance.

Figure 1.

Comparison of the average detection delay () under the optimal design and largest eigen modes schemes for multiple reduced data dimensions r as a function of original data dimension n for a fixed false alarm rate () which is equal to .

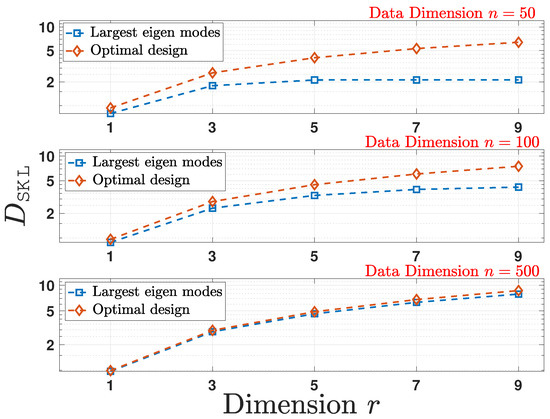

5.2. Symmetric KL Divergence

In this section, we show the gains of the analysis by numerically computing . We follow the pre- and post-linear dimensionality reduction structures given in (52), where the covariance matrix is randomly generated following the setup used in Section 5.1. As plotted in Figure 2, by choosing the design scheme for according to Theorem 3, the optimal design outperforms other schemes.

Figure 2.

Comparison of the empirical average computed for the optimal design and largest eigen modes schemes for multiple reduced data dimensions r as a function of original data dimension n.

5.3. Squared Hellinger Distance

We consider a Bayesian hypothesis testing problem given class a priori parameters , and Gaussian class conditional densities for the linear dimensionality reduction model in (52). Without loss of generality, we assume a 0–1 loss function associated with misclassification for the hypothesis test. In order to quantify the performance of the Bayes decision rule, it is imperative to compute the associated probability of error, also known as the Bayes error, which we denote by . Since, in general, computing for the optimal decision rule for multivariate Gaussian conditional densities is intractable, numerous techniques have been devised to bound . Owing to its simplicity, one of the most commonly employed metrics is the Bhattacharyya coefficient given by

The metric in (53) facilitates upper bounding the error probability as

which is widely referred to as the Bhattacharyya bound. Relevant to this study is that the squared Hellinger distance is related to the Bhattacharyya coefficient in (53) through

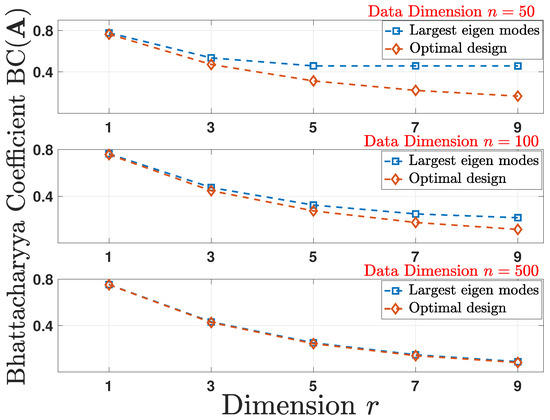

Hence, maximizing the Hellinger distance results in a tighter bound on from (54). To show the performance numerically, we compute the via (55). For the pre- and post-linear dimensionality reduction structures as given in (52), the covariance matrix is randomly generated following the setup used in Section 5.1. As plotted in Figure 3, by employing the design scheme according to Theorem 4, the optimal design results in a smaller and, hence, a tighter upper bound on in comparison to other schemes.
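The relation between the Bhattacharyya coefficient, the squared Hellinger distance, and the error bound can be sketched in terms of the eigenvalues of the projected covariance. The sketch assumes zero-mean models N(0, I) and N(0, diag(gammas)), the unnormalized convention H² = 2(1 − BC), and the standard form of the Bhattacharyya bound with priors π₀ and π₁. The function names are illustrative.

```python
import math


def bhattacharyya_coefficient(gammas):
    """BC between N(0, I) and N(0, diag(gammas)).

    Per coordinate, BC_i = sqrt(2 * sqrt(g) / (1 + g)), and BC is the product.
    """
    bc = 1.0
    for g in gammas:
        bc *= math.sqrt(2.0 * math.sqrt(g) / (1.0 + g))
    return bc


def squared_hellinger(gammas):
    # Unnormalized convention (an assumption): H^2 = 2 * (1 - BC).
    return 2.0 * (1.0 - bhattacharyya_coefficient(gammas))


def bayes_error_upper_bound(gammas, prior0=0.5):
    """Bhattacharyya bound: P_e <= sqrt(prior0 * prior1) * BC."""
    return math.sqrt(prior0 * (1.0 - prior0)) * bhattacharyya_coefficient(gammas)


for gammas in ([4.0, 3.0], [4.0, 0.1]):
    print(gammas, squared_hellinger(gammas), bayes_error_upper_bound(gammas))
```

Because the coefficient is a product of per-eigenvalue factors that equal 1 at γ = 1 and decrease as γ moves away from 1 in either direction, a very small eigenvalue can tighten the bound more than a moderately large one.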

Figure 3.

Comparison of the empirical average of the Bhattacharyya coefficient under optimal design and largest eigen modes schemes for multiple reduced data dimensions r as a function of original data dimension n.

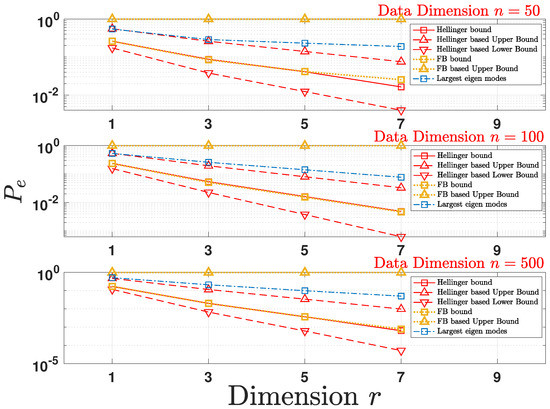

5.4. Total Variation Distance

Consider a binary hypothesis test with Gaussian class conditional densities following the model in (52) and equal class a priori probabilities, i.e., . We define as the cost associated with deciding in favor of when the true hypothesis is such that , and denote the densities associated with measures , by and , respectively. Without loss of generality, we assume a 0–1 loss function such that and . The optimal Bayes decision rule that minimizes the error probability is given by

Since the total variation distance cannot be computed in closed form, we numerically compute the error probability under the two bounds (Hellinger-based and FB-based) introduced in Section 4.4.2 to quantify the performance of the design of matrix for the underlying inference problem. The covariance matrix is randomly generated following the setup used in Section 5.1. As plotted in Figure 4, by optimizing the Hellinger-based bound according to Theorem 4 and optimizing the FB-based bound according to Theorem 3, the two design schemes achieve a smaller . We further observe that the FB-based bounds are loose in comparison to the Hellinger-based bounds. Therefore, we choose not to plot the lower bound on for the FB-based bounds in Figure 4.
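For orientation, the standard relations linking the total variation distance, the Bhattacharyya coefficient, and the error probability under equal priors can be written as follows. The symbols and the normalization (total variation distance in [0, 1], squared Hellinger distance equal to one minus the Bhattacharyya coefficient) are assumptions of this sketch; the constants used in Section 4.4.2 may be scaled differently.

```latex
% Assumed normalization: d_TV in [0,1] and H^2 = 1 - BC.
1 - \mathrm{BC} \;\le\; d_{\mathrm{TV}} \;\le\; \sqrt{1 - \mathrm{BC}^{2}},
\qquad
P_e = \tfrac{1}{2}\bigl(1 - d_{\mathrm{TV}}\bigr)
\;\;\Longrightarrow\;\;
\tfrac{1}{2}\Bigl(1 - \sqrt{1 - \mathrm{BC}^{2}}\Bigr) \;\le\; P_e \;\le\; \tfrac{1}{2}\,\mathrm{BC}.
```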

Figure 4.

Comparing the logarithm of the empirical average value for under the two bounds on (Hellinger-based and Frobenius norm (FB)-based) with the largest eigen modes scheme for multiple projected data dimensions r as a function of initial data dimension n.

5.5. -Divergence

In this section, we show the gains of the proposed analysis through numerical evaluations by numerically computing , to find a lower bound on the noise variance up to a constant. Following the pre- and post-linear dimensionality reduction structures given in (52), the covariance matrix is randomly generated following the setup used in Section 5.1. As shown in Figure 5, constructing the optimal design according to Theorem 4 achieves a tighter lower bound in comparison to the other scheme.

Figure 5.

Comparison of the lower bound on noise variance given by under the optimal and largest eigen modes schemes for multiple reduced data dimensions r as a function of original data dimension n.

6. General Gaussian Models

In the previous section, we focused on . When , optimizing each f-divergence measure under the semi-orthogonality constraint does not render closed-form expressions. Nevertheless, to provide some intuition, we present a numerical approach to the optimal design of , which might also enjoy some local optimality guarantees. To start, note that the feasible set of solutions given by , owing to the orthogonality constraints in , is often referred to as the Stiefel manifold. Therefore, solving requires designing algorithms that optimize the objective while preserving the manifold constraints during iterations.

We employ the method of Lagrange multipliers to formulate the Lagrangian function. By denoting the matrix of Lagrangian multipliers by , the Lagrangian function of problem (14) is given by

From the first order optimality condition, for any local maximizer of (14), there exists a Lagrange multiplier such that

where we denote the partial derivative with respect to by . In what follows, we iterate the design mapping using the gradient ascent algorithm in order to find a solution for . As discussed in the next subsection, this solution is guaranteed to be at least locally optimal.

6.1. Optimizing via Gradient Ascent

We use an iterative gradient ascent-based algorithm to find the local maximizer of such that . The gradient ascent update at any given iteration is given by

Note that, following this update, since the new point in (59) may not satisfy the semi-orthogonality constraint, i.e., , it is imperative to establish a relation between the multipliers and in every iteration k to ensure a constraint-preserving update scheme. In particular, to enforce the semi-orthogonality constraint on , we derive a relationship between the multipliers and the gradients in every iteration k. Following a similar line of analysis for gradient descent in Reference [43], this relationship is provided in Appendix E. More details on the analysis of the update scheme can be found in Reference [43], and a detailed discussion on the convergence guarantees of classical steepest descent update schemes adapted to semi-orthogonality constraints can be found in Reference [44].

In order to simplify and state the relationships, we define and subsequently find a relationship between and in every iteration k. This is obtained by right-multiplying (59) by and solving for that enforces the semi-orthogonality constraint on . To simplify the analysis, we take a finite Taylor series expansion of around and choose such that the error in enforcing the constraint is a good approximation of the gradient of the objective subject to . As derived in Appendix E, by simple algebraic manipulations, it can be shown that the matrices , and , for which the finite Taylor series expansion of is a good approximation of the constraint, are given by

Additionally, we note that, since finding the global maximum is not guaranteed, it is imperative to initialize close to the estimated maximum. In this regard, we leverage the structure of the objective function for each f-divergence measure as given in Appendix D. In particular, we observe that the objective of each f-divergence measure can be decomposed into two objectives: the first not involving (making this objective a convex problem as shown in Section 4), and the second objective a function of . Hence, leveraging the structure of the solution from Section 4, we initialize such that it maximizes the objective in the case of zero-mean Gaussian models. We further note that, while there are more sophisticated orthogonality constraint-preserving algorithms [45], we find that our method adopted from Reference [43] is sufficient for our purpose, as we show next through numerical simulations.
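To make the idea of a constraint-preserving ascent concrete, here is a minimal sketch. It is not the multiplier-based update of (59): it substitutes a plain gradient step followed by a QR retraction back onto the set of row-orthonormal matrices, with a simple step-halving rule. The objective (a Kullback–Leibler divergence between the projected Gaussian and a standard Gaussian), the random initialization, the eigenvalue range, and all names are assumptions chosen for illustration.

```python
import numpy as np

def kl_projected(A, mu, Sigma):
    """KL( N(A mu, A Sigma A^T) || N(0, I_r) ) for a row-orthonormal A (r x n)."""
    S = A @ Sigma @ A.T
    m = A @ mu
    _, logdet = np.linalg.slogdet(S)
    return 0.5 * (np.trace(S) + m @ m - A.shape[0] - logdet)

def kl_gradient(A, mu, Sigma):
    """Euclidean gradient of kl_projected with respect to A."""
    S = A @ Sigma @ A.T
    return A @ Sigma + np.outer(A @ mu, mu) - np.linalg.solve(S, A @ Sigma)

def retract(M):
    """Map an r x n matrix to one with orthonormal rows via a QR factorization."""
    Q, _ = np.linalg.qr(M.T)   # Q is n x r with orthonormal columns
    return Q.T

def stiefel_gradient_ascent(A0, mu, Sigma, step=0.1, iters=200):
    A = retract(A0)
    value = kl_projected(A, mu, Sigma)
    for _ in range(iters):
        candidate = retract(A + step * kl_gradient(A, mu, Sigma))
        cand_value = kl_projected(candidate, mu, Sigma)
        if cand_value > value:   # accept only improving steps
            A, value = candidate, cand_value
        else:                    # otherwise shrink the step size
            step *= 0.5
    return A, value

# Hypothetical problem instance (dimensions, spectrum range and mean are illustrative).
rng = np.random.default_rng(0)
n, r = 10, 2
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
Sigma = U @ np.diag(rng.uniform(0.5, 2.0, size=n)) @ U.T
mu = 0.4 * np.ones(n)
A0 = rng.standard_normal((r, n))

A_hat, kl_hat = stiefel_gradient_ascent(A0, mu, Sigma)
print(kl_hat, np.allclose(A_hat @ A_hat.T, np.eye(r)))
```

Because only improving steps are accepted, the objective never decreases, but the result is still only a local solution that depends on the starting point. This is why a structured initialization, such as the zero-mean design described above, can matter in practice.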

6.2. Results and Discussion

The design of when is not characterized analytically. Therefore, we resort to numerical simulations to show the gains of optimizing f-divergence measures when . In particular, we consider the linear discriminant analysis (LDA) problem where the goal is to design a mapping and perform classification in the lower dimensional space (of dimension r). Without loss of generality, we assume and consider Gaussian densities with the following pre- and post-linear dimensionality reduction structures:

where the covariance matrix is generated randomly, with eigenvalues sampled from a uniform distribution . For the model in (63), we consider two kinds of performance metrics that have information-theoretic performance interpretations: (i) the total probability of error related to , and (ii) the exponential decay of error probability related to . In what follows, we demonstrate that optimizing appropriate f-divergence measures between and leads to better performance when compared to the performance of the popular Fisher’s quadratic discriminant analysis (QDA) classifier [20]. In particular, Fisher’s approach sets and designs by solving

In contrast, we design such that the information-theoretic objective functions associated with the total probability of error (captured by ) and the exponential decay of error probability (captured by ) are minimized. The structure of the objective functions is discussed in Section 6.2.1 under the schemes Total Probability of Error and Type-II Error Subjected to Type-I Error Constraints. Both our method and Fisher’s method, after projecting the data into a lower dimension, deploy optimal detectors to discern the true model. It is noteworthy that, in both methods, the data in the lower dimension has a Gaussian model, and the conventional QDA [20] classifier is the optimal detector. Hence, we emphasize that our approach aims to have a design for that maximizes the distance between the probability measures after reducing the dimensions, i.e., the distance between and . Since this distance captures the quality of the decisions, our design of outperforms that of Fisher’s. For each comparison, we consider various values for and compare the appropriate performance metrics with that of Fisher’s QDA for each. In all cases, the data is synthetically generated, i.e., sampled from a Gaussian distribution where we consider 2000 data points associated with each measure and .
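As a point of reference for the Fisher baseline, the sketch below computes the one-dimensional projection that maximizes the textbook two-class Fisher criterion, i.e., the squared separation of the projected means divided by the sum of the projected variances. This is the standard form of the criterion and is assumed here; the exact objective in (64) may be parameterized differently. The function names and the numerical values are hypothetical.

```python
import numpy as np

def fisher_direction(mu0, cov0, mu1, cov1):
    """Unit-norm maximizer of (a^T (mu1 - mu0))^2 / (a^T (cov0 + cov1) a)."""
    a = np.linalg.solve(cov0 + cov1, mu1 - mu0)
    return a / np.linalg.norm(a)

def fisher_ratio(a, mu0, cov0, mu1, cov1):
    """Value of the (assumed) Fisher criterion for a projection direction a."""
    return (a @ (mu1 - mu0)) ** 2 / (a @ (cov0 + cov1) @ a)

# Hypothetical example: N(0, I) versus N(0.5 * 1, diag(0.5, 1, 2, 4)).
n = 4
mu0, cov0 = np.zeros(n), np.eye(n)
mu1, cov1 = 0.5 * np.ones(n), np.diag([0.5, 1.0, 2.0, 4.0])

a_star = fisher_direction(mu0, cov0, mu1, cov1)
best = fisher_ratio(a_star, mu0, cov0, mu1, cov1)
print(a_star, best)
```

Note that this criterion depends on the covariances only through their sum, whereas the divergence-based objectives also respond to differences between the two covariance matrices.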

6.2.1. Schemes for Linear Map

(1) Total Probability of Error: In this scheme, the linear map is designed such that is optimized via gradient ascent iterations until convergence. As discussed in Section 4.4.1, since the total probability of error is the key performance metric that arises while optimizing , it is expected that optimizing will result in a smaller total error in comparison to other schemes that optimize other objective functions (e.g., Fisher’s QDA). We note that, since there do not exist closed-form expressions for the total variation distance, we maximize bounds on instead via the Hellinger bound in (33) as a proxy to minimize the total probability of error. The corresponding gradient expression to optimize (to perform iterative updates as in (59)) is derived in closed-form and is given in Appendix D.

(2) Type-II Error Subjected to Type-I Error Constraints: In this scheme, the linear map is designed such that is optimized via gradient ascent iterations until convergence. In order to establish a relation, consider the following binary hypothesis test:

When minimizing the probability of type-II error subjected to type-I error constraints, the optimal test guarantees that the probability of type-II error decays exponentially as

where we have defined as the decision rule for the hypothesis test, and s denotes the sample size. As a result, appears as the error exponent for the hypothesis test in (65). Hence, it is expected that optimizing will result in a smaller type-II error for the same type-I error when compared with a method that optimizes other objectives (e.g., Fisher’s QDA). The corresponding gradient expression to optimize is derived in closed form and is given in Appendix D.
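For readers who want the underlying statement, the classical Chernoff–Stein lemma can be written in generic notation as below. All symbols are illustrative assumptions and need not match the notation of (65) and (66); in particular, which of the two measures plays the role of the null hypothesis follows the convention stated in the comments.

```latex
% Chernoff-Stein lemma in generic notation (all symbols are illustrative).
% s i.i.d. samples; H_0: X ~ P_0 versus H_1: X ~ P_1;
% beta_s^*(alpha): smallest type-II error among tests whose type-I error is at most alpha, 0 < alpha < 1.
\lim_{s \to \infty} \; -\frac{1}{s} \log \beta_s^{*}(\alpha)
\;=\; D_{\mathrm{KL}}\!\left(P_0 \,\middle\|\, P_1\right)
```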

For the sake of comparison and reference, we also consider schemes in which is designed to optimize the objectives , the largest eigen modes (LEM), and the smallest eigen modes (SEM), which carry no specific operational significance in the context of the binary classification problem. In the case of the LEM and SEM schemes, the linear map is designed such that the rows of are the eigenvectors associated with the largest and smallest modes of the matrix , respectively. Furthermore, we define as the vector of all ones of appropriate dimension.
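The LEM and SEM baselines amount to an eigendecomposition followed by a selection of r eigenvectors. A minimal sketch, assuming the relevant matrix is a symmetric covariance matrix called `Sigma` here (the name and the example values are hypothetical):

```python
import numpy as np

def eigenmode_map(Sigma, r, largest=True):
    """Return an r x n matrix whose rows are eigenvectors of the symmetric matrix Sigma."""
    eigvals, eigvecs = np.linalg.eigh(Sigma)   # eigenvalues returned in ascending order
    idx = np.argsort(eigvals)
    # Largest modes first for LEM, smallest modes first for SEM.
    chosen = idx[-r:][::-1] if largest else idx[:r]
    return eigvecs[:, chosen].T

Sigma = np.diag([0.2, 3.0, 1.0, 0.5])          # hypothetical covariance matrix
A_lem = eigenmode_map(Sigma, r=2, largest=True)
A_sem = eigenmode_map(Sigma, r=2, largest=False)

print(np.diag(A_lem @ Sigma @ A_lem.T))        # variances retained by LEM
print(np.diag(A_sem @ Sigma @ A_sem.T))        # variances retained by SEM
```

Both maps have orthonormal rows by construction, so they satisfy the same semi-orthogonality constraint as the optimized designs and can be compared with them directly.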

6.2.2. Performance Comparison

After learning the linear map for each scheme described in Section 6.2.1, we perform classification in the lower dimensional space of dimension r to find the type-I, type-II, and total probability of error for each scheme. Tables 1–4 tabulate the results for various choices of the mean parameter . We have the following important observations: (i) optimizing results in a smaller total probability of error in comparison to the total error obtained by optimizing Fisher’s objective; it is important to note that the superior performance is observed despite maximizing bounds on (which is sub-optimal) and not the distance itself; and (ii) except for the case of , optimizing results in a smaller type-II error in comparison to that obtained by optimizing Fisher’s objective, indicating a gain in optimizing in comparison to Fisher’s objective in (64).

Table 1.

.

Table 2.

.

Table 3.

.

Table 4.

.

It is important to note that the convergence of the gradient ascent algorithm only guarantees a locally optimal solution. While we have restricted the reported results to a maximum separation of , we have performed additional simulations for larger separation between models (greater ). We have the following observations: (i) the solution for the linear map obtained through gradient ascent becomes highly sensitive to the initialization ; specifically, it was observed that optimizing Fisher’s objective outperforms optimizing for some initializations , and vice versa for other random initializations; and (ii) the gradient ascent solver becomes more prone to getting stuck at local maxima for larger separations between the models. We conjecture that the odd observation in the case of when optimizing (where optimizing Fisher’s objective outperforms optimizing ) supports this observation. Furthermore, we note that, since the problem is convex for , a deviation from this assumption moves the problem further from being convex, making the solver prone to getting stuck at locally optimal solutions for larger separation between the Gaussian models.

6.2.3. Subspace Representation

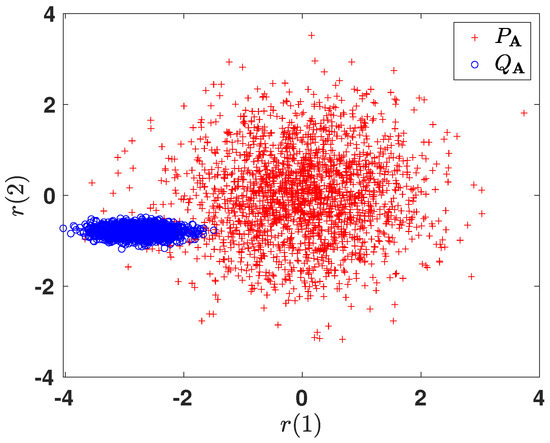

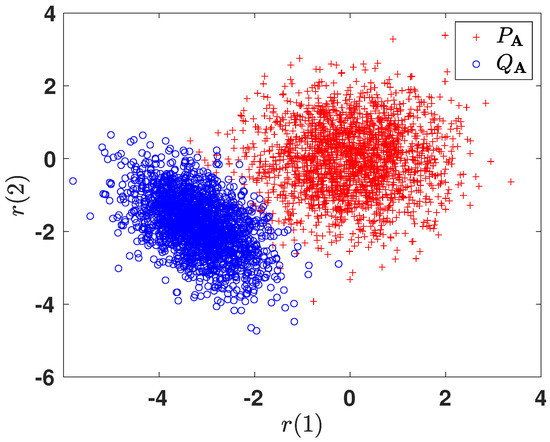

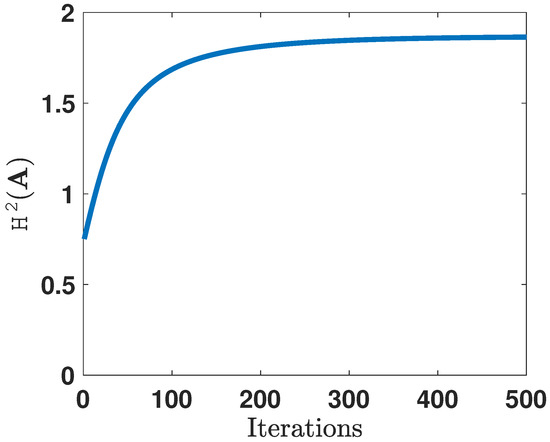

In order to gain more intuition towards the learned representations, we illustrate the 2-dimensional projections of the original 10-dimensional data obtained after optimizing the corresponding f-divergence measures. For brevity, we only show the plots for and . Figure 6 and Figure 7 plot the two-dimensional projections of the synthetic dataset that optimize and , respectively. As expected, it is observed that the total probability of error is smaller when optimizing . Figure 8 shows the variation in the objective function as a function of gradient ascent iterations. As the iterations grow, the objective function eventually converges to a locally optimal solution.

Figure 6.

Two-dimensional projected data obtained by optimizing .

Figure 7.

Two-dimensional projected data obtained by optimizing .

Figure 8.

Convergence of the gradient ascent algorithm as a result of optimizing .

7. Conclusions

In this paper, we have considered the problem of discriminant analysis such that separation between the classes is maximized under f-divergence measures. This approach is motivated by dimensionality reduction for inference problems, where we have investigated discriminant analysis under Kullback–Leibler, symmetrized Kullback–Leibler, Hellinger, , and total variation measures. We have characterized the optimal design for the linear transformation of the data onto a lower-dimensional subspace for each in the case of zero-mean Gaussian models and adopted numerical algorithms to find the design of the linear transformation in the case of general Gaussian models with non-zero means. We have shown that, in the case of zero-mean Gaussian models, the row space of the mapping matrix lies in the eigenspace of a matrix associated with the covariance matrix of the Gaussian models involved. While each f-divergence measure favors specific eigenvector components, we have shown that all the designs become identical in certain regimes, making the design of the linear mapping independent of the inference problem of interest.

Author Contributions

A.D., S.W. and A.T. contributed equally. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the U. S. National Science Foundation under grants CAREER Award ECCS-1554482 and ECCS-1933107, and RPI-IBM Artificial Intelligence Research Collaboration (AIRC).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| PCA | Principal Component Analysis |
| MDS | Multidimensional Scaling |
| SDR | Sufficient Dimension Reduction |
| DA | Discriminant Analysis |
| KL | Kullback Leibler |
| TV | Total Variation |
| ADD | Average Detection Delay |
| FAR | False Alarm Rate |
| CuSum | Cumulative Sum |
| BC | Bhattacharyya Coefficient |
| LEM | Largest Eigen Modes |
| SEM | Smallest Eigen Modes |
| LDA | Linear Discriminant Analysis |
| QDA | Quadratic Discriminant Analysis |

Appendix A. Proof of Theorem 1

Consider two pairs of probability measures and associated with the mapping in space and in space , respectively. Let denote any invertible transformation. Under the invertible map, we have

where denotes the determinant of the Jacobian matrix associated with g. Leveraging (A1), the f-divergence measure simplifies as follows.

Therefore, f-divergence measures are invariant under invertible transformations (both linear and non-linear) ensuring the existence of for every as a special case for linear transformations.
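The invariance can be checked numerically in a special case. The sketch below covers only the Kullback–Leibler divergence between two Gaussian measures under an invertible linear map, which is a narrower setting than the general statement above; the function names and the randomly drawn parameters are hypothetical.

```python
import numpy as np

def gaussian_kl(mu0, cov0, mu1, cov1):
    """KL( N(mu0, cov0) || N(mu1, cov1) ) in nats."""
    n = mu0.size
    diff = mu1 - mu0
    _, logdet0 = np.linalg.slogdet(cov0)
    _, logdet1 = np.linalg.slogdet(cov1)
    return 0.5 * (np.trace(np.linalg.solve(cov1, cov0))
                  + diff @ np.linalg.solve(cov1, diff)
                  - n + logdet1 - logdet0)

def random_spd(rng, n):
    """Random symmetric positive definite matrix."""
    B = rng.standard_normal((n, n))
    return B @ B.T + np.eye(n)

rng = np.random.default_rng(1)
n = 4
mu0, mu1 = rng.standard_normal(n), rng.standard_normal(n)
cov0, cov1 = random_spd(rng, n), random_spd(rng, n)

# A well-conditioned invertible linear map g(x) = G x.
G = n * np.eye(n) + 0.5 * rng.standard_normal((n, n))

kl_before = gaussian_kl(mu0, cov0, mu1, cov1)
kl_after = gaussian_kl(G @ mu0, G @ cov0 @ G.T, G @ mu1, G @ cov1 @ G.T)
print(kl_before, kl_after)   # the two values agree up to floating-point error
```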

Appendix B. Proof of Theorem 3

We observe that , , and the objective to be optimized through the matching bound on (Section 4.4.2, Matching Bounds up to a Constant) can be decomposed as the summation of strictly convex functions involving , , and , respectively. Since the summation of strictly convex functions is strictly convex, we conclude that each objective is strictly convex.

Next, the goal is to choose such that is maximized subject to spectral constraints given by . In order to choose appropriate ’s, we first note that the global minimizer of the functions is attained at . By noting that each is strictly convex, it can be readily verified that is monotonically increasing for and monotonically decreasing for . This will guide selecting , as explained next.

In the case of , i.e., when all the eigenvalues are larger than or equal to 1, the objective of maximizing each boils down to maximizing a monotonically increasing function (considering the domain). This is trivially done by choosing for , proving Corollary 1. On the other hand, when , i.e., when all the eigenvalues are smaller than or equal to 1, following the same line of argument, the objective boils down to maximizing each , where each is a monotonically decreasing function (considering the domain). This is trivially done by choosing for .

When , the selection process is not trivial. Rather, an iterative algorithm can be followed, where we start from the eigenvalues farthest away from 1 on both sides and, subsequently, choose the one in every iteration that achieves a higher objective. This procedure can be repeated recursively until r eigenvalues are chosen. This procedure is also discussed in Algorithm 1 in Section 4.6.
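The selection procedure described above can be sketched with two pointers moving inward from both ends of the sorted spectrum. This is one reading of the procedure, not a transcription of Algorithm 1. The per-eigenvalue function `kl_term`, which is strictly convex with its minimum at 1, is an assumed stand-in for the functions in this appendix, and the example spectrum is hypothetical.

```python
import numpy as np

def kl_term(lam):
    """Example per-eigenvalue objective: strictly convex with its minimum at lam = 1."""
    return lam - 1.0 - np.log(lam)

def select_eigenvalues(eigvals, r, h=kl_term):
    """Pick r eigenvalues by repeatedly comparing the two extremes of the sorted spectrum."""
    lam = np.sort(np.asarray(eigvals, dtype=float))[::-1]   # descending order
    left, right = 0, lam.size - 1
    chosen = []
    for _ in range(r):
        # Keep whichever remaining extreme contributes more to the objective.
        if h(lam[left]) >= h(lam[right]):
            chosen.append(left)
            left += 1
        else:
            chosen.append(right)
            right -= 1
    return lam[chosen]

spectrum = [3.0, 1.5, 1.1, 0.9, 0.4, 0.1]
picked = select_eigenvalues(spectrum, r=3)
print(picked)
```

For this spectrum the procedure keeps 0.1, then 3.0, then 0.4, so the selection mixes modes from both ends rather than taking only the largest ones. Since the function decreases up to 1 and increases afterwards, the r values picked this way are also the r largest contributions overall.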

Finally, constructing the optimal matrix , which maximizes for any data matrix , becomes equivalent to choosing eigenvectors as the rows of associated with the chosen permutation of eigenvalues for each of the aforementioned cases.

Appendix C. Proof for Theorem 4

We first find a closed-form expression for and . From the definition, we have

where we defined . We note that is a real symmetric matrix since is a real symmetric matrix. We denote the eigen decomposition of as , where the matrix is a diagonal matrix with the eigenvalues as its elements. Based on this decomposition, we have

where we have defined . We note that, in order for to be finite, it is required that the eigenvalues be non-negative. Hence, based on the definition of , all the eigenvalues should fall in the interval . Hence, we obtain:

Recall that the eigenvalues of are given by in the descending order. Therefore, (A13) simplifies to:

Hence, from (A14), maximizing is equivalent to choosing the eigenvalues such that they maximize . Similarly, the closed-form expression for can be derived as follows:

where we defined . We note that is a real symmetric matrix due to being a real symmetric matrix. Hence, following a similar line of argument as in the case of , and as a consequence of Theorem 2, we conclude that all the eigenvalues should fall in the interval to ensure a finite value for . Following this requirement, since the integrands are bounded, we obtain the following closed-form expression:

Recall that the eigenvalues of are given by ; then, (A16) simplifies to

Hence, from (A17), maximizing is equivalent to choosing the eigenvalues such that they maximize .

We observe that , , and can be decomposed as the product of r non-negative identical convex functions involving , , and , respectively. Hence, the goal is to choose such that is maximized subject to spectral constraints given by . In order to choose appropriate ’s, we first note that the global minimizer for each is attained at . Leveraging this observation, along with the fact that each is convex, it is easy to infer that each is monotonically increasing for and monotonically decreasing for . From the exact same argument as in Appendix B, we obtain Corollaries 3 and 4.

Therefore, similar to Appendix B, constructing the linear map that maximizes for any data matrix boils down to choosing eigenvectors as rows of associated with the chosen permutation of eigenvalues for each of the aforementioned cases.

Appendix D. Gradient Expressions for f-Divergence Measures

For clarity in analysis, we define the following functions:

Based on these definitions, we have the following representations for the divergence measures and their associated gradients:

Appendix E. Proof for Lagrange Multipliers

Substituting in (A27) and simplifying the expression, we obtain:

References

1. Kunisky, D.; Wein, A.S.; Bandeira, A.S. Notes on computational hardness of hypothesis testing: Predictions using the low-degree likelihood ratio. arXiv 2019, arXiv:1907.11636.

2. Gamarnik, D.; Jagannath, A.; Wein, A.S. Low-degree hardness of random optimization problems. arXiv 2020, arXiv:2004.12063.

3. van der Maaten, L.; Postma, E.; van den Herik, J. Dimensionality reduction: A comparative review. J. Mach. Learn. Res. 2009, 10, 66–71.

4. Lee, J.A.; Verleysen, M. Nonlinear Dimensionality Reduction; Springer Science: Berlin/Heidelberg, Germany, 2007.

5. DeMers, D.; Cottrell, G.W. Non-linear dimensionality reduction. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 3–6 November 1993; pp. 580–587.

6. Cunningham, J.P.; Ghahramani, Z. Linear dimensionality reduction: Survey, insights, and generalizations. J. Mach. Learn. Res. 2015, 16, 2859–2900.

7. Pearson, K. On lines and planes of closest fit to systems of points in space. Philos. Mag. 1901, 2, 559–572.

8. Eckart, C.; Young, G. The approximation of one matrix by another of lower rank. Psychometrika 1936, 1, 211–218.

9. Jolliffe, I. Principal Component Analysis; Springer: Berlin/Heidelberg, Germany, 2002.

10. Torgerson, W.S. Multidimensional scaling: I. Theory and method. Psychometrika 1952, 17, 401–419.

11. Cox, T.F.; Cox, M.A. Multidimensional scaling. In Handbook of Data Visualization; Springer: Berlin/Heidelberg, Germany, 2008.

12. Borg, I.; Groenen, P.J. Modern Multidimensional Scaling: Theory and Applications; Springer: Berlin/Heidelberg, Germany, 2005.

13. Izenman, A.J. Linear discriminant analysis. In Modern Multivariate Statistical Techniques; Springer: New York, NY, USA, 2013; pp. 237–280.

14. Globerson, A.; Tishby, N. Sufficient dimensionality reduction. J. Mach. Learn. Res. 2003, 3, 1307–1331.

15. Fisher, R.A. The use of multiple measurements in taxonomic problems. Ann. Eugen. 1936, 7, 179–188.

16. Rao, C.R. The utilization of multiple measurements in problems of biological classification. J. R. Stat. Soc. Ser. B 1948, 10, 159–203.

17. Fukunaga, K. Introduction to Statistical Pattern Recognition; Elsevier: Amsterdam, The Netherlands, 2013.

18. Suresh, B.; Ganapathiraju, A. Linear discriminant analysis - A brief tutorial. Inst. Signal Inf. Process. 1998, 18, 1–8.

19. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006.

20. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009.

21. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

22. Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

23. Gelfand, I.M.; Kolmogorov, A.N.; Yaglom, A.M. On the general definition of the amount of information. Dokl. Akad. Nauk SSSR 1956, 11, 745–748.

24. Csiszár, I. Eine Informationstheoretische Ungleichung und ihre Anwendung auf den Beweis der Ergodizität von Markoffschen Ketten. Magy. Tudományos Akad. Mat. Kut. Intézetének Közleményei 1948, 8, 379–423.

25. Ali, S.M.; Silvey, S.D. General Class of Coefficients of Divergence of One Distribution from Another. J. R. Stat. Soc. 1966, 28, 131–142.

26. Morimoto, T. Markov Processes and the H-Theorem. J. Phys. Soc. Jpn. 1963, 18, 328–331.

27. Arimoto, S. Information-theoretical considerations on estimation problems. Inf. Control 1971, 19, 181–194.

28. Barron, A.R.; Gyorfi, L.; Meulen, E.C. Distribution estimation consistent in total variation and in two types of information divergence. IEEE Trans. Inf. Theory 1992, 38, 1437–1454.

29. Berlinet, A.; Vajda, I.; Meulen, E.C. About the asymptotic accuracy of Barron density estimates. IEEE Trans. Inf. Theory 1998, 44, 999–1009.

30. Gyorfi, L.; Morvai, G.; Vajda, I. Information-theoretic methods in testing the goodness of fit. In Proceedings of the IEEE International Symposium on Information Theory, Sorrento, Italy, 25–30 June 2000.

31. Liese, F.; Vajda, I. On Divergences and Informations in Statistics and Information Theory. IEEE Trans. Inf. Theory 2006, 52, 4394–4412.

32. Kailath, T. The Divergence and Bhattacharyya Distance Measures in Signal Selection. IEEE Trans. Commun. Technol. 1967, 15, 52–60.

33. Poor, H. Robust decision design using a distance criterion. IEEE Trans. Inf. Theory 1980, 26, 575–587.

34. Clarke, B.S.; Barron, A.R. Information-theoretic asymptotics of Bayes methods. IEEE Trans. Inf. Theory 1990, 36, 453–471.

35. Harremoes, P.; Vajda, I. On Pairs of f-divergences and their joint range. IEEE Trans. Inf. Theory 2011, 57, 3230–3235.

36. Sason, I.; Verdú, S. f-Divergence Inequalities. IEEE Trans. Inf. Theory 2016, 62, 5973–6006.

37. Sason, I. On f-divergence: Integral representations, local behavior, and inequalities. Entropy 2018, 20, 383.

38. Rao, C.R.; Statistiker, M. Linear Statistical Inference and Its Applications; Wiley: New York, NY, USA, 1973.

39. Poor, H.V.; Hadjiliadis, O. Quickest Detection; Cambridge University Press: Cambridge, UK, 2008.

40. Cavanaugh, J.E. Criteria for linear model selection based on Kullback’s symmetric divergence. Aust. N. Z. J. Stat. 2004, 46, 257–274.

41. Devroye, L.; Mehrabian, A.; Reddad, T. The total variation distance between high-dimensional Gaussians. arXiv 2020, arXiv:1810.08693.

42. Pollak, M. Optimal detection of a change in distribution. Ann. Stat. 1985, 13, 206–227.

43. Carter, K.M.; Raich, R.; Finn, W.G.; Hero, A.O. Information preserving component analysis: Data projections for flow cytometry analysis. IEEE J. Sel. Top. Signal Process. 2009, 3, 148–158.

44. Wen, Z.; Yin, W. A feasible method for optimization with orthogonality constraints. Math. Program. 2013, 142, 397–434.

45. Edelman, A.; Arias, T.; Smith, S. The geometry of algorithms with orthogonality constraints. SIAM J. Matrix Anal. Appl. 1998, 20, 303–353.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).