Abstract

In this work, we first consider the discrete version of the Fisher information measure and then propose a discrete Jensen–Fisher information measure, for which we develop some associated results. Next, we consider Fisher information and Bayes–Fisher information measures for the mixing parameter vector of a finite mixture probability mass function and establish some results. We provide some connections between these measures and some known informational measures such as chi-square divergence, Shannon entropy, and the Kullback–Leibler, Jeffreys and Jensen–Shannon divergences.

1. Introduction

Over the last seven decades, several different criteria have been introduced in the literature for measuring uncertainty in a probabilistic model. Shannon entropy and Fisher information are the most important information measures, and both have been used extensively. Information theory started with Shannon entropy, introduced in the pioneering work of Shannon [1], based on a study of systems described by probability density (or mass) functions. About two decades earlier, Fisher [2] had proposed another information measure, describing the interior properties of a probabilistic model, that plays an important role in likelihood-based inferential methods. Fisher information and Shannon entropy are fundamental criteria in statistical inference, physics, thermodynamics and information theory. Complex systems can be described by means of their behavior (Shannon entropy) and their architecture (Fisher information). For more discussions, see Zegers [3] and Balakrishnan and Stepanov [4].

Let X be a discrete random variable with probability mass function (PMF) $P=(p_1,\ldots,p_n)$. Then, the Shannon entropy of the random variable X is defined as

$$H(X)=H(P)=-\sum_{i=1}^{n}p_i\log p_i,$$

where “log” denotes the natural logarithm. For more details, see Shannon [1]. Following the work of Shannon [1], considerable attention has been paid to extensions of Shannon entropy. The Jensen–Shannon (JS) divergence is one such important extension that has been widely used; see Lin [5]. In terms of the Shannon entropy H, the Jensen–Shannon divergence between two probability mass functions $P=(p_1,\ldots,p_n)$ and $Q=(q_1,\ldots,q_n)$ is defined as

$$\mathrm{JS}(P,Q)=H\!\left(\frac{P+Q}{2}\right)-\frac{H(P)+H(Q)}{2}.$$

The JS divergence is a smoothed and symmetric version of the most important divergence measure of information theory, namely, Kullback–Leibler divergence. Recently, Jensen–Fisher (JF) and Jensen–Gini (JG) divergence measures have been introduced by Sánchez-Moreno et al. [6] and Mehrali et al. [7], respectively.
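Both definitions can be evaluated directly. The following Python sketch (the function names are illustrative choices, not from the paper) computes $H(P)$ in nats and the equal-weight JS divergence $H(\frac{P+Q}{2})-\frac{1}{2}[H(P)+H(Q)]$; the equal weighting is an assumption here, since Lin [5] also considers general weights.

```python
import math


def shannon_entropy(p):
    """H(P) = -sum_i p_i log p_i in nats; zero-probability terms contribute 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def js_divergence(p, q):
    """Equal-weight Jensen-Shannon divergence H((P+Q)/2) - (H(P) + H(Q))/2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return shannon_entropy(m) - (shannon_entropy(p) + shannon_entropy(q)) / 2


P = [0.5, 0.3, 0.2]
Q = [0.2, 0.3, 0.5]
print(shannon_entropy(P), js_divergence(P, Q), js_divergence(Q, P))
```

The last two printed values coincide, reflecting the symmetry of the JS divergence; with natural logarithms it is also bounded above by $\log 2$.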

In the present paper, motivated by the idea of JS divergence, we consider discrete versions of Fisher information (DFI) and Fisher information distance (DFID), and then develop a new information measure associated with the DFI measure. In addition, we provide some results for the Fisher information of a finite mixture probability mass function from a Bayesian perspective. The discrete Fisher information of a random variable X with PMF $P=(p_1,\ldots,p_n)$ is defined as

$$F(X)=F(P)=\sum_{i=1}^{n}\frac{(p_{i+1}-p_i)^2}{p_i}, \tag{1}$$

with $p_{n+1}=0$.
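A definition of this kind translates into a few lines of code. The sketch below is a minimal Python version assuming the form $F(P)=\sum_{i=1}^{n}(p_{i+1}-p_i)^2/p_i$ with the boundary convention $p_{n+1}=0$ and all $p_i>0$; the function name is hypothetical.

```python
def discrete_fisher_information(p):
    """F(P) = sum_{i=1}^{n} (p_{i+1} - p_i)^2 / p_i with the convention p_{n+1} = 0.

    Assumes every p_i > 0 so that each term is well defined.
    """
    if any(pi <= 0 for pi in p):
        raise ValueError("all probabilities must be strictly positive")
    padded = list(p) + [0.0]  # append p_{n+1} = 0
    return sum((padded[i + 1] - padded[i]) ** 2 / padded[i] for i in range(len(p)))


# A uniform PMF on four points: only the boundary term i = n is non-zero.
print(discrete_fisher_information([0.25, 0.25, 0.25, 0.25]))  # 0.25
```

Because of the convention $p_{n+1}=0$, the last term always equals $p_n$, so the measure depends on where the support ends as well as on the successive differences.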

The Fisher information in (1) has been used in the processing of complex and stationary signals. For example, the discrete version of Fisher information has been used to detect epileptic seizures in EEG signals recorded in humans and turtles, to detect dynamical changes in nonlinear models such as the logistic map and the Lorenz model, and to analyze geoelectrical signals; see Martin et al. [8], Ramírez-Pacheco et al. [9] and Ramírez-Pacheco et al. [10] for pertinent details.

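The discrete Fisher information distance (DFID) between two probability mass functions $P=(p_1,\ldots,p_n)$ and $Q=(q_1,\ldots,q_n)$ is defined as

$$D_F(P,Q)=\sum_{i=1}^{n}p_i\left(\frac{p_{i+1}-p_i}{p_i}-\frac{q_{i+1}-q_i}{q_i}\right)^2, \tag{2}$$

where, as above, $p_{n+1}=q_{n+1}=0$. For some of its properties, one may refer to Ramírez-Pacheco et al. [10] and Johnson [11].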
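The distance can be sketched in the same way. The code assumes $D_F(P,Q)=\sum_i p_i\big(\frac{p_{i+1}-p_i}{p_i}-\frac{q_{i+1}-q_i}{q_i}\big)^2$ with $p_{n+1}=q_{n+1}=0$ and strictly positive probabilities; the two ratios play the role of the score functions $f'/f$ in the continuous Fisher information distance. The function name is hypothetical.

```python
def fisher_information_distance(p, q):
    """D_F(P, Q) = sum_i p_i * ((p_{i+1}-p_i)/p_i - (q_{i+1}-q_i)/q_i)^2, with p_{n+1} = q_{n+1} = 0."""
    if len(p) != len(q):
        raise ValueError("P and Q must have the same support size")
    pp = list(p) + [0.0]
    qq = list(q) + [0.0]
    total = 0.0
    for i in range(len(p)):
        rp = (pp[i + 1] - pp[i]) / pp[i]  # discrete analogue of p'/p
        rq = (qq[i + 1] - qq[i]) / qq[i]  # discrete analogue of q'/q
        total += pp[i] * (rp - rq) ** 2
    return total


P = [0.5, 0.5]
Q = [0.25, 0.75]
print(fisher_information_distance(P, Q), fisher_information_distance(Q, P))  # 2.0 1.0
```

The two directions give different values, so $D_F$ is not symmetric in its arguments.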

With regard to informational properties of finite mixture models, one may refer to Contreras-Reyes and Cortés [12] and Abid et al. [13]. These authors have provided upper and lower bounds for Shannon and Rényi entropies of non-Gaussian finite mixtures, skew-normal and skew-t distributions, respectively. Kolchinsky and Tracey [14] have studied upper and lower bounds for the entropy of Gaussian mixture distributions using the Bhattacharyya and Kullback–Leibler divergences.

The first purpose of this paper is to propose the Jensen–Fisher information for discrete random variables $X_1,\ldots,X_n$ with probability mass functions $P_1,\ldots,P_n$, respectively. For this purpose, we first define a discrete version of the Jensen–Fisher information for two PMFs $P$ and $Q$, and then provide some results concerning this new information measure. Then, this idea is extended to the general case of $n$ PMFs $P_1,\ldots,P_n$.

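The second purpose of this work is to study Fisher and Bayes–Fisher information measures for the mixing parameter of a finite mixture probability mass function. Let $P_1,\ldots,P_n$ be $n$ probability mass functions, where $P_j=(p_{j1},\ldots,p_{jk})$, $j=1,\ldots,n$. Then, a finite mixture probability mass function with mixing parameter vector $\theta=(\theta_1,\ldots,\theta_n)$, for $\theta_j\ge 0$, is given by $P_{\theta}=(p_{\theta 1},\ldots,p_{\theta k})$, where

$$p_{\theta i}=\sum_{j=1}^{n}\theta_j\,p_{ji},\qquad i=1,\ldots,k, \tag{3}$$

and $\sum_{j=1}^{n}\theta_j=1$.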
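A mixture of this kind is a convex combination of the component PMFs. A minimal sketch follows, assuming all components share the same finite support $\{1,\ldots,k\}$; `mixture_pmf` is a hypothetical helper name.

```python
def mixture_pmf(components, theta, tol=1e-9):
    """Finite mixture P_theta with p_theta(i) = sum_j theta_j * p_j(i).

    components: list of n PMFs on a common support of size k.
    theta: mixing weights, non-negative and summing to 1.
    """
    if len(components) != len(theta):
        raise ValueError("need one mixing weight per component")
    if any(t < 0 for t in theta) or abs(sum(theta) - 1.0) > tol:
        raise ValueError("mixing weights must be non-negative and sum to 1")
    k = len(components[0])
    if any(len(c) != k for c in components):
        raise ValueError("all components must share the same support size")
    return [sum(t * c[i] for t, c in zip(theta, components)) for i in range(k)]


P1 = [0.5, 0.3, 0.2]
P2 = [0.1, 0.3, 0.6]
print(mixture_pmf([P1, P2], [0.4, 0.6]))
```

Taking point masses as the components returns the mixing weights themselves, which is a quick sanity check on the implementation.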

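Let X and Y be two discrete random variables with PMFs $P=(p_1,\ldots,p_n)$ and $Q=(q_1,\ldots,q_n)$, respectively. Then, the Kullback–Leibler (KL) distance between X and Y (or P and Q) is defined as

$$\mathrm{KL}(P\,\|\,Q)=\sum_{i=1}^{n}p_i\log\frac{p_i}{q_i}.$$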

The Kullback–Leibler discrimination between Y and X can be defined similarly. For more details, see Kullback and Leibler [15]. The chi-square divergence between the PMFs P and Q is defined by

$$\chi^2(P,Q)=\sum_{i=1}^{n}\frac{(p_i-q_i)^2}{q_i}.$$

For pertinent details, see Broniatowski [16] and Cover and Thomas [17].
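Both divergences are simple sums over the support. The sketch below assumes the Pearson orientation of the chi-square divergence (the second argument in the denominator) and strictly positive $q_i$; the function names are illustrative.

```python
import math


def kl_divergence(p, q):
    """KL(P||Q) = sum_i p_i log(p_i / q_i); terms with p_i = 0 contribute 0.

    Assumes q_i > 0 wherever p_i > 0 (otherwise the divergence is infinite).
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def chi_square_divergence(p, q):
    """Pearson chi-square divergence sum_i (p_i - q_i)^2 / q_i; assumes all q_i > 0."""
    return sum((pi - qi) ** 2 / qi for pi, qi in zip(p, q))


P = [0.5, 0.5]
Q = [0.25, 0.75]
print(kl_divergence(P, Q), kl_divergence(Q, P))  # the two directions differ
print(chi_square_divergence(P, Q))
```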

The rest of this paper is organized as follows. In Section 2, we first consider the discrete version of Fisher information and then propose the discrete Jensen–Fisher information (DJFI) measure. We show that the DJFI measure can be represented as a mixture of discrete Fisher information distance measures. In Section 3, we consider a finite mixture probability mass function and establish some results for the Fisher information measure of the mixing parameter vector. We show that the Fisher information of the mixing parameter vector is connected to the chi-square divergence. Next, in Section 4, we discuss the Bayes–Fisher information for the mixing parameter vector of a finite mixture probability mass function under some prior distributions for the mixing parameter. We then show that this measure is connected to Shannon entropy and to the Jensen–Shannon, Kullback–Leibler and Jeffreys divergence measures. Finally, we present some concluding remarks in Section 5.

2. Discrete Version of Jensen–Fisher Information

In this section, we first give a result for the DFI measure based on the log-convexity and log-concavity of the probability mass function. Then, we define the discrete Jensen–Fisher information measure and establish some of its properties.

Theorem 1.

Let $P=(p_1,\ldots,p_n)$ be a probability mass function.

- (i) If $P$ is log-concave, then

- (ii) If $P$ is log-convex, then

Proof.

$P$ is log-convex (log-concave) if $p_i^2\le(\ge)\,p_{i-1}p_{i+1}$ for all $i$. So, from the definition of DFI in (1), we have

□
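The hypotheses of Theorem 1 rest on the standard definitions: a sequence $(p_i)$ is log-concave if $p_i^2\ge p_{i-1}p_{i+1}$ and log-convex if $p_i^2\le p_{i-1}p_{i+1}$. A small Python helper (hypothetical names) that checks these inequalities might look as follows; it only tests the interior indices $i=2,\ldots,n-1$ and does not encode any boundary convention such as $p_{n+1}=0$.

```python
from math import comb


def is_log_concave(p, tol=1e-15):
    """True if p_i^2 >= p_{i-1} * p_{i+1} at every interior index (up to tol)."""
    return all(p[i] ** 2 >= p[i - 1] * p[i + 1] - tol for i in range(1, len(p) - 1))


def is_log_convex(p, tol=1e-15):
    """True if p_i^2 <= p_{i-1} * p_{i+1} at every interior index (up to tol)."""
    return all(p[i] ** 2 <= p[i - 1] * p[i + 1] + tol for i in range(1, len(p) - 1))


# Binomial(4, 1/2) PMF: a standard example of a log-concave sequence.
binomial = [comb(4, k) / 16 for k in range(5)]
# Geometric-type weights with the remaining mass lumped in the last cell.
heavy_tail = [0.5, 0.25, 0.125, 0.125]
print(is_log_concave(binomial), is_log_convex(binomial))      # True False
print(is_log_concave(heavy_tail), is_log_convex(heavy_tail))  # False True
```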

2.1. Discrete Jensen–Fisher Information Based on Two Probability Mass Functions P and Q

We first define a symmetric version of DFID measure in (2), and then propose the discrete Jensen–Fisher information measure involving two probability mass functions.

Definition 1.

Let $P=(p_1,\ldots,p_n)$ and $Q=(q_1,\ldots,q_n)$ be two probability mass functions. Then, a symmetric version of the discrete Fisher information distance in (2) is defined as

Definition 2.

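Let $P=(p_1,\ldots,p_n)$ and $Q=(q_1,\ldots,q_n)$ be two probability mass functions. Then, the discrete Jensen–Fisher information is defined as

$$\mathrm{JF}(P,Q)=\frac{F(P)+F(Q)}{2}-F\!\left(\frac{P+Q}{2}\right), \tag{4}$$

where $F(\cdot)$ is the DFI measure in (1).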

In the following theorem, we show that the discrete Jensen–Fisher information measure can be expressed as a mixture of discrete Fisher information distances.

Theorem 2.

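Let $P=(p_1,\ldots,p_n)$ and $Q=(q_1,\ldots,q_n)$ be two probability mass functions. Then,

$$\mathrm{JF}(P,Q)=\frac{1}{2}\left[D_F\!\left(P,\frac{P+Q}{2}\right)+D_F\!\left(Q,\frac{P+Q}{2}\right)\right],$$

where $D_F$ is the DFID measure in (2).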

Proof.

From the definition of DFID in (2), we get

In a similar way, we get

Upon adding the above two expressions, we obtain

as required. □
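The identity of Theorem 2 can be checked numerically. The sketch below assumes the forms $F(P)=\sum_i(p_{i+1}-p_i)^2/p_i$, $D_F(P,Q)=\sum_i p_i\big(\frac{p_{i+1}-p_i}{p_i}-\frac{q_{i+1}-q_i}{q_i}\big)^2$ with $p_{n+1}=q_{n+1}=0$, and $\mathrm{JF}(P,Q)=\frac{F(P)+F(Q)}{2}-F(\frac{P+Q}{2})$. Under these assumptions it compares both sides of the identity on randomly generated, strictly positive PMFs; all helper names are hypothetical.

```python
import random


def dfi(p):
    """Discrete Fisher information with p_{n+1} = 0 (assumed form)."""
    pp = list(p) + [0.0]
    return sum((pp[i + 1] - pp[i]) ** 2 / pp[i] for i in range(len(p)))


def dfid(p, q):
    """Discrete Fisher information distance with p_{n+1} = q_{n+1} = 0 (assumed form)."""
    pp, qq = list(p) + [0.0], list(q) + [0.0]
    return sum(
        pp[i] * ((pp[i + 1] - pp[i]) / pp[i] - (qq[i + 1] - qq[i]) / qq[i]) ** 2
        for i in range(len(p))
    )


def djfi(p, q):
    """Jensen gap (F(P) + F(Q)) / 2 - F((P + Q) / 2) (assumed form)."""
    m = [(a + b) / 2 for a, b in zip(p, q)]
    return (dfi(p) + dfi(q)) / 2 - dfi(m)


def random_pmf(n, rng):
    """Random PMF with strictly positive entries."""
    w = [rng.random() + 1e-3 for _ in range(n)]
    s = sum(w)
    return [x / s for x in w]


rng = random.Random(0)
for _ in range(5):
    P, Q = random_pmf(6, rng), random_pmf(6, rng)
    M = [(a + b) / 2 for a, b in zip(P, Q)]
    lhs = djfi(P, Q)
    rhs = (dfid(P, M) + dfid(Q, M)) / 2
    print(f"{lhs:.12f}  {rhs:.12f}")
    assert abs(lhs - rhs) <= 1e-9 * max(1.0, abs(lhs))
```

Because the right-hand side is an average of non-negative distances, the check also illustrates that the Jensen gap is non-negative.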

Example 1.

Let

and

The corresponding PMFs of variables X and Y are given by and , respectively. From Theorem 2, we then have

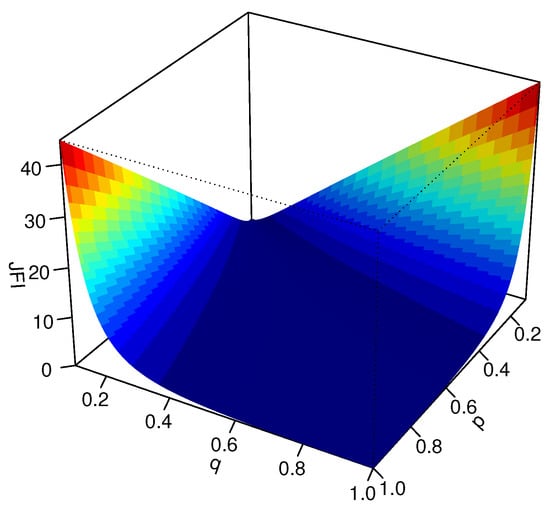

A 3D plot of this measure is presented in Figure 1.

Figure 1. 3D plot of the DJFI divergence between the two PMFs of Example 1.

2.2. Discrete Jensen–Fisher Information Based on n Probability Mass Functions

Let $P_1,\ldots,P_n$ be $n$ probability mass functions, where $P_j=(p_{j1},\ldots,p_{jk})$, $j=1,\ldots,n$. In the following definition, we extend the discrete Jensen–Fisher information measure in (4) to the case of $n$ probability mass functions.

Definition 3.

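Let $P_1,\ldots,P_n$ be $n$ probability mass functions given by $P_j=(p_{j1},\ldots,p_{jk})$, $j=1,\ldots,n$, with $p_{j,k+1}=0$, and let $\alpha_1,\ldots,\alpha_n$ be non-negative real numbers such that $\sum_{j=1}^{n}\alpha_j=1$. Then, the discrete Jensen–Fisher information (DJFI) based on the $n$ probability mass functions is defined as

$$\mathrm{JF}_{\alpha}(P_1,\ldots,P_n)=\sum_{j=1}^{n}\alpha_j F(P_j)-F(P_{\alpha}), \tag{5}$$

where $F(\cdot)$ is the DFI measure in (1) and $P_{\alpha}=\sum_{j=1}^{n}\alpha_j P_j$.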

Theorem 3.

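Let $P_1,\ldots,P_n$ be $n$ probability mass functions given by $P_j=(p_{j1},\ldots,p_{jk})$, $j=1,\ldots,n$, and let $\alpha_j\ge 0$ with $\sum_{j=1}^{n}\alpha_j=1$. Then, the DJFI measure can be expressed as a mixture of DFID measures in (2) as follows:

$$\mathrm{JF}_{\alpha}(P_1,\ldots,P_n)=\sum_{j=1}^{n}\alpha_j\,D_F(P_j,P_{\alpha}),$$

where $P_{\alpha}=\sum_{j=1}^{n}\alpha_j P_j$ is the weighted PMF.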

Proof.

From the definition in (5), we get

as required. □
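The weighted version can be checked in the same way. This sketch assumes the DJFI of $n$ PMFs is the Jensen gap $\sum_j\alpha_jF(P_j)-F(P_\alpha)$ with $P_\alpha=\sum_j\alpha_jP_j$, and uses the same assumed forms of $F$ and $D_F$ (boundary convention $p_{j,k+1}=0$); the helper names are hypothetical.

```python
import random


def dfi(p):
    """Discrete Fisher information with p_{k+1} = 0 (assumed form)."""
    pp = list(p) + [0.0]
    return sum((pp[i + 1] - pp[i]) ** 2 / pp[i] for i in range(len(p)))


def dfid(p, q):
    """Discrete Fisher information distance with p_{k+1} = q_{k+1} = 0 (assumed form)."""
    pp, qq = list(p) + [0.0], list(q) + [0.0]
    return sum(
        pp[i] * ((pp[i + 1] - pp[i]) / pp[i] - (qq[i + 1] - qq[i]) / qq[i]) ** 2
        for i in range(len(p))
    )


def weighted_mixture(pmfs, alpha):
    """P_alpha = sum_j alpha_j P_j on a common support."""
    k = len(pmfs[0])
    return [sum(a * pj[i] for a, pj in zip(alpha, pmfs)) for i in range(k)]


def djfi_weighted(pmfs, alpha):
    """Jensen gap sum_j alpha_j F(P_j) - F(P_alpha) (assumed form)."""
    p_alpha = weighted_mixture(pmfs, alpha)
    return sum(a * dfi(pj) for a, pj in zip(alpha, pmfs)) - dfi(p_alpha)


def random_pmf(n, rng):
    """Random PMF with strictly positive entries."""
    w = [rng.random() + 1e-3 for _ in range(n)]
    s = sum(w)
    return [x / s for x in w]


rng = random.Random(1)
pmfs = [random_pmf(5, rng) for _ in range(4)]
alpha = random_pmf(4, rng)  # non-negative weights summing to 1
p_alpha = weighted_mixture(pmfs, alpha)
lhs = djfi_weighted(pmfs, alpha)
rhs = sum(a * dfid(pj, p_alpha) for a, pj in zip(alpha, pmfs))
print(lhs, rhs)
```

With a single component and weight 1, both sides are exactly zero, which matches the interpretation of DJFI as a measure of spread among the $P_j$.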

3. Fisher Information of a Finite Mixture Probability Mass Function

In this section, we discuss the Fisher information for the mixing parameter vector of a finite mixture probability mass function.

Theorem 4.

Proof.

From the definition of Fisher information in (1) and for , we have

where the third equality follows from the fact that, for

□
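As a hedged illustration of the chi-square connection, consider only the two-component case (an assumption; the section treats a general mixing vector), $m_\theta=\theta P+(1-\theta)Q$ with $0<\theta<1$ and strictly positive probabilities. The score is $(p_i-q_i)/m_{\theta i}$, so $I(\theta)=\sum_i (p_i-q_i)^2/m_{\theta i}$, and since $p_i-m_{\theta i}=(1-\theta)(p_i-q_i)$ this equals $\chi^2(P,M_\theta)/(1-\theta)^2$ with the Pearson chi-square. The sketch below checks this numerically; the function names are hypothetical.

```python
def mixing_fisher_information(p, q, theta):
    """Fisher information of theta in m_theta = theta*P + (1 - theta)*Q.

    The score is d/dtheta log m_theta(i) = (p_i - q_i) / m_theta(i), so
    I(theta) = sum_i m_theta(i) * score_i^2 = sum_i (p_i - q_i)^2 / m_theta(i).
    """
    m = [theta * a + (1 - theta) * b for a, b in zip(p, q)]
    return sum((a - b) ** 2 / mi for a, b, mi in zip(p, q, m))


def chi_square(p, q):
    """Pearson chi-square divergence sum_i (p_i - q_i)^2 / q_i (assumes q_i > 0)."""
    return sum((a - b) ** 2 / b for a, b in zip(p, q))


P = [0.5, 0.3, 0.2]
Q = [0.1, 0.3, 0.6]
theta = 0.4
M = [theta * a + (1 - theta) * b for a, b in zip(P, Q)]

# Since p_i - m_i = (1 - theta)(p_i - q_i) and q_i - m_i = theta(q_i - p_i),
# both chi-square expressions below should agree with I(theta).
print(mixing_fisher_information(P, Q, theta))
print(chi_square(P, M) / (1 - theta) ** 2)
print(chi_square(Q, M) / theta ** 2)
```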

4. Bayes–Fisher Information of a Finite Mixture Probability Mass Function

In this section, we discuss the Bayes–Fisher information for the mixing parameter vector of the finite mixture probability mass function in (3) under some prior distributions for the mixing parameter vector. We now introduce two pieces of notation that will be used in the sequel. Consider the parameter vector , and then define and

Theorem 5.

The Bayes–Fisher information for parameter , of the finite mixture PMF in (3), under the uniform prior on is given by

where with

and with

and J corresponds to Jeffreys’ divergence.
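The Bayes–Fisher information is the prior expectation of the Fisher information of the mixing parameter. As a numerical illustration of the Jeffreys connection, restricted (as an assumption) to two components $m_\theta=\theta P+(1-\theta)Q$ with a uniform prior on $[0,1]$: for each $i$ with $p_i\neq q_i$, $\int_0^1\frac{(p_i-q_i)^2}{\theta p_i+(1-\theta)q_i}\,d\theta=(p_i-q_i)\log\frac{p_i}{q_i}$, so the Bayes–Fisher information equals $J(P,Q)=\mathrm{KL}(P\|Q)+\mathrm{KL}(Q\|P)$. The sketch approximates the integral with a midpoint rule; the names and step count are illustrative choices.

```python
import math


def mixing_fisher_information(p, q, theta):
    """Fisher information of theta in m_theta = theta*P + (1 - theta)*Q."""
    return sum((a - b) ** 2 / (theta * a + (1 - theta) * b) for a, b in zip(p, q))


def bayes_fisher_uniform(p, q, steps=20000):
    """Midpoint-rule approximation of int_0^1 I(theta) dtheta (uniform prior on [0, 1])."""
    h = 1.0 / steps
    return h * sum(mixing_fisher_information(p, q, (k + 0.5) * h) for k in range(steps))


def jeffreys_divergence(p, q):
    """J(P, Q) = KL(P||Q) + KL(Q||P) = sum_i (p_i - q_i) log(p_i / q_i); assumes p_i, q_i > 0."""
    return sum((a - b) * math.log(a / b) for a, b in zip(p, q))


P = [0.5, 0.3, 0.2]
Q = [0.1, 0.3, 0.6]
print(bayes_fisher_uniform(P, Q), jeffreys_divergence(P, Q))
```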

Proof.

Theorem 6.

For the mixture model with PMF in (3), we have the following:

- (i) The Bayes–Fisher information for , under prior with PMF , is

- (ii) The Bayes–Fisher information for parameter , under prior with PMF , is

Proof.

By definition, and from (7), for , we have

as required for Part (i). Part (ii) can be proved in an analogous manner. □

Let us now consider the following general triangular prior for the parameter :

for some

Theorem 7.

Proof.

From the assumptions made, for , we have

as required. □
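Since the Bayes–Fisher information is an integral of the Fisher information against the prior, it can also be approximated numerically for any prior density. The sketch below assumes the two-component case $m_\theta=\theta P+(1-\theta)Q$ and a hypothetical triangular density on $[0,1]$ with mode $c$, namely $\pi(\theta)=2\theta/c$ for $\theta\le c$ and $2(1-\theta)/(1-c)$ otherwise; this parametrization is an assumption, not necessarily the one used above, and the helper names are illustrative.

```python
def mixing_fisher_information(p, q, theta):
    """I(theta) = sum_i (p_i - q_i)^2 / (theta p_i + (1 - theta) q_i)."""
    return sum((a - b) ** 2 / (theta * a + (1 - theta) * b) for a, b in zip(p, q))


def triangular_pdf(theta, c):
    """Hypothetical triangular prior density on [0, 1] with mode c in (0, 1)."""
    if theta <= c:
        return 2 * theta / c
    return 2 * (1 - theta) / (1 - c)


def bayes_fisher(p, q, prior_pdf, steps=20000):
    """Midpoint-rule approximation of int_0^1 prior(theta) * I(theta) dtheta."""
    h = 1.0 / steps
    total = 0.0
    for k in range(steps):
        theta = (k + 0.5) * h
        total += prior_pdf(theta) * mixing_fisher_information(p, q, theta)
    return h * total


P = [0.5, 0.3, 0.2]
Q = [0.1, 0.3, 0.6]
for c in (0.25, 0.5, 0.75):
    print(c, bayes_fisher(P, Q, lambda t: triangular_pdf(t, c)))
```

Because $I(\theta)$ is convex in $\theta$, any prior average lies between $0$ and $\max\{I(0),I(1)\}$, which gives a cheap sanity check on the numerical value.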

5. Concluding Remarks

In this paper, we have introduced the discrete version of Jensen–Fisher information measure, and have shown that this information measure can be expressed as a mixture of discrete Fisher information distance measures. Further, we have considered a finite mixture probability mass function and have derived Fisher information and Bayes–Fisher information for the mixing parameter vector. We have shown that the Fisher information for the mixing parameter is connected to chi-square divergence. We have also studied the Bayes–Fisher information for the mixing parameter of a finite mixture model under some prior distributions. These results have provided connections between the Bayes–Fisher information and some known informational measures such as Shannon entropy, Kullback–Leibler, Jeffreys and Jensen–Shannon divergence measures.

Author Contributions

All authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Fisher, R.A. Tests of significance in harmonic analysis. Proc. R. Soc. Lond. A Math. Phys. Sci. 1929, 125, 54–59.

- Zegers, P. Fisher information properties. Entropy 2015, 17, 4918–4939.

- Balakrishnan, N.; Stepanov, A. On the Fisher information in record data. Stat. Probab. Lett. 2006, 76, 537–545.

- Lin, J. Divergence measures based on the Shannon entropy. IEEE Trans. Inf. Theory 1991, 37, 145–151.

- Sánchez-Moreno, P.; Zarzo, A.; Dehesa, J.S. Jensen divergence based on Fisher’s information. J. Phys. A Math. Theor. 2012, 45, 125305.

- Mehrali, Y.; Asadi, M.; Kharazmi, O. A Jensen-Gini measure of divergence with application in parameter estimation. Metron 2018, 76, 115–131.

- Martin, M.T.; Pennini, F.; Plastino, A. Fisher’s information and the analysis of complex signals. Phys. Lett. A 1999, 256, 173–180.

- Ramírez-Pacheco, J.; Torres-Román, D.; Rizo-Dominguez, L.; Trejo-Sanchez, J.; Manzano-Pinzón, F. Wavelet Fisher’s information measure of 1/f^α signals. Entropy 2011, 13, 1648–1663.

- Ramírez-Pacheco, J.; Torres-Román, D.; Argaez-Xool, J.; Rizo-Dominguez, L.; Trejo-Sanchez, J.; Manzano-Pinzón, F. Wavelet q-Fisher information for scaling signal analysis. Entropy 2012, 14, 1478–1500.

- Johnson, O. Information Theory and the Central Limit Theorem; World Scientific Publishers: Singapore, 2004.

- Contreras-Reyes, J.E.; Cortés, D.D. Bounds on Rényi and Shannon entropies for finite mixtures of multivariate skew-normal distributions: Application to swordfish (Xiphias gladius Linnaeus). Entropy 2016, 18, 382.

- Abid, S.H.; Quaez, U.J.; Contreras-Reyes, J.E. An information-theoretic approach for multivariate skew-t distributions and applications. Mathematics 2021, 9, 146.

- Kolchinsky, A.; Tracey, B.D. Estimating mixture entropy with pairwise distances. Entropy 2017, 19, 361.

- Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

- Broniatowski, M. Minimum divergence estimators, Maximum likelihood and the generalized bootstrap. Entropy 2021, 23, 185.

- Cover, T.; Thomas, J. Elements of Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 2006.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).