Abstract

This paper presents objective priors for robust Bayesian estimation against outliers based on divergences. The minimum γ-divergence estimator is well known to work well for estimation under heavy contamination. Robust Bayesian methods using quasi-posterior distributions based on divergences have also been proposed in recent years. In the objective Bayesian framework, the selection of default prior distributions under such quasi-posterior distributions is an important problem. In this study, we provide some properties of reference and moment matching priors under the quasi-posterior distribution based on the γ-divergence. In particular, we show that the proposed priors are approximately robust under a condition on the contamination distribution, without assuming any condition on the contamination ratio. Some simulation studies are also presented.

1. Introduction

The problem of robust parameter estimation against outliers has a long history. For example, Huber and Ronchetti [1] provided an excellent review of the classical theory of robust estimation. It is well known that the maximum likelihood estimator (MLE) is not robust against outliers; it is obtained by minimizing the Kullback–Leibler (KL) divergence between the empirical distribution and the assumed model. To overcome this problem, we may use other (robust) divergences instead of the KL divergence. Robust parameter estimation based on divergences has been one of the central topics in modern robust statistics (e.g., [2]). One such method was proposed by [3], who referred to it as the minimum density power divergence estimator. Reference [4] also proposed the “type 0 divergence”, which is a modified version of the density power divergence, and Reference [5] showed that it has good robustness properties. The type 0 divergence is also known as the γ-divergence, and statistical methods based on the γ-divergence have been presented by many authors (e.g., [6,7,8]).

In Bayesian statistics, robustness against outliers is also an important issue, and divergence-based Bayesian methods have been proposed in recent years. Such methods are known as quasi-Bayes (or general Bayes) methods in some studies, and the corresponding posterior distributions are called quasi-posterior (or general posterior) distributions. To overcome the model misspecification problem (see [9]), quasi-posterior distributions are based on a general loss function rather than the usual log-likelihood function. In general, such loss functions need not depend on an assumed statistical model. In this study, however, we use loss functions that depend on the assumed model, because we are interested in robust estimation against outliers; that is, the assumed model is not misspecified, but the data-generating distribution is contaminated. In other words, we use divergences or scoring rules as the loss function of the quasi-posterior distribution (see also [10,11,12,13,14]). For example, Reference [10] used the Hellinger divergence, Reference [11] used the density power divergence, and References [12,14] used the γ-divergence. In particular, the quasi-posterior distribution based on the γ-divergence was referred to as the γ-posterior in [12], where it was shown to have good robustness properties that overcome the problems of the approach in [11].

Although the selection of priors is an important issue in Bayesian statistics, in many practical situations we have no prior information. In such cases, we may use so-called default or objective priors, and we should select an appropriate objective prior for the given context. In this paper, we consider the reference and moment matching priors in particular. The reference prior was first proposed by [15], and the moment matching prior was proposed by [16]. However, such objective priors generally depend on the unknown data-generating distribution when we cannot assume that the contamination ratio is approximately zero. For example, if we assume the ε-contamination model (see, e.g., [1]) as the data-generating distribution, many objective priors depend on the unknown contamination ratio and the unknown contamination distribution, because these priors involve expectations under the data-generating distribution. Although [17] derived several reference priors under quasi-posterior distributions based on some scoring rules, they discussed the robustness of such reference priors only when the contamination ratio is approximately zero. Furthermore, their simulation studies largely depended on this assumption about the contamination ratio; in other words, they implicitly assumed that the contamination ratio is approximately zero. In this study, we derive the moment matching priors under the quasi-posterior distribution in a way similar to [16], and we show that the reference and moment matching priors based on the γ-divergence are approximately free of such unknown quantities under a certain assumption on the contamination distribution, even if the contamination ratio is not small.

The rest of this paper is organized as follows. In Section 2, we review robust Bayesian estimation based on divergences, referring to some previous studies. In Section 3, we derive moment matching priors for the quasi-posterior distribution using the asymptotic expansion of the quasi-posterior distribution given by [17]. Furthermore, we show that the reference and moment matching priors based on the γ-posterior depend on neither the contamination ratio nor the contamination distribution. In Section 4, we compare the empirical bias and mean squared error of posterior means through some simulation studies, and we also discuss the selection of tuning parameters.

2. Robust Bayesian Estimation Using Divergences

In this section, we review the framework of robust estimation in the seminal paper by Fujisawa and Eguchi [5], and we introduce robust Bayesian estimation using divergences. Let $X_1, \dots, X_n$ be independent and identically distributed (iid) random variables according to a distribution $G$ with the probability density function $g$ on $\mathcal{X}$, and let $X^n = (X_1, \dots, X_n)$. We assume the parametric model $f_\theta$ ($\theta \in \Theta \subset \mathbb{R}^p$) and consider the estimation problem for $\theta$.

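Then, the γ-divergence between two probability densities g and f is defined by:

$$D_\gamma(g, f) = \frac{1}{\gamma(1+\gamma)} \log \int g(x)^{1+\gamma}\,dx - \frac{1}{\gamma} \log \int g(x) f(x)^{\gamma}\,dx + \frac{1}{1+\gamma} \log \int f(x)^{1+\gamma}\,dx,$$

where $\gamma > 0$ is a tuning parameter controlling robustness. We also define the γ-cross-entropy as:

$$d_\gamma(g, f) = -\frac{1}{\gamma} \log \int g(x) f(x)^{\gamma}\,dx + \frac{1}{1+\gamma} \log \int f(x)^{1+\gamma}\,dx$$
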
(see [4,5]).
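The empirical version of the γ-cross-entropy replaces $\int g f^\gamma\,dx$ by the sample mean of $f(X_i)^\gamma$. The sketch below does this for a normal model $f = N(\mu, \sigma^2)$, assuming the standard form $d_\gamma(g, f) = -\gamma^{-1}\log\int g f^{\gamma}\,dx + (1+\gamma)^{-1}\log\int f^{1+\gamma}\,dx$ from [4,5] and the closed form $\int f^{1+\gamma}\,dx = (2\pi\sigma^2)^{-\gamma/2}(1+\gamma)^{-1/2}$. It checks that the cross-entropy approaches the average negative log-likelihood as γ → 0 and that, with σ fixed at 1, its minimiser over μ ignores a cluster of outliers. The function names and the data are hypothetical.

```python
import numpy as np
from scipy.special import logsumexp
from scipy.stats import norm

def log_int_f_pow(sigma, power):
    """log of int N(x; mu, sigma^2)^power dx = ((1 - power)/2) log(2 pi sigma^2) - (1/2) log(power)."""
    return 0.5 * (1.0 - power) * np.log(2.0 * np.pi * sigma**2) - 0.5 * np.log(power)

def gamma_cross_entropy(x, mu, sigma, gamma):
    """Empirical gamma-cross-entropy d_gamma(g_bar, f) for f = N(mu, sigma^2).

    The integral int g f^gamma dx is replaced by the sample mean of f(X_i)^gamma.
    """
    logf = norm.logpdf(x, loc=mu, scale=sigma)
    log_mean_fg = logsumexp(gamma * logf) - np.log(len(x))   # log (1/n) sum_i f(X_i)^gamma
    return -log_mean_fg / gamma + log_int_f_pow(sigma, 1.0 + gamma) / (1.0 + gamma)

rng = np.random.default_rng(1)
x = rng.normal(0.0, 1.0, size=200)
nll = -np.mean(norm.logpdf(x, 0.0, 1.0))                      # average negative log-likelihood
for g in (0.5, 0.1, 1e-3, 1e-6):
    print(g, gamma_cross_entropy(x, 0.0, 1.0, g), nll)        # approaches nll as gamma -> 0

# Add a cluster of outliers near 10 and minimise over mu with sigma fixed at 1.
x_out = np.concatenate([x, rng.normal(10.0, 1.0, size=40)])
mus = np.linspace(-2.0, 12.0, 1401)
best = mus[np.argmin([gamma_cross_entropy(x_out, m, 1.0, 0.5) for m in mus])]
print("gamma-minimiser:", best, "sample mean:", x_out.mean())
```

With σ fixed, only the first term varies with μ, so the minimiser maximises the average of $f(X_i)^\gamma$; points far in the tail contribute almost nothing to that average, which is why the minimiser stays near the bulk of the data while the sample mean is pulled towards the outliers.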

2.1. Framework of Robustness

Fujisawa and Eguchi [5] introduced a new framework of robustness, which differs from the classical one. When some of the data values are regarded as outliers, we need a robust estimation procedure. Typically, an observation that takes a large value is regarded as an outlier. Under this convention, many robust parameter estimation procedures have been proposed to reduce the bias caused by an outlier. The influence function is a standard tool for measuring the sensitivity of an estimator to outliers. It is known that the bias of an estimator is approximately proportional to the influence function when the contamination ratio $\varepsilon$ is small. However, when $\varepsilon$ is not small, the bias is no longer approximately proportional to the influence function. Reference [5] showed that estimation based on the γ-divergence yields a sufficiently small bias even under heavy contamination. Suppose that observations are generated from a mixture distribution $g(x) = (1-\varepsilon) f(x) + \varepsilon \delta(x)$, where $f(x)$ is the underlying density, $\delta(x)$ is another density function, and $\varepsilon$ is the contamination ratio. In Section 3, we assume that the condition:

holds for a constant $\gamma > 0$ (see [5]). When $X_i$ is generated from $\delta(x)$, we call $X_i$ an outlier. Note that we do not assume that the contamination ratio $\varepsilon$ is sufficiently small. This condition means that the contamination distribution lies mostly in the tail of the underlying density $f(x)$. In other words, for an outlier $x_o$, it holds that $f(x_o) \approx 0$. Condition (1) is also the basis for proving the robustness of the minimum γ-divergence estimator against outliers in [5]. Furthermore, Reference [18] provided some theoretical results for the γ-divergence, and related works in the frequentist setting have also been developed (e.g., [6,7,8]).
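To get a feel for what condition (1) requires, consider the quantity $\{\int \delta(x) f(x)^{\gamma}\,dx\}^{1/\gamma}$, which we assume is the kind of overlap measure used in [5]; it is close to zero exactly when δ puts its mass where f is negligible. The sketch below evaluates it for the illustrative choice f = N(0, 1) and δ = N(m, 1), both by a fine Riemann sum and via the Gaussian closed form $\int \delta f^\gamma\,dx = (2\pi)^{-\gamma/2}(1+\gamma)^{-1/2}\exp\{-\gamma m^2/(2(1+\gamma))\}$. The densities, the value γ = 0.5, and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import norm

def overlap_closed_form(m, gamma):
    """{int delta(x) f(x)^gamma dx}^{1/gamma} for f = N(0, 1) and delta = N(m, 1)."""
    inner = ((2.0 * np.pi) ** (-gamma / 2.0) / np.sqrt(1.0 + gamma)
             * np.exp(-gamma * m**2 / (2.0 * (1.0 + gamma))))
    return inner ** (1.0 / gamma)

def overlap_numeric(m, gamma, lo=-40.0, hi=60.0, num=400_001):
    """Same quantity, with the integral computed by a Riemann sum on a fine uniform grid."""
    x = np.linspace(lo, hi, num)
    inner = np.sum(norm.pdf(x, loc=m) * norm.pdf(x) ** gamma) * (x[1] - x[0])
    return inner ** (1.0 / gamma)

for m in (0.0, 3.0, 6.0, 10.0):
    print(f"m = {m:4.1f}: closed form {overlap_closed_form(m, 0.5):.3e}, "
          f"numeric {overlap_numeric(m, 0.5):.3e}")
```

As m grows the quantity collapses towards zero; note that it does not involve ε at all, which is why the condition can hold even when the contamination ratio is large.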

In the rest of this section, we give a brief review of the general Bayesian updating and introduce some previous works that are closely related to this paper.

2.2. General Bayesian Updating

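We consider the same framework as [9,13]. We are interested in $\theta \in \Theta$ ($\Theta \subset \mathbb{R}^p$), and we define a loss function $\ell(\theta, x)$. We define the risk function by $R(\theta) = \int \ell(\theta, x)\, dG(x)$, and its empirical risk by $R_n(\theta) = n^{-1} \sum_{i=1}^n \ell(\theta, X_i)$. Further, let $\theta_g = \operatorname{argmin}_{\theta \in \Theta} R(\theta)$ be the target parameter. For a prior density $\pi(\theta)$, the quasi-posterior density is defined by: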

where $\eta > 0$ is a tuning parameter called the learning rate. We note that the quasi-posterior is also called the general posterior or Gibbs posterior. In this paper, we fix $\eta = 1$ for the same reason as [13]. For example, if we set $\ell(\theta, x) = |\theta - x|$, we can estimate the median of the distribution without assuming a statistical model. In this study, however, we consider model-dependent loss functions based on statistical divergences (or scoring rules) (see also [11,12,13,14]). A unified framework of inference using quasi-posterior distributions was discussed by [9].
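The absolute-loss example can be sketched as follows. With $\ell(\theta, x) = |\theta - x|$ and η = 1, the quasi-posterior $\pi(\theta \mid X^n) \propto \pi(\theta)\exp\{-\eta\, n R_n(\theta)\}$ (the general-Bayes form of [9], assumed here) is evaluated on a grid with a flat prior, so its mode is the minimiser of the empirical risk, that is, a sample median. The helper name, the exponential data, and the grid are hypothetical.

```python
import numpy as np

def gibbs_posterior_on_grid(x, theta_grid, loss, eta=1.0, log_prior=None):
    """Quasi-posterior pi(theta | x) ∝ pi(theta) exp{-eta * n * R_n(theta)}, normalised over a grid."""
    n = len(x)
    # empirical risk R_n(theta) = (1/n) sum_i loss(theta, x_i) at every grid point
    risk = np.array([np.mean(loss(t, x)) for t in theta_grid])
    log_post = -eta * n * risk
    if log_prior is not None:
        log_post = log_post + log_prior(theta_grid)
    log_post = log_post - log_post.max()      # avoid overflow/underflow before exponentiating
    w = np.exp(log_post)
    return w / w.sum()                        # probabilities attached to the grid points

rng = np.random.default_rng(0)
x = rng.standard_exponential(201)             # skewed data: population median log 2, mean 1
grid = np.linspace(0.0, 3.0, 3001)
post = gibbs_posterior_on_grid(x, grid, loss=lambda t, xs: np.abs(t - xs))
print("posterior mode:", grid[np.argmax(post)])
print("sample median: ", np.median(x))
print("posterior mean:", np.sum(grid * post))
```

No likelihood appears anywhere: the loss alone defines the target, which is the sense in which the median is estimated without a statistical model.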

2.3. Assumptions and Previous Works

Let $d(g, f)$ be a cross-entropy induced by a divergence, and let $\{f_\theta : \theta \in \Theta\}$ be a statistical model. In general, the quasi-posterior distribution based on the cross-entropy is defined by:

where $d(\bar{g}, f_\theta)$ is the empirically estimated cross-entropy and $\bar{g}$ is the empirical density function. In robust statistics based on divergences, we may use the cross-entropy induced by a robust divergence (e.g., [3,4,5]). In this paper, we mainly use the γ-cross-entropy proposed by [4,5]. Recently, Reference [12] proposed the γ-posterior based on a monotone transformation of the γ-cross-entropy:

for $\gamma > 0$. The γ-posterior is defined by taking this transformed γ-cross-entropy as the cross-entropy in (2). On the other hand, Reference [11] proposed the β-posterior based on the density power cross-entropy:

for $\beta > 0$. The β-posterior is defined by taking the density power cross-entropy as the cross-entropy in (2). Note that the γ-cross-entropy and the density power cross-entropy converge to the negative log-likelihood function as $\gamma \to 0$ and $\beta \to 0$, respectively. Hence, both can be regarded as generalizations of the negative log-likelihood function. It is known that the posterior mean based on the β-posterior works well for estimating a location parameter in the presence of outliers. However, it is known to be unstable when estimating a scale parameter (see [12]). In simulation studies, Nakagawa and Hashimoto [12] showed that the posterior mean under the γ-posterior has a small bias under heavy contamination for both location and scale parameters.
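To make the comparison concrete, the sketch below evaluates three exponents on a grid for the normal model $N(\mu, \sigma^2)$: the log-likelihood; $\beta^{-1}\sum_i f_\theta(X_i)^\beta - n(1+\beta)^{-1}\int f_\theta^{1+\beta}\,dx$ for the density power case, which we take to be the form used in [11]; and $\gamma^{-1}\sum_i f_\theta(X_i)^\gamma / (\int f_\theta^{1+\gamma}\,dx)^{\gamma/(1+\gamma)}$ for the γ-posterior. The last one is our assumed version of the monotone transformation described above, written up to an additive constant; see [12] for the exact definition. The prior is flat on a bounded grid (so everything stays proper), and the data, γ = β = 0.5, and the grid limits are hypothetical choices.

```python
import numpy as np
from scipy.stats import norm

def log_int_f_pow(sigma, power):
    """log of int N(x; mu, sigma^2)^power dx (closed form; it does not depend on mu)."""
    return 0.5 * (1.0 - power) * np.log(2.0 * np.pi * sigma**2) - 0.5 * np.log(power)

def log_targets(x, mu, sigma, gamma, beta):
    """Exponents of the ordinary, beta- and gamma-posteriors on a (mu, sigma) grid."""
    n = len(x)
    logf = norm.logpdf(x[:, None, None], loc=mu, scale=sigma)    # shape (n, M, S)
    bayes = logf.sum(axis=0)
    # density power exponent: sum_i f(X_i)^beta / beta - n * int f^{1+beta} / (1 + beta)
    beta_exp = (np.exp(beta * logf).sum(axis=0) / beta
                - n * np.exp(log_int_f_pow(sigma, 1.0 + beta)) / (1.0 + beta))
    # assumed gamma exponent: sum_i f(X_i)^gamma / (gamma * (int f^{1+gamma})^{gamma/(1+gamma)})
    log_c = gamma / (1.0 + gamma) * log_int_f_pow(sigma, 1.0 + gamma)
    gamma_exp = np.exp(gamma * logf - log_c).sum(axis=0) / gamma
    return {"bayes": bayes, "beta": beta_exp, "gamma": gamma_exp}

def grid_means(log_post, mu, sigma):
    """Posterior means of mu and sigma^2 under a flat prior restricted to the grid."""
    w = np.exp(log_post - log_post.max())
    w /= w.sum()
    return float((w * mu).sum()), float((w * sigma**2).sum())

rng = np.random.default_rng(2)
# hypothetical data: 90 clean points from N(0, 1) and 10 outliers from N(10, 1)
x = np.concatenate([rng.normal(0.0, 1.0, 90), rng.normal(10.0, 1.0, 10)])
mu = np.linspace(-2.0, 4.0, 241)[:, None]
sigma = np.linspace(0.3, 6.0, 115)[None, :]
means = {k: grid_means(v, mu, sigma) for k, v in log_targets(x, mu, sigma, 0.5, 0.5).items()}
for name, (m, s2) in means.items():
    print(f"{name:>5}: E[mu] = {m:6.3f}, E[sigma^2] = {s2:6.3f}")
```

In this toy run the ordinary posterior mean of μ is pulled towards the outliers, while both robust exponents keep it near zero; the scale comparison discussed in [12] would need a more careful study than this single data set.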

Let be the target parameter. We now assume the following regularity conditions on the density function . We use indices to denote derivatives of with respect to the components of the parameter . For example, and for .

- (A1)

- The support of the density function does not depend on unknown parameter , and is fifth-order differentiable with respect to in neighborhood U of .

- (A2)

- The interchange of the order of integration with respect to x and differentiation as is justified. The expectations:are all finite, and exists such that:and for all , where and , while is the expectation of X with respect to a probability density function g.

- (A3)

- For any , with probability one:for some and for all sufficiently large n.

The matrices and are defined by:

respectively. We also assume that and are positive definite matrices. Under these conditions, References [11,12] discussed several asymptotic properties of the quasi-posterior distributions and the corresponding posterior means.

In terms of the higher order asymptotic theory, Giummolè et al. [17] derived the asymptotic expansion of such quasi-posterior distributions. We now introduce the notation that will be used in the rest of the paper. Reference [17] presented the following theorem.

Theorem 1

(Giummolè et al. [17]). Under the conditions (A1)–(A3), we assume that is a consistent solution of and as . Then, for any prior density function that is third-order differentiable and positive at , it holds that:

where is the quasi-posterior density function of the normalized random variable given , is the density function of a p-variate normal distribution with a zero mean vector and covariance matrix A, , , and:

Proof.

The proof is given in Appendix A of [17]. □

As previously mentioned, quasi-posterior distributions depend on the cross-entropy induced by a divergence and on a prior distribution. If we have some information about the unknown parameters θ, we can use a prior distribution that takes such prior information into account. However, in the absence of prior information, we often use prior distributions known as default or objective priors. Reference [17] proposed the reference prior for quasi-posterior distributions, which is a type of objective prior (see [15]). The reference prior is obtained by asymptotically maximizing the expected KL divergence between the prior and posterior distributions. As a generalization of the reference prior, Reference [19] discussed such priors under a general divergence measure known as the α-divergence (see also [20,21]). The reference prior under the α-divergence is given by asymptotically maximizing the expected α-divergence:

where the α-divergence is defined as:

which corresponds to the KL divergence as $\alpha \to 0$, the Hellinger divergence for $\alpha = 1/2$, and the $\chi^2$-divergence for $\alpha = -1$. Reference [17] derived reference priors with the α-divergence under quasi-posteriors based on several proper scoring rules, such as the Tsallis scoring rule and the Hyvärinen scoring rule. We note that the former is the same as the density power score of [3], up to minor notational modifications.
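These special cases can be checked numerically. The sketch assumes the common parametrisation $D_\alpha(p, q) = \{1 - \int p^{1-\alpha} q^{\alpha}\,dx\}/\{\alpha(1-\alpha)\}$ (which argument carries the power α is our assumption) and uses two illustrative normal densities p = N(0, 1) and q = N(1, 1), for which the reference values have closed forms: KL(p‖q) = 1/2 as α → 0, $4(1 - e^{-1/8})$ at α = 1/2 (a multiple of the squared Hellinger distance), and $(e - 1)/2 = \chi^2(p\,\|\,q)/2$ at α = −1.

```python
import numpy as np
from scipy.stats import norm

# Illustrative densities p = N(0, 1) and q = N(1, 1), on a wide uniform grid.
x = np.linspace(-30.0, 31.0, 610_001)
dx = x[1] - x[0]
log_p = norm.logpdf(x, 0.0, 1.0)
log_q = norm.logpdf(x, 1.0, 1.0)

def alpha_divergence(alpha):
    """D_alpha(p, q) = (1 - int p^{1-alpha} q^alpha) / (alpha (1 - alpha)), alpha not in {0, 1}."""
    # work in log space so that negative alpha (q^alpha with tiny q) stays finite
    integral = np.sum(np.exp((1.0 - alpha) * log_p + alpha * log_q)) * dx
    return (1.0 - integral) / (alpha * (1.0 - alpha))

print(alpha_divergence(1e-5), 0.5)                        # KL(p || q)
print(alpha_divergence(0.5), 4 * (1 - np.exp(-1 / 8)))    # 4 (1 - Bhattacharyya coefficient)
print(alpha_divergence(-1.0), (np.e - 1) / 2)             # chi^2(p || q) / 2
```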

Theorem 2

(Giummolè et al. [17]). When , the reference prior that asymptotically maximizes the expected α-divergence between the quasi-posterior and prior distributions is given by:

The result of Theorem 2 is similar to those of [19,20]. Objective priors such as the one in the above theorem are useful because they can be derived from the assumed model. However, such priors have no statistical guarantee when the model is misspecified, as in Huber’s ε-contamination model. In other words, the reference prior in Theorem 2 depends on the data-generating distribution g through expectations taken under g, which do not reduce to expectations under the model when the contamination ratio is not small, as in heavy contamination cases. In the next section, we consider objective priors under the γ-posterior that are robust against such unknown quantities.

3. Main Results

In this section, we present our main results. Our contributions are as follows: we derive moment matching priors for quasi-posterior distributions (Theorem 3), and we prove that the proposed priors are robust under a condition on the tail of the contamination distribution (Theorem 4).

3.1. Moment Matching Priors

The moment matching priors proposed by [16] are priors that match the posterior mean and MLE up to the higher order (see also [22]). In this section, we attempt to extend the results of [16] to the context of quasi-posterior distributions. Our goal is to identify a prior such that the difference between the quasi-posterior mean and frequentist minimum divergence estimator converges to zero up to the order of . From Theorem 1, we have the following theorem.

Theorem 3

Let , , and . Under the same assumptions as Theorem 1, it holds that:

as , where , , and . Furthermore, if we set a prior that satisfies:

for all , then it holds that:

for as , where .

Hereafter, the prior that satisfies Equation (4) up to the order of for all is referred to as a moment matching prior, and we denote it by .

Proof.

From the asymptotic expansion of the posterior density (3), we have the asymptotic expansion of the posterior mean for as:

for . The integral in the above equation is calculated by:

In general, it is not easy to obtain the moment matching priors explicitly. Two examples are given as follows.

Example 1.

When , the moment matching prior is given by:

for a constant C, where is the third derivative of g. This prior is very similar to that of [16], but the quantities and differ from those in [16].

Example 2.

When , we put:

where . If only depends on for all and does not depend on other parameters , we have:

Then, we can solve the differential equation given by (4), and the moment matching prior is obtained by

3.2. Robustness of Objective Priors

For data that may be heavily contaminated, we cannot assume that the contamination ratio is approximately zero. In general, reference and moment matching priors depend on the contamination ratio and the contamination distribution. Therefore, we cannot directly use such objective priors for quasi-posterior distributions, because the contamination ratio and the contamination distribution are unknown. In this subsection, we prove that priors based on the γ-divergence are robust against these unknown quantities. In addition to (1), we assume the following condition on the contamination distribution:

for all and an appropriately large constant (see also [5]). Assumption (7) is also a basis for proving the robustness of the minimum γ-divergence estimator against outliers in [5]. Then, we have the following theorem.

Theorem 4

Then, it holds that:

for , where . The notation follows that of [5]. Furthermore, from the above results, the reference prior and Equation (4) are approximately given by:

where and .

Proof.

Put , , and . First, from Hölder’s inequality and Lyapunov’s inequality, it holds that:

for . Using (10) and the results in Appendix A, we have:

where:

for . Similarly, it also holds that:

for . Since,

the proof of (8) is complete. It is also easy to see the result of (9) from (8). □

It should be noted that (8) resembles Theorem 5.1 in [5]. However, the functions involved here and their derivatives differ from those in [5], so the derivatives and the proof of (8) are given in Appendix A. Theorem 4 shows that the expectations appearing on the right-hand sides of these approximations depend only on the underlying model $f_\theta$ and not on the contamination distribution. Furthermore, the reference and moment matching priors for the γ-posterior are determined by the parametric model $f_\theta$ alone; that is, they depend on neither the contamination ratio nor the contamination distribution. For example, for the normal distribution $N(\mu, \sigma^2)$, the reference and moment matching priors are given by:

However, the reference and moment matching priors under the β-posterior depend on unknown quantities in the data-generating distribution unless $\varepsilon = 0$, since the corresponding quantities have the following forms:

where:

The priors given by (11) can be practically used under the condition (7) even if the contamination ratio is not small.
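Why such priors can be free of ε is easiest to see in the approximation underlying the robustness results of [5]: if δ lies in the tail of f, then $\int g f^\gamma\,dx \approx (1-\varepsilon)\int f^{1+\gamma}\,dx$, so the factor $(1-\varepsilon)$ cancels from ratios of γ-weighted integrals. The sketch below checks this numerically for the illustrative choice f = N(0, 1), δ = N(10, 1), γ = 0.5, using a γ-weighted second moment whose model value is $1/(1+\gamma)$. It illustrates the mechanism only; it does not compute the priors in (11), and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import norm

x = np.linspace(-30.0, 40.0, 700_001)
dx = x[1] - x[0]
gamma = 0.5
f = norm.pdf(x)                 # underlying model density f = N(0, 1)
delta = norm.pdf(x, loc=10.0)   # contamination density far in the tail of f
fg = f ** gamma

def mass_ratio(eps):
    """int g f^gamma / int f^{1+gamma} for g = (1 - eps) f + eps delta; about 1 - eps."""
    g = (1.0 - eps) * f + eps * delta
    return np.sum(g * fg) / np.sum(f * fg)

def weighted_moment(eps):
    """int g f^gamma x^2 / int g f^gamma; the factor (1 - eps) cancels in the ratio."""
    g = (1.0 - eps) * f + eps * delta
    return np.sum(g * fg * x**2) / np.sum(g * fg)

for eps in (0.0, 0.1, 0.3, 0.5):
    print(f"eps = {eps:.1f}: mass ratio {mass_ratio(eps):.6f}, weighted moment {weighted_moment(eps):.6f}")
```

Even at ε = 0.5 the weighted moment stays at its model value 2/3 to several decimal places, whereas an unweighted second moment under g would be dominated by the contamination.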

4. Simulation Studies

4.1. Setting and Results

We present the performance of posterior means under reference and moment matching priors through some simulation studies. In this section, we assume that the parametric model is the normal distribution with mean $\mu$ and variance $\sigma^2$, and we consider the joint estimation problem for $\mu$ and $\sigma^2$. We assume that the true values of $\mu$ and $\sigma^2$ are zero and one, respectively. We also assume that the contamination distribution is the normal distribution with mean $\nu$ and variance one. In other words, the data-generating distribution is expressed by:

where $\varepsilon$ is the contamination ratio and n is the sample size. We compare the performance of the estimators in terms of empirical bias and mean squared error (MSE) among three methods: the ordinary KL divergence-based posterior, the β-posterior, and the γ-posterior (our proposal). We also employ three prior distributions for $(\mu, \sigma^2)$, namely (i) the uniform prior, (ii) the reference prior, and (iii) the moment matching prior.
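The data-generating design above can be simulated as in the sketch below, which draws from $(1-\varepsilon)N(0, 1) + \varepsilon N(\nu, 1)$ and computes the empirical bias and MSE of an arbitrary estimator over repeated samples. As a sanity check it uses the sample mean, whose bias under this mixture is exactly εν and whose variance is $\{1 + \varepsilon(1-\varepsilon)\nu^2\}/n$; plugging in a posterior-mean routine instead would reproduce the structure of the study. The function names, the sample size, and the number of replications are hypothetical and much smaller than in the study.

```python
import numpy as np

def contaminated_sample(n, eps, nu, rng):
    """Draw n values from (1 - eps) N(0, 1) + eps N(nu, 1)."""
    is_outlier = rng.random(n) < eps
    return rng.normal(np.where(is_outlier, nu, 0.0), 1.0)

def empirical_bias_mse(estimator, true_value, n, eps, nu, reps, seed=0):
    """Empirical bias and MSE of `estimator` over `reps` simulated data sets."""
    rng = np.random.default_rng(seed)
    est = np.array([estimator(contaminated_sample(n, eps, nu, rng)) for _ in range(reps)])
    return est.mean() - true_value, np.mean((est - true_value) ** 2)

bias, mse = empirical_bias_mse(np.mean, 0.0, n=50, eps=0.2, nu=6.0, reps=2000)
print(bias, mse)   # the sample mean's bias should be close to eps * nu = 1.2
```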

Since exact calculations of posterior means are not easy, we use importance sampling Monte Carlo with a normal proposal distribution for $\mu$ and an inverse gamma proposal distribution IG(a, b) with parameters a and b for $\sigma^2$ (for the details of importance sampling, see, e.g., [23]). We carry out importance sampling with 10,000 draws, and we compute the empirical bias and MSE of the posterior means of $(\mu, \sigma^2)$ over 10,000 replications. The simulation results are reported in Table 1, Table 2, Table 3 and Table 4. The reference and moment matching priors for the γ-posterior are given by (11), and those for the β-posterior are “formally” given as follows:

where is a constant given by:

The term “formally” means the following: since the reference and moment matching priors for the β-posterior strictly depend on the unknown contamination ratio and contamination distribution, we set $\varepsilon = 0$ in these priors. Our proposed objective priors, on the other hand, require no such assumption; we assume only condition (7). We note that [17] also used the same formal reference prior in their simulation studies.
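The importance sampling step can be sketched as follows for the γ-posterior. The proposal settings of the study are not reproduced here, so the proposals below (a normal distribution for μ centred at the sample median, and an inverse gamma distribution for σ² built from the median absolute deviation) are hypothetical choices, as is the assumed form of the γ-posterior exponent, written up to an additive constant. Under that exponent, which stays bounded, a flat prior on (μ, σ²) would not give an integrable target, so a vague but proper prior is used instead; it is also hypothetical. The weights are self-normalised, and the effective sample size $1/\sum_k w_k^2$ is returned as a diagnostic.

```python
import numpy as np
from scipy.special import logsumexp
from scipy.stats import invgamma, norm

def gamma_exponent(x, mu, s2, gamma):
    """Assumed gamma-posterior exponent for N(mu, s2), one value per (mu, s2) draw."""
    logf = norm.logpdf(x[None, :], loc=mu[:, None], scale=np.sqrt(s2)[:, None])
    log_int = -0.5 * gamma * np.log(2.0 * np.pi * s2) - 0.5 * np.log1p(gamma)  # log int f^{1+gamma}
    log_c = gamma / (1.0 + gamma) * log_int
    return np.exp(gamma * logf - log_c[:, None]).sum(axis=1) / gamma

def is_posterior_mean(x, gamma, draws=10_000, seed=0):
    """Self-normalised importance sampling estimates of E[mu], E[s2], and the ESS."""
    rng = np.random.default_rng(seed)
    med = np.median(x)
    mad2 = (1.4826 * np.median(np.abs(x - med))) ** 2      # robust variance guess
    prop_mu = norm(loc=med, scale=0.5)                      # hypothetical proposal for mu
    prop_s2 = invgamma(a=3.0, scale=2.0 * mad2)             # hypothetical IG(3, 2 * mad2), mean mad2
    mu = prop_mu.rvs(size=draws, random_state=rng)
    s2 = prop_s2.rvs(size=draws, random_state=rng)
    # hypothetical vague proper prior: mu ~ N(0, 10^2), s2 ~ IG(1, 1)
    log_prior = norm(0.0, 10.0).logpdf(mu) + invgamma(a=1.0, scale=1.0).logpdf(s2)
    logw = gamma_exponent(x, mu, s2, gamma) + log_prior - prop_mu.logpdf(mu) - prop_s2.logpdf(s2)
    w = np.exp(logw - logsumexp(logw))                      # self-normalised weights
    return float(w @ mu), float(w @ s2), float(1.0 / np.sum(w**2))

rng = np.random.default_rng(3)
x = np.concatenate([rng.normal(0.0, 1.0, 80), rng.normal(6.0, 1.0, 20)])   # 20% contamination at 6
post_mu, post_s2, ess = is_posterior_mean(x, gamma=0.5)
print(post_mu, post_s2, ess)
```

Centring the proposals on robust summaries rather than on the sample mean and variance keeps them near the bulk of the data, which helps the effective sample size when the contamination ratio is large.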

Table 1.

Empirical biases of the posterior means for $\mu$.

Table 2.

Empirical biases of the posterior means for $\sigma^2$.

Table 3.

Empirical MSEs of the posterior means for $\mu$.

Table 4.

Empirical MSEs of the posterior means for $\sigma^2$.

The simulation results for the empirical bias and MSE of the posterior means of $\mu$ and $\sigma^2$ are given in Table 1, Table 2, Table 3 and Table 4. We consider three prior distributions for $(\mu, \sigma^2)$, namely the uniform, reference, and moment matching priors. In these tables, we set , and . We also set the tuning parameters for the β- and γ-posteriors as .

Table 1 and Table 3 show the empirical bias and MSE of the posterior means of the mean parameter $\mu$ based on the standard posterior and the β- and γ-posteriors. In the presence of outliers and for a large sample size, the empirical bias and MSE of the two robust methods are smaller than those of the standard posterior mean (denoted by “Bayes” in Table 1, Table 2, Table 3 and Table 4). When there are no outliers ($\varepsilon = 0$), the three methods appear comparable. On the other hand, when and , the standard posterior mean deteriorates, while the posterior means based on the β-posterior and the γ-posterior perform comparably in terms of both empirical bias and MSE.

We also present the results of the estimation of the variance parameter $\sigma^2$ in Table 2 and Table 4. When there are no outliers, the robust Bayes estimators under the uniform prior perform slightly worse. On the other hand, the reference and moment matching priors provide relatively reasonable results even if the sample size is small and . The empirical bias and MSE of the β-posterior and γ-posterior means for $\sigma^2$ remain small even if the contamination ratio is not small. In particular, the empirical bias and MSE of the γ-posterior means for $\sigma^2$ are drastically smaller than those of the β-posterior.

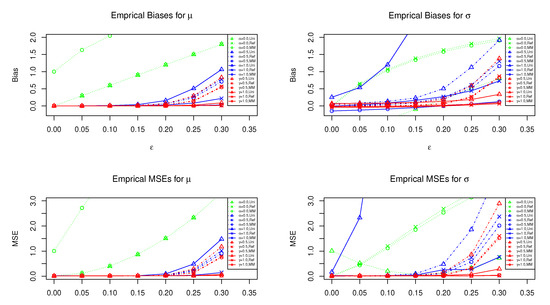

Figure 1 shows the empirical bias and MSE of the posterior means of $\mu$ and $\sigma^2$ under the uniform, reference, and moment matching priors when (fixed) and the contamination ratio $\varepsilon$ varies from to . In all cases, the standard posterior means (i.e., cases ) do not work well. For the estimation of the mean parameter $\mu$, the β- and γ-posterior means seem reasonable for values of $\varepsilon$ between and . In particular, the -posterior means under the reference and moment matching priors perform better even if . For the estimation of the variance parameter $\sigma^2$, the -posterior means under the uniform prior have larger bias and MSE than the other methods. The -posterior mean with may still be better than the other competitors for any . For , the β- and γ-posterior means seem comparable.

Figure 1.

The horizontal axis is the contamination ratio . The red lines show the empirical bias and MSE of the -posterior means under the three priors when and . Similarly, the blue and green lines show that of the -posterior and ordinary posterior means, respectively. The uniform, reference, and moment matching priors are denoted by “Uni”, “Ref”, and “MM”, respectively.

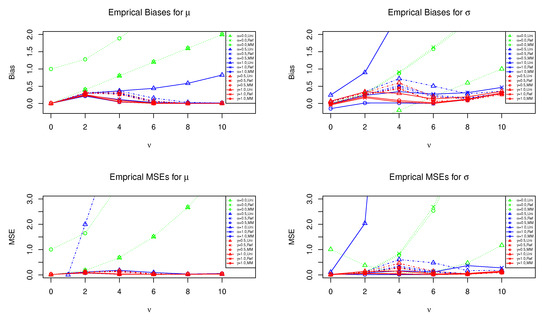

Figure 2 presents the empirical bias and MSE of the posterior means of $\mu$ and $\sigma^2$ under the same priors as in Figure 1 when the contamination ratio is (fixed) and the location parameter of the contamination distribution varies from to . For the estimation of the mean parameter $\mu$ in Figure 2, the empirical bias and MSE of the robust estimators seem satisfactory regardless of , except for the case of the -posterior under the uniform prior. Although some differences appear near , the -posterior means with perform better for the estimation of both the mean and the variance for all .

Figure 2.

The horizontal axis is the location parameter of the contamination distribution. The red lines show the empirical bias and MSE of the -posterior means under the three priors when and . Similarly, the blue and green lines show that of the -posterior and ordinary posterior means, respectively. The uniform, reference, and moment matching priors are denoted by “Uni”, “Ref”, and “MM”, respectively.

In these simulation studies, the γ-posterior mean under the reference and moment matching priors seems to perform better for the joint estimation of $(\mu, \sigma^2)$ in most scenarios. Although we provide results only for the univariate normal distribution, other distributions (including multivariate ones) should also be considered in future work.


4.2. Selection of Tuning Parameters

The selection of the tuning parameter γ (or β) is very challenging, and to the best of our knowledge, there is no optimal choice of γ. The tuning parameter controls the degree of robustness; that is, a larger γ yields higher robustness. However, there is a trade-off between the robustness and the efficiency of estimators. One solution to this problem is to use the asymptotic relative efficiency (ARE) (see, e.g., [11]). It should be noted that [11] only dealt with a one-parameter case. In general, the asymptotic relative efficiency of the robust posterior mean of a p-dimensional parameter θ relative to the usual posterior mean is defined by:

(see, e.g., [24]). This is the ratio of the determinants of the covariance matrices raised to the power $1/p$, where p is the dimension of the parameter θ. We now calculate the ARE in our simulation setting. After some calculations, the asymptotic relative efficiency is given by:

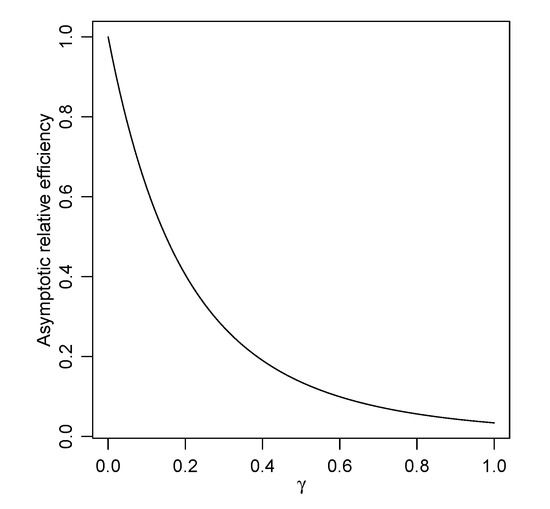

for $\gamma > 0$. Note that the ARE tends to 1 as $\gamma \to 0$. Hence, we may choose γ so as to allow only a small loss of efficiency. For example, if we require a certain level of asymptotic relative efficiency, we may choose γ as the solution of the equation that sets the ARE equal to that level (see Table 5). The curve of the ARE as a function of γ is also given in Figure 3. Several authors have provided methods for selecting tuning parameters (e.g., [25,26,27]). Reference [5] focused on reducing the latent bias of the estimator and recommended a specific value of γ for the normal mean-variance estimation problem; however, this choice seems unreasonable in terms of the asymptotic relative efficiency (see Table 5 and Figure 3). To the best of our knowledge, there is no method that is both robust and efficient under heavy contamination. Hence, methods with higher efficiency under heavy contamination should be considered in future work.
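To make the selection rule concrete, the sketch below assumes (i) that the γ-posterior mean has the sandwich covariance $J^{-1} K J^{-1}$ of the per-observation loss $-f_\theta(x)^\gamma / (\int f_\theta^{1+\gamma}\,dx)^{\gamma/(1+\gamma)}$, and (ii) the uncontaminated normal model of Section 4.1 with $\theta = (\mu, \sigma^2)$ and p = 2. Under these assumptions, our own hand calculation gives $\mathrm{ARE}(\gamma) = \frac{(1+2\gamma)^2}{(1+\gamma)^3}\sqrt{\frac{2}{2+4\gamma+3\gamma^2}}$, which tends to 1 as γ → 0. This closed form is our derivation, not quoted from the text, so treat it as an assumption. It is strictly decreasing in γ, so ARE(γ) = c has a unique root for 0 < c < 1, which the hypothetical helper `gamma_for_efficiency` finds by bisection.

```python
import math

def are_gamma(gamma):
    """Hypothetical closed-form ARE for (mu, sigma^2) under the assumptions stated above."""
    g = gamma
    return (1 + 2 * g) ** 2 / (1 + g) ** 3 * math.sqrt(2.0 / (2 + 4 * g + 3 * g * g))

def gamma_for_efficiency(c, lo=1e-8, hi=5.0, tol=1e-12):
    """Largest gamma whose ARE is at least c, found by bisection (ARE decreases in gamma)."""
    if not 0.0 < c < 1.0:
        raise ValueError("target efficiency c must lie in (0, 1)")
    if are_gamma(hi) > c:
        raise ValueError("upper bracket too small for this c")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if are_gamma(mid) > c:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for c in (0.99, 0.95, 0.90, 0.80):
    g = gamma_for_efficiency(c)
    print(f"c = {c:.2f}: gamma = {g:.4f}, ARE = {are_gamma(g):.4f}")
```

Bisection is used because it only needs monotonicity and a bracket, which keeps the sketch free of derivative calculations.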

Table 5.

The values of γ and the corresponding asymptotic relative efficiencies.

Figure 3.

The curve of the asymptotic relative efficiency for normal mean and variance estimation under the γ-posterior.
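An ARE curve of this kind can also be approximated without hand calculation. The sketch below forms $J = E[\nabla^2 \ell]$ and $K = E[\nabla \ell\, \nabla \ell^\top]$ at $(\mu, \sigma^2) = (0, 1)$ for the assumed per-observation loss $\ell(\theta, x) = -f_\theta(x)^\gamma / (\int f_\theta^{1+\gamma}\,dx)^{\gamma/(1+\gamma)}$, using central finite differences for the derivatives and Gauss–Hermite quadrature (`numpy.polynomial.hermite_e.hermegauss`, whose weights integrate against $e^{-x^2/2}$) for expectations under N(0, 1). It returns $\{\det V_{\mathrm{MLE}} / \det(J^{-1}KJ^{-1})\}^{1/2}$ with $V_{\mathrm{MLE}} = \mathrm{diag}(1, 2)$, the inverse Fisher information for $(\mu, \sigma^2)$. Positive constant factors in the loss are dropped because they cancel in the sandwich. This is a sketch under those assumptions, not the computation behind Figure 3.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

def loss(theta, x, gamma):
    """Assumed per-observation loss for N(mu, v), up to a positive constant factor."""
    mu, v = theta
    a = gamma / (2.0 * (1.0 + gamma))   # f^gamma / (int f^{1+gamma})^{gamma/(1+gamma)} ∝ v^{-a} exp(...)
    return -v ** (-a) * np.exp(-gamma * (x - mu) ** 2 / (2.0 * v))

def are_sandwich(gamma, nodes=80, h=1e-4):
    """ARE = {det V_MLE / det(J^{-1} K J^{-1})}^{1/2} at (mu, v) = (0, 1)."""
    x, w = hermegauss(nodes)
    w = w / np.sqrt(2.0 * np.pi)        # weights now give expectations under N(0, 1)
    theta0 = np.array([0.0, 1.0])
    e = np.eye(2) * h
    L = lambda t: loss(t, x, gamma)
    grad = np.array([(L(theta0 + e[i]) - L(theta0 - e[i])) / (2 * h) for i in range(2)])
    hess = np.array([[(L(theta0 + e[i] + e[j]) - L(theta0 + e[i] - e[j])
                       - L(theta0 - e[i] + e[j]) + L(theta0 - e[i] - e[j])) / (4 * h * h)
                      for j in range(2)] for i in range(2)])
    J = hess @ w                        # E[Hessian of the loss], shape (2, 2)
    K = (grad * w) @ grad.T             # E[gradient gradient^T], shape (2, 2)
    J_inv = np.linalg.inv(J)
    V = J_inv @ K @ J_inv               # sandwich covariance of the robust estimator
    V_mle = np.diag([1.0, 2.0])         # inverse Fisher information for (mu, sigma^2) at (0, 1)
    return (np.linalg.det(V_mle) / np.linalg.det(V)) ** 0.5

for g in (0.01, 0.1, 0.25, 0.5, 1.0):
    print(f"gamma = {g:4.2f}: ARE = {are_sandwich(g):.4f}")
```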

5. Concluding Remarks

We consider objective priors for divergence-based robust Bayesian estimation. In particular, we prove that the reference and moment matching priors under the quasi-posterior based on the γ-divergence are robust against unknown quantities in the data-generating distribution. The performance of the corresponding posterior means is illustrated through some simulation studies. However, the proposed objective priors are often improper, and showing the propriety of the corresponding posteriors remains for future research. Our results could also be extended to other settings. For example, Kanamori and Fujisawa [28] proposed estimating the contamination ratio using an unnormalized model. Examining this problem from the Bayesian perspective is also challenging, because it is unclear how to set a prior distribution for an unknown contamination ratio. Furthermore, it would be interesting to consider an optimal data-dependent choice of the tuning parameter γ.

Author Contributions

T.N. and S.H. contributed to the method and algorithm; T.N. and S.H. performed the experiments; T.N. and S.H. wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the JSPS Grant-in-Aid for Early-Career Scientists Grant Number JP19K14597 and the JSPS Grant-in-Aid for Young Scientists (B) Grant Number JP17K14233.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Acknowledgments

The authors are grateful to the referees for their valuable comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Some Derivative Functions

We now put , , , and and let a norm be defined by:

We then obtain derivative functions of with respect to as follows:

Similarly, we obtain derivative functions of as follows:

where:

References

- Huber, P.J.; Ronchetti, E.M. Robust Statistics, 2nd ed.; Wiley: Hoboken, NJ, USA, 2009.

- Basu, A.; Shioya, H.; Park, C. Statistical Inference: The Minimum Distance Approach; Chapman & Hall: Boca Raton, FL, USA, 2011.

- Basu, A.; Harris, I.R.; Hjort, N.L.; Jones, M. Robust and efficient estimation by minimising a density power divergence. Biometrika 1998, 85, 549–559.

- Jones, M.; Hjort, N.L.; Harris, I.R.; Basu, A. A comparison of related density-based minimum divergence estimators. Biometrika 2001, 88, 865–873.

- Fujisawa, H.; Eguchi, S. Robust parameter estimation with a small bias against heavy contamination. J. Multivar. Anal. 2008, 99, 2053–2081.

- Hirose, K.; Fujisawa, H.; Sese, J. Robust sparse Gaussian graphical modeling. J. Multivar. Anal. 2016, 161, 172–190.

- Kawashima, T.; Fujisawa, H. Robust and sparse regression via γ-divergence. Entropy 2017, 19, 608.

- Hirose, K.; Masuda, H. Robust relative error estimation. Entropy 2018, 20, 632.

- Bissiri, P.G.; Holmes, C.C.; Walker, S.G. A general framework for updating belief distributions. J. R. Stat. Soc. Ser. B Stat. Methodol. 2016, 78, 1103–1130.

- Hooker, G.; Vidyashankar, A.N. Bayesian model robustness via disparities. Test 2014, 23, 556–584.

- Ghosh, A.; Basu, A. Robust Bayes estimation using the density power divergence. Ann. Inst. Stat. Math. 2016, 68, 413–437.

- Nakagawa, T.; Hashimoto, S. Robust Bayesian inference via γ-divergence. Commun. Stat. Theory Methods 2020, 49, 343–360.

- Jewson, J.; Smith, J.Q.; Holmes, C. Principles of Bayesian inference using general divergence criteria. Entropy 2018, 20, 442.

- Hashimoto, S.; Sugasawa, S. Robust Bayesian regression with synthetic posterior distributions. Entropy 2020, 22, 661.

- Bernardo, J.M. Reference posterior distributions for Bayesian inference. J. R. Stat. Soc. Ser. B Methodol. 1979, 41, 113–128.

- Ghosh, M.; Liu, R. Moment matching priors. Sankhya A 2011, 73, 185–201.

- Giummolè, F.; Mameli, V.; Ruli, E.; Ventura, L. Objective Bayesian inference with proper scoring rules. Test 2019, 28, 728–755.

- Kanamori, T.; Fujisawa, H. Affine invariant divergences associated with proper composite scoring rules and their applications. Bernoulli 2014, 20, 2278–2304.

- Ghosh, M.; Mergel, V.; Liu, R. A general divergence criterion for prior selection. Ann. Inst. Stat. Math. 2011, 63, 43–58.

- Liu, R.; Chakrabarti, A.; Samanta, T.; Ghosh, J.K.; Ghosh, M. On divergence measures leading to Jeffreys and other reference priors. Bayesian Anal. 2014, 9, 331–370.

- Hashimoto, S. Reference priors via α-divergence for a certain non-regular model in the presence of a nuisance parameter. J. Stat. Plan. Inference 2021, 213, 162–178.

- Hashimoto, S. Moment matching priors for non-regular models. J. Stat. Plan. Inference 2019, 203, 169–177.

- Robert, C.P.; Casella, G. Monte Carlo Statistical Methods; Springer: Berlin/Heidelberg, Germany, 2004.

- Serfling, R. Approximation Theorems of Mathematical Statistics; Wiley: Hoboken, NJ, USA, 1980.

- Warwick, J.; Jones, M. Choosing a robustness tuning parameter. J. Stat. Comput. Simul. 2005, 75, 581–588.

- Sugasawa, S. Robust empirical Bayes small area estimation with density power divergence. Biometrika 2020, 107, 467–480.

- Basak, S.; Basu, A.; Jones, M. On the ‘optimal’ density power divergence tuning parameter. J. Appl. Stat. 2020, 1–21.

- Kanamori, T.; Fujisawa, H. Robust estimation under heavy contamination using unnormalized models. Biometrika 2015, 102, 559–572.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).