Abstract

Although linear regression models are fundamental tools in statistical science, the estimation results can be sensitive to outliers. While several robust methods have been proposed in frequentist frameworks, statistical inference is not necessarily straightforward. We here propose a Bayesian approach to robust inference on linear regression models using synthetic posterior distributions based on γ-divergence, which enables us to naturally assess the uncertainty of the estimation through the posterior distribution. We also consider the use of shrinkage priors for the regression coefficients to carry out robust Bayesian variable selection and estimation simultaneously. We develop an efficient posterior computation algorithm by adopting the Bayesian bootstrap within Gibbs sampling. The performance of the proposed method is illustrated through simulation studies and applications to famous datasets.

1. Introduction

Linear regression models are fundamental tools in statistical science. However, it is recognized that the model can be highly influenced by outliers, which may result in biased or inefficient statistical inference on regression coefficients and an error variance. In the content of frequentist inference, general robust estimation methods using divergence e.g., [1,2,3] are known to be appealing, and specialized methods for regression models have been proposed [4,5]. On the other hand, the valid inference under existing outliers would be a challenging problem even if the divergence method is adopted, and the problem is more difficult when the penalized methods for variable selection such as Lasso [6] are incorporated.

In this paper, we develop a Bayesian approach for robust inference in linear regression models based on γ-divergence [2,3]. We adopt a framework of synthetic (general) posterior inference e.g., [7,8,9,10,11], and define the synthetic posterior distribution of the unknown parameters in the linear regression models by replacing the log-likelihood function with γ-divergence, which enables us to naturally carry out point estimation as well as uncertainty quantification based on the posterior. For the prior distributions of the regression coefficients, we assign a class of shrinkage priors expressed as a scale mixture of normals that includes typical ones such as Laplace [12] and horseshoe [13] priors, leading to robust Bayesian variable selection and estimation at the same time. However, the main difficulty of using the proposed synthetic posterior is that the form of the posterior is a complicated function of the unknown parameters, thereby the efficient posterior computation algorithms as done in the standard Bayesian linear regression models are no more applicable. To solve the issue, we develop an efficient sampling algorithm using the Bayesian bootstrap e.g., [14,15,16,17] within Gibbs sampling. In the step of the Bayesian bootstrap, we maximize the randomized posterior distribution to generate a sample from the full conditional distributions of the unknown parameters, which will be shown to be efficiently carried out by modifying the Majorization-Minimization (MM) algorithm given in Kawashima and Fujisawa [4]. The advantage of the proposed algorithm is that there is no rejection steps that are typically required in the standard Metropolis-Hastings algorithm, and performance in terms of mixing and autocorrelation are quite reasonable as shown in our numerical results. R code implementing the proposed method is available at https://github.com/sshonosuke/RBR (see the Supplementary Materials).

In the context of Bayesian inference, standard approaches for robust inference are replacing the normal distribution with heavy-tailed distributions for the error term. The most typical choice is the t-distribution, but this approach is not necessarily a good solution and still suffer from undesirable performance under some scenarios of outliers as shown in our simulation studies. Recently, Gagnon et al. [18] proposed a more robust error distribution than the Cauchy distribution, but the new distribution does not admit stochastic representations that facilitate efficient posterior computation, which would be quite problematic especially when the number of covariates is large as in most modern applications. Moreover, the advantage of the synthetic posterior approach compared with the use of heavy-tailed distribution is that the synthetic posterior can still be applicable to other types of regression models such as logistic and Poisson regressions although we focus only on the application to linear regression in this paper.

The paper is organized as follows: In Section 2, we introduce the setting of our model and formulate the synthetic posterior distribution. Next, we consider the Bayesian linear regression model under shrinkage priors expressed as scale mixtures of normals. Then a new posterior computation algorithm for synthetic posterior with shrinkage priors is proposed. In Section 3, we provide results of numerical studies and real data analysis. Under several types of contaminations, it is shown that the proposed method outperforms the original Bayesian lasso and Bayesian lasso with the t-distribution as the error distribution. Also, we show mixing properties of the proposed algorithm compared with other options.

2. Robust Bayesian Regression via Synthetic Posterior

2.1. Settings and Synthetic Posterior

Suppose we have independent observation for , where is a continuous response and is a p-dimensional vector of covariates. We consider fitting a regression model with . Let be a prior distribution for the model parameters β and . Then the standard posterior distribution of is given by

where is the density function of , and D denotes the set of sampled data. When there exist outliers which have large residuals , the posterior distribution (1) is known to be sensitive to such outliers, and it can produce biased or inefficient posterior inference.

To overcome the problem, we propose replacing the log-likelihood function in (1) with robust alternatives. Specifically, we employ γ-divergence [2,3] of the form:

where

Note that γ is a tuning parameter that controls the robustness, and reduces to the log-likelihood function as . We now define the following synthetic posterior based on γ-divergence:

Since reduces to the standard posterior (1) under , the synthetic posterior (2) can be regarded as a natural extension of the standard posterior (1).

A robustness property of the posterior distribution (2) can be checked by considering the framework adopted in Gagnon et al. [18]. Let be an indicator of being non-outlying observations, that is, if is not an outlier and if is an outlier. For outliers, we consider a situation that and . Under the framework, it follows that for arbitral , where Θ is a compact set. Therefore, the posterior distribution (2) converges to the distribution proportional to

as , where is the number of non-outlying observations. This means that the information of outliers are automatically ignored in the posterior distribution (2) as long as the outlier values are extreme, that is, the outliers and non-outliers are well-separated.

2.2. Posterior Computation

We first consider the standard prior for , namely, normal prior for β and inverse gamma prior for , independently, given by , where and a are hyperparameters. Since the synthetic posterior distribution (2) is not a familiar form, the posterior computation to generate random samples of is not straightforward. We use crude approaches such as Metropolis-Hastings algorithm, but the acceptance probabilities might be very small even when the dimension of covariates is moderate. To avoid such undesirable situation, we adopt approximated sampling strategy using weighted likelihood bootstrap [15] which generate posterior samples as the minimizer of the weighted objective function:

where , so that . The minimization of (3) can be efficiently carried by MM algorithm [19] obtained by slight modification of one given in Kawashima and Fujisawa [4]. From Jensen’s inequality, with current values of the model parameters, the upper bound of the objective function (3) can be obtained as follows:

where C is an irrelevant constant and is a new weight defined as

noting that . The upper bound in (4) can be easily minimized, so that the updating process is given by

Therefore, the MM algorithm to get the minimizer of (3) repeats calculation of the weight (5) and updating parameter values via (6) until convergence.

For generating B random samples from synthetic posterior distribution (2), we generate B samples of ’s and solve minimization problems of (3) for B times via the MM algorithm. The resulting samples can be approximately regarded as posterior samples of (2), and the approximation error would be negligible when n is large e.g., [16,17].

2.3. Incorporating Shrinkage Priors

When the dimension of is moderate or large, it is desirable to select a subset of that are associated with , which corresponds to shrinkage estimation of β. Here we rewrite the regression model to explicitly express an intercept term as . We consider normal prior for α, that is, with fixed . For coefficients β, we introduce shrinkage priors expressed as a scale mixture of normals given by

where is a mixing distribution which may depends on some tuning (scale) parameter λ. Many existing shrinkage priors are included in this class by appropriately choosing the mixing distribution . Among many others, we consider two priors for : exponential distribution which results in Laplace prior of β known as Bayesian Lasso [12], and half-Cauchy distribution for which results in horseshoe prior for β [13]. The detailed settings of these two priors are given as follows:

where and are fixed hyperparameters, denotes the gamma distribution with shape parameter a and rate parameter b, and is the inverse gamma distribution with shape parameter a and scale parameter b. Note that in the horseshoe prior is additional latent variable to make posterior computation easier, and the density of is proportional to , so that follows half-Cauchy distribution.

Using the mixture representation (7), the full conditional distribution of given the other parameters including ’s is given by

where . Similarly to the previous section, we can generate approximate posterior samples of by minimizing the weighted objective function obtained by replacing with in the above expression, where is defined in the same way in the previous section. Then, the objective function can be minimized by a similar MM-algorithm given in the previous section. Under given regression coefficients β, the full conditional distribution of latent variables and hyperparameter λ are the same as the case with the standard linear regression, so that we can use the existing Gibbs sampling methods. We summarize our Markov chain Monte Carlo (MCMC) algorithm under the two shrinkage priors in what follows.

MCMC Algorithm under Shrinkage Prior

- (Sampling from and ) Generate , and set initial values and . Repeat the following procedures until convergence:

- –

- Compute the following weight:

- –

- Update the parameter values:

We adopt the final values as sampled values from the full conditional distribution. - (Sampling from and λ)

- –

- (Laplace prior) The full conditional distribution of is inverse-Gaussian with parameters and in the parametrization of the inverse-Gaussian density given byThe full conditional distribution of is .

- –

- (Horseshoe prior) The full conditional distribution of , and λ are , and , respectively.

Note that the sampling scheme in the main parameter β does not use the previous sampled values of β and there is no rejection steps, thereby autocorrelation of β generated from the above MCMC algorithm is expected to be very small, which will be demonstrated in our numerical studies in Section 3.

3. Numerical Studies

3.1. Bayesian Robustness Properties

We demonstrate Bayesian robustness properties of the proposed method compared with existing ones. We first consider the influence function of posterior means. To this end, we employ a simple linear regression model given by

where , with , is generated from the standard normal distribution, and we set . Let be the set of unknown parameters in the model. Let be the assumed density function for each given . Then, the Bayesian analog of the influence function for the posterior means [1,11] of evaluated at x is given by , where denotes the covariance with respect to the posterior distribution of θ under the model (8), and is the derivative of the (synthetic) likelihood under contamination with respect to the contamination ratio [1,11]. We used uniform priors for α and β, and prior for to obtain the posterior distribution of θ under the model (8). Under the standard likelihood function, it holds that , where is the true density under (8). Also, it follows that

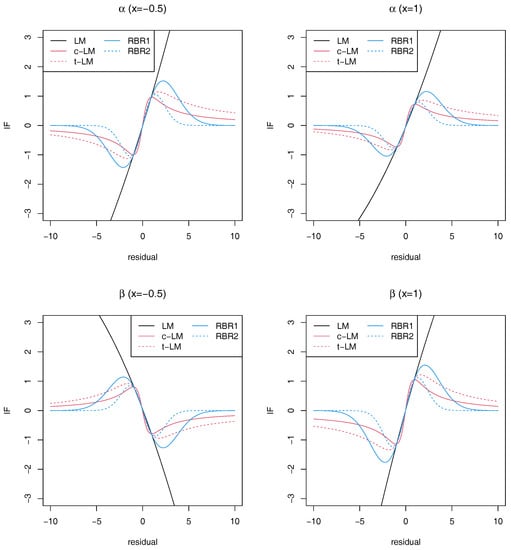

under the γ-divergence. We note that z can be interpreted as the residual of the outlying value, namely, the distance between the outlying value and the regression line . We approximated the integral appeared in H by Monte Carlo integration based on 2000 random samples from . Based on 10,000 posterior samples of θ, we computed and which are the influence functions for α and β, respectively, for and , under the γ-divergence with (RBR1) and (RBR2). For comparison, we also computed the influence functions using the normal distribution (LM), Cauchy distribution (c-LM) and t-distribution with 3 degrees of freedom (t-LM) for the error term in the model (8). The results are presented in Figure 1. It shows that using heavy-tailed distributions such as Cauchy and t-distribution provide bounded influence functions for both parameters, but the influence functions based on the proposed γ-divergence method quickly converges to 0 as increases. This would indicate more strong robustness of the proposed method than using the heavy-tailed distributions.

Figure 1.

Influence functions for and under a simple linear model.

We next more directly evaluate the robustness properties. To this end, we employ the contaminated structure for the error term, for , and for , so the first observations are outliers, where ω and a control the number of outliers and severity of the contamination, respectively. We then define the oracle posterior distribution as the posterior distribution based on the normality assumption and observations without outliers, that is, for . Let be (synthetic) posterior distributions based on the whole data including outliers. If the distance between and is small, we can conclude that the posterior successfully eliminate the information from outliers to make the posterior inference robust. Therefore, we assess the distance by computing the Kullback–Leibler divergence given by . In Table 1, we reported the results averaged over 300 replications under scenarios with and . It is observed that the proposed synthetic posterior is reasonably close to the oracle posterior, which is consistent to the theoretical argument given in Section 2.1, and the distance is smaller than those of the other methods.

Table 1.

Kullback-Leibler divergence averaged over 300 replications.

3.2. Simulation Study

We here evaluate the performance of the proposed methods through simulation studies. We first compare the point and interval estimation performance of the proposed methods with those of some existing methods. To this end, we consider the following regression model with and :

where , and and the other ’s were set to 0. The covariates were generated from a multivariate normal distribution with with . For the error term , we adopted contaminated structure given by , where is a contamination distribution and is contamination probability which might be heterogeneous over samples. We considered two settings , that is, (I) and (II), noting that the contamination distribution has very large variance in scenario (I) while the contamination distribution tends to produce large values in scenario (II). Regarding the contamination probability, we considered the following scenarios:

Note that the contamination probability in the first setting is constant over the samples, which is refereed to homogeneous contamination. On the other hand, in the second setting, the probability depends on covariates and is different over the samples, so that it is refereed to heterogenous contamination.

For the simulated dataset, we applied the proposed robust methods with Laplace and horseshoe priors, denoted by RBL and RHS, respectively, as well as the standard (non-robust) Bayesian lasso (BL). The tuning parameter γ in the proposed method is set to . Moreover, as existing robust methods, we also applied the regression model with the error term following Cauchy distribution and t-distribution with 3 degrees of freedom, denoted by c-BL and t-BL, respectively. In applying the above methods, we generated 2000 posterior samples after discarding the first 1000 samples as burn-in. For point estimates of ’s, we computed posterior median of each element of ’s, and their performance is evaluated via mean squared error (MSE) defined as . We also computed 95% credible intervals of ’s, and calculated average lengths (AL) and coverage probability (CP) defined as and , respectively. These values were averaged over 300 replications of simulating datasets.

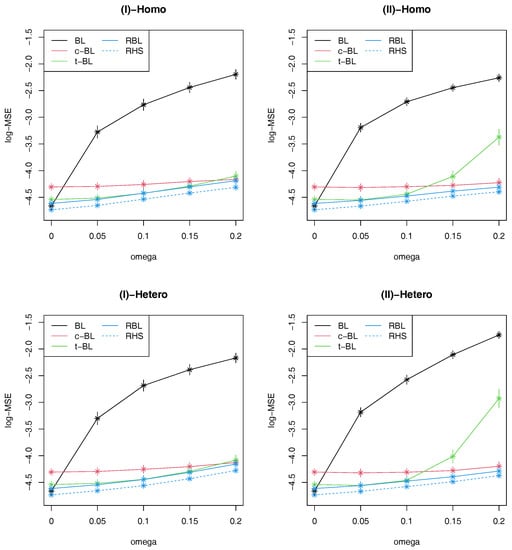

In Figure 2, we presented the results of logarithm of MSE (log-MSE) with error bars corresponding to three times estimated Monte Carlo errors. When there is no outliers, the standard BL method performs quite well while the proposed two robust methods (RBL, RHS) are comparable. On the other hand, the performance of BL gets worse as the ratio of outliers increases, compared with the other robust methods. Comparing the proposed methods with t-BL, it is observed that they perform similarly when the contaminated error distribution is symmetric and has large variance as in Scenario (I), possibly because t-distribution can be effectively adapted to such structure. However, when the contaminated distribution is not symmetric as in Scenario (II), the performance of t-BL gets worse than the proposed robust methods as the contamination ratio increases. In these scenarios, c-BL works better than t-BL, but the proposed method still provides better results than c-BL. It is also observed that c-BL is considerably inefficient compared with the other robust methods when the contamination ratio is not large. Comparing the proposed two robust methods, RHS is slightly better than RBL in all the scenarios. As the horseshoe prior is known to have better performance than Laplace prior as shrinkage priors under no contamination, this results would indicate that such property can be inherited even under contamination by using the proposed robust approach.

Figure 2.

Average values of log-MSE with ±3 Monte Carlo error bands based on 300 replications in four simulation scenarios.

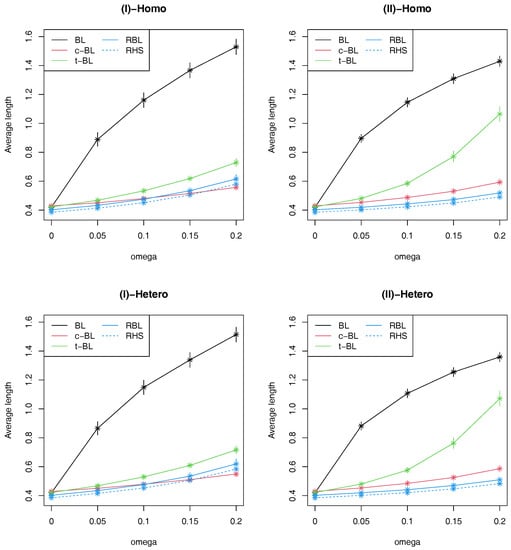

Regarding interval estimation, the results for AL and CP are given in Figure 3 and Table 2, respectively. From Table 2, we can see that CPs of the proposed methods are around the nominal level whereas t-CL and c-BL shows over-coverage and short-coverage properties, respectively, in some scenarios. Figure 3 reveals a similar trend to one observed in log-MSE, so that the credible intervals of BL are shown to be very inefficient when there exist outliers. Also it is observed that the credible intervals of t-BL and c-BL tend to be inefficient compared with the proposed methods. Comparing the proposed two robust methods, RHS provides slightly more efficient interval estimation than RBL, which is consistent with the results of log-MSE in Figure 2.

Figure 3.

Average values of average length of 95% credible intervals with ±3 Monte Carlo error bands based on 300 replications in four simulation scenarios.

Table 2.

Coverage probability of 95% credible intervals averaged over 300 replications.

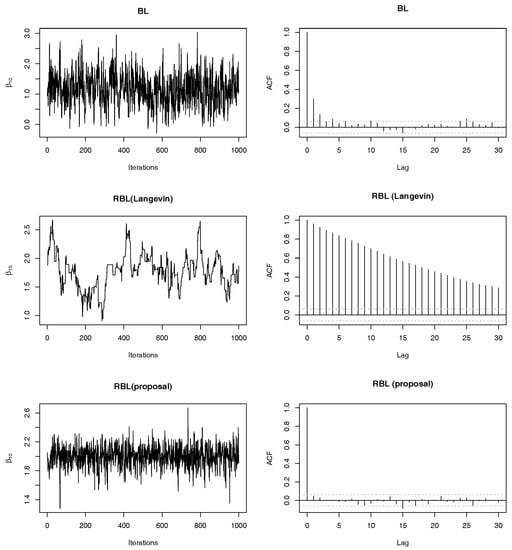

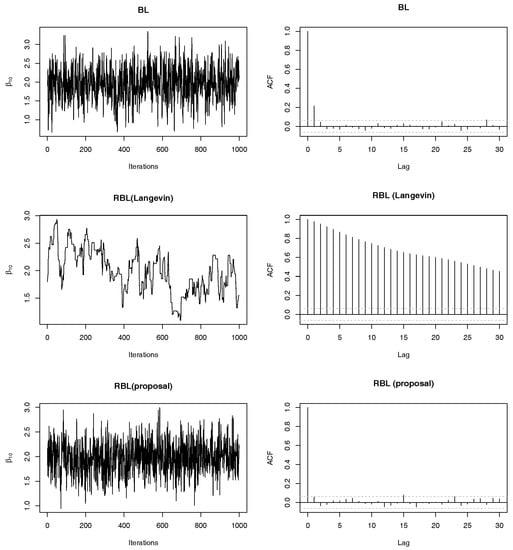

We next checked mixing properties of the proposed MCMC algorithm (denoted by RBL-proposal) compared with standard methods. We consider the same setting as above simulations. In particular, we show only results in the case of (II)-Homo. Note that we can obtain similar results in other cases, i.e., (I)-Homo, (I)-Hetero and (II)-Hetero. We set . In addition to the case of , we also consider the case of , where we set the same true non-zero regression coefficients as the case of . For comparison, we employed (non-robust) Bayesian lasso (BL) and robust Bayesian lasso with Langevin algorithm e.g., [20] with the step size 0.01 (denoted by RBL-Langevin). Note that the Langevin algorithm is applied to the full conditional distribution of β, so that this algorithm has only difference from the proposed algorithm in sampling from the full conditional distribution of β. Figure 4 shows mixing and autocorrelation results for one-shot posterior simulation of . As is well-known, since the ordinal BL have the efficient Gibbs sampling algorithm, we can find that mixing and autocorrelation of them are quite well whereas the sample path of BL is away from the true value due to outliers. From the second row Figure 4, it is observed that the Langevin algorithm produces poor mixing and relatively high autocorrelation. We tried other choices of tuning parameters in the Langevin algorithm, but the results were comparable. On the other hand, the bottom of Figure 4 shows that the mixing properties of the proposed algorithm are quite satisfactory, that is, sample paths are around the true value and there is almost no autocorrelations. It should be noted that the proposed algorithm does not depend on any tuning parameters unlike the Langevin algorithm algorithm. In Figure 5, we reported the results under , which also clearly shows the preferable performance of the proposed sampling algorithm compared with the direct application of the Langevin algorithm.

Figure 4.

Trace plots and autocorrelations of posterior samples of based on three methods (BL, RBL-Langevin and RBL-proposal) in (II)-Homo case with and .

Figure 5.

Trace plots and autocorrelations of posterior samples of based on three methods (BL, RBL-Langevin and RBL-proposal) in (II)-Homo case with and .

3.3. Real Data Examples

We compare results of the proposed methods with that of the non-robust Bayesian Lasso through applications to two famous datasets, Boston Housing [21] and Diabetes data [22]. The response variable in the Boston housing data is corrected median value of owner-occupied homes in USD 1000 $, and there are 15 covariates including one binary covariate. We standardized 14 continuous valued covariates, and included squared values of these covariates, which results in 29 predictors in our models. The sample size is 506. Regarding the Diabetes data, the data contains information of 442 individuals and we adopted 10 covariates, following [12].

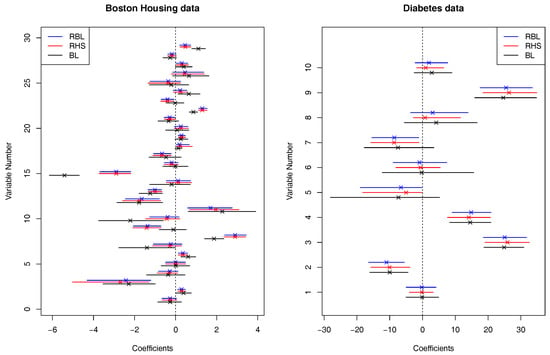

For the datasets, we applied the proposed robust Bayesian methods with Laplace prior (RBL) and horseshoe prior (RHS). We set in both methods. For comparison, we also applied the standard non-robust Bayesian Lasso (BL). Based on 4000 posterior samples after discarding 1000 posterior samples, we computed posterior medians as well as 95% credible intervals of regression coefficients, which are shown in Figure 6. It is observed that the two robust methods, RBL and RHS produce similar results while the standard BL provides quite different results than the others in some coefficients. In particular, in the Diabetes dataset, the two robust methods detected 5th and 7th variables as significant ones based on their credible intervals while the credible intervals of the BL method contains 0, which shows that the robust methods may be able to detect significant variables that the non-robust method cannot. A similar phenomena is observed in several covariates in the Boston Housing data. Comparing two figures, the degree of difference of the results between the two robust methods and the non-robust method in the Diabetes data is smaller than that in the Boston Housing data, for example, the posterior medians among the three methods are almost identical in the Diabetes data. This might show that the ratio of outliers in the Diabetes dataset is smaller than that of the Boston Housing data.

Figure 6.

95% credible intervals with posterior medians (×) of regression coefficients based on the standard Bayesian Lasso (BL), the proposed robust Bayesian methods with Laplace prior (RBL) and horseshoe prior (RHS), applied to Boston Housing data and Diabetes data.

4. Conclusions and Discussion

We proposed a new robust Bayesian regression method by using synthetic posterior based on γ-divergence. Using a technique of Bayesian bootstrap that optimizes a weighted objective function within Gibbs sampling, we developed an efficient posterior computation algorithm to generate posterior samples of regression coefficients under shrinkage priors. The numerical performance of the proposed method compared with existing methods are investigated through simulation and real data examples.

Although our presentation is focused on a linear regression in this paper, the proposed method can be conceptionally extended to other models such as generalized linear models. However, under generalized linear models, corresponding objective functions from γ-divergence is not necessarily tractable since it might include intractable integrals or infinite sums [5], thereby the posterior computation would not be feasible even if we use a similar techniques used in this paper. Only logistic regression for binary outcomes could be a tractable regression model in which γ-divergence is obtained in an analytical form, and outliers in logistic regression is typically related to a mislabeling problem e.g., [23]. The detailed investigation including developing an efficient posterior computation algorithm would be an important future work.

Regarding the choice of γ, it is not straightforward to estimate/select the value in a data-dependent way as it is not a model parameter. From the results in Section 3.1, the posterior distribution would not be very sensitive to the different values of γ, and γ-divergence is known to be robust as long as . Hence, our recommendation is simply using a small value for γ such as as adopted in our numerical studies. On the other hand, some ideas have been proposed for the data-dependent selection of γ (e.g., [4,24]). Also several methods for estimating the ratios of outliers or the outlier probability are available (e.g., [25,26,27,28]), and such information could be useful to determine the value of γ. The detailed investigation is left to a future research.

Supplementary Materials

R code implementing the proposed robust Bayesian method is available at GitHub repository (https://github.com/sshonosuke/RBR).

Author Contributions

S.H. and S.S. contributed the method and algorithm; S.S. performed the experiments; S.H. and S.S. wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partially supported by Japan Society for Promotion of Science (KAKENHI) grant numbers 17K14233 and 18K12757.

Acknowledgments

The authors would like to thank the anonymous referees for their valuable comments and suggestions to improve the quality of this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Basu, A.; Harris, I.R.; Hjort, N.L.; Jones, M.C. Robust and efficient estimation by minimising a density power divergence. Biometrika 1998, 85, 549–559. [Google Scholar] [CrossRef]

- Fujisawa, H.; Eguchi, S. Robust parameter estimation with a small bias against heavy contamination. J. Multivar. Anal. 2008, 99, 2053–2081. [Google Scholar] [CrossRef]

- Jones, M.C.; Hjort, N.L.; Harris, I.R.; Basu, A. A comparison of related density-based minimum divergence estimators. Biometrika 2001, 88, 865–873. [Google Scholar] [CrossRef]

- Kawashima, T.; Fujisawa, H. Robust and sparse regression via gamma-divergence. Entropy 2017, 19, 608. [Google Scholar] [CrossRef]

- Kawashima, T.; Fujisawa, H. Robust and Sparse Regression in GLM by Stochastic Optimization. Jpn. J. Stat. Data Sci. 2019, 2, 465–489. [Google Scholar] [CrossRef]

- Tibshirani, R. Regression Shrinkage and Selection via the Lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288. [Google Scholar] [CrossRef]

- Bissiri, P.G.; Holmes, C.; Walker, S.G. A General Framework for Updating Belief Distributions. J. R. Stat. Soc. Ser. B 2016, 78, 1103–1130. [Google Scholar] [CrossRef]

- Jewson, J.; Smith, J.Q.; Holmes, C. Principles of Bayesian inference using general divergence criteria. Entropy 2018, 20, 442. [Google Scholar] [CrossRef]

- Bhattacharya, A.; Pati, D.; Yang, Y. Bayesian fractional posteriors. Ann. Stat. 2019, 47, 39–66. [Google Scholar] [CrossRef]

- Miller, J.W.; Dunson, D.B. Robust Bayesian Inference via Coarsening. J. Am. Stat. Assoc. 2019, 114, 1113–1125. [Google Scholar] [CrossRef]

- Nakagawa, T.; Hashimoto, S. Robust Bayesian inference via γ-divergence. Commun. Stat. Theory Methods 2020, 49, 343–360. [Google Scholar] [CrossRef]

- Park, T.; Casella, G. The Bayesian Lasso. J. Am. Stat. Assoc. 2008, 103, 681–686. [Google Scholar] [CrossRef]

- Carvalho, C.M.; Polson, N.G.; Scott, J.G. The horseshoe estimator for sparse signals. Biometrika 2010, 97, 465–480. [Google Scholar] [CrossRef]

- Rubin, D.B. The Bayesian bootstrap. Ann. Stat. 1981, 9, 130–134. [Google Scholar] [CrossRef]

- Newton, M.A.; Raftery, A.E. Approximate Bayesian inference with weighted likelihood bootstrap. J. R. Stat. Soc. Ser. B 1994, 56, 3–48. [Google Scholar] [CrossRef]

- Lyddon, S.; Walker, S.G.; Holmes, C. Nonparametric learning from Bayesian models with randomized objective functions. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NIPS 2018), Montreal, QC, Canada, 3–8 December 2018. [Google Scholar]

- Newton, M.A.; Polson, N.G.; Xu, J. Weighted Bayesian bootstrap for scalable Bayes. arXiv 2018, arXiv:1803.04559. [Google Scholar]

- Gagnon, P.; Desgagne, A.; Bedard, M. A New Bayesian Approach to Robustness Against Outliers in Linear Regression. Bayesian Anal. 2020, 15, 389–414. [Google Scholar] [CrossRef]

- Hunter, D.R.; Lange, K. A tutorial on MM algorithms. Am. Stat. 2004, 58, 30–37. [Google Scholar] [CrossRef]

- Welling, M.; Teh, Y.W. Bayesian Learning via Stochastic Gradient Langevin Dynamics. In Proceedings of the International Conference on Machine Learning (ICML 2011), Bellevue, WA, USA, 28 June–2 July 2011. [Google Scholar]

- Harrison, D.; Rubinfeld, D.L. Hedonic prices and the demand for clean air. J. Environ. Econ. Manag. 1978, 5, 81–102. [Google Scholar] [CrossRef]

- Efron, B.; Hastie, T.; Johnstone, I.; Tibshirani, R. Least Angle Regression. Ann. Stat. 2004, 32, 407–499. [Google Scholar]

- Hung, H.; Jou, Z.; Huang, S. Robust Mislabel Logistic Regression without Modeling Mislabel Probabilities. Biometrics 2018, 74, 145–154. [Google Scholar] [CrossRef]

- Warwick, J.; Jones, M.C. Choosing a robustness tuning parameter. J. Stat. Comput. Simul. 2005, 75, 581–588. [Google Scholar] [CrossRef]

- von der Linden, W.; Dose, V.; Padayachee, J.; Prozesky, V. Signal and background separation. Phys. Rev. 1999, 59, 6527–6534. [Google Scholar] [CrossRef]

- von der Linden, W.; Dose, V.; Fischer, R.; Preuss, R. Maximum Entropy and Bayesian Methods Garching, Germany 1998: Proceedings of the 18th International Workshop on Maximum Entropy and Bayesian Methods of Statistical Analysis; Springer: London, UK, 1999; Volume 105. [Google Scholar]

- Von Toussaint, U. Bayesian inference in physics. Rev. Mod. Phys. 2011, 83, 943. [Google Scholar] [CrossRef]

- Kanamori, T.; Fujisawa, H. Robust estimation under heavy contamination using unnormalized models. Biometrika 2015, 102, 559–572. [Google Scholar] [CrossRef]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).