1. Introduction

Thermodynamics of computing has a peculiar history. For many years, scientists have searched for the minimum energy needed to perform an elementary computing step. It was Landauer [1,2,3,4] who demonstrated in the period 1960–1990 that, in principle, computing can be performed without energy consumption, provided the computing process applies exclusively logically reversible computing steps. As long as information is not destroyed and computing is performed infinitely slowly, no work has to be supplied to the computer. Only erasure of information requires energy input. It is remarkable that we had to wait until the period 2012–2018 to have experimental confirmation [5,6,7,8] of Landauer’s principle.

Basic thermodynamics, i.e., the Carnot theory, describes thermal engines acting infinitely slowly. In 1975, Curzon and Ahlborn [9] presented a thermodynamical model for an engine working at non-zero speed: the endoreversible engine. It consists of a reversible core, performing the actual conversion (of heat into work) and two irreversible channels for the heat transport. The approach turned out to be very fruitful: not only processes in engineering, but also in physics, chemistry, economics, etc. can successfully profit from endoreversible modelling, especially when processes happen at non-zero speed and thus tasks are performed in a finite time [10,11].

The present paper is an attempt to apply the endoreversible scheme to the Landauer principle, thus to thermodynamically describe computing at a non-zero speed.

2. Logic Gates

Any computer is built from basic building blocks, called gates. In a conventional electronic computer, such a building block is, e.g., a NOT gate, an OR gate, a NOR gate, an AND gate, a NAND gate, etc. Such a gate has both a short input word (denoted with subscript 1) and a short output word (denoted with subscript 2). As an example, Table 1a defines the AND gate by means of its truth table, which lists each two-bit input word together with the corresponding one-bit output word. If the input word is given, the table suffices to read what the output ‘will be’. If, however, the value of the output word is given, this information is not sufficient to recover what the input word ‘has been’. Indeed, output 0 can equally well be the result of either input 00, or 01, or 10. For this reason, we say that the gate is logically irreversible. In contrast, the NOT gate is logically reversible, as can be verified from its truth table in Table 1b. Indeed, knowledge of the input bit suffices to know the output bit, but also: knowledge of the output bit suffices to know the input bit.

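We can not only distinguish logically irreversible gates from logically reversible gates; we can also quantify how strongly a gate is irreversible. For this purpose, we apply Shannon’s entropy:

$$S = -k \sum_{m} p_m \log(p_m),$$

where $k$ is Boltzmann’s constant and $p_m$ is the probability that a word (either an input word or an output word) has a particular value $m$. As an example, we examine Table 1a in detail. Let $p_{00}$ be the probability that the input word equals 00, let $p_{01}$ be the probability that it equals 01, let $p_{10}$ be the probability that it equals 10, and let $p_{11}$ be the probability that it equals 11. We, of course, assume $0 \le p_i \le 1$ for all $i$, as well as $p_{00} + p_{01} + p_{10} + p_{11} = 1$. Let $q_0$ be the probability that the output word equals 0 and let $q_1$ be the probability that it equals 1. Inspection of Table 1a reveals that

$$q_0 = p_{00} + p_{01} + p_{10}, \qquad q_1 = p_{11}.$$

Automatically, we have $0 \le q_i \le 1$ for both $i$, as well as $q_0 + q_1 = 1$. We now compare the entropies of input and output:

$$S_1 = -k\,[\,p_{00}\log(p_{00}) + p_{01}\log(p_{01}) + p_{10}\log(p_{10}) + p_{11}\log(p_{11})\,], \qquad S_2 = -k\,[\,q_0\log(q_0) + q_1\log(q_1)\,].$$

We find that these two quantities are not necessarily equal. For example, if the inputs 00, 01, 10, and 11 are equally probable, i.e., if

$$p_{00} = p_{01} = p_{10} = p_{11} = \tfrac{1}{4},$$

then we have

$$q_0 = \tfrac{3}{4}, \qquad q_1 = \tfrac{1}{4},$$

such that

$$S_1 = 2\,k\log(2) = 2\,b, \qquad S_2 = k\left[\,2\log(2) - \tfrac{3}{4}\log(3)\,\right] \approx 0.811\,b,$$

where $b = k\log(2)$ is called ‘one bit of information’. Thus, evolving from input to output is accompanied by a loss of entropy $S_1 - S_2$ of about 1.189 bits. A similar examination of Table 1b, again for equally probable inputs, leads to $S_1 = S_2 = b$. Thus, both input and output contain one bit of information. There is no change in entropy: $S_2 - S_1 = 0$.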
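The numbers quoted above for the AND gate can be checked with a few lines of Python. This is only an illustrative sketch: the names `entropy_bits`, `output_distribution`, and `AND_TABLE` are ours, and entropies are expressed directly in bits (i.e., in units of k log 2) rather than in units of k.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Truth table of the AND gate: two-bit input word -> one-bit output word.
AND_TABLE = {"00": "0", "01": "0", "10": "0", "11": "1"}

def output_distribution(table, p_in):
    """Push the input-word probabilities through a truth table."""
    q = {}
    for word, p in p_in.items():
        q[table[word]] = q.get(table[word], 0.0) + p
    return q

# Assumption of the worked example: the four input words are equally probable.
p_in = {word: 0.25 for word in AND_TABLE}
q_out = output_distribution(AND_TABLE, p_in)

S1 = entropy_bits(p_in.values())    # entropy of the input word
S2 = entropy_bits(q_out.values())   # entropy of the output word
loss = S1 - S2

print(f"S1 = {S1:.3f} b, S2 = {S2:.3f} b, loss = {loss:.3f} b")
# prints: S1 = 2.000 b, S2 = 0.811 b, loss = 1.189 b
```

Replacing `p_in` by any other normalized distribution shows how the entropy loss depends on the input statistics.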

A reversible computer is a computer exclusively built from reversible logic gates [12,13]. As, among the conventional logic gates, only the NOT gate is logically reversible, we need to introduce unconventional reversible gates in order to be able to build a general-purpose reversible computer. Table 2 shows two examples: the controlled NOT gate (a.k.a. the Feynman gate) and the controlled controlled NOT gate (a.k.a. the Toffoli gate). With $p_{00}$, $p_{01}$, $p_{10}$, and $p_{11}$ the probabilities of the four input words and $q_{00}$, $q_{01}$, $q_{10}$, and $q_{11}$ the probabilities of the four output words, the truth table of the controlled NOT gate has the following properties:

$$q_{00} = p_{00}, \qquad q_{01} = p_{01}, \qquad q_{10} = p_{11}, \qquad q_{11} = p_{10},$$

such that

$$S_2 = -k\,[\,q_{00}\log(q_{00}) + q_{01}\log(q_{01}) + q_{10}\log(q_{10}) + q_{11}\log(q_{11})\,] = S_1$$

and thus $S_2 - S_1 = 0$. This result is true whatever the values of the input probabilities $p_{00}$, $p_{01}$, $p_{10}$, and $p_{11}$, thus not only if these four numbers all are equal to $\tfrac{1}{4}$. The reason for this property is clear: the output words of Table 2a are merely a permutation of the input words. Analogously, in Table 2b, the output words form a permutation of the input words. Therefore, the controlled controlled NOT gate also satisfies $S_2 = S_1$ and hence is logically reversible.
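The permutation argument can be verified mechanically. The sketch below is illustrative only: it assumes the usual convention in which the first bit (controlled NOT) or the first two bits (controlled controlled NOT) act as controls and the last bit is the target, and the helper names are ours. It checks that each gate permutes its input words and that the entropy is unchanged for a randomly chosen, non-uniform input distribution.

```python
import math
import random
from itertools import product

def entropy_bits(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def cnot(word):
    a, b = word
    return (a, a ^ b)            # target b is flipped iff control a is 1

def toffoli(word):
    a, b, c = word
    return (a, b, c ^ (a & b))   # target c is flipped iff both controls are 1

def is_permutation(gate, width):
    """True if the gate maps the set of all input words onto itself."""
    words = list(product((0, 1), repeat=width))
    return sorted(gate(w) for w in words) == words

def entropies(gate, width, rng):
    """Input and output entropy for a random (strictly positive) input distribution."""
    words = list(product((0, 1), repeat=width))
    weights = [rng.random() + 0.01 for _ in words]
    total = sum(weights)
    p_in = {w: x / total for w, x in zip(words, weights)}
    q_out = {}
    for w, p in p_in.items():
        q_out[gate(w)] = q_out.get(gate(w), 0.0) + p
    return entropy_bits(p_in.values()), entropy_bits(q_out.values())

rng = random.Random(0)           # fixed seed, only for reproducibility
cnot_ok = is_permutation(cnot, 2)
toffoli_ok = is_permutation(toffoli, 3)
s1_cnot, s2_cnot = entropies(cnot, 2, rng)
s1_tof, s2_tof = entropies(toffoli, 3, rng)
print(cnot_ok, toffoli_ok, abs(s1_cnot - s2_cnot) < 1e-9, abs(s1_tof - s2_tof) < 1e-9)
```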

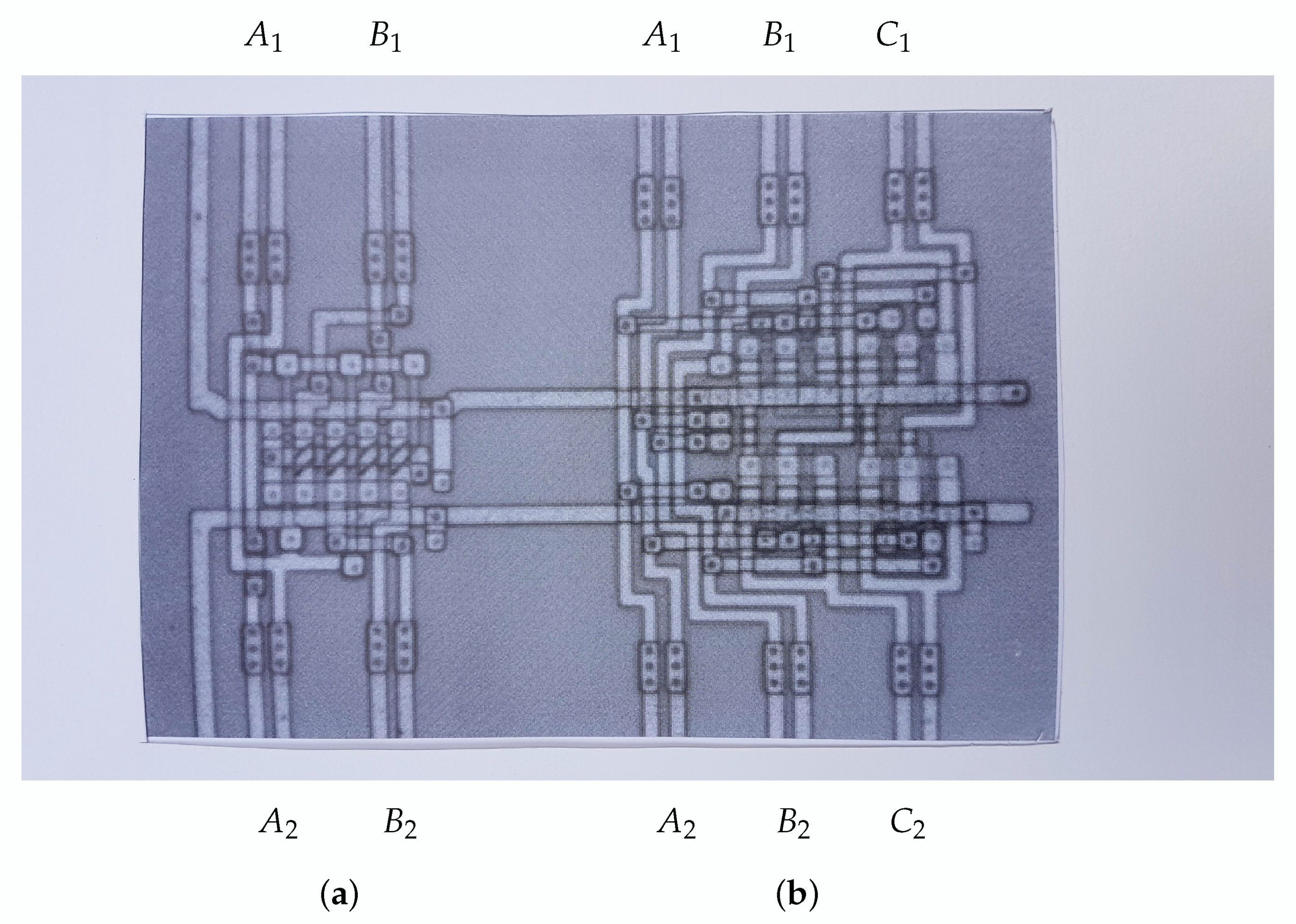

Figure 1 shows a c-MOS (i.e., complementary metal–oxide–semiconductor) implementation of these two reversible gates in a silicon chip.

The reader may easily verify that, in general, we are allowed to summarize as follows:

if the logic gate is logically reversible, then entropy is neither increased nor decreased;

if the logic gate is logically irreversible, then entropy is decreased.

Of course, in the framework of the second law, any entropy decrease sounds highly suspicious. The next section will demonstrate that fortunately there is no need to worry.

3. Macroentropy and Microentropy

Let the phase space of a system be divided into $N$ parts. Let $p_m$ be the probability that the system finds itself in part # $m$ of the phase space. Then, the entropy of the system is

$$\sigma = -k \sum_{m=1}^{N} p_m \log(p_m). \tag{1}$$

Figure 2a shows an example with

.

We now assume that the division of phase space happens in two steps. First, we divide it into $M$ large parts (with $M \le N$), called macroparts. Then, we divide each macropart into microparts: macropart # 1 into $n_1$ microparts, macropart # 2 into $n_2$ microparts, ..., and macropart # $M$ into $n_M$ microparts:

$$n_1 + n_2 + \cdots + n_M = N.$$

We denote by $p_{i,j}$ the probability that the system is in microcell # $j$ of macrocell # $i$. Let $q_i$ be the probability that the system finds itself in macropart # $i$:

$$q_i = \sum_{j=1}^{n_i} p_{i,j}.$$

Figure 2b shows an example with

and

(

,

,

, and

);

Figure 2c shows an example with

and

(

and

).

Let $\sigma$ be the entropy of the system consisting of the $M$ macrocells. We have

$$\sigma = -k \sum_{i=1}^{M} \sum_{j=1}^{n_i} p_{i,j} \log(p_{i,j}). \tag{2}$$

One can easily check that this expression can be written as

$$\sigma = -k \sum_{i=1}^{M} q_i \log(q_i) \; - \; k \sum_{i=1}^{M} q_i \sum_{j=1}^{n_i} \frac{p_{i,j}}{q_i} \log\!\left(\frac{p_{i,j}}{q_i}\right). \tag{3}$$

The former contribution to the rhs of Equation (3) is called the macroentropy $S$, whereas the latter contribution is called the microentropy $s$. We identify the macroentropy with the information entropy of Section 2. We associate the microentropy with the heat $Q$, i.e., with the energy exchange which would occur if the microentropy enters or leaves the system at temperature $T$, according to the Gibbs formula

$$Q = T\,s.$$

This decomposition can be expressed in several ways:

We now assume that the probability of being in a particular microcell is the same in the three cases of

Figure 2. For example,

of

Figure 2a equals both

of

Figure 2b and

of

Figure 2c. Then, Equations (

1) and (

2) tell us that the entropy

is the same in the three cases:

Assuming all probabilities

are non-zero, it is clear that the macroentropies satisfy

Therefore, the microentropies satisfy

In particular, the macroentropy of Figure 2c is smaller than the macroentropy of Figure 2b, an inequality that corresponds with the inequality $S_2 < S_1$ in Section 2 for the AND gate in Table 1a. Indeed: Figure 2b corresponds with the left part of the truth table, whereas Figure 2c corresponds with the right part of the table. The decrease of macroentropy ($\Delta S < 0$) thus is compensated by the increase of microentropy ($\Delta s > 0$), leading to $\Delta S + \Delta s = 0$, thus saving the second law: $\Delta S + \Delta s \ge 0$.
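The split of the total entropy into a macroentropy and a microentropy can be illustrated numerically. The following sketch is not taken from the figures of the paper: the eight micropart probabilities and the two groupings (four macroparts of two microparts each, versus two macroparts of six and two microparts) are hypothetical values chosen to mimic the input and output sides of the AND gate, and the function names are ours. Entropies are in units of k.

```python
import math

def H(probs):
    """-sum p log p with the natural logarithm, i.e., entropy in units of k."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def decompose(partition):
    """partition: list of macroparts, each a list of micropart probabilities.
    Returns (total entropy, macroentropy, microentropy)."""
    q = [sum(part) for part in partition]                  # macropart probabilities
    total = H(p for part in partition for p in part)       # double sum over microparts
    macro = H(q)                                           # entropy of the macroparts
    micro = sum(qi * H(p / qi for p in part)               # weighted conditional entropy
                for qi, part in zip(q, partition) if qi > 0)
    return total, macro, micro

# Hypothetical micropart probabilities (they sum to 1).
p = [0.05, 0.20, 0.10, 0.15, 0.10, 0.15, 0.05, 0.20]

fine = [p[0:2], p[2:4], p[4:6], p[6:8]]   # four macroparts (cf. the gate input)
coarse = [p[0:6], p[6:8]]                 # two macroparts (cf. the gate output)

total_f, S_f, s_f = decompose(fine)
total_c, S_c, s_c = decompose(coarse)

print(f"fine:   total={total_f:.4f}  S={S_f:.4f}  s={s_f:.4f}")
print(f"coarse: total={total_c:.4f}  S={S_c:.4f}  s={s_c:.4f}")
```

In this example the total entropy is identical for both groupings, while merging macroparts lowers the macroentropy and raises the microentropy by the same amount.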

4. Reversible Engine

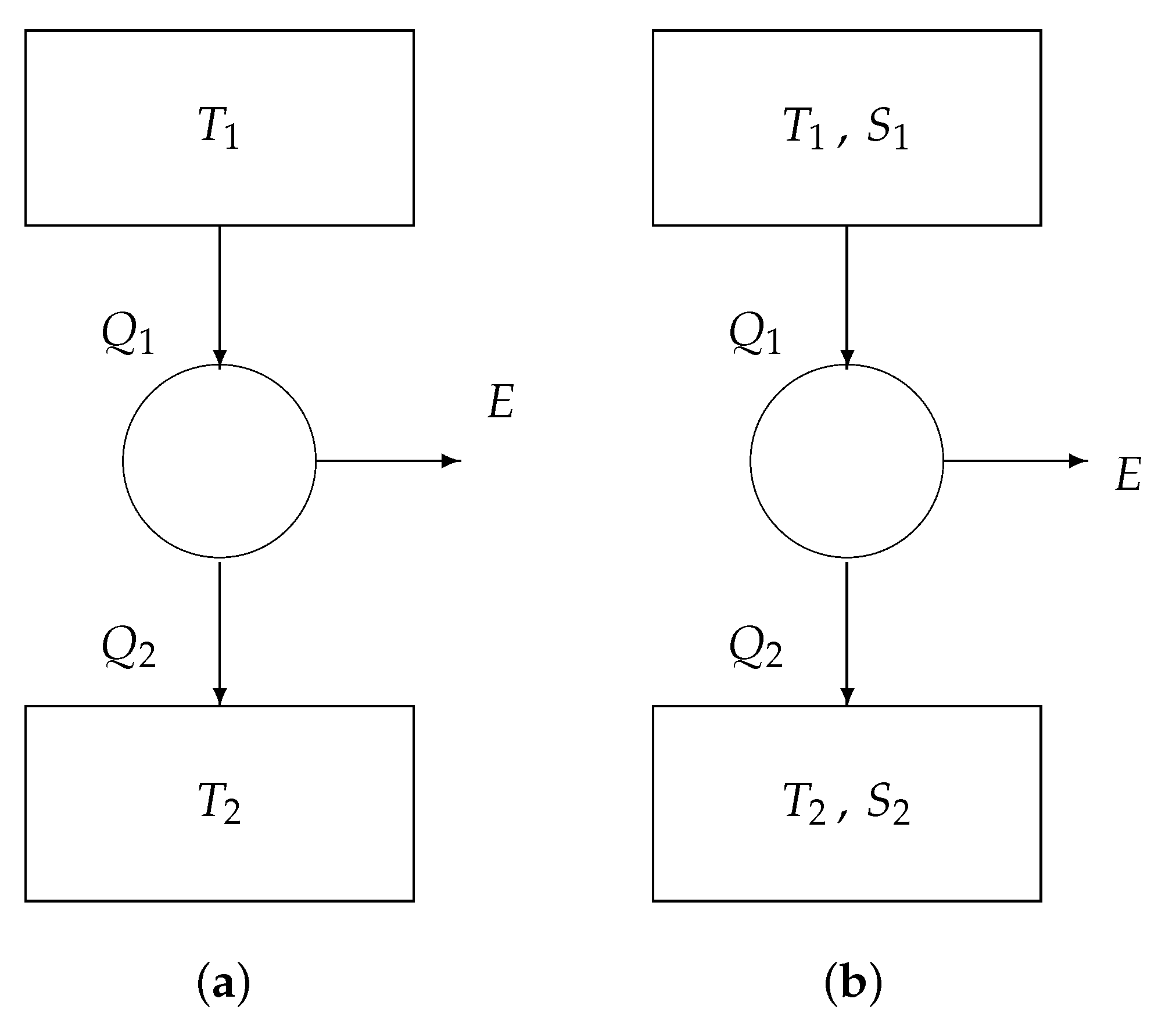

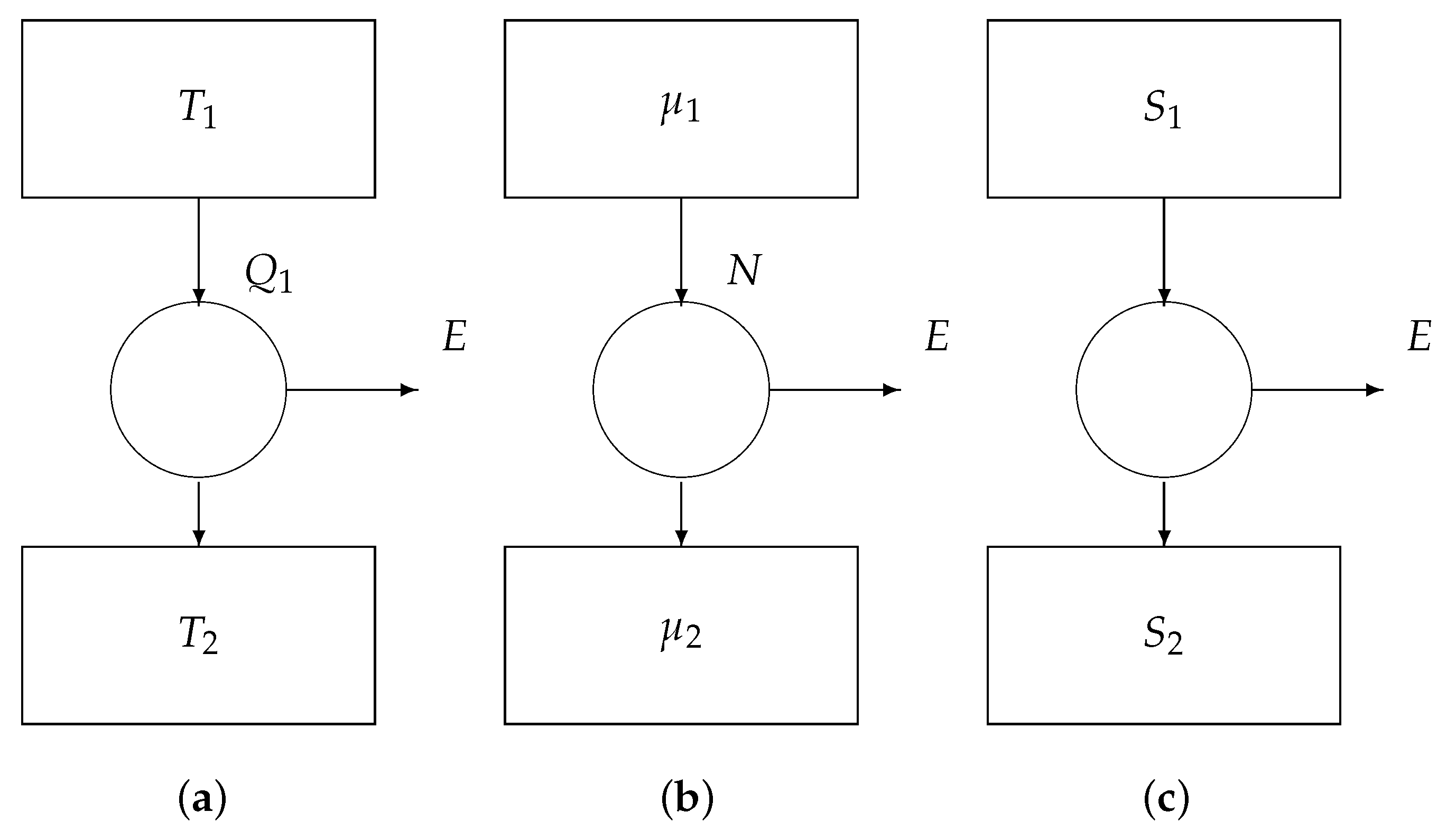

Figure 3a is the classical model of the Carnot engine, consisting of

a heat reservoir at temperature $T_1$, providing a heat $Q_1$,

a heat reservoir at temperature $T_2$, absorbing a heat $Q_2$, and

a reversible convertor, generating the work $E$.

For our purpose, we provide each reservoir with a second parameter, i.e., the macroentropy $S$. See Figure 3b.

We write the two fundamental theorems of reversible thermodynamics:

conservation of energy: the total energy leaving the convertor is zero:

$$-Q_1 + E + Q_2 = 0;$$

conservation of entropy: the total entropy leaving the convertor is zero:

$$-\left(S_1 + \frac{Q_1}{T_1}\right) + \left(S_2 + \frac{Q_2}{T_2}\right) = 0.$$

Eliminating the variable $Q_2$ from the above two equations yields

$$E = \left(1 - \frac{T_2}{T_1}\right) Q_1 - T_2\,(S_1 - S_2). \tag{4}$$

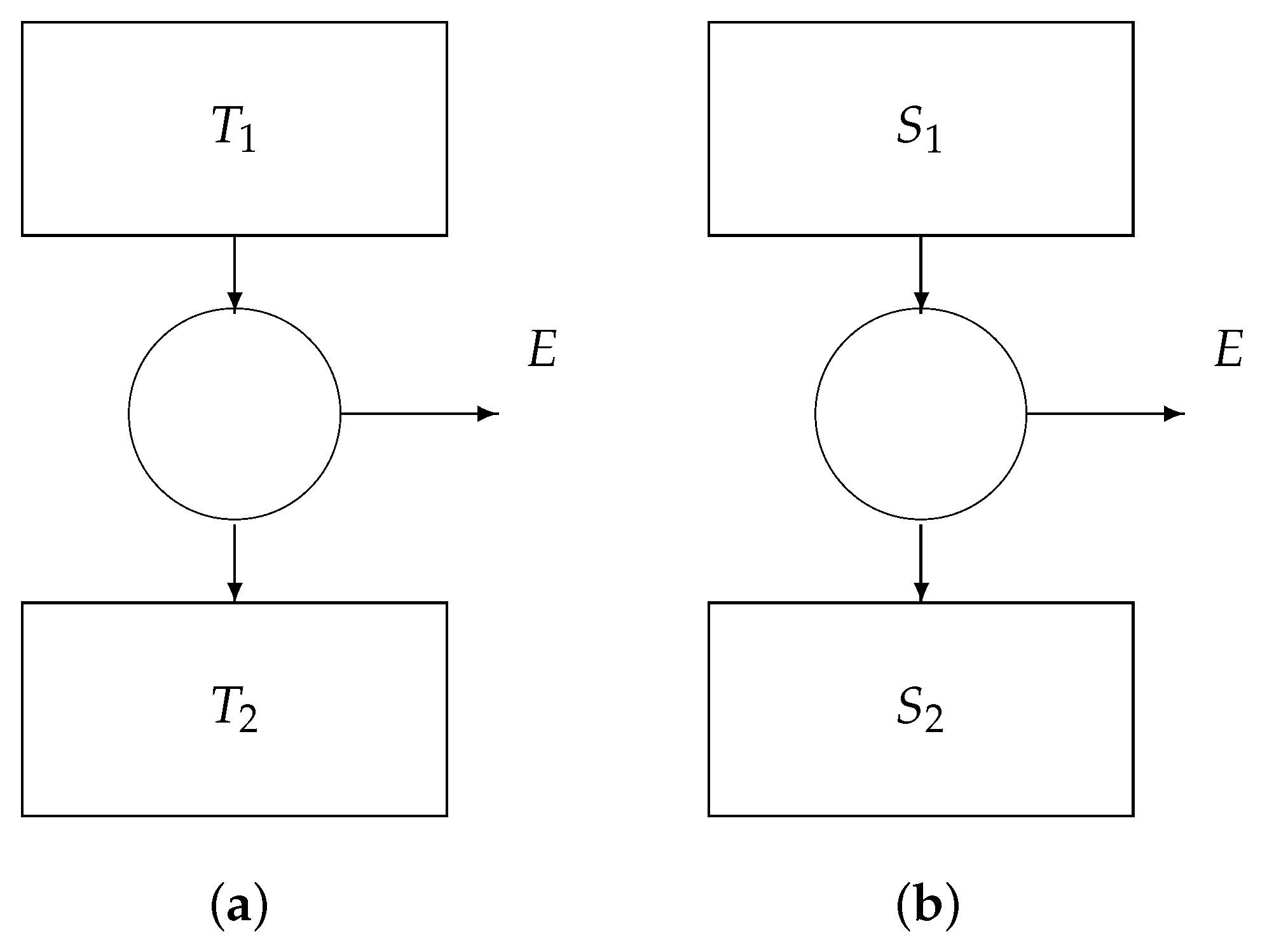

We can distinguish two special cases (Figure 4):

If $S_1 = S_2$, then we obtain from Equation (4) that

$$E = \left(1 - \frac{T_2}{T_1}\right) Q_1,$$

known as Carnot’s law.

If $T_1 = T_2$ (say $T$), then we obtain from Equation (4) that

$$E = -T\,(S_1 - S_2),$$

known as Landauer’s law.

We thus retrieve, besides Carnot’s formula, the principle of Landauer: if no information is erased ($S_1 = S_2$), then no work $E$ is involved; if information is erased ($S_2 < S_1$), then a negative work $E$ is produced, meaning that we have to supply a positive work $-E$.
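As a consistency check, the elimination step and the two special cases can be reproduced numerically. The sketch below assumes the sign conventions of this section (heat $Q_1$ and macroentropy $S_1$ leave the upper reservoir, heat $Q_2$ and macroentropy $S_2$ enter the lower one, and microentropy equals $Q/T$); the function names and the sample numbers are ours.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def work_from_balances(T1, T2, Q1, S1, S2):
    """Solve the entropy balance for Q2, then the energy balance for E."""
    Q2 = T2 * (S1 - S2 + Q1 / T1)   # from -(S1 + Q1/T1) + (S2 + Q2/T2) = 0
    return Q1 - Q2                  # from -Q1 + E + Q2 = 0

def work_closed_form(T1, T2, Q1, S1, S2):
    """Closed form obtained after eliminating Q2."""
    return (1 - T2 / T1) * Q1 - T2 * (S1 - S2)

# Carnot case: no change of macroentropy (sample values are arbitrary).
E_carnot = work_from_balances(T1=600.0, T2=300.0, Q1=10.0, S1=0.0, S2=0.0)

# Landauer case: isothermal erasure of one bit at 300 K, no heat supplied.
one_bit = K_B * math.log(2)
E_landauer = work_from_balances(T1=300.0, T2=300.0, Q1=0.0, S1=one_bit, S2=0.0)

print(E_carnot)      # half of Q1 for T2/T1 = 1/2
print(E_landauer)    # negative: a work of about 2.87e-21 J must be supplied
```

The negative sign of `E_landauer` reflects the statement that erasing information requires a positive work input.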

We note that, in Figure 4a, the arrows indicate the sense of positive $Q_1$ (heat leaving the upper heat reservoir) and positive $Q_2$ (heat entering the lower heat reservoir). Analogously, in Figure 4b, the arrows indicate the sense of positive $S_1$ (macroentropy leaving the upper memory register) and positive $S_2$ (macroentropy entering the lower memory register). In order to actually perform the computation in the positive direction, an external driving force is necessary. The next section introduces this ‘arrow of computation’.

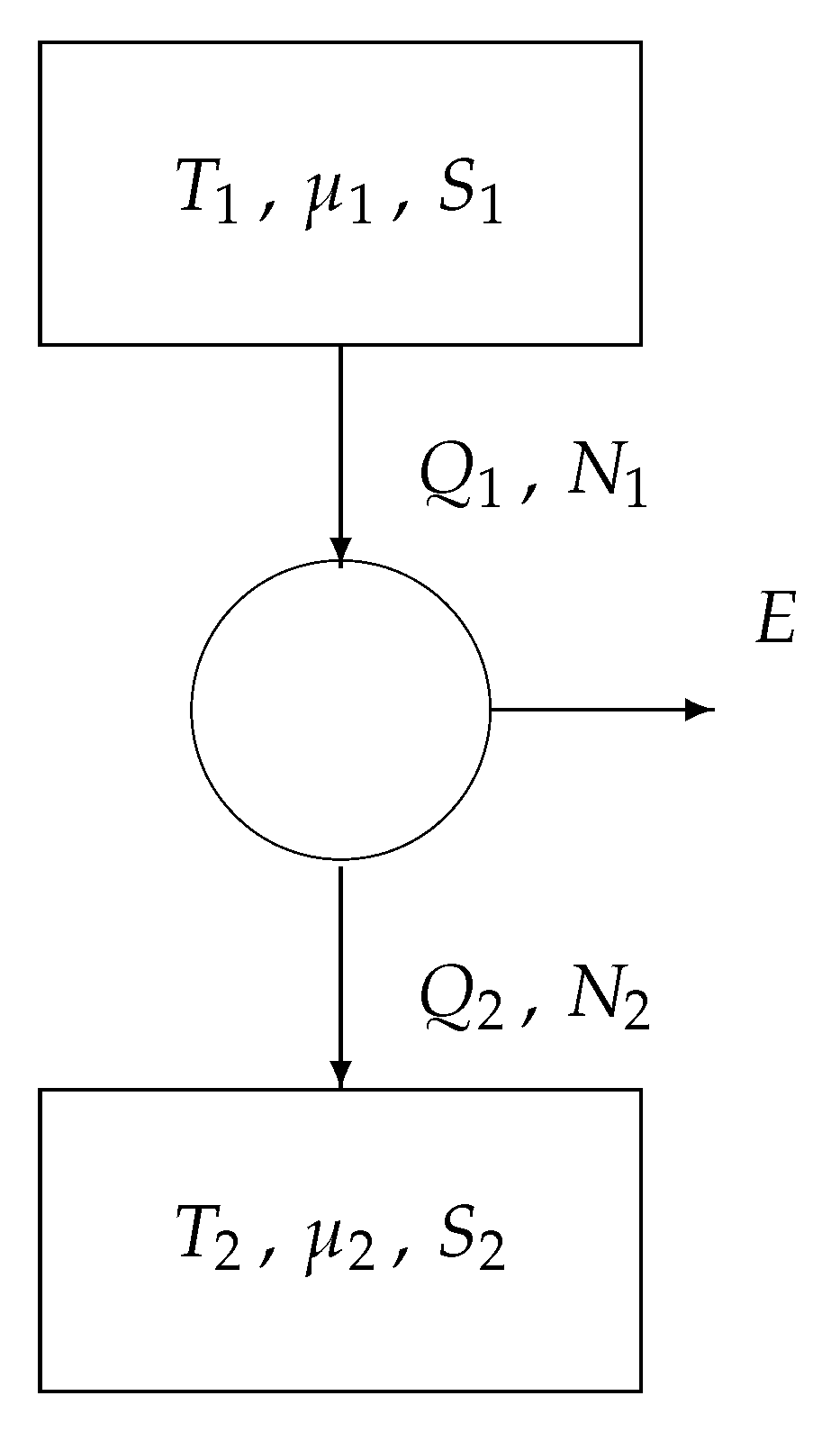

5. Reversible Engine Revisited

Information is carried by particles. Therefore, we have to complement the reservoirs of Figure 3b with a third parameter, i.e., the chemical potential $\mu$ of the particles. See Figure 5. Besides a heat flow $Q$, a reservoir also provides (or absorbs) a matter flow $N$.

In conventional electronic computers, the particles are electrons and holes within silicon and copper. There, the particle flow $N$ is (up to a constant) equal to the electric current $I$:

$$N = I/q,$$

where $q$ is the elementary charge. The chemical potential $\mu$ is (up to a constant) equal to the voltage $V$:

$$\mu = q\,V.$$

In the present model, we maintain the quantities $N$ and $\mu$, in order not to exclude unconventional computing, e.g., computation by means of ions, photons, Majorana fermions, ... or even good old abacus beads.

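We write the three fundamental theorems of reversible thermodynamics:

conservation of matter: the total amount of matter leaving the convertor is zero:

$$-N_1 + N_2 = 0;$$

conservation of energy: the total energy leaving the convertor is zero:

$$-(Q_1 + \mu_1 N_1) + E + (Q_2 + \mu_2 N_2) = 0;$$

conservation of entropy: the total entropy leaving the convertor is zero:

$$-\left(S_1 + \frac{Q_1}{T_1}\right) + \left(S_2 + \frac{Q_2}{T_2}\right) = 0.$$

The first equation leads to $N_1 = N_2$, which we simply denote by $N$. Eliminating the variable $Q_2$ from the remaining two equations yields the output work:

$$E = \left(1 - \frac{T_2}{T_1}\right) Q_1 + (\mu_1 - \mu_2)\,N - T_2\,(S_1 - S_2).$$

We can distinguish three special cases (Figure 6):

If $\mu_1 = \mu_2$ and $S_1 = S_2$, then we obtain

$$E = \left(1 - \frac{T_2}{T_1}\right) Q_1,$$

i.e., Carnot’s law.

If $T_1 = T_2$ and $S_1 = S_2$, then we obtain

$$E = (\mu_1 - \mu_2)\,N,$$

known as Gibbs’s law.

If $T_1 = T_2$ (say $T$) and $\mu_1 = \mu_2$, then we obtain

$$E = -T\,(S_1 - S_2),$$

i.e., Landauer’s principle.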
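The three special cases can be read off from a single function for the output work. The sketch below is illustrative only: it assumes that the energy carried by the matter flow is $\mu N$, that $N = I/q$ and $\mu = qV$ as stated for electronic computers, and the function name and sample values are ours. With these assumptions the purely 'chemical' case reduces to the familiar electrical expression $(V_1 - V_2)\,I$.

```python
Q_E = 1.602176634e-19  # elementary charge in C (exact SI value)

def output_work(T1, T2, mu1, mu2, S1, S2, Q1, N):
    """Output work of the reversible core with heat, matter, and macroentropy flows."""
    return (1 - T2 / T1) * Q1 + (mu1 - mu2) * N - T2 * (S1 - S2)

# Equal potentials and equal macroentropies: only the thermal term survives.
E_thermal = output_work(T1=600.0, T2=300.0, mu1=0.0, mu2=0.0, S1=0.0, S2=0.0, Q1=10.0, N=0.0)

# Isothermal, equal macroentropies: only the particle term survives.
V1, V2, I = 1.0, 0.2, 1.0e-3          # sample voltages (V) and current (A)
E_electrical = output_work(T1=300.0, T2=300.0, mu1=Q_E * V1, mu2=Q_E * V2,
                           S1=0.0, S2=0.0, Q1=0.0, N=I / Q_E)

# Isothermal, equal potentials: only the information term survives.
E_information = output_work(T1=300.0, T2=300.0, mu1=0.0, mu2=0.0,
                            S1=2.0e-23, S2=1.0e-23, Q1=0.0, N=0.0)

print(E_thermal, E_electrical, E_information)
```

If $N$ is interpreted as a flow per unit time, `E_electrical` is a power; here it equals $(V_1 - V_2)\,I = 0.8$ mW.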

6. Irreversible Transport

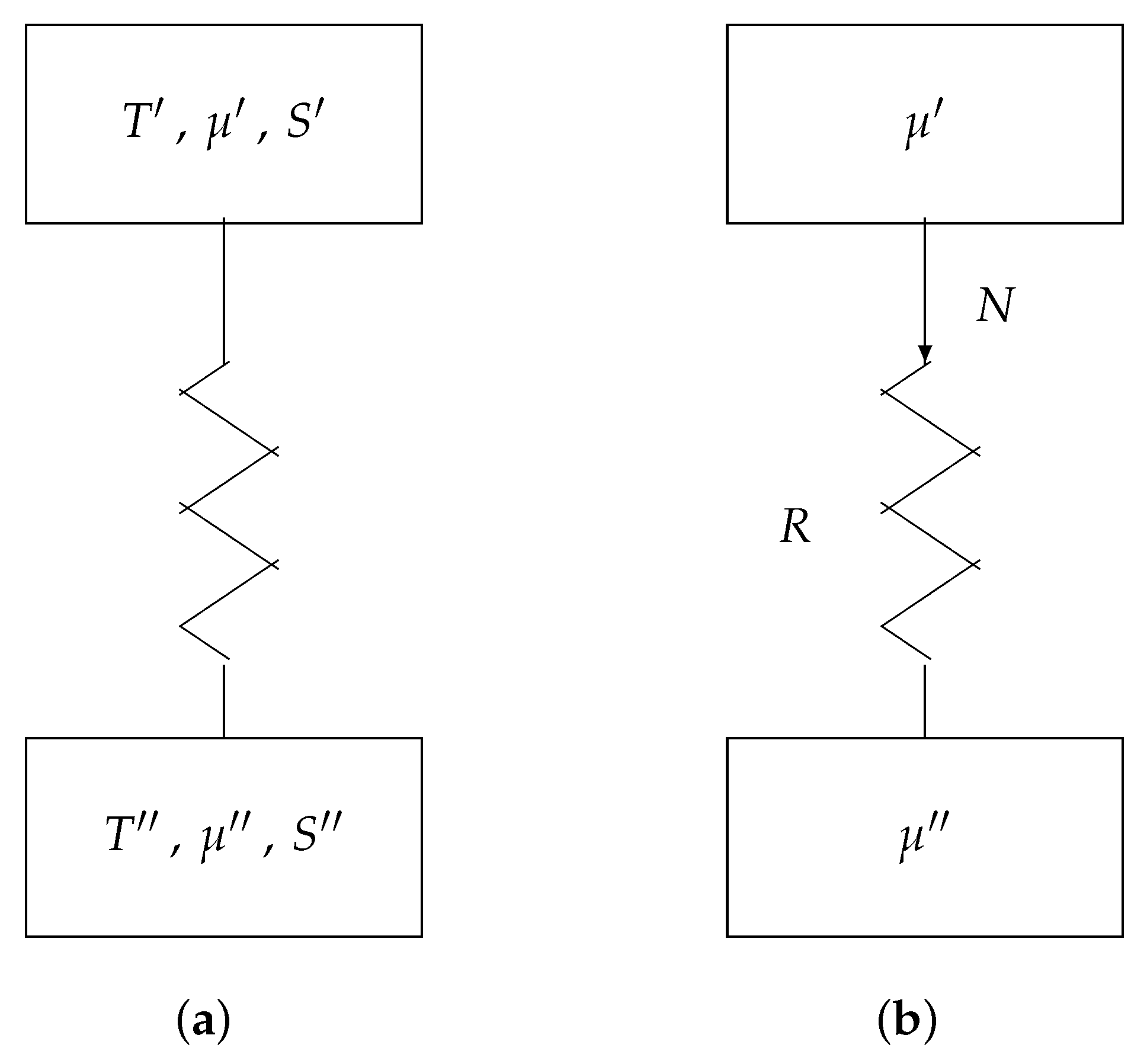

Figure 7a represents a transport channel between two reservoirs. The upper reservoir has parameter values $T_1$, $\mu_1$, and $S_1$; the lower reservoir has parameter values $T_2$, $\mu_2$, and $S_2$. We assume that the computer hardware is at a uniform temperature. Hence, $T_1 = T_2$ (say $T$). Furthermore, we assume that $S_1 = S_2$ (say $S$). This means, e.g., that noise does not cause random bit errors during the transport of the information. Thus, the reservoirs only differ by $\mu_1$ and $\mu_2$. See Figure 7b.

The particle drift $N$ is caused by the difference of the two potentials $\mu_1$ and $\mu_2$. The law governing the current is not necessarily linear. Hence, we have

$$N = \frac{f(\mu_1) - f(\mu_2)}{R},$$

where $f(\mu)$ is an appropriate (monotonically increasing) function of $\mu$ and $R$ (called resistance) is a constant depending primarily on the material properties and geometry of the particle channel. Many different functions $f$ are applicable in different circumstances. For example, in classical c-MOS technology, an electron or hole diffusion process in silicon [14,15] can successfully be modeled by the function

such that

Below, however, for the sake of mathematical transparency, we will apply $f(\mu) = \mu$, such that we have a linear transport equation, i.e., Ohm’s law:

$$N = \frac{\mu_1 - \mu_2}{R}.$$

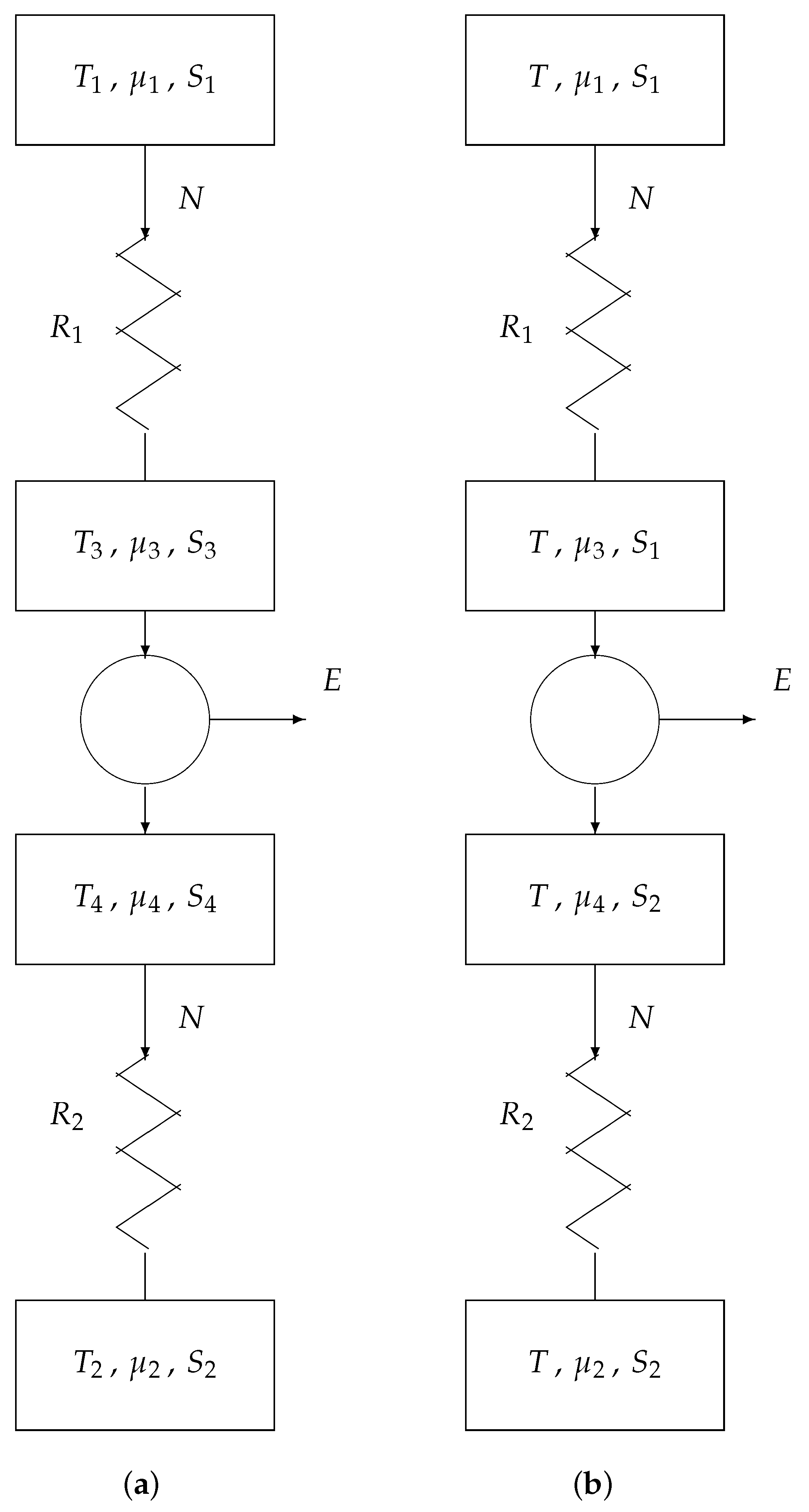

7. Endoreversible Engine

Figure 8 shows an endoreversible computer gate. It consists of a reversible core and two irreversible transport channels. The core is modeled according to Section 5; the two transport channels are modeled according to Section 6. In Figure 8a, the two outermost reservoirs (i.e., the input and output data registers) have fixed boundary conditions: $(T_1, \mu_1, S_1)$ and $(T_2, \mu_2, S_2)$, respectively. The inner parameters $T_3$, $\mu_3$, $S_3$, $T_4$, $\mu_4$, and $S_4$ are variables. In accordance with Section 6, we choose $T_3$ and $S_3$ equal to $T_1$ and $S_1$, respectively, as well as $T_4$ and $S_4$ equal to $T_2$ and $S_2$, respectively. Finally, we assume the whole engine is isothermal, such that $T_1 = T_2 = T$. This results in Figure 8b. We thus only retain as variable parameters the chemical potentials $\mu_3$ and $\mu_4$.

According to Section 5, we have, for the core of the endoreversible engine:

$$E = (\mu_3 - \mu_4)\,N - T\,(S_1 - S_2). \tag{5}$$

According to Section 6, the two transport laws are

$$N = \frac{\mu_1 - \mu_3}{R_1}, \tag{6}$$

$$N = \frac{\mu_4 - \mu_2}{R_2}. \tag{7}$$

We recall that, in the present model, the intensive quantities $T$, $\mu_1$, $\mu_2$, $S_1$, and $S_2$ have given values, whereas the quantities $\mu_3$ and $\mu_4$ have variable values. We eliminate the two parameters $\mu_3$ and $\mu_4$ from the three Equations (5)–(7). We thus obtain

$$E = (\Delta\mu - R\,N)\,N - T\,(S_1 - S_2),$$

where $\Delta\mu = \mu_1 - \mu_2$ and $R = R_1 + R_2$. The total energy dissipated in the endoreversible engine is

$$N\,\Delta\mu - E = R\,N^2 + T\,(S_1 - S_2). \tag{8}$$

The former term on the rhs of Equation (8) consists of the energy $R_1 N^2$ dissipated in resistor $R_1$ and the energy $R_2 N^2$ dissipated in resistor $R_2$; the latter term is the energy dissipated in the information loss in the core of the engine.

If $N = 0$, then we dissipate a minimum of energy $T\,(S_1 - S_2)$. Unfortunately, $N = 0$ corresponds with an engine computing infinitely slowly (just like a heat engine produces power with a maximum, i.e., Carnot, efficiency when driven infinitely slowly). For reasons of speed, a computer is usually operated in so-called short-circuit mode: $N = \Delta\mu / R$. This operation corresponds with a short circuit between the two inner reservoirs of Figure 8: $\mu_3 = \mu_4$. Under such condition, we have

$$N\,\Delta\mu - E = \frac{(\Delta\mu)^2}{R} + T\,(S_1 - S_2).$$

For the sake of energy savings, we aim at low dissipation $N\,\Delta\mu - E$. As $N = \Delta\mu / R$ is large for the sake of speed, we thus need both small $R$ and small $\Delta\mu$.
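The trade-off between speed and dissipation can be explored with a small numerical model. The sketch below is only an illustration under explicit assumptions: an isothermal gate with linear (Ohmic) transport in both channels, inner potentials labelled `mu3` and `mu4`, dissipation defined as the supplied energy `N * (mu1 - mu2)` minus the output work, and arbitrary dimensionless sample values. All names are ours.

```python
def solve_gate(N, T, mu1, mu2, S1, S2, R1, R2):
    """Inner potentials, output work, and dissipation for a given particle flow N."""
    mu3 = mu1 - R1 * N                       # drop over the input channel
    mu4 = mu2 + R2 * N                       # drop over the output channel
    E = (mu3 - mu4) * N - T * (S1 - S2)      # isothermal reversible core
    dissipated = N * (mu1 - mu2) - E         # energy supplied minus work delivered
    return mu3, mu4, E, dissipated

# Arbitrary sample values in consistent (dimensionless) units.
params = dict(T=1.0, mu1=1.0, mu2=0.0, S1=0.5, S2=0.0, R1=0.3, R2=0.2)
R = params["R1"] + params["R2"]
d_mu = params["mu1"] - params["mu2"]

# Infinitely slow operation: only the information term is dissipated.
_, _, E_slow, D_slow = solve_gate(0.0, **params)

# Short-circuit operation: the inner potentials coincide.
N_sc = d_mu / R
mu3_sc, mu4_sc, E_sc, D_sc = solve_gate(N_sc, **params)

for N in (0.0, 0.5, 1.0, N_sc):
    _, _, E, D = solve_gate(N, **params)
    print(f"N = {N:.2f}   E = {E:+.3f}   dissipated = {D:.3f}")
```

With these sample values the dissipation grows from 0.5 at `N = 0` to 2.5 in short-circuit mode, in line with the quadratic dependence on the particle flow.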