Abstract

Experimental diffusivities are scarce, although their knowledge is essential to model rate-controlled processes. In this work, various machine learning models were developed to estimate diffusivities in polar and nonpolar solvents (except water and supercritical CO2). The models were trained on a database of 90 polar systems (1431 points) and 154 nonpolar systems (1129 points) with data on 20 properties. Five machine learning algorithms were evaluated: multilinear regression, k-nearest neighbors, decision tree, and two ensemble methods (random forest and gradient boosted). For both polar and nonpolar data, the best results were found using the gradient boosted algorithm. The model for polar systems contains 6 variables/parameters (temperature, solvent viscosity, solute molar mass, solute critical pressure, solvent molar mass, and solvent Lennard-Jones energy constant) and showed an average absolute relative deviation (AARD) of 5.07%. The nonpolar model requires five variables/parameters (the same as for polar systems, except the Lennard-Jones constant) and presents AARD = 5.86%. These results were compared with four classic models, including the 2-parameter correlation of Magalhães et al. (AARD = 5.19/6.19% for polar/nonpolar) and the predictive Wilke-Chang equation (AARD = 40.92/29.19%). Nonetheless, the Magalhães et al. correlation requires two parameters per system that must be previously fitted to data. The developed models are coded and provided as a command line program.

1. Introduction

Diffusivities are important properties for the proper design, simulation and scale-up of rate-controlled separations and chemical reactions, where they are required for the estimation of dispersion coefficients, convective mass transfer coefficients, and catalyst effectiveness factors [,,]. However, diffusivity data are scarce in terms of both compounds and operating conditions, hence the need for accurate models capable of predicting diffusivities when no experimental data are available [].

Currently, the Wilke-Chang model [], proposed in 1955, remains the most widely used equation to estimate binary diffusivities, mainly due to its simplicity. It requires only the solvent viscosity, the solvent molar mass, the solute molar volume at the normal boiling point, and operating conditions such as temperature. Other hydrodynamic equations have been proposed, such as those of Scheibel [], Tyn-Calus [], and Hayduk and Minhas []. Correlative models validated for both polar and nonpolar systems have been put forward by Magalhães et al. [,], and one may also cite the 2-parameter correlation of Dymond–Hildebrand–Batschinski (DHB) [,], based on the free-volume theory, for nonpolar and weakly polar systems at moderate densities. However, these correlations require data for the given system in order to interpolate and extrapolate diffusivities to the desired conditions. Hybrid models are also available, such as the predictive Zhu et al. [] model and the predictive Tracer Liu-Silva-Macedo (TLSM) model with its 1-parameter correlations (TLSMd and TLSMen) [,,]. These are Lennard-Jones fluid models comprising two contributions: a free-volume part and an energy component.

With the increase of readily available computing power, artificial intelligence and machine learning (ML) techniques have been increasingly applied to estimate physical properties of various compounds. In chemistry, machine learning is commonly applied within quantitative structure-property relationship (QSPR) or quantitative structure-activity relationship (QSAR) studies. These are regression or classification models that relate the structure and physicochemical properties of a molecule to a desired response: a chemical property, in the case of QSPR, or a biological activity, in the case of QSAR. QSPR/QSAR approaches have been applied to predict the diffusivity of pure chemicals [] and of acids in water [], using databases of 320 chemicals and 65 acids, respectively. In both cases, a genetic algorithm was employed to select the molecular descriptors, while feed-forward and radial basis function neural networks were used to build the diffusion coefficient models. A squared correlation coefficient above 0.98 was obtained for the test set in both cases. Beigzadeh and coworkers [] developed a feed-forward artificial neural network to estimate the Fick diffusion coefficient in binary liquid systems, using a database of 851 points. The results showed superior performance compared with commonly used theoretical and empirical correlative models, with a total average relative deviation of 4.75%. Eslamloueyan and Khademi [] used a database of 336 experimental data points to develop a feed-forward neural network that predicts binary diffusivities of gaseous mixtures at atmospheric pressure as a function of temperature, based on the critical temperature, critical volume and molecular weight of each component. This model showed a relative error of 4.47%, lower than those of alternative correlations.
A QSPR model by Abbasi and Eslamloueyan [] applied a multi-layer perceptron (MLP) neural network and an adaptive neuro-fuzzy inference system (ANFIS) to estimate the binary diffusion coefficients of liquid hydrocarbon mixtures. These models were constructed on a database of 345 experimental points and showed very good accuracy, with an average absolute relative deviation (AARD) of 7.79% for the test data, comparing favorably with five semi-empirical correlations such as the Tyn-Calus and Wilke-Chang equations. Another QSPR model with five parameters, based on genetic function approximation, has been proposed to predict the diffusion coefficient of non-electrolyte organic compounds in air at ambient temperature []. It used a dataset of 4579 organic compounds and provided a very low AARD of 0.3%. The authors applied leverage statistics to define the applicability domain of the final model. A neural network model based on the mega-trend diffusion algorithm was proposed to predict CO2 diffusivity in biodegradable polymers []. It showed better precision than free-volume and conventional back-propagation models. More recently, machine learning and neural network models have also been applied to estimate the thermal diffusivity of hydrocarbons [], aromatic compounds and insulating materials [], and the diffusivity of solutes in supercritical carbon dioxide [].

In this work, we develop models for the prediction of binary diffusion coefficients in polar and nonpolar systems by employing several machine learning algorithms, such as decision tree, nearest neighbors and ensemble methods. A large database of experimental data was collected, divided into polar and nonpolar systems, and used for training the models. The database comprises experimental points for liquids (except water), compressed gases and supercritical fluids (except CO2). Water was excluded because its behavior usually differs from that of other polar solvents, and because the large amount of experimental data available for aqueous systems could bias the model. The latter argument also applies to binary diffusivities in supercritical CO2. Results were compared with four methods to estimate diffusivities: two hydrodynamic equations (Wilke-Chang and Tyn-Calus), a 2-parameter correlation (Magalhães et al.), and a hybrid model (Zhu et al.).

2. Theory and Methods

The methodology used in this work to develop machine learning (ML) models for the prediction of diffusivities can be summarized in the following steps: (i) variable selection; (ii) selection of learning algorithms; (iii) data splitting into training and testing sets; (iv) data scaling; (v) hyper-parameter optimization by grid search with cross-validation; and (vi) final model evaluation. These steps are detailed below. The ML models were compared with the hydrodynamic models of Wilke-Chang [] and Tyn-Calus [], the hybrid model of Zhu et al. [] and one of the correlations proposed by Magalhães et al. [].

2.1. Database Compilation

The database of binary diffusivities used in this work relied on the recent compilation published by Zêzere et al. [] for nonpolar solvent systems, and on an updated version of the database reported by Magalhães et al. [] for polar solvent systems. Globally, the database covers a wide range of temperatures (213.2–567.2 K) and densities (0.30–1.65 g cm−3) and comprises 244 binary systems and 2560 data points: 90 polar systems (polar solvent/solute) totaling 1431 points and 154 nonpolar systems (nonpolar solvent/solute) totaling 1129 points. Data were collected for the 20 properties shown in Table 1. Whenever not reported by the authors, densities and viscosities were taken from the National Institute of Standards and Technology (NIST) database or calculated by the following equations and methods: Yaws [], Cibulka and Ziková [], Cibulka et al. [,], Cibulka and Takagi [], Przezdziecki and Sridhar [], Viswanath et al. [], the Lucas method [], Assael et al. [], Cano-Gómez et al. [] and Pádua et al. []. Solute molar volumes at the normal boiling point were estimated by the Tyn–Calus equation []. The critical constants, whenever not provided with the diffusion data and not found in the other references [,,,,,,,,], were estimated by the Joback [,,], Somayajulu [], Klincewicz [,], Ambrose [,,,] and Wen–Qiang [] methods. For ionic liquids, the critical constants were retrieved from Valderrama and Rojas []. The acentric factors, when not provided, were estimated by the Lee-Kesler [] and Pitzer [] equations or retrieved from [,,,,,,,,]. The Lennard-Jones diameter and energy were taken from Silva et al. [] and, when not available, were estimated by equations 7 and 8 from Liu et al. [] and equation 9 from Magalhães et al. []. Detailed information on the database used, including pure compound properties, is presented in Table 2.

Table 1.

Properties and variables available for each system in the database.

Table 2.

Pure compounds properties and respective sources.

Polar and nonpolar systems were separated into two databases based on the polarity of the solvent and, for each, the data were randomly split 70/30% into training and testing sets. The training set was used for model learning and fitting, and the testing set was used to evaluate the performance of the fitted model after learning. Information from the testing set is never used during learning. To guarantee that all models are fed the same data, these data sets were also used to evaluate the classic models.
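A minimal sketch of such a random 70/30 split with scikit-learn's `train_test_split`, assuming the data of one database are held in NumPy arrays. The random placeholder data, the array names and the `random_state` value are illustrative assumptions, not the authors' code. With 1431 rows, `test_size=0.30` yields 430 test and 1001 training points.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder arrays standing in for one database (e.g. the polar one):
# 1431 rows, 6 input variables, and one diffusivity target per row.
rng = np.random.default_rng(0)
X = rng.random((1431, 6))
y = rng.random(1431)

# Random 70/30 split; the fixed random_state (an arbitrary choice) makes the split
# reproducible, so the same rows can be reused to evaluate the classic models.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, random_state=42
)
print(len(y_train), len(y_test))  # 1001 430
```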

Most learning algorithms benefit from scaling the input variables, which improves model robustness and training speed []. The most common scaling strategies are normalization and standardization. Normalization consists of mapping the actual range of values onto a standard range (e.g., [0, 1] or [−1, 1]). Standardization transforms variables so that they have a mean of zero and a standard deviation of one. In this work, variables were normalized to the [0, 1] range before training.
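As an illustration, [0, 1] normalization can be done with scikit-learn's `MinMaxScaler`. The sketch assumes, which the text does not state explicitly, that the scaling bounds are learned from the training set only and then reused for the test set, in line with test information never being used during learning; the data are placeholders.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

rng = np.random.default_rng(1)
# Placeholder inputs with very different magnitudes (e.g. temperature in K and
# viscosity in cP), standing in for the training and test sets.
X_train = np.column_stack([rng.uniform(268, 554, 100), rng.uniform(0.02, 17.6, 100)])
X_test = np.column_stack([rng.uniform(268, 554, 40), rng.uniform(0.02, 17.6, 40)])

# Learn each column's min and max from the training set only and map them to [0, 1].
scaler = MinMaxScaler(feature_range=(0, 1))
X_train_scaled = scaler.fit_transform(X_train)
# The test set reuses the training min/max, so its values may fall slightly outside [0, 1].
X_test_scaled = scaler.transform(X_test)

print(X_train_scaled.min(axis=0), X_train_scaled.max(axis=0))
```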

2.2. Variable Selection and Hyper-Parameter Optimization

Model variables were selected from those shown in Table 1 by systematically removing collinear variables. For each pair of variables with a collinearity above the defined threshold of 0.50, the one with the lower correlation with the diffusivity was removed from the model. When both variables showed a similar correlation with the diffusivity, the ease of obtaining each variable for a given system was also considered, in order to keep the final model simple and easy to use.
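The collinearity filter can be sketched as a greedy pass over the candidates sorted by their absolute correlation with the diffusivity. This is one possible implementation under stated assumptions (a pandas DataFrame as input, a hypothetical target column named `D12`, synthetic data), not the authors' script, and it does not capture the manual tie-break based on how easy a variable is to obtain.

```python
import numpy as np
import pandas as pd


def select_variables(df, target, threshold=0.50):
    """Greedy collinearity filter (hypothetical helper).

    Candidates are visited from most to least correlated with the target; a
    candidate is kept only if its |Pearson r| with every already kept variable
    is <= threshold, so within each collinear pair the variable better
    correlated with the target survives.
    """
    corr = df.corr().abs()  # absolute Pearson correlation matrix
    candidates = corr[target].drop(target).sort_values(ascending=False).index
    kept = []
    for var in candidates:
        if all(corr.loc[var, k] <= threshold for k in kept):
            kept.append(var)
    return kept


# Synthetic example: x2 is a noisy copy of x1, and x3 is independent of both.
rng = np.random.default_rng(0)
n = 500
x1 = rng.normal(size=n)
x2 = x1 + 0.5 * rng.normal(size=n)
x3 = rng.normal(size=n)
y = x1 + 0.5 * x3 + 0.1 * rng.normal(size=n)
df = pd.DataFrame({"x1": x1, "x2": x2, "x3": x3, "D12": y})
print(select_variables(df, "D12"))
```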

Besides the model parameters discussed thus far, each learning algorithm possesses a set of parameters, which can be seen as configuration options that specify how the algorithm behaves. These are often called hyper-parameters; they are not fitted to data but must be set before training. Hyper-parameters were optimized for each learning algorithm by grid search with 4-fold cross-validation, implemented with the GridSearchCV class of scikit-learn (version 0.22.1). This method performs an exhaustive search over a predefined grid of hyper-parameter values, evaluating each combination by 4-fold cross-validation. The k-fold cross-validation approach divides the training set into k subsets (folds), trains the model on k − 1 folds and tests it on the remaining fold. This process is repeated k times, so that each fold is used once for testing, and the best model (best combination of hyper-parameters) is the one with the best average performance, which helps to avoid both overfitting and underfitting. The evaluated hyper-parameters for each learning algorithm are shown in Table S1 of the Supplementary Material.
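A hedged sketch of the 4-fold grid search with `GridSearchCV`, shown here for a gradient boosted regressor on synthetic data. The two-parameter grid is purely illustrative (the grids actually evaluated are listed in Table S1), and the default R2 scoring is an assumption. With the default `refit=True`, the best combination is refitted on the whole training set.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic, already-scaled training data (placeholder for the real training set).
rng = np.random.default_rng(0)
X_train = rng.random((200, 5))
y_train = X_train @ np.array([1.0, -2.0, 0.5, 0.0, 3.0]) + 0.05 * rng.normal(size=200)

# Illustrative grid only: 2 x 2 = 4 hyper-parameter combinations.
param_grid = {"n_estimators": [50, 100], "max_depth": [2, 3]}

# cv=4: each combination is trained on 3 folds and scored (R2) on the held-out fold,
# rotating through all 4 folds; the best mean validation score wins.
search = GridSearchCV(GradientBoostingRegressor(random_state=0), param_grid, cv=4)
search.fit(X_train, y_train)
print(search.best_params_, round(search.best_score_, 3))
```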

2.3. Machine Learning Algorithms

Five ML algorithms were evaluated for the prediction of binary diffusivities: a multilinear regression, a k-nearest neighbors model, a decision tree algorithm, and two ensemble methods (random forest and gradient boosted). Models were implemented using the Python machine learning library scikit-learn, version 0.22.1 [].

A simple ordinary least squares multilinear regression was used as a baseline model for the prediction of binary diffusivities. In a multilinear regression [], the target value is a linear combination of the explanatory variables, weighted by fitted coefficients (plus an intercept). The coefficients are optimized to minimize the residual sum of squares between the observed and the calculated values. It was implemented using the LinearRegression class in scikit-learn.
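To make the least-squares fit concrete, the sketch below solves the same problem in closed form with NumPy and checks it against `LinearRegression` on synthetic data (the data and coefficients are made up for illustration).

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
X = rng.random((50, 3))
y = 0.5 + X @ np.array([2.0, -1.0, 0.3]) + 0.01 * rng.normal(size=50)

# Closed-form least squares: prepend a column of ones for the intercept and solve
# min ||A b - y||^2 with NumPy's least-squares solver.
A = np.column_stack([np.ones(len(X)), X])
beta, *_ = np.linalg.lstsq(A, y, rcond=None)

# scikit-learn's LinearRegression minimizes the same residual sum of squares.
ols = LinearRegression().fit(X, y)
print(beta[0], ols.intercept_)
print(beta[1:], ols.coef_)
```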

The k-nearest neighbors (kNN) algorithm [,] is one of the simplest machine learning algorithms. Its prediction is the average target value of the k neighbors closest to the query point in the input space. Neighbors are selected from a set of examples for which the target property is known. This set can be seen as the training set, although, unlike other algorithms, kNN does not require an explicit training phase. The nearest neighbors are identified from their position vectors in the multidimensional input space, usually in terms of Euclidean distance, although other distance measures can be applied. The kNN algorithm was implemented using the KNeighborsRegressor class in scikit-learn.
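The kNN prediction rule is short enough to write out directly. This sketch assumes uniform weights and Euclidean distance (the `KNeighborsRegressor` defaults) and compares a brute-force NumPy version with scikit-learn on random data; `knn_predict` is a hypothetical helper.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor


def knn_predict(X_ref, y_ref, X_query, k=5):
    """Average the targets of the k reference points closest (Euclidean) to each query."""
    # Pairwise distances, shape (n_query, n_ref).
    dist = np.linalg.norm(X_query[:, None, :] - X_ref[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]  # indices of the k closest points
    return y_ref[nearest].mean(axis=1)


rng = np.random.default_rng(0)
X_ref, y_ref = rng.random((100, 3)), rng.random(100)
X_query = rng.random((10, 3))

manual = knn_predict(X_ref, y_ref, X_query, k=5)
sk = KNeighborsRegressor(n_neighbors=5).fit(X_ref, y_ref).predict(X_query)
print(np.allclose(manual, sk))  # True
```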

Decision tree [,] models take the training data and create a set of decision rules that are applied to the explanatory variables. During training, the data are split recursively, choosing at each node the rule that best improves a splitting metric (for regression, usually the reduction in mean squared error; entropy or information gain are the usual choices for classification). Prediction is performed by following these tree-like rules from the root until an output node (leaf) is reached. The decision tree algorithm was implemented using the DecisionTreeRegressor class in scikit-learn.
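A small illustration on synthetic data: `export_text` prints the learned rules, and `apply` shows that the prediction is simply the mean training target of the leaf a sample falls into (true for the default squared-error criterion). The depth limit and the data are arbitrary choices made for readability.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor, export_text

rng = np.random.default_rng(0)
X = rng.random((200, 2))
y = np.where(X[:, 0] > 0.5, 2.0, 1.0) + 0.1 * X[:, 1]

# With the default squared-error criterion, each split is the variable/threshold
# pair that most reduces the mean squared error of the targets.
tree = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, y)
print(export_text(tree, feature_names=["x0", "x1"]))  # the learned if/else rules

# A prediction follows the rules down to a leaf; the leaf value is the mean
# training target of the samples that ended up in that leaf.
leaves = tree.apply(X)
leaf = leaves[0]
print(tree.predict(X[:1])[0], y[leaves == leaf].mean())
```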

Finally, ensemble methods combine a large number of simple models, thus improving generalizability and robustness over a single model []. They can be divided into averaging ensemble methods, such as the random forest algorithm, and boosting ensemble methods, such as the gradient boosted model, and have proven to be effective for regression learning [].

Random forests [,] consist of several strong models, such as decision trees, trained independently. Each tree is built on a random (bootstrap) sample of the training data, while the remaining samples can be used for testing. The final prediction is obtained as the average of the ensemble. Random forests are fast and simple to apply, as they have simpler hyper-parameter settings than other methods, can be applied in cases with a large amount of noise, and are less prone to overfitting []. The random forest model was implemented in scikit-learn using the RandomForestRegressor class.
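The averaging step can be checked directly: in scikit-learn, the forest prediction equals the mean of the predictions of the fitted trees stored in `estimators_`. The sketch also enables `oob_score=True`, which scores each sample only with the trees whose bootstrap sample left it out; data and settings are illustrative.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
X = rng.random((300, 4))
y = np.sin(3 * X[:, 0]) + X[:, 1] ** 2 + 0.05 * rng.normal(size=300)

# bootstrap=True (the default): each tree is fitted to a bootstrap sample of the rows;
# oob_score=True evaluates each sample with the trees that did not see it (R2).
rf = RandomForestRegressor(n_estimators=100, oob_score=True, random_state=0).fit(X, y)

# The forest prediction is the plain average of the independently trained trees.
per_tree = np.stack([t.predict(X[:5]) for t in rf.estimators_])
print(np.allclose(per_tree.mean(axis=0), rf.predict(X[:5])))  # True
print(round(rf.oob_score_, 3))  # out-of-bag R2
```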

Gradient boosted [] models also combine several learners, but these are not trained independently: they are added sequentially so that each new learner corrects the errors of the ensemble built so far. The gradient boosted model also uses decision trees, which are fitted to the negative gradient of a loss function, for instance, the squared error. The gradient is calculated for every sample of the training set, but each learner may use only a random subset of those gradients (stochastic gradient boosting). Gradient boosting has been shown to provide very good predictions, at least on par with random forests and usually superior to other methods []. The gradient boosted algorithm was implemented using the GradientBoostingRegressor class.
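To show the sequential mechanism, here is a from-scratch sketch of least-squares gradient boosting with scikit-learn regression trees as base learners. It is a simplified illustration, not `GradientBoostingRegressor` itself (which adds details such as a configurable loss and initial estimator); `boost` and `boost_predict` are hypothetical helpers, and setting `subsample < 1` gives the stochastic variant in which each tree sees a random subset of the gradients.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def boost(X, y, n_stages=100, learning_rate=0.1, subsample=1.0, seed=0):
    """Least-squares gradient boosting sketch.

    For the loss 0.5 * (y - F)**2 the negative gradient with respect to F is the
    residual y - F, so each new tree is fitted to the current residuals.
    """
    rng = np.random.default_rng(seed)
    F = np.full(len(y), y.mean())          # stage 0: constant prediction
    trees, mse = [], [np.mean((y - F) ** 2)]
    for _ in range(n_stages):
        residual = y - F                    # negative gradient at every sample
        if subsample < 1.0:                 # stochastic variant: random row subset
            rows = rng.random(len(y)) < subsample
        else:
            rows = np.ones(len(y), dtype=bool)
        tree = DecisionTreeRegressor(max_depth=3, random_state=0)
        tree.fit(X[rows], residual[rows])
        F = F + learning_rate * tree.predict(X)  # shrunken correction step
        trees.append(tree)
        mse.append(np.mean((y - F) ** 2))
    return trees, mse


def boost_predict(trees, f0, X_new, learning_rate=0.1):
    """Initial constant plus the sum of the shrunken tree corrections."""
    return f0 + learning_rate * np.sum([t.predict(X_new) for t in trees], axis=0)


rng = np.random.default_rng(0)
X = rng.random((300, 2))
y = np.sin(3 * X[:, 0]) + X[:, 1] ** 2 + 0.05 * rng.normal(size=300)
trees, mse = boost(X, y)
print(mse[0], mse[-1])  # training error falls stage by stage
```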

2.4. Classic Models

Several classic models were used as benchmarks for the proposed ML models, including the still widely used Wilke-Chang equation [], the Tyn-Calus equation [], one of the Magalhães et al. correlations [], and the Zhu et al. hybrid model []. These models are briefly presented below.

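The Wilke-Chang equation [] is an empirical modification of the Stokes-Einstein relation and is given by:

$$D_{12} = 7.4\times10^{-8}\,\frac{(\phi M_1)^{1/2}\,T}{\mu_1\,V_{bp,2}^{0.6}} \tag{1}$$

where $D_{12}$ (cm2 s−1) is the binary diffusivity, $T$ (K) is the temperature, $\mu_1$ (cP) is the solvent viscosity, $M_1$ (g mol−1) is the solvent molar mass, $\phi$ (dimensionless) is the association factor of the solvent (1.9 for methanol, 1.5 for ethanol and 1.0 if it is unassociated []), and $V_{bp,2}$ (cm3 mol−1) is the solute molar volume at normal boiling temperature, which can be estimated from the critical volume ($V_{c,2}$, cm3 mol−1) by the Tyn-Calus relation [,]:

$$V_{bp,2} = 0.285\,V_{c,2}^{1.048} \tag{2}$$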

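The Tyn-Calus equation [] is another commonly used hydrodynamic equation, which is described by:

$$D_{12} = 8.93\times10^{-8}\,\frac{V_{bp,1}^{0.267}}{V_{bp,2}^{0.433}}\,\frac{T}{\mu_1} \tag{3}$$

where $V_{bp,1}$ (cm3 mol−1) is the molar volume of the solvent at its normal boiling point, and the remaining symbols have the same meaning as in the Wilke-Chang equation.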
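For reference, a sketch of the two hydrodynamic equations in the forms commonly cited in the literature (Wilke-Chang with the Tyn-Calus estimate of the solute molar volume, and the simplified Tyn-Calus equation without parachors). It assumes T in K, solvent viscosity in cP, molar masses in g mol−1 and molar volumes in cm3 mol−1, and returns the diffusivity in cm2 s−1; the function names and the example inputs are illustrative only.

```python
def vbp_tyn_calus(vc):
    """Molar volume at the normal boiling point (cm3/mol) from the critical
    volume vc (cm3/mol), using V_bp = 0.285 * Vc**1.048."""
    return 0.285 * vc ** 1.048


def wilke_chang(T, mu1, M1, vbp2, phi=1.0):
    """Wilke-Chang binary diffusivity in cm2/s.

    T: temperature (K); mu1: solvent viscosity (cP); M1: solvent molar mass (g/mol);
    vbp2: solute molar volume at its normal boiling point (cm3/mol);
    phi: solvent association factor (1.9 methanol, 1.5 ethanol, 1.0 unassociated).
    """
    return 7.4e-8 * (phi * M1) ** 0.5 * T / (mu1 * vbp2 ** 0.6)


def tyn_calus(T, mu1, vbp1, vbp2):
    """Simplified Tyn-Calus binary diffusivity in cm2/s.

    vbp1 / vbp2: solvent / solute molar volumes at their normal boiling points (cm3/mol).
    """
    return 8.93e-8 * vbp1 ** 0.267 / vbp2 ** 0.433 * T / mu1


# Illustrative inputs only (not taken from the database).
vbp_solute = vbp_tyn_calus(250.0)
print(wilke_chang(T=298.15, mu1=0.55, M1=32.04, vbp2=vbp_solute, phi=1.9))
print(tyn_calus(T=298.15, mu1=0.55, vbp1=vbp_tyn_calus(117.0), vbp2=vbp_solute))
```

Both equations scale as T/μ1 at fixed molar volumes, so doubling the temperature at constant viscosity doubles the estimate.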

Magalhães et al. [] proposed nine correlations for the binary diffusivity, four of which depend explicitly on solvent viscosity and temperature. Here we adopt the following:

where and are fitted parameters for each system. This equation consists of a modification of the Stokes–Einstein theory [].

Zhu et al. [] developed a hybrid model containing a component related to the free volume and another related to energy. It was devised for the estimation of diffusivities in real nonpolar fluids. It is described by:

where the subscripts 1 and 2 denote solvent and solute, respectively, is the mass of the solvent, and and are the density and temperature reduced using binary Lennard-Jones (LJ) parameters and as described by:

The binary LJ parameters are calculated by the following combining rules:

and the interaction parameter is estimated through:

Finally, the LJ parameters and for the solute are calculated by:

and for the solvent:

where is the critical number density (cm−3) and and are the reduced density and reduced temperature of the solvent, calculated with the corresponding critical constants: and .

3. Results and Discussion

3.1. Machine Learning Models

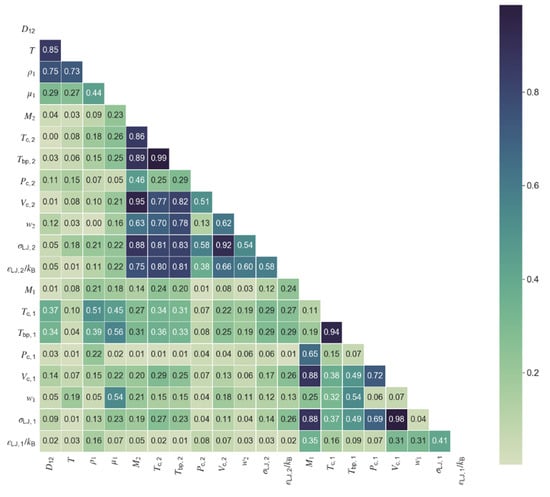

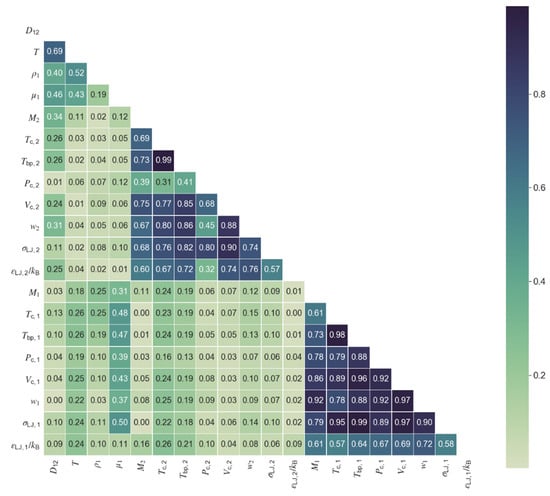

The first step towards model development was the choice of relevant variables. Selection was based on the collinearities between the available variables/properties and their degree of correlation with the diffusivity. Figure 1 and Figure 2 show the correlation matrices (as heat maps) for the polar and nonpolar data sets, where the values represent the absolute Pearson correlation. When two variables presented a collinearity above the defined threshold of 0.50, only one was kept in the model, namely the one providing the best correlation with diffusivity. Following this procedure, six variables were selected for the polar diffusivity model: temperature, solvent viscosity, solute molar mass, solute critical pressure, solvent molar mass, and the Lennard-Jones energy constant of the solvent. For the nonpolar diffusivity model, temperature, solvent viscosity, solute molar mass, solute critical pressure, and solvent molar mass were chosen, totaling five variables. A summary of the variables required for the machine learning models for polar (ML Polar) and nonpolar (ML Nonpolar) systems is presented in Table 3, together with the required inputs for the classic models of Wilke-Chang, Tyn-Calus, Magalhães et al., and Zhu et al. The two hydrodynamic equations require four input variables, the same number as the Magalhães et al. correlation, although in the latter case two of the four parameters must be fitted to experimental data, which reduces the applicability of the model. The Zhu et al. hybrid model requires the largest number of parameters (seven) and is only applicable to nonpolar systems.

Figure 1.

Correlation heat map for all properties and variables in the database of polar compounds. Colormap shows the absolute value of the Pearson correlation from zero (light green) to one (dark blue).

Figure 2.

Correlation heat map for all properties and variables in the database of nonpolar compounds. Colormap shows the absolute value of the Pearson correlation from zero (light green) to one (dark blue).

Table 3.

Required inputs for the new and classic diffusivity models.

The performance of all models was evaluated by calculating the average absolute relative deviation (AARD) of each system:

$$\mathrm{AARD} = \frac{100\%}{NDP}\sum_{i=1}^{NDP}\left|\frac{D_{12,i}^{calc} - D_{12,i}^{exp}}{D_{12,i}^{exp}}\right|$$

where superscripts calc and exp denote calculated and experimental values, and NDP is the number of data points of a system. For the whole database, the global deviation (i.e., the AARD weighted by the number of points of each system) and the arithmetic average of the system AARDs (AARDarith) were calculated. The minimum and maximum system AARDs are reported as indicators of the performance for the best and worst systems. The root mean square error (RMSE) was also calculated and is defined as:

$$\mathrm{RMSE} = \sqrt{\frac{1}{NDP}\sum_{i=1}^{NDP}\left(D_{12,i}^{calc} - D_{12,i}^{exp}\right)^{2}}$$

The coefficient of determination, R2, calculated for the training set, and the Q2 value, which corresponds to the R2 value obtained when applying the model to the test set, are also reported for all models.
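The metrics can be computed from per-system arrays as sketched below; the dictionary layout and the helper names are assumptions made for illustration. The global AARD pools all points, so it is weighted by the number of points per system, whereas AARD_arith averages the system AARDs; R2 is called Q2 when evaluated on the test set.

```python
import numpy as np
from sklearn.metrics import r2_score


def aard(calc, exp):
    """Average absolute relative deviation, in %."""
    calc, exp = np.asarray(calc, float), np.asarray(exp, float)
    return 100.0 * np.mean(np.abs((calc - exp) / exp))


def summarize(systems):
    """systems: dict mapping a system name to (calculated, experimental) arrays."""
    per_system = {name: aard(c, e) for name, (c, e) in systems.items()}
    calc = np.concatenate([np.asarray(c, float) for c, _ in systems.values()])
    exp = np.concatenate([np.asarray(e, float) for _, e in systems.values()])
    return {
        "AARD": aard(calc, exp),                           # global, point-weighted
        "AARD_arith": np.mean(list(per_system.values())),  # mean of system AARDs
        "AARD_min": min(per_system.values()),
        "AARD_max": max(per_system.values()),
        "RMSE": np.sqrt(np.mean((calc - exp) ** 2)),
        "R2": r2_score(exp, calc),  # called Q2 when computed on the test set
    }


# Toy example with two hypothetical systems: A has 2 points, B has 1 point.
stats = summarize({"A": ([1.1, 1.8], [1.0, 2.0]), "B": ([5.0], [4.0])})
print(stats["AARD"], stats["AARD_arith"], stats["RMSE"])  # ≈ 15.0, 17.5, 0.5916
```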

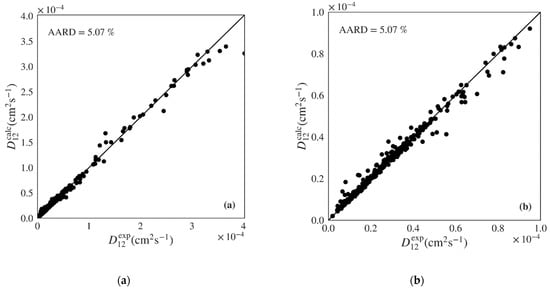

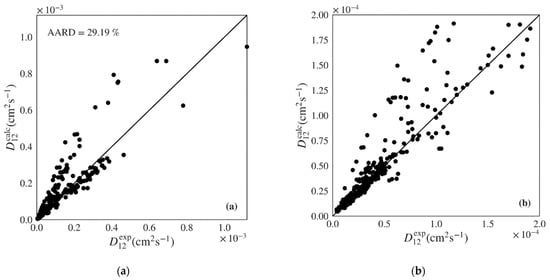

A final validation of the best machine learning models was conducted by performing a y-randomization test (also called y-scrambling). This test compares the performance of the original model with that of models built for a scrambled (randomly shuffled) response while still following the original model building procedure. The randomization eliminates the relation between the independent variables and the target response. If the models built on scrambled data perform much worse than the model built on the original data, one can be confident that the original model captures a real relationship.

Five algorithms were tested to develop the supervised learning models: multilinear regression, k-nearest neighbors, decision tree, random forest (an averaging ensemble method), and gradient boosted (a boosting ensemble method). The performance of the machine learning algorithms on the test set of polar data, covering 79 systems and 430 points, is shown in Table 4. The gradient boosted algorithm presents the best performance for the test set (pure prediction), with an AARD of 5.07%, followed by the random forest, decision tree, k-nearest neighbors, and multilinear regression (from lower to higher AARD). Similar trends are observed for the arithmetic average of the 79 system AARDs, as well as for the minimum and maximum AARDs. As expected, the multilinear regression exhibits much worse results than the other four algorithms for all AARD metrics. The gradient boosted algorithm also presents the lowest RMSE and the highest Q2. Its Q2 value is also close to R2, indicating that the model generalizes well to data not used in training. Figure 3 plots the diffusivities predicted by the gradient boosted ML model against the respective experimental values for the test set of polar systems, showing a very good distribution along the diagonal. Similar representations are provided for the remaining four algorithms in Figures S1–S4 of the Supplementary Material.
The multilinear regression model presents significant underestimation at higher diffusivity values and overestimation in the intermediate region. In contrast, the remaining three algorithms show good scatter around the diagonal, although with larger deviations than the gradient boosted model.

Table 4.

Performance of several machine learning (ML) models for the prediction of diffusivities in polar systems (test set) and comparison with classic predictive and correlation models.

Figure 3.

Predicted versus experimental diffusivities for the test set of polar systems for the best machine learning model (Gradient Boosted): (a) plot including all calculated results; (b) plot zooming on lower range.

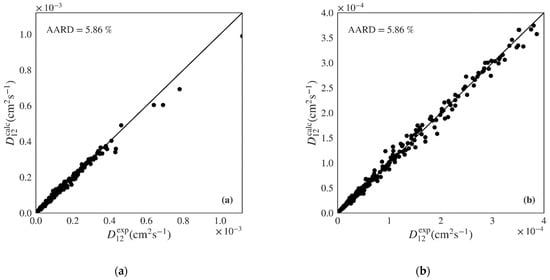

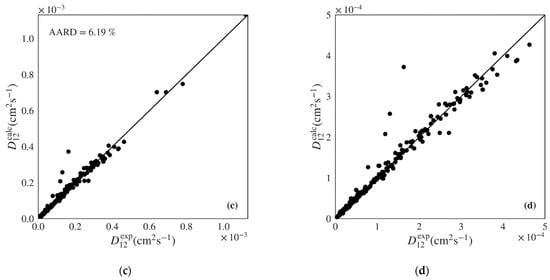

Table 5 presents the results obtained with each ML algorithm for the test set of nonpolar compounds (130 systems and 342 points). Once again, the gradient boosted algorithm presents the best global AARD for the 130 systems of the test set (5.86%), followed by the random forest, then by the decision tree and k-nearest neighbors with similar results, and lastly by the multilinear regression with significantly worse results. A similar trend is visible for the simple arithmetic average of the system AARDs. The gradient boosted algorithm shows the lowest RMSE and the highest Q2. The calculated versus experimental diffusivities for the test set of nonpolar compounds using the gradient boosted model are plotted in Figure 4, showing an unbiased distribution along the diagonal over the whole range of experimental points. Figures S5–S8 of the Supplementary Material provide the calculated versus experimental plots for the remaining four algorithms. As in the case of the polar data, the multilinear regression model presents significant deviations. The k-nearest neighbors, decision tree, and random forest algorithms show better scatter around the diagonal, with a few outliers, particularly in the case of the decision tree model.

Table 5.

Performance of several machine learning (ML) models for the prediction of diffusivities in nonpolar systems (test set) and comparison with classic predictive and correlation models.

Figure 4.

Predicted versus experimental diffusivities for the test set of nonpolar systems for the best machine learning model (Gradient Boosted) showing (a) plot including all calculated results; (b) plot zooming on lower range.

As a final validation of the gradient boosted models selected for polar and nonpolar systems, a y-randomization test was performed by scrambling the diffusivity vector. This process was repeated 200 times and always returned random models with much lower performance than the original ones, thus confirming the significance of the proposed models. Figure S9 of the Supplementary Material shows the contrast between the Q2 values of our models (0.9919 for polar and 0.9879 for nonpolar systems) and the lower values obtained for the permutations. It is worth noting that: (i) the best possible score of Q2 (and R2) is 1.0; (ii) for a constant model that always predicts the expected value of the response, both indicators are zero; (iii) Q2 (and R2) can be negative, because a model can be arbitrarily worse than that constant baseline.
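One common way to run such a test is sketched below on synthetic data: the training responses are permuted, the model is rebuilt with the same fixed settings, and Q2 is computed on the untouched test set. Fixing the hyper-parameters instead of repeating the grid search, using 20 instead of 200 permutations, and scoring against unscrambled test responses are simplifications and assumptions of this sketch.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Synthetic data with a genuine X-y relationship (placeholder for a real database).
rng = np.random.default_rng(0)
X = rng.random((400, 5))
y = X @ np.array([1.0, 2.0, -1.0, 0.5, 0.0]) + 0.05 * rng.normal(size=400)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.30, random_state=0)


def q2(y_fit):
    """Train on (X_tr, y_fit) with fixed settings and return R2 on the test set."""
    model = GradientBoostingRegressor(random_state=0).fit(X_tr, y_fit)
    return r2_score(y_te, model.predict(X_te))


q2_original = q2(y_tr)
# Shuffle the training responses to break the X-y link, then rebuild the model.
q2_scrambled = [q2(rng.permutation(y_tr)) for _ in range(20)]
print(round(q2_original, 3), round(max(q2_scrambled), 3))
```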

In summary, the ML Polar Gradient Boosted model showed good performance for the prediction of diffusivities of multiple solutes in polar solvents within the following training and test domain: temperature, 268–554 K; solvent viscosity, 0.0241–17.6 cP; solute molar mass, 17–674 g mol−1; solute critical pressure, 4.1–221.2 bar; solvent molar mass, 20–113 g mol−1; and solvent Lennard-Jones energy constant, 208–2121 K. Likewise, the ML Nonpolar Gradient Boosted model can be applied over: temperature, 213–567 K; solvent viscosity, 0.0229–2.92 cP; solute molar mass, 2–461 g mol−1; solute critical pressure, 12.5–96.3 bar; and solvent molar mass, 30–395 g mol−1. Both models showed good interpolation capability, and they are expected to provide reasonable extrapolations as well.
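Because the models were validated within these ranges, a simple guard can flag inputs that fall outside them before a prediction is trusted. The dictionary keys and the `out_of_domain` helper are hypothetical and are not the interface of the supplied program; the bounds are the ones quoted in the summary of the model domains (the solvent Lennard-Jones energy constant is assumed to be expressed as ε/k in K).

```python
# Training/test ranges of the two gradient boosted models.
# Units: T in K, viscosity in cP, molar masses in g/mol, critical pressure in bar,
# solvent Lennard-Jones energy constant (assumed eps/k) in K.
POLAR_DOMAIN = {
    "T": (268, 554), "mu_solvent": (0.0241, 17.6), "M_solute": (17, 674),
    "Pc_solute": (4.1, 221.2), "M_solvent": (20, 113), "eps_solvent_over_k": (208, 2121),
}
NONPOLAR_DOMAIN = {
    "T": (213, 567), "mu_solvent": (0.0229, 2.92), "M_solute": (2, 461),
    "Pc_solute": (12.5, 96.3), "M_solvent": (30, 395),
}


def out_of_domain(inputs, domain):
    """Names of the inputs lying outside the [min, max] range of the given domain."""
    return [name for name, (lo, hi) in domain.items() if not lo <= inputs[name] <= hi]


# Hypothetical query for a nonpolar system: only the temperature is out of range.
query = {"T": 600.0, "mu_solvent": 0.5, "M_solute": 100.0, "Pc_solute": 40.0, "M_solvent": 86.0}
print(out_of_domain(query, NONPOLAR_DOMAIN))  # ['T']
```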

The ML Polar Gradient Boosted and ML Nonpolar Gradient Boosted models are provided as a command line program in the Supplementary Material.

3.2. Detailed Comparison of ML Gradient Boosted and Classic Models

Four classic models for the calculation of diffusivities were adopted for comparison: two hydrodynamic equations (Wilke-Chang [] and Tyn-Calus []), a correlation by Magalhães et al. [], and the hybrid model of Zhu et al. []. The performance metrics of the classic models are shown in Table 4, for the polar systems, and Table 5, for the nonpolar systems. Overall, the proposed ML models outperform the classic models.

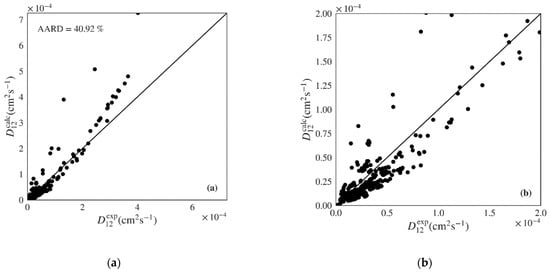

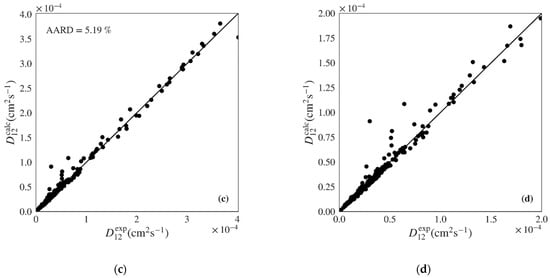

The Wilke-Chang and Tyn-Calus hydrodynamic equations provide similar performance indicators in both data sets, though the former shows much higher maximum AARDs (Table 4: 197.71% vs. 97.11%; Table 5: 172.30% vs. 64.97%). Analyzing Figure 5a,b, where the calculated versus experimental diffusivities are plotted for the polar data set over the entire range and over a low range of values, we see that the Wilke-Chang equation overestimates higher diffusivities and tends to underestimate lower ones. The Tyn-Calus equation for polar solvents provides systematic underestimation as shown in Figure S10 of the Supplementary Material. In the case of nonpolar systems, both Wilke-Chang (Figure 6a,b) and Tyn-Calus (Figure S11) models exhibit a dual biased distribution of the calculated values.

Figure 5.

Calculated versus experimental diffusivities for the test set of polar systems for: (a) and (b) Wilke-Chang (Equation (1)) [] and (c) and (d) Magalhães et al. (Equation (4)) [] models. Note the distinct scale between plots.

Figure 6.

Calculated versus experimental diffusivities for the test set of nonpolar systems for: (a) and (b) Wilke-Chang (Equation (1)) [] and (c) and (d) Magalhães et al. (Equation (4)) [] models. Note the distinct scale between plots.

The correlation of Magalhães et al. delivers the best performance among the classic models, with an unbiased distribution along the diagonal in Figure 5c,d and Figure 6c,d and AARDs only slightly above those of the machine learning gradient boosted models proposed in this work (5.19% and 6.19% for the polar and nonpolar sets, respectively). However, the Magalhães et al. correlation can often be difficult to apply, since it requires data on the system of interest in order to fit its two parameters. In this work, the data in the training sets were used to fit the two parameters of each system, which were then applied to calculate the diffusivities of the test sets. For this reason, fewer points were calculated with the Magalhães et al. model: systems for which not enough training data were available to optimize the two parameters could not be evaluated.

Finally, the Zhu et al. model, which was developed for nonpolar and weakly polar fluids, does not appear to provide any benefit over the much simpler Wilke-Chang and Tyn-Calus equations when applied to the nonpolar data set of this work. It provides a higher AARD (Table 5: 37.93%) than both hydrodynamic equations (Table 5: 29.19% and 28.84%, respectively), although its calculated values are less biased around the diagonal (Figure S12).

Table 6 details the results of the best machine learning (gradient boosted) and classic diffusivity models for each system of the polar database, as well as the distribution of points among the training and test sets. The best results are found for the ethylbenzene/acetone system (AARD of 0.08%) and the worst for the ethylene glycol/ethanol system (76.23%). However, these two systems have only one and two points in the test set, respectively. Considering only systems with at least 10 points available in the training and test sets, the carbon dioxide/n-butanol system shows the best result (1.19%), while ammonia/1-propanol has the worst (5.65%).

Table 6.

Calculated deviations of the individual systems of the polar database (divided into test and train sets) achieved by the best machine learning model of this work (Gradient Boosted) and classic equations adopted for comparison.

Table 7 presents equivalent information for the nonpolar systems. In this case, the n-decane/n-dodecane and tetraethyltin/n-decane systems show the best (0.03%) and worst (25.87%) results, respectively, but, once again, with only one point in the test set. If only systems with at least five points in the train and test sets are considered, the best result appears for 1,3,5-trimethylbenzene/n-hexane (2.98%) and the worst for toluene/n-hexane (4.58%).

Table 7.

Calculated deviations of the individual systems of the nonpolar database (divided into test and train sets) achieved by the best machine learning model of this work (Gradient Boosted) and classic equations adopted for comparison.

The models proposed in this work can be easily retrained as new experimental data become available, thus increasing their robustness and scope. A program that allows the estimation of diffusivities in polar and nonpolar systems is provided in the Supplementary Material, along with instructions on its use.

4. Conclusions

Two machine learning (ML) models were developed for the estimation of binary diffusivities in polar and nonpolar systems. These models were trained and tested on a database containing 20 properties for polar (90 systems and 1431 points) and nonpolar (154 systems and 1129 points) systems. Several learning algorithms were tested, namely multilinear regression, k-nearest neighbors, decision tree, random forest and gradient boosted. The best ML results were obtained with the gradient boosted algorithm, which provided global AARDs of 5.07% and 5.86% for the test sets of polar and nonpolar systems, respectively. The nonpolar model relies on five input variables/properties: temperature, solvent viscosity, solute molar mass, solute critical pressure and solvent molar mass. The polar model takes the solvent Lennard-Jones energy constant as an additional input, thus requiring six inputs in total. The classic models of Wilke-Chang, Tyn-Calus, Magalhães et al. and Zhu et al. were adopted for comparison and performed worse on the same test sets. The 2-parameter correlation of Magalhães et al. came closest to the new gradient boosted models, with AARDs of 5.19% (polar) and 6.19% (nonpolar). However, it requires previous experimental data to fit its two parameters, which makes it impractical for systems that have not been measured. Among the remaining classic models, Wilke-Chang provided the best result for polar systems (40.92%), while Tyn-Calus performed best for nonpolar systems (28.84%). The developed models are provided as an application in the Supplementary Material.

Supplementary Materials

The following are available online at https://www.mdpi.com/1996-1944/14/3/542/s1, Software, Table S1: Tested and best hyper-parameter values for each machine learning algorithm, Figure S1: Predicted versus experimental diffusivities for the test set of polar systems using the Multilinear Regression model, Figure S2: Predicted versus experimental diffusivities for the test set of polar systems using the k-Nearest Neighbors model, Figure S3: Predicted versus experimental diffusivities for the test set of polar systems using the Decision Tree model, Figure S4: Predicted versus experimental diffusivities for the test set of polar systems using the Random Forest model, Figure S5: Predicted versus experimental diffusivities for the test set of nonpolar systems using the Multilinear Regression model, Figure S6: Predicted versus experimental diffusivities for the test set of nonpolar systems using the k-Nearest Neighbors model, Figure S7: Predicted versus experimental diffusivities for the test set of nonpolar systems using the Decision Tree model, Figure S8: Predicted versus experimental diffusivities for the test set of nonpolar systems using the Random Forest model, Figure S9: y-Randomization calculations for the selected ML Gradient Boosted models for (a) polar systems and (b) nonpolar systems. The bars show the Q2 values for models based on randomized diffusivity data. The dashed horizontal lines show the Q2 values of the actual models. Figure S10: Calculated versus experimental diffusivities for the test set of polar systems for the Tyn-Calus model. (a) full range; (b) zoomed on lower range, Figure S11: Calculated versus experimental diffusivities for the test set of nonpolar systems for the Tyn-Calus model. (a) full range; (b) zoomed on lower range, Figure S12: Calculated versus experimental diffusivities for the test set of nonpolar systems for the Zhu et al. model. (a) full range; (b) zoomed on lower range.
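Figure S9 relies on y-randomization. The training diffusivities are shuffled, the model is refitted, and its Q2 is compared with that of the actual model. A genuine model should clearly outperform every randomized one.

The sketch below shows that check under stated assumptions. It assumes Q2 is computed on the test set as 1 − Σ(y − ŷ)²/Σ(y − ȳ)². To stay dependency-free, it swaps in a one-descriptor least-squares line for the gradient boosted model. The function names, the number of rounds and the toy data are hypothetical.

```python
import random


def fit_line(x, y):
    """Ordinary least-squares fit y = a + b*x with a single descriptor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def q2(y_true, y_pred):
    """Assumed Q2 on an external set: 1 - PRESS / total sum of squares."""
    my = sum(y_true) / len(y_true)
    press = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss = sum((t - my) ** 2 for t in y_true)
    return 1.0 - press / ss


def y_randomization(x_tr, y_tr, x_te, y_te, n_rounds=20, seed=0):
    """Return the Q2 of the actual model and the Q2 values of y-shuffled refits."""
    rng = random.Random(seed)
    a, b = fit_line(x_tr, y_tr)
    actual = q2(y_te, [a + b * xi for xi in x_te])
    randomized = []
    for _ in range(n_rounds):
        y_shuffled = y_tr[:]  # shuffle a copy so the original targets are untouched
        rng.shuffle(y_shuffled)
        a_r, b_r = fit_line(x_tr, y_shuffled)
        randomized.append(q2(y_te, [a_r + b_r * xi for xi in x_te]))
    return actual, randomized
```

If any randomized Q2 approaches the actual value, the model may be fitting chance correlations rather than a real structure-property relationship.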

Author Contributions

Conceptualization, J.P.S.A. and C.M.S.; Formal analysis, J.P.S.A. and C.M.S.; Funding acquisition, C.M.S.; Investigation, J.P.S.A. and B.Z.; Methodology, J.P.S.A. and C.M.S.; Project administration, C.M.S.; Resources, C.M.S.; Software, J.P.S.A. and B.Z.; Supervision, C.M.S.; Visualization, J.P.S.A.; Writing—original draft, J.P.S.A.; Writing—review & editing, C.M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was developed within the scope of the project CICECO-Aveiro Institute of Materials, UIDB/50011/2020 & UIDP/50011/2020, financed by national funds through the Foundation for Science and Technology/MCTES, as well as the Multibiorefinery project (POCI-01-0145-FEDER-016403). Bruno Zêzere thanks FCT for PhD grant SFRH/BD/137751/2018.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wankat, P.C. Rate-Controlled Separations; Blackie Academic & Professional: Glasgow, UK, 1994.

- Oliveira, E.L.G.; Silvestre, A.J.D.; Silva, C.M. Review of kinetic models for supercritical fluid extraction. Chem. Eng. Res. Des. 2011, 89, 1104–1117.

- Carberry, J.J. Chemical and Catalytic Reaction Engineering; McGraw-Hill: New York, NY, USA, 1971.

- Zêzere, B.; Portugal, I.; Gomes, J.R.B.; Silva, C.M. Revisiting Tracer Liu-Silva-Macedo model for binary diffusion coefficient using the largest database of liquid and supercritical systems. J. Supercrit. Fluids 2021, 168, 105073.

- Wilke, C.R.; Chang, P. Correlation of diffusion coefficients in dilute solutions. AIChE J. 1955, 1, 264–270.

- Scheibel, E.G. Liquid Diffusivities. Ind. Eng. Chem. 1954, 9, 2007–2008.

- Tyn, M.T.; Calus, W.F. Diffusion Coefficients in Dilute Binary Liquid Mixtures. J. Chem. Eng. Data 1975, 20, 106–109.

- Hayduk, W.; Minhas, B.S. Correlations for prediction of molecular diffusivities in liquids. Can. J. Chem. Eng. 1982, 60, 295–299.

- Magalhães, A.L.; Lito, P.F.; Da Silva, F.A.; Silva, C.M. Simple and accurate correlations for diffusion coefficients of solutes in liquids and supercritical fluids over wide ranges of temperature and density. J. Supercrit. Fluids 2013, 76, 94–114.

- Magalhães, A.L.; Da Silva, F.A.; Silva, C.M. Tracer diffusion coefficients of polar systems. Chem. Eng. Sci. 2012, 73, 151–168.

- Dymond, J.H. Corrected Enskog theory and the transport coefficients of liquids. J. Chem. Phys. 1974, 60, 969–973.

- Silva, C.M.; Liu, H. Modelling of Transport Properties of Hard Sphere Fluids and Related Systems, and its Applications. In Theory and Simulation of Hard-Sphere Fluids and Related Systems; Springer: Berlin, Germany, 2008; pp. 383–492.

- Zhu, Y.; Lu, X.; Zhou, J.; Wang, Y.; Shi, J. Prediction of diffusion coefficients for gas, liquid and supercritical fluid: Application to pure real fluids and infinite dilute binary solutions based on the simulation of Lennard–Jones fluid. Fluid Phase Equilib. 2002, 194–197, 1141–1159.

- Magalhães, A.L.; Cardoso, S.P.; Figueiredo, B.R.; Da Silva, F.A.; Silva, C.M. Revisiting the Liu-Silva-Macedo model for tracer diffusion coefficients of supercritical, liquid, and gaseous systems. Ind. Eng. Chem. Res. 2010, 49, 7697–7700.

- Liu, H.; Silva, C.M.; Macedo, E.A. New Equations for Tracer Diffusion Coefficients of Solutes in Supercritical and Liquid Solvents Based on the Lennard-Jones Fluid Model. Ind. Eng. Chem. Res. 1997, 36, 246–252.

- Gharagheizi, F.; Sattari, M. Estimation of molecular diffusivity of pure chemicals in water: A quantitative structure-property relationship study. SAR QSAR Environ. Res. 2009, 20, 267–285.

- Khajeh, A.; Rasaei, M.R. Diffusion coefficient prediction of acids in water at infinite dilution by QSPR method. Struct. Chem. 2011, 23, 399–406.

- Beigzadeh, R.; Rahimi, M.; Shabanian, S.R. Developing a feed forward neural network multilayer model for prediction of binary diffusion coefficient in liquids. Fluid Phase Equilib. 2012, 331, 48–57.

- Eslamloueyan, R.; Khademi, M.H. A neural network-based method for estimation of binary gas diffusivity. Chemom. Intell. Lab. Syst. 2010, 104, 195–204.

- Abbasi, A.; Eslamloueyan, R. Determination of binary diffusion coefficients of hydrocarbon mixtures using MLP and ANFIS networks based on QSPR method. Chemom. Intell. Lab. Syst. 2014, 132, 39–51.

- Mirkhani, S.A.; Gharagheizi, F.; Sattari, M. A QSPR model for prediction of diffusion coefficient of non-electrolyte organic compounds in air at ambient condition. Chemosphere 2012, 86, 959–966.

- Rahimi, M.R.; Karimi, H.; Yousefi, F. Prediction of carbon dioxide diffusivity in biodegradable polymers using diffusion neural network. Heat Mass Transf. Stoffuebertragung 2012, 48, 1357–1365.

- Lashkarbolooki, M.; Hezave, A.Z.; Bayat, M. Thermal diffusivity of hydrocarbons and aromatics: Artificial neural network predicting model. J. Thermophys. Heat Transf. 2017, 31, 621–627.

- Chudzik, S. Measurement of thermal diffusivity of insulating material using an artificial neural network. Meas. Sci. Technol. 2012, 23, 065602.

- Aniceto, J.P.S.; Zêzere, B.; Silva, C.M. Machine learning models for the prediction of diffusivities in supercritical CO2 systems. J. Mol. Liq. 2021, 115281.

- Yaws, C.L. Chemical Properties Handbook: Physical, Thermodynamic, Environmental, Transport, Safety, and Health Related Properties for Organic and Inorganic Chemicals; McGraw-Hill Professional: New York, NY, USA, 1998.

- Cibulka, I.; Ziková, M. Liquid densities at elevated pressures of 1-alkanols from C1 to C10: A critical evaluation of experimental data. J. Chem. Eng. Data 1994, 39, 876–886.

- Cibulka, I.; Hnědkovský, L.; Takagi, T. P−ρ−T data of liquids: Summarization and evaluation. 4. Higher 1-alkanols (C11, C12, C14, C16), secondary, tertiary, and branched alkanols, cycloalkanols, alkanediols, alkanetriols, ether alkanols, and aromatic hydroxy derivatives. J. Chem. Eng. Data 1997, 42, 415–433.

- Cibulka, I.; Takagi, T.; Růžička, K. P−ρ−T data of liquids: Summarization and evaluation. 7. Selected halogenated hydrocarbons. J. Chem. Eng. Data 2000, 46, 2–28.

- Cibulka, I.; Takagi, T. P−ρ−T data of liquids: Summarization and evaluation. 8. Miscellaneous compounds. J. Chem. Eng. Data 2002, 47, 1037–1070.

- Reid, R.C.; Prausnitz, J.M.; Poling, B.E. The Properties of Gases and Liquids, 4th ed.; McGraw-Hill International Editions: New York, NY, USA, 1987.

- Viswanath, D.S.; Ghosh, T.K.; Prasad, D.H.; Dutt, N.V.K.; Rani, K.Y. Viscosity of Liquids: Theory, Estimation, Experiment, and Data; Springer: Dordrecht, The Netherlands, 2007; ISBN 978-1-4020-5482-2.

- Lucas, K. Ein einfaches Verfahren zur Berechnung der Viskosität von Gasen und Gasgemischen. Chem. Ing. Tech. 1974, 46, 157–158.

- Assael, M.J.; Dymond, J.H.; Polimatidou, S.K. Correlation and prediction of dense fluid transport coefficients. Fluid Phase Equilib. 1994, 15, 189–201.

- Cano-Gómez, J.J.; Iglesias-Silva, G.A.; Rico-Ramírez, V.; Ramos-Estrada, M.; Hall, K.R. A new correlation for the prediction of refractive index and liquid densities of 1-alcohols. Fluid Phase Equilib. 2015, 387, 117–120.

- Pádua, A.A.H.; Fareleira, J.M.N.A.; Calado, J.C.G.; Wakeham, W.A. Density and viscosity measurements of 2,2,4-trimethylpentane (isooctane) from 198 K to 348 K and up to 100 MPa. J. Chem. Eng. Data 1996, 41, 1488–1494.

- Tyn, M.T.; Calus, W.F. Estimating liquid molar volume. Processing 1975, 21, 16–17.

- ChemSpider—Building Community for Chemists. Available online: http://www.chemspider.com (accessed on 22 August 2020).

- Korea Thermophysical Properties Data Bank (KDB). Available online: http://www.cheric.org/research/kdb/hcprop/cmpsrch.php (accessed on 22 August 2020).

- Design Institute for Physical Properties (DIPPR). Available online: http://dippr.byu.edu/ (accessed on 22 August 2020).

- Yaws, C.L. Thermophysical Properties of Chemicals and Hydrocarbons; William Andrew Inc.: New York, NY, USA, 2008.

- LookChem.com—Look for Chemicals. Available online: http://www.lookchem.com (accessed on 22 August 2020).

- AspenTech. Aspen Physical Property System—Physical Property Methods; AspenTech: Cambridge, MA, USA, 2007.

- Cordeiro, J. Medição e Modelação de Difusividades em CO2 Supercrítico e Etanol; Universidade de Aveiro: Aveiro, Portugal, 2015.

- Joback, K.G.; Reid, R.C. A Unified Approach to Physical Property Estimation Using Multivariate Statistical Techniques; Massachusetts Institute of Technology: Cambridge, MA, USA, 1984.

- Joback, K.G.; Reid, R.C. Estimation of pure-component properties from group-contributions. Chem. Eng. Commun. 1987, 57, 233–243.

- Somayajulu, G.R. Estimation Procedures for Critical Constants. J. Chem. Eng. Data 1989, 34, 106–120.

- Klincewicz, K.M.; Reid, R.C. Estimation of critical properties with group contribution methods. AIChE J. 1984, 30, 137–142.

- Ambrose, D. Correlation and estimation of vapour-liquid critical properties. I: Critical temperatures of organic compounds. In NPL Technical Report Chem. 92; National Physical Lab.: London, UK, 1978.

- Ambrose, D. Correlation and Estimation of Vapour-Liquid Critical Properties. II: Critical Pressure and Critical Volume. In NPL Technical Report Chem. 92; National Physical Lab.: London, UK, 1979.

- Green, D.W.; Perry, R.H. Perry’s Chemical Engineers’ Handbook, 8th ed.; McGraw-Hill Professional: New York, NY, USA, 2008.

- Wen, X.; Qiang, Y. A new group contribution method for estimating critical properties of organic compounds. Ind. Eng. Chem. Res. 2001, 40, 6245–6250.

- Valderrama, J.O.; Rojas, R.E. Critical properties of ionic liquids. Revisited. Ind. Eng. Chem. Res. 2009, 48, 6890–6900.

- Lee, B.I.; Kesler, M.G. A generalized thermodynamic correlation based on three-parameter corresponding states. AIChE J. 1975, 21, 510–527.

- Pitzer, K.S.; Lippmann, D.Z.; Curl, R.F.; Huggins, C.M.; Petersen, D.E. The Volumetric and Thermodynamic Properties of Fluids. II. Compressibility Factor, Vapor Pressure and Entropy of Vaporization. J. Am. Chem. Soc. 1955, 77, 3433–3440.

- Zêzere, B.; Magalhães, A.L.; Portugal, I.; Silva, C.M. Diffusion coefficients of eucalyptol at infinite dilution in compressed liquid ethanol and in supercritical CO2/ethanol mixtures. J. Supercrit. Fluids 2018, 133, 297–308.

- Leite, J.; Magalhães, A.L.; Valente, A.A.; Silva, C.M. Measurement and modelling of tracer diffusivities of gallic acid in liquid ethanol and in supercritical CO2 modified with ethanol. J. Supercrit. Fluids 2018, 131, 130–139.

- Catchpole, O.J.; Von Kamp, J.C. Phase equilibrium for the extraction of squalene from shark liver oil using supercritical carbon dioxide. Ind. Eng. Chem. Res. 1997, 36, 3762–3768.

- Liu, H.; Silva, C.M.; Macedo, E.A. Unified approach to the self-diffusion coefficients of dense fluids over wide ranges of temperature and pressure-hard-sphere, square-well, Lennard-Jones and real substances. Chem. Eng. Sci. 1998, 53, 2403–2422.

- Cordeiro, J.; Magalhães, A.L.; Valente, A.A.; Silva, C.M. Experimental and theoretical analysis of the diffusion behavior of chromium(III) acetylacetonate in supercritical CO2. J. Supercrit. Fluids 2016, 118, 153–162.

- Burkov, A. The Hundred-Page Machine Learning Book; Andriy Burkov: Quebec City, QC, Canada, 2019; ISBN 978-1-99-957950-0.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning, 2nd ed.; Springer: New York, NY, USA, 2009; ISBN 978-0-38-784857-0.

- Altman, N.S. An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 1992, 46, 175–185.

- Mitchell, J.B.O. Machine learning methods in chemoinformatics. Wiley Interdiscip. Rev. Comput. Mol. Sci. 2014, 4, 468–481.

- Quinlan, J.R. Simplifying decision trees. Int. J. Man. Mach. Stud. 1987, 27, 221–234.

- Müller, A.C.; Guido, S. Introduction to Machine Learning with Python: A Guide for Data Scientists; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2016; ISBN 978-1-449-36941-5.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Svetnik, V.; Wang, T.; Tong, C.; Liaw, A.; Sheridan, R.P.; Song, Q. Boosting: An ensemble learning tool for compound classification and QSAR modeling. J. Chem. Inf. Model. 2005, 45, 786–799.

- Cooper, E. Diffusion Coefficients at Infinite Dilution in Alcohol Solvents at Temperatures to 348 K and Pressures to 17 MPa; University of Ottawa: Ottawa, ON, Canada, 1992.

- Pratt, K.C.; Wakeham, W.A. The mutual diffusion coefficient for binary mixtures of water and the isomers of propanol. Proc. R. Soc. Lond. A 1975, 342, 401–419.

- Sun, C.K.J.; Chen, S.-H. Tracer diffusion in dense methanol and 2-propanol up to supercritical region: Understanding of solvent molecular association and development of an empirical correlation. Ind. Eng. Chem. Res. 1987, 24, 815–819.

- Man, C.W. Limiting Mutual Diffusion of Nonassociated Aromatic Solutes; The Hong Kong Polytechnic University: Hong Kong, China, 2001.

- Tyn, M.T.; Calus, W.F. Temperature and concentration dependence of mutual diffusion coefficients of some binary liquid systems. J. Chem. Eng. Data 1975, 20, 310–316.

- Sarraute, S.; Gomes, M.F.C.; Pádua, A.A.H. Diffusion coefficients of 1-alkyl-3-methylimidazolium ionic liquids in water, methanol, and acetonitrile at infinite dilution. J. Chem. Eng. Data 2009, 54, 2389–2394.

- Hurle, R.L.; Woolf, L.A. Tracer diffusion in methanol and acetonitrile under pressure. J. Chem. Soc. Faraday Trans. 1982, 78, 2921–2928.

- Wong, C.-F.; Hayduk, W. Molecular diffusivities for propene in 1-butanol, chlorobenzene, ethylene glycol, and n-octane at elevated pressures. J. Chem. Eng. Data 1990, 35, 323–328.

- Wong, C.-F. Diffusion Coefficients of Dissolved Gases in Liquids; University of Ottawa: Ottawa, ON, Canada, 1989.

- Kopner, A.; Hamm, A.; Ellert, J.; Feist, R.; Schneider, G.M. Determination of binary diffusion coefficients in supercritical chlorotrifluoromethane and sulfurhexafluoride with supercritical fluid chromatography (SFC). Chem. Eng. Sci. 1987, 42, 2213–2218.

- Han, P.; Bartels, D.M. Temperature dependence of oxygen diffusion in H2O and D2O. J. Phys. Chem. 1996, 100, 5597–5602.

- Tominaga, T.; Matsumoto, S. Diffusion of polar and nonpolar molecules in water and ethanol. Bull. Chem. Soc. Jpn. 1990, 63, 533–537.

- Sun, C.K.J.; Chen, S.H. Tracer diffusion in dense ethanol: A generalized correlation for nonpolar and hydrogen-bonded solvents. AIChE J. 1986, 32, 1367–1371.

- Suárez-Iglesias, O.; Medina, I.; Pizarro, C.; Bueno, J.L. Diffusion of benzyl acetate, 2-phenylethyl acetate, 3-phenylpropyl acetate, and dibenzyl ether in mixtures of carbon dioxide and ethanol. Ind. Eng. Chem. Res. 2007, 46, 3810–3819.

- Lin, I.-H.; Tan, C.-S. Diffusion of benzonitrile in CO2-expanded ethanol. J. Chem. Eng. Data 2008, 53, 1886–1891.

- Kong, C.Y.; Watanabe, K.; Funazukuri, T. Measurement and correlation of the diffusion coefficients of chromium(III) acetylacetonate at infinite dilution in supercritical carbon dioxide and in liquid ethanol. J. Chem. Thermodyn. 2017, 105, 86–93.

- Zêzere, B.; Cordeiro, J.; Leite, J.; Magalhães, A.L.; Portugal, I.; Silva, C.M. Diffusivities of metal acetylacetonates in liquid ethanol and comparison with the transport behavior in supercritical systems. J. Supercrit. Fluids 2019, 143, 259–267.

- Funazukuri, T.; Yamasaki, T.; Taguchi, M.; Kong, C.Y. Measurement of binary diffusion coefficient and solubility estimation for dyes in supercritical carbon dioxide by CIR method. Fluid Phase Equilib. 2015, 420, 7–13.

- Kong, C.Y.; Sugiura, K.; Natsume, S.; Sakabe, J.; Funazukuri, T.; Miyake, K.; Okajima, I.; Badhulika, S.; Sako, T. Measurements and correlation of diffusion coefficients of ibuprofen in both liquid and supercritical fluids. J. Supercrit. Fluids 2020, 159, 104776.

- Snijder, E.D.; te Riele, M.J.M.; Versteeg, G.F.; van Swaaij, W.P.M. Diffusion Coefficients of CO, CO2, N2O, and N2 in ethanol and toluene. J. Chem. Eng. Data 1995, 40, 37–39.

- Kong, C.Y.; Watanabe, K.; Funazukuri, T. Diffusion coefficients of phenylbutazone in supercritical CO2 and in ethanol. J. Chromatogr. A 2013, 1279, 92–97.

- Zêzere, B.; Iglésias, J.; Portugal, I.; Gomes, J.R.B.; Silva, C.M. Diffusion of quercetin in compressed liquid ethyl acetate and ethanol. J. Mol. Liq. 2020, 114714.

- Pratt, K.C.; Wakeham, W.A. The mutual diffusion coefficient of ethanol-water mixtures: Determination by a rapid, new method. Proc. R. Soc. Lond. A 1974, 336, 393–406.

- Zêzere, B.; Silva, J.M.; Portugal, I.; Gomes, J.R.B.; Silva, C.M. Measurement of astaxanthin and squalene diffusivities in compressed liquid ethyl acetate by Taylor-Aris dispersion method. Sep. Purif. Technol. 2020, 234, 116046.

- Heintz, A.; Ludwig, R.; Schmidt, E. Limiting diffusion coefficients of ionic liquids in water and methanol: A combined experimental and molecular dynamics study. Phys. Chem. Chem. Phys. 2011, 13, 3268–3273.

- Liu, Q.; Takemura, F.; Yabe, A. Solubility and diffusivity of carbon monoxide in liquid methanol. J. Chem. Eng. Data 1996, 41, 589–592.

- Lin, I.-H.; Tan, C.-S. Measurement of diffusion coefficients of p-chloronitrobenzene in CO2-expanded methanol. J. Supercrit. Fluids 2008, 46, 112–117.

- Funazukuri, T.; Sugihara, T.; Yui, K.; Ishii, T.; Taguchi, M. Measurement of infinite dilution diffusion coefficients of vitamin K3 in CO2 expanded methanol. J. Supercrit. Fluids 2016, 108, 19–25.

- Lee, Y.E.; Li, F.Y. Binary diffusion coefficients of the methanol water system in the temperature range 30–40 °C. J. Chem. Eng. Data 1991, 36, 240–243.

- Fan, Y.Q.; Qian, R.Y.; Shi, M.R.; Shi, J. Infinite dilution diffusion coefficients of several aromatic hydrocarbons in octane and 2,2,4-trimethylpentane. J. Chem. Eng. Data 1995, 40, 1053–1055.

- Sun, C.K.J.; Chen, S.H. Diffusion of benzene, toluene, naphthalene, and phenanthrene in supercritical dense 2,3-dimethylbutane. AIChE J. 1985, 31, 1904–1910.

- Toriumi, M.; Katooka, R.; Yui, K.; Funazukuri, T.; Kong, C.Y.; Kagei, S. Measurements of binary diffusion coefficients for metal complexes in organic solvents by the Taylor dispersion method. Fluid Phase Equilib. 2010, 297, 62–66.

- Sun, C.K.J.; Chen, S.H. Tracer diffusion of aromatic hydrocarbons in n-hexane up to the supercritical region. Chem. Eng. Sci. 1985, 40, 2217–2224.

- Funazukuri, T.; Nishimoto, N.; Wakao, N. Binary diffusion coefficients of organic compounds in hexane, dodecane, and cyclohexane at 303.2-333.2 K and 16.0 MPa. J. Chem. Eng. Data 1994, 39, 911–915.

- Chen, S.H.; Davis, H.T.; Evans, D.F. Tracer diffusion in polyatomic liquids. II. J. Chem. Phys. 1981, 75, 1422–1426.

- Sun, C.K.J.; Chen, S.H. Tracer diffusion of aromatic hydrocarbons in liquid cyclohexane up to its critical temperature. AIChE J. 1985, 31, 1510–1515.

- Chen, B.H.C.; Sun, C.K.J.; Chen, S.H. Hard sphere treatment of binary diffusion in liquid at high dilution up to the critical temperature. J. Chem. Phys. 1985, 82, 2052–2055.

- Noel, J.M.; Erkey, C.; Bukur, D.B.; Akgerman, A. Infinite dilution mutual diffusion coefficients of 1-octene and 1-tetradecene in near-critical ethane and propane. J. Chem. Eng. Data 1994, 39, 920–921.

- Chen, H.C.; Chen, S.H. Tracer diffusion of crown ethers in n-decane and n-tetradecane: An improved correlation for binary systems involving normal alkanes. Ind. Eng. Chem. Fundam. 1985, 24, 187–192.

- Chen, S.H.; Davis, H.T.; Evans, D.F. Tracer diffusion in polyatomic liquids. III. J. Chem. Phys. 1982, 77, 2540–2544.

- Pollack, G.L.; Kennan, R.P.; Himm, J.F.; Stump, D.R. Diffusion of xenon in liquid alkanes: Temperature dependence measurements with a new method. Stokes–Einstein and hard sphere theories. J. Chem. Phys. 1990, 92, 625–630.

- Matthews, M.A.; Rodden, J.B.; Akgerman, A. High-temperature diffusion of hydrogen, carbon monoxide, and carbon dioxide in liquid n-heptane, n-dodecane, and n-hexadecane. J. Chem. Eng. Data 1987, 32, 319–322.

- Matthews, M.A.; Akgerman, A. Diffusion coefficients for binary alkane mixtures to 573 K and 3.5 MPa. AIChE J. 1987, 33, 881–885.

- Rodden, J.B.; Erkey, C.; Akgerman, A. High-temperature diffusion, viscosity, and density measurements in n-eicosane. J. Chem. Eng. Data 1988, 33, 344–347.

- Qian, R.Y.; Fan, Y.Q.; Shi, M.R.; Shi, J. Predictive equation of tracer liquid diffusion coefficient from viscosity. Chin. J. Chem. Eng. 1996, 4, 203–208.

- Li, S.F.Y.; Wakeham, W.A. Mutual diffusion coefficients for two n-octane isomers in n-heptane. Int. J. Thermophys. 1989, 10, 995–1003.

- Grushka, E.; Kikta, E.J. Diffusion in liquids. II. Dependence of diffusion coefficients on molecular weight and on temperature. J. Am. Chem. Soc. 1976, 98, 643–648.

- Lo, H.Y. Diffusion coefficients in binary liquid n-alkane systems. J. Chem. Eng. Data 1974, 19, 236–241.

- Alizadeh, A.A.; Wakeham, W.A. Mutual diffusion coefficients for binary mixtures of normal alkanes. Int. J. Thermophys. 1982, 3, 307–323.

- Padrel de Oliveira, C.M.; Fareleira, J.M.N.A.; Nieto de Castro, C.A. Mutual diffusivity in binary mixtures of n-heptane with n-hexane isomers. Int. J. Thermophys. 1989, 10, 973–982.

- Li, S.F.Y.; Yue, L.S. Composition dependence of binary diffusion coefficients in alkane mixtures. Int. J. Thermophys. 1990, 11, 537–554.

- Matthews, M.A.; Rodden, J.B.; Akgerman, A. High-temperature diffusion, viscosity, and density measurements in n-hexadecane. J. Chem. Eng. Data 1987, 32, 317–319.

- Awan, M.A.; Dymond, J.H. Transport properties of nonelectrolyte liquid mixtures. X. Limiting mutual diffusion coefficients of fluorinated benzenes in n-hexane. Int. J. Thermophys. 1996, 17, 759–769.

- Okamoto, M. Diffusion coefficients estimated by dynamic fluorescence quenching at high pressure: Pyrene, 9,10-dimethylanthracene, and oxygen in n-hexane. Int. J. Thermophys. 2002, 23, 421–435.

- Dymond, J.H.; Woolf, L.A. Tracer diffusion of organic solutes in n-hexane at pressures up to 400 MPa. J. Chem. Soc. Faraday Trans. 1 1982, 78, 991–1000.

- Safi, A.; Nicolas, C.; Neau, E.; Chevalier, J.L. Measurement and correlation of diffusion coefficients of aromatic compounds at infinite dilution in alkane and cycloalkane solvents. J. Chem. Eng. Data 2007, 52, 977–981.

- Leffler, J.; Cullinan, H.T. Variation of liquid diffusion coefficients with composition. Dilute ternary systems. Ind. Eng. Chem. Fundam. 1970, 9, 88–93.

- Harris, K.R.; Pua, C.K.N.; Dunlop, P.J. Mutual and tracer diffusion coefficients and frictional coefficients for systems benzene-chlorobenzene, benzene-n-hexane, and benzene-n-heptane at 25 °C. J. Phys. Chem. 1970, 74, 3518–3529.

- Bidlack, D.L.; Kett, T.K.; Kelly, C.M.; Anderson, D.K. Diffusion in the solvents hexane and carbon tetrachloride. J. Chem. Eng. Data 1969, 14, 342–343.

- Grushka, E.; Kikta, E.J. Extension of the chromatographic broadening method of measuring diffusion coefficients to liquid systems. I. Diffusion coefficients of some alkylbenzenes in chloroform. J. Phys. Chem. 1974, 78, 2297–2301.

- Holmes, J.T.; Olander, D.R.; Wilke, C.R. Diffusion in mixed solvents. AIChE J. 1962, 8, 646–649.

- Funazukuri, T.; Ishiwata, Y. Diffusion coefficients of linoleic acid methyl ester, Vitamin K3 and indole in mixtures of carbon dioxide and n-hexane at 313.2 K, and 16.0 MPa and 25.0 MPa. Fluid Phase Equilib. 1999, 164, 117–129.

- Moore, J.W.; Wellek, R.M. Diffusion coefficients of n-heptane and n-decane in n-alkanes and n-alcohols at several temperatures. J. Chem. Eng. Data 1974, 19, 136–140.

- Márquez, N.; Kreutzer, M.T.; Makkee, M.; Moulijn, J.A. Infinite dilution binary diffusion coefficients of hydrotreating compounds in tetradecane in the temperature range from (310 to 475) K. J. Chem. Eng. Data 2008, 53, 439–443.

- Debenedetti, P.G.; Reid, R.C. Diffusion and mass transfer in supercritical fluids. AIChE J. 1986, 32, 2034–2046.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).