- Article

End-to-End Tool Path Generation for Triangular Mesh Surfaces in Five-Axis CNC Machining

Shi-Chu Li, Hong-Yu Ma, Li-Yong Shen (+ 1 author)

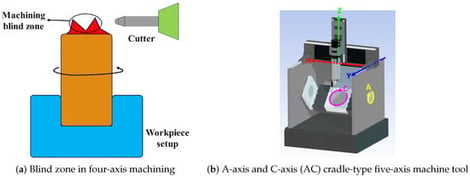

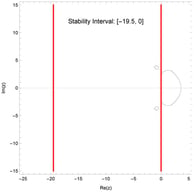

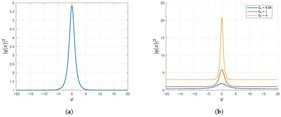

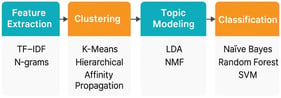

Triangular mesh surface representation is widely adopted in geometric design and reverse engineering. However, in high-precision Computer Numerical Control (CNC) machining, automated Computer-Aided Manufacturing (CAM) tool path generation for such representations remains significantly limited. Conventional CAM workflows rely heavily on manual engineering intervention, such as creating drive surfaces or tuning extensive parameters, and this dependency becomes particularly acute for generic free-form models. To address this challenge, this paper proposes a novel end-to-end, single-step end-milling tool path generation methodology for triangular mesh surfaces in high-precision five-axis CNC machining. The framework includes clustering analysis to determine the optimal workpiece orientation, normal vector distribution analysis to identify shallow and steep regions, Graphics Processing Unit (GPU)-accelerated collision detection to compute feasible tool orientation domains, and iso-planar tool path generation with Traveling Salesman Problem (TSP) optimization for efficient tool lifting and movement. Experimental validation confirms that the framework ensures machining quality and algorithmic robustness.
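To make the shallow/steep distinction concrete, the following minimal sketch labels each triangle by the angle between its face normal and a reference axis. It is an illustration only, not the authors' method: the abstract does not describe the normal vector distribution analysis in detail, so the function names (`face_normal`, `classify_faces`), the fixed 45° threshold, and the choice of the +Z axis as the reference direction are all assumptions made here.

```python
import math


def face_normal(v0, v1, v2):
    """Unit normal of a triangle given its vertices in counter-clockwise order."""
    ux, uy, uz = (v1[i] - v0[i] for i in range(3))
    wx, wy, wz = (v2[i] - v0[i] for i in range(3))
    # Cross product of the two edge vectors.
    nx, ny, nz = uy * wz - uz * wy, uz * wx - ux * wz, ux * wy - uy * wx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("degenerate triangle")
    return (nx / length, ny / length, nz / length)


def classify_faces(vertices, faces, axis=(0.0, 0.0, 1.0), threshold_deg=45.0):
    """Label each face 'shallow' or 'steep'.

    Assumptions (hypothetical, not from the paper): `axis` is a unit vector
    for the reference direction, and a face is 'shallow' when the angle
    between its normal and `axis` is at most `threshold_deg`.
    """
    labels = []
    for i, j, k in faces:
        n = face_normal(vertices[i], vertices[j], vertices[k])
        # Clamp the dot product to guard acos against rounding error.
        cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(n, axis))))
        angle = math.degrees(math.acos(cos_angle))
        labels.append("shallow" if angle <= threshold_deg else "steep")
    return labels


# One horizontal triangle and one vertical triangle sharing an edge.
vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
faces = [(0, 1, 2), (0, 1, 3)]
labels = classify_faces(vertices, faces)
```

A fixed per-face threshold is the simplest possible rule. An analysis based on the distribution of normals, as the abstract names it, would presumably derive the split from the model itself rather than from a constant.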

24 February 2026