Abstract

Information theoretic measures were applied to the study of the randomness associations of different financial time series. We studied the level of similarities between information theoretic measures and the various tools of regression analysis, i.e., between Shannon entropy and the total sum of squares of the dependent variable, relative mutual information and coefficients of correlation, conditional entropy and residual sum of squares, etc. We observed that mutual information and its dynamical extensions provide an alternative approach with some advantages to study the association between several international stock indices. Furthermore, mutual information and conditional entropy are relatively efficient compared to the measures of statistical dependence.

1. Introduction

Financial markets are dynamic structures which exhibit nonlinear behavior. The correlation structure of time series data for financial securities incorporates important statistics for many real world applications such as portfolio diversification, risk measures, and asset pricing modeling [1,2]. Strict linear correlation studies are likely to neglect important financial system characteristics. Traditionally, non-linear correlations with higher order moments have been studied.

Some researchers have adopted an information theoretic approach to study this statistical dependence because of its potential to identify a stochastic relationship as a whole, including linearity and non-linearity (refer to [3,4,5,6]), which makes it a general approach. For example, network statistics based on mutual information can successfully replace statistics based on the coefficient of correlation [7]. Ormos et al. [8] defined continuous entropy as an alternative measure of risk in an asset pricing model. Mercurio et al. [9] introduced a new family of portfolio optimization problems called the return-entropy portfolio optimization (REPO), which simplifies the computation of portfolio entropy using a combinatorial approach. Novais et al. [10] described a new model for portfolio optimization (PO), using entropy and mutual information instead of variance and covariance as measurements of risk.

Ghosh et al. [11] presented a non-parametric estimate of the pricing kernel, extracted using an information-theoretic approach. This approach delivers smaller out-of-sample pricing errors and a better cross-sectional fit than leading factor models. Batra et al. [12] compared the efficiency of the traditional Mean-Variance (MV) portfolio model proposed by Markowitz with models that incorporate diverse information theoretic measures such as Shannon entropy, Renyi entropy, Tsallis entropy, and two-parameter Varma entropy. Furthermore, they derived risk-neutral density functions of multiple assets to price European options, using a simple constrained minimization of the Kullback measure of relative information to obtain an explicit solution of this multi-asset option pricing model [13].

In this paper, we propose a way of integrating non-linear dynamics and dependencies in the study of financial markets using several information entropic measures and some dynamical extensions of mutual information, such as the normalized mutual information measure, relative mutual information rate, and variation of information. Using nine international stock indices that were traded continuously over eighteen years (2001–2018), we show that this approach leads to better results than studies using a correlation-based approach.

The rest of the paper is divided into four sections. In Section 2, we give the theoretical framework of the information measures and the methodology used. In Section 3, we describe the daily closing price data of some international stock indices with comparative results. In Section 4, we give a detailed comparative study with the help of tables and figures based on our analysis of several international stock indices. In Section 5, we conclude the paper with a discussion.

2. Theoretical Framework: Entropy Measures

2.1. Shannon Entropy

Shannon [14] introduced the concept of entropy in communication systems. For a given probability distribution $P = (p_1, p_2, \ldots, p_n)$ of a random variable X, the Shannon entropy is given by

$$H(X) = -\sum_{i=1}^{n} p_i \log p_i.$$

This entropy has found applications in many diverse fields, in particular as a measure of volatility in financial markets [15].
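As a minimal sketch of how the Shannon entropy of a price series might be estimated in practice, the following assumes equal-width binning, relative bin frequencies as probabilities, and natural logarithms. The helper names `bin_series` and `empirical_entropy` and the toy prices are hypothetical and are not taken from the paper.

```python
import math
from collections import Counter


def shannon_entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution given as probabilities."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def bin_series(values, n_bins):
    """Assign each value to one of n_bins equal-width bins (hypothetical helper)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if all values are equal
    # The maximum value would land in bin n_bins, so clamp it into the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def empirical_entropy(values, n_bins):
    """Plug-in entropy estimate from relative bin frequencies."""
    counts = Counter(bin_series(values, n_bins))
    n = len(values)
    return shannon_entropy(c / n for c in counts.values())


# Toy closing prices (made-up numbers, for illustration only).
prices = [100.0, 101.5, 99.8, 102.3, 103.1, 101.0, 104.2, 105.0]
h = empirical_entropy(prices, n_bins=4)
print(f"Estimated entropy with 4 bins: {h:.4f} nats")
```

With k bins the estimate can never exceed log k, which is one reason the bin count has to be reported alongside any entropy value.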

2.2. Joint Entropy

The joint entropy is the uncertainty measure associated with the joint probability distribution of two discrete random variables, say X and Y, and is defined as

$$H(X, Y) = -\sum_{x}\sum_{y} p(x, y) \log p(x, y),$$

where $p(x, y)$ is the joint probability mass function of the random variables X and Y.

2.3. Conditional Entropy

The conditional entropy, also called the equivocation, of the random variable Y given X is defined as

$$H(Y \mid X) = -\sum_{x}\sum_{y} p(x, y) \log p(y \mid x),$$

where $p(y \mid x)$ is the conditional probability of the random variable Y given X = x.

The joint and conditional entropy measures satisfy the following property (the chain rule):

- $H(X, Y) = H(X) + H(Y \mid X) = H(Y) + H(X \mid Y)$.
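The chain rule $H(X, Y) = H(X) + H(Y \mid X)$ linking joint and conditional entropy can be checked numerically. The sketch below assumes plug-in (relative frequency) estimates on already discretized series; the function names and the toy bin labels are illustrative only.

```python
import math
from collections import Counter


def entropy(counter, n):
    """Plug-in Shannon entropy (nats) from a Counter of outcomes over n samples."""
    return -sum((c / n) * math.log(c / n) for c in counter.values())


def joint_and_conditional(xs, ys):
    """Return H(X), H(X,Y) and H(Y|X) for two equally long discrete sequences."""
    n = len(xs)
    count_x = Counter(xs)
    count_xy = Counter(zip(xs, ys))
    h_x = entropy(count_x, n)
    h_xy = entropy(count_xy, n)
    # H(Y|X) = -sum_{x,y} p(x,y) log p(y|x), with p(y|x) = p(x,y) / p(x).
    h_y_given_x = -sum(
        (c / n) * math.log(c / count_x[x]) for (x, _), c in count_xy.items()
    )
    return h_x, h_xy, h_y_given_x


# Toy discretized series (bin labels are made up for illustration).
xs = [0, 0, 1, 1, 2, 2, 2, 0]
ys = [0, 1, 1, 1, 2, 2, 0, 0]
h_x, h_xy, h_y_given_x = joint_and_conditional(xs, ys)
print(f"H(X,Y) = {h_xy:.4f}, H(X) + H(Y|X) = {h_x + h_y_given_x:.4f}")
```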

2.4. Mutual Information

The mutual information, also called the transinformation, of Y relative to X is defined as

$$I(X; Y) = H(Y) - H(Y \mid X).$$

This information measure has the following properties:

- Non-negative: $I(X; Y) \geq 0$.

- Symmetric: $I(X; Y) = I(Y; X)$.

It is easy to see that

$$I(X; Y) = \sum_{x}\sum_{y} p(x, y) \log \frac{p(x, y)}{p(x)\, p(y)},$$

where $p(x)$ and $p(y)$ are the marginal probability distributions of X and Y, respectively, and $p(x, y)$ is the joint probability mass function of X and Y.
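A minimal sketch of the double-sum form of mutual information, assuming plug-in probability estimates from paired, already discretized observations. The function name and the toy sequences are hypothetical; the three checks illustrate symmetry, independence, and the fact that a variable shares all of its entropy with itself.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in nats from paired discrete observations."""
    n = len(xs)
    count_x, count_y = Counter(xs), Counter(ys)
    count_xy = Counter(zip(xs, ys))
    # I(X;Y) = sum_{x,y} p(x,y) log[ p(x,y) / (p(x) p(y)) ];
    # with counts, the ratio inside the log equals c * n / (c_x * c_y).
    return sum(
        (c / n) * math.log(c * n / (count_x[x] * count_y[y]))
        for (x, y), c in count_xy.items()
    )


# Toy discretized series (made-up bin labels).
a = [0, 0, 1, 1, 2, 2, 2, 0]
b = [0, 1, 1, 1, 2, 2, 0, 0]

i_ab = mutual_information(a, b)
i_ba = mutual_information(b, a)

# Independent pair: every (x, y) combination is equally frequent.
i_indep = mutual_information([0, 0, 1, 1], [0, 1, 0, 1])

# A variable shares all of its entropy with itself: I(X;X) = H(X).
i_self = mutual_information([0, 0, 1, 1], [0, 0, 1, 1])
print(i_ab, i_indep, i_self)
```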

Next, following [16], we can express the entropy of a specific stock X in terms of the stock index Y as

$$H(X) = I(X; Y) + H(X \mid Y).$$

Further, for Y as independent and X as dependent variables, the total variance of the stock can be subdivided into two parts: the explained sum of squares, i.e., systematic risk and the residual sum of squares, i.e., specific risk.
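The regression side of this analogy can be sketched as follows: for an ordinary least squares fit with an intercept, the total sum of squares splits exactly into an explained part and a residual part, in the same way that the entropy of X splits into a part shared with Y and a remaining part. The function name and the toy values are hypothetical and only illustrate the identity TSS = ESS + RSS.

```python
def ols_decomposition(y_index, x_stock):
    """Fit x_stock = a + b * y_index by least squares and return (TSS, ESS, RSS)."""
    n = len(x_stock)
    mean_y = sum(y_index) / n
    mean_x = sum(x_stock) / n
    s_yy = sum((y - mean_y) ** 2 for y in y_index)
    s_yx = sum((y - mean_y) * (x - mean_x) for y, x in zip(y_index, x_stock))
    b = s_yx / s_yy          # slope
    a = mean_x - b * mean_y  # intercept
    fitted = [a + b * y for y in y_index]
    tss = sum((x - mean_x) ** 2 for x in x_stock)              # total variation
    ess = sum((f - mean_x) ** 2 for f in fitted)               # explained (systematic)
    rss = sum((x - f) ** 2 for x, f in zip(x_stock, fitted))   # residual (specific)
    return tss, ess, rss


# Toy values for an index (independent) and a stock (dependent); not real data.
index_values = [0.010, -0.005, 0.007, 0.012, -0.009, 0.003]
stock_values = [0.014, -0.002, 0.005, 0.020, -0.015, 0.001]

tss, ess, rss = ols_decomposition(index_values, stock_values)
print(f"TSS = {tss:.6f}, ESS + RSS = {ess + rss:.6f}")
```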

Next, we summarize some of the extensions of mutual information measure as follows:

2.4.1. Relative Mutual Information

The relative mutual information measure is directly comparable to the coefficient of determination ($R^2$), because both estimate the proportion of variability in the dependent variable that is explained by the independent variable [16].
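To make the comparison with the coefficient of determination concrete, the sketch below assumes that the relative mutual information is the share of the dependent variable's entropy accounted for by the independent variable, $I(X; Y) / H(X)$. This definition, the function names, and the toy bin labels are assumptions made for illustration and are not quoted from the paper.

```python
import math
from collections import Counter


def entropy(labels):
    """Plug-in Shannon entropy (nats) of a sequence of discrete labels."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def mutual_information(xs, ys):
    # I(X;Y) = H(X) + H(Y) - H(X,Y)
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


def relative_mutual_information(x_dep, y_indep):
    """Assumed definition: share of H(X) accounted for by Y, I(X;Y) / H(X)."""
    return mutual_information(x_dep, y_indep) / entropy(x_dep)


def r_squared(x_dep, y_indep):
    """Coefficient of determination of the simple linear regression of X on Y."""
    n = len(x_dep)
    mx, my = sum(x_dep) / n, sum(y_indep) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(x_dep, y_indep))
    sxx = sum((x - mx) ** 2 for x in x_dep)
    syy = sum((y - my) ** 2 for y in y_indep)
    return sxy ** 2 / (sxx * syy)


# Toy bin labels for a stock (dependent) and an index (independent).
stock_bins = [0, 0, 1, 1, 2, 2, 1, 0]
index_bins = [0, 0, 1, 1, 2, 2, 2, 0]

rmi = relative_mutual_information(stock_bins, index_bins)
r2 = r_squared(stock_bins, index_bins)
print(f"RMI = {rmi:.3f}, R^2 = {r2:.3f}")
```

Both quantities lie between 0 and 1, which is what makes a side-by-side reading possible.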

2.4.2. Normalized Mutual Information

For the two discrete random variables say, X and Y, the normalized mutual information is defined as:

In addition to capturing strong linear relationships, this non-linear approach based on mutual information captures the non-linearity found in the volatile market data which could not be captured by the approach based on correlation coefficient [17].
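The point about non-linearity can be illustrated with a small example in which Y is a deterministic but non-linear function of X. The sketch assumes one common normalization, $I(X; Y) / \sqrt{H(X) H(Y)}$, which may differ from the normalization used in the paper; the function names and data are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Plug-in Shannon entropy (nats) of a sequence of discrete labels."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def normalized_mutual_information(xs, ys):
    """Assumed normalization: I(X;Y) / sqrt(H(X) * H(Y))."""
    h_x, h_y = entropy(xs), entropy(ys)
    mi = h_x + h_y - entropy(list(zip(xs, ys)))
    return mi / math.sqrt(h_x * h_y)


def pearson(xs, ys):
    """Pearson linear correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Y = X^2 on a symmetric grid: fully dependent, but not linearly.
xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x * x for x in xs]

r = pearson(xs, ys)
nmi = normalized_mutual_information(xs, ys)
print(f"Pearson r = {r:.3f}, NMI = {nmi:.3f}")
```

Here the linear correlation vanishes because the positive and negative halves cancel, while the mutual-information-based measure stays well above zero.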

2.4.3. Global Correlation Coefficient

This standardized measure of mutual information can be used as a predictability measure based on the empirical probability distribution, and is defined as

$$\lambda(X, Y) = \sqrt{1 - e^{-2 I(X; Y)}}.$$

It ranges from 0 to 1 and records the linear as well as the nonlinear dependence between two discrete random variables, say X and Y, and is easily comparable with the linear correlation coefficient [18].
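The comparability with the linear correlation coefficient can be seen in a simple special case. The sketch below assumes the standard form $\lambda = \sqrt{1 - e^{-2I}}$ with $I$ in nats, and uses the known closed form $I = -\tfrac{1}{2}\ln(1 - \rho^2)$ for a bivariate normal pair with correlation $\rho$, for which $\lambda$ reduces to $|\rho|$. The function names are hypothetical, and the Gaussian case is an illustration rather than part of the paper's analysis.

```python
import math


def global_correlation(mi_nats):
    """Global correlation coefficient: sqrt(1 - exp(-2 I)), with I in nats."""
    return math.sqrt(1.0 - math.exp(-2.0 * mi_nats))


def gaussian_mutual_information(rho):
    """Closed-form I(X;Y) = -0.5 * ln(1 - rho^2) for a bivariate normal pair."""
    return -0.5 * math.log(1.0 - rho ** 2)


for rho in (0.0, 0.3, 0.6, 0.9):
    lam = global_correlation(gaussian_mutual_information(rho))
    print(f"rho = {rho:.1f} -> lambda = {lam:.4f}")
```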

2.5. Variation of Information

This information measure, denoted as $VI(X, Y)$, is a true distance between two discrete random variables, say X and Y, and as such it obeys the triangle inequality [19]. Furthermore, it is closely related to the mutual information measure and is given as

$$VI(X, Y) = H(X) + H(Y) - 2 I(X; Y).$$

It is easy to see that

$$VI(X, Y) = H(X, Y) - I(X; Y),$$

where $H(X)$ is the entropy of the discrete random variable X, $I(X; Y)$ is the mutual information between the two discrete random variables X and Y, and $H(X, Y)$ is the joint entropy of X and Y.
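A minimal sketch of the variation of information as a distance, assuming the form $VI(X, Y) = H(X) + H(Y) - 2 I(X; Y)$ and plug-in estimates on discretized series. The toy sequences and function names are hypothetical; the checks illustrate that the distance of a variable to itself is zero and that the triangle inequality holds on this example.

```python
import math
from collections import Counter


def entropy(labels):
    """Plug-in Shannon entropy (nats) of a sequence of discrete labels."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def variation_of_information(xs, ys):
    """VI(X,Y) = H(X) + H(Y) - 2 I(X;Y), equivalently H(X,Y) - I(X;Y)."""
    h_x, h_y = entropy(xs), entropy(ys)
    h_xy = entropy(list(zip(xs, ys)))
    mi = h_x + h_y - h_xy
    return h_x + h_y - 2.0 * mi


# Three toy discretized series (made-up bin labels).
a = [0, 0, 1, 1, 2, 2, 2, 0]
b = [0, 1, 1, 1, 2, 2, 0, 0]
c = [1, 1, 0, 2, 2, 0, 0, 1]

d_ab = variation_of_information(a, b)
d_bc = variation_of_information(b, c)
d_ac = variation_of_information(a, c)
print(f"VI(a,b) = {d_ab:.4f}, VI(b,c) = {d_bc:.4f}, VI(a,c) = {d_ac:.4f}")
```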

3. Data and Result

The main objective of this paper is to show that information theoretic measures present a decent solution to the problem of dependence in the financial series data. For this purpose, we considered 14 time series comprising the daily closing price of the international stock indices and their selective stocks which were traded continuously between 1 January 2001 and 31 December 2018 (about 4433 observations in each time series).

To apply the entropic measures and examine their properties, we obtained eighteen years of daily closing price data for the international stock market indices IBOVESPA (Brazil), Hang Seng (Hong Kong), SSE Composite (China), Dow Jones Industrial Average (United States), CAC 40 (France), DAX (Germany), NASDAQ (United States), Nifty 50 (India) and BSE sensex (India), accessed on 1 August 2020 from in.finance.yahoo.com, a free online financial data platform. Furthermore, we compare the two Indian market indices, NIFTY 50 and S&P BSE sensex, with their common stocks. During the selection process, the daily closing price data were filtered to select stocks listed in their respective indices; because of inadequate data for the remaining stocks, five stocks were selected for the study.

Our main emphasis is on determining the dependence level between each stock and its stock index, i.e., to select a stock which is more dependent on its relative index and this has been estimated by the information theoretic measures. Further, to compare two different indices with their stocks and measure their level of dependence, the concept of mutual information is used as analogous to covariance and normalized mutual information is calculated akin to the Pearson’s coefficient of correlation.

4. Tables and Figures

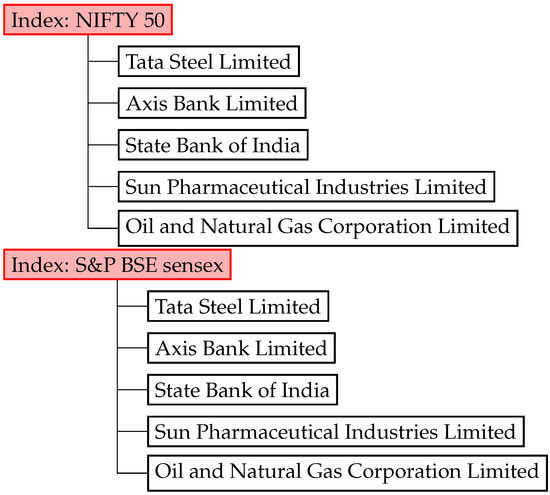

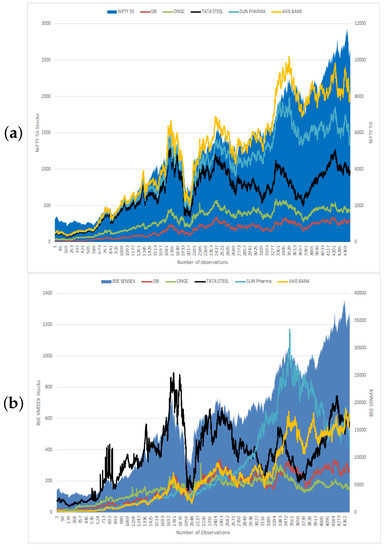

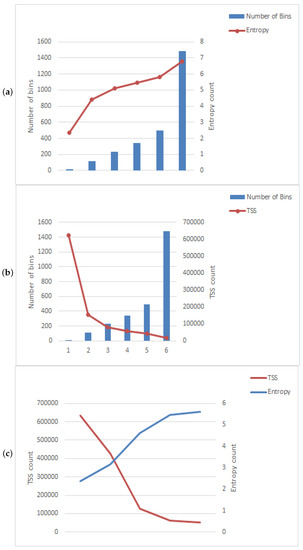

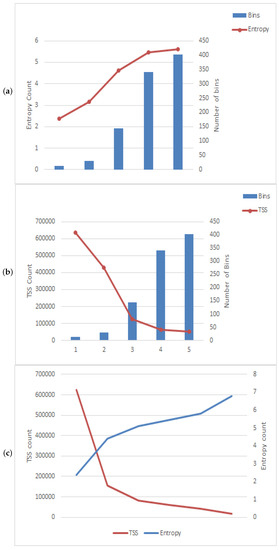

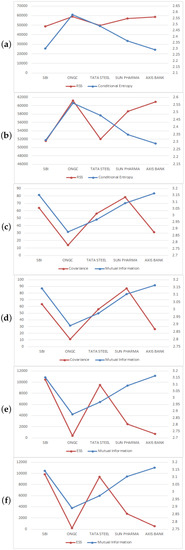

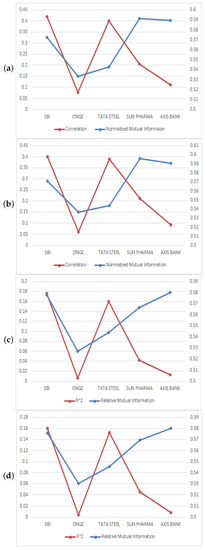

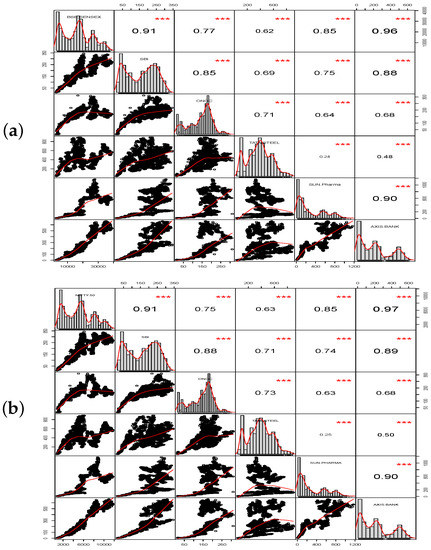

Figure 1 presents the allocation of the aforementioned Indian stock indices with their selected stocks. Figure 2 gives the details of the distribution of the aforementioned international stock indices, and Figure 3 gives the nature of the indices with their stocks. Next, Figure 4, Figure 5, Figure 6 and Figure 7 give a visual comparison of the information and statistical measures. Furthermore, in Figure 4 and Figure 5, if we increase the bin counts, the entropy and TSS measures become inversely symmetric. The equivalences between statistical measures and information theoretic measures are presented in Table 1. From the data given in Table 2, Table 3, Table 4 and Table 5, by using the statistical software R (RStudio© 2021.09.1, 2009–2021 RStudio, Build 372, PBC, 250 Northern Ave, Boston, MA 02210), we present four correlation matrix plots of the above-mentioned international indices with their stocks; refer to Figure 8 and Figure 9.

Figure 1.

Distribution of Indian market indices with their stocks.

Figure 2.

Distribution of International market indices.

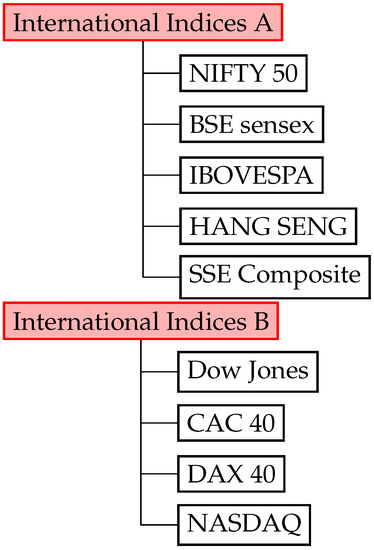

Figure 3.

Nature of indices with their stocks. (a) NIFTY 50. (b) BSE SENSEX.

Figure 4.

Comparison of Shannon entropy and total sum of squares of BSE sensex stocks. (a) Entropy with bins. (b) TSS with bins. (c) Entropy and TSS.

Figure 5.

Comparison of Shannon entropy and total sum of squares of NIFTY 50 stocks. (a) Entropy with bins. (b) TSS with bins. (c) Entropy and TSS.

Figure 6.

Comparison of information and statistical measures of NIFTY 50 and BSE sensex stocks. (a) Conditional entropy and RSS with Nifty stocks. (b) Conditional entropy and RSS with BSE sensex stocks. (c) Mutual information and covariance with Nifty stocks. (d) Mutual information and covariance with BSE sensex stocks. (e) Mutual information and ESS with Nifty stocks. (f) Mutual information and ESS with BSE sensex stocks.

Figure 7.

Comparison of information and statistical measures of NIFTY 50 and BSE sensex stocks. (a) Correlation and normalized mutual information with Nifty stocks. (b) Correlation and normalized mutual information with BSE sensex stocks. (c) Relative mutual information and $R^2$ with Nifty stocks. (d) Relative mutual information and $R^2$ with BSE sensex stocks.

Table 1.

Equivalences between statistical measures and information theoretic measures.

Table 2.

Correlation between NIFTY 50 index and its stocks with = 0.96765.

Table 3.

Correlation between BSE sensex index and its stocks with = 0.96035.

Table 4.

Correlation between International Indices A.

Table 5.

Correlation between International Indices B.

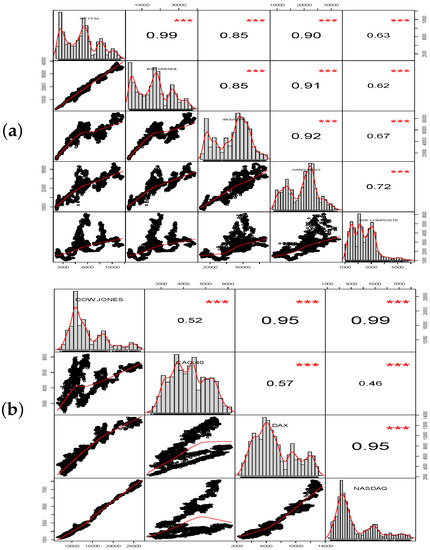

Figure 8.

Correlation matrix plot of BSE sensex and Nifty 50 with their stocks SBI, ONGC, Tata Steel, Sun Pharma, and Axis Bank. (a) BSE sensex. (b) NIFTY 50. The distribution of each stock/index is shown on the diagonal; on the lower diagonal, the bi-variate scatter plots with the fitted higher order degree polynomial are shown and on the upper diagonal, the correlation value plus the significance level (p-values: 0.001) as stars (“***”).

Figure 9.

Correlation matrix plot of indices: Nifty 50 with BSE sensex, IBOVESPA, Hang Seng, and SSE Composite and Dow Jones with CAC 40, DAX, and NASDAQ. (a) Nifty 50. (b) Dow Jones. The distribution of each stock/index is shown on the diagonal; on the lower diagonal, the bi-variate scatter plots with the fitted higher order degree polynomial are shown and on the upper diagonal, the correlation value plus the significance level (p-values: 0.001) as stars (“***”).

We examined the choice of bin counts for the calculation of the statistical and information theoretic measures; see Table 6, Table 7, Table 8, Table 9, Table 10 and Table 11. In general, the number of bins should be selected on the basis of the number of observations available in the time series. In Table 6 and Table 7 we show the total number of bins for the indices NIFTY 50 (342 bins) and BSE sensex (341 bins), respectively. We conclude that BSE sensex is a more uncertain stock index than NIFTY 50 and, furthermore, that Axis Bank is the underlying stock that is more dependent on its index than the other stocks.
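The sensitivity to the bin count can be sketched on synthetic data. The example below assumes equal-width bins and plug-in entropy estimates, and uses bin counts that double at each step so that every partition refines the previous one. The sample is drawn from a standard normal distribution with a fixed seed and is not market data; the function name is hypothetical.

```python
import math
import random
from collections import Counter


def binned_entropy(values, n_bins):
    """Plug-in Shannon entropy (nats) after equal-width binning into n_bins bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    # Clamp the maximum value into the last bin.
    labels = [min(int((v - lo) / width), n_bins - 1) for v in values]
    n = len(values)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


rng = random.Random(0)  # fixed seed so the sketch is reproducible
sample = [rng.gauss(0.0, 1.0) for _ in range(500)]  # synthetic data, not market data

bin_counts = [4, 8, 16, 32, 64, 128]
entropies = [binned_entropy(sample, k) for k in bin_counts]

for k, h in zip(bin_counts, entropies):
    # Each estimate is bounded above by log(k); finer bins push it upward.
    print(f"{k:4d} bins: H = {h:.3f} nats (upper bound {math.log(k):.3f})")
```

Because the estimate keeps growing as the partition is refined, entropy values computed with different bin counts are not directly comparable, and with very many bins relative to the sample size most bins hold only a few observations.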

Table 6.

NIFTY 50 and its stocks.

Table 7.

BSE sensex and its stocks.

Table 8.

International market indices A.

Table 9.

International market indices B.

Table 10.

Comparison of Shannon entropy and total sum of squares of BSE sensex stocks.

Table 11.

Comparison of Shannon entropy and total sum of squares of NIFTY 50 stocks.

In other words, by observing the Axis Bank stock, more information about NIFTY 50 and BSE sensex can be obtained. Furthermore, by observing the ONGC stock, the remaining uncertainty about the aforementioned indices is greater than for the other stocks. We have also come to the conclusion that the global correlation coefficient can be useful if a lower count of bins is considered; otherwise this measure gives a similar output for all stock variables. Thus, in this case, a large count of data bins would not be a reasonable choice for the comparative analysis of information theoretic measures with statistical measures, since even for very small changes in the prices no deeper patterns are to be found. The coefficient of correlation is a long-standing estimator of the degree of statistical dependence; however, mutual information has the advantage of also capturing non-linear dependence. Furthermore, it is evident from Table 7 and Table 8 that the Shannon entropy, mutual information and the conditional entropy perform well in accordance with the systematic risk and the specific risk derived through the linear market model. Furthermore, from Table 8, as per the maximum count of bins (i.e., 403), the index BSE sensex can be used as the appropriate variable for the dependency of the index NIFTY 50.

Furthermore, studies based on other stock market indices and foreign exchange markets can also be carried out to further examine the effectiveness of this approach. One cannot directly visualize the dependency of a stock on its index using technical analysis; therefore, the investor has to work on theoretical aspects too. In Table 9, as per the maximum count of bins (i.e., 1132), the international index DAX can be used as the appropriate variable for the dependency of Dow Jones in comparison with other indices, such as CAC 40 and NASDAQ.

5. Discussion and Conclusions

5.1. Discussion

We find that information measures such as mutual information are an ideal way to expand the financial networks of stock market indices based on statistical measures, while the variation of information presents a different side of the markets but produces networks with troubling characteristics. The proposed methodology is sensitive to the choice of the number of bins into which the daily closing prices are separated, and it requires a large sample size. Furthermore, the comparison of entropy measures with statistical measures of dependent stock indices, such as Shannon entropy vs. variance of a stock, conditional entropy vs. residual sum of squares, relative mutual information rate vs. coefficient of determination, and normalized mutual information vs. correlation, indicates the existence of non-linear relationships that are not identified by the statistical correlation measure and hence presents a different aspect of market analysis.

Information measures such as Shannon entropy and one-parameter entropies, such as the Renyi and Tsallis entropies, have been studied extensively by many researchers for analyzing volatility in financial markets. However, this concept can still be explored further using various two-parameter entropy measures. The non-linear fluctuations in a variety of markets (i.e., stock, futures, currency, commodity, and cryptocurrency) require powerful tools such as entropy for extracting information from the market that is otherwise not visible with standard statistical methods. Further, the information theoretic approach used in this paper can be extended to highly volatile financial markets.

5.2. Conclusions

This paper uses information theoretic concepts such as entropy, conditional entropy, and mutual information and their dynamic extensions to analyze the statistical dependence of the financial market. We checked some equivalences between information theoretic measures and statistical measures normally employed to capture the randomness in financial time series. This approach has the ability to check the non-linear dependence of market data without any specific probability distributions. We used different international stock indices to address our problem. Mutual information and conditional entropy were relatively efficient compared to the measures of statistical dependence. From the results obtained, it is inferred that the information theoretic approach observes the dependency effectively and can be considered to be an efficient approach to study financial markets. In addition, these measures can provide some additional information for financial market investors.

Author Contributions

L.B., Conceptualization, Writing—original draft, Methodology, Software. H.C.T., Conceptualization, Supervision, Writing—review & editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We thank Junzo Watada for their keen interest in this work, and the reviewers for their valuable comments, which led to the improvement of the earlier version of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mantegna, R.N. Hierarchical structure in financial markets. Eur. Phys. J. B Condens. Matter Complex Syst. 1999, 11, 193–197.

2. Tumminello, M.; Matteo, T.D.; Aste, T.; Mantegna, R.N. Correlation based networks of equity returns sampled at different time horizons. Eur. Phys. J. B 2007, 55, 209–217.

3. Gulko, L. The entropy theory of stock option pricing. Int. J. Theor. Appl. Financ. 1999, 2, 331–355.

4. Taneja, H.C.; Batra, L.; Gaur, P. Entropy as a measure of implied volatility in options market. AIP Conf. Proc. 2019, 2183, 110005.

5. Batra, L.; Taneja, H.C. Evaluating volatile stock markets using information theoretic measures. Phys. A Stat. Mech. Its Appl. 2020, 537, 122711.

6. Darbellay, G.A.; Wuertz, D. The entropy as a tool for analysing statistical dependences in financial time series. Phys. A Stat. Mech. Its Appl. 2000, 287, 429–439.

7. Song, L.; Langfelder, P.; Horvath, S. Comparison of co-expression measures: Mutual information, correlation, and model based indices. BMC Bioinform. 2012, 13, 328.

8. Ormos, M.; Zibriczky, D. Entropy-Based Financial Asset Pricing. PLoS ONE 2014, 9, e115742.

9. Mercurio, P.J.; Wu, Y.; Xie, H. An Entropy-Based Approach to Portfolio Optimization. Entropy 2020, 22, 332.

10. Novais, R.G.; Wanke, P.; Antunes, J.; Tan, Y. Portfolio Optimization with a Mean-Entropy-Mutual Information Model. Entropy 2022, 24, 369.

11. Ghosh, A.; Julliard, C.; Taylor, A.P. An Information-Theoretic Asset Pricing Model; London School of Economics: London, UK, 2016.

12. Batra, L.; Taneja, H.C. Portfolio optimization based on generalized information theoretic measures. Commun. Stat. Theory Methods 2020, 1861294.

13. Batra, L.; Taneja, H.C. Approximate-Analytical solution to the information measure’s based quanto option pricing model. Chaos Solitons Fractals 2021, 153, 111493.

14. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

15. Zhou, R.; Cai, R.; Tong, G. Applications of entropy in finance: A Review. Entropy 2013, 15, 4909–4931.

16. Dionisio, A.; Menezes, R.; Mendes, D.A. Entropy and uncertainty analysis in financial markets. arXiv 2007, arXiv:0709.0668.

17. Guo, X.; Zhang, H.; Tian, T. Development of stock correlation networks using mutual information and financial big data. PLoS ONE 2018, 13, e0195941.

18. Soofi, E.S. Information theoretic regression methods. In Applying Maximum Entropy to Econometric Problems (Advances in Econometrics); Emerald Group Publishing Limited: Bingley, UK, 1997; Volume 12, pp. 25–83.

19. Meilă, M. Comparing clusterings—An information based distance. J. Multivar. Anal. 2007, 98, 873–895.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).