Abstract

We present an extended local convergence analysis of a seventh-order derivative-free iterative method for solving Banach-space-valued nonlinear models. Existing studies have used assumptions on derivatives up to the eighth order to demonstrate its convergence. In contrast, our convergence theory uses only the first derivative. Thus, unlike previously derived results, we obtain computable error estimates, a convergence radius, and a uniqueness region for the solution. As a result, we are able to broaden the utility of this efficient method. In addition, the convergence regions of this scheme for solving polynomial equations with complex coefficients are illustrated using the attraction basin approach. The study concludes with the validation of our convergence result on application problems.

1. Introduction

Numerous complicated scientific and engineering phenomena can be modeled by nonlinear equations of the form:

$$F(x) = 0, \qquad (1)$$

where $F: \Omega \subseteq X \to Y$ is Fréchet differentiable, $X$ and $Y$ are complete normed vector spaces, and $\Omega$ is nonempty, convex, and open. Handling such nonlinearity remains a major challenge in mathematics, and analytical solutions to these problems are rarely available. As a result, scientists and researchers often use iterative procedures to approximate the desired solution. Among iterative approaches, Newton’s method is often employed to solve these nonlinear equations. The Steffensen method [1,2] is well known among iterative schemes without derivatives. Sharma and Arora [3] derived the following algorithm, which is a variant of the Steffensen method:

where is the divided difference of order one, and and is an initial point. This algorithm uses one matrix inversion. Furthermore, Wang and Zhang [4] extended method (3) to design a seventh-order method as follows:

In addition, numerous higher-order iterative strategies [3,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23] have been developed and implemented during the past few years. The majority of these papers prove convergence theorems for iterative schemes by imposing requirements on higher-order derivatives. Furthermore, these investigations draw no conclusions about the convergence radius, error distances, or existence-uniqueness region for the solution.
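To make the derivative-free idea concrete, the classical scalar Steffensen iteration mentioned above replaces the derivative in Newton's method with the first-order divided difference $[x_n + f(x_n), x_n; f]$. The following is a minimal sketch of that classical second-order scheme only, not of the seventh-order methods discussed here. The function name `steffensen`, the tolerance, the iteration cap, and the test function $f(x) = x^2 - 2$ are illustrative assumptions.

```python
def steffensen(f, x0, tol=1e-12, max_iter=50):
    """Classical scalar Steffensen iteration (derivative-free, second order).

    Returns the approximate zero and the number of steps taken.
    tol and max_iter are illustrative assumptions, not values from the paper.
    """
    x = x0
    for n in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x, n
        # f(x + fx) - fx equals fx * [x + fx, x; f], so no derivative is needed
        denom = f(x + fx) - fx
        if denom == 0:
            raise ZeroDivisionError("divided difference vanished")
        x = x - fx * fx / denom
    return x, max_iter


def f(x):
    # Hypothetical test function with zero sqrt(2)
    return x * x - 2.0


root, steps = steffensen(f, 1.5)
print(root, steps)
```

Each step costs two function evaluations and no derivative evaluation, which is the property the higher-order variants build on.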

In the study of iterative schemes, it is essential to determine the domain of convergence. In most circumstances, this domain is rather narrow, so it must be extended without making any additional assumptions. Additionally, accurate error distances must be estimated in the convergence analysis of iterative methods. Focusing on these points, we consider a method without derivatives, which is as follows:

where , , and .

However, it is crucial to note that the seventh-order convergence of (4) was established in [24] using conditions on the derivative of order eight, although this scheme is derivative-free. Because the convergence proof relies on higher-order derivatives, the applicability of the scheme is limited. Taking the function , defined on by:

one can observe that the previous conclusion on the convergence of this method [24] fails to hold because of the unboundedness of . Aside from that, the analytical outcome in [24] is not sufficient for the calculation of error and convergence radius. There is no conclusion in [24] that can be drawn concerning the location and uniqueness of . The local analysis results allow one to estimate the error , convergence radius and uniqueness zone for . The findings of local convergence, in particular, are very useful since they provide information on the critical problem of identifying starting points. For this reason, we propose a new extended local analysis of the derivative free method (4). In determining the convergence radius, error , and uniqueness area of , our work is beneficial. This technique can be used to extend the applicability of other methods and relevant topics along the same lines [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,25]. In addition, using the attraction basin approach, the areas where this method can find solutions to complex polynomial equations are shown.

The remainder of this paper is organized as follows. Section 2 derives the theoretical results on the local convergence of method (4). In Section 3, attraction basins for this scheme are shown. The suggested local analysis is verified numerically in Section 4. Finally, several conclusions are offered.

2. Local Convergence

We introduce scalar parameters and functions to deal with the local convergence analysis of scheme (4). Let and .

Suppose function:

- (1)

- has a smallest zero for some function , which is continuous and non-decreasing (function). Let .

- (2)

- has a smallest zero for some (function) and function defined by:

- (3)

- has a smallest zero for some (functions) , , , , and function defined by:

- (4)

- has a smallest zero for some function defined by:

The parameter defined by

is shown next to be a convergence radius for method (4).
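In practice, smallest positive zeros of scalar equations like those in items (1)–(4) are located numerically. The following is a minimal sketch of one way to do this, by scanning for the first sign change and then bisecting. The sample functions, the search interval, and the final step of taking the minimum of the computed zeros are all assumptions made for illustration; they are not the functions or the radius formula of this paper.

```python
import math


def smallest_positive_zero(h, upper, steps=10_000, tol=1e-12):
    """Return the first zero of h in (0, upper], or None if no sign change is seen.

    Scans a uniform grid for the first sign change, then refines by bisection.
    Assumes h is continuous and h(0) != 0.
    """
    a, ha = 0.0, h(0.0)
    dt = upper / steps
    for i in range(1, steps + 1):
        b = i * dt
        hb = h(b)
        if hb == 0.0:
            return b
        if ha * hb < 0.0:
            # Sign change bracketed in [a, b]: bisect down to the tolerance
            while b - a > tol:
                m = 0.5 * (a + b)
                hm = h(m)
                if ha * hm <= 0.0:
                    b = m
                else:
                    a, ha = m, hm
            return 0.5 * (a + b)
        a, ha = b, hb
    return None


# Hypothetical stand-ins for the scalar functions whose zeros are required
samples = [
    lambda t: 2.0 * t - 1.0,
    lambda t: t * math.exp(t) - 1.0,
]
zeros = [smallest_positive_zero(h, upper=2.0) for h in samples]
radius = min(zeros)  # assumption: a radius taken as the smallest of the zeros
print(zeros, radius)
```

The grid scan matters because bisection alone finds some zero in a bracket, not necessarily the smallest one; a finer grid reduces the risk of skipping a pair of closely spaced zeros.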

Let . It follows from the definition of radius that for all :

and

By , we denote the closure of the open ball with center and of radius .

The conditions are needed provided that is a simple solution of equation , and functions are used as previously defined.

Suppose the following:

- (a1)

- for all and some , .

- Let .

- (a2)

- and

- for all some , , and s, z given by the first two substeps of method (4).

- (a3)

- , where .

Next, we show the following local convergence result for method (4) using the preceding notation and the conditions .

Theorem 1.

Suppose that the conditions hold. Then, the iteration generated by method (4) converges to provided that the starting point .

Proof.

Mathematical induction shall be used to show items:

By hypothesis , so (9) holds for . Using (6), (7), and , we obtain:

Estimate (13) with the Banach perturbation lemma on linear invertible operators [5,25] and imply and:

Hence, iterates , and are well-defined by the three substeps of method (4), respectively. Moreover, we can write:

and

Notice that and similarly , so .

Using (6), (8)(for ), (14), (15), and (), we have:

showing and (10) for . Moreover, using (6), (8) (for ), (14), (16), (), and (18), we obtain:

showing and (11) for .

Furthermore, using (6), (8) (for ), (14), (17), (), (18), and (19), we obtain:

showing (9) for and (12) for .

So, items (9) for and (10)–(13) hold for . Then, replacing by in the preceding calculations completes the induction. It then follows from the estimation:

where that and . □

Next, concerning the uniqueness of the solution , we have:

Proposition 1.

Suppose the following:

- (i)

- Point is a simple solution of equation .

- (ii)

- has a smallest solution. Let .

- (iii)

- for all and some function . Let .

Then, the only solution of the equation in the set is .

Proof.

Let for some with . Then, using (ii) and (iii), we have:

so, . In view of the identity , we conclude . □

Remark 1. (a) Let us consider the choices or or the standard definition of the divided difference when [5,8,9,15,16,22]. Moreover, suppose:

and

where functions , are continuous and nondecreasing. Then, under the first or second choice above, it can easily be seen that the hypotheses (A) require for , and the choices as given in Example 1.

(b) Hypotheses (A) can be condensed by using instead the classical, but stronger and less precise, condition for studying methods with divided differences [22]:

for all , where function is continuous and nondecreasing. However, this condition does not give the largest convergence conditions, and all the functions are at least as small as .

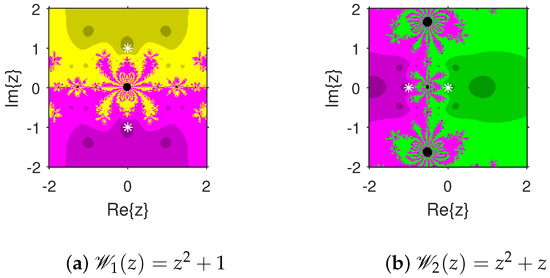

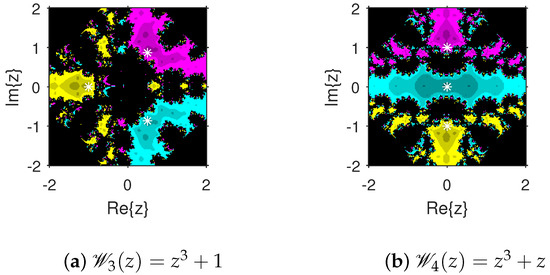

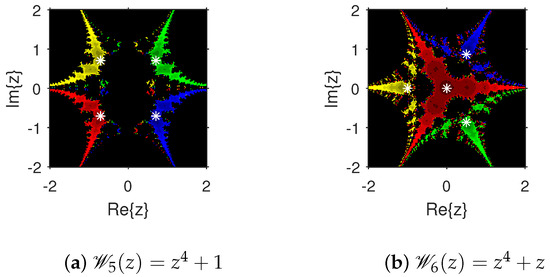

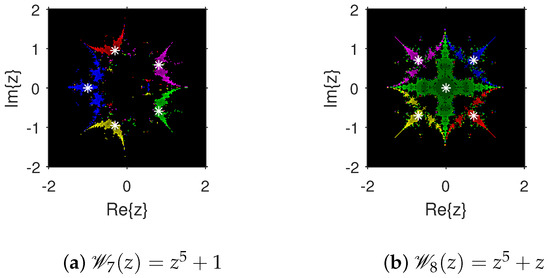

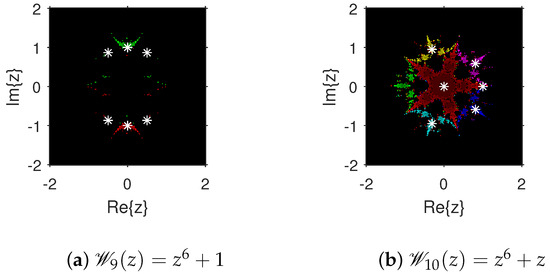

3. Attraction Basins

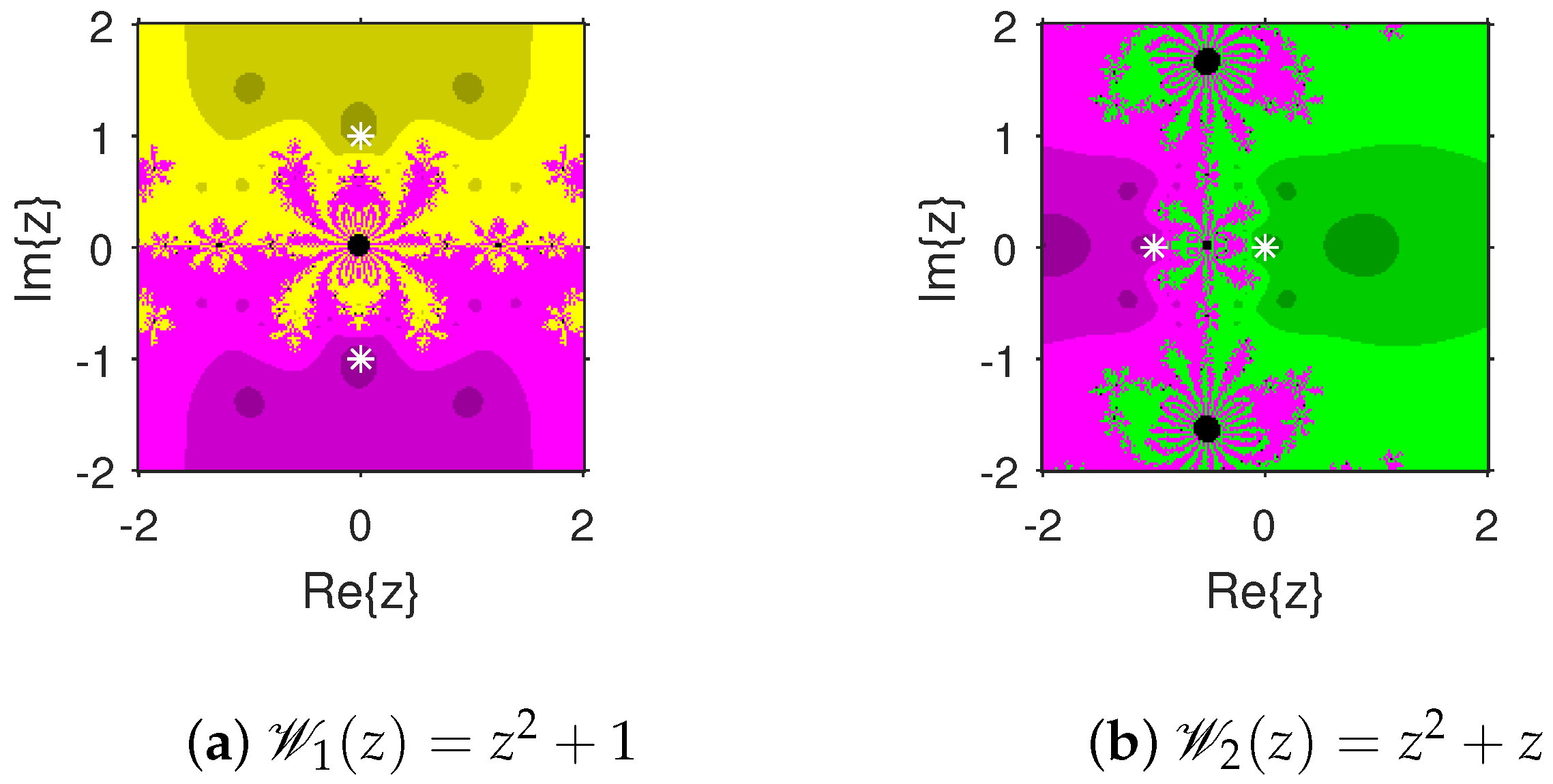

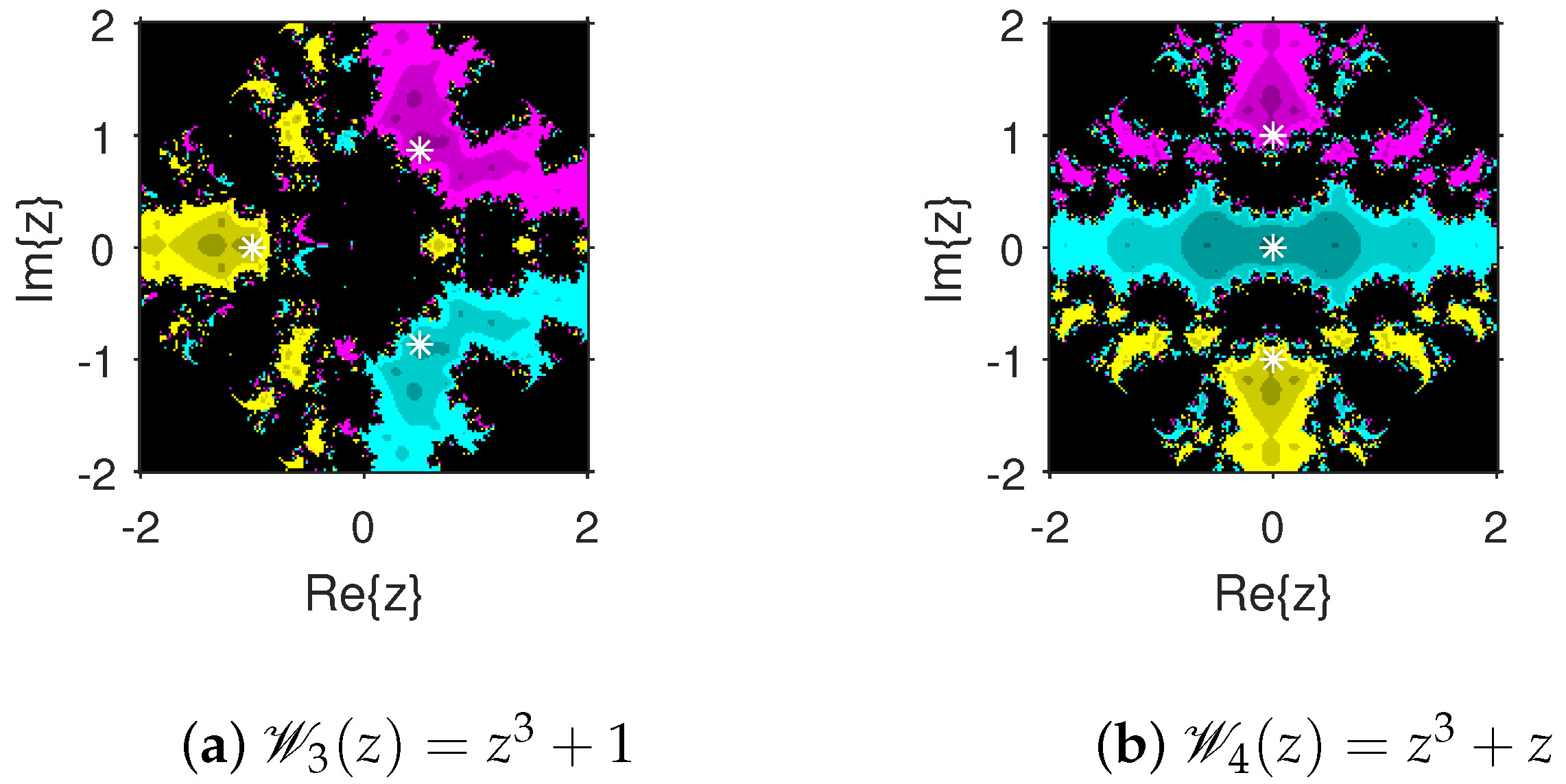

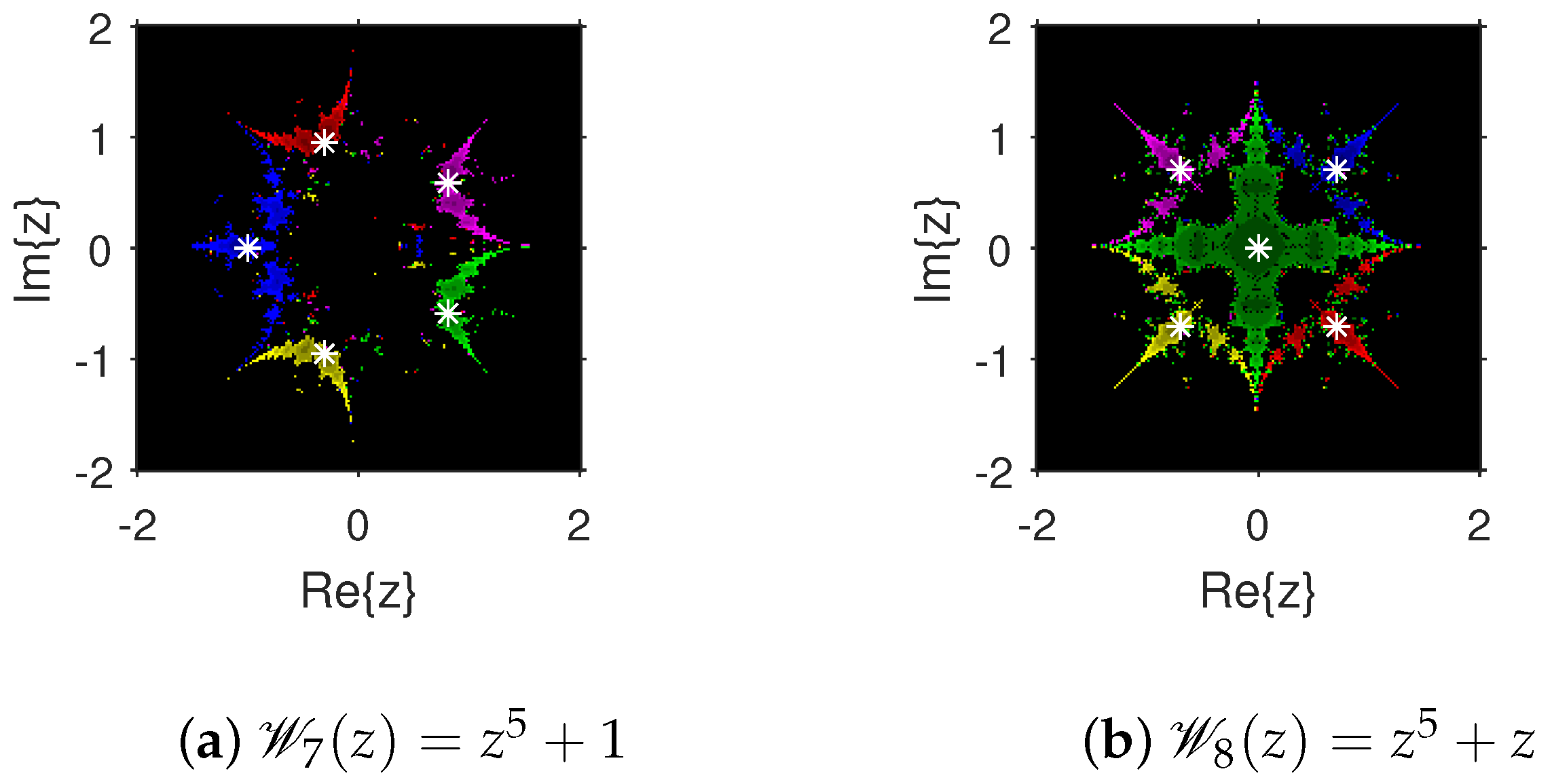

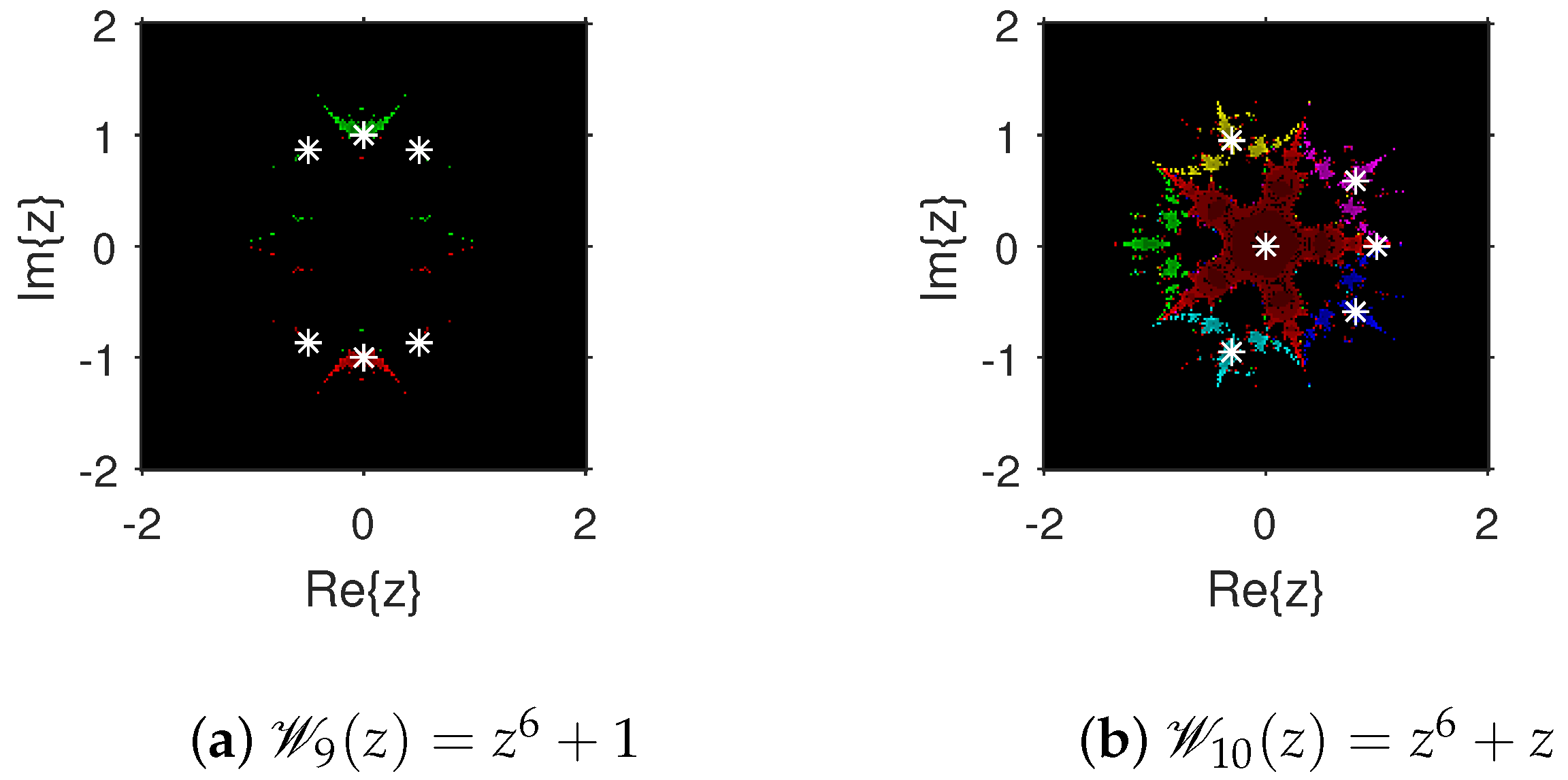

The attraction basin is a valuable geometrical tool for assessing the convergence regions of iteration schemes. With it, we can visualize all starting points from which an iterative procedure converges to a given root, and thus identify which starting points are good choices and which are not. We select the starting point ×, and algorithm (4) is applied to ten polynomials with complex coefficients. The point is a member of the basin of a zero of a test polynomial if , and then is displayed using a specific color associated with . Depending on the number of iterations, we employ light to dark colors for each starting guess . The point is shown in black if it is not a member of the attraction basin of any zero of the test polynomial. The iteration process is stopped by the condition or after a maximum of 100 iterations. MATLAB 2019a is employed to construct the fractal diagrams.

In the first step, we take and to generate the basins related to their zeros. In Figure 1a, yellow and magenta colors indicate the attraction basins of the zeros i and , respectively, of . Figure 1b displays the attraction basins related to the zeros and 0 of in magenta and green colors, respectively. Next, we choose polynomials and . Figure 2a shows the attraction basins associated with the zeros , and of in cyan, yellow, and magenta, respectively. In Figure 2b, the basins of the zeros 0, , and i of are illustrated in cyan, yellow, and magenta colors, respectively. Furthermore, the complex polynomials and of degree four are considered to demonstrate the attraction basins associated with their zeros. In Figure 3a, the basins of the solutions , , and of are displayed in green, blue, red, and yellow zones, respectively. In Figure 3b, convergence to the zeros , , and 0 of the polynomial is presented in yellow, blue, green, and red, respectively. Furthermore, and of degree five are selected. In Figure 4a, magenta, green, yellow, blue, and red colors stand for the attraction basins of the zeros , , , , and , respectively, of . Figure 4b provides the basins related to the solutions , 0, , , and of in blue, green, magenta, yellow, and red colors, respectively. Lastly, and of degree six are considered. In Figure 5a, the basins of the solutions , , , , , and of are painted in yellow, blue, green, magenta, cyan, and red, respectively. Figure 5b gives the basins related to the roots , , 0, , , and of in green, yellow, red, cyan, magenta, and blue colors, respectively.
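The basin construction described above can be sketched in a few lines. Because the formula of method (4), the test polynomials, the plotting region, and the stopping tolerance are not reproduced in this text, the sketch below substitutes the classical scalar Steffensen step, the polynomial $z^2 - 1$, the square $[-2,2] \times [-2,2]$, and a residual tolerance of $10^{-8}$. All of these are assumptions chosen for illustration; only the cap of 100 iterations is taken from the description.

```python
def steffensen_step(f, z):
    # Stand-in iteration (classical Steffensen), NOT method (4) of the paper
    fz = f(z)
    return z - fz * fz / (f(z + fz) - fz)


def classify(f, roots, z0, tol=1e-8, max_iter=100):
    """Return (index of the root reached or -1, iterations used) for start z0."""
    z = z0
    try:
        for k in range(max_iter):
            if abs(f(z)) < tol:
                hits = [i for i, r in enumerate(roots) if abs(z - r) < 1e-4]
                return (hits[0] if hits else -1), k
            z = steffensen_step(f, z)
    except (OverflowError, ZeroDivisionError):
        pass  # diverging or breakdown: treated as belonging to no basin
    return -1, max_iter


def basin_grid(f, roots, lo=-2.0, hi=2.0, n=41):
    """Classify an n-by-n grid of starting points; rows run over the imaginary axis."""
    axis = [lo + (hi - lo) * j / (n - 1) for j in range(n)]
    return [[classify(f, roots, complex(x, y))[0] for x in axis] for y in axis]


def p(z):
    # Hypothetical test polynomial with zeros 1 and -1
    return z * z - 1


roots = [1 + 0j, -1 + 0j]
grid = basin_grid(p, roots)

# Coarse text rendering: '.' means no basin (black), 'a' and 'b' mark the two zeros
for row in grid[::4]:
    print("".join(".ab"[i + 1] for i in row[::2]))
```

The iteration count returned by `classify` is what a plotting routine would map to light or dark shades of the basin color.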

Figure 1. Attraction basins associated with polynomials and .

Figure 2. Attraction basins associated with polynomials and .

Figure 3. Attraction basins associated with polynomials and .

Figure 4. Attraction basins associated with polynomials and .

Figure 5. Attraction basins associated with polynomials and .

4. Numerical Examples

The new convergence result is verified numerically in this section using the following examples.

Example 1 ([6]).

Let and . Consider on Ω for as:

We have: ,

- ,

- ,

- ,

- ,

- ,

- ,

- ,

- ,

- ,

- and

Table 1. Convergence radius for Example 1.


Example 2.

Consider the following nonlinear system of equations:

Let , , , , where , , and . The divided difference is a two-by-two real matrix defined for by , , if and . However, if or , then use for . Similarly, replace by above to define the divided difference provided that and . However, if or , use the zero matrix for . Choose initial points and . Then, after three iterations, the application of method (4) gives the solution for the given system of equations.
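A componentwise first-order divided difference of the kind used in this example can be sketched as follows. This is a minimal sketch under stated assumptions: it uses the standard definition in which column $j$ equals $\big(F(x_1,\dots,x_j,y_{j+1},\dots,y_m) - F(x_1,\dots,x_{j-1},y_j,\dots,y_m)\big)/(x_j - y_j)$, it returns the zero matrix whenever a coordinate pair coincides, and the test system `F` is hypothetical because the system of Example 2 is not reproduced in this text.

```python
def divided_difference(F, x, y):
    """First-order divided difference [x, y; F] as an m-by-m list of rows.

    Assumption: if any x_j equals y_j, the zero matrix is returned as a fallback.
    """
    m = len(x)
    if any(xj == yj for xj, yj in zip(x, y)):
        return [[0.0] * m for _ in range(m)]
    A = [[0.0] * m for _ in range(m)]
    for j in range(m):
        upper = list(x[: j + 1]) + list(y[j + 1:])  # x up to index j, then y
        lower = list(x[:j]) + list(y[j:])           # x before index j, then y
        Fu, Fl = F(upper), F(lower)
        for i in range(m):
            A[i][j] = (Fu[i] - Fl[i]) / (x[j] - y[j])
    return A


def F(v):
    # Hypothetical 2-by-2 test system with solution (1, 2)
    x1, x2 = v
    return [x1 * x1 + x2 - 3.0, x1 + x2 * x2 - 5.0]


x, y = [1.5, 2.5], [1.0, 2.0]
A = divided_difference(F, x, y)

# Secant property: [x, y; F](x - y) should equal F(x) - F(y)
lhs = [sum(A[i][j] * (x[j] - y[j]) for j in range(2)) for i in range(2)]
rhs = [a - b for a, b in zip(F(x), F(y))]
print(A, lhs, rhs)
```

The secant property checked at the end is the defining identity of a first-order divided difference, so it is a convenient sanity test for any implementation.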

5. Conclusions

By eliminating the Taylor series tool from the existing convergence theorem, an extended local convergence analysis of a seventh-order method without derivatives is developed. Unlike earlier results, our convergence result requires only the first derivative. In addition, the error estimates, convergence radius, and region of uniqueness for the solution are calculated. As a result, the usefulness of this effective algorithm is enhanced. The convergence zones of this algorithm for solving polynomial equations with complex coefficients are also shown. This aids in the selection of starting points for obtaining a particular root. Our convergence result is validated by numerical testing.

Author Contributions

Conceptualization, I.K.A. and D.S.; methodology, I.K.A., D.S., C.I.A. and S.K.P.; software, I.K.A., C.I.A. and D.S.; validation, I.K.A., D.S., C.I.A. and S.K.P.; formal analysis, I.K.A., D.S. and C.I.A.; investigation, I.K.A.; resources, I.K.A., D.S., C.I.A. and S.K.P.; data curation, C.I.A. and S.K.P.; writing—original draft preparation, I.K.A., D.S., C.I.A. and S.K.P.; writing—review and editing, I.K.A. and D.S.; visualization, I.K.A., D.S., C.I.A. and S.K.P.; supervision, I.K.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The researchers have no conflict of interest.

References

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

- Traub, J.F. Iterative Methods for Solution of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

- Sharma, J.R.; Arora, H. An efficient derivative free iterative method for solving systems of nonlinear equations. Appl. Anal. Discr. Math. 2013, 7, 390–403.

- Wang, X.; Zhang, T. A family of Steffensen type methods with seventh-order convergence. Numer. Algor. 2013, 62, 429–444.

- Argyros, I.K. Computational Theory of Iterative Methods, Series: Studies in Computational Mathematics; Chui, C.K., Wuytack, L., Eds.; Elsevier: New York, NY, USA, 2007.

- Argyros, I.K.; George, S. On the complexity of extending the convergence region for Traub’s method. J. Complex. 2020, 56, 101423.

- Argyros, I.K. Unified Convergence Criteria for Iterative Banach Space Valued Methods with Applications. Mathematics 2021, 9, 1942.

- Argyros, I.K.; Magreñán, Á.A. A Contemporary Study of Iterative Methods; Elsevier: New York, NY, USA, 2018.

- Argyros, I.K. The Theory and Applications of Iterative Methods, 2nd ed.; Engineering Series; CRC Press: Boca Raton, FL, USA, 2022.

- Argyros, I.K.; Sharma, D.; Argyros, C.I.; Parhi, S.K.; Sunanda, S.K. Extended iterative schemes based on decomposition for nonlinear models. J. Appl. Math. Comput. 2021.

- Behl, R.; Bhalla, S.; Magreñán, Á.A.; Moysi, A. An optimal derivative free family of Chebyshev-Halley’s method for multiple zeros. Mathematics 2021, 9, 546.

- Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. A modified Newton-Jarratts composition. Numer. Algor. 2010, 55, 87–99.

- Ezquerro, J.A.; Hernandez, M.A.; Romero, N.; Velasco, A.I. On Steffensen’s method on Banach spaces. J. Comput. Appl. Math. 2013, 249, 9–23.

- Grau-Sanchez, M.; Grau, A.; Noguera, M. Frozen divided difference scheme for solving systems of nonlinear equations. J. Comput. Appl. Math. 2011, 235, 1739–1743.

- Hernandez, M.A.; Rubio, M.J. A uniparametric family of iterative processes for solving nondifferentiable equations. J. Math. Anal. Appl. 2002, 275, 821–834.

- Kantorovich, L.V.; Akilov, G.P. Functional Analysis; Silcock, H.L., Translator; Pergamon Press: Oxford, UK, 1982.

- Liu, Z.; Zheng, Q.; Zhao, P. A variant of Steffensen’s method of fourth-order convergence and its applications. Appl. Math. Comput. 2010, 216, 1978–1983.

- Magreñán, Á.A.; Argyros, I.K.; Rainer, J.J.; Sicilia, J.A. Ball convergence of a sixth-order Newton-like method based on means under weak conditions. J. Math. Chem. 2018, 56, 2117–2131.

- Magreñán, Á.A.; Gutiérrez, J.M. Real dynamics for damped Newton’s method applied to cubic polynomials. J. Comput. Appl. Math. 2015, 275, 527–538.

- Ren, H.; Wu, Q.; Bi, W. A class of two-step Steffensen type methods with fourth-order convergence. Appl. Math. Comput. 2009, 209, 206–210.

- Sharma, D.; Parhi, S.K. On the local convergence of higher order methods in Banach spaces. Fixed Point Theory 2021, 22, 855–870.

- Sharma, J.R.; Arora, H. A novel derivative free algorithm with seventh order convergence for solving systems of nonlinear equations. Numer. Algor. 2014, 67, 917–933.

- Sharma, J.R.; Arora, H. Efficient derivative-free numerical methods for solving systems of nonlinear equations. Comp. Appl. Math. 2016, 35, 269–284.

- Wang, X.; Zhang, T.; Qian, W.; Teng, M. Seventh-order derivative-free iterative method for solving nonlinear systems. Numer. Algor. 2015, 70, 545–558.

- Rall, L.B. Computational Solution of Nonlinear Operator Equations; Robert E. Krieger: New York, NY, USA, 1979.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).