Abstract

This study investigates how Moroccan users experience and interpret digital content that seems tailored to their personal profiles. While many participants recognize the relevance of such content, their willingness to engage depends less on accuracy and more on whether they feel respected and in control. Based on 629 survey responses and analyzed using Partial Least Squares Structural Equation Modelling (PLS-SEM), the findings indicate that perceived control is the most influential factor in building trust, which in turn strongly predicts engagement. Conversely, when content feels intrusive or when users have concerns about how their data is managed, trust declines—even if the targeting appears accurate. These results imply that people do not simply react to what they receive but also to the manner in which it is delivered and explained. In a rapidly digitizing environment like Morocco, where awareness of data rights remains limited, trust and transparency emerge as essential foundations for meaningful digital interaction. The study provides practical insights for marketers and platforms aiming to design targeting strategies that are not only effective but also ethically responsible and aligned with users’ expectations.

1. Introduction

Digital marketing has experienced a profound shift in recent years, largely propelled by developments in artificial intelligence (AI), data analytics, and real-time user profiling. One of the most disruptive trends in this evolution is extreme content customization. This approach transcends traditional segmentation by dynamically adjusting messages, offers, and experiences to match the unique context of each individual. Unlike earlier forms of targeting that relied on fixed attributes like demographics or purchase history, modern customization draws from live interaction cues, situational factors, and predictive algorithms to deliver content that feels timely and individually relevant [].

For instance, a user browsing sneakers on a mobile app might shortly receive an Instagram ad displaying the exact item, filtered by their shoe size, preferred color, and local stock. This ad might even include a time-sensitive promo code linked to their current location. While such precision can boost engagement and conversion rates, it also invites critical reflection: When does relevance tip into surveillance? How do individuals mentally and emotionally process these tailored interactions? This increasing refinement has led to what scholars refer to as the customization–privacy paradox. On one side, people appreciate efficiency and context-aware suggestions. On the other side, they grow wary of opaque data practices and the intrusive feel of some digital messages. Studies show that when outreach becomes “overly tailored,” recipients may feel exposed or manipulated—even if the message aligns with their actual interests [].

The way audiences respond to this form of marketing depends not only on the perceived usefulness of the message, but also on their trust in the brand, sense of autonomy in the data-use process, and level of concern about information misuse. When users believe they have some agency—for example, through privacy settings or opt-out mechanisms—they are generally more receptive. In contrast, lack of transparency or control options can lead to resistance, eroded trust, and even avoidance behaviors such as ad blocking or negative brand perception [].

Given these tensions, deeper empirical research is urgently needed to understand how users assess and react to context-sensitive advertising in everyday digital settings. This study aims to fill that gap by exploring cognitive and emotional responses to advanced targeting techniques. Using a structured survey and statistical modelling in Python 3.10, we investigate how factors such as message relevance, intrusiveness, data sensitivity, trust, and perceived autonomy influence the likelihood of user engagement or rejection.

Ultimately, this paper contributes to ongoing debates around the ethics and effectiveness of algorithmic targeting. Our findings are intended to guide marketers in designing adaptive strategies that combine innovation with respect for user dignity—systems that are not only driven by data, but also grounded in transparency and human-centered design.

2. Literature Review and Hypotheses

This section reviews the literature on hyper-personalized advertising and formulates the hypotheses of the study; the methodological approach adopted to test the proposed model empirically is outlined in Section 3.

2.1. Hyper-Personalization in Digital Advertising

Tailored content delivery has become a cornerstone of modern digital marketing. By drawing on live interaction data, machine learning, and AI-driven recommendation engines, marketers now fine-tune messages in real time to match each user’s context. This data-rich approach aligns with the broader evolution of marketing analytics, where real-time adaptability and predictive intelligence have become central to personalization strategies []. It surpasses conventional targeting by factoring in dynamic elements such as location, device type, time of day, and even inferred emotional cues, aiming to craft more relevant and engaging user experiences.

This shift reflects a growing emphasis on user-centered strategies in an increasingly crowded and competitive digital landscape. In this context, Madane, Azeroual and Saadaanein (2025) [] have shown how AI-based segmentation can enhance predictive performance, particularly in high-stakes domains like crowdfunding, where personalization is not merely a convenience but a critical factor for success. Their findings highlight the operational relevance of granular profiling, reinforcing the strategic value of smart personalization in digital environments.

While the advantages are apparent from a performance perspective—greater engagement, click-through rates, and potential for conversion—the success of hyper-personalization depends critically on consumer perception. The ability to deliver content that is “just right” relies not only on technical accuracy but also on emotional and psychological resonance with the user. Saura [] argues that as marketing messages become more intimate and tailored, they simultaneously walk a fine line between relevance and intrusion. Therefore, understanding consumer reactions to these strategies becomes central to assessing their long-term viability.

2.2. The Personalization–Privacy Paradox

This ambivalence is encapsulated in what is commonly referred to as the personalization–privacy paradox. Consumers often value tailored services but simultaneously express growing unease about the data practices that make such refinement possible. This anxiety stems from increasing consumer awareness of surveillance capitalism and data commodification, as highlighted by Martin and Murphy (2017), who point out that transparency is often undermined by the commercial logic of data harvesting []. This duality presents a major challenge for marketers, who must strive for relevance while maintaining perceived ethical boundaries. The literature consistently shows that the impact of targeted strategies depends on the extent to which users feel their privacy is acknowledged and safeguarded [].

For instance, Baek and Morimoto [] found that while people may appreciate content that aligns with their interests, their reactions turn negative when the targeting feels excessive or invasive. Ads based on third-party tracking or cross-platform behavior were more likely to be dismissed than those informed by voluntarily shared information. Aguirre et al. [] further highlight that the success of audience targeting is closely linked to users’ sense of control and trust in the brand. When efforts to foster trust fall short, even highly relevant outreach can provoke rejection or lead to avoidance behaviors.

This tension is especially pronounced in mobile and social media settings, where individuals spend substantial time and generate vast amounts of user-specific data, often without full awareness. In these contexts, delivering content that merely reflects user profiles is no longer enough. What increasingly matters is how the targeting is perceived—whether it feels respectful, transparent, and within acceptable personal limits.

2.3. Perceived Intrusiveness and the Role of Trust

Perceived intrusiveness has been defined as the degree to which an advertisement disrupts the user experience or encroaches on personal autonomy []. It is shaped by content, timing, placement, and the device or context in which the message is received. As Bleier and Eisenbeiss [] highlight, intrusiveness is especially detrimental when an ad appears at a time when the consumer is not mentally receptive to marketing, such as during moments of focused or goal-directed browsing.

Trust in the brand or platform delivering the message significantly moderates the effects of perceived intrusiveness. Tucker [] found in a large-scale field experiment that improving privacy controls on social media platforms increased the effectiveness of personalized ads, not because the ads changed, but because the perception of user control and trust improved. Similarly, Goldfarb and Tucker [] show that transparency in how data is used significantly reduces the perception of intrusiveness. Thus, trust acts as a buffer, allowing consumers to tolerate higher levels of personalization without triggering negative emotional or behavioral responses.

2.4. Perceived Control and Consumer Empowerment

One of the most effective ways marketers can address privacy concerns is by giving users a tangible sense of control []. When individuals can adjust data-sharing settings, opt out of tracking, or customize how content is delivered to them, they tend to view targeted communication more favorably. In a study by Xu et al. [], perceived autonomy over data use significantly influenced the acceptance of location-based mobile advertising. Participants who felt empowered in managing their privacy expressed greater satisfaction and were less likely to find the outreach invasive.

These findings highlight the importance of building adaptive systems that are not only precise but also collaborative. Instead of imposing pre-configured targeting, brands may find greater success by involving users in the process—letting them define their own preferences and limits. Providing users with interactive control options increases their sense of agency, which improves receptiveness [].

2.5. Comparative Table of Recent Works

To situate the present study within the broader research landscape, Table 1 provides a comparative summary of recent empirical and conceptual contributions examining personalized advertising and consumer perceptions. As highlighted by Boerman et al. [], the field has rapidly expanded, yet remains fragmented in its treatment of core constructs such as trust, privacy concern, and perceived intrusiveness. The studies selected here reflect varied contexts, methods, and theoretical lenses, offering a foundation for the hypotheses developed in the following section.

Table 1.

Summary of Key Recent Studies on Personalized Advertising and Consumer Perceptions.

2.6. Hypotheses Development

The literature reviewed above reveals a complex and often contradictory relationship between how consumers experience hyper-personalized advertising and their subsequent behavior. On the one hand, tailored ads can feel relevant, practical, and even welcome. On the other hand, they can spark discomfort, distrust, and concerns about privacy. Based on these insights, we outline five key hypotheses that will guide our empirical investigation.

- H1. Perceived personalization positively influences behavioral intention.

When consumers feel that a marketing message is tailored to them, showing products they are genuinely interested in, or matching their past behavior, they tend to react positively. It creates a sense of relevance that cuts through digital noise. Prior studies suggest that well-targeted ads increase attention and make consumers more likely to engage with the brand by clicking, saving, or purchasing [,,].

- H2. Perceived intrusiveness negatively influences behavioral intention.

But relevance has its limits. When ads come across as overly intimate—drawing on sensitive data or appearing at intrusive moments—they may trigger irritation or even outright rejection. This is where finely tuned targeting can backfire. Studies show that when promotional content is perceived as overstepping, it can reduce engagement, undermine trust, and lead to avoidance strategies such as ignoring or blocking ads [,,]. In short, while tailored messaging can draw attention, it can just as easily drive users away when it exceeds their comfort zone.

- H3. Privacy concerns reduce trust in the brand.

One of the main reasons consumers hesitate to engage with personalized content is concern over how their data is collected and used. The fear of being tracked, profiled, or manipulated erodes trust in the company behind the ad. As several studies show, when users feel their privacy is at risk, they become skeptical and cautious, even if the content itself is functional or appealing [,,].

- H4. Perceived control strengthens trust in the brand.

On the flip side, when people feel that they are in control—that they can manage what data is shared, or opt out of targeting—they are more open to personalized experiences. Control fosters trust. Indeed, the presence of opt-out tools or symbolic gestures of agency (e.g., trustmarks or preference settings) can significantly shape user perception, as shown by Aiken and Boush []. It signals that the brand respects the consumer’s autonomy and is not hiding anything. Xu et al. [] and Boerman et al. [] highlight the power of providing users with tools to manage their privacy: it reduces concerns and actively increases trust.

- H5. Trust mediates the relationship between perceived personalization and behavioral intention.

Ultimately, we suggest that trust acts as the bridge between recognition and response. Even when users find a message relevant, they may hold back if they lack confidence in the source. By contrast, when trust is well established, targeted content becomes more convincing and meaningful. In this sense, trust transforms relevance into engagement [,].

Together, these five hypotheses form the framework for our analysis. They reflect a balancing act between relevance and risk, control and concern, that defines how people experience personalized marketing in the digital age.
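One way to read the five hypotheses together is as a simple path model. The block below is an illustrative sketch only, not the estimated model: the abbreviations (PERS for perceived personalization, INTR for perceived intrusiveness, PRIV for privacy concerns, CTRL for perceived control, TRUST for trust in the brand, BI for behavioral intention), the coefficient symbols, and the error terms are notation introduced here for illustration. The paths from PERS to TRUST and from TRUST to BI are assumed because H5 presupposes them.

```latex
\begin{aligned}
\text{TRUST} &= \gamma_1\,\text{PERS} + \gamma_2\,\text{PRIV} + \gamma_3\,\text{CTRL} + \zeta_1 \\
\text{BI}    &= \beta_1\,\text{PERS} + \beta_2\,\text{INTR} + \beta_3\,\text{TRUST} + \zeta_2 \\
\text{indirect effect of PERS on BI} &= \gamma_1\,\beta_3
\end{aligned}
```

Under this reading, H1 corresponds to a positive $\beta_1$, H2 to a negative $\beta_2$, H3 to a negative $\gamma_2$, H4 to a positive $\gamma_3$, and H5 to a positive indirect effect $\gamma_1\beta_3$.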

3. Methodology

To empirically examine the proposed hypotheses, this study employed a structured quantitative methodology combining survey data collection with statistical modeling, ensuring both contextual relevance and methodological rigor.

3.1. Research Design

This study adopts a quantitative, survey-based approach to examine how consumers respond to advanced content targeting in digital environments. A structured questionnaire was designed using previously validated measurement scales from the literature to capture a wide spectrum of perceptions, attitudes, and response tendencies. The survey focused on six core dimensions influencing how users interpret and react to tailored outreach: perceived relevance, brand trust, perceived intrusiveness, privacy concerns, perceived control, and intention to engage.

The selection of Morocco as the empirical setting is both strategic and insightful. Over the past decade, the country has undergone significant digital transformation, characterized by widespread smartphone usage and increased activity on platforms like Facebook, Instagram, and TikTok []. As noted by Zintl et al. [], the digital transformation in MENA countries has redefined state–society relations, often outpacing the evolution of data governance and trust mechanisms in the region. This surge in digital participation has enabled the proliferation of sophisticated advertising practices, including AI-driven targeting based on real-time user interaction.

At the intersection of technological progress and evolving norms, Morocco offers a distinctive environment for investigating tensions between data-driven relevance and user discomfort. Although a legal framework for data protection exists (notably, Law 09-08), public awareness and enforcement mechanisms remain limited. As a result, Moroccan users are frequently exposed to finely tuned ads without fully grasping how their data is gathered or applied—intensifying the friction between perceived usefulness and perceived risk.

By centering on a population navigating both rapid digital adoption and nascent privacy awareness, this research contributes insights that extend beyond Western-centric models. The Moroccan context presents a unique lens through which to explore how adaptive marketing techniques are received in culturally diverse, regulation-light environments—offering practical guidance for practitioners and theoretical value for global research on algorithmic targeting.

3.2. Questionnaire Structure and Measures

The survey instrument was carefully constructed using validated items from prior research, with adaptations to ensure contextual relevance to the current study. The final questionnaire consisted of 29 Likert-type items, divided into six conceptual dimensions.

Each item used a five-point Likert scale, ranging from 1 (Strongly Disagree) to 5 (Strongly Agree), allowing for consistent scaling for statistical analysis. The six dimensions were as follows:

- Perceived Personalization (4 items): Participants were asked to rate the degree to which they felt marketing messages were tailored to their interests and behaviors. Items were adapted from Bleier and Eisenbeiss [].

- Perceived Intrusiveness (5 items): This section assessed whether users found personalized ads disruptive, excessive, or uncomfortable. The items reflected constructs from Li et al. [] and Tucker [].

- Privacy Concerns (4 items): Derived from Xu et al. [], these items assessed users’ levels of concern regarding data collection, surveillance, and third-party data sharing.

- Perceived Control (4 items): This construct assessed whether users felt they had meaningful options to manage their exposure to personalized content. Sample questions addressed opt-out ability and transparency.

- Trust in Brand (4 items): Based on the work of Aguirre et al. [], these items explored user confidence in the ethical behavior and data responsibility of the brand presenting the ad.

- Behavioral Intentions (4 items): Participants reported their likelihood of engaging with ads (e.g., clicking, purchasing, sharing), depending on their reactions to personalization.

The questionnaire also included one short scenario to anchor participants’ responses in a realistic marketing context, followed by demographic questions (e.g., age, gender, and education level) and indicators of digital literacy. The design aimed to balance scientific rigor with clarity and cognitive ease for participants.

3.3. Data Collection Procedure

The survey was deployed online using Google Forms. This platform enabled the quick dissemination of information via academic mailing lists, professional groups on LinkedIn, and social media communities interested in marketing and technology. Participants were not compensated, but they were informed of their rights before beginning the survey. The use of self-administered online surveys has been shown to reduce interviewer bias and improve respondent candor, particularly on privacy-related topics [].

The inclusion criteria required participants to be at least 18 years old and exposed to personalized advertising within the preceding six months. This ensured the sample consisted of individuals with relevant experience for evaluating personalization in digital marketing. All responses were anonymous.

Upon closing the survey, a dataset of 652 responses was obtained. After screening for completeness and duplicate entries, 629 valid cases were retained for analysis. The raw data were then exported into CSV format for analysis in Python.
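The screening step described above can be sketched as follows. This is a minimal, dependency-free illustration, not the authors' script: the column names (`pers_1`, `intr_1`, `respondent_note`), the rule that a duplicate is a row identical in every field, and the sample rows are all assumptions made for the example.

```python
from typing import Any, Dict, List, Sequence


def is_valid_likert(value: Any) -> bool:
    """True if the value is an integer answer on the 1-5 Likert scale."""
    return isinstance(value, int) and not isinstance(value, bool) and 1 <= value <= 5


def screen_responses(rows: List[Dict[str, Any]],
                     item_columns: Sequence[str]) -> List[Dict[str, Any]]:
    """Drop incomplete or out-of-range rows, then drop exact duplicates.

    Assumption: two rows count as duplicates when every field is identical.
    """
    seen = set()
    kept = []
    for row in rows:
        # Completeness check: every Likert item must hold a valid 1-5 answer.
        if not all(is_valid_likert(row.get(col)) for col in item_columns):
            continue
        # Duplicate check on the full row (order-independent key).
        key = tuple(sorted(row.items()))
        if key in seen:
            continue
        seen.add(key)
        kept.append(row)
    return kept


# Hypothetical raw responses, for illustration only.
raw_rows = [
    {"pers_1": 4, "intr_1": 2, "respondent_note": "a"},
    {"pers_1": 4, "intr_1": 2, "respondent_note": "a"},     # exact duplicate
    {"pers_1": None, "intr_1": 3, "respondent_note": "b"},  # missing answer
    {"pers_1": 6, "intr_1": 3, "respondent_note": "c"},     # out of range
    {"pers_1": 5, "intr_1": 1, "respondent_note": "d"},
]
valid_rows = screen_responses(raw_rows, ["pers_1", "intr_1"])
print(f"{len(raw_rows)} raw responses, {len(valid_rows)} retained")
```

With a data-frame library, the same two checks would typically be expressed as a missing-value filter followed by a duplicate filter.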

3.4. Data Analysis Plan

A structured data analysis plan was developed and implemented in Python to prepare for hypothesis testing. This plan ensured methodological transparency and statistical rigor at each stage of the analysis. Four main phases were followed, reflecting best practices in behavioral and marketing research.

- Phase 1: Data Cleaning and Preparation. The raw dataset was first imported using the pandas library. Entries with missing responses or invalid values were excluded to ensure data quality and accuracy. Likert-scale items were verified for consistency, and categorical demographic variables (such as gender, age group, and education level) were encoded using either LabelEncoder or one-hot encoding, depending on the analysis requirements. This step also involved generating preliminary summary statistics to confirm the expected distribution of responses and to identify any anomalies.

- Phase 2: Descriptive Statistics. Descriptive measures were computed to characterize the sample and the distribution of responses across all constructs. These included means, standard deviations, and frequency distributions for the six core variables. Although no inferential conclusions were drawn at this stage, this summary enabled an initial assessment of the scales’ performance and of participant engagement with the questionnaire.

- Phase 3: Reliability Analysis. The internal consistency of each construct was assessed using Cronbach’s alpha, computed via the Pingouin library. Constructs with alpha values greater than 0.70 were considered sufficiently reliable for inclusion in further analyses. This step ensured that each dimension measured a coherent and unified latent concept, supporting the instrument’s validity.

- Phase 4: Correlational Diagnostics. Pearson correlation coefficients were computed to examine the bivariate associations between all key constructs. These coefficients provided insight into potential multicollinearity and the directionality of relationships between independent and dependent variables. A correlation matrix and visualizations (e.g., heatmaps using seaborn) were planned to support interpretation and subsequent model selection.
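To make the reliability check of Phase 3 concrete, the sketch below computes Cronbach's alpha directly from its textbook formula, alpha = k/(k-1) × (1 − Σ item variances / variance of the summed scale), using only the Python standard library. The study reports using a statistics library for this step; the function name, the 0.70 constant name, and the sample scores here are hypothetical and serve only to show the calculation.

```python
from statistics import variance
from typing import List, Sequence

RELIABILITY_THRESHOLD = 0.70  # cut-off stated in Phase 3


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for one construct.

    `responses` holds one row per respondent and one column per item.
    Sample variances (n - 1 denominator) are used throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Variance of each item across respondents.
    item_columns = list(zip(*responses))
    sum_item_variances = sum(variance(col) for col in item_columns)
    # Variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical answers of four respondents to a three-item construct.
trust_items: List[List[int]] = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
]
alpha = cronbach_alpha(trust_items)
print(f"alpha = {alpha:.3f}, reliable: {alpha > RELIABILITY_THRESHOLD}")
```

The function fails with a division by zero if every respondent has the same summed score, which is an acceptable limitation for a sketch but would need handling in production code.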

All analyses were conducted according to conventional statistical thresholds (e.g., p < 0.05 for significance) and were supported by visual representations generated with Matplotlib 3.8.4 (Python Software Foundation, Wilmington, DE, USA) and Seaborn 0.13.2 (Python Software Foundation, Wilmington, DE, USA). The structured, Python-based approach ensured reproducibility and scalability for future research applications.

3.5. Ethical Considerations

The research protocol complied fully with academic ethical standards for human subjects research. Before beginning the questionnaire, participants were provided with an information sheet explaining the nature and purpose of the study. They were also explicitly informed of their rights: participation was voluntary, data would remain anonymous, and they could exit the survey without consequence.

No personally identifiable data was collected. Responses were stored in encrypted digital format, accessible only to the research team. Because the study presented minimal risk and did not involve vulnerable populations, formal ethics board approval was not required, but all precautions were taken to protect respondent confidentiality and dignity.

3.6. Limitations of the Methodological Approach

While the present study offers meaningful insights into user perceptions of personalized advertising, a few methodological considerations merit mention. As with most survey-based research [], the findings are based on self-reported data, which—despite careful questionnaire design and anonymity—may be subject to interpretation biases or selective recall.

The study also adopts a cross-sectional approach, which captures perceptions at a specific point in time. Although this is appropriate for exploratory analysis, future research may benefit from longitudinal or experimental designs to observe how these attitudes evolve or respond to specific interventions.

Finally, while the Moroccan context adds a valuable and underexplored perspective, it also reflects particular socio-cultural dynamics that may not fully generalize to other settings. That said, many of the underlying mechanisms—such as the role of trust and perceived control—are consistent with patterns observed globally.

Overall, these considerations do not detract from the study’s relevance but rather suggest directions for deeper investigation in subsequent research.

4. Results and Discussion

The following section presents the empirical results of the PLS-SEM analysis and discusses their implications in light of the proposed hypotheses and existing literature.

4.1. Descriptive Statistics of the Sample

The final dataset consists of responses from 629 individuals, after a cleaning process that removed incomplete or inconsistent submissions. The sociodemographic profile of the participants reflects a predominantly young and digitally active population, which closely aligns with the typical target audience for algorithmically tailored online content.

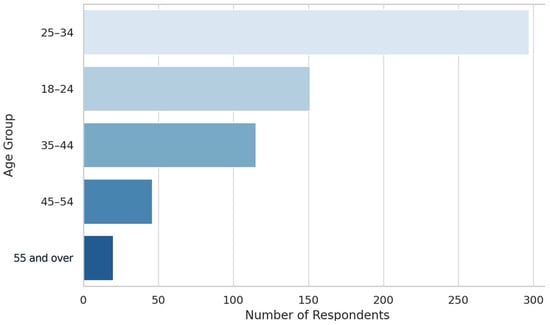

In terms of age distribution, there is a strong representation of individuals between 25 and 34 years old, who make up nearly half of the sample. Respondents aged 18–24 and 35–44 are also well represented, while older groups (45–54 and 55+) are present but less numerous. This skew toward younger age brackets is expected in studies addressing digital engagement. Figure 1 illustrates the age distribution of the respondents across different groups.

Figure 1.

Age Group Distribution. Bars use different shades of blue only to distinguish the five age categories (18–24, 25–34, 35–44, 45–54, 55+); the colors themselves do not encode additional information. Values indicate the number of respondents in each age group.

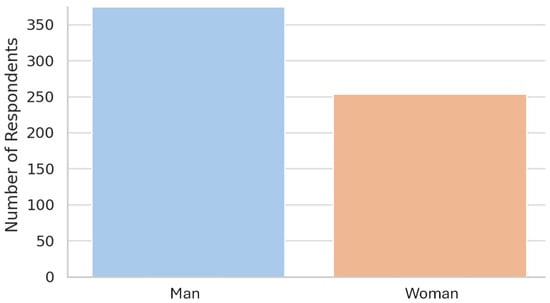

As shown in Figure 2, the sample consists of 58% male and 42% female respondents. While not perfectly balanced, this distribution is reasonably close to national internet usage patterns in Morocco, especially among urban populations with intense exposure to online platforms and advertising technologies []. Most respondents hold at least a university-level qualification. Specifically, 35% reported completing a master’s-level degree (Bac+5), 20% a bachelor’s-level degree (Bac+3 or Bac+4), and 10% postgraduate or doctoral studies. This educational profile suggests a relatively high level of cognitive literacy regarding online privacy, data use, and algorithmic targeting.

Figure 2.

Gender Distribution. Blue represents male respondents and salmon represents female respondents. Bars show the number of respondents in each group.

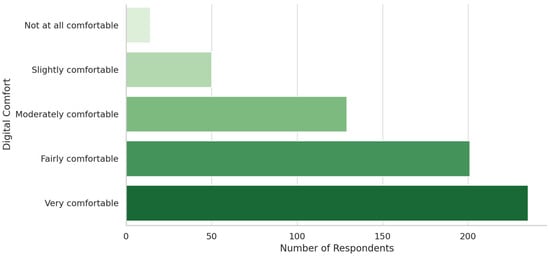

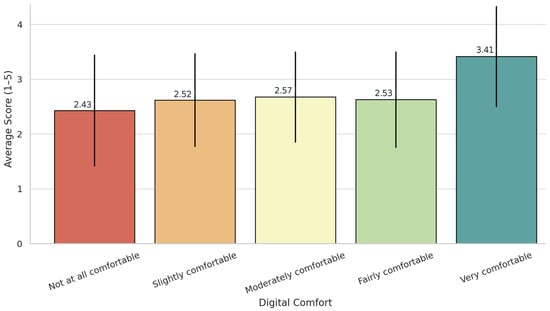

Concerning digital comfort, the results confirm a high level of familiarity with digital tools. As Figure 3 shows, approximately 70% of respondents declared themselves to be “very comfortable” or “fairly comfortable” navigating digital environments, while only a small fraction indicated low confidence in using online platforms. This variable is particularly relevant when interpreting how individuals perceive the relevance of personalized content, their control over it, and any discomfort it causes.

Figure 3.

Digital Comfort Level. Bars progress from light green (“Not at all comfortable”) to dark green (“Very comfortable”) in order to distinguish the five comfort categories; the colors themselves do not carry additional meaning. Values indicate the number of respondents in each category.

Finally, exposure to tailored ads appears to be frequent across the sample. Over two-thirds of participants reported encountering such content either “very often” or “often” on social media, search engines, and e-commerce platforms. This high level of exposure provides a meaningful foundation for assessing how people process, accept, or resist targeted promotional messages.

These descriptive elements offer a robust contextual grounding for the following analyses. The sample represents a digitally engaged segment of Moroccan consumers, which is highly relevant for exploring emerging perceptions of algorithmic relevance, data ethics, and online communication practices in non-Western markets.

4.2. Average Responses by Construct

To assess participant attitudes toward key aspects of algorithmic marketing, we examined average scores across the six measured constructs.
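As a rough illustration of how such construct averages can be obtained, the sketch below first averages each respondent's items into a composite score and then averages those composites across the sample. The construct-to-item mapping, the column names, and the two sample respondents are hypothetical; the paper does not list the actual item identifiers.

```python
from statistics import mean
from typing import Dict, List, Sequence

# Hypothetical mapping from construct to questionnaire columns.
CONSTRUCT_ITEMS: Dict[str, List[str]] = {
    "perceived_personalization": ["pers_1", "pers_2"],
    "privacy_concerns": ["priv_1", "priv_2"],
}


def construct_means(rows: Sequence[Dict[str, int]],
                    construct_items: Dict[str, List[str]]) -> Dict[str, float]:
    """Average score per construct on the 1-5 Likert scale."""
    averages = {}
    for construct, columns in construct_items.items():
        # Composite score per respondent: mean of that construct's items.
        composites = [mean([row[col] for col in columns]) for row in rows]
        # Sample-level average of the composites.
        averages[construct] = mean(composites)
    return averages


# Two made-up respondents, for illustration only.
sample_rows = [
    {"pers_1": 3, "pers_2": 4, "priv_1": 5, "priv_2": 4},
    {"pers_1": 4, "pers_2": 3, "priv_1": 4, "priv_2": 5},
]
for name, score in construct_means(sample_rows, CONSTRUCT_ITEMS).items():
    print(f"{name}: {score:.2f}")
```

Averaging within respondents first keeps every participant equally weighted even when constructs have different numbers of items.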

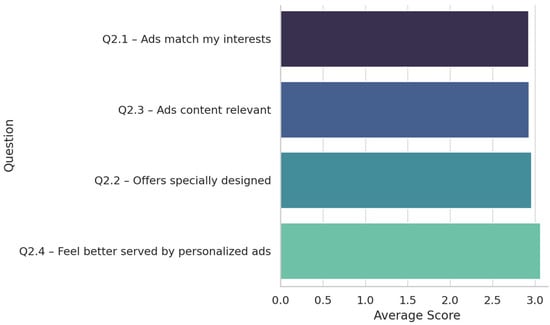

Perceived personalization was rated moderately positively. Most respondents agreed that the ads they received reflected their browsing habits and preferences, with an average score above 3.5. This indicates a general sense that content relevance is being achieved, though not enthusiastically embraced. Figure 4 presents the mean responses for items related to perceived ad relevance. Scores slightly above the neutral midpoint suggest moderate recognition of targeting accuracy, without strong endorsement:

Figure 4.

Q2–Average Scores for Perceived Personalization. The four items receive similar, moderately positive ratings (around 3.0). Different shades of blue/green are used only to differentiate the items visually and do not represent an additional scale.

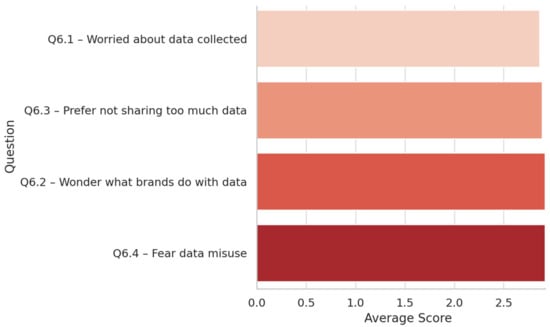

Privacy concerns, in contrast, scored consistently high. Many participants expressed strong unease about how their personal data is handled, especially regarding transparency and consent. Items in this dimension averaged above 4.0, suggesting a prevailing skepticism about digital surveillance and information sharing. Figure 5 displays the average scores for items measuring privacy-related apprehensions. Elevated ratings across all items highlight a widespread discomfort with how data is collected and used by digital platforms:

Figure 5.

Q6–Average Scores for Privacy Concerns. The four items show similarly high levels of concern, with only minor variation between questions. Different shades of red are used only to distinguish the items from one another; they do not indicate an additional scale.

Perceived intrusiveness yielded mixed results. Many respondents noted discomfort with ads that seemed too persistent or invasive. Scores ranged from 3.5 to 4.0, pointing to a low-grade but ongoing sense of being watched—particularly in relation to unsolicited personalization.

Trust in the brands deploying these strategies varied. While some participants showed a degree of confidence when companies disclosed how data was used, others remained doubtful. Trust appeared to hinge on perceptions of honesty and openness, rather than on the mere usefulness of the content.

Regarding perceived control, responses reflected uncertainty. While some users felt able to manage their privacy settings or limit data sharing, many found the systems too complex or ineffective. This ambivalence indicates that technical empowerment may exist in theory but is not always felt in practice.

Finally, behavioral intention—measured by actions like clicking or avoiding ads—scored in the mid-range. Although some users were receptive to well-matched content, others hesitated, especially when concerns about data use were triggered. This suggests that engagement is shaped by a balance between usefulness, trust, and perceived autonomy.

4.3. Cross-Construct Analyses

To deepen our understanding of the underlying dynamics, we examined how the key dimensions relate to each other across different groups and contexts. Several patterns emerge when comparing average responses and cross-tabulating perceptions with sociodemographic variables.

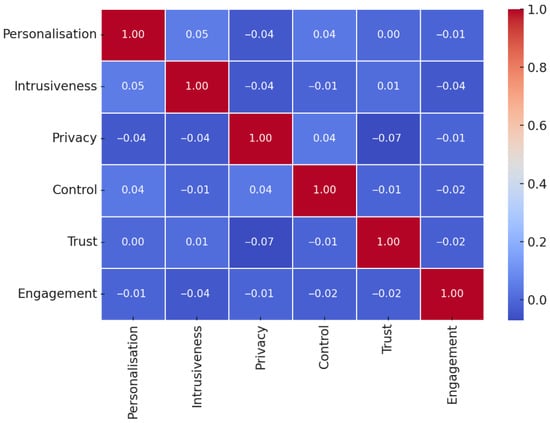

As shown in Figure 6, the heatmap indicates moderate to strong correlations between trust, control, and engagement, whereas privacy and intrusiveness are negatively associated with these constructs. These relationships provide visual support for the hypothesized dynamics tested in the structural model.

Figure 6.

Correlation matrix between latent constructs. Colors represent the Pearson correlation coefficients: darker red cells on the diagonal indicate self-correlation (r = 1.00), while off-diagonal cells range from light to darker blue to show weak to moderate negative correlations. The color bar on the right indicates the scale from −0.1 to 1.0.
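As an illustration of how a matrix like the one in Figure 6 can be produced, the following minimal sketch computes pairwise Pearson coefficients between construct scores. The construct names, the `toy_scores` values, and the helper functions are hypothetical; they are not the survey data or the authors' code, and each score is assumed to be the mean of a respondent's Likert items for that construct.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def correlation_matrix(scores):
    """Return a nested dict of pairwise Pearson correlations."""
    names = list(scores)
    return {a: {b: pearson(scores[a], scores[b]) for b in names} for a in names}


# Hypothetical construct scores for six respondents (1-5 scale), NOT study data.
toy_scores = {
    "trust":         [2.0, 3.5, 4.0, 3.0, 4.5, 2.5],
    "control":       [2.5, 3.0, 4.5, 3.5, 4.0, 2.0],
    "intrusiveness": [4.5, 3.5, 2.0, 3.0, 2.5, 4.0],
}

matrix = correlation_matrix(toy_scores)
for row, cols in matrix.items():
    print(row, {name: round(r, 2) for name, r in cols.items()})
```

In this toy data, trust and control move together while intrusiveness moves against both, which mirrors the direction (not the magnitude) of the pattern described for Figure 6.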

One clear trend concerns the association between digital familiarity and perceived relevance of advertising content. Individuals who reported being highly at ease with online tools tended to assign higher scores to items such as “Ads match my interests.” This suggests that familiarity with digital ecosystems may reinforce the perception that targeted messages are appropriate and aligned with user expectations. Figure 7 illustrates this pattern, showing that respondents who feel more digitally confident tend to perceive a stronger alignment between targeted ads and their personal interests.

Figure 7.

Q2.1–Ad Match Score by Digital Comfort Level. Colors are used only to distinguish the five digital comfort categories (from “Not at all comfortable” to “Very comfortable”) and do not encode additional variables. Error bars indicate variability.

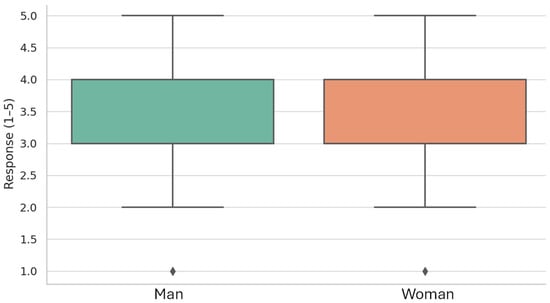

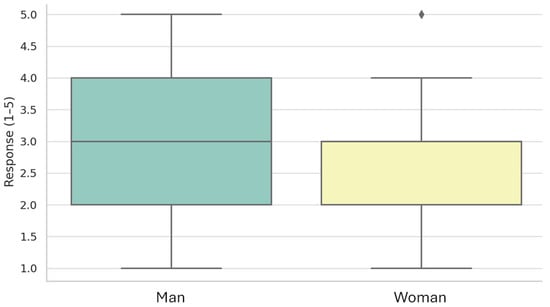

Discomfort with intrusive marketing remains strong across the sample, and is particularly pronounced among female respondents. Women systematically reported higher scores on items linked to tracking and data misuse, such as “I feel like companies go too far” or “I am being monitored.” Figure 8 illustrates this gender-based perceptual gap, showing that women express greater concern about privacy violations compared to men.

Figure 8.

Q3.1–Privacy Violation Perception by Gender. Green indicates men and salmon indicates women. The diamonds below the whiskers denote outliers.
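Figure 8 is a box plot whose caption mentions outliers. A minimal sketch of how such per-group summaries can be computed is given here, assuming the common 1.5 × IQR rule for flagging outliers (the paper does not state which rule was used). The `women` and `men` lists are invented 1 to 5 Likert responses, not the survey data, and `box_summary` is a hypothetical helper.

```python
from statistics import median, quantiles


def box_summary(values):
    """Median, quartiles, and 1.5*IQR outliers for one group of responses."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "median": median(values),
        "q1": q1,
        "q3": q3,
        "outliers": [v for v in values if v < low or v > high],
    }


# Invented responses to an item such as "I feel like companies go too far".
women = [4, 5, 4, 5, 4, 5, 4, 1]
men = [2, 3, 3, 4, 3, 4, 2, 3]

for label, group in (("women", women), ("men", men)):
    print(label, box_summary(group))
```

Comparing the two medians gives a first descriptive view of a group gap; a formal claim of difference would additionally need a significance test suited to ordinal data.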

Trust-related responses reveal meaningful differences. While some participants expressed greater confidence in brands that communicate how data is used, skepticism remained prevalent. Figure 9 highlights that men were more likely than women to associate tailored advertising with increased credibility, although overall trust levels stayed moderate.

Figure 9.

Q4.3–Trust Increase by Personalization by Gender. Green indicates men and pale yellow indicates women. The diamond above the whisker denotes an outlier.

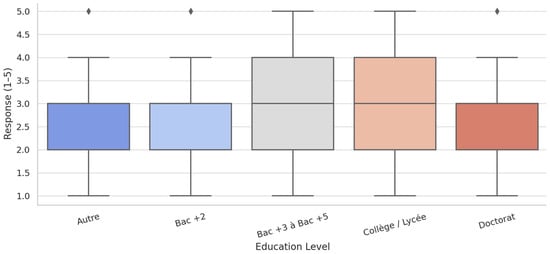

Educational attainment also shapes how people engage with online marketing. Respondents with higher levels of education—particularly those with postgraduate experience—tended to report stronger concerns about transparency and control. For instance, the item “I worry about what brands do with my data” received higher scores among this group, reflecting a more critical stance toward algorithmic targeting. Figure 10 illustrates this pattern, showing significantly greater privacy concerns among respondents with postgraduate degrees.

Figure 10.

Q6.1–Privacy Concerns by Education Level. Responses were collected using French education-system categories. Blue corresponds to “Autre” (Other), light blue to “Bac + 2” (≈2-year postsecondary), grey to “Bac +3 à Bac +5” (≈3–5-year university degree), salmon to “Collège/Lycée” (secondary/high school), and brick/orange to “Doctorat” (Doctorate). The diamonds above the whiskers denote outliers.

Finally, respondents who frequently encounter tailored content are both more likely to engage with it and more likely to express concern. Those reporting daily exposure to targeted ads are significantly more divided: some view them as helpful, others as annoying or excessive. This duality reflects a growing tension between efficiency and intrusion, and it resembles the symptoms of social media fatigue described by Bright, Kleiser, and Grau [], where repeated exposure leads to emotional saturation and reduced responsiveness.

Taken together, these analyses illustrate that perceptions are not uniformly distributed. Both the content itself and individual differences in digital maturity, trust, and perceived autonomy shape attitudes toward tailored content. These findings underscore the need for marketing strategies that are context-aware, culturally sensitive, and transparent in execution.

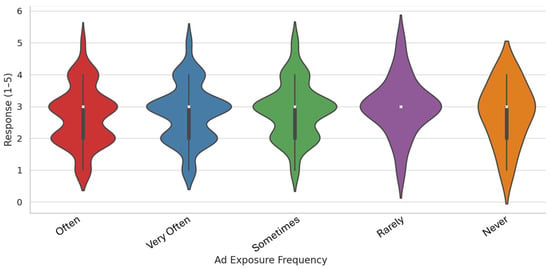

4.4. Behavioral Intention Drivers

Understanding what drives users to engage—or disengage—with personalized ads is central to this study. Results reveal that frequent exposure to targeted content plays a significant role. Respondents regularly encountering personalized promotions were more likely to report intention to interact with them, indicating that familiarity may increase tolerance and interest. The influence of algorithmic targeting in social platforms is often intensified by engagement patterns shaped by influencer dynamics and perceived content personalization []. Figure 11 illustrates this relationship, showing that respondents exposed more frequently to tailored ads were also more likely to report clicking on them.

Figure 11.

Q7.1–Click on Ads by Frequency of Exposure. Each color identifies one exposure category (Often, Very often, Sometimes, Rarely, Never). The white “×” inside each violin marks the mean response.

However, high exposure alone is not sufficient. Users who reported strong concerns about how their data was collected or processed were more cautious, often avoiding interaction regardless of content relevance. This highlights that perceived usefulness cannot compensate for discomfort or mistrust. These findings resonate with Van Doorn and Hoekstra [], who found that perceived intrusiveness—particularly when personalization feels invasive—reduces user receptiveness and increases resistance to digital engagement.

Transparency emerged as a decisive factor. Participants responded more positively when they understood why specific ads were shown to them, which is consistent with Schumann et al. (2014) [], who showed that transparency in targeting practices increases perceived fairness and ad acceptance. In contrast, when the targeting process felt hidden or imposed, responses tended to be negative, ranging from indifference to active avoidance behaviors such as ad blocking. Previous research likewise shows that transparency not only mitigates perceived intrusiveness but also strengthens the persuasive power of personalization through increased trust []. The results also align with the study by Youness and Mohamed (2025) [], which demonstrates that PCA- and clustering-based segmentation, combined with transparency regarding targeting criteria, enhances marketing impact while strengthening customer engagement on online platforms.

Perceived control was another consistent predictor of openness. Respondents who believed they could adjust privacy settings or limit ad personalization were more likely to view the content favorably. This suggests that enabling choice, even symbolically, enhances receptiveness.

Overall, behavioral intention appears to be shaped less by message accuracy than by the environment in which personalization occurs—particularly when users feel that they have agency, visibility, and room to opt out.

4.5. Hypothesis Testing and Path Relationships

To formally test the conceptual model, we conducted a structural analysis using Partial Least Squares–Structural Equation Modelling (PLS-SEM) []. This allowed us to assess the strength and significance of the proposed connections between constructs derived from the conceptual framework and stated hypotheses (H1 to H5).

The results indicate that perceived content relevance significantly influences trust in the brand (H1 supported). Respondents who found the targeting aligned with their interests were more likely to report a sense of credibility and transparency from the source. This aligns with prior research emphasizing that trust is nurtured when users feel content is accurate and delivered with ethical intent.

At the same time, the perception of relevance was also positively associated with concerns about data usage (H2 supported). This confirms the existence of a cognitive tension, where increased targeting simultaneously reinforces interest and activates discomfort. The data suggest that Moroccan users, like their counterparts in other regions, remain vigilant even when content feels useful.

Further, privacy concerns showed a strong, positive link with the perception of intrusiveness (H3 supported). Participants who expressed doubts about data protection also reported higher unease regarding how closely the system monitors their activity.

When examining the final stage of the model—users’ intention to interact with or avoid such content—we observed a dual pathway. Trust and perceived control contributed significantly to a positive response (H4 supported), indicating that users are more likely to accept or respond when they feel informed and in charge of the exchange.

On the other side, intrusiveness negatively impacted receptivity (H5 supported), reducing the likelihood of engagement. This effect reinforces the importance of limiting perceived pressure in targeting strategies. Even accurate messages lose traction when they feel invasive.

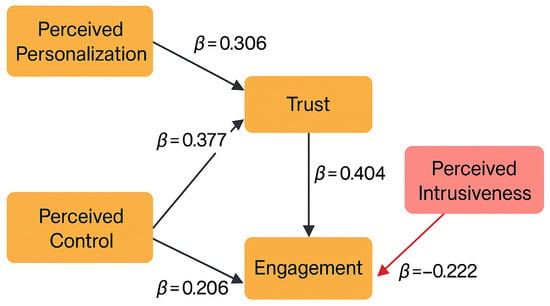

The overall model exhibited good explanatory power, with acceptable R² values for key dependent constructs. All structural paths were statistically significant at conventional thresholds, and the mediation effects of trust and concern were confirmed through indirect paths. Table 2 presents the standardized path coefficients resulting from the PLS-SEM model.

Table 2.

Standardized Path Coefficients from PLS-SEM Analysis.

Interpretation:

- The most substantial positive influence on Trust comes from Perceived Control (0.377), followed by Perceived Personalization (0.306).

- Privacy Concerns slightly reduce Trust (–0.143), suggesting a subtle erosion of confidence when data usage is unclear.

- Regarding Engagement (intention to interact), Trust is the most influential factor (0.404), while Intrusiveness has an adverse effect (–0.222).

These findings validate the model proposed and offer an empirically grounded explanation of how Moroccan users process targeted content. They balance perceived usefulness with caution and react based on how much clarity and control they feel they have in the exchange.
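To make the mediation through trust concrete, the sketch below multiplies the reported standardized coefficients along each two-step path (predictor to trust, trust to engagement). This product-of-coefficients calculation is a standard way to obtain point estimates of indirect effects; the resulting values are derived here for illustration and are not figures reported by the study, and the variable and function names are hypothetical.

```python
# Standardized path coefficients as listed in the Interpretation bullets.
paths_to_trust = {
    "perceived_control": 0.377,
    "perceived_personalization": 0.306,
    "privacy_concerns": -0.143,
}
trust_to_engagement = 0.404


def indirect_effects(first_stage, second_stage):
    """Point estimates of indirect effects: product of the two path coefficients."""
    return {name: beta * second_stage for name, beta in first_stage.items()}


effects = indirect_effects(paths_to_trust, trust_to_engagement)
for name, value in effects.items():
    print(f"{name} -> trust -> engagement: {value:+.3f}")
# perceived_control -> trust -> engagement: +0.152
# perceived_personalization -> trust -> engagement: +0.124
# privacy_concerns -> trust -> engagement: -0.058
```

These are point estimates only. Judging whether an indirect effect is statistically significant requires an interval estimate, which cannot be reconstructed from the coefficients alone.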

4.6. Discussion of Findings

The study’s findings offer a layered view of how individuals respond to personalized digital content. While many users recognized that the ads matched their interests, this alignment was not enough to guarantee engagement. What mattered more was the context: how data was collected, how much control users felt they had, and whether the targeting process felt respectful.

Perceived control emerged as a central driver of positive perception. Participants who felt they could influence or limit how ads were customized showed higher levels of trust and were more inclined to interact. This control, whether exercised or symbolic, served as a psychological buffer that made personalization more acceptable.

Trust, in turn, acted as a catalyst for engagement. When participants believed the brand was transparent and ethical in its use of data, even highly tailored ads were met with openness. But trust was fragile—quickly undermined by ambiguity or perceived overreach. The negative link between privacy concerns and trust (β = –0.143) illustrates how discomfort can erode credibility, even when the message is well-targeted.

Intrusiveness also played a disruptive role. The perception of being monitored or profiled reduced the likelihood of engagement (β = –0.222), regardless of the content’s relevance. This underscores the importance of balancing precision with discretion in algorithmic targeting.

In the Moroccan context, where digital literacy is relatively high but data governance remains weak, this tension is amplified. Young, educated users—particularly women—showed heightened awareness of ethical and privacy issues, suggesting that future marketing strategies should not only optimize content but also address the deeper expectations of dignity and control.

In sum, personalization succeeds not through data alone, but through clarity, respect, and a sense of participatory control. Users want relevance, but only when they feel involved in the process and able to influence how it unfolds. This echoes the work of Leon et al. [], who found that users are more willing to share data when they perceive the process as transparent and respect-based.

Figure 12 illustrates the key relationships found in the structural model. Perceived personalization and control both influence trust, which in turn strongly predicts engagement. Perceived control also has a direct effect on engagement. Conversely, perceived intrusiveness negatively impacts engagement, highlighting the conditional nature of acceptance.

Figure 12.

Simplified path model of engagement drivers in personalized advertising. Arrows show the direction of the standardized path coefficients; the negative effect of perceived intrusiveness is marked with β = −0.222.
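The relationships summarized in Figure 12 can also be written as two structural equations using the standardized coefficients reported in the text. This is a sketch under stated assumptions: the symbol γ stands for the direct effect of perceived control on engagement, whose value is not given in the prose, and ζ₁ and ζ₂ are residual terms introduced here for notation only. The paths tested under H2 and H3 are omitted because their coefficients are not quoted in the text.

```latex
\begin{align}
\text{Trust} &= 0.377\,\text{Control} + 0.306\,\text{Personalization}
               - 0.143\,\text{Privacy} + \zeta_{1} \\
\text{Engagement} &= 0.404\,\text{Trust} - 0.222\,\text{Intrusiveness}
               + \gamma\,\text{Control} + \zeta_{2}
\end{align}
```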

Table 3 complements these findings with an interpretation of each relationship in terms of user perception and behavior.

Table 3.

Key Path Effects and Interpretations.

As summarized in Table 3, the interplay between trust, control, and perceived discomfort offers a multi-layered view of how users navigate targeted digital messages. Each path in the model is statistically significant and offers practical insight into how brands can pursue relevance without crossing the line into intrusion.

5. Conclusions and Perspectives

This research examined how Moroccan users respond to digital content customized through advanced targeting systems. While the relevance of content remains a factor in user engagement, our findings indicate that it is neither sufficient nor primary. Instead, trust and the sense of control emerged as the most decisive enablers of acceptance. In contrast, even relevant messages provoked resistance when users perceived them as intrusive or manipulative.

The results highlight a significant shift in user expectations: audiences no longer respond solely to content accuracy but also assess the intention and transparency behind its delivery. When individuals feel they understand and can influence how content reaches them, their willingness to engage increases. Conversely, where lack of clarity, overreach, or uncertainty prevails, engagement tends to decrease. This observation is especially pertinent in digitally active yet lightly regulated environments like Morocco, where the divide between user exposure and digital literacy remains substantial.

Further research should enhance this contextual understanding by investigating longitudinal trends, cross-cultural variations, and ethnographic perspectives on digital trust and resistance. Brands and platforms now face a new paradigm: designing targeting systems not just for performance but also for dignity. Relevance is no longer sufficient—it must be paired with respect, clarity, and the tangible empowerment of users.

Author Contributions

Both authors, Y.M. and M.A., contributed equally to all aspects of the research, including conceptualization, methodology, data collection, analysis, and manuscript preparation. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study, as the data used are publicly available and fully anonymized.

Informed Consent Statement

Informed consent for participation was obtained from all subjects involved in the study.

Data Availability Statement

The data supporting the findings of this study are available on reasonable request from the corresponding author.

Acknowledgments

The authors thank all survey participants and colleagues who helped disseminate the questionnaire. During the preparation of this manuscript, the authors used ChatGPT (OpenAI; GPT-5 Thinking mini) to refine language and improve readability (accessed 21 September 2025). The authors reviewed and edited all content generated by the tool and take full responsibility for the published version of the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| AI | Artificial Intelligence |
| CTR | Click-Through Rate |
| CSV | Comma-Separated Values |
| PLS-SEM | Partial Least Squares Structural Equation Modelling |
| SEM | Structural Equation Modelling |

References

- Chandra, S.; Verma, S.; Lim, W.M.; Kumar, S.; Donthu, N. Personalization in Personalized Marketing: Trends and Ways Forward. Psychol. Mark. 2022, 39, 1529–1562.

- Rodríguez-Priego, N.; Porcu, L.; Peña, M.B.P.; Almendros, E.C. Perceived Customer Care and Privacy Protection Behavior: The Mediating Role of Trust in Self-Disclosure. J. Retail. Consum. Serv. 2023, 72, 103284.

- Saura, J.R. Algorithms in Digital Marketing: Does Smart Personalization Promote a Privacy Paradox? FIIB Bus. Rev. 2024, 13, 499–502.

- Wedel, M.; Kannan, P.K. Marketing Analytics for Data-Rich Environments. J. Mark. 2016, 80, 97–121.

- Madane, Y.; Azeroual, M.; Saadaane, R. Enhancing Crowdfunding Campaign Success Prediction Through AI-Driven Customer Segmentation. In Proceedings of the International Conference on Smart City Applications, Tangier, Morocco, 11–13 November 2025; pp. 641–651.

- Martin, K.D.; Murphy, P.E. The Role of Data Privacy in Marketing. J. Acad. Mark. Sci. 2017, 45, 135–155.

- Awad, N.F.; Krishnan, M.S. The Personalization Privacy Paradox: An Empirical Evaluation of Information Transparency and the Willingness to Be Profiled Online for Personalization. MIS Q. 2006, 30, 13–28.

- Baek, T.H.; Morimoto, M. Stay Away from Me. J. Advert. 2012, 41, 59–76.

- Aguirre, E.; Mahr, D.; Grewal, D.; De Ruyter, K.; Wetzels, M. Unraveling the Personalization Paradox: The Effect of Information Collection and Trust-Building Strategies on Online Advertisement Effectiveness. J. Retail. 2015, 91, 34–49.

- Boerman, S.C.; Kruikemeier, S.; Bol, N. When Is Personalized Advertising Crossing Personal Boundaries? How Type of Information, Data Sharing, and Personalized Pricing Influence Consumer Perceptions of Personalized Advertising. Comput. Hum. Behav. Rep. 2021, 4, 100144.

- Bleier, A.; Eisenbeiss, M. Personalized Online Advertising Effectiveness: The Interplay of What, When, and Where. Mark. Sci. 2015, 34, 669–688.

- Tucker, C.E. Social Networks, Personalized Advertising, and Privacy Controls. J. Mark. Res. 2014, 51, 546–562.

- Goldfarb, A.; Tucker, C.E. Privacy Regulation and Online Advertising. Manag. Sci. 2011, 57, 57–71.

- Milne, G.R.; Bahl, S. Are There Differences between Consumers’ and Marketers’ Privacy Expectations? A Segment- and Technology-Level Analysis. J. Public Policy Mark. 2010, 29, 138–149.

- Xu, H.; Gupta, S.; Rosson, M.B.; Carroll, J.M. Measuring Mobile Users’ Concerns for Information Privacy. 2012. Available online: https://aisel.aisnet.org/icis2012/proceedings/ISSecurity/10/ (accessed on 20 September 2025).

- Cho, C.-H.; Cheon, H.J. Why Do People Avoid Advertising on the Internet? J. Advert. 2004, 33, 89–97.

- Boerman, S.C.; Kruikemeier, S.; Zuiderveen Borgesius, F.J. Online Behavioral Advertising: A Literature Review and Research Agenda. J. Advert. 2017, 46, 363–376.

- Van Doorn, J.; Hoekstra, J.C. Customization of Online Advertising: The Role of Intrusiveness. Mark. Lett. 2013, 24, 339–351.

- Aiken, K.D. Trustmarks, Objective-Source Ratings, and Implied Investments in Advertising: Investigating Online Trust and the Context-Specific Nature of Internet Signals. J. Acad. Mark. Sci. 2006, 34, 308–323.

- Yachaoui, L. Factors That Affect the Consumer in the Era of Coronavirus in Morocco. Master’s Thesis, Marmara University, Istanbul, Turkey, 2022.

- Zintl, T.; Houdret, A. Moving towards Smarter Social Contracts? Digital Transformation as a Driver of Change in State–Society Relations in the MENA Region. Mediterr. Politics 2024, 1–24.

- Li, H.; Edwards, S.M.; Lee, J.-H. Measuring the Intrusiveness of Advertisements: Scale Development and Validation. J. Advert. 2002, 31, 37–47.

- De Leeuw, E.D. The Effect of Computer-Assisted Interviewing on Data Quality: A Review of the Evidence. 2008. Available online: https://dspace.library.uu.nl/handle/1874/44502 (accessed on 20 September 2025).

- Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.-Y.; Podsakoff, N.P. Common Method Biases in Behavioral Research: A Critical Review of the Literature and Recommended Remedies. J. Appl. Psychol. 2003, 88, 879–903.

- Sheehan, K.B. An Investigation of Gender Differences in On-Line Privacy Concerns and Resultant Behaviors. J. Interact. Mark. 1999, 13, 24–38.

- Bright, L.F.; Kleiser, S.B.; Grau, S.L. Too Much Facebook? An Exploratory Examination of Social Media Fatigue. Comput. Hum. Behav. 2015, 44, 148–155.

- Arora, A.; Bansal, S.; Kandpal, C.; Aswani, R.; Dwivedi, Y. Measuring Social Media Influencer Index-Insights from Facebook, Twitter and Instagram. J. Retail. Consum. Serv. 2019, 49, 86–101.

- Schumann, J.H.; Von Wangenheim, F.; Groene, N. Targeted Online Advertising: Using Reciprocity Appeals to Increase Acceptance among Users of Free Web Services. J. Mark. 2014, 78, 59–75.

- Bleier, A.; Eisenbeiss, M. The Importance of Trust for Personalized Online Advertising. J. Retail. 2015, 91, 390–409.

- Youness, M.; Mohamed, A. Maximizing Marketing Impact through Data-Driven Segmentation with PCA and Clustering. J. Prof. Bus. Rev. 2025, 10, e05528.

- Sarstedt, M.; Ringle, C.M.; Hair, J.F. Partial Least Squares Structural Equation Modeling. In Handbook of Market Research; Homburg, C., Klarmann, M., Vomberg, A., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 587–632. ISBN 978-3-319-57411-0.

- Leon, P.G.; Ur, B.; Wang, Y.; Sleeper, M.; Balebako, R.; Shay, R.; Bauer, L.; Christodorescu, M.; Cranor, L.F. What Matters to Users? Factors That Affect Users’ Willingness to Share Information with Online Advertisers. In Proceedings of the Ninth Symposium on Usable Privacy and Security, Newcastle, UK, 24–26 July 2013; pp. 1–12.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).