1. Introduction

The design of solar energy projects requires long-term, up-to-date, high-quality solar radiation datasets at the finest possible spatiotemporal resolution. Accurate knowledge of surface solar irradiance and its components under all-sky conditions is vital for assessing the solar potential of a specific area. Clouds are a key component of the climate–atmosphere system, modifying the incoming solar irradiance that reaches the Earth’s surface. The strongly varying spatiotemporal structure of the cloud field introduces uncertainty into solar irradiance estimates. All-sky imagers (ASIs) are valuable tools that provide continuous information on the state of the sky. ASIs have been used extensively for cloud detection, cloud segmentation, and solar forecasting applications (e.g., [1,2,3,4]).

Modeling all-sky solar irradiances is very challenging due to the highly variable behavior of clouds. Today, the Copernicus Atmosphere Monitoring Service radiation service (CAMS-Rad) has gained significant visibility among the available solar products. CAMS-Rad provides solar data at various temporal resolutions, which can be easily retrieved through the Solar Radiation Data (SoDa) website (http://www.soda-pro.com/, accessed on 1 March 2023). In CAMS-Rad, solar irradiances in cloudy atmospheres are retrieved using the concept of the cloud modification factor (a function of cloud attenuation and ground reflection) [5], while the cloud properties are extracted from Meteosat Second Generation (MSG) satellite images using the APOLLO method [6]. Among other models, the Fast All-sky Radiation Model for Solar applications (FARMS) [7] parameterizes all-sky irradiances using the REST2 clear-sky model and look-up tables of cloud transmittances and reflectances, computed with the Rapid Radiative Transfer Model (RRTM) for various cloud optical thicknesses, cloud particle sizes, and solar zenith angles. However, such modeling approaches require a large set of input parameters that are not always available at the required spatiotemporal resolution. Alternatively, solar irradiances can be modeled using information from all-sky images and deep learning approaches, with promising results [8].

This work focuses on modeling the global and diffuse horizontal irradiances (GHI and DHI) using deep learning techniques, with ASI images as the only source of input information. More specifically, the Red–Green–Blue (RGB) components of the ASI images are fed into a Convolutional Neural Network (CNN) to estimate GHI and DHI. The results are validated against reference solar irradiance measurements.

2. Material and Methods

In this study, 1 min global and diffuse horizontal irradiances (GHI and DHI) were obtained from the radiometric station located on the rooftop of the Laboratory of Atmospheric Physics, University of Patras, Greece (38.291° N, 21.789° E). The solar irradiances were measured with Kipp & Zonen CMP11 pyranometers. The instruments were calibrated by the manufacturer, and systematic comparisons with a similar instrument showed differences within the standard uncertainty. In addition, images of the sky dome were retrieved with a commercial Mobotix Q24M camera, capturing images of the entire upper hemisphere every 640 μs. The images were stored in 24-bit JPEG format with a spatial resolution of 1024 × 768 pixels. The sensor had a Red–Green–Blue (RGB) filter, with color intensities ranging from 0 to 255 in each channel.

The main objective of this study is to model GHI and DHI under all-sky conditions using RGB images. A pre-processing stage was applied to the ASI images before the modeling process. First, the images were cropped using an image mask to keep only the necessary information and to avoid possible obstacles near the image edges. Then, the cropped images were resized to 128 × 128 pixels to speed up the modeling process. Finally, the RGB values were scaled to 0–1 by dividing by 255.

Since solar irradiances exhibit seasonal cycles, normalized forms were calculated. The clear-sky index (CSI) is a detrended form of GHI (Equation (1)), defined as the ratio of the measured GHI to that under clear-sky conditions (GHIc). For DHI, a min-max normalization was applied (Equation (2)):

$$\mathrm{CSI} = \frac{\mathrm{GHI}}{\mathrm{GHI_c}} \tag{1}$$

$$\mathrm{DHI_n} = \frac{\mathrm{DHI} - \mathrm{DHI_{min}}}{\mathrm{DHI_{max}} - \mathrm{DHI_{min}}} \tag{2}$$

where GHIc is the clear-sky GHI derived from the CAMS McClear model (http://www.soda-pro.com/, accessed on 1 March 2023), and DHImin and DHImax are the minimum and maximum DHI values of the training period. Furthermore, only cases with a solar zenith angle (SZA) below 80° were kept for the subsequent analysis, to avoid low-sun issues and shading effects.
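The pre-processing chain described above (masking and cropping, resizing to 128 × 128 pixels, scaling to 0–1, Equations (1) and (2), and the SZA filter) can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the circular mask, its radius, the bounding-box crop, and the nearest-neighbour resize are choices made here for illustration, since the actual mask geometry and interpolation method are not specified. All function names are hypothetical.

```python
import numpy as np


def circular_mask(height: int, width: int, radius: float) -> np.ndarray:
    """Hypothetical sky mask: True inside a centred circle of the given radius."""
    yy, xx = np.ogrid[:height, :width]
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    return (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2


def preprocess_sky_image(img: np.ndarray, mask: np.ndarray, out_size: int = 128) -> np.ndarray:
    """Mask, crop, resize and scale an 8-bit RGB sky image to the 0-1 range."""
    # Blank out pixels outside the mask (e.g. obstacles near the image edges).
    masked = np.where(mask[..., None], img, 0)
    # Crop to the bounding box of the masked region.
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    cropped = masked[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
    # Nearest-neighbour resize to out_size x out_size (assumed interpolation).
    h, w = cropped.shape[:2]
    r_idx = np.arange(out_size) * h // out_size
    c_idx = np.arange(out_size) * w // out_size
    resized = cropped[r_idx[:, None], c_idx[None, :]]
    # Scale 0-255 intensities to 0-1 by dividing by 255.
    return resized.astype(np.float32) / 255.0


def clear_sky_index(ghi, ghi_clear) -> np.ndarray:
    """Equation (1): CSI = GHI / GHIc."""
    return np.asarray(ghi, dtype=float) / np.asarray(ghi_clear, dtype=float)


def minmax_normalize(dhi, dhi_min: float, dhi_max: float) -> np.ndarray:
    """Equation (2): min-max normalization of DHI."""
    return (np.asarray(dhi, dtype=float) - dhi_min) / (dhi_max - dhi_min)


def high_sun_mask(sza_deg, limit_deg: float = 80.0) -> np.ndarray:
    """True for cases with a solar zenith angle below the limit (80 degrees)."""
    return np.asarray(sza_deg, dtype=float) < limit_deg


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic 768 x 1024 RGB frame standing in for a real ASI image.
    frame = rng.integers(0, 256, size=(768, 1024, 3), dtype=np.uint8)
    x = preprocess_sky_image(frame, circular_mask(768, 1024, radius=350))
    print(x.shape)  # (128, 128, 3)
```

The nearest-neighbour step keeps the sketch dependency-free; bilinear or area-averaging resampling from an imaging library would be a natural alternative in practice.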

A Convolutional Neural Network model was employed to model GHI and DHI using RGB images as inputs. CNNs have been used extensively for processing images, videos, and speech due to their powerful feature-learning ability and efficient weight-sharing strategy [9]. The model architecture was similar to that of [8]. The CNN structure was determined through a randomized splitting procedure, in which 70% of the data were used to build the CNN. This subset was again randomly divided using a 70/30 rule into training and testing sets. The remaining 30% of the data from the initial split served as an independent validation dataset. Overall, this corresponds to 49% of the data for training, 21% for testing, and 30% for validation. The mean absolute error (MAE) was selected as the loss function to be minimized. The CNN outputs were scaled according to Equations (1) and (2), where the minimum and maximum DHI values correspond to the training period. The RGB images were scaled to 0–1. This scaling ensures that all parameters lie in a similar range, which is necessary for efficient training of the CNN.
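The nested 70/30 splitting, the MAE loss, and the use of training-set extremes for the DHI min-max scaling can be illustrated with the NumPy sketch below. It assumes a simple random permutation of sample indices with a fixed seed; the seed and all function names are hypothetical, and the CNN itself is omitted because its exact architecture follows [8] and is not reproduced here.

```python
import numpy as np


def nested_random_split(n_samples: int, build_frac: float = 0.7,
                        train_frac: float = 0.7, seed: int = 0):
    """Return (train, test, validation) index arrays.

    build_frac of the samples are used to build the CNN and are split again
    into training (train_frac) and testing (1 - train_frac); the rest form an
    independent validation set.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_build = int(round(build_frac * n_samples))
    build, validation = idx[:n_build], idx[n_build:]
    n_train = int(round(train_frac * n_build))
    return build[:n_train], build[n_train:], validation


def mean_absolute_error(y_true, y_pred) -> float:
    """MAE, the loss function minimized during training."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(diff)))


def scale_with_training_extremes(values, train_idx):
    """Min-max scale all values using only the training subset's min and max.

    Values outside the training range map outside 0-1; using training-only
    extremes keeps test/validation information out of the scaling.
    """
    v = np.asarray(values, dtype=float)
    lo, hi = v[train_idx].min(), v[train_idx].max()
    return (v - lo) / (hi - lo), lo, hi


if __name__ == "__main__":
    train, test, val = nested_random_split(10_000)
    print(len(train), len(test), len(val))  # 4900 2100 3000
```

With 10,000 samples this yields 4,900 training, 2,100 testing, and 3,000 validation cases, i.e., the 49/21/30 proportions implied by the nested 70/30 split.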

3. Results

This section presents the modeling results evaluated against reference measurements.

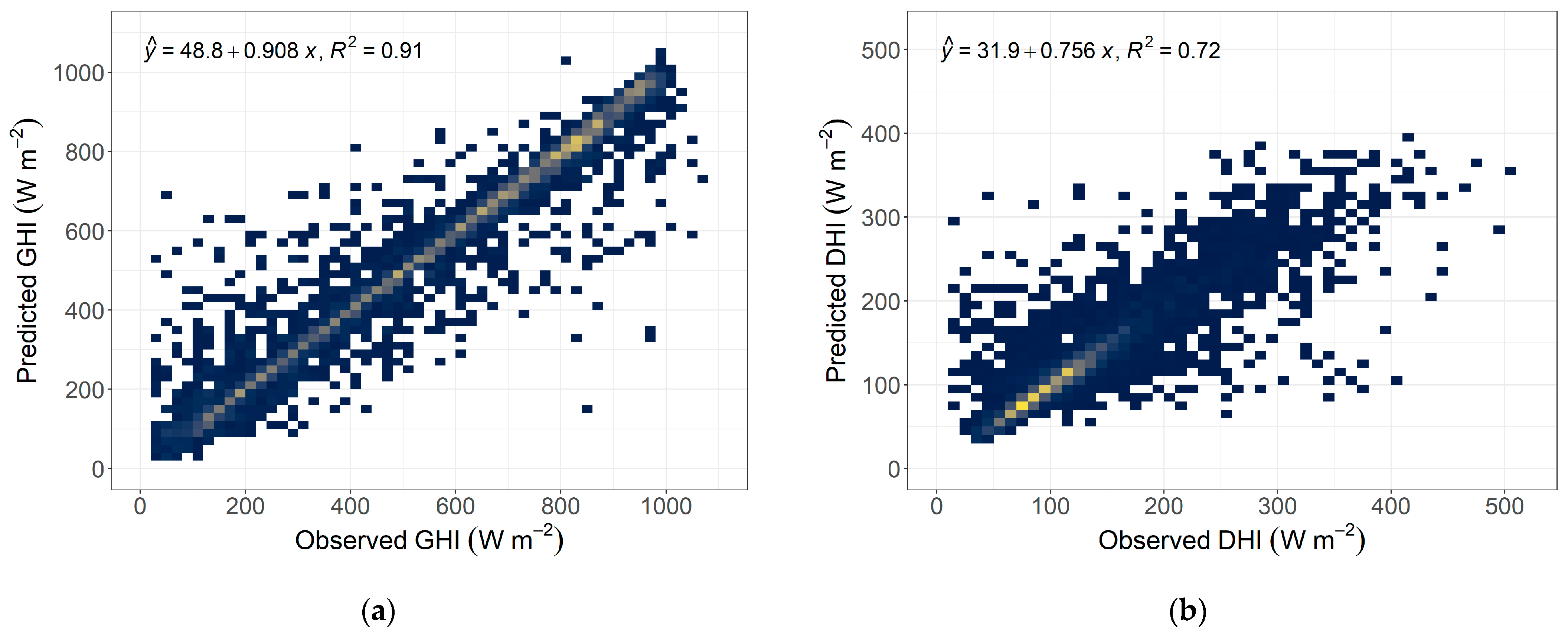

Figure 1 shows the modeled vs. observed GHI and DHI. In general, the modeled GHI and DHI reproduce the observations quite well. For GHI in particular (Figure 1a), the modeled values were in good agreement with the observations, exhibiting a high coefficient of determination (R² = 0.91).

The slope of the best-fit line in Figure 1a is lower than unity, indicating a tendency of the model to underestimate the observations. Several points also lie far from the general tendency, indicating significant dispersion. To quantify these features, the systematic and dispersion errors (Mean Bias Error, MBE, and Root Mean Square Error, RMSE) and their normalized forms (nMBE and nRMSE), using the average observed irradiance as reference, were computed. A slight underestimation was found, with MBE = −1.8 W m⁻² (nMBE = −0.32%), while the dispersion error was 82.7 W m⁻² (nRMSE = 15%).
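The error statistics used in this section can be written compactly. The NumPy sketch below computes MBE, RMSE, their normalized forms (relative to the mean observed irradiance, as in the text), the slope and intercept of a least-squares best-fit line of modeled on observed values, and R². It assumes R² is the squared Pearson correlation coefficient, which for a simple linear fit equals the coefficient of determination of that best-fit line; the function name is hypothetical.

```python
import numpy as np


def evaluation_metrics(obs, mod) -> dict:
    """Validation statistics for modeled vs. observed irradiances (W m-2)."""
    obs = np.asarray(obs, dtype=float)
    mod = np.asarray(mod, dtype=float)
    diff = mod - obs                      # model minus observations
    mbe = diff.mean()                     # systematic error
    rmse = np.sqrt(np.mean(diff ** 2))    # dispersion error
    mean_obs = obs.mean()                 # skill reference for normalization
    slope, intercept = np.polyfit(obs, mod, 1)  # best-fit line: mod = slope*obs + intercept
    r2 = np.corrcoef(obs, mod)[0, 1] ** 2       # squared Pearson correlation (assumed R2)
    return {
        "MBE": mbe,
        "RMSE": rmse,
        "nMBE_%": 100.0 * mbe / mean_obs,
        "nRMSE_%": 100.0 * rmse / mean_obs,
        "slope": slope,
        "intercept": intercept,
        "R2": r2,
    }
```

For example, observations of 100, 200, 300, and 400 W m⁻² with modeled values of 110, 190, 310, and 390 W m⁻² give MBE = 0, RMSE = 10 W m⁻² (nRMSE = 4%), and a best-fit slope of 0.96: zero bias can coexist with a slope below unity and non-zero dispersion, which is why the three quantities are reported together.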

Similar results were obtained for DHI (Figure 1b). The slope of the best-fit line was 0.756, substantially lower than unity, while significant dispersion was evident even by visual inspection. The respective systematic and dispersion errors (normalized values in parentheses) were −0.5 W m⁻² (−0.39%) and 39.8 W m⁻² (30%). The modeled DHI was in good agreement with observations, with R² = 0.74.

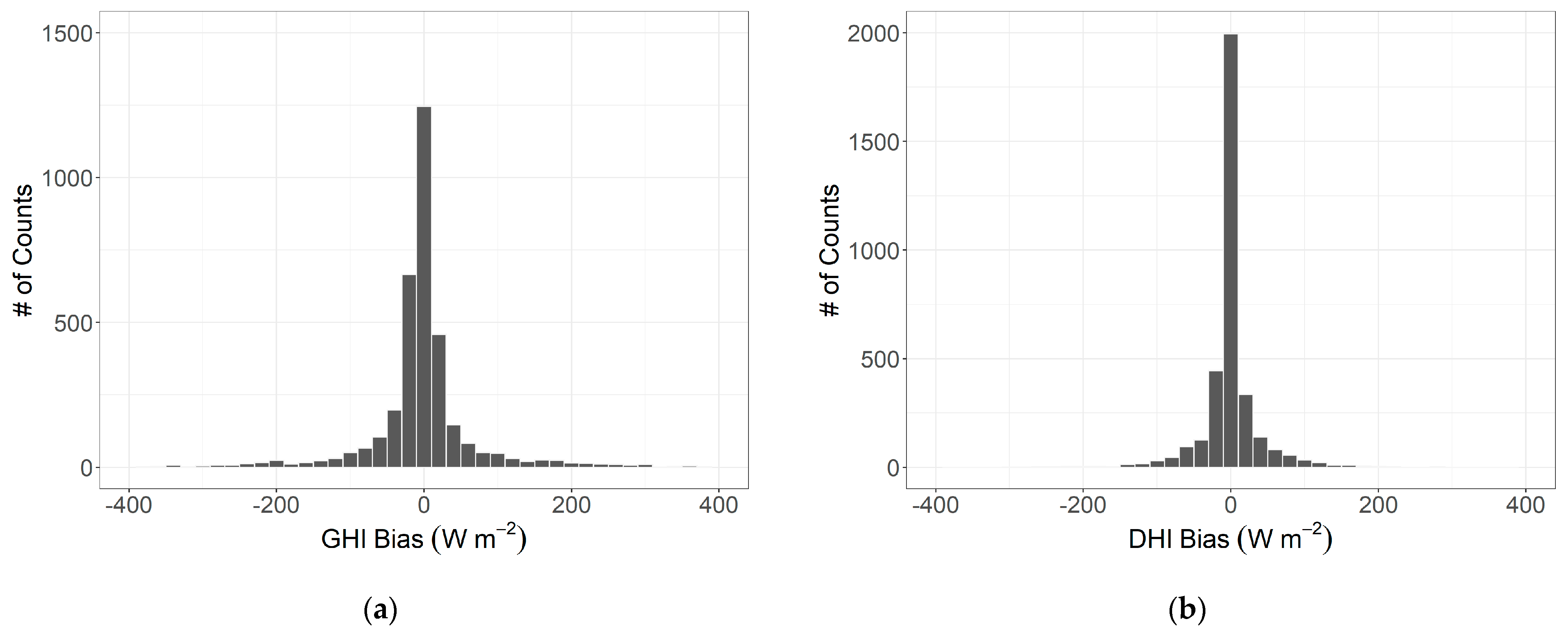

Figure 2 shows the frequency distributions of the GHI and DHI differences (model − observations). Both histograms are approximately symmetric (near-zero skewness), with values distributed around a mean close to zero. The strongly peaked distributions indicate that most differences are small; however, the significant number of values in both tails of the distributions explains the high dispersion errors.
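The shape of the difference distributions can also be summarized numerically, complementing the histograms. The sketch below is an assumption-based illustration rather than the analysis actually performed: it computes the sample skewness (near zero for a symmetric distribution) and the excess kurtosis (positive for a distribution that is more sharply peaked and heavier-tailed than a Gaussian) using simple moment-based estimators, together with histogram counts from `np.histogram`. The function name and the synthetic Laplace-distributed data are hypothetical.

```python
import numpy as np


def difference_shape(diff, bins: int = 50):
    """Moment-based skewness, excess kurtosis and histogram of model-obs differences."""
    d = np.asarray(diff, dtype=float)
    c = d - d.mean()
    m2 = np.mean(c ** 2)
    skewness = np.mean(c ** 3) / m2 ** 1.5
    excess_kurtosis = np.mean(c ** 4) / m2 ** 2 - 3.0  # 0 for a Gaussian
    counts, edges = np.histogram(d, bins=bins)
    return skewness, excess_kurtosis, counts, edges


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic, sharply peaked and heavy-tailed differences (Laplace),
    # standing in for real GHI differences in W m-2.
    diffs = rng.laplace(loc=0.0, scale=50.0, size=100_000)
    s, k, counts, edges = difference_shape(diffs)
    print(f"skewness={s:.2f}, excess kurtosis={k:.2f}")
```

A Laplace distribution has zero skewness and an excess kurtosis of 3, so it reproduces qualitatively the "symmetric but strongly peaked with populated tails" shape described above.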

It is worth noting that the modeling process was designed using information from the ASIs alone. Including other explanatory variables representative of the cloud field, such as cloud optical thickness and cloud fraction, could substantially improve the results.

4. Conclusions

Accurate knowledge of surface solar irradiance under all-sky conditions is crucial for solar-related applications. This study focused on modeling the global and diffuse horizontal irradiances using a deep learning model (a Convolutional Neural Network) with input information from all-sky images. The validation against observations showed high coefficients of determination (R² = 0.91 for GHI and 0.74 for DHI), minor systematic errors, and normalized dispersion errors (nRMSE) of 15% and 30% for GHI and DHI, respectively. In a future step, including other explanatory variables representative of the cloud field (such as cloud optical thickness and cloud fraction) in the model design could substantially improve the results.

Author Contributions

Conceptualization, V.S. and P.T.; methodology, V.S.; software, V.S.; validation, V.S.; formal analysis, V.S.; investigation, V.S.; data curation, P.T.; writing—original draft preparation, V.S. and P.T.; writing—review and editing, V.S., P.T. and A.K.; visualization, V.S.; supervision, A.K.; funding acquisition, A.K. All authors have read and agreed to the published version of the manuscript.

Funding

The publication of this article has been co-financed by the European Union and Greek national funds through the Operational Program Competitiveness, Entrepreneurship and Innovation, under the call RESEARCH–CREATE-INNOVATE (project code: T2EDK-00681).

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

We acknowledge the support for this work by the project DeepSky co-financed by the European Union and Greek national funds through the Operational Program Competitiveness, Entrepreneurship and Innovation, under the call RESEARCH–CREATE-INNOVATE (project code: T2EDK-00681).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ghonima, M.S.; Urquhart, B.; Chow, C.W.; Shields, J.E.; Cazorla, A.; Kleissl, J. A method for cloud detection and opacity classification based on ground based sky imagery. Atmos. Meas. Tech. 2012, 5, 2881–2892.

- Kazantzidis, A.; Tzoumanikas, P.; Bais, A.F.; Fotopoulos, S.; Economou, G. Cloud detection and classification with the use of whole-sky ground-based images. Atmos. Res. 2012, 113, 80–88.

- Dev, S.; Savoy, F.M.; Lee, Y.H.; Winkler, S. Estimating solar irradiances using sky imagers. Atmos. Meas. Tech. 2019, 12, 5417–5429.

- Logothetis, S.-A.; Salamalikis, V.; Nouri, B.; Remund, J.; Zarzalejo, L.; Xie, Y.; Wilbert, S.; Ntavelis, E.; Nou, J.; Hendrikx, E.; et al. Benchmarking of solar irradiance nowcast performance derived from all-sky imagers. Renew. Energy 2022, 199, 246–261.

- Qu, Z.; Oumbe, A.; Blanc, P.; Espinar, B.; Gessel, G.; Gschwind, B.; Klüser, L.; Lefèvre, M.; Saboret, L.; Schroedter-Homscheidt, M.; et al. Fast radiative transfer parameterisation for assessing the surface solar irradiance: The Heliosat-4 method. Meteorol. Z. 2017, 26, 33–57.

- Oumbe, A.; Qu, Z.; Blanc, P.; Lefèvre, M.; Wald, L.; Cros, S. Decoupling the effects of clear atmosphere and clouds to simplify calculations of the broadband solar irradiance at ground level. Geosci. Model Dev. 2014, 7, 1661–1669.

- Xie, Y.; Sengupta, M.; Dudhia, J. A Fast All-sky Radiation Model for Solar applications (FARMS): Algorithm and performance evaluation. Sol. Energy 2016, 135, 435–445.

- Feng, C.; Zhang, J. SolarNet: A sky image-based deep convolutional neural network for intra-hour solar forecasting. Sol. Energy 2020, 204, 71–78.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).