Abstract

UAV-mounted multispectral sensors are widely used to study crop health. Using the same cameras to capture close-up images of crops could substantially improve crop health evaluation. Unlike RGB cameras, which detect only visible light, these sensors also record spectral bands in the red-edge and near-infrared (NIR) ranges. This enables early detection of diseases, pests, and deficiencies through the calculation of various spectral indices. In this work, the feasibility of using UAV multispectral sensors for close-proximity imaging of crops was studied. Images of plants were taken with a Micasense RedEdge-MX from top and side views at a distance of 1.5 m. The camera has five sensors that independently capture blue, green, red, red-edge, and NIR light. The slight offset between these sensors shifts the field of view from band to band. This shift must be corrected to create a proper layer stack that allows further processing. This research used the Oriented FAST and Rotated BRIEF (ORB) method to detect features in each image. The features in each slave image were matched to those in the master image (the red-edge band), and random sample consensus (RANSAC) was used to reject mismatches. A homography estimated from the retained matches was then used to warp each slave image onto the master image. After alignment, the images were stacked, and the alignment accuracy was checked visually using true colour composites. The side-view images of the plants were aligned almost perfectly, while the top-view images showed errors, particularly in the pixels far from the centre. This study demonstrates that UAV-mounted multispectral sensors can capture close-range images of plants effectively, provided the plant is centred in the frame and occupies a small area within the image.

1. Introduction

UAV remote sensing has revolutionised crop health monitoring by providing images on demand for calculating crop health metrics [1,2,3,4,5,6]. The variety of sensors that can be carried has made UAV-based remote sensing a mainstay in precision agriculture [7]. RGB sensors provide very-high-resolution images [8], and hyperspectral sensors provide detailed spectral information about the crops [9]. However, RGB sensors offer limited spectral information, whereas hyperspectral sensors, while more comprehensive, are often prohibitively expensive [10]. Multispectral sensors offer a good balance between the two, providing essential spectral depth at a lower cost [11]. UAVs equipped with multispectral imaging sensors are therefore commonly used to monitor crop growth in fields [12]. These sensors have yielded outstanding results in the analysis of field crops from a UAV [13], but their design limitations have restricted their use for capturing close-up images from low-flying UAVs or of crops growing in pots [14].

Proximal imaging with multispectral sensors can provide valuable information about pests, diseases, and other stresses affecting crops by utilising visual and spectral data [15], while water and nutrient stress in crops can be detected using vegetation indices and other methods that analyse spectral signatures [16]. Crops’ pests and diseases can only be identified through close-range remote sensing images that capture leaf symptoms [17]. These limitations have led the agriculture community to invest in various sensors for different needs, increasing the cost of crop health monitoring and precision agriculture [18]. Removing the limitations of current multispectral sensors could enable their use with UAVs and for close-range imaging, benefiting the entire community [19].

Currently, multispectral sensors feature a rig with multiple cameras, each sensitive to a specific part of the electromagnetic spectrum. Each camera is placed separately to avoid obstructing the field of view (FOV) of the others. This placement shifts the FOV from camera to camera and causes variations between the images they capture. For example, the Micasense RedEdge-MX (MX) five-band sensor captures five distinct images, one per camera, at every trigger. All the images have a parallax shift due to the position of the cameras [20]. This shift makes stacking the individual layers exceedingly challenging [21]. OEMs recommend flying UAVs at altitudes above 15 m to ensure that most of the area of interest is at the centre of the images, where shifting errors are typically lower. At close distances, the parallax shift greatly impacts the images because the object of interest takes up most of the frame, making the alignment challenging [14]. Addressing the challenges that arise from the sensor’s distance to the object can enhance its usability in agriculture [22].
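
To give a feel for why distance matters, the parallax shift between two cameras of a rig can be approximated with the standard pinhole stereo relation, shift = f × B / Z, where f is the focal length in pixels, B the spacing between the two lenses, and Z the distance to the object. The sketch below is illustrative only: the function name and the numeric values (30 mm lens spacing, 5 mm focal length, 4 µm pixel pitch) are assumed round numbers, not the specifications of the MX sensor.

```python
def parallax_shift_px(baseline_m, distance_m, focal_length_mm, pixel_pitch_um):
    """Approximate band-to-band shift (pixels) for two parallel pinhole cameras."""
    # Convert the focal length from millimetres to pixels.
    focal_px = (focal_length_mm * 1e-3) / (pixel_pitch_um * 1e-6)
    return focal_px * baseline_m / distance_m


# Hypothetical rig values, for illustration only (not MX specifications).
RIG = dict(baseline_m=0.03, focal_length_mm=5.0, pixel_pitch_um=4.0)

shift_far = parallax_shift_px(distance_m=15.0, **RIG)   # typical flight altitude
shift_near = parallax_shift_px(distance_m=1.5, **RIG)   # proximal imaging
```

Under these assumed values, the shift grows tenfold, from about 2.5 pixels at 15 m to about 25 pixels at 1.5 m. Because the shift depends on Z, parts of a plant at different depths are displaced by different amounts, and this difference becomes larger as the camera moves closer.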

Computer vision methods are often favoured for aligning images with parallax errors or disorientations compared to complex deep learning models and simple GPS-based alignment procedures [23]. Computer vision-based feature matching approaches are data-agnostic, lightweight, and offer strong generalisation across diverse scenes and spectral conditions [24]. The ORB (Oriented FAST and Rotated BRIEF) algorithm is a computer vision method known for its efficiency, accuracy, and robustness. ORB combines the FAST corner detection algorithm with orientation estimation and BRIEF descriptor extraction, making it invariant to scale and rotation. Its use of binary descriptors and Hamming distance matching enables quick feature comparison while retaining strong discriminative power, even in distorted views [25]. Further, ORB is fully open-source and free of patents, which makes it perfect for scalable research and real-time applications in agriculture and remote sensing [25]. Thus, ORB is a preferred option in the computer vision field for multimodal image registration tasks due to its robustness across different image resolutions, lightweight computational requirements, and strong performance in low-texture scenes, such as vegetation [25]. The Random Sample Consensus (RANSAC) algorithm, often used with ORB, helps remove outliers from feature matches in computer vision [26]. It works by iteratively selecting random subsets and estimating the best-fitting transformation model. This work uses the RANSAC method to improve the matching of features detected in different spectral bands by eliminating mismatches from parallax errors [26]. After finding reliable matches, a homography matrix is created to align each slave image with the reference band geometrically [27]. This transformation preserves spatial consistency across bands, enabling precise layer stacking for further multispectral analysis [27]. 
The study aims to enhance the use of current UAV-mounted sensors for close-range applications, lessen reliance on specialised equipment, and provide more accessible and affordable crop monitoring solutions in various agricultural situations. The main goal is to create and test a reliable image alignment pipeline using the ORB feature detection and matching technique, along with RANSAC for homography estimation. This approach aims to align individual spectral bands captured at low altitudes to create high-quality stacked multispectral composites for plant health analysis. Using the pipeline, this study evaluates the use of the UAV-mounted MX sensor for close-range crop imaging to address parallax-induced misalignments in multispectral imagery.

2. Study Area

ICAR-Indian Agricultural Research Institute (IARI) is a leading agricultural research centre located in New Delhi, India. It has several essential facilities for research on various agricultural topics. One such facility is the Big Data Analytics Lab (BDL Lab) (28.639541, 77.162105). The BDL Lab uses remote sensing data from ground sensors, UAVs, and satellites to evaluate crop health in different ways. The study was conducted at the BDL Lab, where the MX sensor was used to assess the health of potted plants. Crops in the pots were subjected to multiple nutrient and water stress treatments to observe their response, and the MX sensor was used to assess the impact of these treatments. The problem of parallax error was first observed during the initial image alignment and layer stacking process. The methodology applied here addresses the image alignment issues so that the health of these potted plants can be assessed from the MX images. Figure 1 shows the location of the BDL Lab inside IARI.

Figure 1.

Shows the location of the Big Data Analytics Lab (Credits: Google Maps).

3. Methodology

3.1. Experiment Setup

The MX sensor was used to capture images of the subject (potted plants) from either the top or the side. For top-view photos, the sensor was placed directly above the plant in the nadir position at a distance of 1.5 m from the plant. For the side view, the camera was placed on a tripod at 1.5 m from the plant. It was ensured that the object was in the centre of the frame. The MX camera was connected to a mobile device through the Wi-Fi network generated by the sensor. Once connected, the sensor’s control panel can be opened in any web browser by entering the IP address 192.168.10.254 into the address bar. From the control panel, all the functions of the Micasense sensor, such as camera triggering and live image feed visualisation, can be controlled. Once the sensor was ready with all the required settings, images of the crops were captured, and the raw images were used for further analysis. For the top view, the pot was placed on a uniform black background with markers indicating the edge of the crop. The image also includes a calibration panel placed on the flat ground, along with a label panel carrying the name of the specimen. This image was taken outdoors under natural sunlight. The side-view image was captured indoors under artificial lights. A potted indoor plant was used as the subject, and no background was used, in order to see how the alignment works when images have diverse backgrounds. Since the computer vision-based ORB method is used for image alignment, these diverse backgrounds and lighting conditions, along with the plants, will reveal the robustness of the technique.

3.2. Image Layer Stacking

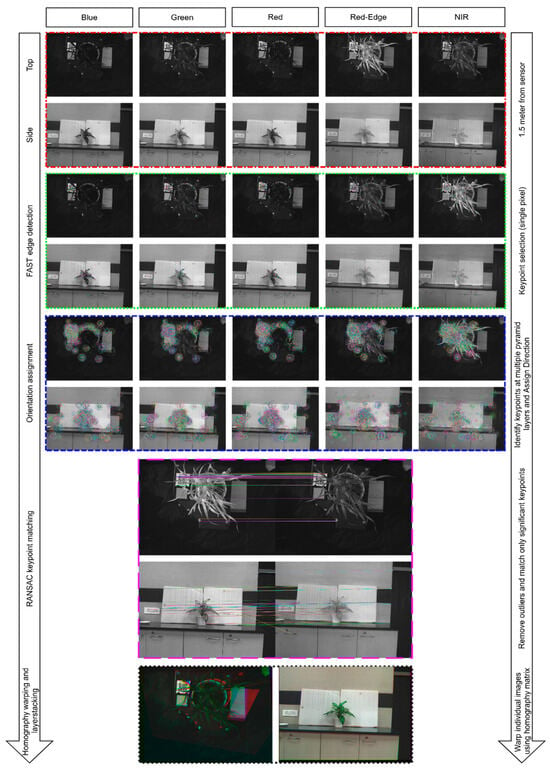

Figure 2 shows the process followed while layer stacking the proximal MX images. It contains two sets of images. The first set was taken from the nadir position, with the camera positioned directly above the crop. A potted wheat crop in its early growth stage was used as the subject. There are five images, one for each band. The brightest of the images is the NIR image, followed by red-edge and green, due to the crop’s high reflectance in these bands. The red and blue images are dark, suggesting high absorbance in these bands. Similarly, the second set of images shows a potted indoor plant captured with the MX sensor from a side view. The images are labelled accordingly in Figure 2.

Figure 2.

Shows the steps involved in layer stacking the individual images from the Micasense Rededge-MX sensor using Oriented FAST and Rotated BRIEF (ORB) computer vision techniques.

The images were processed in a Python 3.12 environment. The ORB technique was accessed from the OpenCV library, which was used for image processing, feature detection, matching, warping and drawing of the images. In addition, the numpy, rasterio, and matplotlib.pyplot libraries were used for numerical operations, generating a multispectral layer stack, and displaying images and matches, respectively. The MX provides individual images in TIFF format; these images were read using the rasterio library. The OpenCV library was used to set up the ORB workflow for the images. In the first step, ORB uses the Features from Accelerated Segment Test (FAST) method, a corner detection algorithm, to quickly detect keypoints in the images. FAST is particularly known for its speed and is generally used for real-time applications like object tracking or image alignment. It examines each pixel together with the 16 pixels on a circle surrounding it (a Bresenham circle) and checks whether a certain number of contiguous pixels on that circle are all significantly brighter or all significantly darker than the centre pixel. If so, the centre pixel is marked as a corner keypoint. Figure 2 shows the keypoints detected in each image from the corresponding views. Each keypoint is indicated by a small circle drawn around it.
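
The segment test described above can be sketched in a few lines of numpy. This is a simplified illustration of the idea, not the optimised detector that OpenCV runs inside ORB; the function names, the intensity threshold of 20 and the run length of 9 contiguous pixels are assumptions chosen for the example.

```python
import numpy as np

# (dx, dy) offsets of the 16 pixels on a Bresenham circle of radius 3.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def longest_circular_run(flags):
    """Length of the longest run of True values on a circular sequence."""
    if all(flags):
        return len(flags)
    best = run = 0
    for flag in flags + flags:  # doubling the list handles wrap-around
        run = run + 1 if flag else 0
        best = max(best, run)
    return best


def is_fast_corner(img, x, y, threshold=20, n=9):
    """Segment test at (x, y); the pixel must lie at least 3 px from the border."""
    centre = int(img[y, x])
    ring = [int(img[y + dy, x + dx]) for dx, dy in CIRCLE]
    brighter = [value > centre + threshold for value in ring]
    darker = [value < centre - threshold for value in ring]
    return max(longest_circular_run(brighter), longest_circular_run(darker)) >= n
```

On a synthetic image containing one bright square, the test accepts the corner of the square but rejects a pixel on a straight edge or inside a flat region, which is why uniform leaf surfaces and smooth backgrounds tend to yield few keypoints.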

FAST detects key points but does not assign an orientation to them. Orientation is an important property that gives the dominant direction, and hence the rotation angle, of a key point. ORB therefore estimates an orientation for each key point and then uses the Binary Robust Independent Elementary Features (BRIEF) descriptor to give each key point a distinctive binary signature by comparing the intensities of pairs of pixels in a patch around the key point. These descriptors are used to detect and match similar key points across the images. Each descriptor takes the rotation angle of its key point into account, which makes the ORB alignment process rotation invariant. Figure 2 shows the rotation angles of the key points in the orientation assignment section. Here, ORB reads the images at multiple pyramid layers, identifies the key points, calculates their rotation angles and assigns these to the key points. In this part of the figure, the key points are shown by concentric circles (a circle for each pyramid layer), and the direction is indicated by the line connecting these concentric circles.
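
A minimal numpy sketch of these two ideas is given below: the orientation is taken as the direction from the patch centre to its intensity centroid, and the pixel-pair comparisons are rotated by that angle before the bits are computed. It is a simplified illustration under stated assumptions (square patch with odd side length, hypothetical function names, randomly drawn pixel pairs) and does not reproduce OpenCV's exact sampling pattern or angle quantisation.

```python
import numpy as np


def patch_orientation(patch):
    """Angle (radians) from the patch centre to its intensity centroid."""
    h, w = patch.shape
    ys, xs = np.mgrid[0:h, 0:w]
    xs = xs - (w - 1) / 2.0
    ys = ys - (h - 1) / 2.0
    return float(np.arctan2((ys * patch).sum(), (xs * patch).sum()))


def steered_brief(patch, pairs, angle):
    """Binary descriptor from pixel-pair comparisons rotated by `angle`."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s], [s, c]])
    centre = (patch.shape[0] - 1) // 2
    bits = []
    for p, q in pairs:  # p and q are (dx, dy) offsets from the patch centre
        (x1, y1), (x2, y2) = (
            np.rint(rot @ np.array(o)).astype(int) + centre for o in (p, q)
        )
        bits.append(patch[y1, x1] < patch[y2, x2])
    return np.array(bits, dtype=np.uint8)


def hamming(a, b):
    """Number of differing bits between two binary descriptors."""
    return int(np.count_nonzero(a != b))
```

Because the pairs are steered by the estimated orientation, a patch and a copy of it rotated by 90° (for example with `np.rot90`) give the same descriptor, so their Hamming distance is zero. Matching then amounts to pairing each descriptor with the one at the smallest Hamming distance in the other image.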

Once ORB has assigned the orientations, the BRIEF descriptors are generated for each key point detected by the FAST algorithm, and the descriptors of each slave image are matched against those of the master image. Random Sample Consensus (RANSAC) then removes the outlier matches, i.e., key points that do not have a consistent match in the master image. RANSAC randomly picks a small subset of the matches and uses it to formulate a transformation that aligns the two images. The algorithm then checks how many of the unselected matches agree with this transformation. This process is repeated several times to find the transformation supported by the largest number of inliers. Using this transformation (homography) matrix, each slave image is warped to match the master image (red-edge) so that all the images are aligned for layer stacking. Finally, the aligned images are layer-stacked to provide a 5-band TIFF image for further assessment.
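
The RANSAC loop described above can be illustrated with a small, self-contained numpy sketch. It is not the implementation used in this work: in OpenCV the same step is available through `cv2.findHomography` with the `cv2.RANSAC` method flag, followed by `cv2.warpPerspective` for the warping. The function names, the iteration count and the 3-pixel inlier tolerance below are assumptions made for the example.

```python
import numpy as np


def fit_homography(src, dst):
    """Direct linear transform: least-squares H such that dst ~ H @ src."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)  # singular vector of the smallest singular value


def project(h, pts):
    """Apply homography h to an (N, 2) array of points."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    with np.errstate(divide="ignore", invalid="ignore"):
        return homog[:, :2] / homog[:, 2:3]


def ransac_homography(src, dst, iters=500, tol=3.0, seed=0):
    """Return (H, inlier_mask) for matched points src -> dst."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        sample = rng.choice(len(src), size=4, replace=False)  # minimal subset
        h = fit_homography(src[sample], dst[sample])
        err = np.linalg.norm(project(h, src) - dst, axis=1)
        inliers = err < tol  # matches that agree with this candidate
        if inliers.sum() > best.sum():
            best = inliers
    # Refit on the largest consensus set found.
    return fit_homography(src[best], dst[best]), best
```

Here `src` would hold the key-point coordinates in a slave band and `dst` the coordinates of their matches in the master band. The returned mask flags the matches that support the final transformation, and the remaining matches are treated as outliers.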

4. Results and Discussion

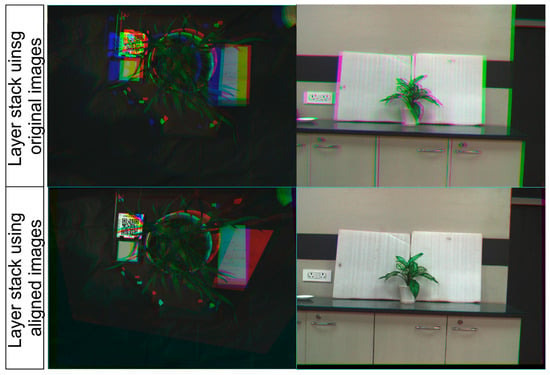

The close-up images of the potted plants taken with the MX sensor were processed using the methodology described above. A layer stack of the bands was generated using QGIS 3.40 LTS; the outcome is shown in Figure 3, which presents the layer-stacked true colour composite (TCC) of the top- and side-view images before and after alignment. Before the alignment, the shift between the bands is visible in both the top- and side-view images. Even after the alignment, the top-view image shows a large shift between the bands. The image taken from the nadir position has a uniform background with markers to identify the edge, which the feature detection algorithms can use to improve alignment. In addition, the image has high-contrast edges that can make the alignment process more accurate. Nevertheless, in this case ORB failed to provide a proper alignment [28], and in Figure 3 the aligned top-view image looks very similar to the original layer-stacked image, except for some zones.

Figure 3.

Shows the comparison between the layer stack generated using the original bands without alignment and the results obtained after the bands are aligned using the ORB approach. Top-view layer stack without alignment (top-left), top-view layer stack after alignment (bottom-left), side-view layer stack without alignment (top-right), and side-view layer stack after alignment (bottom-right).

Though most of the aligned nadir-view image remains misaligned, some features, like the reflectance panel, show near-perfect alignment. This indicates that the image went through the alignment process and features were detected, but the process failed to produce a well-aligned layer stack. One strong reason could be that the key points are mainly concentrated in and around the reflectance panel area of the top-view image. In Figure 2, the key points in the blue, green, red, and red-edge bands clearly show that most of the key points are situated near the panel area due to its high-contrast edges. Since FAST responds only to high-contrast corners and ORB uses only these key points for further processing [29], all the key features are concentrated in a small zone. RANSAC then utilises these features to formulate the transformation matrix for aligning the images (see Figure 2, RANSAC key point matching). Since the algorithm failed to detect a large number of key points on the crop, the alignment process warped the images according to the available key points, resulting in a near-perfect layer stack of the reflectance panel while disregarding the rest of the image. Thus, the lack of contrast between the crop and the background, along with high-contrast edges concentrated in a single zone, could have caused the ORB algorithm to fail in image alignment [30].

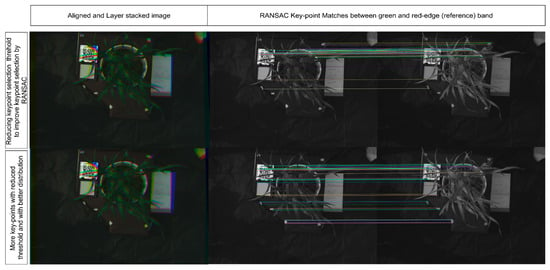

To improve the alignment further, we reduced the key-point threshold to allow RANSAC to select more inliers for better alignment [31]. Figure 4 shows the aligned images after improving keypoint selection and distribution. The top-left image in Figure 4 is the result of the ORB alignment after the key-point selection threshold was reduced. The reduction in the threshold value increased the number of keypoints available for the alignment process. Additionally, the key features near the label sheet (right side of the pot), on the pot edge markers, and on the pot itself were included in the alignment. The inclusion of more key points, especially from diverse positions, considerably enhanced the alignment of the images. The aligned image shows that the panel, the label sheet and most of the crop, along with the pot, have aligned, leaving some portions still distorted. Mainly, the leaf edges that are away from the centre and spread across the image with several twists show significant misalignment. The improvement in the alignment is clearly due to the increased number of key points available for feature matching and their wider distribution. To improve the alignment further, the key points passed to RANSAC were selected based on their spatial distribution. The bottom-left image in Figure 4 shows the results after improving the key point selection by checking for distribution. The number of key points selected based on position did not change significantly, so the alignment did not improve. The bottom-left aligned image in Figure 4 does not show any noteworthy change in the crop alignment from the previous output. This shows that the most significant requirement of feature-based image alignment is the detection of key points that are numerous and adequately distributed across the image [32]. To improve key point detection, the background and the subject must be in stark contrast.
Further, the figures also show that the object must be at the centre of the frame, with little spread towards the corners of the image. In the top view, the plant spread across much of the image. If the image is captured from the side, the plant may not spread across the image as much, and the image can be aligned appropriately.
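
The text does not specify which parameter was lowered or how the spatial distribution was checked. In OpenCV, more key points can be obtained by lowering `fastThreshold` or raising `nfeatures` in `cv2.ORB_create`, and one common way to favour an even spread is grid bucketing, where only the strongest few key points are kept in each cell of a regular grid. The sketch below illustrates that idea under those assumptions; the function name, grid size and per-cell limit are hypothetical, and the inputs stand in for the `pt` and `response` attributes of OpenCV key points.

```python
def bucket_keypoints(points, responses, image_size, grid=(4, 4), per_cell=2):
    """Keep the strongest `per_cell` points in each cell of a regular grid.

    points     : list of (x, y) pixel coordinates
    responses  : detector strength of each point (higher is stronger)
    image_size : (width, height) of the image in pixels
    grid       : (columns, rows) of the bucketing grid
    Returns the sorted indices of the points that are kept.
    """
    width, height = image_size
    cells = {}
    for idx, (x, y) in enumerate(points):
        col = min(int(x * grid[0] / width), grid[0] - 1)
        row = min(int(y * grid[1] / height), grid[1] - 1)
        cells.setdefault((row, col), []).append(idx)
    keep = []
    for members in cells.values():
        # Strongest responses first, then truncate to the per-cell limit.
        members.sort(key=lambda i: responses[i], reverse=True)
        keep.extend(members[:per_cell])
    return sorted(keep)
```

With such a filter, a dense cluster of key points (for example around a reflectance panel) contributes at most a few points, while isolated key points elsewhere are retained. As the results above suggest, this cannot help if too few key points are detected on the crop in the first place.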

Figure 4.

Shows the top-view image after alignment using a larger number of key points (top-left) and after improving the distribution of key points (bottom-left). The corresponding key-point matching between the green band and the red-edge (reference) band can be observed in the top-right and bottom-right positions, respectively.

With these learnings, the MX sensor was used to capture the side-view image of an indoor potted plant with a diverse background under bright indoor lighting. Figure 2 shows how the pot looks in the different bands, together with the key points detected in each band. The key points in the side-view image are spread across the image, with several of them on the plant and the pot itself. The edges and high-contrast features are spread across the side-view images, thus providing well-distributed features at prominent positions. The RANSAC matching also shows highly diverse matching lines connecting the well-distributed features, indicating how high-quality key points can influence the alignment process. The results of the alignment can be seen in Figure 3 (right column), which shows the TCC of the side-view layer-stacked image without alignment (top-right) and after alignment (bottom-right). The TCC of the unaligned image shows a significant shift between the bands. In contrast, the TCC of the aligned image shows a layer stack in which the bands are aligned to near perfection. This layer-stacked image can be used to perform an analysis of choice to extract crop health information. Figure 5 shows the normalised difference vegetation index (NDVI) image generated from the stacked image. The image is colour-coded from red to green, where red indicates very low NDVI and green indicates higher NDVI. The minimum and maximum values of NDVI for the plant are around 0.1 and 0.7 [33]. The leaf of the indoor plant has several pale green spots where the chlorophyll content is very low, while other areas are still bright green where the chlorophyll content is high. The NDVI image clearly shows a difference between the zones with lower chlorophyll content and the areas with high chlorophyll content in the leaves [34].
Wherever the chlorophyll content is low, the NDVI values are low and vice versa [35]. Since the NDVI reflects these variations in line with the plant chlorophyll content, it indicates that the alignment and layer stacking of the image worked well. Thus, the MX sensor can be used effectively for assessing crop health from a very close distance, provided some precautions are taken before capturing the images.
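
For reference, NDVI is computed per pixel as (NIR − Red) / (NIR + Red) [16]. A minimal numpy sketch is given below; the function name is hypothetical, and the inputs are assumed to be the aligned NIR and red bands taken from the layer stack as arrays of equal shape.

```python
import numpy as np


def ndvi(nir, red):
    """Per-pixel NDVI; pixels where NIR + Red is zero are returned as NaN."""
    nir = nir.astype(np.float64)
    red = red.astype(np.float64)
    total = nir + red
    out = np.full(total.shape, np.nan)
    np.divide(nir - red, total, out=out, where=total != 0)
    return out
```

As an illustration, a pixel with NIR reflectance 0.51 and red reflectance 0.09 gives an NDVI of 0.7, whereas 0.11 and 0.09 give 0.1. Since the index combines two bands pixel by pixel, any residual misalignment between the NIR and red bands shows up directly as artefacts along leaf edges.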

Figure 5.

Shows the NDVI image generated from the side-view aligned layer stack image.

Though this research was conducted with the Micasense RedEdge-MX sensor, it is worth considering how the approach would behave with other types of sensors in similar close-proximity imaging applications. Other multispectral sensors (such as the Parrot Sequoia and Sentera 6X) have a different set of spectral bands but can likewise be utilised for proximal imaging. In these instances, the alignment approach will need to be modified, as band count, optics, and camera configuration affect parallax and registration performance [12,13]. Hyperspectral sensors, on the other hand, typically employ pushbroom or whiskbroom scanning, which yields a complete spectral datacube instead of several separate images. This obviates band-to-band alignment, but poses other issues, such as motion artefacts, illumination variation, and the requirement for accurate calibration at acquisition. RGB cameras, while providing extremely high spatial resolution, do not capture key spectral bands (e.g., red-edge and NIR), and thus are not very effective in capturing early plant stresses [8,10]. Multispectral sensors, therefore, find a middle ground between spectral richness, cost, and manageable data volumes [7,11,15]. An initial examination of sensor specifications indicates that proximal imaging is possible across platforms, although the processing pipeline would need to be adapted to the sensor design and acquisition geometry. Table 1 compares various sensors and indicates how the current approach can be applied to them.

Table 1.

Comparison of imaging sensors, ranging from RGB to Hyperspectral, to evaluate the feasibility of the current approach.

5. Conclusions

The study shows that UAV-mounted multispectral sensors can be used effectively for close-range crop imaging and health assessment even though their rigs contain multiple cameras. The work demonstrated how computer vision techniques can be used to process close-up MX images of potted plants taken from top- and side-view angles at a distance of 1.5 m, and discussed the challenges encountered during the process. Robust computer vision techniques, namely ORB feature detection and RANSAC-based alignment, were used to address these challenges and generate a well-aligned layer-stacked image that can be used for further image analysis. While utilising these alignment techniques, it is essential to make sure that the crop has good contrast in all the bands against the background, which can be either homogeneous or non-homogeneous. Further, the image should contain some markers with sharp edges and proper contrast. These steps will ensure that the key points are numerous and well distributed across the image, including on the crop itself. Finally, the crop must always be in the centre of the FOV, which allows for better alignment. When these steps are followed, the MX sensor can be used effectively to study crops from close-up images.

Author Contributions

Conceptualization, T.T.K.; methodology, T.T.K.; software, R.N.S.; validation, T.T.K. and R.R.; formal analysis, T.T.K. and R.R.; investigation, T.T.K.; resources, T.T.K., R.G.R. and A.B.; data curation, R.G.R., A.B., D.V.S.C.R. and S.R.; writing—original draft preparation, T.T.K.; writing—review and editing, T.T.K. and R.N.S.; visualization, T.T.K.; supervision, R.N.S.; project administration, R.N.S.; funding acquisition, R.N.S. All authors have read and agreed to the published version of the manuscript.

Funding

The results summarized in the manuscript were achieved as a part of the research project “Network Program on Precision Agriculture (NePPA)”, which is funded by the Indian Council of Agricultural Research (ICAR), India, and is hereby duly acknowledged.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, C.; Kovacs, J.M. The Application of Small Unmanned Aerial Systems for Precision Agriculture: A Review. Precis. Agric. 2012, 13, 693–712.

- Rejith, R.G.; Sahoo, R.N.; Ranjan, R.; Kondraju, T.; Bhandari, A.; Gakhar, S. Estimating Leaf Area Index of Wheat Using UAV-Hyperspectral Remote Sensing and Machine Learning. Biol. Life Sci. Forum 2025, 41, 11.

- Rejith, R.G.; Sahoo, R.N.; Verrelst, J.; Ranjan, R.; Gakhar, S.; Anand, A.; Kondraju, T.; Kumar, S.; Kumar, M.; Dhandapani, R. UAV-Based Retrieval of Wheat Canopy Chlorophyll Content Using a Hybrid Machine Learning Approach. In Proceedings of the 2023 IEEE India Geoscience and Remote Sensing Symposium (InGARSS), Bangalore, India, 10–13 December 2023; pp. 1–4.

- Sahoo, R.N.; Gakhar, S.; Rejith, R.G.; Verrelst, J.; Ranjan, R.; Kondraju, T.; Meena, M.C.; Mukherjee, J.; Daas, A.; Kumar, S.; et al. Optimizing the Retrieval of Wheat Crop Traits from UAV-Borne Hyperspectral Image with Radiative Transfer Modelling Using Gaussian Process Regression. Remote Sens. 2023, 15, 5496.

- Sahoo, R.N.; Rejith, R.G.; Gakhar, S.; Verrelst, J.; Ranjan, R.; Kondraju, T.; Meena, M.C.; Mukherjee, J.; Dass, A.; Kumar, S.; et al. Estimation of Wheat Biophysical Variables through UAV Hyperspectral Remote Sensing Using Machine Learning and Radiative Transfer Models. Comput. Electron. Agric. 2024, 221, 108942.

- Sahoo, R.N.; Rejith, R.G.; Gakhar, S.; Ranjan, R.; Meena, M.C.; Dey, A.; Mukherjee, J.; Dhakar, R.; Meena, A.; Daas, A.; et al. Drone Remote Sensing of Wheat N Using Hyperspectral Sensor and Machine Learning. Precis. Agric. 2024, 25, 704–728.

- Hunt, E.R.; Daughtry, C.S.T. What Good Are Unmanned Aircraft Systems for Agricultural Remote Sensing and Precision Agriculture? Int. J. Remote Sens. 2018, 39, 5345–5376.

- Feng, Q.; Liu, J.; Gong, J. UAV Remote Sensing for Urban Vegetation Mapping Using Random Forest and Texture Analysis. Remote Sens. 2015, 7, 1074–1094.

- Aasen, H.; Bolten, A. Multi-Temporal High-Resolution Imaging Spectroscopy with Hyperspectral 2D Imagers – From Theory to Application. Remote Sens. Environ. 2018, 205, 374–389.

- Feng, H.; Tao, H.; Li, Z.; Yang, G.; Zhao, C. Comparison of UAV RGB Imagery and Hyperspectral Remote-Sensing Data for Monitoring Winter Wheat Growth. Remote Sens. 2022, 14, 4158.

- Mulla, D.J. Twenty Five Years of Remote Sensing in Precision Agriculture: Key Advances and Remaining Knowledge Gaps. Biosyst. Eng. 2013, 114, 358–371.

- Gómez-Candón, D.; Virlet, N.; Labbé, S.; Jolivot, A.; Regnard, J.-L. Field Phenotyping of Water Stress at Tree Scale by UAV-Sensed Imagery: New Insights for Thermal Acquisition and Calibration. Precis. Agric. 2016, 17, 786–800.

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, Sensors, and Data Processing in Agroforestry: A Review towards Practical Applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

- Turner, D.; Lucieer, A.; Watson, C. An Automated Technique for Generating Georectified Mosaics from Ultra-High Resolution Unmanned Aerial Vehicle (UAV) Imagery, Based on Structure from Motion (SfM) Point Clouds. Remote Sens. 2012, 4, 1392–1410.

- Mahlein, A.-K. Plant Disease Detection by Imaging Sensors–Parallels and Specific Demands for Precision Agriculture and Plant Phenotyping. Plant Dis. 2016, 100, 241–251.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the Third ERTS-1 Symposium; NASA SP-351; NASA: Washington, DC, USA, 1974; pp. 309–317.

- Sankaran, S.; Khot, L.R.; Espinoza, C.Z.; Jarolmasjed, S.; Sathuvalli, V.R.; Vandemark, G.J.; Miklas, P.N.; Carter, A.H.; Pumphrey, M.O.; Knowles, N.R. Low-Altitude, High-Resolution Aerial Imaging Systems for Row and Field Crop Phenotyping: A Review. Eur. J. Agron. 2015, 70, 112–123.

- Araus, J.L.; Cairns, J.E. Field High-Throughput Phenotyping: The New Crop Breeding Frontier. Trends Plant Sci. 2014, 19, 52–61.

- Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164.

- Li, L.; Zhang, Q.; Huang, D. A Review of Imaging Techniques for Plant Phenotyping. Sensors 2014, 14, 20078–20111.

- Holman, F.H.; Riche, A.B.; Michalski, A.; Castle, M.; Wooster, M.J.; Hawkesford, M.J. High Throughput Field Phenotyping of Wheat Plant Height and Growth Rate in Field Plot Trials Using UAV Based Remote Sensing. Remote Sens. 2016, 8, 1031.

- Li, Y.; Guo, S.; Jia, S.; Yan, Y.; Jia, H.; Zhang, W. Quantifying the Effects of UAV Flight Altitude on the Multispectral Monitoring Accuracy of Soil Moisture and Maize Phenotypic Parameters. Agronomy 2025, 15, 2137.

- Colomina, I.; Molina, P. Unmanned Aerial Systems for Photogrammetry and Remote Sensing: A Review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

- Tsai, C.-H.; Lin, Y.-C. An Accelerated Image Matching Technique for UAV Orthoimage Registration. ISPRS J. Photogramm. Remote Sens. 2017, 128, 130–145.

- Karami, E.; Prasad, S.; Shehata, M. Image Matching Using SIFT, SURF, BRIEF and ORB: Performance Comparison for Distorted Images. In Proceedings of the 2017 IEEE 30th Canadian Conference on Electrical and Computer Engineering (CCECE), Windsor, ON, Canada, 30 April–3 May 2017; pp. 1–6.

- Arya, K.V.; Gupta, P.; Kalra, P.K.; Mitra, P. Image Registration Using Robust M-Estimators. Pattern Recognit. Lett. 2007, 28, 1957–1968.

- Ngo Thanh, T.; Nagahara, H.; Sagawa, R.; Mukaigawa, Y.; Yachida, M.; Yagi, Y. An Adaptive-Scale Robust Estimator for Motion Estimation. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 2455–2460.

- Liu, C.; Xu, J.; Wang, F. A Review of Keypoints’ Detection and Feature Description in Image Registration. Math. Probl. Eng. 2021, 2021, 8509164.

- Guan, S.; Fukami, K.; Matsunaka, H.; Okami, M.; Tanaka, R.; Nakano, H.; Sakai, T.; Nakano, K.; Ohdan, H.; Takahashi, K. Assessing Correlation of High-Resolution NDVI with Fertilizer Application Level and Yield of Rice and Wheat Crops Using Small UAVs. Remote Sens. 2019, 11, 112.

- Jain, K.; Pandey, A. Calibration of Satellite Imagery with Multispectral UAV Imagery. J. Indian Soc. Remote Sens. 2021, 49, 479–490.

- Gallardo-Salazar, J.L.; Rosas-Chavoya, M.; Pompa-García, M.; López-Serrano, P.M.; García-Montiel, E.; Meléndez-Soto, A. Multi-Temporal NDVI Analysis Using UAV Images of Tree Crowns in a Northern Mexican Pine-Oak Forest. J. For. Res. 2023, 34, 1855–1867.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).