Abstract

Drones equipped with onboard cameras offer promising potential for modern digital media and remote sensing applications. However, effectively tracking moving objects in real time remains a significant challenge. Aerial footage captured by drones often includes complex scenes with dynamic elements such as people, vehicles, and animals. These scenarios may involve large-scale changes in viewing angles, occlusions, and multiple object crossings occurring simultaneously, all of which complicate accurate object detection and tracking. This paper presents an autonomous tracking system that leverages the YOLOv8 algorithm combined with a re-detection mechanism, enabling a quadrotor to effectively detect and track moving objects using only an onboard camera. To regulate the drone’s motion, a PID controller is employed, operating based on the target’s position within the image frame. The proposed system functions independently of external infrastructure such as motion capture systems or GPS. By integrating both positional and appearance-based cues, the system demonstrates high robustness, particularly in challenging environments involving complex scenes and target occlusions. The performance of the optimized controllers was assessed through extensive real-world testing involving various trajectory scenarios to evaluate the system’s effectiveness. Results confirmed consistent and accurate detection and tracking of moving objects across all test cases. Furthermore, the system exhibited robustness against noise, light reflections, and illumination interference, ensuring stable object tracking even when implemented on low-cost computing platforms.

1. Introduction

Autonomous drones, often called commercial micro aerial vehicles (MAVs), have long been integral to robotics and are widely deployed for surveying, surveillance, search-and-rescue missions, military operations, and even package delivery. Yet achieving fully autonomous quadrotor navigation, including stable flight, reliable tracking, and robust obstacle avoidance, remains a difficult challenge.

In recent years, unmanned aerial vehicles (UAVs) have seen growing adoption across many applications, driven by their low operating costs and strong performance. Cameras, in particular, have emerged as a practical, low-cost sensing option that enables the estimation of rich, information-dense representations of the environment [1]. In computer vision, visual tracking focuses on automatically estimating an object’s bounding box in each subsequent frame [2]. Despite strong progress, many high-performing visual tracking methods remain too computationally heavy for real-time use on UAVs, where long-term tracking must robustly handle fast motion, scale changes, heavy occlusion, and out-of-view events while operating within strict onboard processing limits [3]. Many UAV studies have shown substantial progress toward systems that operate without human intervention. Consistent with human- and society-centered notions of autonomy, technical systems claiming autonomy must be able to make decisions and respond to events independently of direct human control [4]. Our system targets GPS-denied environments (e.g., tunnels and basements) and avoids external infrastructure such as motion capture or GPS, relying instead on image-based detection and tracking both to follow the target and to explore vision-driven control of UAVs [5]. By employing detection and tracking techniques, drones can capture target imagery via onboard cameras and conduct real-time analysis to accurately determine the target’s position and orientation [6]. Deep learning has driven significant progress in detection methods over the past few years. R-CNN [7] applies high-capacity CNNs to bottom-up region proposals for object localization and segmentation. SPP-net [8] introduces spatial pyramid pooling to remove the fixed-size input constraint. Building on both, Faster R-CNN [9] unifies proposal generation and detection, yielding faster training and inference as well as improved accuracy.
Many detectors, most notably SSD [10], tend to underperform at small-object detection. The YOLO family (YOLO [11], YOLOv2 [12], YOLOv3 [13], and later variants such as YOLOv4 [14]–YOLOv8 [15]) is a prominent line of one-stage detectors. YOLOv8 achieves state-of-the-art accuracy and speed, building on prior YOLO advances to suit a wide range of object detection tasks. For our drone, we adopt a streamlined YOLOv8 variant to cut computational load, enabling deployment on severely resource-constrained onboard hardware. In recent years, numerous studies have advanced quadrotor guidance and tracking. Still, designing a robust flight system remains difficult due to the platform’s strongly nonlinear, coupled dynamics. To improve performance on resource-constrained airframes, researchers have introduced efficient, real-time tracking techniques specifically tailored to the limited onboard computational capacity of UAVs [16,17]. Bertinetto et al. [18] proposed an end-to-end trainable, fully convolutional Siamese network for visual tracking. In [19], a quadcopter vision system was developed to track a ground-based moving target, with switching controllers used to maintain flight stability. Li et al. [20] introduced a tracker built on a Siamese Region Proposal Network, which was trained offline on large-scale image pairs. A ResNet-based Siamese tracker is presented in [21]. The work in [22] achieves real-time detection and tracking of moving objects (DATMO) on a quadrotor with a single camera: inter-frame UAV motion is estimated to synthesize an artificial optical flow, which is contrasted with the real optical flow to isolate and cluster dynamic pixels into moving objects; these objects are then tracked over time to suppress noise. Its acknowledged limitations include dependence on accurate motion estimation and on good texture and lighting, sensitivity to parallax, vibration, rolling-shutter effects, fast maneuvers, and occlusions, and the tight limits of onboard computing.
DB-Tracker is a detection-based multi-object drone video tracker that combines RFS-based position modeling (Box-MeMBer) with hierarchical OSNet appearance features and a joint position–appearance cost matrix, achieving robust results in complex, occluded scenes [23].

In [6], the authors combine a YOLOv4 detector, a SiamMask tracker, and PID attitude control to deliver efficient, real-time detection and stable target tracking on resource-constrained drones indoors and outdoors, experimentally validating systematic model pruning for practical control.

This work uses a UAV with a single monocular RGB camera to develop an accurate autonomous tracking system for indoor environments. The approach pairs a YOLOv8 object detector with a Kalman filter (KF)-based tracker to enable real-time object tracking, and the study focuses on detecting a ball as the target object. Our system comprises three core modules: (1) object detection, (2) target tracking, and (3) Proportional–Integral–Derivative (PID) control. We implement and evaluate it on a DJI Tello drone for end-to-end detection and tracking.

2. Materials and Methods

The UAV used in this study is a DJI Tello, as shown in Figure 1a (https://www.ryzerobotics.com/tello, accessed on 12 June 2025). Figure 1b depicts the ball used as the target in our system. The ball moves along a predefined trajectory, and the UAV’s task is to autonomously detect and continuously track it.

Figure 1.

UAV and ball object. (a) UAV with camera; (b) spherical object (ball).

The scenario involves a UAV and a single target (a ball) captured by the onboard camera and followed along a predefined path. A laptop computer is connected to the Tello drone via Wi-Fi for communication. For this study, the onboard camera is used to detect and track the target (ball) in real time. The sensor captures 5 MP still images and streams 720p video; with electronic image stabilization (EIS), the captured images and streamed video remain stable even under airframe vibrations [24].

2.1. Target Detection

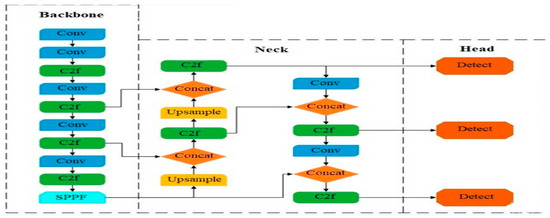

To track the target with a UAV, we first use YOLOv8 to detect it and produce a 2D image-plane bounding box, which then serves as the measurement for state estimation. The YOLOv8 architecture is shown in Figure 2 [25]. Before inference, all input images, regardless of original size, are resized to 640 × 480. We selected YOLOv8 because it is widely regarded as a state-of-the-art model, offering high mean average precision (mAP) and fast inference (low latency) on the COCO dataset [26].
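As a rough illustration of how a detector output can become the measurement passed to the tracker, the sketch below picks the most confident detection in a frame and returns the center of its bounding box. The tuple layout `(x1, y1, x2, y2, confidence)`, the function name `best_center`, and the 0.5 confidence threshold are assumptions made for this example; they are not details reported in the paper.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical detection layout: (x1, y1, x2, y2, confidence), in pixels.
Detection = Tuple[float, float, float, float, float]


def best_center(
    detections: Sequence[Detection], min_conf: float = 0.5
) -> Optional[Tuple[float, float]]:
    """Return the center of the most confident box, or None.

    min_conf is an assumed threshold, not a value from the paper.
    """
    candidates = [d for d in detections if d[4] >= min_conf]
    if not candidates:
        return None  # no usable detection in this frame
    x1, y1, x2, y2, _ = max(candidates, key=lambda d: d[4])
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)
```

Returning `None` when nothing passes the threshold lets the caller fall back on a predicted position instead of a stale measurement.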

Figure 2.

YOLOv8 architecture.

The performance and speed of various YOLO versions are compared in Table 1. The data illustrates a clear trade-off between speed and accuracy, with YOLOv8n being one of the fastest real-time detectors available, delivering impressive accuracy for its size. In contrast, the larger YOLOv8x model achieves 16 mAP accuracy, demonstrating a powerful option for tasks where precision is paramount, without sacrificing real-time capability [27].

Table 1.

A comparative analysis of YOLO model performance.

2.2. Target Tracking

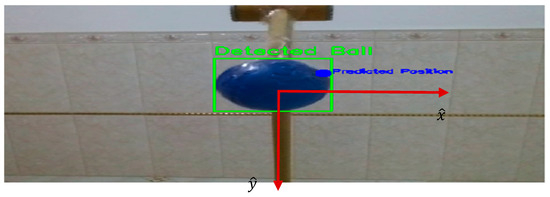

The algorithm uses a single onboard camera to estimate the target’s position in the environment and track it along its trajectory. Using a Kalman filter, we estimate the ball’s image-plane center $(x_b, y_b)$ (Figure 3) and guide the UAV for precise tracking; the filter also predicts the target’s next position, improving robustness during brief detection dropouts.
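The predict and update cycle described above can be sketched with a small constant-velocity Kalman filter for one image axis. This is a minimal sketch under stated assumptions, not the filter used in the paper: the constant-velocity motion model, the class name `AxisKalman`, the initial covariance, and the noise variances `q` and `r` are all hypothetical choices for illustration.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis (pixels)."""

    def __init__(self, pos: float, q: float = 1.0, r: float = 4.0) -> None:
        self.pos, self.vel = pos, 0.0        # state: position, velocity
        self.p00, self.p01 = 100.0, 0.0      # state covariance P (assumed)
        self.p10, self.p11 = 0.0, 100.0
        self.q, self.r = q, r                # assumed noise variances

    def predict(self, dt: float = 1.0) -> float:
        """Propagate the state by dt and return the predicted position."""
        self.pos += self.vel * dt
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = diag(q, q)
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11 + self.q
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos

    def update(self, z: float) -> float:
        """Correct the state with a measured position z."""
        s = self.p00 + self.r                # innovation variance
        k0, k1 = self.p00 / s, self.p10 / s  # Kalman gain
        y = z - self.pos                     # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        # P = (I - K H) P with H = [1, 0]
        p00 = (1 - k0) * self.p00
        p01 = (1 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos
```

One instance per axis tracks the horizontal and vertical coordinates of the ball center. On a frame with no detection, calling `predict` without `update` carries the estimate forward using the last velocity, which is the dropout behavior the text refers to.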

Figure 3.

Ball definition in the image coordinate.

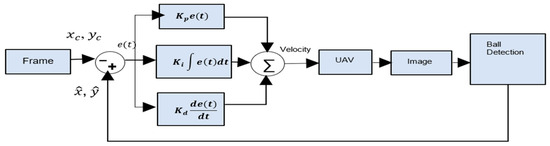

3. Control Method

This section describes the Proportional–Integral–Derivative (PID) controller used to steer the drone, enabling smooth and safe tracking of the ball. Leveraging state estimates from the Kalman filter, the UAV autonomously adjusts its motion to follow the ball’s trajectory. Figure 4 provides an overview of the control architecture.

Figure 4.

PID control system for the UAV.

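From the camera stream, the Kalman filter provides the ball’s estimated image-plane center $(x_b, y_b)$; the tracking errors relative to the image center $(x_c, y_c)$ are

$$e_x = x_b - x_c, \qquad e_y = y_b - y_c,$$

which feed the PID law, applied to each error component $e \in \{e_x, e_y\}$,

$$u(t) = K_p\, e(t) + K_i \int_0^t e(\tau)\, d\tau + K_d\, \frac{de(t)}{dt},$$

where $K_p$, $K_i$, and $K_d$ are the proportional, integral, and derivative coefficients of the PID controller, respectively.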
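A discrete-time version of such a PID law might look like the sketch below. It is an illustration only: the class name `PID`, the rectangular integration, the backward-difference derivative, and the symmetric saturation are assumptions, and the default limit of 100 is chosen to match the range commonly documented for the Tello SDK `rc` command rather than taken from the paper.

```python
from typing import Optional


class PID:
    """Discrete PID controller with output saturation."""

    def __init__(self, kp: float, ki: float, kd: float, limit: float = 100.0) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.limit = limit                         # assumed symmetric command limit
        self.integral = 0.0
        self.prev_error: Optional[float] = None    # no derivative on first sample

    def step(self, error: float, dt: float) -> float:
        """Return the saturated command for the current error sample."""
        self.integral += error * dt                # rectangular integration
        derivative = 0.0
        if self.prev_error is not None:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.limit, min(self.limit, u))
```

In a tracking loop, one controller per error component would be stepped once per frame with the pixel error and the frame interval, and its output sent as the corresponding velocity command.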

4. Experimental Results

This section describes a vision-based guidance method integrated with a PID controller for visual detection and tracking. We evaluate the system on a DJI Tello drone connected to a laptop with a single NVIDIA GeForce GTX 1650 GPU to assess robustness and reliability; the experiments demonstrate stable, successful tracking.

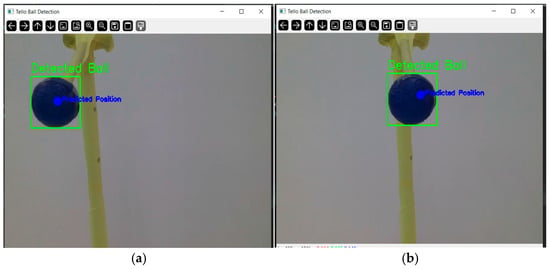

After detection, the UAV aligns with the ball to keep it at the image center and flies toward it. Figure 5 illustrates this process: in Figure 5a, the ball appears in the upper-left corner of the camera view; in Figure 5b, after tracking, the ball is centered in the frame.

Figure 5.

Autonomous ball detection and tracking. (a) ball positioned in the upper-left; (b) ball centered in the frame.

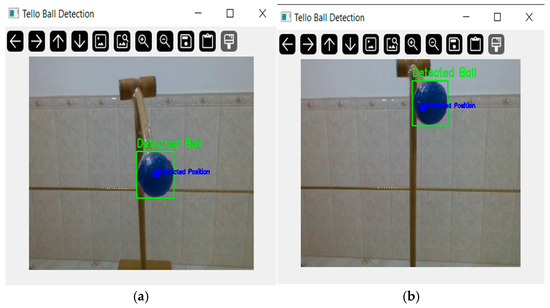

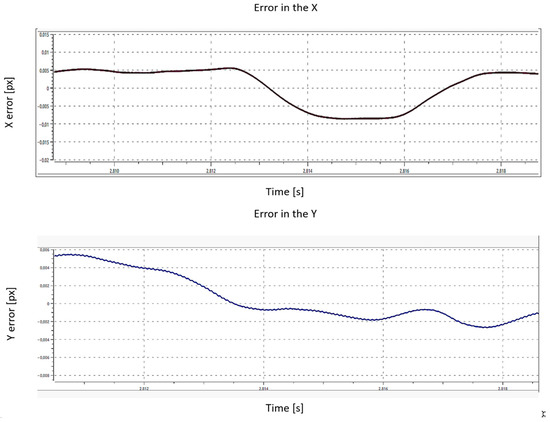

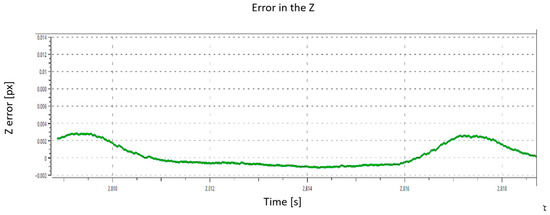

Figure 6 evaluates vertical tracking by moving the ball up and down, showing that the drone can follow these changes. In Figure 6a the ball appears low; after it shifts upward (Figure 6b), the PID controller drives the drone up to track and recenter the target. Figure 7 shows the response to each maneuver. When a change occurs, the tracking error briefly increases; then the controller quickly reduces it and returns the system to a stable state. These results indicate that the designed control system corrects disturbances effectively.
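One way to quantify the recovery visible in an error trace of this kind is a settling time: the earliest instant after which the error magnitude stays inside a tolerance band. The helper below is a hypothetical post-processing sketch; the function name `settling_time`, the band width, and the sample values in the usage note are illustrative and are not measurements from the experiments.

```python
from typing import Optional, Sequence


def settling_time(
    times: Sequence[float], errors: Sequence[float], band: float
) -> Optional[float]:
    """Earliest time after which |error| stays within +/- band.

    Returns None if the trace ends outside the band.
    """
    settled_at: Optional[float] = None
    for t, e in zip(times, errors):
        if abs(e) > band:
            settled_at = None      # left the band: reset
        elif settled_at is None:
            settled_at = t         # entered the band
    return settled_at
```

For a made-up trace with errors `[0, 80, 40, 15, 5, -3]` pixels sampled at `t = 0..5` and a 10-pixel band, the function returns 4, the time at which the error re-enters the band and stays there.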

Figure 6.

Up/down tracking of the ball’s movement with the UAV.

Figure 7.

Error in X, Y, and Z over time.

Our method is designed to balance accuracy with real-time speed. We chose the large YOLOv8x model for the computationally intensive task of initial object detection, so that the blue ball is located with high precision. For the subsequent frame-to-frame tracking, we selected a Kalman filter, which is lightweight and well suited to speed-critical tasks. Paired with the DJI Tello’s camera, this hybrid system forms an effective tracker that demonstrates high-performance capabilities on a low-cost drone.

5. Conclusions

This paper details the design and implementation of a UAV-mounted, vision-based system for autonomous air-target tracking. We use YOLOv8 to detect the moving ball in real time, even when it occupies only a few pixels. Once the target ball is identified, a Kalman filter-based tracking algorithm is used for visual tracking. We further incorporate a PID module to compute tracking errors and adjust the drone’s attitude. PID is a classic feedback controller that derives commands from the proportional (current error), integral (accumulated error), and derivative (rate of error change) terms to drive the system output toward the desired setpoint. The PID module uses the ball’s location relative to the image center and the drone’s state to compute errors and update attitude control for steady target tracking. Overall, flight tests validate the system’s effectiveness and robustness: YOLOv8 delivers fast, real-time detection, the Kalman filter maintains accurate ball tracking, and the PID controller computes attitude corrections that keep the UAV stably locked on the target.

Author Contributions

Conceptualization, O.G., M.M.T. and M.B.; methodology, O.G. and M.B.; software, O.G., I.B.G. and A.H.D.; validation, O.G. and M.B.; investigation, I.B.G. and M.M.T.; writing—original draft preparation, O.G. and N.A.; writing—review and editing, O.G. and I.B.G.; visualization, O.G., M.B., M.M.T., I.B.G., N.A. and A.H.D.; supervision, M.M.T. and M.B.; project administration, M.M.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Moali, O.; Mezghani, D.; Mami, A.; Oussar, A.; Nemra, A. UAV trajectory tracking using proportional-integral-derivative-type-2 fuzzy logic controller with genetic algorithm parameter tuning. Sensors 2024, 24, 6678.

- Kang, H.C.; Han, H.N.; Bae, H.C.; Kim, M.G.; Son, J.Y.; Kim, Y.K. HSV color-space-based automated object localization for robot grasping without prior knowledge. Appl. Sci. 2021, 11, 7593.

- Hirano, S.; Uchiyama, K.; Masuda, K. Controller Design Using Backstepping Algorithm for Fixed-Wing UAV with Thrust Vectoring System. Adv. Sci. Technol. Eng. Syst. J. 2020, 5, 284–290.

- Cheng, H.; Lin, L.; Zheng, Z.; Guan, Y.; Liu, Z. An autonomous vision-based target tracking system for rotorcraft unmanned aerial vehicles. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1732–1738.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned aerial vehicles (UAVs): A survey on civil applications and key research challenges. IEEE Access 2019, 7, 48572–48634.

- Gharsa, O.; Touba, M.M.; Boumehraz, M.; Abderrahman, N.; Bellili, S.; Titaouine, A. Autonomous landing system for a quadrotor using a vision-based approach. In Proceedings of the 2024 8th International Conference on Image and Signal Processing and Their Applications (ISPA), Biskra, Algeria, 21–22 April 2024; pp. 1–5.

- Yang, S.Y.; Cheng, H.Y.; Yu, C.C. Real-time object detection and tracking for unmanned aerial vehicles based on convolutional neural networks. Electronics 2023, 12, 4928.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1–14.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Saavedra-Ruiz, M.; Pinto-Vargas, A.M.; Romero-Cano, V. Monocular visual autonomous landing system for quadcopter drones using software in the loop. IEEE Aerosp. Electron. Syst. Mag. 2021, 37, 2–16.

- Lee, D.; Park, W.; Nam, W. Autonomous landing of micro unmanned aerial vehicles with landing-assistive platform and robust spherical object detection. Appl. Sci. 2021, 11, 8555.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2016; pp. 850–865.

- Gomez-Balderas, J.E.; Flores, G.; García Carrillo, L.R.; Lozano, R. Tracking a ground moving target with a quadrotor using switching control: Nonlinear modeling and control. J. Intell. Robot. Syst. 2013, 70, 65–78.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High performance visual tracking with siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291.

- Rodríguez-Canosa, G.R.; Thomas, S.; Del Cerro, J.; Barrientos, A.; MacDonald, B. A real-time method to detect and track moving objects (DATMO) from unmanned aerial vehicles (UAVs) using a single camera. Remote Sens. 2012, 4, 1090–1111.

- Yuan, Y.; Wu, Y.; Zhao, L.; Chen, J.; Zhao, Q. DB-Tracker: Multi-Object Tracking for Drone Aerial Video Based on Box-MeMBer and MB-OSNet. Drones 2023, 7, 607.

- Iskandar, M.; Bingi, K.; Prusty, B.R.; Omar, M.; Ibrahim, R. Artificial intelligence-based human gesture tracking control techniques of Tello EDU Quadrotor Drone. In IET Conference Proceedings CP837; The Institution of Engineering and Technology: Stevenage, UK, 2023; Volume 2023, pp. 123–128.

- Elhanashi, A.; Dini, P.; Saponara, S.; Zheng, Q. TeleStroke: Real-time stroke detection with federated learning and YOLOv8 on edge devices. J. Real-Time Image Process. 2024, 21, 121.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-time flying object detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).