1. Introduction

In recent decades, humanity has had to contend with global population growth and urbanization processes. At present, almost 75% of the European population lives in cities, and this ratio is expected to rise to approximately 84% by 2050. These processes may pose new challenges for sustainable urban growth. Smart urban development strategies focus on technological and social solutions aimed at improving the quality of urban life, reducing environmental impact, and increasing transport safety [1]. Intelligent mobility solutions could be key elements in these efforts, as they have the potential to reduce the number of accidents caused by human factors, manage traffic congestion, and contribute to reducing transport-related environmental impacts [2]. Based on research results, the majority of road accidents are attributable to human error. The most prominent sources of danger include speeding, inattention, drunk driving, and failure to use passive safety devices. The Vision Zero principle aims to create a transport system that can tolerate human error without leading to serious or fatal consequences. In this systematic approach, injury prevention is ensured through the use of infrastructure and vehicle technology [3].

Advanced driver assistance systems (ADASs) operate on the basis of data collected by various sensors integrated in vehicles [4]. One study investigated the road safety effects of ADAS technologies in different driving contexts, based on accident statistics from the United Kingdom. The research analyzed different driving environments, including urban, rural, and highway road types, as well as clear, rainy, foggy, snowy, and stormy weather conditions, and daytime and nighttime lighting conditions. Based on the results of the analysis, the widespread use of ADAS across the entire vehicle fleet could reduce the number of road accidents by 23.8% annually [5].

The introduction of autonomous vehicles into the transport system could have an impact on further improving road safety. These vehicles eliminate the risks of human errors and can potentially reduce the number of fatal and serious accidents. Their safe operation is closely related to the reliability of sensors, the accuracy of environmental perception, and the decision-making capabilities of algorithms. The introduction of these vehicles will require an interdisciplinary approach that takes into account the characteristics of all components of the system, including people, machines, and infrastructure [6]. The fundamental task of autonomous vehicles is to continuously detect and interpret their environment. They use sensors and algorithms that recognize road obstacles, other road users, vehicles, pedestrians, traffic signs, traffic lights, and road markings. Based on the collected information, the vehicle is required to make fast and reliable decisions that ensure smooth travel in a dynamic traffic environment [7].

LiDAR sensors provide high-precision, high-resolution 3D mapping, which helps vehicles detect objects and measure distances. They are capable of detecting static and moving objects in detail, even at long distances. However, unfavorable weather conditions such as rain, fog, or snow can significantly reduce LiDAR sensitivity, as the light beams can be absorbed or distorted. Radar sensors are similarly suited to detecting moving objects and accurately measuring distances and velocities. The technology is particularly advantageous in fog, rain, or darkness. However, radar cannot provide as high-resolution information as LiDAR, making it less suitable for detailed object recognition. Cameras provide visual information for recognizing traffic signs, lane markings, signal lights, and pedestrians. The images enable the identification of the color, shape, and texture of objects, which serve as the basis for machine vision algorithms. However, these sensors are sensitive to lighting changes, such as low light conditions, nighttime conditions, or strong sunlight. Sensor fusion is responsible for the coordinated operation of the aforementioned sensors [8].

Image processing is used to improve image quality, extract information, and support automated decision-making in autonomous vehicle systems. During preprocessing, image data is prepared, which includes noise reduction, contrast enhancement, and correction of geometric distortions to improve the visibility of relevant information. Object segmentation involves dividing images into regions to separate individual objects [9].

Dursun et al. focused on the real-time recognition of traffic signs and traffic lights using the YOLOv3 (You Only Look Once) algorithm. A unique dataset consisting of images of traffic sign models under various lighting conditions was created. The system was trained based on these images. The system became capable of providing reliable recognition in real-time environments, which is necessary to support the safe operation of autonomous systems [10]. Priya et al. presented a real-time, integrated system that detected and tracked vehicles, traffic signs, and signals using the YOLOv8 algorithm. YOLOv8 was able to perform detection and classification in a single step during image processing. This ensured high speed and accuracy. The system was complemented by a convolutional neural network-based classification. The method was trained on open-source datasets, and its performance was evaluated using precision, F1 score, and mAP metrics, confirming its effectiveness in various traffic situations [11]. Barrozo and Lazcano presented a simulated control system for an autonomous vehicle designed for off-road environments, where navigation is based solely on visual information, specifically road surface recognition. All decisions are made based on the ratio of road pixels within the image field. The results showed that the approach could be more effective than edge detection-based strategies, especially in low-texture environments [12]. A distinctive contribution of this study is the combined evaluation of object detection performance across multiple object classes and environmental conditions, such as varying weather and lighting scenarios.

Most previous studies focused either on evaluating the effect of a single environmental condition across multiple object classes [13,14] or on the detection performance of a specific class under varying conditions [15,16]. This research integrates both aspects into a unified experimental setup. This approach enables a more realistic and comprehensive understanding of perception challenges in autonomous driving. The resulting framework offers a valuable baseline for developing and benchmarking detection systems under diverse and complex traffic situations.

2. Materials and Methods

The development of environmental perception systems for autonomous vehicles requires a realistic, flexibly customizable simulation platform that supports sensor integration and the modeling of complex traffic situations. Three well-known tools were examined: CARLA, LGSVL, and AirSim. CARLA and LGSVL offer detailed, real-time sensor modeling and urban environments, while AirSim is primarily designed for drone simulation, with more limited on-road vehicle simulation capabilities. Based on a comparison of functionality, flexibility, and realism, CARLA proved to be the most suitable for testing object tracking systems for autonomous vehicles [17,18,19]. CARLA (Car Learning to Act) is an open-source simulation environment designed specifically for autonomous vehicle research and development. It is built on the Unreal Engine graphics engine, enabling detailed modeling of realistic urban environments and traffic situations. The platform supports real-time data generation from camera, LiDAR, radar, and GPS sensors and their joint operation, enabling the testing of sensor fusion algorithms under various environmental conditions such as rain, fog, daytime, or nighttime lighting. CARLA provides full control over vehicle movements, traffic scenarios, pedestrian behavior, and weather conditions. The platform is suitable for creating complex and interactive simulation scenarios. The movement of all dynamic and static actors can be controlled, facilitating the testing of decision-making algorithms for autonomous systems [17].

The simulation data were generated using CARLA version 0.9.14, which allows data from various sensors to be recorded. The maps used, Town02 and Town05, are available on the official CARLA website and provide a realistic and detailed urban structure. To ensure realistic traffic scenarios, the generated traffic included not only autonomous vehicles but also simulation-controlled vehicles and pedestrians as uncontrolled actors. During the tests, the current speed, position, and timestamp were saved for each frame. During annotation, YOLO-format .txt files were automatically generated for the images, containing the object class and normalized bounding box coordinates. The classes examined were car, motorcycle, bicycle, traffic light, and traffic sign, together with background, for which no label was created but which was taken into account during the evaluation. During the tests, YOLOv7, a single-stage object detection network, was used; it processes the entire input image at once, enabling fast and efficient recognition. The network consists of three main components within a single feed-forward convolutional architecture. The backbone network extracts visual features such as edges, textures, and shapes from the input image using various convolution and pooling layers. The neck network is a multi-level feature processing stage that facilitates the detection of objects of different sizes. The head network is the final component, which divides the image into a grid and examines the probability of the object’s presence in each cell. Object recognition is not simply the selection of pixels, but a statistical decision about the content of image segments [20,21].
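The YOLO label format mentioned above stores one line per object: a class index followed by the box center, width, and height, each normalized by the image size. The following is a minimal sketch of that conversion, not the annotation script used in the study. The class-to-index mapping, the function name `to_yolo_line`, and the example image size are assumptions made for illustration.

```python
from typing import Tuple

# Assumed class order; the text lists the classes but not their indices.
CLASS_IDS = {
    "car": 0,
    "motorcycle": 1,
    "bicycle": 2,
    "traffic light": 3,
    "traffic sign": 4,
}


def to_yolo_line(
    class_name: str,
    box_px: Tuple[float, float, float, float],
    image_size: Tuple[int, int],
) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    image_size is (width, height) in pixels.
    """
    x_min, y_min, x_max, y_max = box_px
    width, height = image_size
    # Normalized box center
    x_center = (x_min + x_max) / 2.0 / width
    y_center = (y_min + y_max) / 2.0 / height
    # Normalized box size
    box_w = (x_max - x_min) / width
    box_h = (y_max - y_min) / height
    return f"{CLASS_IDS[class_name]} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"


if __name__ == "__main__":
    # Hypothetical 800x600 frame with one car
    print(to_yolo_line("car", (100, 200, 300, 400), (800, 600)))
```

Background has no entry in the mapping because, as stated above, no label is written for it.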

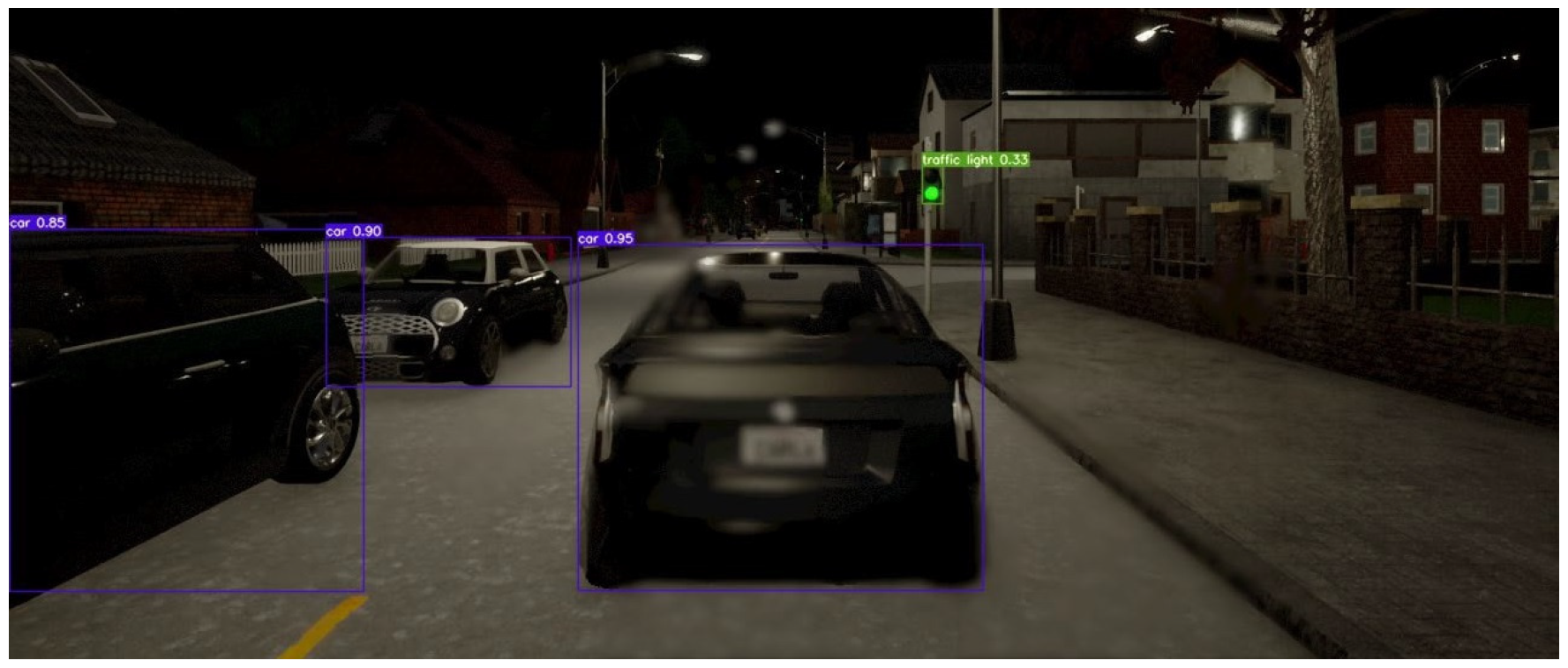

To test the model under challenging conditions, two weather presets were used in CARLA. The critical weather setup included 90% cloudiness, 90% precipitation, 80% precipitation deposits, 50% wind intensity, 90% fog density, and 100% surface wetness, simulating an extreme low-visibility scenario with a low sun angle (−5°). In addition, a nighttime scenario was created with 80% cloudiness, 100% precipitation, and a sun altitude angle of −10°, representing heavy rain in near-darkness. These configurations aimed to evaluate detection performance under high-variance environmental conditions.

Figure 1 is taken from the simulation of the latter case.
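The two weather presets can be written down as plain parameter sets. The sketch below is one possible way to apply them, assuming the attribute names of CARLA's `carla.WeatherParameters` class; it is not the authors' script. Parameters that the text does not specify (for example the fog density and wetness of the nighttime scenario) are deliberately left at whatever the simulator currently uses. The helper name `apply_weather` is hypothetical.

```python
# Values taken from the text; keys follow carla.WeatherParameters attribute names.
CRITICAL_WEATHER = {
    "cloudiness": 90.0,
    "precipitation": 90.0,
    "precipitation_deposits": 80.0,
    "wind_intensity": 50.0,
    "fog_density": 90.0,
    "wetness": 100.0,
    "sun_altitude_angle": -5.0,
}

NIGHT_RAIN = {
    "cloudiness": 80.0,
    "precipitation": 100.0,
    "sun_altitude_angle": -10.0,
}


def apply_weather(world, preset):
    """Overwrite only the listed weather attributes on a CARLA world.

    `world` is expected to expose get_weather() and set_weather(),
    as carla.World does.
    """
    weather = world.get_weather()
    for name, value in preset.items():
        setattr(weather, name, value)
    world.set_weather(weather)


# Usage with a running simulator (assumes the default host and port):
#   import carla
#   client = carla.Client("localhost", 2000)
#   apply_weather(client.get_world(), NIGHT_RAIN)
```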

The YOLOv7 model was used from an open-source implementation (source: https://github.com/WongKinYiu/yolov7 (accessed on 14 November 2024)), which is based on PyTorch 1.11.0 and is easily configurable for custom data. Based on the images and annotations extracted from CARLA, a YOLO-compatible data structure was created, which is summarized in a data.yaml configuration file. The file contains a list of classes and the paths to the training and validation datasets. The dataset of 779 images was split 80–20% into training and validation sets. Training was performed in a GPU environment over 150 epochs, monitoring the evolution of the loss function, which consists of three parts: localization error (bounding box accuracy), classification error, and object presence error. During optimization, we tracked the mean average precision at an IoU threshold of 0.5 (mAP@0.5) and the mean average precision averaged over IoU thresholds from 0.5 to 0.95 (mAP@0.5:0.95), which show the overall and detailed recognition performance of the model. The limited size of the dataset and the uneven distribution of object classes probably contributed to the instability observed in the precision and recall performance discussed below, especially for smaller or less frequent categories such as traffic signs and traffic lights. The simulation was performed using an ASUS ROG Strix G15 G512 notebook (Taipei, Taiwan) equipped with an Intel® Core™ i5-10300H processor (2.5 GHz, 4 cores) (Santa Clara, CA, USA), NVIDIA® GeForce® GTX 1650 Ti 4 GB GDDR6 graphics card, and 16 GB DDR4 memory (Santa Clara, CA, USA).
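As an illustration of the data preparation described above, the following sketch splits a list of image names 80–20% and builds a data.yaml in the layout used by the YOLOv7 repository (`train`, `val`, `nc`, `names`). The file paths, class names, random seed, and rounding rule are assumptions; the paper does not state how the split was drawn or the exact number of images per subset.

```python
import random
from typing import List, Tuple


def split_dataset(
    image_names: List[str], train_fraction: float = 0.8, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Shuffle reproducibly and split into training and validation lists."""
    names = sorted(image_names)
    random.Random(seed).shuffle(names)
    n_train = int(round(len(names) * train_fraction))
    return names[:n_train], names[n_train:]


def build_data_yaml(train_path: str, val_path: str, class_names: List[str]) -> str:
    """Return the text of a YOLOv7-style dataset configuration file."""
    names = ", ".join(f"'{name}'" for name in class_names)
    return (
        f"train: {train_path}\n"
        f"val: {val_path}\n"
        f"nc: {len(class_names)}\n"
        f"names: [{names}]\n"
    )


if __name__ == "__main__":
    # Hypothetical file names standing in for the 779 CARLA frames
    images = [f"frame_{i:04d}.png" for i in range(779)]
    train, val = split_dataset(images)
    print(len(train), len(val))
    # Hypothetical paths and class names
    classes = ["car", "motorcycle", "bicycle", "traffic light", "traffic sign"]
    print(build_data_yaml("data/train/images", "data/val/images", classes))
```

With this rounding rule, 779 images yield 623 training and 156 validation images; a different rule could shift one image between the subsets.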

3. Results

This section analyzes the model’s performance across different object classes. A detailed examination was conducted in terms of recognition accuracy, F1 score, precision, and recall values. The confusion matrix was used to map the classes that the model recognizes accurately and where frequent errors occur, such as the misidentification of smaller objects as background. The results provide a comprehensive overview of the strengths and weaknesses of the object detection system and information on further development opportunities.

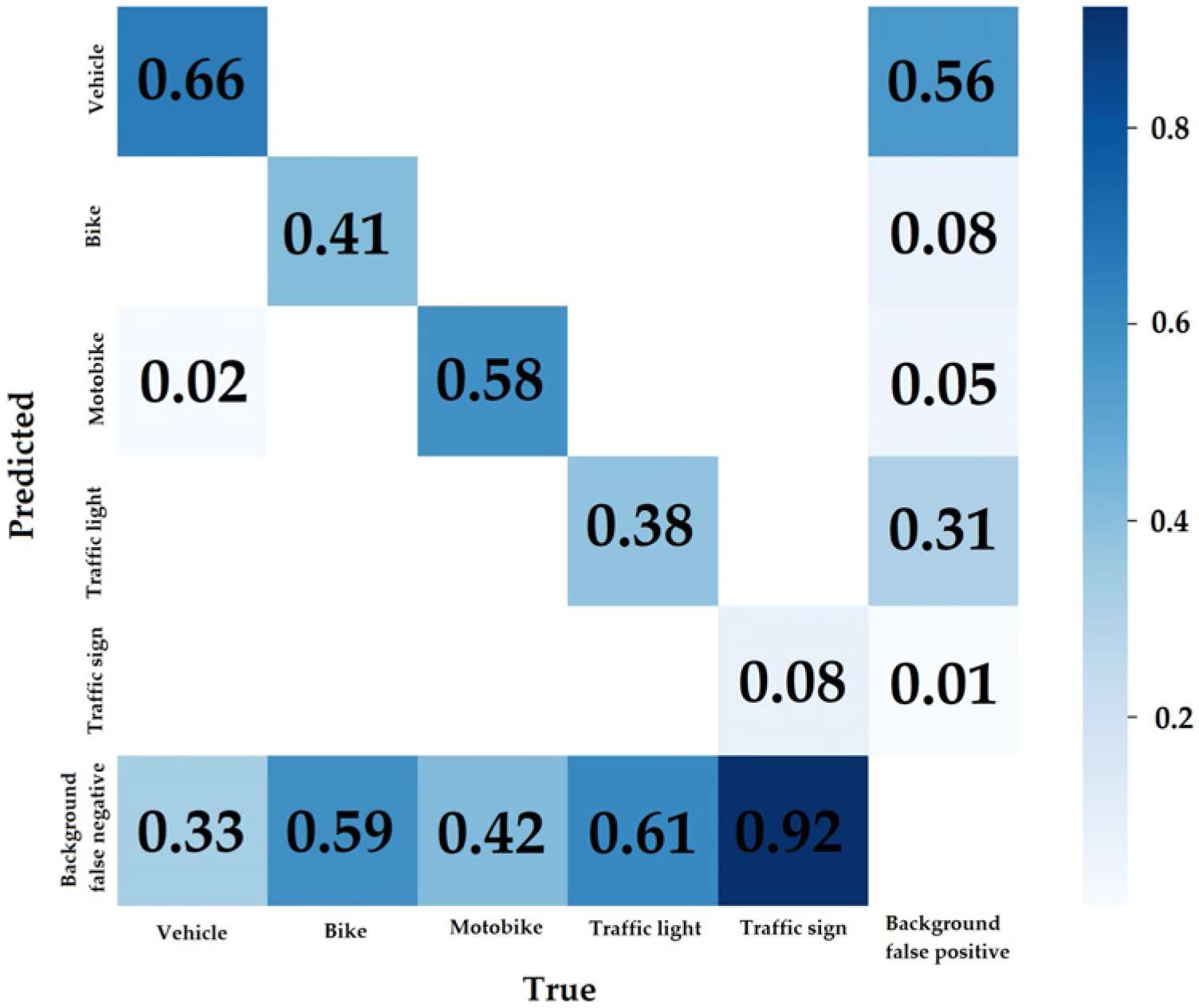

Figure 2 displays a confusion matrix illustrating the model’s classification performance across different object classes. The best-performing category is vehicle, which the model correctly identifies in 66% of cases. However, in one-third of cases (33%), it fails to detect the object and ignores it as background. The motorcycle class performs similarly, with 58% correct classification. The model performs worse in the case of bicycles, correctly recognizing only 41% of objects, while treating the remaining 59% as background. The performance of the traffic light and, in particular, the traffic sign classes declines further. Traffic lights have a correct classification rate of 38%, while traffic signs have a rate of only 8%, indicating that the model treats this class almost entirely as background. The last column of the matrix shows the false positive rate. The vehicle class stands out with a value of 0.56, which means that the model often detects vehicles where there are none.

The model’s performance was more favorable for larger, easily recognizable classes such as vehicles and motorcycles, while it exhibited significant weaknesses in recognizing smaller or visually more difficult objects such as traffic signs and lights. These results suggest that the model’s limitations in detecting smaller or less visually distinct objects may be partly due to insufficient training exposure. A larger and more balanced dataset—especially one that includes a greater number of annotated examples of traffic signs and lights under varying conditions—could have provided the model with a more comprehensive basis for learning the characteristic features of these classes.

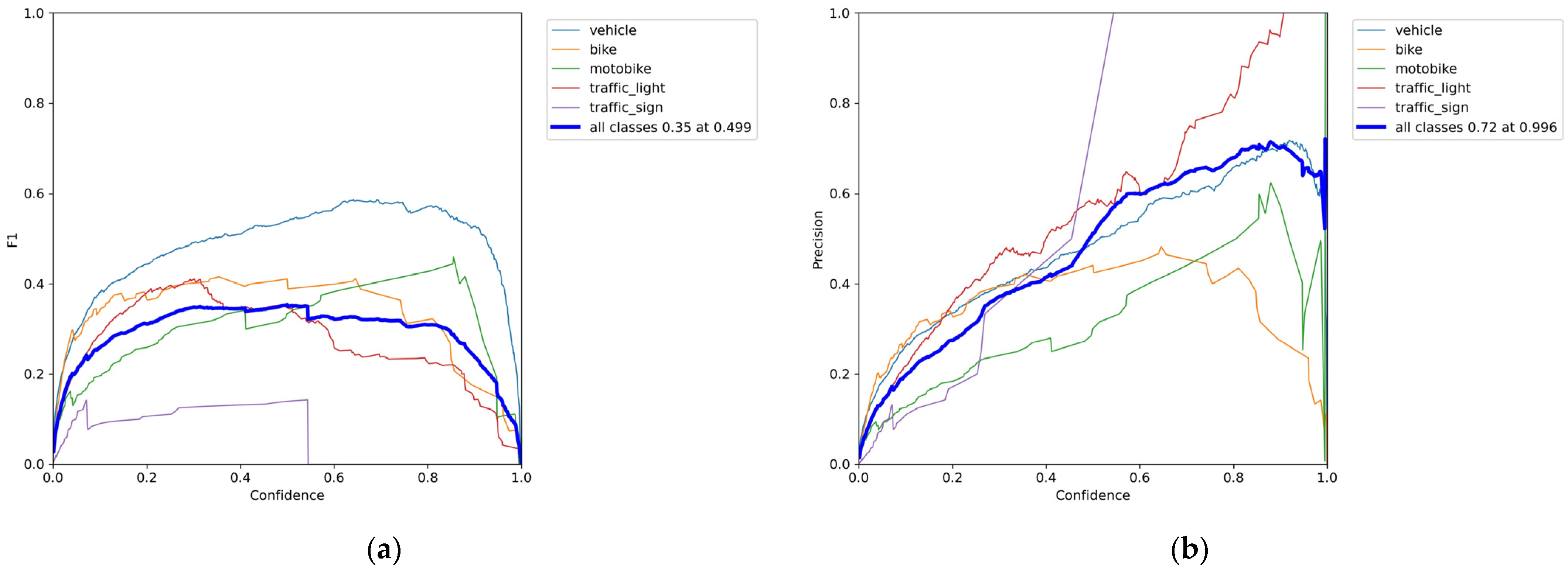

Figure 3a displays the F1 score of the model as a function of the confidence value, broken down by object class. The F1 score reflects the balance between precision and recall, thus characterizing detection performance. The vehicle class performs best, with an F1 value rising to nearly 0.6 and remaining stable across a wider confidence range. These values suggest that the model is comparatively confident and accurate in recognizing vehicles. For the bike and motobike classes, the F1 value peaks at around 0.4, but based on the variability of the curves, the model’s performance is less consistent in these cases. This may indicate similarities between the classes or image uncertainties. The traffic light class also shows a medium F1 value, but its curve is stepped, indicating fluctuating performance, probably due to more difficult visual recognition. Traffic sign detection is the weakest, with an F1 value rarely exceeding 0.2, indicating low reliability. The combined, wider blue curve shows the overall performance of the model for all classes. The best average F1 score is 0.499 at a confidence threshold of 0.35, as highlighted in the figure. In practice, this value may represent the optimal confidence threshold.
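The F1 score is the harmonic mean of precision and recall, and the best operating point is the confidence threshold at which it peaks. The sketch below shows this selection on a small curve; the (confidence, precision, recall) triples are made-up illustrative values, not results from this study, and the function names are hypothetical.

```python
from typing import List, Tuple


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def best_threshold(curve: List[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Return (confidence, F1) for the point with the highest F1.

    `curve` holds (confidence, precision, recall) triples.
    """
    scored = [(conf, f1_score(p, r)) for conf, p, r in curve]
    return max(scored, key=lambda item: item[1])


if __name__ == "__main__":
    # Illustrative values only: precision rises and recall falls with confidence
    example_curve = [(0.2, 0.30, 0.60), (0.5, 0.60, 0.45), (0.8, 0.90, 0.10)]
    print(best_threshold(example_curve))
```

The example reflects the usual trade-off: a low threshold favors recall, a high threshold favors precision, and the F1 peak lies in between.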

Figure 3b shows the precision of the model by class as a function of confidence. The motobike class behaves most favorably at high confidence, with a precision exceeding 0.9, meaning that false alarms are extremely rare. The vehicle class also shows consistently high precision, with a maximum of around 0.6. The precision of the traffic sign class increases sharply with greater confidence, but the curve is irregular, which may indicate a small number of elements. The bike and traffic light classes show lower and more fluctuating performance, especially as confidence increases. Based on the curve displaying the cumulative performance, the model achieves its highest all-class precision (0.72) at a confidence of 0.996. High precision therefore requires very strict threshold values. The model is precise in detecting motorcycles and vehicles, while for other classes, precision depends more strongly on the confidence threshold chosen to minimize false positives.

Figure 4a presents the precision–recall curves and average precision (AP) for each object class. The vehicle class performs reasonably well, with an AP value of 0.474, supported by a balanced precision–recall curve. The traffic light (0.288) and motobike (0.276) classes also perform acceptably, although they are characterized by high precision and low recall, meaning that their detections are less frequent but more accurate. The AP value of the bike class is lower at 0.238, and its curve drops rapidly, indicating that many detections are missing or incorrect. The traffic sign class performs the worst (0.087), as shown by its curve rapidly approaching 0, indicating few correct detections and many false detections. The mAP@0.5 value calculated for the entire model is 0.272, which indicates moderate performance, mainly due to the weaker recognition of smaller and visually complex classes.
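The mAP@0.5 value is the unweighted mean of the per-class AP values. As a quick check, the short sketch below averages the five AP values quoted above; the dictionary and function names are chosen for illustration.

```python
from typing import Dict

# Per-class AP@0.5 values as reported in the text
AP_AT_05: Dict[str, float] = {
    "vehicle": 0.474,
    "traffic light": 0.288,
    "motobike": 0.276,
    "bike": 0.238,
    "traffic sign": 0.087,
}


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """Unweighted mean of per-class average precision."""
    return sum(ap_per_class.values()) / len(ap_per_class)


if __name__ == "__main__":
    print(f"{mean_average_precision(AP_AT_05):.4f}")
```

The mean of the rounded per-class values is about 0.2726, which agrees with the reported 0.272 to within the rounding of the individual AP values.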

Figure 4b shows the relationship between recall and confidence by class. The vehicle class shows the most favorable performance, achieving a recall value above 0.8 even with low confidence, meaning that it recognizes the majority of vehicles. The bike, motobike, and traffic light classes show moderate recall (0.3–0.5), with performance rapidly deteriorating as confidence increases. Traffic signs are the least accurate, with a recall value of just over 0.1. The maximum overall recall is 0.64, which the model achieves at the lowest confidence value (0.0). Based on this, the model is most accurate at recognizing vehicles and often misses smaller objects.

Figure 5 illustrates the development of the model training and validation process along different metrics, by epoch. The top row shows the values for the training data, while the bottom row shows the values for the validation data. In the case of the loss functions (box, objectness, classification), it is clear that all components decrease continuously during training, indicating the convergence of the model and the effectiveness of the learning process. In terms of validation losses, box and classification losses show a similar decreasing trend, but objectness loss begins to rise slightly in later epochs, which could indicate overfitting. The accuracy metrics, precision, recall, mAP@0.5, and mAP@0.5:0.95, stabilize over the learning process. The precision value is between 0.6 and 0.7, the recall is around 0.4, while the mean average precision (mAP@0.5) reaches 0.3. The stricter mean average precision (mAP@0.5:0.95) value ranges between 0.15 and 0.2, indicating moderate object recognition performance. It can be concluded that the model learns effectively, which is reflected in the improvement of the metrics. However, based on the increase in validation objectness loss and moderate mAP values, it might be worth improving performance with further development or data augmentation, especially for object classes that are more difficult to identify.
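Both mAP variants discussed above rest on the intersection over union (IoU) between a predicted and a ground-truth box: mAP@0.5 counts a detection as correct at IoU ≥ 0.5, while mAP@0.5:0.95 averages over ten thresholds from 0.5 to 0.95 in steps of 0.05. The sketch below illustrates why the second metric is stricter; the function names and the example boxes are illustrative assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

# Ten thresholds: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS: List[float] = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def thresholds_passed(iou_value: float) -> int:
    """Number of IoU thresholds at which a detection counts as a match."""
    return sum(1 for t in IOU_THRESHOLDS if iou_value >= t)


if __name__ == "__main__":
    # Hypothetical prediction slightly shifted relative to the ground truth
    value = iou((0, 0, 10, 10), (1, 1, 11, 11))
    print(round(value, 3), thresholds_passed(value))
```

A detection that matches comfortably at IoU 0.5 can still fail at the higher thresholds, which is consistent with mAP@0.5:0.95 being lower than mAP@0.5.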

A key limitation affecting the model’s performance is the relatively small and imbalanced dataset used for training and evaluation, consisting of only 779 annotated images. This constrained the model’s ability to generalize across object classes—particularly for small, less frequent categories such as traffic signs and lights—resulting in unstable precision–recall behavior and low F1 scores for these classes. Based on these limitations, this study should be viewed as a starting point for further investigations. The primary goal was to demonstrate the feasibility of a simulation-based testing framework using CARLA and YOLOv7. Future research will build upon this foundation by expanding the dataset, improving class balance, and validating results in real-world environments. The presented methodology offers a reproducible and extensible basis for such comparative studies and for continued development in environment perception for autonomous driving systems.

4. Conclusions

The objective of this research was to investigate an environment recognition system for autonomous vehicles using the CARLA simulation platform and the YOLOv7 object detection model. Various urban traffic scenarios were simulated, and the model was trained using YOLO-format annotations generated from 779 images. Performance evaluation was based on key detection metrics, including F1 score, precision, recall, mAP@0.5, and confusion matrix. The results showed that the model achieved acceptable recognition performance for large and frequently occurring object classes such as vehicles and motorcycles. However, detection performance dropped significantly for smaller or less visually distinct classes, such as traffic signs and traffic lights.

A major limitation of the study was the small and imbalanced dataset, which constrained the model’s ability to generalize and led to unstable detection behavior—particularly in the case of underrepresented object categories. The low recall and irregular F1 score patterns across confidence thresholds reflect the insufficient exposure of the model to diverse object instances during training. These shortcomings highlight the need for a larger and more representative dataset that better captures variability in object types, scales, and environments.

The novelty of the present study lies in the combined assessment of multiple object classes under varying environmental and weather conditions, which provides a more realistic simulation of autonomous vehicle perception challenges. This comprehensive evaluation setup establishes a methodological foundation for further research.

Future work will extend this framework by increasing the dataset size, validating the model in real-world settings, and performing comparative analysis with alternative object detection architectures, including other YOLO variants. The flexible and reproducible pipeline demonstrated in this study offers a solid baseline for iterative development and algorithmic benchmarking in simulated and practical environments.