Abstract

Early detection of Autism Spectrum Disorder (ASD) is important for early intervention. This study investigates the potential of eye-tracking (ET) data combined with machine learning (ML) models to classify ASD and Typically Developed (TD) children. Using a publicly available dataset, five ML models were evaluated: Support Vector Machine (SVM), Random Forest, Convolutional Neural Network (CNN), Artificial Neural Network (ANN), and Random Forest improved with Convolutional Filters (ConvRF). The models were trained and tested using a set of evaluation metrics, including accuracy, precision, recall, F1-score, and the area under the ROC curve (AUC). Among these, the ConvRF model performed best, achieving a recall of 90% and an AUC of 88%, indicating its robustness in identifying children with ASD. These results highlight the model’s high sensitivity, which is critical for early ASD detection. This study shows the promise of combining ML and eye-tracking technology as accessible, non-invasive tools for early ASD detection, which could enable timely and personalized interventions. Limitations and recommendations for future research are also discussed.

1. Introduction

Autism Spectrum Disorder (ASD) is a neurodevelopmental disorder affecting how people interact, learn, and behave. Symptoms typically appear within the first three years of life, and the disorder affects males more frequently, with a male-to-female ratio of about 4 to 1. Among high-functioning individuals, this ratio increases to approximately 10 to 1, whereas it decreases to around 2 to 1 in low-functioning cases [1]. Early diagnosis is crucial to maximize the benefits of intervention [2,3]. Despite progress in intervention strategies, studies show that about 10% of people with autism remain non-verbal throughout their lives [4]. Individualized and timely support, especially at preschool age, increases the likelihood of developing verbal communication and social skills [5,6,7], improving their quality of life and reducing stress for their families.

Eye-tracking (ET) technology monitors eye movements, recording where people look, for how long, and how their eyes move. It can also measure gaze points relative to the face and pupil responses to different stimuli. ET requires recording devices (cameras and sensors) to capture eye movements, often aided by infrared light reflected off the eyes for greater accuracy.

ET is a useful tool for tracking and analyzing human behavior, providing insights into how individuals process visual stimuli, interact with their environment, and make decisions in real time. It is widely applied in psychology and neuroscience to detect neurological and neurodevelopmental conditions such as autism [8,9] and Parkinson’s disease [10]. It is also used in marketing to understand which aspects of advertisements capture attention, and in education to assess how students interact with teaching materials [11]. Finally, ET has applications in entertainment, offering more interactive experiences for consumers in movies and video games [12].

Three main ET techniques are in use:

- Video-Oculography (VOG): Visible light and cameras are employed to capture eye movement, with options for remote or head-mounted setups. However, it might be affected by lighting conditions and reflections.

- Pupil-Corneal Reflection (PCR): Infrared light is utilized to produce a corneal reflection as a stable reference point, allowing for accurate tracking even with head movement. Although precise, PCR struggles with varying lighting conditions.

- Electrooculography (EOG): Records eye movement by detecting electrical potential differences across electrodes around the eye. This technique is particularly useful in low-light environments and can measure movements even with closed eyes, but the electrodes can make it more intrusive.

2. Research Objective

This study employs the eye-tracking dataset developed by Carette et al. [13] and aims to classify autism in children using five machine learning (ML) algorithms: Support Vector Machine (SVM), Random Forest, Convolutional Neural Network (CNN), Artificial Neural Network (ANN), and Random Forest with Convolutional Filters (ConvRF). The key innovation of this approach, compared to earlier studies using the same dataset, is the application of the ConvRF algorithm, which combines the image pattern recognition of CNN convolutional filters with the training speed of Random Forest, offering an efficient and accurate classification method.

3. Research and Related Work

Autism research has benefited significantly from applying ML techniques to a variety of data sources, such as images [14,15], data collected during web browsing tasks [16,17], and biological data. For biological data, one prominent approach uses brain Magnetic Resonance Imaging (MRI), which provides valuable insights into the structural and functional differences in the brains of individuals with ASD. Multiple studies have shown that ML algorithms, such as Support Vector Machines (SVMs) and Convolutional Neural Networks (CNNs), can be highly effective in analyzing these brain images. For example, Krishna Kumar et al. [18] implemented ML models to identify patterns in MRI data, attaining diagnostic accuracy rates of up to 92%. Similarly, Song et al. [19] employed radiomics, a method for extracting quantitative features from medical images, and attained an accuracy of 89.47% using SVM and 86.48% using CNN. These findings underscore the potential of MRI-based approaches for ASD classification.

In addition to MRI, Electroencephalography (EEG) data have proven valuable in ASD research. EEG measures the electrical activity of the brain, providing insights into the frequency bands that reflect various brain functions. Studies have used EEG signals to identify biomarkers related to ASD, employing ML algorithms to classify individuals based on their brain activity. Jayawardana et al. [20] used EEG data from children with ASD and achieved over 90% accuracy with CNN models, whereas Bhaskarachary et al. [21] applied Transfer Learning (TL) techniques to EEG data with Extra Trees and XGBoost classifiers, achieving an accuracy of 67.7% and a recall of 83.3%. These results highlight the potential of EEG-based models to improve diagnostic accuracy, providing a complementary approach to MRI-based methods.

Another technology applied in ASD research is ET, which examines the gaze patterns of individuals while they engage with visual stimuli. ET has been shown to reveal distinct differences in gaze behavior between individuals with ASD and neurotypical individuals. ML algorithms have also been applied to gaze patterns for autism classification, with promising results. For example, Carette et al. [22] used Long Short-Term Memory (LSTM) networks to analyze ET data, achieving an accuracy of 83% in classifying children with ASD and showing the value of temporal sequence analysis for understanding ASD-related gaze behavior. In another study, Zhao et al. [23] combined ML with ET data, employing algorithms such as Naïve Bayes and Random Forest to attain a diagnostic accuracy of 92%. These studies suggest that ML applied to ET data can offer reliable classification results, supporting earlier ASD detection and diagnosis.

Taken together, these studies illustrate the growing intersection of ML, biological and behavioral data (such as MRI, EEG, and ET), and ASD detection. Integrating these methodologies has shown great promise for improving the accuracy and efficiency of early ASD detection, positioning ML as a powerful tool for advancing clinical diagnostics.

4. Methodology

4.1. Dataset

The current study uses the dataset from [13]. This dataset contains visualized ET data that capture the eye movements and attention distribution of children with ASD and neurotypical children. The 59 participants (38 males and 21 females) watched videos containing various visual stimuli, and the recordings include gaze coordinates, fixation durations, and saccadic eye movements. Participants were divided into two categories: (1) 30 neurotypical children (TC) and (2) 29 children with ASD (TS).

The participants’ ages ranged from 3 to 13 years, with 47% between 5 and 9 years old. The dataset also includes assessments using the Childhood Autism Rating Scale (CARS), a rating tool used to assess the presence and severity of autism. Most autistic participants had CARS scores between 25 and 36, indicating mild to moderate autism.

A total of 547 images were generated (328 from neurotypical participants and 219 from participants with ASD), depicting eye-movement patterns and areas of interest. Table 1 summarizes the overall statistics of the participants.

Table 1.

Overall statistics of participants.
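To put the class imbalance in perspective, the short Python calculation below uses only the image counts reported above (328 neurotypical, 219 ASD). It is an illustrative sketch, not part of the original analysis: a trivial classifier that always predicts "neurotypical" would already reach about 60% accuracy while detecting no ASD cases, which is one reason recall and AUC are more informative here than accuracy alone.

```python
# Image counts reported for the dataset of Carette et al. [13]
n_td = 328   # images from neurotypical (TC) participants
n_asd = 219  # images from participants with ASD (TS)
total = n_td + n_asd

share_td = n_td / total
share_asd = n_asd / total

# A "classifier" that always predicts the majority class (neurotypical)
# reaches this accuracy without learning anything, with an ASD recall of 0.
majority_baseline_accuracy = max(share_td, share_asd)

print(f"total images: {total}")
print(f"neurotypical share: {share_td:.3f}")
print(f"ASD share: {share_asd:.3f}")
print(f"majority-class baseline accuracy: {majority_baseline_accuracy:.3f}")
```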

4.2. Preprocessing

The dataset initially consisted of 480 × 640 pixel images with three color channels. In the first preprocessing step, pixel values were normalized to the range 0–1 by dividing them by 255.0, the maximum value of an 8-bit pixel. Normalization keeps all inputs on a common, small scale, which tends to make training more stable and accelerates the convergence of optimization algorithms; inputs with large variations in scale can also push models toward overly specific features rather than features that generalize to new data.

The images were then converted to grayscale, reducing the color channels from three (RGB) to one. This reduces data complexity and speeds up processing. It is also intended to remove noise introduced by color information, making pattern recognition easier.

Finally, because the images had a black background with substantial empty space, they were resized to 225 × 225 pixels. Resizing gives all images the same dimensions and reduces the amount of data the model must process, which shortens both training and prediction time; given the large empty background, this is expected to preserve the important details in the images.
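The three preprocessing steps can be sketched in NumPy as follows. This is a minimal illustration, not the authors’ code: the grayscale weights (ITU-R BT.601 luma coefficients) and the nearest-neighbour resizing are assumptions, since the text does not say which conversion or interpolation was used, and the function name `preprocess` is hypothetical.

```python
import numpy as np


def preprocess(image_rgb: np.ndarray, size: int = 225) -> np.ndarray:
    """Normalize, convert to grayscale, and resize one H x W x 3 uint8 image."""
    # Step 1: scale 8-bit pixel values from [0, 255] to [0, 1].
    x = image_rgb.astype(np.float32) / 255.0
    # Step 2: collapse RGB to one channel with BT.601 luma weights
    # (an assumption; the study does not state which conversion it used).
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    gray = x @ weights  # (H, W, 3) @ (3,) -> (H, W)
    # Step 3: nearest-neighbour resize to size x size (also an assumption).
    h, w = gray.shape
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return gray[np.ix_(rows, cols)]


# Usage on a synthetic 480 x 640 RGB image.
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
out = preprocess(img)
print(out.shape, out.dtype)
```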

4.3. Algorithms

For the classification task, several algorithms were tested with various hyperparameter values to identify the best-performing configuration. The following algorithms were evaluated:

- Random Forest.
  - N_estimators: The number of decision trees used in the algorithm.
  - Max_depth: The maximum depth of the decision trees.
  - Min_samples_split: The minimum number of samples required to split a node.
  - Min_samples_leaf: The minimum number of samples required to form a leaf node.

- Support Vector Machine (SVM).

- Artificial Neural Network (ANN). The hyperparameters used for training the ANN are shown in Table 2.
  - Number of hidden layers: The number of hidden layers in the model.
  - Number of neurons: The number of neurons in each hidden layer.
  - Activation function: The activation function applied by the neurons in each layer.

Table 2. ANN hyperparameters.

- Convolutional Neural Network (CNN). The hyperparameters used for training the CNN are shown in Table 3.
  - Convolutional Filters: The number of filters applied at each convolution stage; each filter extracts specific features from the image.
  - Kernel Size: The dimensions of the two-dimensional filter matrix used in the convolution; they determine how many neighboring pixels are combined at each convolution step.
  - Pool Size: The size of the region from which the maximum or average value is selected during pooling. Pooling reduces the spatial dimensions of the feature maps while preserving significant information.

Table 3. CNN hyperparameters.

- Convolutional Filters + Random Forest (ConvRF).
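To make the Kernel Size and Pool Size definitions concrete, the NumPy sketch below applies one 3 × 3 filter to a single-channel 225 × 225 image and then a 2 × 2 max pooling. The Sobel edge kernel stands in for a learned filter and the function names are hypothetical; a real CNN layer applies many learned filters across all input channels. With a "valid" convolution of stride 1, the output side is 225 − 3 + 1 = 223, and non-overlapping 2 × 2 pooling reduces it to 111.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 'valid' 2-D cross-correlation with stride 1 (what CNN layers compute)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.empty((h - kh + 1, w - kw + 1), dtype=np.float64)
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Each output value combines a kh x kw neighbourhood of input pixels.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(feature_map: np.ndarray, pool: int) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that do not fill a full window are dropped."""
    h, w = feature_map.shape
    ph, pw = h // pool, w // pool
    trimmed = feature_map[:ph * pool, :pw * pool]
    return trimmed.reshape(ph, pool, pw, pool).max(axis=(1, 3))


# Usage: a hand-picked Sobel kernel (kernel size 3) and pool size 2 on a 225 x 225 image.
sobel_x = np.array([[1.0, 0.0, -1.0], [2.0, 0.0, -2.0], [1.0, 0.0, -1.0]])
image = np.random.default_rng(0).random((225, 225))
feature_map = conv2d_valid(image, sobel_x)
pooled = max_pool(feature_map, pool=2)
print(feature_map.shape, pooled.shape)
```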

5. ConvRF Model Tuning

The ConvRF model consists of two components: a Convolutional Neural Network (CNN) used for feature extraction and a Random Forest classifier responsible for classification. The pretrained VGG16 (Visual Geometry Group) model [18], a sixteen-layer deep learning architecture developed at the University of Oxford, was used to extract features from the images. Its first thirteen layers are convolutional, and the final three are fully connected. The model contains a total of 138 million parameters and is commonly used in TL applications, in which the pretrained weights are reused and only the last layers are retrained to adapt to the specific problem.
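The figure of 138 million parameters can be checked by hand. The Python sketch below counts the weights and biases of the standard ImageNet configuration of VGG16 (3 × 3 kernels, 224 × 224 RGB input, 1000-class output head); these configuration details describe the reference architecture and are assumptions with respect to the study’s modified model.

```python
# Output channels of VGG16's 13 convolutional layers (all with 3x3 kernels).
conv_channels = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
# Sizes of the flattened input and the three fully connected layers
# (7 * 7 * 512 assumes the standard 224 x 224 input).
fc_sizes = [7 * 7 * 512, 4096, 4096, 1000]


def conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    return k * k * c_in * c_out + c_out  # kernel weights + one bias per filter


def dense_params(n_in: int, n_out: int) -> int:
    return n_in * n_out + n_out  # weight matrix + biases


conv_total = 0
c_in = 3  # RGB input
for c_out in conv_channels:
    conv_total += conv_params(c_in, c_out)
    c_in = c_out

fc_total = sum(dense_params(a, b) for a, b in zip(fc_sizes[:-1], fc_sizes[1:]))
total = conv_total + fc_total

print(f"conv layers: {len(conv_channels)}, fully connected layers: {len(fc_sizes) - 1}")
print(f"convolutional parameters: {conv_total:,}")
print(f"fully connected parameters: {fc_total:,}")
print(f"total parameters: {total:,}")
```

The convolutional base accounts for only about 14.7 million of these parameters, and its count does not depend on the input size; nearly all of the remaining parameters sit in the fully connected head, which is the part replaced when the network is adapted to a new task.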

5.1. Fine-Tuning

To fine-tune the model on the dataset of this study, a GlobalAveragePooling2D layer was first added, averaging each feature map over its spatial dimensions to produce a compact feature vector for each image. A Dense layer with a single neuron was then added to perform the classification. The weights of the first 12 layers were frozen (set as non-trainable), so that only the deeper layers, which extract the more complex image features, were trained. The model was trained for 15 epochs using the Adam optimizer with a low learning rate (0.00001) to limit overfitting and avoid large changes to the pretrained weights.

Once the model was trained on the dataset, the last two layers, which were responsible for classification, were removed and replaced with a Random Forest classifier. This classifier was then trained not on the original images but on the features extracted by the fine-tuned VGG16.
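The feature vectors handed to the Random Forest can be illustrated with NumPy. The sketch assumes that the classifier receives the output of the GlobalAveragePooling2D layer, i.e., one 512-value vector per image (the number of filters in VGG16’s last convolutional block); the text does not state exactly which layer’s output is used, and random arrays stand in for real VGG16 activations. With 225 × 225 inputs, VGG16’s five 2 × 2, stride-2 pooling stages reduce the spatial size to 7 × 7.

```python
import numpy as np


def vgg16_feature_map_size(side: int, n_pools: int = 5) -> int:
    """Spatial side after VGG16's five 2x2, stride-2 max-pooling stages ('same' conv padding)."""
    for _ in range(n_pools):
        side //= 2
    return side


def global_average_pool(feature_maps: np.ndarray) -> np.ndarray:
    """(N, H, W, C) -> (N, C): average each channel over all spatial positions."""
    return feature_maps.mean(axis=(1, 2))


side = vgg16_feature_map_size(225)  # 225 -> 112 -> 56 -> 28 -> 14 -> 7
# Random stand-in for the last convolutional block's output for 4 images.
maps = np.random.default_rng(0).random((4, side, side, 512))
features = global_average_pool(maps)  # one 512-dimensional vector per image
print(side, features.shape)
```

Each row of `features` would then be one training example for the Random Forest, far smaller than the 225 × 225 = 50,625 pixel values of a preprocessed image.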

5.2. Hyperparameter Tuning

After the feature extraction model was configured, the hyperparameters of the Random Forest classifier were tuned to assess potential improvements in the overall model. Grid Search was used for this purpose: it systematically explores a grid of values, testing every combination to identify the best set according to the selected metric. Table 4 presents the grid used for the search.

Table 4.

Parameter search grid.

Grid Search evaluated each combination using 5-fold cross-validation, in which the data are divided into five subsets, four are used for training and one for validation, and the process is repeated five times so that each subset serves once as the validation set. In total, 405 fits were performed (81 parameter combinations × 5 folds).
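The relationship between the grid and the 405 fits can be checked with a small pure-Python sketch. The grid values below are hypothetical placeholders, not the values of Table 4; three values for each of the four Random Forest hyperparameters listed in Section 4.3 is simply one grid that yields 81 combinations. The `k_fold_indices` helper is likewise illustrative.

```python
from itertools import product

# Hypothetical grid (not Table 4's actual values): 3 x 3 x 3 x 3 = 81 combinations.
param_grid = {
    "n_estimators": [100, 200, 300],
    "max_depth": [None, 10, 20],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
}


def k_fold_indices(n_samples: int, k: int = 5):
    """Yield (train, validation) index lists; every sample is validated exactly once."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


# Every combination of hyperparameter values, as a dict per candidate model.
combinations = [dict(zip(param_grid, values)) for values in product(*param_grid.values())]
folds = list(k_fold_indices(n_samples=100, k=5))
n_fits = len(combinations) * len(folds)  # one model fit per (combination, fold)
print(len(combinations), n_fits)
```

In scikit-learn, `GridSearchCV(RandomForestClassifier(), param_grid, cv=5)` performs the same enumeration and, for classifiers, uses stratified folds by default.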

6. Results and Discussion

6.1. Training Results

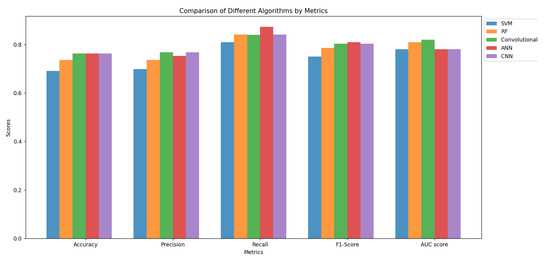

Table 5 presents the performance of each algorithm on the selected metrics, and Figure 1 visualizes these results.

Table 5.

Algorithm performance results by metric.

Figure 1.

Comparison of algorithms.

Table 5 shows that all algorithms attained relatively similar scores, with Random Forest and ConvRF achieving higher AUC scores. ConvRF, CNN, and ANN demonstrated the highest accuracy and precision, whereas ANN achieved the best F1-score and recall.

The AUC scores indicate that the models using a Random Forest classifier separated the classes better: their ROC curves lay closer to the top-left corner, giving AUC values closer to 1, whereas the curves of the other models lay closer to the diagonal line corresponding to an AUC of 0.5, that is, to random guessing.
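The link between AUC and random guessing can be made explicit: the area under the ROC curve equals the probability that a randomly chosen positive (ASD) example receives a higher score than a randomly chosen negative one, with ties counting one half. The pure-Python sketch below computes AUC this way; the function name is hypothetical, and its pairwise loop is quadratic in the number of examples, so it is meant only for illustration (scikit-learn’s `roc_auc_score` computes the same quantity efficiently).

```python
def roc_auc(labels, scores):
    """AUC as P(score of random positive > score of random negative), ties counting 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Usage: label 1 = ASD, label 0 = neurotypical; scores are a model's ASD probabilities.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

A model whose scores carry no information ranks positives above negatives only half the time, which is why its ROC curve follows the diagonal and its AUC is 0.5.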

6.2. Model Tuning Results

Table 6 reports the performance of the fine-tuned model on the test dataset across the evaluation metrics.

Table 6.

Fine-tuning results.

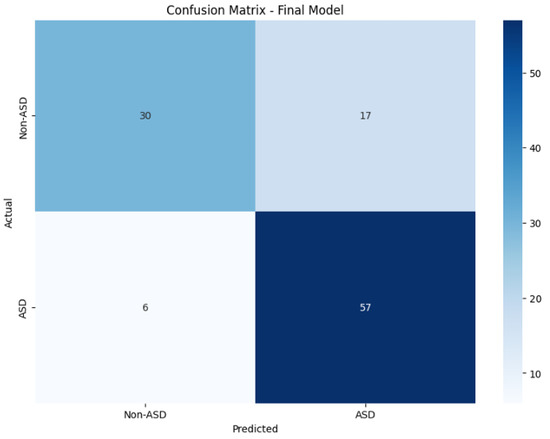

All metrics improved, with the most notable gain in the AUC score. The confusion matrix in Figure 2 also shows that the model identified ASD cases more readily than neurotypical ones, misclassifying approximately one in three neurotypical instances.

Figure 2.

Fine-tuned model confusion matrix.
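The metrics used throughout this section all derive from the four cells of a confusion matrix. The Python sketch below, with ASD as the positive class, shows the formulas; the function name is hypothetical, and the counts in the usage example are invented for illustration (not the study’s results), chosen only so that one in three neurotypical cases is misclassified, as described for Figure 2, which corresponds to a specificity of about 0.67.

```python
def metrics_from_confusion(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Standard metrics with ASD as the positive class."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # sensitivity to ASD cases
    specificity = tn / (tn + fp) if tn + fp else 0.0  # share of neurotypical cases kept
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Hypothetical counts: 20 of 60 neurotypical cases misclassified as ASD.
m = metrics_from_confusion(tp=45, fn=5, fp=20, tn=40)
print({name: round(value, 3) for name, value in m.items()})
```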

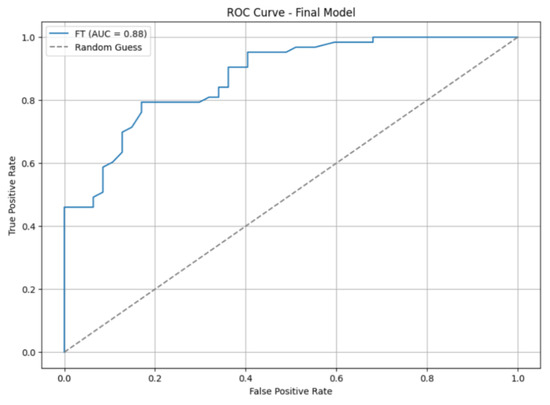

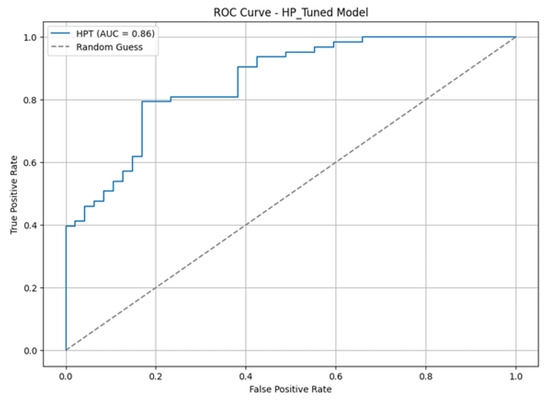

Figure 3 shows the ROC curve and the AUC score. Besides the substantial improvement in AUC, the ROC curve is smoother, suggesting more stable performance.

Figure 3.

Fine-tuned model AUC.

6.3. Hyperparameter Tuning Results

Table 7 lists the optimal hyperparameter values identified by Grid Search.

Table 7.

Optimal hyperparameters.

Next, VGG16 was used to extract features from the images, and the Random Forest was trained on these features using the optimal hyperparameters in Table 7. Table 8 reports the performance of the resulting hyperparameter-tuned model on the test dataset across the evaluation metrics.

Table 8.

Hyperparameter tuning results.

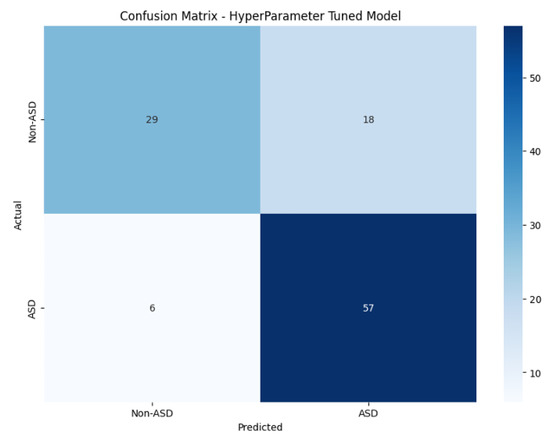

The results improved compared with the initial models, although they were slightly lower than those of the fine-tuned VGG16 model. Figure 4 displays the model’s confusion matrix, and Figure 5 shows the ROC curve and the AUC score.

Figure 4.

Hyperparameter-tuned model confusion matrix.

Figure 5.

Hyperparameter-tuned model AUC.

6.4. Overall Results

Several studies have combined ML algorithms with ET technology for ASD detection. Akter et al. [24] used the dataset of [13] and found that a Multi-Layer Perceptron (MLP) achieved the best results, with an AUC of 79.2% and a recall of 88.9%. Elbattah et al. [15] used the same ET dataset and applied Transfer Learning methods (VGG-16, ResNet, and DenseNet) to classify the images, attaining an AUC of 78% and a recall of 56%.

Building on these findings, our study applied ML algorithms to ET data for early autism detection in children. Five algorithms were evaluated: Support Vector Machine (SVM), Random Forest (RF), Convolutional Neural Network (CNN), Artificial Neural Network (ANN), and Random Forest with Convolutional Filters (ConvRF). They were assessed using accuracy, precision, recall, F1-score, the ROC curve, the AUC score, and the confusion matrix.

ConvRF was the most effective model, performing consistently across all metrics. Specifically, it outperformed SVM by 18.95%, Random Forest by 10.27%, ANN by 8.51%, and CNN by 4.62%. After fine-tuning selected layers of the pretrained model on the study’s dataset and optimizing its hyperparameters, the ConvRF model attained an AUC of 88% and a recall of 90%. These results indicate that the model can effectively distinguish between neurotypical children and children with ASD, excelling in particular in recall, that is, in sensitivity to ASD cases. The ConvRF approach also offers advantages in efficiency and interpretability, providing an effective tool for ASD classification based solely on ET data.

Compared with other studies that combine ML with ET data for ASD detection, our method shows clear improvements. On the same dataset [13], Akter et al. [24] reported an AUC of 79.2% and a recall of 88.9% with an MLP, whereas our model achieved an AUC of 88% and a recall of 90%, indicating higher overall discriminative power and slightly higher sensitivity. Elbattah et al. [15], who applied VGG-16, ResNet, and DenseNet to the same dataset, reported an AUC of 78% and a much lower recall of 56%. Our method outperforms these Transfer Learning models in both AUC and recall, indicating a more robust and effective approach to early autism detection based exclusively on ET data.

7. Limitations

Despite the promising performance of the ConvRF model, several limitations must be taken into account. First, the relatively small sample of 59 participants, the overrepresentation of children aged 5–9 years, and the focus on mild to moderate autism limit the generalizability of our findings. Furthermore, the class imbalance in the dataset, with 328 images of neurotypical children compared to 219 of children with ASD, may bias the model’s training and affect classification performance. Second, although the ConvRF model attained a competitive AUC of 0.88, the results are reported only as aggregate performance metrics. Subgroup analyses, such as performance stratified by age or CARS score, and confidence intervals are not provided; such analyses would give a more robust assessment of the model’s behavior across different conditions. Lastly, although the model’s prediction time is only 0.0006 seconds, the overall pipeline, including the initial generation of eye-tracking pattern images, demands substantial computational resources. This limits the scalability and real-time use of the model, particularly when processing raw eye-tracking data in clinical settings.

8. Future Work

To advance our research, future studies could prioritize several key areas. First, acquiring larger, more diverse datasets, encompassing varied demographics and clinical presentations, is essential to improve model generalizability and reduce bias. Second, advanced machine learning models, specifically deep neural networks (DNNs) such as attention-based and transformer networks, could increase classification accuracy, and cloud computing could be used to speed up training and the processing of large datasets. Third, integrating eye-tracking data with multimodal data, such as EEG, fMRI, and clinical outcomes, is crucial for a comprehensive understanding of the disorder and for personalized interventions; longitudinal studies are needed to track disorder progression and intervention effectiveness. Fourth, Explainable AI (XAI) techniques could be employed to improve model interpretability and clinical trust. Finally, future research could focus on developing individualized treatment plans based on multimodal predictive models and on conducting rigorous clinical validation studies to translate research into effective clinical practice.

9. Conclusions

The application of eye-tracking (ET) technology in combination with machine learning (ML) presents a promising approach to improving the understanding and early detection of Autism Spectrum Disorder (ASD). This study presented a novel approach to ASD classification, using the ET dataset of Carette et al. [13] to differentiate between children with ASD and Typically Developed (TD) children. Among the ML algorithms evaluated, the Random Forest with Convolutional Filters (ConvRF) model performed best, obtaining a recall of 90% and an Area Under the Curve (AUC) of 88%. This performance highlights the potential of ConvRF to accurately classify ASD in children, improving on the other methods evaluated. Notably, ConvRF attained these results with considerably faster training times than deep neural network models such as Artificial Neural Networks (ANNs) and Convolutional Neural Networks (CNNs), an important advantage for practical application. This training efficiency, combined with high classification performance, makes ConvRF an attractive option for clinical applications. The recall and AUC values achieved suggest that the model could become a valuable tool in clinical settings, complementing existing diagnostic methods and supporting earlier intervention. Early ASD detection is important because it enables timely and personalized interventions, which can significantly improve developmental outcomes. The success of the ConvRF approach highlights the potential of combining advanced machine learning techniques with eye-tracking data to improve ASD detection and intervention strategies. Future studies could validate these findings in larger, more diverse populations and examine how the model could be integrated into real-world clinical workflows.

Author Contributions

Conceptualization, N.K., K.-F.K., P.R.-G., P.S. and G.F.F.; methodology, N.K. and K.-F.K.; software, N.K.; validation, N.K., K.-F.K., P.R.-G., P.S. and G.F.F.; formal analysis, N.K. and K.-F.K.; investigation, N.K. and K.-F.K.; resources, N.K., K.-F.K. and P.R.-G.; data curation, N.K. and K.-F.K.; writing—original draft preparation, N.K. and K.-F.K.; writing—review and editing, N.K. and K.-F.K.; visualization, N.K.; supervision, K.-F.K. and G.F.F.; project administration, K.-F.K. and G.F.F.; funding acquisition, P.R.-G. and P.S. All authors have read and agreed to the published version of the manuscript.

Funding

This project has received funding from the European Union’s Horizon Europe research and innovation program under grant agreement No. 101070214 (TRUSTEE). Disclaimer: Funded by the European Union. Views and opinions expressed are, however, those of the author(s) only and do not necessarily reflect those of the European Union or European Commission. Neither the European Union nor the European Commission can be held responsible for them.

Institutional Review Board Statement

This study is based on a publicly available dataset for which ethical approval had already been obtained; therefore, separate ethical approval was not required for the present study.

Informed Consent Statement

This study is based on a publicly available dataset for which informed consent had been obtained from all subjects involved; therefore, separate informed consent was not required for the present study.

Data Availability Statement

The original data presented in the study are openly available in FigShare at https://figshare.com/s/5d4f93395cc49d01e2bd (accessed on 21 August 2025).

Conflicts of Interest

Author Panagiotis Radoglou-Grammatikis was employed by the company K3Y. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Fombonne, E. Epidemiology of pervasive developmental disorders. Pediatr. Res. 2009, 65, 591–598.

2. Cohen, H.; Amerine-Dickens, M.; Smith, T. Early intensive behavioral treatment: Replication of the UCLA model in a community setting. J. Dev. Behav. Pediatr. 2006, 27, S145–S155.

3. Helt, M.; Kelley, E.; Kinsbourne, M.; Pandey, J.; Boorstein, H.; Herbert, M.; Fein, D. Can children with autism recover? If so, how? Neuropsychol. Rev. 2008, 18, 339–366.

4. Koegel, L.K. Interventions to facilitate communication in autism. J. Autism Dev. Disord. 2000, 30, 383–391.

5. Educating Children with Autism; The National Academies Press: Washington, DC, USA, 2001.

6. Landa, R. Early communication development and intervention for children with autism. Ment. Retard. Dev. Disabil. Res. Rev. 2007, 13, 16–25.

7. Koegel, L.K.; Koegel, R.L.; Ashbaugh, K.; Bradshaw, J. The importance of early identification and intervention for children with or at risk for autism spectrum disorders. Int. J. Speech-Lang. Pathol. 2014, 16, 50–56.

8. Kollias, K.F.; Syriopoulou-Delli, C.K.; Sarigiannidis, P.; Fragulis, G.F. The contribution of Machine Learning and Eye-tracking technology in Autism Spectrum Disorder research: A Review Study. In Proceedings of the 2021 10th International Conference on Modern Circuits and Systems Technologies (MOCAST), Thessaloniki, Greece, 5–7 July 2021; pp. 1–4.

9. Kollias, K.F.; Syriopoulou-Delli, C.K.; Sarigiannidis, P.; Fragulis, G.F. The contribution of machine learning and eye-tracking technology in autism spectrum disorder research: A systematic review. Electronics 2021, 10, 2982.

10. Brien, D.C.; Riek, H.C.; Yep, R.; Huang, J.; Coe, B.; Areshenkoff, C.; Grimes, D.; Jog, M.; Lang, A.; Marras, C.; et al. Classification and staging of Parkinson’s disease using video-based eye tracking. Park. Relat. Disord. 2023, 110, 105316.

11. Wedel, M.; Pieters, R. Eye tracking for visual marketing. Found. Trends® Mark. 2006, 1, 231–320.

12. Corcoran, P.; Nanu, F.; Petrescu, S.; Bigioi, P. Real-time eye gaze tracking for gaming design and consumer electronics systems. IEEE Trans. Consum. Electron. 2012, 58, 347–355.

13. Carette, R.; Elbattah, M.; Dequen, G.; Guérin, J.L.; Cilia, F. Visualization of Eye-Tracking Patterns in Autism Spectrum Disorder: Method and Dataset. In Proceedings of the 2018 Thirteenth International Conference on Digital Information Management (ICDIM), Berlin, Germany, 24–26 September 2018; pp. 248–253.

14. Nikou, R.; Tsaknis, A.; Margaritis, P.; Alvanos, S.; Kollias, K.F.; Maraslidis, G.S.; Asimopoulos, N.; Sarigiannidis, P.; Argyriou, V.; Fragulis, G.F. Machine learning data-based approaches for autism spectrum disorder classification utilising facial images. In Proceedings of the International Conference Series on ICT, Entertainment Technologies, and Intelligent Information Management in Education and Industry (ETLTC 2024), Aizuwakamatsu, Japan, 23–26 January 2024; p. 050013.

15. Elbattah, M.; Carette, R.; Dequen, G.; Guérin, J.L.; Cilia, F. Learning clusters in autism spectrum disorder: Image-based clustering of eye-tracking scanpaths with deep autoencoder. In Proceedings of the 2019 41st Annual international conference of the IEEE engineering in medicine and biology society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 1417–1420.

16. Kollias, K.F.; Syriopoulou-Delli, C.K.; Sarigiannidis, P.; Fragulis, G.F. Autism detection in High-Functioning Adults with the application of Eye-Tracking technology and Machine Learning. In Proceedings of the 2022 11th International Conference on Modern Circuits and Systems Technologies (MOCAST), Bremen, Germany, 8–10 June 2022; pp. 1–4.

17. Kollias, K.F.; Maraslidis, G.S.; Sarigiannidis, P.; Fragulis, G.F. Application of machine learning on eye-tracking data for autism detection: The case of high-functioning adults. AIP Conf. Proc. 2024, 3220, 050012.

18. R, K.K.; Muthuramalingam, A.; Shankar Iyer, R.; S R, S.; P, V.; M F, A.S.; Priya N, S. Revolutionizing autism spectrum disorder diagnosis: Harnessing the power of machine learning and MRI for enhanced neurodevelopmental care. In Proceedings of the 2024 2nd international conference on artificial intelligence and machine learning applications theme: Healthcare and internet of things (AIMLA), Namakkal, India, 15–16 March 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

19. Song, J.; Chen, Y.; Yao, Y.; Chen, Z.; Guo, R.; Yang, L.; Sui, X.; Wang, Q.; Li, X.; Cao, A.; et al. Combining radiomics and machine learning approaches for objective ASD diagnosis: Verifying white matter associations with ASD. arXiv 2024.

20. Jayawardana, Y.; Jaime, M.; Jayarathna, S. Analysis of temporal relationships between ASD and brain activity through EEG and machine learning. In Proceedings of the 2019 IEEE 20th international conference on information reuse and integration for data science (IRI), Los Angeles, CA, USA, 30 July–1 August 2019; pp. 151–158.

21. Bhaskarachary, C.; Najafabadi, A.J.; Godde, B. Machine learning supervised classification methodology for autism spectrum disorder based on resting-state electroencephalography (EEG) signals. In Proceedings of the 2020 IEEE signal processing in medicine and biology symposium (SPMB), Philadelphia, PA, USA, 5 December 2020; pp. 1–4.

22. Carette, R.; Cilia, F.; Dequen, G.; Bosche, J.; Guerin, J.L.; Vandromme, L. Automatic autism spectrum disorder detection thanks to eye-tracking and neural network-based approach. In Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, Proceedings of Internet of Things (IoT) Technologies for HealthCare (HealthyIoT 2017), Angers, France, 24–25 October 2017; Ahmed, M.U., Begum, S., Fasquel, J.B., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 225, pp. 75–81.

23. Zhao, Z.; Tang, H.; Zhang, X.; Qu, X.; Hu, X.; Lu, J. Classification of children with autism and typical development using eye-tracking data from face-to-face conversations: Machine learning model development and performance evaluation. J. Med. Internet Res. 2021, 23, e29328.

24. Akter, T.; Ali, M.H.; Khan, M.I.; Satu, M.S.; Moni, M.A. Machine learning model to predict autism investigating eye-tracking dataset. In Proceedings of the 2021 2nd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Dhaka, Bangladesh, 5–7 January 2021; IEEE: New York, NY, USA, 2024; pp. 383–387.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).