Artificial Intelligence for Historical Manuscripts Digitization: Leveraging the Lexicon of Cyril

Abstract

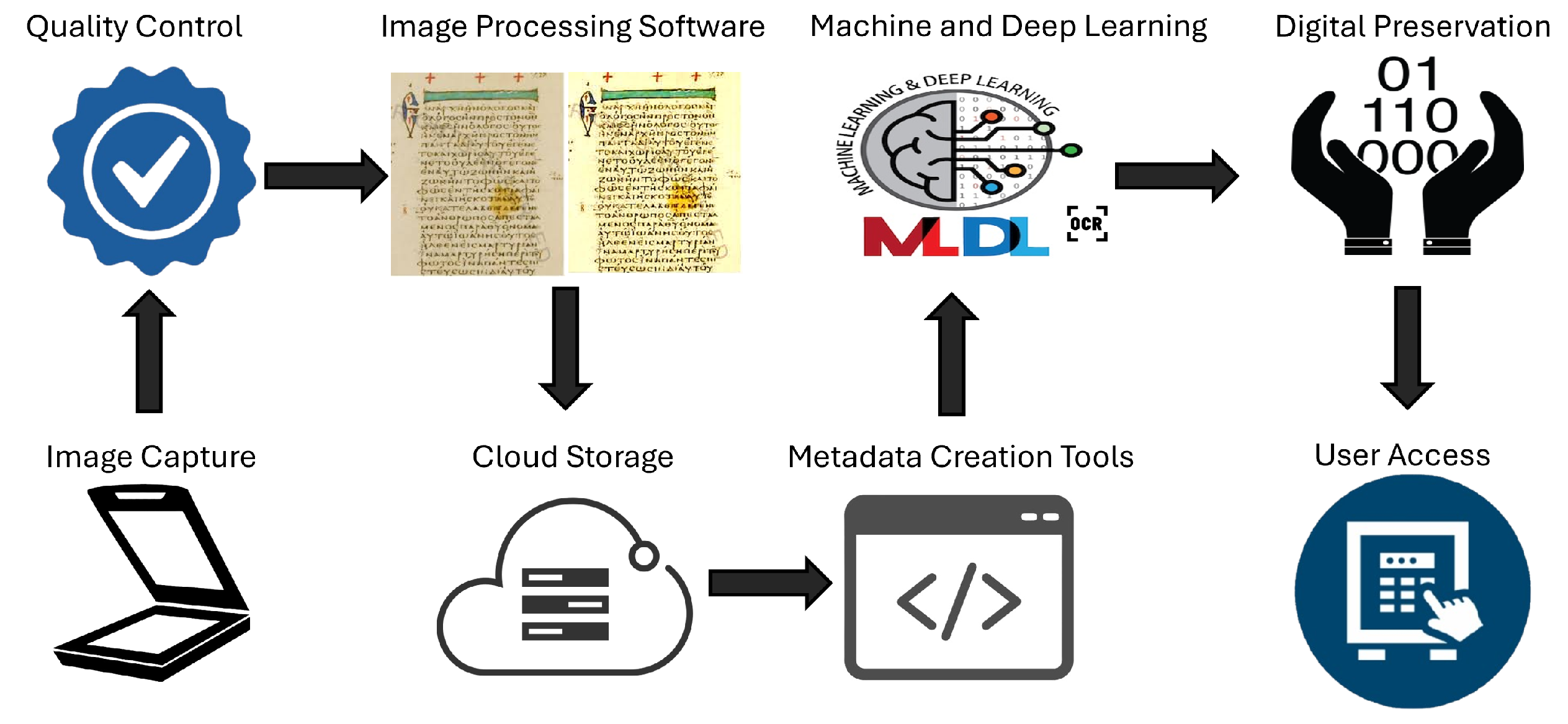

1. Introduction

- A detailed study of the digitization pipeline for historical manuscripts and of the role of AI in improving its performance.

- A qualitative and quantitative experimental evaluation based on Cyril’s Lexicon.

- A search tool that provides direct access to the content and data of Cyril’s Lexicon, e.g., metadata, comments, drafts, and transcriptions, to support philological research and the preservation of cultural heritage.
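The search tool listed above is not specified further in this section. As a minimal sketch, assuming that each searchable record (a metadata field, comment, draft, or transcription) is available as plain text under a hypothetical record id, an accent-insensitive inverted index could look as follows. Case and diacritics (accents, breathings) are folded on both the indexed text and the query so that polytonic and unaccented Greek spellings match. The function names and sample records are hypothetical and do not describe the authors' search engine.

```python
import re
import unicodedata
from collections import defaultdict


def fold(text: str) -> str:
    """Case-fold, then decompose and drop combining marks (accents, breathings)."""
    decomposed = unicodedata.normalize("NFD", text.casefold())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def tokens(text: str) -> list[str]:
    """Split folded text into word tokens, discarding punctuation such as '·'."""
    return re.findall(r"\w+", fold(text))


def build_index(records: dict[str, str]) -> dict[str, set[str]]:
    """Map every folded token to the ids of the records that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for record_id, text in records.items():
        for token in tokens(text):
            index[token].add(record_id)
    return dict(index)


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the ids of records containing every query word (AND semantics)."""
    words = tokens(query)
    if not words:
        return set()
    hits = set(index.get(words[0], set()))
    for word in words[1:]:
        hits &= index.get(word, set())
    return hits


# Hypothetical records standing in for metadata, comments, and transcriptions.
records = {
    "entry-1": "Λόγος· ὁ νοῦς",
    "comment-7": "λογος και νους",
    "draft-3": "ἄλλο τι",
}
index = build_index(records)
print(sorted(search(index, "λόγος")))  # ['comment-7', 'entry-1']
```

Folding diacritics trades precision for recall: a query can no longer distinguish words that differ only in accent or breathing, so a real search engine might offer exact matching as an option.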

2. Background on Manuscripts Digitization

2.1. Image Capture

2.2. Quality Control

2.3. Image Processing

2.4. Cloud Storage

2.5. Metadata Creation

2.6. Machine and Deep Learning Analysis

2.7. Digital Preservation and User Access

3. Digitization of Cyril’s Lexicon

3.1. Cyril’s Lexicon

3.2. Image Capture

3.3. Metadata of Interest

3.4. Annotation Procedure

3.5. Deep Learning as Annotation Assistant

3.6. A Graphical User Interface for Metadata and Transcription Management

- Book selection and metadata management: Users can select a specific lexicon (book) from the interface. Once a book is selected, the GUI allows users to enter and manage its metadata.

- Page selection and metadata assignment: Users can navigate through the pages of the selected book and open any page within the GUI. For each page, metadata can be entered or updated as needed.

- Transcription and annotation editing: Based on the information stored in the CSV files, the GUI enables users to add metadata or transcribe the content of entries, titles, scholia, and decorations. During transcription, the GUI displays the corresponding page and draws bounding boxes around the annotated items, providing a visual reference for accuracy.
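The CSV files mentioned above are not described in detail here. Assuming, hypothetically, one row per annotated item with the columns page, label, x_min, y_min, x_max, y_max, and transcription (pixel coordinates), the loading step behind such a GUI could be sketched as follows: rows are validated, grouped by page, and ordered top-to-bottom and then left-to-right before their boxes are drawn. The column names, label set, and folio ids are assumptions for illustration, not the project's actual schema.

```python
import csv
import io
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical label set, based on the item types named in the text.
LABELS = {"entry", "title", "scholion", "decoration"}


@dataclass(frozen=True)
class Box:
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int
    transcription: str = ""


def load_annotations(stream) -> dict[str, list[Box]]:
    """Read annotation rows from a CSV stream and group valid boxes by page.

    Boxes on each page are sorted top-to-bottom, then left-to-right.
    """
    pages: dict[str, list[Box]] = defaultdict(list)
    for row in csv.DictReader(stream):
        label = row["label"].strip().lower()
        if label not in LABELS:
            raise ValueError(f"unknown label {label!r} on page {row['page']}")
        box = Box(
            label,
            int(row["x_min"]), int(row["y_min"]),
            int(row["x_max"]), int(row["y_max"]),
            row.get("transcription") or "",  # empty until transcribed in the GUI
        )
        if box.x_min >= box.x_max or box.y_min >= box.y_max:
            raise ValueError(f"degenerate box on page {row['page']}: {box}")
        pages[row["page"]].append(box)
    for boxes in pages.values():
        boxes.sort(key=lambda b: (b.y_min, b.x_min))
    return dict(pages)


# Hypothetical sample: two folios whose transcriptions are not yet entered.
sample = """page,label,x_min,y_min,x_max,y_max,transcription
f12r,entry,120,400,900,460,
f12r,title,300,80,700,140,
f12v,scholion,40,50,200,300,
"""
pages = load_annotations(io.StringIO(sample))
print([b.label for b in pages["f12r"]])  # ['title', 'entry']
```

The (top, left) ordering is only a rough reading order; a page laid out in several columns would need column detection before sorting.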

3.7. Search Engine

3.8. Main Challenges

4. Discussion

4.1. Impact of Digitizing Cyril’s Lexicon

4.2. AI in Preserving Cultural Heritage

4.3. Ethical Issues

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Image Capture | Image Preprocessing | Metadata Creation | Text Analysis |
|---|---|---|---|
| Automatic optimization of camera settings; real-time noise reduction and sharpening; uncovering hidden text or underdrawings | Automated transcription via optical character recognition (OCR) (e.g., ABBYY FineReader, Google Tesseract) and handwritten text recognition (HTR) (e.g., Transkribus); image enhancement and text reconstruction | Language identification; extraction of dates, keywords, and document structure; forgery detection and authenticity verification; layout analysis; anomaly identification | Language translation; thematic organization of manuscripts; interactive exploration and visualization tools |
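The table lists noise reduction among the AI-assisted steps of image capture. A learned denoiser does not fit in a short example, so the sketch below uses a classical, non-learned median filter purely to make the operation concrete; it is an illustrative stand-in, not the method used in this work. Images are assumed to be small grayscale arrays given as nested Python lists, and the names `median_filter` and `radius` are hypothetical.

```python
from statistics import median

Image = list[list[int]]


def median_filter(image: Image, radius: int = 1) -> Image:
    """Replace each pixel by the median of its (2r+1)x(2r+1) neighbourhood.

    At the borders the window is truncated to the pixels inside the image.
    """
    if not image:
        return []
    height, width = len(image), len(image[0])
    out: Image = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                image[yy][xx]
                for yy in range(max(0, y - radius), min(height, y + radius + 1))
                for xx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            # median() averages the two middle values for even-sized windows.
            row.append(int(median(window)))
        out.append(row)
    return out


# A bright page with a single dark speck (salt-and-pepper style noise).
noisy = [
    [200, 200, 200, 200],
    [200, 0, 200, 200],
    [200, 200, 200, 200],
]
print(median_filter(noisy)[1])  # [200, 200, 200, 200]
```

A median filter removes isolated specks while keeping sharp edges such as ink strokes, which is why it suits this kind of noise better than a plain averaging blur.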

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Moutsis, S.N.; Ioakeimidou, D.; Tsintotas, K.A.; Evangelidis, K.; Nastou, P.E.; Tsolomitis, A. Artificial Intelligence for Historical Manuscripts Digitization: Leveraging the Lexicon of Cyril. Eng. Proc. 2025, 107, 8. https://doi.org/10.3390/engproc2025107008