Abstract

To address the challenge of image matching posed by significant modal differences in remote sensing images influenced by snow cover, this paper proposes an innovative image transformation-based matching method. Initially, the Pix2Pix-GAN conversion network is employed to transform remote sensing images with snow cover into images without snow cover, reducing the feature disparity between the images. This conversion facilitates the extraction of more discernible features for matching by transforming the problem from snow-covered to snow-free images. Subsequently, a multi-level feature extraction network is utilized to extract multi-level feature descriptors from the transformed images. Keypoints are derived from these descriptors, enabling effective feature matching. Finally, the matching results are mapped back onto the original snow-covered remote sensing images. The proposed method was compared to well-established techniques such as SIFT, RIFT2, R2D2, and ReDFeat and demonstrated outstanding performance. In terms of NCM, MP, Rep, Recall, and F1-measure, our method outperformed the state of the art by 177, 0.29, 0.22, 0.21, and 0.25, respectively. In addition, the algorithm shows robustness over a range of image rotation angles from −40° to 40°. This innovative approach offers a new perspective on the task of matching multi-temporal snow-covered remote sensing images.

1. Introduction

Remote sensing image registration is a crucial prerequisite for various remote sensing applications. It establishes correspondence and alignment between two or more images of the same scene, captured from different sensors, at different times, and from different viewpoints. Multi-temporal remote sensing image registration enables the combined use of images taken at different times, thus generating larger datasets and leveraging complementary features. These images offer abundant texture information and spatial details and are extensively used in areas such as change detection [1], ground target detection [2], and disaster analysis [3,4]. In recent years, a growing number of scholars have started to focus on using snow remote sensing images for their research and analysis. They utilize multi-temporal snow remote sensing data to monitor daily snow changes [5,6,7], measure snow cover areas [8,9,10,11], perform snow segmentation [12,13,14,15,16], and conduct other related studies. Remote sensing data obtained from different time points provide significant support for these studies, all of which demand precise image matching.

Image matching refers to the process of mapping the images to be matched into the same spatial coordinate system as the reference image through spatial transformation. The goal is to identify and correspond similar or identical structures and content across two or more images [17,18]. Image matching is a fundamental core issue in fields such as remote sensing, computer vision, and medicine. As a result, it has led to the development of various specific tasks, including sparse feature matching, dense matching, image block matching, and graph matching. Presently, image matching methods are generally divided into three main categories: region-based, feature-based, and deep learning-based approaches [19].

Region-based matching techniques, commonly referred to as template matching, achieve alignment by assessing the similarity of corresponding windows in two images according to specific similarity measurement criteria [20]. Popular methods include Normalized Cross-Correlation [21] and Mutual Information [22]. These region-based techniques are frequently used in medical image matching and for the precise alignment of remote sensing images. Region-based matching methods have several notable drawbacks. Firstly, these methods often involve high computational complexity, particularly when dealing with high-resolution images or large regions, leading to significant computational demands. Additionally, region-based matching is sensitive to image noise, lighting variations, and background clutter, which can result in inaccurate matching outcomes. Traditional region-based matching methods also exhibit low robustness to scale changes, rotation, or viewpoint variations.

Feature-based methods start by detecting and describing features within images and then establish correspondences by comparing the similarity of these features. This approach is more reliable in handling image distortions and can mitigate some of the limitations associated with region-based methods. The Scale Invariant Feature Transform (SIFT) method is one of the most common and effective feature matching methods, offering advantages such as scale and rotation invariance [23]. It can reliably extract keypoints and generate descriptors to achieve image feature matching. SIFT has demonstrated good results in optical image matching but cannot handle images with significant modal feature differences. The RIFT2 (Radiation-variation Insensitive Feature Transform-2) matching method is an algorithm designed for multi-modal feature matching [24]. By addressing severe nonlinear radiation distortions, it achieves feature matching and has demonstrated good results in image matching tasks involving significant nonlinear radiation differences.

Nonetheless, traditional feature-based matching methods rely largely on hand-crafted descriptors, which are mainly suited for capturing low-level semantic details. This dependence poses substantial limitations when it comes to matching tasks involving multi-temporal remote sensing images [25]. In recent years, deep learning technology has attracted significant attention due to its notable advantages in high-level visual tasks that utilize semantic information and in low-level visual tasks that involve local feature information [26]. Many researchers have applied deep learning methods to image matching tasks, taking advantage of deep learning’s powerful feature extraction capabilities to extract complex deep features from remote sensing images for various studies [27,28,29,30]. The R2D2 (Repeatable and Reliable Detector and Descriptor) algorithm focuses on ensuring the consistency and stability of feature points across different images, so that feature points can be matched accurately under varying conditions [31]. The R2D2 algorithm adapts well to diverse image data and application scenarios, providing a high-performance feature extraction tool for tasks such as image matching and 3D reconstruction in computer vision. The ReDFeat (Recoupled Detection and Description Loss Functions) method re-couples the independent constraints of detection and description in multi-modal feature learning through a mutually weighted strategy [32]. This method effectively learns feature representations in images and promotes information interaction and fusion across different modalities, achieving strong results in multi-modal image matching.

Large areas of snow cover in remote sensing images pose significant challenges to matching tasks. The presence of snow cover on the ground and surface objects reduces the intensity of local feature information in images, which complicates the extraction of reliable features. Additionally, snow disrupts the consistency of local features between the two images to be matched: objects that looked alike before being covered by snow now differ in geometry and appearance. Current matching methods predominantly focus on identifying common features between the images to be matched. However, this approach limits their effectiveness in snow-covered remote sensing images, where a significant portion of feature information is rendered unusable due to the snow cover. As a result, it becomes challenging to obtain a sufficient number of common feature points and descriptors, ultimately affecting the accuracy and reliability of the matching results.

To address these challenges in snow-covered remote sensing images, this paper introduces a multi-temporal snow remote sensing image matching method utilizing transformation matching and develops a dataset specifically designed for research in snow remote sensing matching. First, using the Pix2Pix-GAN image transformation network from deep learning [33], the snow-covered images to be matched are converted into snow-free remote sensing images, reducing feature differences between the two images and easing the matching difficulty. Next, a deep feature extraction network suitable for the transformed snow-free remote sensing images is designed to extract consistent features between the two images, obtaining keypoints for matching. Finally, the matched coordinates are mapped back onto the original snow-covered remote sensing images.

Specifically, the major contributions of this paper are as follows:

- We propose a novel method for matching snow-covered remote sensing images based on Pix2Pix image translation technology. By first transforming snow-covered images into snow-free images, we reduce the modal differences between the images and increase the number of shared features, making the matching task more feasible.

- For the matching of snow-free remote sensing images after transformation, we developed a Multi-layer Feature Extraction Network. This network extracts keypoints and descriptors from the transformed image pairs, enabling efficient and accurate feature matching.

- To demonstrate the superiority of our proposed method, we created a comprehensive snow-covered remote sensing image dataset for both training and testing by downloading images from Google Earth. We conducted extensive comparative experiments with other matching algorithms, demonstrating the advantages of our method.

2. Methodology of This Paper

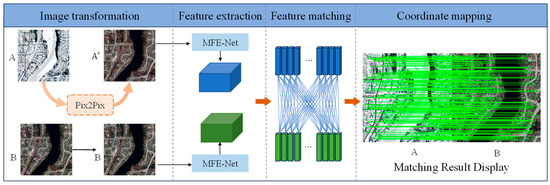

The process of the multi-temporal snow remote sensing image transformation matching method, illustrated in Figure 1, comprises four parts: image transformation, feature extraction, feature matching, and coordinate mapping.

Figure 1. Flowchart of the method in this paper.

Snow coverage presents challenges to matching tasks; however, many residual features remain exposed. This study leverages these residual features by first conducting feature transformation learning. The snow-covered remote sensing image A is transformed into a snow-free remote sensing image A’, which closely resembles the snow-free remote sensing image B in modality and exhibits reduced feature discrepancies. This transformation is achieved using a trained Pix2Pix-GAN network. Next, we employ the proposed Multi-layer Feature Extraction Network (MFE-Net) to extract consistent features from images B and A’. These features are subsequently used for the matching task. Since the Pix2Pix network performs pixel-wise transformations, each pixel’s position remains consistent before and after the transformation. After obtaining the matching coordinate positions, the coordinates are directly mapped back to the snow-covered image A. This completes the feature matching task between the snow-covered image A and the snow-free image B.
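The four stages can be sketched as a thin orchestration function. This is a minimal sketch under stated assumptions, not the authors' implementation: `translate`, `extract`, and `match` are hypothetical callables standing in for the trained Pix2Pix-GAN generator, MFE-Net, and the descriptor matcher. Its purpose is to show why the final coordinate mapping is the identity, given that A' shares its pixel grid with A.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def match_snow_pair(
    image_a,              # snow-covered image A
    image_b,              # snow-free image B
    translate: Callable,  # hypothetical: A -> A' with the same height and width
    extract: Callable,    # hypothetical: image -> (keypoints, descriptors)
    match: Callable,      # hypothetical: (desc_a, desc_b) -> list of (i, j) index pairs
) -> List[Tuple[Point, Point]]:
    """Return matched coordinate pairs (point in A, point in B)."""
    image_a_prime = translate(image_a)       # 1. image transformation
    kps_a, desc_a = extract(image_a_prime)   # 2. feature extraction on A'
    kps_b, desc_b = extract(image_b)         #    and on B
    pairs = match(desc_a, desc_b)            # 3. feature matching
    # 4. Coordinate mapping: A' is pixel-aligned with A, so a keypoint found
    #    at (x, y) in A' refers to the same (x, y) in A. No warp is needed.
    return [(kps_a[i], kps_b[j]) for i, j in pairs]
```

Because step 4 reuses the coordinates unchanged, any localization error comes from detection and matching on A', not from the mapping itself.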

2.1. Multi-Temporal Snow-Covered Remote Sensing Dataset

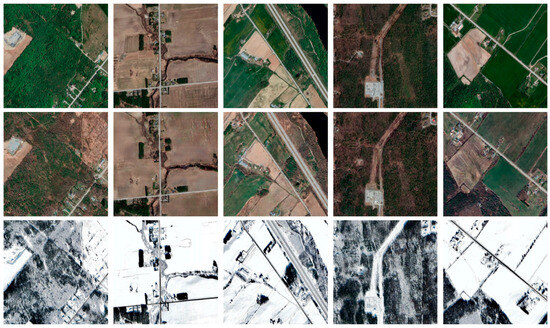

Currently, there are very few datasets specifically designed for the task of multi-temporal snow cover remote sensing image matching. To support in-depth research on this topic, this paper constructs a dedicated multi-temporal snow-covered remote sensing dataset, as depicted in Figure 2. This dataset includes remote sensing images of various scenes, such as forests, farmland, villages, rivers, and towns. We created a total of 4680 pairs of 512 × 512 images and used them to form two datasets. The first dataset is for training and testing image translation networks, with 4000 image pairs for training and the remaining 680 pairs for testing. The test results are used to create the second dataset. The second dataset is used for training and testing image feature extraction networks, with 600 image pairs serving as the training data for the network.

Figure 2. Multi-temporal snow-covered remote sensing dataset.

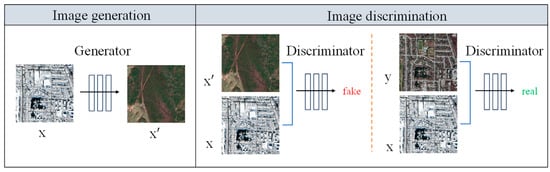

2.2. Image Transformation Techniques in Deep Learning

Pix2Pix-GAN is a deep learning-based image transformation network, primarily composed of a generator (generative model) and a discriminator (discriminative model), as illustrated in Figure 3. The generator is responsible for producing the corresponding target image based on the input image, while the discriminator evaluates the authenticity of the generated image. Through adversarial learning, a transformation relationship is established between the input image and the target image. In the Pix2Pix framework, the generator employs a U-Net architecture, which is an encoder–decoder structure that integrates multi-layer feature extraction with detail preservation. The skip connections in U-Net enable the network to directly utilize low-level features from the encoding phase during the decoding process, thereby enhancing the spatial resolution and local detail representation of the generated images. The discriminator adopts a PatchGAN architecture, which does not evaluate the entire image but instead performs binary classification on several small regions (patches) within the image. By assessing the authenticity of local regions, PatchGAN strengthens the generator’s ability to capture local structures and texture details. This fine-grained discrimination mechanism allows the generator to optimize local details more effectively, resulting in visually more realistic images.

Figure 3. Schematic diagram of Pix2Pix-GAN image transformation.
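To make the "small regions" judged by the discriminator concrete, the sketch below computes the receptive field and score-map size of a PatchGAN-style discriminator. The layer configuration (five 4 × 4 convolutions with strides 2, 2, 2, 1, 1 and padding 1) is the commonly used 70 × 70 PatchGAN default from the original Pix2Pix work [33] and is an assumption here; the paper does not state which configuration was used.

```python
# Assumed layer configuration: (kernel, stride, padding) per convolution.
PATCHGAN_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 1), (4, 1, 1)]


def receptive_field(layers):
    """Side length, in input pixels, seen by one unit of the output map."""
    rf, jump = 1, 1
    for kernel, stride, _ in layers:
        rf += (kernel - 1) * jump  # each layer widens the field by (k - 1) input-space steps
        jump *= stride             # spacing between adjacent units, in input pixels
    return rf


def output_size(size, layers):
    """Spatial size of the discriminator score map for a square input."""
    for kernel, stride, padding in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size


print(receptive_field(PATCHGAN_LAYERS))   # 70
print(output_size(512, PATCHGAN_LAYERS))  # 62
```

Under this assumed configuration, a 512 × 512 input yields a 62 × 62 map of real/fake scores, each covering a 70 × 70 patch of the input.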

The training data for the transformation network consists of pairs of images, each comprising a snow-covered remote sensing image and a snow-free remote sensing image. The generator and discriminator iteratively adjust the network parameters through adversarial training, progressively optimizing the quality of the generated snow-free remote sensing images. This process aims to bring the generated images closer to the authentic snow-free remote sensing images, thereby minimizing feature discrepancies and achieving tighter modality alignment.

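Following the standard Pix2Pix formulation [33], the loss function of the network is as follows:

$$G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G) \tag{1}$$

where

$$\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right] \tag{2}$$

$$\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z) \rVert_{1}\right] \tag{3}$$

Here, $x$ represents the input image, which is the real snow-covered remote sensing image; $y$ denotes the real snow-free remote sensing image; $z$ is random noise; and $\lambda$ weights the $\mathcal{L}_{L1}$ term. The objective function of the generator is composed of both the $\mathcal{L}_{L1}$ loss and the $\mathcal{L}_{cGAN}$ loss. The $\mathcal{L}_{L1}$ loss ensures pixel-level similarity between the generated image and the real image, while the $\mathcal{L}_{cGAN}$ loss drives the generator to produce realistic images that are difficult for the discriminator to differentiate.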
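As a small numerical illustration of how the pixel-level term and the adversarial term combine in the generator objective, the following sketch evaluates both on toy values. It is an assumption-laden example rather than the training code: the weight `lam = 100` is the default reported for Pix2Pix [33] and is not stated in this paper, the adversarial term uses the non-saturating form (binary cross-entropy against the "real" label) that is common in practice, and images are represented as flat lists of pixel values.

```python
import math


def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for a single probability."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def generator_loss(d_fake_probs, fake_pixels, real_pixels, lam=100.0):
    """Return (total, adversarial, l1) for one generated image.

    d_fake_probs: discriminator outputs in (0, 1), one per patch, for the generated image.
    fake_pixels / real_pixels: generated and real snow-free images as flat lists.
    lam: weight of the L1 term (assumed value, see the note above).
    """
    # Adversarial term: the generator wants every patch to be classified as real (1).
    adv = sum(bce(p, 1.0) for p in d_fake_probs) / len(d_fake_probs)
    # L1 term: mean absolute pixel difference to the real snow-free image.
    l1 = sum(abs(f - r) for f, r in zip(fake_pixels, real_pixels)) / len(fake_pixels)
    return adv + lam * l1, adv, l1


total, adv, l1 = generator_loss([0.5, 0.5], [0.2, 0.4], [0.0, 0.5])
print(round(adv, 4), round(l1, 4), round(total, 4))  # 0.6931 0.15 15.6931
```

With a large weight on the L1 term, the pixel-level similarity dominates the total early in training, while the adversarial term mainly sharpens local texture.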

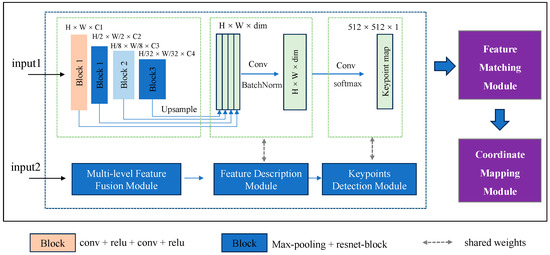

2.3. Multi-Level Feature Extraction Network

To extract consistent features between snow-free remote sensing images generated by the conversion process and real snow-free remote sensing images, this paper proposes the MFE-Net network. This network integrates both high-level semantic information and low-level detailed information from the images, producing 128-dimensional image feature maps for subsequent matching. MFE-Net is a hybrid Siamese network consisting of three components: the multi-level feature fusion module, the feature description module, and the feature point detection module, as illustrated in Figure 4. The network’s inputs are snow-free remote sensing images generated by the Pix2Pix-GAN and real snow-free remote sensing images. The upper half of Figure 4 illustrates the detailed module structure, while the lower half depicts the sequential relationships between the modules. The feature description module and point detection module share weights, which enhances the model’s performance and efficiency.

Figure 4. Multi-level feature extraction network.

The number of convolutional layers in the network determines the level of feature abstraction: more layers produce more abstract features, whereas fewer layers retain more detailed local structural features. To balance feature abstraction with localization accuracy, ensuring that features possess both rich high-level semantic information and adequate localization precision, this paper designs a multi-level feature fusion module that integrates features at various scales. This approach aims to produce robust and more representative descriptors. Images first pass through several convolutional layers to extract features at four distinct scales and channel dimensions. These features are then resampled to a uniform size and summed along the channel dimension to integrate multi-layer information and produce the image descriptor. During the feature point detection phase, the network reduces the dimensionality of the features through convolutional operations to generate a score map of feature points. Non-maximum suppression is applied to select high-scoring positions as candidate matching points, with descriptors at these positions used for subsequent matching tasks.
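The keypoint selection step can be illustrated with a plain non-maximum suppression routine over a score map. This is a minimal sketch, not MFE-Net's detector: the window radius, score threshold, and the list-of-rows representation are assumptions chosen for readability.

```python
def nms_keypoints(score_map, radius=1, threshold=0.5, top_k=1000):
    """Select local maxima of a 2D score map given as a list of rows.

    A pixel is kept if its score reaches `threshold` and no pixel in the
    surrounding (2 * radius + 1)-sized window has a higher score. Exact ties
    inside a window are all kept. Returns (x, y) tuples, best score first.
    """
    height, width = len(score_map), len(score_map[0])
    candidates = []
    for y in range(height):
        for x in range(width):
            score = score_map[y][x]
            if score < threshold:
                continue
            # Neighbourhood window, clipped at the image border.
            window = [
                score_map[yy][xx]
                for yy in range(max(0, y - radius), min(height, y + radius + 1))
                for xx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            if score >= max(window):
                candidates.append((score, x, y))
    candidates.sort(reverse=True)  # highest score first
    return [(x, y) for _, x, y in candidates[:top_k]]


scores = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.3, 0.0],
    [0.1, 0.3, 0.2, 0.6],
    [0.0, 0.0, 0.1, 0.7],
]
print(nms_keypoints(scores))  # [(1, 1), (3, 3)]
```

The descriptor for each selected keypoint would then be read from the fused feature map at the same (x, y) position.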

3. Experimental Analysis

3.1. Experimental Setup

The proposed method is implemented using the PyTorch framework. The experiments are conducted using an NVIDIA RTX 3080 GPU with 12 GB of memory. The initial learning rate is set to 0.001, with each training session requiring 50 iterations. The Adam optimizer is employed for parameter optimization.

3.2. Matching Experiment Evaluation Metrics

We use the following metrics to evaluate the matching experiments: repeatable rate measures the consistency of detected feature points across different images; number of correct matches counts the number of accurate feature point correspondences; recall assesses the proportion of true positive matches among all possible matches; match precision evaluates the accuracy of the matched points; and F1-Measure provides a balanced measure of precision and recall.

- (1) Repeatable rate

The repeatable rate is the ratio of detected correspondences to the total number of keypoints identified in both images. For a pair of images, two keypoints are considered a correspondence if their coordinates meet the condition in Equation (4):

$$\lVert H\,p_i^{A} - p_j^{B} \rVert_{2} \le \varepsilon \tag{4}$$

Here, $p_i^{A}$ and $p_j^{B}$ represent the $i$-th and $j$-th keypoints in the first and second images, respectively, $H$ denotes the transformation relationship between the two images, and $\varepsilon$ is the distance threshold.

- (2) Number of correct matches

Keypoints $p_i^{A}$ and $p_j^{B}$ are considered matched if the distance between their descriptors $D_A$ and $D_B$ is smaller than a predefined threshold $T$. If a matched pair also satisfies Equation (4), it is counted as a correct match; otherwise, it is counted as an incorrect match.

- (3) Recall

Recall is defined as the proportion of correctly matched point pairs relative to the total number of true corresponding point pairs in the initial match set.

- (4) Match precision

Match precision is calculated as the ratio of correctly matched point pairs to the total number of both correct and incorrect matches in the results.

- (5) F1-Measure

The F1-Measure is the harmonic mean of recall and match precision, so it reflects the relationship between true corresponding points and correctly detected matched pairs in a single value.
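The five metrics can be computed together from two keypoint sets, a list of putative matches, and the known transformation between the images. The sketch below is one possible reading of the definitions above, with two labeled assumptions: a ground-truth correspondence is counted once per keypoint of the first image that has at least one keypoint of the second image within the threshold, and the repeatable rate is normalized by the mean keypoint count of the two images. The function and variable names are illustrative.

```python
import math


def project(H, point):
    """Apply a 3x3 homography (nested lists) to an (x, y) point."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (
        (H[0][0] * x + H[0][1] * y + H[0][2]) / w,
        (H[1][0] * x + H[1][1] * y + H[1][2]) / w,
    )


def is_correspondence(H, p_a, p_b, eps):
    """Distance test between the projected keypoint of image A and a keypoint of image B."""
    x, y = project(H, p_a)
    return math.hypot(x - p_b[0], y - p_b[1]) <= eps


def evaluate(kps_a, kps_b, matches, H, eps=3.0):
    """matches is a list of (index in kps_a, index in kps_b) pairs."""
    # Assumption: one correspondence per keypoint of A with a partner in B.
    n_corr = sum(
        1 for p in kps_a if any(is_correspondence(H, p, q, eps) for q in kps_b)
    )
    # Number of correct matches: putative matches that pass the distance test.
    ncm = sum(1 for i, j in matches if is_correspondence(H, kps_a[i], kps_b[j], eps))
    # Assumption: repeatability is normalized by the mean keypoint count.
    mean_kps = (len(kps_a) + len(kps_b)) / 2.0
    rep = n_corr / mean_kps if mean_kps else 0.0
    recall = ncm / n_corr if n_corr else 0.0
    mp = ncm / len(matches) if matches else 0.0
    f1 = 2 * mp * recall / (mp + recall) if (mp + recall) else 0.0
    return {"Rep": rep, "NCM": ncm, "Recall": recall, "MP": mp, "F1": f1}
```

For example, with four keypoints per image, two true correspondences, and three putative matches of which two are correct, this returns a repeatable rate of 0.5, a recall of 1.0, a match precision of 2/3, and an F1-Measure of 0.8.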

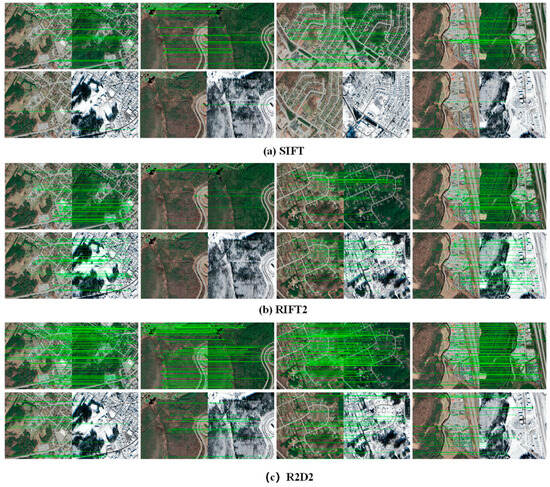

3.3. Impact of Snow on Matching

To investigate the impact of snow coverage on remote sensing image matching, this study compares three algorithms: SIFT and RIFT2 from traditional matching methods and R2D2 from deep learning approaches. A total of 36 sets of remote sensing images, each representing different scenes, are used for the matching tests. For each test, 1000 feature points are extracted with a matching error threshold of 3 pixels. The results are averaged across all experiments. The data and visual results are presented in Table 1 and Figure 5.

Table 1. Effect of snow on matching algorithm.

Figure 5. Effect of snow on matching algorithm.

The results in Table 1 and Figure 5 show that snow coverage significantly degrades the evaluated metrics of all three matching algorithms, even when the images cover the same region. Snow coverage impedes the extraction of consistent features between images, making it difficult for existing matching methods to handle such scenarios effectively.

3.4. Snow Remote Sensing Image Transformation

To investigate the differences between snow-covered remote sensing images before and after conversion using the Pix2Pix network, a test is conducted with 30 datasets. The evaluation metrics employed include Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), and Mean Squared Error (MSE). Higher PSNR and SSIM values, along with lower MSE, indicate reduced differences between the two images and greater similarity. The visual results of the conversion are presented in Figure 6. For each dataset, the top row displays the real snow-covered remote sensing images, while the second row presents the generated snow-free images. The data results are summarized in Table 2. Group A represents the results of the calculations between snow-covered remote sensing images and real snow-free remote sensing images. Group B presents the results of the comparisons between generated snow-free remote sensing images and real snow-free remote sensing images. The results indicate that, following the conversion process, the feature differences between the images to be matched are reduced, and the modalities of the two images become more similar.

Figure 6. Conversion results for snow-covered remote sensing images.

Table 2. Quantitative evaluation results before and after conversion.
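For reference, MSE and PSNR can be computed as in the sketch below, which treats each image as a flat sequence of pixel values. The 8-bit peak value of 255 is an assumption about the image format. SSIM is omitted because it involves local windowed statistics; library implementations such as `structural_similarity` in scikit-image are normally used for it.

```python
import math


def mse(image_a, image_b):
    """Mean squared error between two equally sized flat pixel sequences."""
    return sum((a - b) ** 2 for a, b in zip(image_a, image_b)) / len(image_a)


def psnr(image_a, image_b, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(image_a, image_b)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)


reference = [0, 0, 0, 0]
shifted = [10, 10, 10, 10]
print(mse(reference, shifted))             # 100.0
print(round(psnr(reference, shifted), 2))  # 28.13
```

Group A would apply these functions to each (snow-covered, real snow-free) pair and Group B to each (generated snow-free, real snow-free) pair.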

3.5. Comparative Experiments

To evaluate the performance of the proposed multi-temporal snow remote sensing image transformation matching method, comparative experiments were conducted with R2D2, ReDFeat, RIFT2, and SIFT algorithms. Matching tests were conducted on 30 sets of multi-temporal snow remote sensing data, each featuring various scenes, and the results were averaged. The experiments were divided into three parts: the first part assessed the overall performance of the proposed method by keeping the number of extracted feature points and the pixel error threshold constant; the second part examined the effect of varying pixel error thresholds while maintaining a constant number of feature points; and the third part explored the impact of varying the number of extracted feature points while keeping the pixel error threshold constant. All methods employed fast nearest neighbor matching for feature correspondence.
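Nearest-neighbor descriptor matching can be illustrated with a brute-force version. This sketch is not the fast approximate search used in the experiments: it compares every descriptor pair exhaustively with Euclidean distance, and the mutual-consistency check is an added assumption, since the paper does not say whether one was applied.

```python
import math


def nearest(query, candidates):
    """Index of the candidate descriptor closest to `query` (Euclidean distance)."""
    return min(range(len(candidates)), key=lambda j: math.dist(query, candidates[j]))


def mutual_nn_matches(desc_a, desc_b):
    """Return (i, j) pairs where desc_a[i] and desc_b[j] are each other's nearest neighbor."""
    a_to_b = [nearest(d, desc_b) for d in desc_a]
    b_to_a = [nearest(d, desc_a) for d in desc_b]
    return [(i, j) for i, j in enumerate(a_to_b) if b_to_a[j] == i]


desc_a = [(0.0, 0.0), (1.0, 1.0), (8.0, 8.0)]
desc_b = [(0.9, 1.1), (0.1, 0.0), (9.0, 9.0)]
print(mutual_nn_matches(desc_a, desc_b))  # [(0, 1), (1, 0), (2, 2)]
```

The exhaustive search costs time proportional to the product of the two descriptor counts, which is why approximate nearest-neighbor indexes are preferred when thousands of keypoints are extracted per image.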

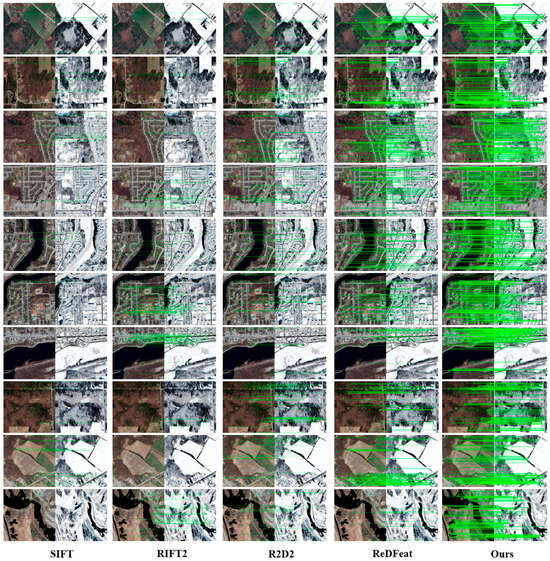

The results of the initial phase of the comparative experiments are presented in Figure 7 and Table 3. In these experiments, each algorithm extracted 1000 feature points for the matching task, with the pixel error threshold set to 3. The figures and tables demonstrate that the proposed method achieved superior results in terms of the number of correct matches and matching precision, outperforming R2D2, ReDFeat, RIFT2, and SIFT in these metrics.

Figure 7. Matching results of different methods.

Table 3. Quantitative results of different methods.

Traditional methods perform inadequately when dealing with snow-covered images because snow blurs local feature information, thereby increasing the feature differences between the images. This difficulty hinders traditional methods from effectively utilizing feature information to extract consistent features between the images. Although SIFT exhibits some degree of rotation and scale invariance, it struggles to provide effective descriptors for matching in the presence of snow and radiometric differences in remote sensing images. The RIFT2 method extracts features at a single scale and fails to leverage multi-level feature information effectively, rendering it inadequate for managing the significant noise introduced by snow. R2D2, designed for homogeneous images, is highly sensitive to nonlinear radiometric variations present in remote sensing images. Keypoint detection is significantly influenced by descriptors, and the differences caused by snow further complicate consistent feature extraction, making it unsuitable for remote sensing image matching tasks affected by snow coverage. While ReDFeat’s feature extraction network does not extensively focus on multi-level feature information, it still achieves notable matching results.

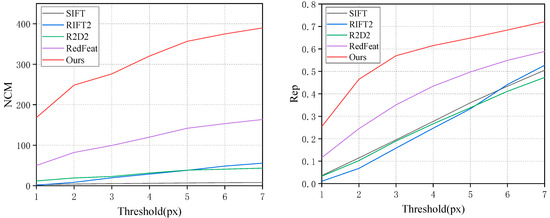

During the second phase of the experiments, the number of extracted feature points was kept constant at 1000, while the pixel error threshold ranged from 1 to 7. To achieve a greater number of correct matching pairs, the algorithm must be capable of extracting robust descriptors and repeatable keypoints. Thus, this phase uses keypoint repeatability and the number of correct matches to evaluate the performance of the different algorithms. Figure 8 presents a line graph illustrating the experimental results, showing that the proposed method offers advantages in both keypoint repeatability and the number of correct matches. Over the range of pixel error thresholds from 1 to 7, our method shows an average increase of 189 in NCM and an average improvement of 0.16 in Rep compared to the ReDFeat method. This indicates that our method can provide more robust descriptors and repeatable keypoints.

Figure 8. Comparison test at different pixel thresholds.

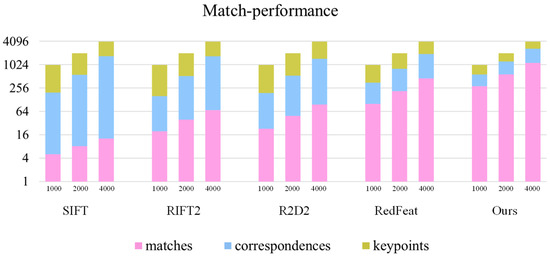

In the third phase of the experiments, the pixel error threshold was kept constant at 3 while varying the number of extracted feature points. Each algorithm extracted 1000, 2000, and 4000 feature points, and the number of matches and correspondences was recorded for each case. As shown in Figure 9, the proposed method outperformed the other algorithms in terms of both the number of matches and the number of correspondences. The average number of matches and correspondences achieved by the proposed method was 407 and 455 higher, respectively, than those of the second-best method across the different quantities of extracted feature points.

Figure 9. Comparison test for extracting different numbers of feature points.

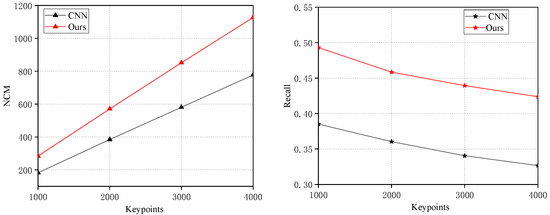

3.6. Ablation Study

The key component of the proposed method is the multi-level feature extraction network. To validate the advantages of this network, a comparison was conducted between the method utilizing the multi-level feature extraction network and a standard convolutional method without it, with the error threshold fixed at 3 and other structures and procedures held constant. As shown in Figure 10, the number of correct matches increases gradually with the number of extracted feature points for both methods. However, the method using the multi-level feature extraction network demonstrates superior performance, with an average NCM exceeding that of the other method by 227. Although both methods experience a decrease in recall as the number of extracted feature points increases, the multi-level feature extraction network method maintains an average recall that is 0.1 higher. The experimental results suggest that the multi-level feature extraction network is more effective at integrating information across different image scales, resulting in more robust feature descriptors and improved matching performance.

Figure 10. Network ablation experiment.

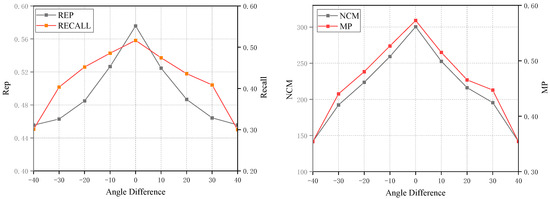

3.7. Algorithm Rotation Robustness Experiment

To assess the rotation robustness of the proposed method, 36 sets of images were chosen for evaluation. For each set, one image was rotated through various angles, and the experiments involved extracting 1000 feature points with a pixel threshold set to 3. As depicted in Figure 11, the evaluation encompassed the repeatability of matches, recall rate, matching precision, and the number of correct matches. The analysis reveals that, within a rotation range of −40° to 40°, the proposed method consistently achieves a high number of correct matches. This finding demonstrates that the algorithm exhibits a notable degree of rotation robustness, rendering it suitable for multi-temporal snow remote sensing image matching tasks involving moderate rotation angles.

Figure 11. Rotation robustness experiment of the algorithm.
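When one image of a pair is rotated synthetically, the ground-truth transformation needed by the evaluation metrics is a rotation about the image center. The sketch below builds that matrix in homogeneous coordinates; the 512 × 512 image size follows the dataset description, while the 10° step and the pixel-center convention `(size - 1) / 2` are assumptions for illustration.

```python
import math


def rotation_about_center(angle_deg, width, height):
    """3x3 homogeneous matrix rotating (x, y) image coordinates about the image center."""
    theta = math.radians(angle_deg)
    c, s = math.cos(theta), math.sin(theta)
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    # p' = R (p - center) + center, expanded into a single affine matrix.
    return [
        [c, -s, cx - c * cx + s * cy],
        [s, c, cy - s * cx - c * cy],
        [0.0, 0.0, 1.0],
    ]


def apply_affine(H, point):
    """Apply the matrix to an (x, y) point (the last row is [0, 0, 1], so no division is needed)."""
    x, y = point
    return (
        H[0][0] * x + H[0][1] * y + H[0][2],
        H[1][0] * x + H[1][1] * y + H[1][2],
    )


# One ground-truth matrix per tested angle (assumed 10-degree steps).
ground_truth = {a: rotation_about_center(a, 512, 512) for a in range(-40, 41, 10)}
```

Each matrix can then serve as the transformation between the two images when checking whether a match falls within the pixel threshold.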

4. Conclusions

To tackle the challenge of matching multi-temporal snow remote sensing images, this paper introduces an image transformation matching method. The approach initially employs a network to generate snow-free remote sensing images, thereby mitigating modality and feature differences between images. Subsequently, feature extraction is conducted to derive keypoints from the feature maps for matching, and ultimately, the matched coordinates are mapped back to the original snow-covered remote sensing images. By leveraging deep learning-based image transformation networks, the snow remote sensing image matching problem is effectively transformed into a snow-free remote sensing image matching problem. In addition, we developed a dedicated snow remote sensing image matching dataset for the comparison tests. The experimental results demonstrate that the proposed method consistently outperforms well-established techniques such as SIFT, RIFT2, R2D2, and ReDFeat, achieving significant improvements in key performance metrics. Specifically, it showed superior performance with increases of 177 in NCM, 0.29 in MP, 0.22 in Rep, 0.21 in recall, and 0.25 in F1-measure. Furthermore, the method exhibited strong robustness across a range of image rotation angles from −40° to 40°, underscoring its effectiveness and reliability in challenging conditions. Although the output of the image transformation network may contain local inaccuracies, which can make image information in specific regions harder to use during subsequent matching, the overall performance of multi-temporal snow remote sensing image matching has been markedly enhanced by this image transformation matching method.

Although the proposed method primarily addresses feature extraction challenges arising from significant modality differences due to snow coverage, it does not fully address the algorithm’s robustness under large rotation angles. Consequently, the next step will involve investigating multi-temporal snow remote sensing image matching across various rotation angles to enhance the algorithm’s robustness and practicality.

Author Contributions

Conceptualization, Z.F.; methodology, Z.F. and J.Z.; software, J.Z.; validation, J.Z.; formal analysis, J.Z.; investigation, J.Z.; resources, B.-H.T. and J.Z.; data curation, Z.F.; writing—original draft preparation, J.Z.; writing—review and editing, J.Z. and Z.F.; visualization, J.Z.; supervision, Z.F.; project administration, B.-H.T.; funding acquisition, Bo-Hui Tang. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Major scientific and technological projects of Yunnan Province [202202AD080010]; the Yunnan Fundamental Research Projects under Grant [202301AT070463]; the Key Laboratory of State Forestry and Grassland Administration on Forestry and Ecological Big Data Open Fund Priority Project [2022-BDK-01].

Data Availability Statement

The data in this study are available on request from the corresponding author.

Acknowledgments

We utilized AI-assisted technology for language editing, specifically OpenAI’s ChatGPT. We would also like to express our sincere gratitude to Wang Leiguang from the Institute of Big Data and Artificial Intelligence at Southwest Forestry University for providing valuable data support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, H.; Qi, Z.; Shi, Z. Remote sensing image change detection with transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607514.

- Zhang, J.; Lei, J.; Xie, W.; Fang, Z.; Li, Y.; Du, Q. SuperYOLO: Super resolution assisted object detection in multimodal remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605415.

- Ge, J.; Tang, H.; Yang, N.; Hu, Y. Rapid identification of damaged buildings using incremental learning with transferred data from historical natural disaster cases. ISPRS J. Photogramm. Remote Sens. 2023, 195, 105–128.

- Han, W.; Zhang, X.; Wang, Y.; Wang, L.; Huang, X.; Li, J.; Wang, S.; Chen, W.; Li, X.; Feng, R.; et al. A survey of machine learning and deep learning in remote sensing of geological environment: Challenges, advances, and opportunities. ISPRS J. Photogramm. Remote Sens. 2023, 202, 87–113.

- Wang, Y.; Gu, L.; Li, X.; Gao, F.; Jiang, T.; Ren, R. An improved spatiotemporal fusion algorithm for monitoring daily snow cover changes with high spatial resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5413617.

- He, L.; Xue, B.; Hui, F.; Xu, S.; Chen, Z.; Cheng, X. Towards daily snow depth estimation on arctic sea ice during the whole winter season from passive microwave radiometer data. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4300615.

- Wang, Q.; Ma, Y.; Li, J. Snow cover phenology in Xinjiang based on a novel method and MOD10A1 data. Remote Sens. 2023, 15, 1474.

- Cannistra, A.F.; Shean, D.E.; Cristea, N.C. High-resolution CubeSat imagery and machine learning for detailed snow-covered area. Remote Sens. Environ. 2021, 258, 112399.

- Wang, Y.; Wang, J. Monitoring Snow Cover in Typical Forested Areas Using a Multi-Spectral Feature Fusion Approach. Atmosphere 2024, 15, 513.

- Thaler, E.A.; Crumley, R.L.; Bennett, K.E. Estimating snow cover from high-resolution satellite imagery by thresholding blue wavelengths. Remote Sens. Environ. 2023, 285, 113403.

- Cordes, K.; Broszio, H. Camera-Based Road Snow Coverage Estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4011–4019.

- Hu, K.; Zhang, E.; Xia, M.; Weng, L.; Lin, H. Mcanet: A multi-branch network for cloud/snow segmentation in high-resolution remote sensing images. Remote Sens. 2023, 15, 1055.

- Zhu, Z.; Woodcock, C.E. Automated cloud, cloud shadow, and snow detection in multitemporal Landsat data: An algorithm designed specifically for monitoring land cover change. Remote Sens. Environ. 2014, 152, 217–234.

- Xia, M.; Li, Y.; Zhang, Y.; Weng, L.; Liu, J. Cloud/snow recognition of satellite cloud images based on multiscale fusion attention network. J. Appl. Remote Sens. 2020, 14, 032609.

- Vachmanus, S.; Ravankar, A.A.; Emaru, T.; Kobayashi, Y. Multi-modal sensor fusion-based semantic segmentation for snow driving scenarios. IEEE Sens. J. 2021, 21, 16839–16851.

- Baumer, J.; Metzger, N.; Hafner, E.D.; Daudt, R.C.; Wegner, J.D.; Schindler, K. Automatic Image Compositing and Snow Segmentation for Alpine Snow Cover Monitoring. In Proceedings of the 2023 10th IEEE Swiss Conference on Data Science (SDS), Zurich, Switzerland, 22–23 June 2023; pp. 77–84.

- Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image matching from handcrafted to deep features: A survey. Int. J. Comput. Vis. 2021, 129, 23–79.

- Fu, Z.; Qin, Q.; Luo, B.; Wu, C.; Sun, H. A local feature descriptor based on combination of structure and texture information for multispectral image matching. IEEE Geosci. Remote Sens. Lett. 2018, 16, 100–104.

- Zhang, S.; Fu, Z.; Liu, J.; Su, X.; Luo, B.; Nie, H.; Tang, B.-H. Multilevel attention Siamese network for keypoint detection in optical and SAR images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5404617.

- Ye, Y.; Bruzzone, L.; Shan, J.; Bovolo, F.; Zhu, Q. Fast and robust matching for multimodal remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9059–9070.

- Martinez, A.; Garcia-Consuegra, J.; Abad, F. A correlation-symbolic approach to automatic remotely sensed image rectification. In Proceedings of the IEEE 1999 International Geoscience and Remote Sensing Symposium, IGARSS’99 (Cat. No. 99CH36293), Hamburg, Germany, 28 June–2 July 1999; pp. 336–338.

- Kern, J.P.; Pattichis, M.S. Robust multispectral image registration using mutual-information models. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1494–1505.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Li, J.; Shi, P.; Hu, Q.; Zhang, Y. RIFT2: Speeding-up RIFT with a new rotation-invariance technique. arXiv 2023, arXiv:2303.00319.

- Wang, S.; Quan, D.; Liang, X.; Ning, M.; Guo, Y.; Jiao, L. A deep learning framework for remote sensing image registration. ISPRS J. Photogramm. Remote Sens. 2018, 145, 148–164.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Ye, Y.; Tang, T.; Zhu, B.; Yang, C.; Li, B.; Hao, S. A multiscale framework with unsupervised learning for remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5622215.

- Xie, H.; Zhang, Y.; Qiu, J.; Zhai, X.; Liu, X.; Yang, Y.; Zhao, S.; Luo, Y.; Zhong, J. Semantics lead all: Towards unified image registration and fusion from a semantic perspective. Inf. Fusion 2023, 98, 101835.

- Quan, D.; Wang, S.; Huyan, N.; Li, Y.; Lei, R.; Chanussot, J.; Hou, B.; Jiao, L. A concurrent multiscale detector for end-to-end image matching. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 3560–3574.

- Nie, H.; Fu, Z.; Tang, B.-H.; Li, Z.; Chen, S. A Multiscale unsupervised orientation estimation method with transformers for remote sensing image matching. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6000905.

- Revaud, J.; De Souza, C.; Humenberger, M.; Weinzaepfel, P. R2d2: Reliable and repeatable detector and descriptor. Adv. Neural Inf. Process. Syst. 2019, 32.

- Deng, Y.; Ma, J. ReDFeat: Recoupling detection and description for multimodal feature learning. IEEE Trans. Image Process. 2022, 32, 591–602.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).