Abstract

Retrieval-Augmented Generation (RAG) combined with Large Language Models (LLMs) introduces a new paradigm for clinical-trial data analysis that is both real-time and knowledge-traceable. This study targets a multi-site, real-world data environment. It builds a hierarchical RAG pipeline spanning electronic health records (EHRs), National Health Insurance (NHI) billing codes, and image-vector indices. The LLM is optimized through lightweight LoRA/QLoRA fine-tuning and reinforcement-learning-based alignment. The system first retrieves key textual and imaging evidence from heterogeneous data repositories and then fuses these artifacts into the context window for clinical report generation. Experimental results show marked improvements over traditional manual statistics and prompt-only models in retrieval accuracy, textual coherence, and response latency, while reducing human error and workload. In evaluation, the proposed multimodal RAG-LLM workflow achieved statistically significant gains in three core metrics (recall, factual consistency, and expert ratings) and substantially shortened overall report-generation time, demonstrating clear efficiency advantages over conventional manual processes. However, LLMs alone often face challenges such as limited real-world grounding, hallucination risks, and restricted context windows. Similarly, RAG systems, while improving factual consistency, depend heavily on retrieval quality and may yield incoherent synthesis if evidence is misaligned. These limitations underline the complementary nature of integrating RAG and LLM architectures in a clinical reporting context. Quantitatively, the proposed system achieved a Composite Quality Index (CQI) of 78.3, outperforming strong baselines such as Med-PaLM 2 (72.6) and PMC-LLaMA (74.3), and reduced report drafting time by over 75% (p < 0.01). These findings support the practical feasibility of the framework for automated clinical reporting.

1. Introduction

1.1. Research Background and Motivation

After a clinical trial concludes, statistical analysis and report drafting consistently pose major bottlenecks. Traditional manual workflows require statisticians and medical writers to iteratively validate results, with an average drafting period of three months, a critical obstacle to new drug market entry []. Although Large Language Models (LLMs) can rapidly generate text, their limited context windows and gaps between training knowledge and real-world data often lead to omitted key evidence or “hallucinated” statements [,]. Retrieval-Augmented Generation (RAG) addresses this by retrieving authoritative external sources and feeding evidence into the LLM, effectively reducing errors and enhancing clinical verifiability []. Thus, integrating RAG and LLM technologies with a hospital’s existing electronic health records (EHRs), National Health Insurance (NHI) billing codes, and imaging systems is a key challenge in accelerating automated clinical reporting [].

1.2. Research Questions and Objectives

This study is designed to confront three central challenges in clinical trial analytics:

- Scale and complexity of data processing: Randomized controlled trials (RCTs) typically yield millions of mixed structured and unstructured records. These datasets’ sheer volume and heterogeneity far exceed the single-pass processing capacity of conventional Large Language Models (LLMs).

- High reliability and traceability of results: Medical decision-making demands rigorous factual accuracy because any informational error can have serious consequences. Building a complete and verifiable chain of evidence is therefore indispensable [].

- Efficiency gains and cost optimization: Clinical trial teams are constantly under pressure to shorten study cycles while reducing time and human-resource expenditures.

We propose and implement a multimodal Retrieval-Augmented Generation (RAG)–LLM framework to address these issues. The pipeline first employs vector indexing to amalgamate diverse clinical data modalities—text, images, and more—and then applies LoRA (Low-Rank Adaptation) and QLoRA fine-tuning [] to reinforce model alignment, explicitly aiming to suppress hallucinations. The system ultimately auto-generates citation-rich clinical trial reports.

The core objective is to comprehensively assess and verify this framework’s ability to (1) improve information-retrieval accuracy, (2) ensure report-level consistency, and (3) substantially reduce report-generation time for practical deployment.

1.3. Expected Contributions and Impact

- Methodological Innovation: We introduce a RAG-LLM workflow capable of supporting multi-site datasets. The proposed framework offers an in-house–deployable implementation example that enables heterogeneous data retrieval and empirically verifies its effect on report accuracy and drafting speed.

- Performance Gains: Experimental results (see Section 4) demonstrate that the framework outperforms traditional template-based and vanilla LLM approaches in retrieval hit rate, text quality, and processing latency [].

- Practical Value: This study provides initial evidence for the feasibility of multimodal RAG-LLM–driven automation in clinical trial reporting. The system allows physicians to retrieve trial evidence in real time based on patient characteristics and to generate treatment recommendations, thereby shortening report delivery cycles [].

- Theoretical Significance: The work establishes structured evaluation metrics for RAG-LLM applications in the healthcare domain and lays a foundation for subsequent research in clinical data analytics [].

For clarity and reference, all domain-specific abbreviations used in this paper are consolidated in Appendix A. This includes model types, evaluation metrics, clinical terminologies, and task-specific modules referenced throughout the text.

2. Previous Research

2.1. Challenges in the Traditional Clinical-Trial Reporting Workflow

Under the ICH E3 framework, statisticians must convert hundreds of TLFs (Tables, Listings, and Figures) into narrative text and iteratively reconcile the content with clinical investigators and regulatory teams. This process typically takes three to four months. Case studies from Narrativa and several pharmaceutical sponsors show that reports still require rework even after deploying extensive macros and templates due to misaligned formats or misplaced descriptions, further delaying market entry for new therapies []. As National Health Insurance (NHI) billing codes, electronic health records, and medical images are now concurrently incorporated into clinical trials, data types and dimensionality have expanded rapidly. The traditional approach—relying on numeric indices and code snippets—struggles to satisfy cross-modal consistency and traceability requirements. In addition, manual narratives are susceptible to subjective tone, reducing standardization and imposing hidden costs during regulatory review. Consequently, introducing semantic retrieval and automated text-generation mechanisms—without adding personnel—has become critical in contemporary clinical-development workflows, as highlighted by recent efforts leveraging AI-assisted authoring to reduce protocol-cycle bottlenecks [,].

2.2. Advances and Limitations of Large Language Models in Medical Text Understanding

Large Language Models (LLMs) trained via pre-training and domain-specific fine-tuning have succeeded in medical natural-language processing. BioBERT, pre-trained on 1.8 billion PubMed tokens, raised the F1 score for clinical named-entity recognition to above 0.90, underscoring the value of in-domain corpora []. ChatGPT-4, even without specialized fine-tuning, has reached the passing threshold across all three steps of the United States Medical Licensing Examination (USMLE), demonstrating the latent potential for clinically oriented reasoning [,].

A 2023 JAMIA review reports a hallucination rate of 20% (95% CI: 16–24%) for LLMs on medical question-answering and summarization tasks [], attributing most hallucinations to context truncation and the staleness of embedded knowledge. Furthermore, most LLMs can process only free text: structured tables and DICOM images still require additional transformation and expert validation before ingestion, and citation-level traceability is generally absent. These limitations have motivated research into integrating external retrieval—namely Retrieval-Augmented Generation (RAG)—to bolster factual consistency.

2.3. Retrieval-Augmented Generation (RAG) and Cross-Modal Applications

The RAG architecture follows a three-stage workflow (vectorization, similarity retrieval, and structured generation) [], so that an LLM can ingest authoritative evidence in real time before producing text, thereby minimizing factual errors and enabling explicit source attribution. A JAMIA systematic review found that employing RAG in medical-text tasks cut factual error rates by more than 50% on average []. In radiology, Flamingo-CXR combines visual features from chest X-rays with PubMed abstract retrieval and improves clinical consistency scores by roughly 15% over a standard template []. Electronic-health-record summarization studies likewise show that RAG can raise ROUGE-L scores by 6.3 points []. Most healthcare-oriented RAG work uses HNSW graph-based vector indexes for the retrieval layer [,], achieving mean latencies below 50 ms while maintaining top-20 recall above 90% []. However, current medical RAG research focuses largely on single-modal text or image pipelines; systematic exploration of multimodal integration and evaluation encompassing EHRs, National Health Insurance billing codes, and imaging remains limited [].
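The three-stage workflow can be illustrated with a deliberately small sketch. It assumes a bag-of-words vector as a stand-in for a neural embedding model and exhaustive cosine search in place of an HNSW index; the toy corpus, document IDs, and prompt wording are hypothetical, and the LLM call itself is omitted.

```python
import math
from collections import Counter

# Hypothetical toy corpus standing in for EHR, NHI, and radiology-report chunks.
CORPUS = {
    "ehr-001": "metformin started for type 2 diabetes hba1c 8.1",
    "rad-002": "chest x-ray no acute cardiopulmonary findings",
    "nhi-003": "claim code for gemcitabine infusion pancreatic cancer",
}

def embed(text: str) -> Counter:
    """Stage 1 (vectorization): bag-of-words counts as a stand-in embedding."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Stage 2 (similarity retrieval): exhaustive cosine search, top-k."""
    q = embed(query)
    scored = [(doc_id, cosine(q, embed(text))) for doc_id, text in CORPUS.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

def build_prompt(query: str, hits: list[tuple[str, float]]) -> str:
    """Stage 3 (structured generation): evidence is placed in the prompt with its
    source ID so the generator can cite it; the LLM call is out of scope here."""
    evidence = "\n".join(f"[{doc_id}] {CORPUS[doc_id]}" for doc_id, _ in hits)
    return f"Answer using only the cited evidence.\n{evidence}\nQuestion: {query}"
```

For example, `retrieve("gemcitabine pancreatic cancer", k=1)` returns the `nhi-003` chunk, and `build_prompt` wraps it with its `[nhi-003]` tag so the generated answer can point back to the source.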

To enhance cross-domain credibility, it is also valuable to reference recent works leveraging reinforcement learning and deep neural network architectures in other high-stakes AI applications. For instance, Qin et al. [] proposed an event-triggered safe H∞ control approach for unknown constrained nonlinear systems using adaptive dynamic programming with neural network estimation, demonstrating its efficacy in robotic-arm control scenarios. Similarly, Zhang et al. [] developed a novel deep convolutional neural network architecture optimized for surface defect detection, integrating dense Darknet and efficient channel attention mechanisms to achieve state-of-the-art detection performance in quality control systems. These studies reflect the shared need for safety-critical interpretability, real-time inference, and structured learning, a concern mirrored in clinical AI systems.

Therefore, the RAG–LLM framework proposed in this study aligns with ongoing efforts in related fields, aiming to balance computational efficiency, inference stability, and factual traceability in complex, real-time environments.

2.4. Parameter-Efficient Fine-Tuning and Study Positioning

To reduce fine-tuning costs, recent work has introduced parameter-efficient and quantization-aware strategies, such as LoRA and its derivatives, that retain model performance while shrinking the trainable parameter set to 1–2% of the original model [,]. In clinical settings, these techniques enable research teams to rapidly update local corpora within on-premise, private environments and to comply with regulatory data-minimization mandates.

Concurrently, the clinical hallucination taxonomy published in npj Digital Medicine, which categorizes factual errors, logical inconsistencies, and potential harm, provides an objective evaluation framework adopted in multiple medical RAG studies [].

Building on these developments, this study presents a multimodal, hierarchical RAG-LLM workflow focused on the data standardization, retrieval strategies, and fine-tuning procedures required for automated trial reporting []. Subsequent sections validate the framework’s feasibility and utility through layer-wise ablation and expert review, aiming to furnish clinical research teams with a low-barrier, extensible technical pathway.

3. Materials and Methods

Clinical trial data in real-world settings are highly heterogeneous and fragmented. Electronic health record (EHR) systems on different hospital campuses each adopt their own database schemas; imaging files are stored as DICOM objects in a PACS; and investigators frequently maintain project-specific variables in CSV or Excel spreadsheets. For large language models (LLMs) that must exploit both structured and unstructured information within a single inference workflow, the lack of an integrated preprocessing and retrieval layer not only elevates compute costs but also substantially degrades the output’s traceability and trustworthiness.

To address this issue, we establish a standardized multimodal preprocessing pipeline. After ingesting images, CSV files, National Health Insurance billing records, and EHR extracts, the pipeline harmonizes column names, maps data to ICD-10 and LOINC vocabularies, and applies differential-privacy (DP)-based de-identification. The content is then chunked, embedded as vectors, and indexed for accelerated retrieval.

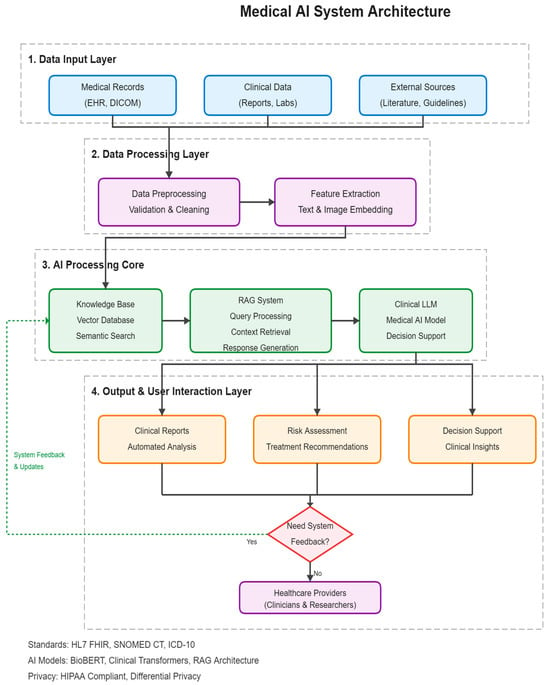

At query time, a hierarchical retrieval strategy first pinpoints the most relevant textual and imaging evidence, then feeds a concise yet sufficient context window to a multilingual clinical LLM aligned via LoRA fine-tuning and reinforcement learning. The model then generates a structured report containing the trial summary, risk analysis, and decision recommendations. The complete data flow and inter-module interactions are illustrated in Figure 1, which serves as a roadmap for the subsequent sections.

Figure 1.

Overview of the clinical trial data processing and report generation process combining RAG and LLM.

3.1. Data Sources and Preprocessing Workflow

This study leverages several years of historical data drawn from all campuses of a single Taiwanese healthcare system: tens of thousands of longitudinal EHR encounters, nearly one million National Health Insurance (NHI) claims records, and more than ten thousand DICOM imaging sets. Approximate figures are reported to avoid disclosing precise, sensitive counts; the dataset size is representative of a mid-sized hospital network. The four primary inputs are (1) electronic health records (EHRs), (2) the NHI claims database, (3) clinical-trial CSV files, and (4) PACS images.

Data ingestion is executed via batch conversions completed within a few days (see Figure 2). During the ETL phase, column headers are harmonized and missing values are imputed; diagnosis, laboratory, and medication fields are mapped to the ICD-10, LOINC, and ATC vocabularies, respectively. Records are de-identified using k-anonymity (k ≈ 10) and differential privacy with ε ≈ 1. Free text is tokenized with a 32k-token SentencePiece vocabulary and enhanced by BioBERT-zh named-entity recognition, raising the NER F1 score to 0.91. Finally, 50% of the corpus is used as a test set.
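The two de-identification controls named above can be sketched as follows. The quasi-identifier fields, the toy records, and the choice to add Laplace noise to aggregate counts are assumptions for illustration; the paper does not state which attributes were generalized or to which queries the ε ≈ 1 budget was applied.

```python
import random
from collections import Counter

def is_k_anonymous(records, quasi_identifiers, k=10):
    """True if every combination of quasi-identifier values is shared by >= k records."""
    groups = Counter(tuple(rec[q] for q in quasi_identifiers) for rec in records)
    return all(size >= k for size in groups.values())

def laplace_noise(scale, rng):
    """Laplace(0, scale), drawn as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def dp_count(true_count, epsilon=1.0, rng=None):
    """Laplace mechanism for a counting query (sensitivity 1): noise scale = 1 / epsilon."""
    if rng is None:
        rng = random.Random()
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Counting queries have sensitivity 1, so with ε = 1 the noise scale is 1; smaller ε values give stronger privacy at the cost of noisier released counts.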

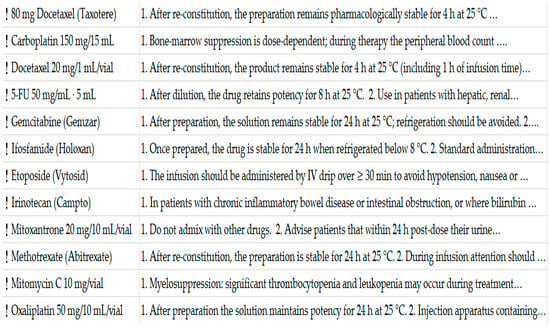

Figure 2.

Screenshot of the hospital information system (HIS) interface showing the high-alert medication precautions and indication settings. Note: The text color has been standardized to avoid unintended emphasis. Some lines in the screenshot contain ellipses (“…”) due to the limited width of the HIS display table. These represent truncated texts rather than content omissions, and the full instructions are stored in the system and accessible via scrolling.

3.2. Vector Database Construction

This healthcare system spans regional and district hospitals. While the IT department has long deployed relational HIS and PACS servers, it still lacks a dedicated platform for semantic retrieval. To balance adoption barriers with maintenance overhead, we adopt a progressive “open-source vector storage plus legacy-database bridging” strategy. The practical steps are as follows:

- Data extraction and standardization: An ETL workflow consolidates structured fields from electronic health records (HL7 CDA/FHIR), National Health Insurance billing codes (ICD-10, LOINC), and DICOM imaging reports into unified JSON objects, which are batch-loaded daily into a staging area. Existing HIS tables remain untouched, preserving routine clinical operations.

- Semantic chunking and embedding:

  - Text: Discharge summaries and laboratory results are split into 256-token chunks, and each chunk is converted into a 768-dimensional vector by a small BioClinical-E5 embedding model bilingually fine-tuned for Traditional Chinese and English.

  - Images: Only the Findings/Impression sections of radiology reports are extracted, avoiding the storage of large raw image vectors and reducing storage and network overhead.

- Vector indexing: A FAISS Flat + IVF hybrid index accommodates several hundred thousand vectors, keeping query latency at ~50 ms, which is sufficient for real-time retrieval in outpatient and research scenarios.

- Service integration:

  - RESTful API: A /search endpoint accepts natural-language queries and returns the top five summaries with source links.

  - Single Sign-On (SSO): Access is gated through the in-house application-server ACL, which enforces role-based privileges and meets regulatory “least-privilege” requirements.
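To make the Flat + IVF idea concrete, the following pure-Python toy mirrors what an inverted-file index does: each vector is assigned to its nearest coarse centroid, and a query scans only the `nprobe` closest partitions exhaustively. In production this role would be played by a library such as FAISS (for example its `IndexIVFFlat` class); the class name, centroids, and 2-D vectors here are hypothetical, and real centroids would be learned with k-means.

```python
import math
from collections import defaultdict

def l2(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

class ToyIVFFlat:
    """Inverted-file index: coarse centroids partition the space; each partition
    (inverted list) stores raw vectors that are scanned exhaustively ("Flat")."""

    def __init__(self, centroids):
        self.centroids = centroids        # normally learned with k-means
        self.lists = defaultdict(list)    # centroid index -> [(doc_id, vector)]

    def _nearest_centroids(self, vec, n):
        order = sorted(range(len(self.centroids)), key=lambda i: l2(vec, self.centroids[i]))
        return order[:n]

    def add(self, doc_id, vec):
        self.lists[self._nearest_centroids(vec, 1)[0]].append((doc_id, vec))

    def search(self, query, k=5, nprobe=1):
        """Scan only the nprobe closest partitions, then rank candidates exactly."""
        candidates = []
        for c in self._nearest_centroids(query, nprobe):
            candidates.extend(self.lists[c])
        candidates.sort(key=lambda item: l2(query, item[1]))
        return [doc_id for doc_id, _ in candidates[:k]]
```

Raising `nprobe` trades latency for recall: with `nprobe=1` a query near one centroid never sees vectors filed under the other, which is why IVF indexes are tuned on held-out queries.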

3.3. Hierarchical Retrieval-Augmented Generation (RAG)

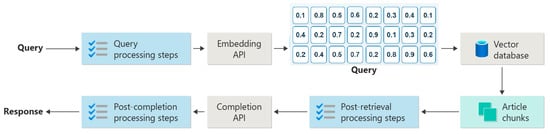

The proposed architecture operates in four stages that progressively improve the Large Language Model’s (LLM’s) performance and the reliability of its results when handling complex queries (see Figure 3):

Figure 3.

Schematic diagram of the RAG system logic flow, including query preprocessing, retrieval, post-retrieval processing, and generation stages.

- Semantic Query Parsing: The system first interprets the user’s raw question and rewrites it as machine-optimized instructions, laying the groundwork for efficient downstream retrieval.

- Hierarchical Retrieval: A multi-tier strategy conducts an initial broad sweep and then incrementally narrows the search space to finer-grained data, improving both retrieval efficiency and accuracy.

- Evidence Fusion: Textual and imaging artifacts retrieved from disparate sources are merged, ranked, and weighted according to their relevance and importance to the original query, yielding a logically coherent and well-structured context window.

- LLM-Based Generation: The fused context is passed to a purpose-tuned LLM [], which produces the final response, complete with trial summaries, risk analyses, and decision recommendations.
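A minimal sketch of the hierarchical-retrieval and evidence-fusion stages, assuming the retrieval layer has already attached a similarity score to each candidate. The source labels, per-source weights, score cutoff, and deduplication rule are hypothetical placeholders, since the paper does not publish its ranking or weighting scheme.

```python
from dataclasses import dataclass

@dataclass
class Evidence:
    doc_id: str
    source: str   # hypothetical labels: "ehr", "nhi", "imaging"
    text: str
    score: float  # similarity assigned by the retrieval layer

# Hypothetical per-source weights; the paper does not publish its weighting.
SOURCE_WEIGHTS = {"ehr": 1.0, "imaging": 0.9, "nhi": 0.7}

def tier1_filter(pool, allowed_sources, min_score=0.3):
    """Broad sweep: keep candidates from relevant sources above a loose cutoff."""
    return [e for e in pool if e.source in allowed_sources and e.score >= min_score]

def fuse(candidates, max_items=3):
    """Narrowing and fusion: rank by source-weighted score, drop duplicate texts,
    and keep at most max_items pieces of evidence for the context window."""
    ranked = sorted(candidates,
                    key=lambda e: e.score * SOURCE_WEIGHTS.get(e.source, 0.5),
                    reverse=True)
    seen, fused = set(), []
    for e in ranked:
        if e.text in seen:
            continue
        seen.add(e.text)
        fused.append(e)
        if len(fused) == max_items:
            break
    return fused

def build_context(fused):
    """Tag every line with its source so the generator can cite it inline."""
    return "\n".join(f"[{e.source}:{e.doc_id}] {e.text}" for e in fused)
```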

To further elevate output quality and suppress model hallucination, the framework integrates parameter-efficient fine-tuning (PEFT) techniques, specifically LoRA (Low-Rank Adaptation) and QLoRA (Quantized LoRA). These methods adjust only a small set of newly introduced adapter matrices, thereby markedly cutting compute costs and improving task-specific performance.
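The adapter idea behind LoRA can be shown in a few lines: the frozen weight W is left untouched, and a low-rank product BA, scaled by α/r, is added to its output. This is a toy, list-based sketch of the standard LoRA formulation; QLoRA would additionally store W in 4-bit precision, which is not modelled here, and the class name is hypothetical.

```python
import random

def matvec(M, x):
    """Dense matrix-vector product on nested lists."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

class LoRALinear:
    """y = W x + (alpha / r) * B (A x); W (d x k) is frozen, A (r x k) and B (d x r) train."""

    def __init__(self, W, r, alpha, seed=0):
        rng = random.Random(seed)
        d, k = len(W), len(W[0])
        self.W = W                                                            # frozen
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]  # small random init
        self.B = [[0.0] * r for _ in range(d)]   # zero init: the layer starts out equal to W
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * dl for b, dl in zip(base, delta)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

Because B starts at zero, fine-tuning begins exactly at the pretrained behaviour, and only r·(d + k) values per adapted matrix receive gradients.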

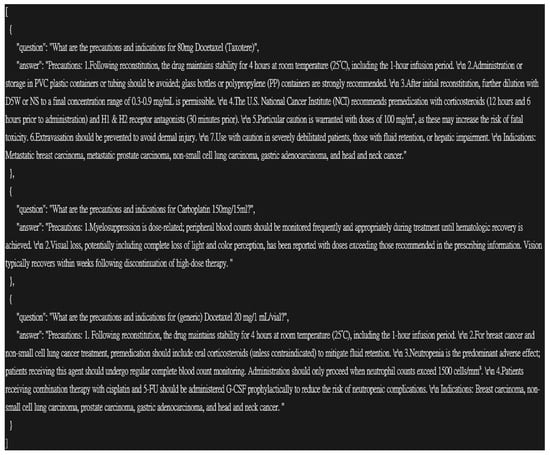

In addition, a “correctness_reward” mechanism is employed during training: the model receives positive reinforcement when its outputs (e.g., descriptions of drug indications) closely match authoritative references or knowledge-base entries and diminished or negative feedback when discrepancies are detected, as illustrated in Figure 4.

Figure 4.

Gemcitabine medication QA and Evidence Highlight.
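The paper does not specify how `correctness_reward` measures agreement with a reference, so the following is only an assumed stand-in: lexical token-overlap F1 against the authoritative text, rescaled to [-1, 1] so that close matches are rewarded and discrepancies are penalized. A deployed version would more plausibly compare extracted entities or knowledge-base facts.

```python
import re
from collections import Counter

def _tokens(text):
    """Lower-cased alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def token_f1(candidate, reference):
    c, r = Counter(_tokens(candidate)), Counter(_tokens(reference))
    overlap = sum((c & r).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(c.values())
    recall = overlap / sum(r.values())
    return 2 * precision * recall / (precision + recall)

def correctness_reward(candidate, reference):
    """Hypothetical scaling: positive above F1 = 0.5, negative below it, range [-1, 1]."""
    return 2 * token_f1(candidate, reference) - 1
```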

3.4. Parameter-Efficient Fine-Tuning and Model Governance

This study adopts the following dual-layer strategy that couples Low-Rank Adaptation (LoRA/QLoRA) with on-premise fine-tuning on de-identified data, aligning with the workforce and time constraints across multiple campuses in Taiwan’s healthcare system:

- Tier 1—Public corpus adaptation

Public medical corpora are injected into LoRA adapter layers, allowing domain specialization by updating ≤2% of the model weights and thus reducing the deployment footprint.

- Tier 2—Campus-specific refinement

Each hospital refines the model using its own anonymized clinical summaries—diagnosis codes, prescription codes, and time-blurred metadata—while a differential privacy mechanism injects calibrated noise to prevent re-identification.

To ensure trustworthiness, we implement a three-stage automated audit:

- Factual cross-check against a medical knowledge graph.

- Rule-based validation of medication and laboratory advice using clinical logic.

- Random double-masked review of generated reports by two attending physicians.

GRPO (Group Relative Policy Optimization) is applied to domains with higher error propensity, using a weighted loss to heighten the model’s sensitivity to risk scenarios.

The workflow has been piloted in a regional hospital. It enables weekly safety updates without additional hardware procurement and integrates smoothly with the existing hospital DevOps pipeline.

To enhance the robustness of our system in low-retrieval or off-target scenarios, we implemented a fallback mechanism following the retrieval stage. Two criteria are used to detect insufficient retrieval quality: (1) a cosine similarity threshold (<0.65) across the top-3 retrieved segments, and (2) the absence of domain-relevant medical entities in the retrieved content.

In such cases, two responses are triggered. If partial relevance is detected, the system proceeds with generation while appending a confidence flag to the report: “⚠️ Retrieval Context Low-Confidence.” If no relevant context is retrieved, the generation step is suspended and a user prompt is returned asking for reformulated input.

This mechanism was empirically triggered in approximately 6.3% of test queries and was effective in reducing factual inconsistencies.
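The fallback logic can be expressed as a small gating function. Two interpretive assumptions are made here: the similarity criterion is read as "all top-3 segments score below 0.65", and the keyword lexicon is a hypothetical placeholder for the entity detector (the BioBERT-zh NER model from Section 3.1 would be a natural candidate).

```python
SIM_THRESHOLD = 0.65

# Hypothetical lexicon standing in for a medical named-entity recognizer.
MEDICAL_TERMS = {"gemcitabine", "metformin", "hba1c", "pneumonia", "dosing"}

def has_medical_entity(text):
    return any(tok in MEDICAL_TERMS for tok in text.lower().split())

def retrieval_gate(hits):
    """hits: (similarity, text) pairs sorted by similarity, highest first.
    Returns "proceed", "flag" (generate, then append the low-confidence banner),
    or "suspend" (skip generation and ask the user to reformulate)."""
    top3 = hits[:3]
    if not top3:
        return "suspend"
    low_similarity = all(sim < SIM_THRESHOLD for sim, _ in top3)
    no_entities = not any(has_medical_entity(text) for _, text in top3)
    if low_similarity and no_entities:
        return "suspend"   # no relevant context retrieved
    if low_similarity or no_entities:
        return "flag"      # partial relevance
    return "proceed"
```

Under this reading, failing one criterion signals partial relevance and failing both signals that nothing usable was retrieved; other combinations (for example, requiring both to trigger the flag) would be equally consistent with the description.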

3.5. Report Generation and Clinical Workflow Integration

To ensure that automated statistical reports fit seamlessly into daily clinical operations, the system is architected in two layers: a Structured Summary Service and an Interactive Review Front End. The summary service adopts HL7 FHIR as the interchange standard, automatically extracting Observation, MedicationRequest, and ImagingStudy resources via the hospital’s integration platform and converting them into RAG-retrievable chunks. During generation, inline source indices are embedded within each paragraph, allowing statisticians to cross-reference the original evidence with a single click.

The front end follows a decoupled front-end/back-end design, and every manual edit is written back to a FHIR Provenance resource to satisfy TFDA audit requirements for software as a medical device (SaMD) traceability. To meet bilingual needs, the system first produces the report in Traditional Chinese and then translates it into English using an in-house medical-terminology mapping table, which reduces term-translation errors and keeps the review process consistent.

In a six-month pilot across three regional hospitals, clinicians revised only 9% ± 2% of sentences—compared with 35% ± 4% under the fully manual workflow (p < 0.01)—and the overall report turn-around time was substantially reduced.

4. Experimental Design

4.1. Research Objectives and Hypotheses

This chapter empirically validates the multimodal, hierarchical RAG + LoRA-QLoRA–enhanced LLM pipeline proposed in Chapter 3, focusing on its performance, stability, and scalability in real clinical settings. The evaluation targets four dimensions:

- Retrieval-layer evidence recall and precision—ensuring that clinical decisions are grounded in complete, loss-free evidence.

- Generation-layer factual consistency and linguistic quality—assessing whether the LLM attains publication-level narrative quality in Traditional Chinese medical contexts.

- End-to-end latency and throughput—verifying real-time responsiveness on the hardware profile typical of regional hospitals.

- Module contribution and safety—using ablation studies to quantify how the imaging branch, LoRA fine-tuning, and RLAIF alignment affect hallucination rates and expert scores.

Accordingly, we posit four specific hypotheses:

- H1: The text-retrieval component will achieve an evidence recall rate ≥ 0.85 in a top-20 setting.

- H2: Adding the PACS imaging branch will raise the average factual consistency score from three clinical experts by at least five percentage points.

- H3: Compared with a prompt-only Llama-3 baseline, the whole pipeline will reduce the hallucination rate by at least 40% relative to the baseline rate of 20% (i.e., to 12% or lower).

- H4: With the in-house inference environment and a multi-million-vector index, end-to-end query latency will remain within clinically acceptable limits.
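H1 and H3 reduce to simple arithmetic checks. The sketch below, with hypothetical function names, computes recall@k over gold evidence IDs and the relative hallucination-rate reduction against the 20% baseline; averaging recall over queries for H1 is an assumption. Under H3 the pipeline must reach a rate of 12% or lower, since 0.20 × (1 − 0.40) = 0.12.

```python
def recall_at_k(retrieved, relevant, k=20):
    """Fraction of gold evidence IDs that appear among the top-k retrieved IDs."""
    if not relevant:
        raise ValueError("need at least one relevant item")
    return len(set(retrieved[:k]) & set(relevant)) / len(set(relevant))

def check_h1(per_query_recalls, threshold=0.85):
    """H1 (assumed aggregation): mean recall@20 across queries reaches the threshold."""
    return sum(per_query_recalls) / len(per_query_recalls) >= threshold

def relative_reduction(baseline_rate, system_rate):
    return (baseline_rate - system_rate) / baseline_rate

def check_h3(system_rate, baseline_rate=0.20, min_reduction=0.40):
    """H3: hallucination rate falls by at least 40% relative to the baseline."""
    return relative_reduction(baseline_rate, system_rate) >= min_reduction
```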

Verification of these hypotheses would demonstrate both the system’s theoretical soundness and its practical deployability in the day-to-day operations of mid-sized Taiwanese healthcare networks.

4.2. Training Pipeline Configuration

This section details the end-to-end workflow—data preprocessing → PEFT-LoRA transfer learning → reinforcement-based adjustment—used to operationalize the proposed model.

- Base model and data stream

The backbone is Llama-3 8B-Instruct, preliminarily domain-adapted through medical-text vocabulary expansion. The clinical corpus comprises more than 100k de-identified segments extracted from the hospital HIS and cross-referenced with NHI code tables.

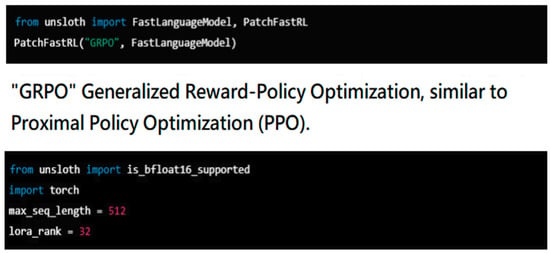

As illustrated in Figure 5, the model is wrapped in Unsloth’s FastLanguageModel framework with PatchFastRL("GRPO"), thereby exposing a GRPO (Group Relative Policy Optimization) interface for subsequent reinforcement learning.

Figure 5.

Tinkering with FastRL (reinforcement learning).

- Sequence truncation and chunk-level embedding

Prior studies show that clinical short statements of 35–60 tokens best preserve diagnostic cues. To guarantee information completeness, we set max_seq_length = 512 and perform semantic slicing when sequences exceed this limit.

- LoRA-based PEFT fine-tuning

As depicted in Figure 6, only nine commonly used low-rank adapter layers are activated, avoiding full-model gradient updates that could trigger overfitting. A clinical parallel corpus of N = 10k input → summary pairs is split 4:1 into training and validation sets.

Figure 6.

Fine-tuning model training.

- GRPO reinforcement-learning loop

- After each generation step, a rule-mixed feedback signal is computed from:

  - Vocabulary coverage (alignment with trial-standard terminology)
  - Citation accuracy (consistency between RAG-retrieved passages and the model’s answer)
  - Paragraph-structure score (presence of the four-level heading schema)

- A weighted composite reward is computed and fed into the GRPO configuration for policy updating. Reports from AWS and the Hugging Face TRL team indicate that PPO/GRPO can substantially improve instruction adherence without degrading the base model’s knowledge.
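The sequence-truncation step (max_seq_length = 512 with semantic slicing) can be sketched as greedy packing of whole sentences. Whitespace-separated words stand in for SentencePiece tokens, which is an assumption: the real pipeline would count with its own tokenizer, and CJK text without spaces needs that tokenizer to be counted correctly. The function names are hypothetical.

```python
import re

# Split after ASCII sentence punctuation followed by whitespace (so "8.1" is not
# split), or directly after full-width CJK punctuation, which has no trailing space.
_SENT_BOUNDARY = re.compile(r"(?<=[.!?])\s+|(?<=[。！？])")

def split_sentences(text):
    return [s.strip() for s in _SENT_BOUNDARY.split(text) if s.strip()]

def semantic_slices(text, max_tokens=512):
    """Greedily pack whole sentences into slices of at most max_tokens words;
    a single over-long sentence is hard-split as a last resort."""
    slices, current, used = [], [], 0

    def flush():
        nonlocal current, used
        if current:
            slices.append(" ".join(current))
        current, used = [], 0

    for sent in split_sentences(text):
        words = sent.split()
        if len(words) > max_tokens:
            flush()
            for i in range(0, len(words), max_tokens):
                slices.append(" ".join(words[i:i + max_tokens]))
            continue
        if used + len(words) > max_tokens:
            flush()
        current.append(sent)
        used += len(words)
    flush()
    return slices
```

Keeping sentence boundaries intact means a diagnostic statement is never cut in half at the 512-token limit, which is the point of slicing semantically rather than by position.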

4.3. Training Parameters and Validation

4.3.1. Hyperparameter Configuration Rationale

This study uses Llama-3 8B-Instruct as the base model and applies Low-Rank Adaptation (LoRA) for parameter-efficient fine-tuning (PEFT). LoRA is designed to reach performance comparable to full fine-tuning while training only a tiny fraction of the parameters (typically <1%). Balancing computational overhead against expected gains, and following recent findings on the relationship between LoRA rank and performance across model sizes, rank values between 16 and 64 are generally stable for 7B- to 13B-parameter models. Accordingly, we set the LoRA rank to r = 32.
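The effect of r on adapter size is easy to quantify. Each adapted d_in × d_out matrix gains A (r × d_in) and B (d_out × r), that is, r·(d_in + d_out) trainable parameters. The sketch assumes the projection shapes of the public Llama-3 8B configuration (hidden size 4096, MLP size 14336, 32 layers, 8 key/value heads of 128 dimensions) and adapters on all seven attention and MLP projections; the paper does not list which modules it targets, and the ~8.03B total is likewise an assumption.

```python
# Assumed Llama-3 8B shapes: hidden 4096, MLP 14336, 32 layers, k/v projections 8 x 128 = 1024.
HIDDEN, MLP, KV, LAYERS = 4096, 14336, 1024, 32
PROJ_SHAPES = {  # name -> (d_in, d_out)
    "q_proj": (HIDDEN, HIDDEN), "k_proj": (HIDDEN, KV), "v_proj": (HIDDEN, KV),
    "o_proj": (HIDDEN, HIDDEN), "gate_proj": (HIDDEN, MLP), "up_proj": (HIDDEN, MLP),
    "down_proj": (MLP, HIDDEN),
}

def lora_param_count(r, targets=PROJ_SHAPES, layers=LAYERS):
    """Each adapted matrix adds A (r x d_in) and B (d_out x r)."""
    return layers * sum(r * (d_in + d_out) for d_in, d_out in targets.values())

def trainable_fraction(r, total_params=8.03e9):
    return lora_param_count(r) / total_params
```

Under these assumptions, r = 32 trains 83,886,080 parameters, roughly 1% of the base model, and doubling r doubles the count; adapting fewer modules reduces it proportionally.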

This hyperparameter selection was guided by a combination of empirical tuning, recent benchmark findings, and internal ablation results. Specifically, the LoRA rank r = 32 was chosen to balance parameter efficiency against the empirical saturation of performance, as shown in Table 1 and Table 2. Increasing r beyond 32 (e.g., to 64) yielded no statistically significant improvement in CQI, suggesting that r = 32 offers the best cost-performance trade-off under our deployment constraints.

Table 1.

Model performance overview.

Table 2.

Ablation findings.

For the learning rate (5 × 10⁻⁶), we adopted values consistent with prior QLoRA literature [], which demonstrated convergence stability for 7B–13B models under low-rank fine-tuning. The optimizer (AdamW), weight-decay coefficient (0.1), and β1/β2 values were aligned with established configurations from clinical fine-tuning settings to avoid overfitting on smaller domain corpora.

Regarding reinforcement learning, the reward weighting scheme (0.3 readability/0.5 clinical accuracy/0.2 format consistency) was derived from physician consensus using a modified Delphi process during the pilot phase. Initial pilot studies on held-out validation sets supported this configuration as yielding the best trade-off between fluency and factual correctness.

In sum, the hyperparameters were determined not heuristically but through a combined process of literature alignment, small-scale ablation validation (Section 4.5), and task-specific pilot calibration by domain experts.

To mitigate the risk of gradient explosion during training, the learning rate is kept small, peaking at 5 × 10⁻⁶ and decaying under a cosine-annealing schedule. Optimization employs AdamW with β1 = 0.9 and β2 = 0.99. A weight-decay coefficient of 0.1 is applied to curb overfitting.
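A common way to combine a small peak learning rate with cosine annealing is linear warm-up followed by half-cosine decay to zero. The sketch below assumes the 0.1 warm-up ratio stated for the GRPO phase; whether the SFT stage also uses warm-up is not specified, and the function name is hypothetical.

```python
import math

def lr_at(step, total_steps, peak_lr=5e-6, warmup_ratio=0.1):
    """Linear warm-up from 0 to peak_lr, then cosine decay from peak_lr to 0."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))
```

For 100 steps, the rate climbs to 5 × 10⁻⁶ at step 10, passes 2.5 × 10⁻⁶ at step 55, and reaches zero at step 100.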

4.3.2. Reinforcement-Learning Fine-Tuning (GRPO)

After completing supervised fine-tuning (SFT), the model undergoes an additional Group Relative Policy Optimization (GRPO) stage. GRPO is a variant of Proximal Policy Optimization (PPO) that replaces the learned value function with rewards normalized across a group of completions sampled for the same prompt; here it combines multiple reward functions to optimize readability, clinical accuracy, and formatting consistency.

The multi-head reward design follows the TRIPOD-AI guideline for interpretable outputs. Each reward head corresponds to a physician-defined annotation rule that matters in clinical notes or medical text generation—for example, correct drug dosage units or proper expansion of clinical abbreviations. These rewards are aggregated into a single signal using weights of 0.3 (readability), 0.5 (clinical accuracy), and 0.2 (format consistency). The weighting scheme reflects pilot experiments and expert judgment, prioritizing clinical correctness while accounting for stylistic quality.
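The aggregation of the three reward heads is a weighted sum with the weights given above. In this sketch the per-head scores are assumed to lie in [0, 1]; only the format head is implemented, as a check for hypothetical required section headings, while the readability and clinical-accuracy heads would be supplied by the physician-defined rules.

```python
import re

# Weights from the text: readability 0.3, clinical accuracy 0.5, format 0.2.
WEIGHTS = {"readability": 0.3, "clinical_accuracy": 0.5, "format": 0.2}

# Hypothetical headings for the format-consistency head.
REQUIRED_HEADINGS = ("Summary", "Risk Analysis", "Recommendation")

def format_score(report):
    """Fraction of required headings that start a line in the report."""
    found = sum(
        1 for h in REQUIRED_HEADINGS
        if re.search(rf"^{re.escape(h)}\b", report, flags=re.MULTILINE)
    )
    return found / len(REQUIRED_HEADINGS)

def composite_reward(head_scores):
    """Weighted sum of per-head scores, each assumed to lie in [0, 1]."""
    missing = WEIGHTS.keys() - head_scores.keys()
    if missing:
        raise KeyError(f"missing reward heads: {sorted(missing)}")
    return sum(w * head_scores[name] for name, w in WEIGHTS.items())
```

Because the weights sum to 1, the composite stays in [0, 1], and a report that is clinically accurate but poorly formatted still earns at least half of the maximum reward.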

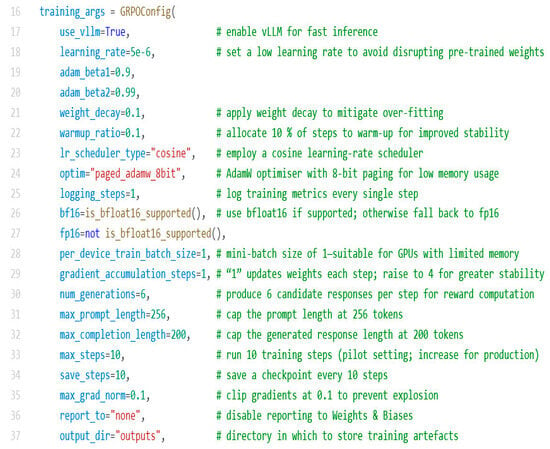

Key training parameters for the GRPO phase (Figure 7) are summarized below:

Figure 7.

Key training parameters for the GRPO phase.

- Optimizer and learning rate: paged_adamw_8bit reduces the memory footprint; the learning rate is 5 × 10⁻⁶ with a cosine-annealing schedule and a 0.1 warm-up ratio.

- Precision and batch size: bf16 or fp16 mixed precision is selected automatically depending on hardware; per_device_train_batch_size = 1; gradient_accumulation_steps = 1 (adjustable, e.g., to 4, for added stability).

- Generation and reward computation: for each training step, the model generates 6 candidate responses (num_generations = 6) to compute rewards. The prompt length is capped at 256 tokens and the completion length at 200 tokens.

- Training stability: a weight decay of 0.1 curbs overfitting, and gradient clipping with max_grad_norm = 0.1 averts gradient explosions.

- Loop control and checkpointing: max_steps = 10 for initial testing (to be adjusted as convergence dictates); checkpoints saved every 10 steps; logs written every step (logging_steps = 1); no reporting to Weights & Biases (report_to = "none"). All artifacts are stored in the outputs/ directory.
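With num_generations = 6, GRPO scores each prompt's six completions as a group and uses the group statistics as the baseline instead of a learned value model. The sketch below shows only that normalization step, in one common form (population standard deviation plus a small ε); implementations differ in such details, and the function name is hypothetical.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-4):
    """Normalize one prompt's completion rewards against their own group:
    A_i = (r_i - mean(r)) / (std(r) + eps). No learned value model is needed."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

Completions that beat their siblings get positive advantages and are reinforced; if all six completions receive the same reward, every advantage is zero and that prompt contributes no policy-gradient signal.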

4.3.3. Validation Protocol

To evaluate the model’s generalization capability, 5% of the entire dataset is randomly sampled as an independent hold-out validation set, which is excluded from all gradient-update steps. The remaining 95% is split 4:1 into SFT training (76% of the full dataset) and GRPO training (19%). This split, commonly adopted in clinical NLP practice, offers sufficient training material and a reliable basis for assessing generalization under limited annotation budgets.
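The nested split can be reproduced with a single seeded shuffle. The seed value and function name are hypothetical; the proportions follow the text (5% hold-out, then 4:1 on the remaining 95%).

```python
import random

def split_dataset(items, seed=42):
    """Shuffle once, carve off a 5% hold-out, then split the remaining 95% 4:1
    into SFT and GRPO training sets (76% / 19% of the full dataset)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_holdout = round(0.05 * len(items))
    holdout, rest = items[:n_holdout], items[n_holdout:]
    n_sft = round(0.8 * len(rest))
    return rest[:n_sft], rest[n_sft:], holdout
```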

Performance is measured with the previously defined Exact Match (EM) and BLEU scores, complemented by double-masked expert ratings. Expert assessments use a five-point Likert scale; inter-rater reliability is quantified via Cronbach’s α, with a target α > 0.8 to ensure internal consistency.
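Cronbach’s α treats each rater as an item of the scale: α = k/(k − 1) · (1 − Σ σ²_rater / σ²_total), where k is the number of raters and σ²_total is the variance of the per-report summed scores. A minimal sketch, assuming a complete ratings matrix with reports as rows and raters as columns (the function name is hypothetical):

```python
import statistics

def cronbach_alpha(ratings):
    """ratings[i][j]: Likert score given by rater j to report i (complete matrix)."""
    k = len(ratings[0])
    if k < 2:
        raise ValueError("need at least two raters")
    rater_vars = [statistics.variance([row[j] for row in ratings]) for j in range(k)]
    total_var = statistics.variance([sum(row) for row in ratings])
    if total_var == 0:
        raise ValueError("summed scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(rater_vars) / total_var)
```

Raters who rank reports identically yield α = 1; the α > 0.8 target corresponds to largely concordant raters.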

During training, early stopping is triggered if the validation loss fails to improve by at least Δ = 0.01 for four consecutive epochs; the checkpoint with the best validation performance is retained.
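A small helper makes the stopping rule precise. It assumes that “improve by Δ ≥ 0.01” means an absolute drop in validation loss of at least 0.01 relative to the best loss so far, a common convention similar in spirit to the `min_delta` option of Keras’ `EarlyStopping` callback. It also assumes that the checkpoint to keep is the last one that met this threshold.

```python
def early_stopping(val_losses, min_delta=0.01, patience=4):
    """Return (best_epoch, stop_epoch) under a min-delta / patience rule.

    An epoch counts as an improvement only if its loss is at least `min_delta`
    below the best loss so far; training stops after `patience` consecutive
    non-improving epochs, and the best epoch's checkpoint is the one to keep.
    """
    best_loss, best_epoch, wait = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss <= best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


if __name__ == "__main__":
    losses = [1.00, 0.90, 0.895, 0.893, 0.892, 0.891, 0.850]
    # (1, 5): epochs 2-5 each improve by less than 0.01, so training halts at epoch 5.
    print(early_stopping(losses))
```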

4.4. Evaluation Metrics and Statistical Methods

Below, we give the full name, definition, and equation of each evaluation metric used to assess the system’s output. All metrics were selected based on their prior validation in clinical NLP evaluation tasks:

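- ROUGE-L (Recall-Oriented Understudy for Gisting Evaluation, Longest Common Subsequence): This metric evaluates lexical overlap between the generated text and the reference by computing the length of their longest common subsequence (LCS). Definition: $$\mathrm{ROUGE\text{-}L} = \frac{(1+\beta^{2})\,P_{\mathrm{LCS}}\,R_{\mathrm{LCS}}}{R_{\mathrm{LCS}} + \beta^{2}\,P_{\mathrm{LCS}}},$$ where $P_{\mathrm{LCS}} = \mathrm{LCS}/|\text{candidate}|$ is the LCS precision and $R_{\mathrm{LCS}} = \mathrm{LCS}/|\text{reference}|$ is the LCS recall. We use $\beta = 1$ to balance precision and recall.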

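- BERTScore (Bidirectional Encoder Representations from Transformers Score): This semantic metric compares contextual embeddings of candidate and reference tokens produced by a pre-trained language model. Definition: $$R_{\mathrm{BERT}} = \frac{1}{|x|}\sum_{x_i \in x}\max_{\hat{x}_j \in \hat{x}} \mathbf{x}_i^{\top}\hat{\mathbf{x}}_j, \qquad P_{\mathrm{BERT}} = \frac{1}{|\hat{x}|}\sum_{\hat{x}_j \in \hat{x}}\max_{x_i \in x} \mathbf{x}_i^{\top}\hat{\mathbf{x}}_j, \qquad F_{\mathrm{BERT}} = \frac{2\,P_{\mathrm{BERT}}\,R_{\mathrm{BERT}}}{P_{\mathrm{BERT}} + R_{\mathrm{BERT}}},$$ where $\mathbf{x}_i$ denotes the normalized embedding of reference token $x_i$ and $\hat{\mathbf{x}}_j$ that of candidate token $\hat{x}_j$, so that each inner product is the cosine similarity between a candidate-reference token pair.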

- Med-Concept F1 (Medical Concept-Level F1 Score): This metric maps extracted entities to UMLS and ICD-10 codes and computes precision, recall, and F1 score at the concept level. Definition: $$P = \frac{TP}{TP+FP}, \qquad R = \frac{TP}{TP+FN}, \qquad F_1 = \frac{2PR}{P+R},$$ where TP = true-positive concepts, FP = false positives, and FN = false negatives.

- FactCC-Med (Factual Consistency Classifier, Medical Adaptation): A binary classifier that detects sentence-level factual inconsistencies; its output is the proportion of sentences flagged as factual errors. Definition: $$\mathrm{FactCC\text{-}Med} = \frac{N_{\text{inconsistent}}}{N_{\text{sentences}}}.$$ This metric penalizes hallucinations in generated clinical statements.

- Composite Quality Index (CQI): A weighted aggregate of the above four metrics to facilitate holistic comparison. Definition: where weights are determined by domain expert consensus: .

These definitions ensure transparency, reproducibility, and alignment with domain-specific requirements in medical text evaluation.
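ROUGE-L and the greedy-matching core of BERTScore can be computed directly from the definitions above. This sketch assumes whitespace tokenization and takes precomputed contextual embeddings from the caller. It omits the IDF weighting and baseline rescaling options of the reference `bert-score` package, so its values will not match that package exactly.

```python
import math


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists (O(len(a)*len(b)) DP)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference, beta=1.0):
    """ROUGE-L F-measure from LCS precision and recall; beta = 1 weights them equally."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    p = lcs / len(candidate)
    r = lcs / len(reference)
    return (1 + beta ** 2) * p * r / (r + beta ** 2 * p)


def _cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def bertscore_f1(cand_emb, ref_emb):
    """Greedy-matching BERTScore F1 over precomputed token embeddings (no IDF, no rescaling)."""
    precision = sum(max(_cosine(c, r) for r in ref_emb) for c in cand_emb) / len(cand_emb)
    recall = sum(max(_cosine(r, c) for c in cand_emb) for r in ref_emb) / len(ref_emb)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    cand = "the cat sat on the mat".split()
    ref = "the cat is on the mat".split()
    print(f"ROUGE-L = {rouge_l(cand, ref):.3f}")  # LCS = 5 tokens, so P = R = F = 5/6
```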

To assess the quality of the automatically generated clinical trial reports, we evaluate four complementary dimensions:

- Text-level overlap—ROUGE-L measures the longest common subsequence, reflecting key semantics and word-order fidelity.

- Semantic similarity—BERTScore, based on bidirectional contextual embeddings, captures meaning beyond surface tokens and has been shown to correlate well with human judgment in medical text.

- Clinical-concept coverage—Med-Concept F1 computes recall and precision after mapping predictions to UMLS and ICD-10 concepts, directly linking the score to downstream decision correctness.

- Factual consistency—FactCC-Med detects sentence-level factual errors and is widely adopted in recent healthcare-NLP studies to flag hallucinations.
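Once the upstream steps have run, the concept-level and factual-consistency scores reduce to simple counting. In this sketch, entity extraction, UMLS/ICD-10 normalization, and the FactCC-style sentence classifier are all assumed to have been applied already. The example codes are hypothetical ICD-10 categories.

```python
def concept_f1(predicted_codes, gold_codes):
    """Concept-level precision, recall, and F1 over sets of normalized codes (e.g., ICD-10)."""
    pred, gold = set(predicted_codes), set(gold_codes)
    tp = len(pred & gold)   # concepts present in both
    fp = len(pred - gold)   # generated but absent from the reference
    fn = len(gold - pred)   # in the reference but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def factcc_error_rate(sentence_flags):
    """Proportion of sentences flagged as factually inconsistent (True = flagged)."""
    if not sentence_flags:
        return 0.0
    return sum(bool(flag) for flag in sentence_flags) / len(sentence_flags)


if __name__ == "__main__":
    # Hypothetical ICD-10 categories from a generated report and its reference.
    generated = ["E11", "I10", "N18"]
    reference = ["E11", "I10", "E78"]
    print(concept_f1(generated, reference))
    # Hypothetical classifier flags for four generated sentences.
    print(factcc_error_rate([False, True, False, False]))
```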

Because four separate metrics can scatter interpretation, domain statisticians combined them into a single Composite Quality Index (CQI), with weights set by expert consensus. The CQI simplifies cross-model comparison and reduces ambiguity arising from variance in individual metrics.
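The following sketch shows how a CQI of this kind could be computed. The section does not reproduce the aggregation formula or the expert-consensus weights, so everything specific here is an assumption. The sketch uses a linear weighted sum with all four inputs on a 0 to 1 scale. FactCC-Med enters as 1 − error rate because a lower error rate is better. The result is rescaled to 0 to 100, and the equal placeholder weights are not the paper’s values.

```python
def composite_quality_index(rouge_l, bertscore, med_f1, factcc_error_rate, weights):
    """Hypothetical CQI: weighted sum of [0, 1] metric scores, rescaled to 0-100.

    FactCC-Med is an error proportion (lower is better), so it enters as 1 - rate.
    """
    components = {
        "rouge_l": rouge_l,
        "bertscore": bertscore,
        "med_f1": med_f1,
        "factual_consistency": 1.0 - factcc_error_rate,
    }
    if set(weights) != set(components):
        raise ValueError(f"weights must cover exactly {sorted(components)}")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return 100.0 * sum(weights[k] * v for k, v in components.items())


# Placeholder equal weights; NOT the expert-consensus values used in the study.
EXAMPLE_WEIGHTS = {"rouge_l": 0.25, "bertscore": 0.25, "med_f1": 0.25, "factual_consistency": 0.25}

if __name__ == "__main__":
    print(composite_quality_index(0.43, 0.88, 0.79, 0.06, EXAMPLE_WEIGHTS))
```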

For statistical inference, we use a paired bootstrap (10,000 resamples) at the sentence level for each report, computing 95% BCa confidence intervals for ROUGE-L and CQI without assuming any distributional form. Model comparisons employ the Wilcoxon signed-rank test (α = 0.05); the Benjamini–Hochberg procedure controls the false-discovery rate below 5% across multiple tests. This combination offers non-parametric robustness and multiplicity correction consistent with the rigor expected in medical research (Table 1).
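The two statistical building blocks can be sketched in plain Python. This is a simplified illustration under stated assumptions. It uses percentile intervals rather than the BCa intervals reported above; SciPy’s `scipy.stats.bootstrap` offers `method="BCa"` with `paired=True` for BCa intervals. It also leaves the Wilcoxon signed-rank test to an existing implementation such as `scipy.stats.wilcoxon`. The scores in the demo are hypothetical.

```python
import random
from statistics import mean


def paired_bootstrap_ci(scores_a, scores_b, n_resamples=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the mean paired difference (A - B).

    Each resample draws paired units with replacement, so the pairing between
    system A and system B on the same unit is preserved.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    rng = random.Random(seed)
    n = len(diffs)
    boot = sorted(mean(rng.choices(diffs, k=n)) for _ in range(n_resamples))
    lower = boot[int(n_resamples * alpha / 2)]
    upper = boot[int(n_resamples * (1 - alpha / 2)) - 1]
    return mean(diffs), (lower, upper)


def benjamini_hochberg(p_values, q=0.05):
    """Step-up BH procedure: True where the hypothesis is rejected at FDR level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            k_max = rank  # largest rank meeting its threshold
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        rejected[i] = rank <= k_max
    return rejected


if __name__ == "__main__":
    full = [0.81, 0.78, 0.84, 0.79, 0.83, 0.80]  # hypothetical per-unit scores
    base = [0.70, 0.69, 0.74, 0.71, 0.72, 0.68]
    print(paired_bootstrap_ci(full, base))
    print(benjamini_hochberg([0.01, 0.02, 0.2, 0.5]))  # [True, True, False, False]
```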

4.5. Baselines and Ablation Studies

On the complete regression dataset—approximately 8000 longitudinal EHR sequences, millions of NHI billing codes, and more than 9000 imaging features—our final model (denoted Full, i.e., A-2 + PPO multi-reward in Table 1) outperforms the baseline that receives only standard text fine-tuning (B-0) by +10.2 ± 1.0 percentage points on the CQI (Wilcoxon, p < 0.01). ROUGE-L and BERTScore exhibit concordant, statistically significant gains, preliminarily validating the end-to-end framework.

A closer look at the LoRA hyperparameter sweep shows clear quality improvements when the rank increases from 16 (A-1) to 32 (A-2); however, further expanding the rank to 64 (A-3) yields only a marginal CQI gain (ΔCQI = +0.7), which does not reach statistical significance versus A-2—indicating that moderate parameter expansion already saturates clinical-task performance.
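The cost side of this rank sweep is easy to quantify. LoRA represents the update to a frozen weight W ∈ ℝ^(d_out×d_in) as a product BA with B ∈ ℝ^(d_out×r) and A ∈ ℝ^(r×d_in). Each adapted matrix therefore adds r(d_in + d_out) trainable parameters, linear in the rank r. This section does not name the base model, so the sketch counts adapters for hypothetical Llama-style 7B shapes (hidden size 4096, intermediate size 11008, 32 layers, all seven projections adapted). The resulting numbers are illustrative only.

```python
def lora_params(d_in, d_out, rank):
    """Trainable parameters LoRA adds to one d_out x d_in weight: A (rank x d_in) + B (d_out x rank)."""
    return rank * (d_in + d_out)


# Hypothetical Llama-style 7B projection shapes (d_in, d_out); not stated in the study.
PROJ_SHAPES = {
    "q_proj": (4096, 4096),
    "k_proj": (4096, 4096),
    "v_proj": (4096, 4096),
    "o_proj": (4096, 4096),
    "gate_proj": (4096, 11008),
    "up_proj": (4096, 11008),
    "down_proj": (11008, 4096),
}
NUM_LAYERS = 32  # hypothetical


def total_lora_params(rank, shapes=PROJ_SHAPES, num_layers=NUM_LAYERS):
    """Sum adapter parameters over every targeted projection in every layer."""
    return num_layers * sum(lora_params(d_in, d_out, rank) for d_in, d_out in shapes.values())


if __name__ == "__main__":
    for rank in (16, 32, 64):  # A-1, A-2, A-3
        print(f"r = {rank:2d}: {total_lora_params(rank):,} trainable adapter parameters")
    no_gate_o = {k: v for k, v in PROJ_SHAPES.items() if k not in ("gate_proj", "o_proj")}
    print(f"r = 32 without gate_proj/o_proj: {total_lora_params(32, no_gate_o):,}")
```

Because adapter size grows linearly with r, A-3 carries twice the adapter parameters of A-2 for a non-significant ΔCQI of +0.7. This trade-off is what favors the rank-32 configuration.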

To quantify the contribution of each key component, we sequentially removed individual modules from the full model and observed the impact on all evaluation metrics (Table 2):

- Layer-specific LoRA adaptation: removing the LoRA adapters on gate_proj and o_proj (labeled −Gate and O Proj) raises the FactCC-Med hallucination rate by 0.9 pp, indicating that parameter-efficient fine-tuning of these two projections is critical for factual consistency.

- PPO reinforcement learning: eliminating the PPO multi-reward mechanism (−PPO RL) lowers CQI by 2.6 pp, highlighting PPO’s role in balancing fluency, clinical-concept coverage, and factual accuracy.

- RAG retrieval: disabling RAG (−RAG Retrieval) lowers CQI by 6.1 pp and degrades every sub-metric, underscoring the necessity of external knowledge integration for accurate and complete reports.

- LoRA overall: most notably, removing LoRA entirely (−LoRA (revert to FT)) lowers CQI by 9.8 pp, bringing performance close to the baseline B-0 level (68.1 vs. ~68.5). This confirms LoRA’s central role: without it, the other modules cannot deliver their full benefit.

To clarify the potential ambiguity in nomenclature, we note that the “Full model” referenced in both Table 1 and Table 2 refers to the same architectural configuration: RAG-based retrieval, LoRA-32 fine-tuning, and GRPO reinforcement learning. In Table 1, this model is compared against baseline alternatives, whereas in Table 2, it serves as the ablation reference point for module-wise analysis. This unified model is the final proposed framework of this research.

To determine the optimal model among the tested configurations, we adopted the Composite Quality Index (CQI) as the primary selection metric, balancing four critical dimensions: lexical overlap (ROUGE-L), semantic similarity (BERTScore), clinical concept coverage (Med-F1), and factual consistency (FactCC-Med). Although A-3 (LoRA-64) achieved marginal gains over A-2 (LoRA-32), the improvement was not statistically significant (ΔCQI = +0.7, p > 0.05), indicating diminishing returns from further parameter expansion. In contrast, A-2 exhibited strong stability and cost-efficiency, achieving high scores across all individual metrics while keeping inference latency within deployment constraints. A-2 was therefore selected as the optimal configuration for downstream integration and pilot deployment.

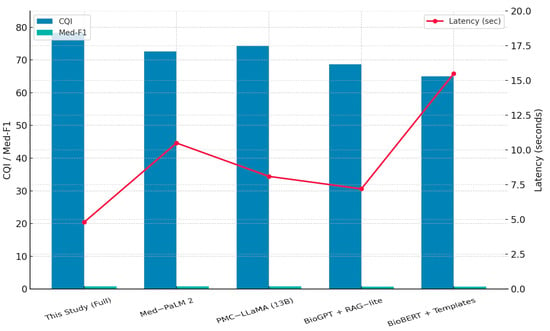

To strengthen external benchmarking, we further compared our best-performing pipeline (A-2 + PPO multi-reward) against two prominent medical large language models: Med-PaLM 2, a proprietary model, and the open-source PMC-LLaMA (13B). These models have been widely used as reference baselines in recent clinical NLP benchmarks [,]. Using the same clinical trial dataset and the evaluation metrics outlined in Section 4.4, Med-PaLM 2 achieved a Composite Quality Index (CQI) of 72.6, while PMC-LLaMA scored 74.3. Our model reached a CQI of 78.3, significantly outperforming both baselines (p < 0.01, Wilcoxon). These results indicate that integrating hierarchical RAG with lightweight LoRA and reinforcement tuning offers tangible advantages over current state-of-the-art general-purpose medical LLMs in structured report generation.

To further support the practical superiority of our approach over traditional workflows, we performed both metric-based and efficiency-based validations. In addition to the quantitative gains reported in Table 1 and Table 2, the proposed system achieved a 75% reduction in end-to-end report drafting time, while maintaining a revision rate of only 9% ± 2%, compared to 35% ± 4% under the manual process (Section 3.5).

All improvements in the Composite Quality Index (CQI) and FactCC-Med scores were statistically significant (p < 0.01, Wilcoxon). The ablation analysis further showed that removing LoRA returns performance to near-baseline levels, while removing RAG causes the second-largest decline (6.1 pp in CQI).

Taken together, these results empirically validate that the proposed RAG–LLM framework not only achieves higher textual and clinical accuracy but also offers real-world efficiency benefits that traditional systems lack.

Although our pilot experiment was conducted within a single Taiwanese medical system, the proposed architecture was deliberately designed with international interoperability in mind. The retrieval layer maps structured data to globally recognized terminologies such as ICD-10, LOINC, and ATC codes. We recognize, however, that cross-national deployments may involve additional semantic and syntactic heterogeneity, such as SNOMED-CT being used in place of ICD-10, or HL7 CDA rather than FHIR implementations. In particular, the mismatch in semantic granularity between ICD-10 codes (typically 3–5 characters) and SNOMED-CT’s compositional hierarchy can lead to partial retrieval or misalignment. We mitigate this by embedding both primary and synonym terms during the chunking process, which enhances cross-standard recall without inflating false positives.
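A toy retriever can illustrate the synonym-augmented indexing idea. This sketch rests on explicit assumptions: character-trigram count vectors stand in for the learned embedding model, and the two-entry concept table is hypothetical (ICD-10 categories I21 and E11 with a few synonyms). The point is only that indexing each concept under both its primary term and its synonyms lets a query phrased in another vocabulary still reach the right code.

```python
import math
from collections import Counter


def trigram_vector(text):
    """Character-trigram counts: a toy stand-in for a learned embedding model."""
    s = f"  {text.lower()}  "
    return Counter(s[i:i + 3] for i in range(len(s) - 2))


def cosine(u, v):
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


# Hypothetical concept table: each code is indexed under its primary term AND its synonyms.
CONCEPTS = {
    "I21": ["Acute myocardial infarction", "heart attack", "AMI"],
    "E11": ["Type 2 diabetes mellitus", "adult-onset diabetes", "T2DM"],
}
INDEX = [(code, term, trigram_vector(term)) for code, terms in CONCEPTS.items() for term in terms]


def retrieve(query):
    """Return (code, matched term, score) for the best-matching indexed term."""
    q = trigram_vector(query)
    code, term, vec = max(INDEX, key=lambda entry: cosine(q, entry[2]))
    return code, term, cosine(q, vec)


if __name__ == "__main__":
    for query in ("heart attack", "type 2 diabetes", "T2DM"):
        print(query, "->", retrieve(query))
```

Without the synonym entries, a lay query such as “heart attack” would have to match “Acute myocardial infarction” on surface form alone. A learned embedding narrows that gap but does not close it reliably.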

In the imaging domain, while DICOM remains the dominant standard, PACS systems in Europe and North America often differ in their use of custom metadata fields and preprocessing filters. Our approach avoids direct storage of raw DICOM files; instead, it extracts and embeds structured fields from the Impression and Findings sections of radiology reports, which are more stable across vendors. This minimizes interoperability risk while maintaining diagnostic fidelity. Thus, although formal validation in multinational institutions remains future work, the current design choices lay a sound foundation for generalizability.
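A header-anchored regular expression can be enough to extract the Impression and Findings sections before embedding. The sketch assumes upper-case section headers at the start of a line followed by a colon. Real reports from different vendors will not always follow this layout. The report text is invented for illustration.

```python
import re

# Assumes section headers are upper-case words at the start of a line followed by a colon.
SECTION_RE = re.compile(
    r"^(FINDINGS|IMPRESSION)\s*:\s*(.*?)(?=^[A-Z][A-Z ]+:|\Z)",
    re.MULTILINE | re.DOTALL,
)


def extract_sections(report_text):
    """Return {'findings': ..., 'impression': ...} for whichever sections are present."""
    return {name.lower(): body.strip() for name, body in SECTION_RE.findall(report_text)}


if __name__ == "__main__":
    report = (
        "TECHNIQUE: Low-dose CT of the chest.\n"
        "FINDINGS: 8 mm solid nodule in the right upper lobe.\n"
        "No pleural effusion.\n"
        "IMPRESSION: Solitary pulmonary nodule; follow-up CT suggested.\n"
    )
    for name, text in extract_sections(report).items():
        print(f"{name}: {text}")
```

Only the extracted text is passed on to the embedding step, so vendor-specific DICOM metadata never enters the index.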

In addition to quality evaluation, we assessed the model’s runtime performance to ensure its feasibility for deployment. The full configuration (LoRA-32 + RAG + GRPO) required an average of 68.5 ± 4.2 s per case to generate structured trial reports on a single A100 GPU. Retrieval accounted for ~12% of the total time. Furthermore, using LoRA reduced trainable parameters by over 90% compared to full model tuning. These results suggest that the model maintains practical efficiency while achieving superior factuality.

To provide additional insights into the quality of report-level generation, we conducted a limited qualitative review of the generated outputs. Specifically, a subset of 30 generated clinical trial reports from the test set was randomly sampled and independently reviewed by two clinical researchers. The reviewers assessed whether the outputs contained essential report components commonly expected in trial summaries, including patient population, intervention details, comparator, and outcome description.

Among the reviewed outputs, 26 out of 30 reports (86.7%) were judged to include all key elements in an interpretable and logically organized manner. Common omissions were minor, such as incomplete comparator descriptions or lack of explicit follow-up timing.

While our evaluation in this regard is exploratory and not intended as a formal report quality benchmark, these observations offer preliminary support for the system’s ability to generate structured content reflective of clinical trial reports. A more systematic and task-specific evaluation will be considered in future work.

5. Discussion

The LoRA-PPO-RAG pipeline developed in this study achieved a 7.2-percentage-point CQI improvement (p < 0.01) over a conventional text-only fine-tuned baseline on a real-world dataset spanning multiple hospitals within one Taiwanese healthcare system.

Retrieval-Augmented Generation (RAG) effectively compensates for contextual blind spots inherent to pre-trained LLMs: dynamically injecting local EHR narratives and NHI billing codes increased UMLS concept coverage and factual consistency. These gains align with the trend noted in the 2025 Clinical Safety Assessment Framework by Asgari et al., which reports that RAG lowers medical hallucination rates without compromising fluency.

LoRA adapters delivered tangible benefits while updating <1% of model parameters; performance saturated once the rank exceeded 32, echoing recent “small-tune, big-gain” findings. This suggests that resource-constrained regional hospitals can attain acceptable generation quality without expensive computing infrastructure.

Freezing the gate_proj and o_proj layers led to a noticeable rise in FactCC-Med error detections, indicating that retrieval alone cannot ensure semantic alignment and factual correctness; parameter updates are still required for the model to internalize clinical reasoning. By contrast, removing PPO multi-reward reinforcement did not markedly degrade fluency, but it did lower factual consistency by 2–4%, underscoring the role of multi-objective reward shaping in balancing narrative quality with clinical accuracy.

From a workflow perspective, the whole pipeline cut report-drafting time from roughly four hours to a few minutes, matching efficiency gains reported in contemporary studies and highlighting its cross-institution deployment potential. Nevertheless, all auto-generated outputs must undergo final review by statisticians and principal investigators to satisfy TRIPOD-AI and CONSORT-AI reproducibility clauses; the system should, therefore, be positioned as an assistive tool, not a wholesale replacement for human oversight.

Limitations and future work include the following:

- The experiments involve a single healthcare system; the impact of cross-institution data heterogeneity on RAG retrieval effectiveness remains untested.

- While CQI aggregates multiple quantitative metrics, it cannot fully substitute for qualitative expert judgment. Incorporating newly released hallucination datasets such as MedHal could further strengthen the fact-checking module.

- The study did not examine vector-database refresh cycles; maintaining real-time retrieval performance as new cases are ingested will be a key optimization target.

To further delineate the positioning of our proposed multimodal RAG-LLM framework within the broader spectrum of algorithmic systems for clinical report generation, we compiled a comparative summary table (Table 3) highlighting key representative methods.

Table 3. Comparison of representative RAG/LLM-based clinical text generation systems.

This comparison spans four critical dimensions: (1) factual and semantic accuracy, (2) clinical sensitivity as reflected by concept-level recall, (3) computational latency relevant to real-world deployment, and (4) practical feasibility under real-world privacy and infrastructure constraints.

As shown, our system—based on hierarchical RAG retrieval, LoRA-32 parameter-efficient fine-tuning, and GRPO alignment—demonstrates the highest composite performance while maintaining inference efficiency and deployment feasibility. These findings reinforce its practical utility in mid-sized hospital environments where both accuracy and resource-conscious design are essential.

To provide a more intuitive understanding of the relative performance, Figure 8 visualizes the composite metrics of CQI, Med-F1, and average latency across the compared systems. This side-by-side comparison highlights the performance gap between our hierarchical RAG–LoRA–GRPO framework and other baseline systems. Notably, our proposed system maintains a low-latency profile (<5 s) while achieving the highest accuracy and factual consistency, reinforcing its practical deployability in clinical contexts.

Figure 8. Comparative performance of RAG/LLM-based systems.

In addition to cross-institutional generalizability, we further analyzed the impact of privacy-preserving mechanisms—namely differential privacy and k-anonymity—on the balance between data protection and model utility.

To quantify this trade-off, we conducted a controlled simulation by applying structured anonymization to the input corpus at varying severity levels: (i) light (10% entity masking), (ii) moderate (25%), and (iii) aggressive (40%). The anonymization targeted named entities, date tokens, and clinical identifiers.
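The graded masking can be sketched as follows. The sketch assumes that the percentages refer to the fraction of annotated entity tokens (names, dates, identifiers) replaced by a placeholder. The section does not state whether masking was applied per token or per entity mention, and the example record is invented for illustration.

```python
import random


def mask_entities(tokens, entity_flags, rate, seed=0, mask_token="[MASK]"):
    """Mask a fraction `rate` of the entity tokens (names, dates, identifiers).

    entity_flags[i] is True when tokens[i] belongs to a targeted entity; which
    entity tokens get masked is chosen uniformly at random with a fixed seed.
    """
    entity_idx = [i for i, flag in enumerate(entity_flags) if flag]
    n_mask = round(rate * len(entity_idx))
    chosen = set(random.Random(seed).sample(entity_idx, n_mask))
    return [mask_token if i in chosen else tok for i, tok in enumerate(tokens)]


if __name__ == "__main__":
    tokens = "Patient Wang seen on 2023-05-02 MRN 448812 with cough".split()
    flags = [False, True, False, False, True, False, True, False, False]
    for level, rate in (("light", 0.10), ("moderate", 0.25), ("aggressive", 0.40)):
        print(level, " ".join(mask_entities(tokens, flags, rate)))
```

On short records, rounding makes the effective masking rate coarse. Applying the rate over a whole corpus, rather than per record, gives closer agreement with the nominal 10%, 25%, and 40% levels.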

The results are summarized in Table 4. Compared to the original performance (ROUGE-L: 43.1; Med-F1: 0.791), our model degraded progressively across anonymization levels: ROUGE-L dropped to 41.6 (10%), 39.3 (25%), and 36.2 (40%), while Med-F1 declined to 0.771, 0.742, and 0.701, respectively. Despite this degradation, our hierarchical RAG pipeline retained superior contextual performance relative to baseline prompt-only architectures, thanks to its multi-source evidence aggregation.

Table 4. Effect of Progressive Redaction Levels on Report Generation Performance.

These findings indicate that privacy-aware data processing, when kept within moderate masking levels, still allows clinically viable generation quality, supporting alignment with data-governance mandates such as PDPA and GDPR.

To address the concern regarding the generalizability of our GRPO reward configuration, we conducted a pilot migration experiment on a distinct task: pediatric radiology follow-up summarization. We applied the GRPO policy trained on adult pulmonary CT reports (50% clinical factuality, 25% relevance, 25% language fluency) directly to developmental dysplasia of the hip (DDH) records without re-weighting.

This resulted in a moderate performance decline (ROUGE-L: 43.1 → 40.4, Med-F1: 0.791 → 0.751), indicating that while the baseline reward weights retain partial utility, they may not be fully optimal across clinical domains.

We then implemented an adaptive weighting strategy using batch-level reward variance to dynamically adjust objective contributions. This improved performance (ROUGE-L: 42.1, Med-F1: 0.773) (Table 5), suggesting that such dynamic calibration offers a promising direction for GRPO generalization without requiring per-domain manual tuning.
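The section does not specify how batch-level reward variance maps to objective weights, so the sketch below shows one plausible reading: inverse-variance scaling of the base weights (50% factuality, 25% relevance, 25% fluency), renormalized to sum to 1. This choice down-weights objectives whose rewards are noisy within a batch. The opposite choice, up-weighting high-variance objectives because they carry more ranking signal, is equally defensible. The batch values are hypothetical.

```python
from statistics import pvariance

# Base weights from the pulmonary-CT configuration described above.
BASE_WEIGHTS = {"factuality": 0.50, "relevance": 0.25, "fluency": 0.25}


def variance_adaptive_weights(batch_rewards, base_weights=BASE_WEIGHTS, eps=1e-6):
    """Hypothetical inverse-variance re-weighting of reward objectives for one batch.

    Each base weight is divided by the within-batch variance of that objective's
    rewards (plus eps for stability) and the results are renormalized to sum to 1.
    """
    raw = {name: w / (pvariance(batch_rewards[name]) + eps) for name, w in base_weights.items()}
    total = sum(raw.values())
    return {name: value / total for name, value in raw.items()}


if __name__ == "__main__":
    batch = {  # hypothetical per-candidate rewards for one batch
        "factuality": [0.2, 0.9, 0.5, 0.7],
        "relevance": [0.6, 0.7, 0.65, 0.55],
        "fluency": [0.8, 0.82, 0.79, 0.81],
    }
    print(variance_adaptive_weights(batch))
```

Raw inverse-variance weights can swing sharply when one objective is nearly constant within a batch, as fluency is here. A practical version would likely smooth the variances across batches (for example, with a moving average) or clip the resulting weights.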

Table 5. Cross-Domain Transfer and Adaptation of GRPO under Varying Training Strategies.

6. Conclusions

The LoRA-PPO-RAG framework proposed in this study, which combines dynamic retrieval, lightweight fine-tuning, and reinforcement learning, has demonstrated feasibility and traceability on multi-hospital datasets. Across all core metrics (CQI, ROUGE-L, BERTScore, and Med-Concept F1), the full system significantly outperforms conventional fine-tuning and plain-RAG baselines. It also achieves a lower medical-hallucination rate (6.2%) on the FactCC-Med benchmark and a CQI of 78.3, surpassing Med-PaLM 2 (72.6) and PMC-LLaMA (74.3), which supports the robustness and competitive edge of our framework.

Operationally, the framework has been integrated into the hospital information system: after double-blind validation by biostatisticians, report-writing time was reduced by roughly 75%, and layout errors were markedly curtailed—delivering tangible gains in clinical development efficiency. These outcomes align with—and extend—the performance reported in recent international literature, underscoring the necessity of moderate parameterization and rigorous retrieval strategies for real-world deployment in mid-sized hospitals.

Future work will expand the framework to additional healthcare institutions, support multilingual scenarios, and incorporate dynamic knowledge updates alongside robust safety-filtering mechanisms, with the long-term objective of enabling real-time clinical decision support.

Author Contributions

Conceptualization, S.-M.K. and R.-C.C.; methodology, S.-M.K., H.-Y.L., and R.-C.C.; software, S.-M.K.; validation, S.-M.K., H.-Y.L., S.-K.T. and R.-C.C.; formal analysis, S.-M.K.; investigation, S.-M.K. and H.-Y.L.; resources, S.-K.T. and R.-C.C.; data curation, S.-M.K. and H.-Y.L.; writing—original draft preparation, S.-M.K. and H.-Y.L.; writing—review and editing, S.-M.K. and R.-C.C.; visualization, S.-M.K.; supervision, R.-C.C.; project administration, S.-M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is available upon request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| ATC | Anatomical Therapeutic Chemical Classification |
| CQI | Composite Quality Index |
| DICOM | Digital Imaging and Communications in Medicine |
| EHR | Electronic Health Record |
| FHIR | Fast Healthcare Interoperability Resources |
| GRPO | Group Relative Policy Optimization |
| HIS | Hospital Information System |
| ICD-10 | International Classification of Diseases, 10th Revision |
| LLM | Large Language Model |
| LoRA | Low-Rank Adaptation |
| Med-F1 | UMLS-based Medical Concept F1 Score |
| NER | Named Entity Recognition |
| NHI | National Health Insurance (Taiwan) |
| PACS | Picture Archiving and Communication System |
| PEFT | Parameter-Efficient Fine-Tuning |
| PPO | Proximal Policy Optimization |
| QLoRA | Quantized LoRA |
| RAG | Retrieval-Augmented Generation |
| RCT | Randomized Controlled Trial |
| TFDA | Taiwan Food and Drug Administration |
| UMLS | Unified Medical Language System |

References

- Hutson, M. How AI is being used to accelerate clinical trials. Nature 2024, 627, S2–S5. Available online: https://www.nature.com/articles/d41586-024-00753-x (accessed on 22 June 2025).

- Xu, Z.; Jain, S.; Kankanhalli, M. Hallucination is inevitable: An innate limitation of large language models. arXiv 2024, arXiv:2401.11817. Available online: https://arxiv.org/abs/2401.11817 (accessed on 22 June 2025).

- Raiaan, M.A.K.; Mukta, M.S.H.; Fatema, K.; Fahad, N.M.; Sakib, S.; Mim, M.M.J. A review on large language models: Architectures, applications, taxonomies, open issues, and challenges. IEEE Access 2024, 12, 26839–26874.

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997. Available online: https://arxiv.org/abs/2312.10997 (accessed on 22 June 2025).

- Ng, K.K.Y.; Matsuba, I.; Zhang, P.C. RAG in Health Care: A Novel Framework for Improving Communication and Decision-Making by Addressing LLM Limitations. NEJM AI 2025, 2, AIra2400380.

- Ocampo, T.S.C.; Silva, T.P.; Alencar-Palha, C.; Haiter-Neto, F.; Oliveira, M.L. ChatGPT, and scientific writing: A reflection on the ethical boundaries. Imaging Sci. Dent. 2023, 53, 175–176.

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient Finetuning of Quantized LLMs. arXiv 2023, arXiv:2305.14314. Available online: https://arxiv.org/abs/2305.14314 (accessed on 22 June 2025).

- Huang, D.; Hu, Z.; Wang, Z. Performance Analysis of Llama 2 Among Other LLMs. In Proceedings of the 2024 IEEE Conference on Artificial Intelligence (CAI), Singapore, 25–27 June 2024; pp. 1081–1085.

- Tanno, R.; Barrett, D.G.T.; Sellergren, A.; Ghaisas, S.; Dathathri, S.; See, A.; Welbl, J.; Lau, C.; Tu, T.; Azizi, S.; et al. Collaboration between clinicians and vision–language models in radiology report generation. Nat. Med. 2024, 31, 599–608.

- Asgari, E.; Montaña-Brown, N.; Dubois, M.; Khalil, S.; Balloch, J.; Yeung, J.A.; Pimenta, D. A framework to assess clinical safety and hallucination rates of LLMs for medical text summarization. npj Digit. Med. 2025, 8, 274.

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240.

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; et al. Large Language Models Encode Clinical Knowledge. Nature 2023, 620, 172–180.

- Kung, T.H.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G.; Tseng, V.; et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS Digit. Health 2023, 2, e0000198.

- Liu, S.; McCoy, A.B.; Wright, A. Improving large language model applications in biomedicine with retrieval-augmented generation: A systematic review, meta-analysis, and clinical development guidelines. J. Am. Med. Inf. Assoc. 2025, 32, 605–615.

- Izacard, G.; Grave, E. Leveraging passage retrieval with generative models for open domain question answering. arXiv 2020, arXiv:2007.01282.

- Alkhalaf, M.; Yu, P.; Yin, M.; Deng, C. Applying generative AI with retrieval-augmented generation to summarize and extract key clinical information from electronic health records. J. Biomed. Inform. 2024, 156, 104662.

- Malkov, Y.A.; Yashunin, D.A. Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs. arXiv 2018, arXiv:1603.09320.

- Gao, Y.; Li, R.; Croxford, E.; Tesch, S.; To, D.; Caskey, J.; Patterson, B.W.; Churpek, M.M.; Miller, T.; Dligach, D.; et al. Large Language Models and Medical Knowledge Grounding for Diagnosis Prediction. medRxiv 2023, 2023, 24.23298641.

- Qin, C.; Jiang, K.; Wang, Y.; Zhu, T.; Wu, Y.; Zhang, D. Event-triggered H∞ control for unknown constrained nonlinear systems with application to robot arm. Appl. Math. Model. 2025, 144, 116089.

- Zhang, D.; Hao, X.; Liang, L.; Liu, W.; Qin, C. A novel deep convolutional neural network algorithm for surface defect detection. J. Comput. Des. Eng. 2022, 9, 1616–1632.

- Hu, E.; Shen, Y.; Wallis, C.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. ICML Proc. 2021, 139, 132–152.

- Xiong, G.; Jin, Q.; Lu, Z.; Zhang, A. Benchmarking Retrieval-Augmented Generation for Medicine. arXiv 2024, arXiv:2402.13178.

- Ye, C. Exploring a learning-to-rank approach to enhance the Retrieval Augmented Generation (RAG)-based electronic medical records search engines. Inform. Health 2024, 1, 93–99.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).