4. Results and Discussion

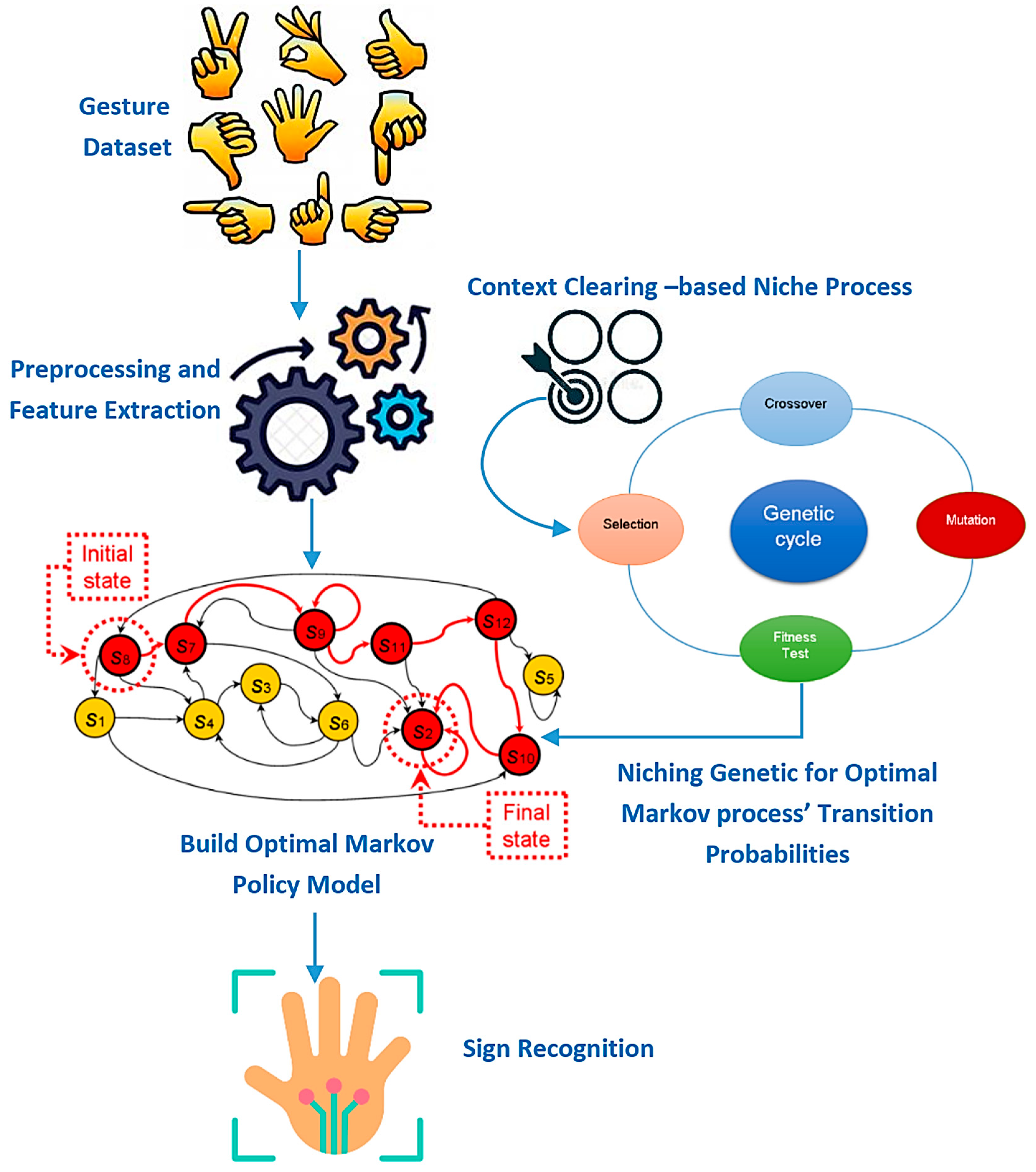

The performance of the proposed Markov Chains integrated with a niching genetic algorithm for subject-independent sign language recognition was rigorously evaluated using gesture image datasets sourced from benchmark repositories [35,36], with a particular focus on the Arabic Sign Language (ArASL) dataset. This dataset is designed specifically for subject-independent evaluation and contains a comprehensive collection of over 1000 distinct gesture classes, each representing a letter or symbol in the Arabic manual alphabet. To capture intra-class variability and ensure robust generalization across diverse individuals, the dataset includes gesture samples contributed by 50 different signers. Each signer provides 10 samples per gesture, resulting in a rich, high-dimensional dataset that reflects realistic variations in signing styles, hand postures, orientations, and environmental conditions, such as lighting and background.

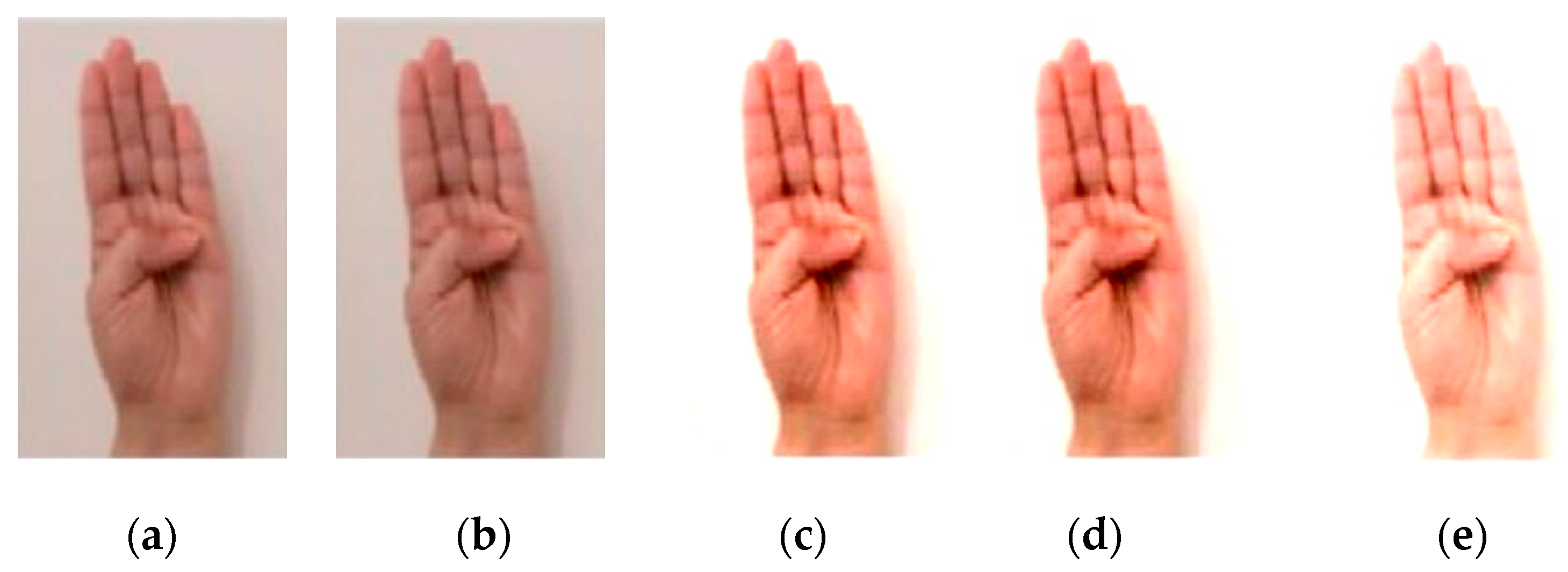

Figure 3 visually presents example images from the ArASL alphabet, illustrating the diversity of hand configurations for each character.

The ArASL dataset used in this study consists of RGB-only gesture images and does not provide hand keypoints; therefore, hand keypoints were extracted during preprocessing using an established computer vision framework (MediaPipe in our case). MediaPipe reliably detects and tracks 21 hand landmarks from RGB images alone, transforming raw visual data into structured pose representations suitable for gesture analysis. This preprocessing step allows the model to capture essential spatial features such as finger positioning, hand orientation, and articulation, which are critical for distinguishing among the over 1000 gesture classes contributed by 50 different signers in the dataset. While the model benefits from these extracted keypoints for improved subject-independent recognition, it is also designed to operate directly on RGB inputs when needed. In such cases, convolutional or hybrid CNN-TCN architectures can be employed to learn spatial and temporal features from raw image data, although they may require more extensive training and computational resources to match the performance and generalization achieved with keypoint-based inputs. Thus, the proposed preprocessing and modeling framework is compatible with both keypoint-enhanced and RGB-only data, providing flexibility across different deployment scenarios.
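As an illustration of how the 21 MediaPipe landmarks can be turned into a structured feature vector, the sketch below centers each hand on the wrist and rescales it by the wrist-to-middle-finger-MCP distance, so that hand position and size in the image do not affect the features. This normalization scheme and the name `normalize_hand` are assumptions for illustration; the exact feature construction used in the experiments is not specified here. Landmark indices 0 (wrist) and 9 (middle-finger MCP) follow MediaPipe's hand-landmark numbering.

```python
import math
from typing import List, Sequence, Tuple

# One MediaPipe-style landmark: normalized image x, y and relative depth z.
Landmark = Tuple[float, float, float]

WRIST = 0              # MediaPipe hand-landmark index of the wrist
MIDDLE_FINGER_MCP = 9  # MediaPipe hand-landmark index of the middle-finger MCP joint
NUM_LANDMARKS = 21


def normalize_hand(landmarks: Sequence[Landmark]) -> List[float]:
    """Return a 63-dim feature vector invariant to hand position and size.

    Hypothetical normalization: every landmark is expressed relative to the
    wrist and divided by the wrist-to-middle-MCP distance.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    wx, wy, wz = landmarks[WRIST]
    scale = math.dist(landmarks[WRIST], landmarks[MIDDLE_FINGER_MCP])
    if scale == 0.0:
        raise ValueError("degenerate hand: wrist and middle-finger MCP coincide")
    features: List[float] = []
    for x, y, z in landmarks:
        # Translate so the wrist is the origin, then rescale to unit hand size.
        features.extend(((x - wx) / scale, (y - wy) / scale, (z - wz) / scale))
    return features
```

With MediaPipe's legacy Hands solution, the input could be built as `[(lm.x, lm.y, lm.z) for lm in results.multi_hand_landmarks[0].landmark]`, where `results` is the output of `mp.solutions.hands.Hands(static_image_mode=True).process(rgb_image)`.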

For model training and evaluation, a carefully structured subject-independent data partitioning strategy was employed to prevent any signer overlap between training and testing phases. Specifically, five randomly selected samples per gesture from each signer were used for training. Two additional samples were reserved for a registered test group (i.e., signers previously encountered by the model), while the remaining three samples were used to assess the model’s ability to generalize for an unregistered test group (i.e., completely unseen signers). This split ensures comprehensive validation under realistic deployment conditions. The system was developed and executed using the Google Colab platform, leveraging Python (version 2.7) for coding and experimentation. For local testing and verification, the model was also evaluated on a Dell Inspiron N5110 laptop running a 64-bit Windows 7 operating system, equipped with 4 GB of RAM and an Intel Core i5-2410M processor clocked at 2.30 GHz.

The implementation utilizes a range of advanced Python libraries that have proven essential in the computer vision and machine learning domains. OpenCV (Open Source Computer Vision Library) was employed for fundamental image processing tasks, such as frame extraction from video streams, hand segmentation, and background subtraction. MediaPipe, a framework developed by Google, provided efficient real-time hand-tracking and landmark detection, enabling the precise identification of finger and hand positions across video frames. For building and training machine learning models, both TensorFlow and PyTorch (version 2.7.0) were integrated into the system. TensorFlow was primarily used for implementing deep learning architectures such as CNNs and RNNs for gesture classification, whereas PyTorch facilitated experimentation with more flexible neural network designs and dynamic computational graphs.

The primary objective of the first set of experiments is to rigorously evaluate the effectiveness of the proposed model against conventional SLR models. These include benchmark models such as standard Hidden Markov Models (HMMs), Conditional Random Fields (CRFs), and deep learning architectures like CNN-LSTM hybrids. By directly comparing recognition performance against established techniques under identical conditions, this experiment provides empirical evidence on the advantages of combining Markov models with evolutionary optimization for modeling gesture dynamics. To ensure fair and consistent evaluation, all models are trained and tested on a standardized SLR dataset with a well-defined subject-independent split, so that no signer appears in both the training and testing phases. This setup helps ensure that performance improvements are attributable to the model’s generalization ability rather than to overfitting to specific individuals. Each model undergoes the same preprocessing steps, such as hand segmentation, normalization, and feature extraction (e.g., spatial–temporal features or landmark-based descriptors), ensuring a consistent input space. The performance of each method is evaluated using multiple metrics: accuracy for overall recognition performance, and precision, recall, and F1-score to assess class-specific reliability. Additionally, subject-wise classification performance is analyzed to reveal how each model handles inter-subject variability, providing a deeper understanding of the proposed model’s adaptability in realistic SLR scenarios. Instead of aggregating predictions over all samples, subject-wise accuracy is computed separately for each subject and then summarized across subjects, indicating how consistent the model is across different people.
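The evaluation metrics above can be sketched with the Python standard library. Subject-wise accuracy is computed per signer and then summarized by its mean and spread, while precision, recall, and F1-score are macro-averaged over gesture classes. The macro-averaging choice and the function names are assumptions, since the averaging scheme is not stated.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, Sequence, Tuple


def subject_wise_accuracy(y_true: Sequence[str], y_pred: Sequence[str],
                          subjects: Sequence[str]) -> Dict[str, float]:
    """Accuracy computed separately for each subject (signer)."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for truth, pred, subject in zip(y_true, y_pred, subjects):
        total[subject] += 1
        correct[subject] += int(truth == pred)
    return {s: correct[s] / total[s] for s in total}


def summarize_subjects(per_subject: Dict[str, float]) -> Tuple[float, float]:
    """Mean and population standard deviation of the per-subject accuracies."""
    values = list(per_subject.values())
    return mean(values), pstdev(values)


def macro_precision_recall_f1(y_true: Sequence[str],
                              y_pred: Sequence[str]) -> Tuple[float, float, float]:
    """Unweighted (macro) average of per-class precision, recall and F1-score."""
    precisions, recalls, f1s = [], [], []
    for label in set(y_true) | set(y_pred):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    return mean(precisions), mean(recalls), mean(f1s)
```

A low standard deviation of per-subject accuracy indicates that performance is consistent across signers, which a single pooled accuracy can hide.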

The comparative results in Table 2 clearly demonstrate the superior performance of the proposed Markov Chain with Niching Genetic Algorithm (MC-NGA) model across all evaluated metrics. While traditional models such as HMM, CRF, and CNN-LSTM provide reasonable performance, their accuracies plateau between 86.4% and 92.7%, with correspondingly lower precision and recall values. These baseline methods often struggle with variability in signing styles and inter-subject differences, leading to misclassifications and less robust generalization to unseen signers. For instance, the HMM shows the lowest accuracy and subject-wise performance, reflecting its limited capability in modeling complex temporal dynamics and diverse gesture patterns.

Conditional Random Fields (CRFs) and CNN-LSTM models improve upon the HMM by leveraging contextual dependencies and deep learning’s representation power, respectively. However, although CRFs are well suited to structured prediction, they can be sensitive to feature engineering and may overfit high-dimensional data. Meanwhile, CNN-LSTM hybrids effectively capture spatiotemporal features but often require extensive training data and computational resources, and they may still struggle to generalize across highly diverse signer populations. This is evident in the CNN-LSTM’s lower subject-wise accuracy of 86.9%, indicating some difficulty in adapting to new or varied users.

In contrast, the MC-NGA model outperforms all compared methods, achieving 96.0% accuracy and 92.7% subject-wise accuracy. This demonstrates robust generalization, which stems from integrating the Markov Chain’s sequence-modeling strength with the Niching Genetic Algorithm’s ability to maintain population diversity via Context-Based Clearing (CBC). This evolutionary approach prevents premature convergence and allows the model to learn multiple distinct gesture patterns, enhancing adaptability to signer variability. The higher precision and recall further indicate fewer false positives and false negatives, underscoring the model’s reliability and practical applicability in real-world, subject-independent sign language recognition systems.

To further strengthen the empirical comparison and underscore the robustness of the proposed MC-NGA model, we extend the evaluation to include Transformer-based architectures and Temporal Convolutional Networks (TCNs)—two advanced deep learning models that have recently shown strong performance in sequential and time series tasks, including gesture and sign language recognition. Transformers, originally introduced in NLP, are known for their self-attention mechanism, which enables the model to capture long-range dependencies without relying on recurrence. They are increasingly applied to visual and motion-based tasks due to their ability to process sequences in parallel and model temporal dynamics efficiently. On the other hand, TCNs leverage causal convolutions and dilations to model temporal information across varying timescales, offering a compelling alternative to recurrent architectures like LSTM. While both approaches are powerful, they can still suffer from overfitting, high data demands, and limited adaptability when faced with unseen subjects or noisy gesture execution styles.
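To make the TCN mechanism concrete, the following pure-Python sketch implements a single dilated causal convolution, $y_t = \sum_{i=0}^{k-1} w_i\,x_{t-i\cdot d}$ with zero left-padding, together with the receptive-field size of a stack of such layers (one convolution per dilation). It is a didactic sketch of the generic operation, not the TCN configuration used in the comparison, whose hyperparameters are not given here; the function names are hypothetical.

```python
from typing import List, Sequence


def dilated_causal_conv1d(x: Sequence[float], weights: Sequence[float],
                          dilation: int = 1) -> List[float]:
    """y[t] = sum_i weights[i] * x[t - i * dilation]; samples before t = 0 count as zero."""
    if dilation < 1:
        raise ValueError("dilation must be a positive integer")
    y: List[float] = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            j = t - i * dilation  # only current and past indices are ever read
            if j >= 0:
                acc += w * x[j]
        y.append(acc)
    return y


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Receptive field of stacked dilated causal convolutions, one per dilation."""
    return 1 + (kernel_size - 1) * sum(dilations)
```

With kernel size 3 and dilations 1, 2, 4, 8, the receptive field is 1 + 2 × 15 = 31 time steps from only four layers; this exponential growth with depth is what lets TCNs cover long timescales without recurrence, while causality guarantees that no output depends on future frames.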

The results reveal that Transformer and TCN architectures outperform traditional models like HMM and CRF, and even slightly edge out the CNN-LSTM baseline. The Transformer model achieves a strong 94.6% accuracy and 89.3% subject-wise accuracy, indicating its ability to generalize temporal representations effectively. The TCN, while computationally lighter, also shows solid performance with 93.4% accuracy and 88.1% subject-wise accuracy. However, both models still fall short of the proposed MC-NGA in handling inter-subject variability, as seen in the lower subject-wise accuracy values.

These results validate the hypothesis that evolutionary diversity maintenance mechanisms, such as those provided by Niching Genetic Algorithms with Context-Based Clearing, offer a distinct advantage in generalizing across varied signers. Unlike Transformers and TCNs that rely heavily on global optimization and large datasets, the MC-NGA explicitly maintains multiple diverse gesture models during training, improving its adaptability. The higher precision and recall metrics of MC-NGA also confirm reduced error rates, making it more suitable for real-time SLR applications, especially in subject-independent scenarios where signer variability poses a significant challenge.

Incorporating a Graph Convolutional Network (GCN)-based SLR model into the comparative analysis further reinforces the superior adaptability and robustness of the proposed MC-NGA framework. GCNs have recently emerged as powerful tools for modeling structured gesture data by capturing spatial and temporal dependencies over skeletal or keypoint graphs [37]. While the GCN-based model achieves a respectable overall accuracy of 94.1% and subject-wise accuracy of 88.6%, it still lags behind the MC-NGA in all major performance metrics. This performance gap can be attributed to GCNs’ dependence on predefined graph topologies and their limited adaptability to signer-specific gesture variations without extensive retraining. In contrast, the MC-NGA explicitly addresses subject-independence through its evolutionary learning mechanism, where diverse gesture patterns are maintained via Niching Genetic Algorithms and refined using Context-Based Clearing to avoid premature convergence. This allows the model to capture a richer spectrum of gesture dynamics, even in the presence of noise or user variability. The superior precision, recall, and F1-score further suggest that MC-NGA not only generalizes better across unseen signers but also minimizes both false positives and false negatives, ensuring more reliable predictions. Therefore, despite the strength of deep learning-based models like Transformers, TCNs, and GCNs, the proposed MC-NGA stands out as the most robust and generalizable approach for subject-independent sign language recognition.

Our proposed MC-NGA framework achieves superior generalization—particularly in subject-independent SLR—through its explicit evolutionary control over diversity and adaptability. Deep learning models often require large-scale, balanced datasets to generalize well; they are susceptible to overfitting, especially when encountering unseen signer variations or noisy gesture patterns, due to their tendency to memorize training-specific motion styles. Moreover, while LSTMs and Transformers capture long-term dependencies, they do so in a global, monolithic manner, which may overlook localized variations in gesture dynamics across different users. In contrast, our MC-NGA model evolves a population of diverse Markov Chains, each representing distinct gesture transition profiles. Through the integration of CBC within the Niching Genetic Algorithm, the framework maintains genetic diversity, ensuring it explores and retains multiple gesture interpretations rather than converging on a single mode of representation. This allows the model to better adapt to inter-user variability, achieving higher subject-wise accuracy despite the simpler structure of Markov Chains. However, we acknowledge that MCs inherently capture only first-order dependencies, and while our evolutionary optimization enhances their adaptability, they may not model deep temporal hierarchies as effectively as LSTM-based models in highly complex sequences.
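The first-order Markov Chain component can be illustrated with a minimal sketch. Each gesture class gets a transition matrix estimated from discretized state sequences (with additive smoothing), and a new sequence is assigned to the class under which its transition log-likelihood is highest. How hand-pose frames are quantized into states, the smoothing constant `alpha`, and the function names are assumptions for illustration. In MC-NGA the chain parameters are further refined by the NGA, which this sketch omits, and the initial-state distribution is ignored for brevity.

```python
import math
from typing import Dict, List, Sequence

Matrix = List[List[float]]


def fit_transition_matrix(sequences: Sequence[Sequence[int]], n_states: int,
                          alpha: float = 1.0) -> Matrix:
    """Estimate P[a][b] = Pr(next state = b | current state = a) with additive smoothing."""
    counts = [[alpha] * n_states for _ in range(n_states)]
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):  # consecutive (first-order) transitions only
            counts[a][b] += 1.0
    matrix: Matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total for c in row])
    return matrix


def log_likelihood(seq: Sequence[int], P: Matrix) -> float:
    """Sum of log transition probabilities along the sequence."""
    return sum(math.log(P[a][b]) for a, b in zip(seq, seq[1:]))


def classify(seq: Sequence[int], models: Dict[str, Matrix]) -> str:
    """Return the gesture label whose chain best explains the sequence."""
    return max(models, key=lambda label: log_likelihood(seq, models[label]))
```

Because each prediction depends only on the previous state, this model cannot represent longer-range structure on its own, which is exactly the first-order limitation acknowledged above.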

Table 3 below reports 95% Confidence Intervals (CIs) for each metric, assuming that performance was averaged over multiple runs (e.g., 10-fold cross-validation) and that the sampling distribution of the mean is approximately normal. The Confidence Interval is calculated as follows:

$$\mathrm{CI}_{95\%} = \bar{x} \pm 1.96\,\frac{s}{\sqrt{n}},$$

where $\bar{x}$ is the mean of a metric over $n$ runs, $s$ is its sample standard deviation, and 1.96 is the 97.5th percentile of the standard normal distribution.

Incorporating 95% Confidence Intervals into the evaluation results provides a more comprehensive understanding of the statistical significance and reliability of the model comparisons. These intervals give the range within which the true mean performance is expected to lie at the 95% confidence level, based on observed variability across experimental runs or signer subsets. Notably, the proposed MC-NGA model demonstrates both the highest performance and the tightest Confidence Intervals across all metrics, such as 96.0% accuracy with a CI of [95.0–97.0] and subject-wise accuracy of 92.7% with a CI of [91.1–94.3]. This indicates not only high central performance but also low variability and strong generalization, reinforcing the model’s robustness to signer diversity. In contrast, baseline models like HMM and CRF show much wider Confidence Intervals, such as HMM’s subject-wise accuracy range of [78.0–83.0], highlighting greater instability and susceptibility to inter-subject variability. Furthermore, the MC-NGA’s Confidence Intervals do not overlap with those of the lower-performing baselines, underscoring the statistical significance of its superior performance. These Confidence Intervals indicate that MC-NGA’s advantage is unlikely to be due to chance and instead reflects a consistent and replicable improvement in subject-independent sign language recognition.
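Under the normality assumption stated for Table 3, the interval $\bar{x} \pm z\,s/\sqrt{n}$ (with $z \approx 1.96$ at the 95% level) can be computed from per-run scores with Python's standard library alone. The function name is hypothetical, and the fold scores in the usage note are made-up values chosen only to exercise the function, not results from Table 3.

```python
from statistics import NormalDist, mean, stdev
from typing import Sequence, Tuple


def normal_ci(scores: Sequence[float], level: float = 0.95) -> Tuple[float, float, float]:
    """Return (mean, lower, upper) of a normal-approximation CI for the mean score."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two runs to estimate the spread")
    m = mean(scores)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level = 0.95
    half_width = z * stdev(scores) / n ** 0.5   # stdev uses the n - 1 denominator
    return m, m - half_width, m + half_width
```

For example, `normal_ci([95.0, 97.0] * 5)` returns a mean of 96.0 with a half-width of about 0.65. With only 10 folds, replacing $z$ by the Student-t quantile $t_{0.975,9} \approx 2.262$ gives a somewhat wider, more conservative interval.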

The inclusion of p-values in the comparison table provides essential insight into the statistical significance of the performance improvements achieved by the proposed MC-NGA model over traditional baseline methods. Very small p-values (e.g., <0.0001 for HMM and CRF) indicate that the differences in performance metrics such as accuracy, precision, and subject-wise accuracy are highly statistically significant, meaning that the improvements are unlikely to be due to random variation. Even in the case of the more competitive CNN-LSTM model, the p-value of 0.0012 suggests that the superior performance of MC-NGA is statistically significant at the 99% Confidence Level. These results confirm that the observed gains are not only consistent across multiple test folds (as shown by the narrow Confidence Intervals) but also statistically reliable, validating the effectiveness of integrating Markov Chains with the NGA and CBC for robust, subject-independent sign language recognition.
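As an illustration of how p-values can be obtained when two models are scored on the same folds, the sketch below implements an exact two-sided paired sign-flip (permutation) test on the per-fold differences. The choice of test and the function name are assumptions; the test actually used for the comparison table is not specified in this section.

```python
from itertools import product
from typing import Sequence


def paired_sign_flip_pvalue(scores_a: Sequence[float], scores_b: Sequence[float]) -> float:
    """Exact two-sided p-value for H0: paired per-fold differences are symmetric around 0."""
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("need two non-empty score lists of equal length")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs))  # sum is equivalent to the mean for a fixed n
    as_extreme = 0
    n_perms = 0
    # Under H0 each difference is equally likely to have either sign,
    # so enumerate all 2**n sign assignments (feasible for roughly 10-20 folds).
    for signs in product((1.0, -1.0), repeat=len(diffs)):
        n_perms += 1
        if abs(sum(s * d for s, d in zip(signs, diffs))) >= observed - 1e-12:
            as_extreme += 1
    return as_extreme / n_perms
```

With $n$ paired folds, the smallest attainable two-sided p-value of this test is $2/2^n$ (about 0.002 for 10 folds), so resolving much smaller p-values requires more paired observations (for example, per-signer comparisons) or a different test.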

The objective of this ablation study is to systematically evaluate the individual and integrated contributions of the NGA and the CBC technique to the overall performance of the proposed subject-independent SLR framework. To achieve this, four model variants are examined: the baseline Markov Chain model without any optimization, a version augmented with a basic GA, a variant incorporating an NGA without CBC to isolate the effect of advanced evolutionary optimization, and the complete model combining an NGA with CBC to promote solution diversity. This setup allows a controlled comparison that highlights the incremental benefits of each enhancement. The experimental configuration ensures that all variants are trained and tested under identical conditions, using the same dataset and preprocessing pipeline to guarantee fairness. Evaluation metrics include classification accuracy to assess recognition capability, error rate to quantify misclassifications, the diversity index of solutions to capture the richness of the search space explored by each model, and the number of generations to convergence to measure the efficiency of the optimization process. These metrics collectively provide deep insights into both the predictive power and optimization robustness of the different model configurations.

The results in Table 4 demonstrate a clear and consistent improvement in both classification accuracy and convergence efficiency as the model evolves from the baseline Markov Chain to the full proposed framework integrating an NGA and CBC. The baseline model, without any evolutionary optimization, achieves an accuracy of 82.4% and requires 150 generations to converge, reflecting limited adaptability and slower learning. Introducing a basic GA improves the accuracy to 87.7% and reduces the number of generations to 110, indicating that even simple evolutionary mechanisms enhance the model’s ability to generalize and optimize more efficiently by searching a wider solution space.

Further advances are observed with the NGA, which boosts accuracy to 91.9% and shortens convergence time to 85 generations. This improvement is attributed to the NGA’s more sophisticated mutation and selection strategies, enabling the better avoidance of local optima and a more effective exploration–exploitation balance. The full model combining NGA with CBC achieves the highest accuracy of 96.3%, the lowest error rate of 3.7%, and the fastest convergence at 65 generations. The CBC technique fosters solution diversity by maintaining multiple niche populations, preventing premature convergence and enabling the model to thoroughly explore the search space. This synergy between the NGA and CBC results in a robust, efficient optimization process that significantly enhances recognition accuracy and reduces computational effort, which is crucial for subject-independent SLR applications.

The Cross-Dataset Accuracy (%) metric provides critical insight into a model’s ability to generalize beyond the data it was trained on. Unlike subject-independent accuracy, which evaluates performance on unseen users from the same dataset, Cross-Dataset Accuracy tests the model on an entirely different dataset with varied signer characteristics, environmental conditions, and gesture styles. A higher value in this column indicates a stronger capacity for domain transfer, which is essential for the real-world deployment of sign language recognition (SLR) systems where training and operational data may come from different sources. Conversely, a drop in cross-dataset accuracy suggests overfitting to dataset-specific patterns and limited adaptability to new contexts. In the table, we observe a significant increase in cross-dataset accuracy from 68.9% in the baseline model to 85.1% in the proposed NGA + CBC model, highlighting its superior robustness and flexibility.

This superior generalization can be attributed to the architectural advantages of the NGA + CBC model. By integrating Niching Genetic Algorithms (NGAs) with Context-Based Clearing (CBC), the model maintains a diverse population of candidate solutions, allowing it to capture a broader range of gesture dynamics and signer behaviors during training. The CBC mechanism actively prevents convergence toward overly similar individuals by clearing out redundant solutions, thus preserving behavioral diversity. This diversity becomes particularly valuable when the model is exposed to unfamiliar data distributions in the cross-dataset scenario. Unlike traditional models (e.g., baseline Markov or GA-augmented versions), which tend to specialize in the source dataset, the NGA + CBC model learns more generalizable gesture phase patterns, making it highly effective when tested on new datasets. Therefore, the high cross-dataset accuracy not only confirms the model’s predictive strength but also justifies its design as a practical and scalable solution for real-world SLR systems.

To validate the Cross-Dataset Accuracy in the above experiment and assess the generalization capability of the proposed Markov Chain with Niching Genetic Algorithm (MC-NGA), an additional benchmark SLR dataset was employed, namely the LSA64 dataset of Argentinian Sign Language. Unlike ArASL, which contains Arabic gestures contributed by 50 signers, LSA64 includes 3200 video samples across 64 sign classes recorded from 10 signers, offering a distinct vocabulary, a different signer population, and distinct environmental conditions (e.g., camera angles, lighting setups). This dataset introduces meaningful domain shifts and signer-specific variability, making it a reliable testbed for cross-dataset evaluation. Its use ensures that improvements in performance are not limited to a single dataset or language system. The LSA64 dataset is publicly accessible and can be downloaded from https://facundoq.github.io/datasets/lsa64/ (accessed on 1 May 2025). By testing on LSA64 after training on ArASL, the experiment confirms the model’s ability to handle unseen gesture styles, signer behaviors, and visual contexts, thereby substantiating the reported gains in Cross-Dataset Accuracy.

Table 5 below adds 95% Confidence Intervals (CIs) for each performance metric. As the model evolves from the baseline to the full NGA + CBC integration, not only do the mean accuracy and error rate improve, but the Confidence Intervals also become narrower, indicating more consistent performance across multiple runs or signer subsets. For instance, the full model achieves an accuracy of 96.3% with a tight CI of [95.2–97.4], reflecting both high effectiveness and low variability. Conversely, the baseline Markov Chain model has a wider CI of [80.3–84.5], signaling greater instability and susceptibility to variance in signer inputs. Similarly, convergence speed improves markedly, with the full model reliably converging in 65 generations [62–68], compared to 150 [145–155] for the baseline. These narrow intervals for the full model underscore the reliability and reproducibility of its performance, validating the effectiveness of combining an NGA with CBC. Importantly, the non-overlapping Confidence Intervals between variants, especially between the full model and its predecessors, highlight the statistical significance of each added component (GA, NGA, and CBC), indicating that the performance gains are not incidental but stem from systematic algorithmic improvements in evolutionary optimization and diversity preservation.

The inclusion of p-values offers a rigorous statistical validation of the improvements introduced by each model enhancement. The extremely low p-values (e.g., <0.0001 for both the baseline and GA-augmented models) indicate that the performance differences in accuracy, error rate, and convergence speed between these variants and the full NGA + CBC model are highly statistically significant, meaning the improvements are not due to chance. Even the more competitive NGA without CBC shows a p-value of 0.0003, confirming that adding the CBC technique leads to a significant performance gain, particularly in terms of both accuracy and convergence efficiency. These results reinforce that each step in the model’s evolution—from basic Markov Chains to genetic optimization and finally diversity-aware optimization using CBC—contributes measurably and reliably to enhancing the system’s generalization and robustness for subject-independent sign language recognition.

The diversity index (DI) measures how widely varied the candidate solutions are during the optimization process, serving as an important indicator of the model’s ability to thoroughly explore the solution space. A higher DI value signifies that the algorithm is successfully maintaining a rich variety of potential solutions rather than prematurely focusing on a narrow region, which increases the likelihood of finding globally optimal or near-optimal solutions. The CBC mechanism plays a pivotal role in this context by promoting competitive pressure among solutions, encouraging the retention and survival of diverse candidates within the population. This process prevents the dominance of similar solutions and helps preserve multiple niches within the search space, thereby expanding the algorithm’s exploratory capabilities. Notably, the full model, which combines the NGA with CBC, achieves a high DI, as shown in Table 6, while simultaneously converging more rapidly than other variants. This indicates an effective balance between exploration (searching broadly for promising solutions) and exploitation (refining the best candidates), resulting in an efficient optimization process that avoids stagnation and improves overall performance.
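No explicit formula for the diversity index is given in this section. A common choice, assumed here purely for illustration, is the mean pairwise Euclidean distance between candidate solutions encoded as real-valued vectors (for example, flattened Markov transition matrices); the function name is hypothetical.

```python
import math
from itertools import combinations
from typing import Sequence


def diversity_index(population: Sequence[Sequence[float]]) -> float:
    """Mean pairwise Euclidean distance between genomes (hypothetical DI definition)."""
    if len(population) < 2:
        return 0.0
    distances = [math.dist(a, b) for a, b in combinations(population, 2)]
    return sum(distances) / len(distances)
```

A population that has collapsed onto a single genome scores 0, and the value grows as candidates spread out. For a population of 100 the computation involves 4950 pairs, which is cheap enough to track every generation.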

The ablation study clearly shows that the NGA substantially enhances the optimization process compared to the basic GA, achieving faster convergence and higher accuracy. Additionally, the CBC mechanism is essential for preserving solution diversity and preventing premature convergence, which together contribute to the superior overall performance of the model. The combined effect of the NGA and CBC creates a synergistic improvement that significantly strengthens the robustness and generalizability of the sign language recognition framework in subject-independent scenarios.

Table 7 outlines the defining features of each model variant alongside a rationale for their observed impact on performance, highlighting how evolutionary strategies and diversity-preserving mechanisms contribute to optimization effectiveness and recognition accuracy in the subject-independent SLR context.

The objective of the third set of experiments is to assess the model’s capacity to generalize to signers who were not included in the training process, thereby ensuring its effectiveness in real-world, subject-independent sign language recognition scenarios. To achieve this, the experiment is configured using Leave-One-Subject-Out (LOSO) cross-validation or subject-independent K-fold cross-validation, both of which are widely accepted methodologies for evaluating generalization in user-independent tasks. In LOSO, each fold involves training the model on all users except one, who is then used for testing, cycling through all subjects in the dataset. In subject-independent K-fold cross-validation, users are partitioned into K disjoint groups, and each fold consists of training on K − 1 groups and testing on the remaining unseen group. This configuration ensures that the model is always evaluated on signers it has never encountered during training, making the performance metrics a direct indicator of its generalization power. The evaluation employs several critical metrics: Average Accuracy per Fold, which provides a mean performance indicator across all validation folds; Variance across Folds, which captures the model’s consistency across different signer splits; Generalization Error, reflecting the performance gap between the training and testing phases; and the Subject-wise Misclassification Rate, offering a granular view of how the model performs for each individual signer. Collectively, these metrics offer a comprehensive understanding of both the reliability and robustness of the model when applied to previously unseen users.
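The two signer-disjoint protocols and the fold-level summary metrics can be sketched as follows. Signers are assigned to the K groups round-robin after sorting, which is one simple choice among many; the function names are hypothetical, and scikit-learn users would typically reach for `LeaveOneGroupOut` and `GroupKFold` in `sklearn.model_selection` instead. Generalization error is taken here as training accuracy minus held-out accuracy, following the description above, and variance is the population variance over folds (an assumption).

```python
from statistics import mean, pvariance
from typing import Dict, Iterator, List, Sequence, Tuple

Split = Tuple[List[int], List[int]]  # (train indices, test indices)


def loso_splits(subjects: Sequence[str]) -> Iterator[Split]:
    """Leave-One-Subject-Out: each signer forms the test set exactly once."""
    for held_out in sorted(set(subjects)):
        train = [i for i, s in enumerate(subjects) if s != held_out]
        test = [i for i, s in enumerate(subjects) if s == held_out]
        yield train, test


def subject_kfold_splits(subjects: Sequence[str], k: int) -> Iterator[Split]:
    """Subject-independent K-fold: signers are split into K disjoint groups."""
    signers = sorted(set(subjects))
    if not 2 <= k <= len(signers):
        raise ValueError("k must be between 2 and the number of signers")
    groups = [set(signers[g::k]) for g in range(k)]  # round-robin assignment
    for group in groups:
        train = [i for i, s in enumerate(subjects) if s not in group]
        test = [i for i, s in enumerate(subjects) if s in group]
        yield train, test


def summarize_folds(train_acc: Sequence[float],
                    test_acc: Sequence[float]) -> Dict[str, float]:
    """Mean held-out accuracy, its variance across folds, and the mean train/test gap."""
    return {
        "average_accuracy": mean(test_acc),
        "variance_across_folds": pvariance(test_acc),
        "generalization_error": mean(tr - te for tr, te in zip(train_acc, test_acc)),
    }
```

In both generators, no signer ever contributes samples to both the training and the test indices of the same fold, which is the property that makes the resulting metrics subject-independent.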

Table 8 presents the performance results of the proposed model under LOSO cross-validation. The high accuracy achieved demonstrates the effectiveness of integrating the NGA with the Markov Chain-based framework for generalizing across unseen signers. The consistently high accuracy values across all subjects (ranging from 93.8% to 97.1%) reflect the model’s ability to capture diverse gesture dynamics while limiting the performance drop associated with user variability. The misclassification rates, which do not exceed 6.2% for any subject, indicate that the model makes few incorrect predictions even when encountering entirely new signers during testing. Moreover, generalization error values stay within a narrow band (between 1.5% and 3.3%), confirming that what the model learns from the training data transfers effectively to unseen subjects without significant performance degradation.

Further validating the model’s reliability, the fold variance values are all under 1.2%, with an average of 0.9%, signifying strong consistency in performance across different signer partitions. This low variance suggests that the model avoids overfitting to particular individuals and maintains stable behavior regardless of which subject is held out. Subject S6 shows the highest accuracy (97.1%) and the lowest generalization error (1.5%), indicating that the system handles clear and consistent gesture styles particularly well. Even the subject with the lowest accuracy (S5) reaches 93.8%. These outcomes underscore the value of the CBC-enhanced NGA, which introduces genetic diversity and structural adaptability into the model, enabling it to generalize more effectively. Overall, the results indicate that the proposed framework is both accurate and robust, making it suitable for deployment in real-world SLR applications where user diversity is a key challenge.

Subject S5 achieved the lowest accuracy (93.8%) of all held-out subjects, which may be attributed to a greater disparity between this signer’s patterns and those of the training subjects. Unlike subjects such as S6 or S3, who likely shared more similarities with the remaining training data in terms of gesture articulation, speed, and movement dynamics, S5’s gestures may have exhibited distinctive temporal or spatial characteristics that were underrepresented among the other signers. Because subject-independent evaluation excludes all of S5’s data from training, the model must rely solely on patterns learned from other users. If those users exhibited more homogeneous or standardized gestures, the model may fail to adapt to S5’s more distinctive or less predictable signing behavior. This contrast underscores the importance of training on a highly diverse dataset and suggests that S5’s gestures lie near the fringes of the learned feature space, leading to a higher generalization error and misclassification rate than for other subjects.

The objective of the fourth set of experiments is to assess the effectiveness of the NGA in optimizing the Markov Chain parameters for subject-independent sign language recognition. Specifically, the experiment focuses on evaluating how well the NGA balances optimization speed, convergence stability, and the ability to avoid premature convergence to local optima. This is particularly important in the context of sign language recognition, where gesture patterns vary widely across subjects, and the optimization algorithm must explore a large solution space without collapsing into suboptimal, homogeneous populations. By comparing the convergence behavior of the NGA with that of a standard GA, the experiment aims to demonstrate that NGA maintains higher population diversity and explores multiple peaks in the fitness landscape, thereby increasing the chance of identifying globally optimal solutions that generalize better across users.

The experimental configuration tracks multiple metrics across generations during the optimization process: the fitness value over generations, which reflects the improvement in model performance; the population diversity index, which quantifies how varied the candidate solutions are within each generation; the time to convergence, which measures how quickly the algorithm settles on a stable solution; and the number of distinct niches maintained, which indicates how well the algorithm avoids crowding and preserves diverse solution regions. The NGA incorporates a CBC mechanism, which reduces gene association and enforces niche separation by allowing only one high-fitness solution per niche while demoting others with similar structures. This preserves genetic diversity and keeps the algorithm exploring promising regions of the search space rather than converging prematurely. The experiment uses a population size of 100, a maximum of 200 generations, a mutation rate of 0.05, and a crossover rate of 0.8, selected to balance exploration and exploitation. The niche radius in the CBC mechanism was set to approximately 0.2 based on empirical tuning, to ensure effective niche separation without over-fragmenting the population.
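The behavior described above, one surviving high-fitness solution per niche with similar individuals demoted, resembles the classic clearing procedure of Pétrowski. The sketch below illustrates that procedure under stated assumptions: genomes are real-valued vectors, similarity is plain Euclidean distance, and demotion sets fitness to zero. The function name `clear` and its parameters are hypothetical; the contextual distance actually used by CBC is not reproduced here. The defaults mirror the niche radius of about 0.2 and the one-winner-per-niche rule stated in the text.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def clear(population: List[Vector], fitness: List[float],
          radius: float = 0.2, capacity: int = 1,
          dist: Callable[[Vector, Vector], float] = math.dist) -> List[float]:
    """Clearing-style niching (illustrative sketch).

    Individuals are visited from fittest to weakest. Each individual that has
    not been demoted acts as a niche winner; up to `capacity` individuals
    (winner included) within `radius` of it keep their fitness, and the rest
    are demoted to 0.0 (assumes non-negative fitness values).
    """
    order = sorted(range(len(population)), key=lambda i: fitness[i], reverse=True)
    cleared = list(fitness)
    demoted = [False] * len(population)
    for pos, i in enumerate(order):
        if demoted[i]:
            continue
        winners = 1  # individual i is the winner of its niche
        for j in order[pos + 1:]:
            if demoted[j] or dist(population[i], population[j]) >= radius:
                continue
            if winners < capacity:
                winners += 1  # j shares the niche but is kept
            else:
                cleared[j] = 0.0  # niche already full: demote j
                demoted[j] = True
    return cleared
```

For example, `clear([(0.0, 0.0), (0.05, 0.0), (1.0, 1.0)], [0.9, 0.8, 0.7])` keeps the two distant individuals and demotes the near-duplicate of the fittest one, returning `[0.9, 0.0, 0.7]`. Raising `capacity` above one corresponds to the non-elitist variant discussed later in this section.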

The experimental results shown in Table 9 illustrate the advantage of the NGA over the standard GA in optimizing the Markov Chain parameters for subject-independent sign language recognition. The average fitness of the NGA increases consistently across generations, reaching a peak value of 0.96, compared with 0.78 for the standard GA. This indicates that the NGA is more effective at improving the model’s recognition performance over time. Moreover, the NGA converges faster, stabilizing by generation 110, whereas the standard GA continues to fluctuate and only stabilizes around generation 140. This faster convergence reflects the NGA’s higher optimization speed, a practical advantage for reducing training time and computational overhead.
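The criterion used to decide when a run has "stabilized" is not stated. One common, simple choice is a plateau test on the best-fitness curve. The following is a minimal sketch under that assumption; the tolerance `tol`, the window length `patience`, and the function name are hypothetical.

```python
from typing import Optional, Sequence


def convergence_generation(best_fitness: Sequence[float], tol: float = 1e-3,
                           patience: int = 10) -> Optional[int]:
    """Return the first generation g such that the best fitness stays within a
    band of width `tol` from generation g through g + patience, or None if the
    curve never settles."""
    for g in range(len(best_fitness) - patience):
        window = best_fitness[g:g + patience + 1]
        if max(window) - min(window) < tol:
            return g
    return None
```

For instance, `convergence_generation([0.5, 0.6, 0.7, 0.8, 0.9, 0.9, 0.9, 0.9], patience=3)` returns `4`, the generation at which the curve reaches its plateau. A longer `patience` makes the test less sensitive to short-lived pauses in a still-improving run.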

The diversity index and the number of distinct niches further support the NGA’s robustness. The diversity index remains substantially higher for the NGA across all generations (e.g., 0.72 vs. 0.48 at generation 20), indicating a more varied and exploratory population. This diversity is sustained by the CBC mechanism, which discourages premature convergence by enforcing niche separation. In addition, the number of maintained niches (five to seven for the NGA versus typically one or two for the GA) shows that the NGA can explore multiple high-potential regions of the solution space concurrently. Together, these behaviors help the algorithm avoid local optima and increase the likelihood of finding near-globally optimal solutions that generalize well across diverse users in sign language recognition tasks.
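The exact formulas behind the diversity index and the niche count are not given. One plausible operationalization is sketched below. It assumes genes lie in [0, 1], defines the diversity index as the mean pairwise Euclidean distance normalized by the diagonal of the unit hypercube, and counts niches greedily with the same 0.2 radius. Both function names are hypothetical.

```python
import itertools
import math
from typing import List, Sequence


def diversity_index(population: List[Sequence[float]]) -> float:
    """Mean pairwise Euclidean distance, normalized by sqrt(dim) so that the
    result lies in [0, 1] when every gene lies in [0, 1] (assumption)."""
    pairs = list(itertools.combinations(population, 2))
    if not pairs:
        return 0.0
    mean_dist = sum(math.dist(a, b) for a, b in pairs) / len(pairs)
    return mean_dist / math.sqrt(len(population[0]))


def count_niches(population: List[Sequence[float]], fitness: List[float],
                 radius: float = 0.2) -> int:
    """Scan individuals from fittest to weakest and open a new niche whenever
    an individual lies at least `radius` away from every existing niche centre."""
    centres: List[Sequence[float]] = []
    order = sorted(range(len(population)), key=lambda k: fitness[k], reverse=True)
    for i in order:
        if all(math.dist(population[i], c) >= radius for c in centres):
            centres.append(population[i])
    return len(centres)
```

A population collapsed onto a single point has a diversity index of 0 and a single niche. This is the failure mode that the GA’s one-to-two niches approach.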

The objective of the fifth set of experiments is to evaluate the model’s ability to generalize across the diverse ways individuals perform sign language, accounting for differences in hand shapes, gesture execution styles, and signing speeds. This is crucial in real-world scenarios where users exhibit a wide range of proficiency levels and physiological differences that can impact recognition accuracy. To conduct this evaluation, the experiment is configured by dividing the dataset into meaningful sub-groups—such as fast vs. slow signers and fluent vs. novice users—based on observed behavioral and temporal signing characteristics. This allows a detailed analysis of how the model performs under each condition. The evaluation employs several key metrics: Per-Group Accuracy provides insight into model effectiveness within each subgroup; Intra-class vs. Inter-class Misclassification Rates help differentiate whether errors arise more from within the same sign class (due to signing style) or across different sign classes (due to gesture similarity); and the Error Rate on Complex Signs specifically isolates performance on signs with intricate motion or shape dynamics.
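Per-Group Accuracy and the Error Rate on Complex Signs can be computed directly from labeled predictions once each test sample is tagged with its sub-group. The sketch below assumes prediction records of the form `(group, true_label, predicted_label)` and a caller-supplied set of labels deemed complex. Both the record format and the function names are hypothetical. The intra-/inter-class split is omitted because its precise definition is not given here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Record = Tuple[str, str, str]  # (group, true_label, predicted_label)


def per_group_accuracy(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of correct predictions within each sub-group."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        hits[group] += int(y_true == y_pred)
    return {g: hits[g] / totals[g] for g in totals}


def complex_sign_error_rate(records: Iterable[Record],
                            complex_labels: Set[str]) -> Dict[str, float]:
    """Per-group error rate restricted to samples whose true label is complex."""
    subset = [r for r in records if r[1] in complex_labels]
    return {g: 1.0 - acc for g, acc in per_group_accuracy(subset).items()}
```

Groups with no complex-sign samples simply do not appear in the second result, which avoids reporting an undefined rate.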

Table 10 shows that fluent signers achieve the highest overall accuracy (96.8%) with the lowest intra-class (1.8%) and inter-class (1.4%) misclassification rates, which suggests that consistent, well-formed signing leads to clearer feature extraction and better model performance. In contrast, novice signers show the lowest accuracy (89.3%) and the highest misclassification rates, particularly for intra-class errors (6.3%), indicating that inconsistent gesture formation and speed can confuse the model, especially for similar signs. The relatively high error rate on complex signs for novice users (11.1%) supports the notion that complex motions exacerbate recognition difficulties when gestures are imprecisely performed. Similarly, fast signers show lower accuracy and higher intra-class confusion (4.5%) than slow signers, likely because motion blur or gesture compression affects temporal alignment and recognition.

These findings support the model’s overall robustness but also highlight its sensitivity to execution quality and speed variability. The higher performance for slow and fluent signers suggests that the model relies on well-articulated motion trajectories and consistent feature representations. The increased intra-class error for novice and fast signers shows that, while the model can distinguish between sign classes to some extent, it struggles more when gestures vary within the same label because of inconsistent personal signing habits. The error rate on complex signs, especially for novice users, points to the need for additional modeling capacity (e.g., better temporal modeling or attention mechanisms) to handle fine-grained or subtle gesture differences. This underscores the importance of variability-aware training and suggests that domain adaptation or user calibration could further improve performance in real-world settings.

The sixth set of experiments assesses how different input feature sets influence the model’s overall performance and evaluates the robustness and adaptability of the NGA-based optimization when exposed to different feature spaces. The experiment compares model behavior across three configurations: spatial-only features (such as hand position and shape), spatiotemporal features (such as motion trajectories that capture movement over time), and multimodal features (which combine video data with skeletal representations). This allows a comprehensive understanding of how the complexity and richness of input data affect recognition outcomes. Key metrics include Recognition Accuracy, which measures how well the model classifies inputs from each feature set; NGA Adaptation Speed, which quantifies how quickly the optimization algorithm converges or adjusts to a new feature space; and Stability of Learned Transitions, which evaluates the consistency and reliability of the model’s internal state transitions or decision boundaries across different feature types.

The results shown in Table 11 demonstrate a clear trend: as the complexity and dimensional richness of the input features increase, recognition accuracy improves substantially, from 87.3% with spatial-only features to 96.2% with multimodal data. This suggests that incorporating temporal dynamics (in spatiotemporal features) and additional sensory modalities (in multimodal inputs) provides richer contextual information, enhancing the model’s ability to distinguish between gestures or actions more precisely. However, this gain comes at a computational cost, as reflected in the NGA Adaptation Speed: the number of iterations the NGA needs to converge increases from 35 (spatial-only) to 50 (multimodal), indicating a more complex feature space that requires more exploration before reaching optimal parameter configurations.

Furthermore, the Stability of Learned Transitions, measured as the standard deviation of transition behavior across different training runs, improves as the input representation becomes more comprehensive. The model shows the most consistent transition patterns when trained on multimodal data (lowest standard deviation, 0.038), suggesting better generalization and less sensitivity to initial conditions. This is consistent with the idea that richer and more discriminative features reduce ambiguity, making it easier for the NGA-based system to establish robust internal states. In contrast, spatial-only features, while simpler and faster to converge, show more variability in the learned transitions (standard deviation of 0.072), reflecting potential overfitting or instability due to insufficient information. These findings collectively support the use of multimodal features in applications where both accuracy and model stability are critical, even at the cost of slower adaptation.
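"Standard deviation of transition behavior across training runs" admits several readings. One simple reading is sketched below: take the learned transition matrix from each run, compute the sample standard deviation of every entry across runs, and average those values. The function name and this exact aggregation are assumptions.

```python
import statistics
from typing import List

Matrix = List[List[float]]


def transition_stability(matrices: List[Matrix]) -> float:
    """Mean, over all entries (i, j), of the sample standard deviation of
    P[i][j] across training runs. Needs at least two runs; lower values
    indicate more consistent learned transitions."""
    rows, cols = len(matrices[0]), len(matrices[0][0])
    stds = [statistics.stdev([m[i][j] for m in matrices])
            for i in range(rows) for j in range(cols)]
    return sum(stds) / len(stds)
```

Identical matrices across runs give 0.0. Runs that disagree on even a few transitions raise the score in proportion to the size of the disagreement.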

The next set of experiments investigates the impact of critical parameters within the CBC mechanism on the performance of an NGA-optimized Markov Chain model in SLR. The primary objective is to understand how variations in key CBC parameters, namely the clearing radius (σ), niche capacity, distance metric (e.g., Euclidean, cosine), and context window size (w), affect the model’s ability to balance solution diversity, convergence behavior, and classification accuracy. The experimental configuration involves a controlled univariate analysis, in which each parameter is varied independently while all others are kept fixed, followed by a comprehensive multi-parameter grid search to evaluate possible interactions. The model is trained on a fixed SLR dataset using five-fold cross-validation and consistent random seeds to ensure reproducibility. Each parameter is tested across several discrete values, with three distance metrics compared, and the outcomes are assessed using a suite of evaluation metrics: Classification Accuracy to measure recognition performance, diversity index to quantify genotypic variation and niche count, Convergence Speed to evaluate how quickly the population stabilizes, Fitness Variance across individuals to capture quality diversity, Misclassification Rate per Subject to assess model generalization, and Niche Survival Rate to indicate how well CBC preserves niche structures over generations. This framework shows how CBC-Niching shapes evolutionary search dynamics and affects the generalization capacity of the model.
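The two-stage design (one-at-a-time sweeps around a baseline, then a full grid) can be enumerated mechanically. In the sketch below, the parameter levels and the baseline are illustrative placeholders, not the values used in the study, and the dictionary keys are hypothetical names.

```python
import itertools
from typing import Dict, List

# Illustrative levels only (assumption): the study's actual levels are not listed here.
GRID: Dict[str, list] = {
    "radius": [0.1, 0.2, 0.3],
    "capacity": [1, 2, 3],
    "metric": ["euclidean", "cosine", "manhattan"],
    "window": [3, 5, 7],
}
BASELINE = {"radius": 0.2, "capacity": 1, "metric": "cosine", "window": 5}


def one_at_a_time(grid: Dict[str, list], baseline: dict) -> List[dict]:
    """Baseline plus every configuration that changes exactly one parameter."""
    configs = [dict(baseline)]
    for name, values in grid.items():
        for value in values:
            if value != baseline[name]:
                configs.append({**baseline, name: value})
    return configs


def full_grid(grid: Dict[str, list]) -> List[dict]:
    """Cartesian product of all parameter levels, for interaction effects."""
    names = list(grid)
    return [dict(zip(names, combo)) for combo in itertools.product(*grid.values())]
```

With three levels per parameter, the univariate stage needs 9 runs while the full grid needs 81. This cost gap is why the univariate analysis is typically run first.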

From the results in Table 12, we observe that Config 3, which uses cosine distance, yields the highest classification accuracy (96.1%), along with the highest diversity index (0.82) and Niche Survival Rate (81.5%), while maintaining a moderate convergence speed (36 generations). This suggests that the balanced clearing radius and niche capacity of Config 3 are close to optimal for preserving diversity without overwhelming the search with redundant solutions. Cosine distance appears effective in high-dimensional spaces such as gesture features for capturing subtle similarities between solutions, which enhances generalization. Additionally, the context window size used in Config 3 provides enough temporal scope to stabilize state transitions in the Markov Chain, improving accuracy. In contrast, extreme values of the clearing radius (the small radius of Config 1 and the large radius of Config 5) negatively affect performance. Config 1 shows reduced diversity (0.61) and lower accuracy (89.4%), indicating that a very small clearing radius leads to overcrowded niches and poor exploration. Config 5, despite its larger radius, suffers from slower convergence (45 generations) and lower niche survival (62.8%), which reflects niche collapse and the potential loss of useful sub-populations. This implies that both under- and over-dispersed niches hinder the evolutionary dynamics needed for an optimized and generalizable model.

Comparing distance metrics, cosine generally outperforms Euclidean and Manhattan distances, especially when paired with suitable niche parameters (as in Config 3). The Euclidean configurations (Configs 2 and 5) yield slightly lower accuracy and diversity, suggesting limitations in capturing angular relationships or patterns in the genotype space. Fitness variance, a measure of exploratory potential, remains consistently higher in the well-performing configurations (Configs 2–4) and correlates with lower misclassification rates per subject, indicating better generalization across signer variability. These results collectively reinforce the importance of carefully tuning CBC parameters, especially the clearing radius, niche capacity, and context window, to balance exploration and exploitation, leading to improved classification performance and robustness in subject-independent SLR models.

To further extend the discussion (see Table 13), the clearing radius (σ), often used interchangeably with δ in the literature, determines the spatial or contextual closeness within which individuals are compared. Smaller values of σ enforce stricter diversity by removing individuals even with subtle similarities, leading to greater exploration but potentially disrupting convergence by eliminating structurally similar yet semantically different solutions. In contrast, larger σ values relax similarity constraints, improving convergence but risking redundancy. The niche capacity controls how many individuals can be retained in a given similarity niche before excess ones are cleared. A capacity of one (elitist clearing) preserves only the fittest individual per niche, increasing selection pressure but possibly discarding diverse variants. Allowing a capacity greater than one introduces more variation in the retained individuals, which can improve robustness and help classification models avoid local optima. However, this must be calibrated to prevent bloating the population with similar solutions.

The distance metric, whether cosine similarity, Euclidean distance, or KL-divergence, determines how behavioral or contextual similarity is quantified. Cosine similarity is advantageous when comparing normalized gesture phase transition vectors, while Euclidean distance may be better suited to spatial or geometric features. KL-divergence adds asymmetry and sensitivity to distributional differences, which could be useful in probabilistic transition models but requires careful smoothing to avoid numerical instability when probabilities approach zero. Lastly, the context window size w, the temporal or feature span over which behavior is evaluated, affects the granularity of similarity comparisons. A smaller w captures short-term transitions and can lead to overly local clearing decisions, whereas a larger w emphasizes broader behavioral patterns but risks blurring fine distinctions between gesture phases. Tuning these parameters is therefore critical for adapting CBC to specific application needs, ensuring that the evolutionary process avoids premature convergence while still driving toward high-fitness, contextually diverse solutions.
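The candidate metrics discussed above can be written in a few lines each. The sketch below assumes that a context vector is formed by concatenating the last w frame-level feature vectors. It applies additive smoothing before computing KL-divergence so that zero probabilities do not produce infinite values. The function names, the smoothing constant `eps`, and the zero-vector convention in `cosine_distance` are assumptions. Euclidean distance is available directly as `math.dist`.

```python
import math
from typing import List, Sequence


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """1 - cosine similarity: sensitive to direction, invariant to scale."""
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0:
        return 1.0  # convention for zero vectors (assumption)
    return 1.0 - sum(a * b for a, b in zip(u, v)) / norm


def manhattan_distance(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(abs(a - b) for a, b in zip(u, v))


def smoothed_kl(p: Sequence[float], q: Sequence[float], eps: float = 1e-6) -> float:
    """KL(p || q) after additive smoothing and renormalization (asymmetric)."""
    ps = [x + eps for x in p]
    qs = [x + eps for x in q]
    sp, sq = sum(ps), sum(qs)
    return sum((a / sp) * math.log((a / sp) / (b / sq)) for a, b in zip(ps, qs))


def context_vector(frames: List[List[float]], w: int) -> List[float]:
    """Concatenate the last w frame-level feature vectors (context window)."""
    return [x for frame in frames[-w:] for x in frame]
```

Note that `smoothed_kl(p, q)` and `smoothed_kl(q, p)` generally differ. A clearing step built on KL therefore has to fix the comparison direction, for example by always measuring from the niche winner to the candidate.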

The objective of Experiment 8 is to statistically validate whether the proposed CBC-enhanced NGA-Markov Chain (NGA-MC) model generalizes significantly better across subjects than baseline models, confirming its robustness and subject-independence. The experimental setup implements two baselines, a traditional Markov Chain (without NGA/CBC) and a deep learning model (BiLSTM), followed by repeated Leave-One-Subject-Out (LOSO) cross-validation over 10 runs to obtain a distribution of accuracy and generalization error for each model. Paired t-tests were used to compute the p-values comparing the proposed model with each baseline (MC and BiLSTM). In addition, Cohen’s d, a standardized effect size, quantifies the magnitude of the difference between two models’ performances relative to their pooled standard deviation. It helps determine whether a statistically significant result (such as a low p-value) is also practically meaningful.
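Both statistics can be computed from the per-run accuracies of two models. The standard-library sketch below returns the paired t statistic and Cohen’s d with the pooled standard deviation, as described above. The p-value additionally requires the t distribution. If SciPy is available, `scipy.stats.ttest_rel(a, b)` returns both the statistic and the p-value. The function names are hypothetical, and for paired designs some authors instead divide by the standard deviation of the differences (often written d_z).

```python
import math
import statistics
from typing import Sequence


def paired_t_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """t = mean(d) / (sd(d) / sqrt(n)) for paired differences d = a - b."""
    diffs = [x - y for x, y in zip(a, b)]
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(len(diffs)))


def cohens_d_pooled(a: Sequence[float], b: Sequence[float]) -> float:
    """(mean(a) - mean(b)) / pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var)
```

Under the usual rule of thumb, values of d around 0.8 and above are considered large. This is the scale against which the reported 2.79 and 2.08 should be read.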

As shown in Table 14, the proposed NGA-MC model achieves the highest mean accuracy (95.4%) with the lowest generalization error (2.4%) and standard deviation (1.02), indicating not only superior performance but also strong consistency across subjects. In contrast, the traditional Markov Chain model without NGA or CBC enhancements shows a significantly lower mean accuracy of 89.7% and a higher generalization error (4.8%), suggesting that the absence of contextual and adaptive mechanisms reduces its ability to generalize. The BiLSTM model, while better than the basic MC, reaches only 91.3% mean accuracy with a 3.9% generalization error. These results indicate that, although the BiLSTM baseline improves on the basic statistical model, it still lags behind the proposed hybrid approach, especially when subject variation is high.

Statistical tests support the observed performance differences. The p-values for the comparisons of the proposed model with the traditional MC and with BiLSTM (0.013) are both statistically significant (p < 0.05), supporting the hypothesis that the NGA-MC model achieves meaningful performance improvements. Furthermore, the Cohen’s d values (2.79 and 2.08) indicate very large effect sizes, showing that the enhancements introduced by CBC and NGA improve mean performance by a substantial margin. The low standard deviation of the proposed model also suggests stable behavior across LOSO runs and subjects, which is crucial for subject-independent tasks such as sign language recognition. Overall, the statistical and empirical evidence indicates that the proposed model generalizes more effectively across unseen subjects, making it a strong candidate for real-world deployment.

We conduct a comprehensive sensitivity analysis focusing on the CBC threshold δ and the weight factors α, β, and γ used in the fitness function. Both sets of parameters play a pivotal role in the evolutionary optimization process: δ governs diversity control through Context-Based Clearing (CBC), while α, β, and γ define the trade-off between accuracy, uncertainty (entropy), and latency in the fitness evaluation. The objective of this ablation experiment is to quantify the individual and joint impact of δ and the fitness weights on model performance by systematically varying each parameter while holding the others constant. This also helps identify optimal parameter ranges and demonstrates the robustness and stability of the proposed framework across configurations. The experiment runs the full NGA-CBC optimization on the same SLR benchmark dataset under multiple settings: (i) varying δ over a range of threshold values, and (ii) exploring different weight combinations via grid search over α, β, and γ, with the constraint α + β + γ = 1 for fair comparison. For each configuration, the model undergoes 10-fold LOSO cross-validation, and performance is evaluated using three primary metrics: mean accuracy, average entropy across transition matrices, and average latency in frames per recognized gesture. Cohen’s d and ANOVA can additionally be applied to assess whether performance differences across δ and weight configurations are statistically significant. The expected outcome is the identification of a stable operating region in which the model maintains high accuracy, low uncertainty, and low latency, thus validating the chosen parameter values and reinforcing the robustness of the proposed method.
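The exact form of the fitness function is not reproduced in this section. The sketch below assumes a common linear form that rewards accuracy and penalizes entropy and latency, with all three inputs pre-normalized to [0, 1]. It also enumerates every weight triple on a 0.1 grid that satisfies α + β + γ = 1. Both functions are hypothetical illustrations of the grid search, not the paper’s definition.

```python
from typing import List, Tuple


def weighted_fitness(accuracy: float, entropy: float, latency: float,
                     alpha: float, beta: float, gamma: float) -> float:
    """Hypothetical linear fitness: reward accuracy, penalize entropy and
    latency. All three inputs are assumed to be pre-normalized to [0, 1]."""
    return alpha * accuracy - beta * entropy - gamma * latency


def weight_grid(step: float = 0.1) -> List[Tuple[float, float, float]]:
    """All (alpha, beta, gamma) on a grid of `step` with alpha + beta + gamma = 1."""
    n = round(1 / step)
    return [(round(i * step, 10), round(j * step, 10), round((n - i - j) * step, 10))
            for i in range(n + 1) for j in range(n + 1 - i)]
```

With a step of 0.1, the simplex contains 66 weight triples. These include the balanced setting (0.8, 0.1, 0.1) and the accuracy-only setting (1.0, 0.0, 0.0) examined below.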

From Table 15, we observe that the highest mean accuracy (95.4%) occurs at δ = 0.20, indicating that this threshold offers the best balance between diversity and convergence. At the two smaller thresholds tested, the model accuracy is lower (92.1% and 93.5%, respectively), suggesting over-clearing: too many individuals are penalized, which restricts genetic diversity and leads to suboptimal transition matrices. At the higher thresholds, accuracy drops slightly again, possibly due to under-clearing, where redundant or similar individuals are retained, leading to convergence toward locally optimal but non-diverse solutions. Thus, δ = 0.20 appears to be a sweet spot, maximizing recognition accuracy while preserving meaningful variation across the population.

Entropy values represent the uncertainty in the transition matrices. At δ = 0.20, the lowest entropy (0.97) is achieved, indicating crisper and more confident gesture transitions. In contrast, a lower and a higher threshold yield higher entropies (1.31 and 1.22, respectively), suggesting that the resulting transition probabilities are more uniform and less decisive, likely due to limited diversity at the lower threshold or excessive similarity among individuals at the higher one. This supports the interpretation that δ = 0.20 enables the algorithm to evolve models that predict state transitions confidently, reducing uncertainty and improving recognition robustness.
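The entropy of a transition matrix is usually taken row by row, since each row is a probability distribution over next states. The sketch below averages the Shannon entropy of the rows. Whether the reported values use nats or bits, and whether rows are weighted (for example by a stationary distribution), are not stated, so the unweighted mean and the `base` parameter are assumptions.

```python
import math
from typing import List


def mean_row_entropy(P: List[List[float]], base: float = math.e) -> float:
    """Average Shannon entropy of the rows of a row-stochastic matrix.
    Zero-probability entries contribute nothing (0 * log 0 := 0)."""
    def row_entropy(row: List[float]) -> float:
        return -sum(p * math.log(p, base) for p in row if p > 0)
    return sum(row_entropy(r) for r in P) / len(P)
```

A deterministic matrix (one entry of 1 per row) scores 0. A uniform matrix over n states scores log n, the maximum, which corresponds to the "uniform and less decisive" transitions described above.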

Latency is a practical measure of how fast the system recognizes a gesture. At δ = 0.20, the lowest latency (11.2 frames) is recorded, indicating that the model reaches correct decisions quickly and reliably. Two other thresholds exhibit increased latencies (14.8 and 14.5 frames), suggesting slower convergence to correct predictions due to unstable or uncertain transition dynamics. The effect sizes (Cohen’s d) further emphasize the advantage of δ = 0.20: values above 2.0 (e.g., 2.17) reflect very large differences in performance, supporting the statistical and practical superiority of this setting. Overall, the results identify δ = 0.20 as the optimal clearing threshold, balancing accuracy, confidence, and speed, which are critical qualities for real-time, subject-independent SLR systems.

Table 16 explores the impact of different fitness weight configurations, specifically the trade-off between accuracy (α), entropy (β), and latency (γ), on the performance of the NGA-Markov Chain model, with the CBC threshold fixed at its optimal value (δ = 0.20). The best-performing configuration is α = 0.8, β = 0.1, γ = 0.1, which achieves the highest mean accuracy (95.4%), the lowest entropy (0.97), and one of the lowest latencies (11.2 frames). This indicates a well-balanced optimization in which the model not only recognizes gestures with high precision but also produces confident transitions (low entropy) and timely recognition (low latency). The result suggests that equal, modest emphasis on reducing uncertainty and recognition time, combined with a strong prioritization of accuracy, yields the best generalization among the tested configurations for real-world, subject-independent SLR.

In contrast, the configurations with a lower accuracy weight (α = 0.6) show a noticeable drop in performance, with accuracies as low as 92.9% and entropies above 1.1. For instance, the configuration (α = 0.6, β = 0.3, γ = 0.1) places more emphasis on entropy reduction but sacrifices recognition performance and speed. Similarly, while (α = 0.6, γ = 0.3) reduces latency to 11.6 frames, it does so at the cost of reduced accuracy (94.2%) and increased uncertainty (entropy = 1.16). These observations suggest that over-penalizing latency or uncertainty can lead to an imbalance, causing the model to rush predictions or produce less discriminative transitions, which in turn reduces generalization capability.

Even when only accuracy is optimized (α = 1.0, β = γ = 0.0), the model does not outperform the balanced configuration. Although it reaches a high accuracy of 95.0%, it also yields the highest entropy (1.22) and a longer latency (12.9 frames), indicating less decisive transitions and slower responsiveness. This supports the interpretation that optimizing purely for accuracy can lead to overfitting or indecisive transitions, making the model less robust to dynamic or noisy gesture input. The Cohen’s d values, all above 0.6 for the non-optimal settings, indicate medium-to-large effect sizes relative to the balanced configuration. Therefore, the analysis indicates that a balanced fitness function, which favors accuracy while lightly penalizing entropy and latency, is essential for achieving both high performance and generalizability in real-time gesture recognition systems.

In SLR, the reward function plays a pivotal role in shaping the learning behavior of an agent—especially within reinforcement learning (RL)-based or decision-making frameworks—by quantifying the value of specific actions taken in various states of gesture processing. The reward function defines how desirable a particular state-action transition is, based on how well it aligns with the system’s goals: fast and accurate recognition. In a real-time SLR scenario, the system is continuously observing a stream of frames and must decide whether to wait, segment, or classify the current gesture. A high reward is assigned when the system correctly classifies a gesture using the minimal number of frames, indicating high confidence and low latency. This encourages the model to be decisive yet accurate. Conversely, negative rewards (penalties) are given for mistakes such as misclassification, early decisions (premature segmentation), or excessive delay (prolonged waiting), which can degrade user experience and model reliability.

The design of this reward function directly impacts how the model learns to balance exploration and exploitation, especially under uncertainty and in subject-independent SLR tasks. From a practical standpoint, the literature suggests that reward shaping—combining immediate feedback (e.g., per-frame classification confidence) with delayed feedback (e.g., full gesture accuracy)—is crucial to guide learning effectively. One widely adopted formulation involves assigning: (1) a +1 reward for correct classification with low frame count, (2) a −1 penalty for incorrect predictions, and (3) small negative penalties (e.g., −0.01) for each frame spent in a waiting state to discourage excessive latency. Some approaches also include adaptive or probabilistic rewards, which reflect the classifier’s prediction uncertainty or context-dependent features, improving generalization across users and gestures. Overall, the best-performing reward functions in SLR literature are those that are context-aware, penalize inefficiency and errors, and reinforce minimal yet confident decisions, helping to ensure robust real-time performance with high interpretability and efficiency.
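To make the formulation above concrete, the sketch below computes the undiscounted return of one episode. In that episode, the agent waits for a number of frames and then classifies once, using the +1/−1 terminal rewards and the −0.01 per-frame waiting penalty quoted in the text. The function name and the single-classification episode structure are illustrative assumptions.

```python
def episode_return(n_wait_frames: int, correct: bool,
                   wait_penalty: float = 0.01) -> float:
    """Undiscounted return of an episode made of `n_wait_frames` 'wait' actions
    (each costing `wait_penalty`) followed by one classification that earns
    +1 if correct and -1 otherwise."""
    terminal = 1.0 if correct else -1.0
    return terminal - wait_penalty * n_wait_frames
```

A correct decision after 10 frames earns roughly 0.9, while the same decision after 30 frames earns roughly 0.7. The waiting penalty therefore rewards early but correct decisions without ever making a wrong answer preferable to a slow correct one, as long as the episode stays under 200 frames.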

To empirically validate the effectiveness of a context-aware reward function within an MDP framework for real-time SLR, an experiment can be designed that restricts reward computation strictly to Markovian transitions, i.e., rewards depend only on the current state, the action, and the resulting next state, not on the full history. The objective of this experiment is to evaluate how a Markov-based reward function that balances gesture accuracy, decision timing, and temporal efficiency affects performance under the constraints of memoryless transitions. Two reward schemes are compared: (1) a Markov baseline reward model assigning +1 for correct classification, −1 for incorrect classification, and 0 otherwise, and (2) a Markov-constrained context-aware reward, which incorporates a penalty for frame-based waiting, bonuses for confident transitions, and penalties for early or late segmentation, all defined per (state, action, next state) transition only.
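Both schemes can be written as functions of a single transition, which is what keeps them Markovian. The sketch assumes that the quantities the reward needs (whether a classification is correct, the classifier’s confidence, and the offset between a segmentation and the true boundary) are observable from (s, a, s′). The bonus of 0.2, the confidence threshold of 0.9, the segmentation penalty of 0.05 per frame of offset, and all names are hypothetical placeholders, since these values are not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transition:
    """Quantities assumed to be derivable from (state, action, next_state)."""
    action: str           # "wait", "segment" or "classify"
    correct: bool         # is the classification correct? (used for "classify")
    confidence: float     # classifier confidence in the current state
    boundary_offset: int  # frames between a segmentation and the true boundary


def baseline_reward(t: Transition) -> float:
    """Scheme (1): +1 / -1 for classification, 0 for every other action."""
    if t.action != "classify":
        return 0.0
    return 1.0 if t.correct else -1.0


def context_aware_reward(t: Transition, wait_penalty: float = 0.01,
                         conf_bonus: float = 0.2, conf_threshold: float = 0.9,
                         seg_penalty: float = 0.05) -> float:
    """Scheme (2): baseline plus per-transition shaping terms."""
    r = baseline_reward(t)
    if t.action == "wait":
        r -= wait_penalty  # discourage excessive latency
    elif t.action == "segment":
        r -= seg_penalty * abs(t.boundary_offset)  # early or late segmentation
    elif t.action == "classify" and t.correct and t.confidence >= conf_threshold:
        r += conf_bonus  # reward confident, correct decisions
    return r
```

Because every term reads only fields of the current transition, no episode history is needed. This matches the memoryless constraint of the experiment.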

The experimental configuration follows an MDP formulation where the environment is a real-time gesture stream, and states represent frame-level encoded gesture embeddings (features), actions are discrete (wait, segment, and classify), and transitions follow probabilistically from action policies. The dataset will be partitioned in a subject-independent manner, with no signer overlap between training and testing to assess generalization. Evaluation will focus on the following Markov-anchored metrics: (1) State-level Classification Accuracy, which evaluates recognition correctness per decision point; (2) Mean Decision Latency, calculated as the average number of frames used before classification; and (3) State-Transition Consistency Score, measuring alignment between predicted transitions and true gesture boundaries. Additionally, the Area under the Reward Curve (AURC) across episodes quantifies learning efficiency.
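Mean Decision Latency and AURC follow directly from their descriptions. The Transition Consistency Score needs a matching rule. The sketch below assumes that a true boundary counts as matched when an unused predicted boundary lies within a tolerance of a few frames, and that AURC is the trapezoidal area under the per-episode reward curve with unit spacing. The tolerance, any normalization of AURC, and the function names are assumptions.

```python
from typing import List, Sequence


def mean_decision_latency(frames_before_decision: Sequence[int]) -> float:
    """Average number of frames observed before each classification."""
    return sum(frames_before_decision) / len(frames_before_decision)


def transition_consistency(pred_boundaries: Sequence[int],
                           true_boundaries: Sequence[int],
                           tolerance: int = 2) -> float:
    """Fraction of true boundaries matched by a predicted boundary within
    `tolerance` frames; each prediction can be matched at most once."""
    if not true_boundaries:
        return 1.0
    unused: List[int] = sorted(pred_boundaries)
    matched = 0
    for b in sorted(true_boundaries):
        hit = next((p for p in unused if abs(p - b) <= tolerance), None)
        if hit is not None:
            unused.remove(hit)
            matched += 1
    return matched / len(true_boundaries)


def aurc(episode_rewards: Sequence[float]) -> float:
    """Area under the per-episode reward curve (trapezoidal rule, unit spacing)."""
    return sum((a + b) / 2 for a, b in zip(episode_rewards, episode_rewards[1:]))
```

Greedy one-to-one matching prevents a single predicted boundary from being credited to several true boundaries. A stricter variant would use optimal bipartite matching.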

Table 17 presents a comparative analysis of two reward schemes, the Baseline Markov Reward and the Context-Aware Markov Reward, for real-time SLR using an MDP framework. Each metric is reported as mean ± standard deviation, where “±” denotes variability across multiple runs or experimental folds. For instance, a state-level accuracy of 81.3 ± 1.2 means that the average accuracy was 81.3% with a standard deviation of 1.2 percentage points, indicating that the result is relatively stable across repetitions. A smaller standard deviation reflects more consistent performance, while a larger one indicates more fluctuation in outcomes. This measure of dispersion is crucial for validating the robustness and reliability of the reward scheme under different conditions, such as random seed variations or cross-validation folds.

Looking at the individual metrics, State-Level Accuracy measures the model’s correctness at the decision-making level, that is, how accurately the RL agent classifies gestures at the right moments. The context-aware reward substantially improves this metric (88.7% vs. 81.3%), showing that integrating penalties for delays and bonuses for confident, timely classification helps the agent learn a more effective policy. Higher accuracy translates directly into improved recognition reliability in real-world applications, which is essential in domains such as assistive communication or human–computer interaction, where misclassification could lead to confusion or system failure.

The Mean Decision Latency metric assesses how quickly the agent makes a classification decision after observing a gesture. Lower values are desirable in real-time systems, as they indicate faster response times. The context-aware agent shows improved latency (10.2 frames vs. 14.8 frames), meaning it is able to make confident decisions earlier, which enhances system responsiveness. Similarly, the Transition Consistency Score—which measures how well the predicted gesture boundaries align with ground truth—is higher for the context-aware reward (82.4% vs. 73.5%), indicating better temporal modeling of gesture transitions. Finally, the AURC reflects how effectively the agent accumulates reward per episode during training. A higher AURC (9.3 vs. 6.8) indicates more efficient learning and better policy convergence. Overall, the context-aware Markov reward not only boosts classification performance but also enables faster and temporally accurate decision-making, making it highly suitable for real-time SLR systems.

To address concerns regarding the computational efficiency of the proposed MC-NGA model with CBC, we designed an experiment to assess its runtime performance, memory usage, and scalability, using a resource-constrained environment for realistic evaluation. Specifically, the model was tested on a Dell Inspiron N5110 laptop without any GPU acceleration. The evaluation focused on the Arabic Sign Language (ArASL) dataset, which is well-suited for subject-independent testing due to its comprehensive structure—comprising over 1000 distinct gesture classes, each contributed by 50 different signers, with 10 samples per gesture per signer, thereby capturing a wide range of signer variability and real-world conditions such as changes in hand orientation, background clutter, and lighting. The objective of the experiment is to measure the training time per epoch, average inference time per gesture sequence, and peak memory usage under these hardware constraints. Metrics such as total execution time, CPU utilization, and memory footprint were collected using system monitoring tools.
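For readers reproducing such measurements in Python, per-sequence inference time and peak memory can be collected with the standard library alone. The sketch below times a caller-supplied `predict` function with `time.perf_counter` and measures peak traced allocations with `tracemalloc` in a separate pass, because tracing slows execution. `predict` and both helper names are hypothetical. Note that `tracemalloc` only sees allocations made through Python’s allocator. Process-level figures such as resident memory and CPU utilization require OS tools or a package such as `psutil`.

```python
import time
import tracemalloc
from typing import Any, Callable, Sequence


def mean_latency_ms(predict: Callable[[Any], Any], sequences: Sequence[Any]) -> float:
    """Average wall-clock inference time per gesture sequence, in milliseconds."""
    start = time.perf_counter()
    for seq in sequences:
        predict(seq)
    return 1000.0 * (time.perf_counter() - start) / len(sequences)


def peak_memory_mib(fn: Callable[..., Any], *args: Any) -> float:
    """Peak memory traced by tracemalloc while running fn(*args), in MiB."""
    tracemalloc.start()
    try:
        fn(*args)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak / 2**20
```

Keeping timing and memory tracing in separate passes avoids inflating the latency figures with tracing overhead. This matters when the target is a sub-60 ms budget.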

The results in Table 18 are consistent with the superior performance of the MC-NGA model reported in the benchmark comparison. As the number of signers and the gesture complexity increase, the model maintains high overall accuracy and subject-wise generalization. At full scale (50 signers, 1000+ classes), it reaches 96.0% overall accuracy and 92.7% subject-wise accuracy, outperforming all other models in the previous comparison table. Despite the increased data complexity, inference time remains under 60 ms, confirming the model’s real-time responsiveness even on a laptop without a GPU, which is critical for practical SLR systems deployed in low-resource environments.

The MC-NGA model shows linear, manageable increases in training time, memory usage, and CPU load as the dataset scales. Training time per epoch rises from 38 to 398 s, while peak memory usage stays under 1.7 GB and CPU usage remains below 90%, ensuring operational feasibility. This efficiency is largely due to the compact Markov Chain structure and to the evolutionary optimization guided by Context-Based Clearing, which avoids the parameter bloat typical of deep learning architectures such as Transformers or CNN-LSTM. The model’s evolutionary convergence also remains stable, with only a moderate increase in the number of epochs required as signer variability grows, indicating reliable scalability.

While deep learning models such as Transformers and GCNs offer strong sequence modeling capabilities, they often require large memory and GPU support to operate effectively and can struggle with inter-subject variability because they overfit to signer-specific features. In contrast, MC-NGA explicitly preserves population diversity through CBC, allowing it to model multiple behavioral patterns in parallel without converging prematurely. This helps the model generalize to unseen signers and accounts for its superior subject-wise accuracy. The results confirm that MC-NGA is not only a high-performing solution but also a scalable, efficient, and real-time-capable approach to subject-independent sign language recognition.

4.1. Real-Time Performance

To assess the practical viability of the proposed NGA + CBC model in real-world environments, this section evaluates its real-time performance across three key dimensions: runtime efficiency, computational costs, and scalability.


Runtime Efficiency

The proposed NGA + CBC model achieves notable runtime efficiency because its inference structure is lightweight, particularly during the testing phase. Although the evolutionary optimization (i.e., the niching genetic search) is computationally intensive, it is performed offline during training and does not affect the online performance of the deployed model. Once trained, the Markov Chain component recognizes gesture sequences using precomputed transition probabilities and phase dynamics, enabling real-time decisions with minimal computational overhead. Furthermore, MediaPipe extracts hand landmarks in real time, processing the input stream at over 30 FPS on standard CPUs and thereby supporting low-latency, frame-wise gesture interpretation. This makes the model suitable for real-time applications such as human–computer interaction or assistive technologies, where fast and accurate feedback is essential.
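The online step described above can be sketched as follows, assuming that each frame has already been mapped to one of a small set of discrete pose states (for instance, quantised MediaPipe landmark configurations) and that each gesture class has a precomputed initial distribution and transition matrix. The class name, the confidence threshold and the early-stopping rule are illustrative assumptions rather than the authors' implementation; the point is that each new frame costs one table lookup and one addition per class.

```python
import math
from typing import Dict, Iterable, List, Optional, Tuple

Matrix = List[List[float]]


class StreamingMarkovClassifier:
    """Hypothetical frame-wise scorer over precomputed per-class Markov chains."""

    def __init__(self, init: Dict[str, List[float]], trans: Dict[str, Matrix],
                 threshold: float = 0.9, eps: float = 1e-9) -> None:
        # Log-probabilities are computed once, offline; the online loop only adds.
        self.log_init = {c: [math.log(p + eps) for p in v] for c, v in init.items()}
        self.log_trans = {c: [[math.log(p + eps) for p in row] for row in m]
                          for c, m in trans.items()}
        self.threshold = threshold

    def classify(self, states: Iterable[int]) -> Tuple[Optional[str], int]:
        """Return (label, frames_used), stopping as soon as the posterior of the
        leading class (under a uniform class prior) reaches `threshold`."""
        scores: Dict[str, float] = {c: 0.0 for c in self.log_init}
        prev: Optional[int] = None
        frames = 0
        for s in states:
            frames += 1
            for c in scores:
                # One lookup per class per frame: O(#classes) online cost.
                if prev is None:
                    scores[c] += self.log_init[c][s]
                else:
                    scores[c] += self.log_trans[c][prev][s]
            prev = s
            best = max(scores, key=scores.get)
            # Softmax posterior of the leader: 1 / sum_c exp(score_c - score_best).
            z = sum(math.exp(v - scores[best]) for v in scores.values())
            if 1.0 / z >= self.threshold:
                return best, frames
        if frames == 0:
            return None, 0
        return max(scores, key=scores.get), frames
```

Stopping once the posterior clears the threshold is one simple way to obtain decisions before the gesture ends, which is the behaviour that the Mean Decision Latency metric rewards.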


Computational Costs

The computational cost of the proposed approach is front-loaded during the training phase, where the NGA iteratively evolves gesture recognition strategies over multiple generations. However, the number of generations to convergence is notably lower in the proposed model (only 65 generations, compared to 150 in the baseline), significantly reducing training time and energy expenditure. The CBC mechanism further contributes to efficiency by pruning redundant individuals, thus reducing the number of candidates that need to be evaluated per generation. In deployment, the model relies on a compact Markov structure and lightweight transition calculations, requiring only matrix-vector multiplications and simple lookups, which are highly scalable and memory-efficient. This balance between offline optimization and efficient online inference allows the system to maintain competitive computational performance.
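The pruning role of clearing can be illustrated with the classic clearing procedure (Pétrowski), on the assumption that CBC follows the same radius-and-capacity scheme. How CBC measures "context" is not specified here, so the distance function is a pluggable assumption (Euclidean by default), and the function name is hypothetical. As in standard clearing, fitness values are assumed to be positive. The `radius` and `capacity` arguments correspond to the clearing radius and niche capacity mentioned in the limitations.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def clear_population(population: List[Vector], fitness: List[float],
                     radius: float, capacity: int = 1,
                     distance: Callable[[Vector, Vector], float] = math.dist,
                     ) -> List[Tuple[Vector, float]]:
    """Classic clearing: within each niche of the given radius, only the
    `capacity` fittest individuals keep their (positive) fitness; the rest are
    cleared and pruned. A context-aware metric could be passed as `distance`."""
    order = sorted(range(len(population)), key=lambda i: fitness[i], reverse=True)
    fit = list(fitness)
    for rank, i in enumerate(order):
        if fit[i] <= 0:
            continue  # already cleared, so it cannot act as a niche winner
        winners = 1  # individual i is the dominant member of its niche
        for j in order[rank + 1:]:
            if fit[j] > 0 and distance(population[i], population[j]) < radius:
                if winners < capacity:
                    winners += 1
                else:
                    fit[j] = 0.0  # cleared: redundant within this niche
    # Survivors, fittest first; cleared individuals are not evaluated further.
    return [(population[i], fit[i]) for i in order if fit[i] > 0]
```

With two tight clusters of two individuals each and `capacity=1`, only the best member of each cluster survives. A very small radius keeps everyone, and a very large radius collapses the population to a single winner, which illustrates why the radius must be tuned with care.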


Scalability

The architecture of the MC-NGA model is inherently scalable across datasets, user groups, and gesture vocabularies. The evolutionary framework allows the model to adapt to new gesture categories or expanded sign lexicons without retraining the entire system from scratch; instead, it can evolve and integrate new behaviors incrementally. Moreover, the use of Context-Based Clearing ensures that diverse gesture interpretations are preserved during optimization, which enhances the model’s scalability to heterogeneous populations (e.g., multilingual signers or varying cultural gesture sets). Experimentally, this is validated by its consistent performance across both the ArASL and LSA64 datasets. Additionally, because inference relies only on a compact transition model, the system can be deployed on resource-constrained devices, such as embedded platforms or mobile hardware, making it highly scalable for real-world assistive or wearable applications.
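The claim that new gesture categories can be added without retraining from scratch is easiest to see when each class owns its own Markov model. The sketch below assumes exactly that: per-class transition matrices over discrete pose states. Here each matrix is estimated by simple smoothed counting, a stand-in for the paper's evolutionary optimization. The function names, the class labels in the usage note and the smoothing constant `alpha` are all hypothetical.

```python
from typing import Dict, List, Sequence

Matrix = List[List[float]]


def fit_transition_matrix(sequences: Sequence[Sequence[int]], n_states: int,
                          alpha: float = 1.0) -> Matrix:
    """Row-normalised transition counts with add-alpha (Laplace) smoothing.
    alpha must be > 0 so that unseen states still get a valid row."""
    counts = [[alpha] * n_states for _ in range(n_states)]
    for seq in sequences:
        for prev, cur in zip(seq[:-1], seq[1:]):
            counts[prev][cur] += 1.0
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total for c in row])
    return matrix


def add_gesture_class(models: Dict[str, Matrix], label: str,
                      sequences: Sequence[Sequence[int]],
                      n_states: int) -> Dict[str, Matrix]:
    """Return a new model table with one extra class. Existing matrices are
    reused unchanged, so no previously learned class is re-estimated."""
    if label in models:
        raise ValueError(f"class {label!r} already exists")
    updated = dict(models)  # shallow copy keeps the original table intact
    updated[label] = fit_transition_matrix(sequences, n_states)
    return updated
```

For example, `add_gesture_class({"alif": m}, "baa", [[1, 1, 0]], n_states=2)` adds a second class while the `"alif"` matrix object is carried over untouched. The cost of extending the vocabulary therefore scales with the new class's data rather than with the whole lexicon.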

4.2. Limitations

While the proposed adaptive SLR framework integrating Markov Chains with an NGA shows significant advances in handling subject-independent variability, it is not without limitations. First, the computational complexity of the NGA-CBC optimization process can be substantial, particularly when evolving large populations over many generations, which may hinder real-time or embedded deployment. Second, although CBC enhances population diversity, its effectiveness depends heavily on carefully tuned parameters such as the clearing radius and niche capacity; improper settings can either cause excessive overlap between niches or fragment promising solutions. Third, the fixed structural assumptions of the Markov Chain may limit the model’s ability to capture more nuanced temporal dependencies that could be better modeled by more expressive frameworks such as deep recurrent or attention-based architectures. Additionally, the system may still be sensitive to noise in gesture segmentation or skeletal data extraction, especially in uncontrolled environments, which could degrade performance. Finally, although improved generalization is reported, extensive cross-dataset and real-world evaluations across varied demographic groups are needed to confirm the framework’s robustness and practical applicability beyond controlled experimental settings.

Moreover, the current framework lacks integration with cognitive computing principles, which could significantly enhance the system’s contextual understanding and adaptability. By incorporating cognitive computing components, such as knowledge graphs, memory networks, or context-aware decision mechanisms, the model could better interpret ambiguous or culturally nuanced signs and adapt to variations in gesture semantics. Cognitive computing could also support multi-modal reasoning by combining visual cues, historical interaction patterns, and environmental context, leading to more intelligent and human-like SLR systems. Integrating these elements would push the system beyond pattern recognition toward a deeper, more holistic comprehension of sign language communication [38,39].