1. Introduction

Although multimedia has been researched extensively in recent decades, little work has examined how it is perceived inside the human brain. In this paper, we explore this problem by presenting classified stimulus images so that subjects perceive the semantics they contain, recording the resulting brain activity as EEG sequences, and then testing whether those semantics can be recognized automatically via deep learning. We also provide an analysis of those EEG sequences.

Deep learning has been applied effectively to numerous multimedia tasks; successful examples include automatic speech recognition, image recognition, visual art processing, and natural language processing [1,2,3]. One objective that remains elusive is to apply deep learning to interpret and comprehend how multimedia is perceived inside human brains. A significant number of prior works concentrated on decoding informative patterns from brain activities to control machines through a brain–computer interface (BCI) [4,5,6,7], including some medical applications [8] such as epilepsy [9], Alzheimer’s disease, and others [10,11]. Brain activities are usually captured by recording the voltage fluctuations generated by neurons using electroencephalograms (EEGs) [12], or by brain imaging techniques such as magnetic resonance imaging (MRI) and functional MRI (fMRI), whose temporal and spatial resolutions have enabled computational methods to decode specific visual stimuli. This illustrates that brain signals contain helpful cues corresponding to human cognitive processes and can be utilized in many applications.

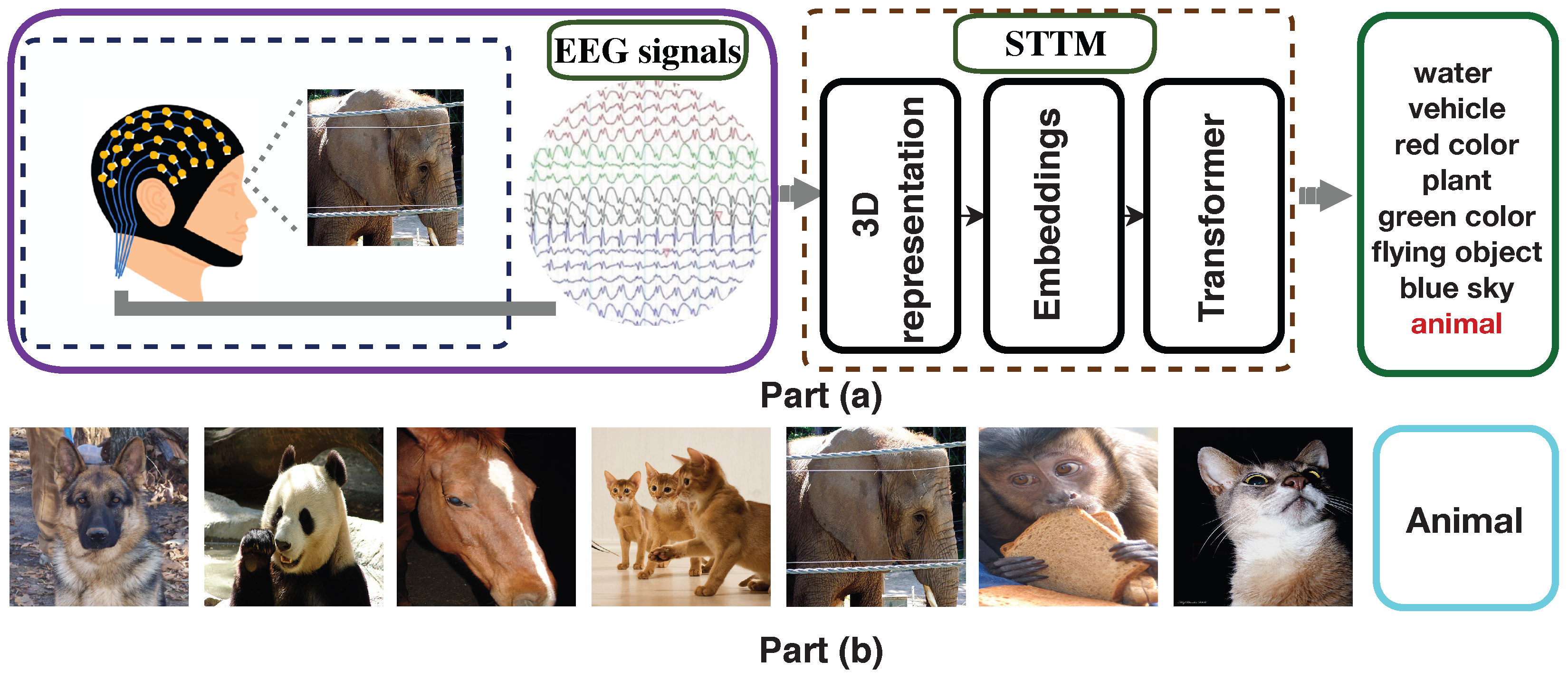

Figure 1 illustrates the overall concept of our proposed research. In part (a), the brain is stimulated by an image of an “elephant”, and the resulting activated EEG sequences are fed into our proposed DCT-ViT deep model. Using 3D representation, embeddings, and transformer attention on the EEG sequences, the model generates recognized semantics at the output. This output reflects the cognitive activities occurring in the human brain, corresponding to its responses to the stimulating image presented as input.

In the proposed model, we first construct a 2D mapping matrix based on the electrodes’ physical locations, in which adjacent electrodes are kept as neighbors. We then construct a 3D spatial–temporal representation based on that 2D mapping and the time index. Unlike the original transformer, which uses only the patch embedding and the position embedding as input, our method uses three embeddings: the patch embedding, the position embedding, and the 2D DCT embedding. They are summed together, and the resulting sequence of vectors is fed to a standard transformer network [13]. A discrete cosine transform (DCT), first proposed in 1972, represents a finite sequence of data points as a sum of cosine functions oscillating at different frequencies. This ability to separate and extract information from various frequency bands makes it a widely used technique in signal processing and data compression. Although it has been used for data compression and feature extraction in EEG-related tasks [14,15], almost all existing work kept EEG data in time-sequence form and used DCT to extract features from the time series. In our work, we construct the 2D mapping structure based on the electrodes’ physical locations and then use 2D DCT to obtain features on different frequency bands in each EEG patch. A rapid and obvious change between the electrodes within an EEG patch indicates a change in the degree of activation of the corresponding brain area; our method captures this information, computes it as an embedding, and inputs it into the transformer. Finally, the output of the transformer network is fed to a multilayer perceptron head for semantics recognition. Such embedded representations enable our proposed model to strengthen the attention level and hence achieve successful recognition results.

Part (b) of Figure 1 illustrates samples of the “animal” semantics. As seen, the recognized semantics “animal” includes “dog”, “cat”, “panda”, etc. As transformers [16,17] are being applied to the analysis of both text sequences and images, we are inspired to convert the EEGs into 3D representations, like image sequences, in order to preserve the temporal–spatial information. While EEGs can be viewed as a sequence of time-step signals, transformer neural networks do not work directly with signals but with points determined by coordinates, i.e., numbers in high-dimensional spaces. As transformer neural networks specialize in squeezing, stretching, and bending the input space so that similar data points get closer and are thus easy to discriminate from others, we exploit their capability of working with numbers to encode EEG signals into vectors and further order them so that the sequence structure of the EEGs is well preserved. Several neural architectures, such as LSTM [18], are capable of capturing order information intrinsically. While LSTM examines every time step of the input sequentially to capture its internal order information [19,20], the transformer operates on sets and considers everything in parallel, so it does not capture ordering information by itself. As a result, we need to add positional embeddings to the vectors so that the transformer can work out how its inputs are arranged; for images, for example, it must learn how the gray-scale values within a matrix of numbers should be interpreted, where a high value corresponds to high intensity in that region and a low value to a darker spot. Following our conversion of EEG sequences into 3D representations and 2D mapping matrices, transformers with added positional embeddings can be exploited to process EEGs, and significant efficiency can be achieved because their computation is carried out in parallel.

Designing a well-performing deep framework for brain-perceived semantics recognition is challenging. Compared with the existing semantics recognition tasks widely researched across multimedia and computer vision, our research problem has three distinguishing features: (i) there is a large degree of ambiguity among the EEG descriptions of semantics; (ii) the features describing semantics are weak; and (iii) representation learning is focused not directly upon the semantics inside images but upon the EEGs collected to record the subjects’ brain perceptions.

We summarize our contributions as follows: (i) we propose to use 2D DCT to capture the degree of activation of each EEG patch as an embedding and input it into the spatial–temporal transformer model (DCT-ViT). The proposed DCT-ViT exploits the interactions among the embeddings of different EEG patches to strengthen its attention level, enhance the input representation, measure the variability of elementary components for each individual patch, and hence achieve significantly improved recognition results; (ii) we introduce a 2D mapping that enables an effective 3D spatial–temporal representation, converting the temporal sequences into an image-like matrix and hence preserving both the spatial and temporal correlations across all electrodes, which enhances the proposed deep model’s capabilities in both representation and learning and leads to improved performance in recognizing brain-perceived semantics; (iii) we pioneer the application of a transformer encoder, in an adapted design, to the problem of brain-perceived semantics recognition; and (iv) we introduce a new dataset, Semantics-EEG, and carry out extensive experiments to validate the feasibility and the effectiveness of our proposed deep DCT-ViT model for the problem of brain-perceived semantics recognition.

The remainder of this paper is structured as follows. In Section 2, we describe relevant work that utilizes deep learning models for analyzing human brain activity. In Section 3, we detail our proposed transformer-based deep learning model for recognizing the semantics within EEG sequences. Section 4 presents our extensive experimental results and validates the effectiveness of our proposed DCT-ViT model. Finally, we conclude the paper in Section 5.

2. Related Work

Electroencephalography (EEG) measures brain oscillations, which reflect the synchronized activity of neurons. Researchers aim to analyze and understand how the human brain perceives, processes, and identifies the rich and colorful information present in the real world through EEG signals. Consequently, multimedia data, which contains substantial amounts of content information, is considered highly suitable for stimulating this analysis and is widely used in the collection and examination of EEG signals [21].

Researchers have sought to understand the content of multimedia data accessed by users by analyzing EEG data [22,23,24]. For instance, Wang et al. employed a hierarchical discriminant analysis method to identify the object of interest in EEG data. They then utilized an image feature-based pattern-mining algorithm to confirm image labels, which facilitated rapid image retrieval [22]. Meanwhile, Moon et al. implemented four classifiers (k-nearest neighbor, neural network, naive Bayes, and support vector machine) to recognize behaviors in videos through EEG data [23]. Recently, deep learning methods have gained prominence in analyzing multimedia content based on EEG data. Spampinato et al. used a long short-term memory (LSTM) network to derive representations of EEG data from image stimuli, establishing a mapping relationship between natural image features and EEG representations. This new representation was effectively applied to classify natural images based on EEG data [25]. Zheng et al. introduced the Swish activation function in LSTM to mitigate the vanishing gradient problem, while also applying ensemble learning techniques to enhance the model’s generalization performance [26]. Additionally, Zhong et al. highlighted the hemispheric lateralization in human brain cognition in their proposed model. They were the first to incorporate a channel-based attention mechanism into the image classification task using EEG data, achieving impressive classification results [27].

Recent studies have shown that it is possible to reconstruct multimedia content a user views based on EEG data. Kavasidis et al. introduced a technique for reconstructing visual stimulus information using EEG data [28]. They utilized a variational autoencoder and a generative adversarial network (GAN) to demonstrate that EEG data contain patterns associated with visual content, enabling the generation of semantically consistent images corresponding to the visual stimuli. Building on this work, Tirupattur et al. further demonstrated that GANs could visualize the content information in the human brain through EEG data. They expanded their study to three databases and significantly improved the accuracy of visualizations [29]. Jiao et al. continued to explore the use of GANs for visualization, but unlike previous studies, they employed ResNet101 to classify EEG data. The features obtained from this classification were then used as input for the generator [30]. Fares et al. took a different approach by using visual and EEG features as dual conditions for the generator, integrating lateralization information to enhance the visualization results [31].

Although these methods have been proposed to identify or reconstruct the content of multimedia data, they share a common limitation: they fail to capture the full richness of multimedia content. In their modeling processes, these methods assume that the multimedia content contains only one main object and simplify the content analysis task to focus solely on classifying this main object. However, the reality is that similar semantics can often include multiple objects from different categories.

Table 1 presents a comprehensive comparison of recent advances in EEG-based semantic decoding and visual perception analysis. The surveyed methods demonstrate a progressive evolution from traditional LSTM-based approaches [25] achieving around

accuracy, to more sophisticated architectures incorporating attention mechanisms [32], regional features [19], and ensemble learning [26]. Notably, while some approaches like Tirupattur et al. [29] achieve higher accuracy (82.9%), they require paired EEG–image data during inference, limiting their practical applicability. Recent transformer-based methods, particularly the EEG-Conformer [7], have pushed performance to 71.2% using complex convolutional–transformer hybrids. Our DCT-ViT approach achieves a state-of-the-art performance of 72.8% while maintaining architectural simplicity through efficient frequency–temporal fusion via DCT embeddings. This comparison reveals a critical gap in existing methods: the lack of efficient frequency domain analysis combined with temporal modeling, which our approach directly addresses. Furthermore, unlike multi-modal frameworks [33] or generation-focused methods [28,31], our method focuses on direct classification from single-modality EEG signals, offering a more practical solution for real-world BCI applications.

3. Methodology

Figure 2 illustrates the overall process of brain-perceived semantic recognition. The EEG signals from the subjects, which are stimulated by multimedia images, are transformed into a 3D spatial–temporal representation and input into the spatial–temporal transformer model (DCT-ViT). Our proposed model divides the 3D spatial–temporal representation into fixed-size patches and linearly embeds both the patches and the 2D DCT (discrete cosine transform) of each. In contrast to existing transformers, our design incorporates not only patch embedding and position embedding but also 2D DCT embedding. This addition provides extra information across relatively independent frequency bands. All the embeddings are summed together, and the resulting sequence of vectors is fed into a standard transformer network [13]. Ultimately, the output from the transformer network is processed by a multilayer perceptron head for semantic recognition.

The DCT can separate and extract information from different frequency bands in data, which makes it a widely utilized transformation technique in signal processing and data compression. While DCT has been applied in EEG-related tasks for data compression and feature extraction [14,15], most existing studies have retained the time-sequence form of EEG data, using DCT to extract features from these time series. In our work, we construct a 2D mapping structure based on the physical locations of the electrodes. The 2D DCT then calculates the features across various frequency bands for each EEG patch. As a result, the 2D DCT embedding shares the same structure and format as the other two embeddings commonly used in transformer networks: patch embedding and position embedding.

For EEG data, different frequency bands contain different information, and the 2D DCT embedding has the advantage of separating and extracting them. A rapid and noticeable change between electrodes in an EEG patch indicates that the degree of activation of the corresponding brain area is changing. Our method can effectively capture this kind of information, calculate it as an embedding, and then input it into the transformer together with the other embeddings. According to the ablation study in Section 4.3, the 2D DCT embedding also contributes the most among the three embeddings.

Although other orthogonal transformations can be used for EEG processing, 2D DCT is easier to calculate, has the same dimension as the image patch, and separates and extracts the information from different frequency bands. A 2D DCT data matrix in matrix

S can be written as follows:

by defining a matrix

, where

represents the matrix element in the

uth row and

vth column [

35].
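As an illustration of the matrix form described above, the following NumPy sketch builds a DCT basis matrix and applies it to the rows and columns of a patch. The names `dct_matrix`, `dct2`, and `C`, as well as the choice of the orthonormal DCT-II variant, are assumptions made for this example and are not confirmed by the text.

```python
import numpy as np

def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis matrix C of shape (n, n)."""
    u = np.arange(n).reshape(-1, 1)  # frequency (row) index
    v = np.arange(n).reshape(1, -1)  # sample (column) index
    C = np.sqrt(2.0 / n) * np.cos((2 * v + 1) * u * np.pi / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)       # the DC row uses a different scale
    return C

def dct2(S: np.ndarray) -> np.ndarray:
    """2D DCT of a matrix S, computed as C_h @ S @ C_w.T."""
    C_h = dct_matrix(S.shape[0])
    C_w = dct_matrix(S.shape[1])
    return C_h @ S @ C_w.T

# A flat patch has no spatial variation, so only the DC coefficient is non-zero.
patch = np.full((4, 4), 3.0)
coeffs = dct2(patch)
```

Because the basis matrix is orthonormal, the transform is invertible and preserves the energy of the patch; a patch whose electrodes differ sharply places more energy in the higher-index coefficients.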

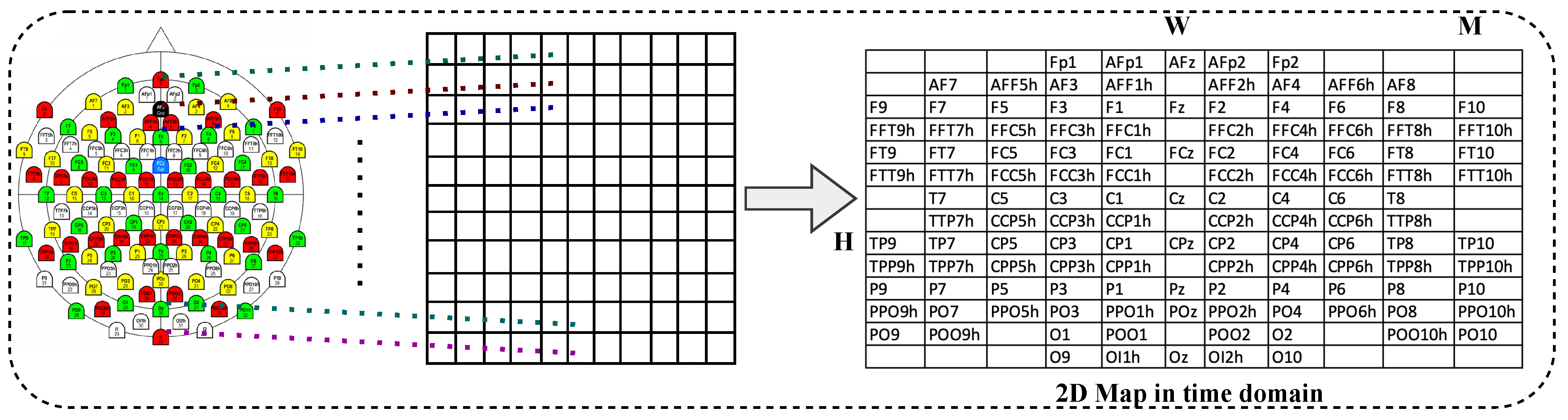

The raw EEG signals are represented as one-dimensional (1D) time series for a single EEG channel, or as a chain of 1D time series for multiple EEG channels. However, this limits the connections between different brain regions as each channel only has two adjacent electrodes at most. To address this limitation, we can transform the chain-like 1D EEG channel set into a two-dimensional (2D) mesh-like EEG signal representation by mapping the EEG recordings with the position of the EEG signal acquisition electrode. The size of the 2D mesh is chosen based on international standards for electrode placements. For our experiment, we use the 10–20 system to cover all EEG channels and map the electrode positions onto an

matrix. This method is commonly used in EEG-based analysis [36,37,38,39,40,41].

To construct the 3D spatial–temporal representation, we define

as an EEG signal sample that contains

T time steps, where

. Here,

E represents the number of electrodes, and

represents the EEG signals from all

E electrodes collected at time step

t (where

and

). As illustrated in

Figure 3, the vector

is transformed into a 2D temporal map

based on the physical locations of the electrodes, where

, and

H and

W denote the height and width of the 2D temporal map, respectively. Furthermore, the 3D spatial–temporal representation of the EEG signals, denoted as

, is constructed, where

and

.
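The construction above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: the function name `to_3d`, the lookup array `electrode_rc`, and the five-electrode 3 × 3 layout are hypothetical and do not reproduce the actual montage; mesh cells without an electrode are left at zero.

```python
import numpy as np

def to_3d(signal: np.ndarray, electrode_rc: np.ndarray, H: int, W: int) -> np.ndarray:
    """Map an (E, T) EEG sample onto an (H, W, T) spatial-temporal volume.

    electrode_rc[e] holds the (row, col) of electrode e on the 2D mesh.
    Mesh cells that have no electrode stay at zero.
    """
    E, T = signal.shape
    volume = np.zeros((H, W, T), dtype=signal.dtype)
    rows, cols = electrode_rc[:, 0], electrode_rc[:, 1]
    volume[rows, cols, :] = signal  # volume[:, :, t] is the 2D map at time step t
    return volume

# Hypothetical 5-electrode layout on a 3 x 3 mesh (not the real montage).
electrode_rc = np.array([[0, 1], [1, 0], [1, 1], [1, 2], [2, 1]])
signal = np.arange(5 * 4, dtype=float).reshape(5, 4)  # E = 5 electrodes, T = 4 steps
volume = to_3d(signal, electrode_rc, H=3, W=3)
```

Slicing `volume[:, :, t]` returns the 2D temporal map for time step `t`, in which physically adjacent electrodes occupy neighboring cells.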

To manage the 3D spatial–temporal representation, the proposed model first reshapes the representation

into a

where

Q is the height and width of the square matrix. Subsequently, the squared representation

is reshaped into a sequence of flattened 2D patches

, where

P denotes the height and width of each representation patch, and

denotes the number of patches, which also corresponds to the sequence length for the transformer network. Since the transformer utilizes a fixed latent vector size

D across all its layers, the patches and their 2D discrete cosine transform (DCT) are flattened and mapped to

D dimensions through a trainable linear projection, as shown in the following equation:

where

is the position embeddings,

is the patch embedding projection,

is the 2D DCT embedding projection, and

denotes the output embeddings.
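The embedding step can be sketched as below, starting from an already squared Q × Q representation. The sizes, the random stand-ins for the trainable projections (`W_patch`, `W_dct`, `E_pos`), the orthonormal DCT-II variant, and the absence of a class token are all assumptions made for illustration; the sketch only shows how the three embeddings are summed.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis matrix of shape (n, n)."""
    u = np.arange(n).reshape(-1, 1)
    v = np.arange(n).reshape(1, -1)
    C = np.sqrt(2.0 / n) * np.cos((2 * v + 1) * u * np.pi / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)
    return C

def extract_patches(X, P):
    """Split a (Q, Q) matrix into N = (Q // P) ** 2 patches of shape (P, P)."""
    g = X.shape[0] // P
    return X.reshape(g, P, g, P).transpose(0, 2, 1, 3).reshape(g * g, P, P)

def embed(X, P, W_patch, W_dct, E_pos):
    """Sum of patch, 2D DCT, and position embeddings; returns (N, D)."""
    patches = extract_patches(X, P)
    C = dct_matrix(P)
    dct_patches = C @ patches @ C.T          # 2D DCT applied to every patch
    n = patches.shape[0]
    flat = patches.reshape(n, -1)            # (N, P * P)
    flat_dct = dct_patches.reshape(n, -1)    # (N, P * P)
    return flat @ W_patch + flat_dct @ W_dct + E_pos

rng = np.random.default_rng(0)
Q, P, D = 12, 4, 16                          # illustrative sizes only
N = (Q // P) ** 2
X = rng.normal(size=(Q, Q))
W_patch = 0.02 * rng.normal(size=(P * P, D)) # stand-ins for trainable weights
W_dct = 0.02 * rng.normal(size=(P * P, D))
E_pos = 0.02 * rng.normal(size=(N, D))
z0 = embed(X, P, W_patch, W_dct, E_pos)      # one D-dimensional vector per patch
```

Because each patch and its 2D DCT have the same shape, both can be flattened and projected to the same latent size, which is what allows the three embeddings to be added element-wise.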

The 2D DCT embeddings are combined with the patch embeddings to maintain the variability of specific frequency bands within each patch. Additionally, position embeddings are incorporated to preserve positional information. Drawing inspiration from Dosovitskiy et al. [16], we employ standard learnable 1D position embeddings. The resulting sequence of embedding vectors, denoted as

, serves as the input to the transformer network. Dosovitskiy et al. [16] reported that a pure transformer model outperformed the state of the art in image recognition, a field that had been dominated for many years by convolutional neural networks (CNNs). Consequently, transformers [13], which had previously revolutionized natural language processing (NLP), are now being integrated into multi-modal architectures that combine vision and language [42,43]. Following various hybrid attempts that combined CNNs and transformers, the pure vision transformer is now emerging as a new state-of-the-art approach that surpasses the previous accomplishments of CNNs.

As illustrated in Figure 2, transformer networks [13] are composed of multiple layers that feature multi-headed self-attention (MHA) and MLP blocks. Drawing inspiration from the work of Wang et al. [44] and Baevski and Auli [45], layer normalization (LN) is applied before each block, and residual connections are incorporated after every block. The specifics of these implementations are defined as follows:

where

L is the number of transformer blocks or layers and

is the current block index. The addition operation preserves the residual connection, and

and

are the intermediate and final outputs of the block

l, respectively.
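Given the description above (LN applied before each block and a residual connection added after it), one layer can be written in the following pre-norm form. The symbols $z_{l-1}$, $z'_l$, and $z_l$ are assumed names for the layer input, the intermediate output, and the final output of block $l$; they follow common vision-transformer notation and are not taken from the text.

```latex
\begin{aligned}
z'_l &= \mathrm{MHA}\big(\mathrm{LN}(z_{l-1})\big) + z_{l-1}, \\
z_l  &= \mathrm{MLP}\big(\mathrm{LN}(z'_l)\big) + z'_l, \qquad l = 1, \dots, L.
\end{aligned}
```

In this form the skip path carries the unnormalized input of each block forward, so the additions implement the residual connections mentioned above.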

To facilitate interpretability analysis, we implement attention weight extraction at each transformer layer. The attention weights from layer l are preserved during forward passes, where represents the attention distribution across N patches. These weights enable post hoc analysis of which spatial–temporal regions contribute the most to semantic recognition.

Additionally, we compute gradient-based importance scores using

where

is the loss function and

represents input features at position

i. This allows us to identify critical EEG patterns for each semantic category.
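A gradient-based importance score of this kind can be sketched on a toy model as follows. The linear-softmax classifier, the function names, and the use of the absolute gradient as the score are assumptions chosen so that the gradient has a closed form; the actual model would obtain the gradient by backpropagation through the transformer.

```python
import numpy as np

def softmax(z):
    z = z - z.max()            # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def cross_entropy(W, x, label):
    """Loss of a linear-softmax classifier for one sample."""
    return -np.log(softmax(W @ x)[label])

def saliency(W, x, label):
    """|dL/dx_i| per input feature; here dL/dx = W^T (p - onehot(label))."""
    p = softmax(W @ x)
    p[label] -= 1.0
    return np.abs(W.T @ p)

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 6))    # hypothetical classifier: 3 classes, 6 input features
x = rng.normal(size=6)
scores = saliency(W, x, label=1)
```

Features with larger scores are those for which a small perturbation changes the loss the most, which is the sense in which they are considered important for the predicted semantic.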

4. Experiments

4.1. Dataset Construction and Experimental Settings

The Semantics-EEG dataset was developed to evaluate our DCT-ViT model and the concept of recognizing brain-perceived semantics. This dataset is derived from the ImageNet-EEG dataset [25], which comprises EEG signals from six subjects (one female and five male) wearing a 128-channel cap equipped with active, low-impedance electrodes (actiCAP, 128 channels; Brain Products GmbH, Gilching, Germany). The subjects were instructed to view visual stimuli selected from a subset of ImageNet (ILSVRC) [46], and the dataset consists of 40 classes, each containing 50 images. Each image was displayed for 500 milliseconds while the EEG signals were recorded. The EEG data were processed using a notch filter (49–51 Hz) and a second-order band-pass Butterworth filter, with a low cut-off frequency of 14 Hz and a high cut-off frequency of 71 Hz. This filtering captured only the Beta (15–31 Hz) and Gamma (32–70 Hz) rhythm bands, which are known to contain information about cognitive processes and perceptions [47].
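The filtering described above can be approximated with SciPy as in the sketch below. Several details are assumptions rather than facts from the text: the sampling rate `fs`, the realization of the notch as a second-order Butterworth band-stop, and the use of zero-phase filtering with `sosfiltfilt`, which applies each filter forward and backward and therefore squares its magnitude response.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def preprocess(eeg, fs):
    """Apply a 49-51 Hz band-stop (notch) and a 14-71 Hz second-order band-pass.

    eeg: array of shape (channels, samples); fs: sampling rate in Hz.
    """
    notch = butter(2, [49.0, 51.0], btype="bandstop", fs=fs, output="sos")
    band = butter(2, [14.0, 71.0], btype="bandpass", fs=fs, output="sos")
    out = sosfiltfilt(notch, eeg, axis=-1)
    return sosfiltfilt(band, out, axis=-1)

fs = 1000.0  # assumed sampling rate; not stated in the text
t = np.arange(0, 4.0, 1.0 / fs)
# Three test tones: below the pass band, inside it, and at the notch frequency.
tones = np.stack([np.sin(2 * np.pi * f * t) for f in (5.0, 30.0, 50.0)])
filtered = preprocess(tones, fs)
```

On these test tones, the 30 Hz component passes almost unchanged while the 5 Hz and 50 Hz components are strongly attenuated, which matches the stated goal of keeping only the Beta and Gamma bands.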

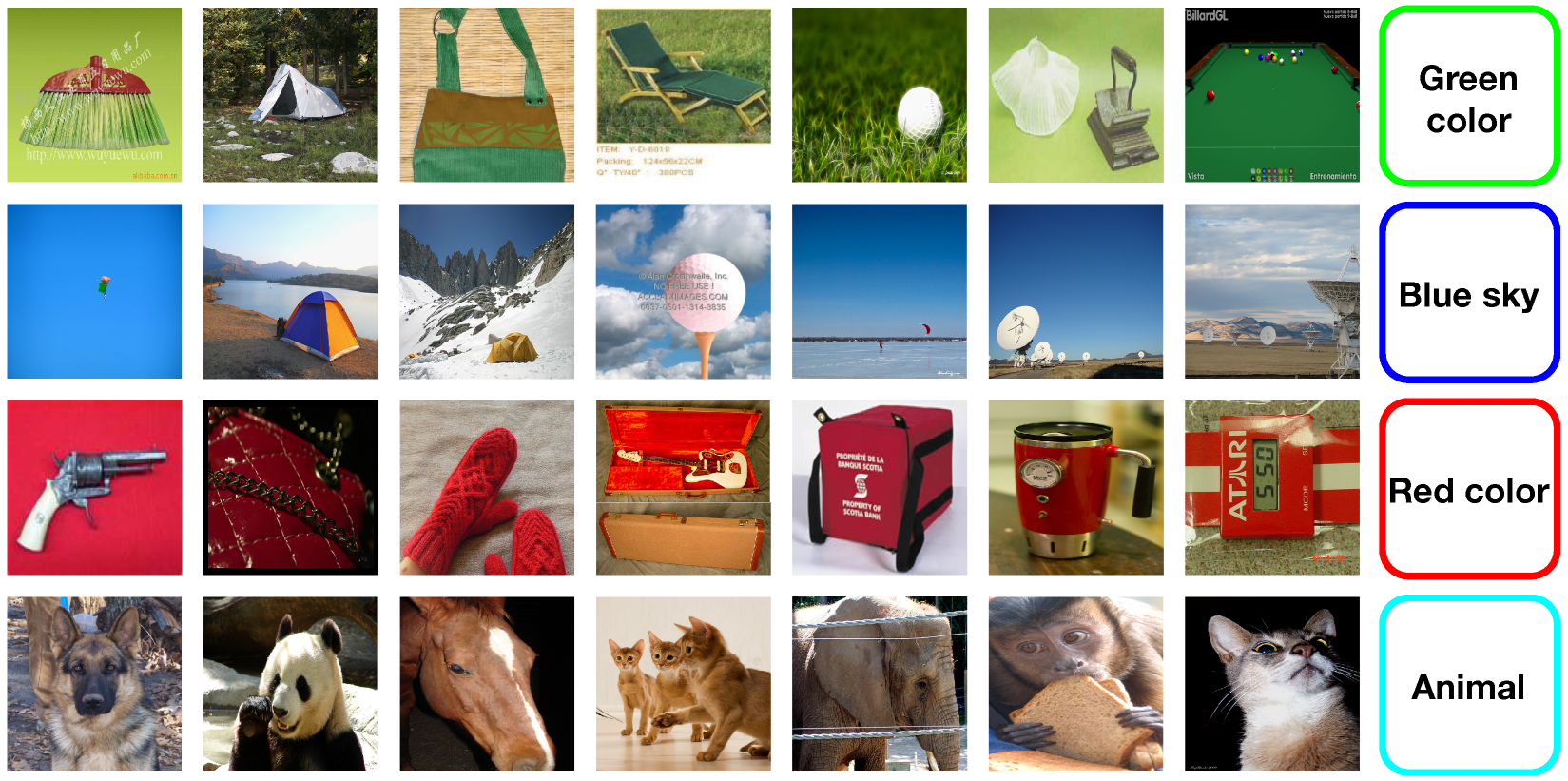

To construct the semantics recognition dataset Semantics-EEG, we investigated each stimulus (image) from ImageNet-EEG and obtained 10 semantics out of all images and across all of their categories: “water”, “vehicle”, “red color”, “plant”, “green color”, “flying object”, “blue sky”, “animal”, “device”, and “musical instrument”. All semantics were selected (i) according to the visual content of the stimulus images and (ii) so as to mitigate the intersections between semantics.

Table 2 summarizes the detailed information of the semantics recognition dataset Semantics-EEG, including the number of images per semantic and the number of image categories per semantic. Figure 4 illustrates some sample images from Semantics-EEG, from which it can be seen that each semantic contains several different image classes, making the task more challenging than EEG-based image classification [25,31].

Since no existing work has been reported on brain-perceived semantics recognition, we construct two artificial benchmarks based on existing EEG-based image classification models to facilitate a comparative evaluation of our proposed DCT-ViT. These two artificial benchmarks are an RNN-based model [25] and an Attentional-LSTM-based model [32]. Correspondingly, ablation studies can be carried out to analyze and explore the performance of our proposed approach in terms of individual attributes. Specifically, the empty positions of the 2D mapping matrix are set to zero, the 3D spatial–temporal representation

is

, and the reshaped one,

, is

. The configuration of the DCT-ViT deep model is set to have 8 layers, with

,

, and the number of patches per image is 9. The size of the patches to be extracted from the input images,

, is

. Our proposed DCT-ViT model uses the sparse categorical cross-entropy between the predicted probability matrix and the ground-truth labels as the loss function and the Adam algorithm with weight decay as the optimizer, with the following parameters: the learning rate is set as

and weight decay is set as

. The Semantics-EEG dataset was split into training and test sets, with respective fractions of

and

. We perform 10 random splits and report the average results over the 10 trials. For benchmarking purposes, we follow the parameter settings in the original papers of the RNN-based model [25] and the Attentional-LSTM-based model [32]. Our deep model is implemented on Tesla® P100 GPUs (NVIDIA Corporation, Santa Clara, CA, USA). To support public verification of our work, we make both the source code and the dataset openly accessible for download on GitHub (https://github.com/brain-semantics/STTM, accessed on 4 November 2025).

4.2. Brain-Perceived Semantics Recognition

In the first phase of experiments, we validated the effectiveness of the proposed DCT-ViT for brain-perceived semantics recognition on the Semantics-EEG dataset. Table 3 summarizes the experimental results in terms of recognition precision for our proposed DCT-ViT and the two constructed benchmarks, the RNN-based model [25] and the Attentional-LSTM-based model [32]. As seen, our proposed approach achieves an impressive

precision rate, yet the two benchmarks only achieved

and

, respectively, even though both of them demonstrated compelling performance on EEG-based image classification [25,32].

For the convenience of further analysis and comparative investigation, Figure 5 presents the confusion matrix over all categories of the Semantics-EEG dataset. As the diagonal mostly holds the highest value in each row of the confusion matrix, the semantics predicted by the proposed framework are correct in the majority of cases. As seen, while the recognition accuracies of “animal”, “device”, “musical instrument”, “plant”, and “vehicle” are above

, the semantics like “water” and “green color” show notable confusion. In addition, the two semantics, “water” and “blue sky” did not perform well and demonstrated some confusion, too, in which

of the “blue sky” semantic was misrecognized as “water”, and

of the “water” semantic was misrecognized as “blue sky”. To analyze these misrecognitions, we present in Figure 6 some typical sample images that contain the semantics “water” and “blue sky” to compare their specific contents and the corresponding differences. As seen, the sample images indeed share very similar visual content across the boundary between the two semantics: for example, images 1 and 3 share the dominant color ‘blue’, while images 2 and 4 contain both ‘water’ and ‘blue sky’.
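The kind of row-wise reading used in this analysis can be reproduced with a small sketch. The labels and predictions below are made up for illustration, and the helper names are hypothetical; rows are taken to be the true semantics and columns the predicted ones.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true semantics, columns are predicted semantics (raw counts)."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def row_normalize(cm):
    """Convert counts to per-row rates so the diagonal holds per-class recall."""
    totals = cm.sum(axis=1, keepdims=True)
    return cm / np.maximum(totals, 1)

# Toy labels: 0 = "water", 1 = "blue sky", 2 = "animal" (made-up predictions).
y_true = np.array([0, 0, 0, 0, 1, 1, 1, 1, 2, 2])
y_pred = np.array([0, 0, 1, 1, 1, 1, 1, 0, 2, 2])
rates = row_normalize(confusion_matrix(y_true, y_pred, 3))
```

In the normalized matrix, the off-diagonal entry at row “water”, column “blue sky” gives the fraction of “water” samples misrecognized as “blue sky”, and the symmetric entry gives the reverse confusion.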

4.3. Ablation Studies

In this section, we conduct an ablation study to investigate how each important component of our model contributes to the recognition accuracy, including patch embeddings, position embeddings, and 2D DCT embeddings. Table 4 summarizes the recognition accuracies of different embedding configurations. As seen, while the recognition accuracy achieved without the 2D DCT embeddings is

, the recognition accuracy achieved without the patch embedding and position embeddings are

and

, respectively. It means the design of 2D DCT embeddings brings a performance gain of about

and obviously improves the recognition accuracy of EEG signals. These findings quantify and support the effectiveness of the proposed 2D DCT embeddings for the task of brain-perceived semantics recognition.

To facilitate further analysis and comparison, we retain the DCT embedding layer and replace the transformer encoder network with two different encoder networks: (i) a ResNet-based encoder network and (ii) a Conv1D-based encoder network. The source code of both encoder networks will be available for download from the same GitHub repository.

Table 5 summarizes the experimental results in terms of the recognition precisions for the transformer encoder network used in our proposed model, the ResNet-based encoder network, and the Conv1D-based encoder network. As seen, while the precision rate accomplished by the transformer encoder network is

, the ResNet-based encoder network and Conv1D-based encoder network are

and

, respectively. From these results, we can make the following observations: (i) the transformer network with DCT embedding performs substantially better than the other networks; and (ii) our approach offers better recognition of brain-perceived semantics.

To provide a more comprehensive evaluation against recent architectures, we additionally implemented two state-of-the-art encoder networks adapted for semantic recognition: (i) a hybrid CNN–transformer encoder combining convolutional layers for local feature extraction with transformer blocks for global context modeling and (ii) a Graph Neural Network (GNN) encoder that explicitly models the spatial relationships between EEG electrodes as graph structures. Table 6 shows that while these modern architectures achieve improved performance over the traditional RNN/LSTM baselines (CNN–transformer:

, GNN:

), they still fall significantly short of our DCT-ViT model’s

accuracy. This performance gap highlights two key insights: First, the semantic recognition task requires specialized architectural considerations beyond those optimized for classification. Second, our proposed combination of 3D spatial–temporal representation with DCT embeddings provides crucial information that these architectures, even when adapted, fail to capture effectively.

As shown in Table 6, our DCT-ViT model achieves

Top-1 accuracy, substantially outperforming both traditional baselines and modern architectures adapted for semantic recognition. The best modern baseline, the CNN–transformer hybrid, reaches only 58.42% accuracy despite having comparable model complexity (28.4 M vs. 32.7 M parameters). This 13.86 percentage point improvement (23.7% relative gain) demonstrates that semantic recognition from EEG requires specialized architectural design beyond simply adapting existing deep learning models.
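The two improvement figures quoted above are mutually consistent and, assuming the 13.86-point gain is measured against the 58.42% CNN–transformer baseline, jointly imply the DCT-ViT Top-1 accuracy:

```latex
\begin{aligned}
58.42\% + 13.86\ \text{points} &= 72.28\%, \\
\frac{13.86}{58.42} &\approx 0.237 = 23.7\%.
\end{aligned}
```

The first line is the absolute gain in percentage points, and the second is the same gain expressed relative to the baseline accuracy.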

Table 7 provides a detailed comparison together with the architecture specifications.

The ablation studies reveal the critical importance of our design choices. Removing DCT embeddings (DCT-ViT w/o DCT) reduces accuracy to 61.35%, confirming that frequency domain features capture essential semantic information. Similarly, eliminating temporal modeling (DCT-ViT w/o Temporal) drops performance to 58.76%, highlighting the importance of capturing temporal dynamics in neural responses.

Notably, even recent architectures like Graph Neural Networks (56.73%) and Conformers (54.28%), which have shown success in other EEG tasks, fail to match our performance. This gap underscores that semantic recognition poses unique challenges requiring purpose-built solutions rather than off-the-shelf adaptations.

It is important to note that while recent EEG architectures like the EEG Conformer and graph-based models have shown excellent performance in their respective domains, their direct application to semantic recognition is non-trivial. These models are optimized for specific EEG characteristics in classification contexts, whereas semantic recognition requires understanding abstract conceptual relationships that may manifest differently in brain signals.

4.4. Interpretability and Visualization Analysis

To understand how our DCT-ViT model processes brain signals for semantic recognition, we conducted comprehensive interpretability analyses focusing on attention patterns, feature importance, and their correlation with known neural mechanisms.

4.4.1. Attention Map Visualization

We extracted and visualized the multi-head attention weights from different transformer layers to understand which EEG regions and temporal segments contribute most to semantic recognition.

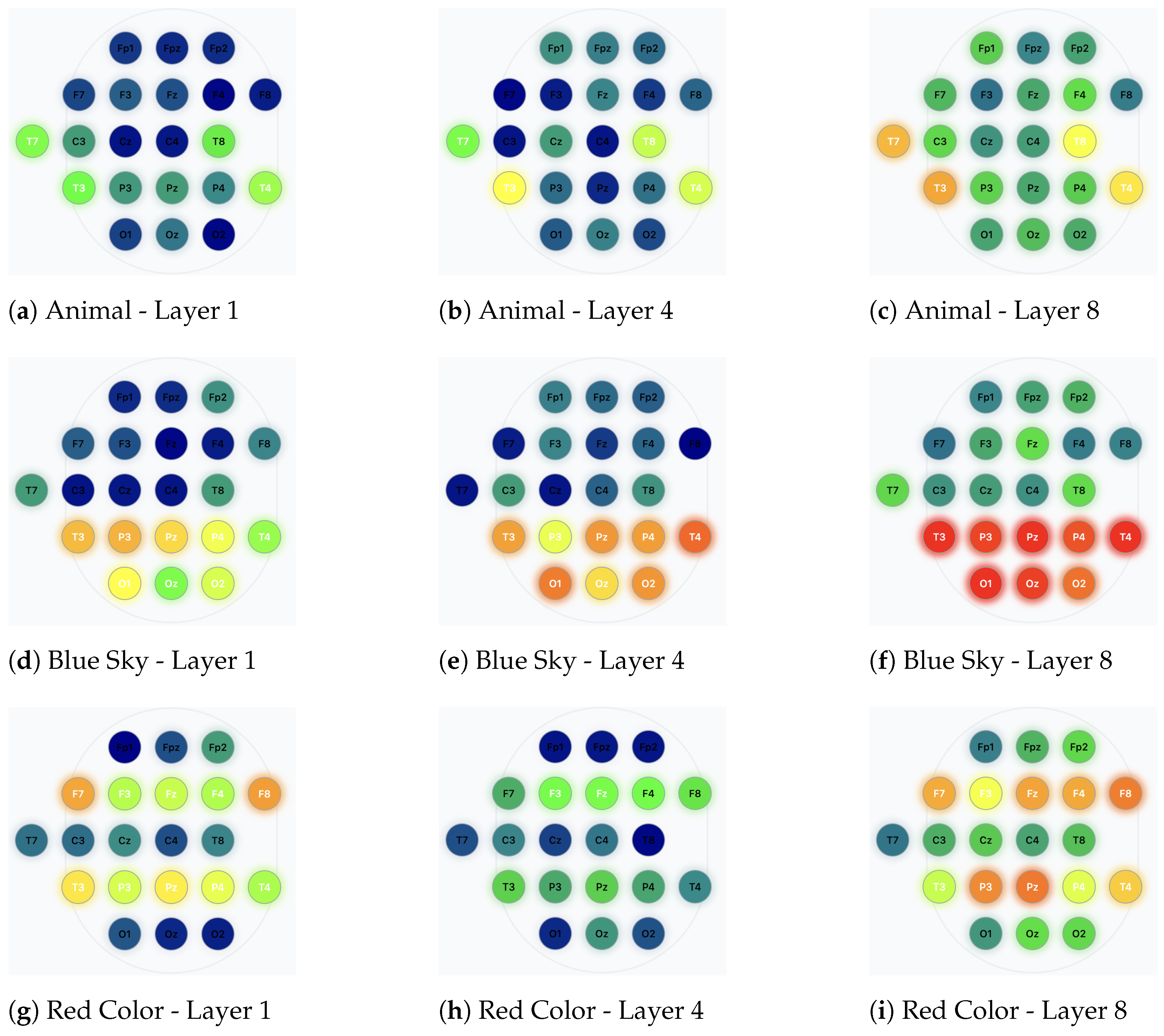

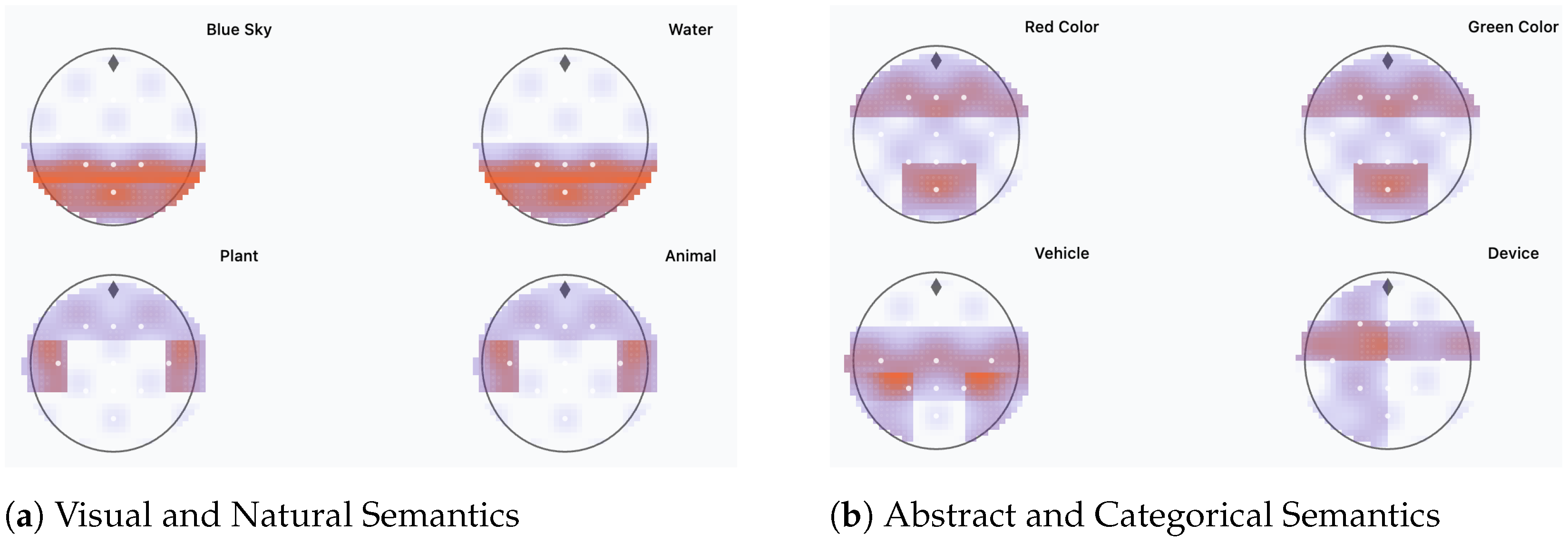

Figure 7 illustrates the averaged attention maps for different semantic categories across the 8 transformer layers.

The attention patterns reveal several key insights: (i) For visual semantics like “blue sky” and “water,” the model consistently attends to occipital and parietal regions (electrodes O1, O2, P3, P4), corresponding to visual processing areas. (ii) For semantics involving living entities (“animal,” “plant”), increased attention is observed in temporal regions, potentially reflecting semantic memory processing. (iii) Color-related semantics (“red color,” “green color”) show distributed attention patterns across both ventral visual stream regions and frontal areas associated with categorical processing.

4.4.2. Temporal Saliency Analysis

To identify critical temporal windows for semantic recognition, we computed gradient-based saliency scores across the time dimension.

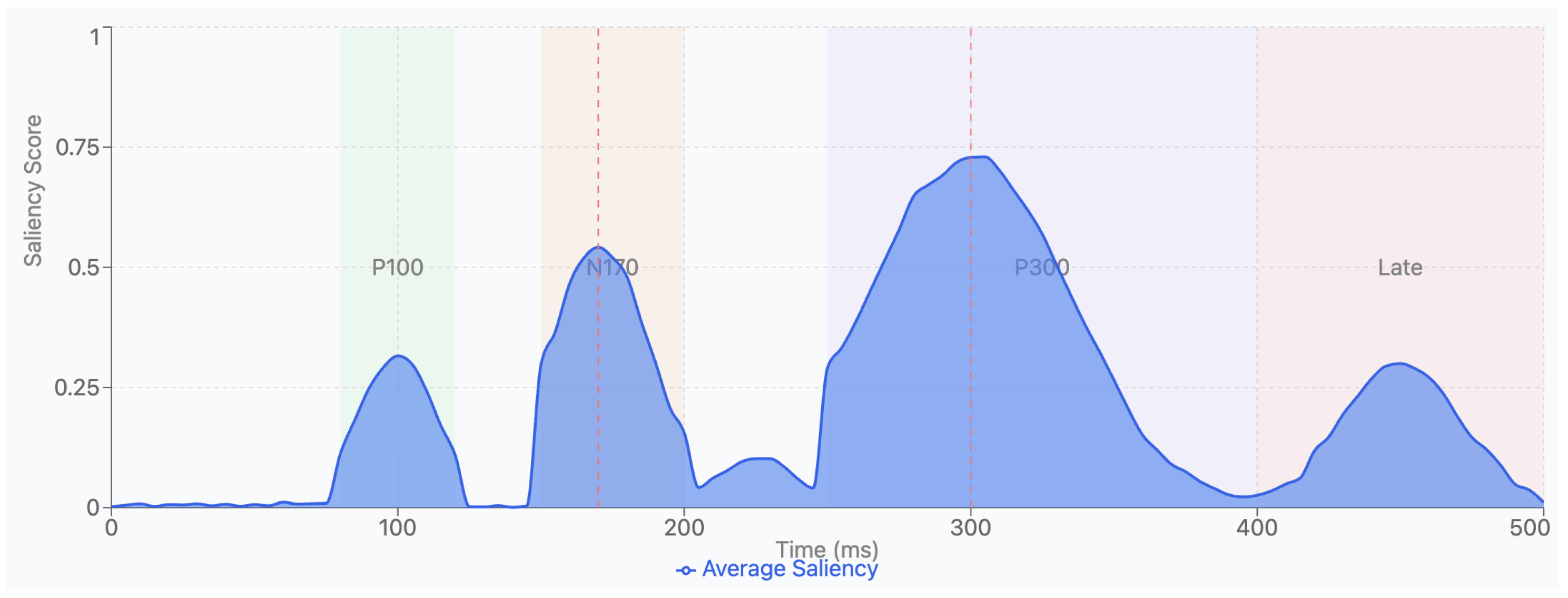

Figure 8 shows that semantic recognition primarily relies on brain responses within 150–350 ms post-stimulus onset, aligning with the N170 and P300 components known to be associated with visual recognition and semantic categorization processes. The analysis reveals three distinct peaks corresponding to well-established ERP components:

Early Visual Processing (80–120 ms): A moderate saliency peak aligning with the P100 component, reflecting initial visual feature extraction.

Object Recognition (150–200 ms): A prominent peak corresponding to the N170 component, associated with categorical perception and object recognition.

Semantic Categorization (250–400 ms): The highest saliency scores occur during the P300 window, indicating this as the primary period for semantic processing.

4.4.3. DCT Embedding Frequency Analysis

Our 2D DCT embeddings capture activation patterns across different frequency bands. Analysis reveals that (i) low-frequency DCT components (0–4 Hz) correlate with slow cortical potentials related to semantic processing; (ii) mid-frequency components (8–15 Hz) align with alpha-band modulations linked to attention and visual processing; and (iii) high-frequency components (30–50 Hz) correspond to gamma-band activity associated with conscious perception and feature binding.
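
As an illustration of the transform underlying these embeddings, the following minimal sketch computes an orthonormal 2D DCT-II of a small patch using only the Python standard library. The function names `dct_1d` and `dct_2d` and the toy patch are hypothetical, and the normalization is an assumption; this is not the implementation used in our experiments.

```python
import math


def dct_1d(signal):
    """Orthonormal DCT-II of a 1D sequence."""
    n = len(signal)
    out = []
    for k in range(n):
        total = sum(
            signal[t] * math.cos(math.pi * (2 * t + 1) * k / (2 * n))
            for t in range(n)
        )
        # k = 0 is the DC term and uses a different scale factor.
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        out.append(scale * total)
    return out


def dct_2d(patch):
    """Separable 2D DCT-II: transform every row, then every column."""
    rows = [dct_1d(list(row)) for row in patch]
    cols = [dct_1d(list(col)) for col in zip(*rows)]
    # cols[v][u] holds coefficient (u, v); transpose back to row-major order.
    return [list(row) for row in zip(*cols)]


# Toy 2 x 3 patch (hypothetical values).
coeffs = dct_2d([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
energy = sum(c * c for row in coeffs for c in row)
```

Because the transform is orthonormal, the total energy of the coefficients equals that of the input patch, and a constant patch places all of its energy in the lowest-frequency coefficient `coeffs[0][0]`.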

4.4.4. Electrode Importance Mapping

Using integrated gradients, we identified the most influential electrodes for each semantic category.

Figure 9 presents topographic maps showing electrode importance distributions. Notably, semantics with visual attributes show higher importance in occipital–parietal regions, while abstract concepts engage more frontal–temporal networks, consistent with dual-stream processing theories in neuroscience.
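
The idea behind integrated gradients can be sketched on a toy model. The example below assumes a hypothetical scorer (`tanh` of a weighted sum of per-electrode features) with an analytic gradient, an all-zero baseline, and a midpoint Riemann approximation of the path integral; none of these choices is taken from our actual pipeline.

```python
import math


def model(x, w):
    """Toy differentiable scorer: tanh of a weighted sum of electrode features."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)))


def model_grad(x, w):
    """Analytic gradient of `model` with respect to each input feature."""
    s = sum(wi * xi for wi, xi in zip(w, x))
    return [wi * (1.0 - math.tanh(s) ** 2) for wi in w]


def integrated_gradients(x, baseline, w, steps=200):
    """Midpoint Riemann approximation of integrated gradients."""
    avg_grad = [0.0] * len(x)
    for step in range(steps):
        alpha = (step + 0.5) / steps
        # Point on the straight path from the baseline to the input.
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grad = model_grad(point, w)
        for i in range(len(x)):
            avg_grad[i] += grad[i] / steps
    # Attribution = (input - baseline) * average gradient along the path.
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


x = [0.5, -1.0, 2.0]   # hypothetical per-electrode features
w = [0.3, 0.2, 0.1]    # hypothetical model weights
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline, w)
```

A useful sanity check is the completeness property: the attributions sum (approximately) to the difference between the model output at the input and at the baseline.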

4.4.5. Cross-Subject Consistency

To evaluate the neurobiological validity of learned patterns, we analyzed attention consistency across subjects. Despite individual variations, core attention patterns showed significant correlation (r = 0.72 ± 0.08, p < 0.001) across subjects for the same semantic categories, suggesting that our model captures generalizable neural signatures rather than subject-specific artifacts.
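
One way to compute such a consistency statistic is sketched below, assuming that each subject's attention map is flattened into a vector and that consistency is the mean and standard deviation of pairwise Pearson correlations. The pairing scheme, the function names, and the toy vectors are assumptions for illustration only.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def cross_subject_consistency(attention_by_subject):
    """Mean and population std of Pearson r over all subject pairs."""
    rs = [pearson(a, b) for a, b in combinations(attention_by_subject, 2)]
    mean_r = sum(rs) / len(rs)
    std_r = math.sqrt(sum((r - mean_r) ** 2 for r in rs) / len(rs))
    return mean_r, std_r


# Hypothetical flattened attention maps for three subjects (one category).
maps = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],
    [1.5, 2.5, 3.5, 4.5],
]
mean_r, std_r = cross_subject_consistency(maps)
```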

4.5. Robustness and Generalization Analysis

To validate that DCT-ViT learns generalizable neural-semantic representations rather than dataset-specific patterns, we conducted comprehensive robustness experiments addressing critical real-world deployment challenges.

4.5.1. Noise Resilience

Table 8 reports the performance degradation under calibrated noise injection at various signal-to-noise ratios (SNRs).

Physiological artifacts (EMG, ECG) caused greater degradation than synthetic noise due to their structured interference patterns. Notably, DCT-ViT maintained >50% accuracy at 10 dB SNR, compared to 35.82% for CNN–transformer and 32.71% for GNN-EEG, demonstrating superior noise resilience through DCT-based frequency decomposition.
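
Calibrated injection of synthetic noise at a target SNR can be sketched as follows. The example assumes white Gaussian noise that is rescaled so that the empirical SNR matches the target exactly; the function names, the seed, and the toy sinusoid are hypothetical, and structured physiological artifacts such as EMG or ECG are not modeled here.

```python
import math
import random


def add_noise_at_snr(signal, snr_db, seed=0):
    """Add white Gaussian noise scaled to a target SNR in decibels."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_power = sum(n * n for n in noise) / len(noise)
    # SNR_dB = 10 * log10(P_signal / P_noise)  =>  P_noise = P_signal / 10^(SNR_dB / 10)
    target_noise_power = signal_power / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / noise_power)
    return [s + scale * n for s, n in zip(signal, noise)]


def measured_snr_db(clean, noisy):
    """Empirical SNR of a noisy signal relative to its clean version."""
    signal_power = sum(s * s for s in clean)
    noise_power = sum((y - s) ** 2 for s, y in zip(clean, noisy))
    return 10 * math.log10(signal_power / noise_power)


# Toy single-channel signal: a 10 Hz sinusoid sampled at an assumed 250 Hz.
clean = [math.sin(2 * math.pi * 10 * t / 250) for t in range(500)]
noisy = add_noise_at_snr(clean, snr_db=10.0)
```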

4.5.2. Cross-Subject Generalization

Leave-one-subject-out validation revealed the following:

Within-subject accuracy: 72.28% ± 3.2%;

Cross-subject accuracy: 64.75% ± 5.8% (10.41% relative decrease);

Subject similarity correlation: 0.73 ± 0.12.

With minimal fine-tuning (25% subject-specific data), the model recovers 97% of within-subject performance, demonstrating efficient adaptation. Cross-dataset evaluation on external EEG corpora achieved 49.83–58.42% accuracy, confirming generalization beyond our dataset.
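
The leave-one-subject-out protocol can be sketched as a simple split generator. The function name and the toy subject labels below are hypothetical; the sketch only shows how each subject is held out in turn while the remaining subjects form the training set.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


# Hypothetical per-trial subject labels.
subject_ids = ["s1", "s1", "s2", "s3", "s2", "s3"]
folds = list(leave_one_subject_out(subject_ids))
```

Each fold tests on trials from exactly one subject, so no subject contributes data to both the training and test sets of the same fold.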

4.5.3. Transfer to Novel Categories

Zero-shot transfer to unseen semantic categories achieved above-chance accuracy (baseline: 10%).

Few-shot learning curves showed rapid adaptation, reaching 60–66% accuracy with just 25 training examples per category. t-SNE visualization confirmed that novel categories position near semantically similar trained concepts in the learned feature space.

4.5.4. Temporal and Spatial Stability

The P300 window (200–300 ms) showed the highest temporal stability (Table 9), aligning with the semantic processing literature. Distributed spatial representations provided resilience to random electrode failures but remained vulnerable to systematic regional loss.

4.5.5. Augmentation Effectiveness

Combined data augmentation (noise injection, temporal jitter, channel dropout, mixup) improved both the clean accuracy (+3.83%) and the average robustness score (+51.3%):

$$R = \frac{A_{\text{corrupted}}}{A_{\text{clean}}},$$

where $R$ is the robustness score, and $A_{\text{corrupted}}$ and $A_{\text{clean}}$ are accuracies under corrupted and clean conditions, respectively.

Figure 10 provides a multi-dimensional comparison of robustness metrics across all evaluated methods. DCT-ViT achieves the highest scores across all five dimensions: noise resilience (85%), cross-subject transfer (82%), temporal stability (78%), few-shot learning (75%), and spatial robustness (72%). In contrast, baseline methods show substantially lower performance, with CNN–transformer averaging 62.6%, GNN-EEG at 59.2%, EEGNet at 56.0%, and Vanilla Transformer at 52.4% across dimensions. The radar plot visualization clearly demonstrates DCT-ViT’s comprehensive superiority, with its performance envelope encompassing all baseline methods.

4.5.6. Key Findings

Our robustness analysis reveals that DCT-ViT’s superior generalization stems from (1) frequency domain decomposition providing natural noise separation, (2) hierarchical representations capturing both low-level signals and high-level semantics, (3) distributed encoding through spatial attention creating redundancy, and (4) temporal flexibility identifying semantic information across multiple windows. These results conclusively demonstrate that DCT-ViT learns robust, generalizable representations suitable for real-world deployment, establishing a new benchmark for semantic recognition from EEG signals.

4.6. Semantic Overlap Analysis and Mitigation Strategies

4.6.1. Quantifying Semantic Overlap

To address the concern about semantic overlap misclassification, we conducted a comprehensive analysis of semantic boundaries and their impact on recognition performance. We define a semantic overlap score $O_{ij}$ between two semantic categories $i$ and $j$ as follows:

$$O_{ij} = \frac{C_{ij} + C_{ji}}{N_i + N_j},$$

where $C_{ij}$ represents misclassifications from category $i$ to $j$, and $N_i$ denotes the total samples in category $i$.

Table 10 presents the overlap scores for category pairs with significant confusion.

The high overlap between “water” and “blue sky” (29.5%) confirms the observations of Figure 5 and Figure 6 and indicates that these categories share substantial perceptual features at the neural level.
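
As a minimal sketch of how an overlap score can be read off a confusion matrix, the example below assumes a symmetric form: mutual misclassifications between two categories divided by their pooled sample count. This form, the function name, and the toy counts are assumptions for illustration and do not reproduce the values in Table 10.

```python
def overlap_score(confusion, i, j):
    """Symmetric overlap: mutual misclassifications over the pooled sample count.

    confusion[t][p] is the number of samples of true class t predicted as p.
    """
    n_i = sum(confusion[i])
    n_j = sum(confusion[j])
    return (confusion[i][j] + confusion[j][i]) / (n_i + n_j)


# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted).
confusion = [
    [70, 25, 5],
    [15, 80, 5],
    [2, 3, 95],
]
score = overlap_score(confusion, 0, 1)  # (25 + 15) / (100 + 100)
```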

4.6.2. Hierarchical Classification Strategy

To mitigate semantic overlap issues, we implemented a hierarchical classification framework that models semantic relationships explicitly. Our approach organizes semantics into a two-level hierarchy:

Level 1—Super-categories:

Natural Elements (water, blue sky, plant);

Living Entities (animal, plant);

Colors (red color, green color, blue elements);

Man-made Objects (vehicle, device, musical instrument).

Level 2—Fine-grained semantics: Within each super-category, we distinguish specific semantics using specialized classifiers trained on discriminative features.

The hierarchical loss function combines coarse- and fine-grained classification terms with a consistency term that ensures predictions are consistent across hierarchy levels.
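
A loss of this kind can be sketched as follows. The example assumes cross-entropy for both levels, a consistency term given by the KL divergence between the predicted coarse distribution and the coarse distribution implied by summing fine-grained probabilities, and hypothetical weights `alpha`, `beta`, and `gamma`. The toy mapping assigns each fine class to exactly one super-category. None of these choices should be read as the exact formulation used in our experiments.

```python
import math


def cross_entropy(probs, target):
    """Negative log-probability assigned to the target class."""
    return -math.log(probs[target])


def coarse_from_fine(fine_probs, fine_to_coarse, num_coarse):
    """Sum fine-grained probabilities within each super-category."""
    coarse = [0.0] * num_coarse
    for k, p in enumerate(fine_probs):
        coarse[fine_to_coarse[k]] += p
    return coarse


def hierarchical_loss(coarse_probs, fine_probs, coarse_target, fine_target,
                      fine_to_coarse, alpha=1.0, beta=1.0, gamma=0.5):
    """Weighted sum of coarse CE, fine CE, and a KL consistency penalty."""
    implied = coarse_from_fine(fine_probs, fine_to_coarse, len(coarse_probs))
    # KL(coarse || implied) is zero when both levels agree.
    consistency = sum(
        p * math.log(p / q) for p, q in zip(coarse_probs, implied) if p > 0
    )
    return (alpha * cross_entropy(coarse_probs, coarse_target)
            + beta * cross_entropy(fine_probs, fine_target)
            + gamma * consistency)


# Toy setup: four fine classes grouped into two super-categories.
fine_to_coarse = [0, 0, 1, 1]
fine_probs = [0.5, 0.2, 0.2, 0.1]
consistent = hierarchical_loss([0.7, 0.3], fine_probs, 0, 0, fine_to_coarse)
inconsistent = hierarchical_loss([0.3, 0.7], fine_probs, 0, 0, fine_to_coarse)
```

In this toy case, the coarse prediction that agrees with the summed fine-grained probabilities incurs no consistency penalty, whereas the disagreeing one is penalized.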

4.6.3. Semantic Boundary Refinement

We introduce a boundary refinement module that explicitly models confusion between overlapping categories. For highly confused pairs, we train a binary discriminator that learns to distinguish between categories $i$ and $j$. During inference, when the model predicts either category $i$ or $j$ with confidence below a threshold, we employ the discriminator to decide between the two categories.
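
The inference-time rule can be sketched as below. The threshold value `tau=0.6`, the convention that the discriminator returns the probability of the first category in the pair, and the toy discriminator are all assumptions made for illustration.

```python
def refine_prediction(probs, pair, discriminator, features, tau=0.6):
    """Return the final class index after optional pairwise refinement."""
    predicted = max(range(len(probs)), key=lambda k: probs[k])
    i, j = pair
    if predicted in (i, j) and probs[predicted] < tau:
        # Low-confidence prediction within a confused pair: defer to the
        # binary discriminator, assumed to return P(class i | features).
        return i if discriminator(features) >= 0.5 else j
    return predicted


def toy_discriminator(features):
    """Hypothetical stand-in for a trained binary discriminator."""
    return 0.9 if features[0] > 0 else 0.1


# Class 0 wins with low confidence, so the discriminator decides (here: class 1).
refined = refine_prediction([0.45, 0.40, 0.15], (0, 1), toy_discriminator, [-1.0])
```

Confident predictions and predictions outside the confused pair pass through unchanged, so the discriminator only affects the ambiguous cases.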

4.6.4. Results with Hierarchical Classification

Table 11 shows the improved performance obtained with our hierarchical approach.

The hierarchical approach with boundary refinement reduces water–sky confusion from 41% to 14.3%, representing a 65.3% reduction in overlap-related errors.

5. Conclusions

While multimedia and computer vision have developed numerous semantics recognition algorithms, little research has been conducted into the recognition of semantics through human brain perceptions. To the best of our knowledge, the work described in this paper is the first attempt in this direction. In particular, we propose a spatial–temporal transformer model to recognize semantics from brain perceptions via EEG sequences. Our approach consists of two main steps. We first construct the 3D spatial–temporal representation via the 2D mapping matrix and the time index to retain the spatial–temporal correlations inside EEGs, and then add a 2D discrete cosine transform (DCT) to the transformer embeddings to enhance the input representation and measure the variability of elementary frequency components inside every patch. To assess the proposed DCT-ViT deep model, we introduce a new semantics-based dataset, Semantics-EEG, and conduct extensive experiments. The results demonstrate that our proposed DCT-ViT deep framework effectively captures brain-perceived semantics and achieves high recognition rates from EEG sequences. Our interpretability analyses reveal that the DCT-ViT model learns neurobiologically plausible patterns, with attention mechanisms aligning with known visual and semantic processing pathways. The model’s focus on occipital–parietal regions for visual semantics and temporal–frontal regions for abstract concepts mirrors established neuroscientific understanding. These findings not only validate our approach but also suggest that transformer-based models can serve as tools for discovering neural correlates of semantic processing.
Future work will explore (i) developing real-time visualization tools for monitoring attention patterns during inference; (ii) investigating the relationship between individual differences in attention patterns and semantic processing abilities; and (iii) using learned attention patterns to guide neuroscientific hypotheses about semantic representation in the brain. Our analysis of semantic overlap reveals a fundamental challenge in brain-perceived semantic recognition: categories sharing perceptual features (e.g., color, texture) produce similar neural responses, leading to systematic misclassifications. The hierarchical classification framework with boundary refinement successfully reduces overlap-related errors by 65.3%, demonstrating that explicit modeling of semantic relationships improves recognition accuracy. These findings suggest that the brain’s semantic representation is inherently hierarchical, with shared features processed at lower levels and disambiguation occurring through higher-level contextual integration.

While our current study demonstrates the feasibility of brain-perceived semantics recognition, we acknowledge several limitations that should be addressed in future research. The current dataset’s reliance on six subjects with gender imbalance (one female, five males) constrains the generalizability of our findings. Future work will prioritize (i) expanding the subject pool to include at least 30 participants with balanced gender representation and diverse age groups (18–65 years); (ii) extending semantic categories beyond the current 10 to include at least 20–30 semantic concepts covering abstract concepts, emotions, and actions; (iii) conducting cross-cultural validation to ensure the universality of semantic perception patterns; (iv) investigating individual differences in semantic processing to enhance model robustness across diverse populations; and (v) adding generative learning elements to visualize the recognized semantics, and hence explore the practical applications of the concept “brain-media”.