Development and Validation of a Questionnaire to Evaluate AI-Generated Summaries for Radiologists: ELEGANCE (Expert-Led Evaluation of Generative AI Competence and ExcelleNCE)

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Participants

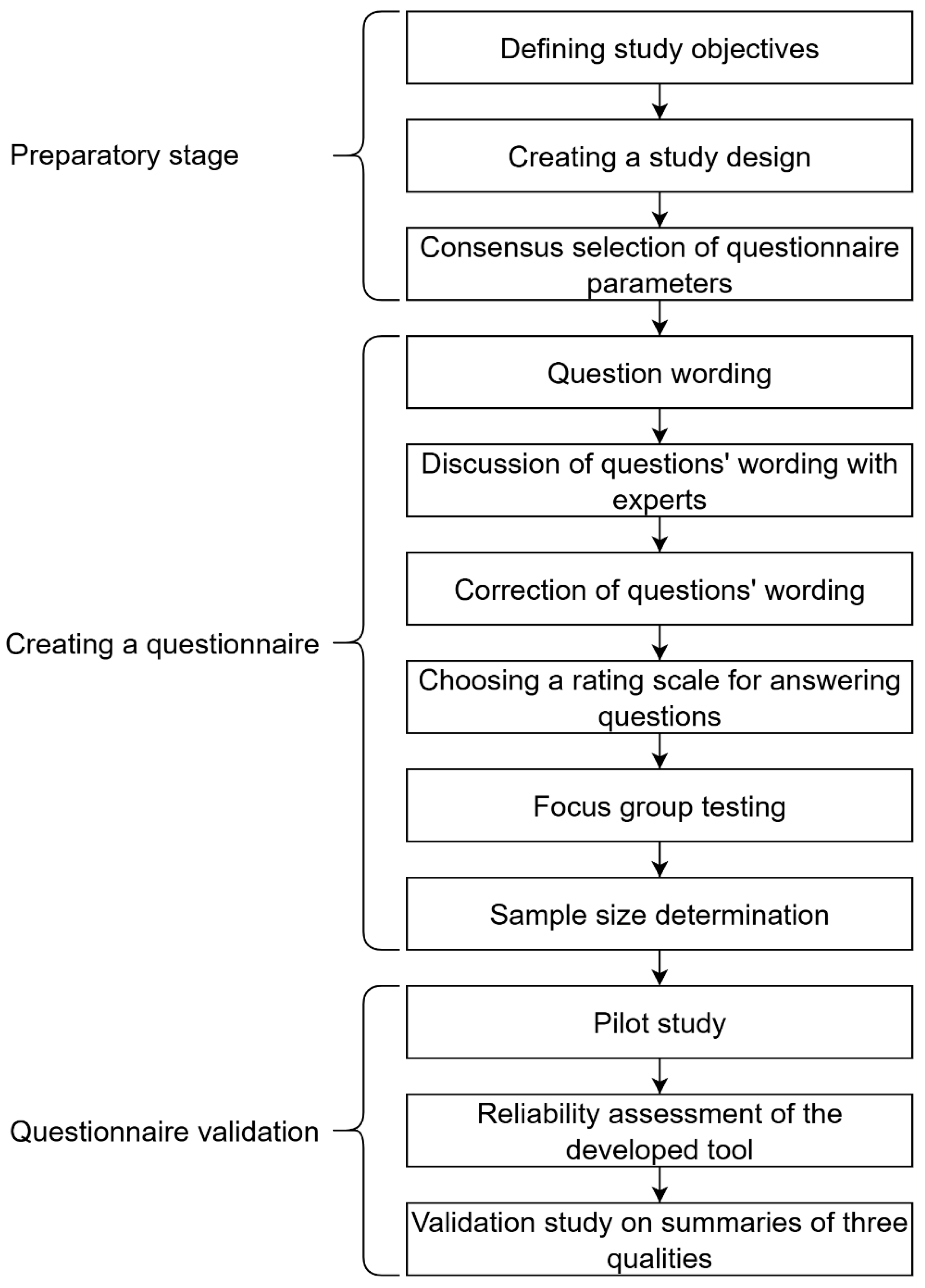

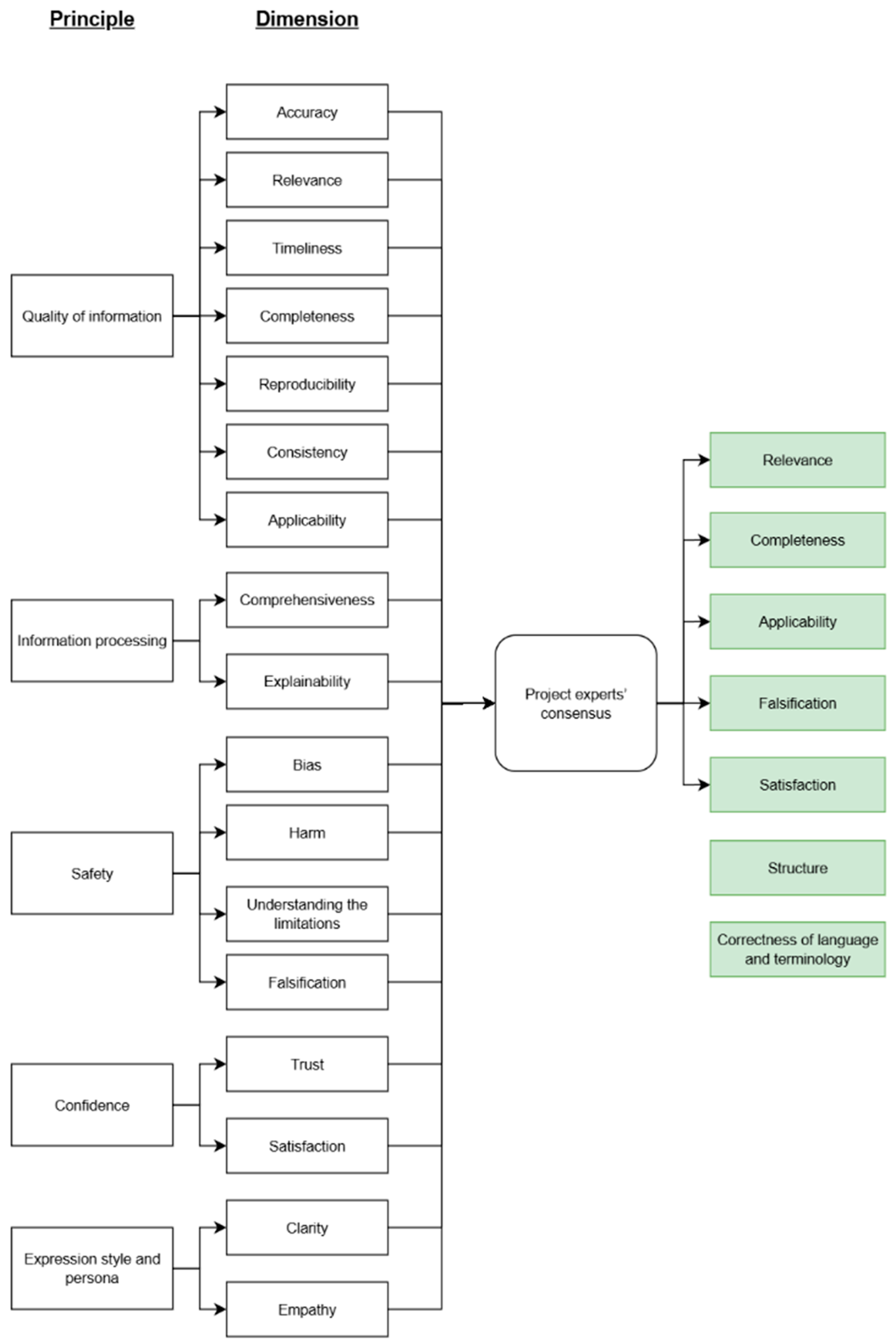

2.2. Stage One: Preparatory

- Relevance: alignment of the response with the query;

- Completeness: inclusion of all necessary information;

- Applicability: the practical value of the response to the user;

- Falsification: presence of false information and/or hallucinations;

- Satisfaction: the respondent’s overall satisfaction with the quality of the summary;

- Structure: presentation of the text as a coherent and logically organized unit;

- Correctness of language and terminology: accuracy of language and terminology.

2.3. Stage Two: Creating a Questionnaire

- (1) Textual descriptors assigned to each score;

- (2) Textual descriptors provided only for the polar responses, with intermediate scores chosen by proximity.

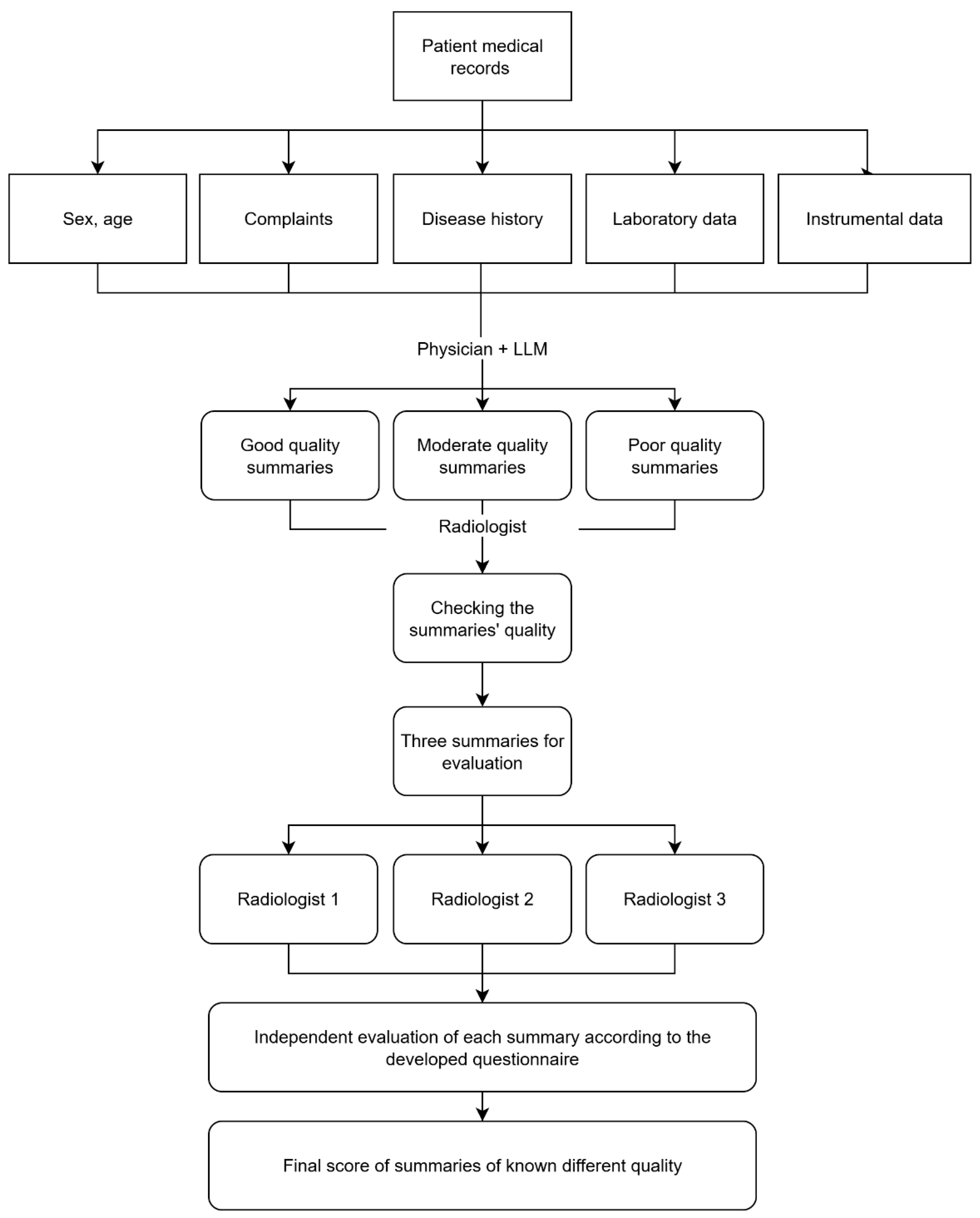

2.4. Stage Three: Questionnaire Validation

- Complaints prompting the abdominal CT scan.

- Disease history.

- Patient medical history (comorbidities, lifestyle factors, family history, surgeries).

- Laboratory findings.

- Instrumental findings.

2.5. Sample Size Calculation for the Validation Study

2.6. Statistical Data Analysis

3. Results

3.1. Pilot Testing

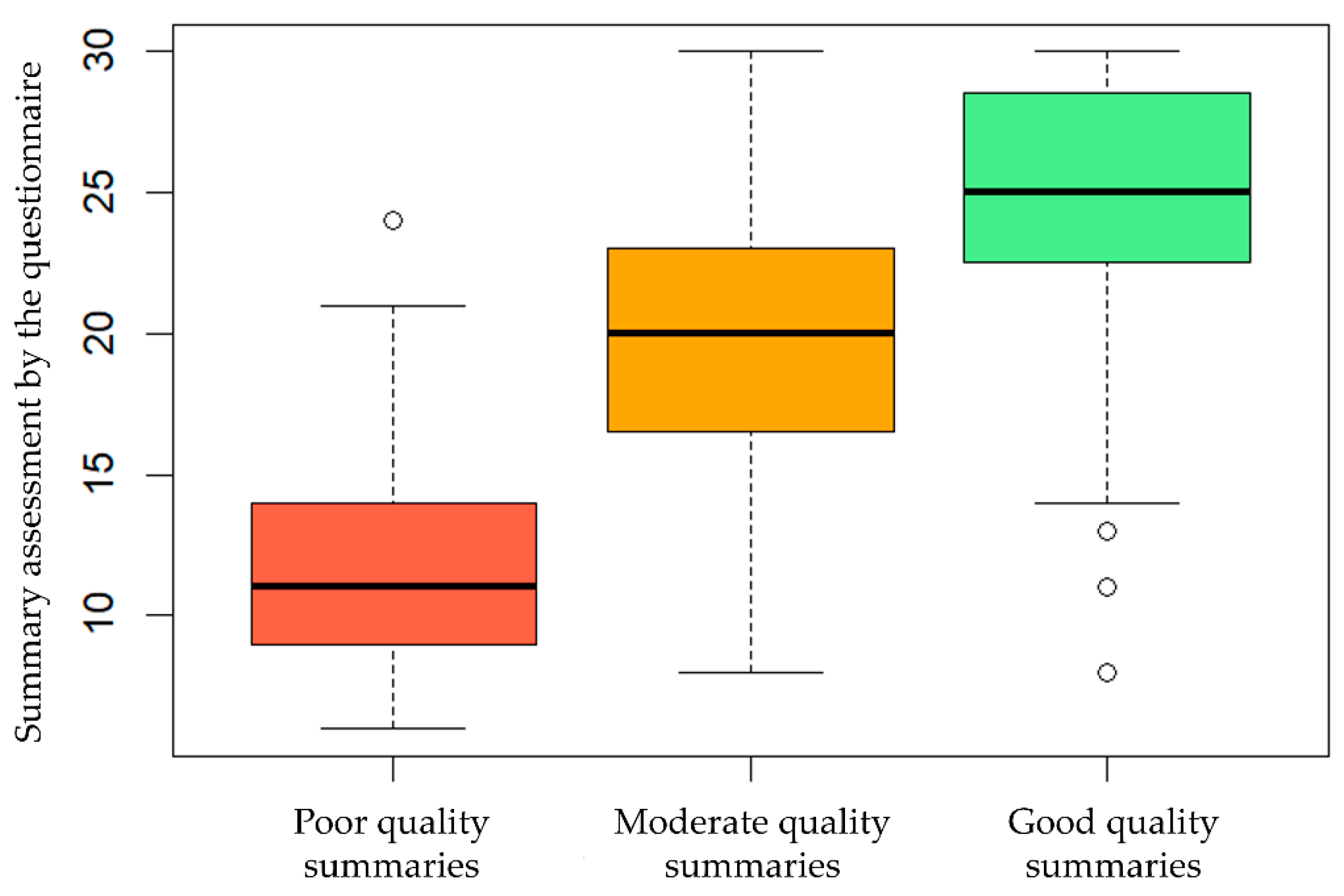

3.2. Validation Study

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| LLM | Large Language Model |
| ELEGANCE | Expert-Led Evaluation of Generative AI Competence and ExcelleNCE |
| ROUGE | Recall-Oriented Understudy for Gisting Evaluation |
| BLEU | BiLingual Evaluation Understudy |
| METEOR | Metric for Evaluation of Translation with Explicit ORdering |
| QUEST | Quality of Information, Understanding and Reasoning, Expression Style and Persona, Safety and Harm, Trust, and Confidence |
| QAMAI | Quality Assessment of Medical Artificial Intelligence |
| CT | Computed Tomography |
| IQR | Interquartile Range |
| AIPI | Artificial Intelligence Performance Instrument |
| CLEAR | Culturally and Linguistically Equitable AI Review |
| ATRAI | Attitude of Radiologists Toward Radiology AI |

Appendix A

| Gender, age | Male, 75 years old | ||

| Examinations by medical specialists | 10 April 2025 R10.4 Abdominal pain (ICD-10 code). Examination by a general practitioner. Primary diagnosis: R10.4 Abdominal pain. Preliminary diagnosis: Chronic pancreatitis, relapse? Gallstone disease, acute cholecystitis? Peptic duodenal ulcer, relapse? Complaints: Diffuse, severe abdominal pain, intensifying after the meal, no relief after defecation; nausea, general weakness. Disease history: Abdominal pain occurred a week before the examination. The patient associates it with a violation of the diet, consumption of fatty food. The patient took ibuprofen 400–800 mg/day for a week for pain relief with a short-term effect. No allergy. General examination. Heart rate: 94 bpm. Pulse rate: 94 bpm. Respiratory rate: 24 breaths/min. Blood pressure: 135/80 mmHg. Skin: normal color. Edema: absent. Chest: Lung auscultation: vesicular breathing (normal). Wheezing: none. Heart sounds are muffled, the rhythm is regular, and there is no heart murmur. Abdominal cavity: The abdomen is symmetrical and distended; the painful area is localized in the epigastrium on superficial palpation; and pain is noted in all parts of the abdomen on deep palpation. There is no stool for two days. Urination is normal. Recommendations: Abdominal CT scan, stat! Abdominal sonography, stat! Surgeon consultation, stat! CBC, general urinalysis, ALT, AST, ALP, GGT, total bilirubin, and creatinine, stat! | ||

| 9 September 2024 C34.3—Malignant neoplasm of lower lobe, bronchus, or lung (ICD-10 code). Oncologist’s examination Protocol type: confirmed malignant neoplasm. Complaints at the time of examination: shortness of breath, pain in the chest on the right during a physical activity. No chronic pain syndrome. Patient medical history: Suspected right lower lobe lung cancer since May 2018. On 31 October 2018, a right lower lobectomy was performed at the Regional Clinical Hospital. Histological examination revealed grade 3 (G3) cancer, poorly differentiated and high-grade, with no lymph node metastases detected. Immunohistochemical examination: G3 squamous cell carcinoma. Adjuvant treatment (additional chemotherapy or radiation therapy after the surgery) is not indicated. Heredity: the father had lung cancer (code C34). Concomitant diseases: acute myocardial infarction in 2005, hypertension, and benign prostatic hyperplasia. He suffered a coronavirus infection in December 2020–January 2021 and was treated on an outpatient basis. From 16 February to 20 February 2021, he received a treatment at the clinical research hospital. Endoscopic polypectomy of two polyps was performed on 17 February 2021. Histopathological examination: colon polyp tubular adenoma with low-grade dysplasia (benign intestinal tumor). He has been smoking for over 60 years, one pack a day. Height 187 cm, weight 100 kg. Instrumental studies: Positron emission tomography combined with computed tomography (PET-CT) of 22 June 2020: diffuse increase in metabolic activity in the area of the postoperative scar, SUVmax 2.15. Masses appeared in the rectum with SUVmax 14.62 (SUV is an indicator of the accumulation intensity of a radiopharmaceutical drug, reflecting the process activity). Fibrocolonoscopy of 21 July 2020: dolichosigma, epithelial masses in the descending colon and rectum. Biopsy: tubular adenoma of colon. The chest CT scan of 16 February 2021, is unremarkable. 
PET-CT scan of 16 August 2021: degenerative changes in the spine, 6th, 7th, and 8th rib fractures on the right side (surgical access), postoperative changes in the chest wall, SUVmax 2.69 without dynamics, and no new masses were detected. Contrast-enhanced chest CT scan of 18 August 2022, showed signs of chronic bronchitis, partial bronchial obstruction with discharge, its postoperative deformation, and hypoventilation of the right lung middle lobe. Conclusion: fiberoptic bronchoscopy is indicated. Additionally, chronic obstructive pulmonary disease and bilateral chronic pyelonephritis were revealed (according to kidney sonography, there are no structural changes). Thyroid sonography of 26 October 2022: right lobe—TI-RADS 3: several isoechoic nodes (TI-RADS 2) of 5–6 mm in diameter with smooth clear margins and perinodular blood flow are visualized; in its middle third part, a cystic multi-chamber formation of 17 × 15 × 16 mm (TI-RADS 3) with smooth clear margins and loci of perinodular blood flow is localized. Left lobe—TI-RADS 1. On 26 October 2022, a sonography of the postoperative scar located in the right intercostal space was performed: In the middle third, a rounded isoechoic formation of 11 × 7 mm with smooth, clear margins and a hypoechoic border is localized above the scar; there are no signs of blood flow on color Doppler mapping (granuloma?). A hypoechoic formation of 7.5 × 5.0 mm with unclear and irregular margins is localized under the scar; there is no blood flow on color Doppler mapping (metastasis?). Esophagogastroduodenoscopy on 26 November 2022: distal reflux esophagitis, signs of atrophic gastritis, acute gastric erosions, stomach epithelial formation, bulbitis. Histological examination of 5 December 2022: gastric hyperplastic polyp with acute erosion. Fibrocolonoscopy of 26 November 2022: colon diverticulosis, colon epithelial formations. Chest CT scan of 1 September 2023: a condition after the right lower lobectomy. 
Staples are detected in the stump of the lower lobe bronchus and paramediastinal. An obvious solid induration has appeared around the staples, the adjacent segmental bronchi are constricted, a solid substrate has appeared in the medial middle lobe bronchus, and the middle lobe is hypoventilated. The bronchopulmonary lymph node anteriorly from the infiltrate has enlarged from 5 mm up to 9 mm along the short axis. There are no focal and infiltrative changes in the remaining parts of the right lower lobe and in the left lung. PET-CT scan of 19 September 2023: No signs of the active tumor process are identified. There is no progression compared to 2021. Chest CT scan of 13 April 2024: A negative dynamic is noted: increased atelectasis of the right middle lobe. Fibrobronchoscopy of 20 May 2024: Signs of cicatricial deformation and granulomas around the suture material are identified during a bronchial stump examination. A biopsy was taken; the histological examination result of 24 May 2024, is chronic bronchitis. There are single cells suspected of atypia. PET-CT scan of 28 June 2024: there is no data for tumor progression, no changes compared to 2023. Thoracic oncologist examination on 13 August 2024. The patient is in satisfactory condition and has clear consciousness, ECOG scale 1 (heavy work is limited; light or sedentary work is permissible). Primary diagnosis: C34.3 Malignant neoplasm of lower lobe, bronchus, or lung. Clinical diagnosis: Right lower lobe lung cancer, stage IB (cT2aN0M0). A condition after the surgery (lobectomy in October 2018). Hyperplasia of the right supraclavicular lymph nodes since 2019. Suspected cancer progression since April 2024. Confirmation method: morphological (histology). Morphological type: squamous cell carcinoma. Recommendations: interval contrast-enhanced chest CT scan, follow-up visit with test results. Since the creatinine level is increasing, a general practitioner or nephrologist consultation is recommended at the local clinic. 
| |||

| Instrumental diagnostics | PET-CT scan with fluorine-18 fluorodeoxyglucose (18F-FDG) was performed on 28 June 2024. The examination was conducted from the frontal-parietal zone to the plantar surface. Physiological distribution of the radiopharmaceutical is noted in the brain, kidneys, partially along the ureters, bladder, and the intestinal loops. Head and neck. No foci of pathological accumulation of the radiopharmaceutical were detected. No pathological formations were identified in the brain tissue. The ventricles are not dilated; the median structures are not displaced. No pathological changes in the neck soft tissues were identified. The thyroid gland has a heterogeneous structure due to the presence of a hypodense lesion measuring 17 mm without pathological accumulation of the radiopharmaceutical in the right lobe. Neck and supraclavicular lymph nodes are not enlarged. Chest. No pathological increase in accumulation of radiopharmaceutical was noted in the organs and soft tissues of the chest. There are no focal and infiltrative changes in the pulmonary parenchyma. There is a condition after the right lower lobectomy; pathological formations and focal accumulation of the radiopharmaceutical in the stump area are not detected. Partial atelectasis of the right lung middle lobe without focal abnormal FDG uptake is noted. The lumens of the trachea and large bronchi are visible except for the right lower lobe. No fluid was detected in the pleural cavities. Intrathoracic lymph nodes are not enlarged, with no abnormal accumulation of the radiopharmaceutical. The heart and mediastinal vascular structures are unchanged. No effusion in the pericardial cavity was detected. Atherosclerotic changes in the walls of the thoracic aorta and coronary arteries are noted. Abdomen and pelvis. No pathological increase in radiopharmaceutical accumulation was noted in the organs and tissues of the abdominal cavity, retroperitoneal space, and pelvis. 
The stomach is insufficiently filled; no reliable pathological changes in its walls are observed. The liver is not enlarged and has a homogeneous structure. The parenchyma density is 45 Hounsfield units (HU). Intrahepatic and extrahepatic ducts and vessels are not dilated. The gallbladder is unchanged; no radio-opaque stones were detected. The pancreas is not enlarged, the structure shows age-related changes, the pancreatic duct is not dilated. The spleen is not enlarged; the structure is unchanged. The adrenal glands are not enlarged. The kidneys are typically located, and the perinephric tissues are indurated and stringy, which are post-inflammatory changes. The pyelocaliceal system and ureters are not dilated. No stones were found along the urinary tract. The urinary bladder is not full enough. No pathological formations or focal accumulation of the radiopharmaceutical are reliably detected along the rectum. Skeletal system and soft tissues. No pathological accumulation of radiopharmaceuticals was observed in the skeleton bones. No bone-destructive or bone-traumatic changes were detected on top of degenerative changes, most pronounced in the right shoulder joint. Fracture of the right seventh rib with the pseudoarthrosis (without any dynamics). Scar postoperative changes without hyperfixation of radiopharmaceuticals in the soft tissues of the lateral surface of the right chest wall at the level of the fifth intercostal space remain (decrease in the size of granulomas in dynamics). Edema of the subcutaneous tissue of the right shin is noted. Radiation dose: 47.50 mSv. | ||

| Laboratory tests | Laboratory test result of 26 September 2024: Hemoglobin A1c (glycated hemoglobin) 6.5% (normal range is 3.5–6%) | Laboratory test result of 30 January 2025: Hemoglobin A1c (glycated hemoglobin) 6.6% (normal range is 3.5–6%) | Laboratory test result of 12 September 2024: Urea 7.85 mmol/L (normal range is 2.8–7.2 mmol/L) |

| Discharge summary | DISCHARGE SUMMARY Date: 5 April 2025, 08:24 Length of stay: 1 day Admitting diagnosis: M54.1 Radiculopathy. Degenerative dystrophic disease of the lumbar spine. Spondyloarthrosis. Facet joint syndrome. Discharge diagnosis: M54.1 Radiculopathy. Degenerative dystrophic disease of the lumbar spine. Spondyloarthrosis. Facet joint syndrome. Complaints at admission: Pain in the lumbar spine. Restricted movements in the lumbar spine due to pain. History of present illness: According to the patient, the pain syndrome is long-lasting. The disease is constantly present. He is receiving a treatment. A clinical examination was performed. Examination results: Lumbosacral spine MRI—degenerative dystrophic changes in the spine. Admission status: Neurological status: Wakefulness level is clear consciousness. Orientation in space, time, and one’s own personality is preserved. Meningeal symptoms are absent. Pupils are symmetrical. Pupillary reaction to light is preserved. Visual fields are unchanged. The face is symmetrical. Speech is normal. There is no limb muscular paresis. There are no disturbances in sensitivity. The Romberg test is negative. There are no tension symptoms. The general condition is satisfactory. The skin is of normal color. Subcutaneous fat is moderately developed. Respiratory system: The breathing type is independent. The respiratory rate is 24 breaths/min. The breathing rhythm is regular. The breathing pattern is vesicular (normal). There is no wheezing. Cardiovascular system: Blood pressure is 120/80 mmHg, heart rate is 78 bpm. Gastrointestinal tract: The tongue is pink and moist, without plaque. The abdomen is not enlarged, rounded, symmetrical, participates in breathing. On palpation, the abdomen is soft and painless. There are no symptoms of peritoneal irritation. Genitourinary system: Urination is regular. 
Local status: There are restricted movements in the lumbar spine due to pain and tension of paraspinal muscles of the lumbar spine. The “arch” symptom is positive. Pain on palpation of the facet joints; the pain intensity is 7 points according to the visual analog pain scale. Treatment: Periarticular drug injection 4 April 2025. In the operating room, under aseptic conditions, an X-ray-guided (in direct and oblique projections) medial branch block test of the right L2–L5 facet joints was performed. Spinocan needle 22 G of 120 mm length was sequentially inserted to: 1. the transition point of the right L5 transverse process into the superior articular process; 2. the transition point of the right L4 transverse process into the superior articular process; 3. the transition point of the right L3 transverse process into the superior articular process; 4. the transition point of the right L2 transverse process into the superior articular process. 0.5 mL of ropivacaine 0.2% and 1 mg of dexamethasone were injected into each point. An aseptic bandage was applied. During the procedure, vital signs were stable and neurological status was unchanged. Conclusion: A follow-up consultation at the outpatient Pain Management Center, if necessary, not earlier than in 2 weeks. Recommendations: Annual checkup (preventive medical examination). | ||

| Good-quality summary | Complaints that prompted the abdominal CT scan: Diffuse severe abdominal pain, intensifying after the meal, without relief after defecation. Nausea, general weakness. There is no stool for two days. Disease history: Pain occurred a week before the examination; the patient associates it with eating fatty foods. He took ibuprofen 400–800 mg/day with a short-term effect. Relevant medical history: Right lower lobe lung cancer, stage IB, rT2aN0M0 according to the TNM classification. A condition after the surgery (October 2018). Hyperplasia of the right supraclavicular lymph nodes since May 2019. Suspected cancer progression (April 2024). Laboratory data (current, no more than six months before the study): none. Instrumental study data relevant to the abdominal CT scan: PET-CT scan of 28 June 2024. Conclusion: There is no evidence of the presence of increased metabolic activity foci typical for a neoplastic process with pathological accumulation of fluorine-18 fluorodeoxyglucose. Postoperative changes in the right anterior chest wall were noted. In comparison with the PET-CT scan of 19 September 2023, there are no structural dynamics, pathological foci with increased metabolism were not detected, and reliable data on the progression of the underlying disease were not obtained. | ||

| Moderate-quality summary | A 75-year-old patient complains of abdominal pain that intensifies after the meal. Nausea, general weakness, and the absence of stool for two days are noted. The pain occurred about a week ago after breaking the diet. The patient took ibuprofen with a temporary effect. Physician examination: pain all over the abdomen, especially in the epigastrium. He was referred for the abdominal CT scan urgently. From the medical history: right lung cancer; he underwent lobectomy; no relapse has been currently detected. Chronic pancreatitis, gastritis, bulbitis, and hyperplastic gastric polyp. Tubular adenoma and diverticular disease of the colon were also detected; fibrocolonoscopy and esophagogastroduodenoscopy were previously performed. There are concomitant diseases: arterial hypertension, ischemic heart disease, atrial fibrillation, chronic kidney disease, erythremia, and dyscirculatory encephalopathy. Decreased glucose tolerance is noted. The glycated hemoglobin level is 6.6%. He has had a coronavirus infection; he is currently under the observation of a pulmonologist. He suffers from dorsopathy and the lumbar pain syndrome. He underwent facet joint block injections. The complaints remain, the general condition is stable, the score on the Eastern Cooperative Oncology Group (ECOG) scale is 1 point (heavy work is limited, and light work is permissible). | ||

| Poor-quality summary | Complaints: The patient complains of the absence of stool for two days. Disease history: There is no edema. Breathing and lung characteristics are assessed as normal (vesicular breathing). Heart sounds are muffled, the rhythm is regular, and there is no heart murmur. The abdomen is symmetrical and slightly bloated. The painful area is localized in the epigastrium on superficial palpation. Pain is noted in all parts of the abdomen on deep palpation. Urination is normal. The patient’s medical history (concomitant diseases, bad habits, family history, and surgeries). Status: Restrictions of movement due to pain. Tension of the lumbar paraspinal muscles. Positive “arch” symptom and pain on palpation of the facet joints. Pain intensity assessment on a visual analog scale is 7 points. Laboratory data are not specified in the provided text. Instrumental examinations: The patient was referred for the abdominal CT scan. Results of the lumbosacral MRI scan: degenerative and dystrophic changes in the spine. | ||
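As an illustration only: if summaries of known quality, such as the good-, moderate-, and poor-quality examples above, are treated as three known groups, the Kruskal–Wallis rank test used for known-groups differentiation can be sketched in plain Python. The scores below are hypothetical and are not study data. The function names are assumptions introduced for this sketch, and the closed-form p-value is the chi-square approximation with two degrees of freedom, so it applies only to exactly three groups.

```python
import math
from collections import Counter


def kruskal_wallis_h(groups):
    """Return the tie-corrected Kruskal-Wallis H statistic for a list of samples."""
    pooled = sorted(value for group in groups for value in group)
    n = len(pooled)

    # Give each distinct value the mean of the ranks it occupies (mid-ranks).
    rank_of = {}
    start = 0
    while start < n:
        end = start
        while end < n and pooled[end] == pooled[start]:
            end += 1
        rank_of[pooled[start]] = (start + 1 + end) / 2
        start = end

    rank_sum_term = sum(
        sum(rank_of[value] for value in group) ** 2 / len(group)
        for group in groups
    )
    h = 12 / (n * (n + 1)) * rank_sum_term - 3 * (n + 1)

    # Tie correction matters for Likert-type data, where equal scores are common.
    tie_counts = Counter(pooled).values()
    correction = 1 - sum(t ** 3 - t for t in tie_counts) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    return h / correction


def p_value_three_groups(h):
    """Chi-square upper tail for 2 degrees of freedom (three groups only)."""
    return math.exp(-h / 2)


# Hypothetical total scores given by five raters to each summary type.
hypothetical_scores = {
    "poor": [6, 7, 8, 9, 7],
    "moderate": [15, 17, 18, 16, 19],
    "good": [27, 28, 30, 29, 26],
}

h_statistic = kruskal_wallis_h(list(hypothetical_scores.values()))
p_value = p_value_three_groups(h_statistic)
print(f"H = {h_statistic:.3f}, p = {p_value:.4f}, significant: {p_value < 0.05}")
```

With small samples the chi-square approximation is rough, so an exact or permutation-based p-value may be preferable in practice.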

Appendix B. ELEGANCE Questionnaire

1. To what extent is the LLM output aligned with the query?

| The result does not address the query; the information is useless. | |

| The result addresses the query in part, but key aspects are missing. | |

| The result corresponds to the query, though some details are absent. | |

| The result is close to the expectations; key aspects are taken into account, but there are minor flaws. | |

| The result perfectly meets the query; all key aspects are taken into account, and the information is useful and accurate. |

2. Does the LLM provide not only the explicitly requested data but also additional information relevant to completing the task?

| There is no additional information; the result is limited to only the explicitly requested data. | |

| There is some additional information, but not enough for the sake of completeness. | |

| The main additional information is taken into account, but there are some omissions. | |

| Most of the important additional information is included; the result is close to the expectations. | |

| All possible additional information that may be important is taken into account. |

3. To what extent does the LLM result help solve the task?

| The result is useless or not in alignment with the task. | |

| The result is not completely useless, but it does not allow solving the task. | |

| The result is generally useful, but there are some flaws. | |

| The result is close to optimal with minor flaws (e.g., redundant data). | |

| The result is ideal for solving the task. |

4. To what extent is the LLM-generated text clear, logical, and well structured?

| The text is unclear; there is no logic in the presentation. | |

| The text is partially understandable, but the logic and structure are weak. | |

| The text is generally clear, but there are some flaws in the logic or structure. | |

| The text is clear, logical, and structured; the flaws are minimal. | |

| The text is perfectly clear, logical, and structured. |

5. To what extent is the LLM-generated text linguistically and terminologically accurate?

| The text contains many errors in language and terminology, which makes it unusable. | |

| The text contains significant errors in language or terminology that make it difficult to understand. | |

| The text is mostly correct, but there are some errors or linguistic inaccuracies. | |

| The text is almost completely correct; errors are minimal and do not affect understanding. | |

| The text perfectly conforms to the norms of language and professional terminology; there are no errors. |

6. How satisfied are you with the result in terms of usefulness, clarity, and fulfillment of your expectations for LLM-based medical record summarization?

| The result does not meet expectations at all. | |

| The result partially meets expectations but has significant shortcomings. The information is only marginally useful; key aspects are missing. | |

| The result generally meets expectations, but there are some flaws. The information is useful but requires further improvement or clarification. | |

| The result is close to expectations; minor flaws do not affect the overall usefulness. | |

| The result fully meets expectations. |

7. Does the LLM response contain any information absent from the provided patient medical records?

- Yes

- No

References

1. Vasilev, Y.A.; Reshetnikov, R.V.; Nanova, O.G.; Vladzymyrskyy, A.V.; Arzamasov, K.M.; Omelyanskaya, O.V.; Kodenko, M.R.; Erizhokov, R.A.; Pamova, A.P.; Seradzhi, S.R.; et al. Application of large language models in radiological diagnostics: A scoping review. Digit. Diagn. 2025, 6, 268–285.

2. Bednarczyk, L.; Reichenpfader, D.; Gaudet-Blavignac, C.; Ette, A.K.; Zaghir, J.; Zheng, Y.; Bensahla, A.; Bjelogrlic, M.; Lovis, C. Scientific Evidence for Clinical Text Summarization Using Large Language Models: Scoping Review. J. Med. Internet Res. 2025, 27, e68998.

3. Vasilev, Y.A.; Kozhikhina, D.D.; Vladzymyrskyy, A.V.; Shumskaya, Y.F.; Mukhortova, A.N.; Blokhin, I.A.; Suchilova, M.M.; Reshetnikov, R.V. Results of the Work of the Reference Center for Diagnostic Radiology with Using Telemedicine Technology. Zdravoohran. Ross. Fed. 2024, 68, 102–108.

4. Van Veen, D.; Van Uden, C.; Blankemeier, L.; Delbrouck, J.-B.; Aali, A.; Bluethgen, C.; Pareek, A.; Polacin, M.; Reis, E.P.; Seehofnerová, A.; et al. Adapted Large Language Models Can Outperform Medical Experts in Clinical Text Summarization. Nat. Med. 2024, 30, 1134–1142.

5. Van Veen, D.; Van Uden, C.; Blankemeier, L.; Delbrouck, J.B.; Aali, A.; Bluethgen, C.; Pareek, A.; Polacin, M.; Reis, E.P.; Seehofnerová, A.; et al. Clinical Text Summarization: Adapting Large Language Models Can Outperform Human Experts. Res. Sq. 2023, rs.3.rs-3483777.

6. Tang, L.; Sun, Z.; Idnay, B.; Nestor, J.G.; Soroush, A.; Elias, P.A.; Xu, Z.; Ding, Y.; Durrett, G.; Rousseau, J.F.; et al. Evaluating Large Language Models on Medical Evidence Summarization. npj Digit. Med. 2023, 6, 158.

7. Tang, L.; Goyal, T.; Fabbri, A.; Laban, P.; Xu, J.; Yavuz, S.; Kryscinski, W.; Rousseau, J.; Durrett, G. Understanding Factual Errors in Summarization: Errors, Summarizers, Datasets, Error Detectors. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Long Papers. Association for Computational Linguistics: Toronto, ON, Canada, 2023; Volume 1, pp. 11626–11644.

8. Barbella, M.; Tortora, G. Rouge Metric Evaluation for Text Summarization Techniques. SSRN J. 2022.

9. Reiter, E. A Structured Review of the Validity of BLEU. Comput. Linguist. 2018, 44, 393–401.

10. Lavie, A.; Agarwal, A. Meteor: An Automatic Metric for MT Evaluation with High Levels of Correlation with Human Judgments. In Proceedings of the Second Workshop on Statistical Machine Translation, Prague, Czech Republic, 23 June 2007; pp. 228–231.

11. Datta, G.; Joshi, N.; Gupta, K. Analysis of Automatic Evaluation Metric on Low-Resourced Language: BERTScore vs BLEU Score. In Speech and Computer; Prasanna, S.R.M., Karpov, A., Samudravijaya, K., Agrawal, S.S., Eds.; Springer International Publishing: Cham, Switzerland, 2022; Volume 13721, pp. 155–162. ISBN 9783031209796.

12. Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; et al. Publisher Correction: Large Language Models Encode Clinical Knowledge. Nature 2023, 620, E19.

13. Sivarajkumar, S.; Kelley, M.; Samolyk-Mazzanti, A.; Visweswaran, S.; Wang, Y. An Empirical Evaluation of Prompting Strategies for Large Language Models in Zero-Shot Clinical Natural Language Processing: Algorithm Development and Validation Study. JMIR Med. Inform. 2024, 12, e55318.

14. Chiang, C.-H.; Lee, H. Can Large Language Models Be an Alternative to Human Evaluations? In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Long Papers. Association for Computational Linguistics: Toronto, ON, Canada, 2023; Volume 1, pp. 15607–15631.

15. Song, H.; Su, H.; Shalyminov, I.; Cai, J.; Mansour, S. FineSurE: Fine-Grained Summarization Evaluation Using LLMs. arXiv 2024, arXiv:2407.00908.

16. Tam, T.Y.C.; Sivarajkumar, S.; Kapoor, S.; Stolyar, A.V.; Polanska, K.; McCarthy, K.R.; Osterhoudt, H.; Wu, X.; Visweswaran, S.; Fu, S.; et al. A Framework for Human Evaluation of Large Language Models in Healthcare Derived from Literature Review. npj Digit. Med. 2024, 7, 258.

17. Vaira, L.A.; Lechien, J.R.; Abbate, V.; Allevi, F.; Audino, G.; Beltramini, G.A.; Bergonzani, M.; Boscolo-Rizzo, P.; Califano, G.; Cammaroto, G.; et al. Validation of the Quality Analysis of Medical Artificial Intelligence (QAMAI) Tool: A New Tool to Assess the Quality of Health Information Provided by AI Platforms. Eur. Arch. Otorhinolaryngol. 2024, 281, 6123–6131.

18. Okuhara, T.; Ishikawa, H.; Ueno, H.; Okada, H.; Kato, M.; Kiuchi, T. Influence of high versus low readability level of written health information on self-efficacy: A randomized controlled study of the processing fluency effect. Health Psychol. Open 2020, 7, 2055102920905627.

19. Ebbers, T.; Kool, R.B.; Smeele, L.E.; Dirven, R.; den Besten, C.A.; Karssemakers, L.H.E.; Verhoeven, T.; Herruer, J.M.; van den Broek, G.B.; Takes, R.P. The Impact of Structured and Standardized Documentation on Documentation Quality; a Multicenter, Retrospective Study. J. Med. Syst. 2022, 46, 46.

20. Appelman, A.; Schmierbach, M. Make No Mistake? Exploring Cognitive and Perceptual Effects of Grammatical Errors in News Articles. Journal. Mass Commun. Q. 2018, 95, 930–947.

21. Lozano, L.M.; García-Cueto, E.; Muñiz, J. Effect of the Number of Response Categories on the Reliability and Validity of Rating Scales. Methodology 2008, 4, 73–79.

22. Koo, M.; Yang, S.-W. Likert-Type Scale. Encyclopedia 2025, 5, 18.

23. Soper, D.S. A-Priori Sample Size Calculator for Structural Equation Models [Software]. 2025. Available online: https://www.danielsoper.com/statcalc (accessed on 1 September 2025).

24. Comrey, A.L.; Lee, H.B. A First Course in Factor Analysis, 2nd ed.; Psychology Press: London, UK, 1992.

25. Vasilev, Y.; Vladzymyrskyy, A.; Mnatsakanyan, M.; Omelyanskaya, O.; Reshetnikov, R.; Alymova, Y.; Shumskaya, Y.; Akhmedzyanova, D. Questionnaires Validation Methodology; State Budget-Funded Health Care Institution of the City of Moscow “Research and Practical Clinical Center for Diagnostics and Telemedicine Technologies of the Moscow Health Care Department”: Moscow, Russia, 2024; Volume 133.

26. Brown, T.A. Confirmatory Factor Analysis for Applied Research. In Methodology in the Social Sciences, 2nd ed.; The Guilford Press: New York, NY, USA; London, UK, 2015; ISBN 9781462517794.

27. Shou, Y.; Sellbom, M.; Chen, H.-F. Fundamentals of Measurement in Clinical Psychology. In Comprehensive Clinical Psychology; Elsevier: Amsterdam, The Netherlands, 2022; pp. 13–35. ISBN 9780128222324.

28. Lechien, J.R.; Maniaci, A.; Gengler, I.; Hans, S.; Chiesa-Estomba, C.M.; Vaira, L.A. Validity and Reliability of an Instrument Evaluating the Performance of Intelligent Chatbot: The Artificial Intelligence Performance Instrument (AIPI). Eur. Arch. Otorhinolaryngol. 2024, 281, 2063–2079.

29. Sallam, M.; Barakat, M.; Sallam, M. Pilot Testing of a Tool to Standardize the Assessment of the Quality of Health Information Generated by Artificial Intelligence-Based Models. Cureus 2023, 15, e49373.

30. Vasilev, Y.A.; Vladzymyrskyy, A.V.; Alymova, Y.A.; Akhmedzyanova, D.A.; Blokhin, I.A.; Romanenko, M.O.; Seradzhi, S.R.; Suchilova, M.M.; Shumskaya, Y.F.; Reshetnikov, R.V. Development and Validation of a Questionnaire to Assess the Radiologists’ Views on the Implementation of Artificial Intelligence in Radiology (ATRAI-14). Healthcare 2024, 12, 2011.

| Dimension | Method | Thresholds |
|---|---|---|
| Internal consistency | Cronbach’s alpha | ≤0.5: unacceptable; >0.5: poor; >0.6: questionable; >0.7: acceptable; >0.8: good; >0.9: excellent [25] |
| Construct validity | Confirmatory factor analysis | Comparative Fit Index (CFI) ≥ 0.9; Root Mean Square Error of Approximation (RMSEA) < 0.08; Standardized Root Mean Squared Residual (SRMR) < 0.08; Tucker–Lewis Index (TLI) ≥ 0.9 [26] |
| Differentiation by known groups | Univariate nonparametric analysis of variance (Kruskal–Wallis rank test) | p-value < 0.05 |
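The internal-consistency and model-fit thresholds in the table above can be applied programmatically. The following is a minimal sketch under stated assumptions, not the authors' analysis code: the function names (`cronbach_alpha`, `interpret_alpha`, `fit_is_acceptable`) are hypothetical, Cronbach's alpha is computed with the standard formula using sample variances, and the fit indices are assumed to come from whatever CFA software is used.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents' item-score lists.

    Each inner list holds one respondent's scores; all lists must have
    the same length (the number of items, k).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_vars = [variance([r[i] for r in rows]) for i in range(k)]
    # Sample variance of the per-respondent total score.
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def interpret_alpha(alpha):
    """Map alpha to the verbal labels listed in the thresholds table."""
    if alpha > 0.9:
        return "excellent"
    if alpha > 0.8:
        return "good"
    if alpha > 0.7:
        return "acceptable"
    if alpha > 0.6:
        return "questionable"
    if alpha > 0.5:
        return "poor"
    return "unacceptable"


def fit_is_acceptable(cfi, tli, rmsea, srmr):
    """Check the four CFA fit criteria from the thresholds table."""
    return cfi >= 0.9 and tli >= 0.9 and rmsea < 0.08 and srmr < 0.08
```

For example, `interpret_alpha(cronbach_alpha(scores))` returns the label for a hypothetical `scores` matrix, and `fit_is_acceptable` returns `False` as soon as any single index misses its cut-off.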

| Dimension | Question | Evaluation System |
|---|---|---|
| Relevance | To what extent does the LLM output align with the query? | 5-point Likert scale |
| Completeness | Does the LLM provide not only the explicitly requested data but also additional information relevant to completing the task? | 5-point Likert scale |
| Applicability | To what extent does the LLM result help solve the task? | 5-point Likert scale |
| Falsification | Does the LLM response contain any information absent from the provided patient medical records? | Binary yes/no answer |
| Satisfaction | How satisfied are you with the result in terms of usefulness, clarity, and fulfillment of your expectations for LLM-based medical record summarization? | 5-point Likert scale |
| Structure | To what extent is the LLM-generated text clear, logical, and well structured? | 5-point Likert scale |
| Correctness of language and terminology | To what extent is the LLM-generated text linguistically and terminologically accurate? | 5-point Likert scale |
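The answer formats in the table above (six 5-point Likert items plus one binary item) could be captured in a small validated record type. This is an illustrative sketch only: the class name `EleganceResponse`, the field names, and the assumption that Likert answers are coded as integers 1 to 5 are hypothetical and are not taken from the questionnaire itself.

```python
from dataclasses import dataclass

# Dimensions rated on a 5-point Likert scale (field names are hypothetical).
LIKERT_FIELDS = (
    "relevance",
    "completeness",
    "applicability",
    "satisfaction",
    "structure",
    "language_correctness",
)


@dataclass(frozen=True)
class EleganceResponse:
    """One rater's answers for one LLM-generated summary."""

    relevance: int
    completeness: int
    applicability: int
    satisfaction: int
    structure: int
    language_correctness: int
    falsification: bool  # True = summary contains information absent from the records

    def __post_init__(self):
        for name in LIKERT_FIELDS:
            value = getattr(self, name)
            # bool is a subclass of int, so reject it explicitly.
            if isinstance(value, bool) or not isinstance(value, int):
                raise ValueError(f"{name} must be an integer")
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5")
        if not isinstance(self.falsification, bool):
            raise ValueError("falsification must be True or False")
```

Rejecting out-of-range values at construction time keeps malformed answers out of any later reliability or factor analysis.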

| Factor | Dimension | Standardized Factor Loadings | p-Value |
|---|---|---|---|
| F1 | Relevance | 0.95 | <0.001 |
| F1 | Completeness | 0.86 | <0.001 |
| F1 | Applicability | 0.96 | <0.001 |
| F1 | Falsification | 0.45 | <0.001 |
| F1 | Satisfaction | 0.97 | <0.001 |
| F2 | Structure | 0.90 | <0.001 |
| F2 | Grammatical correctness | 0.79 | <0.001 |
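One way to read the loadings in the table above: in a standardized solution where each indicator loads on a single factor, the squared loading is the share of that indicator's variance explained by its factor. The short sketch below applies that arithmetic to the reported values. It is an illustration, not part of the authors' analysis, and it assumes a model without cross-loadings; the variable names are hypothetical.

```python
# Standardized factor loadings copied from the table above.
loadings = {
    "Relevance": 0.95,
    "Completeness": 0.86,
    "Applicability": 0.96,
    "Falsification": 0.45,
    "Satisfaction": 0.97,
    "Structure": 0.90,
    "Grammatical correctness": 0.79,
}

# Squared loading = proportion of indicator variance explained by its factor
# (assumes each indicator loads on exactly one factor).
explained = {name: value ** 2 for name, value in loadings.items()}

# Identify the indicator least well explained by its factor.
weakest = min(explained, key=explained.get)

for name, share in explained.items():
    print(f"{name}: {share:.2f}")
print(f"Weakest indicator: {weakest}")
```

Under this reading, Falsification shares roughly 20% of its variance with F1 (0.45 squared), which is consistent with it having the lowest loading in the table.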

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vasilev, Y.A.; Vladzymyrskyy, A.V.; Omelyanskaya, O.V.; Alymova, Y.A.; Akhmedzyanova, D.A.; Shumskaya, Y.F.; Kodenko, M.R.; Blokhin, I.A.; Reshetnikov, R.V. Development and Validation of a Questionnaire to Evaluate AI-Generated Summaries for Radiologists: ELEGANCE (Expert-Led Evaluation of Generative AI Competence and ExcelleNCE). AI 2025, 6, 287. https://doi.org/10.3390/ai6110287
