1. Introduction

The rapid development of Mobile Financial Services (MFSs) has changed how consumers access finance in both developed and emerging economies by enabling low-cost, real-time transactions. In emerging markets, many individuals and small businesses access their first formal financial services through MFSs, which shape their future financial practices and advance financial inclusion [1]. Nonetheless, rapid growth has also increased exposure to fraud, cyberattacks, and privacy violations. Identity theft, phishing, and unauthorized account access remain major challenges, especially in jurisdictions with weak regulatory protections, raising serious concerns about sustaining user trust [2]. Rule-based fraud detection models, used for many years in the banking industry, are becoming less effective at identifying emerging threats because they are generally reactive and poorly positioned to identify sophisticated, adaptive attacks [3]. AI-enabled cybersecurity products offer more responsive and predictive features, including real-time anomaly detection, adaptive fraud prevention, and personalized security. However, a range of concerns has been raised about AI, particularly around explainability, accountability, and data governance. Users are hesitant to rely on AI-enabled “black box” systems that are difficult to interpret and offer little transparency about their decision logic. These concerns point to an emerging tension between the technical effectiveness of AI-based systems and the social, ethical, and cultural contexts in which they operate [4].

Research has shown that AI can improve fraud detection, transaction monitoring, and risk management in the financial services industry. For instance, machine learning models have been found to lower false-positive rates in anomaly detection compared with earlier approaches [5], and advances in natural language processing and federated learning offer further potential for data protection [6]. However, much of this work focuses on developed financial systems. Our understanding of AI-based fraud detection and privacy protection tools in emerging markets remains limited, even though user perceptions of these tools, levels of digital literacy, cultural attitudes toward privacy, and fragmented regulatory structures may considerably affect outcomes [1,2].

Trust is integral to these considerations. The Technology Acceptance Model (TAM), the Unified Theory of Acceptance and Use of Technology (UTAUT), and the Privacy Calculus framework provide useful lenses for analyzing adoption; they consistently identify trust, perceived usefulness, and perceived risk as the three most salient factors in decisions to adopt new financial technologies [7]. It follows that, in MFSs, the likelihood of adopting AI-enabled tools depends on whether users are confident that the tools are useful and transparent. Adoption is unlikely if sufficient explanation and data safeguards are not made available. In other words, however technically superior the technology may be, technical performance alone cannot establish trust [4]. Moreover, any advances in financial inclusion will be precarious without an adequate level of trust and privacy protection [4,8].

The industry context supports these findings. While previous investigations identified no companies focused exclusively on AI-enabled fraud detection, several firms (Feedzai, Featurespace, and SAS) have developed sophisticated systems that are widely used in financial services [5]. These platforms show that AI-based fraud prevention at scale is viable across many financial services companies. However, use within emerging-market MFS environments is uneven. Infrastructure constraints, laxer data protection regimes, and cultural differences in concepts of privacy all complicate the transferability of these systems [2]. Further, although technical solutions exist, their success depends on addressing users’ beliefs about fairness, transparency, and accountability in how those solutions are implemented.

Although interest from academia and industry is increasing, a significant research gap remains. Studies of AI in financial services frequently report technical performance metrics while overlooking the intentions of end-users, especially in under-resourced markets. Similarly, research on financial inclusion examines access and affordability but neglects the security and privacy foundations necessary for sustained adoption [1,9]. Only a handful of studies systematically combine these perspectives to examine how AI-based cybersecurity can support fraud detection and address privacy concerns as a way to build trust in emerging markets.

The present study aims to fill this gap by investigating users’ perspectives on the use of AI in cybersecurity for mobile financial services (MFSs). The study considers three interconnected questions: how users view the role of AI in fraud detection; which privacy and transparency concerns influence users’ uptake of AI; and how users’ trust in mobile financial services varies with cultural and regulatory contexts. We combine a quantitative survey of user perspectives with qualitative analysis of open-ended responses to create an integrated picture of the opportunities and challenges presented by AI-enabled MFS platforms. Our contribution has two parts: first, we provide empirical evidence on user concerns and the dynamics that support usage in a developing-market setting; and second, we contribute to theory by connecting the Technology Acceptance Model and the Privacy Calculus framework to understand how trust and risk are perceived when using AI-enabled tools.

The rest of this article is organized as follows. The next section reviews the literature relating to AI in mobile financial services, digital inclusion, cybersecurity, and trust. Then, we consider the methodology used to collect and analyze the data, including research design, sampling strategy, and methods of analysis. The results section contains the quantitative findings from the survey and the qualitative results, before the discussion contextualizes these results in terms of relevant theory and practice. The article will conclude with a review of contributions, implications for banks, fintech developers, and policymakers, and suggestions for future research.

2. Literature Review

2.1. AI in Cybersecurity: Fraud Detection Techniques

Artificial Intelligence (AI) plays a fundamental role in modern fraud detection, and Mobile Financial Services (MFSs) are no exception. Traditional machine learning techniques, such as Random Forests, Support Vector Machines (SVMs), and Gradient Boosted Decision Trees (GBDTs), have shown strong performance in detecting anomalous transactions in highly imbalanced datasets [5]. For instance, GBDT models applied to real-world MFS transaction logs have achieved fraud detection precision rates above 90% [5]. More recently, part of the literature has combined ensemble methods such as XGBoost and LightGBM with Explainable AI (XAI) techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations). These approaches report very high predictive accuracy (e.g., AUC > 0.99) while providing the interpretability needed to address regulatory and practitioner concerns [1,10].

Another noteworthy prospect is Federated Learning (FL), which enables financial institutions to collaboratively train AI models for fraud detection without directly sharing sensitive customer data. This decentralized approach can enhance both fraud detection performance and privacy [6]. Federated Learning could also be used in conjunction with XAI methods, so that institutions can interpret and audit their fraud detection decisions across federated and distributed environments [3,6]. Combining FL with XAI will be critical for ensuring transparency and accountability in real-world applications.
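To make the aggregation idea behind Federated Learning concrete, the following is a minimal sketch of FedAvg-style weighted averaging, assuming each institution trains locally and shares only its model parameters and local sample count. The function name `federated_average`, the two example banks, and all numbers are hypothetical illustrations and are not taken from the studies cited above.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client model parameters, weighted by local sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client model")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    aggregated = [0.0] * dim
    for weights, n_samples in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all client models must have the same shape")
        for j, w in enumerate(weights):
            # Each client contributes in proportion to its share of the data.
            aggregated[j] += w * n_samples / total
    return aggregated


# Hypothetical example: two banks with locally trained two-parameter models.
bank_a = [0.2, -1.0]   # trained on 100 local transactions (assumed)
bank_b = [0.6, 1.0]    # trained on 300 local transactions (assumed)
global_model = federated_average([bank_a, bank_b], [100, 300])
print(global_model)    # approximately [0.5, 0.5]
```

In this toy case the larger client dominates the average, and no raw transaction records leave either institution; only parameters and counts are exchanged.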

A key limitation of this body of research is that, despite considerable technical advances in AI for finance, many papers ignore socio-technical considerations such as explainability, regulation, and user trust, all of which affect adoption in emerging markets [5].

2.2. Mobile Financial Services Security: Challenges and Responses

MFS platforms in emerging-market contexts are subject to specific endemic threats, including SIM-swap attacks, phishing, malware, and social engineering scams [11]. Traditional defences, such as encryption, PIN codes, and static rule-based monitoring, fall short against adaptive adversaries [5,11]. They are also poorly suited to resource-constrained settings in which fraudsters exploit users’ limited digital literacy.

AI-based anomaly detection approaches, which have emerged only recently, can monitor systems dynamically and in real time, unlike static rule-based systems [12]. These can be complemented by agent governance measures, customer awareness and education, and know-your-customer (KYC) procedures. However, implementing such systemic measures in MFSs, especially in emerging markets, is hindered by inadequate infrastructure, gaps in suitable regulation, and weak regulatory enforcement [11].

Synthetic data generation tools, such as PaySim and RetSim, have been employed to model realistic MFS fraud scenarios, enabling simulation-based research where little real data is available [12]. While these simulation tools mitigate data scarcity, researchers need to consider how well they transfer to highly varied cultural and regulatory settings.

2.3. Privacy in Emerging Markets: Cultural and Regulatory Dimensions

Privacy concerns are significantly more pronounced in emerging markets than in other economies, primarily as a result of lax regulation and low levels of digital literacy [13]. Several studies note that users tend to distrust platforms they perceive as opaque, especially when their personal data is handled by third parties such as agents [11]. Research in Sub-Saharan Africa has confirmed that agent-assisted registration channels are especially vulnerable to abuses of KYC practices, which can result in unauthorized disclosure or use of data and further expose users to fraud [11].

To reduce these risks, researchers have suggested privacy-preserving protocols, including secure multiparty computation, differential privacy, and KYC verification systems based on FL [6,14]. These strategies aim to balance efficiency with privacy preservation in order to restore trust. However, empirical applications of these solutions in real-world MFS contexts remain scarce.
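As one illustration of the efficiency and privacy balance described above, the sketch below applies the Laplace mechanism from differential privacy to a simple count query. It is a minimal example under stated assumptions: the function names `laplace_noise` and `private_count`, the count of 120 flagged accounts, and the `epsilon` value are all hypothetical, and a production system would need careful privacy budgeting that is not shown here.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise.

    The difference of two independent exponential variables with
    mean `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: float, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # Smaller epsilon means stronger privacy and therefore more noise.
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical example: an operator reports how many accounts were flagged.
rng = random.Random(42)
released = private_count(true_count=120, epsilon=0.5, rng=rng)
print(round(released, 2))
```

The `sensitivity` default of 1 reflects the assumption that adding or removing one user changes the count by at most one; lowering `epsilon` strengthens the privacy guarantee at the cost of a noisier released value.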

2.4. Explainable Artificial Intelligence (XAI): Types and Relevance

A significant concern regarding AI-assisted fraud detection is the “black box” nature of deep learning models. XAI provides approaches for explaining AI decisions, which is critical for user trust and regulatory compliance [2]. The main types are as follows:

Feature Attribution Methods (e.g., SHAP, LIME): Show which input variables have the strongest influence on a decision [15].

Surrogate Models (e.g., decision trees approximating neural networks): Provide a simpler, interpretable model that mimics the original decision-making process.

Visualization Techniques (e.g., heatmaps, saliency maps): Assist regulators and analysts in tracking down specific anomalies within financial datasets.

Rule-based XAI (e.g., Anchors, counterfactual explanations): Provide human-readable rules explaining why a transaction was identified as at risk.

At present, feature attribution methods dominate the research on MFS fraud detection, but they are limited in explaining temporal dependencies in sequential data. Surrogate models, on the other hand, often sacrifice accuracy for interpretability. A balanced integration of different XAI methods is therefore warranted to obtain trustworthy fraud indicators in financial systems [3].
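To illustrate what a feature attribution method computes, the sketch below evaluates exact Shapley values for a toy, hand-written linear risk score. This is not the SHAP library, which relies on approximations to scale to larger models; the function `shapley_values`, the feature names, the weights in `risk_score`, and the all-zero baseline are hypothetical choices made only for illustration.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict

Features = Dict[str, float]


def shapley_values(x: Features, baseline: Features,
                   score: Callable[[Features], float]) -> Dict[str, float]:
    """Exact Shapley attribution of score(x) - score(baseline) to each feature."""
    names = list(x)
    n = len(names)
    phi = {name: 0.0 for name in names}
    for name in names:
        others = [f for f in names if f != name]
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                present = set(coalition)
                # Features outside the coalition are held at their baseline value.
                without = {f: (x[f] if f in present else baseline[f]) for f in names}
                with_f = dict(without)
                with_f[name] = x[name]
                phi[name] += weight * (score(with_f) - score(without))
    return phi


def risk_score(t: Features) -> float:
    """Hypothetical linear fraud-risk score (weights are assumptions)."""
    return 0.5 * t["amount_z"] + 2.0 * t["new_device"] + 1.0 * t["night"]


flagged = {"amount_z": 3.0, "new_device": 1.0, "night": 0.0}
typical = {"amount_z": 0.0, "new_device": 0.0, "night": 0.0}
phi = shapley_values(flagged, typical, risk_score)
print(phi)  # new_device contributes about 2.0, amount_z about 1.5, night 0.0
```

The attributions sum to the gap between the flagged transaction's score and the baseline score, which is the property that lets an analyst tell a user which inputs drove an alert. Exact enumeration grows exponentially with the number of features, so it is only practical for small examples like this one.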

2.5. Theoretical Foundations: TAM, Privacy Calculus, and UTAUT

The Technology Acceptance Model (TAM) [16], Privacy Calculus Theory [17], and the UTAUT [7] are complementary frameworks for understanding user adoption of AI in MFSs. Whereas TAM focuses on perceived usefulness and ease of use, Privacy Calculus considers the trade-off between perceived risks and benefits [16,17], and UTAUT adds social influence, facilitating conditions, and demographic moderators.

The models examined above are useful on their own, but none fully captures the socio-cultural and ethical considerations of adopting AI in emerging markets. Notably, TAM and Privacy Calculus underestimate the importance of cultural norms and institutional trust, while UTAUT does not adequately address algorithmic fairness or transparency [4]. This study therefore employs a hybrid framework that integrates trust, explainability, and institutional legitimacy with the three dominant models.

2.6. Research Gap

The literature review highlighted three main gaps:

Lack of user perspectives in emerging markets: The fraud detection literature predominantly values algorithmic accuracy over user adoption or user perspectives. Existing studies rarely consider issues such as digital literacy or culturally shaped trust that affect adoption [13].

Limited application of integrated theoretical models: Few empirical studies employ a combined TAM–Privacy Calculus–UTAUT model to investigate people’s adoption of AI in MFSs [7].

Insufficient attention to explainability and ethical issues: Most research on AI-driven fraud detection has not examined how XAI, transparency, and ethical design could engender user trust in poorly regulated markets [4].

This study addresses these gaps through a mixed-methods design that examines users’ perceptions of AI-driven fraud detection, privacy, and institutional trust using a combined TAM–Privacy Calculus–UTAUT theoretical model.

2.7. Research Contribution

This research contributes in three major ways. First, it provides empirical data from emerging markets on users’ perceptions of AI-based fraud detection, privacy, and transparency. Second, it advances theory by operationalizing a hybrid TAM–Privacy Calculus–UTAUT framework for future use. Third, it offers practical implications, underlining the importance of transparency through XAI, culturally sensitive educational programmes that address digital inequality, and policy frameworks that strengthen institutional legitimacy. Taken together, these contributions connect technological innovation with user-centred adoption in digital finance.

An overview of representative studies on AI-driven fraud detection approaches, their methods, and limitations is provided in Table 1, which synthesizes the key findings from prior work.

3. Methodology

3.1. Research Design

The study employed a convergent parallel mixed-methods design, combining quantitative survey data with qualitative open-ended responses. Each response proceeded in two parts, beginning with the quantitative survey items and ending with the qualitative open-ended prompts. Both sets of data were thus collected at the same time, analyzed separately, and integrated during interpretation. This design increases internal validity and provides complementary perspectives on the socio-technical issues of user trust, privacy, and AI uptake in mobile financial services (MFSs). Mixed methods are well suited to FinTech and social finance contexts that require both statistical generalizability and contextual detail [7].

3.2. Participants and Sampling

A total of 151 respondents were recruited from three emerging economies: Kenya, Nigeria, and Bangladesh. To be eligible, respondents had to (i) be 18 or older, (ii) use mobile financial services, and (iii) live in an emerging economy. Participants were recruited through targeted advertisements on social media, WhatsApp and Facebook fintech communities, and professional networks. A purposive, stratified convenience sampling design ensured some diversity in age, gender, education level, place of residence (rural/urban), and digital literacy. The non-probabilistic sampling design limits the statistical generalizability of the findings but ensures that relevant features of the participant group are represented. Thematic coding was conducted iteratively to build reliability, and the thematic analysis indicated that data saturation was reached in the qualitative responses [18].

3.3. Instruments and Measures

The survey instrument was created by modifying validated scales from the Technology Acceptance Model (TAM), Unified Theory of Acceptance and Use of Technology (UTAUT), and Privacy Calculus Theory.

Quantitative items measured perceived usefulness, perceived ease of use, trust in AI security, perceived privacy risk, adoption intention, and facilitating conditions. Participants responded on a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree).

Qualitative prompts explored experiences of fraud, concerns regarding privacy, perceptions of AI transparency, and trust in authorities.

The pilot (n = 15) confirmed the clarity of the survey wording, the average completion time (<10 min), and the suitability of the survey for respondents’ digital literacy. The complete survey items can be found in Appendix A.

3.4. Data Collection Procedures

Data collection was conducted between March and June 2025 via Google Forms and Qualtrics. Participation was voluntary and anonymous; small incentives (mobile credit) were offered in some cases where ethically permissible. The completion rate was comparable to prior fintech adoption surveys [19].

3.5. Data Analysis

3.5.1. Quantitative Analysis

Reliability: Cronbach’s alpha values exceeded 0.70 for all constructs (trust = 0.81; perceived usefulness = 0.79; privacy risk = 0.75; adoption intention = 0.83).

Construct validity: Confirmatory factor analysis (CFA) showed an adequate fit with all factor loadings >0.60.

Structural analysis: We used Partial Least Squares Structural Equation Modelling (PLS-SEM) to analyze the model. Model fit was assessed by SRMR < 0.08, R² values > 0.50 for critical constructs, and positive Q² predictive relevance. The hypotheses were tested using the estimated path coefficients (e.g., AI trust to adoption, β = 0.63, p < 0.01).

Tables summarizing Cronbach’s alpha, CFA loadings, and SEM results are provided in Appendix B.
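For readers less familiar with the reliability statistic reported above, the following is a minimal sketch of how Cronbach’s alpha is computed from item-level responses, using alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores). The function name `cronbach_alpha` and the three-item, five-respondent Likert data are invented for illustration and are not the study’s data.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a construct.

    `items[i][r]` is respondent r's score on item i; every item must
    cover the same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("all items need the same number of respondents")
    totals = [sum(item[r] for item in items) for r in range(n)]
    total_var = variance(totals)          # sample variance of summed scores
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 5-point Likert responses: 3 trust items, 5 respondents.
trust_items = [
    [4, 5, 3, 2, 4],
    [4, 4, 3, 2, 5],
    [5, 5, 2, 3, 4],
]
alpha = cronbach_alpha(trust_items)
print(round(alpha, 3))  # 0.886 for this toy data
```

Values above the conventional 0.70 threshold, as reported for all constructs in this study, indicate that the items of a construct vary together closely enough to be treated as one scale.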

3.5.2. Qualitative Analysis

Open-ended responses were analyzed in NVivo using Braun and Clarke’s six-phase thematic analysis [20]. Two coders independently coded the responses, achieving an interrater reliability of κ = 0.77. Emergent themes included algorithmic opacity, fear of misuse of personal data, cultural expectations of institutional trust, and calls for regulatory transparency.
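The interrater statistic above can be read as agreement corrected for chance. Assuming the reported κ is Cohen’s kappa for two coders, a minimal sketch of the calculation is shown below; the function name `cohens_kappa` and the ten coded excerpts are hypothetical and do not reproduce the study’s coding.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: (observed - expected agreement) / (1 - expected)."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of excerpts")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    labels = set(counts_a) | set(counts_b)
    # Chance agreement if each coder labelled at random with their own label rates.
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if expected == 1:
        return 1.0  # both coders used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical theme codes assigned to ten excerpts by two coders.
coder_a = ["opacity", "privacy", "privacy", "trust", "opacity",
           "trust", "privacy", "opacity", "trust", "privacy"]
coder_b = ["opacity", "privacy", "trust", "trust", "opacity",
           "trust", "privacy", "privacy", "trust", "privacy"]
kappa = cohens_kappa(coder_a, coder_b)
print(round(kappa, 3))  # 0.697 for this toy data
```

In this toy example the coders agree on 8 of 10 excerpts (80%), but kappa is lower because some of that agreement would be expected by chance alone.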

3.5.3. Integration of Findings

Findings were integrated using a joint display matrix, which aligned qualitative themes with quantitative results. For example, while regression analysis indicated that transparency was a strong predictor of adoption, qualitative responses revealed that users frequently equated “explainability” with “fairness.” This reflects cultural interpretations of trust within the implementation context.

3.6. Supplementary Mini-Systematic Review

To strengthen methodological rigour, a mini-systematic review of AI-based fraud detection studies (2019–2024) was conducted within the IEEE Xplore, Springer, Elsevier, and MDPI databases, following established systematic review guidelines [20]. Fifteen peer-reviewed articles were reviewed and synthesized in Table A2 (Appendix C), specifically in terms of (1) fraud detection accuracy, (2) XAI methods, and (3) privacy-preserving aspects. This triangulation addresses the limitation that technical model performance cannot be assessed from user survey data alone.

3.7. Ethical Considerations

This study adhered to the Declaration of Helsinki and MDPI ethical standards. No sensitive personal data were collected, and participation was voluntary and anonymized; therefore, formal ethical approval was not needed under the UK Policy Framework for Health and Social Care Research (Section 2.3.15). Informed consent to participate was provided electronically. Data were stored on securely encrypted platforms that could only be accessed by the principal investigator. The survey was shortened, translated, and included explanations of technical terms for low-literate respondents.

3.8. Trustworthiness and Limitations

Several strategies enhanced validity:

Data triangulation (quantitative + qualitative + mini-systematic review).

Peer debriefing to minimize researcher bias.

Member checks with a subset of respondents to verify the accuracy of the qualitative interpretations.

Low multicollinearity confirmed with VIF < 2.5.

Limitations include (i) non-random sampling, limiting generalizability; (ii) cross-sectional design, preventing causal inference; (iii) self-report bias, especially in sensitive domains like fraud; and (iv) possible comprehension barriers despite translation and simplification. Nonetheless, the combination of survey, qualitative insights, and systematic review increases the robustness of findings.

4. Results

This section presents descriptive findings (with four merged chart panels), followed by measurement reliability checks, structural equation modelling (SEM) outcomes, subgroup analyses, qualitative themes, and comparative reflections with prior research.

4.1. Descriptive Statistics

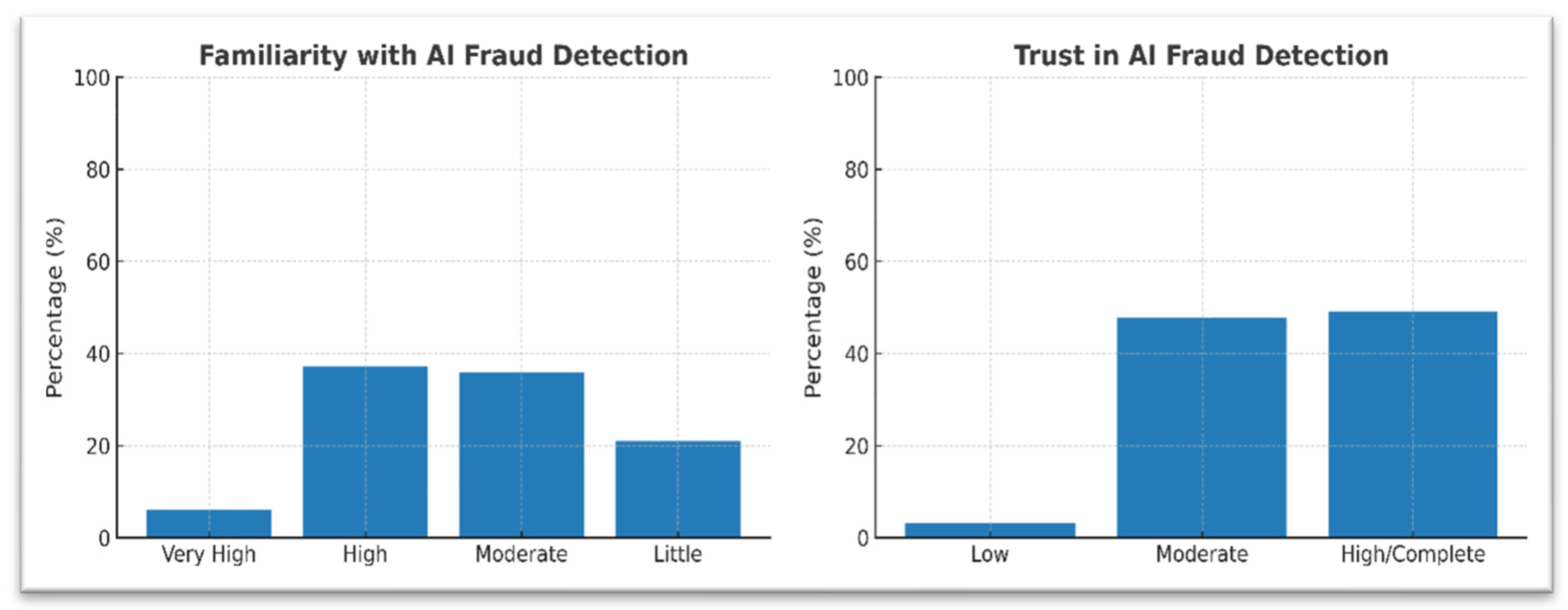

4.1.1. Familiarity and Trust in AI Fraud Detection (Figure 1)

As shown in Figure 1, respondents displayed varied levels of familiarity with and trust in AI-based fraud detection systems. Baseline awareness was encouraging: 37.1% of respondents reported high awareness of AI-based fraud detection, 35.8% moderate awareness, 21.0% limited awareness, and only 6.0% very high awareness. No respondent reported having no awareness at all.

Figure 1.

Familiarity and trust levels in AI-based fraud detection among mobile financial services users (n = 151).

Trust in AI-based fraud detection reflected cautious optimism: almost 49% of respondents reported a high or complete level of trust (scores 4–5), 47.7% rated their trust as moderate (score 3), and only 3.3% indicated low trust. No respondents indicated “no trust at all.” This suggests that familiarity with AI is fairly widespread, but that trust depends on demonstrated transparency and fairness.

4.1.2. Confidence and Usage Frequency (Figure 2)

As illustrated in Figure 2, respondents reported varying levels of confidence in AI-driven fraud detection, which were closely associated with how frequently they used mobile financial services. Confidence was generally high: 54.9% felt very or extremely confident using AI-secured MFSs, 31.1% moderately confident, and 13.9% slightly confident.

Figure 2.

Confidence in using AI-secured mobile financial services and frequency of usage among respondents.

Usage patterns indicated that MFSs have become a daily necessity. Specifically, 50.3% of respondents used MFSs daily and 43.7% weekly, while less than 6% used them monthly or rarely. High confidence combined with habitual use provides a strong base for future uptake of MFSs and is consistent with UTAUT predictions that facilitating conditions and habit shape and strengthen intention.

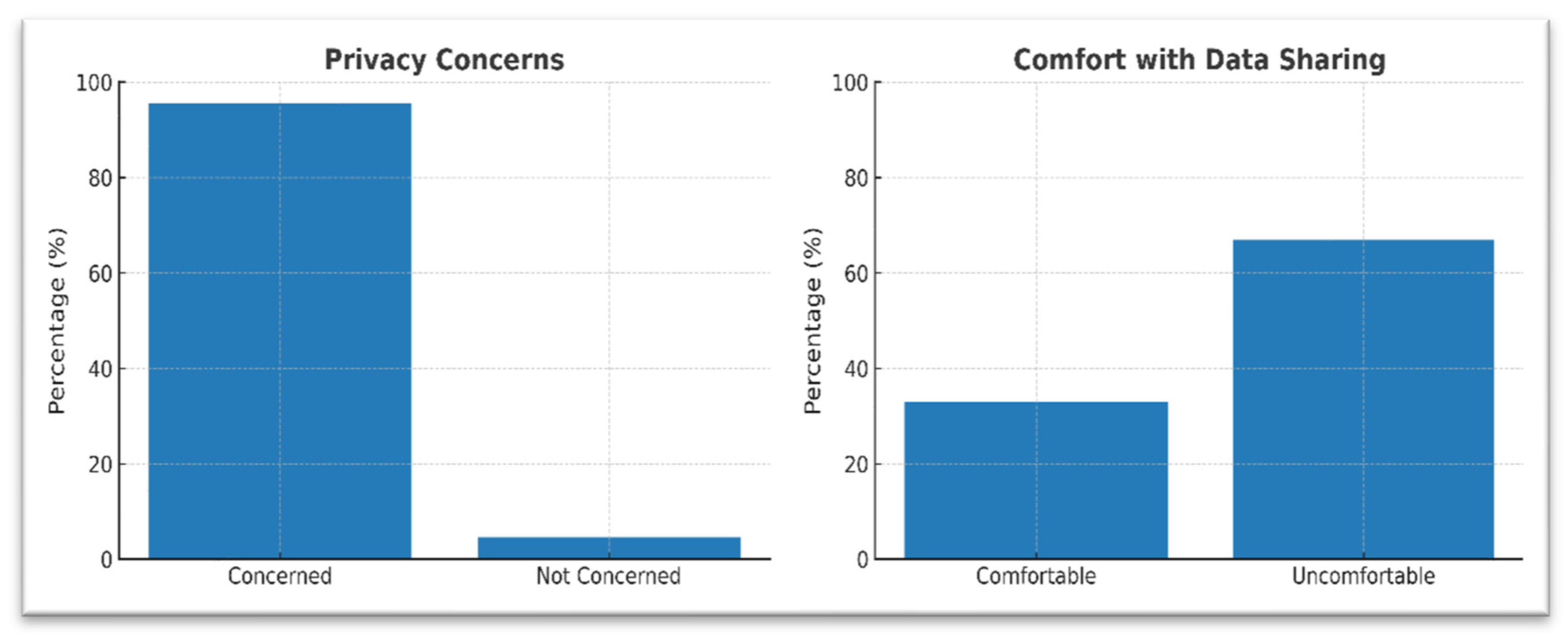

4.1.3. Privacy Concerns and Data-Sharing Attitudes (Figure 3)

Figure 3 highlights the extent of users’ privacy concerns and their corresponding attitudes toward data-sharing practices in mobile financial services. Privacy concerns were virtually universal: 95.4% of respondents expressed concern regarding personal data used in MFSs, while only 4.6% stated they did not have any concerns. Likewise, 66.9% of respondents were uncomfortable sharing personal data with financial applications, and 33.1% were comfortable.

Figure 3.

Privacy concerns and attitudes toward data sharing among mobile financial services users.

This illustrates the Privacy Calculus: users recognize the potential value of AI, but weigh it against perceived costs and the possibility of exploitation. In this case, silent harvesting of personal data and unpredictable enforcement may slow the rate of adoption.

4.1.4. Perceived Effectiveness and Transparency (Figure 4)

The findings presented in Figure 4 show respondents’ perceptions of the effectiveness of AI-based fraud detection and their satisfaction with the transparency of these systems. Respondents overwhelmingly rated AI as superior to rule-based systems: 91.2% gave above-average ratings (scores 4–5). Evaluations of transparency were far less enthusiastic: 54.3% were neutral, 29.8% satisfied, and 16% dissatisfied.

Figure 4.

Perceived effectiveness of AI-based fraud detection and satisfaction with transparency among mobile financial services users.

Qualitative comments (see Section 4.6) also highlighted the role of false positives (reported by ~27% of users) in eroding trust in AI, even among users who believed it was technically effective. This discrepancy shows the importance of explainability for sustained use.

4.2. Reliability and Validity of Constructs

The reliability and validity assessment of the measurement model is presented in Table 2, which reports factor loadings, Cronbach’s alpha, composite reliability, and average variance extracted (AVE), confirming that the constructs meet accepted psychometric standards.

4.3. Structural Equation Modelling (SEM)

The outcomes of the structural equation modelling are summarized in Table 3, where the standardized path coefficients and their significance levels provide empirical evidence for the hypothesized relationships.

4.4. Subgroup Analysis

Urban users and younger participants reported higher trust and adoption. Low literacy was associated with higher perceived risk, and fraud victims reported lower satisfaction with transparency, consistent with frustration over false positives.

4.5. Integration with Theoretical Frameworks

The results are consistent with three theoretical frameworks.

Privacy Calculus: Users recognized the benefits of AI fraud detection (91.2% saw it as more effective than conventional detection) but also perceived privacy risks (95.4% expressed concern), which reduced their willingness to use it. Consistent with the privacy and trust literature, the perceived benefits did not outweigh the perceived losses (see Table 5).

TAM/UTAUT: Perceived usefulness emerged as the strongest driver of trust, which in turn predicted intention to adopt, while perceived ease of use was not significant once trust and risk were accounted for. Although ease of use is a factor in adoption, trust appears to be more salient in security-sensitive contexts, where perceived usefulness outweighs ease of use.

XAI (Explainable AI): Transparency and explainability were significant predictors of trust in AI fraud detection. This suggests that when AI systems communicate their decisions transparently, user confidence increases and the negative effects of errors and false positives are reduced.

Overall, the integration shows that adoption is not only a matter of technical effectiveness but also of managing privacy risks and improving transparency to strengthen trust.

4.6. Qualitative Themes

Qualitative analysis of the open-ended survey responses (Q13–Q20) was conducted inductively using Braun and Clarke’s thematic coding framework. Coding and stemming were performed in NVivo. Three main themes emerged: explainable AI decisions, privacy and data ownership, and socio-regulatory context. The themes added context to the quantitative results and helped explain the association between risk and trust perceptions in adopting AI.

Theme 1—Explainable AI Decisions

Participants frequently cited the lack of explainability of AI-based fraud detection as a concern. Many expressed frustration at not knowing why certain transactions were considered risky or why an account had been temporarily blocked. One participant explained their confusion:

“I want to know why my transfer was flagged—was it the amount, location or something else?”

This mirrors a clear gap in the quantitative results, where 91.2% of participants rated AI as effective while only 29.8% were satisfied with AI transparency (see Figure 4). Some participants said that Explainable AI (XAI) would rebuild their confidence by providing more specific alerts and real-time communication.

Theme 2—Privacy and Data Ownership

Concerns about silent data collection were prevalent. Users were uncomfortable with applications collecting data such as location, contact lists, and related metadata without a clear path to consent. One user wrote:

“Apps collect location and contacts without clearly telling me, who sees this and for how long?”

This reinforces the quantitative finding that 95.4% of users had privacy concerns (see Figure 3). Overall, these responses reflect the Privacy Calculus trade-off: users see the potential value of AI in detecting fraud, but they perceive high privacy risks, which limits adoption. Calls for standardized consent, clear policies, and data minimization were common.

Theme 3—Socio-regulatory Context

Trust in AI was heavily influenced by the socio-regulatory context, meaning the perceived legitimacy of regulators and the wider cultural setting. Respondents from countries with stronger enforcement of data protection laws reported greater trust (mean trust level 7), while other respondents were more suspicious of institutions because of the lack of enforcement. One respondent explained:

“When rules are enforced, it is easier to trust AI, particularly if banks have to report incidents transparently”

This theme helps explain the subgroup differences (Table 4), with urban and digitally literate individuals reporting significantly greater trust than rural, low-literacy groups. More importantly, for the purposes of this study, regulatory trust and institutional transparency were key drivers of adoption intention.

To explore heterogeneity across user groups, subgroup analyses were conducted. The results, presented in Table 4, show significant differences in trust and adoption across demographic categories.

Integration of Themes

These themes triangulate the quantitative findings:

Explainability accounts for the dissatisfaction with transparency among participants who nonetheless rated the AI as highly effective.

Privacy concerns explain why 66.9% of participants felt discomfort sharing their personal data.

The regulatory context accounts for the subgroup variations in trust and adoption.

Overall, the qualitative evidence shows that adopting AI-secured MFSs in emerging markets requires not only a technical but also a social and institutional understanding.

4.7. Comparative Analysis with Prior Studies

A comparative overview with prior studies is provided in Table 5, which highlights areas of convergence and divergence between the present findings and those reported in earlier research.

5. Discussion

5.1. Theoretical Integration

This study contributes to theoretical debates on technology adoption in mobile financial services (MFSs) by integrating three dominant models: the Technology Acceptance Model (TAM), Privacy Calculus, and the Unified Theory of Acceptance and Use of Technology (UTAUT). The results confirm that trust serves as the central mediator between perceived usefulness and adoption intention. In line with TAM [16], users who perceived AI systems as effective in detecting fraud were significantly more likely to trust and subsequently adopt such solutions. However, ease of use, traditionally a strong determinant in TAM, was not significant once trust and privacy risks were considered. This suggests that in security-critical contexts such as financial services, trust outweighs usability, a finding consistent with recent extensions of TAM in high-risk domains.

The results also strongly support the Privacy Calculus framework [17]: despite recognizing the superior effectiveness of AI compared to rule-based systems (91.2% agreement), users’ high levels of privacy concern (95.4%) significantly reduced their willingness to adopt (see Table 5). This highlights the cost–benefit trade-off central to Privacy Calculus: perceived gains in fraud protection are offset by fears of data misuse, opaque data-sharing practices, and weak regulatory enforcement.

Finally, UTAUT [7] is particularly useful for exploring contextual drivers. The subgroup analysis shows that urban and digitally literate users had higher trust and adoption levels, whereas lower-literacy users and users who had been defrauded reported higher perceived risk and lower satisfaction with transparency. Our findings also extend the UTAUT literature: while the analysis confirms the importance of facilitating conditions and social influence as UTAUT constructs, it also shows that culture-related expectations of fairness and institutional credibility are related to perceived ease of use and adoption.

5.2. Novel Contribution

This study makes a novel contribution by developing a hybrid framework that brings together TAM, Privacy Calculus, and UTAUT while also integrating trust, transparency, and institutional legitimacy. Most prior studies apply TAM, Privacy Calculus, or UTAUT individually. Combining these perspectives offers a way to examine the multi-dimensional nature of AI adoption, especially in fast-growing markets. On its own, TAM explains perceived usefulness, Privacy Calculus explains why AI effectiveness alone is insufficient for adoption, and UTAUT contextualizes demographic and cultural moderating influences. In combination, they provide a holistic socio-technical model of AI trust and adoption that advances both scholarly and practitioner work.

5.3. Comparison with Industry Practices

Our findings also provide a useful counterpoint to some industry practices. Companies such as Feedzai, Featurespace, and SAS have deployed systems that have proven highly successful at fraud detection, typically using opaque ensemble or deep learning models. These systems work technically, but their adoption is limited if users lack confidence in them and in their transparency. Moreover, around 27% of respondents in this study cited false positives as a source of frustration, demonstrating how a lack of trust can erode use of even technically superior algorithms when decision-making is opaque (see Table 5). This makes the case for adding Explainable AI (XAI) mechanisms, such as SHAP or LIME explanations, to fraud detection platforms, taking a system beyond algorithmic accuracy and giving users a basis for confidence in the algorithm.
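To illustrate what a SHAP-style explanation conveys, the following is a minimal sketch that computes exact Shapley attributions for a single transaction by enumerating feature coalitions. It does not use the `shap` package, and the scoring function, feature names, and weights are hypothetical values chosen for illustration only; they are not a model from this study.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attributions for one instance.

    Features outside a coalition are replaced by their baseline value,
    so this is only practical for a handful of features.
    """
    n = len(x)
    features = range(n)

    def value(coalition):
        masked = [x[i] if i in coalition else baseline[i] for i in features]
        return predict(masked)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical fraud score (illustrative weights, not taken from the study).
def fraud_score(features):
    amount_ratio, new_device, night_time = features
    return 0.1 + 0.5 * amount_ratio + 0.3 * new_device + 0.1 * amount_ratio * night_time


x = [1.0, 1.0, 1.0]          # the flagged transaction
baseline = [0.0, 0.0, 0.0]   # a reference "typical" transaction
phi = shapley_values(fraud_score, x, baseline)

for name, contribution in zip(["amount_ratio", "new_device", "night_time"], phi):
    print(f"{name}: {contribution:+.2f}")
```

The attributions sum to the difference between the flagged transaction's score and the baseline score, which is the property that lets an alert state how much each factor contributed to the decision.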

Federated learning (FL) also offers a way for industry practice to align with public expectations. FL enables multiple parties to train models collaboratively without sharing their raw data. FL addresses security and privacy concerns, while XAI provides an explanation mechanism across institutions. Companies that offer complex solutions without such options risk losing users in emerging markets where trust in institutions is fragile.
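The idea of training without sharing raw data can be sketched with federated averaging. The example below is a simplified illustration under stated assumptions: two hypothetical institutions each fit a small linear model on their own records, and only the resulting weights are sent to a coordinator, which averages them by sample count. The data, learning rate, and number of rounds are invented for the example, and a production system would add secure aggregation and communication handling.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient steps of least-squares regression on one client's private data."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for features, target in data:
            error = sum(wi * xi for wi, xi in zip(w, features)) - target
            for k, xi in enumerate(features):
                grad[k] += 2 * error * xi / len(data)
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def federated_average(client_weights, client_sizes):
    """Average client models, weighting each by its number of records."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
        for k in range(dim)
    ]


def train(clients, dim, rounds=50):
    """Coordinator loop: only model weights leave each client, never the records."""
    global_w = [0.0] * dim
    for _ in range(rounds):
        updates = [local_update(global_w, data) for data in clients]
        global_w = federated_average(updates, [len(d) for d in clients])
    return global_w


# Hypothetical private datasets; both follow y = 2*x1 + 1*x2.
clients = [
    [([1.0, 0.0], 2.0), ([0.0, 1.0], 1.0), ([1.0, 1.0], 3.0)],
    [([2.0, 1.0], 5.0), ([1.0, 2.0], 4.0)],
]
global_w = train(clients, dim=2)
print([round(w, 3) for w in global_w])
```

In this toy setting the shared model recovers the common relationship even though neither institution ever sees the other's records.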

5.4. Unifying Trust and Privacy in AI Banking

An overarching theme in our findings is that trust and privacy are inseparable dimensions of AI adoption in financial services. Technical excellence alone is inadequate; consumer adoption rests on users’ perception that the system is transparent, fair, and respectful of their data rights. This reflects the growing academic focus on trustworthy AI, which demands fairness, accountability, and explainability as design characteristics.

Our evidence implies that trust in AI is determined not only by perceptions of individual usefulness and risk, but also by national cultural norms and regulatory credibility. Critically, respondents from jurisdictions with strong regulatory enforcement expressed more confidence in institutional reliability than respondents from jurisdictions where enforcement was weak. The latter were sceptical that institutions facing weak regulatory pressure would act reliably. This suggests that adoption cannot be understood without considering the wider institutional ecosystem.

The combination of Explainable AI (XAI), ensemble learning (EL), and federated learning (FL) offers a practical path to resolving the tension between the ethical obligation to protect client privacy and consumer expectations of reliable fraud detection. EL methods maintain predictive accuracy; XAI methods increase system transparency without compromising user and client privacy; and FL methods preserve the privacy of users, clients, and markets in distributed settings. Collectively, the three approaches form a technical triad for balancing the competing needs of efficiency, privacy, and user trust. For regulators and financial-institution executives alike, the central message is clear: AI-powered fraud detection systems are effective only if they both lower fraud losses and are explainable, privacy-preserving, and trusted within the local cultural context.
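As a small illustration of the ensemble component, the sketch below combines the fraud probabilities of several detectors by weighted averaging (soft voting). This is one simple ensemble technique, not necessarily the one used by the systems discussed here; the detector outputs and the 0.5 threshold are hypothetical.

```python
def soft_vote(probabilities, weights=None):
    """Combine fraud probabilities from several detectors by weighted averaging."""
    if weights is None:
        weights = [1.0] * len(probabilities)
    if len(weights) != len(probabilities):
        raise ValueError("one weight per detector is required")
    return sum(p * w for p, w in zip(probabilities, weights)) / sum(weights)


def flag_transaction(probabilities, threshold=0.5, weights=None):
    """Return (is_flagged, ensemble_score) for one transaction."""
    score = soft_vote(probabilities, weights)
    return score >= threshold, score


# Hypothetical outputs of three detectors for the same transaction.
detector_scores = [0.9, 0.6, 0.3]
flagged, score = flag_transaction(detector_scores)
print(flagged, round(score, 2))
```

Because the ensemble score is a plain weighted average, each detector's share of the final decision can be reported to the user alongside the alert.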

5.5. Summary

In sum, this study demonstrates that adoption of AI-based fraud detection in MFSs is driven less by usability and more by the trust–privacy calculus embedded in socio-cultural and regulatory environments. By integrating TAM, Privacy Calculus, and UTAUT with emerging XAI and FL approaches, we offer both a theoretical and practical roadmap for developing trustworthy AI in digital finance.

6. Conclusions

This study explored how Artificial Intelligence (AI) can strengthen fraud detection and safeguard privacy in Mobile Financial Services (MFSs) across emerging markets. Using a mixed-methods design that combined survey evidence, qualitative insights, and a supplementary mini-systematic review, the research provided both statistical robustness and contextual depth.

Contribution

This study offers three main contributions. First, it provides empirical evidence that although users recognize AI as much more effective in fraud prevention (91.2% rated AI as better than pre-AI systems), adoption is held back by privacy concerns (95.4% reported concern over privacy). Second, it extends TAM by incorporating Privacy Calculus and UTAUT, explaining adoption as a function of trust, transparency, and risk perceptions rather than ease of use. Third, it informs practical recommendations for banks, fintech developers, and policymakers: adopt Explainable AI (XAI), use federated learning to limit privacy risks, and align systems with cultural and regulatory realities to support public confidence, trust, and use of fintech banking.

Limitations

The study’s limitations should be noted. First, while purposive sampling is effective, it limits generalizability. Also, cross-sectional research does not allow for causal inference. The self-report measures may be subject to either recall bias or desirability bias. Additionally, while the mini-systematic review strengthens methodological rigour, it cannot substitute for technical benchmarking of fraud detection models in real-world environments.

Future Work

Future research should expand on these findings by conducting longitudinal and experimental studies to establish causal relations, expand the geographic lens to assess regional variation, and embed field experiments on XAI-enabled fraud detection technologies with industry partners. Building on the gap–solution scale, future research should identify user-centred explainability features, privacy-preserving AI designs, and regulatory co-design practices to establish novel pathways and practices for user adoption of technical innovations.

Closing Note

The research ultimately shows that the potential of AI in digital finance goes beyond the accuracy of fraud detection. It depends on the extent to which trust, transparency, and privacy protection are incorporated into models of adoption. By operationalising a hybrid TAM–Privacy Calculus–UTAUT framework, this research adds to theory and practice by providing pathways for secure, trusted, and inclusive financial innovation in emerging markets.

7. Practical Implications

Practical Recommendations

This research yields several practical recommendations for policymakers, financial firms, and fintech developers looking to build trust and encourage uptake of AI-based fraud detection tools in emerging economies:

Embed Explainable AI (XAI): Fraud detection systems should expose a visible and interpretable decision pathway to demystify automated decisions and build trust.

Adopt Privacy-Preserving Architectures: Privacy-preserving techniques, such as federated learning and differential privacy, can support effective fraud detection while protecting user data.

Localize User Education: Culturally adapted awareness campaigns can help bridge gaps in digital literacy and build users’ understanding of risks, protections, and the role of AI.

Enhance Regulatory Oversight: Transparency in reporting standards, independent audits, and strong enforcement of data protection laws are all necessary for institutional legitimacy.

Design for User-Centric Trust: Features demonstrating fairness, accountability, and consent by local standards can enhance not only adoption but also long-term engagement.

Together, technical innovation, awareness and cultural adaptation, and regulatory reform can create secure, transparent, and inclusive digital finance ecosystems that promote both financial inclusion and resilience to fraud.
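The differential privacy technique named in the recommendations above can be illustrated with the Laplace mechanism. The following is a minimal sketch, assuming a provider wants to publish how many transactions were flagged without revealing whether any single user's transaction is in the count. The dataset, the privacy budget `epsilon`, and the function names are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for flagged in flags if flagged)
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # seeded only to make the illustration repeatable
flags = [True] * 40 + [False] * 960  # hypothetical: 40 flagged out of 1000
noisy = private_count(flags, epsilon=1.0, rng=rng)
print(round(noisy, 1))
```

A smaller `epsilon` gives stronger privacy at the cost of noisier published statistics, which is the same benefit-versus-risk trade-off that respondents described.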

Author Contributions

Conceptualization, E.M.; methodology, E.M.; investigation, E.M.; formal analysis, E.M.; data curation, E.M.; writing—original draft preparation, E.M.; writing—review and editing, E.M.; critical feedback and supervision, F.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by the authors.

Institutional Review Board Statement

Ethical review and approval were waived for this study in accordance with the UK Policy Framework for Health and Social Care Research (Section 2.3.15) because it posed minimal risk and collected no identifiable personal data.

Informed Consent Statement

Informed consent was obtained electronically from all participants. Participation was voluntary, and no personally identifiable information was collected.

Data Availability Statement

The data are not publicly available due to privacy and ethical restrictions. Anonymized survey data supporting the findings of this study can be made available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Survey Questionnaire

This appendix contains the complete survey instrument used in the study “AI-Driven Cybersecurity in Mobile Financial Services: Enhancing Fraud Detection and Privacy in Emerging Markets”.

The questionnaire comprised three sections: demographic information, quantitative closed-ended questions, and qualitative open-ended questions.

Section 1—Demographic Information

Name (*)

Mail (*)

Occupation

Section 2—Quantitative Questions (Closed-Ended)

Not familiar at all

Slightly familiar

Moderately familiar

Very familiar

Extremely familiar

2. Please rate your trust in AI technologies protecting your financial data from fraud.

No trust at all (1) to Complete trust (5)

3. How confident are you in using AI-secured mobile financial services?

Not confident to Extremely confident

4. Do you have concerns about your data privacy when using mobile financial services?

Yes/No

5. How effective do you think AI is compared to traditional methods in detecting fraud?

Much less effective (1) to Much more effective (5)

6. How satisfied are you with the transparency of AI security measures from mobile financial services?

Very dissatisfied (1) to Very satisfied (5)

7. How likely are you to recommend AI-secured mobile financial services to others?

Very unlikely (1) to Very likely (7)

8. Have you experienced fraud while using mobile financial services?

Yes/No

9. How comfortable are you sharing personal data with mobile financial apps?

Very uncomfortable to Very comfortable

10. Rank these cybersecurity features by importance to you (1 = most important)

AI-based fraud detection

Two-factor authentication

Data encryption

Regular security updates

Privacy policy transparency

11. What is the biggest barrier to adopting AI-secured mobile financial services?

Lack of trust in AI

Privacy concerns

Lack of awareness

Technical difficulties

Other (please specify)

12. How often do you use mobile financial services?

Daily

Weekly

Monthly

Rarely

Never

Section 3—Qualitative Questions (Open-Ended)

13. Describe your understanding of AI’s role in fraud detection for mobile financial services.

14. Can you share any personal or observed experiences related to fraud or security breaches in mobile financial services?

15. What specific privacy concerns do you have when using mobile financial apps, and why?

16. How do you believe mobile financial service providers could improve their AI-based cybersecurity measures?

17. In your opinion, what factors would increase your trust in AI-driven security systems in mobile banking?

18. What information or communication would you find helpful from mobile financial services regarding their AI security?

19. How do local cultural, social, or regulatory factors influence your trust and use of AI-powered mobile financial services?

20. What recommendations would you give to mobile financial service companies to better protect users from fraud and privacy risks?

Appendix B. Participant Demographics

Table A1 presents the demographic characteristics of the 151 survey respondents from emerging markets (Kenya, Nigeria, and Bangladesh).

Table A1.

Demographic profile of survey participants (n = 151).

| Category | Subcategory | Percentage (%) / Examples |
|---|---|---|
| Frequency of Mobile Financial Service Use | Daily | 50.3 |
| | Weekly | 43.7 |
| | Monthly | 4.6 |
| | Rarely | 1.3 |
| | Never | 0.0 |
| Experience with AI-Secured Mobile Financial Services | Very familiar | 37.1 |
| | Moderately familiar | 35.8 |
| | Slightly familiar | 21.0 |
| | Extremely familiar | 6.0 |
| | Not familiar | 0.0 |
| Occupational Background (grouped) | Technology and IT | Software Developers, Cybersecurity Professionals, Data Scientists, Network Engineers, AI Researchers, UX Designers, Web Developers, QA Engineers, Cloud Engineers, Database Administrators, IT Consultants, Ethical Hackers, Systems Analysts, Smart Home Automation Experts, AI Prompt Engineers, AR Interface Developers, etc. |
| | Finance and Business | Investment Bankers, Financial Analysts, Financial Advisors, Insurance Agents, Corporate Lawyers, Consultants, Entrepreneurs, Business Owners, Sales Assistants, Shopkeepers, Brand Consultants |
| | Healthcare | Doctors, Nurses, Neurosurgeons, Pharmacy Assistants, Psychologists |
| | Education and Academia | Teachers, High School Teachers, University Professors, School Administrators, Researchers |
| | Creative and Media | Graphic Designers, Illustrators, Digital Content Creators, Journalists, Singers, Actors, Interior Designers, Influencers |
| | Other Professions | Pilots, Drivers, Mechanics, Tailors, Electricians, Preachers, Cashiers, Security Guards, Delivery Personnel, Office Assistants, Homemakers using MFS |

Appendix C. Mini-Systematic Review of AI-Driven Fraud Detection Studies (2019–2025)

Table A2 presents a concise systematic review of studies published between 2019 and 2025 that investigate AI-driven techniques for fraud detection, with a particular focus on mobile financial services and digital banking. The table highlights the methods applied, datasets used, and reported performance metrics.

Table A2.

Mini-Systematic Review.

| Authors | Methods | Key Findings | Limitations |
|---|---|---|---|
| [6] | Ensemble deep learning | Improved detection accuracy vs. rule-based | High computational cost |
| [5] | ML + anomaly detection | Early fraud signals identified | Lack of explainability |
| [2] | Federated learning | Enhanced privacy, reduced data centralization | Communication overhead |
| [3] | Hybrid AI and regulatory tech | Trust enhanced via regulatory compliance | Limited testing in emerging markets |
| [13] | Survey study | Users distrust opaque platforms | No technical model testing |
| [4] | TAM + privacy calculus + AI | User trust shaped adoption decisions | Region-specific, small sample |
| [11] | XAI + stacking ensemble | Higher accuracy + explainability | Higher accuracy + explainability |

References

1. Donovan, K. Mobile money for financial inclusion. In Information and Communications for Development 2012: Maximizing Mobile; World Bank: Washington, DC, USA, 2012; pp. 61–73.

2. Arner, D.W.; Barberis, J.; Buckley, R.P. Fintech and regtech: Impact on regulators and banks. J. Bank. Regul. 2020, 21, 7–25.

3. Awosika, T.; Shukla, R.M.; Pranggono, B. Transparency and privacy: The role of explainable AI and federated learning in financial fraud detection. J. Inf. Secur. Appl. 2023, 78, 103552.

4. Hernandez-Ortega, B. The Role of Post-Use Trust in the Acceptance of a Technology: Drivers and Consequences. Technovation 2011, 31, 523–538.

5. Ali, A.; Abd Razak, S.; Othman, S.H.; Eisa, T.A.E.; Al-Dhaqm, A.; Nasser, M.; Elhassan, T.; Elshafie, H.; Saif, A. Financial Fraud Detection Based on Machine Learning: A Systematic Literature Review. Appl. Sci. 2022, 12, 9637.

6. Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

7. Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.

8. Dinev, T.; Hart, P. An extended Privacy Calculus model for e-commerce transactions. Inf. Syst. Res. 2006, 17, 61–80.

9. Jack, W.; Suri, T. Risk sharing and transactions costs: Evidence from Kenya’s mobile money revolution. Am. Econ. Rev. 2014, 104, 183–223.

10. Almalki, F.; Masud, M. Financial fraud detection using explainable AI and stacking ensemble methods. Expert Syst. Appl. 2025, 242, 123456.

11. Osabutey, E.L.C.; Jackson, T. Mobile money and financial inclusion in Africa: Emerging themes, challenges and policy implications. Technol. Forecast. Soc. Change 2024, 202, 123339.

12. Chen, Y.; Liu, T.; Zhang, H.; Wang, X. Year-over-Year Developments in Financial Fraud Detection via Deep Learning: A Systematic Literature Review. arXiv 2025, arXiv:2502.00201.

13. Blumenstock, J.; Kohli, R. Big data privacy in emerging market fintech and financial services: A research agenda. Inf. Syst. J. 2023, 33, 789–812.

14. Sowon, K.; Munyendo, C.W.; Klucinec, L.; Maingi, E.; Suleh, G.; Cranor, L.F.; Fanti, G.; Tucker, C.; Gueye, A. Design and evaluation of privacy-preserving protocols for agent-facilitated mobile money services in Kenya. Comput. Secur. 2024, 133, 103569.

15. Lundberg, S.M.; Lee, S.-I. A unified approach to interpreting model predictions. In Advances in Neural Information Processing Systems (NeurIPS 2017); NIPS Foundation: La Jolla, CA, USA, 2017; pp. 4765–4774.

16. Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

17. Culnan, M.J.; Bies, R.J. Consumer privacy: Balancing economic and justice considerations. J. Soc. Issues 2003, 59, 323–342.

18. Guest, G.; Namey, E.; Chen, M. A simple method to assess and report thematic saturation in qualitative research. PLoS ONE 2020, 15, e0232076.

19. Asif, M.; Khan, M.N.; Tiwari, S.; Wani, S.K.; Alam, F. The Impact of Fintech and Digital Financial Services on Financial Inclusion in India. J. Risk Financ. Manag. 2023, 16, 122.

20. Higgins, J.P.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.A. (Eds.) Cochrane Handbook for Systematic Reviews of Interventions, 2nd ed.; Wiley: Hoboken, NJ, USA, 2019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).