Abstract

In Notre-Dame de Paris’ digital twin, the massive data is characterized by its variability in terms of production and documentation. The question of provenance appears as the missing link in digital heritage data and a fortiori in the provenance of knowledge. The problem can be formulated as follows: the heterogeneity of data translates into variability across devices, time, scales, and spatial granularity, together with multi-layered semantic complexity. The objective of this article is to improve the quality and consistency of paradata and to bridge the practical gap between mass 3D digitization and mass data enrichment in the data lineage of cultural heritage digital collections. FAIR principles, provenance, and context are key to data management workflows. We propose an innovative solution to integrate provenance and context seamlessly into these workflows, enabling more cohesive and reliable data enrichment. In this article, we use both conceptual modeling and quick prototyping: we posit that existing conceptual models can be used as complementary modules to document the provenance and context of research activity metadata. We focus on three models, namely the W7, the PROV ontology, and the CIDOC CRM. These models express different aspects of data and knowledge provenance. The use case from Notre-Dame de Paris’ research demonstrates the validity of the proposed hybrid modular conceptual modeling to dynamically manage the Provenance Level of Detail in cultural heritage data.

1. Introduction

Since 2017, the term “fake news” has emerged as a powerful descriptor of the radical shift in how society engages with information, truth, and reality, often referring to entirely false stories or unfavorable news framed as false [1]. This phenomenon has highlighted the urgent need for enhanced media literacy and, more critically, for robust information and data literacy to navigate an increasingly complex informational landscape. Another layer of blurriness is added with the widespread use of generative AI models [2]. In this context, open science has become a pivotal movement, aiming to make scientific processes more transparent, inclusive, and democratic [3], by ensuring that research outputs (publications, data, physical samples, and software) are universally accessible. Researchers in the 21st century bear a democratic duty to uphold research integrity and ethical standards, emphasizing the importance of data and knowledge provenance to ensure credibility, accountability, and trust in their work. In today’s digital age, the management and use of data lies at the heart of many disciplines. In this article, we explore this question of open science, data, and knowledge provenance in the fields of heritage sciences, conservation, and restoration. The origin and lineage of data, its modeling, and its curation are all necessary links in establishing a coherent, well-argued scientific narrative.

The starting point of this article is twofold: first, the availability of massive data of Notre-Dame de Paris’ research; second, the variability of data and the way they are produced. If Notre-Dame’s data were somehow narrated to us, it would reveal a rich and complex story, built from data curation, modeling, and enrichment [4]. These often hidden processes are essential to transform raw data into actionable, understandable knowledge, and knowledge engineering plays a crucial role in this transformation. The availability of massive 3D digitization data poses a significant challenge in bridging the gap between raw model acquisition and meaningful data enrichment. This problem is exacerbated by the following:

- The variability in how 3D models are produced and acquired, as well as the lack of a standardized mechanism for presenting the provenance and contextual information of these datasets.

- The same fuzzy variability exists for the enrichment and knowledge production workflows: different annotation tools and processes, different types of semantics, and multiple scales of annotation (a feature, an object, or an activity involving an object).

The problem can be formulated as follows: the heterogeneity of data translates into variability across devices, time, and scales with spatial relations, together with multi-layered semantic complexity. There is a pressing need for a simple yet robust model to structure data provenance and context in the cultural heritage field, accommodating n-dimensional analysis and interpretation. Such modeling would address the challenges of managing complex datasets while ensuring clarity and consistency in documenting processes and origins.

Because of the struggle to unify scattered information across different contexts, the objective of this article is to bridge the practical gap between mass 3D digitization and mass data enrichment in the data lineage. FAIR (Findable, Accessible, Interoperable, Reusable) principles, provenance, and context become key aspects in the cultural heritage data management workflows [5]. We propose an innovative solution for data integration based on provenance and context knowledge, promoting more cohesive and reliable data enrichment.

The digital era gives a new meaning to T.S. Eliot’s poem “The Rock” [6]: “Where is the wisdom we have lost in knowledge? Where is the knowledge we have lost in information?” One must also ask: where is the information we have lost in data? This reflection underlines the ongoing challenge of converting raw data into comprehensible, actionable information. Across disciplines, we face the same common problems: siloed data and the lack of ways to translate between the meanings that different producers attach to their data. Data therapy [7], knowledge engineering, knowledge graphs, and symbolic artificial intelligence offer solutions for overcoming these obstacles. Interdisciplinary research is essential, as is the use of standardized vocabularies and thesauri, to guarantee a faithful and enriched representation of knowledge. Ontological modeling and data enrichment make information interoperable between humans and machines. In this article, we show that existing conceptual models document the facets of provenance and context in research activity. Instead of using ontologies a posteriori for late-stage data transformation, we propose to anticipate this step in the data workflows to facilitate the modeling, capture, and enrichment of metadata. Conceptual modeling and quick prototyping are complementary parts of the demonstration, presenting either more theoretical aspects or more implementation-specific ones.

There exist different models to express different aspects of data provenance. The CIDOC CRM is a conceptual cornerstone for cultural heritage data. While the CIDOC CRM and W7 are event-centric models, PROV provides the expressivity needed for lineage. Building on previous works about the description of cultural heritage workflows with provenance metadata [8] and the application of the W7 model for photogrammetric onsite acquisition metadata [9], we extend metadata and paradata documentation to photogrammetry processing, annotation workflows, and more broadly to research activities in cultural heritage (CH) and heritage sciences (HS). This results in streamlined metadata-paradata schemas for photogrammetry acquisition, processing, and data enrichment by annotation. The proposed metadata schemas are compatible with the W7 model and with the PROV and CIDOC CRM ontologies. Advocating for the reuse of existing standards, this work demonstrates the benefit of a hybrid provenance model that combines the W7 model with the PROV and CIDOC CRM ontologies.

The use case from Notre-Dame de Paris’ research shows the potential of the proposed hybrid modular modeling to manage the variability and complexity of data provenance. During the course of the research on Notre-Dame de Paris, the digital ecosystem has evolved to archive, manage, visualize, and annotate data from researchers. The ecosystem lacks automated mechanisms for presenting the provenance of data and processes, gathering scattered information in different contexts of knowledge production. In this article, we show that the provenance information constitutes a fundamental layer for making data accessible and interoperable regardless of the data formats, tools, or technological stacks. The main obstacle remains the difficulty of gathering consistent provenance metadata across the diversity of datasets and convincing data producers to invest time and effort in documenting high-quality metadata. Furthermore, there exists the challenge of automating the matching between the model presented and data from the digital ecosystem. We describe the use of the semantic artefacts (ontology, metadata schemas) and the data transformation workflows to make the experiment transferable to other use cases and contexts.

Provenance documentation outlines both a problem and a solution, presenting a comprehensive model that balances simplicity with flexibility. This article also explores the experiment’s potential for generalizability across various contexts, offering a scalable framework to support diverse applications in heritage preservation and beyond.

This article is organized into eight sections. The next two sections present the research context (Section 2) and the use case (Section 3), which lays out the complexity of the problem at stake in a real-life example. The state-of-the-art section (Section 4) presents the existing research on the questions of data provenance and lineage, the related ontologies, and how they apply in cultural heritage workflows. Next, the conceptual modeling section (Section 5) presents the potential of using a hybridization of existing ontologies to track data and knowledge provenance. The implementation section (Section 6) presents the implementation of the model and the results achieved in the considered use case. The results are then analyzed in the discussion section (Section 7) to highlight the innovative aspects of the work proposed in this article, as well as the limits of this approach. The conclusion section (Section 8) offers a synthetic overview and future perspectives.

2. Collaborative Research Context of Notre-Dame de Paris

Research on Notre-Dame de Paris has been closely tied to the operational restoration activities that took place after the 2019 fire [10]. The Etablissement Public pour la Restauration de Notre-Dame de Paris (ENDP) and the restoration architects welcomed the presence of researchers during the consolidation and renovation activities [11]. The restoration project would benefit from the researchers’ expertise and findings about a monument that had been little studied despite being very well known. The renovation period would give access to hitherto inaccessible parts and elements of the cathedral. Since the beginning, research has accompanied the restoration activities. The methodology of emergency documentation in the context of Notre-Dame de Paris (NDP) has been tailored to the fieldwork constraints and restoration schedule [12,13]. Between 2019 and 2024, Notre-Dame de Paris was a complex working site, where the Etablissement Public was in charge of overseeing and coordinating the restoration with the three restoration architects leading the restoration projects of different parts of the cathedral, and with all the companies that intervened onsite. Alongside the restoration project, the research groups have worked together [14] to document the remains and artefacts. The Laboratoire de Recherche des Monuments Historiques (LRMH) had a key role: it participated in all the working groups (WGs) and managed the daily photographic monitoring of sorting operations in the cathedral using a metadata documentation workflow [15]. In parallel to the restoration, the research has been conducted by researchers and experts with a variety of profiles, coming from diverse publicly funded institutions such as research laboratories and universities.
This research community represents nearly 200 researchers organized in 9 thematic WGs, namely the Acoustics WG, Wood WG, Heritage Emotions (Ethnography) WG, Metal WG, Stone and Mortar WG, Decoration and Polychromy WG, Structures (Civil engineering) WG, Stained Glass WG, and Digital Data WG. The interdisciplinarity and heterogeneity of the research topics of the different working groups at Notre-Dame de Paris are reflected in their production of data. On the worksite, the data production can be either digital or analog, but in the end, even analog data is ingested in the ecosystem of the Digital Data WG.

The Notre-Dame de Paris (NDP) Digital Data WG has a transversal role in the scientific research on NDP [16]. It is in charge of the digital infrastructure and software of the NDP ecosystem, gathering several tools to promote digital data related to the cathedral. It accompanies and participates in the unprecedented effort to collect, aggregate, and analyze the research data [17,18]. This work is not limited to producing spectacular 3D digitization, sometimes mistakenly called a digital twin. It is a living, interconnected data infrastructure capable of representing the building in all its complexity: form, appearance, material, history, transformations, and interpretations. The approach developed by the Digital Data WG builds an augmented, interoperable, and evolving heritage object in a logic of co-construction and knowledge sharing [19]. It constitutes an exceptional collection: more than 200,000 documentary photographs (before, after the fire, and during restoration), 5000 3D lasergrammetry stations, 300 technical drawings, 5000 documentary sources, 22 temporal states (during the recovery of the remains and during the restoration work), nearly 2000 remains digitized in 3D, and more than a million images used for photogrammetry at various scales. But this work is not limited to collecting or visualizing these resources: it involves structuring this data to produce knowledge. A software platform based on open web technologies has been developed to manage the entire data life cycle with an emphasis on structuring, semantic enrichment, and collaborative stewardship. Esmeralda, Archeogrid, Opentheso, Aïoli, 3DHop, and nDame 3D viewer are tools whose developers have joined forces to create the nDame digital ecosystem. The Aïoli platform, based on photogrammetry, is dedicated to 2D and 3D semantic annotation for the documentation of heritage objects [20,21]. 
Collaborative semantic annotation scenarios have been tested on a wide range of subjects (conservation reports, stratigraphic analyses, locating lapidary signs, dendrochronological analyses, etc.). The nDame Viewer [22] supports visualization and analysis methods that bring multidisciplinary perspectives to the same object of study. It focuses on the n-dimensional (nD) nature of the data beyond the 4D (spatial + temporal) dimensions. The ambition is to navigate in such nD space in order to offer members of the scientific worksite a combined semantic, spatial, and temporal entry point to the available resources. Various approaches to making data available through new exploration modalities have been tried out and are still under study, notably through the joint exploitation of knowledge graphs and 3D web visualization tools [23]. Opentheso is a software component of the nDame ecosystem for the management of controlled vocabularies and thesauri [24,25]. It aims at centralizing and enriching data indexing. Diving deeper into this question of interoperability of tools and data in the digital ecosystem, the next section presents a use case that illustrates the provenance gap in complex CH workflows.

3. Use Case

We describe the use case that will serve as the basis for the whole article. The use case is a beam from Notre-Dame de Paris’ site inventoried as the artifact Timber 4076. This section presents the archaeological and architectural elements that this artifact is associated with. This case study is representative because it gathers several datasets from different actors and has a complex provenance. This section explains how the datasets have been produced (survey method and field operation, processing, and enrichment by annotation). It illustrates the challenge of data and knowledge provenance that is at the core of this article.

The artifact Timber 4076 is a 4.88 m long tie beam made of wood that was originally located in the North-facing slope of the choir’s vaults (Figure 1). It has been dated by dendrochronological analysis to the year 1165. Charred on the outside like the other timber remains, the element was left fragile and polluted by lead. It was then extracted from the site in three pieces, named A, B, and C (Figure 2): a cut was made with a chainsaw to separate sections B and C. Conversely, the separation between sections A and B is the result of a breakage of the remains due to their fragile condition.

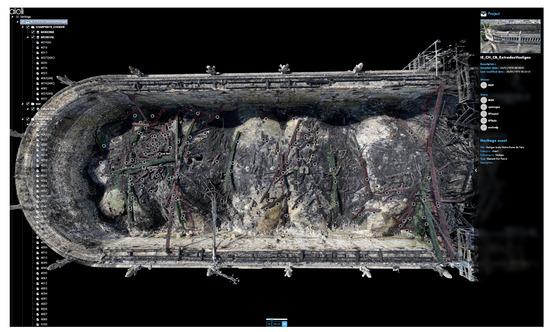

Figure 1.

Annotations of the timber artefacts inventory, located on the choir’s vaults, before removal, based on data established by the wood and carpentry WG. Timber 4076 can be seen on the far right of the image, annotated in red (©Roxane Roussel, Digital Data WG, CNRS).

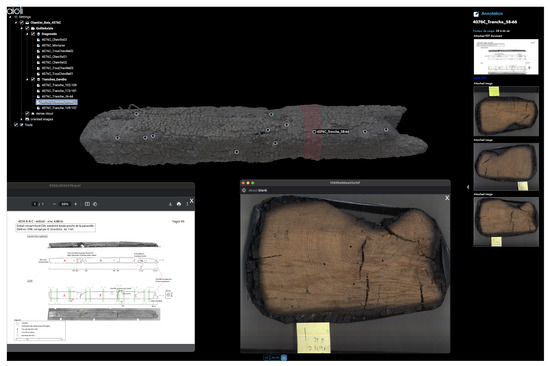

Figure 2.

Timber 4076 was cut into three parts to be removed from Notre-Dame de Paris. These three parts were digitized separately, and virtually reassembled to reconstruct the whole artefact (©Florent Comte, Digital Data WG, CNRS).

Timber 4076 was scanned in three parts (4076-A, B, and C), corresponding to the cuts made in Notre-Dame to remove the beam [13]. The digitization of Timber 4076 and all the other artefacts in charred wood is quite complex using image-based photogrammetry for two reasons: their shape, mainly developed on a single axis, does not allow turning around the subject because of the lack of accuracy in some parts; their color, dark grayish burned wood, is hard to capture in pictures with a standard light setting.

The photogrammetry was carried out using an arch-shaped digitization structure (Figure 3), especially designed to digitize oblong objects and equipped with 6 cameras (CANON 650D, 24 mm lens). A set of markers from the proprietary photogrammetric software Metashape complements the acquisition setup to facilitate the 3D model reconstruction.

Figure 3.

Photogrammetric digitization device with an inverted-U mount (©Florent Comte, Digital Data WG, CNRS).

The three parts of Timber 4076 are digitized individually, in two rounds for each element. During the first round of data acquisition, the first half of the beam is digitized. Then, the object is flipped upside down. The second round records the parts that are occluded in the initial round, with enough overlap on the shared elements to help an automatic alignment during the reconstruction of the whole artefact. Two sets of pictures are created, called subsetA and subsetB. The three parts of the beam are processed separately using an internal Metashape semi-automatic pipeline for the whole photogrammetric processing [26]. Each subset is first processed individually to record its spatial positions and intrinsic parameters. The subsets are then aligned together to spatialize the whole combined set. The typical photogrammetric processing (sparse cloud, optimization, dense cloud, mesh) is calculated for the whole object. At the end, the three exported .obj files are virtually reassembled to reconstruct the entire artefact using an open-source 3D scene management software (Blender), and a unified transformation matrix is recorded. The pipeline takes into account the diverse uses of the data: once the processing is complete, the script automatically exports each fragment in OBJ and PLY formats for direct use, as well as in Potree, Nexus (nxz, for 3DHop), and Aïoli formats for specific visualization and annotation purposes. A long-term data archive is created as well. The automation of the digitization workflow thus encompasses three elements that are usually considered separately: the data acquisition, the data processing workflow, and the data archiving.
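The processing chain described above can be read as a small lineage graph. The following Python sketch is only an illustration under stated assumptions: the step names, the dataset names other than subsetA and subsetB, and the `Step` and `lineage` helpers are hypothetical and are not part of the Metashape pipeline or of the nDame ecosystem. It shows how recording the inputs and outputs of each activity is enough to answer the question "which activities led to this file?".

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One processing activity with the names of its input and output datasets."""
    name: str
    inputs: list[str]
    outputs: list[str]


# Hypothetical record of the workflow for fragment C (names are illustrative).
STEPS = [
    Step("acquisition_round_1", ["timber_4076C"], ["subsetA"]),
    Step("acquisition_round_2", ["timber_4076C"], ["subsetB"]),
    Step("align_subsets", ["subsetA", "subsetB"], ["combined_set"]),
    Step("reconstruct", ["combined_set"], ["4076C.obj"]),
    Step("reassemble", ["4076A.obj", "4076B.obj", "4076C.obj"],
         ["timber_4076_reassembled"]),
]


def lineage(target: str, steps: list[Step]) -> list[str]:
    """Return the activities that lead to `target`, upstream activities first."""
    producers = {out: step for step in steps for out in step.outputs}
    ordered: list[str] = []
    seen: set[str] = set()

    def visit(dataset: str) -> None:
        step = producers.get(dataset)
        if step is None or step.name in seen:
            return  # raw input with no recorded producer, or already visited
        seen.add(step.name)
        for parent in step.inputs:
            visit(parent)
        ordered.append(step.name)

    visit(target)
    return ordered


print(lineage("4076C.obj", STEPS))
```

In this sketch, fragments A and B have no recorded producer, so their history stops at the file name. This is the kind of provenance gap that the remainder of the article addresses.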

The data acquisition and processing constitute a massive image-based 3D documentation of the cathedral, its structures, and its elements [27]. It is used as the basis for enrichment workflows, such as 2D-3D annotations with the Aïoli tool [28]. Aïoli is an image annotation tool that uses photogrammetric data (photographs and 3D point cloud) to produce annotations of an image by selecting an area in the photograph and assigning attributes and descriptions to it. This annotation is automatically projected in the 3D dataset and back to the overlapping images [20,21]. The content and data modeling (i.e., the organization of fields, layers, groups, and projects) are left to the users, who decide how to structure their annotations.

The documentation of Aïoli photogrammetric scenes and their associated 2D/3D annotations are created in two different scales and contexts.

(1) Aïoli annotation projects are created at the architectural scale from overview data, namely a 3D model or images produced by a drone survey of the nave and choir’s vaults carried out after the fire by Art Graphique et Patrimoine (AGP). This dataset is used to identify and locate the remains that are still on site after the fire. The annotations on these projects (Figure 1) present an inventory of timber remains produced by the Wood WG, comprising the identification and location of 305 elements: 190 on the nave and 115 on the choir. The annotation’s description sheet gathers general information about the identified remains, including: provenance in the cathedral, specific ID, main archaeological observations, structural role of the timber, date, and forest provenance. It is accompanied by a link redirecting the user to the Aïoli scene of the individual digitized remains (Figure 4). Figure 1 shows where Timber 4076 fell on the vault after it burnt during the fire, that is, the post-fire location of the artefact. This information is crucial because it is the closest equivalent to the provenience information that would be recorded in an archaeological excavation.

Figure 4.

Annotations and joint files for the Aïoli project of timber 4076C, based on data produced by the wood and carpentry working group, representing the location of the slices in the remain and the condition report (dowel, holes, mortise) (©Roxane Roussel, Digital Data WG, CNRS).

(2) Aïoli annotation projects are also created at the scale of the objects and building elements: after the digitization process, artefacts are annotated individually. The scientists in the Wood WG have carried out condition reports and dendrochronological analysis on the remains, for which they need to cut slices from the artifact. By analysing the annual rings, they are able to document and date the pieces to the nearest year. This process is documented by a series of photographs and the identification of the slices. For each object, a summary sheet of the information is produced: condition report, measurements, and location of the slices, plotted on the various drawn and photographed profiles of the remains. This data is then annotated at the scale of the artifact, here Timber 4076 A/B/C. A first layer documenting the location and thickness of the slices is defined. In the example of remain 4076C (shown in Figure 4), 5 slices are made. Their position and thickness are specified in the annotation title and in the description sheet. Photographs of both sides of the slices are also added as joint files. A second layer is created for the condition report corresponding to the observations made before the wood was cut. In the example of this fragment, dowels, holes, and mortises are observed. Summary sheets of the studies carried out on the wood have also been added as attachments. Currently, there are 138 Aïoli projects for the individual wooden remains [29]. These annotations are established in close collaboration with the Wood WG in order to select the pertinent information to document in the platform and to validate the content of the annotations.
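The two-layer organization described for fragment 4076C can be sketched as a nested data structure. This is a minimal illustration and not the Aïoli data model: the class names, the attachment file names, and the choice of one condition-report annotation per observed feature type are assumptions made for the example. Slice positions and thicknesses are deliberately left out because their values are not given here.

```python
from dataclasses import dataclass, field


@dataclass
class Annotation:
    title: str
    attachments: list[str] = field(default_factory=list)


@dataclass
class Layer:
    name: str
    annotations: list[Annotation] = field(default_factory=list)


@dataclass
class Project:
    artefact: str
    layers: list[Layer] = field(default_factory=list)

    def count(self) -> dict[str, int]:
        """Number of annotations per layer."""
        return {layer.name: len(layer.annotations) for layer in self.layers}


# Layer 1: the 5 slices, each with photographs of both sides (hypothetical file names).
slices = Layer("slices", [
    Annotation(f"slice {i}", [f"slice_{i}_side_1.jpg", f"slice_{i}_side_2.jpg"])
    for i in range(1, 6)
])

# Layer 2: condition report made before cutting (one entry per feature type, assumed).
condition = Layer("condition report", [
    Annotation("dowel"), Annotation("hole"), Annotation("mortise"),
])

project = Project("Timber 4076C", [slices, condition])
print(project.count())
```

Keeping layers explicit in this way makes it possible to attach provenance information to a whole layer (who produced the condition report, and when) rather than to each annotation separately.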

This section aimed to present the use case of this article to frame the need for traceability of provenance in the research context. The use case that we present in this article is a representative sample of NDP data because it is an object of study involving different actors and WGs. It is both multiscalar and multitemporal. For the sake of this article, it has the advantage of remaining simple enough (a beam element in three parts, Timber 4076 A/B/C), while the question of provenance can be fully examined. The next section is dedicated to presenting the state of the art for the question of provenance for data in digital heritage workflows.

4. State-of-the-Art Section

4.1. CH Photogrammetry Workflow

In the last decade, the cultural heritage (CH) field has witnessed significant maturity in the adoption of photogrammetric techniques, transitioning from isolated applications to the creation of massive datasets that enable at-scale digitization [30]. This evolution underscores a paradigm shift, where the emphasis lies in leveraging photogrammetry not just for individual artifacts but for comprehensive, large-scale documentation of diverse heritage sites and objects toward multimodal approaches [31]. However, some challenges persist, like the inherent variability of objects and contexts, which demand adaptable workflows. Ensuring the sustainability and reliability of photogrammetric processes is critical, as practitioners strive to overcome key obstacles associated with image-based photogrammetry, such as variability in lighting, texture, and scale. By addressing these issues, the CH field and Heritage Sciences continue to refine their methodologies, enabling robust and scalable digitization efforts [32]. Integration workflows for 3D digitization use custom metadata schemas or ontologies for capturing the metadata about 3D scanning processes [33]. The authors point out two limitations: metadata can currently only be collected through manual input, and the modeling would need to be expanded to other digitization techniques. The next section will examine this question of workflow documentation in CH beyond the 3D digitization practice.

4.2. CH Provenance Documentation and Workflows

In the MAP-CNRS laboratory, the question of documentation for CH workflows has been investigated by multiple researchers from different perspectives. This section proposes a synthetic overview of the works that are part of the methodological background of this article. In [8], the recording of paradata in CH workflows is shown to be as necessary as the technical metadata: the authors proposed a generic reproducible workflow, metadata documentation, and database structure compatible with various digitization techniques and research activities. Focusing on the operational workflow of image-based modeling, refs. [9,34] proposed to embed the acquisition metadata and paradata in the image itself through a QR code. Encoding the metadata in the images from the start is an interesting idea in principle, but the implementation faces a major capacity bottleneck: the QR code allows only the embedding of a unique identifier (UUID), linked to an external metadata container. MEMoS is the proposed metadata container for photogrammetric acquisition [9]. The limit of this approach lies in the intrinsic lack of human motivation and time to fill in metadata forms. Experience shows that the loss of information by human error remains one of the main obstacles. Continuing this line of work on documentation, the Memoria Database project is an exploratory information system dedicated to the documentation of research activities [35,36]. The workflows are recorded using diagrams to represent the processes. A large documentation effort has been made to collect and structure the professional vocabulary about research activities as a nomenclature of activities [37]. Currently, Memoria is a closed system for internal use. The data and the information system are not yet interoperable. Since 2024, the project Metareve [38] has taken a different approach and implemented an API to mine the metadata and paradata from audio or text sources.
The objective is to facilitate the automatic extraction of metadata and their ingestion into the existing information systems and workflows. Bringing together the questions of data provenance and knowledge provenance, the semantic consistency for CH artefacts allows tracking artefact biographies at the data level using Linked Open Data and data mapping with formal ontologies [39]. Several works highlight that the conceptual modeling step is essential in the implementation of a knowledge base about cultural heritage, focusing on iconography [40], material alteration [41], virtual reconstruction [23,42,43,44], building visual inspections and quantitative results of mechanical simulation scenarios [45], archaeological stratigraphy and material microstratigraphy [46].

The current tendency is a two-pronged movement: it aims at solidifying automated computational workflows to alleviate the burden of documentation, while maintaining semantic consistency and specificity [25,47]. These research perspectives show complementary facets and lineage of provenance about heterogeneous data. The common ground of these research works can be formulated as two key needs. Firstly, one needs to gather and synthesize diverse, massive, and heterogeneous information without degrading its quality, expressivity, and specificity. Secondly, since the information is decoupled from its medium, the data needs to be versatile and transformable into different formats, digital objects, visualizations, etc. For both key needs, data curation, knowledge representation, and semantic modeling play a central role.

4.3. Data Provenance and Knowledge Provenance

To manage the data heterogeneity in the CH domain, Tzitzikas and Marketakis [48] advocate for digital preservation: “Digital material has to be preserved against loss, corruption, hardware/software technology changes, and advances in the knowledge of the community”. In their book, they use the metaphor of Cinderella and the glass slipper to illustrate this quest for provenance: tracing the history of an object or piece of data and following its trajectory and transformations represents a challenge reminiscent of that of finding the rightful owner of the slipper. Data production is accelerating, and the number of possible workflows keeps expanding. This results in a rapid increase in data volumes with heterogeneous formats and data types, which makes digital preservation and provenance a more pressing matter than ever. The data quality (and usefulness) is directly correlated with the quality of paradata [49]. The reusability and intelligibility of data require documenting the processes and practices around data, which is what one calls paradata. In [50], the authors identify categories of temporal approaches to paradata (prospective, in situ, retrospective) and categories of paradata artefacts (structured metadata, narratives, snapshots, diagrammatic representations, and standard procedures). In this article, we investigate how to document paradata in CH workflows. In the literature, the term paradata is used as a synonym for provenance metadata. The question of provenance is rooted in CH disciplines like museology, archaeology, or art history. The provenance is sought and established for material objects, either as object provenance in a museum or as provenience in archaeological research. Similarly, for digital objects, data provenance and data lineage are formalized in computer science. There are similarities between the provenance of material objects (provenance/provenience) and that of digital ones (data provenance) [5].
Whether objects are digital or physical, we can assume a similar approach to dealing with their provenance beyond the diverging disciplines, methodologies, or technologies.

What is the reason to focus on knowledge provenance rather than data provenance? Unlike data provenance, knowledge provenance “includes source meta-information, which is a description of the origin of a piece of knowledge, and knowledge process information, which is a description of the reasoning process used to generate the answer” [51]. We build on a data archaeology approach [52]: “[it] consists of segmenting classes with potentially complex descriptions, viewing and comparing data, and saving and reusing work (both the results of analyses and the techniques used to generate the results). Useful knowledge is discovered through a dynamic process of interacting with the data as hypotheses are formed, investigated, and revised”. This methodology relies on a critical dialogue with the data producers and users. It ties the technical metadata aspects to the qualitative contextual paradata elements to elicit the knowledge provenance of a dataset beyond simply the data provenance. This semantic enrichment falls under the idea of digital maieutics [4].

4.4. W7

Initially conceptualized as a business model [53], W7 has proved useful for tracking and analyzing data provenance. The W7 model, named after its seven “W” questions, specifies context information about the What, When, Where, How, Who, Which, and Why. It is a generic and extensible model for capturing provenance metadata in various domains. In [54], the authors examine the potential of the W7 model in the context of digital self-publishing and show that it supports data analyses of this quickly evolving industry. This application demonstrates the flexibility of W7 in adapting to specific contexts and problems [55]. In [56], the W7 model is explored for the documentation of scientific research workflows. The model has also been used to guide the data modeling of photogrammetric data acquisition [9,34]. Some challenges remain open: the granularity of documentation, the data status, the provenance computing, the semantics of provenance, and the provenance storage/application. These challenges frame the conceptual and prototypal objectives of this article.

4.5. PROV

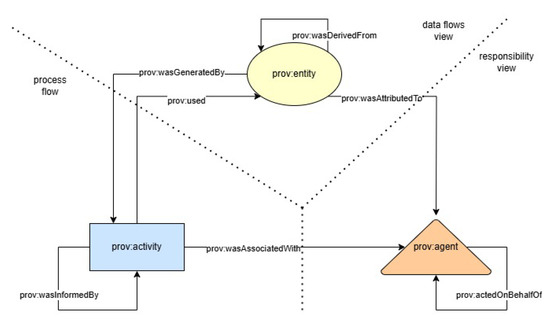

The PROV specification is a World Wide Web Consortium (W3C) standard dedicated to provenance interchange on the web [57]. In PROV (Figure 5), provenance is information about the entities, activities, and people involved in producing a piece of data or thing [58]. Provenance information is often used to form assessments about the quality, reliability, or trustworthiness of data. In the PROV family of specifications, PROV-DM is the conceptual data model that constitutes the foundation for the others [59]. PROV-O is the PROV Ontology, an OWL2 ontology allowing the mapping of the PROV data model to RDF [60]. Three main components, prov:Entity, prov:Activity, and prov:Agent, express, respectively, the data flow view, the process flow view, and the responsibility view. Each component is related to the other two, and the model allows derivations of components from components.

Figure 5.

Overview of the PROV components and relations, adapted from [61] (©Packer, Heather S., Dragan, Laura and Moreau, Luc).

4.6. Proliferation of Standards and Ontologies

For data interoperability and integration, one needs standards as consistent ways to document data and workflows. The goal of this article is not to propose another ontology but to make the most of multiple existing ontologies, following the maximum reuse principle of ontology design and creation. Standards and norms can be perceived as restrictive and rejected because they might not be adapted to specific cases. The meme (Figure 6) shows this never-ending pernicious cycle of standards proliferation. Ontologies are no exception. The number of ontologies proposed recently in the domain of cultural heritage is very high and growing [62,63,64]. There is a plethora of semantic artefacts, but they are rarely used beyond the initial context in which they were created [65]. The need to use existing standards is therefore clear. In the CH domain, the CIDOC CRM is an ISO standard that serves as the domain ontology for data integration [66].

Figure 6.

The standard conundrum ©Randall Munroe, Standards (xkcd #927).

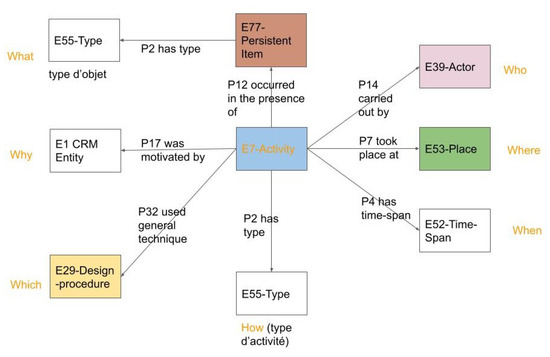

As shown previously (Section 4.4 and Section 4.5), W7 and PROV each have their own merits for expressing provenance information. The question is no longer how to align data to one ontology but how to align data to these different ontological models and how to switch from one ontology to another depending on the needs. The proliferation of single-use, project-driven ontologies has created a new challenge: ontology matching [67] and ontology alignment. The integration question is shifting from data-to-ontology to ontology-to-ontology. In [68,69], nine scenarios are designed for building ontology networks. In [70], the expression modular ontology modeling is coined for the representational granularity of an ontology defined by the modeling choices. In [71], this issue is called the ontology silo problem: data organized by different ontologies de facto constitute independent semantic data silos. The authors propose a mapping between PROV-O and the Basic Formal Ontology (BFO). Likewise, in a previous work [39], we adopted a similar perspective to demonstrate the compatibility between the CIDOC CRM ontology and the W7 model. Since the CIDOC CRM and W7 are both event-oriented, Figure 7 represents the W7 model expressed in CIDOC CRM. The figure presents the CIDOC CRM classes and properties needed to retrieve information about the different W-questions of the W7 model. A CIDOC CRM-compatible query path is proposed for each of the W-branches: the semantics of the W7 model is covered by the scope of CIDOC CRM classes and properties. In the next section, the challenge of provenance in cultural heritage guides the conceptual modeling with the existing models: W7, PROV, and CIDOC CRM.

Figure 7.

The metadata model W7 (Who, Where, When, What, How, Which, and Why) expressed in CIDOC CRM (©Anaïs Guillem, Digital Data WG, CNRS) [39].

5. Conceptual Modeling: Hybrid Model of Provenance and Interoperability

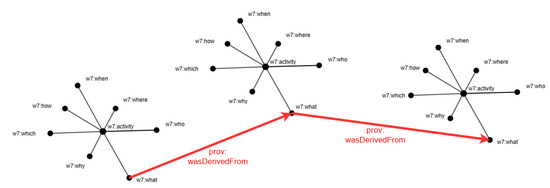

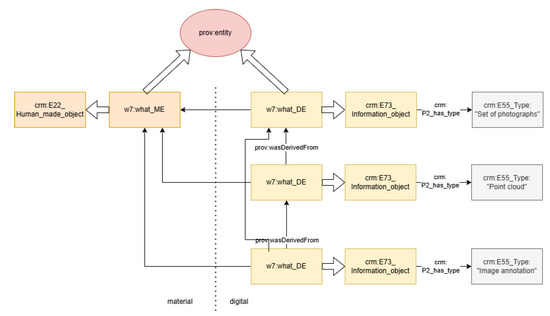

Multiple models express different facets of provenance. This section explores how these models tackle the question of provenance and where they overlap, if at all. The objective is to formalize provenance in CH as something multifaceted rather than being confined to a single ontological frame. The event-oriented CIDOC CRM ontology is well suited to documenting activities, while PROV captures the provenance information, sequential nature, and lineage of activities, tying people and objects together (Figure 8). W7 is not an ontology as such but a model that facilitates understanding of the context of an activity. Following the NeOn methodology, we explore scenarios 2 (reusing and re-engineering non-ontological resources), 3 (reusing ontological resources), and 4 (reusing and re-engineering ontological resources) [69]. This section aims at reconciling these ontological and non-ontological resources to find ontological knots that make it possible to shift from one model to the other.

Figure 8.

The recording of W7-based metadata for three activities and the derivation relation between entities, expressed by the property prov:wasDerivedFrom. The three activities (acquisition, processing, and annotation) lead from Timber 4076, the w7:what as material entity, to the w7:what as digital entities resulting from these activities (photogrammetry photography dataset and photogrammetry 3D point cloud) (©Anaïs Guillem, Digital Data WG, CNRS).

5.1. W7: Semantic Artefact for Provenance Information

W7 is useful when dealing with a single activity and recording. The documentation is simple enough: the questions What, When, Where, How, Who, Which, and Why are fundamental questions in any natural language. In [55], the W7 model is used for data provenance or data lineage as follows:

What denotes an event that affected something during its lifetime; When refers to the time at which the event occurred; Where, is the location of the event; How, is the action leading up to the event; Who, refers to agent involved in the event; Which, is the program or instrument used in the event; and Why, the reason for the event. In certain cases, they may also refer to a group of entities, for example, a group of agents or the use of multiple instruments.

We choose to extend the W7 model [55]. The first change is the declaration of w7:activity (Figure 8). The semantics of What can shift: the modeling choice is to cluster digital entities and material ones under the What, insofar as the material object is the object of study and the multiple digital objects are derivatives that ultimately refer to the material object (Figure 8). The What dimension describes both material and digital entities involved in the process. In the photogrammetric acquisition, the material entity is the extrados of the cathedral choir, while the digital entity consists of a collection of photographic images. In photogrammetric processing, the input is the aforementioned images, and the output is a dense point cloud (with relatively oriented images), reflecting the data transformation through processing.

Aside from this change in the meaning of the What, the W7 framework remains stable and ensures the traceability of research activities across seven key dimensions, for example, as follows:

- The Who identifies the actors involved, such as Art Graphique et Patrimoine (AGP) for acquisition and Roussel Roxane (MAP-CNRS) for processing and annotation.

- The When and Where dimensions provide temporal and spatial references, linking datasets to precise moments (acquisition in 2019, processing in 2022) and locations (Notre-Dame de Paris for acquisition, CNRS campus in Marseille for processing and annotation).

- The How documents methodologies: drone-based image acquisition for photogrammetry processing to generate point clouds.

- The Which details technical configurations (number of images captured, camera specifications) and software used.

- The Why contextualizes these activities, linking them to broader research objectives, like the documentation of post-fire structural condition or the location annotation of wooden remains that have fallen on the vaults.
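The dimensions listed above can be gathered in one record per activity. The following is a minimal sketch in Python, assuming a hypothetical `W7Record` class: the class, its field names, and the `missing()` helper are introduced here for illustration only and are not part of the W7 model or of the PAWs schemas. The values are those quoted above for the photogrammetric acquisition; the Which and the Why are deliberately left empty to show how documentation gaps surface.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional


@dataclass
class W7Record:
    """Hypothetical container for the seven W-dimensions of one activity."""
    what: List[str] = field(default_factory=list)   # material and digital entities
    when: Optional[str] = None
    where: Optional[str] = None
    how: Optional[str] = None
    who: List[str] = field(default_factory=list)
    which: List[str] = field(default_factory=list)
    why: Optional[str] = None

    def missing(self) -> List[str]:
        """Return the names of the dimensions that are still undocumented."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Values taken from the acquisition example in the text.
acquisition = W7Record(
    what=["extrados of the cathedral choir", "collection of photographic images"],
    when="2019",
    where="Notre-Dame de Paris",
    how="drone-based image acquisition",
    who=["Art Graphique et Patrimoine (AGP)"],
)

print(acquisition.missing())  # ['which', 'why']
```

Used this way, the record behaves as the metadata checklist described in this section: an empty dimension is reported rather than silently ignored.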

The What question is central in digital cultural heritage. It echoes the recent research topic of digital twins in CH, which examines the relation between the material and the digital, as well as the information exchange between the two [43,72]. Whether one calls it HC4 Heritage Digital Twin [73], prov:Entity, or w7:what, as we show in this article, we want to be able to answer the following key competency questions about the What, that is, the material object or its associated digital surrogates (Table 1):

Table 1.

Competency Questions (CQs) of Provenance.

In the sections research context (Section 2) and use case (Section 3), the heterogeneous nature of the data has been highlighted. This heterogeneity goes together with different levels of documentation. Due to the operational context of the restoration, the creation of data and documentation has been tied to the restoration activities. Suffice it to say, there are gaps in the provenance information that need to be filled. It is not always possible to retrieve the complete provenance information. The W7 model works as a metadata checklist that is flexible and generic enough for adjusting to what domain experts could need.

5.2. Modular Modeling of Provenance: W7, PROV, and CIDOC CRM

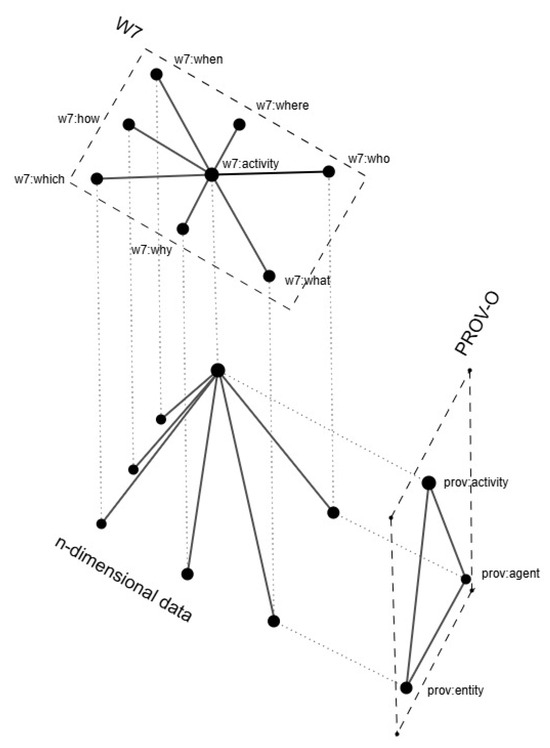

Given the n-dimensional nature of the data, the need to comprehend and represent fuzzy information, and the well-accepted guideline of maximum reuse of existing models, interfacing existing models like PROV, W7, and CIDOC CRM becomes necessary. W7 and CIDOC CRM are event-oriented; PROV is object-oriented. Both aspects are facets of provenance. CIDOC CRM is verbose in terms of modeling [74], whereas PROV is more economical. There is also a difference in modeling granularity: W7 is generic, while CIDOC CRM is more specific to the CH domain and is a pillar of CH ontology modeling. There is a need for openness and for interfaces with other conceptual models.

The core innovative aspect of this article lies in the idea that n-dimensional data can be expressed and transformed into different views depending on the way one wishes to analyze the data (Figure 9). Different ontologies are represented as different frames that let one explore different aspects, in the same way that spatial projections allow one to visualize different views of a geometrical space. Mapping data to an ontological model is like a projection with a specific frame. For example, the provenance information of n-dimensional data can be expressed with different ontological models like PROV, W7, or CIDOC CRM. Each of the models acts as an ontological frame that creates predefined views. In a plan or a section, the chosen projection represents only part of the elements; other elements exist but might not be visible in the representation. Similarly, what is not covered by the scope of an ontology might not be represented properly, or at all, in the chosen view. The same data mapped with PROV, W7, or CIDOC CRM present different facets and granularity of the provenance information, depending on the ontology they are mapped with. The metaphor ends as soon as we consider the number of dimensions: geometry has three dimensions, whereas n-dimensional data has as many dimensions as semantic facets.

Figure 9.

Interfacing models of provenance (©Anaïs Guillem, Digital Data WG, CNRS).

5.3. Persistence and Referenceability of Semantic Resources

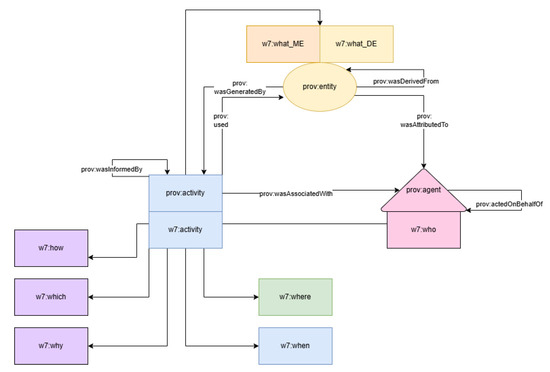

To guarantee data uniqueness, persistence, disambiguation, and referenceability, unique identifiers (ARK-based URIs) are assigned to the following key elements in the research workflow (Figure 10). This structured assignment of identifiers allows for a coherent representation of research workflows and data provenance. The construction of these URIs follows a sustainable and evolutive approach for the provenance documentation. The usual documentation approach, which has already been validated, relies on established controlled vocabularies [25] and structured repositories to define and standardize key entities such as Who (actors) [75], What (material and digital entities) [76,77], Where (places and spaces) [78], and How (techniques for analysis and observation) [79]. The implementation of controlled vocabularies and SKOS thesauri as semantic artefact references is further shown in Section 6.2.

Figure 10.

Hybrid model based on PROV-O and W7. Material objects (ME for Material Entity) (artefacts/building, etc.) and digital objects (DE for Digital Entity) are clustered as prov:Entity (©Anaïs Guillem, Digital Data WG, CNRS).

By aligning with frameworks like CIDOC CRM and PROV (Table 2), this method ensures semantic interoperability across CH and HS data. The integration of PROV and CIDOC CRM is automatically inferred (Figure 11) whenever possible. For instance, a detected processing step is associated with a prov:Activity, while its resulting 3D model is linked to a crm:E73_Information_Object.

Table 2.

Overview of the key elements of provenance.

Figure 11.

Hybrid model based on PROV-O, CIDOC CRM and W7 expressing the relationships about the What: between material objects (ME for Material Entity) (artefacts/building etc) and the digital objects (DE for Digital Entity) resulting from digitization and digital derivations (©Anaïs Guillem, Digital Data WG, CNRS).

5.4. Paradata Adaptor W* (PAWs)

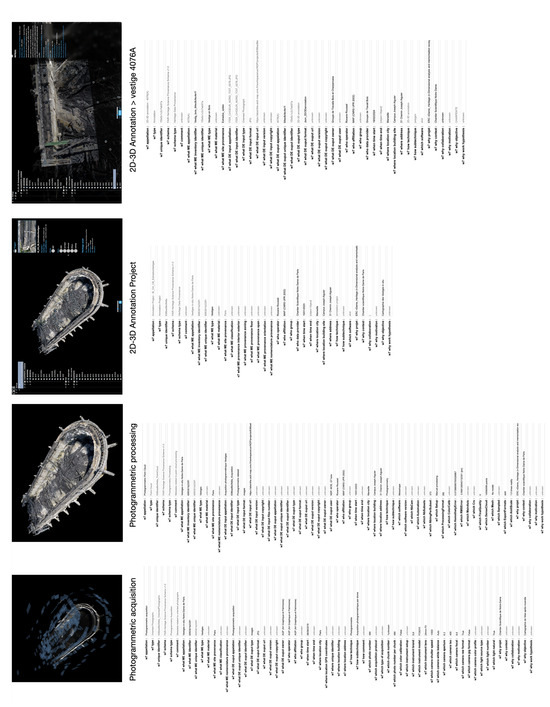

The modular conceptual modeling of provenance is supported by the metadata schemas called Paradata Adaptor W* (PAWs). Following the use case, this complementary documentation [80] comprises the PAW metadata schemas for the different activities: data acquisition, data processing, and Aïoli annotation (Figure 12). They are based on the W7 model and clustered into the different W*-branches. In our case study, the metadata collection is guided by the PAW metadata schemas. Once the required information is identified in agreement with the user’s expertise, it is important to choose an appropriate storage and communication format as well as a way to validate the data. The last few years have seen the growing use of JSON for this purpose, thanks to its lightweight nature. A JSON document can be validated against a JSON Schema, which defines its structure. Conveniently, a JSON Schema is itself a JSON document. PAWs are JSON documents pre-mapped with the W7 model, representing diverse activities, granularity of metadata documentation, and various patterns in the provenance.
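To make the validation idea concrete, the sketch below checks a JSON record against a heavily simplified, hypothetical PAW schema for an acquisition activity. The schema content, the choice of required keys, and the `check_record` helper are assumptions made for illustration; they are not the published PAWs schemas [80]. The helper implements only the `required` keyword and top-level `type` checks, so it stands in for a full JSON Schema validator rather than replacing one.

```python
import json

# Hypothetical, heavily simplified PAW schema for an acquisition activity,
# written with JSON Schema keywords ("type", "required", "properties").
PAW_ACQUISITION_SCHEMA = {
    "type": "object",
    "required": ["what", "when", "where", "who"],
    "properties": {
        "what": {"type": "array"},
        "when": {"type": "string"},
        "where": {"type": "string"},
        "who": {"type": "array"},
        "how": {"type": "string"},
        "which": {"type": "array"},
        "why": {"type": "string"},
    },
}

# Mapping from JSON Schema type names to Python types (subset only).
_TYPES = {"object": dict, "array": list, "string": str}


def check_record(record, schema):
    """Return a list of problems; an empty list means the record passes.

    Only "required" and top-level "type" checks are implemented here.
    """
    if not isinstance(record, _TYPES[schema["type"]]):
        return ["record is not of type " + schema["type"]]
    problems = []
    for key in schema.get("required", []):
        if key not in record:
            problems.append("missing required key: " + key)
    for key, rule in schema.get("properties", {}).items():
        if key in record and not isinstance(record[key], _TYPES[rule["type"]]):
            problems.append("wrong type for key: " + key)
    return problems


# A record with one missing dimension and one wrongly typed dimension.
raw = '{"what": ["photographic images"], "when": "2019", "who": "AGP"}'
print(check_record(json.loads(raw), PAW_ACQUISITION_SCHEMA))
# ['missing required key: where', 'wrong type for key: who']
```

Returning a list of problems, instead of stopping at the first one, matches the curation workflow: the expert sees every gap in a record at once.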

Figure 12.

Collection of metadata sets (belonging to interconnected activities—photogrammetric acquisition/photogrammetric processing/annotation project/single annotation) for validating and enriching data provenance (©Anaïs Guillem, Livio De Luca, Digital Data WG, CNRS).

5.5. Provenance Levels of Detail (PLoD)

In this paper, we define the granularity of information as Semantic Level of Detail (SLoD). A Level of Detail (LoD) is generally used in the architecture, engineering, and construction industry to characterize the level of information available and necessary in relation to scale and the advancement of the design project. This use has shifted from material to digital with the use of LoD as the characterization of the accuracy or precision of 3D models and Building Information Modeling (BIM). In the case of Notre-Dame de Paris, the different perspectives of the research context lead us to broaden this concept of LoD to incorporate different granularity of meaning, that is, semantics. This is what we call Semantic Level of Detail. More specifically, we are interested in the question of provenance and posit the Provenance Levels of Detail (PLoD). The Provenance Level of Detail can be defined as different degrees of complexity and granularity of the provenance knowledge, both in terms of digital entities and material ones (Table 3). In the table, four Provenance Levels of Detail (PLoD 1, PLoD 2, PLoD 3, and PLoD 4) are set out using different semantic artefacts. They describe different complexity and granularity of provenance in the documentation.

Table 3.

The definition of the Provenance Levels of Detail (PLoDs) and the associated semantic artefacts.

Why do we need to define and use these Provenance Levels of Detail (Table 3)?

In PLoD 1, the provenance information about Who is very generic. For example, w7:who can be a person, an institution, or a person acting on behalf of someone else, because the W7 model clusters all the information about Who. This is natural for humans and human queries: a question like Who is responsible for an activity can be easily answered without any distinction between people and organizations. The W7 model is accessible to non-specialists, and it is also well suited to guiding the data modeling and identifying metadata documentation gaps. It is easily transferable and applicable in different contexts.

However, such generic information is challenging to represent for machine readability. Hence, we need more granular provenance information, using models that can generalize over different entities, often treating them as agents responsible for the Who aspect of W7. In this case, one might need PLoD 2, PLoD 3, or PLoD 4. The granularity and precision of provenance information are linked to the semantic artefacts with which it has been structured. The limits of W7 are significant: unlike proper ontologies, whose properties (for example, their expressivity or the intension of their concepts) have been studied theoretically in detail, the semantics of the W7 model have not been formalized. The W7 model may therefore lead to inconsistencies in use. For example, the What is sometimes understood as the activity and sometimes as a thing. Semantic inconsistencies are also common between How and Which. This fuzziness is challenging. Expert knowledge is required to clearly classify the different aspects of an activity or an event. This is the role of PLoD 2: to record user-dependent information with specific metadata schemas. PLoD 2 bridges the metadata specification based on the W7 model (PAWs) and expert knowledge. From the integration perspective, W7 is not expressive enough; one needs a mapping or ontology matching with formal ontologies, such as CIDOC CRM as the CH domain ontology and PROV as the W3C provenance model. In this case, PLoD 3 is indicated for describing provenance information based on the PROV specification and PLoD 4 for CIDOC CRM-compatible information.

The Provenance Level of Detail characterizes the various degrees of knowledge and information of provenance that we have to manipulate in CH and HS documentation. It also gives a frame for the data curation and validation at different moments of the digital data life cycle.

6. Implementation

This section focuses on the transition from the metadata files to a property graph dedicated to provenance exploration. The implementation relies on a Neo4j graph database. The semantic modeling of provenance is tested in a proof-of-concept implementation: it allows testing metadata and paradata enrichment through parsing/mapping and expert-knowledge infusion. To automate the matching between the conceptual model and data from the Aïoli database, we present the following elements: (Section 6.1) building the graph database, (Section 6.2) the Aïoli API, (Section 6.3) the use of Paradata Adaptor W* (PAWs) schemas, (Section 6.4) parsing and creating a graph from key-value matching, (Section 6.5) provenance queries, and (Section 6.6) graph visualization.

6.1. Building the Graph Database

The integration of heterogeneous data sources presents a fundamental challenge in the mapping and transformation of data [81]. Our approach combines structured metadata, retrieved in part automatically from Aïoli, and qualitative metadata, provided in part manually by experts (Figure 12). The challenge stems from the coexistence of distinct categories of information with different granularity. To address this challenge of multilevel provenance interrelations, we considered various database architectures, each with its own strengths and limitations [82,83]. Since the main need is quick prototyping and exploration of data, we opted for a Neo4j property graph database. The ingestion of provenance metadata into Neo4j is performed using APOC (Awesome Procedures On Cypher), a library for Neo4j that provides advanced procedures and functions for data transformation, conversion, and import/export operations. In our case, APOC performs the mapping of structured JSON documents into a labeled property graph, without the need for manual Cypher scripting. This includes dynamic creation of nodes, relationships, and properties from nested JSON structures using procedures such as apoc.load.json and apoc.create.node.
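The shape of this transformation can be pictured outside the database. The sketch below is plain Python and makes no Neo4j or APOC calls; it is not the pipeline used in the prototype. It assumes a hypothetical record layout in which scalar values become properties of an Activity node and nested objects become separate nodes linked to it. The function name, the identifier scheme, and the `HAS_` prefix for relationship types are illustrative choices.

```python
def record_to_graph(activity_id, record):
    """Turn one nested activity record into node and relationship lists.

    Scalar values become properties of the activity node; nested objects
    become separate nodes linked to the activity.
    """
    activity = {"id": activity_id, "label": "Activity", "properties": {}}
    nodes, relationships = [activity], []
    for key, value in record.items():
        if isinstance(value, dict):
            node_id = f"{activity_id}/{key}"
            nodes.append({"id": node_id, "label": key.capitalize(),
                          "properties": dict(value)})
            relationships.append((activity_id, "HAS_" + key.upper(), node_id))
        else:
            activity["properties"][key] = value
    return nodes, relationships


# Illustrative record; the layout is an assumption, the values come from the use case.
record = {
    "name": "photogrammetric acquisition",
    "when": "2019",
    "agent": {"name": "Art Graphique et Patrimoine (AGP)"},
    "location": {"name": "Notre-Dame de Paris"},
}
nodes, relationships = record_to_graph("ac-1", record)
for relationship in relationships:
    print(relationship)
# ('ac-1', 'HAS_AGENT', 'ac-1/agent')
# ('ac-1', 'HAS_LOCATION', 'ac-1/location')
```

Keeping the result as plain lists of nodes and relationships makes the intermediate step easy to inspect before anything is written to the graph database.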

The implementation of a model requires the ability to flexibly match structured metadata with evolving data sources. In our case, we are faced with reconciling key-value structures originating from heterogeneous sources. The prototyping aims at building a mechanism capable of interpreting and integrating structured records to a property-graph database, ensuring consistency and extensibility.

6.2. Building the Aïoli API

We have developed a semi-automated pipeline that hybridizes extracted and manual metadata for graph representation (Figure 12). The Aïoli API is built from OpenAPI specifications [84] with the Swagger tool [85]. The API gives access to the data from the Aïoli NoSQL (CouchDB) database. The Aïoli-specific metadata is parsed into PAW-compliant records through a 2-step semi-automated alignment process.

(1) The first step is the automatic retrieval of technical provenance metadata extracted from the Aïoli data model. For example:

- The What (object or digital resource) is extracted from file references such as digital entity DE (pointCloud.path, orientedImages.path, and annotation identifiers) or material entity ME (Heritage asset);

- The Who derives from metadata fields such as authorId, groupId, or user profiles associated with annotations, and processed images and point clouds;

- The When is drawn from created or updated timestamps (heritage asset, project creation with point clouds and oriented images, annotation embedded in the project documents);

- The Where may be inferred from the heritageAsset.siteCode, geolocation metadata, or annotation positioning (2D and 3D positions in image or scene geometry);

- The Which (instrument or input) includes technical parameters such as camera models (camera.model), internal calibration data, or software used in the processing pipeline (in the case of Aïoli we use the processing logs);

- The How (method or process) is partially inferred from the project structure (file naming conventions), but often requires expert validation;

- The Why (purpose or intent) is generally not explicit in the original dataset and is captured through manual input in a dedicated interface.

(2) The second step is the infusion of human expert provenance knowledge to complement the technical metadata. An interactive web interface assists users in inspecting and editing activity records for dimensions requiring interpretation or missing. Provenance metadata of an annotation layer can be manually cross-checked against the information automatically extracted. This validation process prevents inconsistencies and enhances data reliability. This hybrid strategy, automated where possible and curated when necessary, ensures both semantic expressiveness and provenance consistency.
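The two steps can be sketched as follows. This is an illustration under stated assumptions, not the Aïoli data model or the pipeline itself: the dotted field paths reuse the names mentioned above (pointCloud.path, orientedImages.path, authorId, groupId, heritageAsset.siteCode, camera.model), while the `created` and `updated` keys, the sample values, and the rule that an expert value never silently overwrites an extracted one are hypothetical choices.

```python
def get_path(record, dotted_path):
    """Follow a dotted path such as 'camera.model'; return None if absent."""
    current = record
    for part in dotted_path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current


# Candidate source fields per W-dimension; the first one found wins.
FIELD_PATHS = {
    "what": ["pointCloud.path", "orientedImages.path"],
    "who": ["authorId", "groupId"],
    "when": ["created", "updated"],
    "where": ["heritageAsset.siteCode"],
    "which": ["camera.model"],
}


def extract_w7(record):
    """Step 1: automatic retrieval of the dimensions that can be read directly."""
    result = {}
    for dimension, paths in FIELD_PATHS.items():
        for path in paths:
            value = get_path(record, path)
            if value is not None:
                result[dimension] = value
                break
    return result


def merge_expert_input(automatic, expert):
    """Step 2: expert values fill gaps; disagreements are flagged, not overwritten."""
    merged = dict(automatic)
    conflicts = []
    for dimension, value in expert.items():
        if dimension in merged and merged[dimension] != value:
            conflicts.append(dimension)  # left for manual cross-checking
        else:
            merged[dimension] = value
    return merged, conflicts


# Placeholder values for illustration only.
record = {
    "authorId": "user-42",
    "created": "2022-03-01",
    "pointCloud": {"path": "clouds/example.ply"},
    "camera": {"model": "example-camera"},
}
expert = {"how": "photogrammetric processing", "who": "another-user"}

merged, conflicts = merge_expert_input(extract_w7(record), expert)
print(sorted(merged))  # ['how', 'what', 'when', 'which', 'who']
print(conflicts)       # ['who']
```

Flagging conflicts instead of resolving them automatically keeps the final decision with the expert, in line with the cross-checking described above.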

When loading JSON-based metadata, all data needs to be aligned with existing curated resources (Section 5.3), checking that all referenced identifiers exist and correspond to known entities. Beyond recognizing unique identifiers, we apply Natural Language Processing (NLP) techniques using spaCy. As an experimental setting, an initial test has been conducted using a similarity-based matching metric, the Levenshtein distance [86], for entity disambiguation, in order to establish correspondences between keys and values that may exhibit varying degrees of normalization. The Levenshtein distance is useful for correcting typos or small variations, but other distance metrics should be explored for semantic disambiguation on a large scale [87,88]. This is an identified limitation that needs to be addressed in future work.
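As an illustration of this kind of matching, the sketch below implements the Levenshtein distance in plain Python and uses it to align a free-text value with a small controlled vocabulary. The vocabulary terms, the `closest_term` helper, and the threshold of two edits are assumptions chosen for the example; the prototype's actual settings are not specified here.

```python
def levenshtein(a, b):
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def closest_term(value, vocabulary, max_distance=2):
    """Return the vocabulary term nearest to value, or None if too far."""
    normalized = value.strip().lower()
    best = min(vocabulary, key=lambda term: levenshtein(normalized, term.lower()))
    if levenshtein(normalized, best.lower()) <= max_distance:
        return best
    return None


# Illustrative controlled vocabulary.
vocabulary = ["photogrammetry", "point cloud", "annotation"]
print(closest_term("Photogrametry ", vocabulary))  # photogrammetry
print(closest_term("lasergrammetry", vocabulary))  # None
```

The second call shows the limitation noted above: an edit-distance threshold catches typos but says nothing about semantic proximity, so a related term that is spelled differently is simply rejected.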

6.3. Use of Paradata Adaptor W* (PAWs) Schemas

The structured metadata schemas PAWs (Section 5.4) [80], based on the W7 model, allow experts to enrich a given dataset with custom paradata. To handle large volumes of heterogeneous data, the metadata associated with each activity (Figure 12) is stored in a structured JSON format following the PAWs schemas. Additional nodes and relationships can thus be generated as new metadata fields become relevant or as further dimensions of the PAW* schemas are explored, recording paradata clustered by W-questions (i.e., How, Which, Why). The system is designed to allow re-parsing and integration at any time, so that the knowledge base can evolve iteratively, integrating both newly acquired data and refined semantic interpretations over time. It accommodates granularity: annotations can be processed at different semantic levels of detail. By linking data through provenance, this method allows for the integration of previously disconnected annotations and the execution of complex queries that combine human-curated data with automatically extracted metadata.

6.4. Populating the Graph from Key-Value and Mapping PROV, CIDOC CRM, and W7, Leveraging Different PLoDs

The major nodes chosen for this approach are Material Entity (ME), Digital Entity (DE), Activity (Ac), Agent, and Location. Each node in the graph is described with structured properties aligned with CIDOC CRM and PROV ontologies. At the origin, metadata is encapsulated in the Activity (Ac) nodes following our proposal of extended W7 scheme (Section 5). In this encapsulated form, the key provenance information corresponds to PLoD 1 (Section 5.5 and Table 3).

The provenance information is progressively expanded using PAWs with more detailed paradata, which corresponds to PLoD 2. This expansion creates distinct nodes. Material Entities (ME) describe physical heritage objects through properties like material, type, site provenance, and nomenclature provenance. Digital Entities (DE) include more attributes such as type, format, owner, version, and identifiers. Activities (Ac) are documented with a name, a type, and a unique identifier. Agents and Locations are extracted based on mentions in the activity metadata, with at least a name and optionally more contextual details. When the data is transformed using PROV, it conforms to PLoD 3. When the data is mapped with CIDOC CRM, the provenance information corresponds to PLoD 4. The same logic is applied to edges; for example, HAS_AGENT is mapped to crm:P14_carried_out_by and prov:wasAssociatedWith.
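The switch between levels can be pictured as a lookup keyed by the requested PLoD. In the sketch below, the HAS_AGENT mapping is the one quoted in the text, and prov:Activity and crm:E73_Information_Object are the classes named in Section 5.3; the other table entries (crm:E7_Activity, crm:E39_Actor, the node label names) and the function names are illustrative assumptions, not the mapping tables of the prototype.

```python
# HAS_AGENT and its two targets are quoted in the text; other entries are assumptions.
EDGE_MAPPING = {
    "HAS_AGENT": {"prov": "prov:wasAssociatedWith", "crm": "crm:P14_carried_out_by"},
}
NODE_MAPPING = {
    "Activity": {"prov": "prov:Activity", "crm": "crm:E7_Activity"},
    "Agent": {"prov": "prov:Agent", "crm": "crm:E39_Actor"},
    "DigitalEntity": {"prov": "prov:Entity", "crm": "crm:E73_Information_Object"},
}

# PLoD 1 and 2 stay in the W7/PAWs vocabulary; PLoD 3 uses PROV; PLoD 4 uses CIDOC CRM.
PLOD_VOCABULARY = {1: None, 2: None, 3: "prov", 4: "crm"}


def project_label(label, plod):
    """Express a node label at the requested Provenance Level of Detail."""
    vocabulary = PLOD_VOCABULARY[plod]
    if vocabulary is None:
        return label
    return NODE_MAPPING.get(label, {}).get(vocabulary, label)


def project_edge(source, relation, target, plod):
    """Express one graph edge at the requested Provenance Level of Detail."""
    vocabulary = PLOD_VOCABULARY[plod]
    if vocabulary is None:
        return (source, relation, target)
    mapped = EDGE_MAPPING.get(relation, {}).get(vocabulary, relation)
    return (source, mapped, target)


edge = ("processing", "HAS_AGENT", "MAP-CNRS")
for plod in (1, 3, 4):
    print(plod, project_edge(*edge, plod))
# 1 ('processing', 'HAS_AGENT', 'MAP-CNRS')
# 3 ('processing', 'prov:wasAssociatedWith', 'MAP-CNRS')
# 4 ('processing', 'crm:P14_carried_out_by', 'MAP-CNRS')
```

Unmapped labels and relations fall back to their original name, so a partially mapped graph can still be projected without losing edges.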

6.5. Provenance Queries

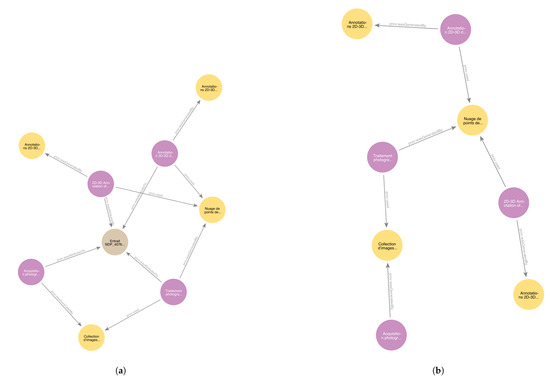

Provenance knowledge captures the links between different scientific processes, data outputs or inputs, and contributing researchers. The tracking of scientific workflows highlights dependencies between heritage artifacts, digital representations, and actors of the knowledge enrichment. In addition to their labels and basic properties, the resulting nodes and relationships carry explicit mappings to PROV and CIDOC CRM entities, stored as properties such as provClass, crmClass, provRelation, or crmProperty. While only simple Cypher queries are shown in this paper for clarity, the system supports more advanced patterns, such as recursive traversals across prov:wasDerivedFrom paths or semantic filters on crm:E55_Type which allow users to construct views of the knowledge provenance chains (Figure 13). In the following table (Table 4), we list the queries in Cypher and sample query results, built on our initial competency questions presented in Table 1.
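The recursive traversal across prov:wasDerivedFrom paths mentioned above can be illustrated independently of the query language. The sketch below is plain Python over a toy derivation table; in the graph database, a variable-length path pattern in Cypher would play this role. The entity names follow the example of Figure 8, and the assumption that each entity derives from a single parent is a simplification made for the example.

```python
# Toy derivation table: each entity points to the entity it was derived from
# (the direction of prov:wasDerivedFrom). Single-parent chains are assumed.
WAS_DERIVED_FROM = {
    "photogrammetry 3D point cloud": "photogrammetry photography dataset",
    "photogrammetry photography dataset": "Timber 4076",
}


def lineage(entity, derived_from):
    """Walk prov:wasDerivedFrom links back to the original entity."""
    chain = [entity]
    seen = {entity}
    while chain[-1] in derived_from:
        parent = derived_from[chain[-1]]
        if parent in seen:  # guard against cyclic data
            break
        chain.append(parent)
        seen.add(parent)
    return chain


for step in lineage("photogrammetry 3D point cloud", WAS_DERIVED_FROM):
    print(step)
# photogrammetry 3D point cloud
# photogrammetry photography dataset
# Timber 4076
```

Read from bottom to top, the printed chain is the lineage from the material object to its digital derivatives that competency question CQ2 asks for.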

Figure 13.

(a) Test query CQ2: Show me the lineage of derivations from the material object to the digital objects about Timber 4076-A? (b) Test query CQ3: What is the sequence of activities and the sequence of results of these activities together about extrados voûte? (©Anaïs Guillem, Livio De Luca, Digital Data WG, CNRS).

Table 4.

Competency questions (CQs), queries in Cypher, and query result sample.

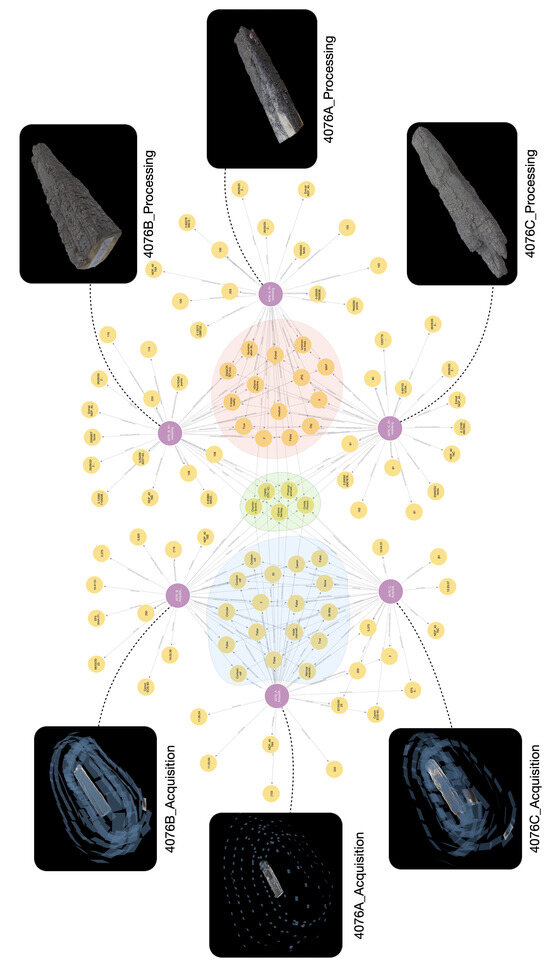

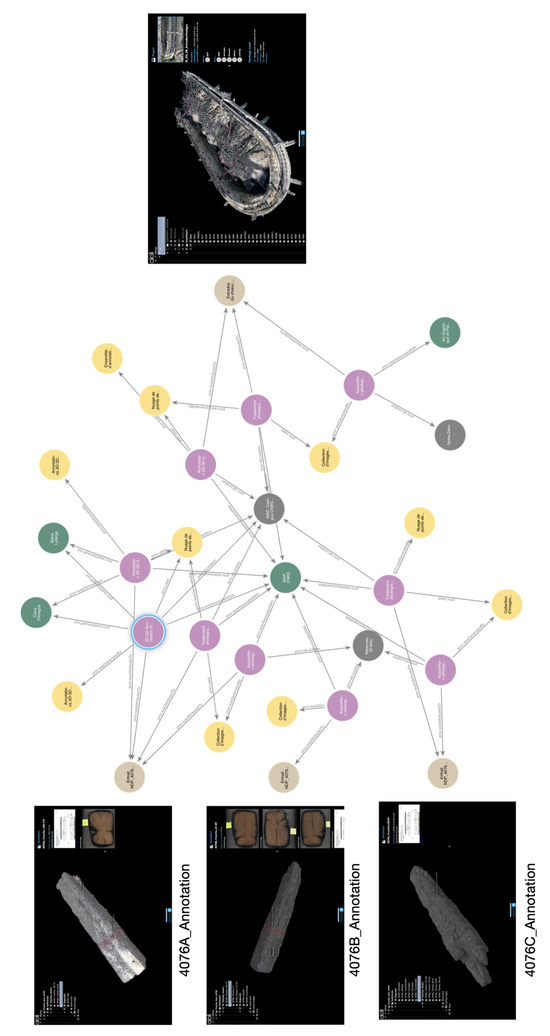

6.6. Graph Visualization

Once the provenance metadata has been parsed and validated, it is visualized as a graph-based representation (Figure 14). The figure displays the common information, i.e., matching values belonging to the acquisition or processing, as clusters for the artefacts Timber 4076 A/B/C.

Figure 14.

Different photogrammetric setups for the three artefacts Timber 4076 (A/B/C). Clusters represent the common settings/configurations (matching values) belonging to activities related to the acquisition/processing of the three parts of the artefact: blue for the photogrammetric acquisition, red for the photogrammetric processing, green for all activities (©Anaïs Guillem, Livio De Luca, Digital Data WG, CNRS).

The visualization of these connections enhances interpretability, providing a clear representation of the workflows, methodologies, and scientific processes involved in the study of cultural heritage. The graph visualization (Figure 15) illustrates the integration of heterogeneous data related to the use case from the perspective of provenance. The resulting provenance graph (Section 6.4) connects the activities (acquisition, processing, annotation) to the material entities (vestiges, architectural elements), the digital entities (point clouds, images, annotations), and the agents (researchers, institutions). It illustrates the potential of integrating massive heterogeneous data from the perspective of provenance.

Figure 15.

Resulting graph with related digital entities and annotation projects for the use case (©Anaïs Guillem, Livio De Luca, Digital Data WG, CNRS).

7. Discussion

This paper started by identifying the issue of standards proliferation and the ontology-to-ontology mapping challenge (Section 4.6). One could question the usefulness of a custom intermediate schema (PAW*) based on W7 and mapped to the PROV and CIDOC CRM ontologies (Section 5). W7 may appear to be an insufficient specification for representing provenance granularity. Choosing W7 centralizes the mapping on the PAWs, intermediate custom schemas whose stability and documentation can be questioned. An alternative solution would have been to build a JSON template natively calibrated on the PROV and CIDOC CRM entities to be described. The objection is valid: the methodology and examples of similar workflows exist and have already been put into practice [89]. The approaches are far from incompatible: in the Linked Art project, for instance, a hybrid model (LUX) is based on the CIDOC CRM ontology and used as a modeling template.

Our approach brings something new in that W7 and the PAWs specify what can be considered as various Provenance Levels of Detail (PLoD). The Provenance Level of Detail records different degrees of complexity and granularity of provenance knowledge. It makes intuitive sense for humans and captures the intrinsic, relevant provenance information, because the W7 model itself is built on fundamental interrogative elements (W-questions) that elicit information about the What, the When, the Where, the Who, the How, the Which, and the Why. The provenance knowledge is established with a methodology of data archaeology (Section 4.3): some provenance information is based on observation and technical metadata, while other information emerges during discussions with the data producers. The provenance information therefore varies in granularity and precision. This approach helps document datasets with little or no metadata by supplementing at least some provenance information. It lowers the barrier of this initial step, which has been identified as the main obstacle, and facilitates the discussion about metadata documentation with non-specialists. It also offers the flexibility to document detailed metadata with higher granularity. This flexibility makes it possible to change the Provenance Level of Detail depending on the information available.
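To make the flexibility concrete, the sketch below shows how the same W7-shaped record can hold either sparse or detailed provenance. It is an assumption-laden illustration: the record layout, the field values, and the coverage ratio are hypothetical and do not reproduce the PAW schemas or the definition of the PLoD given in Section 5.5.

```python
# The seven W-questions of the W7 model, as listed in the text.
W7_QUESTIONS = ("what", "when", "where", "who", "how", "which", "why")


def answered(record):
    """List the W-questions for which the record holds a non-empty answer."""
    return [q for q in W7_QUESTIONS if record.get(q) not in (None, "", [], {})]


def coverage(record):
    """Share of W-questions answered (an illustrative indicator only)."""
    return len(answered(record)) / len(W7_QUESTIONS)


# A dataset with almost no metadata: only what can be observed.
sparse = {"what": "point cloud", "when": "unknown year, file timestamp only"}

# The same kind of dataset after discussion with the data producers
# (all values are placeholders).
detailed = {
    "what": "point cloud",
    "when": "acquisition campaign date",
    "where": "extrados of the vault",
    "who": "acquisition team",
    "how": "photogrammetric processing",
    "which": "software and camera used",
    "why": "documentation of the vestiges",
}

print(answered(sparse), coverage(sparse))
print(answered(detailed), coverage(detailed))
```

Both records are valid under the same structure, so documentation can start at a low level of detail and be enriched later without changing the schema.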

8. Conclusions

Bridging the provenance gap in data and research activities is possible once the question of provenance is understood beyond data provenance alone and extended to knowledge provenance. From the development of the modular provenance model to the visualization of the resulting provenance graph, we demonstrated how to reconstruct the lineage of data from heterogeneous data and activities, and how to reconstruct coherent provenance knowledge for the case study. The PAW W7 framework captures contextual provenance metadata with various levels of precision and granularity. The flexibility of the W7 model and the PAW metadata schemas makes it possible to use provenance relations to bridge the knowledge gap. One of the key strengths of this approach is its ability to support fine-grained, multi-dimensional querying and exploratory analysis across the full provenance chain, from raw photogrammetric data to annotated 3D data, ensuring traceability and contextual integrity. It represents a major advance in the documentation and analysis of heritage data, bridging the gap between metadata management and meaningful interpretation.

In the logic of data exploration and scientific research, the innovation lies in the conceptual modeling of this modular provenance (Section 5). Cultural heritage data is inherently complex and evolving. It requires a granular, modular, dynamic approach to complement the structured identification of entities. This article shows how to lay the foundational layer of provenance knowledge for CH data conceptualized as a Provenance Level of Detail (PLoD) (Section 5.5). The exploration of the Provenance Levels of Detail is possible thanks to the modular semantic modeling that allows projecting n-dimensional data using different ontological frames (Figure 9). The implementation supports this demonstration with a real-life data example (Section 6).

Our methodology thereby opens the way to an exploration of heterogeneous data beyond disciplinary dogma or expert specialties, which is the direction of our future work. By analyzing the data further, we aim to detect emerging patterns and new conceptual entities not yet formalized in the existing ontologies. New concepts can then be stabilized and documented, refining the scope of heritage knowledge representation. This iterative refinement is possible because the proposed metadata schemas remain flexible, extensible, and versatile enough to capture newly observed complexities, bridging structured semantic models with evolving research insights. Metadata and data are not just static descriptive elements but a rich source of knowledge. By analyzing recurrent patterns and their distributions across datasets, we can identify trends or concepts that require analysis and semantic stabilization. The provenance knowledge conceptualized and presented in this article gives the digital artefacts the semantic depth they need to deserve the name of digital twin. The digital twin becomes a new form of heritage: living, interconnected, intelligible, and open to a plurality of scientific and cultural perspectives that intersect, confront, and complement each other. In this way, it constitutes a veritable cathedral of knowledge: a digital space built collectively to connect and share knowledge about Notre-Dame de Paris.

Author Contributions

Conceptualization: A.G.; data curation: A.G., R.R., F.C., A.P. and L.D.L.; formal analysis: A.G.; methodology: A.G.; software: L.D.L. and V.A.; validation: A.G.; investigation, A.G., V.A. and L.D.L.; resources—photogrammetric acquisition, F.C., resources—Aïoli annotations: R.R.; data curation: A.G., R.R., F.C. and L.D.L.; writing—original draft preparation: A.G. (all manuscript), R.R. (section use case), F.C. (section use case), and L.D.L. (section implementation); writing—editing: A.G.; writing—review: A.G., L.D.L., V.A. and A.P.; visualization: A.G. and L.D.L.; supervision: A.G. and L.D.L.; funding acquisition: L.D.L. and V.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by ERC-2021-ADG ERC nDame_Heritage (Grant agreement ID: 101055423), the ANR project: Paris Seine Graduate School of Humanities Creation Heritage (PSGS-HCH - ANR-17-EURE-0021), and CNRS MITI interdisciplinary program Teatime project.

Data Availability Statement

The supplementary documentation—metadata json schemas PAWs (Paradata Adaptor W*)—is available upon request during the review period and will be open at the time of the publication of the article: https://gitlab.huma-num.fr/gt-cidoc-crm/w7/PAW_schemas [80] with the associated data samples.

Acknowledgments

In this section, the authors would like to acknowledge collaborators: Kévin Réby (K.R.), Pascal Benistant (P.B.), Daouda Ngom (D.N.), Simon Paolorsi (S.P.), Aurore Pfitzmann (A.PF.). Contributors roles: resources: P.B. and D.N.; software: D.N. and S.P.; writing—review: K.R.; project administration: A.PF.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ember, S. This Is Not Fake News (but Don’t Go by the Headline). The New York Times, 3 April 2017.

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual, 3–10 March 2021; ACM: New York, NY, USA, 2021; pp. 610–623.

- UNESCO. UNESCO Recommendation on Open Science; UNESCO: Paris, France, 2021.

- Guillem, A. Si les données de Notre-Dame nous étaient contées... Données ouvertes, ingénierie des connaissances et maïeutique numérique. In Proceedings of the n-Dame 2024: Une Cathédrale de Données Numériques et Connaissances Pluridisciplinaires, Marseille, France, 19–21 June 2024.

- Guillem, A.; Lercari, N. Global Heritage, Knowledge Provenance, and Digital Preservation: Defining a Critical Approach. In Preserving Cultural Heritage in the Digital Age-Sending Out an S.O.S.; Lercari, N., Wendrich, W., Porter, B.W., Burton, M.M., Levy, T.E., Eds.; Equinox eBooks Publishing: London, UK, 2022; pp. 26–41.

- Eliot, T.S. The Rock; Houghton Mifflin Harcourt: Boston, MA, USA, 2014.

- Kari, K. What Over 7 Years of Building Enterprise Knowledge Graphs Has Taught Me About Theory and Practise. In Proceedings of the Extended Semantic Web Conference (ESWC 2024), Inter IKEA Systems B.V., Hersonissos, Greece, 26–30 May 2024.

- Carboni, N.; Bruseker, G.; Guillem, A.; Castañeda, D.B.; Coughenour, C.; Domajnko, M.; de Kramer, M.; Calles, M.R.; Stathopoulou, E.; Suma, R. Data provenance in photogrammetry through documentation protocols. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; Volume III-5, pp. 57–64.

- Pamart, A.; De Luca, L.; Véron, P. A metadata enriched system for the documentation of multi-modal digital imaging surveys. Stud. Digit. Herit. 2020, 6, 1–24.

- Georgelin, A.G. Preface. J. Cult. Herit. 2024, 65, 1.

- Dillmann, P.; Liévaux, P.; De Luca, L.; Magnien, A.; Regert, M. The CNRS/MC Notre-Dame scientific worksite: An extraordinary interdisciplinary adventure. J. Cult. Herit. 2024, 65, 2–4.

- Chaoui-Derieux, D. Entre matériaux effondrés et vestiges, table de tri et base de données, opérations préventives et projets de recherche: Itinéraire physique et sémantique d’une cathédrale de données archéologiques. In Proceedings of the n-Dame 2024: Une Cathédrale de Données Numériques et Connaissances Pluridisciplinaires, Marseille, France, 19–21 June 2024.

- Zimmer, T.; Chaoui-Derieux, D.; Leroux, L.; Bouet, B.; Azéma, A.; Syvilay, D.; Maurin, E.; Mousset, F. From debris to remains, an experimental protocol under emergency conditions. J. Cult. Herit. 2024, 65, 5–16.

- Ball, P. The huge scientific effort to study Notre-Dame’s ashes. Nature 2020, 577, 153–155.

- Malavergne, O. La construction d’un modèle d’écosystème numérique: Actions documentaires et communautés. In Proceedings of the n-Dame 2024: Une Cathédrale de Données Numériques et Connaissances Pluridisciplinaires, Marseille, France, 19–21 June 2024.

- Abergel, V.; Arese, P.; Blettery, E.; Brédif, M.; Christophe, S.; Comte, F.; De Luca, L.; Eberlin, A.; Espinasse, L.; Gouet-Brunet, V.; et al. Le rôle transversal du groupe de travail “données numériques”. In Proceedings of the Naissance et Renaissance D’une cathédrale-Notre-Dame de Paris Sous L’oeil des Scientifiques, Paris, France, 22–24 April 2024.

- Néroulidis, A.; Pouyet, T.; Tournon, S.; Rousset, M.; Callieri, M.; Manuel, A.; Abergel, V.; Malavergne, O.; Cao, I.; Roussel, R.; et al. A digital platform for the centralization and long-term preservation of multidisciplinary scientific data belonging to the Notre Dame de Paris scientific action. J. Cult. Herit. 2024, 65, 210–220.

- De Luca, L.; Abergel, V.; Guillem, A.; Malavergne, O.; Manuel, A.; Néroulidis, A.; Roussel, R.; Rousset, M.; Tournon, S. L’écosystème numérique n-dame pour l’analyse et la mémorisation multi-dimensionnelle du chantier scientifique Notre-Dame-de-Paris. In Proceedings of the SCAN’22-10e Séminaire de Conception Architecturale Numérique, Lyon, France, 19–21 October 2022.

- Journée-atelier «Venez Avec Votre Objet Patrimonial (à) Augmenter»; EquipEx+ ESPADON; Auditorium Palissy; Fondation des Sciences du Patrimoine: Paris, France, 2025.

- Abergel, V.; Manuel, A.; Pamart, A.; Cao, I.; De Luca, L. Aïoli: A reality-based 3D annotation cloud platform for the collaborative documentation of cultural heritage artefacts. Digit. Appl. Archaeol. Cult. Herit. 2023, 30, e00285.

- Manuel, A.; Véron, P.; De Luca, L. 2D/3D semantic annotation of spatialized images for the documentation and analysis of cultural heritage. In Proceedings of the 14th Eurographics Workshop on Graphics and Cultural Heritage, Eurographics, Genova, Italy, 5–7 October 2016.

- Abergel, V. Visualisation interactive de données multidimensionnelles. In Proceedings of the n-Dame 2024: Une Cathédrale de Données Numériques et Connaissances Pluridisciplinaires, Marseille, France, 19–21 June 2024.

- Guillem, A.; Samuel, J.; Gesquière, G.; De Luca, L.; Abergel, V. Versioning Virtual Reconstruction Hypotheses: Revealing Counterfactual Trajectories of the Fallen Voussoirs of Notre-Dame de Paris Using Reasoning and 2D/3D Visualization. In The Semantic Web: ESWC 2024 Satellite Events; Meroño Peñuela, A., Corcho, O., Groth, P., Simperl, E., Tamma, V., Nuzzolese, A.G., Poveda-Villalón, M., Sabou, M., Presutti, V., Celino, I., et al., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2025; Volume 15345, pp. 201–219.

- Rousset, M. Opentheso, pour la gestion des vocabulaires contrôlés. In Proceedings of the n-Dame 2024: Une Cathédrale de Données Numériques et Connaissances Pluridisciplinaires, Marseille, France, 19–21 June 2024.

- Guillem, A.; Réby, K.; Samuel, J.; Malavergne, O.; Rousset, M.; Abergel, V.; Gesquière, G.; De Luca, L. LLMtheso: A Neuro-Symbolic AI Workflow with LLMs and SKOS to Transform Dirty Data into a Curated Thesaurus. (n.d.) manuscript in preparation.

- Comte, F.; Gattet, E. De l’acquisition à l’archivage: Solutions matérielles et logicielles intégrées pour la numérisation 3D du patrimoine de Notre-Dame. In Proceedings of the n-Dame 2024: Une Cathédrale de Données Numériques et Connaissances Pluridisciplinaires, Marseille, France, 19–21 June 2024.