1. Introduction

School dropout, defined as the premature interruption of formal education, arises from a complex interplay of socioeconomic, personal, and institutional factors. Dropping out of school has several negative consequences. At the individual level, it limits earning potential, employability, and social mobility. At the macroeconomic level, it impacts the distribution of human capital, contributing to income inequality and hindering socioeconomic advancement, thus perpetuating cycles of poverty and inequality [1]. As UNICEF [2] notes, disrupting the educational process deprives children and adolescents of the essential skills necessary to develop their potential, achieve economic independence, and actively participate in society. School dropout is also associated with a range of social problems, including a lower quality of life, poor health, and gender inequality. Furthermore, Wood et al. [3] highlight that high school dropout has been associated with higher rates of unemployment, incarceration, and mortality.

Early warning systems for dropout empower educators by identifying at-risk students early. This, in turn, allows educators to plan effective interventions to mitigate this serious problem. Such models have been successfully implemented worldwide [4,5,6,7], and their importance is underscored by UNICEF [2].

One of the inherent challenges in predicting student dropout is the imbalance between classes. The number of students who drop out is typically much lower than the number who remain in school, creating a significant data imbalance. As Fernández et al. [8] point out, this imbalance can negatively impact predictive performance. According to Chen et al. [9], classification models typically assume a balanced class distribution. However, class imbalance poses a challenge, as traditional algorithms tend to favor more frequent classes, potentially neglecting the less common ones. This imbalance leads to a variation in the performance of the learning algorithms, known as performance bias [9].

To address the challenges posed by imbalanced datasets, numerous methodologies have been proposed, intervening at different stages of the modeling process. These solutions can be broadly classified into data-level approaches, algorithm-level techniques, or hybrid strategies [9,10].

Data-level approaches directly modify the training data to rebalance class distributions, often through techniques like oversampling minority classes or undersampling majority classes. A key advantage of these methods is their independence from specific learning algorithms, allowing easy integration with a variety of models [9]. However, Altalhan et al. [10] point out that this approach may discard potentially useful information or introduce noise through synthetic data.

Algorithm-level approaches, by contrast, focus on adapting or developing machine learning algorithms to be more responsive to underrepresented groups [9]. Methods within this class encompass cost-sensitive learning, ensemble methods, and algorithm-specific adjustments [10]. While effective, these methods may not fully exploit the available data or may require complex adjustments for different algorithms. Finally, hybrid approaches strategically combine the advantages of both data-level and algorithm-level techniques to further improve performance. However, combining multiple techniques makes hybrid approaches complex, potentially leading to overfitting, increased computational demands, and a greater need for careful design. For a comprehensive overview of approaches to imbalanced problems, see [9,10].

In this paper, we focus on data-level approaches for four reasons: (i) they can be used with any classifier; (ii) they are easy to implement; (iii) they give direct control over the class distribution; and (iv) they are widely used, as indicated by the literature review. Numerous balancing techniques have been developed, including Random Undersampling (RUS), Synthetic Minority Oversampling Technique (SMOTE) [11], and Random Oversampling Examples (ROSE) [12], among others. SMOTE is frequently considered a benchmark balancing technique [9], as it attempts to address class imbalance by creating synthetic data points for the minority class.

Despite the inclination to use balancing techniques, some dropout classification models do not address the imbalance directly. These models, nevertheless, have demonstrated a satisfactory ability to identify at-risk students [13,14,15,16,17,18,19]. In contrast, other researchers automatically incorporate balancing methods into their dropout models [13,20,21]. Some have even investigated the best balancing method by comparing distinct approaches [22,23]. Still other works examine the efficiency of balancing methods by comparing models trained with and without these algorithms [24,25,26,27,28,29,30,31]. These studies suggest that the effect of balancing methods remains an open question.

A key conclusion from these works is that there is no universally optimal solution to the problem of imbalanced data. Some models built without balancing achieve strong results, while other studies find evidence supporting or refuting the use of balancing methods. Thus, testing various methods to choose the most appropriate for the data at hand would be the safest approach.

This research investigates the potential of simple balancing methods to improve the performance of classification models in predicting school dropouts for all state public high schools in Espírito Santo, Brazil, focusing on students in grades one, two, and three. Given the potential impact of imbalanced data on classifier performance, we tested three distinct balancing methods: RUS, SMOTE, and ROSE. RUS was chosen because of its simplicity and computational efficiency, while we chose SMOTE and ROSE to compare a traditionally used technique with an innovative one. While SMOTE is widely used and often considered a benchmark [8], ROSE offers a different approach by creating new data using statistical kernels, potentially expanding the decision region [12]. Furthermore, we noted a lack of applications of ROSE in the context of student dropout prediction. For classification in this study, we selected three prominent machine learning models: Logistic Regression (LR), Random Forest (RF), and Naive Bayes (NB). Logistic Regression was chosen for its inherent interpretability, allowing for a clear understanding of feature importance through its coefficients. Random Forest was selected for its ability to achieve high predictive accuracy by leveraging ensemble learning techniques. Finally, Naive Bayes was included due to its computational efficiency, ease of estimation, and demonstrated effectiveness in high-dimensional problems. These three models have been widely applied in the literature for predicting student dropout. We evaluated the performance of these classifiers after calibrating them with and without data balancing algorithms.

The results indicated that the inherent class imbalance in dropout data presents a significant challenge to effective model training. However, results also demonstrated that data balancing techniques, particularly RUS and ROSE, can improve model performance in terms of the majority of the metrics considered. While LR exhibits notable stability across different balancing techniques, RF models show greater variability, with RUS and ROSE generally producing the best results. Ultimately, we highlight the importance of carefully considering class imbalance and selecting appropriate balancing techniques, as the optimal choice may vary depending on the academic year and performance metric.

3. Methodology

In this section, we present the classifiers used (LR, RF, and NB), as well as a brief introduction to the balancing methods employed (RUS, SMOTE, and ROSE). The dependent variable is defined as $Y \in \{0, 1\}$, where 1 represents dropout and 0 otherwise. As predictors, we used a set of personal information, school infrastructure, and administrative data regarding student performance and attendance during the first quarter of the year. The goal is to forecast whether the student may drop out during the entire year.

Table 1 lists all the predictors considered.

It is important to note that several models were estimated. Some were calibrated using the original imbalanced data, while others were trained with data balanced using the methods employed in this research. For this reason, for each specific grade of secondary school, twelve configurations were tested: (I) LR with LASSO, calibrated using the original imbalanced data; (II) RF, calibrated with the original data (imbalanced); (III) NB, calibrated with the original data (imbalanced); (IV) LR with LASSO, calibrated using RUS; (V) RF, calibrated with RUS; (VI) NB, calibrated with RUS; (VII) LR with LASSO, calibrated with SMOTE; (VIII) RF, calibrated with SMOTE; (IX) NB, calibrated with SMOTE; (X) LR with LASSO, calibrated with ROSE; (XI) RF, calibrated with ROSE; (XII) NB, calibrated with ROSE.
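The twelve configurations listed above are the Cartesian product of three classifiers and four data treatments. A minimal Python sketch of that enumeration follows; the label strings (including `"none"` for the original imbalanced data) are illustrative names chosen here, not identifiers from the study's own code.

```python
from itertools import product

# Labels follow the abbreviations used in the text; "none" marks the original imbalanced data.
CLASSIFIERS = ["LR-LASSO", "RF", "NB"]
BALANCERS = ["none", "RUS", "SMOTE", "ROSE"]

def build_configurations():
    """Return (balancer, classifier) pairs in the order (I) to (XII) used in the text."""
    return [(b, c) for b, c in product(BALANCERS, CLASSIFIERS)]

configurations = build_configurations()
for index, (balancer, classifier) in enumerate(configurations, start=1):
    print(index, classifier, balancer)
```

Iterating over the balancing method in the outer loop reproduces the ordering of the list: the three classifiers on the original data first, then RUS, SMOTE, and ROSE.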

3.1. Classifiers

3.1.1. Logistic Regression

Logistic Regression is a common classifier and has been applied to several classification problems, such as dropout forecasting [18,20,29], credit risk prediction [33,34], bankruptcy prediction [35], oil market efficiency [36], and other applications. Mathematically, considering a binary dependent variable $Y \in \{0, 1\}$, the logistic regression is given by the following:

$$P(Y = 1 \mid X) = \frac{1}{1 + e^{-(\beta_0 + X^{\top}\beta)}}, \tag{1}$$

where $\beta_0$ is a constant, $\beta$ is a column vector of coefficients, $X$ is a column vector of $k$ predictors, and $\beta_0 + X^{\top}\beta$ represents the linear combination of predictor variables. We used Logistic Regression in conjunction with the LASSO (least absolute shrinkage and selection operator), a technique used to select relevant variables. The primary advantage is the simultaneous estimation of model parameters and selection of the most relevant variables, while discarding irrelevant ones. Formally, the penalized log-likelihood function to be maximized is given by the following:

$$\max_{\beta_0, \beta} \left\{ \sum_{i=1}^{n} \left[ y_i \left(\beta_0 + x_i^{\top}\beta\right) - \log\left(1 + e^{\beta_0 + x_i^{\top}\beta}\right) \right] - \lambda \sum_{j=1}^{k} |\beta_j| \right\}, \tag{2}$$

where $n$ indicates the sample size, and $\lambda$ is the tuning parameter that controls the shrinkage, usually obtained by k-fold cross-validation. For more details, see [37,38]. In this research, we employed 5-fold cross-validation to select both the optimal hyperparameters (by maximizing the area under the ROC curve) and the probability threshold for converting predictions into binary outcomes.
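To make the LASSO objective concrete, the following Python sketch evaluates a Bernoulli log-likelihood with an L1 penalty on the slope coefficients for given parameter values. It is an illustration only: the function names are hypothetical, the intercept is left unpenalized, and no 1/n scaling is applied, all of which are assumptions rather than details confirmed by the study.

```python
import math

def logistic(z):
    """Logistic function mapping a linear score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def penalized_log_likelihood(beta0, beta, rows, labels, lam):
    """Bernoulli log-likelihood minus an L1 penalty on the slopes (intercept unpenalized)."""
    total = 0.0
    for x, y in zip(rows, labels):
        score = beta0 + sum(b * v for b, v in zip(beta, x))
        # y * score - log(1 + exp(score)) is the log-likelihood of one observation
        total += y * score - math.log1p(math.exp(score))
    return total - lam * sum(abs(b) for b in beta)

# Toy data (hypothetical): three observations, two predictors
rows = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
labels = [0, 1, 1]
null_value = penalized_log_likelihood(0.0, [0.0, 0.0], rows, labels, lam=0.5)
```

With all coefficients at zero every observation has probability 0.5, so the objective equals minus n times log 2 and the penalty contributes nothing. Larger values of the tuning parameter make non-zero coefficients more costly, which is what drives variable selection.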

3.1.2. Random Forest

Random Forests are tree-based ensemble methods built on the concept of stratification and segmenting predictor space [37]. They can be used for regression and classification problems [37,38]. Applications of RF are, for instance, credit modeling [33,34], dropout identification [20,29], stock market trading prediction [39], water temperature forecasting [40], and others. In essence, Random Forests aggregate a large number of Decision Trees and combine their individual predictions to obtain a final classification or prediction. Bootstrap and other ensemble methods are commonly employed in the construction of these trees.

Calibrating a Random Forest model typically involves tuning three key hyperparameters: the number of trees, the minimum node size, and the number of features sampled at each split. The number of trees determines the size of the ensemble, with too few trees potentially limiting the ability to capture complex patterns and too many trees potentially leading to overfitting [41]. The number of features sampled at each split controls the randomness of the model, promoting diversity among the trees. The minimum node size dictates the minimum number of observations required in a terminal node to be considered for further splitting [42].

We employed a grid search to explore different hyperparameter values and compute the error on the validation set, with 5-fold cross-validation to find the optimal hyperparameters by maximizing the area under the ROC curve. We varied the number of trees from 100 to 1000 in steps of 100 and fitted the best model using the caret package in R [43]. The number of features sampled at each split (mtry) was varied in steps of 1, from 1 to the total number of variables minus one. Finally, the node size varied from 1 to 20.
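The size of such a search space can be illustrated with a short Python sketch. It assumes a full Cartesian grid over the three ranges stated above and uses 49 as a placeholder for the number of variables; both are assumptions made here for illustration, and the study itself reports using caret in R rather than this code.

```python
from itertools import product

def random_forest_grid(n_predictors):
    """Enumerate (n_trees, n_features_per_split, min_node_size) candidates."""
    n_trees = range(100, 1001, 100)        # 100, 200, ..., 1000
    n_features = range(1, n_predictors)    # 1, ..., n_predictors - 1
    node_sizes = range(1, 21)              # 1, ..., 20
    return list(product(n_trees, n_features, node_sizes))

grid = random_forest_grid(n_predictors=49)  # 49 is an assumed variable count
print(len(grid))
```

Under these assumptions the grid has 10 x 48 x 20 candidates, each of which would be evaluated with 5-fold cross-validation, which indicates why the Random Forest is the most expensive of the three classifiers to tune.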

3.1.3. Naive Bayes

According to [38], the Naive Bayes classifier is particularly suitable for high-dimensional explanatory variables. The applications of NB models are, for instance, in dropout forecasting [14], lemon disease detection [44], basketball game outcomes [45], and medical problems [46], among others. It is based on Bayes’ theorem, which, for a binary classification problem where $Y \in \{0, 1\}$ and $X = (X_1, \ldots, X_k)^{\top}$, states the following:

$$P(Y = y \mid X = x) = \frac{P(X = x \mid Y = y)\, P(Y = y)}{\sum_{y' \in \{0, 1\}} P(X = x \mid Y = y')\, P(Y = y')}. \tag{3}$$

To apply this theorem, we can use training data to estimate $P(Y = y)$ and $P(X = x \mid Y = y)$. These estimates then allow us to determine $P(Y = y \mid X = x)$ for any new instance of the predictor vector $x$. However, estimating the distribution related to $P(X = x \mid Y = y)$ can be challenging, especially in high-dimensional spaces. The Naive Bayes Classifier addresses this challenge by making a key assumption: given a class $Y$, the features in $X$ are conditionally independent. This simplification allows the classifier to estimate the class-conditional marginal densities separately for each feature. For continuous features, this is typically performed using one-dimensional kernel density estimation. For discrete predictors, an appropriate histogram estimate can be used [38]. Under this independence assumption, Equation (3) can be rewritten as follows:

$$P(Y = y \mid X = x) = \frac{P(Y = y) \prod_{j=1}^{k} P(X_j = x_j \mid Y = y)}{\sum_{y' \in \{0, 1\}} P(Y = y') \prod_{j=1}^{k} P(X_j = x_j \mid Y = y')}. \tag{4}$$

In this research, we employed a grid search approach for hyperparameter tuning of the Naive Bayes model. The tuned hyperparameters included the bandwidth of the kernel density estimation and the Laplace smoothing parameter, which addresses the issue of zero probabilities for unseen categories during training. To ensure robust model evaluation and selection, we implemented a 5-fold cross-validation procedure. For the Naive Bayes model, we did not optimize a threshold for transforming probabilities into binary predictions.
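The role of the Laplace smoothing parameter can be seen in a small Python sketch of Naive Bayes for categorical predictors. This is a simplified illustration under stated assumptions: it handles discrete features only (no kernel density estimation), the function names and toy data are hypothetical, and it is not the implementation used in the study.

```python
from collections import Counter, defaultdict

def fit_naive_bayes(rows, labels, laplace=1.0):
    """Estimate class counts and per-feature value counts for categorical data."""
    class_counts = Counter(labels)
    n_features = len(rows[0])
    # value_counts[(class, feature_index)][value] = number of training rows
    value_counts = defaultdict(Counter)
    levels = [set() for _ in range(n_features)]
    for x, y in zip(rows, labels):
        for j, value in enumerate(x):
            value_counts[(y, j)][value] += 1
            levels[j].add(value)
    return {"class_counts": class_counts, "value_counts": value_counts,
            "levels": levels, "laplace": laplace, "n": len(labels)}

def posterior(model, x):
    """Return P(Y = c | X = x) for every class c under conditional independence."""
    scores = {}
    for c, n_c in model["class_counts"].items():
        score = n_c / model["n"]  # prior P(Y = c)
        for j, value in enumerate(x):
            count = model["value_counts"][(c, j)][value]
            # Laplace smoothing keeps unseen categories from zeroing the product
            denominator = n_c + model["laplace"] * len(model["levels"][j])
            score *= (count + model["laplace"]) / denominator
        scores[c] = score
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}

# Toy data (hypothetical): two categorical predictors, label 1 = dropout
rows = [("low", "yes"), ("low", "no"), ("high", "no"), ("high", "no")]
labels = [1, 1, 0, 0]
model = fit_naive_bayes(rows, labels)
probs = posterior(model, ("low", "no"))
```

In the toy data no non-dropout has the value "low", so without smoothing the posterior for class 0 would be exactly zero. With a smoothing value of 1 the class keeps a small positive probability.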

3.2. Balancing Methods

In this section, we briefly introduce the methods employed in this research: Random Undersampling (RUS), the Synthetic Minority Oversampling Technique (SMOTE) [8,11], and Random Oversampling Examples (ROSE) [12]. For a more comprehensive understanding of these methods, see [47,48].

Random Undersampling involves constructing a balanced sample by randomly removing individuals from the majority class, thereby reducing its size while preserving all elements of the minority class. This approach decreases the overall sample size.

Figure 1 illustrates this simple approach.
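A minimal Python sketch of Random Undersampling is given below. It assumes a 1:1 target ratio between classes and a minority label of 1; the function name and the toy data are illustrative choices, not taken from the study.

```python
import random

def random_undersample(rows, labels, minority_label=1, seed=0):
    """Keep every minority row and an equally sized random subset of the majority rows."""
    rng = random.Random(seed)
    minority = [i for i, y in enumerate(labels) if y == minority_label]
    majority = [i for i, y in enumerate(labels) if y != minority_label]
    kept_majority = rng.sample(majority, k=min(len(minority), len(majority)))
    kept = sorted(minority + kept_majority)
    return [rows[i] for i in kept], [labels[i] for i in kept]

# Toy data (hypothetical): 5 dropouts among 100 students
rows = [[i] for i in range(100)]
labels = [1 if i < 5 else 0 for i in range(100)]
new_rows, new_labels = random_undersample(rows, labels)
```

The balanced sample here has 10 rows instead of 100, which shows both the appeal of the method (a small, cheap training set) and its cost (90 majority observations are never seen by the classifier).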

Chawla et al. [11] proposed SMOTE, which interpolates neighboring elements of the minority class to create synthetic elements. Consequently, the algorithm augments the minority class by incorporating simulated data while randomly removing elements from the majority class. According to the authors, this approach results in a larger and less specific decision region, thus enhancing the quality of the estimation.

Figure 2 illustrates the construction of the final sample.
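The interpolation step can be sketched in a few lines of Python. This is a simplified illustration, assuming numeric features, Euclidean distance, and base points drawn at random; the function name is hypothetical, and the undersampling of the majority class is not shown.

```python
import math
import random

def smote_samples(minority_rows, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating minority rows with near neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority_rows)
        # k nearest minority neighbours of the base point (excluding the point itself)
        others = [row for row in minority_rows if row is not base]
        neighbours = sorted(others, key=lambda row: math.dist(base, row))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic

# Toy data (hypothetical): four minority points on the corners of the unit square
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote_samples(minority, n_new=6, k=2)
```

Every synthetic point lies on a line segment between two observed minority points, so the new data never leave the region already spanned by the minority class.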

While SMOTE effectively addresses class imbalance by generating synthetic minority class instances, it suffers from key limitations highlighted in [9,15]. The potential for generating duplicate synthetic samples, especially within complex or overlapping datasets, can lead to redundancy and overfitting. Furthermore, the original SMOTE overlooks the underlying data distributions, potentially creating synthetic samples that do not accurately represent the true minority class, and it is not well-suited for high-dimensional data. To address these shortcomings, numerous variations of SMOTE have been developed, such as Borderline-SMOTE, ADASYN, and SMOTE-N, among others [10,29].

ROSE was proposed by [12] and is based on the principles of SMOTE. The main distinction lies in how the synthetic data are generated. In ROSE, the authors replace the interpolation technique with statistical kernels, thus overcoming SMOTE’s drawback of neglecting the underlying data distribution. This enables an expansion of the decision region beyond interpolation, resulting in newly generated data that differs from the original but remains statistically equiprobable [12].
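The kernel-based generation can be sketched as a smoothed bootstrap in Python. The sketch below assumes numeric features, a Gaussian kernel with a diagonal bandwidth, and a normal-reference rule for the bandwidth; these choices and the function name are assumptions made for illustration and may differ from the ROSE implementation used in the study.

```python
import random
import statistics

def rose_sample(rows, labels, n_new, seed=0):
    """Draw n_new synthetic (row, label) pairs with a Gaussian smoothed bootstrap."""
    rng = random.Random(seed)
    classes = sorted(set(labels))
    by_class = {c: [x for x, y in zip(rows, labels) if y == c] for c in classes}
    n_features = len(rows[0])
    bandwidths = {}
    for c, members in by_class.items():
        # Normal-reference bandwidth per feature, scaled by the class-specific spread
        factor = (4.0 / ((n_features + 2) * len(members))) ** (1.0 / (n_features + 4))
        bandwidths[c] = [factor * statistics.pstdev([x[j] for x in members])
                         for j in range(n_features)]
    new_rows, new_labels = [], []
    for _ in range(n_new):
        c = rng.choice(classes)            # each class is drawn with equal probability
        centre = rng.choice(by_class[c])   # bootstrap one observed row of that class
        new_rows.append([rng.gauss(v, h) for v, h in zip(centre, bandwidths[c])])
        new_labels.append(c)
    return new_rows, new_labels

# Toy data (hypothetical): 3 dropouts among 20 students, two numeric predictors
rows = [[float(i), float(i % 3)] for i in range(20)]
labels = [1 if i < 3 else 0 for i in range(20)]
rose_rows, rose_labels = rose_sample(rows, labels, n_new=200)
```

Unlike interpolation, the Gaussian draw can place a synthetic point outside the segment between two observed points, which is the sense in which the region covered by the minority class is enlarged.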

4. Experiments

4.1. Dataset

The dataset used in this study comprises variables collected from the Sistema Estadual de Gestão Escolar do Espírito Santo (SEGES) (State School Management System of Espírito Santo, translated freely) and the Instituto Nacional de Estudos e Pesquisas Educacionais Anísio Teixeira (INEP) (National Institute for Education Studies and Research Anísio Teixeira, translated freely).

Table 1 lists all 49 variables used.

The objective is to classify students for the 2020 academic year, using data acquired shortly after the conclusion of the first academic quarter. This classification enables the calculation of student dropout probabilities during 2020 for students enrolled in the first, second, and third years of high school across all state schools in Espírito Santo, Brazil. To this end, the model was calibrated using data from the first academic quarters of 2018 and 2019. Furthermore, the calibrated model was also employed to forecast the dropout rate for 2022, thereby evaluating the predictive accuracy of the model against two distinct datasets. The results for 2022 are presented in Appendix A.1 (Table A1, Table A2, Table A3, and Table A4).

4.2. Results

The methods described above were used to forecast student dropout in 2020 for the first, second, and third years of high school in all public state schools in Espírito Santo, Brazil. To assess whether balancing methods improve forecasting metrics, we estimated twelve distinct models for each grade: (I) LR with LASSO, calibrated using the original imbalanced data; (II) RF, calibrated with the original data (imbalanced); (III) NB, calibrated with the original data (imbalanced); (IV) LR with LASSO, calibrated using RUS; (V) RF, calibrated with RUS; (VI) NB, calibrated with RUS; (VII) LR with LASSO, calibrated with SMOTE; (VIII) RF, calibrated with SMOTE; (IX) NB, calibrated with SMOTE; (X) LR with LASSO, calibrated with ROSE; (XI) RF, calibrated with ROSE; (XII) NB, calibrated with ROSE. The results presented in this section refer to models calibrated with data from 2018 and 2019, with the aim of forecasting dropout in 2020. In addition to this dataset, we also used the same calibrated models to predict school dropout in 2022. The results of this second exercise are presented in Appendix A.1.

Table 2 displays the number of dropouts and non-dropouts in our data set for the first, second, and third years of high school in 2018, 2019, and 2020. As can be observed, the dataset shows a significant class imbalance.

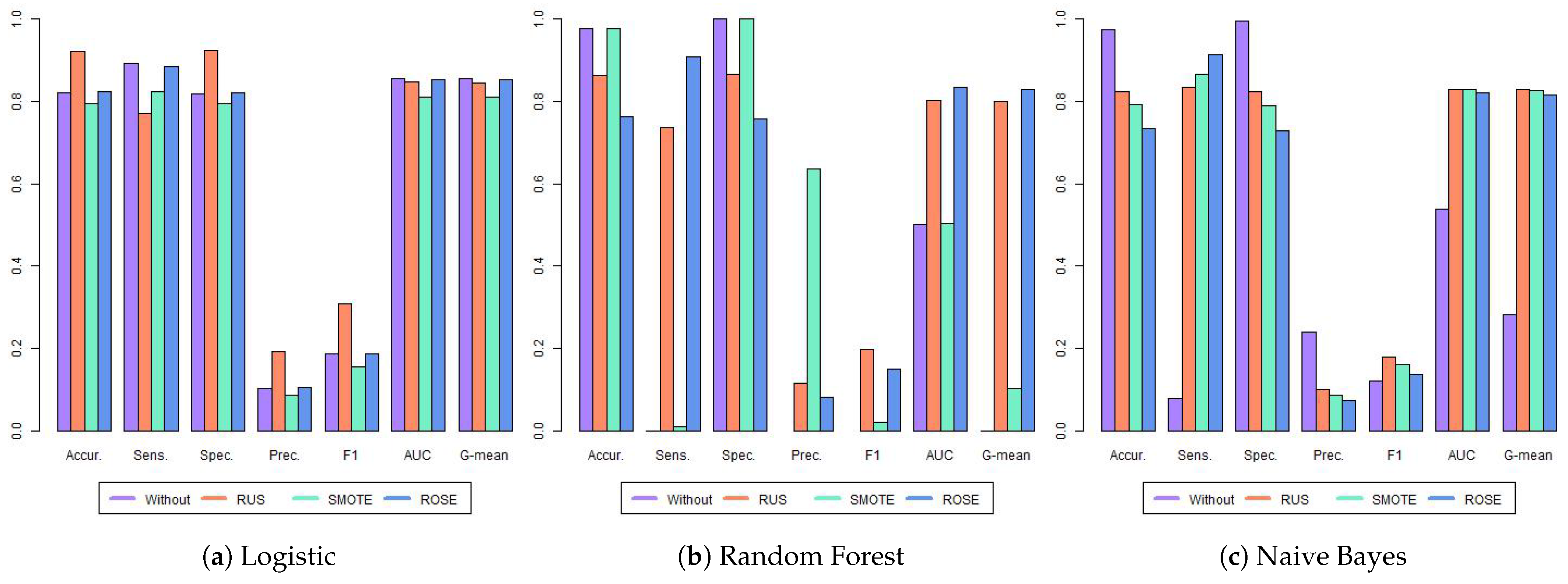

Table 3 and

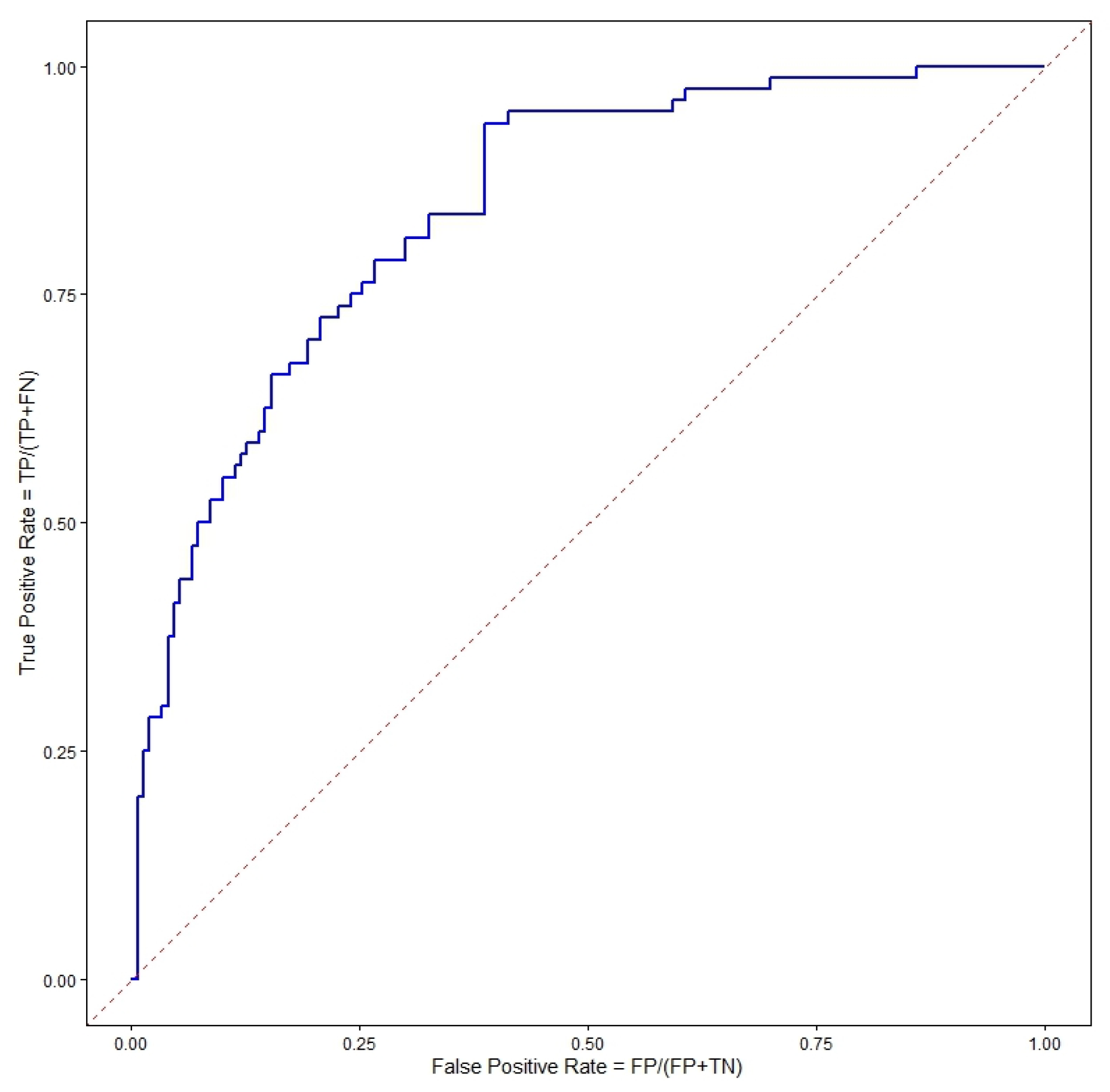

Figure 3 present the results for all twelve models. The highest value for each metric is highlighted in bold. The metrics for RF, NB, and RF with SMOTE models were not considered, as these models have significant limitations in accurately identifying dropout cases. The mathematical definition of each metric is based on the confusion matrix (

Table A5) and ROC curve (

Figure A1). For more details, see

Appendix A.2. Comparing the three models estimated with the original data—LR, RF, and NB—it is evident that RF and NB struggled to identify students at risk of dropping out, exhibiting sensitivities approaching or reaching zero. This also prevented the calculation of precision and F1 scores for the RF. This issue stemmed from the severe imbalance in the data set, as shown in

Table 2. The LR model performed better with the imbalanced data, achieving a sensitivity of 0.791 and an AUC of 0.819.

When training the models with balanced data, we observed an increase in sensitivity across all models, except for the RF with SMOTE. The RF with SMOTE still faced considerable challenges in identifying students who dropped out, with a sensitivity of only 0.021. This resulted in very high accuracy (0.967) and perfect specificity, but at the expense of practical usefulness for identifying at-risk students.
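The combination of very high accuracy and near-zero sensitivity follows directly from the confusion-matrix definitions. The Python sketch below uses the standard formulas, with the G-mean taken as the square root of sensitivity times specificity; the function name and the counts are hypothetical and are not the study's data.

```python
import math

def classification_metrics(tp, fp, tn, fn):
    """Compute confusion-matrix metrics; undefined ratios are returned as None."""
    def ratio(num, den):
        return num / den if den else None
    sensitivity = ratio(tp, tp + fn)
    specificity = ratio(tn, tn + fp)
    precision = ratio(tp, tp + fp)
    f1 = None
    if precision is not None and sensitivity is not None and precision + sensitivity > 0:
        f1 = 2 * precision * sensitivity / (precision + sensitivity)
    g_mean = None
    if sensitivity is not None and specificity is not None:
        g_mean = math.sqrt(sensitivity * specificity)
    return {"accuracy": ratio(tp + tn, tp + fp + tn + fn), "sensitivity": sensitivity,
            "specificity": specificity, "precision": precision, "f1": f1, "g_mean": g_mean}

# Hypothetical counts: a classifier that labels every student as a non-dropout
always_negative = classification_metrics(tp=0, fp=0, tn=970, fn=30)
```

With 3% dropouts, a classifier that never predicts dropout reaches 97% accuracy and perfect specificity, while precision and F1 are undefined and the G-mean is zero. This is why accuracy alone is a poor guide for early warning systems.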

Taking into account the F1, AUC, and G-mean metrics, which are commonly used for imbalanced datasets because they balance different aspects of model performance, the best results were achieved by NB with SMOTE, RF with ROSE, and RF with ROSE, respectively. The corresponding values were F1: 0.318, AUC: 0.788, and G-mean: 0.788 for NB with SMOTE, and F1: 0.215, AUC: 0.822, and G-mean: 0.821 for RF with ROSE. While AUC scores varied across most models, the differences were relatively small (ranging from 0.778 to 0.822). Notable exceptions were the RF trained on the original and SMOTE-balanced data and the NB model trained on the original data, all of which exhibited poor performance. The LR and NB models demonstrated notable stability across different data balancing techniques, with AUC scores ranging from 0.778 to 0.821 and 0.788 to 0.806, respectively, indicating low variance in performance. In contrast, the Random Forest (RF) models exhibited high variability, performing well with RUS and ROSE but poorly with the original data and SMOTE.

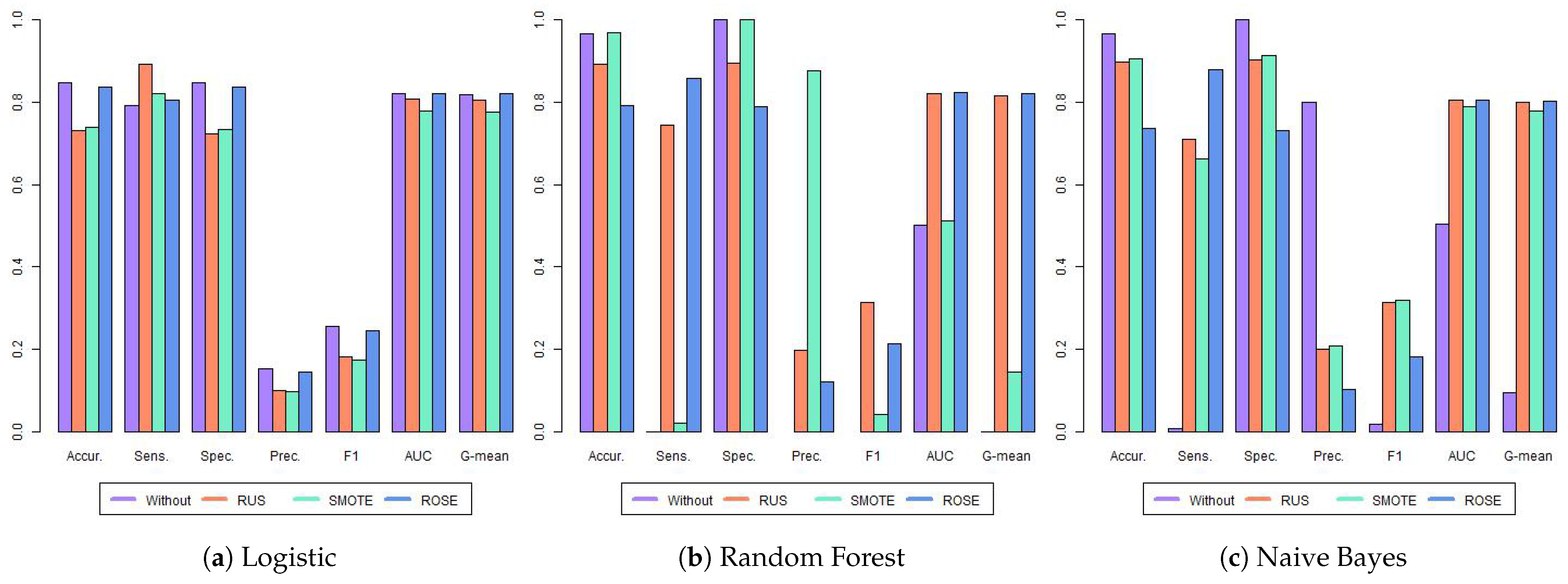

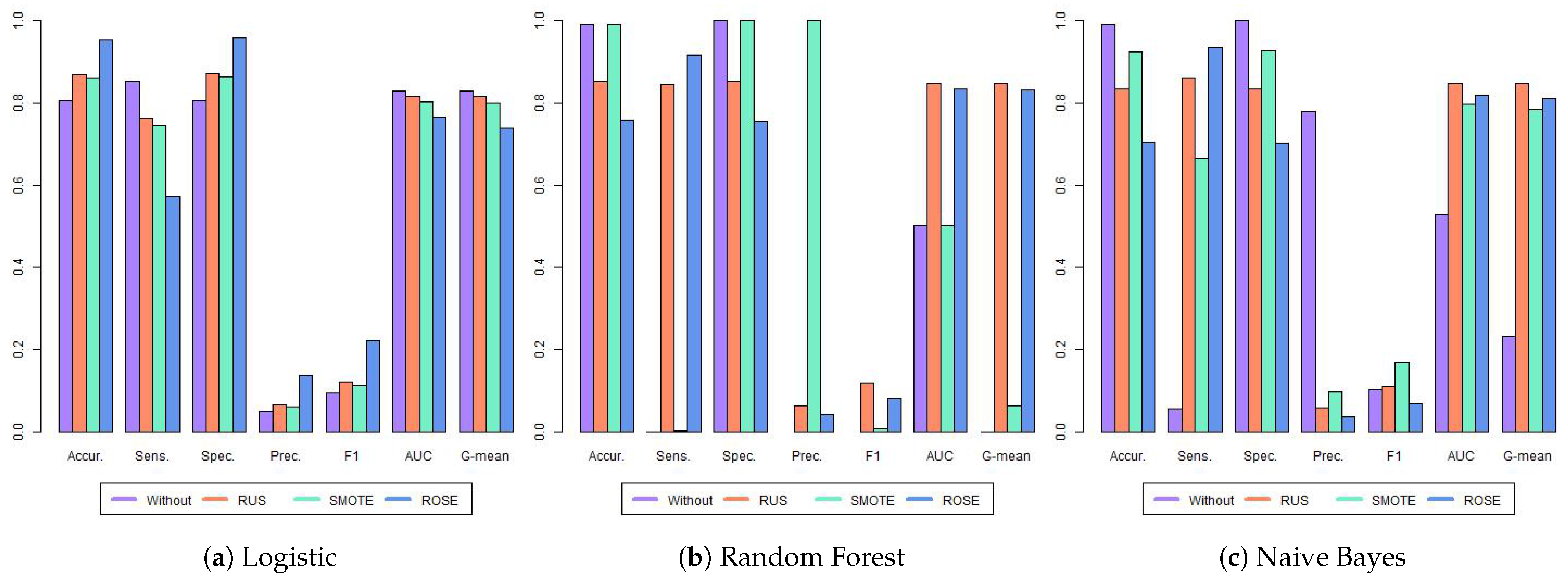

For the second year of high school, Figure 4 and Table 4 show the results for the twelve models. The highest value for each metric is highlighted in bold, following the criterion adopted in Table 3. Comparing the LR, RF, and NB models trained on the original (unbalanced) data, we observed a pattern similar to that found in the first grade. The RF and NB models again exhibited poor performance in identifying at-risk students, with near-zero sensitivity. However, the LR model performed substantially better on the imbalanced data, achieving AUC and G-mean values of 0.855 and 0.854, respectively. Furthermore, LR with RUS yielded the best F1-score.

Finally, Table 5 and Figure 5 present the results for the third year of high school for 2020. Using the original imbalanced data, the LR model maintained a similar level of performance as in the first and second grades, with an accuracy of 0.806, sensitivity of 0.852, and AUC of 0.829. This consistency suggests the LR model is robust across different high school grades. In contrast, the RF and NB models continued to perform poorly on the original data, exhibiting near-zero sensitivity. This mirrors the findings from previous grades, suggesting that RF and NB models struggle to identify at-risk students when the data is highly imbalanced.

When applying the balancing techniques, we found that the highest AUC was achieved by the RF with RUS. Conversely, the best accuracy, specificity, precision, and F1 were attained by LR with ROSE. Regarding G-mean, there is a tie between the two models (RF with RUS and NB with RUS). Once again, Random Forest with SMOTE showed a poor performance in identifying dropouts.

Table 6 presents the optimal configuration for each metric and academic year. As observed, data balancing techniques improved the majority (approximately 90%) of the metrics analyzed. The only exceptions were the AUC and the G-mean for the second year of high school, which showed no improvement with any balancing method.

Considering only the best-performing configurations, models without balancing algorithms achieved the top result in just 10% of cases. SMOTE was among the best in 19% of the cases, RUS in 33%, and ROSE in 38%. Regarding classifiers, LR was the most prevalent, appearing in 52% of the top configurations, while RF and NB comprised 23% and 28%, respectively.

4.3. Conclusions

This paper aims to evaluate the effect of data balancing algorithms on the performance of predictive models applied to a highly imbalanced education dataset. To achieve this goal, we explore three classification approaches (LR, NB, and RF) in conjunction with three balancing techniques (RUS, SMOTE, and ROSE). In addition, the classifiers were trained on the original unbalanced data, allowing a direct comparison of the effectiveness of each approach.

The methods described above were trained with data from the first quarter of 2018 and 2019 and, subsequently, were used for forecasting student dropout in 2020 for the first, second, and third grade of high school in all state schools in Espírito Santo, Brazil. In addition, as a second exercise, we also employed the same estimated models to forecast student dropout in 2022.

Our results demonstrated that the inherent class imbalance in dropout data poses a significant challenge to effective model training. In particular, the RF and the NB struggled to identify at-risk students when trained on the original imbalanced data, as evidenced by their low sensitivity.

However, the application of data balancing techniques, particularly RUS and ROSE, could improve the performance of the models. Restricting analysis to the optimal configurations identified, for the year 2020, Random Undersampling (RUS) was observed in 33% of instances, with ROSE present in 38%. The SMOTE algorithm was utilized in 19% of the configurations, while classifiers trained directly on imbalanced data yielded superior results in only 10% of cases. In 2022, the optimal strategies consisted exclusively of ROSE (24%) and RUS (76%).

The findings of this study highlight the potential of balancing methods to improve predictive performance. However, choosing the best algorithm is not straightforward and depends on the metrics considered. In most cases, RUS and ROSE showed the best performance, but it is important to note that all methods could potentially decrease performance. This could be because the algorithms considered, such as those employing Random Undersampling, potentially discard valuable information from the majority class, hindering accurate estimation. Similarly, oversampling techniques risk generating duplicate or unrepresentative synthetic samples, particularly within complex or overlapping datasets. To address these limitations, further investigation into alternative data balancing techniques is recommended, with particular emphasis on techniques derived from SMOTE. Moreover, the exploration of algorithm-level methods for highly imbalanced data, such as cost-sensitive learning or ensemble methods, warrants further study. Future research should also consider exploring alternative modeling approaches tailored to imbalanced data. Specifically, testing different link functions within the framework of Logistic Regression presents a promising avenue for improvement. By employing link functions that better accommodate skewed class distributions, it may be possible to develop more robust and accurate classification models, minimizing the need for aggressive data balancing.

These findings have practical implications for the development of early warning systems for school dropout. The use of data balancing techniques and careful selection of the model can significantly improve the ability to accurately identify at-risk students, enabling targeted interventions. It is important to note that the optimal balancing technique can vary depending on the academic year and the specific performance metric. In general, this research underscores the importance of carefully considering class imbalance and selecting appropriate balancing techniques for the development of accurate and effective school dropout prediction models.