Abstract

In the presence of multicollinearity, the ordinary least squares (OLS) estimators, aside from BLUE (best linear unbiased estimator), lose efficiency and fail to achieve minimum variance. In addition, these estimators are highly sensitive to outliers in the response direction. To overcome these limitations, robust estimation techniques are often integrated with shrinkage methods. This study proposes a new class of Kibria Ridge M-estimators specifically developed to simultaneously address multicollinearity and outlier contamination. A comprehensive Monte Carlo simulation study is conducted to evaluate the performance of the proposed and existing estimators. Based on the mean squared error criterion, the proposed Kibria Ridge M-estimators consistently outperform the traditional ridge-type estimators under varying parameter settings. Furthermore, the practical applicability and superiority of the proposed estimators are validated using the Tobacco and Anthropometric datasets. Overall, the new proposed estimators demonstrate good performance, offering robust and efficient alternatives for regression modeling in the presence of multicollinearity and outliers.

1. Introduction

In regression analysis, the ordinary least squares (OLS) estimator is one of the most widely used techniques, relying on the assumptions that the explanatory variables are independent and that the error terms are homoscedastic and devoid of outliers. In the presence of multicollinearity and outliers in the predictor variables, OLS estimates become unstable, often exhibiting wrong signs and inflated variances that lead to incorrect inferences. Multicollinearity, a condition characterized by strong linear relationships among predictors, was first introduced by Ragnar Frisch in the 1930s [1]. It has since emerged as a fundamental area of interest in econometrics and regression modeling [2]. Severe multicollinearity reduces the clarity of regression coefficient interpretation, increases their standard errors, and lowers the overall precision and reliability of the model. Although ridge regression may enhance prediction accuracy through variance reduction, its dominance over OLS is not universal; it depends on the quantity of multicollinearity, the signal-to-noise ratio, and the error variance. As a result, it becomes difficult to discern the distinct contributions of each predictor, which could have a negative impact on model inference and prediction. Ridge Regression (RR) [3] provides a strong substitute to get around these restrictions by providing a controlled bias via a shrinkage parameter. This modification lowers variation, lessens the negative impacts of multicollinearity, and improves the general stability and accuracy of the coefficient estimations. More precisely, RR adds a bias term that is managed by the ridge tuning parameter . The selection of k has a direct impact on the estimator’s mean squared error (MSE) and is essential in establishing the trade-off between bias and variance. Various methods for selecting the optimal k have been proposed in the literature, reflecting the continuous evolution of ridge-type estimators, see e.g., Hoerl and Kennard [3], Kibria [4], Khalaf et al. [5], and Dar and Chand. [6]. Outliers are observations that significantly depart from the typical data pattern. They can cause heteroscedasticity and skew model estimate. Gujarati and Porter [7] highlighted that such extreme observations may violate the constant variance assumption, thereby compromising the reliability of OLS estimates. The presence of outliers not only biases parameter estimates but also inflates residual variability, making traditional estimation techniques less effective.

To address the dual challenge of multicollinearity and outliers, Silvapulle [8] proposed the robust ridge or ridge M-estimator (RM), which combines the principles of RR and M-estimation. By incorporating robust loss functions and adaptive ridge parameters, the RM estimator enhances stability in the presence of both multicollinearity and data contamination. Recent studies have extended this framework, suggesting modified ridge M-estimators (MRM) that yield improved performance in terms of MSE and robustness, e.g., Ertas [9], Yasin et al. [10], Majid et al. [11], Wasim et al. [12], Alharthi [13,14], and Akhtar et al. [15].

To address the challenges posed by multicollinearity and outliers, this study introduces a class of Kibria-type ridge M-estimators designed to achieve robustness and stability under such adverse conditions. Following the structure of Kibria [4], we proposed five ridge-type estimators that simultaneously address high predictor intercorrelation and data contamination, thereby improving estimation efficiency and reliability. Using extensive simulations and two real-world datasets, the performance of the proposed estimators is compared with that of well-known existing estimators through the MSE criterion.

The remainder of this paper is structured as follows: Section 2 elaborates on the methodological framework, providing an overview of the existing ridge-type estimators and the formulation of the proposed estimators. Section 3 outlines the Monte Carlo simulation design used to evaluate the performance of the estimators. Section 4 presents a detailed analysis of the simulation findings along with their interpretation. Section 5 demonstrates the applicability and practical relevance of the proposed estimators through two real-data examples. Finally, Section 6 summarizes the key findings and concludes the study with possible directions for future research.

2. Methodology

Consider the standard multiple linear regression model:

where is an vector of response variable and

is a matrix (also known as design matrix) of the observed regressors, is a vector of unknown regression parameters, and is an vector of random errors. is independently and identically distributed with a zero mean and a covariance matrix , where represents an identity matrix of order n. The OLS estimator can be estimated as

which depends primarily on the characteristics of the matrix , that is, when the predictors are perfectly correlated, it is impossible to find the inverse of the matrix , so that the matrix becomes ill-conditioned [16,17].

To address this issue, Hoerl and Kennard [3] introduced the concept of the RR. In this method, a non-negative constant, known as the ridge parameter (k), is added to the diagonal elements of . This adjustment helps control the bias of the regression estimates towards the mean of the response variable, thereby stabilizing the estimator variability and mitigating the effects of multicollinearity. The RR estimator is defined as

Then, the equation can be written as

where . The estimator may exhibit bias; however, it generally has a lower MSE compared to the OLS estimator.

In the presence of multicollinearity and outliers, the MSE of both the OLS and RR estimators are inflated. To address this issue, Silvapulle [8] proposed a robust ridge M-estimator (RRM) by substituting with in Equation (3), as follows:

where is called M-estimator which can be obtained by solving the equation and , where are the residuals and obtained as and is a function used to adjust how much weight each residual contributes to the overall fitting process. This function also depend on whether the estimator has good large sample theory [18]. The above equations together describe the conditions under which the robust estimator achieves a balance between the residuals and the predictor variables, with function ensuring that outliers have a limited effect on this balance. According to Montgomery et al. [19], s is a robust estimate of scale. A popular choice for s is the median absolute deviation defined as:

The tuning constant 0.6745 makes s an approximately unbiased estimator of if n is large and the error distribution is normal. The choice of function depends on the desired level of robustness and the type of deviations from the classical assumptions (e.g., outliers, heavy tails) [20].

2.1. Canonical Form

Suppose that Q is a () orthogonal matrix such that and , where contains the eigenvalues of the matrix . Then, the canonical form of model (1) is expressed as:

Given that , the vector of estimates can be expressed as , where . Moreover, the MSE of is equal to the MSE of . The canonical form for Equations (2)–(4) can be written as:

where , for j=1,2, …, p. Following [21], the MSE of above estimators are given as follows:

where represents the least square estimator of error variance of the model 1, is the value of and is the eigenvalue of the matrix , are the diagonal elements of the matrix . Furthermore, ref. [21] showed that

if , where

2.2. Existing Estimators

This section describes some of the popular and widely used ridge estimators defined in Equations (7)–(13). The pioneer work of [3] introduced the estimator of as the ratio of estimated error variance and estimate of using OLS as

where .

Later, ref. [3] suggested the following estimator to determine a single value for k,

where .

In another study, ref. [22] proposed the ridge parameter by using harmonic mean of as

Furthermore, ref. [4] introduced five additional ridge estimators, which are defined as follows:

where GM stands for geometric mean.

where HM stands for harmonic mean.

where max stands for maximum.

where min stands for minimum.

2.3. Proposed Estimators

Previous studies by McDonald and Galarneau [23], McDonald [24], and Majid et al. [25] demonstrated that the optimal choice of the biasing parameter for the RM is primarily influenced by several factors, including the degree of multicollinearity, presence of outliers, number of predictors, error variance, the underlying error distribution, and sample size. According to Suhail et al. [26], when datasets exhibit severe multicollinearity combined with a large number of predictors, substantial error variance, and large sample sizes, the conventional RM estimators often fail to achieve the minimum MSE. To address these limitations, it becomes essential to develop improved RM estimators that maintain efficiency under adverse conditions, such as high multicollinearity, large error variance, increased sample size, and the presence of influential outliers in the response direction.

Following the suggestion of Silvapulle [8], the robust version can be obtained by replacing with and with . Using Huber function [20], can be obtained as

where denotes the Huber weight function, n is the sample size, and p is the number of predictors. Building upon this idea, we propose the following robust versions of the ridge estimators originally introduced by Kibria [4].

where , , max, and min are defined as mentioned above. Each of these proposed estimators incorporates a robust measure of dispersion () and modified eigenvalue structure (), thereby enhancing stability and reliability in the presence of multicollinearity and influential observations.

3. Simulation Setup

A simulation-based investigation was carried out in which the datasets were deliberately constructed to reflect predefined levels of multicollinearity. Using these datasets, the performance of the ridge estimators and the proposed ridge M-estimators was evaluated. The simulation study generated data sets that varied across several factors: the number of regressors (), the sample size (), the degree of multicollinearity among predictors (), and the presence of outliers in the response variable. Additionally, different levels of error variance were considered by setting , enabling a thorough evaluation of estimator performance in a range of data scenarios. Following ref. [23] and the more recent implementations in ref. [27], the predictor variables were generated as

where are independent draws from the standardized normal distribution, and controls the pairwise correlation between predictor variables. To study different levels of multicollinearity. The dependent variable was generated by the usual linear model

where . In line with ref. [26], and ref. [27], we set and chose the coefficient vector to be the normalized eigenvector corresponding to the largest eigenvalue of the matrix so that .

- To examine robustness, we contaminated the response by introducing outliers by considering 10% and 20% contamination levels. For each contamination level, a corresponding fraction of observations was replaced by outliers whose errors were drawn from a distribution with inflated variance. Concretely, if the nominal error variance is , the contaminated error variance was taken as

The MSE criterion has been used to compare the performance of estimators. Mathematically, it is given as

where indicates the number of Monte Carlo runs. The objective is to achieve an MSE value close to zero. The MSEs of the ridge estimator are influenced by the shrinkage parameter k, which in turn depends on factors such as the sample size n, the number of predictors p, the variance , and the correlation .

4. Results and Discussion

Table 1 lists the MSE values of the OLS, ridge-type (RR, HKB, KIB1–KIB5), M-estimator, and proposed Kibria M-type estimators (KIB.M.1–KIB.M.5) under various levels of multicollinearity () and error variances () for , , and a 10% outlier contamination in the response direction. The results indicate that the OLS and conventional ridge estimators (RR, HKB) exhibit substantially higher MSE values under strong multicollinearity and higher variance conditions. In contrast, the proposed KIB.M estimators, particularly KIB.M.1 and KIB.M.2 consistently yield smaller MSE values across most settings, demonstrating greater robustness to outliers and efficiency compared to existing estimators. These findings highlight the improved performance of the proposed estimators, especially when both multicollinearity and outliers are present in the data.

Table 1.

Estimated MSE with , , and 10% outlier in response direction.

Table 2 displays the MSE values of the OLS, ridge-type, M-, and proposed KIB.M estimators for the case of , , and 10% outliers in the response (second scenario). The results are reported under varying degrees of multicollinearity () and different error variances (). As expected, the MSE values of the conventional estimators (OLS, RR, and HKB) increase sharply with higher and , indicating their sensitivity to multicollinearity and outlier contamination. In contrast, the proposed KIB.M estimators, particularly KIB.M.1, KIB.M.2, and KIB.M.3, maintain smaller and more stable MSE values across all conditions indicating their improved efficiency and robustness properties when compared with traditional and ridge-type estimators. The results further confirm that the proposed estimators perform consistently well even when sample size increases, demonstrating their reliability and adaptability under different error structures for large sample.

Table 2.

Estimated MSE with , , and 10% outlier in response direction.

Table 3 tabulates the MSE values of different estimators when considering , , and 10% outliers in the response (third scenario) with varying levels of multicollinearity () and different error variances (). It is evident that the MSE values of OLS, RR, and HKB estimators increase rapidly with higher correlation and larger error variance, reflecting their sensitivity to multicollinearity and the presence of outliers. Conversely, the proposed KIB.M estimators, particularly KIB.M.1, KIB.M.2, and KIB.M.3, continue to demonstrate superior performance with substantially lower MSE values across all settings. This indicates that the proposed estimators are not only robust to outliers but also maintain their efficiency as the sample size increases. Overall, the results confirm that the KIB.M family performs consistently better than traditional and ridge-type estimators in large-sample scenario affected by multicollinearity and outlier contamination.

Table 3.

Estimated MSE with , , and 10% outlier in response direction.

Table 4, Table 5 and Table 6 present the estimated MSEs of the OLS, ridge-type, M, and proposed KIB.M estimators under varying conditions of sample size (), number of explanatory variables () with the presence of 10% outliers in the response direction. Each scenario was analyzed for different degrees of multicollinearity () and error variances ().

Table 4.

Estimated MSE with , , and 10% outlier in response direction.

Table 5.

Estimated MSE with , , and 10% outlier in the response direction.

Table 6.

Estimated MSE with , , and 10% outlier in the response direction.

From Table 1, Table 2, Table 3, Table 4, Table 5 and Table 6, one can note that the MSE values of OLS, RR, and HKB estimators increase when both multicollinearity and error variance increased which reflecting their sensitivity to such adverse conditions. On the other hand, the proposed KIB estimators, and especially their M-type extensions (KIB.M.1–KIB.M.5), exhibit remarkable stability and consistently achieve lower MSE values across all configurations. Moreover, as the sample size increases from to , the proposed estimators show improved efficiency, maintaining robustness when the predictorrs are increased from () to (), confirming their ability to effectively handle both multicollinearity and contamination in the data. Furthermore, the results reveal that the compatibility of the KIB.M family becomes more pronounced with higher error variance and stronger correlation levels, where traditional estimators tend to break down. Overall, the findings validate the robustness, efficiency, and practical reliability of the proposed KIB.M estimators, demonstrating their effectiveness in both low- and moderate-dimensional regression frameworks under outlier-influenced environments.

In case 20% outliers are introduced in the response direction, the performance of various estimators in terms of MSE is listed in Table 7 considering , , , and . These results indicate that the MSE values of the OLS, RR, and HKB estimators further deteriorate with both higher and larger , confirming their lack of robustness to outliers and multicollinearity. In contrast, the proposed KIB.M estimators exhibit improved stability, particularly at higher correlation levels, with significantly lower MSEs compared to the rest. To be more specific, KIB.M.1, KIB.M.2, KIB.M.3 and KIB.M.5 demonstrate outstanding performance across all contamination levels, maintaining remarkably low MSE values even when the data are heavily influenced by outliers suggesting their strong robustness and efficiency. These results show that while classical estimators deteriorate rapidly under high contamination, the proposed KIB.M estimators preserve their accuracy and reliability, making them highly effective for regression models affected by both multicollinearity and outlier contamination.

Table 7.

Estimated MSE with , , and 20% outlier in response direction.

In the case of increased sample size and 20% outliers in the response direction, one can see that the OLS, RR, and HKB estimators again exhibit considerably high MSEs, particularly when and increase, indicating that they remain highly sensitive to both multicollinearity and contamination. In Table 8, the proposed KIB.M estimators provide notable improvements in estimation accuracy, with substantially reduced MSE values across most scenarios, especially for high-correlation cases. Notably, KIB.M.1, and KIB.M.5 consistently outperform others across all combinations of and , showing remarkable consistency and reliability even under strong multicollinearity and heavy contamination. Overall, the findings validate that the proposed KIB.M estimators retain efficient performance in the presence of both high correlation among predictors and substantial outliers, outperforming traditional and ridge-type estimators by a significant margin.

Table 8.

Estimated MSE with , , and 20% outlier in response direction.

Table 9, Table 10, Table 11 and Table 12 summarize the estimated MSE values for various estimators under the presence of 20% outliers in the response, across different sample sizes (), numbers of predictors (), error variances (), and correlation levels (). Note that the OLS, RR, and HKB perform poorly, exhibiting large MSEs that increase sharply with higher correlation and error variance. This indicates their vulnerability to both multicollinearity and contamination. On the contrary, the proposed KIB estimators, particularly their M-type variants (KIB.M.1–KIB.M.5), consistently demonstrate superior robustness and stability across all scenarios. The KIB.M.1 and KIB.M.5 estimators, in particular, achieve the smallest MSE values even in extreme conditions ( and ). These results confirm that the proposed estimators effectively mitigate the adverse effects of multicollinearity and outliers, outperforming conventional methods and maintaining reliable efficiency in contaminated data environments.

Table 9.

Estimated MSE with , , and 20% outlier in response direction.

Table 10.

Estimated MSE with , , and 20% outlier in response direction.

Table 11.

Estimated MSE with , , and 20% outlier in response direction.

Table 12.

Estimated MSE with , , and 20% outlier in response direction.

Table 13 lists the MSEs of various estimators when the error term follows a heavy-tailed standardized Cauchy distribution with location 0 and scale 1. The table compares the results for two sample sizes ( and ) with two dimensions ( and ) and four multicollinearity levels (). From these results, one can see the OLS estimator performs extremely poorly in all cases, yielding very large MSE values, particularly as approaches unity. This degradation occurs because OLS is highly sensitive to both multicollinearity and heavy-tailed noise, leading to unstable coefficient estimates and inflated variances. The RR and HKB estimators offer moderate improvements but still exhibit substantial MSEs when is high, indicating limited robustness to Cauchy errors. Among the conventional ridge-type estimators, the KIB family (KIB1–KIB5) shows notable stability, with a substantial reduction in MSEs as compared to OLS, RR, and HKB. Notably, the KIB5 estimator consistently provides the lowest MSEs among its group, suggesting that its penalty structure effectively mitigates multicollinearity while offering some resistance to heavy-tailed errors.

Table 13.

MSE of the estimators from heavy-tailed distribution, i.e., Standardized Cauchy distribution.

When robustness is introduced through the M-estimation framework (KIB.M.1–KIB.M.5), the performance improves further. The M-based versions yield remarkably smaller MSEs, especially for higher values, highlighting their improved performance to handle outliers and non-Gaussian noise. In particular, the KIB.M.5 estimator demonstrates the minimum MSEs in almost all scenarios, showing excellent robustness and stability across different settings of n, p, and . In summary, the results indicate that the OLS, RR, and HKB estimators are highly unstable in the presence of Cauchy-distributed errors. The proposed KIB estimators outperform classical ridge estimators under heavy-tailed conditions. The robust versions (KIB.M series), especially KIB.M.5, achieve the smallest MSE values, confirming their effectiveness for regression models affected by both multicollinearity and outliers. These findings emphasize the importance of incorporating robustness through M-estimation techniques when dealing with non-normal, heavy-tailed error distributions, such as the Cauchy case.

Finally, Table 14 listed a summary of the best-performing proposed estimators that yield the minimum MSE under varying conditions of contamination, sample size (n), and dimensionality (p). The results are organized according to the percentage of outliers (10% and 20%) and the error variance () levels. From this table, it can be observed that the performance of the proposed KIB.M estimators remains stable across different configurations, demonstrating robustness in the presence of heavy-tailed errors and outliers. Specifically, KIB.M.1, KIB.M.2, and KIB.M.5 frequently appear as the estimators with the smallest MSE values, indicating their strong resistance to outlier contamination and model irregularities.

Table 14.

Summary table identifying the best proposed estimators having minimum MSE.

5. Real Applications

The application of the proposed estimators is demonstrated on two real data sets, namely the tobacco data and the anthropometric data. The performance of the estimators on real data is evaluated using the mean squared prediction error (MSPE) defined as

where is the observed value of the response variable and is the prediction obtained by fitting the model after deleting the observation.

5.1. Empirical Example-1: Tobacco Data

To illustrate the performance of the proposed estimators, we consider the well-known tobacco data, previously analyzed by [21,26]. The dataset consists of observations of various tobacco blends. The percentage concentrations of four important chemical components are treated as predictor variables, while the amount of heat generated by the tobacco during smoking is taken as the response variable. The regression model for this data can be written as

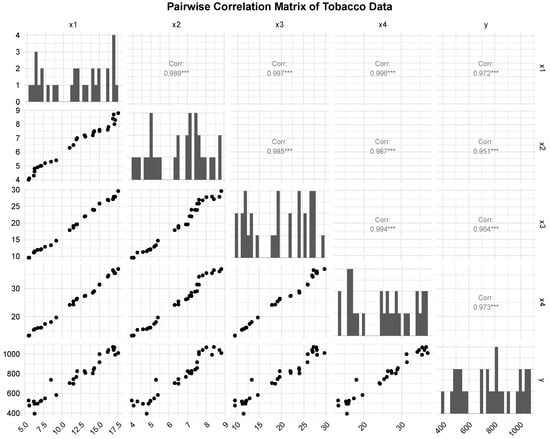

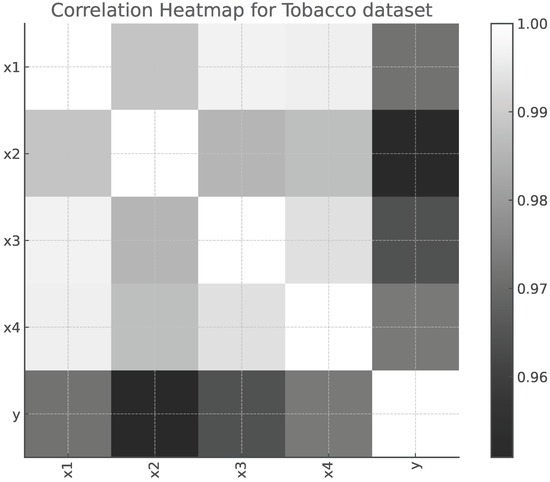

where y represents the heat generated, denote the chemical concentrations, and is the random error term.Figure 1 displays the pairwise correlations among the predictor variables. It can be seen that the predictors are strongly positively correlated with each other, which suggests the presence of multicollinearity. Additionally, in Figure 2, the correlation matrix is displayed as a heatmap, which facilitates quick identification of the pattern and intensity of relationships. The eigenvalues of the correlation matrix are

From these, the condition number is calculated as

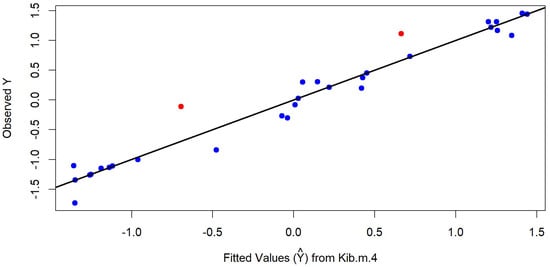

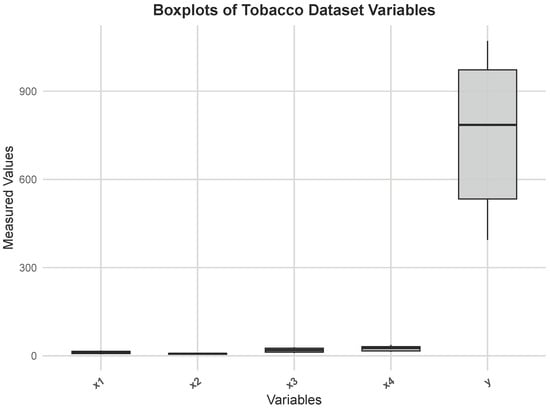

and the condition index is found to be . Both of these values clearly indicate the existence of severe multicollinearity among the predictors. Furthermore, based on the covariance matrix and influence diagnostics, three observations were identified as outliers [28]. The diagnostic plots in Figure 3 and Figure 4 supports the employment of a KIB.M.4 by confirming the existence of outliers (red dots) in response direction, that are distinct from the fitted model (red dots and line). This plot shows how the estimator is unaffected by these extreme data points, resulting in a regression fit that is more reliable. Such influential points, combined with the severe multicollinearity, make the tobacco data a challenging real dataset and a suitable choice for evaluating the robustness and efficiency of new ridge-type estimators.

Figure 1.

Correlation matrix of tobacco data. The color gradient and clustering pattern clearly highlight relationships and multicollinearity among predictors.

Figure 2.

Correlation heatmap of tobacco data. The color gradient clearly highlight relationships and multicollinearity among predictors.

Figure 3.

Diagnostic plot of KIB.M.4 estimator (observed vs. predicted values) using tobacco dataset.

Figure 4.

Boxplots of tobacco variables.

Table 15 presents the MSPEs comparison for the OLS, ridge-type (RR, HKB, and KIB1-KIB5), and proposed Kibria M-type estimators (KIB.M.1-KIB.M.5) based on the tobacco dataset. In particular, KIB.M.4 estimator yield the smallest MSPE value (1.6300), demonstrating improved performance in terms of both stability and predictive accuracy. Table 16 presents the (95%) confidence interval for tobacco dataset.

Table 15.

MSPE comparison of OLS, ridge-type, and proposed Kibria M-estimators for the tobacco dataset.

Table 16.

Confidence interval bounds () for tobacco dataset.

5.2. Empirical Example-2: Anthropometric Data (Aged 2–19 Years)

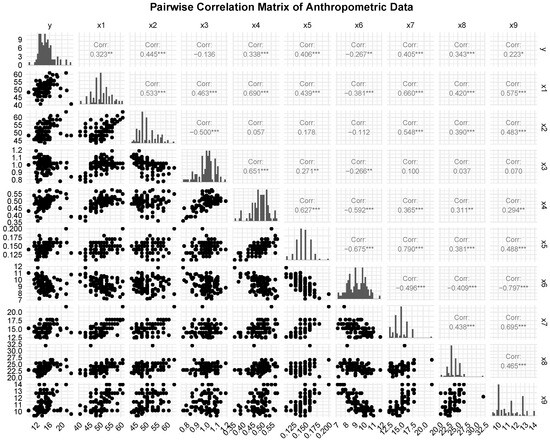

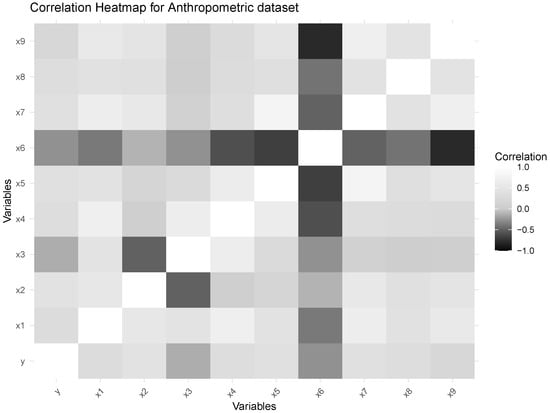

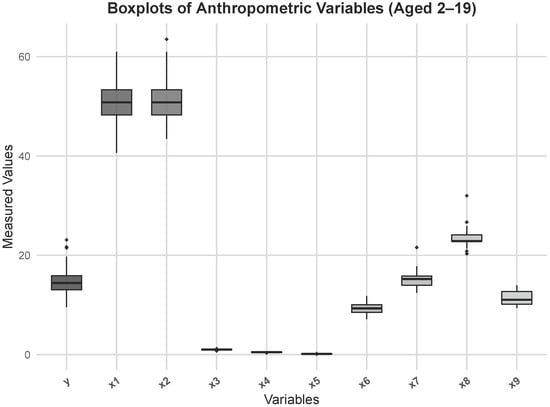

To further evaluate the efficiency of the proposed estimators, we considered another real dataset titled “Anthropometric Data Aged 2–19”, which contains anthropometric measurements for individuals aged between 2 and 19 years. The dataset is available in Aslam, Muhammad (2020) [“Anthropometric-Data-Aged-2-19-Pak”, Mendeley Data, V1, doi: 10.17632/sxgymx5xjm.] and can be accessed from: https://data.mendeley.com/datasets/sxgymx5xjm/1 (accessed on 8 November 2025). The dataset includes the predictor variables like waist circumference (WC, cm), height for age (HpC, cm), mid–upper arm circumference (MUAC, cm), neck circumference (NC, cm), wrist circumference (WrC, cm), arm–to–height ratio (AHtR), waist–to–hip ratio (WHpR), waist–to–height ratio (WHtR), and hip–to–waist ratio (HWrR). The dependent variable is body mass index (BMI, kg/m2). To investigate the relationships among the predictors, a correlation matrix was constructed, and depicted in Figure 5. The figure clearly illustrates that the explanatory variables are highly correlated with one another, suggesting the presence of multicollinearity. The linear associations between the anthropometric variables in the dataset are visually shown by the correlation heatmap in Figure 6, which further confirms the presence of multicollinearity. The boxplot in Figure 7 further confirms the presence of extreme values in the dataset. Moreover, the estimated error variance for the anthropometric data was found to be , which reflects a certain level of random variation or noise in the dataset. The corresponding eigenvalues are

whereas the condition number of the anthropometric data is calculated as

which highlighting potential multicollinearity issues. Additionally, the condition number of the design matrix was , which far exceeds 1000, indicating that the matrix is severely ill-conditioned.

Figure 5.

Correlation matrix of anthropometric variables (Aged 2–19). The color gradient and clustering pattern highlight relationships and multicollinearity among predictors (The correlation plot is based on standardized anthropometric data).

Figure 6.

Correlation heatmap of anthropometric variables (aged 2–19). The color gradient highlights relationships and multicollinearity among predictors.

Figure 7.

Boxplots of anthropometric variables (Aged 2–19). The points above the boxes represent potential outliers across the anthropometric measures (Data values are standardized for comparability).

Table 17 tabulates the MSPE values for the estimators used in this study. The proposed Kibria estimators (KIB.M.1–KIB.M.5) show improved performance over the traditional methods. These proposed estimators achieve notably lower MSPE values, suggesting that the incorporation of robust M-estimation principles enhances resistance to outlier influence and multicollinearity. Overall, the KIB.M.4 estimator yields the smallest MSPE (), implying improved predictive accuracy and robustness. These findings support the conclusion that the proposed Kibria M-estimators outperform both the OLS and standard ridge-type estimators in the anthropometric dataset, offering more reliable estimates of regression coefficients under model imperfections. Table 18 shows the 95% confidence interval of the anthropometric dataset.

Table 17.

MSPE comparison of OLS, ridge-type, and proposed Kibria M-estimators for the anthropometric dataset.

Table 18.

Confidence interval bounds (95%) for the anthropometric dataset.

6. Conclusions

In this study, we explored the combined impact of multicollinearity and response-direction outliers on the performance of several classical and newly proposed estimators. It was observed that the traditional OLS and RR estimators do not perform efficiently in terms of the MSE when multicollinearity coexists with outliers in the response direction. Although robust M-estimators are generally employed to handle such issues, their performance deteriorates when severe multicollinearity and large error variance are present simultaneously. To overcome these limitations, five new modified Kibria M-estimators have been proposed.

Extensive Monte Carlo simulations indicate that the proposed estimators, particularly KIB.M.1, KIB.M.3, and KIB.M.5, outperform existing OLS, RR, and RM estimators by yielding lower MSE values across various levels of multicollinearity and data contamination. Additional simulations under heavy-tailed error distributions, specifically the standardized Cauchy distribution, further confirm the robustness of the proposed estimators. In this challenging scenario, where traditional estimators such as OLS, RR, and HKB produced extremely large MSEs due to the infinite variance of the Cauchy errors, the KIB.M estimators exhibited remarkable stability. Notably, KIB.M.5 tends to perform best, and achieved the lowest MSE across all configurations of sample size, number of predictors, and multicollinearity levels, highlighting its exceptional resilience to both multicollinearity and heavy-tailed noise. Furthermore, analyses of two real datasets, using MSPE criterion, demonstrate that the KIB.M estimators, particularly KIB.M.4, deliver the most stable and efficient estimates, outperforming all competing methods.

Future research may extend the proposed methodology in several directions. First, applying the proposed estimators to generalized linear models or high-dimensional settings would broaden their applicability. Second, developing adaptive data-driven approaches for optimal biasing parameter selection could enhance computational efficiency. Finally, exploring Bayesian or machine learning-based frameworks for robust ridge-type estimation may further improve robustness and predictive accuracy in the presence of complex data structures and severe multicollinearity.

Author Contributions

Conceptualization, H.N. and I.S.; methodology, I.S. and D.W.; software, H.N. and D.W.; validation, S.A.; formal analysis, H.N.; investigation, S.A.; resources, I.S.; data curation, D.W.; writing—original draft preparation, I.S. and H.N.; writing—review and editing, S.A. and D.W.; visualization, H.N.; supervision, I.S.; project administration, I.S. and S.A.; funding acquisition, I.S. and H.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Tobacco data are available from [21,26]. “Anthropometric Data Aged 2–19” can be accessed from: https://data.mendeley.com/datasets/sxgymx5xjm/1 (accessed on 8 November 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Frisch, R. Wirtschaftsrechnung und Input-Output-Analyse; G. Fischer Verlag: Jena, Germany, 1934. [Google Scholar]

- Belsley, D.A.; Kuh, E.; Welsch, R.E. Regression Diagnostics: Identifying Influential Data and Sources of Collinearity; John Wiley & Sons: Hoboken, NJ, USA, 2005. [Google Scholar]

- Hoerl, A.E.; Kennard, R.W. Ridge regression: Applications to nonorthogonal problems. Technometrics 1970, 12, 69–82. [Google Scholar] [CrossRef]

- Kibria, B.M.G. More than hundred (100) estimators for estimating the shrinkage parameter in a linear and generalized linear ridge regression models. J. Econom. Stat. 2022, 2, 233–252. [Google Scholar] [CrossRef]

- Khalaf, G.; Månsson, K.; Shukur, G. Modified ridge regression estimators. Commun. Stat.-Theory Methods 2013, 42, 1476–1487. [Google Scholar] [CrossRef]

- Dar, I.S.; Chand, S. New heteroscedasticity-adjusted ridge estimators in linear regression model. Commun. Stat.-Theory Methods 2024, 53, 7087–7101. [Google Scholar] [CrossRef]

- Gujarati, D.N.; Porter, D.C. Basic Econometrics, 5th ed.; McGraw-Hill Irwin: New York, NY, USA, 2009. [Google Scholar]

- Silvapulle, M.J. Robust ridge regression based on an M-estimator. Aust. J. Stat. 1991, 33, 319–333. [Google Scholar] [CrossRef]

- Ertaş, H. A modified ridge m-estimator for linear regression model with multicollinearity and outliers. Commun. Stat.-Simul. Comput. 2018, 47, 1240–1250. [Google Scholar] [CrossRef]

- Yasin, S.; Salem, S.; Ayed, H.; Kamal, S.; Suhail, M.; Khan, Y.A. Modified Robust Ridge M-Estimators in Two-Parameter Ridge Regression Model. Math. Probl. Eng. 2021, 2021, 1845914. [Google Scholar] [CrossRef]

- Majid, A.; Amin, M.; Aslam, M.; Ahmad, S. New robust ridge estimators for the linear regression model with outliers. Commun. Stat.-Simul. Comput. 2023, 52, 4717–4738. [Google Scholar] [CrossRef]

- Wasim, D.; Zaman, Q.; Ahmad, M.; Kibria, B.G. Mitigating multicollinearity and outliers in regression: Comparison of some new and old robust ridge M-estimators. J. Stat. Comput. Simul. 2025, 95, 3526–3547. [Google Scholar] [CrossRef]

- Alharthi, M.F. Novel Data-Driven Shrinkage Ridge Parameters for Handling Multicollinearity in Regression Models with Environmental and Chemical Data Applications. Axioms 2025, 14, 812. [Google Scholar] [CrossRef]

- Alharthi, M.F.; Akhtar, N. Newly Improved Two-Parameter Ridge Estimators: A Better Approach for Mitigating Multicollinearity in Regression Analysis. Axioms 2025, 14, 186. [Google Scholar] [CrossRef]

- Akhtar, N.; Alharthi, M.F.; Khan, M.S. Mitigating Multicollinearity in Regression: A Study on Improved Ridge Estimators. Mathematics 2024, 12, 3027. [Google Scholar] [CrossRef]

- Shah, I.; Sajid, F.; Ali, S.; Rehman, A.; Bahaj, S.A.; Fati, S.M. On the performance of jackknife based estimators for ridge regression. IEEE Access 2021, 9, 68044–68053. [Google Scholar] [CrossRef]

- Shaheen, N.; Shah, I.; Almohaimeed, A.; Ali, S.; Alqifari, H.N. Some modified ridge estimators for handling the multicollinearity problem. Mathematics 2023, 11, 2522. [Google Scholar] [CrossRef]

- Zhou, Q.; Cook, R.; Zou, G. Large sample properties of Huber M-estimators in linear regression models. J. Stat. Theory Pract. 2024, 18, 45–68. [Google Scholar]

- Montgomery, D.C.; Peck, E.A.; Vining, G.G. Introduction to Linear Regression Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2021. [Google Scholar]

- Huber, P.J.; Ronchetti, E.M. Robust Statistics; John Wiley & Sons: Hoboken, NJ, USA, 2011. [Google Scholar]

- Ertaş, A. A Robust Ridge M-Estimator for the Linear Regression Model. Commun. Stat.-Simul. Comput. 2018, 47, 2938–2949. [Google Scholar] [CrossRef]

- Hoerl, A.E.; Kannard, R.W.; Baldwin, K.F. Ridge regression: Some simulations. Commun. Stat.-Theory Methods 1975, 4, 105–123. [Google Scholar] [CrossRef]

- McDonald, G.C.; Galarneau, D.I. A Monte Carlo evaluation of some ridge-type estimators. J. Am. Stat. Assoc. 1975, 70, 407–416. [Google Scholar] [CrossRef]

- McDonald, R.P. Handbook of Multivariate Experimental Psychology, 2nd ed.; Springer: New York, NY, USA, 2009. [Google Scholar] [CrossRef]

- Majid, M.; Danish, M.; Haq, S.U.; Naz, H. A robust two-parameter ridge estimator for handling multicollinearity and heteroscedasticity. Commun. Stat.-Simul. Comput. 2021, 50, 2581–2595. [Google Scholar] [CrossRef]

- Suhail, M.; Chand, S.; Kibria, B.G. Quantile-based robust ridge m-estimator for linear regression model in presence of multicollinearity and outliers. Commun. Stat.-Simul. Comput. 2021, 50, 3194–3206. [Google Scholar] [CrossRef]

- Shabbir, M.; Chand, S.; Iqbal, F. A new ridge estimator for linear regression model with some challenging behavior of error term. Commun. Stat.-Simul. Comput. 2024, 53, 5442–5452. [Google Scholar] [CrossRef]

- Rousseeuw, P.J. Robust estimation and identifying outliers. Handb. Stat. Methods Eng. Sci. 1990, 16, 11–16. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).