Abstract

The two-parameter distribution known as the Kumaraswamy distribution is a very flexible alternative to the beta distribution with the same (0,1) support. Originally proposed in the field of hydrology, it has subsequently received a good deal of positive attention in both the theoretical and applied statistics literatures. Interestingly, the problem of testing formally for the appropriateness of the Kumaraswamy distribution appears to have received little or no attention to date. To fill this gap, in this paper, we apply a “biased transformation” methodology to several standard goodness-of-fit tests based on the empirical distribution function. A simulation study reveals that these (modified) tests perform well in the context of the Kumaraswamy distribution, in terms of both their low size distortion and respectable power. In particular, the “biased transformation” Anderson–Darling test dominates the other tests that are considered.

1. Introduction

The two-parameter distribution introduced by Kumaraswamy [1] is a very flexible alternative to the beta distribution with the same (0,1) support. Originally proposed for the analysis of hydrological data, it has subsequently received a good deal of attention in both the theoretical and applied statistics literature. For example, Sundar and Subbiah [2], Seifi et al. [3], Ponnambalam et al. [4], Ganji et al. [5], and Courard-Hauri [6] provide applications in various fields, and theoretical extensions are implemented by Cordeiro and Castro [7], Bayer et al. [8], and Cordeiro et al. [9], among others.

The distribution function for a random variable, X, that follows the Kumaraswamy distribution is as follows:

which can be inverted to give the quantile function as follows:

The corresponding density function is as follows:

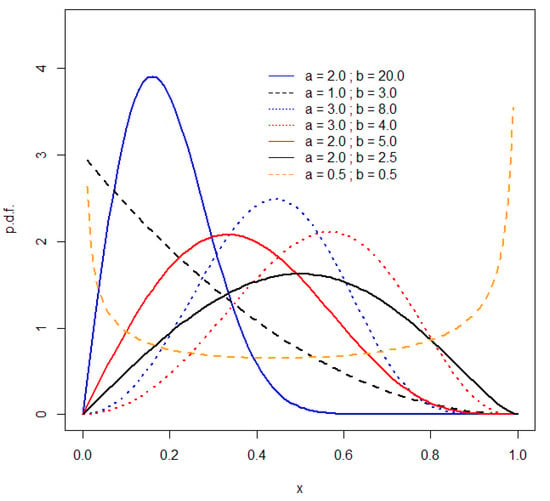

where ‘a’ and ‘b’ are both shape parameters. Some examples of the forms that this density can take are illustrated in Figure 1. In particular, similarly to the beta density, is unimodal if and ; uniantimodal if and ; increasing (decreasing) in x if and ( and ); and constant if . Nadarajah [10] notes that the Kumaraswamy distribution is in fact a special case of the generalized beta distribution proposed by McDonald [11].

Figure 1.

Kumaraswamy densities.

Jones [12] notes that the r’th central moment of the Kumaraswamy distribution exists if and is given by the following:

where B (., .) is the complete beta function; and from (2), the median of the distribution is as follows:

See Jones [12], Garg [13], and Mitnik [14] for a detailed discussion of the additional properties of the Kumaraswamy distribution.

These properties, compared with those of the beta distribution, are considered by many to give the Kumaraswamy distribution a competitive edge. For example, compared with the formula for the cumulative distribution function of the beta distribution, the invertible closed-form expression in (1) is seen by some as being advantageous in the context of computer-intensive simulation analyses and modelling based on quantiles. The latter consideration is of particular interest in the context of regression analyses. Beta regression, based on the closed-form mean of the distribution, is well established (e.g., Ferrari and Cribari-Neto [15]), but robust regression based on the median is impractical. In contrast, the median of the Kumaraswamy distribution has a simple form, given in (5), and so robust regression based on this distribution is straightforward. See Mitnik and Baek [16] and Hamedi-Shahraki et al. [17], for example.

Interestingly, the problem of testing formally for the appropriateness of the Kumaraswamy distribution appears to have received little or no attention in the literature. Goodness-of-fit tests based on the empirical distribution function (EDF) are obvious candidates, but their properties are unexplored for this distribution. Raschke [18] observed that such tests were unavailable for the beta distribution, and he proposed a “biased transformation” that he then applied to the test of Anderson and Darling [19,20] to fill this gap. He also used this approach to construct an EDF test for the gamma distribution. Subsequently, Raschke [21] provided extensive simulation results that favoured the use of the “bias-transformed” Anderson–Darling test over various other tests based on the EDF, such as those of Kuiper [22] and Watson [23], the Cramér–von Mises test (Cramér [24]; von Mises [25]), and the Kolmogorov–Smirnov test (Kolmogorov [26]; Smirnov [27]).

In this paper, we apply Raschke’s methodology to the problem of constructing EDF goodness-of-fit tests for the Kumaraswamy distribution, and we compare the performances of several such standard tests in terms of both size and power. We find that Raschke’s method performs well in this context, with the Kolmogorov–Smirnov and Cramér–von Mises tests exhibiting the least size distortion, and the Anderson–Darling test being a clear choice in terms of power against a wide range of alternatives.

In the next section, we introduce the “biased transformation” testing strategy suggested by Raschke and describe the five well-known EDF tests that we consider in this paper. Section 3 provides the results of a simulation experiment that evaluates the sizes and powers of the tests, and an empirical application is included in Section 4. Some concluding remarks are presented in Section 5.

2. Raschke’s “Biased Transformation” Testing

In very simple terms, the procedure proposed by Raschke involves the use of a transformation that converts the problem of testing the null hypothesis that the data follow the Kumaraswamy distribution into one of testing the null hypothesis of normality. The latter, of course, is readily performed using standard EDF tests. More specifically, the steps involved are as follows (Raschke [21]):

- (i)

- Assuming that the data, X, follow the Kumaraswamy distribution, estimate the shape parameters, a and b, using maximum likelihood (ML) estimation. See Lemonte [28] and Jones [12] for details of the ML estimator for this distribution;

- (ii)

- Using these parameter estimates, generate a sample of Y, where , is the distribution function for the standard normal distribution, and is given in (1);

- (iii)

- Obtain the ML estimates of the parameters of the normal distribution for Y;

- (iv)

- Apply an EDF test for normality to the Y data;

- (v)

- For a chosen significance level, α, reject “X is Kumaraswamy” if “Y is Normal” is rejected.

We consider five standard EDF tests for normality at step (iv), with the n values of the Y data in ascending order. See Stephens [29] for more details. The first two of these tests are based on the two quantities , and . The Kolmogorov–Smirnov test statistic is , and Kuiper’s test statistic is , where . In each case, is rejected if the test statistic exceeds the appropriate critical value.

Further, defining , the Cramér–von Mises test statistic is given by . Similarly, if , the Watson test statistic is defined as . Finally, the Anderson–Darling test statistic is defined as , where . Again, for these last three tests, the null hypothesis is rejected if the test statistic exceeds the appropriate critical value. In the next section, we consider nominal significance levels of α = 5% and α =10%. The critical values for the five tests are from Table 4.7 of Stephens [29]. They are reported in the last row of Table 1 in the next section, to the degree of accuracy provided by Stephens.

Table 1.

Simulated sizes of the EDF tests for various shape parameter values *.

3. A Simulation Study

Using Raschke’s “biased transformation”, each of the five EDF tests for the Kumaraswamy null hypothesis has been evaluated in a simulation experiment, using R (R Core Team [30]). In all parts of the Monte Carlo study, 10,000 Monte Carlo replications were used. The ‘univariateML’ package (Moss and Nagler [31]) was used for obtaining the ML estimates of the Kumaraswamy distribution in step (i), and the ‘GoFKernel’ package (Pavia [32]) was used to invert the distribution in step (ii) in the last section. Random numbers for the truncated log-normal and triangular distribution were generated using the ‘EnvStats’ package (Millard and Kowarik [33]), while those for the Kumaraswamy distribution itself were generated using the ‘VGAM’ package (Yee [34]). The ‘trapezoid’ package (Hetzel [35]) and the ‘truncnorm’ package (Mersmann et al. [36]) were used to generate random variates from the trapezoidal and truncated normal distributions, respectively, and the R base ‘stats’ package was used for the beta variates. Finally, random variates from the truncated gamma distribution were generated using the ‘cascsim’ package (Bear et al. [37]), and those for the truncated Weibull distribution were obtained using the ‘ReIns’ package (Reynkens [38]). The R code that was used for both parts of the simulation experiment is available for download from https://github.com/DaveGiles1949/r-code/blob/master/Kumaraswmay%20Paper%20EDF%20Power%20Study.R (accessed on 5 March 2024).

In the first part of the experiment, we investigate the true “size” of each of the five EDF tests for various sample sizes (n) and a selection of values of the parameters (a and b) of the null distribution. As noted above, the tests are applied using nominal significance levels of both 5% and 10%, and we are concerned here with the extent of any “size distortion” that may arise.

The results obtained with seven representative (a,b) pairs and sample sizes ranging from n = 10 to n = 100 are shown in Table 1. The corresponding Kumaraswamy densities appear in Figure 1. The simulated sizes of all of the tests are very close to the nominal significance levels in all cases. This result is very encouraging and provides initial support for adopting the “biased transformation” EDF testing strategy for the Kumaraswamy distribution.

Of the five tests considered, the Kolmogorov–Smirnov test performs best, in terms of the least absolute difference between the nominal and simulated sizes in 16 of the 36 cases at the 5% nominal level and 10 of the 36 cases at the 10% nominal level, as shown in Table 1. In the latter case, it is outperformed by the Cramér–von Mises test, which dominates for 14 of the 36 cases that are considered. Further, there is a general tendency for the simulated sizes of all of the tests to exceed the nominal significance levels when , while the converse is true (in general) when . An exception is when both of the distributions’ parameters equal 0.5, in which case the density is uniantimodal. These size distortions are generally small, but their direction has implications for the results relating to the powers of the tests.

The second part of the Monte Carlo experiment investigates the powers of the five tests against a range of alternative hypotheses. The latter all involve distributions on the (0,1) interval, with some distributions truncated accordingly. It should be noted that the simulated powers that are reported are “raw powers” and are not “size-adjusted”. That is, the various critical values that are used are those reported at the end of Table 1. In practical applications, this is how a researcher would proceed.

The results of this part of the study are reported in Table 2. The sample sizes range from n = 10 to n = 1000. A wide range of parameter values was considered for each of the alternative distributions, and a representative selection of the results that were obtained are reported here. For the truncated log-normal distribution, “meanlog” is the mean of the distribution of the non-truncated random variable on the log scale, and “sdlog” is the standard deviation of the distribution of the non-truncated random variable on the log scale. For the trapezoidal distribution, m1 and m2 are the first and second modes, n1 is the growth parameter, and n3 is the decay parameter.

Table 2.

Simulated powers of the EDF tests against various alternative hypotheses.

One immediate result that emerges is that, with only two exceptions, all of the tests are “unbiased” in all of the settings considered. That is, the power of the test exceeds the nominal significance level. The only exceptions that were encountered are when the alternative distribution is truncated log-normal, with both parameters equal to 0.5 and with a sample size of n = 10. This is a very encouraging result. A test that is “biased” has the unfortunate property that it rejects the null hypothesis less frequently when it is false than when it is true. Moreover, as the various tests are “consistent”, their powers increase as the sample size increases, for any given case.

The results in Table 2 also provide overwhelming support for the Anderson–Darling test in terms of power. Interestingly, this result is totally consistent with the conclusion reached by Raschke [21] for the same “biased transformation” EDF tests in the context of the beta distribution. This may reflect that fact that the latter distribution and the Kumaraswamy distributions have densities that are capable of following very similar shapes, depending on the values of the associated parameters. Moreover, Stephens [29] recommends the Anderson–Darling test over other EDF tests in general.

The Anderson–Darling test has the highest power among all five tests, in all cases, except for very small samples when the alternative distribution is trapezoidal with the parameters m1 = 1/4 and m2 = 3/4; when n1 = n3 = 3; and for the truncated Weibull alternative with n = 10. Of the other tests under study, the Cramér–von Mises test ranks second in terms of power, followed by Watson’s test and the Kolmogorov–Smirnov test. We find that Kuiper’s test is the least powerful, in general.

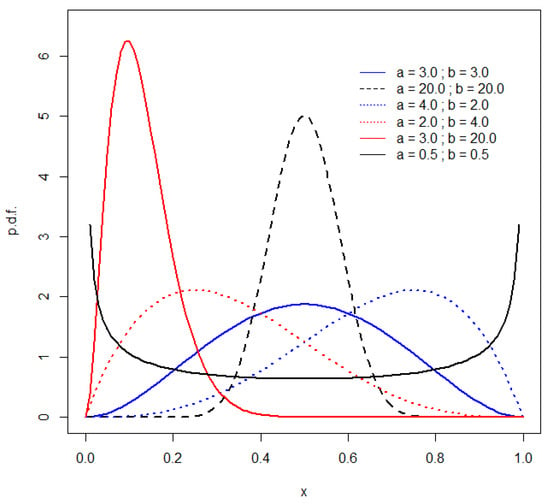

As was noted in Section 1, the density for the Kumaraswamy distribution can take shapes very similar to those of the beta density, as the values of the two shape parameters vary in each case. The densities for the alternative beta distributions that are considered in the power analysis are depicted in Figure 2 and may be compared with the Kumaraswamy densities in Figure 1. This similarity suggests that there may be instances in which the proposed EDF tests have relatively low power. If the data are generated by a beta distribution whose characteristics can be mimicked extremely closely by a Kumaraswamy distribution with the same, or similar, shape parameters, the tests may fail to reject the latter distribution. An obvious case in point is when the values of both of these shape parameters are 0.5, and the densities of both distributions are uniantimodal, though not identical. As can be seen in Figure 1, the density for the Kumaraswamy distribution is slightly asymmetric in this case, while its beta distribution counterpart is symmetric. The relatively low power of all of the EDF tests, even for n = 1000, in this case can be seen in the last section of Table 2.

Figure 2.

Beta densities.

In view of these observations, we have considered a wide range of different values for the shape parameters associated with the beta distributions that are taken as alternative hypotheses in the power analysis of the EDF tests. A representative selection of the results appears in Table 2. There, we see that although the various tests have modest power when the data are generated by beta (2,4), beta (4,2), and beta (3,3) distributions, they perform extremely well against several other beta alternatives.

Although the degree of size distortion associated with the use of Stephens’ critical values exhibited in Table 1 is generally quite small, a researcher may choose to simulate exact (bias-corrected) critical values for the various EDF tests. It is important to note that such values will depend on the samples size, n, and on the values of the shape parameters, a and b, associated with the Kumaraswamy distribution. In a practical application, the first of these values would be known, and estimates of the shape parameters could be obtained from the sample values in question.

However, the powers of the various tests will also depend on these three values, as well as on the characteristics of the distribution associated with the alternative hypothesis. This complicates the task of illustrating these powers when the critical values are simulated, but Table 3 provides a limited set of results. These results focus on relatively small sample sizes, as the size distortion becomes negligible for large n values. A selection of the alternative hypotheses covered in Table 2 is chosen for further investigation, and the values of the Kumaraswamy shape parameters are chosen to provide the similarity between their shapes and the associated alternatives’ densities. The critical values themselves are obtained as the 90th and 95th percentiles of 10,000 simulated values of each test statistic under the null distribution. There is a different critical value for every entry in Table 3, so they are not reported individually. The powers themselves are then simulated from a further 10,000 replications under the alternative hypothesis, as was the case for the results in Table 2.

Table 3.

Simulated powers of the EDF tests with simulated critical values *.

Ranking the various tests in terms of the power results in Table 3 leaves our previous conclusions unchanged. The Anderson–Darling test emerges as the preferred choice. The powers based on the simulated critical values tend to be smaller than their counterparts in Table 2. This is consistent with the earlier observation that the size distortion emerging in that table was positive for small sample sizes.

4. Empirical Applications

To illustrate the effectiveness of the “biased transformation” Anderson–Darling test, we present two applications with actual (economic) data. The R code and associated data files can be downloaded from https://github.com/DaveGiles1949/r-code/blob/master/Kumaraswamy%20Paper%20Applications.R (accessed on 5 March 2024).

4.1. The Hidden Economy

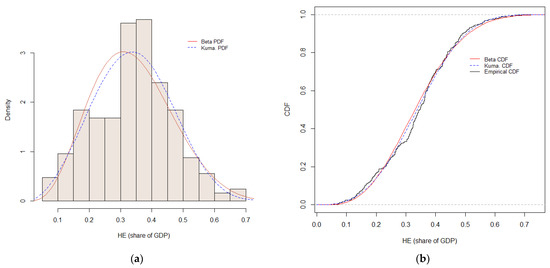

The first application uses data for the size of the so-called “hidden economy” or “underground economy” for 158 countries in each of the years from 1991 to 2017. These data measure the size of the hidden economy (HE) relative to the value of gross domestic product (GDP) in each country and are reported by Medina and Schneider [39]. These ratios range from 0.0543 for Switzerland to 0.5578 for Bolivia, with a mean of 0.2741 and a standard deviation of 0.1120. A random sample of size n = 250 was obtained from this population of 2329 values, using the “sample” command in R with replacement.

When a Kumaraswamy distribution is fitted to the sample data, the estimates of the two shape parameters are 2.8832 and 15.4084. See Figure 3a,b. The value for the “biased transformation” Anderson–Darling statistic is 0.6313, which is less than both the asymptotic and simulated 5% critical values of 0.7520 and 0.7298, respectively. So, at this significance level, we would not reject the hypothesis that the data follow a Kumaraswamy distribution. If a beta distribution is fitted to the data, the estimates of the two shape parameters are 4.3809 and 8.5369. The corresponding Anderson–Darling statistic (using the “biased transformation” and the beta distribution) is 1.3585. This exceeds both the asymptotic and simulated 5% critical values of 0.7520 and 0.7638, respectively, leading us to reject the hypothesis that the data follow a beta distribution. These two test results support each other and allow us to discriminate between the potential distributions.

Figure 3.

(a) Hidden economy densities. (b) Distribution functions.

4.2. Food Expenditure

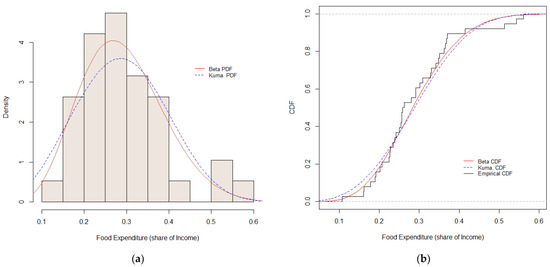

The second application uses data for the fraction of household income that is spent on food. A random sample of 38 households is available in the “FoodExpenditure” data-set in the ‘betareg’ package for R (Zeileis et al. [40]). In our sample, the observations range from 0.1075 to 0.5612 in value, and the sample mean and standard deviation are 0.2897 and 0.1014, respectively. When a Kumaraswamy distribution is fitted to the data, the estimates of the two shape parameters are 2.9546 and 26.9653. See Figure 4a,b. The Anderson–Darling statistic is 0.8521, which exceeds the asymptotic and simulated 5% critical values of 0.7520 and 0.7415, respectively. This supports the rejection of the hypothesis that the data are Kumaraswamy-distributed. Fitting a beta distribution to the data yields estimates of 6.0721 and 14.8224 for the shape parameters. The corresponding Anderson–Darling statistic is 0.5114. The asymptotic and simulated 5% critical values are 0.7520 and 0.7700, respectively, suggesting that the hypothesis that the data are beta-distributed cannot be rejected at this significance level.

Figure 4.

(a) Food expenditure densities. (b) Distribution functions.

5. Conclusions

The Kumaraswamy distribution is an alternative to the beta distribution, which has been applied in statistical studies in a wide range of disciplines. Its theoretical properties are well established, but the literature lacks a discussion of formal goodness-of-fit tests for this distribution. In this paper, we have applied the “biased transformation” methodology suggested by Raschke [18] to various standard tests based on the empirical distribution function and investigated their performance for the Kumaraswamy distribution.

The results of our simulation experiment, which focuses on both the size and power of these tests, can be summarized as follows. The “biased transformation” EDF goodness-of-fit testing strategy performs well for the Kumaraswamy distribution against a wide range of possible alternatives, though it needs to be treated with caution against certain beta distribution alternatives. In all cases, the Anderson–Darling test clearly emerges as the most powerful test of those considered and is recommended for practitioners.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

See the links referenced within the text of the paper.

Acknowledgments

I would like to thank the two anonymous reviewers whose helpful comments led to several improvements to the paper. I am also most grateful to Friedrich Schneider for supplying the data from Medina and Schneider [39] in electronic format.

Conflicts of Interest

The author declares no conflict of interest.

References

- Kumaraswamy, P. A generalized probability density function for double-bounded random processes. J. Hydrol. 1980, 46, 79–88. [Google Scholar] [CrossRef]

- Sundar, V.; Subbiah, K. Application of double bounded probability density function for analysis of ocean waves. Ocean Eng. 1989, 16, 193–200. [Google Scholar] [CrossRef]

- Seifi, A.; Ponnambalam, K.; Vlach, J. Maximization of Manufacturing Yield of Systems with Arbitrary Distributions of Component Values. Ann. Oper. Res. 2000, 99, 373–383. [Google Scholar] [CrossRef]

- Ponnambalam, K.; Seifi, A.; Vlach, J. Probabilistic design of systems with general distributions of parameters. Int. J. Circuit Theory Appl. 2001, 29, 527–536. [Google Scholar] [CrossRef]

- Ganji, A.; Ponnambalam, K.; Khalili, D.; Karamouz, M. Grain yield reliability analysis with crop water demand uncertainty. Stoch. Environ. Res. Risk Assess. 2006, 20, 259–277. [Google Scholar] [CrossRef]

- Courard-Hauri, D. Using Monte Carlo analysis to investigate the relationship between overconsumption and uncertain access to one’s personal utility function. Ecol. Econ. 2007, 64, 152–162. [Google Scholar] [CrossRef]

- Cordeiro, G.M.; Castro, M. A new family of generalized distributions. J. Stat. Comput. Simul. 2010, 81, 883–898. [Google Scholar] [CrossRef]

- Bayer, F.M.; Bayer, D.M.; Pumi, G. Kumaraswamy autoregressive moving average models for double bounded environmental data. J. Hydrol. 2017, 555, 385–396. [Google Scholar] [CrossRef]

- Cordeiro, G.M.; Machado, E.C.; Botter, D.A.; Sandoval, M.C. The Kumaraswamy normal linear regression model with applications. Commun. Stat.-Simul. Comput. 2018, 47, 3062–3082. [Google Scholar] [CrossRef]

- Nadarajah, S. On the distribution of Kumaraswamy. J. Hydrol. 2008, 348, 568–569. [Google Scholar] [CrossRef]

- McDonald, J.B. Some Generalized Functions for the Size Distribution of Income. Econometrica 1984, 52, 647. [Google Scholar] [CrossRef]

- Jones, M. Kumaraswamy’s distribution: A beta-type distribution with some tractability advantages. Stat. Methodol. 2009, 6, 70–81. [Google Scholar] [CrossRef]

- Garg, M. On Distribution of Order Statistics from Kumaraswamy Distribution. Kyungpook Math. J. 2008, 48, 411–417. [Google Scholar] [CrossRef]

- Mitnik, P.A. New properties of the Kumaraswamy distribution. Commun. Stat.-Theory Methods 2013, 42, 741–755. [Google Scholar] [CrossRef]

- Ferrari, S.; Cribari-Neto, F. Beta Regression for Modelling Rates and Proportions. J. Appl. Stat. 2004, 31, 799–815. [Google Scholar] [CrossRef]

- Mitnik, P.A.; Baek, S. The Kumaraswamy distribution: Median-dispersion re-parameterizations for regression modeling and simulation-based estimation. Stat. Pap. 2013, 54, 177–192. [Google Scholar] [CrossRef]

- Hamedi-Shahraki, S.; Rasekhi, A.; Yekaninejad, M.S.; Eshraghian, M.R.; Pakpour, A.H. Kumaraswamy regression modeling for Bounded Outcome Scores. Pak. J. Stat. Oper. Res. 2021, 17, 79–88. [Google Scholar] [CrossRef]

- Raschke, M. The Biased Transformation and Its Application in Goodness-of-Fit Tests for the Beta and Gamma Distribution. Commun. Stat.-Simul. Comput. 2009, 38, 1870–1890. [Google Scholar] [CrossRef]

- Anderson, T.W.; Darling, D.A. Asymptotic theory of certain “goodness of fit” criteria based on stochastic processes. Ann. Math. Stat. 1952, 23, 193–212. [Google Scholar] [CrossRef]

- Anderson, T.W.; Darling, D.A. A test for goodness of fit. J. Am. Stat. Assoc. 1954, 49, 300–310. [Google Scholar] [CrossRef]

- Raschke, M. Empirical behaviour of tests for the beta distribution and their application in environmental research. Stoch. Environ. Res. Risk Assess. 2011, 25, 79–89. [Google Scholar] [CrossRef]

- Kuiper, N.H. Tests concerning random points on a circle. Proc. K. Ned. Akad. Wet. A 1962, 63, 38–47. [Google Scholar] [CrossRef]

- Watson, G.S. Goodness-of-fit tests on a circle. I. Biometrika 1961, 48, 109–114. [Google Scholar] [CrossRef]

- Cramér, H. On the composition of elementary errors. Scand. Actuar. J. 1928, 1928, 13–74. [Google Scholar] [CrossRef]

- von Mises, R.E. Wahrscheinlichkeit, Statistik und Wahrheit; Julius Springer: Vienna, Austria, 1928. [Google Scholar]

- Kolmogorov, A. Sulla determinazione empirica di una legge di distribuzione. G. dell’Ist. Ital. Attuari 1933, 4, 83–91. [Google Scholar]

- Smirnov, N. Table for Estimating the Goodness of Fit of Empirical Distributions. Ann. Math. Stat. 1948, 19, 279–281. [Google Scholar] [CrossRef]

- Lemonte, A.J. Improved point estimation for the Kumaraswamy distribution. J. Stat. Comput. Simul. 2011, 81, 1971–1982. [Google Scholar] [CrossRef]

- Stephens, M.A. Tests based on EDF statistics. In Goodness-of-Fit Techniques; D’Augustino, R.B., Stephens, M.A., Eds.; Marcel Dekker: New York, NY, USA, 1986; pp. 97–194. [Google Scholar]

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2024; Available online: https://www.R-project.org/ (accessed on 5 March 2024).

- Moss, J.; Nagler, T. Package ‘univariateML’. 2022. Available online: https://cran.r-project.org/web/packages/univariateML/univariateML.pdf (accessed on 5 March 2024).

- Pavia, J.M. Package ‘GoFKernel’. 2022. Available online: https://cran.r-project.org/web/packages/GoFKernel/GoFKernel.pdf (accessed on 5 March 2024).

- Millard, S.P.; Kowarik, A. Package ‘EnvStats’. 2023. Available online: https://cran.r-project.org/web/packages/EnvStats/EnvStats.pdf (accessed on 5 March 2024).

- Yee, T. Package ‘VGAM’. 2023. Available online: https://cran.r-project.org/web/packages/VGAM/VGAM.pdf (accessed on 5 March 2024).

- Hetzel, J.T. Package ‘trapezoid’. 2022. Available online: https://cran.r-project.org/web/packages/trapezoid/trapezoid.pdf (accessed on 5 March 2024).

- Mersmann, O.; Trautmann, H.; Steuer, D.; Bornkamp, B. Package ‘Truncnorm’. 2023. Available online: https://cran.r-project.org/web/packages/truncnorm/truncnorm.pdf (accessed on 5 March 2024).

- Bear, R.; Shang, K.; You, H.; Fannin, B. Package ‘Cascsim’. 2022. Available online: https://cran.r-project.org/web/packages/cascsim/cascsim.pdf (accessed on 5 March 2024).

- Reynkens, T. Package ‘ReIns’. 2023. Available online: https://cran.r-project.org/web/packages/ReIns/ReIns.pdf (accessed on 5 March 2024).

- Medina, L.; Schneider, H. Shedding Light on the Shadow Economy: A Global Database and the Interaction with the Official One; CESifo Working Paper No. 7981; Ludwig Maximilian University of Munich: Munich, Germany, 2019. [Google Scholar] [CrossRef]

- Zeileis, A.; Cribari-Neto, F.; Gruen, B.; Kosmidis, I.; Simas, A.B.; Rocha, A.V. Package ‘betareg’. 2022. Available online: https://cran.r-project.org/web/packages/betareg/betareg.pdf (accessed on 5 March 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).