Abstract

The well-known inspection paradox, or waiting time paradox, states that, in a renewal process, the inspection interval is stochastically larger than a common interarrival time with distribution function F, where the inspection interval is the particular interarrival time that contains the specified time point of process inspection. The inspection paradox may also be expressed in terms of expectations, where the inequality is, in general, strict. A renewal process can be used, for example, to describe the arrivals of vehicles, customers, or claims. Since the inspection time may also be considered a random variable T with a left-continuous distribution function G that is independent of the renewal process, the question arises whether the inspection paradox inevitably occurs in this general situation, apart from some marginal cases with respect to F and G. For a random inspection time T, it turns out that non-trivial choices lead to non-occurrence of the paradox. In this paper, a complete characterization of the non-occurrence of the inspection paradox is given with respect to G. Several examples and related assertions are presented, including the deterministic time situation.

1. Introduction

The inspection paradox, also known as the waiting time paradox or renewal paradox, describes a paradoxical effect that arises when a running renewal process, with events occurring at specific times, is observed: the observed time interval between events tends to be longer than a typical interval. For example, this happens when the events in question are incoming claims of an insurance company and we arbitrarily select a time to observe the process (without knowledge of any claim arrival times). The selected time falls into an interval between two successive claims, and we record the length of this interval. This length is stochastically larger than that of a regular (unobserved) interval between two successive incoming claims.

A renewal process can be used, for example, to model the times of incoming claims, where the waiting times between successive claims, called interarrival times, are modeled as realizations of independent and identically distributed non-negative random variables with cumulative distribution function F. Thus, in a realized renewal process based on a non-degenerate distribution, we observe interarrival times of different lengths, and when inspecting the process at a certain time t, we are likely to observe a comparatively long interval, since longer intervals are more likely to cover t (cf. Feller [1], p. 13).

This paradoxical effect arises in various scenarios, such as waiting for a bus or a train (cf. Feller [1], pp. 12–14, Masuda and Porter [2]), observing the lifetimes of identical batteries (cf. Ross [3], p. 460), in connection with sampling bias (cf. Stein and Dattero [4]), and in stochastic resetting (cf. Pal et al. [5]). In a medical context, Jenkins et al. [6] discussed how the perception of regularly occurring phase singularities, which are indicators for cardiac fibrillation, is influenced by the inspection paradox. They found that visual observation may systematically oversample phase singularities that last longer (potentially leading to errors) and that longer windows of observation can minimize the effect.

Much attention has been paid to the study of the inspection paradox, its properties, and implications (see, e.g., Gakis and Sivazlian [7], Angus [8], Ross [9]). In particular, considering a random variable for the time of inspection instead of a deterministic time leads to insights regarding the quantification of the effect (see, e.g., Kamps [10]). Herff et al. [11] derived an inequality for the length of the inspection interval with a random time and Rauwolf and Kamps [12] gave a general representation for the expected inspection interval length, which served as the basis for the main results in this work. Several explicit examples with random time and applications to earthquake and geyser data can be found in the literature (see, e.g., Liu and Peña [13], Rauwolf and Kamps [12]).

In the case of a deterministic inspection time t, the inspection paradox does not occur for the trivial choice of interarrival times with a degenerate distribution, i.e., for deterministic interval lengths. Moreover, it does not occur if the smallest possible interarrival time is larger than t, i.e., if the inspection is performed prior to the first event. However, for a random inspection time T with a left-continuous distribution function G, there are examples with non-trivial choices of the distribution functions F and G for which the paradox does not appear, meaning that the length of the inspection interval is also distributed according to F.

In this general situation, we give a complete characterization of the non-occurrence of the inspection paradox with respect to the choice of G, as well as results for F in the classical case of a degenerate G. Introducing a random inspection time also leads to a conclusion for the classical case with deterministic time: there, non-occurrence of the paradox for suitable inspection times only happens for degenerate interarrival distributions.

In Section 2, we briefly recap the classical inspection paradox. Renewal processes with a random inspection time T are discussed in Section 3, along with examples. Section 4 contains a complete characterization of the settings without inspection paradox with respect to the distribution function G of T. In Section 5, the case of a degenerate inspection time t is studied, and it is shown that, under suitable conditions, non-occurrence of the inspection paradox forces degenerate interarrival times.

2. The Classical Inspection Paradox Inequality

In order to formally introduce the inspection paradox, we first briefly recapitulate concepts of renewal processes. An introduction to renewal processes can be found in, e.g., Cox [14], Feller [1], Ross [3], Pinsky and Karlin [15], Mitov and Omey [16], and Kulkarni [17].

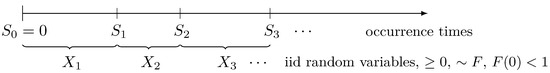

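Let $X_1, X_2, \dots$ be a sequence of non-negative, independent, and identically distributed (iid) random variables on some probability space $(\Omega, \mathcal{A}, P)$ with a common distribution function $F$, $F(0) < 1$. These random variables will be called interarrival times in the following. Then, the sequence of occurrence times given by

$$S_0 = 0, \qquad S_n = \sum_{i=1}^{n} X_i, \quad n \in \mathbb{N},$$

defines a renewal process (see Figure 1). The corresponding renewal counting process is denoted by $\{N(t), t \ge 0\}$, where

$$N(t) = \sup\{n \in \mathbb{N}_0 : S_n \le t\}, \quad t \ge 0,$$

counts the number of occurrences up to time t. In particular, $\{N(t) \ge n\} = \{S_n \le t\}$ holds for all $n \in \mathbb{N}_0$ and for all $t \ge 0$.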
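As an illustration of these definitions, the following sketch simulates one path of the renewal process until the first occurrence time after t and returns both $N(t)$ and the length $X_{N(t)+1}$ of the interval covering t. The function name `inspect_at` and the uniform interarrival law in the usage lines are illustrative assumptions, not part of the paper; termination of the loop relies on $F(0) < 1$.

```python
import random
from typing import Callable, Tuple


def inspect_at(t: float, sample_x: Callable[[], float]) -> Tuple[int, float]:
    """Return (N(t), X_{N(t)+1}) for one simulated renewal path.

    N(t) = sup{n >= 0 : S_n <= t} with S_0 = 0 and S_n = X_1 + ... + X_n;
    X_{N(t)+1} is the length of the interarrival interval covering t.
    """
    s, n = 0.0, 0                # S_0 = 0
    while True:
        x = sample_x()           # draw X_{n+1}
        if s + x > t:            # S_{n+1} > t, hence N(t) = n
            return n, x
        s += x                   # S_{n+1} <= t: one more renewal up to time t
        n += 1


# Deterministic interarrival times of length 2: S_1 = 2, S_2 = 4, S_3 = 6.
print(inspect_at(5.0, lambda: 2.0))   # (2, 2.0)

# Uniform interarrival times on [0, 2] with E[X_1] = 1, inspected at t = 10.
rng = random.Random(1)
lengths = [inspect_at(10.0, lambda: rng.uniform(0.0, 2.0))[1] for _ in range(50_000)]
print(sum(lengths) / len(lengths))    # clearly above E[X_1] = 1
```

For large t, renewal theory suggests a value close to $E[X_1^2]/E[X_1] = 4/3$ for this uniform law, which previews the paradox discussed below.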

Figure 1.

Renewal process.

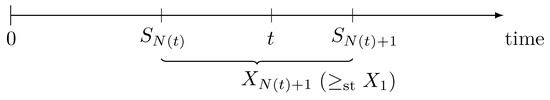

When we inspect a renewal process at some fixed time $t \ge 0$, exactly $N(t)$ renewals have already taken place. The last renewal prior to t was at time $S_{N(t)}$, and the subsequent renewal will occur at time $S_{N(t)+1}$. The renewal interval covering t is referred to as the "inspection interval", and its length is given by $X_{N(t)+1} = S_{N(t)+1} - S_{N(t)}$ (see Figure 2). Representations for the survival function and the expected value of the inspection interval length can be found in the literature (see, e.g., Gakis and Sivazlian [7], pp. 44–45).

Figure 2.

Inspection interval.

The inspection paradox of renewal theory then states that

$$P(X_{N(t)+1} > z) \ge P(X_1 > z), \quad z \ge 0,\ t \ge 0, \tag{1}$$

(cf. Angus [8], Ross [3]), which means that the inspection interval is stochastically larger than a common renewal interval, i.e., $X_{N(t)+1} \ge_{\mathrm{st}} X_1$. Consequently, in terms of expected values, the mean inspection interval length is at least the mean length of any regular renewal interval, in the sense that

$$E[X_{N(t)+1}] \ge E[X_1], \quad t \ge 0. \tag{2}$$

No paradoxical effect occurs in the trivial case where the interarrival times have a degenerate distribution, i.e., $P(X_i = c) = 1$, $i \in \mathbb{N}$, for some $c > 0$, since equality holds in the inspection paradox, i.e., $P(X_{N(t)+1} = c) = 1$ for all $t \ge 0$, and all events in the corresponding renewal process take place perfectly on time. In other words, all time intervals (including the inspected interval) have precisely the same length. Up to this point, it has remained open whether this is the only example in which equality holds for a fixed t. The answer is provided in the following sections by means of a generalization to a random inspection time T, for which equality in (1) and (2) is characterized.

3. The Inspection Paradox with a Random Inspection Time

Instead of a fixed point in time $t \ge 0$, we can also consider a random variable to model the time of inspection. Let T be such a random inspection time, i.e., a non-negative random variable that is independent of the renewal process and has a left-continuous distribution function G given by $G(t) = P(T < t)$, $t \in \mathbb{R}$.

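Then, conditioning on T and applying (1) for each fixed value of T shows that the paradoxical effect occurs as in the classical inspection paradox, with

$$P(X_{N(T)+1} > z) \ge P(X_1 > z), \quad z \ge 0, \tag{3}$$

i.e., $X_{N(T)+1} \ge_{\mathrm{st}} X_1$, and from (2) we conclude

$$E[X_{N(T)+1}] \ge E[X_1] \tag{4}$$

for the inspection paradox in terms of expectations.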
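Inequality (3) can be checked empirically by drawing T independently of the interarrival times. The sketch below assumes, purely for illustration, uniform interarrival times on $[0, 2]$ and $T \sim U[0, 10]$, and compares Monte Carlo estimates of the two survival functions at a few values of z; such a comparison only illustrates (3) and is not a proof of it.

```python
import random


def covering_length(t, sample_x):
    """Length X_{N(t)+1} of the interarrival interval that contains time t."""
    s = 0.0
    while True:
        x = sample_x()
        if s + x > t:
            return x
        s += x


rng = random.Random(7)
draw_x = lambda: rng.uniform(0.0, 2.0)    # assumed interarrival law F
draw_t = lambda: rng.uniform(0.0, 10.0)   # assumed law of T, independent of the X_i

m = 50_000
inspected = [covering_length(draw_t(), draw_x) for _ in range(m)]
regular = [draw_x() for _ in range(m)]

gaps = {}
for z in (0.5, 1.0, 1.5):
    p_inspected = sum(v > z for v in inspected) / m   # estimates P(X_{N(T)+1} > z)
    p_regular = sum(v > z for v in regular) / m       # estimates P(X_1 > z)
    gaps[z] = p_inspected - p_regular
    print(f"z = {z}: {p_inspected:.3f} vs {p_regular:.3f}")
```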

In Section 2, we saw that equality in (1) holds in the fixed time case, i.e., for the choice $P(T = t) = 1$, when the interarrival times have a degenerate distribution. The following example shows that, in a trivial case but for non-degenerate distributions of $X_1$ and T, equality in (3) and (4) holds. Thus, introducing a random inspection time can lead to other cases with equality in (3) and (4).

Example 1.

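Consider a Binomial renewal process with interarrival times having a geometric distribution on $\mathbb{N}$, i.e.,

$$P(X_1 = k) = p(1-p)^{k-1}, \quad k \in \mathbb{N}, \ p \in (0, 1),$$

and a random inspection time T with a two-point distribution on $\{a, b\}$, $0 \le a < b < 1$, i.e., $P(T = a) = q = 1 - P(T = b)$ with $q \in (0, 1)$. Since $T < 1 \le X_1 = S_1$ almost surely, we have $N(T) = 0$, and thus $X_{N(T)+1} = X_1$ holds. The situation is trivial in the sense that the inspection is made prior to $S_1$. In particular, the distribution function G is constant on the support of the interarrival times with $G(k) = 1$ for all $k \in \mathbb{N}$.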
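Example 1 can be mirrored in a short simulation. The values $p = 0.3$, $q = 0.4$ and the support points $\{0, 0.5\}$ of T are hypothetical choices satisfying the assumptions of the example; the sketch confirms that the inspection always happens before $S_1$ and that the mean inspected length matches $E[X_1] = 1/p$ up to Monte Carlo error.

```python
import random


def geometric(rng, p):
    """Draw from P(X = k) = p (1 - p)^(k - 1), k = 1, 2, ..."""
    k = 1
    while rng.random() >= p:
        k += 1
    return k


def inspect_at(t, sample_x):
    """Return (N(t), X_{N(t)+1}) for one simulated renewal path."""
    s, n = 0.0, 0
    while True:
        x = sample_x()
        if s + x > t:
            return n, x
        s += x
        n += 1


rng = random.Random(3)
p, q = 0.3, 0.4                                        # hypothetical parameters
draw_x = lambda: geometric(rng, p)
draw_t = lambda: 0.0 if rng.random() < q else 0.5      # two-point T on {0, 0.5}

results = [inspect_at(draw_t(), draw_x) for _ in range(20_000)]
print(max(n for n, _ in results))                     # 0, i.e., N(T) = 0 on every path
print(sum(x for _, x in results) / len(results))      # close to 1/p
```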

On the other hand, in many well-known examples, Inequality (1) is strict for $t > 0$ and $z > 0$, and so is (3); this is the case, e.g., for a Poisson process with exponentially distributed interarrival times. The same holds true for random inspection times; we refer to Liu and Peña [13], who discussed the choice of an exponentially distributed random inspection time.
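For the Poisson process with rate λ, the strict inequality can be made explicit. A standard computation (the interval covering t is the sum of the age $\min(t, E_1)$ and an independent residual $E_2$, with $E_1, E_2$ exponential with rate λ) gives $E[X_{N(t)+1}] = (2 - e^{-\lambda t})/\lambda > 1/\lambda$ for $t > 0$; averaging over an independent $T \sim \mathrm{Exp}(\mu)$ and using $E[e^{-\lambda T}] = \mu/(\lambda+\mu)$ gives $(2 - \mu/(\lambda+\mu))/\lambda$. The sketch below compares these closed forms with simulation; the parameter values are arbitrary illustrative choices.

```python
import math
import random


def covering_length(t, sample_x):
    """Length X_{N(t)+1} of the interarrival interval that contains time t."""
    s = 0.0
    while True:
        x = sample_x()
        if s + x > t:
            return x
        s += x


lam, t, mu = 1.0, 1.0, 0.5          # illustrative rate, fixed time, rate of T
rng = random.Random(11)
draw_x = lambda: rng.expovariate(lam)
m = 100_000

est_fixed = sum(covering_length(t, draw_x) for _ in range(m)) / m
est_random = sum(covering_length(rng.expovariate(mu), draw_x) for _ in range(m)) / m

exact_fixed = (2.0 - math.exp(-lam * t)) / lam    # about 1.632 for lam = t = 1
exact_random = (2.0 - mu / (lam + mu)) / lam      # 5/3 for lam = 1, mu = 0.5
print(est_fixed, exact_fixed)
print(est_random, exact_random)
```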

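In fact, the gap between the expected inspection interval length and a common expected interval length can be quantified for any choice of distributions. Rauwolf and Kamps [12] derived the representation

$$E[\varphi(X_{N(T)+1})] = E[\varphi(X_1)] + \sum_{n=1}^{\infty} \operatorname{Cov}\big(\varphi(X_n), G(S_n)\big), \tag{5}$$

where $\varphi: [0, \infty) \to \mathbb{R}$ is a measurable function such that all expected values and integrals are well-defined and exist finitely. If φ is monotone non-decreasing, then all covariance terms are non-negative. In particular, choosing $\varphi(x) = x$, $x \ge 0$, leads to a representation for the expected inspection interval length,

$$E[X_{N(T)+1}] = E[X_1] + \sum_{n=1}^{\infty} \operatorname{Cov}\big(X_n, G(S_n)\big), \tag{6}$$

and choosing $\varphi(x) = \mathbb{1}_{(z,\infty)}(x)$, $x \ge 0$, for $z \ge 0$, leads to a representation for $P(X_{N(T)+1} > z)$. Thus, Inequalities (3) and (4) can be derived from (5), and choosing $P(T = t) = 1$ leads to the formulae for the classical case from Section 2.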
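For orientation, the following sketch indicates one way to arrive at (5). It is a reconstruction of a standard argument, not necessarily the proof given in [12], and it assumes $F(0) < 1$ (so that $S_n \to \infty$ almost surely) as well as that expectations and series may be interchanged. Since T is independent of the interarrival times, $P(S_n \le T < S_{n+1} \mid X_1, X_2, \dots) = G(S_{n+1}) - G(S_n)$, and $X_{n+1}$ is independent of $S_n$. Hence,

$$
\begin{aligned}
E[\varphi(X_{N(T)+1})]
&= \sum_{n=0}^{\infty} E\big[\varphi(X_{n+1})\big(G(S_{n+1}) - G(S_n)\big)\big] \\
&= \sum_{n=0}^{\infty} \Big( E[\varphi(X_1)]\big(E[G(S_{n+1})] - E[G(S_n)]\big) + \operatorname{Cov}\big(\varphi(X_{n+1}), G(S_{n+1})\big) \Big) \\
&= E[\varphi(X_1)]\Big(\lim_{n\to\infty} E[G(S_n)] - G(0)\Big) + \sum_{n=1}^{\infty} \operatorname{Cov}\big(\varphi(X_n), G(S_n)\big),
\end{aligned}
$$

and $G(0) = P(T < 0) = 0$ together with $\lim_{n\to\infty} E[G(S_n)] = 1$ (by dominated convergence) yields (5).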

As in Example 1, the introduction of a random inspection time T leads to various other possible and non-degenerate cases with non-occurrence of the inspection paradox. For identifying these situations, Formula (5) offers the option to examine cases in which all covariance terms are equal to zero. On the other hand, Formula (5) also facilitates the study of situations with possibly large gaps between $E[X_{N(T)+1}]$ and $E[X_1]$, say. For examples, we refer to [12].
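Representation (6) can also be checked numerically. The sketch below assumes $X_i \sim U[0, 2]$ (so $E[X_1] = 1$) and $T \sim U[0, 4]$, estimates the covariance terms $\operatorname{Cov}(X_n, G(S_n))$ by Monte Carlo, truncates the series after 15 terms (beyond which $G(S_n) = 1$ with overwhelming probability, so the remaining terms are negligible), and compares the result with a direct simulation of $E[X_{N(T)+1}]$. Both numbers are estimates, so they agree only up to sampling error.

```python
import random

HORIZON = 4.0          # T ~ U[0, HORIZON] (illustrative choice)
M, N_TERMS = 40_000, 15
rng = random.Random(5)


def G(x):
    """Left-continuous CDF of T ~ U[0, HORIZON], i.e. G(x) = P(T < x)."""
    return min(max(x / HORIZON, 0.0), 1.0)


def covering_length(t):
    """Length X_{N(t)+1} of the interval covering t, with X_i ~ U[0, 2]."""
    s = 0.0
    while True:
        x = rng.uniform(0.0, 2.0)
        if s + x > t:
            return x
        s += x


# Direct Monte Carlo estimate of E[X_{N(T)+1}].
direct = sum(covering_length(rng.uniform(0.0, HORIZON)) for _ in range(M)) / M

# Monte Carlo estimate of E[X_1] + sum_{n=1}^{N_TERMS} Cov(X_n, G(S_n)).
sx, sg, sxg = [0.0] * N_TERMS, [0.0] * N_TERMS, [0.0] * N_TERMS
for _ in range(M):
    s = 0.0
    for n in range(N_TERMS):
        x = rng.uniform(0.0, 2.0)   # X_{n+1}
        s += x                      # S_{n+1}
        g = G(s)
        sx[n] += x
        sg[n] += g
        sxg[n] += x * g
covariances = [sxg[n] / M - (sx[n] / M) * (sg[n] / M) for n in range(N_TERMS)]
via_representation = 1.0 + sum(covariances)     # E[X_1] = 1 for U[0, 2]

print(direct, via_representation)
```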

In the following discussion, we will need the left and right endpoints of the support of the interarrival times, the formal introduction of which is given in Notation 1.

Notation 1.

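Let X be a random variable with right-continuous distribution function F. Let $F^{-1}$ be the quantile function defined by $F^{-1}(u) = \inf\{x \in \mathbb{R} : F(x) \ge u\}$, $u \in (0, 1)$. Then, the left and right endpoints α and ω of the support of X are denoted by

$$\alpha = \lim_{u \downarrow 0} F^{-1}(u) \quad \text{and} \quad \omega = \lim_{u \uparrow 1} F^{-1}(u).$$

The support of X then lies in the interval $[\alpha, \omega]$ if $\omega < \infty$, or in $[\alpha, \infty)$ otherwise, and this interval will be denoted by $I_X$.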

The following result by Behboodian [18] given in Lemma 1 can be utilized to determine whether a covariance of two functions of a random variable is zero. In particular, this will be applied to determine whether the covariance terms in (5) are positive or zero. An alternative proof can be found in Rauwolf [19].

Lemma 1

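(cf. [18], Theorem 2). Let X be a non-negative and non-degenerate random variable with probability distribution $P^X$. Let g and h be two monotone non-decreasing, measurable functions such that $\operatorname{Cov}(g(X), h(X))$ exists finitely. Then, $\operatorname{Cov}(g(X), h(X)) \ge 0$ and

$$\operatorname{Cov}\big(g(X), h(X)\big) = 0 \iff g \text{ or } h \text{ is constant } P^X\text{-almost surely}.$$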

If neither g nor h in Lemma 1 is constant $P^X$-almost surely on the support of X, then the covariance of $g(X)$ and $h(X)$ is strictly positive.
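Lemma 1 can be illustrated with exact arithmetic on a finite distribution. In the sketch below, the three-point law of X and the step functions are hypothetical choices: with $g(x) = x$, the covariance vanishes when h is constant on the support $\{1, 2, 3\}$ and is strictly positive when h jumps inside it.

```python
from fractions import Fraction


def cov(pmf, g, h):
    """Exact Cov(g(X), h(X)) for a finite distribution given as {value: probability}."""
    eg = sum(p * g(x) for x, p in pmf.items())
    eh = sum(p * h(x) for x, p in pmf.items())
    egh = sum(p * g(x) * h(x) for x, p in pmf.items())
    return egh - eg * eh


pmf = {1: Fraction(1, 4), 2: Fraction(1, 2), 3: Fraction(1, 4)}   # hypothetical law of X
g = lambda x: x                               # non-decreasing and not constant on {1, 2, 3}
h_flat = lambda x: 1 if x > 0.5 else 0        # non-decreasing, constant on the support
h_jump = lambda x: 1 if x > 1.5 else 0        # non-decreasing, jumps inside the support

print(cov(pmf, g, h_flat))   # 0
print(cov(pmf, g, h_jump))   # 1/4
```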

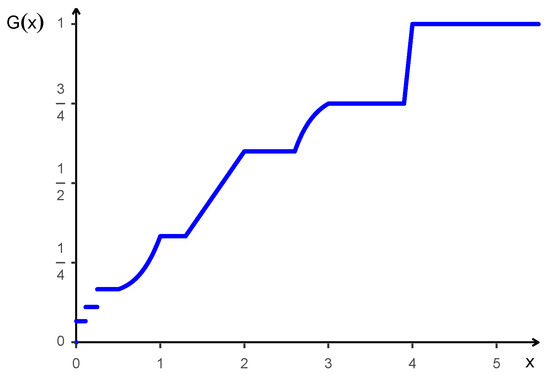

Based on the representation with the covariance terms, we present an example of a renewal process with absolutely continuous interarrival times and a particular choice of the distribution function G of the random inspection time such that $E[\varphi(X_{N(T)+1})] = E[\varphi(X_1)]$ holds for any φ as in (5).

Example 2.

Let the interarrival times $X_1, X_2, \dots$ have a uniform distribution on an interval $[a, b]$, $0 < a < b$, with density function f given by $f(x) = 1/(b - a)$, $x \in [a, b]$. Furthermore, let the random inspection time T have the left-continuous distribution function G given in Figure 3. The function G is constant on the interval $[a, b]$, i.e., on the support of $X_1$, and therefore, an application of Lemma 1 yields $\operatorname{Cov}(\varphi(X_1), G(X_1)) = 0$. Similarly, G is constant on the supports of the occurrence times $S_2, S_3, \dots$, from which equality in Equation (5) follows for any choice of φ. In between the supports of $S_n$ and $S_{n+1}$, $n \in \mathbb{N}$, the form of the distribution function G (left-continuous version) is arbitrary.

Figure 3.

A distribution function G with non-occurrence of the inspection paradox.
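A simulation sketch in the spirit of Example 2, with hypothetical concrete choices: $X_i \sim U[1, 1.8]$ (so $S_1$ and $S_2$ have supports $[1, 1.8]$ and $[2, 3.6]$, and later supports overlap) and T drawn with probability 1/2 from $U[0, 1]$ and with probability 1/2 from $U[1.8, 2]$. The resulting G equals 1/2 on $[1, 1.8]$ and 1 on $[2, \infty)$, so it is constant on the supports of all occurrence times. For comparison, $T \sim U[0, 5]$ is not of this type.

```python
import random


def covering_length(t, sample_x):
    """Length X_{N(t)+1} of the interarrival interval that contains time t."""
    s = 0.0
    while True:
        x = sample_x()
        if s + x > t:
            return x
        s += x


rng = random.Random(2)
draw_x = lambda: rng.uniform(1.0, 1.8)        # E[X_1] = 1.4


def draw_t_flat():
    """T with G constant on [1, 1.8] and equal to 1 on [2, inf)."""
    return rng.uniform(0.0, 1.0) if rng.random() < 0.5 else rng.uniform(1.8, 2.0)


m = 100_000
mean_flat = sum(covering_length(draw_t_flat(), draw_x) for _ in range(m)) / m
mean_spread = sum(covering_length(rng.uniform(0.0, 5.0), draw_x) for _ in range(m)) / m
print(mean_flat)     # close to 1.4: no inspection paradox
print(mean_spread)   # noticeably larger than 1.4
```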

The equality in Example 1 can also be derived by applying Lemma 1. This approach facilitates finding new explicit examples with equality, especially in the case of random inspection times, which allow for more possibilities than the classical inspection paradox with a degenerate time $P(T = t) = 1$.

4. Non-Occurrence of the Inspection Paradox

A general result regarding non-occurrence of the inspection paradox can be derived on the basis of Representation (5) with a random inspection time. This is done in Theorem 1, and the result is applied to the special cases of equality in (3) and (4); see Section 4.2 and Remark 3, respectively. Either result can be used to decide whether a strict inequality holds for specific choices of distribution functions F and G. In particular, the condition for equality is easy to check and can therefore be used without explicitly calculating, e.g., the expected value of $X_{N(T)+1}$.

4.1. General Results

This subsection is concerned with determining distributions for $X_1$ and T with distribution functions F and G, respectively, such that $E[\varphi(X_{N(T)+1})] = E[\varphi(X_1)]$ holds, given that φ is not a constant function. Lemma 2 is a key component of the discussion: given the support endpoints of the interarrival times, it explicitly determines the smallest index n from which on the supports of successive occurrence times overlap.

Throughout, $x \in \mathbb{R}$ is called an atom of (the distribution of) $X_1$ if $P(X_1 = x) > 0$.

Lemma 2.

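Let the interarrival times have left support endpoint $\alpha > 0$ and right support endpoint $\omega > \alpha$. Then, there exists a natural number κ from which on the supports of two consecutive occurrence times $S_n$ and $S_{n+1}$, $n \ge \kappa$, overlap in the sense that $(n+1)\alpha \le n\omega$, where equality is only admissible if α or ω is an atom of $X_1$. In particular, κ is given by

$$\kappa = \begin{cases} \dfrac{\alpha}{\omega - \alpha}, & \text{if } \dfrac{\alpha}{\omega - \alpha} \in \mathbb{N} \text{ and } \alpha \text{ or } \omega \text{ is an atom of } X_1, \\[2ex] \left\lfloor \dfrac{\alpha}{\omega - \alpha} \right\rfloor + 1, & \text{otherwise}, \end{cases}$$

with $\kappa = 1$ if $\omega = \infty$.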

Proof.

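Assuming that there is no point of overlap for any $n \in \mathbb{N}$, i.e., that

$$(n+1)\alpha > n\omega \quad \text{for all } n \in \mathbb{N},$$

leads to $0 < \omega - \alpha < \alpha/n$ for all $n \in \mathbb{N}$, and hence to $\omega \le \alpha$, which is a contradiction to the assumption that $\omega > \alpha$. Therefore, there exists a natural number for which the supports of successive occurrence times with large enough indices overlap. If $(n+1)\alpha = n\omega$ for some $n \in \mathbb{N}$, then

$$n = \frac{\alpha}{\omega - \alpha}.$$

If the right support endpoint is of the form $\omega = \frac{n_0 + 1}{n_0}\alpha$ for some $n_0 \in \mathbb{N}$ and α or ω is an atom of $X_1$, then the first point of overlap is at $n_0\omega = (n_0 + 1)\alpha$, i.e., the supports of $S_{n_0}$ and $S_{n_0+1}$ touch in an atom ($\kappa = n_0 = \alpha/(\omega - \alpha)$). If neither α nor ω is an atom of $X_1$, then the first overlap happens for the supports of $S_{n_0+1}$ and $S_{n_0+2}$ (i.e., $\kappa = n_0 + 1$). If $\alpha/(\omega - \alpha) \notin \mathbb{N}$, then the smallest n with $(n+1)\alpha < n\omega$, i.e., with $n > \alpha/(\omega - \alpha)$, is $\lfloor \alpha/(\omega - \alpha) \rfloor + 1$. □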
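The case distinction in Lemma 2 translates directly into a small helper. This is a sketch under the lemma's assumptions ($0 < \alpha < \omega$); the function name `kappa` and the flag `boundary_atom` (which encodes whether α or ω is an atom of $X_1$) are hypothetical, and exact rational arithmetic avoids rounding problems in the integrality test.

```python
import math
from fractions import Fraction


def kappa(alpha, omega, boundary_atom=False):
    """Smallest index from which supports of consecutive occurrence times overlap (Lemma 2).

    alpha, omega: support endpoints of X_1 with 0 < alpha < omega (omega may be math.inf).
    boundary_atom: True if alpha or omega is an atom of X_1.
    """
    if not 0 < alpha < omega:
        raise ValueError("Lemma 2 requires 0 < alpha < omega")
    if math.isinf(omega):
        return 1
    ratio = Fraction(alpha) / (Fraction(omega) - Fraction(alpha))
    if ratio.denominator == 1 and boundary_atom:
        return int(ratio)            # supports of S_kappa and S_{kappa+1} touch in an atom
    return math.floor(ratio) + 1


print(kappa(1, 2))          # 2
print(kappa(1, 2, True))    # 1
print(kappa(1, 1.5))        # 3
print(kappa(1, 3))          # 1
print(kappa(1, math.inf))   # 1
```

For instance, with $\alpha = 1$ and $\omega = 2$ and no atoms, the supports $[1, 2]$ of $S_1$ and $[2, 4]$ of $S_2$ only touch in a null point, so the first genuine overlap is between $S_2$ and $S_3$.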

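The trivial case $\alpha = 0$ is excluded in Lemma 2, as the support of $S_n$, covered by the interval $[0, n\omega]$ (or $[0, \infty)$), is a subset of the support of $S_{n+1}$, covered by $[0, (n+1)\omega]$ (or $[0, \infty)$), for all $n \in \mathbb{N}$. In this case, the supports of all occurrence times overlap.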

We will now determine cases with equality of the expected values in (5), i.e., non-occurrence of the inspection paradox, under the general assumption that G is a left-continuous distribution function.

Theorem 1.

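Let the interarrival times have left support endpoint $\alpha > 0$, finite right support endpoint $\omega > \alpha$, and let κ be defined as in Lemma 2.

Let $\varphi: [0, \infty) \to \mathbb{R}$ be a measurable, monotone non-decreasing function such that all expected values and integrals are well-defined and exist finitely. Furthermore, assume that φ is not constant on $[\alpha, \omega]$ $P^{X_1}$-almost surely.

Let T be a non-negative random variable with left-continuous distribution function G that is independent of the interarrival times $X_1, X_2, \dots$.

Then, $E[\varphi(X_{N(T)+1})] = E[\varphi(X_1)]$ is equivalent to

$$G(x) = \begin{cases} g_0(x), & 0 \le x \le \alpha, \\ c_n, & n\alpha < x \le n\omega, \ n \in \{1, \dots, \kappa - 1\}, \\ g_n(x), & n\omega < x \le (n+1)\alpha, \ n \in \{1, \dots, \kappa - 1\}, \\ 1, & x > \kappa\alpha, \end{cases} \tag{7}$$

where $0 \le c_1 \le \dots \le c_{\kappa-1} \le 1$ are constants and $g_n$, $n \in \{0, \dots, \kappa - 1\}$, are functions such that G is a left-continuous distribution function.

If $\kappa = 1$, then $E[\varphi(X_{N(T)+1})] = E[\varphi(X_1)]$ is equivalent to $G(x) = 1$, $x > \alpha$, if α is not an atom of $X_1$, and to $G(x) = 1$, $x \ge \alpha$, if α is an atom of $X_1$.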

Proof.

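With Equation (5), $E[\varphi(X_{N(T)+1})] = E[\varphi(X_1)]$ is equivalent to

$$\operatorname{Cov}\big(\varphi(X_n), G(S_n)\big) = 0 \quad \text{for all } n \in \mathbb{N},$$

since φ and G are both monotone non-decreasing, and thus all covariances are non-negative. For $n = 1$, applying Lemma 1 yields

$$\operatorname{Cov}\big(\varphi(X_1), G(X_1)\big) = 0 \iff G(x) = c_1 \text{ for } P^{X_1}\text{-almost all } x \in [\alpha, \omega]$$

for some constant $c_1 \in [0, 1]$, due to the assumption that φ is not constant on $[\alpha, \omega]$ $P^{X_1}$-almost surely. First, assume that $P(X_1 = \alpha) = 0$, i.e., α is not an atom of $X_1$. Since G as a distribution function is monotone non-decreasing and assumed to be left-continuous, we have $G(x) = c_1$ for all $x \in (\alpha, \omega]$. With the same arguments, we obtain for a general $n \in \mathbb{N}$

$$\operatorname{Cov}\big(\varphi(X_n), G(S_n)\big) = 0 \iff G(x) = c_n \text{ for } P^{S_n}\text{-almost all } x \in [n\alpha, n\omega]$$

for a constant $c_n \in [0, 1]$ by using Lemma 1. This implies $G(x) = c_n$ for all $x \in (n\alpha, n\omega]$, due to the monotonicity and left-continuity of the distribution function G. Furthermore, the sequence of constants needs to satisfy $c_n \le c_{n+1}$ for all $n \in \mathbb{N}$ (since G is monotone non-decreasing) and $\lim_{n\to\infty} c_n = 1$ (since $\lim_{x\to\infty} G(x) = 1$).

With Lemma 2, κ is the index from which on the supports overlap; since the constants on overlapping supports must coincide and $c_n \to 1$, we obtain $c_n = 1$ for all $n \ge \kappa$. If $\kappa = 1$, then $c_1 = 1$, and thus the distribution function G has to be of the form $G(x) = g_0(x)\mathbb{1}_{[0,\alpha]}(x) + \mathbb{1}_{(\alpha,\infty)}(x)$, $x \ge 0$. Otherwise, the distribution function G has to be of the form (7), where $0 \le c_1 \le \dots \le c_{\kappa-1} \le 1$ and $g_n$, $n \in \{0, \dots, \kappa - 1\}$, are functions such that $c_n \le g_n(x) \le c_{n+1}$ for all x in the respective domain and all n (with $c_0 = 0$ and $c_\kappa = 1$), and such that the $g_n$ are monotone non-decreasing and left-continuous.

If $P(X_1 = \alpha) > 0$, then necessarily $G(x) = c_n$ has to hold for all $x \in [n\alpha, n\omega]$, $n \in \mathbb{N}$. Thus, for G as in (7) to be left-continuous at the points $n\alpha$, the additional assumption $g_{n-1}(n\alpha) = c_n$, $n \in \{1, \dots, \kappa\}$, is needed.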

For the opposite implication, noticing that G as in (7) is constant on the support of all occurrence times and applying Lemma 1, we see that the covariances are all equal to zero. This leads to equality of the expected values. □

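We note that the constant κ introduced in Lemma 2 indicates the kind of support the interarrival times have. For example, $\kappa = 1$ corresponds to the case $\omega > 2\alpha$ (or $\omega = 2\alpha$ if α or ω is an atom of $X_1$), where ω may be infinite. In this case, the distribution of the random inspection time T can be chosen, e.g., as the degenerate distribution $P(T = 0) = 1$ or as the uniform distribution on $[0, \alpha]$, among others. On the other hand, ω must be finite for $\kappa \ge 2$.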

Remark 1.

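The case $P(X_1 = c) = 1$ for some $c > 0$ (which would correspond to $\alpha = \omega$ in Theorem 1) is excluded, because it is already well known from the literature (cf. Section 2) that $X_{N(t)+1} = c$ almost surely and that the expected values are always equal, i.e.,

$$E[\varphi(X_{N(t)+1})] = \varphi(c) = E[\varphi(X_1)], \quad t \ge 0.$$

The equality remains when introducing a random variable T. This can also be derived with the following argument: If $P(X_1 = c) = 1$, then $P(S_n = nc) = 1$ for all $n \in \mathbb{N}$ implies $\operatorname{Cov}(\varphi(X_n), G(S_n)) = 0$ for all $n \in \mathbb{N}$ and for any choice of the distribution function G, leading to equality for any φ.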

Theorem 1 can be applied to any interarrival distribution. In particular, only the left support endpoint and the right support endpoint of the interarrival times are of interest. Given a specific interarrival distribution and a distribution function G, Theorem 1 establishes whether or not the inspection paradox occurs.

Example 3.

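The case $\kappa = 2$ is a particular one (by Lemma 2, if neither α nor ω is an atom of $X_1$, it corresponds to $\frac{3}{2}\alpha < \omega \le 2\alpha$). According to Theorem 1, where α is supposed not to be an atom of $X_1$, i.e., $P(X_1 = \alpha) = 0$, the inspection paradox does not occur if G is given by

$$G(x) = \begin{cases} g_0(x), & 0 \le x \le \alpha, \\ c_1, & \alpha < x \le \omega, \\ g_1(x), & \omega < x \le 2\alpha, \\ 1, & x > 2\alpha, \end{cases}$$

with $c_1 \in [0, 1]$ and $g_0$, $g_1$ chosen such that G is left-continuous.

Here, T may, for instance, have a two-point distribution on $\{a, b\}$ with $a \in [0, \alpha]$ and $b \in [\omega, 2\alpha]$, or T may be uniformly distributed on the interval $[\omega, 2\alpha]$.

In the case of α being an atom of $X_1$, i.e., $P(X_1 = \alpha) > 0$, G is given by

$$G(x) = \begin{cases} g_0(x), & 0 \le x < \alpha, \\ c_1, & \alpha \le x \le \omega, \\ g_1(x), & \omega < x < 2\alpha, \\ 1, & x \ge 2\alpha, \end{cases}$$

and $\lim_{x \uparrow \alpha} g_0(x) = c_1$ as well as $\lim_{x \uparrow 2\alpha} g_1(x) = 1$ have to be fulfilled.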
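For concrete choices, the structure required in Example 3 (and, more generally, form (7) in the non-atom case) can be screened numerically. The sketch below evaluates a candidate left-continuous G on a fine grid; it is a heuristic sanity check rather than a proof, and the function name, grid size, horizon and the example laws of T (with $\alpha = 1$, $\omega = 1.8$, $\kappa = 2$) are assumptions made for illustration.

```python
def looks_like_form_7(G, alpha, omega, kappa, horizon=50.0, grid=2_000, tol=1e-12):
    """Grid check that G is constant on (n*alpha, n*omega] for n = 1, ..., kappa - 1
    and equal to 1 on (kappa*alpha, horizon]; assumes alpha is not an atom of X_1."""
    def points(a, b):
        # grid points in the half-open interval (a, b]
        return [a + (b - a) * (i + 1) / grid for i in range(grid)]

    for n in range(1, kappa):
        values = [G(x) for x in points(n * alpha, n * omega)]
        if max(values) - min(values) > tol:
            return False
    return all(abs(G(x) - 1.0) <= tol for x in points(kappa * alpha, horizon))


alpha, omega, kappa = 1.0, 1.8, 2

# Two-point T: P(T = 0.5) = 0.3, P(T = 1.9) = 0.7, so G(x) = P(T < x).
G_two_point = lambda x: 0.0 if x <= 0.5 else (0.3 if x <= 1.9 else 1.0)
# T uniform on [omega, 2 * alpha] = [1.8, 2].
G_gap = lambda x: min(max((x - 1.8) / 0.2, 0.0), 1.0)
# T uniform on [0, 5]: not of the required form.
G_spread = lambda x: min(max(x / 5.0, 0.0), 1.0)

print(looks_like_form_7(G_two_point, alpha, omega, kappa))  # True
print(looks_like_form_7(G_gap, alpha, omega, kappa))        # True
print(looks_like_form_7(G_spread, alpha, omega, kappa))     # False
```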

Furthermore, the following example, derived from the results of Theorem 1, shows that, in the degenerate time case with $P(T = t) = 1$, equality in (1) or (2) can also hold for non-degenerate interarrival times. Nevertheless, this situation is of little practical relevance, since the inspection time coincides with the lower bound of the support of $S_2$.

Example 4.

For absolutely continuous interarrival times with left endpoint $\alpha > 0$ (i.e., α is not an atom of $X_1$) and right endpoint $\omega = 2\alpha$, which corresponds to the case $\kappa = 2$, we have equality for any φ satisfying the assumptions of Theorem 1, since $G(x) = \mathbb{1}_{(2\alpha,\infty)}(x)$, $x \ge 0$, is of the form (7) with $c_1 = 0$.

Therefore, choosing $\varphi(x) = \mathbb{1}_{(z,\infty)}(x)$ for $z \in [\alpha, \omega)$ and $P(T = 2\alpha) = 1$ in Theorem 1, we obtain an example for which

$$P(X_{N(2\alpha)+1} > z) = P(X_1 > z)$$

holds even though the interarrival times have a distribution other than the degenerate distribution. Consequently, requiring equality for a single z and t is not sufficient in general to derive a characterization of the distribution of the interarrival times. This case will be considered in detail in Section 5.
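Example 4 can be reproduced with the hypothetical choice $X_i \sim U[1, 2]$ (so $\alpha = 1$ and $\omega = 2\alpha = 2$). At the deterministic time $t = 2\alpha = 2$, the covering interval is $X_2$ almost surely, so its mean is $E[X_1] = 1.5$; at $t = 2.5$, which lies inside the support of $S_2$, the paradox reappears, and a short calculation gives $E[X_{N(2.5)+1}] = 37/24 \approx 1.542$.

```python
import random


def covering_length(t, sample_x):
    """Length X_{N(t)+1} of the interarrival interval that contains time t."""
    s = 0.0
    while True:
        x = sample_x()
        if s + x > t:
            return x
        s += x


rng = random.Random(4)
draw_x = lambda: rng.uniform(1.0, 2.0)     # alpha = 1, omega = 2, E[X_1] = 1.5
m = 100_000

mean_at_2 = sum(covering_length(2.0, draw_x) for _ in range(m)) / m
mean_at_2_5 = sum(covering_length(2.5, draw_x) for _ in range(m)) / m
print(mean_at_2)     # close to 1.5
print(mean_at_2_5)   # close to 37/24
```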

The case $\alpha = 0$, which was not included in Theorem 1, is studied separately in the following theorem.

Theorem 2.

Let the non-degenerate interarrival times have left support endpoint $\alpha = 0$, where $P(X_1 = 0) = 0$. Let $\varphi: [0, \infty) \to \mathbb{R}$ be a measurable, monotone non-decreasing function such that all expected values and integrals are well-defined and exist finitely. Furthermore, assume that φ is not constant on the support of $X_1$ $P^{X_1}$-almost surely.

Let T be a non-negative random variable with a left-continuous distribution function G that is independent of the interarrival times $X_1, X_2, \dots$.

Then, $E[\varphi(X_{N(T)+1})] = E[\varphi(X_1)]$ holds if and only if T has a degenerate distribution in 0, i.e., $P(T = 0) = 1$.

Proof.

Analogously to the proof of Theorem 1, $G(x) = c_n$ must hold for all $x \in (0, n\omega)$ and for all $n \in \mathbb{N}$, wherefore we obtain $G(x) = 1$ for all $x > 0$. Thus, G is the distribution function of the degenerate distribution in 0. The opposite direction follows directly from an application of Lemma 1. □

Remark 2.

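If $P(X_1 = 0) > 0$ in Theorem 2 and $G = \mathbb{1}_{(0,\infty)}$ is the left-continuous version of the distribution function of the degenerate distribution in 0, then the first covariance is positive and an inspection paradox occurs. This is the case as G is not constant $P^{X_1}$-almost surely on the support of the interarrival times.

In the above situation with $\varphi(x) = x$, $x \ge 0$, and $p = P(X_1 = 0) \in (0, 1)$, we find

$$E[X_{N(0)+1}] = E[X_1] + \sum_{n=1}^{\infty} E[X_1]\, p^n = \frac{E[X_1]}{1 - p},$$

and thus $E[X_{N(0)+1}] > E[X_1]$.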
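The formula in Remark 2 can be checked by simulation. As a hypothetical example, let $X_1 = 0$ with probability $p = 0.4$ and otherwise exponential with mean 1, so that $E[X_1] = 0.6$ and $E[X_1]/(1 - p) = 1$; with $T = 0$, the covering interval is the first strictly positive interarrival time.

```python
import random

rng = random.Random(9)
p = 0.4                                     # P(X_1 = 0), hypothetical


def draw_x():
    """X_1 = 0 with probability p, otherwise exponential with mean 1."""
    return 0.0 if rng.random() < p else rng.expovariate(1.0)


def covering_length(t):
    """Length X_{N(t)+1} of the interarrival interval that contains time t."""
    s = 0.0
    while True:
        x = draw_x()
        if s + x > t:
            return x
        s += x


m = 100_000
mean_regular = sum(draw_x() for _ in range(m)) / m        # estimates E[X_1] = 0.6
mean_inspected = sum(covering_length(0.0) for _ in range(m)) / m
print(mean_regular, mean_inspected, 0.6 / (1 - p))
```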

4.2. Equality of the Survival Functions

Choosing $\varphi(x) = \mathbb{1}_{(z,\infty)}(x)$, $x \ge 0$, with $z \ge 0$ such that $0 < F(z) < 1$, in Theorem 1 yields distributions with equality in the inspection paradox, in the sense that $P(X_{N(T)+1} > z) = P(X_1 > z)$. In general, a random inspection time T with a distribution function of the particular form (7) is sufficient for equality in the inspection paradox. More precisely, the inspection paradox does not appear in this case, and $X_{N(T)+1}$ and $X_1$ are identically distributed, as stated in the following corollary.

Corollary 1.

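Let the interarrival times have left support endpoint $\alpha > 0$ and right support endpoint $\omega \in (\alpha, \infty)$. If T has a distribution function of the form (7), then

$$P(X_{N(T)+1} > z) = P(X_1 > z), \quad z \ge 0,$$

i.e., $X_{N(T)+1} \overset{d}{=} X_1$.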

Proof.

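Since the G given in (7) is constant on the supports of $X_1$ and of $S_n$ for all $n \ge 2$, applying Lemma 1 yields $\operatorname{Cov}(\mathbb{1}_{(z,\infty)}(X_n), G(S_n)) = 0$ for all $n \in \mathbb{N}$ and for all $z \ge 0$, as in the proof of Theorem 1. Therefore, with

$$P(X_{N(T)+1} > z) = P(X_1 > z) + \sum_{n=1}^{\infty} \operatorname{Cov}\big(\mathbb{1}_{(z,\infty)}(X_n), G(S_n)\big) = P(X_1 > z), \quad z \ge 0,$$

$X_{N(T)+1}$ and $X_1$ are identically distributed. □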

In the characterization of Theorem 1, the function φ is assumed to be non-constant on $[\alpha, \omega]$ $P^{X_1}$-almost surely. Therefore, for the function $\mathbb{1}_{(z,\infty)}$ to take both values 0 and 1 on the support of $X_1$ with positive probability, we require $0 < F(z) < 1$.

Corollary 2.

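Let the interarrival times have left support endpoint $\alpha > 0$, right support endpoint $\omega \in (\alpha, \infty)$, and let κ be defined as in Lemma 2. Let T be a non-negative random variable with left-continuous distribution function G that is independent of the interarrival times $X_1, X_2, \dots$. If there is a $z \ge 0$ such that $\mathbb{1}_{(z,\infty)}$ takes both values 0 and 1 on $[\alpha, \omega]$ with positive probability and

$$P(X_{N(T)+1} > z) = P(X_1 > z),$$

then G is of the form (7).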

Proof.

This follows from Theorem 1 for the choice $\varphi(x) = \mathbb{1}_{(z,\infty)}(x)$, $x \ge 0$. □

Corollary 2 shows that equality for an appropriate value of z suffices to determine the general form of the distribution function G (on finite intervals). Thus, if the survival functions of $X_{N(T)+1}$ and $X_1$ coincide at such a z, then G is of the form (7), and Corollary 1 yields $X_{N(T)+1} \overset{d}{=} X_1$.

Remark 3.

The inspection paradox is also discussed in terms of expected values, as we have seen in Section 2 and Section 3. With the choice $\varphi(x) = x$, $x \ge 0$, it follows under the assumptions of Theorem 1 that equality of the expected values, $E[X_{N(T)+1}] = E[X_1]$, holds if and only if G is of the form (7).

Similarly, equality of the moments, i.e., $E[X_{N(T)+1}^k] = E[X_1^k]$ for some $k \in \mathbb{N}$, also characterizes the distribution of T in the sense of (7). This can be obtained from Theorem 1 via the choice $\varphi(x) = x^k$, $x \ge 0$, $k \in \mathbb{N}$.

5. Equality in the Degenerate Time Case

As discussed in Section 2 and in Remark 1, no paradoxical effect appears for the inspection interval length given degenerate interarrival times, regardless of whether the inspection time is random or deterministic. On the other hand, degenerate interarrival times are not the only example with this property (cf. Example 4). In this section, we further study equality in the inspection paradox by means of a fixed sequence of inspection times.

The following Theorem 3 states that having such a sequence of fixed times for which equality holds is sufficient for the interarrival times to have a degenerate distribution.

Theorem 3.

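Let the interarrival times have a distribution function F with $F(0) < 1$. Let $(t_k)_{k \in \mathbb{N}}$ be a sequence of monotone increasing times ($t_1 \ge 0$, $t_k < t_{k+1}$, $k \in \mathbb{N}$) with $\lim_{k\to\infty} t_k = \infty$, such that

$$E[X_{N(t_k)+1}] = E[X_1], \quad k \in \mathbb{N}.$$

Then, $X_1 = c$ almost surely for some $c > 0$, i.e., $P(X_i = c) = 1$, $i \in \mathbb{N}$.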

Proof.

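We assume that the interarrival times have left support endpoint $\alpha \ge 0$ and right support endpoint $\omega > \alpha$, and define $I = [\alpha, \omega]$ if $\omega < \infty$ and $I = [\alpha, \infty)$ if $\omega = \infty$. Thus, it is assumed that the support contains at least two values, and this is shown to lead to a contradiction in the following. Due to Representation (6) with $G = \mathbb{1}_{(t_k,\infty)}$, equality holds if and only if

$$\operatorname{Cov}\big(X_n, \mathbb{1}_{(t_k,\infty)}(S_n)\big) = 0 \quad \text{for all } n, k \in \mathbb{N}. \tag{8}$$

In the case $n = 1$, an application of Lemma 1 yields

$$\operatorname{Cov}\big(X_1, \mathbb{1}_{(t_k,\infty)}(X_1)\big) = 0 \iff F(t_k) \in \{0, 1\}, \quad k \in \mathbb{N}.$$

From (8) and $t_k \to \infty$, we obtain that $X_1$ necessarily has to lie in a bounded interval, i.e., $\omega < \infty$. Let $n \ge 2$ and $k \in \mathbb{N}$. As in the proof of Theorem 1, this case leads to

$$\operatorname{Cov}\big(X_n, \mathbb{1}_{(t_k,\infty)}(S_n)\big) = 0 \iff P(S_n \le t_k) \in \{0, 1\}.$$

Due to Lemma 2 (with $\kappa = 1$ if $\alpha = 0$), there exists a $\kappa \in \mathbb{N}$ such that $(n+1)\alpha \le n\omega$ for all $n \ge \kappa$. This implies

$$\bigcup_{n \ge \kappa} [n\alpha, n\omega] = [\kappa\alpha, \infty),$$

i.e., the supports of $S_\kappa, S_{\kappa+1}, \dots$ cover all real numbers greater than or equal to $\kappa\alpha$. Since the unbounded sequence $(t_k)_{k \in \mathbb{N}}$ consists of monotone increasing real numbers, there exists a smallest $k_0 \in \mathbb{N}$ with $t_{k_0} \ge (\kappa + 1)\alpha$ and an $m \ge \kappa + 1$ with $t_{k_0} \in [m\alpha, m\omega]$; we write $t = t_{k_0}$.

If $t \in (m\alpha, m\omega)$, then the indicator function

$$(s, x) \mapsto \mathbb{1}_{(t,\infty)}(s + x)$$

has a jump and is not constant for $P^{S_{m-1}}$-almost all s and $P^{X_m}$-almost all x. Concerning the occurrence time $S_m = S_{m-1} + X_m$, we obtain $\operatorname{Cov}(X_m, \mathbb{1}_{(t,\infty)}(S_m)) > 0$, which is in contradiction to the assumed equality.

If $t = m\alpha$, then t lies in the preceding interval $[(m-1)\alpha, (m-1)\omega]$ with $m - 1 \ge \kappa$, since this interval overlaps with the interval $[m\alpha, m\omega]$ due to $m - 1 \ge \kappa$. Again, the indicator function is not constant for $P^{S_{m-2}}$-almost all s and $P^{X_{m-1}}$-almost all x. In consequence, $\operatorname{Cov}(X_{m-1}, \mathbb{1}_{(t,\infty)}(S_{m-1})) > 0$ is a contradiction to the equality.

If $t = m\omega$, then t lies in the subsequent interval $[(m+1)\alpha, (m+1)\omega]$, which implies $\operatorname{Cov}(X_{m+1}, \mathbb{1}_{(t,\infty)}(S_{m+1})) > 0$, leading to a contradiction.

In conclusion, $\alpha = \omega$ must hold, i.e., the support of $X_1$ consists of a single point, and there exists a $c = \alpha$ with $X_1 = c$ almost surely and $P(X_i = c) = 1$, $i \in \mathbb{N}$. The constant c has to be positive due to $F(0) < 1$. □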

Note that having only a finite number of times $t_1 < \dots < t_K$ with equality in Theorem 3 does not suffice to conclude that the interarrival times have a degenerate distribution. If there were a largest time $t_K$, then $X_1$ could have a support lying to the right of $t_K$ and consisting of more than one point, and we would still have equality.
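The remark on finitely many inspection times can be illustrated with a hypothetical interarrival law $X_i \sim U[5, 6]$: for the finitely many times $t \in \{1, 2, 3, 4\}$, the inspection happens before $S_1$, and the mean covering length equals $E[X_1] = 5.5$, whereas at $t = 5.5$, inside the support of $X_1$, the mean is $E[X_1; X_1 > 5.5] + 5.5\,P(X_1 \le 5.5) = 2.875 + 2.75 = 5.625$.

```python
import random


def covering_length(t, sample_x):
    """Length X_{N(t)+1} of the interarrival interval that contains time t."""
    s = 0.0
    while True:
        x = sample_x()
        if s + x > t:
            return x
        s += x


rng = random.Random(13)
draw_x = lambda: rng.uniform(5.0, 6.0)      # E[X_1] = 5.5
m = 50_000

means = {t: sum(covering_length(t, draw_x) for _ in range(m)) / m
         for t in (1.0, 2.0, 3.0, 4.0, 5.5)}
for t, value in means.items():
    print(t, round(value, 3))    # about 5.5 for t <= 4, about 5.625 for t = 5.5
```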

The same arguments as in Theorem 3 lead to an analogous result in case of the classical inspection paradox inequality for a sequence of time points. In a similar way to the main result in Section 4, Theorem 3 and the following Corollary 3 can be combined by using a function that is assumed to be not constant almost surely on the support of the interarrival times.

Corollary 3.

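Let the interarrival times have the distribution function F with $F(0) < 1$. Let $(t_k)_{k \in \mathbb{N}}$ be a sequence of monotone increasing times ($t_1 \ge 0$, $t_k < t_{k+1}$, $k \in \mathbb{N}$) with $\lim_{k\to\infty} t_k = \infty$. Let $z \ge 0$ be such that $\mathbb{1}_{(z,\infty)}$ takes the values 0 and 1 on the support of $X_1$ with positive probability and

$$P(X_{N(t_k)+1} > z) = P(X_1 > z), \quad k \in \mathbb{N}.$$

Then, $X_1 = c$ almost surely for some $c > 0$, i.e., $P(X_i = c) = 1$, $i \in \mathbb{N}$.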

We note that in both Theorem 3 and Corollary 3, we did not assume that any particular point lies in the support of an occurrence time, as this assumption alone does not suffice (in Example 4, the time t lies in the support of $S_2$). In order to infer that the interarrival times have a degenerate distribution based on only one time point t, it is necessary to assume that t lies in the support of an occurrence time whose support overlaps with the supports of both the preceding and the succeeding occurrence time. This is formally stated in Corollary 4.

Corollary 4.

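Let the interarrival times have a distribution function F with $F(0) < 1$. Assume that there exists a $k \in \mathbb{N}$, $k \ge 2$, such that the support of $S_k$ overlaps with the supports of both $S_{k-1}$ and $S_{k+1}$, and a $t \ge 0$ that lies in the support of $S_k$. Then,

$$E[X_{N(t)+1}] = E[X_1]$$

implies $X_1 = t/k$ almost surely, i.e., $P(X_i = t/k) = 1$, $i \in \mathbb{N}$.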

Proof.

Due to the equality of the expected values and to Representation (6), the covariances $\operatorname{Cov}(X_n, \mathbb{1}_{(t,\infty)}(S_n))$ must be equal to zero for all $n \in \mathbb{N}$. Assume that the support of $X_1$ contains at least two different points; then $\alpha < \omega$ and $k - 1 \ge \kappa$ hold due to Lemma 2. As in the proof of Theorem 3, we can infer that t lies in the interior of the support of $S_{k-1}$, of $S_k$, or of $S_{k+1}$, which leads to a contradiction. Thus, $\alpha = \omega$, and $t \in [k\alpha, k\omega]$ implies $P(S_k = t) = 1$, i.e., $S_k$ has a degenerate distribution in t. Since $S_k$ is the sum of k iid interarrival times, we then have $X_1 = t/k$ almost surely. □

6. Conclusions

By considering a random inspection time in a renewal counting process, interesting effects come into play, and new insights are gained regarding the distribution of the random time. With respect to the well-known inspection paradox, non-trivial choices of this distribution and of the distribution of the interarrival times lead to non-occurrence of the paradox, in contrast to the situation for a deterministic time. In the general case, a complete characterization of the (non-)occurrence of the inspection paradox with respect to G is given.

Author Contributions

Conceptualization, D.R. and U.K.; methodology, D.R.; validation, D.R. and U.K.; formal analysis, D.R.; investigation, D.R.; writing—original draft preparation, D.R.; writing—review and editing, D.R. and U.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| iid | independent and identically distributed |
| $P$-a.s. | $P$-almost surely |

References

- Feller, W. An Introduction to Probability Theory and Its Applications, 2nd ed.; John Wiley & Sons Inc.: New York, NY, USA, 1971; Volume II.

- Masuda, N.; Porter, M.A. The waiting-time paradox. Front. Young Minds Math. 2021, 8, 582433.

- Ross, S.M. Introduction to Probability Models, 10th ed.; Academic Press: London, UK, 2010.

- Stein, W.E.; Dattero, R. Sampling bias and the inspection paradox. Math. Mag. 1985, 58, 96–99.

- Pal, A.; Kostinski, S.; Reuveni, S. The inspection paradox in stochastic resetting. J. Phys. A Math. Theor. 2022, 55, 021001.

- Jenkins, E.V.; Dharmaprani, D.; Schopp, M.; Quah, J.X.; Tiver, K.; Mitchell, L.; Xiong, F.; Aguilar, M.; Pope, K.; Akar, F.G.; et al. The inspection paradox: An important consideration in the evaluation of rotor lifetimes in cardiac fibrillation. Front. Physiol. 2022, 13, 920788.

- Gakis, K.G.; Sivazlian, B.D. A generalization of the inspection paradox in an ordinary renewal process. Stoch. Anal. Appl. 1993, 11, 43–48.

- Angus, J.E. The inspection paradox inequality. SIAM Rev. Soc. Ind. Appl. Math. 1997, 39, 95–97.

- Ross, S.M. The inspection paradox. Probab. Eng. Inf. Sci. 2003, 17, 47–51.

- Kamps, U. Inspection paradox. In Encyclopedia of Statistical Sciences, 3(Update); Kotz, S., Read, C.B., Banks, D.L., Eds.; John Wiley & Sons Inc.: New York, NY, USA, 1999; pp. 364–366.

- Herff, W.; Jochems, B.; Kamps, U. The inspection paradox with random time. Stat. Pap. 1997, 38, 103–110.

- Rauwolf, D.; Kamps, U. Quantifying the inspection paradox with random time. Am. Stat. 2023, 77, 274–282.

- Liu, P.; Peña, E.A. Sojourning with the homogeneous Poisson process. Am. Stat. 2016, 70, 413–423.

- Cox, D.R. Renewal Theory; Methuen & Co.: London, UK, 1962.

- Pinsky, M.A.; Karlin, S. An Introduction to Stochastic Modeling, 4th ed.; Academic Press: Burlington, VT, USA, 2011.

- Mitov, K.V.; Omey, E. Renewal Processes; Springer: Cham, Switzerland, 2014.

- Kulkarni, V.G. Modeling and Analysis of Stochastic Systems, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2017.

- Behboodian, J. Covariance inequality and its applications. Int. J. Math. Educ. Sci. Technol. 1994, 25, 643–647.

- Rauwolf, D. Renewal Processes with Random Time. Ph.D. Thesis, RWTH Aachen University, Aachen, Germany, 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).