Abstract

The integration of IT services is a critical challenge for public organizations that seek to modernize their operational ecosystems and strengthen mission-oriented processes. In the field of fiscal oversight, supreme audit institutions (SAIs) increasingly require systematized and interoperable service architectures to ensure transparency, accountability, and effective control of public resources. However, the existing literature reveals persistent gaps in how service integration models can be deployed and validated within complex government environments. This study describes an enterprise architecture-driven service integration model designed and evaluated within the Office of the General Comptroller of the Republic of Colombia (Contraloría General de la República, CGR). The study tests the hypothesis that an Enterprise Architecture-driven integration model provides the structural coupling needed to align technical IT performance with the legal requirements of fiscal oversight, an alignment that generic governance frameworks typically do not provide. The methodological approach combines an IT service management maturity assessment, process analysis, an architecture repository review, and iterative validation sessions with institutional stakeholders. The model integrates ITILv4 (Information Technology Infrastructure Library), TOGAF (The Open Group Architecture Framework), COBIT (Control Objectives for Information and Related Technologies), and ISO/IEC 20000 into a coherent framework tailored to the operational and regulatory requirements of an SAI. Results show that the proposed model reduces service fragmentation, improves process standardization, strengthens information governance, and enables a unified service catalog aligned with fiscal oversight functions. The empirical validation demonstrates measurable improvements in service delivery, transparency, and organizational responsiveness.
The study contributes to the field of applied system innovation by (i) providing a scientifically grounded, evidence-based integration model; (ii) demonstrating how hybrid governance and architecture frameworks can be adapted to complex public-sector environments; and (iii) offering a replicable approach for SAIs that seek to modernize their technological service ecosystems through enterprise architecture principles. Future research directions are also discussed to guide further advances in integrated governance and digital transformation in oversight institutions.

1. Introduction

1.1. Context and Institutional Motivation

Digital transformation has become a strategic priority for public administrations worldwide, particularly for institutions responsible for guaranteeing accountability, transparency, and the effective use of public resources. Supreme Audit Institutions (SAIs) increasingly depend on integrated, data-driven technological ecosystems to execute fiscal oversight functions within complex administrative and territorial environments [1,2,3]. While these ecosystems manage critical data, their lack of interoperability poses a direct risk to fiscal accountability: when mission-critical information is isolated, a unified view of public spending cannot be achieved, which increases the latency between the occurrence of fiscal irregularities and their detection by oversight mechanisms.

Like other SAIs, the Office of the General Comptroller of the Republic of Colombia (Contraloría General de la República, CGR) relies heavily on information and communication technologies to support its auditing, monitoring, and fiscal responsibility processes [4].

Despite this dependence, heterogeneous platforms, fragmented service provision, and limited interoperability remain persistent challenges for public-sector organizations [5]. Before this study, several mission-critical systems at CGR, such as the financial auditing system, the internal control platform, and the citizen complaints system, operated in isolation. This isolation resulted in duplicated processes, data silos, inconsistent service levels, and limited traceability. Other shortcomings associated with it included incomplete service catalogs, the absence of explicitly defined service levels, outdated infrastructure, and a lack of unified monitoring and capacity-management mechanisms.

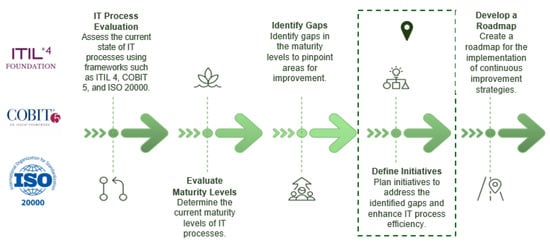

To address these issues, CGR launched the R&D&I project CGR-407-2024, aimed at designing and developing an integrated service-management model grounded in internationally recognized governance and architecture frameworks, specifically ITILv4 [6], TOGAF [7], COBIT [8], and ISO/IEC 20000 [9]. The project aligns with the CGR Strategic Plan 2022–2026 [10] and the institutional IT Strategic Plan (PETI) 2022–2026 [11]. Both plans emphasize interoperability, citizen-centered value creation, and technological modernization. Within this strategic context, CGR defined objectives focused on (i) consolidating an integrated IT service-management model; (ii) strengthening information security and business continuity; (iii) improving reliability and operational efficiency (e.g., quantitative targets for service availability and for mean time to repair, MTTR, expressed in hours); and (iv) promoting innovation and knowledge management across technical and oversight units.

However, designing an integration model that operationalizes the artifacts of TOGAF, ITILv4, and COBIT within a national fiscal oversight institution is a non-trivial task. These frameworks originate in corporate environments and require contextual reinterpretation to align with the demands of public value, legal compliance, transparency, and accountability. The challenge associated with this alignment motivates the scientific contribution of this research.

1.2. Related Work and Research Gap

Digital transformation has been widely recognized as a catalyst for improving the value, efficiency, and citizen participation of public services [12,13,14]. Prior works show that digital transformation leads to administrative restructuring [15], process redesign [16,17], greater proximity to citizens [3,18], and enhanced governance maturity [19,20]. European studies highlight that digital modernization correlates with improved governance indicators and reduced corruption [21,22]. Nevertheless, the limited public availability of structured data often restricts the empirical assessment of these transformations and their transfer to different contexts.

Limited availability of IT services can interrupt the real-time monitoring of public contracts, leaving gaps in the institutional oversight chain. Similarly, the lack of standardized practices not only affects efficiency but also compromises the integrity and legal validity of digital audit evidence, because inconsistent procedures can be challenged during the judicial stages of fiscal responsibility processes.

Recent research has explored how digital transformation enhances public-sector performance. However, these studies tend to conceptualize transformation at a macro-institutional level without offering operational integration models. For example, Yang et al. [23] develop a production network model to demonstrate how digital transformation improves governmental efficiency, emphasizing the importance of coordinated digital initiatives across departments. Similarly, Shibambu and Ngoepe [24] analyze digital transformation in South Africa and find that limited legislation, weak strategies, and inconsistent implementation constrain improvements in public service delivery.

Although these studies highlight structural obstacles and the need for coordinated digital strategies, they do not propose comprehensive governance or architectural models to achieve such coordination. In contrast, our work moves beyond diagnosing systemic deficiencies and introduces a structured service integration model that bridges strategy, governance, enterprise architecture, and IT service management through the combined application of TOGAF, ITILv4, and COBIT. This approach provides an actionable pathway to address fragmentation, ensuring both conceptual and operational alignment between digital transformation and institutional performance.

More specialized approaches focus on architecture-driven service improvement, although they remain scoped to citizen-centric or municipal contexts rather than fiscal oversight institutions. Singh et al. [25] developed a TOGAF-based process model that incorporates citizen feedback into local public service improvement, highlighting capacity, risk, and adoption challenges at the practitioner level. Likewise, Waara [26] evaluates 18 digital government maturity models and concludes that most fail to integrate citizen-centric considerations into their structure, which reveals gaps in frameworks intended to guide comprehensive transformation. Compared with these contributions, our work extends architectural and maturity-oriented perspectives to a more complex institutional environment: a supreme audit institution (CGR). The model presented in this paper reinterprets Enterprise Architecture (EA), IT service management, and governance frameworks within a context where transparency, traceability, accountability, and information integrity are mission critical. Rather than focusing exclusively on citizen-service feedback or maturity assessment, this study articulates a multi-framework, empirically validated integration model capable of supporting mission-critical oversight processes. The result is a transferable model for public institutions that exhibit high regulatory and operational complexity.

Other studies emphasize the socio-organizational dimension of digital transformation, showing that cultural adaptation and institutional adoption are key success factors [27]. The methodologies described in these studies offer relevant insights for evaluating service integration models, although they remain underused in oversight institutions.

Despite these contributions, the literature reveals a clear research gap in the integration of IT governance, enterprise architecture, and service-management frameworks within SAIs. Although TOGAF, COBIT, and ITILv4 have each been applied individually in public-sector modernization, prior research does not examine their combined, context-aware application in organizations responsible for fiscal control, where traceability, legal compliance, and operational accountability impose unique constraints [28,29].

Existing studies lack validated models that:

- Strengthen IT service integration within oversight bodies.

- Incorporate baseline maturity assessments and measurable governance improvements.

- Demonstrate how technological integration supports fiscal supervision and public-value creation.

Moreover, few works characterize the maturity conditions or performance targets needed to evaluate transformation outcomes in multi-level, heterogeneous administrative environments such as CGR. This gap underscores the need for a structured, evidence-based integration model tailored to the mission-critical demands of fiscal oversight.

While general EA frameworks such as TOGAF provide structural blueprints, they lack the legal-technical coupling required by the specific evidential standards of supreme audit institutions. Existing models do not address the “semantic gap” between IT service performance and the legal validity of fiscal data. Our study addresses this theoretical deficiency by introducing a service integration model for SAIs that treats “legal traceability” as a core architectural constraint, moving beyond operational efficiency toward the preservation of public value through digital governance.

1.3. Aim and Contribution

The aim of this research is to present the design and conceptual validation of a service integration model, driven by EA principles, that improves IT governance, service reliability, and public-value generation at CGR. The model is based on the integrated application of TOGAF, ITILv4, and COBIT and is aligned with the institution's strategic digital transformation goals [30].

Unlike traditional EA applications, which prioritize commercial agility or profit-driven alignment, this model places regulatory compliance and the chain of custody for fiscal data at its core. It turns standard architectural phases into a structured mechanism for ensuring that every IT service component directly supports the legal validity of audit findings, a requirement unique to oversight contexts.

This study makes three primary scientific contributions:

- A novel, context-aware integration model: We present a model that adapts ITILv4, TOGAF, and COBIT to the mission, regulatory constraints, and oversight functions of an SAI, a contribution absent from the current literature.

- An empirically grounded architecture: The model is derived from diagnostic assessments, stakeholder engagement, maturity analysis, and iterative validation within CGR, ensuring relevance and applicability.

- A replicable framework for SAIs: The proposed model provides conceptual and procedural foundations that can be adopted by other oversight institutions seeking to modernize their technological service ecosystems.

Rather than asking “how” to integrate services, this study tests the proposition that standard IT governance (e.g., COBIT/ITIL) is insufficient for SAIs unless mediated by a service layer driven by EA principles that translates operational metrics into “public value” metrics specific to audit processes. Our hypothesis is that this architectural mediation is the critical factor for overcoming the fragmentation that currently characterizes oversight systems in the public sector.

Because deployment is ongoing, this paper focuses on the design and structuring phases; nevertheless, it establishes the baseline for future empirical evaluation of efficiency, interoperability, and governance impacts.

This study offers a replicable framework for other SAIs. This contribution is grounded in institutional theory, specifically in the need to adopt standardized, professional practices that enhance the institutional legitimacy of oversight bodies. By providing a validated roadmap, the model seeks to help similar public organizations navigate the pressures of digital transformation while maintaining the rigorous governance structures required by their legal mandates.

Finally, the novelty of this study does not lie in simply aggregating TOGAF, ITIL, and COBIT, but in formalizing a “legal–technical interface”. While pre-existing frameworks address value delivery and risk management in a commercial sense, they are fundamentally silent on the principles of evidential weight and constitutional independence, which are crucial to SAIs. The model presented in this study generates a new conceptual artifact by “re-coding” ITIL service actions into “audit-ready” processes, in which every technical state change is mapped to a legal validity requirement. This synthesis creates a Generative Governance Model that offers a new theoretical lens for studying IT in SAIs.
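To make the idea of mapping every technical state change to a legal validity requirement more concrete, the following Python sketch records service state changes in an append-only, hash-chained log. Hash chaining (SHA-256 over each entry together with the previous entry's hash) is our illustrative choice for preserving a chain of custody, not a mechanism described for the CGR model; the class `AuditReadyLog`, the service name, and the requirement identifiers are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, str]) -> str:
    # Canonical JSON (sorted keys) so identical content always yields the same hash
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditReadyLog:
    """Hypothetical append-only log linking technical state changes to legal requirements."""
    entries: List[Dict[str, str]] = field(default_factory=list)

    def record(self, service: str, change: str, legal_requirement: str) -> Dict[str, str]:
        # Reject any state change that cannot be tied to a legal validity requirement
        if not legal_requirement:
            raise ValueError("state change must map to a legal validity requirement")
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"service": service, "change": change,
                "legal_requirement": legal_requirement, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check that each entry points at its predecessor
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditReadyLog()
log.record("financial-auditing-system", "database patch applied", "REQ-EVIDENCE-INTEGRITY")
log.record("financial-auditing-system", "access role revoked", "REQ-ACCESS-CONTROL")
print(log.verify())  # True
log.entries[0]["change"] = "edited after the fact"
print(log.verify())  # False
```

Any retroactive edit to an earlier entry breaks the chain, so `verify()` returns `False`; the sketch deliberately omits digital signatures, trusted timestamps, and storage concerns.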

2. Background

The core mission of CGR revolves around fiscal oversight and public resource control, functions that increasingly depend on effective and scalable technological support. In response to the growing complexity of service delivery and the demand for transparency and efficiency, CGR is developing an integrated service model that aligns its IT capabilities with its institutional objectives.

To support this initiative, CGR strategically adopted internationally recognized frameworks as the foundation for the development of its integration model. COBIT provides the structure for IT governance, ITIL and ISO/IEC 20000 [9] offer best practices for service management, and TOGAF guides the development of enterprise architecture. The convergence of these frameworks within the integration model enables CGR to optimize service delivery, standardize processes, and ensure that IT services are consistent with the mission, vision, and strategic transformation goals of the organization.

This foundational alignment has been critical in shaping a model that is both technically robust and adaptable to evolving public-sector demands. It ensures that technological services act as enablers of institutional value and strengthens CGR's commitment to innovation, accountability, and digital transformation.

2.1. TOGAF Framework

TOGAF is a framework for structuring a high-level architecture that aligns IT services across an organization. It offers an integral view of the current (baseline) architecture and facilitates the planning and implementation of a target architecture, whose goal is to maximize effectiveness and efficiency in the service delivery layer.

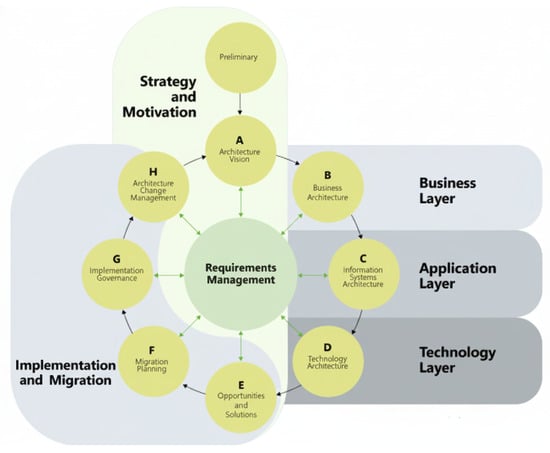

The core architectural layers, shown in Figure 1, provide the structural hierarchy for the digital assets of SAIs. The business layer defines strategic objectives. The information systems layer, which is further divided into data and applications, supports business processes. Finally, the technology layer specifies the required infrastructure.

Figure 1.

TOGAF core layers [7]. This hierarchy ensures that technological investments are subordinated to the data integrity requirements and business objectives of fiscal oversight.

TOGAF defines the Architecture Development Method (ADM), which guides every stage of development, from the definition of the architecture vision to implementation and change management. The ADM supports the alignment of business and institutional objectives and promotes interoperability.

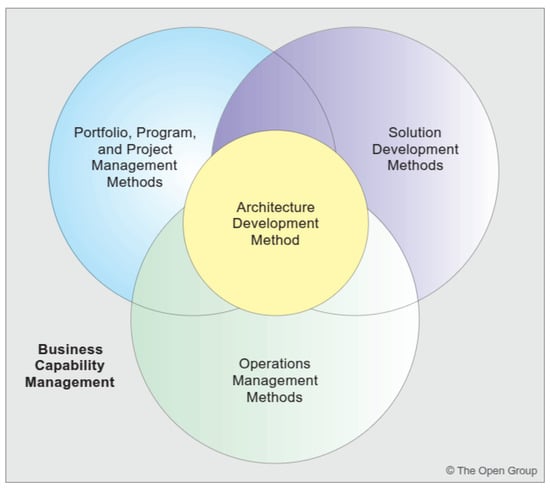

Rather than following a standard implementation, the model presented in this study tailored the TOGAF ADM to CGR by prioritizing the Preliminary Phase to ensure alignment with the institutional PETI 2022–2026. Phase B (Business Architecture) was specifically modified to model the service catalog not as a set of commercial offerings but as legal-technical support functions for auditors. In addition, the TOGAF Enterprise Continuum was used to classify existing legacy audit systems as foundation architectures from which the presented service integration model evolved. Figure 2 describes how different management frameworks can be integrated with an enterprise architecture defined in TOGAF.
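The tailored ADM cycle can be sketched as an ordered sequence of phases annotated with the CGR-specific adaptations described above. The phase names follow the TOGAF ADM; running the Preliminary Phase once before iterating Phases A to H, and leaving out the central Requirements Management process, are simplifications of ours, and `adm_cycle` and `CGR_TAILORING` are hypothetical names.

```python
from enum import Enum
from typing import Iterator, Tuple


class AdmPhase(Enum):
    PRELIMINARY = "Preliminary"
    PHASE_A = "Architecture Vision"
    PHASE_B = "Business Architecture"
    PHASE_C = "Information Systems Architectures"
    PHASE_D = "Technology Architecture"
    PHASE_E = "Opportunities and Solutions"
    PHASE_F = "Migration Planning"
    PHASE_G = "Implementation Governance"
    PHASE_H = "Architecture Change Management"


# CGR-specific adaptations as described in the text; other phases follow standard guidance
CGR_TAILORING = {
    AdmPhase.PRELIMINARY: "Prioritized for alignment with PETI 2022-2026",
    AdmPhase.PHASE_B: "Service catalog modeled as legal-technical support functions for auditors",
}


def adm_cycle(iterations: int = 1) -> Iterator[Tuple[int, AdmPhase, str]]:
    """Yield (iteration, phase, tailoring note); Preliminary runs once, then A..H repeat."""
    yield 0, AdmPhase.PRELIMINARY, CGR_TAILORING.get(AdmPhase.PRELIMINARY, "standard")
    cycle_phases = [p for p in AdmPhase if p is not AdmPhase.PRELIMINARY]
    for iteration in range(1, iterations + 1):
        for phase in cycle_phases:
            yield iteration, phase, CGR_TAILORING.get(phase, "standard")


for iteration, phase, note in adm_cycle(iterations=1):
    print(iteration, phase.value, "-", note)
```

Keeping the tailoring notes in a separate mapping leaves the generic ADM sequence untouched, which mirrors the idea of adapting rather than replacing the standard method.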

Figure 2.

Integration of frameworks. This mapping demonstrates how COBIT provides the governance “Why”, while TOGAF and ITIL provide the architectural “How” and operational “What”, respectively, for the CGR context [7].

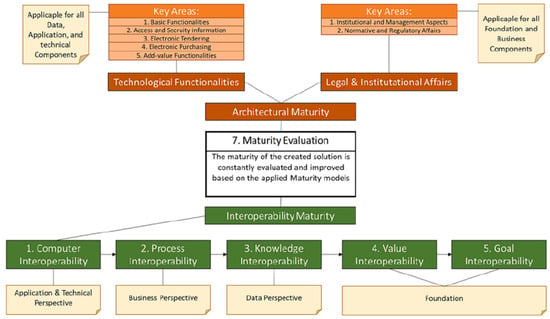

As a first step in applying TOGAF, it is necessary to assess the maturity level of the organization under consideration, as proposed by different authors [31,32,33]. This assessment yields artifacts that measure the maturity and effectiveness of services and policies and that support the establishment of contracts among services. Maturity assessment models classify key areas into two main domains: one centered on legal and organizational interoperability and the other on technical interoperability. These models allow an integral evaluation of different factors and make it easier to diagnose the current situation and identify improvement opportunities in the systems to be used for digital contracts [32]. The model presented in this paper integrates a five-level structure to assess progress in interoperability according to the elements defined by Gotschalk et al. [33]. Each level defines indicators used to determine whether the expected interoperability outcomes have been achieved. Once these levels are completed, a quantitative assessment of the maturity of the systems that need to interoperate can be obtained. The maturity assessment model is presented in Figure 3.

Figure 3.

Maturity model [31].
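A staged reading of the five-level structure can be sketched as follows: a level counts as achieved only if its indicators, and those of every lower level, reach a threshold, while the per-level shares provide the quantitative view. The cumulative rule, the `threshold` parameter, and the example indicator values are assumptions for illustration; the paper does not specify how the indicators in Figure 3 are aggregated.

```python
from typing import Dict, List

MAX_LEVEL = 5


def level_scores(indicators: Dict[int, List[bool]]) -> Dict[int, float]:
    """Share of indicators met at each level (only levels with at least one indicator)."""
    return {level: sum(results) / len(results)
            for level, results in sorted(indicators.items()) if results}


def maturity_level(indicators: Dict[int, List[bool]], threshold: float = 1.0) -> int:
    """Highest level L such that every level 1..L reaches the threshold (0 if none)."""
    scores = level_scores(indicators)
    achieved = 0
    for level in range(1, MAX_LEVEL + 1):
        if scores.get(level, 0.0) < threshold:
            break  # staged model: a gap at this level blocks all higher levels
        achieved = level
    return achieved


# Hypothetical indicator results for two systems that need to interoperate
example = {1: [True, True], 2: [True, True, True], 3: [True, False], 4: [False], 5: [False]}
print(level_scores(example))          # {1: 1.0, 2: 1.0, 3: 0.5, 4: 0.0, 5: 0.0}
print(maturity_level(example))        # 2
print(maturity_level(example, 0.5))   # 3
```

A strict threshold of 1.0 reflects a conservative reading in which every indicator of a level must be satisfied; lowering it trades rigor for a more lenient classification.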

2.2. ITIL

The Information Technology Infrastructure Library (ITIL) is a globally adopted framework of best practices for IT Service Management (ITSM). Its current iteration, ITILv4, was officially released in 2019 and has since driven the alignment of IT capabilities with strategic business objectives, with a contemporary emphasis on digital transformation, agility, and value co-creation [34]. Adopting ITIL helps optimize operational IT processes, with a particular focus on user satisfaction and efficiency in service management. It also improves responsiveness in projects, helping to ensure a quality experience at each point of interaction within the defined best practices. To understand the current situation, it is important to consider the main categories of ITIL practices, together with their scope and benefits.

The ITIL framework organizes its 34 management practices into three main categories: general management, service management, and technical management. This organization provides a structured and flexible foundation for companies and institutions to align their service management capabilities with strategic and operational needs. Each category contributes to a different dimension of service value, from organizational coordination and governance, to end-to-end service design and delivery, to the technical enablement required by modern digital infrastructures. Rather than isolating technical execution from management tasks, this classification advocates integrated thinking and interdisciplinary collaboration.

- General Management Practices: They reflect capabilities traditionally associated with enterprise-level administration adapted to support IT service management. These practices include areas such as governance, strategy management, continuous improvement, and workforce management. These practices also improve the alignment of IT initiatives with business objectives, foster a culture of accountability, and provide mechanisms to embed long-term value generation across organizational processes. Finally, general management practices contribute to risk mitigation and regulatory compliance, while supporting robust decision-making in dynamic environments [35].

- Service Management Practices: They form the operational core of ITIL, addressing the full life cycle of IT services, from design and transition to delivery and support. These practices emphasize service quality, user experience, and continuous feedback, enabling organizations to provide consistent and reliable value to stakeholders. By promoting standardized approaches to incident handling, service configuration, availability management, and customer engagement, ITIL practices help reduce service disruption and improve responsiveness to evolving demands. Moreover, service management practices support the development of adaptive service models, which are critical in today's fast-paced digital economy [36,37].

- Technical Management Practices: They focus on the effective and secure operation of the IT infrastructures and platforms that support organizational services. This set of practices provides guidance on deployment, monitoring, and technical support, ensuring the resilience and scalability of the technological components that sustain service delivery. By applying these practices, organizations can streamline infrastructure management, reduce technical debt, and adopt modern approaches such as automation and infrastructure-as-code [38]. Technical management practices are essential to guarantee service and business continuity, especially in hybrid or cloud-native environments where system reliability is critical [39].
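The three-category organization of the 34 ITILv4 practices can be written down as a simple lookup structure, which is also a convenient starting point for mapping institutional procedures to practices. The practice names below follow the ITIL 4 publications to the best of our knowledge and should be checked against the official guidance; `category_of` is a hypothetical helper.

```python
from typing import Dict, List

ITIL4_PRACTICES: Dict[str, List[str]] = {
    "general management": [
        "Architecture management", "Continual improvement",
        "Information security management", "Knowledge management",
        "Measurement and reporting", "Organizational change management",
        "Portfolio management", "Project management", "Relationship management",
        "Risk management", "Service financial management", "Strategy management",
        "Supplier management", "Workforce and talent management",
    ],
    "service management": [
        "Availability management", "Business analysis",
        "Capacity and performance management", "Change enablement",
        "Incident management", "IT asset management",
        "Monitoring and event management", "Problem management",
        "Release management", "Service catalogue management",
        "Service configuration management", "Service continuity management",
        "Service design", "Service desk", "Service level management",
        "Service request management", "Service validation and testing",
    ],
    "technical management": [
        "Deployment management", "Infrastructure and platform management",
        "Software development and management",
    ],
}


def category_of(practice: str) -> str:
    """Return the ITIL 4 category of a practice (case-insensitive match)."""
    for category, practices in ITIL4_PRACTICES.items():
        if practice.lower() in (p.lower() for p in practices):
            return category
    raise KeyError(f"unknown ITIL 4 practice: {practice}")


print({category: len(practices) for category, practices in ITIL4_PRACTICES.items()})
print(category_of("incident management"))  # service management
```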

Together, these three categories of practices contribute to a unified service management strategy. Rather than treating governance, service quality, and technical execution as separate concerns, ITIL fosters an integrated management culture that promotes agility, transparency, and value co-creation. This holistic perspective is particularly relevant for organizations undergoing digital transformation, where complex interdependencies among people, processes, and technology demand comprehensive and adaptive governance models [36]. By adopting the practice-based structure of ITIL, organizations can enhance their operational maturity while remaining responsive to changes and the dynamic nature of their processes.

2.3. COBIT

COBIT (Control Objectives for Information and Related Technologies) is a comprehensive governance and management framework for enterprise IT, developed and maintained by ISACA. COBIT provides a structured approach for aligning IT processes and services with business objectives, ensuring that organizations obtain value from their IT investments while effectively managing risks and resources. COBIT is designed to be adaptable across different industries and organizational contexts, serving as a reference model for the integration of IT governance within corporate management structures [40].

At its core, COBIT is based on a set of five main principles, as formulated in COBIT 5, that guide the design and implementation of governance systems [41]:

- Meeting stakeholder needs: Ensuring that enterprise IT governance aligns with the priorities and expectations of all stakeholders, balancing benefits, risk, and resource optimization.

- Covering the enterprise end-to-end: Integrating governance responsibilities across the entire organization and its processes, encompassing all business and technological areas rather than only the IT function.

- Applying a single, integrated framework: Leveraging COBIT as an overarching framework that can be harmonized with other standards, such as ITIL, ISO/IEC 27001 [42], and ISO/IEC 38500 [43].

- Enabling a holistic approach: Structuring governance through a set of interrelated components, including processes, organizational structures, policies, information flows, culture, and skills. This integrated vision of all elements is key to genuine adoption within the enterprise.

- Separating governance from management: Clearly distinguishing governance activities (evaluating, directing, and monitoring) from management activities (planning, building, running, and monitoring operations).

COBIT is structured into conceptual elements that implement these principles [44]:

- Governance and Management Objectives: Grouped into five domains: one governance domain, EDM (Evaluate, Direct and Monitor), and four management domains, APO (Align, Plan and Organize), BAI (Build, Acquire and Implement), DSS (Deliver, Service and Support), and MEA (Monitor, Evaluate and Assess), which collectively cover strategy, planning, execution, support, and performance monitoring.

- Components of a Governance System: Enablers such as processes, organizational structures, policies and procedures, information, culture, ethics and behavior, services, infrastructure, and human resources. These components interact with one another to ensure effective governance.

- Performance Management: Mechanisms to assess the capability and maturity of governance and management practices, thereby supporting continuous improvement.

- Design Factors: Organizational characteristics (e.g., strategy, risk profile, compliance requirements) that influence how the governance system should be tailored to organizational needs.

- Goals Cascade: A mechanism that translates stakeholder drivers and needs into specific enterprise goals, then into alignment goals, and finally into governance and management objectives.
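The goals cascade can be illustrated as a chain of lookups from a stakeholder need to governance and management objectives. The wording of the needs, enterprise goals, and alignment goals below is hypothetical and merely paraphrases concerns discussed in this paper; the objective codes (MEA03 Managed Compliance With External Requirements, DSS05 Managed Security Services, APO12 Managed Risk, DSS04 Managed Continuity, APO09 Managed Service Agreements) are COBIT 2019 identifiers, but the mapping itself is not taken from the official COBIT tables.

```python
from typing import Dict, List

# Hypothetical cascade levels; only the objective codes are COBIT 2019 identifiers
NEED_TO_ENTERPRISE_GOALS: Dict[str, List[str]] = {
    "Legally valid audit evidence": ["Compliance with external laws and regulations"],
    "Uninterrupted audit services": ["Business service continuity and availability"],
}
ENTERPRISE_TO_ALIGNMENT_GOALS: Dict[str, List[str]] = {
    "Compliance with external laws and regulations": ["I&T compliance with external requirements"],
    "Business service continuity and availability": ["I&T services delivered in line with requirements"],
}
ALIGNMENT_GOAL_TO_OBJECTIVES: Dict[str, List[str]] = {
    "I&T compliance with external requirements": ["MEA03", "DSS05", "APO12"],
    "I&T services delivered in line with requirements": ["DSS04", "APO09"],
}


def cascade(need: str) -> List[str]:
    """Translate a stakeholder need into an ordered, de-duplicated list of objectives."""
    objectives: List[str] = []
    for enterprise_goal in NEED_TO_ENTERPRISE_GOALS.get(need, []):
        for alignment_goal in ENTERPRISE_TO_ALIGNMENT_GOALS.get(enterprise_goal, []):
            for objective in ALIGNMENT_GOAL_TO_OBJECTIVES.get(alignment_goal, []):
                if objective not in objectives:
                    objectives.append(objective)
    return objectives


print(cascade("Legally valid audit evidence"))  # ['MEA03', 'DSS05', 'APO12']
```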

By integrating these principles and elements, COBIT provides organizations with a comprehensive and adaptable methodology for designing, implementing, and continuously improving IT governance systems. Its structured approach supports both compliance with regulatory requirements and the creation of sustainable business value leveraging technology resources [41].

Nevertheless, general governance models (e.g., COBIT 2019) often struggle in SAI environments because they assume a unified organizational goal centered on value delivery. In contrast, SAIs operate under a dual logic: they must provide technical service stability while simultaneously ensuring independent oversight. Traditional architecture-driven models often fail here because they do not account for the socio-technical friction that arises when the transparency of IT integration conflicts with the necessary confidentiality of ongoing fiscal investigations. The proposed model helps fill this gap by reconciling these conflicting logics through a specialized service-layer abstraction.

2.4. Framework Application and Institutional Integration

The integration of TOGAF, ITILv4, and COBIT within CGR went beyond theoretical alignment. Each framework addressed a specific component of the transformation process and was validated through field data and technical analysis performed during the Structuring Phase of the Service Integration Model. The phases adopted for integrating these industry frameworks into the institutional context follow the principles of the Design Science Research (DSR) methodology [45].

From TOGAF, the institution adopted the Architecture Development Method (ADM) to design a reference model linking business, data, and technology architectures. ADM guided the unification of mission-critical systems, enabling interoperability and process traceability.

ITILv4 practices were adopted and integrated through 23 formalized management procedures covering, among others, incident, problem, capacity, service catalog, and information security management. These procedures standardized response times, escalation mechanisms, and service availability indicators across technical domains.
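The standardization of response times and escalation mechanisms can be sketched as a priority matrix combined with time-based escalation. The impact/urgency matrix, the response targets, and the escalation tiers below are hypothetical placeholders; the paper does not publish the values defined in the CGR procedures.

```python
from datetime import datetime, timedelta

# Hypothetical response targets per priority (not CGR's actual values)
RESPONSE_TARGETS = {
    "P1": timedelta(minutes=15),
    "P2": timedelta(hours=1),
    "P3": timedelta(hours=4),
    "P4": timedelta(hours=8),
}
# Hypothetical escalation path, from first line to management
ESCALATION_TIERS = ["service desk", "technical specialist", "service owner", "IT management"]


def priority(impact: int, urgency: int) -> str:
    """Impact and urgency on a 1 (high) to 3 (low) scale; a lower sum means higher priority."""
    if impact not in (1, 2, 3) or urgency not in (1, 2, 3):
        raise ValueError("impact and urgency must be 1, 2, or 3")
    return {2: "P1", 3: "P2", 4: "P3"}.get(impact + urgency, "P4")


def escalation_tier(prio: str, opened: datetime, now: datetime) -> str:
    """Move one tier up for every full response-target interval without a response."""
    missed_intervals = max(0, (now - opened) // RESPONSE_TARGETS[prio])
    return ESCALATION_TIERS[min(missed_intervals, len(ESCALATION_TIERS) - 1)]


opened = datetime(2025, 3, 3, 10, 0)
prio = priority(impact=1, urgency=2)
print(prio, escalation_tier(prio, opened, opened + timedelta(minutes=30)))            # P2 service desk
print(prio, escalation_tier(prio, opened, opened + timedelta(hours=2, minutes=10)))  # P2 service owner
```

Deriving escalation from elapsed time relative to the priority's target keeps the rule identical across technical domains, which is the kind of consistency the formalized procedures aim for.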

COBIT 2019 provided the governance layer that aligns service management with institutional strategy. Governance objectives and performance indicators were established for decision-making, risk mitigation, and resource optimization. The performance management principles of COBIT were used to define measurable Key Performance Indicators (KPIs), such as Service Level Agreement (SLA) compliance, service continuity, and user satisfaction.
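As an illustration of how such KPIs can be computed from incident records, the sketch below derives SLA compliance, mean time to repair (MTTR), and availability for a reporting period. The record fields, the formula choices (for example, availability as the share of the period without incident-related downtime), and the sample values are assumptions, not CGR data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Incident:
    resolution_hours: float  # time from detection to service restoration
    sla_target_hours: float  # resolution target agreed in the SLA
    downtime_hours: float    # service outage attributable to the incident


def _require(incidents: List[Incident]) -> None:
    if not incidents:
        raise ValueError("at least one incident record is required")


def sla_compliance_pct(incidents: List[Incident]) -> float:
    """Percentage of incidents resolved within their SLA target."""
    _require(incidents)
    met = sum(i.resolution_hours <= i.sla_target_hours for i in incidents)
    return 100.0 * met / len(incidents)


def mttr_hours(incidents: List[Incident]) -> float:
    """Mean time to repair: average resolution time in hours."""
    _require(incidents)
    return sum(i.resolution_hours for i in incidents) / len(incidents)


def availability_pct(incidents: List[Incident], period_hours: float) -> float:
    """Share of the reporting period in which the service was not down."""
    downtime = sum(i.downtime_hours for i in incidents)
    return 100.0 * (period_hours - downtime) / period_hours


sample = [Incident(2.0, 4.0, 2.0), Incident(6.0, 4.0, 1.0), Incident(1.0, 8.0, 0.0)]
print(round(sla_compliance_pct(sample), 1))       # 66.7
print(mttr_hours(sample))                          # 3.0
print(round(availability_pct(sample, 720.0), 2))   # 99.58
```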

The analytical validation of the proposed model combined a Technology Readiness Level (TRL) assessment with an IT service-management maturity evaluation. This validation revealed that processes were only partially institutionalized. The resulting metrics supported the prioritization of improvement initiatives and the construction of a five-phase service integration roadmap comprising diagnosis, design, development, implementation, and monitoring.
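One simple way to turn maturity metrics into a prioritized list of improvement initiatives is to rank processes by the gap between their current and target maturity levels, optionally weighted by mission criticality. The weighting scheme, the process names, and the levels below are hypothetical; the paper does not specify the prioritization rule used at CGR.

```python
from typing import Dict, List, Optional, Tuple


def prioritize(levels: Dict[str, Tuple[int, int]],
               weights: Optional[Dict[str, float]] = None) -> List[Tuple[str, float]]:
    """Rank processes by weighted maturity gap (target - current), largest first.
    Ties are broken alphabetically so the ordering is deterministic."""
    weights = weights or {}
    ranked = []
    for process, (current, target) in levels.items():
        gap = max(0, target - current) * weights.get(process, 1.0)
        ranked.append((process, gap))
    return sorted(ranked, key=lambda item: (-item[1], item[0]))


levels = {
    "incident management": (2, 4),
    "service catalog management": (1, 4),
    "capacity management": (2, 3),
}
print(prioritize(levels, weights={"capacity management": 2.0}))
# [('service catalog management', 3.0), ('capacity management', 2.0), ('incident management', 2.0)]
```

The highest-ranked processes would naturally feed the diagnosis and design phases of the roadmap first, although any real prioritization would also weigh cost and institutional constraints.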

3. Methodology

This section presents the methodological framework adopted for the development and validation of the service integration model. In line with the scientific expectations of systems innovation research, the methodology is grounded in Design Science Research (DSR), a methodology widely used to develop, implement, and evaluate technological and organizational artifacts that address complex real-world problems [45]. The resulting artifact, conceptually outlined in Section 5, serves as the target architecture that synthesizes the requirements identified during the diagnosis phase with the structural components of TOGAF, COBIT, and ITIL. DSR provides a structured approach for ensuring rigor, empirical grounding, and reproducibility of results, which is essential in the context of public-sector digital transformation.

3.1. Design Science Research (DSR) Approach

Under the DSR methodology, knowledge is generated through the creation and evaluation of an innovative artifact intended to solve a well-identified problem. In this study, the artifact is the service integration model (detailed in Section 5), designed to address the fragmentation, inconsistent governance practices, and low service maturity present in the technological ecosystem of CGR.

In the DSR framework, two interconnected spaces guide the research process:

- Problem Space: where diagnosis, data collection, and governance assessment make it possible to analyze the needs, constraints, and contextual requirements of CGR.

- Design and Validation Space: where maturity assessments, expert validation, and verification of alignment with institutional priorities allow the construction and evaluation of an integration model using TOGAF, COBIT, and ITILv4 artifacts.

This dual perspective ensures that the proposed artifact is both theoretically grounded and practically feasible within the institutional environment of a Supreme Audit Institution.

To fully operationalize the DSR approach, this study distinguishes between the local artifact (the CGR integration model) and the artifact abstraction (a generalizable framework for SAIs). The justificatory knowledge is drawn from institutional theory (ensuring legitimacy) and socio-technical systems theory (balancing technical tools with organizational behavior). The following design principles (DP) were extracted:

- DP1 (Modularity): Decouple audit data from specific applications to ensure long-term availability.

- DP2 (Traceability): Every IT service action must map to a fiscal oversight requirement.

- DP3 (Transparency): Performance metrics must be accessible to non-technical stakeholders to reduce bureaucratic resistance.
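DP2 lends itself to a mechanical check over the service catalog. The sketch below is a minimal illustration, assuming each catalog entry carries a list of the fiscal oversight requirements it serves; the class name `ITService`, the service names, and the `REQ-...` identifiers are all hypothetical placeholders, not CGR's actual catalog or legal references.

```python
from dataclasses import dataclass, field


@dataclass
class ITService:
    """Hypothetical catalog entry; `requirements` lists the fiscal oversight requirements it serves."""
    name: str
    requirements: list[str] = field(default_factory=list)


def untraceable(services: list[ITService]) -> list[str]:
    """Return the names of services that violate DP2 (no mapped requirement)."""
    return [s.name for s in services if not s.requirements]


def traceability_coverage(services: list[ITService]) -> float:
    """Share of catalog services mapped to at least one requirement (0.0 to 1.0)."""
    if not services:
        return 0.0
    return sum(1 for s in services if s.requirements) / len(services)


# Illustrative catalog only; names and requirement IDs are placeholders.
catalog = [
    ITService("audit-evidence-repository", ["REQ-AUDIT-01"]),
    ITService("citizen-complaints-portal", ["REQ-CITIZEN-02"]),
    ITService("legacy-file-share"),
]
print(untraceable(catalog))                      # ['legacy-file-share']
print(round(traceability_coverage(catalog), 2))  # 0.67
```

A coverage value below 1.0 would flag services that cannot yet be justified by an oversight requirement under DP2.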

As detailed in Table 1, this research distinguishes between the specific implementation at the CGR and the higher-level artifact abstraction. Thus, we ensure that the findings are not merely a contextual report but a generalizable contribution to the field of systems innovation.

Table 1.

Operationalization of the design science research (DSR) framework: from local implementation to artifact abstraction.

3.2. Overall Design and Stages

The methodological process has been organized into four structured stages aligned with DSR cycles of problem identification, design, and evaluation:

- Institutional Diagnosis: Analysis of the technological ecosystem, governance structures, and interoperability challenges in CGR.

- Data Collection and Stakeholder Engagement: Gathering qualitative and quantitative evidence from institutional documents, interviews, and surveys.

- Maturity and Readiness Assessment: Evaluation of the service practices aligned with ITILv4 and COBIT governance capabilities.

- Integration Modeling and Validation: Development of the service integration model and its conceptual validation through expert sessions and alignment with the strategic plans of CGR.

Each stage integrated multiple sources of evidence to ensure triangulation and methodological rigor.

3.3. Timeline of the Model Development

The research to develop the model presented in this paper was executed through an integrated lifecycle that aligned the scientific rigor of DSR with the institutional needs of CGR. The timeline below details the execution of the model phases:

- Phase 1: Institutional Diagnosis and Evaluation (Initiation): This phase began with the launch of the R&D&I project CGR-407-2024. It was driven by the institutional need to modernize fragmented systems (auditing, internal control, and citizen complaints) as outlined in the 2022–2026 strategic cycle.

- Phase 2: Primary Research and Data Collection: Quantitative data collection was centered around an institutional survey conducted among CGR officials. The survey assessment was aligned with the current PETI 2022–2026 strategy to ensure the findings reflected contemporary technological needs and digital transformation goals.

- Phase 3: Maturity and Readiness Assessment: Following data collection, a baseline maturity assessment was performed using COBIT 2019 and ITILv4. This assessment (detailed in Table 2 and Table 3) established the current state of the IT governance and service capabilities of the institution for the current strategic period.

Table 2. Descriptive summary of survey results by analytical dimension.

Table 3. Current COBIT 2019 maturity levels at the CGR: baseline results from institutional diagnostic assessment.

- Phase 4: Integration Modeling and Validation: The conceptual validation of the service integration model (the DSR artifact) will take place during the “structuring phase”. This phase involves iterative validation sessions with the CGR IT governance board and is scheduled to be finalized for submission during 2026.

The research process has not been limited to a theoretical application of frameworks; it has involved their contextual reinterpretation (as noted in Section 2.4). The modeling phase explicitly integrated the 23 formalized ITILv4-based management procedures with the TOGAF ADM architecture designed specifically for the SAI environment. This integration ensures that validation goes beyond abstract approval and is grounded in the actual operational and regulatory requirements of fiscal oversight.

3.4. Data Sources and Collection Methods

Following a mixed-methods approach, data collection combined qualitative and quantitative instruments to ensure a comprehensive assessment of IT service performance and governance.

- Document Analysis: Review of institutional IT policies, strategic plans (PECGR 2022–2026 and PETI 2022–2026), service management procedures, and architecture repositories.

- Semi-structured Interviews: Conducted with directors and process leaders from OSEI and DIARI to identify pain points, dependencies, and service expectations.

- Survey Instrument: The survey instrument (Appendix A) comprises 44 items. Perception-based items use a five-point Likert scale, whereas operational capability items (marked in Appendix A) use a categorical scale to verify the existence and formalization of IT management practices. Both types of data were integrated into the maturity assessment scores presented in Section 4.2. To ensure a comprehensive evaluation, a mixed-scale approach was employed:

  - Strategic and Performance Dimensions (Items 1–5, 7–10, etc.): These items assessed institutional perception and maturity using a five-point Likert scale, ranging from “strongly disagree” (1) to “strongly agree” (5).

  - Operational and Capability Dimensions (Items 6, 11, 17, 23, 29, 35, 41): These items focused on the existence and formalization of specific ITILv4 and COBIT 2019 practices. They were measured using a categorical scale (e.g., Yes/No/In Progress or frequency-based options) to provide a binary or ordinal baseline for maturity calculations, which were subsequently normalized to the 0.0–5.0 scale presented in the results.

- Quantitative Maturity Assessments: Application of ITILv4 maturity models and Technology Readiness Level (TRL) scales to evaluate operational readiness for integrated service management.

The survey showed strong internal consistency, as measured by Cronbach’s α, and thematic grouping ensured alignment with the management practices defined in ITILv4.
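For readers who want to reproduce the reliability check, the sketch below computes Cronbach’s α with the standard formula α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), using sample variances throughout. It is a minimal illustration only; the response matrix is hypothetical and is not the CGR survey data.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items, each a list of scores from the same respondents.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")
    # Total score per respondent across all items.
    totals = [sum(item[r] for item in items) for r in range(n)]
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Hypothetical Likert responses: 3 items answered by 5 respondents.
responses = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
print(round(cronbach_alpha(responses), 3))  # 0.886
```

Only the perception (Likert) items would normally enter this calculation, since the categorical practice-verification items measure a different construct.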

3.5. Sampling Design and Population Stratification

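CGR comprises approximately 6500 officials distributed across central and regional offices. The sample size for the survey was estimated using the finite population correction formula:

$$ n = \frac{N Z^{2} p (1 - p)}{e^{2} (N - 1) + Z^{2} p (1 - p)} $$

where $N = 6500$ is the population size, $Z = 1.96$ is the critical value for a 95% confidence level, $p = 0.5$ is the assumed proportion, and $e = 0.05$ is the margin of error. The calculated optimal sample size was 363. The final valid sample consisted of 130 respondents (2.0% of the population), which remains suitable for exploratory institutional studies.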

While the theoretical optimal sample was calculated at 363 respondents (95% confidence level; 5% margin of error), the final study reached a sample of 130 active officials. This participation rate is common in high-security public-sector environments where access to specialized technical staff is restricted. Despite the lower absolute number, the sample achieved a high degree of representativeness, as it included the primary process owners and decision-makers from OSEI (Office of Information Systems) and DIARI (Information, Analysis, and Immediate Reaction Direction), who possess the specific technical knowledge required to evaluate ITILv4 and COBIT maturity. Furthermore, with n = 130, the study maintains a margin of error of approximately 8.5%, which is statistically acceptable for exploratory diagnostic research in Enterprise Architecture.
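Both figures in the preceding paragraphs can be checked with a few lines of arithmetic. The sketch below assumes the conventional inputs for a proportion (Z = 1.96, p = 0.5) and applies the finite population correction to both the required sample size and the margin of error; it reproduces the reported 363 respondents and the approximately 8.5% margin at n = 130.

```python
import math


def required_sample_size(N: int, z: float = 1.96, p: float = 0.5, e: float = 0.05) -> int:
    """Finite-population sample size n = N z^2 p(1-p) / (e^2 (N-1) + z^2 p(1-p)), rounded up."""
    q = z**2 * p * (1 - p)
    return math.ceil(N * q / (e**2 * (N - 1) + q))


def margin_of_error(n: int, N: int, z: float = 1.96, p: float = 0.5) -> float:
    """Margin of error for a proportion, including the finite population correction."""
    fpc = math.sqrt((N - n) / (N - 1))
    return z * math.sqrt(p * (1 - p) / n) * fpc


print(required_sample_size(6500))                  # 363
print(round(100 * margin_of_error(130, 6500), 1))  # 8.5
```

Without the correction factor the margin at n = 130 would be about 8.6%; with a population of 6500 the correction barely matters at this sample size.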

Although the respondents represent approximately 2% of the total institutional workforce, the sampling strategy shifted from a simple random approach to purposive sampling of key informants. The 130 respondents are not a general cross-section of the institution; they are concentrated in the OSEI and DIARI units. These groups, particularly auditors and IT governance leads, are emphasized because they are considered the “process owners” of the service integration model. In DSR, representativeness is achieved through depth of expertise regarding the environment of the artifact rather than sheer volume. Auditors were prioritized because they are the end-users of the fiscal evidence that the model aims to protect, which grounds the validation in the core constitutional mission of CGR.

A stratified random sampling strategy was implemented to ensure proportional representation across job roles, departmental affiliations, and geographic regions. Random selection was conducted digitally to minimize selection bias.

To ensure comparability between the five-point Likert items (perception) and the categorical items (practice verification), a normalization and weighting procedure was applied. For categorical items, a “Yes” response was assigned a value of 5.0, “Partial” 2.5, and “No” 0.0. Scores were then aggregated by organizational unit (OSEI and DIARI) using an arithmetic mean with equal weighting, as both units represent critical pillars of the integration model. To mitigate response bias from the low response rate in certain technical areas, scores were cross-referenced with the qualitative findings from the semi-structured interviews, ensuring that the final maturity level reflected both the quantitative data and the institutional reality.
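The normalization and aggregation rule described above can be sketched as follows. This is a minimal illustration under stated assumptions: the mapping Yes = 5.0, Partial = 2.5, No = 0.0 comes from the text, while treating the “In Progress” label of Section 3.4 as equivalent to “Partial” is our assumption, and the unit responses are hypothetical.

```python
from statistics import mean

# Categorical-to-scale mapping stated in the text; "In Progress" is treated
# as "Partial" (an assumption, since Section 3.4 uses that label instead).
CATEGORICAL_SCORE = {"Yes": 5.0, "Partial": 2.5, "In Progress": 2.5, "No": 0.0}


def item_score(answer):
    """Likert answers (1-5) pass through; categorical answers map onto 0.0-5.0."""
    if isinstance(answer, str):
        return CATEGORICAL_SCORE[answer]
    if not 1 <= answer <= 5:
        raise ValueError(f"Likert answer out of range: {answer}")
    return float(answer)


def unit_score(responses):
    """Arithmetic mean of all normalized item scores for one organizational unit."""
    return mean(item_score(a) for a in responses)


def institutional_score(units):
    """Equal-weight mean across units, regardless of how many responses each unit has."""
    return mean(unit_score(r) for r in units.values())


# Hypothetical answers for illustration only.
units = {
    "OSEI": [4, 3, "Yes", "Partial"],
    "DIARI": [2, "No", 3, "Partial"],
}
print({u: unit_score(r) for u, r in units.items()})  # {'OSEI': 3.625, 'DIARI': 1.875}
print(institutional_score(units))                    # 2.75
```

Averaging unit means (rather than pooling all responses) is what gives OSEI and DIARI equal weight even if one unit contributes more respondents.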

3.6. Conduct of Interviews

Semi-structured interviews were conducted with directors and process leaders from the OSEI and DIARI units. Interview sessions were designed to identify operational dependencies, critical service bottlenecks, and strategic expectations for the integration model. Specifically, these interviews followed a protocol focused on four lines of inquiry: (i) current system fragmentation, (ii) critical data flows for fiscal oversight, (iii) governance constraints, and (iv) desired service levels. The qualitative data gathered were used to:

- Contextualize the Problem Space: By validating the “isolated” operation of the mission-critical systems like the financial auditing and citizen complaints platforms.

- Inform the Architecture Design: By ensuring the TOGAF ADM application correctly maps the 23 formalized ITILv4 procedures to the actual technical domains of the SAI.

- Link Findings: By providing qualitative depth to the low maturity scores (below 2.0) found in the quantitative COBIT and ITILv4 assessments.

3.7. Maturity and Readiness Assessment Procedure

To evaluate IT governance and service capability, assessments were performed using:

- ITILv4 Practice Maturity: Evaluation of the four ITILv4 dimensions: organizations and people, information and technology, partners and suppliers, and value streams and processes. Each dimension was rated on a five-level maturity scale. Table 4 summarizes the resulting baseline scores.

Table 4. Baseline maturity scores for ITILv4 practices: assessment of the current state via technical survey and qualitative interviews.

- COBIT 2019 Process Capability: Complementary evaluation of governance processes using the maturity scale of COBIT (0 to 5), as shown in Table 3.

- Technology Readiness Level (TRL) Analysis: Assessment of prototype readiness, controlled pilots, and operational feasibility for each service domain.

Two evaluators conducted the assessments independently and consolidated their results through validation sessions with the CGR IT Governance Board. This approach ensured the correct triangulation of findings.

3.8. Analytical Instruments

Several complementary tools have been used to support systematic analysis:

- Compliance Matrices: For the mapping of CGR processes to COBIT control objectives and ITILv4 practices.

- Dependency Models: For the identification of data flows, shared services, and interoperability points among the core systems of CGR.

- Process Maps: For the modeling of incident, problem, capacity, change, and catalog management workflows.

These tools enabled structured reasoning about capabilities, bottlenecks, and integration opportunities, thus forming the analytical basis for model formulation.
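A compliance matrix of this kind can be represented as a simple mapping and queried for gaps. The sketch below is illustrative only: the five processes follow the list above, and the COBIT 2019 objective IDs (DSS02, DSS03, BAI04, BAI06, APO09) and ITILv4 practice names are real framework labels, but the specific pairings, and the deliberately empty catalog entry, are assumptions made to show how a gap would surface.

```python
# Hypothetical compliance matrix: CGR process -> (COBIT 2019 objectives, ITILv4 practices).
# The process list follows Section 3.8; the specific mappings are illustrative.
COMPLIANCE_MATRIX = {
    "incident": ({"DSS02"}, {"incident management"}),
    "problem": ({"DSS03"}, {"problem management"}),
    "capacity": ({"BAI04"}, {"capacity and performance management"}),
    "change": ({"BAI06"}, {"change enablement"}),
    "catalog": ({"APO09"}, set()),  # deliberately left without an ITILv4 practice
}


def coverage_gaps(matrix):
    """Return the processes that lack a COBIT objective or an ITILv4 practice."""
    gaps = {}
    for process, (cobit, itil) in matrix.items():
        missing = [name for name, refs in (("COBIT", cobit), ("ITILv4", itil)) if not refs]
        if missing:
            gaps[process] = missing
    return gaps


def processes_for_objective(matrix, objective):
    """Reverse lookup: which processes claim a given COBIT objective."""
    return sorted(p for p, (cobit, _) in matrix.items() if objective in cobit)


print(coverage_gaps(COMPLIANCE_MATRIX))                     # {'catalog': ['ITILv4']}
print(processes_for_objective(COMPLIANCE_MATRIX, "DSS02"))  # ['incident']
```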

3.9. Link with Institutional Results

The diagnostic results revealed dependencies among platforms, infrastructure constraints, and maturity gaps across the technological ecosystem of CGR. These findings are presented in Section 4 and directly informed the architecture and design principles of the service integration model introduced in Section 5. The methodological approach thus ensures that the proposed artifact is both empirically grounded and aligned with the strategic, operational, and governance needs of the institution.

4. Key Findings

This section presents the key findings of the structuring stage, a preliminary step toward the development of the service integration model. The process followed is presented in Figure 4. The Innovation Model for Service Integration is based on assessing the maturity level and identifying an improvement plan to enhance service efficiency and effectiveness.

Figure 4.

Structuring stage of the design model process.

4.1. Survey Data Analysis and Descriptive Results

The institutional survey conducted among CGR officials provided a multidimensional view of the current state of technological services. The analysis grouped results into seven analytical dimensions: (i) availability and access to IT services, (ii) technical support quality, (iii) technological infrastructure, (iv) information security and data protection, (v) communication and user attention, (vi) innovation and continuous improvement, and (vii) overall satisfaction. Table 2 summarizes the main quantitative findings.

The results in Table 2 reveal a critical bottleneck: the communication and innovation dimensions received the lowest scores across both DIARI and OSEI. This indicates that, while technical capabilities exist, a siloed culture resists the transparency required for service integration. For the transformation goals of CGR, this implies that a change management strategy must accompany the technological deployment of the model; otherwise, the architectural integration will lack the institutional acceptance necessary to sustain its interoperability.

Across all dimensions, responses indicate a predominantly positive institutional perception of IT services. The mean scores across the seven dimensions range between 3.8 and 4.5, confirming a globally favorable evaluation, with relatively low dispersion (SD < 0.8), suggesting consistent responses across users. The highest mean corresponds to technical support quality, while the lowest values are associated with communication and infrastructure, consistent with open-ended feedback.
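The per-dimension summary behind these figures amounts to a mean and a sample standard deviation for each dimension, ranked from lowest to highest mean. The sketch below shows that computation on hypothetical Likert scores for two of the seven dimensions; it does not reproduce the actual survey values in Table 2.

```python
from statistics import mean, stdev


def summarize(dimensions):
    """Mean and sample standard deviation per dimension, sorted from lowest mean."""
    rows = [(name, mean(scores), stdev(scores)) for name, scores in dimensions.items()]
    return sorted(rows, key=lambda row: row[1])


# Hypothetical Likert scores (1-5) for two of the seven dimensions.
survey = {
    "technical support quality": [5, 4, 5, 4, 5],
    "communication and user attention": [4, 3, 4, 4, 4],
}
for name, m, sd in summarize(survey):
    print(f"{name}: mean={m:.2f}, sd={sd:.2f}")
```

Sorting by mean puts the weakest dimensions first, which is the ordering used to identify improvement areas.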

Qualitative responses reinforced these statistical findings. Users value service availability, security, and staff responsiveness, but emphasize the need for modernization of equipment, improved connectivity, and stronger interoperability among institutional platforms. They also stress the importance of broader training in cybersecurity and effective communication during service changes or incidents. The expressed demand for tools and automation based on artificial intelligence indicates a high level of technological awareness among staff and alignment with the digital transformation strategy of CGR (PETI 2022–2026).

Rather than simple technical gaps, the low maturity levels in service design and problem management (see Table 4) reflect a reactive institutional posture. This posture directly compromises the reliability of audit reports, because IT incidents are handled in isolation instead of being analyzed for systemic patterns. Consequently, the model presented in this study is not just an optimization but a strategic correction intended to shift CGR from a reactive mode to a preventive, service-oriented governance structure.

In summary, the descriptive analysis reveals that, while CGR maintains strong operational performance and high user satisfaction in key service dimensions, the most critical improvement areas relate to technological infrastructure, communication, and innovation. These findings provide the empirical foundation for the improvement roadmap and the design of specific key performance indicators (KPIs) in the presented service integration model.

4.2. Analysis of the Current State of Technological Service Delivery in CGR

CGR relies heavily on information technologies to support critical functions such as fiscal oversight, data management, and evidence analysis. However, this reliance on IT services also introduces operational risks, as the institution lacks robust continuity plans to mitigate potential failures. Without well-structured disaster recovery mechanisms, technological disruptions could significantly impact the ability of CGR to fulfill its mission.

The data presented in Table 2, Table 3 and Table 4 represent the baseline quantitative diagnosis of the technological ecosystem in CGR. These values have been obtained through the following iterative process:

- Quantitative Assessment: Applying the 44-item construct to the study sample (n = 130) to generate scores for seven ITILv4 and COBIT 2019 dimensions.

- Qualitative Triangulation: Refining the “Comment” columns through semi-structured interviews with process leaders from OSEI and DIARI units to identify root causes of low scores.

- Target Definition: Aligning the performance targets (Table 4) with the requirements established in the institutional PETI 2022–2026 and the CGR-407-2024 project milestones.

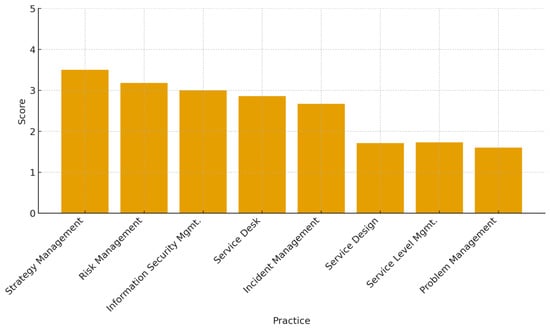

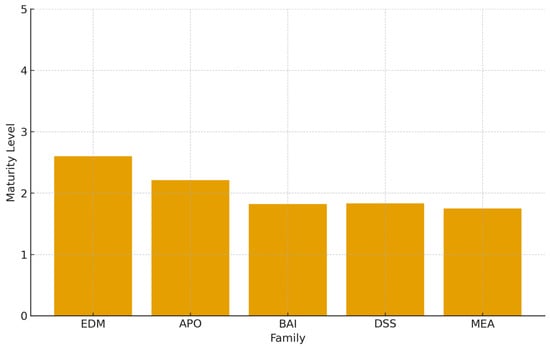

Table 3 shows the baseline COBIT 2019 maturity levels across the five governance families at CGR. The findings reveal that, although EDM (Evaluate, Direct, Monitor) shows relative progress with a maturity level of 2.60, mainly due to the existence of governance committees and decision-making mechanisms, other families remain below 2.5. Particularly, BAI, DSS, and MEA present the largest improvement opportunities, as they lack institutionalized processes for change enablement, continuity, and performance monitoring. These results confirm the need for a unified integration model governed under a formal architecture and service-management framework.

A major challenge faced by CGR is the lack of complete service integration across its IT systems. Currently, technological services operate in isolated and fragmented environments, leading to redundancies, inefficiencies, and limited interoperability. The absence of a centralized service integration framework prevents a streamlined approach to data management and cross-functional operations, restricting the institution’s ability to leverage digital transformation effectively. Addressing these issues requires a strategic approach to IT service consolidation and governance.

Table 4 highlights the maturity status of selected ITILv4 practices at CGR. The interview data confirmed that the low quantitative scores for “problem management” were linked to the lack of root-cause analysis procedures, as identified by process leaders. Strategic domains such as strategy management and risk management score above 3.0, reflecting strong alignment with institutional priorities and ongoing capacity planning efforts. However, practices with greater operational impact on service continuity, such as service design, service level management, and problem management, display maturity levels below 2.0. This gap reflects heterogeneous workflow definitions and the lack of standardized SLA negotiation and measurement, which directly affects user experience and fiscal oversight operations.

The results indicate a critical vulnerability in problem management (0.95) and service design (1.20). In the context of a supreme audit institution, low maturity in problem management implies that recurring technical failures are not subjected to root-cause analysis, which creates a high risk of data corruption or loss during incident recovery. This directly affects audit reliability, as the lack of a stable service design means that new auditing tools are deployed without the necessary security controls or performance baselines. Consequently, the proposed integration model prioritizes the stabilization of these domains to ensure that the recovery of fiscal evidence is not only fast (low mean time to restore service, MTTR) but also forensically sound and consistent.
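One simple way to turn these scores into a prioritization is to rank practices by their gap to a target maturity level. In the sketch below, the two current scores are the ones reported above; the target level of 3.0 is a hypothetical value chosen for illustration, not a CGR target.

```python
# Current scores as reported for problem management and service design;
# TARGET is a hypothetical target maturity level used only for illustration.
current = {"problem management": 0.95, "service design": 1.20}
TARGET = 3.0


def prioritize(scores, target):
    """Rank practices below the target by maturity gap (target - current), largest first."""
    gaps = {p: round(target - s, 2) for p, s in scores.items() if s < target}
    return sorted(gaps.items(), key=lambda kv: kv[1], reverse=True)


print(prioritize(current, TARGET))
# [('problem management', 2.05), ('service design', 1.8)]
```

A gap-based ranking is only one possible criterion; the text also weighs operational impact on fiscal evidence, which a fuller scheme could encode as a weight per practice.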

An evaluation of IT process maturity in CGR, based on the Technology Readiness Level (TRL) model, indicates that the institution currently operates at a TRL level between 5 and 6. While testing environments and quality assurance mechanisms exist, full integration into an operational setting remains incomplete. Essential elements such as a service catalog, development processes, and infrastructure management frameworks have been implemented. However, fragmentation across different IT components limits their effectiveness, particularly in high-demand and mission-critical scenarios. Additionally, the absence of structured disaster recovery procedures and the lack of regular IT resilience testing further underscore the need for a higher level of technological maturity and preparedness. Advancing IT maturity in CGR requires the implementation of a standardized service model, incorporating frameworks such as ISO 22301 [46] for business continuity and ITILv4 and COBIT for IT governance and process integration.

While CGR has made notable efforts in digital transformation, there remains a partial disconnection between strategic objectives and tactical IT implementations across its departments. Despite modernization initiatives being in place, a lack of alignment between governance structures and operational execution reduces the efficiency of these advancements. To bridge this gap, it is essential to establish a more cohesive IT governance strategy that connects long-term institutional goals with practical, day-to-day IT service management.

Furthermore, Table 5 shows a sample of the current catalog of IT service indicators defined by the CGR. A total of 51 KPIs were identified: 37 operational (72%), 11 project-level (22%), and 3 strategic (6%). While this demonstrates a significant effort in process measurement, the concentration of indicators at the operational level suggests a limited capability to measure public-value outcomes, a critical requirement for fiscal accountability institutions. Strengthening strategic KPIs (e.g., audit-traceability metrics, service contribution to oversight effectiveness) would enable the model to better demonstrate institutional impact.
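The reported shares (72%, 22%, 6%) sum to 100, whereas conventional rounding of 37/51, 11/51, and 3/51 would give 73%, 22%, and 6%. We do not know which rounding the authors applied; the sketch below simply shows that the largest-remainder (Hamilton) method, one common way to force integer percentages to total 100, reproduces the reported figures.

```python
import math


def largest_remainder_percentages(counts):
    """Integer percentages that sum to 100, using largest-remainder rounding."""
    total = sum(counts.values())
    exact = {k: 100 * v / total for k, v in counts.items()}
    floors = {k: math.floor(x) for k, x in exact.items()}
    shortfall = 100 - sum(floors.values())
    # Give the leftover points to the categories with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda key: exact[key] - floors[key], reverse=True)
    for key in by_remainder[:shortfall]:
        floors[key] += 1
    return floors


kpis = {"operational": 37, "project": 11, "strategic": 3}
print(largest_remainder_percentages(kpis))
# {'operational': 72, 'project': 22, 'strategic': 6}
```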

Table 5.

Selected service performance indicators from the institutional catalog and their strategic targets.

From the total catalog of 51 KPIs managed by the CGR, the indicators shown in Table 5 were selected based on their high impact on the “delivery and support” macro-component of the proposed model. These metrics focus on availability and resolution times, which were identified as the most significant pain points during the diagnostic phase.
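Availability and resolution-time indicators of this kind are typically derived from an incident log. The sketch below is a minimal illustration, assuming each incident is recorded as a (detected, restored) timestamp pair and that every incident is a full outage, so downtime equals the summed incident durations. The incident log is hypothetical, and the 98% threshold is the availability target mentioned in Section 4.3.

```python
from datetime import datetime, timedelta


def mttr(incidents):
    """Mean time to restore: average of (restored - detected) over all incidents."""
    durations = [restored - detected for detected, restored in incidents]
    return sum(durations, timedelta()) / len(durations)


def availability(period, downtime):
    """Fraction of the measurement period during which the service was up."""
    return (period - downtime) / period


# Hypothetical incident log for one service over a 30-day month.
log = [
    (datetime(2025, 3, 3, 9, 0), datetime(2025, 3, 3, 11, 0)),
    (datetime(2025, 3, 17, 14, 0), datetime(2025, 3, 17, 18, 0)),
]
month = timedelta(days=30)
down = sum((restored - detected for detected, restored in log), timedelta())

print(mttr(log))                            # 3:00:00
print(f"{availability(month, down):.2%}")   # 99.17%
print(availability(month, down) > 0.98)     # True: meets the >98% availability target
```

In practice, partial degradations and planned maintenance windows would need explicit rules before they are counted as downtime.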

Taken together, these baseline results provide a precise and measurable starting point for the adopted service integration model. They validate the relevance of implementing a structured, framework-based approach, combining TOGAF, ITILv4, and COBIT, to enhance service reliability, visibility, and alignment with the CGR’s mission of safeguarding public resources.

4.3. Reinterpreting Framework Concepts for Public Governance

The adaptation of managerial frameworks such as TOGAF, ITILv4, and COBIT to the public sector requires a contextual reinterpretation of their core concepts. In CGR, these frameworks were not applied in their original corporate form, but they were adjusted to align with the principles of transparency, accountability, and public value creation that govern public administration. This subsection clarifies how key concepts were redefined and operationalized within this institutional setting.

The contextualization of these frameworks for the CGR involved specific operational modifications:

- TOGAF ADM Tailoring: Phase B (Business Architecture) was modified to include a “Legal-Technical Traceability” sub-phase. This ensured that every IT service defined in the catalog was mapped not just to a business process, but to a specific article of the Colombian fiscal oversight law.

- COBIT Operationalization: Performance metrics were shifted from corporate return on investment to “oversight capability”. For example, the COBIT metric “percent of IT-enabled business programs” was adapted at CGR as “percentage of digital audit artifacts with automated chain-of-custody verification”.

- ITILv4 Adaptation: The “service request management” practice was specifically integrated with the CGR-407-2024 security protocols, requiring multi-factor authentication and role-based access for any data modification involving fiscal evidence.
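The adapted service request rule can be expressed as a guard evaluated before fulfilment. The sketch below is hypothetical throughout: the text only states that role-based access and multi-factor authentication are required for changes to fiscal evidence, so the role names, the `ServiceRequest` fields, and the returned messages are illustrative assumptions rather than the CGR-407-2024 protocol itself.

```python
from dataclasses import dataclass

# Hypothetical role names; the source only requires role-based access plus MFA.
ROLES_ALLOWED_TO_MODIFY_EVIDENCE = {"auditor", "evidence_custodian"}


@dataclass(frozen=True)
class ServiceRequest:
    requester_role: str
    mfa_verified: bool
    touches_fiscal_evidence: bool


def authorize(req: ServiceRequest) -> tuple[bool, str]:
    """Apply the adapted service request rule before a request is fulfilled."""
    if not req.touches_fiscal_evidence:
        return True, "standard fulfilment"
    if req.requester_role not in ROLES_ALLOWED_TO_MODIFY_EVIDENCE:
        return False, "role not authorized for fiscal evidence"
    if not req.mfa_verified:
        return False, "multi-factor authentication required"
    return True, "authorized"


print(authorize(ServiceRequest("auditor", True, True)))     # (True, 'authorized')
print(authorize(ServiceRequest("auditor", False, True)))    # (False, 'multi-factor authentication required')
print(authorize(ServiceRequest("help_desk", True, False)))  # (True, 'standard fulfilment')
```

Checking the role before MFA means an unauthorized role is rejected outright, without being prompted for a second factor.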

Business Analysis. In the context of CGR, business analysis was reinterpreted as the study of fiscal oversight, auditing, and citizen-service processes rather than commercial or profit-oriented activities. The analysis focused on understanding how information flows across audit, administrative, and strategic domains, identifying bottlenecks that affected the timeliness and reliability of fiscal control actions. This process informed the redesign of workflows in its core platforms, linking business rules directly to audit traceability and citizen accountability. Thus, “business” refers to the public mission and statutory functions of the institution, emphasizing social and institutional outcomes instead of market performance.

Similarly, Figure 5 shows that practices influencing user service experience, such as Service Level Management and problem management, score below 2.0, explaining the current limitations in SLA compliance and service restoration. In contrast, Strategy and Risk Management show better maturity levels, which reflects the CGR’s strong strategic governance but also highlights a tactical–operational execution gap.

Figure 5.

ITILv4 practice maturity levels at the CGR.

Strategy Management. Within CGR, strategy management was aligned with national and institutional planning instruments, including the Digital Transformation Plan and the Institutional Strategic Plan (PETI 2022–2026). Instead of pursuing competitive advantage, strategic alignment sought to ensure that technological initiatives supported policy compliance, regional equity, and service accessibility. This redefinition made it possible to integrate TOGAF’s architectural planning phases with public-sector priorities such as territorial coverage and citizen trust.

Performance Management. Reinterpreting “performance management” revealed a critical limitation in the current institutional KPI catalog. As noted in the discussion of Table 5, the distribution is heavily skewed toward operational metrics: 72% of the indicators are operational/technical, 22% are tactical (project-level), and only 6% are strategic. This “measurement gap” suggests that, while CGR can monitor the “health” of its systems, it currently lacks the metrics to quantify how IT service integration directly enhances fiscal oversight outcomes or public value creation. Consequently, the presented model establishes the architectural foundation necessary to evolve these indicators toward more strategic, impact-oriented measures.

The COBIT 2019 principles of performance management were adapted to public governance metrics. Traditional efficiency indicators (cost reduction, return on investment) were replaced by measures of transparency, service continuity, responsiveness, and institutional credibility. The KPIs defined in the CGR’s IT Service Model included dimensions such as service availability (>98%), user satisfaction, and compliance with information security and data protection regulations. These indicators were designed to strengthen decision-making and accountability rather than to maximize revenue or operational profit.

Figure 6 complements the maturity analysis by illustrating the relative performance of the governance families. The gap between EDM and the other domains makes visible the challenge of operationalizing governance intents into consistent service delivery and measurement practices across all units. This visual evidence reinforces the findings of Table 3 and confirms that transformation efforts must prioritize APO, BAI, DSS, and MEA capabilities to ensure effective fiscal oversight.

Figure 6.

Baseline maturity levels across COBIT 2019 governance families at the CGR.

Knowledge and Innovation Management. The emphasis of ITILv4 on continuous improvement and organizational learning was reframed as a mechanism for institutional capacity building. Knowledge management practices focused on documenting good practices, lessons learned, and process standardization to facilitate institutional memory and ensure continuity of oversight services. Innovation management was directed towards the sustainable modernization of public services, emphasizing open data, digital participation, and citizen-centered value generation.

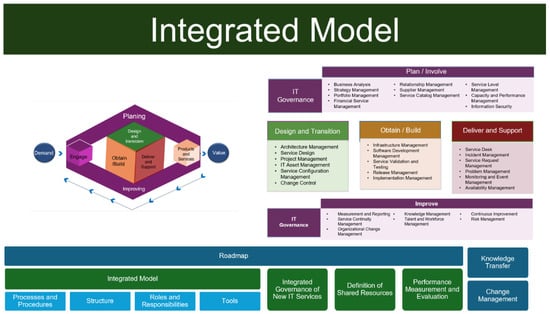

5. Service Integration Model

The presented service integration model constitutes the scientific artifact developed through the Design Science Research methodology described in Section 3. The model is designed to strengthen the efficiency, interoperability, and governance of IT services in CGR by integrating architectural, governance, and service-management practices derived from TOGAF, ITILv4, and COBIT. It provides a structured and replicable blueprint that aligns technological services with institutional objectives and mission-critical fiscal oversight functions.

5.1. Model Overview and Design Logic

The design of the model follows a layered and iterative structure grounded in enterprise architecture principles. Four macro-components—Engagement, Design and Transition, Obtain/Build, and Delivery and Support—constitute the core of the model. These components encapsulate the full lifecycle of IT services, from strategic alignment and planning to service development, operation, and continuous improvement. Figure 7 provides an integrated visualization of these elements.

Figure 7.

Proposed service integration model for SAIs. The figure highlights the four macro-components: Governance (COBIT), Architecture (TOGAF), Operations (ITIL), and the unified performance layer.

Each macro-component implements specific architectural decisions, governance mechanisms, and practices aligned with ITILv4:

- Engagement: In the context of CGR, this component implements the semi-structured feedback loops identified in the diagnosis.

- Design and Transition: Focuses on migrating legacy audit workflows into the new interoperable target state. By concentrating on these SAI-specific applications, the model avoids the generic treatment typical of standard ITIL implementations, as described in Section 2.

- Obtain/Build: Addresses infrastructure provisioning, software implementation, prototyping, and service validation. This component aligns with Technology Readiness Level (TRL) criteria and the Build–Test cycles of systems engineering.

- Delivery and Support: Ensures operational performance through incident management, service desk operation, request fulfillment, monitoring, and performance measurement. It corresponds to the “deliver and support” activity of the ITILv4 service value chain.

A continuous improvement loop integrates governance mechanisms such as risk management, service continuity, and performance evaluation. Knowledge transfer and organizational change practices facilitate sustainable adoption of technological innovations.

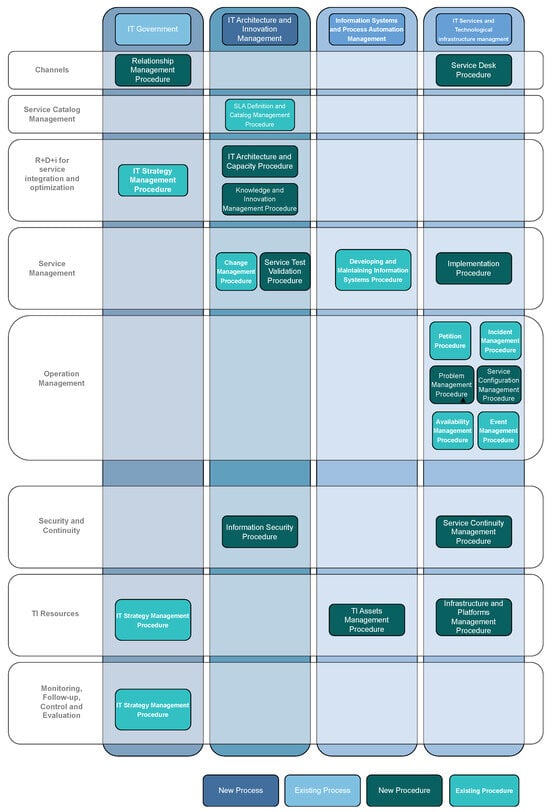

5.2. Architecture and Value Flow Model

The architectural design of the model is grounded in the ITILv4 service value chain, adapted to the institutional environment of CGR to optimize resources, strengthen interoperability, and enhance both employee and citizen experience. Figure 8 illustrates the value-flow representation of the model.

Figure 8.

Architecture flow and procedural connections. The diagram illustrates the life cycle of a service request: from the business layer (strategy/policy) through the data layer (audit evidence) to the technology layer (infrastructure).

To clarify the contribution of the model, Figure 8 distinguishes between pre-existing institutional assets and new architectural components. The technology layer (cloud and on-premise servers) and certain application silos are existing components. In contrast, the integration layer, the unified service catalog, and the cross-cutting governance APIs are new artifacts designed in this study to bridge previously isolated systems. These new components are what enable the transition from fragmented IT to an integrated service model.
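The split between existing silos and the new integration layer can be illustrated with a minimal Python sketch. It assumes that each pre-existing application silo can be wrapped by an adapter function and that the unified service catalog maps every published service to exactly one backend silo. The class names, the service identifier `AUD-01`, and the `legacy_audit_app` silo are hypothetical and do not describe CGR's actual systems or interfaces.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical adapter type: wraps a pre-existing silo behind one call signature.
SiloAdapter = Callable[[dict], str]


@dataclass
class CatalogEntry:
    """A service published in the unified catalog (illustrative fields)."""
    service_id: str
    description: str
    owner_domain: str  # one of the four strategic domains
    backend: str       # name of the existing silo that fulfils the request


@dataclass
class IntegrationLayer:
    """New component: a single entry point placed in front of existing silos."""
    adapters: Dict[str, SiloAdapter] = field(default_factory=dict)
    catalog: Dict[str, CatalogEntry] = field(default_factory=dict)
    trace: List[str] = field(default_factory=list)

    def register_silo(self, name: str, adapter: SiloAdapter) -> None:
        self.adapters[name] = adapter

    def publish(self, entry: CatalogEntry) -> None:
        # A service can only be catalogued once its backend silo is reachable.
        if entry.backend not in self.adapters:
            raise ValueError(f"no adapter registered for silo {entry.backend!r}")
        self.catalog[entry.service_id] = entry

    def request(self, service_id: str, payload: dict) -> str:
        entry = self.catalog[service_id]  # raises KeyError for uncatalogued services
        result = self.adapters[entry.backend](payload)
        # Every routed request is recorded, supporting end-to-end traceability.
        self.trace.append(f"{service_id}->{entry.backend}:{result}")
        return result


layer = IntegrationLayer()
layer.register_silo("legacy_audit_app", lambda p: f"case {p['case']} opened")
layer.publish(CatalogEntry("AUD-01", "Open an audit case",
                           "Information Systems and Automation", "legacy_audit_app"))
print(layer.request("AUD-01", {"case": 17}))  # case 17 opened
```

In this sketch, routing every request through the catalog instead of letting users call each silo directly is what provides a single point for traceability and governance controls; the silos themselves are left unchanged.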

The architecture is organized around four strategic domains:

- IT Governance: guides decision-making through policies, risk management, continuity planning, and accountabilities defined in COBIT.

- Architecture and Innovation Management: orchestrates the design of organizational, data, application, and technology architectures following TOGAF ADM.

- Information Systems and Automation: manages core platforms, business processes, and interoperability services.

- IT Services and Infrastructure: oversees operational delivery, infrastructure provisioning, and reliability management.

Across these domains, eight operational stages structure the flow of value creation: (1) channels, (2) service catalog, (3) research, development, and innovation (R&D&I), (4) service management, (5) operation, (6) security and continuity, (7) IT resources, and (8) monitoring, follow-up, control, and evaluation.
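The ordering of the eight stages can be made explicit with a small Python sketch of a service request moving through the value flow. It assumes that a request may skip stages it does not need (for example, R&D&I for a routine request) but never moves backwards within a single pass; the `ServiceRequest` class and the enum member names are hypothetical illustrations, not part of the model's specification.

```python
from enum import IntEnum
from typing import List


class Stage(IntEnum):
    """The eight operational stages of the value flow, in the order listed."""
    CHANNELS = 1
    SERVICE_CATALOG = 2
    RDI = 3  # research, development, and innovation (R&D&I)
    SERVICE_MANAGEMENT = 4
    OPERATION = 5
    SECURITY_AND_CONTINUITY = 6
    IT_RESOURCES = 7
    MONITORING_AND_EVALUATION = 8  # monitoring, follow-up, control, and evaluation


class ServiceRequest:
    """Hypothetical request object that records the stages it passes through."""

    def __init__(self, request_id: str) -> None:
        self.request_id = request_id
        self.history: List[Stage] = []

    def advance(self, stage: Stage) -> None:
        # Assumption: unneeded stages may be skipped, but order is preserved.
        if self.history and stage <= self.history[-1]:
            raise ValueError(f"{stage.name} cannot follow {self.history[-1].name}")
        self.history.append(stage)

    def is_complete(self) -> bool:
        # A pass ends once monitoring and evaluation are reached; their
        # findings would feed the next continuous-improvement cycle.
        return bool(self.history) and self.history[-1] is Stage.MONITORING_AND_EVALUATION


req = ServiceRequest("REQ-001")
for s in (Stage.CHANNELS, Stage.SERVICE_CATALOG, Stage.SERVICE_MANAGEMENT,
          Stage.OPERATION, Stage.SECURITY_AND_CONTINUITY, Stage.IT_RESOURCES,
          Stage.MONITORING_AND_EVALUATION):
    req.advance(s)
print(req.is_complete())  # True
```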

6. Assessment and Validation Framework

The performance assessment framework evaluates the extent to which the presented service integration model strengthens efficiency, interoperability, and governance of IT services within CGR. Consistent with the Design Science Research methodology, the assessment focuses on determining how the artifact performs within its intended environment and whether it addresses the organizational challenges identified in the problem space. The evaluation integrates quantitative indicators, qualitative evidence, and institutional deliverables, ensuring a multi-perspective validation of the model’s effectiveness.

6.1. Evaluation Dimensions

Four analytical dimensions were defined to assess the impact of the model and its alignment with the strategic objectives of CGR:

- People and Organization: user satisfaction, usability, adoption levels, and organizational alignment.

- Information and Technology: system performance, data quality, security controls, interoperability, and platform reliability.

- Partners and Suppliers: contractual performance, risk management, and alignment with service levels defined in SLAs.

- Value and Process Flows: consistency of service workflows, process standardization, and governance indicators linked to fiscal oversight functions.

These dimensions mirror the four dimensions of service management in ITILv4 and are complemented by the COBIT evaluation of governance and management capabilities.

6.2. Key Performance Indicators

A balanced scorecard of Key Performance Indicators (KPIs) was defined across three strategic areas: administrative efficiency, technological interoperability, and user satisfaction. KPIs include:

- Administrative Efficiency: average service response time, mean time to repair (MTTR), SLA compliance rate.

- Technological Interoperability: system integration rate, data synchronization reliability, service availability.

- User Satisfaction and Adoption: perceptions of service quality, system usability, communication effectiveness, and overall satisfaction scores.
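The administrative-efficiency and availability KPIs listed above can be computed from incident records along the following lines. This Python sketch assumes that each incident carries opening, first-response, and resolution timestamps plus an SLA resolution target, and it measures MTTR from ticket opening to resolution; other MTTR conventions (for example, from failure detection to restoration) would change the start point. The field names and the two incidents in the example are synthetic and are not CGR pilot data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Incident:
    opened: datetime
    first_response: datetime
    resolved: datetime
    sla_target: timedelta  # resolution time agreed in the SLA (assumed field)


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def avg_response_hours(incidents: List[Incident]) -> float:
    return sum(_hours(i.first_response - i.opened) for i in incidents) / len(incidents)


def mttr_hours(incidents: List[Incident]) -> float:
    # Mean time to repair, measured here from ticket opening to resolution.
    return sum(_hours(i.resolved - i.opened) for i in incidents) / len(incidents)


def sla_compliance_pct(incidents: List[Incident]) -> float:
    met = sum(1 for i in incidents if i.resolved - i.opened <= i.sla_target)
    return 100 * met / len(incidents)


def availability_pct(period: timedelta, downtime: timedelta) -> float:
    # Share of the observation period during which the service was up.
    return 100 * (1 - downtime / period)


t0 = datetime(2024, 9, 2, 8, 0)
incidents = [
    Incident(t0, t0 + timedelta(minutes=30), t0 + timedelta(hours=4), timedelta(hours=8)),
    Incident(t0, t0 + timedelta(hours=1), t0 + timedelta(hours=24), timedelta(hours=8)),
]
print(mttr_hours(incidents), sla_compliance_pct(incidents))  # 14.0 50.0
```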

To correct the identified imbalance, in which current metrics are heavily skewed toward operational performance, the model introduces a strategic pivot in performance management. This pivot shifts the focus from technical “uptime” to Strategic Fiscal-Impact KPIs that align IT performance with the constitutional mandate of CGR. Specifically, three new categories are proposed for the next implementation phase:

- Audit velocity: measures the reduction in time required to compile fiscal evidence through automated service integration.

- Forensic integrity rate: quantifies the percentage of digital artifacts with a cryptographically verified chain of custody.

- Public value impact: correlates IT service availability with the successful recovery of public funds in high-priority sectors.

The purpose of these categories is to ensure that the architecture does not exist merely to support IT but directly enhances the efficiency of fiscal oversight.

These indicators provide a quantitative basis for evaluating institutional performance and for benchmarking improvement after the full deployment of the integration model. Unified ITSM tools facilitate transversal measurement and real-time monitoring of service performance.
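Of the three proposed categories, the forensic integrity rate is the most mechanical to compute. One common way to make a chain of custody cryptographically verifiable is a hash chain, in which each custody event is hashed together with the hash of the previous event, so that altering any earlier event invalidates every later link. The Python sketch below assumes that technique with SHA-256; the event fields and artifact identifiers are hypothetical. A hash chain detects edits but not a wholesale recomputation of the chain, so a production design would likely add digital signatures or trusted timestamps.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first custody event


def link_hash(prev_hash: str, event: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes identically.
    payload = prev_hash + json.dumps(event, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(chain: List[dict], event: dict) -> None:
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "hash": link_hash(prev, event)})


def chain_is_valid(chain: List[dict]) -> bool:
    prev = GENESIS
    for link in chain:
        if link["hash"] != link_hash(prev, link["event"]):
            return False
        prev = link["hash"]
    return bool(chain)  # an artifact with no custody record is not verified


def forensic_integrity_rate(custody: Dict[str, List[dict]]) -> float:
    """Percentage of artifacts whose custody chain verifies end to end."""
    if not custody:
        return 0.0
    verified = sum(1 for chain in custody.values() if chain_is_valid(chain))
    return 100 * verified / len(custody)


custody: Dict[str, List[dict]] = {"EV-1": [], "EV-2": []}
append_event(custody["EV-1"], {"action": "collected", "by": "auditor-1"})
append_event(custody["EV-1"], {"action": "transferred", "to": "evidence-repository"})
append_event(custody["EV-2"], {"action": "collected", "by": "auditor-2"})
custody["EV-2"][0]["event"]["by"] = "unknown"  # simulated tampering
print(forensic_integrity_rate(custody))  # 50.0
```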

During the pilot phase (Q3–Q4 2024), KPIs were tracked through the Integrated Management System (SIG) of CGR. Data were extracted directly from the ITSM tool (GLPI) and cross-referenced with logs from the DIARI data center to ensure accuracy. For objectivity, an independent validation was performed by the internal control office and the IT governance committee. These bodies acted as “third-party” evaluators, verifying that the reported improvements in MTTR and service availability were not technical anomalies but reflected actual operational stability. This independent oversight strengthens the robustness of the pilot results and mitigates the developer bias often found in DSR artifact evaluation.
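Cross-referencing ITSM records with data-center logs can be framed as an interval-reconciliation problem. The Python sketch below assumes that both sources have already been exported and normalized into (start, end) outage windows; it does not use any GLPI API, and the record shape and timestamps are hypothetical. Tickets with no overlapping log evidence may indicate misreported incidents, while logged outages with no ticket indicate registration gaps that would bias MTTR and availability figures.

```python
from datetime import datetime
from typing import List, Tuple

# An outage window as (start, end); both sources are assumed to be normalized
# to this shape beforehand (hypothetical export formats).
Window = Tuple[datetime, datetime]


def overlaps(a: Window, b: Window) -> bool:
    # Half-open intervals [start, end) intersect when each starts before the other ends.
    return a[0] < b[1] and b[0] < a[1]


def reconcile(tickets: List[Window], logs: List[Window]) -> Tuple[List[Window], List[Window]]:
    """Return (tickets with no supporting log evidence, logged outages with no ticket)."""
    unsupported = [tw for tw in tickets if not any(overlaps(tw, lw) for lw in logs)]
    untracked = [lw for lw in logs if not any(overlaps(lw, tw) for tw in tickets)]
    return unsupported, untracked


def d(hour: int, minute: int = 0) -> datetime:
    return datetime(2024, 10, 1, hour, minute)


tickets = [(d(8), d(9)), (d(14), d(15))]
logs = [(d(8, 10), d(8, 50)), (d(20), d(21))]
unsupported, untracked = reconcile(tickets, logs)
print(len(unsupported), len(untracked))  # 1 1
```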

6.3. Analytical Evidence and Validation Results

Empirical validation of the model was achieved by triangulating field diagnostics, survey data, maturity assessments, and architectural analyses. Qualitative evidence from the interviews was triangulated with survey data to define the Improvement Roadmap, specifically the prioritization of service availability targets (≥98%) and MTTR reductions.

Four analytical components structure the evidence base:

- Infrastructure Diagnosis: Identified documentation gaps, absence of standardized monitoring mechanisms, and heterogeneous hardware conditions across regional offices.

- User Experience Analysis: Revealed fragmentation of IT services between central and territorial offices, inconsistent communication channels, and limited visibility of service procedures.

- Maturity Assessment: ITILv4 and ISO/IEC 20000-1 evaluations rated the IT service management of CGR at Level 2, with Technology Readiness Levels (TRL) of five to six, indicating functional prototypes and controlled pilots ready for integration.

- Improvement Roadmap: Defined measurable targets such as service availability above 98%, MTTR below 24 h, periodic SLA reviews, and progressive standardization of operational workflows.
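Because the roadmap targets are explicit, checking measured values against them is straightforward. The Python sketch below flags offices that miss the availability or MTTR targets; it assumes per-office measurements are available as (availability %, MTTR in hours) pairs, treats exactly 98% availability as on target (the ≥98% reading), and uses hypothetical office names and values.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RoadmapTargets:
    min_availability_pct: float = 98.0  # service availability target
    max_mttr_hours: float = 24.0        # MTTR must stay below this value


def offices_off_target(measurements: Dict[str, Tuple[float, float]],
                       targets: RoadmapTargets = RoadmapTargets()) -> Dict[str, List[str]]:
    """Map each office that misses a target to the names of the missed targets.

    `measurements` maps an office name to (availability %, MTTR in hours).
    """
    report: Dict[str, List[str]] = {}
    for office, (availability, mttr) in measurements.items():
        missed = []
        if availability < targets.min_availability_pct:
            missed.append("availability")
        if mttr >= targets.max_mttr_hours:  # "below 24 h" means 24 h itself misses
            missed.append("mttr")
        if missed:
            report[office] = missed
    return report


sample = {"Central": (99.1, 6.0), "Regional A": (97.5, 30.0), "Regional B": (98.6, 26.0)}
print(offices_off_target(sample))
# {'Regional A': ['availability', 'mttr'], 'Regional B': ['mttr']}
```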

These findings confirm the relevance of the model and provide baseline conditions for subsequent operational evaluations once full deployment is completed.

6.4. Framework Alignment and Institutional Outputs

The application of TOGAF, ITILv4, and COBIT created a coordinated set of architectural, operational, and governance deliverables. Table 6 summarizes the alignment between frameworks, implementation activities, and institutional outputs.

Table 6.

Alignment of frameworks and institutional deliverables within CGR.

6.5. Institutional Impact and Readiness

The assessment confirms that the model enhances organizational readiness for integrated IT service management by:

- Establishing a unified architectural vision aligned with the mission of CGR,

- Reducing fragmentation in processes and data flows,

- Improving traceability, transparency, and governance mechanisms,

- Enabling a structured transition toward digital maturity.

While the deployment phase is ongoing, the validated baseline indicates that the model is technically feasible, operationally relevant, and capable of supporting the strategic objectives of CGR in interoperability, accountability, and digital transformation.

7. Discussion

The results presented in Section 4 provide empirical evidence of the heterogeneous maturity landscape within the technological and governance ecosystem of CGR. Table 3 and Figure 6 show that the COBIT governance families exhibit a marked imbalance: while the EDM domain reaches a relatively higher maturity level (2.60), reflecting structured decision-making mechanisms, APO, DSS, BAI, and MEA remain below 2.5. This indicates that governance intentions, although formalized at the strategic level, have not yet fully translated into standardized operational execution. This challenge is widely documented in the public-sector digital transformation literature, where institutional inertia and fragmented workflows often limit the adoption of governance frameworks.

The results of the pilot phase support the main hypothesis. By implementing the service integration model, CGR has begun to close the identified “measurement gap” (where 72% of metrics were operational). The shift toward Strategic Fiscal-Impact KPIs (Section 6.2) indicates that EA mediation can translate technical stability into institutional oversight capability, consistent with the theoretical expectation that architecture-driven integration is a primary driver of digital legitimacy in SAIs.

Operational performance results reinforce this diagnosis. As shown in Table 4 and Figure 5, the maturity of several ITILv4 practices (particularly Service Level Management, Incident Management, and Problem Management) remains below the levels required for stable, resilient service delivery. These weaknesses directly affect service continuity, monitoring capacity, and user experience. Survey feedback from institutional users further confirms these limitations, highlighting the need to improve SLA definition, incident routing, and root-cause analysis practices, which are critical for safeguarding the integrity of systems supporting fiscal oversight activities.

Beyond operational efficiency, digital transformation in fiscal oversight institutions must ultimately contribute to public-value outcomes. As a baseline for the integration model, a prioritized selection of indicators was extracted from the institution's comprehensive catalog of 51 IT service KPIs. Table 5 presents a representative subset of these indicators, specifically those most critical to the mission of fiscal oversight, and shows their status against the defined strategic targets. However, the distribution of indicators in Table 5 reveals that 72% of KPIs are operational, while only 6% are strategic. This imbalance limits the ability of CGR to demonstrate how IT service improvements strengthen transparency, accountability, and trust, which are key dimensions of fiscal governance. Increasing the proportion of mission-oriented indicators (e.g., audit cycle times, traceability levels, territorial equity metrics) would strengthen the institution's capacity to link technological performance with oversight results, an aspect emphasized in recent public-sector innovation studies.
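Rebalancing the indicator set can be planned with simple arithmetic. If a catalog has $n$ KPIs, $s$ of them strategic, and the target strategic share is $p$, the number $x$ of strategic KPIs to add must satisfy $(s + x)/(n + x) \ge p$, which rearranges to $x \ge (pn - s)/(1 - p)$. The Python sketch below computes tier shares and this minimum $x$; it assumes a third "tactical" tier for the remaining indicators (the text names only operational and strategic ones), and the counts in the example are hypothetical, not the contents of Table 5.

```python
from collections import Counter
from typing import Dict, Iterable


def tier_shares(tiers: Iterable[str]) -> Dict[str, float]:
    """Percentage of KPIs in each tier, rounded to one decimal place."""
    counts = Counter(tiers)
    total = sum(counts.values())
    return {tier: round(100 * n / total, 1) for tier, n in counts.items()}


def strategic_kpis_needed(strategic: int, total: int, target_pct: int) -> int:
    """Smallest x such that (strategic + x) / (total + x) >= target_pct / 100.

    With p = target_pct / 100, x >= (target_pct * total - 100 * strategic)
    / (100 - target_pct); integer arithmetic avoids rounding at the boundary.
    """
    if not 0 <= target_pct < 100:
        raise ValueError("target_pct must be in [0, 100)")
    numerator = target_pct * total - 100 * strategic
    if numerator <= 0:
        return 0  # target already met
    return -(-numerator // (100 - target_pct))  # ceiling division


sample = ["operational"] * 6 + ["tactical"] * 3 + ["strategic"]
print(tier_shares(sample))                # {'operational': 60.0, 'tactical': 30.0, 'strategic': 10.0}
print(strategic_kpis_needed(1, 10, 25))   # 2
```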

Taken together, these findings underscore the need for the service integration model proposed in this study. By integrating TOGAF for architectural standardization, COBIT for governance and measurement, and ITILv4 for service management, the model provides a unified, evidence-based framework capable of reducing process fragmentation and improving service traceability, interoperability, and governance alignment. Validation sessions conducted with the CGR Architecture and IT Governance Committees further strengthened institutional ownership, increasing the likelihood of sustainable adoption and long-term maturity advancement.

It is important to note that the present evaluation corresponds to the structuring and development phases of the model (Phases 1 to 3 described in Section 3.3). Full operational validation will take place during 2026, once the newly designed workflows, dashboards, and SLAs are implemented across all territorial offices. At that stage, improvements in availability (≥90%), MTTR (<4 h), incident resolution times, and user satisfaction will provide quantitative evidence of the effectiveness of the model. Future research should also compare the evolution of CGR with that of other fiscal oversight bodies in Latin America and OECD countries, promoting cross-institutional learning and testing the model's international transferability.

To mitigate the low maturity identified in the BAI and DSS domains, CGR has initiated a phased “remediation roadmap” (2025–2026). This involves moving from ad hoc software acquisition to a Service-Oriented Architecture (SOA) approach, in which new modules must pass a “maturity gate” before deployment.
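A maturity gate of this kind can be expressed as a checklist that a candidate module must satisfy before deployment. The Python sketch below is illustrative only: the text does not specify the gate's criteria, so the five checks (chosen to touch build/acquire concerns in BAI and delivery/support concerns in DSS), the `ModuleCandidate` type, and the module name are hypothetical. A criterion with no recorded evidence is treated as unmet.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical gate criteria; the actual CGR checklist is not given in the text.
DEFAULT_CRITERIA: Tuple[str, ...] = (
    "registered_in_service_catalog",
    "exposes_service_interface",  # consumable through the SOA integration layer
    "sla_defined",
    "monitoring_configured",
    "incident_runbook_documented",
)


@dataclass
class ModuleCandidate:
    name: str
    evidence: Dict[str, bool] = field(default_factory=dict)


def maturity_gate(module: ModuleCandidate,
                  criteria: Tuple[str, ...] = DEFAULT_CRITERIA) -> Tuple[bool, List[str]]:
    """Return (passed, missing criteria) for a candidate module."""
    missing = [c for c in criteria if not module.evidence.get(c, False)]
    return (not missing, missing)


candidate = ModuleCandidate("audit-evidence-upload", {
    "registered_in_service_catalog": True,
    "exposes_service_interface": True,
    "sla_defined": False,
    "monitoring_configured": True,
})
print(maturity_gate(candidate))
# (False, ['sla_defined', 'incident_runbook_documented'])
```

Returning the list of missing criteria, rather than only a pass/fail flag, lets the gate double as a remediation checklist for the team that owns the module.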