Explainable Recommendation of Software Vulnerability Repair Based on Metadata Retrieval and Multifaceted LLMs

Abstract

1. Introduction

- RQ1: Which embedding and retrieval factors affect LLM-generated recommendations of code-repair strategies? We treat data characteristics as the key influence on embedding and retrieval quality. We develop and evaluate retrieval strategies that handle these characteristics in different ways, and use them as the basis for quantitatively measuring the retrieval accuracy of each embedding strategy.

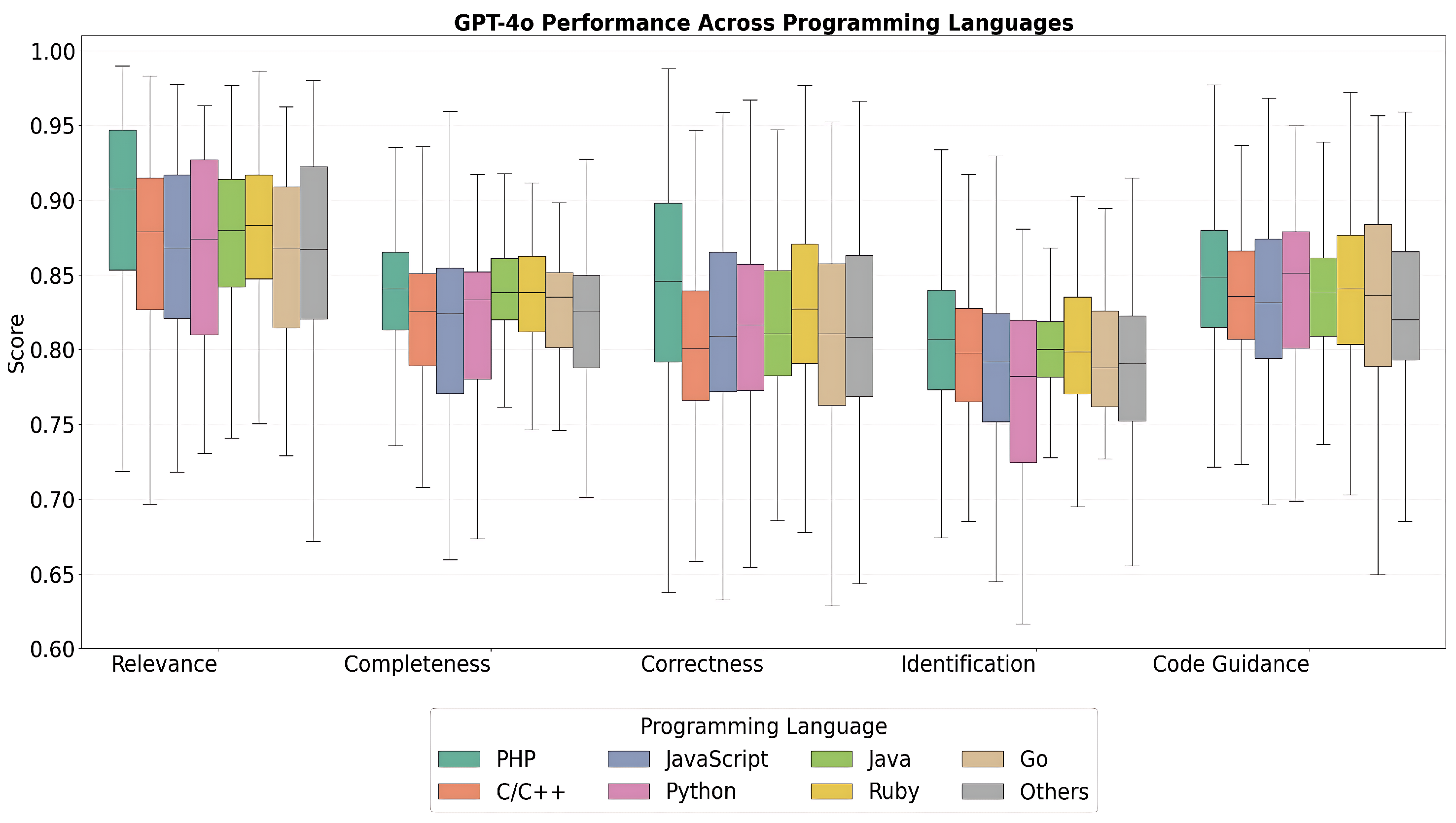

- RQ2: Does the proposed code vulnerability recommendation pipeline operate in a language-agnostic manner? We investigate whether the repair recommendations generated for different programming languages are of consistent quality, with no particular language over- or under-performing.

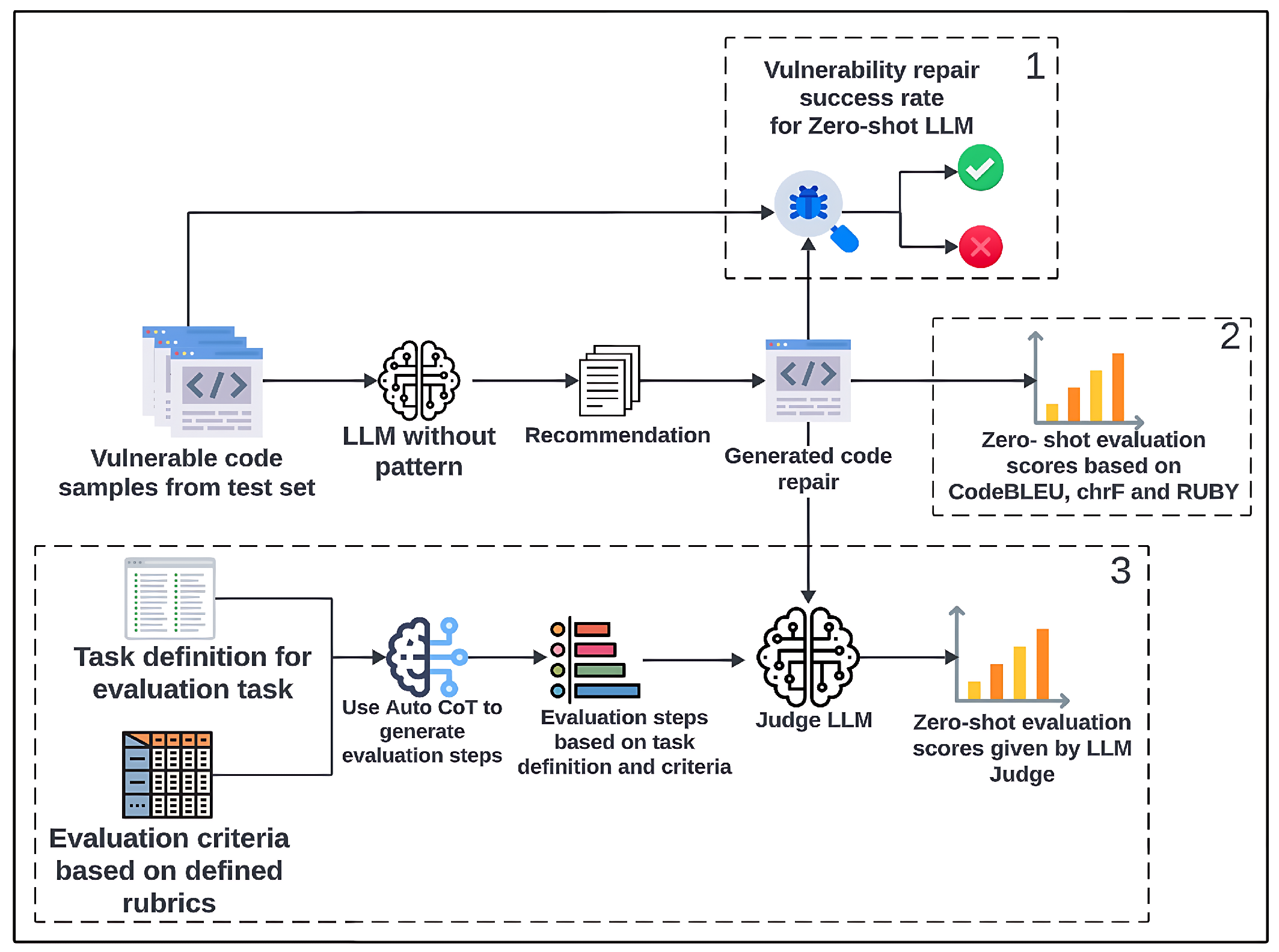

- RQ3: How do different evaluation methods affect the assessment of code-repair recommendations? We investigate the limitations of reference-based metrics such as CodeBLEU, chrF, and RUBY for assessing code repairs derived from LLM recommendations. We also investigate whether an LLM-as-a-Judge can evaluate the recommendations directly, overcoming the limitations of these state-of-the-art (SOTA) metrics and aligning with the manual human inspection process.

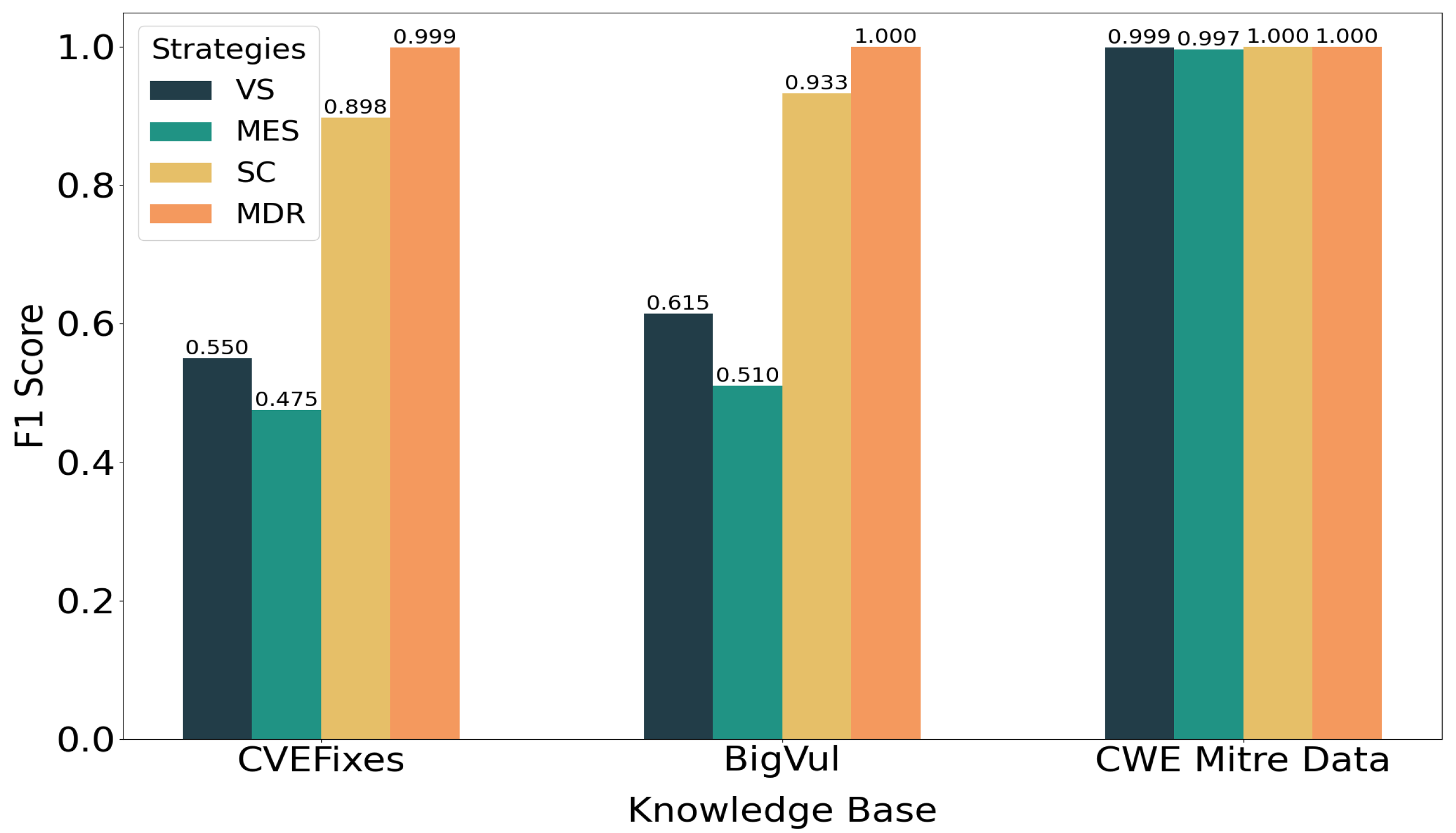
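
To make the concern behind RQ3 concrete, the following is a minimal sketch of a character n-gram F-score in the spirit of chrF. It is a simplification written for illustration, not the reference implementation: whitespace is dropped, precision and recall are averaged over n-gram orders 1 to 6, and `beta=2.0` is an assumed default. The function names and the two code snippets are hypothetical.

```python
from collections import Counter


def char_ngrams(text, n):
    """Count character n-grams after removing whitespace (a simplification)."""
    s = "".join(text.split())
    return Counter(s[i:i + n] for i in range(len(s) - n + 1))


def simple_chrf(candidate, reference, max_n=6, beta=2.0):
    """Simplified chrF-style score: F-beta over averaged n-gram precision/recall."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        cand = char_ngrams(candidate, n)
        ref = char_ngrams(reference, n)
        if not cand or not ref:
            continue  # one of the strings is shorter than n characters
        overlap = sum((cand & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(cand.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * p * r / (b2 * p + r)


# Hypothetical reference fix and an alternative fix that also adds a bounds check.
reference_fix = "if (len <= sizeof(buf)) memcpy(buf, src, len);"
alternative_fix = "if (n > sizeof(dest)) return -1; memcpy(dest, src, n);"

same = simple_chrf(reference_fix, reference_fix)
different = simple_chrf(alternative_fix, reference_fix)
```

An identical repair scores 1.0, while the alternative repair scores lower purely because its surface form differs, even though it also guards the copy. That gap between textual overlap and repair intent is the kind of limitation RQ3 examines.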

- Code Retrieval Strategies: We explore and evaluate a set of retrieval strategies, including vanilla, metadata embedding, segmented context, and metadata-driven retrieval, that capture data characteristics of the knowledge bases such as token overlap, metadata uniqueness, syntactic diversity, and semantic similarity. We assess the impact of each strategy on recommendation quality to determine which is best suited to our use case.

- LLM-based Pipeline for Vulnerability Repair Recommendations: We propose a retrieval and augmentation pipeline that retrieves domain-specific contextual information from an open knowledge base, adds the retrieved context to the LLM prompts, and generates actionable recommendations.

- LLM-based Recommendation Assessment: We use an LLM-as-a-Judge framework to evaluate recommendations against criteria aligned with the manual inspection process. Our evaluation spans multiple scenarios, multiple LLMs, and a diverse set of metrics, providing a robust assessment of recommendation quality.
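
The retrieve-then-augment idea in the contributions above can be sketched as follows. This is one plausible reading, not the authors' implementation: the knowledge-base entries, field names, and the `retrieve` and `build_prompt` helpers are hypothetical, and a token-count cosine similarity stands in for a real embedding model. The metadata-driven step is modelled here as filtering candidates by their CWE label before ranking them.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge-base entries; IDs and fields are illustrative only.
KNOWLEDGE_BASE = [
    {"id": "KB-1", "cwe": "CWE-119",
     "vulnerable": "memcpy(buf, src, len);",
     "fix": "if (len <= sizeof(buf)) memcpy(buf, src, len);"},
    {"id": "KB-2", "cwe": "CWE-476",
     "vulnerable": "return ptr->value;",
     "fix": "if (ptr == NULL) return -1; return ptr->value;"},
    {"id": "KB-3", "cwe": "CWE-119",
     "vulnerable": "strcpy(dst, src);",
     "fix": "strncpy(dst, src, sizeof(dst) - 1);"},
]


def tokenize(code):
    """Split code into identifiers and single punctuation characters."""
    return re.findall(r"[A-Za-z_]\w*|\S", code)


def cosine(tokens_a, tokens_b):
    """Cosine similarity of token-count vectors (stand-in for embeddings)."""
    ca, cb = Counter(tokens_a), Counter(tokens_b)
    dot = sum(ca[t] * cb[t] for t in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * \
        math.sqrt(sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0


def retrieve(query_code, cwe, k=1):
    """Filter by CWE metadata first, then rank the survivors by similarity."""
    candidates = [e for e in KNOWLEDGE_BASE if e["cwe"] == cwe]
    query = tokenize(query_code)
    ranked = sorted(candidates,
                    key=lambda e: cosine(query, tokenize(e["vulnerable"])),
                    reverse=True)
    return ranked[:k]


def build_prompt(query_code, cwe, retrieved):
    """Add the retrieved examples to the prompt that would be sent to an LLM."""
    context = "\n".join(
        f"- {e['id']} ({e['cwe']}): {e['vulnerable']} -> {e['fix']}"
        for e in retrieved)
    return (f"Vulnerable code ({cwe}):\n{query_code}\n\n"
            f"Similar known fixes:\n{context}\n\n"
            "Recommend a repair and explain it.")


hits = retrieve("memcpy(out, in, n);", "CWE-119", k=1)
prompt = build_prompt("memcpy(out, in, n);", "CWE-119", hits)
```

Filtering on metadata before ranking keeps entries with a different weakness class out of the prompt, whatever their textual similarity. The generation step itself is omitted because it depends on the chosen LLM.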

2. Background and Related Works

3. Retrieval and Augmentation Strategy for Code Repair Recommendations

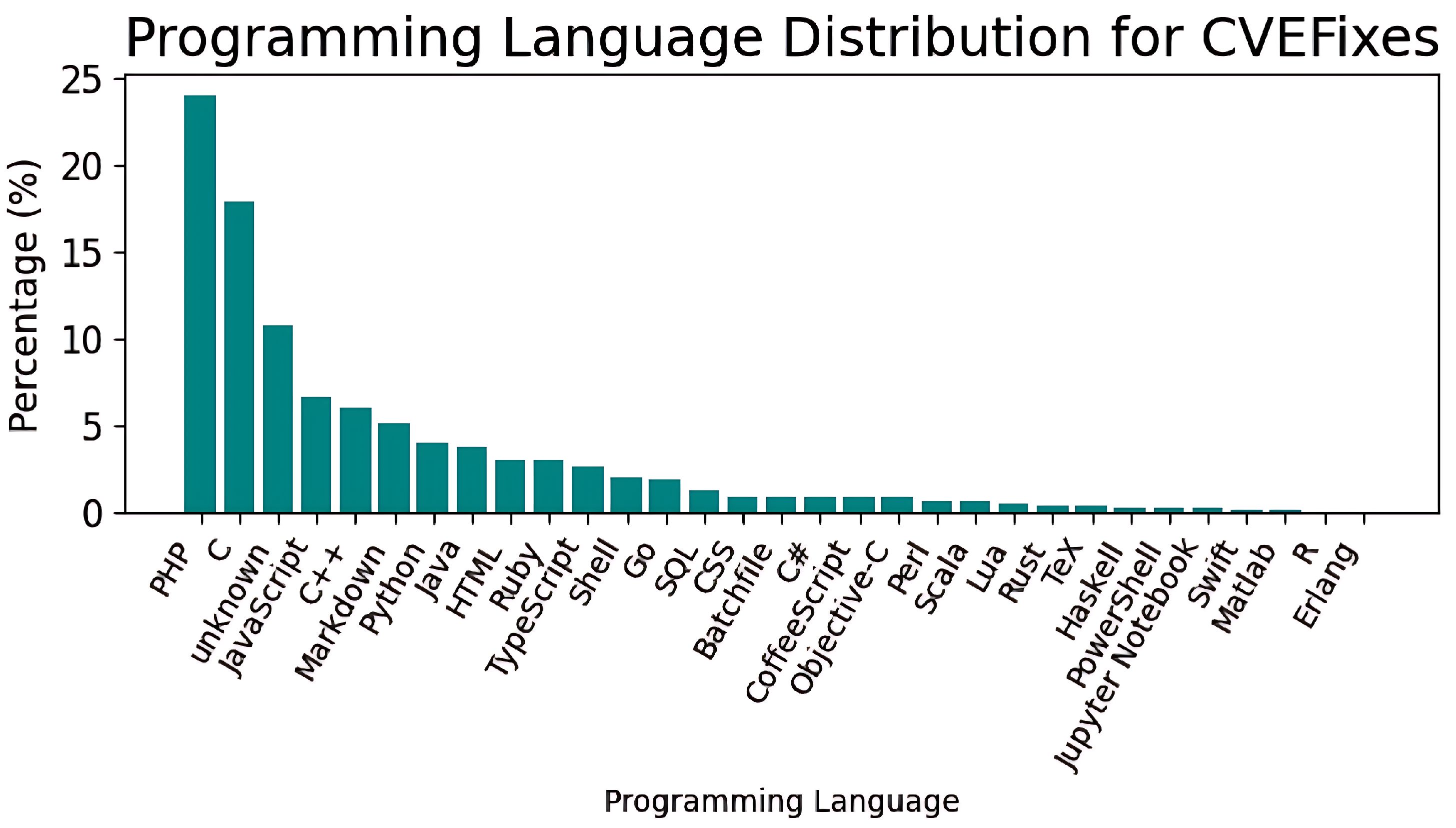

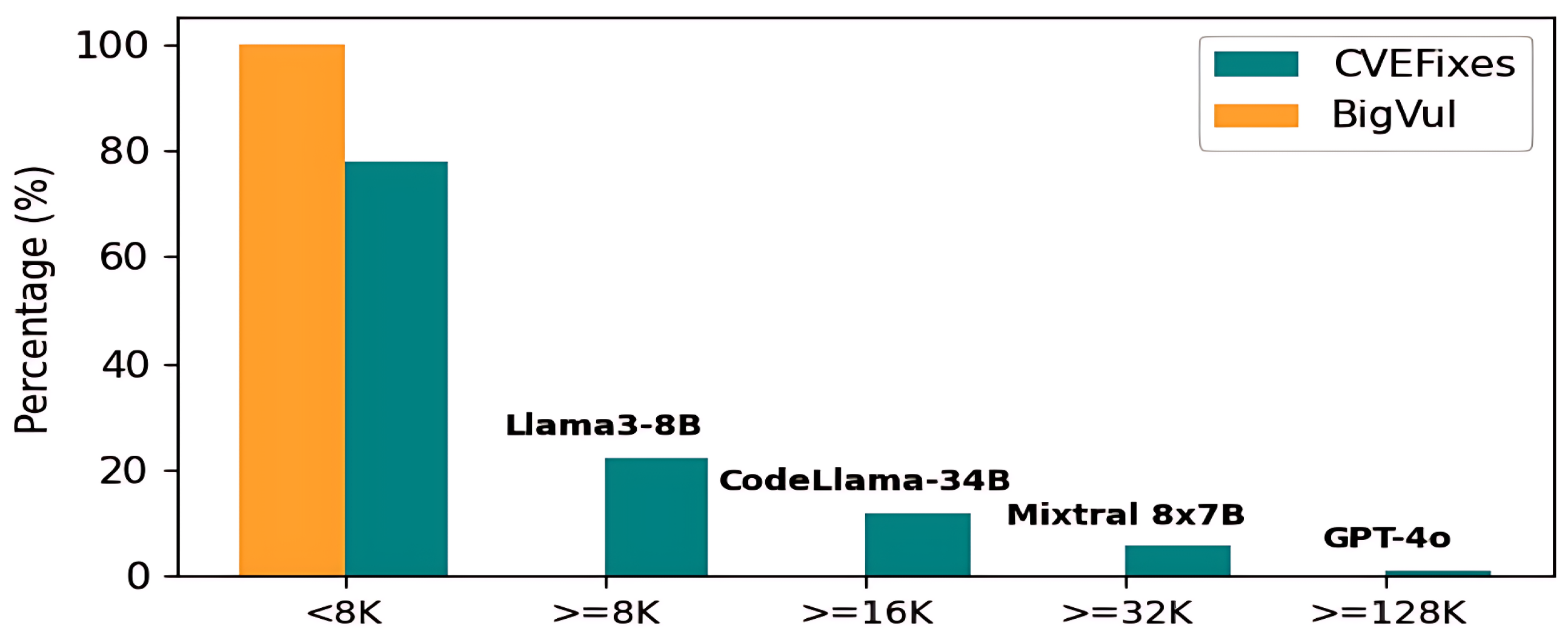

3.1. Data Characteristic Analysis

3.2. Retrieval and Augmentation Strategies

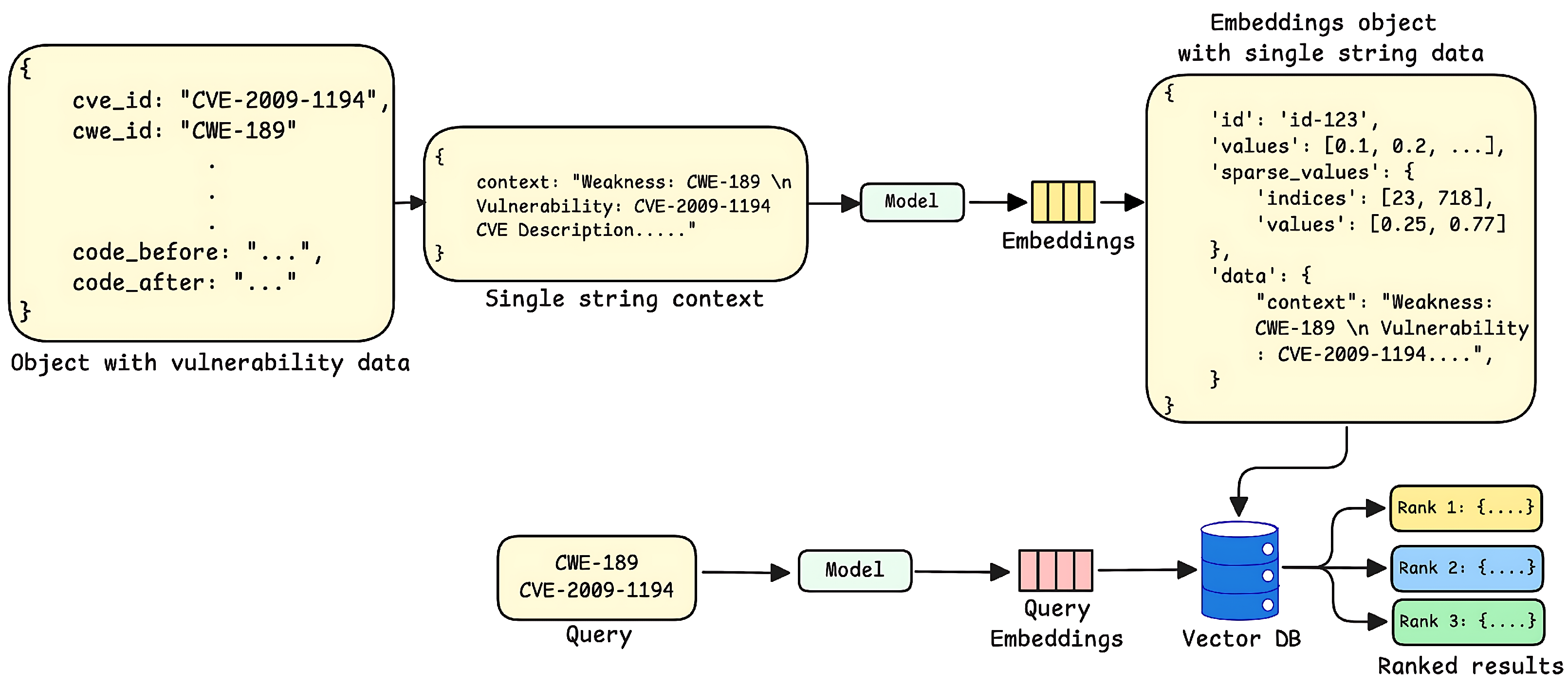

3.2.1. Vanilla Strategy

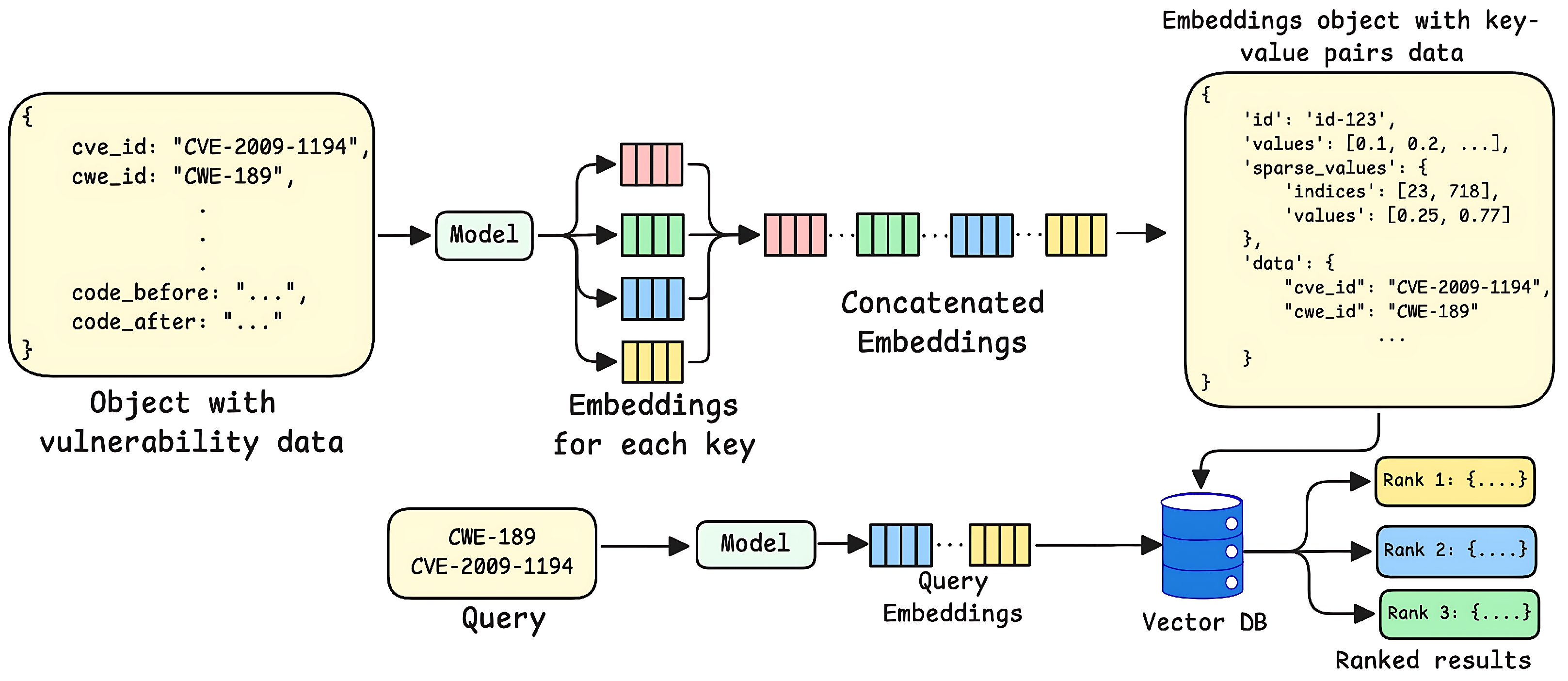

3.2.2. Metadata Embedding Strategy

3.2.3. Segmented Context Strategy

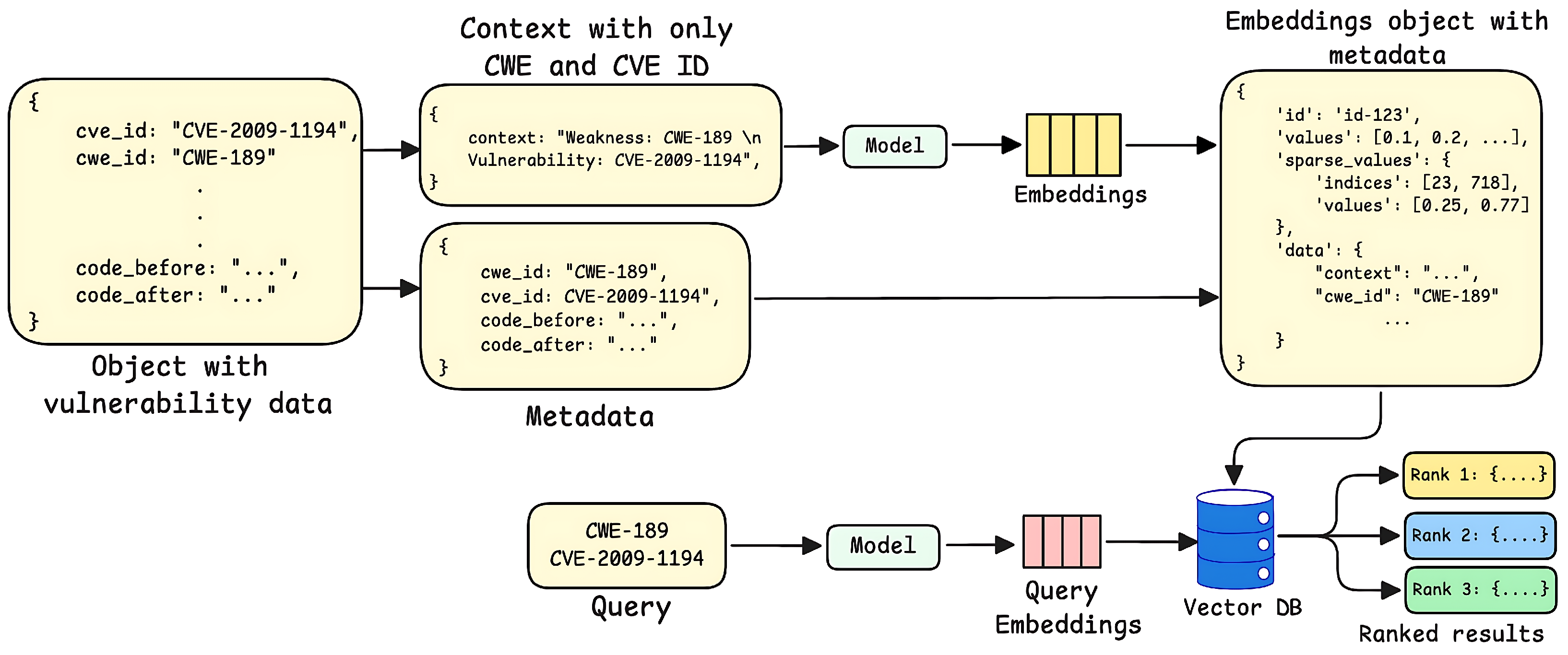

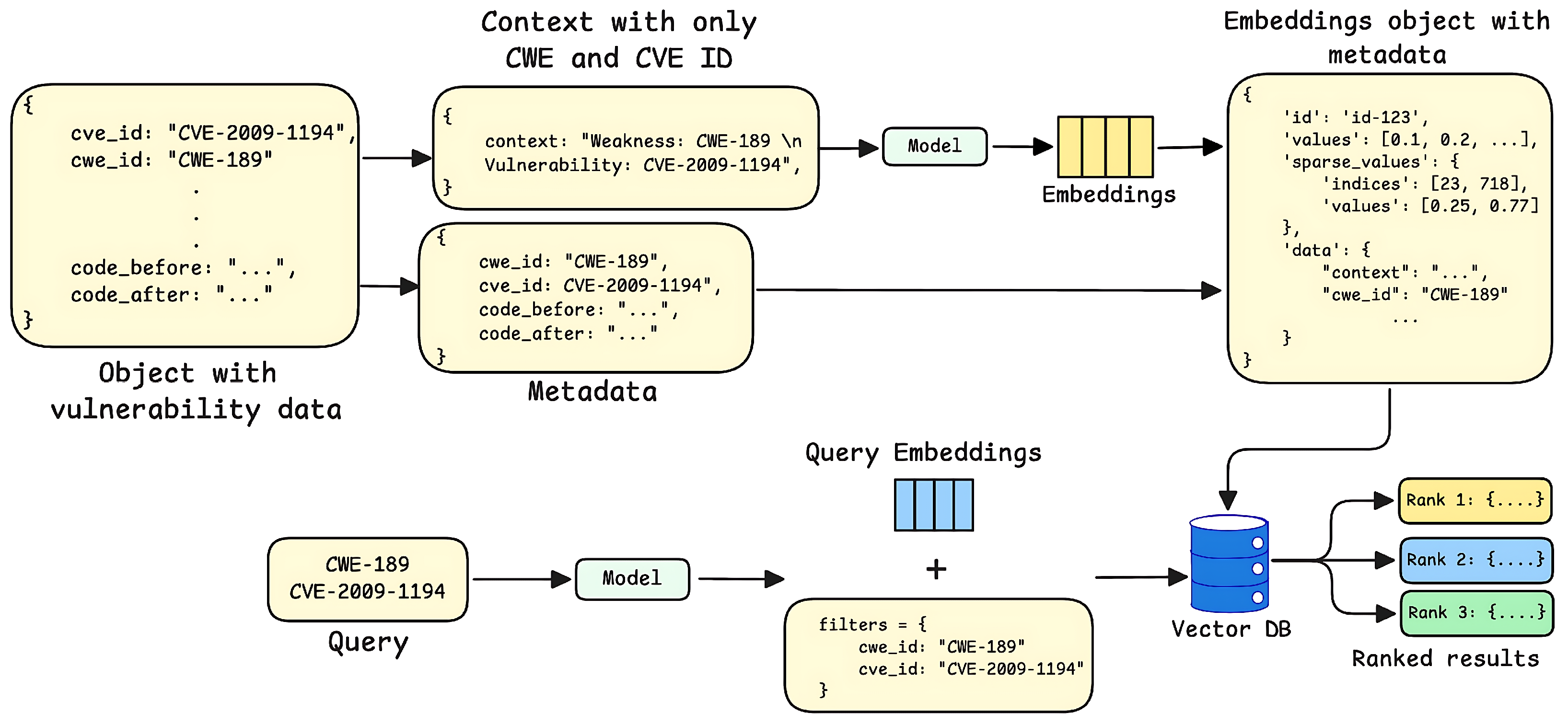

3.2.4. Metadata-Driven Retrieval Strategy

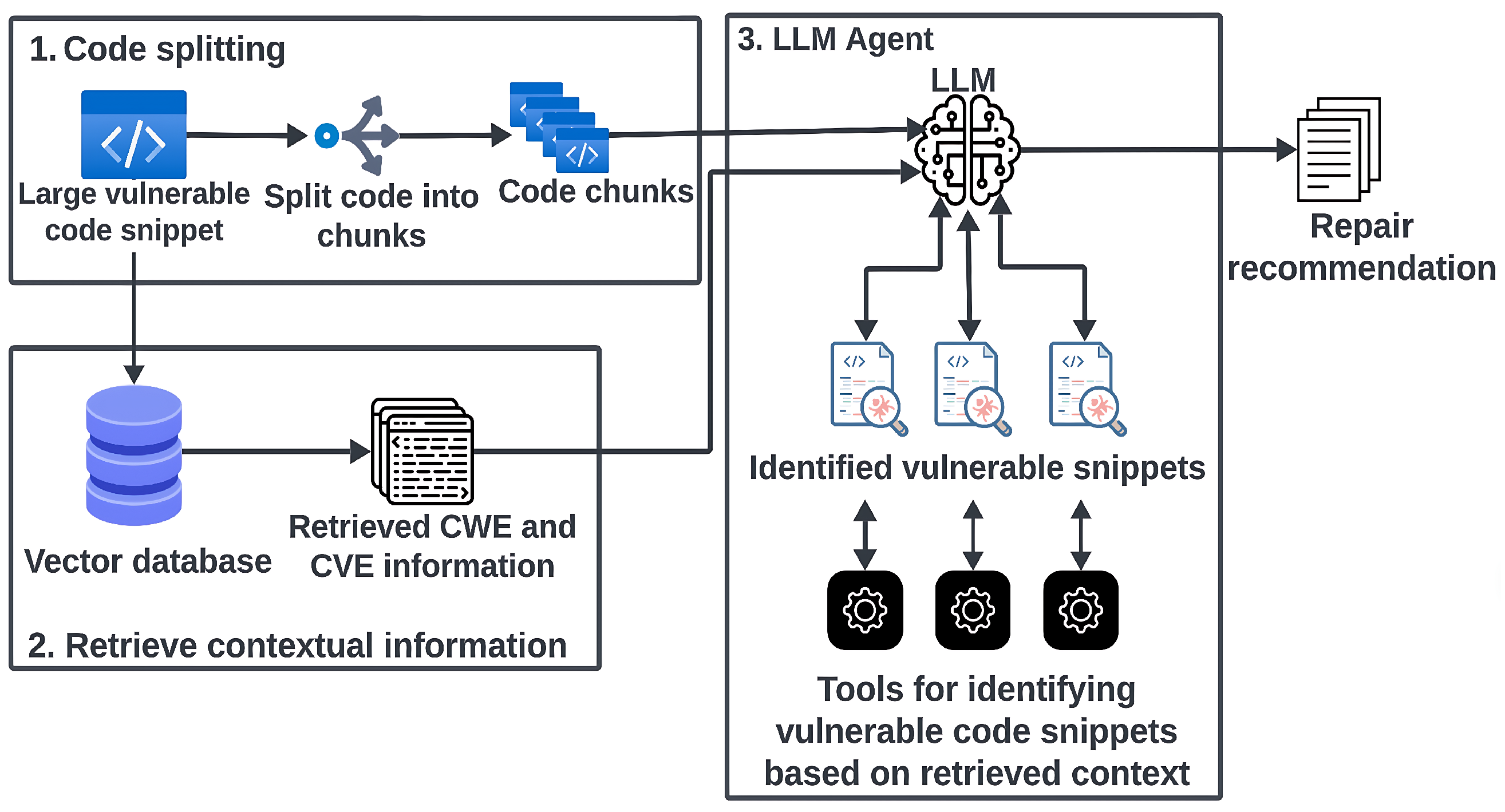

3.3. The Code Repair Recommendation Pipeline

3.3.1. Relevant Context Retrieval

3.3.2. Code Pre-Processing and Chunking

3.3.3. Recommendation Generation

4. Experimental Setup

4.1. Baseline Establishment

4.2. The Each-Choice-Coverage Strategy of Selecting LLMs

4.2.1. High-Performance Proprietary Models

4.2.2. Instruction-Tuned Open-Source Models

4.2.3. Mixture-of-Experts Model

4.2.4. Code-Specialized Models

4.2.5. Extension to Other Models

4.3. Reference-Based Metrics Evaluation of the Repaired Code

4.4. Supervised Fine Tuning vs. Retrieval Strategies

5. Identifying Limitation of Metrics Based Assessment

5.1. Manual Inspection for Metrics Limitation

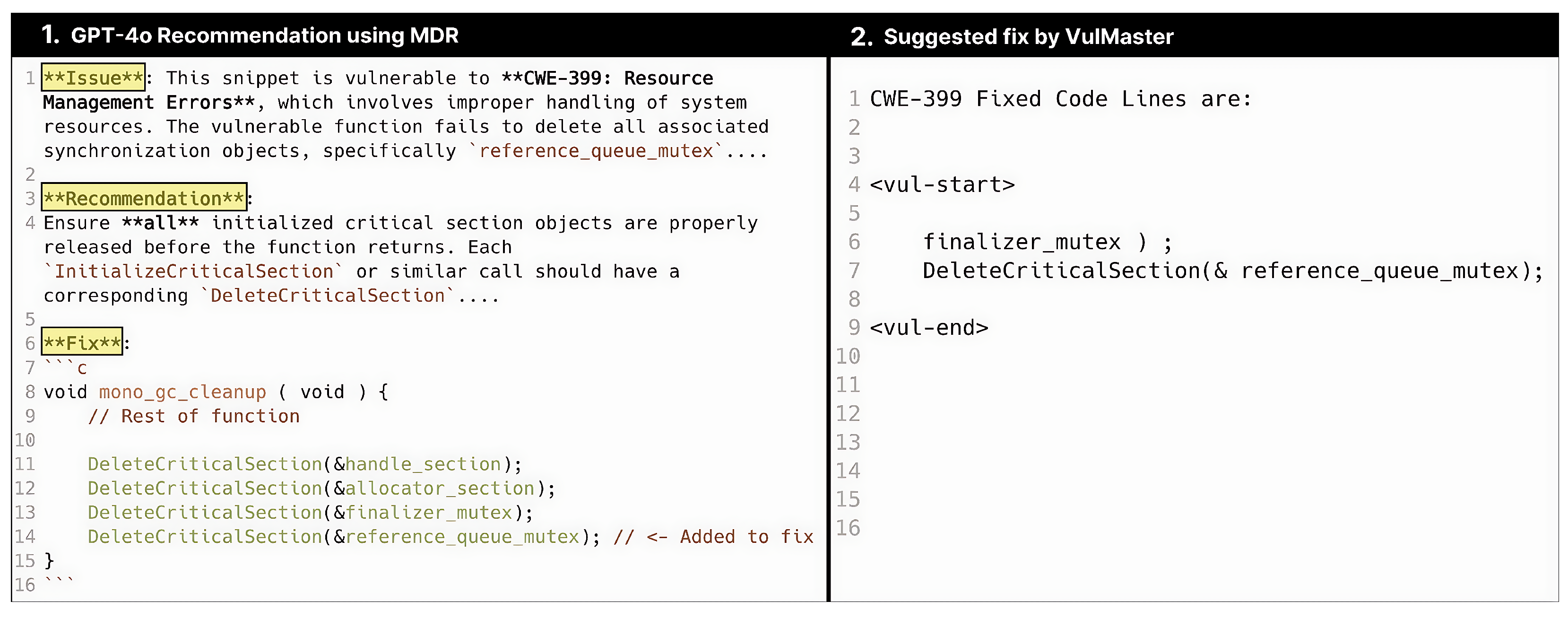

5.1.1. Accurate Retrieval with a Relevant Repair

5.1.2. Inaccurate Retrieval with an Irrelevant Repair

5.1.3. Inaccurate Retrieval with No Repair

5.2. Assessment Using Static Code Analysis Tools

5.3. Comparison with SOTA

6. LLM-Based Assessment

6.1. Evaluation Criteria and LLM Metrics for Code Repair Recommendations

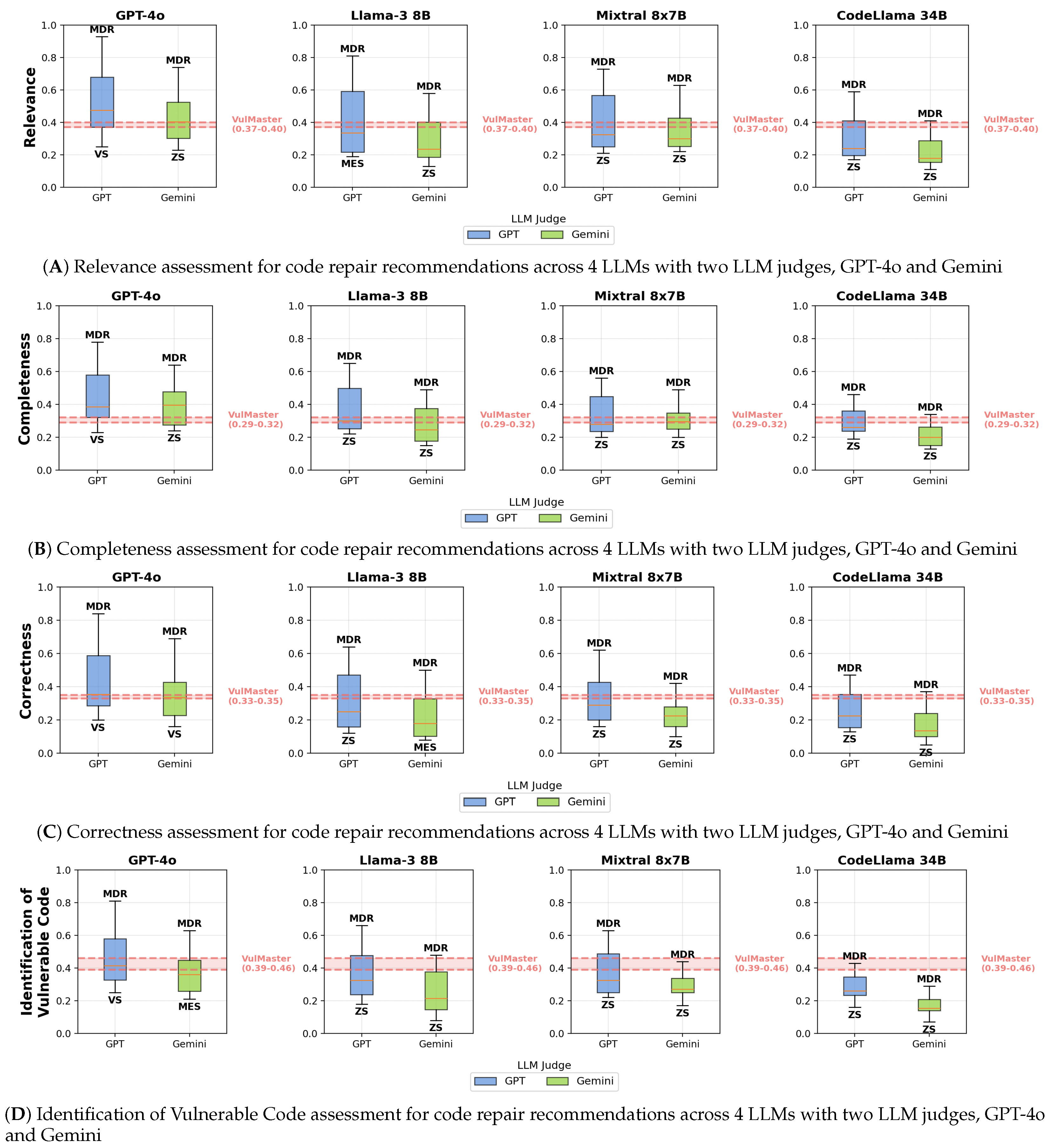

6.1.1. Relevance

6.1.2. Completeness

6.1.3. Correctness

6.1.4. Identification of Vulnerable Code

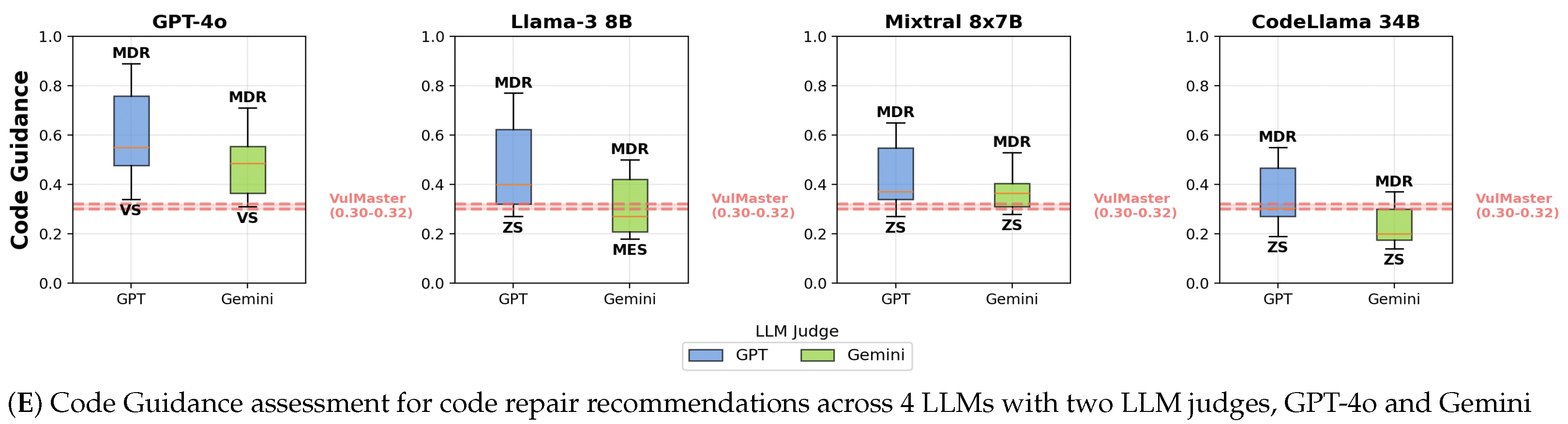

6.1.5. Code Guidance

6.2. The Pipeline of LLMs Assessment

You will be given a recommendation written for repairing a code vulnerability. Your task is to evaluate the recommendation on Relevance, ensuring that the solution is appropriate for the context of the vulnerability.

- Determine whether the recommendation directly addresses the vulnerability described in the context.

- Assess whether the recommendation is practical and relevant for the specific issue identified.

- Identify any irrelevant or generic information that makes the recommendation less applicable to the specific context of the vulnerability.

- Provide a clear method for scoring the recommendation based on its relevance and applicability using a scale from 1 to 5.

- Penalize the recommendation if the CVE and CWE do not match or if the suggestion is generic, irrelevant, or impractical.
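
The judging instructions above could be assembled into a prompt and the returned score parsed along the following lines. This is a sketch under stated assumptions: the `Score: N` reply format, the helper names, and the example strings are hypothetical, and no LLM is actually called.

```python
import re

# Steps paraphrased from the Relevance instructions listed above.
RELEVANCE_STEPS = [
    "Determine whether the recommendation directly addresses the vulnerability.",
    "Assess whether it is practical and relevant for the specific issue.",
    "Identify irrelevant or generic information that reduces applicability.",
    "Score relevance and applicability on a scale from 1 to 5.",
    "Penalize mismatched CVE/CWE or generic, irrelevant, impractical advice.",
]


def build_judge_prompt(recommendation, context):
    """Compose the judge prompt; the reply format is an assumed convention."""
    steps = "\n".join(f"{i}. {s}" for i, s in enumerate(RELEVANCE_STEPS, 1))
    return (
        "You will be given a recommendation written for repairing a code "
        "vulnerability. Evaluate it on Relevance.\n\n"
        f"Evaluation steps:\n{steps}\n\n"
        f"Vulnerability context:\n{context}\n\n"
        f"Recommendation:\n{recommendation}\n\n"
        "Reply with a line of the form 'Score: N' where N is 1-5."
    )


def parse_score(reply):
    """Return the 1-5 score from a judge reply, or None if it is absent."""
    match = re.search(r"Score:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


judge_prompt = build_judge_prompt(
    "Add a bounds check before the memcpy call.",
    "CWE-119: buffer copy without checking the size of the input.",
)
score = parse_score("Score: 4\nThe advice targets the flagged copy.")
```

Returning `None` for malformed replies, rather than a default score, makes unparseable judgments visible so they can be retried or excluded.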

6.3. Scoring the Recommendations

6.4. Assessment Results by LLM-as-a-Judge

6.5. Detecting Self-Alignment Bias of GPT-4o

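$$d = \frac{\bar{x}_{\text{orig}} - \bar{x}_{\text{pert}}}{s_p}$$

- $\bar{x}_{\text{orig}}$ is the mean score assigned by GPT-4o to its own (original) recommendation.

- $\bar{x}_{\text{pert}}$ is the mean score for the perturbed versions of the recommendation.

- $s_p$ is the pooled standard deviation of the scores.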
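
The three quantities listed above combine into Cohen's d, which can be computed as in the sketch below. The pooled standard deviation uses the common sample-variance form, which is an assumption about the exact variant used here, and the score lists are made-up illustrative values, not results from the study.

```python
import math
from statistics import mean, stdev


def cohens_d(original_scores, perturbed_scores):
    """Effect size: difference of means divided by the pooled standard deviation."""
    n1, n2 = len(original_scores), len(perturbed_scores)
    s1, s2 = stdev(original_scores), stdev(perturbed_scores)
    pooled = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    if pooled == 0:
        raise ValueError("pooled standard deviation is zero; d is undefined")
    return (mean(original_scores) - mean(perturbed_scores)) / pooled


# Hypothetical judge scores on the 1-5 scale.
own = [5, 4, 5, 4]
perturbed = [4, 3, 4, 3]
d = cohens_d(own, perturbed)
```

A positive d means the judge scored its own recommendations higher than the perturbed versions; values near zero indicate little difference.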

7. Guidelines for Reproducible and Extensible Adoption

8. Threats to Validity

9. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. NIST. NVD—Vulnerabilities. 2019. Available online: https://nvd.nist.gov/vuln (accessed on 8 August 2025).

2. Octoverse 2024: The State of Open Source. Available online: https://github.blog/news-insights/octoverse/octoverse-2024/ (accessed on 8 August 2025).

3. Homaei, H.; Shahriari, H.R. Seven Years of Software Vulnerabilities: The Ebb and Flow. IEEE Secur. Priv. 2017, 15, 58–65.

4. Shahriar, H.; Zulkernine, M. Mitigating program security vulnerabilities: Approaches and challenges. ACM Comput. Surv. 2012, 44, 11.

5. Zhou, X.; Kim, K.; Xu, B.; Han, D.; Lo, D. Out of Sight, Out of Mind: Better Automatic Vulnerability Repair by Broadening Input Ranges and Sources. In Proceedings of the IEEE/ACM 46th International Conference on Software Engineering, New York, NY, USA, 14–20 April 2024.

6. Zou, D.; Wang, S.; Xu, S.; Li, Z.; Jin, H. μVulDeePecker: A Deep Learning-Based System for Multiclass Vulnerability Detection. IEEE Trans. Dependable Secur. Comput. 2021, 18, 2224–2236.

7. Jiang, N.; Lutellier, T.; Tan, L. CURE: Code-Aware Neural Machine Translation for Automatic Program Repair. In Proceedings of the 43rd International Conference on Software Engineering, Madrid, Spain, 22–30 May 2021; pp. 1161–1173.

8. Fu, M.; Tantithamthavorn, C.; Le, T.; Nguyen, V.; Phung, D. VulRepair: A T5-based automated software vulnerability repair. In Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, New York, NY, USA, 14–16 November 2022; pp. 935–947.

9. Berabi, B.; Gronskiy, A.; Raychev, V.; Sivanrupan, G.; Chibotaru, V.; Vechev, M.T. DeepCode AI Fix: Fixing Security Vulnerabilities with Large Language Models. arXiv 2024, arXiv:2402.13291.

10. Hou, X.; Zhao, Y.; Liu, Y.; Yang, Z.; Wang, K.; Li, L.; Luo, X.; Lo, D.; Grundy, J.C.; Wang, H. Large Language Models for Software Engineering: A Systematic Literature Review. arXiv 2023, arXiv:2308.10620.

11. Mashhadi, E.; Hemmati, H. Applying CodeBERT for Automated Program Repair of Java Simple Bugs. arXiv 2021, arXiv:2103.11626v2.

12. Ren, S.; Guo, D.; Lu, S.; Zhou, L.; Liu, S.; Tang, D.; Zhou, M.; Blanco, A.; Ma, S. CodeBLEU: A Method for Automatic Evaluation of Code Synthesis. arXiv 2020, arXiv:2009.10297.

13. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.T.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 6–12 December 2020.

14. OpenAI. Hello GPT-4o. 2024. Available online: https://openai.com/index/hello-gpt-4o/ (accessed on 6 December 2024).

15. Meta. Meta-Llama-3-8B-Instruct. 2024. Available online: https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct (accessed on 6 December 2024).

16. Jiang, A.Q.; Sablayrolles, A.; Roux, A.; Mensch, A.; Savary, B.; Bamford, C.; Chaplot, D.S.; de las Casas, D.; Hanna, E.B.; Bressand, F.; et al. Mixtral of Experts. arXiv 2024, arXiv:2401.04088.

17. Rozière, B.; Gehring, J.; Gloeckle, F.; Sootla, S.; Gat, I.; Tan, X.E.; Adi, Y.; Liu, J.; Sauvestre, R.; Remez, T.; et al. Code Llama: Open Foundation Models for Code. arXiv 2024, arXiv:2308.12950.

18. Fan, J.; Li, Y.; Wang, S.; Nguyen, T.N. A C/C++ Code Vulnerability Dataset with Code Changes and CVE Summaries. In Proceedings of the 2020 IEEE/ACM 17th International Conference on Mining Software Repositories (MSR), Seoul, Republic of Korea, 29–30 June 2020; pp. 508–512.

19. Bhandari, G.; Naseer, A.; Moonen, L. CVEfixes: Automated collection of vulnerabilities and their fixes from open-source software. In Proceedings of the 17th International Conference on Predictive Models and Data Analytics in Software Engineering, Athens, Greece, 19–20 August 2021; pp. 30–39.

20. MITRE. CVE Website. 2025. Available online: https://www.cve.org/ (accessed on 8 October 2025).

21. CWE—Common Weakness Enumeration. Available online: https://cwe.mitre.org/ (accessed on 8 October 2025).

22. Chen, Z.; Kommrusch, S.; Monperrus, M. Neural Transfer Learning for Repairing Security Vulnerabilities in C Code. IEEE Trans. Softw. Eng. 2023, 49, 147–165.

23. Pearce, H.; Tan, B.; Ahmad, B.; Karri, R.; Dolan-Gavitt, B. Examining Zero-Shot Vulnerability Repair with Large Language Models. arXiv 2022, arXiv:2112.02125.

24. Russell, R.L.; Kim, L.; Hamilton, L.H.; Lazovich, T.; Harer, J.A.; Ozdemir, O.; Ellingwood, P.M.; McConley, M.W. Automated Vulnerability Detection in Source Code Using Deep Representation Learning. arXiv 2018, arXiv:1807.04320.

25. Wu, Y.; Jiang, N.; Pham, H.V.; Lutellier, T.; Davis, J.; Tan, L.; Babkin, P.; Shah, S. How Effective Are Neural Networks for Fixing Security Vulnerabilities. In Proceedings of the 32nd ACM SIGSOFT International Symposium on Software Testing and Analysis, Seattle, WA, USA, 17–21 July 2023; pp. 1282–1294.

26. Islam, N.T.; Khoury, J.; Seong, A.; Karkevandi, M.B.; Parra, G.D.L.T.; Bou-Harb, E.; Najafirad, P. LLM-Powered Code Vulnerability Repair with Reinforcement Learning and Semantic Reward. arXiv 2024, arXiv:2401.03374.

27. Joshi, H.; Cambronero, J.; Gulwani, S.; Le, V.; Radicek, I.; Verbruggen, G. Repair Is Nearly Generation: Multilingual Program Repair with LLMs. arXiv 2022, arXiv:2208.11640.

28. de Fitero-Dominguez, D.; Garcia-Lopez, E.; Garcia-Cabot, A.; Martinez-Herraiz, J.J. Enhanced automated code vulnerability repair using large language models. Eng. Appl. Artif. Intell. 2024, 138, 109291.

29. Evtikhiev, M.; Bogomolov, E.; Sokolov, Y.; Bryksin, T. Out of the BLEU: How should we assess quality of the Code Generation models? J. Syst. Softw. 2023, 203, 111741.

30. Fu, M.; Tantithamthavorn, C.; Nguyen, V.; Le, T. ChatGPT for Vulnerability Detection, Classification, and Repair: How Far Are We? arXiv 2023, arXiv:2310.09810.

31. Ahmed, T.; Ghosh, S.; Bansal, C.; Zimmermann, T.; Zhang, X.; Rajmohan, S. Recommending Root-Cause and Mitigation Steps for Cloud Incidents using Large Language Models. arXiv 2023, arXiv:2301.03797.

32. Bavaresco, A.; Bernardi, R.; Bertolazzi, L.; Elliott, D.; Fernández, R.; Gatt, A.; Ghaleb, E.; Giulianelli, M.; Hanna, M.; Koller, A.; et al. LLMs instead of Human Judges? A Large Scale Empirical Study across 20 NLP Evaluation Tasks. arXiv 2024, arXiv:2406.18403.

33. Zhu, L.; Wang, X.; Wang, X. JudgeLM: Fine-tuned Large Language Models are Scalable Judges. arXiv 2025, arXiv:2310.17631.

34. Fu, J.; Ng, S.K.; Jiang, Z.; Liu, P. GPTScore: Evaluate as You Desire. arXiv 2023, arXiv:2302.04166.

35. Kim, T.S.; Lee, Y.; Shin, J.; Kim, Y.H.; Kim, J. EvalLM: Interactive Evaluation of Large Language Model Prompts on User-Defined Criteria. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; Volume 35, pp. 1–21.

36. Liu, Y.; Iter, D.; Xu, Y.; Wang, S.; Xu, R.; Zhu, C. G-Eval: NLG Evaluation using GPT-4 with Better Human Alignment. arXiv 2023, arXiv:2303.16634.

37. Hu, R.; Cheng, Y.; Meng, L.; Xia, J.; Zong, Y.; Shi, X.; Lin, W. Training an LLM-as-a-Judge Model: Pipeline, Insights, and Practical Lessons. arXiv 2025, arXiv:2502.02988.

38. Ye, J.; Wang, Y.; Huang, Y.; Chen, D.; Zhang, Q.; Moniz, N.; Gao, T.; Geyer, W.; Huang, C.; Chen, P.Y.; et al. Justice or Prejudice? Quantifying Biases in LLM-as-a-Judge. arXiv 2024, arXiv:2410.02736.

39. Doostmohammadi, E.; Norlund, T.; Kuhlmann, M.; Johansson, R. Surface-Based Retrieval Reduces Perplexity of Retrieval-Augmented Language Models. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers); Rogers, A., Boyd-Graber, J., Okazaki, N., Eds.; Association for Computational Linguistics: Toronto, ON, Canada, 2023; pp. 521–529.

40. Setty, S.; Thakkar, H.; Lee, A.; Chung, E.; Vidra, N. Improving Retrieval for RAG based Question Answering Models on Financial Documents. arXiv 2024, arXiv:2404.07221.

41. Yepes, A.J.; You, Y.; Milczek, J.; Laverde, S.; Li, R. Financial Report Chunking for Effective Retrieval Augmented Generation. arXiv 2024, arXiv:2402.05131.

42. LangChain. LangChain. 2024. Available online: https://www.langchain.com/ (accessed on 9 October 2024).

43. Blog, N.T. Introduction to LLM Agents. 2023. Available online: https://developer.nvidia.com/blog/introduction-to-llm-agents/ (accessed on 4 December 2024).

44. Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A Family of Highly Capable Multimodal Models. arXiv 2024, arXiv:2312.11805.

45. The Claude 3 Model Family: Opus, Sonnet, Haiku. Available online: https://www-cdn.anthropic.com/de8ba9b01c9ab7cbabf5c33b80b7bbc618857627/Model_Card_Claude_3.pdf (accessed on 12 November 2024).

46. Guo, D.; Zhu, Q.; Yang, D.; Xie, Z.; Dong, K.; Zhang, W.; Chen, G.; Bi, X.; Wu, Y.; Li, Y.K.; et al. DeepSeek-Coder: When the Large Language Model Meets Programming—The Rise of Code Intelligence. arXiv 2024, arXiv:2401.14196.

47. Ding, N.; Chen, Y.; Xu, B.; Qin, Y.; Zheng, Z.; Hu, S.; Liu, Z.; Sun, M.; Zhou, B. Enhancing Chat Language Models by Scaling High-quality Instructional Conversations. arXiv 2023, arXiv:2305.14233.

48. xai. Grok OS. Available online: https://x.ai/news/grok-os (accessed on 10 March 2025).

49. Du, N.; Huang, Y.; Dai, A.M.; Tong, S.; Lepikhin, D.; Xu, Y.; Krikun, M.; Zhou, Y.; Yu, A.W.; Firat, O.; et al. GLaM: Efficient Scaling of Language Models with Mixture-of-Experts. arXiv 2022, arXiv:2112.06905.

50. Sanseviero, O.; Tunstall, L.; Schmid, P.; Mangrulkar, S.; Belkada, Y.; Cuenca, P. Mixture of Experts Explained. 2023. Available online: https://huggingface.co/blog/moe (accessed on 12 November 2024).

51. Wang, Y.; Wang, W.; Joty, S.; Hoi, S.C.H. CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation. arXiv 2021, arXiv:2109.00859.

52. Matton, A.; Sherborne, T.; Aumiller, D.; Tommasone, E.; Alizadeh, M.; He, J.; Ma, R.; Voisin, M.; Gilsenan-McMahon, E.; Gallé, M. On Leakage of Code Generation Evaluation Datasets. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; Association for Computational Linguistics: Miami, FL, USA, 2024; pp. 13215–13223.

53. Jiang, X.; Wu, L.; Sun, S.; Li, J.; Xue, J.; Wang, Y.; Wu, T.; Liu, M. Investigating Large Language Models for Code Vulnerability Detection: An Experimental Study. arXiv 2025, arXiv:2412.18260.

54. Du, X.; Zheng, G.; Wang, K.; Feng, J.; Deng, W.; Liu, M.; Chen, B.; Peng, X.; Ma, T.; Lou, Y. Vul-RAG: Enhancing LLM-based Vulnerability Detection via Knowledge-level RAG. arXiv 2024, arXiv:2406.11147.

55. Popovic, M. chrF: Character n-gram F-score for automatic MT evaluation. In Proceedings of the Tenth Workshop on Statistical Machine Translation, Lisboa, Portugal, 17–18 September 2015.

56. Tran, N.; Tran, H.; Nguyen, S.; Nguyen, H.; Nguyen, T. Does BLEU Score Work for Code Migration? In Proceedings of the 2019 IEEE/ACM 27th International Conference on Program Comprehension (ICPC), Los Alamitos, CA, USA, 25–26 May 2019; pp. 165–176.

57. GitHub. CodeQL Documentation. 2025. Available online: https://codeql.github.com/docs/ (accessed on 10 March 2025).

58. Snyk. Developer Security. 2025. Available online: https://snyk.io/ (accessed on 10 March 2025).

59. SonarSource. Advanced Security with SonarQube. 2025. Available online: https://www.sonarsource.com/solutions/security/ (accessed on 30 March 2025).

60. Feng, Z.; Guo, D.; Tang, D.; Duan, N.; Feng, X.; Gong, M.; Shou, L.; Qin, B.; Liu, T.; Jiang, D.; et al. CodeBERT: A Pre-Trained Model for Programming and Natural Languages. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; pp. 1536–1547.

61. Guo, D.; Ren, S.; Lu, S.; Feng, Z.; Tang, D.; Liu, S.; Zhou, L.; Duan, N.; Yin, J.; Jiang, D.; et al. GraphCodeBERT: Pre-training Code Representations with Data Flow. arXiv 2020, arXiv:2009.08366.

62. Xu, F.F.; Alon, U.; Neubig, G.; Hellendoorn, V.J. A systematic evaluation of large language models of code. In Proceedings of the 6th ACM SIGPLAN International Symposium on Machine Programming, MAPS 2022, New York, NY, USA, 13 June 2022; pp. 1–10.

63. Nijkamp, E.; Pang, B.; Hayashi, H.; Tu, L.; Wang, H.; Zhou, Y.; Savarese, S.; Xiong, C. CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis. In Proceedings of the International Conference on Learning Representations, Online, 25–29 April 2022.

64. Li, Z.; Lu, S.; Guo, D.; Duan, N.; Jannu, S.; Jenks, G.; Majumder, D.; Green, J.; Svyatkovskiy, A.; Fu, S.; et al. Automating code review activities by large-scale pre-training. In Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, ESEC/FSE 2022, New York, NY, USA, 14–18 November 2022; pp. 1035–1047.

65. Peng, J.; Cui, L.; Huang, K.; Yang, J.; Ray, B. CWEval: Outcome-driven Evaluation on Functionality and Security of LLM Code Generation. arXiv 2025, arXiv:2501.08200.

66. Confident AI. LLM Evaluation Metrics: Everything You Need for LLM Evaluation. 2024. Available online: https://www.confident-ai.com/blog/llm-evaluation-metrics-everything-you-need-for-llm-evaluation (accessed on 2 December 2024).

67. Microsoft. Evaluation Metrics Built-In. Available online: https://learn.microsoft.com/en-us/azure/ai-foundry/concepts/evaluation-metrics-built-in?tabs=warning (accessed on 6 March 2025).

68. Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A Survey on Evaluation of Large Language Models. arXiv 2023, arXiv:2307.03109.

69. Friel, R.; Sanyal, A. Chainpoll: A high efficacy method for LLM hallucination detection. arXiv 2023, arXiv:2310.18344.

70. Galileo. Completeness. Available online: https://docs.galileo.ai/galileo/gen-ai-studio-products/galileo-guardrail-metrics/completeness (accessed on 6 March 2025).

71. Lemos, R. Patch Imperfect: Software Fixes Failing to Shut Out Attackers. Available online: https://www.darkreading.com/vulnerabilities-threats/patch-imperfect-software-fixes-failing-to-shut-out-attackers (accessed on 6 March 2025).

72. Magazine, I. Google: Incomplete Patches Caused Quarter of Vulnerabilities, in Some Cases. Available online: https://www.infosecurity-magazine.com/news/google-incomplete-patches-quarter/#:~:text=Google (accessed on 6 March 2025).

73. Galileo. Correctness. Available online: https://docs.galileo.ai/galileo/gen-ai-studio-products/galileo-guardrail-metrics/correctness (accessed on 6 March 2025).

74. Qi, Z.; Long, F.; Achour, S.; Rinard, M. An analysis of patch plausibility and correctness for generate-and-validate patch generation systems. In Proceedings of the 2015 International Symposium on Software Testing and Analysis, ISSTA 2015, New York, NY, USA, 13–17 July 2015; pp. 24–36.

75. Liu, P.; Liu, J.; Fu, L.; Lu, K.; Xia, Y.; Zhang, X.; Chen, W.; Weng, H.; Ji, S.; Wang, W. Exploring ChatGPT’s capabilities on vulnerability management. In Proceedings of the 33rd USENIX Conference on Security Symposium SEC ’24, Philadelphia, PA, USA, 14–16 August 2024.

76. Liu, M.; Wang, J.; Lin, T.; Ma, Q.; Fang, Z.; Wu, Y. An Empirical Study of the Code Generation of Safety-Critical Software Using LLMs. Appl. Sci. 2024, 14, 1046.

77. Diener, M.J. Cohen’s d. In The Corsini Encyclopedia of Psychology; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2010; p. 1.

| Dataset | Strategy | GPT-4o CodeBLEU | GPT-4o chrF | GPT-4o RUBY | Llama-3 8B CodeBLEU | Llama-3 8B chrF | Llama-3 8B RUBY | Mixtral 8×7B CodeBLEU | Mixtral 8×7B chrF | Mixtral 8×7B RUBY | CodeLlama 34B CodeBLEU | CodeLlama 34B chrF | CodeLlama 34B RUBY |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BigVul | Zero-shot | 0.62 | 0.77 | 0.53 | 0.57 | 0.67 | 0.52 | 0.50 | 0.65 | 0.45 | 0.52 | 0.54 | 0.36 |
| BigVul | Vanilla | 0.67 | 0.82 | 0.50 | 0.62 | 0.72 | 0.49 | 0.53 | 0.69 | 0.45 | 0.55 | 0.60 | 0.35 |
| BigVul | Metadata Embedding | 0.69 | 0.85 | 0.55 | 0.63 | 0.72 | 0.58 | 0.57 | 0.70 | 0.50 | 0.57 | 0.52 | 0.33 |
| BigVul | Segmented Context | 0.66 | 0.81 | 0.49 | 0.68 | 0.81 | 0.55 | 0.54 | 0.72 | 0.46 | 0.55 | 0.62 | 0.37 |
| BigVul | Metadata-driven Retrieval | 0.70 | 0.89 | 0.60 | 0.69 | 0.84 | 0.59 | 0.58 | 0.75 | 0.46 | 0.56 | 0.59 | 0.42 |
| CVEFixes | Zero-shot | 0.29 | 0.33 | 0.26 | 0.26 | 0.27 | 0.31 | 0.24 | 0.22 | 0.24 | 0.31 | 0.34 | 0.28 |
| CVEFixes | Vanilla | 0.31 | 0.40 | 0.32 | 0.31 | 0.29 | 0.30 | 0.29 | 0.25 | 0.31 | 0.33 | 0.41 | 0.32 |
| CVEFixes | Metadata Embedding | 0.32 | 0.41 | 0.36 | 0.28 | 0.30 | 0.32 | 0.28 | 0.32 | 0.32 | 0.30 | 0.38 | 0.34 |
| CVEFixes | Segmented Context | 0.39 | 0.39 | 0.32 | 0.35 | 0.33 | 0.31 | 0.29 | 0.38 | 0.31 | 0.31 | 0.40 | 0.30 |
| CVEFixes | Metadata-driven Retrieval | 0.43 | 0.41 | 0.39 | 0.40 | 0.35 | 0.34 | 0.39 | 0.40 | 0.37 | 0.34 | 0.42 | 0.33 |
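
As a worked reading of the table above, the snippet below computes the CodeBLEU gain of metadata-driven retrieval over zero-shot for each model. The numbers are copied from the table; the dictionary layout and the `gains` helper are illustrative choices, and "Mixtral 8x7B" is written with a plain "x" only for convenience as a dictionary key.

```python
# CodeBLEU pairs copied from the table: (zero-shot, metadata-driven retrieval).
CODEBLEU = {
    "BigVul": {
        "GPT-4o": (0.62, 0.70),
        "Llama-3 8B": (0.57, 0.69),
        "Mixtral 8x7B": (0.50, 0.58),
        "CodeLlama 34B": (0.52, 0.56),
    },
    "CVEFixes": {
        "GPT-4o": (0.29, 0.43),
        "Llama-3 8B": (0.26, 0.40),
        "Mixtral 8x7B": (0.24, 0.39),
        "CodeLlama 34B": (0.31, 0.34),
    },
}


def gains(dataset):
    """Absolute CodeBLEU gain of metadata-driven retrieval over zero-shot."""
    return {model: round(mdr - zero_shot, 2)
            for model, (zero_shot, mdr) in CODEBLEU[dataset].items()}


bigvul_gains = gains("BigVul")
cvefixes_gains = gains("CVEFixes")
```

On these figures the gain is positive for every model on both datasets, and smallest for CodeLlama 34B (0.04 on BigVul, 0.03 on CVEFixes).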

| Dataset | Zero-Shot: Fixed Files | Zero-Shot: Resolved Issues | Metadata-Driven Retrieval: Fixed Files | Metadata-Driven Retrieval: Resolved Issues |
|---|---|---|---|---|
| BigVul | 102 (28%) | 151 (33%) | 192 (52.6%) | 235 (51.2%) |
| CVEFixes | 481 (58.4%) | 2996 (73%) | 702 (85.32%) | 3827 (92.3%) |

| Model | EM (%) | CodeBLEU | chrF | RUBY |
|---|---|---|---|---|
| CodeLlama 34B + MDR | 3.7 | 0.45 | 0.47 | 0.38 |
| Mixtral 8×7B + MDR | 4.3 | 0.51 | 0.55 | 0.42 |
| Llama-3 8B + MDR | 5.0 | 0.53 | 0.58 | 0.46 |
| GPT-4o + MDR | 10.2 | 0.57 | 0.65 | 0.49 |
| CodeBERT [60] | 7.3 | 0.22 | N/A | N/A |
| GraphCodeBERT [61] | 8.1 | 0.17 | N/A | N/A |
| PolyCoder [62] | 9.9 | 0.30 | N/A | N/A |
| CodeGen [63] | 12.2 | 0.30 | N/A | N/A |
| Codereviewer [64] | 10.2 | 0.38 | N/A | N/A |
| CodeT5 [51] | 16.8 | 0.35 | N/A | N/A |
| VRepair [22] | 8.9 | 0.32 | N/A | N/A |
| VulRepair [8] | 16.8 | 0.35 | N/A | N/A |
| VulMaster [5] | 20.0 | 0.41 | N/A | N/A |

| Criteria | Score 1 | Score 2 | Score 3 | Score 4 | Score 5 |
|---|---|---|---|---|---|
| Relevance | Irrelevant and not applicable to the identified issue. | Partially relevant but has limited applicability. | Generally relevant and mostly applicable, with some minor issues. | Relevant and applicable, with minor improvements possible. | Highly relevant, very applicable, and practical to implement. |
| Completeness | Very incomplete, lacking thoroughness, and missing vital aspects. | Partially complete, with significant gaps in thoroughness. | Generally complete and adequately thorough, with some minor gaps. | Complete and thorough, with minor improvements possible. | Very complete, highly thorough, and comprehensive in its approach. |
| Correctness | Very inaccurate, with multiple errors and unreliable information. | Somewhat inaccurate, with several errors that impact its reliability. | Generally accurate, with minor errors that do not significantly impact its reliability. | Accurate, with minor errors, with most of the information being reliable. | Highly accurate and free of errors, with all information being reliable. |
| Identification of Vulnerable Code | Does not identify any specific vulnerable code. | Vaguely identifies the vulnerable code with unclear details. | Generally identifies the vulnerable code, with some clarity issues. | Correctly identifies the vulnerable code, with explanations missing a few details. | Specifically identifies and clearly explains the vulnerable code. |
| Code Guidance | No relevant code snippets are provided in the recommendation. | The provided code snippets are irrelevant or unhelpful. | Includes some relevant snippets, but there are minor issues with clarity or usefulness. | Provides relevant and helpful snippets, with minor improvements possible. | Provides highly relevant and helpful snippets, offering exemplary guidance. |

| Dataset | Strategy | REL | COM | COR | IVC | CG |
|---|---|---|---|---|---|---|
| BigVul | VS | 0.594 | 0.314 | 0.435 | 0.387 | 0.433 |
| BigVul | MES | 0.641 | 0.497 | 0.515 | 0.553 | 0.668 |
| BigVul | SC | 0.404 | 0.231 | 0.694 | 0.446 | 0.528 |
| BigVul | MDR | 0.669 | 0.567 | 0.701 | 0.431 | 0.340 |
| CVEFixes | VS | 0.510 | 0.585 | 0.373 | 0.222 | 0.294 |
| CVEFixes | MES | 0.430 | 0.635 | 0.609 | 0.625 | 0.601 |
| CVEFixes | SC | −0.218 | 0.383 | 0.031 | −0.162 | −0.010 |
| CVEFixes | MDR | 0.317 | 0.530 | 0.465 | 0.678 | 0.603 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Amoah, A.A.; Liu, Y. Explainable Recommendation of Software Vulnerability Repair Based on Metadata Retrieval and Multifaceted LLMs. Mach. Learn. Knowl. Extr. 2025, 7, 149. https://doi.org/10.3390/make7040149
