Abstract

Aerial tracking is an important service for many Unmanned Aerial Vehicle (UAV) applications. Existing methods lack robustness to target disappearance, viewpoint changes, and tracking drift in practical scenarios with limited UAV resources. In this paper, we propose a closed-loop framework integrating three key components: (1) lightweight adaptive detection with multi-scale feature extraction, (2) spatiotemporal motion modeling through Kalman-filter-based trajectory prediction, and (3) autonomous decision-making through composite scoring of detection confidence, appearance similarity, and motion consistency. By coordinating detection and tracking dynamically and preserving features according to their quality, the system sustains real-time operation through performance-adaptive modulation of the detection frequency. Evaluated on the VOT-ST2019 and OTB100 benchmarks, the proposed method improves on baseline trackers, achieving a 27.94% increase in Expected Average Overlap (EAO) and a 10.39% reduction in failure rate while sustaining 23–95 FPS on edge hardware. The framework also reacquires targets rapidly after prolonged occlusion, outperforming conventional methods in sustained aerial surveillance tasks.

1. Introduction

Single-target long-term UAV visual tracking aims to persistently locate and maintain the identity of a specific object in aerial video streams, which often span thousands of frames. This task begins with a single annotation (bounding box or segmentation mask) in the first frame only. The tracking system must adapt to dynamic aerial views and consistently provide accurate positional information, even as targets disappear and reappear, undergo deformations, or encounter changing illumination. Crucially, such systems require strict temporal constraints to fulfill real-time operational requirements in airborne platforms. Long-term single-target UAV visual tracking, as an open and compelling research domain, serves critical roles in numerous practical applications, including environmental monitoring [1], search and rescue [2], aerial vision tracking [3], sports analytics [4], and beyond.

In autonomous UAV tracking applications, the system architecture is typically divided into two core components: (1) persistent target tracking for continuous acquisition of positioning data, and (2) UAV flight control based on the obtained positioning information [5]. This paper primarily focuses on the former challenge, specifically investigating methodologies for maintaining persistent visual-based target tracking and acquiring continuous positioning data during long-term operations. The adoption of vision-centric solutions is necessitated by UAV payload limitations and stringent power constraints, which preclude the deployment of large-scale sensing modalities like LiDAR or millimeter-wave radar for target localization [6].

Based on our comprehensive investigation into single-target real-time persistent tracking in UAV systems, we have identified several critical challenges that remain underexplored or inadequately addressed in existing works. These challenges represent fundamental research gaps in achieving reliable long-duration aerial tracking:

- Single-module trackers exhibit limited adaptability in target disappearance scenarios. However, augmenting these systems with redundant verification modules introduces significant computational overhead, thereby compromising real-time operational feasibility.

- Validating tracking success remains particularly challenging under UAV-borne dynamic observation conditions, where substantial appearance variations and trajectory deviations induced by viewpoint-related geometric distortions frequently occur.

- Existing trackers lack efficient mechanisms for rapid and robust re-acquisition confidence estimation after disappearance, particularly under the severe viewpoint changes and scale variations characteristic of UAV tracking.

The proposed single-target long-term UAV visual tracking system in this paper comprises three principal modules: (1) a lightweight object detection and feature extraction module, (2) a multi-criteria assessment module, and (3) a motion-aware tracking module. In response to these core challenges, our work delivers the following key contributions:

- By incorporating a lightweight feature extraction network and efficient target information caching mechanisms, our approach achieves real-time detection of target disappearance and re-emergence while maintaining computational efficiency.

- Spatiotemporal feature encoding with motion pattern analysis preserves high-quality historical representations to mitigate appearance drift.

- The closed-loop feedback architecture among modules facilitates autonomous decision-making through performance-driven adaptation.

- In our architecture, the object detection and feature extraction module alternates with the motion-aware tracking module. This alternating execution enables performance enhancement via dynamic feature banks and historical trajectory queues, while also operating within computational constraints.

2. Related Work

This section reviews three distinct categories of long-term UAV visual tracking methodologies: correlation filter-based trackers, deep learning-based trackers, and hybrid trackers that leverage the complementary strengths of both approaches. We systematically analyze each category’s operational mechanisms and performance characteristics in aerial scenarios [7].

2.1. Correlation Filter-Based Trackers

Correlation filter-based trackers achieve efficient target localization through frequency-domain computations. The central concept involves training a filter template on the initial frame of a video sequence; this filter then generates maximal response values when correlated with target regions in subsequent frames. By performing convolutional operations between image patches and the filter in the frequency domain, these algorithms predict target positions within millisecond-level latency, making them particularly suitable for real-time mobile platforms (e.g., UAVs) with stringent computational constraints. Fu et al. [8] proposed a temporal regularization approach for multi-frame response maps, optimizing model updating through temporal correlation constraints across historical response maps, effectively mitigating error accumulation inherent in conventional single-frame update mechanisms. Zhang et al. [9] devised a global-target dual regression filter. This approach integrates global contextual information with local target features, achieving synergistic modeling. It effectively mitigates tracking drift caused by complex background interference. However, the dual-channel architecture increases computational complexity. The computational efficiency of correlation filter algorithms facilitates their deployment on UAV platforms. Despite notable advancements, existing methods have key limitations in long-term UAV tracking, especially when handling target disappearance/reappearance and appearance drift [7].

2.2. Deep Learning-Based Trackers

Researchers have increasingly employed deep learning-based methodologies to address long-term single-target UAV tracking challenges, spurred by rapid advancements in the field. This research thrust has yielded a proliferation of sophisticated deep learning-powered tracking algorithms that demonstrate notable performance improvements. Bertinetto et al. [10] learn generic similarity maps through cross-correlation between target template and search region feature representations and conduct online adaptive updates. Sun et al. [11] integrate Siamese networks with Transformer architectures and employ drift suppression to achieve efficient, robust real-time UAV tracking. Deep learning-based trackers demonstrate superior adaptability to rapid viewpoint changes and occlusion scenarios in UAV perspectives compared to correlation filter-based approaches. However, practical deployments reveal inherent limitations, including complex computational pipelines and numerous model parameters that require substantial computational resources, coupled with dependencies on large-scale training datasets and extended training periods to achieve operational efficacy.

2.3. Hybrid Trackers

Tracking algorithms have advanced substantially by incorporating object detection methodologies [12], enabling more robust long-term tracking. Cross-domain innovations like region proposal networks and feature pyramids further leverage these techniques to address target disappearance and scale changes. Huang et al. [13] integrated detection techniques to develop a two-stage detection architecture: the first stage employs a query-guided region proposal network to generate candidate regions, while the second stage utilizes a region-based CNN for precise localization. Several studies have adopted multi-tracker frameworks for performance enhancement. For instance, FGLT [14] employs both MDNet [15] and SiamRPN++ [16] trackers to verify tracking results, while a dedicated detection module rectifies tracking failures through error correction. Although existing studies [13,14] effectively mitigate single-tracker failures through error detection and recovery modules, their redundant architectures exhibit critical limitations. Specifically, multi-network parallelization exponentially increases model complexity, hindering compliance with UAV platforms’ lightweight deployment requirements. Furthermore, these approaches insufficiently address the balance between computational resources and real-time constraints. Nevertheless, the innovative approaches in heterogeneous feature fusion and failure compensation mechanisms provide valuable theoretical references for designing long-term UAV tracking algorithms.

3. Methodology

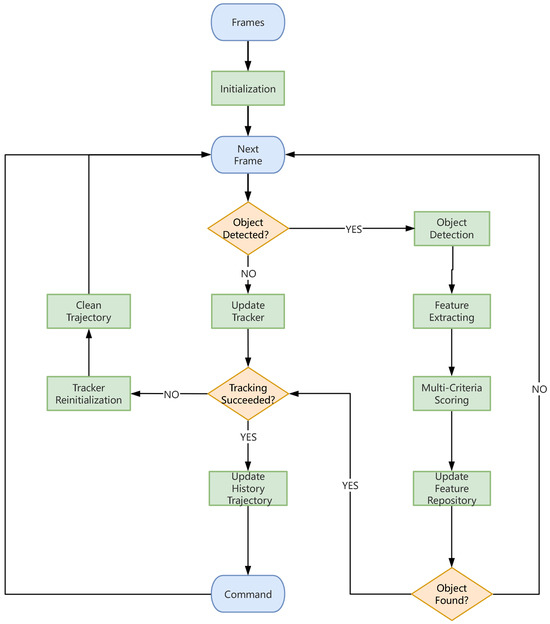

This section systematically elucidates the core architectural modules of the proposed framework, followed by a comprehensive exposition of the algorithmic workflow and the interdependencies among constituent elements. As shown in Figure 1, the closed-loop architecture integrates real-time detection-tracking-control pipelines with self-correction mechanisms, where multi-criteria scoring enables dynamic switching between detection and tracking branches based on environmental feedback.

Figure 1. Closed-loop real-time detection-tracking-control system architecture.

3.1. System Overview

To achieve persistent aerial pursuit in long-term drone-following scenarios, we propose a real-time tracking system architecture comprising three synergistic modules.

The detection and feature extraction module employs a dual-phase operation: During initialization, the system accepts either a ground-truth bounding box for target specification or leverages gesture recognition to dynamically localize human subjects. In active tracking mode, this component generates candidate bounding boxes through continuous scene parsing. These detection outputs undergo rigorous evaluation in our multi-criteria scoring module, which quantifies target confidence through spatial-temporal consistency metrics and appearance similarity measures.

The tracking and motion perception module integrates these assessments with intersection-over-union (IoU) analysis between the optimal candidate and the current tracking window, and reinitializes the tracker adaptively when the divergence threshold is exceeded. The module also maintains a trajectory buffer that archives historical motion patterns, enabling predictive movement analysis. In addition, the degree of matching derived from the IoU analysis regulates the detection frequency inversely: the better the match, the less often detection runs, which limits the latency and energy overhead of frequent detection.

The integrated framework establishes a closed-loop processing chain where detection validation informs tracking adjustments, while motion history enhances future detection reliability, significantly enhancing system robustness and tracking continuity in dynamic environments.

3.2. Motion-Aware Tracking Module

This module is built on a lightweight correlation filter-based tracker. We selected CSR-DCF (Discriminative Correlation Filter with Channel and Spatial Reliability) [17], an advanced short-term tracker. By incorporating channel and spatial reliability, it effectively addresses occlusion and boundary effects while maintaining computational efficiency. This method has achieved state-of-the-art performance on multiple benchmark datasets, including VOT 2015 [18], VOT 2016 [18], and OTB-100 [19]. However, correlation filter-based trackers, including CSR-DCF, often underperform in long-term tracking scenarios due to unresolved model drift and the absence of long-term memory mechanisms. To mitigate these limitations, the proposed system integrates adaptively scheduled object detection and feature extraction modules to supervise the tracking process.

Whenever the multi-criteria scoring module produces an output (the optimal bounding box that meets the threshold criteria), the system computes the IoU between this optimal bounding box and the tracker’s current bounding box by:

$$\mathrm{IoU}(b^{*}, b_{\mathrm{trk}}) = \frac{|b^{*} \cap b_{\mathrm{trk}}|}{|b^{*} \cup b_{\mathrm{trk}}|}$$

where $b^{*}$ denotes the optimal bounding box from the multi-criteria scoring module, and $b_{\mathrm{trk}}$ represents the tracker’s current prediction. The IoU is dynamically leveraged to regulate the detection interval $N_{\mathrm{det}}$. The interval rule uses the ceiling operator $\lceil \cdot \rceil$ for discrete interval quantization, a base interval multiplier $\alpha$ (default: 5 frames), the tracking confidence threshold $\tau_{\mathrm{trk}}$ (empirically set to 0.6), and a minimum detection interval $N_{\min}$ (10 frames).

This control strategy implements dual operational modes:

- $\mathrm{IoU} \geq \tau_{\mathrm{trk}}$ (at or above the tracking confidence threshold): The detection frequency is adaptively modulated, achieving a latency-energy trade-off by tying the detection interval to tracking confidence.

- $\mathrm{IoU} < \tau_{\mathrm{trk}}$ (below the threshold): The tracker is reinitialized using the optimal candidate bounding box $b^{*}$, as sub-threshold IoU indicates potential tracking failure. The minimum detection interval guarantees prompt evaluation of tracker reinitialization.
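The IoU check and the two operational modes above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ implementation: the function names, the top-left `(x, y, w, h)` box convention, and in particular the linear interval rule are hypothetical. The text only specifies a ceiling operator, a base multiplier of 5, a threshold of 0.6, and a minimum interval of 10 frames.

```python
import math


def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x, y, w, h) with a top-left origin."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def next_detection_interval(overlap, alpha=5, tau_trk=0.6, n_min=10):
    """Return (interval_in_frames, reinitialize_tracker).

    Assumed rule (hypothetical): below the threshold the tracker is
    reinitialized and detection runs again after the minimum interval;
    at or above it, the interval grows linearly with the IoU margin and
    is quantized with the ceiling operator.
    """
    if overlap < tau_trk:
        return n_min, True
    extra = math.ceil(alpha * (overlap - tau_trk) / (1.0 - tau_trk))
    return n_min + extra, False
```

Under this assumed rule, a perfect match (IoU of 1) postpones the next detection to 15 frames, while any sub-threshold IoU forces reinitialization and keeps detection at the 10-frame minimum.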

This module employs the Kalman filter (KF) algorithm [20] to model target trajectories. The KF establishes a linear motion model integrating trajectory buffer and system dynamics for motion perception. This approach effectively addresses trajectory prediction challenges during short-term target occlusion or detection failures.

3.2.1. CSR-DCF Tracker

The Channel and Spatial Reliability Discriminative Correlation Filter (CSR-DCF) [17] enhances traditional correlation filtering with dual reliability mechanisms. Its core formulation minimizes a per-channel filter-learning objective in which $h_k$ denotes the filter for channel $k$, $f_k$ the feature map, and $w_k$ the channel reliability weights. The spatial reliability component is implemented through a binary mask $m$ that excludes non-target regions.

This algorithm enhances tracking robustness through three technical innovations. First, a channel reliability weighting mechanism based on feature response consistency dynamically evaluates confidence levels across feature channels. Second, a spatial reliability adaptation strategy employs binary region segmentation to handle partial occlusion via mask updating. Finally, a Fourier-domain optimization scheme reduces computational complexity, achieving real-time performance while maintaining tracking precision and robustness to deformation and illumination changes. The filter is updated by the rule

$$h_t = (1 - \eta)\, h_{t-1} + \eta\, \tilde{h}_t$$

where $\tilde{h}_t$ is the filter estimated on the current frame and $\eta$ controls the model adaptation rate.

3.2.2. Motion Awareness

The motion awareness system combines a historical trajectory buffer with Kalman filtering to achieve robust motion prediction. A double-ended queue maintains an $N$-frame trajectory buffer containing motion states

$$s_t = (x_t, y_t, v_{x,t}, v_{y,t})$$

where $(x_t, y_t)$ represents target coordinates and $(v_{x,t}, v_{y,t})$ denotes instantaneous velocities calculated through frame differencing:

$$v_{x,t} = x_t - x_{t-1}, \qquad v_{y,t} = y_t - y_{t-1}$$

The buffer implements FIFO replacement for new entries when exceeding capacity, marks invalid detections with null values, and preserves velocity continuity through differential calculations.
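The buffer behaviour described above can be sketched with a bounded double-ended queue. The class and method names are hypothetical, and two details are assumptions rather than statements from the text: the default capacity of seven frames is taken from the sliding window mentioned in Section 5.1.2, and the velocity across a gap of invalid frames is divided by the gap length to keep it in per-frame units.

```python
from collections import deque


class TrajectoryBuffer:
    """Fixed-capacity FIFO of motion states (x, y, vx, vy); None marks an invalid frame."""

    def __init__(self, capacity=7):
        # deque(maxlen=...) drops the oldest entry automatically when full.
        self.states = deque(maxlen=capacity)

    def push(self, position):
        """Append a new (x, y) position, or None for an invalid detection."""
        if position is None:
            self.states.append(None)
            return None
        # Find the most recent valid state and count the frames since then.
        gap, prev = 1, None
        for state in reversed(self.states):
            if state is not None:
                prev = state
                break
            gap += 1
        if prev is None:
            vx, vy = 0.0, 0.0  # no history yet
        else:
            # Frame differencing, normalized by the gap (assumption).
            vx = (position[0] - prev[0]) / gap
            vy = (position[1] - prev[1]) / gap
        state = (position[0], position[1], vx, vy)
        self.states.append(state)
        return state

    def mean_velocity(self):
        """Average velocity over the valid states currently buffered."""
        valid = [s for s in self.states if s is not None]
        if not valid:
            return (0.0, 0.0)
        n = len(valid)
        return (sum(s[2] for s in valid) / n, sum(s[3] for s in valid) / n)
```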

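For physical motion modeling, an eight-dimensional Kalman filter with state vector $\mathbf{x}_t$ employs a constant-velocity transition model:

$$\mathbf{x}_{t \mid t-1} = \mathbf{F}\, \mathbf{x}_{t-1}$$

where $\mathbf{F}$ advances each position component by its velocity over one frame. The observation model focuses on positional measurements, with adaptive process and measurement noise covariance matrices $\mathbf{Q}$ and $\mathbf{R}$. Velocity estimation derives from averaged historical displacements in the trajectory buffer.

This integrated approach enables continuous motion tracking through temporary detection failures while maintaining physical plausibility constraints.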
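A constant-velocity Kalman filter of this kind can be sketched in plain Python. The sketch makes two assumptions that the text does not confirm: the eight-dimensional state is taken to be the box center, width, and height plus their velocities, and the noise covariances are treated as diagonal across coordinates, so the filter decouples into four independent (position, velocity) filters. The noise values `q` and `r` are placeholders.

```python
class AxisKalmanFilter:
    """Constant-velocity Kalman filter for one coordinate, state = (position, velocity)."""

    def __init__(self, position, q=1e-2, r=1.0):
        self.x = [float(position), 0.0]       # state estimate
        self.P = [[1.0, 0.0], [0.0, 10.0]]    # estimate covariance
        self.q = q                            # process noise (placeholder)
        self.r = r                            # measurement noise (placeholder)

    def predict(self, dt=1.0):
        """Propagate with F = [[1, dt], [0, 1]] and return the predicted position."""
        p, v = self.x
        self.x = [p + dt * v, v]
        P = self.P
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + self.q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + self.q
        self.P = [[p00, p01], [p10, p11]]
        return self.x[0]

    def update(self, z):
        """Correct with a position-only measurement, H = [1, 0]."""
        residual = z - self.x[0]
        s = self.P[0][0] + self.r
        k0, k1 = self.P[0][0] / s, self.P[1][0] / s
        self.x = [self.x[0] + k0 * residual, self.x[1] + k1 * residual]
        P = self.P
        self.P = [
            [(1.0 - k0) * P[0][0], (1.0 - k0) * P[0][1]],
            [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]],
        ]


class BoxKalmanFilter:
    """Eight-dimensional filter built from four axis filters for (cx, cy, w, h)."""

    def __init__(self, box):
        self.axes = [AxisKalmanFilter(v) for v in box]

    def predict(self):
        return tuple(axis.predict() for axis in self.axes)

    def update(self, box):
        for axis, z in zip(self.axes, box):
            axis.update(z)
```

During a short occlusion, calling `predict()` without a matching `update()` extrapolates the box along its last estimated velocity, which is the behaviour the section relies on for bridging detection gaps.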

3.3. Detection and Feature Extraction Module

The detection and feature extraction module integrates three operational components: a YOLO-based detector generating geometrically validated candidate boxes $\{b_i\}$, a MobileNetV2-powered feature extractor producing illumination-robust $L_2$-normalized embeddings via depthwise separable convolutions [21], and a quality-aware feature repository maintaining discriminative templates through composite-score-driven FIFO renewal with configurable capacity $K$. This integrated pipeline achieves real-time processing while enabling metric-aware matching and persistent identity preservation, both essential for occlusion-resilient tracking across variable scenarios.

3.3.1. Object Detection Framework

The detection system employs a multi-scale convolutional architecture integrated with cascaded prediction heads for precise human target localization. The framework incorporates dual filtering mechanisms to ensure operational reliability: confidence thresholding (retaining proposals whose detection scores exceed a minimum confidence) and geometric constraints (filtering regions that exhibit abnormal aspect ratios). The detector generates candidate bounding boxes formalized as $b = (x, y, w, h, c)$, where $(x, y)$ denote the center coordinates, $(w, h)$ represent the box dimensions, and $c$ indicates the detection confidence score.
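The dual filtering step can be illustrated with a short sketch. The function name and the numeric limits are hypothetical: the confidence threshold and the admissible aspect-ratio range are not recoverable from the text, so the defaults below are placeholders chosen only to make the example concrete.

```python
def filter_candidates(candidates, conf_min=0.5, aspect_range=(0.2, 1.2)):
    """Keep candidates (x, y, w, h, c) that pass both filters.

    conf_min and aspect_range are placeholder values (assumptions),
    with the aspect ratio defined here as width / height.
    """
    kept = []
    for x, y, w, h, c in candidates:
        if c < conf_min or w <= 0 or h <= 0:
            continue  # low confidence or degenerate box
        aspect = w / h
        if aspect_range[0] <= aspect <= aspect_range[1]:
            kept.append((x, y, w, h, c))
    return kept
```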

The selection of object detection frameworks was rigorously evaluated against four criteria: computational efficiency, detection accuracy, deployment flexibility, and real-time performance. The YOLO [22] series was ultimately adopted due to its optimal balance between latency-sensitive operations and precision-critical detection tasks.

3.3.2. Deep Feature Representation

The feature extraction framework employs MobileNetV2’s [21] depthwise separable convolution architecture to achieve efficient discriminative feature learning. Spatial normalization is accomplished through affine transformations that align input regions to canonical perspectives, followed by an embedding projection that generates L2-normalized 1280-dimensional feature vectors ($\mathbf{f} \in \mathbb{R}^{1280}$, $\|\mathbf{f}\|_2 = 1$). Adversarial training with illumination perturbation samples enhances robustness to lighting variations by minimizing feature space sensitivity to controlled brightness fluctuations. The inverted residual structure with linear bottlenecks enables effective feature reuse while maintaining computational efficiency, particularly through expansion ratios of six in critical layers. The feature mapping process is formalized as

$$\mathbf{f} = \frac{\phi_\theta(I_b)}{\|\phi_\theta(I_b)\|_2}$$

where $\phi_\theta$ denotes the parametric feature extractor and $I_b$ represents the image region within the bounding box $b$.

While our implementation utilizes MobileNetV2 for its optimal balance between accuracy and latency, the modular design permits substitution with alternative architectures, including EfficientNet [23], SqueezeNet [24], and GhostNet [25]. These candidate networks could be integrated through the standardized feature interface while maintaining system compatibility.

3.3.3. Quality-Aware Feature Repository

The system maintains a quality-aware feature repository $\mathcal{F}$ to preserve the characteristics of the desired appearance, where $K$ denotes the capacity of the repository, i.e., the maximum number of stored templates. This circular buffer stores L2-normalized feature vectors extracted through the MobileNetV2 backbone [21] described in Section 3.3.2.

The feature repository update mechanism utilizes the composite score from the multi-criteria scoring module (Section 3.4) to determine template quality. Let $\{s_j\}$ denote the historical composite scores of the stored features. The repository keeps a fixed capacity through First-In-First-Out (FIFO) replacement, with $K = 20$ in our implementation. Update operations are triggered when the composite score exceeds a quality threshold $\tau_q$. The system prioritizes replacement of the features with the minimal historical score $\min_j s_j$, establishing a dynamic equilibrium between feature freshness and representation quality.

The feature repository maintains discriminative target appearance representations to enhance system stability through dynamic template management. By preserving high-fidelity features, the repository enables robust detection of target disappearance/re-emergence events while mitigating long-term appearance drift via quality-controlled template renewal. This dual mechanism of persistence and adaptability ensures continuous identity preservation under occlusion/reappearance scenarios without compromising discrimination capability.
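The repository logic can be sketched as a small class. This is one possible reading of the update policy, not the authors’ code: when the repository is full, the entry with the lowest historical score is evicted, and ties are broken in favour of the oldest entry to reflect the FIFO wording. The class name and the quality threshold of 0.7 are hypothetical; the capacity of 20 follows the text.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


class FeatureRepository:
    """Bounded store of (score, unit-norm feature) pairs, oldest first."""

    def __init__(self, capacity=20, quality_threshold=0.7):
        self.capacity = capacity
        self.quality_threshold = quality_threshold  # placeholder value
        self.entries = []

    def max_similarity(self, feature):
        """Maximum cosine similarity between a feature and the stored templates."""
        if not self.entries:
            return 0.0
        f = l2_normalize(feature)
        return max(sum(a * b for a, b in zip(f, g)) for _, g in self.entries)

    def update(self, feature, score):
        """Insert the feature if its composite score passes the quality threshold."""
        if score <= self.quality_threshold:
            return False
        if len(self.entries) >= self.capacity:
            # Evict the lowest-scoring entry; min() returns the first
            # (oldest) index among ties.
            worst = min(range(len(self.entries)), key=lambda i: self.entries[i][0])
            del self.entries[worst]
        self.entries.append((score, l2_normalize(feature)))
        return True
```

Because stored features are unit-norm, the cosine similarity reduces to a dot product, which keeps the matching cost linear in the repository size.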

3.4. Multi-Criteria Scoring Module

The module establishes a composite scoring mechanism to evaluate candidate bounding boxes through four complementary criteria, ensuring reliable target validation in dynamic aerial scenarios. For each candidate $b_i$ with feature $\mathbf{f}_i$, the total confidence score is computed as

$$S_{\mathrm{total}}(b_i) = w_{\mathrm{det}} S_{\mathrm{det}} + w_{\mathrm{app}} S_{\mathrm{app}} + w_{\mathrm{temp}} S_{\mathrm{temp}} + w_{\mathrm{mot}} S_{\mathrm{mot}}$$

where $w_{\mathrm{det}}$, $w_{\mathrm{app}}$, $w_{\mathrm{temp}}$, and $w_{\mathrm{mot}}$ denote the criterion weights.

The detection confidence component $S_{\mathrm{det}}$ directly adopts the detector’s output score $c_i$ from $b_i$, enhanced through sigmoidal compression to emphasize high-confidence proposals while suppressing ambiguous detections.

The appearance similarity $S_{\mathrm{app}}$ is quantified via maximum cosine similarity between the candidate feature $\mathbf{f}_i$ and the reference templates in repository $\mathcal{F}$:

$$S_{\mathrm{app}} = \max_{\mathbf{g} \in \mathcal{F}} \frac{\mathbf{f}_i \cdot \mathbf{g}}{\|\mathbf{f}_i\|_2 \, \|\mathbf{g}\|_2}$$

The temporal consistency score is defined as

$$S_{\mathrm{temp}} = \mathrm{IoU}\left(b_i, b^{\mathrm{trk}}_{t - \Delta t}\right) \cdot \exp\left(-\frac{\Delta t}{k}\right)$$

where $\mathrm{IoU}(\cdot)$ denotes intersection-over-union with the tracking box from $\Delta t$ frames earlier, $\Delta t$ represents elapsed frames since the last validated tracking update, and $k$ controls the temporal reliability decay rate (set empirically). This formulation introduces an exponential decay term to address temporal reliability degradation, where increasing $\Delta t$ induces progressive score attenuation. The decay constant $k$ governs the attenuation gradient: a smaller $k$ accelerates score reduction for long intervals. When $\Delta t \gg k$, the term effectively nullifies temporal consistency reliance, mandating appearance similarity dominance. The compound metric preserves short-term spatial coherence while preventing erroneous propagation of outdated positional priors in long-term tracking scenarios.

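The motion consistency $S_{\mathrm{mot}}$ evaluates positional alignment with the Kalman-filtered predictions from Section 3.2.

The optimal candidate is selected through thresholded maximization:

$$b^{*} = \arg\max_{b_i \,:\, c_i \geq \tau_{\mathrm{det}}} S_{\mathrm{total}}(b_i)$$

where $S_{\mathrm{total}}$ is the total confidence score and $\tau_{\mathrm{det}}$ enforces minimum detection confidence. The existence confidence of the optimal candidate is evaluated through threshold comparison:

$$E = \begin{cases} 1, & S_{\mathrm{total}}(b^{*}) \geq \tau_{\mathrm{exist}} \\ 0, & \text{otherwise} \end{cases}$$

where $\tau_{\mathrm{exist}}$ denotes the existence confirmation threshold. For high-confidence matches ($E = 1$), the feature repository undergoes FIFO-based template renewal using $\mathbf{f}^{*}$, the feature of $b^{*}$, as detailed in Section 3.3.3, while simultaneously resetting the persistence counter of the trajectory buffer to maintain temporal constraints. Conversely, when $E = 0$, indicating a potential target disappearance, the system initiates emergency protocols: (1) a complete reset of the trajectory buffer to prevent error propagation, and (2) rescheduling of the next detection interval.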

This unified scoring framework achieves robust target validation in aerial tracking scenarios through static weighting of spatial, temporal, and appearance cues, combining detection confidence, template similarity, decayed spatial consistency, and motion alignment in fixed proportions to address occlusion resilience, motion blur tolerance, and long-term tracking stability without requiring dynamic weight adaptation.
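The scoring and selection logic can be summarized in a short sketch. Everything numeric here is a placeholder: the equal weights, the decay constant `k`, and both thresholds are assumptions, since the tuned values are not recoverable from the text. The sigmoidal compression of the detection score is omitted for brevity, and the exponential form of the temporal term is inferred from the description of the decay behaviour.

```python
import math


def temporal_consistency(iou_prev, frames_elapsed, k=10.0):
    """IoU with the last validated tracking box, attenuated by exp(-dt / k)."""
    return iou_prev * math.exp(-frames_elapsed / k)


def composite_score(s_det, s_app, s_temp, s_mot, weights=(0.25, 0.25, 0.25, 0.25)):
    """Static weighted sum of the four criteria (placeholder weights)."""
    w_det, w_app, w_temp, w_mot = weights
    return w_det * s_det + w_app * s_app + w_temp * s_temp + w_mot * s_mot


def select_candidate(candidates, tau_det=0.5, tau_exist=0.6):
    """Return (index, score, exists) for the best candidate.

    Each candidate is a dict with keys 'det', 'app', 'temp', 'mot'.
    Candidates below tau_det are skipped; the target is considered
    present only if the best score reaches tau_exist.
    """
    best_index, best_score = None, 0.0
    for i, cand in enumerate(candidates):
        if cand["det"] < tau_det:
            continue
        score = composite_score(cand["det"], cand["app"], cand["temp"], cand["mot"])
        if best_index is None or score > best_score:
            best_index, best_score = i, score
    exists = best_index is not None and best_score >= tau_exist
    return best_index, best_score, exists
```

A result with `exists` equal to `False` corresponds to the disappearance branch in the text, where the trajectory buffer is reset and detection is rescheduled.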

3.5. Integrated Algorithmic Workflow

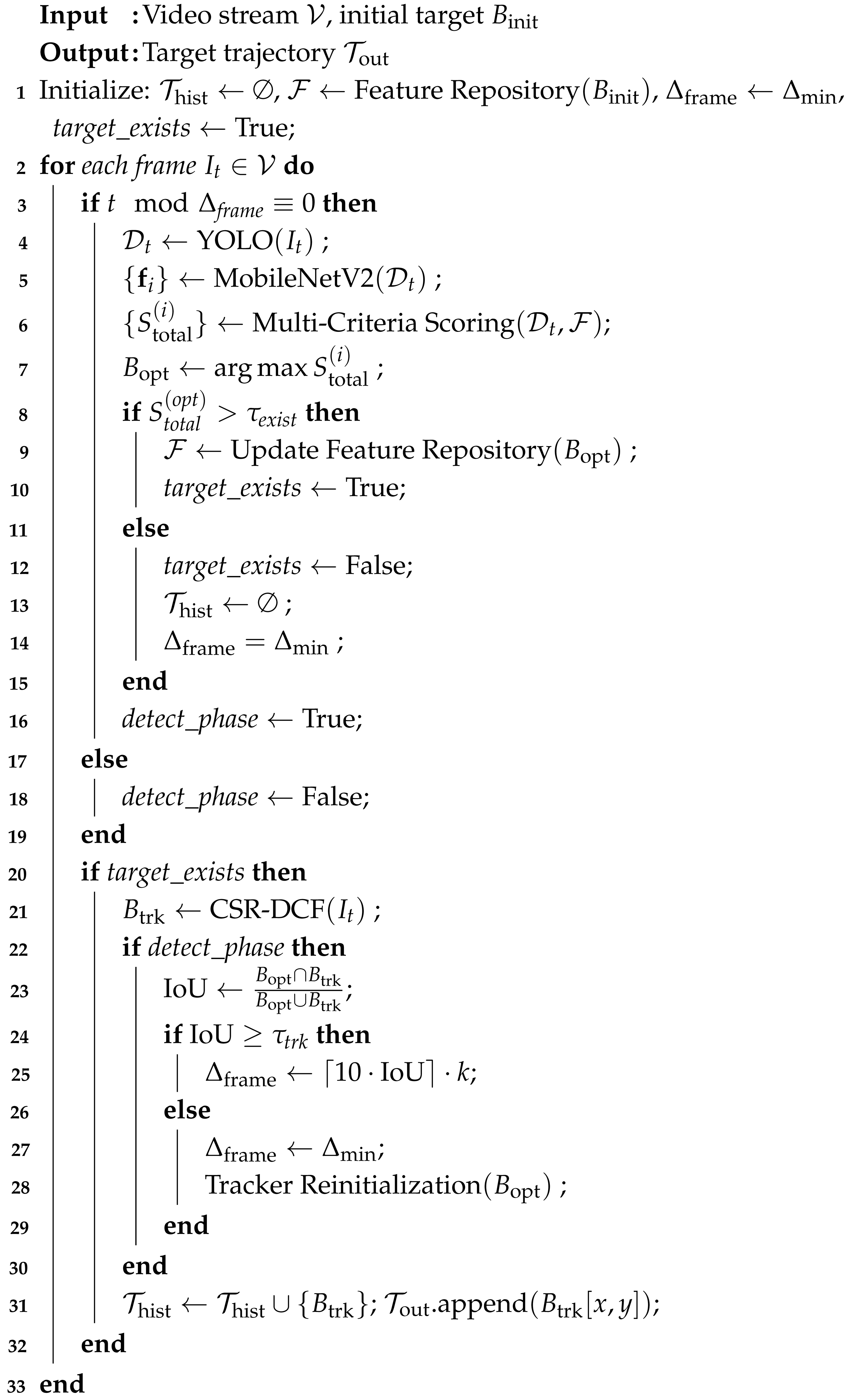

The proposed tracking framework operates through three cascaded decision layers, as formalized in Algorithm 1:

Algorithm 1: Enhanced tracking algorithm with existence check.

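Triggered at intervals of $N_{\mathrm{det}}$ frames, the detection layer produces candidate boxes, where geometric constraints filter non-human proposals. Each candidate is then scored by the composite score of Section 3.4, and the optimal candidate $b^{*}$ is selected by thresholded maximization.

Target persistence is validated via threshold comparison of the score of $b^{*}$ against $\tau_{\mathrm{exist}}$, which defines the existence confidence threshold. Negative verification triggers the disappearance protocol of Section 3.4, which operates only when the score falls below $\tau_{\mathrm{exist}}$.

The tracker-detector consensus is evaluated by the IoU between $b^{*}$ and the tracker’s current bounding box, with the adaptive control rules of Section 3.2, where $\tau_{\mathrm{trk}}$ establishes the tracking confidence threshold. Tracker reinitialization occurs when $\mathrm{IoU} < \tau_{\mathrm{trk}}$.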

4. Implementation

We deployed our tracking algorithm within a closed-loop aerial tracking pipeline (Figures 2 and 3) designed for autonomous target pursuit.

Figure 2. Real-time UAV visual tracking.

Figure 3. Implementation.

Conceptually, this pipeline:

- Captures real-time video via UAV-mounted cameras.

- Processes frames on edge nodes to execute tracking algorithms.

- Generates dynamic control commands for UAV trajectory adjustment.

This section details the hardware implementation and quantifies the system’s latency characteristics across these stages.

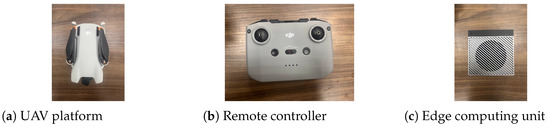

4.1. Hardware Platform

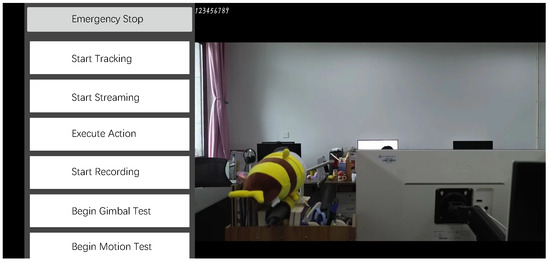

The system’s hardware architecture comprises three key components, shown in Figure 4. The UAV transmits video streams via RTSP to a custom decoder, which sends decoded YUV data through WebSocket to the edge server. Target coordinates (x, y, w, h) from the detection algorithms generate three-axis control commands: horizontal adjustment uses the deviation of the bounding box center, longitudinal control computes compensation from changes in target area, and altitude remains fixed for safety. Commands are queued and dispatched via a dedicated WebSocket thread. Control communication is implemented through a DJI MSDK-based Android app, shown in Figure 5.

Figure 4. System hardware components.

Figure 5. Custom Android application developed using DJI Mobile SDK (MSDK).
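The mapping from target coordinates to three-axis commands described in Section 4.1 can be sketched as a simple proportional controller. This is an illustrative assumption, not the deployed control law: the function name, the gains, the reference area, the normalization to the range [-1, 1], and the top-left `(x, y, w, h)` convention are all hypothetical.

```python
def clamp(value, low=-1.0, high=1.0):
    """Limit a command to a normalized range."""
    return max(low, min(high, value))


def control_command(box, ref_area, frame_size=(1920, 1080), k_yaw=1.0, k_forward=1.0):
    """Map a target box (x, y, w, h) to normalized three-axis commands.

    Horizontal command: deviation of the box center from the frame center.
    Longitudinal command: relative change of the target area against a
    reference area (a smaller target means the UAV should move closer).
    Vertical command: fixed at zero, since altitude is held for safety.
    """
    x, _, w, h = box
    frame_w, _ = frame_size
    center_x = x + w / 2.0
    horizontal_error = (center_x - frame_w / 2.0) / (frame_w / 2.0)
    area_error = (ref_area - w * h) / ref_area
    return {
        "yaw": clamp(k_yaw * horizontal_error),
        "forward": clamp(k_forward * area_error),
        "vertical": 0.0,
    }
```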

4.1.1. UAV Platform

The DJI Mini 3 UAV (SZ DJI Technology Co., Ltd., Shenzhen, China) served as the primary aerial sensing platform, equipped with a 1/1.3-inch CMOS sensor supporting 4K/30 fps video acquisition. Configured to stream 1080p (1920 × 1080) YUV 4:2:0 video via its three-axis mechanical gimbal, the system achieved stabilized dynamic scene capture. A hybrid positioning system integrating dual-frequency GPS and a six-axis IMU provided geodetic coordinates under the WGS84 datum with ±1.5 m horizontal positioning accuracy.

4.1.2. Remote Controller

The remote control system consisted of a DJI RC231 controller and an Android application developed with DJI MSDK. The RC231 received the UAV’s real-time 1080p@30fps video feed via OcuSync 2.0 and transmitted the video stream to the Android terminal through USB-C. The application employed the WebSocket protocol to send compressed video frames to the edge server, which performed tracking analysis and returned control commands (e.g., yaw adjustment, speed modulation). These commands were executed on the UAV through DJI-specific APIs such as sendVirtualStickCommand.

4.1.3. Edge Computing Unit

The edge computing platform utilized the NVIDIA Jetson Orin Developer Kit (NVIDIA Corporation, Santa Clara, CA, USA; 12-core ARM Cortex-A78AE CPU @ 2.0 GHz, 2048-core Ampere GPU (4.0 TFLOPS), 32 GB LPDDR5 RAM (204 GB/s), 64 GB eMMC storage) running Ubuntu 20.04.6 LTS (Focal Fossa) with Linux kernel 5.10.65. Its heterogeneous architecture combined a multi-core ARM CPU and a high-performance Ampere GPU, supported by high-bandwidth memory and solid-state storage. The software stack utilized CUDA 11.4 for GPU acceleration, OpenCV 4.5.5 for computer vision routines, and Python 3.8.10 for algorithmic implementation.

4.2. Latency Characteristics

The end-to-end latency stems from three physical processes as detailed in Table 1.

Table 1. Latency profiling of operational pipeline.

The round-trip latency of the control pipeline decomposes into three sequential stages. Video acquisition covers sensor readout and OcuSync transmission. Data processing covers YUV 4:2:0 to RGB conversion, while edge communication is dominated by network variability. The total uncertainty is obtained by root-sum-of-squares (RSS) propagation of the individual stage deviations.
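The RSS propagation works as follows: stage means add linearly, while stage deviations add in quadrature, which assumes the stages vary independently. The sketch below uses a hypothetical function name, and the numbers in the usage note are illustrative placeholders, not the measured latencies of Table 1.

```python
import math


def combine_latency(stages):
    """Combine (mean_ms, std_ms) pairs of sequential, independent stages.

    Returns (total_mean_ms, total_std_ms), where the total deviation is
    the root sum of squares of the stage deviations.
    """
    total_mean = sum(mean for mean, _ in stages)
    total_std = math.sqrt(sum(std * std for _, std in stages))
    return total_mean, total_std
```

For example, three stages with placeholder values of 100 ± 3 ms, 20 ± 4 ms, and 50 ± 12 ms combine to 170 ± 13 ms, because the square root of 9 + 16 + 144 is 13.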

5. Evaluation

We evaluated the performance of the proposed tracking-detection-scoring framework, focusing on filter-based trackers. These conventional trackers are enhanced with low computational overhead to enable effective real-time UAV deployment. Experimental analysis follows.

5.1. Experimental Setup

This subsection systematically describes the benchmark datasets and algorithmic parameter configurations, with hardware specifications detailed in Section 4.1.3.

5.1.1. Datasets

VOT-ST2019 [26] contains 60 short-term sequences annotated with rotated bounding boxes, explicitly incorporating six critical UAV challenges: occlusion, motion blur, viewpoint changes, scale variations, target disappearance/reappearance, and illumination changes.

OTB100 [27] provides 100 long-term sequences with axis-aligned bounding boxes and 11 challenge tags (e.g., scale and illumination changes). Its extended temporal span (500+ frames per sequence) validates tracking consistency under simulated UAV communication delays (200–500 ms).

5.1.2. Parameter Settings

The algorithmic hyperparameters were empirically validated on benchmark datasets, with critical thresholds configured as follows. Threshold governs target validation, consistent with tracking standards where typically indicates correct localization. Threshold filters low-quality trajectories, enforcing strict positioning to maintain targets near bounding box centroids for stable tracking.

A sliding temporal window spanning seven consecutive frames enforces short-term motion consistency, and the feature repository maintains 20 prototypical feature vectors through FIFO replacement to balance memory against representation quality. The sliding window relies on strong temporal correlation and therefore discards outdated motion data, whereas the feature repository stores long-term, high-confidence features to mitigate appearance drift; its size is constrained to avoid excessive computational burden.

For the composite scoring metric in Equation (13), grid search optimization yielded one weight coefficient for each component: detection confidence, appearance similarity, motion consistency, and temporal consistency. The optimal parameter settings vary across datasets and scenarios; the values used here are empirically determined defaults that typically yielded the best overall accuracy in our experimental evaluations.

5.2. Evaluation Metrics

Expected Average Overlap (EAO): Quantifies the expected average intersection-over-union (IoU) between predicted and ground-truth bounding boxes over the entire sequence, measuring long-term tracking robustness.

Failure Rate: Defined as the ratio of frames in which the overlap with the ground truth falls below the failure criterion. The absence of reset protocols makes this metric more rigorous, as temporary tracking failures cannot be artificially recovered.

Frames Per Second (FPS): The FPS quantifies the computational throughput of tracking algorithms by measuring processed frames per unit time.
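The simpler quantities behind these metrics can be computed from per-frame IoU values, as in the sketch below. The function names are hypothetical, the failure criterion (IoU of zero by default) is an assumption, and EAO itself is not implemented here because it follows the full VOT evaluation protocol rather than a single-sequence average.

```python
def success_rate(ious, threshold):
    """Fraction of frames whose IoU exceeds the overlap threshold."""
    if not ious:
        return 0.0
    return sum(1 for v in ious if v > threshold) / len(ious)


def success_curve(ious, thresholds):
    """Success rate at each overlap threshold, as plotted in a success plot."""
    return [success_rate(ious, t) for t in thresholds]


def failure_rate(ious, fail_threshold=0.0):
    """Fraction of frames at or below the failure criterion (assumed IoU <= 0)."""
    if not ious:
        return 0.0
    return sum(1 for v in ious if v <= fail_threshold) / len(ious)


def frames_per_second(num_frames, elapsed_seconds):
    """Computational throughput: processed frames per unit time."""
    return num_frames / elapsed_seconds
```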

5.3. Quantitative Comparison

To rigorously evaluate our enhancement framework’s generalization capability, we conducted cross-dataset validations on both the VOT-ST2019 [26] and OTB100 [27] benchmarks, applying our methodology to four baseline trackers: CSR-DCF [17], Kernelized Correlation Filters (KCF) [28], the Multiple Instance Learning tracker (MIL) [29], and Generic Object Tracking Using Regression Networks (GOTURN) [30]. The following analysis systematically quantifies performance improvements across different tracking paradigms.

5.3.1. Experimental Analysis on VOT-ST2019

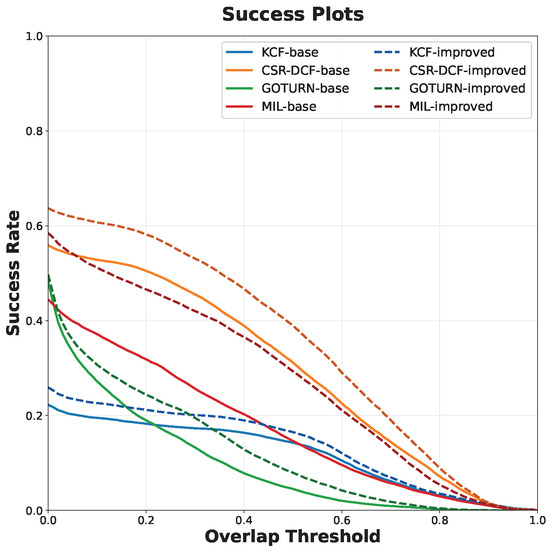

Our framework achieved a 27.94% EAO gain and 10.39% failure reduction on VOT-ST2019 by dynamically weighting detection-tracking outputs and integrating Kalman-filtered motion vectors. This enabled sustained 23–95 FPS tracking during occlusions. The success plots in Figure 6 reveal critical insights into tracking precision enhancement under our proposed methodology.

Figure 6.

Success plots of trackers with IoU metrics on VOT-ST2019 [26].

The success rate curves exhibit systematic improvements across all overlap thresholds (0.2–0.8), with particularly notable gains in high-precision regimes (>0.5 IoU). For CSR-DCF-improved, the success rate at increases from 45.5% to 53.1% (relative improvement: 16.7%), indicating enhanced capability to maintain precise target localization. The performance enhancement stems from the synergistic integration between the detection–feature extraction module and the multi-criteria scoring architecture. The detection module employs categorical identification to discern target classes, while the feature extraction submodule generates discriminative embeddings through deep representation learning. These visual signatures are subsequently combined with motion consistency metrics and temporal correlation analyses in our multi-dimensional scoring module (Section 3.4) to compute composite confidence scores.
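For reference, a success curve of this kind can be computed from per-frame IoU values as sketched below. The overlap values are made-up toy data, and the strict inequality at the threshold is an assumption about the convention used.

```python
def success_rate(overlaps, threshold):
    """Fraction of frames whose IoU exceeds the given overlap threshold."""
    return sum(o > threshold for o in overlaps) / len(overlaps)


def success_curve(overlaps, thresholds):
    """Success rate evaluated at each overlap threshold."""
    return [(t, success_rate(overlaps, t)) for t in thresholds]


# Toy per-frame IoU values (illustrative only, not results from the paper).
overlaps = [0.9, 0.75, 0.6, 0.45, 0.3, 0.1]
thresholds = [i / 10 for i in range(2, 9)]  # 0.2 to 0.8, the range discussed above

for threshold, rate in success_curve(overlaps, thresholds):
    print(f"IoU > {threshold:.1f}: {rate:.2f}")
```

A tracker that improves mainly at higher thresholds, as reported above, shifts the right-hand part of this curve upward.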

Our experimental validation on VOT-ST2019 (Table 2) demonstrates consistent improvements in both Expected Average Overlap (EAO gains of 19.17–60.47%) and failure rate (reductions of 3.39–22.04%), while incurring latency penalties of only 0.64% to 8.88% across different tracker configurations. The framework with CSR-DCF achieves these advancements while maintaining real-time operational capability (>23 FPS), establishing a favorable trade-off between tracking precision, computational efficiency, and long-term robustness in aerial surveillance scenarios.

Table 2.

Performance comparison on VOT-ST2019 [26] with improvement rates.

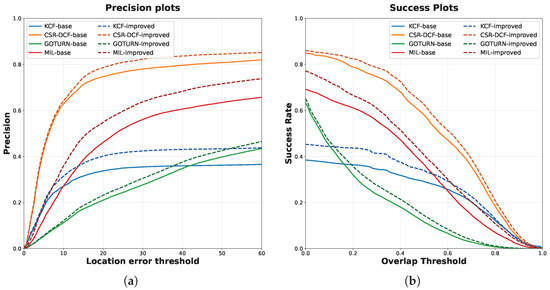

5.3.2. Experimental Analysis on OTB100

As demonstrated in Figure 7 and Table 3, our framework exhibits systematic performance improvements on the OTB100 benchmark. Experimental results reveal significant enhancements in Expected Average Overlap (EAO) ranging from 5.88% to 62.39% across improved trackers. Particularly noteworthy is the deep learning-based GOTURN tracker, which achieves a remarkable 62.39% EAO improvement (0.117 → 0.190), attributed to the synergistic optimization between motion consistency scoring mechanisms and deep feature representations. This enhancement maintains practical applicability through controlled frame rate reduction of merely 5.14% via dynamic detection scheduling, while simultaneously demonstrating the framework’s compatibility with deep learning paradigms.
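The improvement rates quoted in this section are relative changes with respect to the baseline value. The short check below reproduces two figures stated in the text; the helper name `relative_change_percent` is introduced here for illustration only.

```python
def relative_change_percent(baseline, improved):
    """Relative change of `improved` with respect to `baseline`, in percent."""
    return (improved - baseline) / baseline * 100.0


# GOTURN EAO on OTB100, as reported above: 0.117 -> 0.190
goturn_eao_gain = relative_change_percent(0.117, 0.190)

# CSR-DCF success rate on VOT-ST2019, as reported in Section 5.3.1: 45.5% -> 53.1%
csrdcf_success_gain = relative_change_percent(45.5, 53.1)

print(f"{goturn_eao_gain:.2f}%  {csrdcf_success_gain:.1f}%")
```

Both values agree with the reported 62.39% and 16.7% once rounded.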

Figure 7.

Performance evaluation on OTB100 benchmark. (a) Precision plot comparison on OTB100 dataset. (b) Success plot comparison on OTB100 dataset.

Table 3.

Performance comparison on OTB100 [27] with improvement rates.

Crucially, all enhanced algorithms maintain real-time operation (>14 FPS), with CSR-DCF and KCF preserving deployment feasibility at 30.83 FPS and 95.89 FPS, respectively, while achieving their respective EAO improvements. These results collectively validate the framework’s capability to enhance both traditional and deep learning-based tracking paradigms while maintaining operational practicality for aerial platforms.

5.4. Visualizing Algorithmic Gains

This section presents a visual comparative analysis conducted on the VOT-ST2019 dataset [26] to demonstrate our framework’s tracking enhancement capabilities. The evaluation focuses on two representative sequences exhibiting distinct challenges.

5.4.1. Pedestrian Interaction Scenario (Girl Sequence)

Figure 8 illustrates tracking performance under occlusion and scale variation. Our method exhibits significantly enhanced target re-acquisition capability during occlusion events compared to baseline methods. This performance advantage is empirically demonstrated through the sequential frames presented in Figure 8. When a white-clad male completely occludes the target (girl) in the test sequence, the baseline CSR-DCF method erroneously locks onto the distractor, as evidenced by bounding box drift toward the occluder—a failure attributed to insufficient discriminative feature persistence. Our framework addresses occlusion by maintaining a high-fidelity feature bank through discriminative scoring. Upon target loss, the detection mechanism rapidly identifies tracking inconsistencies and initiates re-detection to recover the true target. This architectural integration enables real-time identification of tracker failure states through continuous confidence metric analysis. Upon occlusion resolution (target fully re-emerging at Frame 121), the system demonstrates rapid recovery capability, achieving correct target reacquisition within 5 frames (Frame 126), thereby confirming the efficacy of our reinitialization protocol.
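The failure-detection and recovery behaviour described above can be pictured as a small two-state monitor driven by the per-frame confidence score. The sketch below is only a plausible reading of that logic: the class name, the thresholds `lose_below` and `recover_above`, and the `patience` count are hypothetical values, not parameters from the paper.

```python
from enum import Enum


class State(Enum):
    TRACKING = "tracking"
    LOST = "lost"


class FailureMonitor:
    """Hypothetical monitor that flags tracker failure from confidence scores."""

    def __init__(self, lose_below=0.3, recover_above=0.6, patience=3):
        self.lose_below = lose_below        # assumed failure threshold
        self.recover_above = recover_above  # assumed re-acquisition threshold
        self.patience = patience            # consecutive low frames before LOST
        self.state = State.TRACKING
        self._low_streak = 0

    def update(self, confidence):
        """Consume one confidence value and return the resulting state."""
        if self.state is State.TRACKING:
            if confidence < self.lose_below:
                self._low_streak += 1
            else:
                self._low_streak = 0
            if self._low_streak >= self.patience:
                # Sustained low confidence: hand over to re-detection.
                self.state = State.LOST
        elif confidence >= self.recover_above:
            # A confident re-detection re-initializes the tracker.
            self.state = State.TRACKING
            self._low_streak = 0
        return self.state


monitor = FailureMonitor()
confidences = [0.9, 0.8, 0.2, 0.1, 0.2, 0.1, 0.7, 0.9]
states = [monitor.update(c) for c in confidences]
print([s.value for s in states])
```

Requiring several consecutive low-confidence frames before declaring a loss is one way to avoid triggering re-detection on a single noisy frame; separate thresholds for losing and recovering add hysteresis.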

Figure 8.

Tracking performance on VOT-ST2019 girl sequence: comparative analysis of CSR-DCF and enhanced CSR-DCF. Green (ground truth), red (CSR-DCF-improved), and blue (baseline CSR-DCF).

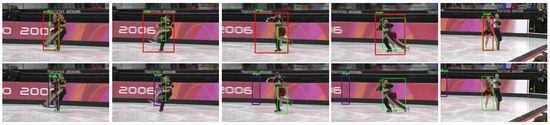

5.4.2. Dynamic Sports Scenario (iceskater2 Sequence)

As illustrated in Figure 9, our enhanced tracker maintains exceptional motion consistency during rapid rotations while preserving positional accuracy under high-speed motion. Comparative analysis reveals critical improvements in positional precision under high-speed motion, target retention under strict overlap constraints, and failure recovery robustness during abrupt motion discontinuities. This capability is rigorously validated in rotational entanglement scenarios where two ice-skaters perform prolonged embracing spins—under sustained heavy occlusion conditions, our enhanced CSR-DCF maintains continuous tracking of the target female skater, while the baseline method catastrophically drifts to the distractor. Crucially, during separation phases, our system demonstrates discriminative identification precision, successfully reacquiring the designated target through quality-driven feature prioritization as detailed in Section 3.3.3. This discriminative capacity originates from our multi-dimensional scoring mechanism that adaptively weights high-fidelity features while suppressing transient interference patterns during repository updates.

Figure 9.

Tracking performance on VOT-ST2019 iceskater2 sequence: comparative analysis of CSR-DCF and enhanced CSR-DCF. Green (ground truth), red (CSR-DCF-improved), and blue (baseline CSR-DCF).

6. Conclusions

Our framework achieves a 27.94% EAO gain and a 10.39% failure rate reduction (Tables 2 and 3) through three core innovations. First, adaptive detection-tracking coordination enables automatic failure recovery with <10% latency overhead: detection modules identify tracking failures to trigger target re-acquisition, while a detection frequency regulated by tracking quality optimizes computational efficiency. Second, motion-aware spatiotemporal modeling (Section 3.2) maintains continuity during occlusions by preserving motion cues for predictive position estimation. Third, quality-driven feature banks mitigate long-term appearance drift through discriminative template management: high-fidelity templates preserve multi-angle representations and enable continuous temporal renewal (Section 5.4.2).

Experimental validation confirms our framework’s capability to enhance various trackers (correlation filter-based and deep learning-based) across challenging aerial scenarios. Real-time performance varies (23–30 FPS) due to computational surges during target re-acquisition, triggered by tracking interruptions in visually distorted scenes. Future work will extend this architecture to multi-target scenarios and integrate domain adaptation mechanisms for adverse weather operations.

Author Contributions

Conceptualization, Y.W.; formal analysis, Y.W.; investigation, Y.W.; methodology, Y.W.; software, Y.W. and J.H.; supervision, H.H. and Z.Z.; validation, Y.W. and D.H.; writing—original draft, Y.W.; writing—review and editing, H.H. and Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 62372094) and the Natural Science Foundation of Sichuan Province (2025YFHZ0197).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- De Smedt, F.; Hulens, D.; Goedeme, T. On-Board Real-Time Tracking of Pedestrians on a UAV. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Boston, MA, USA, 7–12 June 2015.

- Scherer, J.; Yahyanejad, S.; Hayat, S.; Yanmaz, E.; Andre, T.; Khan, A.; Vukadinovic, V.; Bettstetter, C.; Hellwagner, H.; Rinner, B. An Autonomous Multi-UAV System for Search and Rescue. In Proceedings of the First Workshop on Micro Aerial Vehicle Networks, Systems, and Applications for Civilian Use, Florence, Italy, 18 May 2015; DroNet ’15. Association for Computing Machinery: New York, NY, USA, 2015; pp. 33–38.

- Hao, J.; Zhou, Y.; Zhang, G.; Lv, Q.; Wu, Q. A Review of Target Tracking Algorithm Based on UAV. In Proceedings of the 2018 IEEE International Conference on Cyborg and Bionic Systems (CBS), Shenzhen, China, 25–27 October 2018; pp. 328–333.

- Yang, C.; Yang, M.; Li, H.; Jiang, L.; Suo, X.; Mao, L.; Meng, W.; Li, Z. A survey on soccer player detection and tracking with videos. Vis. Comput. 2024, 41, 815–829.

- Carli, R.; Cavone, G.; Epicoco, N.; Di Ferdinando, M.; Scarabaggio, P.; Dotoli, M. Consensus-Based Algorithms for Controlling Swarms of Unmanned Aerial Vehicles. In Ad-Hoc, Mobile, and Wireless Networks: 19th International Conference on Ad-Hoc Networks and Wireless, ADHOC-NOW 2020, Bari, Italy, 19–21 October 2020; Proceedings; Springer: Berlin/Heidelberg, Germany, 2020; pp. 84–99.

- Yang, J.; Tang, W.; Ding, Z. Long-Term Target Tracking of UAVs Based on Kernelized Correlation Filter. Mathematics 2021, 9, 3006.

- Sun, N.; Zhao, J.; Shi, Q.; Liu, C.; Liu, P. Moving Target Tracking by Unmanned Aerial Vehicle: A Survey and Taxonomy. IEEE Trans. Ind. Inform. 2024, 20, 7056–7068.

- Fu, C.; Ye, J.; Xu, J.; He, Y.; Lin, F. Disruptor-Aware Interval-Based Response Inconsistency for Correlation Filters in Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2021, 59, 6301–6313.

- Zhang, F.; Ma, S.; Qiu, Z.; Qi, T. Learning target-aware background-suppressed correlation filters with dual regression for real-time UAV tracking. Signal Process. 2022, 191, 108352.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the Computer Vision—ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Hua, G., Jégou, H., Eds.; Springer: Cham, Switzerland, 2016; pp. 850–865.

- Sun, X.; Wang, Q.; Xie, F.; Quan, Z.; Wang, W.; Wang, H.; Yao, Y.; Yang, W.; Suzuki, S. Siamese Transformer Network: Building an autonomous real-time target tracking system for UAV. J. Syst. Archit. 2022, 130, 102675.

- Marvasti-Zadeh, S.M.; Cheng, L.; Ghanei-Yakhdan, H.; Kasaei, S. Deep Learning for Visual Tracking: A Comprehensive Survey. IEEE Trans. Intell. Transp. Syst. 2022, 23, 3943–3968.

- Huang, L.; Zhao, X.; Huang, K. GlobalTrack: A Simple and Strong Baseline for Long-Term Tracking. Proc. AAAI Conf. Artif. Intell. 2020, 34, 11037–11044.

- Wu, H.; Yang, X.; Yang, Y.; Liu, G. Flow Guided Short-Term Trackers with Cascade Detection for Long-Term Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Nam, H.; Han, B. Learning Multi-Domain Convolutional Neural Networks for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese Visual Tracking with Very Deep Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Lukezic, A.; Vojir, T.; Cehovin Zajc, L.; Matas, J.; Kristan, M. Discriminative Correlation Filter with Channel and Spatial Reliability. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Kristan, M.; Matas, J.; Leonardis, A.; Felsberg, M.; Cehovin, L.; Fernandez, G.; Vojir, T.; Hager, G.; Nebehay, G.; Pflugfelder, R. The Visual Object Tracking VOT2015 Challenge Results. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) Workshops, Santiago, Chile, 7–13 December 2015.

- Wu, Y.; Lim, J.; Yang, M.H. Online Object Tracking: A Benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Proceedings of Machine Learning Research. Chaudhuri, K., Salakhutdinov, R., Eds.; PMLR: New York, NY, USA, 2019; Volume 97, pp. 6105–6114.

- Koonce, B. SqueezeNet. In Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; Apress: Berkeley, CA, USA, 2021; pp. 73–85.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features From Cheap Operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

- Kristan, M.; Matas, J.; Leonardis, A.; Felsberg, M.; Pflugfelder, R.; Kamarainen, J.K.; Cehovin Zajc, L.; Drbohlav, O.; Lukezic, A.; Berg, A.; et al. The Seventh Visual Object Tracking VOT2019 Challenge Results. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- Babenko, B.; Yang, M.H.; Belongie, S. Visual tracking with online Multiple Instance Learning. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 983–990.

- Held, D.; Thrun, S.; Savarese, S. Learning to Track at 100 FPS with Deep Regression Networks. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 749–765.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).