Abstract

This review evaluates the use of unmanned aerial vehicles (UAVs) for detecting and managing water stress in specialty crops through thermal, multispectral, and hyperspectral imaging. Based on 104 scholarly articles published from 2012 to 2024, it highlights the advantages, limitations, and evolution of these imaging systems. Among the crops covered, vineyards are the most studied for precision irrigation. The paper traces the shift from standalone imaging to multi-sensor fusion approaches that integrate vegetation indices and machine learning models for improved accuracy, resolution, and real-time stress assessment. It also addresses knowledge gaps such as scalability, payload constraints, and computational demands. Factors such as flight altitude, sensor angle, and lighting conditions can introduce data inconsistencies that affect water stress detection and decision-making. Emerging technologies such as LiDAR, AI, and machine learning are proposed to enhance UAV data processing and stress detection. Future research should focus on automated data correction, multi-sensor fusion, and AI-driven real-time analysis to address sensor calibration and environmental factors. The review also advocates integrating UAV data with satellite and ground sensors into smart irrigation systems to create a multi-scale monitoring framework, thereby advancing precision agriculture and water resource management.

1. Introduction

Changing climatic conditions and the urgent need to enhance food production to feed the growing global population, projected to reach 9.7 billion by 2050, make effective crop management crucial [1]. To meet this demand, food production must increase by 25–70%, with per-hectare yields potentially needing to double by 2050 [2,3]. However, global climate change, natural resource depletion, and anthropogenic activities pose significant challenges to agricultural sustainability, often leading to reduced yields [4]. In this context, precision agriculture has emerged as a promising approach to maintaining crop yields under varying growing conditions.

Precision agriculture relies extensively on remote sensing technologies to enable real-time field monitoring through imaging [5]. Historically, these images were primarily acquired via manned aircraft or satellites. However, recent technological advancements have led to a rapid increase in the use of unmanned aerial vehicles (UAVs) or drones for such applications [5]. UAVs offer numerous advantages, including fast, easy, and cost-effective data collection compared to traditional methods. Furthermore, their ultra-high-resolution data (a few centimeters) allow for precise mapping and monitoring of crop fields, enhancing resource use efficiency in agriculture [5]. Studies have demonstrated the exceptional potential of UAVs for a range of agricultural applications, including non-invasive diagnostics of abiotic and biotic stresses, automated phenotyping, nutrient deficiency assessments, and predictions of crop yield and quality [6,7,8,9].

Abiotic stress significantly impacts plant growth, yield, and quality. Plants respond to both biotic and abiotic stresses through a variety of morphological, physiological, and metabolic changes, occurring across spatial scales from the DNA level (nanometers) to the entire field (kilometers) [10]. At the field scale, non-destructive and quantitative UAV remote sensing methods are gaining popularity. UAVs have revolutionized crop management under abiotic stress conditions, advancing remote sensing and precision agriculture to new heights. These technologies not only facilitate the early detection of crop stress but also enable data collection across entire fields to monitor physiological responses throughout the growing season [10].

Numerous reviews have explored the applications of UAVs in managing crop stress. For example, in [11], the authors examined UAV-equipped remote sensors for monitoring crop water stress, highlighting the utility of indices derived from leaf and canopy reflectance and temperature. Their review also emphasized the effectiveness of UAV thermal imagery in capturing field stress variability and suggested that incorporating chlorophyll fluorescence measurements could enhance the accuracy of water stress detection [11]. Similarly, in [12], the authors reviewed UAV applications for monitoring abiotic and biotic stresses, including water stress, nutrient stress, pest and disease detection, and weed management. The review identified critical considerations, such as selecting appropriate imaging types, accounting for external factors, and ensuring access to high-quality ground truth data. In [12], the authors also noted that advancements in UAV technologies and machine learning techniques could address these challenges in the near future.

In a meta-review [13], the authors assessed the capacity of UAV remote sensing for water stress detection, identifying thermal imaging as the most effective approach for such applications. They highlighted the utility of thermal and vegetation indices, particularly the Crop Water Stress Index (CWSI), as reliable tools for real-time irrigation management [13]. In [14], the authors expanded on these findings, emphasizing the role of multispectral, hyperspectral, and thermal imaging technologies in accurately detecting crop water stress. They also discussed the integration of UAV data with machine learning algorithms to enhance the precision and efficiency of water stress monitoring, providing a promising pathway for sustainable water management in agriculture [14]. Furthermore, in [15], the authors reviewed the application of high-throughput phenotyping using various imaging technologies, such as RGB, multispectral, and hyperspectral imaging. While their study highlighted the advantages and limitations of these technologies and explored machine learning advancements, it primarily focused on field crops, leaving a gap in research specific to specialty crops [15].

Despite the extensive body of work on UAV applications for abiotic stress detection, studies focusing on specialty crops remain limited [16]. Specialty crops—such as fruits, vegetables, tree nuts, dried fruits, and horticultural and floricultural crops—are cultivated for their unique attributes, including taste, appearance, and nutritional value. These crops play a significant role in the U.S. agricultural economy, accounting for approximately USD 84 billion in cash receipts in 2022 [17]. Due to their high value and profitability, specialty crops require intensive management, particularly under water stress conditions, which can severely reduce yields and compromise produce quality.

Water stress in specialty crops diminishes essential secondary metabolites responsible for flavor, aroma, and health benefits, hampers physiological processes such as photosynthesis and nutrient uptake, and increases susceptibility to pests and diseases [18,19,20,21,22]. These challenges highlight the critical need for efficient water resource management to balance water conservation with maintaining crop quality and productivity. Although UAV-based technologies have been extensively reviewed for non-specialty crops and specific specialty crops like fruit and nut orchards, comprehensive studies addressing a broader range of specialty crops are lacking [16].

Recent studies have explored future possibilities for UAV-based water stress detection in horticultural crops. For instance, in [23], the authors discussed UAV applications in detecting water stress and irrigation planning, while in [24], the authors examined water requirements for horticultural crops and proposed nature-based solutions such as mulching, organic amendments, and advanced technologies like sensors and predictive modeling to improve water management. However, these studies often focus on specific crops or general water stress management, leaving a significant gap in the literature regarding UAV applications across a diverse range of specialty crops.

Given the economic importance and management challenges of specialty crops, a focused review is essential. This paper aims to examine the current state of UAV technology based on thermal, multispectral, hyperspectral imaging, and fusion approaches for detecting water stress in specialty crops, highlight its potential for enhancing precision agriculture practices, and identify gaps in the existing research. It also proposes future research directions to optimize water stress management in these high-value crops through advanced remote sensing techniques.

2. Materials and Methods

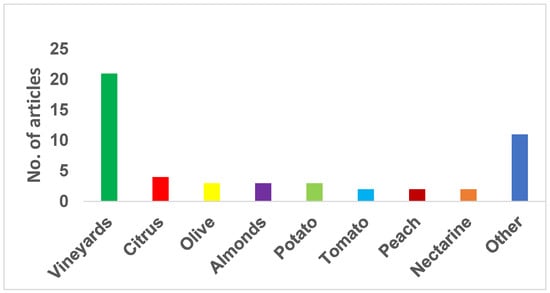

The literature for this review was sourced from Google Scholar. Keywords used in the search included “drone” or “UAV” or “UAVs” (both full terms and abbreviations) combined with “water stress” in specialty crops. The selected articles included peer-reviewed original research presenting field data on specialty crops, as well as recent meta-analyses. The search covered publications from 2012 to 2024, resulting in the collection of 104 papers for this review. The specialty crops covered in this review article included grapes (Vitis vinifera), olives (Olea europaea), almonds (Prunus dulcis), pistachios (Pistacia vera), pecans (Carya illinoinensis), nectarines (Prunus persica), peaches (Prunus persica), apples (Malus domestica), oranges (Citrus × sinensis), citrus (Citrus spp.), pomegranates (Punica granatum), strawberries (Fragaria × ananassa), African eggplant (Solanum aethiopicum), potatoes (Solanum tuberosum), tomatoes (Solanum lycopersicum), spinach (Spinacia oleracea), lettuce (Lactuca sativa), and tea (Camellia sinensis) (Figure 1). The structure and organization of this review paper are shown in Figure 2.

Figure 1. Distribution of scientific articles used in this review paper across different specialty crops.

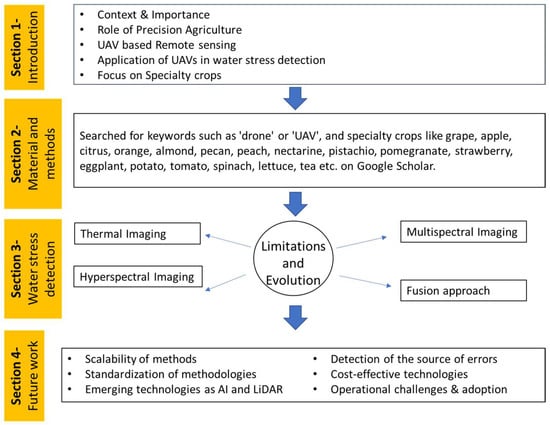

Figure 2. A stepwise framework illustrating the organization of the review paper, encompassing key sections from the introduction to future research directions on UAV-based water stress detection in specialty crops.

3. Water Stress Management

Agriculture accounts for approximately 70% of global water consumption, with nearly 45% of the world’s food production dependent on irrigated lands [11]. This underscores the critical importance of efficient irrigation management to optimize water use and sustain agricultural productivity. UAVs have emerged as powerful tools for monitoring water stress and assessing crop water status. By leveraging advanced sensing technologies, UAVs provide accurate, real-time data that are pivotal for the effective management of irrigation resources.

Water stress detection in crops primarily relies on two methods: temperature-based and reflectance-based indices. Temperature-based indices are particularly valuable for identifying pre-visual plant stress responses, enabling early intervention. Conversely, reflectance-based indices are more effective at detecting late-stage stress, which may already impact crop performance [25]. UAVs equipped with multispectral and thermal imaging sensors, in combination with ground-based sensors, offer a robust platform for automating irrigation practices. These technologies are further enhanced by the Internet of Things (IoT), which serves as a transformative approach to sustainable crop management through data-driven irrigation strategies [26].

The integration of automation and computational tools presents opportunities to develop smart technologies capable of real-time data processing and informed decision-making. This review explores the principles and applications of UAV-based imaging technologies, including airborne thermal imaging, multispectral imaging, hyperspectral imaging, and the fusion of these modalities. By examining their roles in precision water management, the subsequent sections highlight both the potential and limitations of these advancements in revolutionizing irrigation management and supporting sustainable agricultural practices.

3.1. Thermal Imaging

Thermal imaging is a remote sensing technique that measures heat emitted by objects and converts it into temperature data [27]. In plants, water stress often induces stomatal closure to reduce transpiration, leading to an increase in leaf temperature. This temperature rise can be reliably detected using various kinds of thermal imaging cameras, making it an effective tool for identifying water stress conditions (Figure 3, Table 1) [23,25].

Figure 3. Thermal cameras used for remote sensing applications: FLIR TAU II (FLIR Systems, Wilsonville, OR, USA), Thermoteknix Miricle 370 K (Thermoteknix Systems Ltd., Waterbeach, Cambridge, UK), FLIR A65 (FLIR Systems, Wilsonville, OR, USA), and FLIR A35 TIR (FLIR Systems, Wilsonville, OR, USA).

Table 1. Specifications of various thermal imaging camera models, including resolution, wavelength, scale, temporal resolution, and waveband characteristics.

Water stress is a critical factor affecting the yield and quality of specialty crops, including grapes, olives, orchard trees, and nut trees. In high-value crops like grapes, the adoption of remote sensing technologies has significantly advanced the field of precision viticulture [36]. Since water plays a crucial role in determining grape yield and berry composition, thermal imaging technologies have become integral for monitoring water status. Numerous studies have demonstrated the successful application of drone-based thermal imaging for water management in vineyards [36,37,38]. Furthermore, climate change has heightened the importance of implementing deficit irrigation strategies and precision irrigation management to enhance water use efficiency. In heterogeneous fields, the initial step in precision water management is the identification of areas most sensitive or resistant to water stress. High-resolution remote sensing has proven effective for assessing water status and crop performance, particularly in commercial orchards with multiple crop species, thereby enabling optimized irrigation practices tailored to spatial variability within fields.

Accurate assessment of plant water status is critical, as it directly influences plant yield and quality. Advances in UAV thermal imaging have revolutionized water stress monitoring, allowing for high-resolution data collection at greater temporal and spatial scales [39]. Traditional methods for detecting water stress are often limited by variable field conditions, inefficiency, and labor-intensive processes [14]. Similarly, widely used instruments such as the pressure chamber, although effective, are constrained by their requirement for manual operation and their ability to characterize the water status of only individual plants. Remote sensing technologies address these limitations by enabling the measurement of canopy temperature, which is directly linked to plant transpiration and, consequently, to water stress conditions.

Canopy temperature has served as a water stress indicator in agricultural research for decades. Among the indices derived from it, the CWSI has emerged as one of the most commonly employed normalized indicators [39]. CWSI remains a critical tool for assessing crop water status, optimizing irrigation strategies, and improving water use efficiency. It has been used to determine crop water status [40], schedule precision irrigation [38], and identify field areas prone to water stress under low-frequency irrigation systems [39]. It has also been used to identify representative trees for consistent monitoring in orchard water management through thermal remote sensing [28]. Low-cost UAVs equipped with thermal cameras have further enhanced the practicality of CWSI by acquiring high-resolution thermal imagery during irrigation cycles, which allows CWSI to be calculated accurately and efficiently across diverse agricultural landscapes. This advancement supports targeted irrigation practices and significantly improves the precision of water resource management. Other indices, including TVI, TCI, NDWI, and TIR-WSI, have also been explored for water stress detection [41,42,43].
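The normalization behind CWSI can be made concrete with a short sketch. It assumes the commonly used form CWSI = (Tc − Twet)/(Tdry − Twet), where Tc is the canopy temperature and Twet and Tdry are reference temperatures for a fully transpiring and a non-transpiring canopy. The function name, the clipping to the 0 to 1 range, and the sample temperatures are illustrative assumptions and are not taken from the reviewed studies.

```python
import numpy as np


def cwsi(canopy_temp, t_wet, t_dry):
    """Crop Water Stress Index from canopy and reference temperatures (deg C).

    Assumes the form (Tc - Twet) / (Tdry - Twet); 0 means no stress and
    1 means maximum stress. Values are clipped to [0, 1] as a convenience.
    """
    canopy_temp = np.asarray(canopy_temp, dtype=float)
    if t_dry <= t_wet:
        raise ValueError("t_dry must be greater than t_wet")
    index = (canopy_temp - t_wet) / (t_dry - t_wet)
    return np.clip(index, 0.0, 1.0)


# Hypothetical canopy temperatures extracted from a thermal orthomosaic.
canopy = np.array([26.0, 29.5, 33.0, 36.0])
print(cwsi(canopy, t_wet=26.0, t_dry=36.0))
```

In practice, the reference temperatures may come from wet and dry reference surfaces, from image statistics, or from energy balance models, and the choice affects the resulting index.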

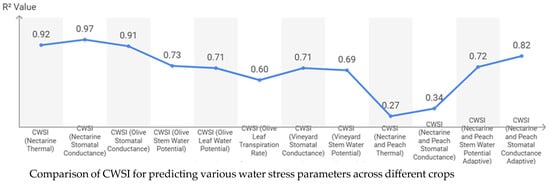

A study in pistachio fields (Pistacia vera cv. Kerman) in Madera County, California, exemplified UAV-based thermal imaging’s potential for optimizing irrigation. By comparing the average CWSI of irrigated units with individual unit CWSI values, the study identified over- and under-irrigation caused by fixed schedules, ultimately determining optimal irrigation intervals [39]. Similarly, in a nectarine orchard, UAV-borne thermal sensing mapped water stress and spatial variability across 1 ha. Thermal images processed into orthomosaics allowed for CWSI calculations based on canopy temperatures, showing strong correlations with midday stem water potential (Ψstem) (R² = 0.92) and stomatal conductance (gc) (R² = 0.97) [35].

In olive orchards, precise water management is critical for optimizing oil quality and quantity, particularly during the pit hardening and oil accumulation stages. UAV thermal imaging has been employed to identify representative trees for monitoring orchard water status [28]. In super high-density (SHD) olive orchards under drip irrigation, CWSI calculated from mini remotely piloted aircraft systems (RPAS) was evaluated to estimate tree water status variability and establish a non-water-stressed baseline (NWSB) for olive trees [29]. This study compared CWSI with other water status indicators, including soil moisture content, midday Ψstem, gc, and canopy temperature. Among these, gc exhibited the strongest correlation with CWSI (R² = 0.91), outperforming the other indicators [29]. Conversely, a vineyard study reported a lower correlation of R² = 0.70 between CWSI and gc [36].

In vineyards, the relationship between CWSI and leaf water potential (ΨL) has been extensively studied. These studies demonstrated that CWSI can evaluate instantaneous water status [36] and seasonal water status variability [38]. In Spain, researchers developed non-water stress baselines and examined the relationship between CWSI and ΨL in grape varieties (e.g., Pinot Noir, Chardonnay, Syrah, Tempranillo) across different phenological stages [37]. Although CWSI strongly correlated with ΨL for each variety, phenological stage and varietal differences significantly influenced this relationship. The study emphasized the importance of establishing baselines and ΨL correlations that account for phenology and varietal differences for effective CWSI monitoring [37]. Another study used CWSI-derived ΨL estimates for regulated deficit irrigation in Chardonnay vineyards without negatively affecting yield or wine composition [38]. However, the accuracy of remote ΨL estimation diminished after rainfall and during low vapor pressure deficit (VPD) conditions (<2.3 kPa) [38].
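The baseline approach mentioned above can be illustrated with a minimal sketch. It assumes the widely used empirical formulation in which the non-water-stressed baseline is a linear relation between the canopy-air temperature difference (Tc − Ta) of well-watered plants and VPD, and CWSI is the position of an observed Tc − Ta between that lower baseline and an upper limit. The function names, the fixed upper limit `dt_upper`, and the synthetic data are hypothetical and do not reproduce any baseline reported in the reviewed studies.

```python
import numpy as np


def fit_nwsb(vpd_kpa, dt_well_watered):
    """Fit the baseline (Tc - Ta) = intercept + slope * VPD by least squares."""
    slope, intercept = np.polyfit(vpd_kpa, dt_well_watered, deg=1)
    return intercept, slope


def empirical_cwsi(canopy_temp, air_temp, vpd_kpa, intercept, slope, dt_upper):
    """Empirical CWSI relative to a fitted lower baseline and a fixed upper limit."""
    dt = np.asarray(canopy_temp, dtype=float) - np.asarray(air_temp, dtype=float)
    dt_lower = intercept + slope * np.asarray(vpd_kpa, dtype=float)
    return np.clip((dt - dt_lower) / (dt_upper - dt_lower), 0.0, 1.0)


# Synthetic well-watered observations: Tc - Ta falls as VPD rises.
vpd = np.array([1.0, 2.0, 3.0, 4.0])          # kPa
dt_ww = np.array([1.0, -1.0, -3.0, -5.0])     # deg C

intercept, slope = fit_nwsb(vpd, dt_ww)
print(intercept, slope)
print(empirical_cwsi(30.0, 30.0, 3.0, intercept, slope, dt_upper=3.0))
```

Because the fitted slope and intercept depend on variety and phenological stage, as the studies above emphasize, a separate baseline would be fitted for each combination rather than reused across them.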

High-resolution thermal imaging (9 cm/pixel) has also been employed in large-scale vineyards (97.5 ha) to assess instantaneous and seasonal water variability through single-flight campaigns [36]. While CWSI did not align with seasonal water status trends, it reflected other crop responses, such as yield, berry size, and sugar content during ripening [36]. Alternative water stress indicators, such as the Photochemical Reflectance Index (PRI), have been explored, but issues related to viewing geometry, canopy architecture, and sunlit/shadow fractions limit their utility [44]. New formulations, like normalized PRI, have been proposed to address these limitations [44]. Additionally, adaptive CWSI has been developed for orchards with diverse cultivars and canopy structures, as a single set of Twet and Tdry values can lead to inaccurate CWSI calculations. Adaptive CWSI showed better correlations with Ψstem (R² = 0.72) and gc (R² = 0.82) than conventional CWSI in nectarine and peach orchards [45].

UAV-based thermal imaging for water stress detection has evolved significantly, with gains in precision and adaptability in monitoring plant water status. Earlier studies relied primarily on CWSI derived from single-day thermal measurements. While effective for instantaneous assessment, this approach could not fully capture seasonal water stress patterns, because solar radiation, humidity, and phenology vary over the growing season [38] and because water availability, transpiration rates, and crop responses fluctuate over time. These limitations highlight the need for multi-temporal monitoring and the integration of multiple physiological parameters to refine precision irrigation decisions and avoid misinterpretations of crop water stress [36].

Recent thermal imaging studies that covered multiple crop types and cultivars in orchards, rather than single species, reported the need for crop-specific CWSI values [46] (Figure 4). CWSI maps derived from thermal imagery also effectively distinguished water stress levels among regulated deficit irrigation (RDI) strategies implemented in vineyards and olive orchards [47]. Adaptive CWSI approaches, such as feature extraction and probability modeling, have improved water stress mapping in mixed orchards [45]. Furthermore, UAV-based thermal imaging has been utilized to integrate canopy temperature data with satellite-based models, such as the Simplified Surface Energy Balance (SSEBop) model, for estimating actual evapotranspiration in pecan orchards [48]. These advancements indicate a shift toward more automated, scalable, and accurate UAV-based thermal monitoring systems, so that irrigation strategies can be tailored to specific field conditions for optimal water resource utilization and yield.

Figure 4. R² values of the Crop Water Stress Index (CWSI), the index most commonly used in thermal imaging studies, against different water stress parameters (stomatal conductance, leaf water potential, and transpiration rate) in various crops, including nectarine, olive, and grapevine.

3.2. Multispectral

Multispectral imaging cameras capture data across various bands of the light spectrum, including visible, near-infrared (NIR), and shortwave infrared (SWIR) bands (Figure 5, Table 2) [49]. This capability enables the calculation of vegetation indices such as the Normalized Difference Vegetation Index (NDVI), Green NDVI (GNDVI), Transformed Chlorophyll Absorption in Reflectance Index/Optimized Soil-Adjusted Vegetation Index (TCARI/OSAVI), and PRI, which are widely used to monitor plant health and water status [49]. These indices exhibit strong correlations with key plant water status indicators, including Ψstem, photosynthesis rate, transpiration rate, and gc [50,51,52,53,54,55,56,57,58,59].

Figure 5. Multispectral imaging camera models: MicaSense RedEdge-M (MicaSense Inc., Seattle, WA, USA), Tetracam MCA-6 (Tetracam Inc., Chatsworth, CA, USA), Parrot Sequoia (Parrot Drones SAS, Paris, France), and Tetracam ADC Snap (Tetracam Inc., Chatsworth, CA, USA).

Table 2. Specifications of various multispectral imaging camera models, including resolution, scale, and spectral band characteristics.

Under water stress conditions, plants undergo physiological changes that markedly alter their reflectance properties. Reduced leaf water content leads to stomatal closure and chlorophyll degradation, resulting in increased reflectance in the red band and decreased reflectance in the NIR band as cell turgor declines and leaf structure deteriorates [60,61]. Multispectral sensors detect spectral changes, enabling the calculation of indices like NDVI, which decreases under stress, and PRI, which reflects variations in xanthophyll cycle pigments associated with photosynthetic efficiency [49,51]. The SWIR bands (1500–1750 nm and 2100–2500 nm) are particularly sensitive to water content in plants, as they directly interact with water molecules in leaves. Reflectance in the SWIR region increases under water deficit conditions due to reduced water absorption [49,51]. Compared to visible and NIR bands, SWIR demonstrates greater sensitivity to subtle changes in leaf and canopy water content, making it an invaluable tool for early water stress detection [62,63]. However, SWIR sensors are generally more expensive and require higher radiometric resolution, which may constrain their widespread adoption compared to NIR or visible sensors [51]. By integrating data from these spectral bands, particularly SWIR and NIR, multispectral sensors offer a comprehensive perspective on plant water status, supporting precise water stress detection and advanced irrigation management practices.
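The band relationships described above can be sketched as normalized difference indices. The example assumes the standard definitions NDVI = (NIR − Red)/(NIR + Red) and GNDVI = (NIR − Green)/(NIR + Green), plus an NIR-SWIR normalized difference, which is one common definition of NDWI. The function names and reflectance values are hypothetical and chosen only to show that NDVI drops when red reflectance rises and NIR reflectance falls.

```python
import numpy as np


def normalized_difference(band_a, band_b):
    """Return (a - b) / (a + b), with NaN where the denominator is zero."""
    a = np.asarray(band_a, dtype=float)
    b = np.asarray(band_b, dtype=float)
    total = a + b
    out = np.full(total.shape, np.nan)
    np.divide(a - b, total, out=out, where=total != 0)
    return out


def ndvi(nir, red):
    return normalized_difference(nir, red)


def gndvi(nir, green):
    return normalized_difference(nir, green)


def ndwi_swir(nir, swir):
    # NIR-SWIR form; other NDWI definitions exist (assumption of this sketch).
    return normalized_difference(nir, swir)


# Hypothetical reflectances for a well-watered and a stressed canopy pixel.
nir = np.array([0.45, 0.35])
red = np.array([0.05, 0.15])
green = np.array([0.15, 0.15])
swir = np.array([0.15, 0.25])

print(ndvi(nir, red))
print(gndvi(nir, green))
print(ndwi_swir(nir, swir))
```

The same helper applies unchanged to whole reflectance rasters, since the arithmetic is element-wise.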

Several studies have demonstrated the utility of multispectral imaging for water management in vineyards [50,52,54,56,57,64,65]. Vineyards, known for their high sensitivity to water availability and economic importance, represent one of the most studied crop systems for UAV-based multispectral water stress detection. UAV multispectral imagery has been applied using diverse methodologies to detect water stress and manage irrigation effectively.

In [50], the authors examined spatial variability in water stress using TCARI/OSAVI indices, correlating them with Ψstem and gc across varying slopes. In [56], the authors conducted 15 UAV flights to calculate NDVI and TCARI/OSAVI for assessing seasonal water stress trends. In [57], the authors explored correlations between NDVI and GNDVI and gc across four irrigation treatments, demonstrating UAVs’ ability to distinguish water stress under different regimes. In [59], the authors integrated multispectral imagery with weather data in a machine learning model to map ΨL, showcasing the value of combining spectral and environmental data for precise water stress mapping. In [64], the authors enhanced this approach by incorporating SWIR bands, improving sensitivity to water content variations in vineyards. In [52], the authors focused on estimating crop coefficients (Kc) in “Cabernet Sauvignon” grapevines, combining spectral and structural data with machine learning for precise irrigation scheduling. In [65], the authors introduced fraction-based vegetation indices, isolating pure canopy data to map intra-block water stress variability, offering a novel approach compared to mean-based VIs.
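For readers unfamiliar with the TCARI/OSAVI ratio used in several of these studies, a minimal sketch follows. It assumes the commonly cited definitions TCARI = 3[(R700 − R670) − 0.2(R700 − R550)(R700/R670)] and OSAVI = 1.16(R800 − R670)/(R800 + R670 + 0.16), where R denotes reflectance at the given wavelength in nm. Multispectral cameras sample broader bands near these wavelengths, so the band assignment, like the function names and sample values, is an assumption of this illustration rather than the procedure of any cited study.

```python
def tcari(r550, r670, r700):
    """Transformed Chlorophyll Absorption in Reflectance Index."""
    return 3.0 * ((r700 - r670) - 0.2 * (r700 - r550) * (r700 / r670))


def osavi(r670, r800):
    """Optimized Soil-Adjusted Vegetation Index (soil factor 0.16)."""
    return (1.0 + 0.16) * (r800 - r670) / (r800 + r670 + 0.16)


def tcari_osavi(r550, r670, r700, r800):
    """Ratio of TCARI to OSAVI."""
    return tcari(r550, r670, r700) / osavi(r670, r800)


# Hypothetical canopy reflectances at 550, 670, 700, and 800 nm.
print(tcari(0.10, 0.05, 0.15))
print(osavi(0.05, 0.45))
print(tcari_osavi(0.10, 0.05, 0.15, 0.45))
```

Dividing by OSAVI is intended to reduce the influence of soil background and canopy cover on the chlorophyll-sensitive TCARI term.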

In [52], the authors developed a UAV-based method to estimate the crop coefficient (Kc) of “Cabernet Sauvignon” grapevines in South Australia. Using a multispectral camera mounted on a UAV, the study captured high-resolution spectral and structural data across phenological stages over two growing seasons. Kc, representing the ratio of actual crop evapotranspiration to reference evapotranspiration, was predicted using univariate, multivariate, and machine learning models. Structural features, such as canopy top-view area, showed the strongest correlation with Kc (Pearson R = 0.56, p ≤ 0.001). The random forest model provided the most accurate Kc predictions, with R² = 0.675, RMSE = 0.062, and MAE = 0.047, enabling precise irrigation management by capturing spatial variability in water requirements [52]. Similarly, in [57], the authors used UAV multispectral imagery to detect water stress and schedule irrigation in vineyards in Benton City, Washington, USA. Four irrigation treatments (100%, 60%, 30%, and 15% of standard irrigation) were examined. NDVI and GNDVI were derived from multispectral images and compared with leaf gc data, showing significant correlations (NDVI: r = 0.56; GNDVI: r = 0.65). Higher NDVI values corresponded to 100% irrigation, while lower values were observed in the 30% and 15% irrigation treatments, indicating water stress [57].

Other studies employed advanced modeling approaches [54,59,66]. For instance, in [54], researchers combined UAV multispectral data with machine learning algorithms to estimate vineyard water status, achieving R² = 0.80 and RMSE = 0.12 MPa for Ψstem predictions. In [66], the authors used artificial neural network (ANN) models to predict the spatial variability of Ψstem, improving accuracy (R² = 0.87, RMSE = 0.12 MPa). In [59], researchers integrated weather data with multispectral imagery, achieving 77% explanatory power for ΨL variability through random forest modeling.
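The accuracy statistics quoted throughout this section (R², RMSE, and MAE) can be computed as in the sketch below. It assumes R² is the coefficient of determination, 1 − SSres/SStot; some studies instead report the squared Pearson correlation of a fitted regression, which can differ. The function name and the Ψstem values are hypothetical.

```python
import numpy as np


def regression_metrics(observed, predicted):
    """Return R2 (coefficient of determination), RMSE, and MAE."""
    y = np.asarray(observed, dtype=float)
    p = np.asarray(predicted, dtype=float)
    residuals = y - p
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "rmse": float(np.sqrt(np.mean(residuals ** 2))),
        "mae": float(np.mean(np.abs(residuals))),
    }


# Hypothetical measured and model-predicted stem water potential (MPa).
measured = [-0.6, -0.8, -1.0, -1.2]
predicted = [-0.7, -0.8, -0.9, -1.2]
print(regression_metrics(measured, predicted))
```

RMSE and MAE carry the units of the predicted variable (MPa here), which is why they are reported alongside the unitless R².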

While vineyards dominate the research landscape, UAV multispectral imaging has also been effectively applied to other specialty crops [53,55,67,68,69,70]. In a study on almonds [53], the authors utilized principal component analysis (PCA) to analyze canopy pixel distributions, achieving superior correlations with Ψstem (R² = 0.81) compared to traditional NDVI values, and demonstrated enhanced water stress detection accuracy with UAV multispectral data.

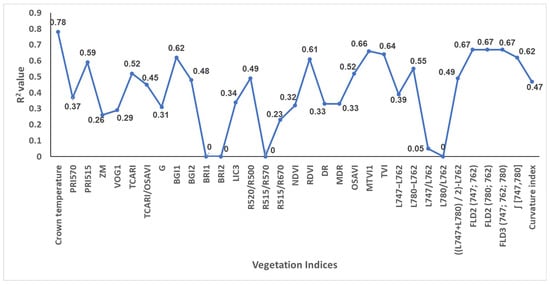

Studies conducted on citrus revealed similar applications. In [67], the authors found that PRI515 and PRI570 were strong indicators of water stress in citrus orchards, showing high correlations with the fruit quality parameters total soluble solids (TSS) and titratable acidity (TA) (r² = 0.69 for PRI515 vs. TSS, r² = 0.58 vs. TA). In contrast, NDVI, RDVI, and MTVI correlated weakly with these parameters (r² = 0.04 for NDVI vs. TSS, r² = 0.19 vs. TA), making them less effective for assessing water status. PRI515 also proved more reliable than PRI570 under canopy structural changes. In [55], the authors estimated gc in citrus trees under water stress using multispectral imagery and machine learning techniques, achieving a validation R² = 0.779 and RMSE = 0.00165. In pomegranate orchards, combining multispectral imagery with stochastic configuration networks (SCN) to estimate evapotranspiration achieved R² = 0.995 and RMSE = 0.046, enabling optimized water use [68]. Similarly, in African eggplant, NDVI and OSAVI effectively predicted water stress and yield potential under different irrigation regimes [69]. These findings underscored the importance of combining multispectral data with machine learning for precision irrigation. In [70], the authors demonstrated that random forest models integrating PRI, multiple VIs, and weather data can accurately estimate tomato Ψstem for precision irrigation management. The proximal sensor-based model showed strong agreement with Ψstem (R² = 0.74, MAE = 0.63 bars), while the UAV-based model using 12-band imagery achieved a higher R² (R² = 0.81, MAE = 0.67 bars). UAV-generated Ψstem maps effectively captured water status variations across different irrigation treatments and tracked temporal changes [70].

The evolution of UAV-based multispectral imaging has significantly enhanced water stress detection by integrating various vegetation indices, structural features, and advanced modeling techniques to improve irrigation decision-making. Previously, most multispectral imaging studies relied on basic vegetation indices, such as NDVI [53,67]. However, the inclusion of more complex indices, such as TCARI/OSAVI, has improved sensitivity to chlorophyll content and canopy variability [54,59,66]. Further advancements have incorporated SWIR bands, which demonstrate superior accuracy in detecting subtle changes in leaf water content compared to traditional NIR-based indices [64].

Another major advancement was the integration of machine learning models for multispectral-based water stress detection. Traditional correlation-based approaches were gradually replaced by models such as random forests and artificial neural networks (ANNs), which significantly improved the estimation of key water stress indicators, such as Ψstem, ΨL, and Kc [54,67]. The ability of ANNs to compensate for individual weaknesses in vegetation indices has also enabled more robust water stress mapping across complex agricultural environments, such as orchards and vineyards with varying canopy structures [54].

UAV-based multispectral imaging has evolved into a widely applicable tool across diverse specialty crops, extending beyond vineyards to include almonds, citrus, pomegranates, African eggplants, and tomatoes. By integrating sensor fusion, advanced modeling techniques, and crop-specific calibration approaches, multispectral imaging has transitioned from basic reflectance-based monitoring to highly precise, machine learning-driven irrigation optimization systems. However, further refinement of spectral indices, improvements in real-time processing, and the incorporation of additional spectral bands remain essential for maximizing the scalability and operational efficiency of UAV-based multispectral imaging in precision agriculture.

3.3. Hyperspectral

Hyperspectral imaging is a powerful remote sensing technology that captures reflectance using specific sensors across hundreds of narrow spectral bands, enabling detailed analysis of plant physiological and biochemical changes (Figure 6, Table 3). This high spectral resolution allows hyperspectral sensors to detect subtle variations in plant water status that may not be evident in broader-band systems, such as multispectral imaging.

Figure 6.

Various hyperspectral imaging camera models, including the HySpex VNIR (HySpex Inc., Oslo, Norway), HySpex SWIR (HySpex Inc., Oslo, Norway), Specim AFX-17 (Specim Inc., Oulu, Finland), and Nano-Hyperspec (Headwall Photonics, Inc., Bolton, MA, USA).

Table 3.

Specifications of various hyperspectral imaging camera models, including spectral range and scale for each camera.

Water stress in plants induces changes in reflectance at specific wavelengths due to alterations in leaf biochemistry and structure. While structural changes in pigments are discernible to the naked eye, alterations in the NIR and SWIR regions are not visually detectable [76]. Notably, changes in the SWIR spectrum often occur before visible stress symptoms, enabling hyperspectral imaging to identify stress in plants at a presymptomatic stage [77]. Hyperspectral sensors are particularly sensitive to water absorption features associated with O–H bonds, typically found in the SWIR region (1450 nm and 1940 nm) [49]. These wavelengths provide direct indicators of leaf water content, making hyperspectral imaging highly effective for early water stress detection. In addition to water content, hyperspectral imaging captures metabolic changes caused by drought stress, such as the decomposition of cellulose and proteins [71]. These biochemical shifts are critical markers of water stress and can be effectively monitored using hyperspectral data. Hyperspectral imaging also identifies structural alterations in cells that are observable in the NIR [73].

Hyperspectral imaging enables the calculation of narrow-band vegetation indices that are sensitive to water stress, such as the Water Index (WI) and the Normalized Difference Water Index (NDWI), among others [75,76,77,78,79,80,81,82,83]. These indices reflect changes in leaf water content and structural attributes, such as cell wall thickness [60].
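As an illustration of how such narrow-band indices can be derived from a hyperspectral spectrum, the sketch below computes WI and NDWI in Python. It assumes the widely used definitions WI = R900/R970 and NDWI = (R860 − R1240)/(R860 + R1240); the nearest-band lookup, the function names, and the sample spectrum are hypothetical and are not drawn from the cited studies.

```python
def nearest_band(spectrum, target_nm):
    """Return the reflectance of the band whose center is closest to target_nm.

    `spectrum` maps band-center wavelength (nm) to reflectance.
    """
    wavelength = min(spectrum, key=lambda w: abs(w - target_nm))
    return spectrum[wavelength]


def water_index(spectrum):
    """Water Index, assumed here as R900 / R970."""
    return nearest_band(spectrum, 900) / nearest_band(spectrum, 970)


def ndwi(spectrum):
    """Normalized Difference Water Index, assumed here as (R860 - R1240) / (R860 + R1240)."""
    r860 = nearest_band(spectrum, 860)
    r1240 = nearest_band(spectrum, 1240)
    return (r860 - r1240) / (r860 + r1240)


# Hypothetical leaf spectrum (assumed values, for illustration only).
leaf = {858: 0.50, 901: 0.48, 969: 0.44, 1241: 0.30}
print(water_index(leaf))
print(ndwi(leaf))
```

Under these definitions, NDWI needs a band near 1240 nm, so a sensor limited to 400–1000 nm could supply WI but not this form of NDWI.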

The application of drone-based hyperspectral imaging for detecting water stress in specialty crops remains relatively underexplored due to limited data availability. However, existing studies utilizing handheld hyperspectral cameras provide valuable insights into its potential. These studies highlight the capability of hyperspectral imaging to detect water stress, emphasizing opportunities for employing drone-based hyperspectral imaging in specialty crop production. This review synthesizes findings from research conducted with handheld hyperspectral cameras, along with the limited studies available on aerial hyperspectral imaging, to explore the potential advantages, applications, and limitations of drone-based hyperspectral imaging for effective water stress detection in specialty crops.

Among specialty crops, grapevines are the most extensively studied for water stress using hyperspectral imaging [71]. In [72], researchers investigated water stress detection in a “Shiraz” vineyard in Stellenbosch, South Africa, using hyperspectral imaging and machine learning. Vines were classified as water-stressed (−1.0 MPa to −1.8 MPa) or non-stressed (≥−0.7 MPa) based on Ψstem, which served as ground truth data. Hyperspectral imaging captured spectral subsets of 176 wavebands (473–708 nm) [72]. Preprocessing included empirical line correction using a white reference panel, and data were analyzed using random forest (RF) and Extreme Gradient Boosting (XGBoost) models, achieving test accuracies of 83.3% and 80.0%, respectively [72]. This study highlights the integration of hyperspectral imaging, rigorous preprocessing, and physiological measurements as a robust framework for detecting water stress in vineyards and supporting precision irrigation [72].
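The Ψstem thresholds reported for [72] can be expressed as a simple labeling rule for building ground truth classes. The Python sketch below is a hypothetical illustration: the function names are invented here, and leaving vines that fall between the two ranges (or below −1.8 MPa) unlabeled is an assumption, since the review does not say how such values were handled.

```python
def label_water_status(psi_stem_mpa):
    """Map a stem water potential (MPa) to a class label.

    Thresholds follow the ranges quoted in the text for [72]:
    stressed = -1.8 to -1.0 MPa, non-stressed = -0.7 MPa or higher.
    Values outside both ranges return None (assumption: left unlabeled).
    """
    if -1.8 <= psi_stem_mpa <= -1.0:
        return "stressed"
    if psi_stem_mpa >= -0.7:
        return "non-stressed"
    return None


def accuracy(predicted, observed):
    """Fraction of labels that match, the metric type reported for the classifiers."""
    matches = sum(1 for p, o in zip(predicted, observed) if p == o)
    return matches / len(observed)


# Hypothetical field measurements (assumed values, for illustration only).
measurements = [-1.2, -0.5, -0.85, -1.6]
print([label_water_status(m) for m in measurements])
```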

Another study in the Finger Lakes Wine Country of Upstate New York used sUAS-equipped hyperspectral imaging to explore real-time grapevine water status [74]. The study focused on a spectral range of 400–1000 nm across three flight days and used midday Ψstem measurements as ground truth. Partial least squares regression (PLS-R) modeling correlated hyperspectral data with Ψstem, achieving a cross-validation R2 = 0.68 and demonstrating strong potential for water stress monitoring [74].

In [71], researchers utilized a wide spectral range, including VNIR and SWIR, for drought stress detection in Croatian vineyards. Hyperspectral imagery spanning 409–2509 nm was captured using HySpex VNIR-1600 and SWIR-384 cameras. Analysis with Partial Least Squares–Support Vector Machines (PLS-SVM) achieved over 97% accuracy in distinguishing drought-stressed from irrigated vines [71]. Key wavelengths (1110 nm and 1200–1330 nm) linked to metabolic changes under drought stress showed higher reflectance in irrigated plants, indicating drought-induced decomposition of cellulose and proteins [71]. This study demonstrated the utility of hyperspectral imaging for precision irrigation management in landscapes with low water-holding capacity [71].

A recent study also highlighted the combined use of NIR and SWIR hyperspectral cameras installed on UAVs to predict grapevine water status [73]. An experimental design with 48 zones and 12 water regimes was implemented. Six supervised models were compared to predict Ψstem using hyperspectral data. The optimal model achieved R2 = 0.54 and RMSE = 0.11 MPa, while classification models distinguished water stress levels with 74% accuracy. This research underscores the potential of UAV-based hyperspectral imaging combined with machine learning for precise vineyard irrigation management [73].

Hyperspectral imaging with handheld devices under controlled environmental conditions has also been utilized for water management in other specialty crops, including vegetables and fruits [75,84,85,86,87]. For instance, researchers estimated soil moisture content in potato fields (Solanum tuberosum) based on hyperspectral images of the whole canopy or leaves [75]. This greenhouse experiment involved four water treatment levels: 10%, 15%, 20%, and 25% soil moisture content by weight. Hyperspectral data spanning 400–1000 nm were collected, and various vegetation indices, such as Red Edge NDVI, Modified NDVI, and Vogelmann Red Edge Indices (VOG REI), were calculated. Among these, VOG REI 1 showed the highest correlation with soil moisture content (R2 = 0.9), followed by VOG REI 2 (R2 = 0.886). The study also reported that an optimal soil moisture content of 17% was associated with maximum dry tuber weight, emphasizing the potential of hyperspectral imaging for water stress monitoring and precision irrigation management in potato production [75].

Another greenhouse study emphasized the combined use of hyperspectral imaging with several machine learning algorithms to detect specific spectral signatures as proxies for quantitative water stress in potatoes (Solanum tuberosum) [86]. This study applied four water stress levels at two phenological stages—tuber differentiation and maximum tuberization. Various machine learning models, such as random decision forests, multi-layer perceptron, convolutional neural networks, support vector machines, extreme gradient boost, and AdaBoost, were tested for water stress detection and estimation. Among these, random forest and extreme gradient boost performed best for classification tasks under greenhouse conditions. Further studies were recommended to apply these results under field conditions [86].

Some studies have also tested the ability of hyperspectral imaging to detect combinations of abiotic stresses and to differentiate between abiotic and biotic stresses [83,84]. A controlled pot experiment used spectral data spanning 400–2500 nm for the detection and discrimination of abiotic drought stress in tomato (Solanum lycopersicum) plants from biotic stress caused by root-knot nematode (Meloidogyne incognita) infestation [84]. Advanced classification techniques, such as Partial Least Squares–Support Vector Machine (PLS-SVM), applied to spectral data, allowed for the distinction between various treatment groups, including biotic vs. abiotic stress, with a 59% success rate. This study highlighted the potential applications of hyperspectral imaging for quick nematode resistance evaluation in breeding programs [84].

In [83], the authors investigated the early detection of heat and water stress in strawberry plants (Fragaria × ananassa Duch) using chlorophyll fluorescence indices derived from hyperspectral images. In a controlled laboratory setting, 45 strawberry plants were subjected to heat and water stress. Hyperspectral images capturing fluorescence reflectance between 500 nm and 900 nm were used to extract eight indices: PSNDa, PSNDb, PSSRa, PSSRb, CI-RedEdge, NDRE, SR, and RVSI. The random forest classifier model, incorporating all eight indices, achieved an accuracy of 94% in stress detection, while a combination of RVSI, PSNDb, PSSRb, and CI-RedEdge enabled the Gradient Boosting classifier to reach 91% accuracy. This study demonstrates the potential of fluorescence hyperspectral imaging for the early detection of abiotic stress in strawberry plants, offering a non-destructive approach for timely stress management in specialty crops [83].

The potential of hyperspectral imaging as a non-destructive and rapid method to assess drought-induced physiological and biochemical changes in tea (Camellia sinensis) plants has also been tested [87]. This experiment, conducted in a controlled greenhouse environment, simulated drought stress by withholding irrigation from tea seedlings. Hyperspectral imaging captured reflectance data from leaves to evaluate parameters such as malondialdehyde (MDA), electrolyte leakage (EL), photosystem II efficiency (Fv/Fm), soluble saccharide (SS), and drought damage degree (DDD). Advanced machine learning models, including competitive adaptive reweighted sampling-partial least squares (CARS-PLS) and uninformative variable elimination-support vector machine (UVE-SVM), were applied to predict these indices with high accuracy. The CARS-PLS model demonstrated strong predictive capability for MDA (Rcal = 0.96, Rp = 0.92, RPD = 3.51), while the UVE-SVM model was effective for DDD estimation (Rcal = 0.97, Rp = 0.95, RPD = 4.28) [87]. Similarly, hyperspectral imaging has also been used in combination with chlorophyll fluorescence to locate water-responsive genomic loci in lettuce (Lactuca sativa) [86]. This highlights the potential use of hyperspectral imaging for high-throughput phenotyping of specialty crops [85].

The application of UAV-based hyperspectral imaging for water stress detection in specialty crops has evolved significantly, driven by improvements in spectral resolution, data processing, and integration with advanced machine learning techniques. Early studies primarily relied on handheld hyperspectral cameras to assess water stress using a limited number of indices, providing foundational insights into spectral reflectance changes under drought conditions [75,84,85,86,87]. However, these methods were constrained by limited spatial coverage and manual data collection, which restricted their scalability for large-scale agricultural monitoring. The transition to UAV-mounted hyperspectral sensors and the estimation of a wide range of vegetation indices have enabled high-resolution, field-scale stress detection, providing more detailed spatial and temporal insights into plant water status with improved accuracy (Figure 7).

Figure 7.

This graph compares the correlation (R2 values) of different vegetation indices calculated using hyperspectral imaging with stomatal conductance.

The use of advanced machine learning and deep learning models has further revolutionized hyperspectral-based water stress detection. Traditional correlation-based approaches have been enhanced by advanced predictive modeling techniques, such as Partial Least Squares Regression (PLS-R), Support Vector Machines (SVM), and random forest algorithms, which have significantly improved the accuracy of Ψstem and ΨL estimations [73]. The integration of a wide spectral range (VNIR and SWIR bands) has also enhanced stress detection capabilities, as key wavelengths associated with water absorption, pigment degradation, and metabolic responses have been identified as reliable indicators of drought stress [71]. The ability of hyperspectral imaging to differentiate abiotic from biotic stresses, as demonstrated in tomato and strawberry stress detection studies, has also broadened its applicability beyond conventional water stress assessment [83,84].

UAV-based hyperspectral imaging has now been successfully applied across a range of specialty crops, including grapevines, potatoes, strawberries, spinach, lettuce, and tea. While initial applications were limited to controlled greenhouse environments, recent advancements in real-time spectral analysis, cloud-based processing, and hyperspectral sensor miniaturization have facilitated the field-scale implementation of UAV hyperspectral imaging. However, some limitations of hyperspectral imaging systems, including the need for standardized calibration methods, improved spectral interpretation frameworks, and enhanced data processing techniques, have been identified in the latest studies. The fusion of hyperspectral imaging with other imaging technologies holds potential for maximizing its scalability and operational efficiency in precision agriculture. For example, the fusion of hyperspectral data with other technologies, such as chlorophyll fluorescence indices, has further optimized stress detection accuracy, supporting early interventions for improved irrigation management.

3.4. Fusion Approach

Fusion approaches that integrate data from multiple aerial sensors have become powerful tools for enhancing water stress detection in specialty crops. These methods combine the strengths of various sensor technologies, including hyperspectral, thermal, multispectral imaging, and ground-based sensing, to provide a comprehensive understanding of plant physiological responses to water stress. Spectral signatures, influenced by factors specific to different regions of the light spectrum, play a crucial role. In the visible spectrum (400–700 nm), reflectance is primarily influenced by pigments such as chlorophyll, carotenoids, and anthocyanins. In contrast, the NIR (700–1000 nm) is shaped mainly by leaf morphology and structure, while the SWIR (1000–2500 nm) reflects the presence of water and metabolites like cellulose and proteins [59,70]. The integration of these sensing techniques enhances both accuracy and spatial resolution, enabling more effective precision irrigation strategies.

A notable example of this approach was demonstrated in a study [88] where hyperspectral and thermal imagery from a UAV platform were used to detect water stress in a citrus orchard. The study employed a micro-hyperspectral imager that captured 260 narrow spectral bands (400–885 nm) and a thermal camera to assess regulated deficit irrigation treatments. Chlorophyll fluorescence, retrieved using the Fraunhofer Line Depth (FLD) principle, showed significant correlations with gc (R2 = 0.67) and water potential (R2 = 0.66). Additionally, thermal imagery provided crown temperature data, which strongly correlated with gc (R2 = 0.78) and moderately with water potential (R2 = 0.34). Narrow-band indices such as the Photochemical Reflectance Index (PRI) and Blue–Green Index (BGI1) demonstrated significant sensitivity to water stress indicators, with BGI1 showing the highest correlation with both gc (R2 = 0.62) and water potential (R2 = 0.49). The relationship between canopy temperature and water stress is complex, as it is influenced by diurnal stomatal conductance patterns, evaporative demand, and internal water status. This is particularly true for crops like citrus in semi-arid regions, where high vapor pressure deficits cause early stomatal closure, complicating thermal-based stress detection. Additionally, orchards and vineyards, typically planted in grids, present challenges such as soil background interference, canopy shadows, and the need for high spatial resolution with frequent revisit periods. To overcome these limitations, the fusion of hyperspectral and thermal imaging provides a more robust approach by integrating structural, physiological, and biochemical indicators, allowing for better validation of water stress levels and enhancing the accuracy of stress detection and irrigation management [88].
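For readers unfamiliar with the FLD principle mentioned above, the following Python sketch shows the standard two-band form of the retrieval. It assumes that reflectance and fluorescence are equal inside and just outside the absorption line and that irradiance is expressed in radiance-equivalent units; the function name and the numbers are hypothetical and do not come from [88].

```python
def fld_fluorescence(e_in, e_out, l_in, l_out):
    """Fluorescence estimate from the standard two-band FLD equation.

    e_in, e_out: incoming irradiance inside and outside the absorption line
                 (assumed already converted to radiance-equivalent units).
    l_in, l_out: upwelling canopy radiance inside and outside the line.
    Assumes reflectance and fluorescence are the same in both bands.
    """
    return (e_out * l_in - e_in * l_out) / (e_out - e_in)


# Synthetic check: build radiances from a known reflectance and fluorescence,
# then confirm the retrieval recovers the fluorescence term.
reflectance, fluorescence = 0.1, 2.0
e_in, e_out = 20.0, 100.0
l_in = reflectance * e_in + fluorescence
l_out = reflectance * e_out + fluorescence
print(fld_fluorescence(e_in, e_out, l_in, l_out))
```

The method relies on the incoming light being strongly attenuated inside the absorption line, so the fluorescence contribution makes up a larger share of the measured radiance there than in the neighboring band.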

Similarly, UAV-based fusion of calibrated thermal data with visible and near-infrared imagery was employed to create high-resolution multispectral orthomosaics in a study [32] assessing water stress in apple orchards. Conducted in a 6400 m2 orchard in Southern France, the study utilized UAV flights over well-irrigated and water-stressed apple trees, employing on-ground reference targets for radiometric calibration and software for accurate thermal data correction. Surface temperature extracted from thermal images was compared with temperature measurements from IR120 sensors located above 10 apple trees, which recorded mean temperature every 10 min, yielding an R2 value of 0.768 in linear regression (p-value < 0.001). High canopy temperatures were observed in water-stressed plants compared to fully irrigated plants, showcasing a strong correlation between aerial data and ground measurements. The fusion of RGB and thermal imaging improves water stress assessment by ensuring accurate canopy temperature measurements, minimizing background interference, and enhancing tree-level stress detection precision [32].
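Comparisons of this kind, where UAV-derived temperatures are regressed against ground sensor readings, rest on an ordinary least-squares fit and its coefficient of determination. The sketch below shows that calculation in plain Python; the function names and the paired temperatures are hypothetical, and the cited study's own processing software is not reproduced here.

```python
import math


def linear_fit(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(x, y):
    """Coefficient of determination of the least-squares line through (x, y)."""
    slope, intercept = linear_fit(x, y)
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def rmse(predicted, observed):
    """Root mean square error between two equally long sequences."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical paired canopy temperatures in degrees Celsius (assumed values).
uav_temps = [27.1, 28.4, 30.2, 31.9, 33.5]
ground_temps = [26.8, 28.9, 29.7, 32.4, 33.0]
print(r_squared(uav_temps, ground_temps), rmse(uav_temps, ground_temps))
```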

Further research explored hyperspectral–hyperspatial fusion techniques to enhance water stress detection and the retrieval of biophysical parameters in crops, focusing on commercial citrus orchards in Valencia, Spain. The fusion technique was tested on a simulated citrus orchard and in real-world commercial orchards with discontinuous canopies [89]. Thermal hyperspatial and hyperspectral (APEX) imagery were combined to achieve more efficient and accurate water stress estimations. The Photochemical Reflectance Index (PRI), known for its effectiveness in early water stress detection, showed significantly improved results when applied to the fused dataset, achieving an R2 value of 0.62 (p < 0.001) compared to 0.21 with the original hyperspectral APEX dataset. Maximal linear relationships between vegetation indices and biophysical parameters, such as water content and chlorophyll maps, were observed, with R2 values of 0.93 and 0.86, respectively. This research highlights the potential of hyperspectral–hyperspatial fusion techniques for precise physiological assessment and stress monitoring in citrus orchards [89].

Similarly, a study conducted in a commercial orchard in Córdoba, southern Spain, investigated the effects of crown heterogeneity on water stress detection using a fusion approach that combines sun-induced chlorophyll fluorescence (SIF) from hyperspectral imagery with the CWSI from thermal imagery [9]. Three watering regimes were analyzed: a rainfed plot, a fully irrigated control (FI) where irrigation met crop water requirements, and a regulated deficit irrigation (RDI) treatment receiving only 20% of crop evapotranspiration. High-resolution airborne hyperspectral and thermal data were collected, and ground truth measurements, including gc and photosynthetic rates, were used for validation. The study revealed that the relationships between SIF and the assimilation rate significantly improved when only sunlit crown pixels were extracted through segmentation, as opposed to using the entire crown, which was degraded due to canopy heterogeneity. Thermal imagery further showed that CWSI values were within the expected theoretical range when derived from the middle quartile (Q50) of crown segmentation, representing the coldest and purest vegetation pixels. In contrast, upper quartile pixels (Q75) introduced bias due to soil background effects on crown temperature calculations, particularly under all watering regimes. Relationships between CWSI and gc were also heavily influenced by segmentation, with strong correlations (R2 = 0.78) observed for Q50 pure vegetation pixels compared to degraded correlations (R2 = 0.52) when warmer upper quartile pixels were included. The research demonstrated that incorporating chlorophyll fluorescence into the models significantly improved the accuracy of N retrievals, achieving a coefficient of determination (R2) of 0.92, up from R2 = 0.68–0.77. The findings emphasize the importance of high-resolution imagery for precise crown segmentation to extract pure vegetation pixels, which are critical for accurate SIF and CWSI calculations. This approach enhances the detection of water stress in orchards by minimizing canopy heterogeneity effects and underscores the value of integrating hyperspectral and thermal data for precision agriculture and improved water stress monitoring [9].
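The effect of pixel selection on CWSI can be illustrated with a short Python sketch. It assumes the usual normalized form CWSI = (Tc − Twet)/(Tdry − Twet) and selects the coolest share of crown pixels by sorting; the wet and dry reference temperatures, the 50% cut-off, the function names, and the pixel values are hypothetical and do not reproduce the segmentation used in [9].

```python
def cwsi(t_canopy, t_wet, t_dry):
    """Crop Water Stress Index from canopy temperature and wet/dry baselines."""
    return (t_canopy - t_wet) / (t_dry - t_wet)


def coolest_fraction_mean(pixel_temps, fraction=0.5):
    """Mean temperature of the coolest `fraction` of crown pixels.

    Warmer pixels are more likely to be mixed with soil background,
    so dropping them approximates a pure-vegetation temperature.
    """
    ordered = sorted(pixel_temps)
    keep = max(1, int(len(ordered) * fraction))
    return sum(ordered[:keep]) / keep


# Hypothetical crown pixels in degrees Celsius; the two warmest stand in for
# soil-contaminated edge pixels (assumed values, for illustration only).
crown = [28.0, 29.0, 30.0, 31.0, 38.0, 40.0]
t_wet, t_dry = 25.0, 35.0  # assumed reference baselines

whole_crown = cwsi(sum(crown) / len(crown), t_wet, t_dry)
pure_pixels = cwsi(coolest_fraction_mean(crown), t_wet, t_dry)
print(whole_crown, pure_pixels)
```

In this toy case the whole-crown value is inflated by the warm edge pixels, which mirrors the bias attributed to soil background in the text.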

A recent study [90] used multi-modal UAV remote sensing data consisting of RGB, thermal infrared (TIR), and multispectral (Mul) to monitor soil moisture in a citrus orchard. Convolutional neural networks (CNN), long short-term memory (LSTM), and a new hybrid model (CNN-LSTM) were used to predict soil moisture at different depths by using inputs from an individual sensor (only RGB) versus a combination of two (RGB + TIR) or three sensors (RGB + TIR + Mul). The multi-sensor data fusion approach significantly improved soil moisture prediction accuracy, with the RGB + Multispectral + Thermal (TIR) fusion model achieving the highest performance (R2 = 0.80–0.88, RMSE = 2.46–2.99 m3/m3), followed by Multispectral + TIR (R2 = 0.64–0.84, RMSE = 2.86–3.89 m3/m3) and RGB + Multispectral (R2 = 0.60–0.81, RMSE = 3.15–4.25 m3/m3). Among standalone sensors, multispectral (Mul) data performed best (R2 = 0.54–0.72), followed by thermal (TIR) (R2 = 0.36–0.52) and RGB (R2 = 0.14–0.26), highlighting the superiority of multi-sensor fusion over single-sensor approaches in soil moisture estimation [90].

In [66], researchers conducted a study in a drip-irrigated Cabernet Sauvignon vineyard in the Maule Region, Chile, introducing a methodology for the automatic co-registration of thermal and multispectral images (490–900 nm) captured by UAVs to remove shadow canopy pixels. Using a modified scale-invariant feature transformation (SIFT) algorithm and Kmeans++ clustering, the method excluded shadow-affected pixels, significantly enhancing water stress detection. Ground truth data, represented by Ψstem, were collected concurrently to validate the results. The findings showed an improvement in the coefficient of determination (R2) between CWSI and Ψstem from 0.64 to 0.77 after shadow pixels were removed. Additionally, the root mean square error (RMSE) and standard error (SE) decreased from 0.2 MPa to 0.1 MPa and from 0.24 MPa to 0.16 MPa, respectively. The study also found that shadow pixels had a greater negative effect on stressed vines compared to well-watered vines. These findings underscore the importance of integrating thermal and multispectral image processing to improve the accuracy of water stress assessments in vineyards, enabling more precise irrigation management [66].
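The idea of excluding shadowed canopy pixels before computing a thermal statistic can be sketched as follows. This is a simplified stand-in, not the method of [66]: it uses a plain two-cluster k-means on brightness rather than K-means++ seeding, it skips the SIFT-based co-registration entirely by assuming the two pixel lists are already aligned, and all names and values are hypothetical.

```python
def two_means_centroids(values, max_iter=50):
    """Plain 1-D k-means with two clusters, seeded at the min and max values."""
    low, high = min(values), max(values)
    for _ in range(max_iter):
        dark = [v for v in values if abs(v - low) <= abs(v - high)]
        bright = [v for v in values if abs(v - low) > abs(v - high)]
        new_low = sum(dark) / len(dark)
        new_high = sum(bright) / len(bright) if bright else high
        if new_low == low and new_high == high:
            break
        low, high = new_low, new_high
    return low, high


def sunlit_canopy_temperature(brightness, temps):
    """Mean temperature of pixels assigned to the brighter (sunlit) cluster.

    `brightness` and `temps` are assumed to be co-registered, pixel for pixel.
    Falls back to the overall mean if no pixel is classed as sunlit.
    """
    dark_c, bright_c = two_means_centroids(brightness)
    sunlit = [t for b, t in zip(brightness, temps)
              if abs(b - bright_c) < abs(b - dark_c)]
    if not sunlit:
        return sum(temps) / len(temps)
    return sum(sunlit) / len(sunlit)


# Hypothetical NIR reflectance and temperature per canopy pixel (assumed values).
nir = [0.10, 0.12, 0.11, 0.45, 0.50, 0.48]
temperature = [26.0, 26.5, 26.2, 30.0, 31.0, 30.5]
print(sunlit_canopy_temperature(nir, temperature))
```

Shadowed pixels are cooler than sunlit ones, so including them lowers the apparent canopy temperature and, in turn, the CWSI, which is consistent with the improvement reported after their removal.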

Similarly, a study [58] demonstrated the effectiveness of a multi-sensor UAV platform equipped with RGB, multispectral, and thermal cameras for precision viticulture applications in Italian vineyards. The UAV system, capable of carrying three sensors simultaneously, provided high spatial ground resolution and flexible, timely monitoring, making it ideal for medium-to-small agricultural systems. The study assessed intra-vineyard variability by monitoring vine vigor using a multispectral camera, leaf temperature with a thermal camera, and missing plants using high-resolution RGB imagery. NDVI values, derived from multispectral data, were correlated with shoot weights, showing a strong regression (R2 = 0.69). Thermal imagery was used to generate a CWSI map, which accurately highlighted homogeneous water stress zones after applying canopy pure pixel filtering. This research demonstrated the utility of integrating multiple sensors on UAV platforms for fast, efficient, and multipurpose monitoring in vineyards, offering valuable tools for site-specific management and improved vineyard productivity [58].

In contrast, a study conducted in Greece evaluated water stress estimation in vineyards using aerial SWIR and multispectral UAV data [64]. Data were collected using UAVs equipped with SWIR and multispectral sensors, capturing high-resolution images of the vineyard. The images were processed to calculate vegetation and water-related indices, such as NDVI and NDWI, while SWIR bands provided additional sensitivity to water content variations. Ground validation involved measuring gc and comparing it with UAV-derived indices, revealing strong correlations with R2 values ranging from 0.72 to 0.89. SWIR reflectance is highly sensitive to water absorption, making it particularly effective in identifying subtle differences in plant hydration levels. The results highlighted that integrating SWIR and multispectral data effectively maps spatial water stress patterns in vineyards, offering a precise and reliable tool for optimizing irrigation management and improving water use efficiency [64].

Another study [91] utilized a UAS-based fusion approach by combining multispectral and thermal imaging to assess water stress and water use in surface and direct root zone (DRZ) drip-irrigated vineyards. A modified Mapping Evapotranspiration at High Resolution with Internalized Calibration (METRIC) model (UASM-ET) was applied to estimate high-resolution evapotranspiration under four irrigation treatments (100%, 80%, 60%, and 40% of the commercial rate, CR), with CR derived using soil moisture data and a crop coefficient (Kcb) of 0.5. Over 14 campaigns during the 2018–2019 seasons, UASM-ET estimates were validated against the soil water balance (SWB) method and the conventional Landsat-METRIC (LM-ET) approach. On average, UASM-ET values (2.70 ± 1.03 mm day−1 [mean ± standard deviation]) were higher than SWB-ET values (1.80 ± 0.98 mm day−1). Despite this difference, a strong linear correlation was observed between the two methods (r = 0.64–0.81, p < 0.01). The study generated spatial canopy transpiration (UASM-T) maps by isolating the soil background from UASM-ET data, which showed a strong correlation with estimates from the standard basal crop coefficient method (Td, MAN = 14%, r = 0.95, p < 0.01). These UASM-T maps were used to evaluate water use differences in DRZ-irrigated grapevines. Canopy transpiration (T) varied significantly across irrigation treatments, with the highest values observed in grapevines receiving 100% or 80% of the commercial rate (CR), followed by those at 60% and 40% CR (p < 0.01). Reference transpiration fraction (TrF) curves derived from the UASM-T maps highlighted the clear influence of irrigation rates on grapevine water use. This fusion approach demonstrates the effectiveness of UAS-based imaging for precise water stress detection and optimized water use in specialty crops like grapevines [91].

Similarly, fusion studies have been conducted in other specialty crops, including fruits and vegetables. For example, UAV-based thermal and RGB images were collected over three years from commercial potato (Solanum tuberosum L. cv. Desiree) fields to assess water stress using the CWSI, which was calculated from canopy temperatures [92]. RGB cameras were utilized alongside thermal imaging to enhance the accuracy of canopy temperature extraction by effectively distinguishing between plant material and soil background. Conducted over three growing seasons in Israel, the study involved short-term and long-term water deficit scenarios to assess the correlation between CWSI and traditional water status measurements such as gc, ΨL, and leaf osmotic potential (LOP). Thermal and RGB images were acquired at various phenological stages, and CWSI was calculated using empirical, theoretical, and statistical methods. The results showed strong correlations between CWSI and gc (R2 ranging from 0.64 to 0.99) and demonstrated CWSI’s sensitivity to irrigation levels, with increasing values reflecting heightened water stress. The study highlighted that CWSI could be effectively derived from thermal imagery alone, simplifying its application for large-scale monitoring. By validating CWSI as a reliable, non-destructive alternative to conventional methods, the research emphasized its potential for optimizing irrigation management, improving yield, and ensuring sustainable water use in potato cultivation [92].

In [93], researchers developed predictive models for spinach (Spinacia oleracea L.) yield and water use efficiency (WUE) using remote sensing data collected with a DJI P4 Multispectral platform equipped with an RGB sensor for visible light imaging and five monochrome sensors (blue, green, red, red edge, and near-infrared). RGB imaging was used to derive minimum canopy height, maximum canopy height (MaxHt), mean canopy height (MeanHt), canopy cover (CCover), and canopy volume (CVol) from the DSM height model. The experiment included two irrigation treatments: a well-watered (WW) zone and a partial water deficit (PWD) zone. Ground truth data included three terminal traits (TTs): fresh biomass yield (FY), dry biomass yield (BY), and field water use efficiency (WUEf). FY was determined as the above-ground fresh biomass weight collected from each plot. WUEf was calculated as the ratio of above-ground biomass yield to the total water used per plot, accounting for irrigation and rainfall, with values averaged across replicates for each variety. These terminal traits were used to validate UAS-based predictions. Machine learning models, including random forest and Partial Least Squares Regression, were trained on UAS-derived vegetation indices (NDVI, NDRE) and validated against ground truth data using metrics like R2, RMSE, and MAE to ensure accuracy and robustness. The robust rank fraction analysis showed that 111 out of 144 terminal trait (TT) × UAS data point (UASDP) models were significant under the partial water deficit (PWD) treatment, compared to only 49 under the well-watered (WW) treatment. For the PWD treatment, canopy volume (CVol) ranked as the strongest predictor for all traits, followed by NDRE, MeanHt, and ExG, while under the WW treatment, ExG, NDRE, and NDVI were the top three predictors for all traits, with CVol and CCover ranking fourth for specific traits. 
The studies in this section highlighted the potential of UAS platforms to provide a combination of data types for improving monitoring and better decision-making in specialty crop water management [93].
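Two of the quantities named in the spinach study lend themselves to a short worked example: field water use efficiency, described above as biomass yield divided by total water from irrigation and rainfall, and the Excess Green index (ExG). The Python sketch below assumes the common ExG definition 2g − r − b on chromatic coordinates; the units, function names, and sample numbers are hypothetical and are not taken from [93].

```python
def field_wue(biomass_yield, irrigation, rainfall):
    """Field water use efficiency: biomass yield per unit of total water applied.

    Units are whatever the inputs use (assumed here: kg per plot and mm of water).
    """
    return biomass_yield / (irrigation + rainfall)


def excess_green(red, green, blue):
    """Excess Green index, assumed here as 2g - r - b on chromatic coordinates."""
    total = red + green + blue
    r, g, b = red / total, green / total, blue / total
    return 2 * g - r - b


# Hypothetical plot values (assumed, for illustration only).
print(field_wue(biomass_yield=1200.0, irrigation=300.0, rainfall=100.0))
print(excess_green(red=50, green=150, blue=50))
```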

The evolution of UAV-based multi-sensor fusion has significantly advanced water stress detection in specialty crops by overcoming the limitations of single-sensor approaches. Initially, thermal imaging alone was used to assess canopy temperature variations, but its accuracy was compromised by diurnal stomatal fluctuations, soil background interference, and canopy shadows, particularly in semi-arid orchards. The integration of hyperspectral imaging with thermal data improved stress detection by incorporating structural, physiological, and biochemical indicators, allowing for more precise validation of water status [88]. Similarly, the fusion of RGB and thermal imaging enhanced canopy temperature measurements and minimized background errors, thereby improving plant stress detection [32]. Further advancements in multi-sensor fusion, including RGB + Multispectral + Thermal (TIR) combinations, significantly improved soil moisture estimation and irrigation management, outperforming single-sensor methods [89]. Automated image co-registration techniques have further optimized CWSI calculations, increasing accuracy by reducing shadow pixel interference [66]. These innovations underscore the shift from standalone thermal sensing to advanced multi-sensor UAV platforms, integrating AI, machine learning, and automated calibration techniques for real-time, high-precision water stress assessment and irrigation optimization in precision agriculture (Table 4).
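To make the benefit of RGB and thermal fusion concrete, the following sketch computes a canopy-only CWSI. It assumes the common reference-temperature form, CWSI = (Tc − Twet) / (Tdry − Twet), and a canopy mask that has already been derived from a co-registered RGB image; the mask, the reference temperatures, and the function names are hypothetical and are not taken from the cited studies.

```python
def cwsi(canopy_temp, t_wet, t_dry):
    """Crop Water Stress Index from wet and dry reference temperatures.

    Returns a value clipped to [0, 1]; 0 means unstressed, 1 fully stressed.
    """
    if t_dry <= t_wet:
        raise ValueError("t_dry must exceed t_wet")
    value = (canopy_temp - t_wet) / (t_dry - t_wet)
    return min(1.0, max(0.0, value))


def mean_canopy_cwsi(thermal, canopy_mask, t_wet, t_dry):
    """CWSI of the mean canopy temperature, ignoring masked-out pixels.

    thermal: 2D list of temperatures (degrees C).
    canopy_mask: 2D list of booleans, True where the co-registered RGB
        image was classified as sunlit canopy (soil and shadow are False).
    Returns None if no canopy pixel is present.
    """
    canopy_temps = [
        temp
        for temp_row, mask_row in zip(thermal, canopy_mask)
        for temp, keep in zip(temp_row, mask_row)
        if keep
    ]
    if not canopy_temps:
        return None
    mean_temp = sum(canopy_temps) / len(canopy_temps)
    return cwsi(mean_temp, t_wet, t_dry)


# Hypothetical 2 x 2 tile: one hot soil pixel and one cool shadow pixel.
thermal_tile = [[30.0, 45.0], [32.0, 25.0]]
mask_tile = [[True, False], [True, False]]
stress = mean_canopy_cwsi(thermal_tile, mask_tile, t_wet=26.0, t_dry=36.0)
```

Without the mask, the soil and shadow pixels would shift the mean temperature and therefore the index, which is the background error that the fused approaches aim to remove.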

Table 4. Comparative analysis of UAV-based remote sensing for water stress detection in specialty crops.

4. Future Work

The use of UAV-based remote sensing for water stress detection in specialty crops has demonstrated significant potential, but several key research gaps remain that must be addressed to optimize its operational effectiveness. One of the primary challenges is the scalability of UAV-based methods, as payload constraints and the computational demands of processing high-resolution imagery pose limitations for large-scale applications. Even with commercial software like Pix4Dfields version 2.8, the time and cost required to analyze ultra-high-resolution UAV data make it impractical for routine large-area monitoring [23]. Future research should focus on integrating multi-sensor data fusion techniques by combining high-resolution UAV imagery with moderate-resolution, but more frequent, satellite observations from platforms like Landsat and Sentinel-2 [94,95]. This hybrid approach will improve the temporal resolution of water stress monitoring, ensuring more frequent data collection throughout the growing season [60]. However, a major challenge in sensor fusion is ensuring radiometric consistency across different imaging platforms. Differences in sensor calibration and atmospheric conditions can introduce reflectance biases, necessitating the development of standardized calibration methods and machine learning-driven correction models to maintain accuracy and reliability in stress assessments [23].
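One simple way to approach the radiometric consistency problem is a linear cross-calibration between platforms. The sketch below is an illustration under stated assumptions, not a method from the cited studies: it aggregates UAV reflectance to the coarser satellite grid by block averaging, then fits a least-squares gain and offset that map the aggregated UAV values onto the satellite values. The aggregation factor, the sample values, and the function names are hypothetical.

```python
def block_mean(grid, factor):
    """Aggregate a 2D grid by averaging non-overlapping factor x factor blocks.

    Trailing rows or columns that do not fill a whole block are dropped.
    """
    rows, cols = len(grid), len(grid[0])
    aggregated = []
    for r in range(0, rows - rows % factor, factor):
        out_row = []
        for c in range(0, cols - cols % factor, factor):
            block = [
                grid[r + i][c + j]
                for i in range(factor)
                for j in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        aggregated.append(out_row)
    return aggregated


def fit_gain_offset(x, y):
    """Ordinary least-squares fit of y = gain * x + offset."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    gain = sxy / sxx
    return gain, mean_y - gain * mean_x


# Hypothetical UAV reflectance tile, aggregated 2 x 2 to the satellite grid.
uav_tile = [
    [0.10, 0.10, 0.20, 0.20],
    [0.10, 0.10, 0.20, 0.20],
    [0.30, 0.30, 0.40, 0.40],
    [0.30, 0.30, 0.40, 0.40],
]
uav_coarse = [v for row in block_mean(uav_tile, 2) for v in row]
satellite = [0.12, 0.21, 0.30, 0.39]  # hypothetical co-located pixels
gain, offset = fit_gain_offset(uav_coarse, satellite)
```

A single gain and offset per band is the simplest correction; the machine learning-driven models proposed above would replace this linear fit when the bias varies with illumination or atmospheric conditions.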

Another critical limitation in UAV-based water stress detection is the lack of standardized methodologies across different specialty crops and environmental conditions [96,97,98,99]. Many existing studies rely on vegetation indices like NDVI and NDRE, but their effectiveness varies based on canopy structure, soil background, and atmospheric interference. Future research should explore the development of crop-specific water stress indices that incorporate ground-based physiological data, such as stomatal conductance, leaf water potential, and transpiration rates, to improve validation accuracy. Additionally, while thermal imaging using CWSI has proven effective, its accuracy is highly dependent on meteorological conditions such as solar radiation, humidity, and wind speed [29]. The integration of meteorological data with UAV-based thermal indices should be explored to enhance real-time precision irrigation decision-making. The implementation of hybrid thermal-multispectral indices could further refine stress detection by capturing both temperature variations and reflectance-based physiological changes in crops.
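The dependence of CWSI on meteorological conditions can be made explicit with the empirical form based on a non-water-stressed baseline, in which the lower limit of the canopy-air temperature difference is a linear function of vapor pressure deficit (VPD). The sketch below is a minimal illustration: the saturation vapor pressure expression is the Tetens-type formula used in FAO-56, while the baseline intercept, slope, and upper limit are crop-specific and are hypothetical placeholders here. Treating the upper limit as a constant is a simplifying assumption.

```python
import math


def saturation_vapor_pressure(temp_c):
    """Saturation vapor pressure in kPa (Tetens-type formula, FAO-56)."""
    return 0.6108 * math.exp(17.27 * temp_c / (temp_c + 237.3))


def vapor_pressure_deficit(air_temp_c, rel_humidity_pct):
    """VPD in kPa from air temperature (degrees C) and relative humidity (%)."""
    return saturation_vapor_pressure(air_temp_c) * (1.0 - rel_humidity_pct / 100.0)


def empirical_cwsi(canopy_temp, air_temp, rel_humidity_pct,
                   intercept, slope, upper_limit):
    """Empirical CWSI using a non-water-stressed baseline.

    The baseline gives the canopy-air temperature difference of a
    well-watered crop as intercept + slope * VPD (slope is normally
    negative). intercept, slope, and upper_limit are crop-specific and
    must be calibrated; the values passed below are hypothetical.
    """
    vpd = vapor_pressure_deficit(air_temp, rel_humidity_pct)
    lower_limit = intercept + slope * vpd
    if upper_limit <= lower_limit:
        raise ValueError("upper_limit must exceed the baseline value")
    delta_t = canopy_temp - air_temp
    value = (delta_t - lower_limit) / (upper_limit - lower_limit)
    return min(1.0, max(0.0, value))


# Hypothetical reading: canopy 31 C, air 30 C, 40 % relative humidity.
index = empirical_cwsi(31.0, 30.0, 40.0,
                       intercept=2.0, slope=-2.0, upper_limit=5.0)
```

Because VPD enters the lower limit directly, the same canopy temperature yields a different index on a humid day than on a dry one, which is why pairing UAV thermal data with on-site weather records matters for irrigation decisions.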

The role of emerging technologies, such as LiDAR, machine learning (ML), and artificial intelligence (AI), in synthesizing UAV data for enhanced decision-making is another promising research area. LiDAR sensors, which generate high-resolution 3D models of crop canopies, can be integrated with multispectral and thermal imaging to provide a more comprehensive assessment of plant water status [25], particularly in orchards and vineyards where canopy architecture influences water availability. Machine learning algorithms, including convolutional neural networks (CNNs) and other deep learning (DL) models, can automate the classification of water stress levels by identifying complex, non-linear relationships in UAV-acquired spectral and structural data [23]. The application of AI and machine learning models in thermal-based UAV water stress detection nevertheless remains limited, with most studies relying on conventional indices like CWSI rather than advanced predictive modeling. While initial attempts have integrated machine learning for automated stress classification, further research is needed to develop robust AI-driven models that can improve accuracy by incorporating meteorological factors, canopy variations, and multi-temporal thermal data. The development of AI-driven decision-support systems will enable real-time processing of UAV imagery, allowing for automated irrigation recommendations based on spatial variability in crop water stress. Future research should focus on training these AI models with large-scale, multi-season datasets to improve their robustness across different cropping systems and climatic conditions.

While UAVs offer high-resolution insights, potential sources of error in data collection and processing must also be considered. Variations in flight altitude, sensor angle, and lighting conditions can introduce inconsistencies in imagery, leading to misclassification of water stress levels [100]. Variability in image resolution, atmospheric influences, and crop-specific characteristics often introduces uncertainties in decision-making processes, emphasizing the need to standardize and optimize UAV-based workflows [101]. Additionally, atmospheric interference, cloud cover, and wind conditions can affect data quality, particularly for thermal and hyperspectral sensors. To mitigate these issues, future studies should implement automated radiometric correction techniques, improved UAV flight planning protocols, and standardized preprocessing pipelines to enhance data accuracy. The integration of real-time cloud-based processing can further streamline UAV data analysis, reducing the time between image acquisition and actionable decision-making.
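The effect of flight altitude on image consistency can be quantified through the ground sampling distance (GSD), which scales linearly with altitude for a nadir-pointing camera. The sketch below uses the standard pinhole relation GSD = (sensor width × altitude) / (focal length × image width); the camera parameters are hypothetical and do not describe any sensor used in the reviewed studies.

```python
def ground_sampling_distance_cm(altitude_m, sensor_width_mm,
                                focal_length_mm, image_width_px):
    """Ground sampling distance in cm per pixel for a nadir-pointing camera."""
    if focal_length_mm <= 0 or image_width_px <= 0:
        raise ValueError("focal length and image width must be positive")
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


def footprint_width_m(altitude_m, sensor_width_mm, focal_length_mm):
    """Across-track ground footprint of a single image, in metres."""
    return sensor_width_mm * altitude_m / focal_length_mm


# Hypothetical camera: 6 mm sensor width, 6 mm focal length, 1000 px wide.
gsd_low = ground_sampling_distance_cm(50.0, 6.0, 6.0, 1000)
gsd_high = ground_sampling_distance_cm(100.0, 6.0, 6.0, 1000)
```

Doubling the altitude doubles the GSD, so a canopy pixel at the higher altitude mixes roughly four times the ground area; flights compared across dates should therefore hold altitude constant or resample imagery to a common resolution.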

Finally, the widespread adoption of UAV-based solutions will require addressing practical barriers such as high costs, data-intensive workflows, implementation complexity, and resistance from stakeholders, including farmers [102,103]. Efforts should focus on developing cost-effective technologies, providing targeted training programs, and actively engaging stakeholders to ensure acceptance and successful implementation [103]. Integrating UAV-based remote sensing into sustainable irrigation practices is essential for adapting to climate change, safeguarding water resources, and achieving long-term food security [93,95,96,103]. By addressing these challenges and embracing innovative technologies, UAV-based remote sensing can become a cornerstone of precision agriculture, enabling more sustainable and efficient water management in specialty crops.

In the coming years, advancements in automated UAV technology, cloud computing, and AI-powered analytics will enable the transition to real-time, precision irrigation systems that integrate UAV, satellite, and ground-based sensor data. The ultimate goal is to develop a fully automated water stress-monitoring framework that can guide irrigation decisions with minimal user intervention, allowing for sustainable water management and optimized crop yields in specialty crop production [23]. By addressing these research gaps and leveraging technological innovations, UAV-based remote sensing will continue to play a crucial role in the future of smart agriculture and climate-resilient farming systems.

5. Conclusions

The adoption of UAV-based remote sensing has revolutionized water stress detection in specialty crops, offering high-resolution, real-time monitoring that enhances precision irrigation and sustainable water management. The fusion of thermal, multispectral, hyperspectral, and RGB imaging, alongside ground-based and satellite data, has significantly improved the accuracy of stress detection models. Despite these advancements, challenges such as the lack of standardized indices, scalability limitations, and the need for automated data processing persist. The integration of artificial intelligence and machine learning presents a promising avenue for refining predictive models, while the expansion of UAV applications to a broader range of specialty crops will further improve water use efficiency and crop productivity. Addressing regulatory and operational barriers, coupled with advancements in multi-sensor integration and real-time analytics, will be critical in transitioning toward semi-automated and fully automated irrigation systems. By leveraging these innovations, UAV technology will continue to play a pivotal role in optimizing irrigation strategies, improving agricultural sustainability, and mitigating the impacts of climate variability on crop production.

Author Contributions

Conceptualization, H.S. (Harmandeep Sharma) and A.B.; methodology, H.S. (Harmandeep Sharma) and H.S. (Harjot Sidhu); resources, H.S. (Harmandeep Sharma) and H.S. (Harjot Sidhu); writing—original draft preparation, H.S. (Harmandeep Sharma) and H.S. (Harjot Sidhu); writing—review and editing, H.S. (Harmandeep Sharma) and A.B.; visualization, H.S. (Harjot Sidhu). All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Evans-Allen project award no. NC.X-355-5-23-130-1 from the U.S. Department of Agriculture’s National Institute of Food and Agriculture. Any opinions, findings, conclusions, or recommendations expressed in this publication are those of the author(s) and do not necessarily reflect the view of the U.S. Department of Agriculture.

Conflicts of Interest

The authors declare no conflicts of interest.

Acronyms

| ANN | Artificial Neural Network |

| ARVI | Atmospherically Resistant Vegetation Index |

| BGI | Blue–Green Index |

| C_area | Canopy Top-View Area |

| CARS-PLS | Competitive Adaptive Reweighted Sampling-Partial Least Squares |

| Chl-a | Chlorophyll a |

| Chl-b | Chlorophyll b |

| CIrededge | Chlorophyll Index at Red Edge |

| COR | Correlation |

| CUM_NDVI | Cumulative NDVI |

| CWSI | Crop Water Stress Index |

| DDD | Drought Damage Degree |

| DVI | Difference Vegetation Index |

| DRZ | Direct Root Zone |

| EL | Electrolyte Leakage |

| ENDVI | Enhanced NDVI |

| Fv/Fm | Maximum Efficiency of Photosystem II |

| G | Greenness Index |

| GBM | Gradient Boosting Machine |

| GC | Stomatal Conductance |

| GI | Greenness Index |

| GRVI | Green Ratio Vegetation Index |

| GNDVI | Green NDVI |

| Kc | Crop Coefficients |

| KNR | K-Nearest Neighbors Regression |

| MDA | Malondialdehyde |

| MCARI | Modified Chlorophyll Absorption in Reflectance Index |

| MOD_NDVI | Modified NDVI |

| MOD_RSRI | Modified Red Edge SRI |

| MSAVI | Modified Soil-Adjusted Vegetation Index |

| MSR | Modified Simple Ratio |

| MTVI | Modified Triangular Vegetation Index |

| NDGI | Normalized Difference Greenness Index |

| NDRE | Normalized Difference Red Edge Index |

| NDVI | Normalized Difference Vegetation Index |

| NIR | Near-Infrared |

| NWSB | Non-Water-Stressed Baseline |

| OSAVI | Optimized Soil-Adjusted Vegetation Index |

| PCA | Principal Component Analysis |

| P_height | Plant Height |

| PLS | Partial Least Squares |

| PRI | Photochemical Reflectance Index |

| PSNDa | Pigment-Specific Normalized Difference for Chlorophyll A |

| PSNDb | Pigment-Specific Normalized Difference for Chlorophyll B |

| PSSRa | Pigment-Specific Simple Ratio for Chlorophyll A |

| PSSRb | Pigment-Specific Simple Ratio for Chlorophyll B |

| PSRI | Plant Senescence Reflectance Index |

| ΨL | Leaf Water Potential |

| ΨSTEM | Stem Water Potential |

| RBF | Radial Basis Function |

| RDVI | Renormalized Difference Vegetation Index |

| REGI | Red Edge Green Normalized Difference Index |

| RF | Random Forest |

| RGRI | Red–Green Ratio Index |

| RNDVI | Red Edge NDVI |

| RVI | Ratio Vegetation Index |

| RVSI | Red Edge Vegetation Stress Index |

| SAVI | Soil-Adjusted Vegetation Index |

| SCN | Stochastic Configuration Networks |

| SEC | Second Moment |

| SHD | Super High-Density |

| SPA + RF | Successive Projections Algorithm + Random Forest |

| SR | Simple Ratio |

| SRGREEN | Simple Ratio Green |

| SRI | Simple Ratio Index |

| SS | Soluble Saccharide |

| SVR | Support Vector Regression |

| SWIR | Shortwave Infrared |

| TCARI/OSAVI | Transformed Chlorophyll Absorption in Reflectance Index/Optimized Soil-Adjusted Vegetation Index |

| TVI | Triangular Vegetation Index |

| UAS | Unmanned Aerial System |

| UAV | Unmanned Aerial Vehicle |

| UVE + SVM | Uninformative Variable Elimination + Support Vector Machine |

| VPD | Vapor Pressure Deficit |

| VDVI | Visible Band Difference Vegetation Index |

| VOG REI | Vogelmann Red Edge Index |

| WI | Water Index |

| ZM | R750/R710 |

References

- Payne, W.Z.; Kurouski, D. Raman-Based Diagnostics of Biotic and Abiotic Stresses in Plants. A Review. Front. Plant Sci. 2021, 11, 616672.

- Hunter, M.C.; Smith, R.G.; Schipanski, M.E.; Atwood, L.W.; Mortensen, D.A. Agriculture in 2050: Recalibrating Targets for Sustainable Intensification. Bioscience 2017, 67, 386–391.

- United Nations. World Population Prospects 2019: Highlights; United Nations: New York, NY, USA, 2019.

- Pisante, M.; Stagnari, F.; Grant, C.A. Agricultural Innovations for Sustainable Crop Production Intensification. Ital. J. Agron. 2012, 7, e40.

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349.

- Barbedo, J.G.A. Detection of nutrition deficiencies in plants using proximal images and machine learning: A review. Comput. Electron. Agric. 2019, 162, 482–492.