Highlights

What are the main findings?

- We propose NightTrack, a unified framework that jointly optimizes low-light enhancement and object tracking, outperforming state-of-the-art methods in night-time UAV scenarios.

- By introducing Pyramid Attention Modules (PAMs) and jointly estimating illumination and noise, the framework significantly enhances the discriminability of features in low-light conditions.

What are the implications of the main findings?

- The unified paradigm of integrating enhancement and tracking offers a more effective solution for tasks with competing objectives than traditional two-stage pipelines.

- The proposed framework substantially improves the robustness and precision of night-time UAV tracking, presenting a novel perspective to enable practical applications in challenging low-light environments.

Abstract

UAV-based visual object tracking has recently become a prominent research focus in computer vision. However, most existing trackers are primarily benchmarked under well-illuminated conditions, largely overlooking the challenges that arise in night-time scenarios. Although attempts exist to restore image brightness via low-light image enhancement before feeding frames to a tracker, such two-stage pipelines often struggle to strike an effective balance between the competing objectives of enhancement and tracking. To address this limitation, this work proposes NightTrack, a unified framework that jointly optimizes low-light image enhancement and UAV object tracking. While boosting image visibility, NightTrack not only explicitly preserves but also reinforces the discriminative features required for robust tracking. To improve the discriminability of low-light representations, Pyramid Attention Modules (PAMs) are introduced to enhance multi-scale contextual cues. Moreover, by jointly estimating illumination and noise curves, NightTrack mitigates the potential adverse effects of low-light environments, leading to significant gains in precision and robustness. Experimental results on multiple night-time tracking benchmarks demonstrate that NightTrack outperforms state-of-the-art methods in night-time scenes, showing strong promise for further development.

1. Introduction

Unmanned aerial vehicles (UAVs) have served as a crucial application platform for visual object tracking. Benefiting from their small size, high maneuverability, and ease of operation, UAVs are particularly well-suited for tracking missions and hold significant application value in areas such as intelligent surveillance [1] and autonomous driving [2]. Driven by the availability and applicability of large-scale datasets [3,4,5], supervised learning tasks utilizing manually annotated labels have made many breakthroughs in visual object tracking research [6,7,8,9,10,11]. Performance requirements for trackers are becoming increasingly stringent with the evolution of modern technology. A key driver is that a wealth of high-value data and critical operations are exclusive to nocturnal settings, rendering trackers that rely solely on daytime data inadequate for high-stakes application scenarios. Consequently, modern applications demand that trackers not only exhibit high precision, robustness, and real-time capability in standard night-time scenarios but also maintain sustained and reliable performance under extreme conditions, such as extremely low illumination. This demand is particularly pronounced in several key domains, notably wildlife ecology and autonomous systems. In wildlife ecology, for instance, many species are nocturnal and their key behaviors—such as hunting and migration—are hidden from daylight observation. Therefore, accurate night-time tracking is crucial for obtaining valid ecological data and implementing effective conservation strategies. In the domain of autonomous systems, the operational envelope is expanding to encompass 24/7 all-weather operations. For instance, UAVs are deployed for nocturnal inspection of critical infrastructure and search-and-rescue missions, while autonomous vehicles must navigate safely on roads with poor or non-existent lighting. 
In both cases, system safety and mission success are fundamentally dependent on the ability to accurately perceive and interpret the environment under adverse visual conditions.

To date, visual trackers have largely been evaluated under favorable lighting, where discriminative features are readily extracted by simple networks. In sharp contrast, real-world night-time images suffer from severe quality degradations, including low contrast, insufficient illumination, and color distortion, which result in a significant loss of detail. Such degradation severely impairs tracker performance and challenges the practical deployment of UAVs [12]. A conventional solution is an “enhance-then-track” pipeline, which first restores image quality using low-light enhancement methods [13,14,15] before executing tracking. Although it can be effective, this approach has a fundamental limitation: enhancement and tracking are separate, uncorrelated modules. As a result, improvements in visual quality do not necessarily translate into tracking-specific discriminability, which hinders collaborative optimization of the two modules.

To address this problem, this paper proposes a novel method, called NightTrack, that integrates low-light image enhancement and single object tracking within an end-to-end framework to achieve stable tracking in challenging low-light scenarios. Specifically, NightTrack embeds an enhancement algorithm directly in the tracking pipeline, where it is optimized jointly for tracking performance and visual quality. Pyramid Attention Modules (PAMs) are designed to combat image quality degradation. Each PAM aggregates multi-scale channel context to help capture and represent local-global dependencies simultaneously, improving the discriminative power of crucial features. Additionally, a Retinex theory-based non-linear projection is employed to estimate the illumination and noise curves and map the input to a set of normal-light images, effectively addressing the problems caused by degradation factors such as noise in low-light images.

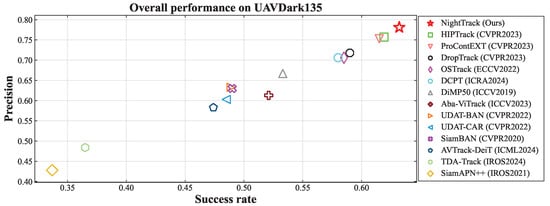

As illustrated in Figure 1, the proposed NightTrack outperforms state-of-the-art (SOTA) tracking baselines. It forms a novel end-to-end framework that organically combines low-light image enhancement with single object tracking, aiming to boost tracking performance in challenging night-time environments. The proposed tracker is a generic model capable of following any object instance designated by an initial bounding box, independent of predefined object categories. This design ensures high flexibility and applicability across a wide range of scenarios.

Figure 1.

Performance comparison on the UAVDark135 [16] benchmark. The results show that NightTrack is able to complete night-time UAV tracking with satisfactory performance.

The main contributions of this work are summarized as follows:

- (1) A novel end-to-end framework that jointly optimizes low-light image enhancement and object tracking, which significantly improves tracking performance at night.

- (2) Pyramid Attention Modules (PAMs) that each aggregate multi-scale contextual information, thereby substantially enhancing the underlying network’s representation capability and facilitating better detail restoration in night scenes.

- (3) An integration of Retinex theory-based illumination and noise curves within the enhancement stage, coupled with a no-reference loss function that enables unsupervised learning of the feature transformation without paired data, counteracting the degradation caused by low-light conditions.

- (4) A systematic experimental evaluation of the proposed joint optimization method, demonstrating its SOTA performance on multiple night-time tracking benchmarks, outperforming baseline tracking networks.

2. Related Work

2.1. Low-Light Image Enhancement Methods

Conventional low-light image enhancement approaches can be classified into two primary paradigms. The first paradigm, as described in [17,18], seeks to achieve illumination enhancement by expanding an image’s dynamic range, which is accomplished through the manipulation of its histogram distribution at both global and local scales. The second is founded upon the Retinex theory [19]. Its fundamental principle involves decomposing an image into a reflection component and an illumination component, based on the assumption that the former is invariant under varying illumination conditions. Consequently, the enhancement task is reformulated as an illumination estimation problem, a strategy adopted in numerous studies [20,21,22]. However, these conventional approaches are beset by significant limitations. Their intricate optimization processes and long computation times preclude their integration into real-time tracking systems deployed on UAVs. Furthermore, a common shortcoming of Retinex-based models is that they frequently ignore noise, so that noise is preserved or even amplified in the enhanced output, subsequently impairing the performance of downstream applications.

In recent years, deep learning-based methods have demonstrated remarkable success in numerous low-level vision tasks. An early example is LLNet [23], which applies deep learning to low-light image enhancement through a deep autoencoder-based method that simultaneously performs contrast enhancement and denoising. This approach exhibits superior robustness and stability compared to traditional methods. Retinex-Net [24] integrates the Retinex model with deep learning, introducing an end-to-end trainable network for both decomposition and enhancement. Specifically, it employs a decomposition network to separate the illumination and reflection components and subsequently utilizes an enhancement network to adjust the illumination map. KinD [25] offers a design with three sub-networks for layer decomposition, reflectance restoration, and illumination adjustment, respectively. However, its reliance on three separate deep neural networks results in high computational cost and poor real-time performance. In contrast to these image reconstruction-based methods, Zero-DCE [26] presents a lightweight model for low-light enhancement that outputs high-order curves to perform pixel-wise adjustments on the input’s dynamic range. As such, it effectively formulates the enhancement task as a curve estimation problem, a formulation supported by experimental assessments. The image-to-curve mapping requires only a lightweight network, enabling fast inference and making it suitable for integration into UAV tracking algorithms.

2.2. Image Enhancement for Downstream Vision Tasks

Whilst powerful when applicable, deep convolutional neural networks are highly sensitive to image quality degradation factors such as color distortion, blur, and noise. Consequently, the performance of downstream tasks often degrades when processing real-world video images, which are not always of high quality. A simple solution might be to reinforce the performance of downstream vision tasks, such as object detection [27], image segmentation [28], and object tracking [13,14,15], by applying low-light image enhancement. Darklighter [13] constructs a lightweight curve estimation network to alleviate the adverse effects of inadequate lighting and noise on UAV tracking in night-time scenes. SCT [14] applies the Transformer [29] and a curve projection model, trained in a task-inspired manner, to achieve stable night-time tracking. PANet [15] considers both human visual perception and the specific requirements of UAV tracking, highlighting the targets’ latent features. The basic idea of these three methods is to use a plug-and-play enhancer as a preprocessing step for UAV tracking. However, this generally meets with limited success because traditional enhancement methods primarily focus on improving the visual perceptual quality of images, rather than optimizing features for a specific task.

Recently, researchers have considered integrating enhancement algorithms with downstream tasks, adopting end-to-end frameworks to learn effective feature representations from the synergistic tasks, thereby strengthening the overall performance. For instance, Guo et al. proposed an end-to-end network for object detection in night-time scenes [30]. By jointly training a dynamic image enhancement network with a detector based on Faster R-CNN [31], the resultant system significantly improves the detection performance under low-light conditions. DANNet [32] employs adversarial learning to train a sub-network for image brightness restoration, mitigating the impact of low-light environments on the segmentation network. Similarly, ADTrack, a dual regression algorithm [12], has been introduced on the basis of correlation filtering. It simultaneously trains a context filter and a target-focused filter, greatly enhancing the robustness of UAV tracking at night.

3. Methodology

3.1. Overall Framework

As previously discussed, low-light image enhancement can be used to restore image quality and serve as a plug-and-play module within a tracker, effectively improving tracking performance. However, such a decoupled paradigm essentially treats enhancement and tracking as two separate tasks that do not collaborate effectively. The tracker is constrained by the enhancer because the enhanced features are not necessarily optimal for object tracking. To overcome this limitation, an end-to-end joint training framework, NightTrack, is proposed for concurrent image enhancement and object tracking. It aims to enhance the robustness of low-light UAV object tracking by improving image features, rather than pursuing perceptual quality.

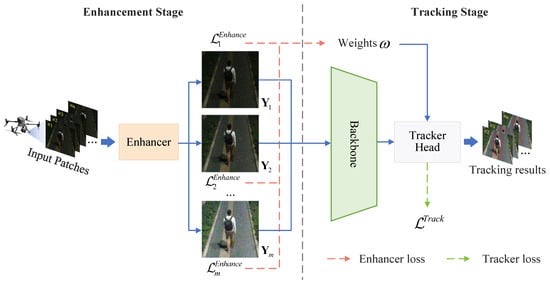

The overall framework of the proposed network, as illustrated in Figure 2, primarily consists of two stages: a low-light image enhancement stage and a tracking stage. For a given low-light image, NightTrack first employs an image (patch-wise) enhancement algorithm to iteratively output M clear images under normal illumination. Subsequently, the features of each enhanced image are fed into the tracker’s backbone network, enabling the system to adaptively select the most effective iteration step. Through end-to-end joint optimization of the enhancer and tracker, the system simultaneously learns illumination-robust representations and discriminative correlation filters from night-time UAV videos.

Figure 2.

Proposed end-to-end framework. The left side shows the enhancement stage, and the right side shows the tracking stage.

To suppress any quality degradation in low-light images caused by noise (and possibly, other adverse factors), a novel design of Pyramid Attention Modules (PAMs) is embedded within the enhancer. Furthermore, a non-linear projection mechanism is constructed based on the Retinex theory [19]. This mechanism estimates the illumination and noise curves to map the image to a normal exposure, allowing for the simultaneous optimization of image enhancement and denoising. A set of no-reference loss functions is also introduced during the training phase. This is necessary because night-time UAV tracking datasets are scarce and insufficient to support the full training of an end-to-end network, and because manual annotation for such datasets is time-consuming, labor-intensive, and costly. On one hand, these losses help drive the enhancer with the objectives of brightness preservation and structural fidelity. On the other hand, through a joint optimization strategy, they facilitate the synergistic update of the enhancer and tracker. This approach strengthens the visual quality of low-light images while maximally preserving semantic information, ensuring that the tracker’s backbone can extract discriminative features and maintain robust localization accuracy in night-time scenes.

In implementation, the proposed method primarily consists of two sub-networks: an enhancement sub-network and a tracking sub-network. In the enhancement stage, a simple yet effective architecture is employed to restore image brightness, progressively reconstructing the low-light image in an iterative manner. The restored features from each iteration are fed into the tracker’s backbone, where weights are adaptively computed based on their contribution to the subsequent tracking task. This quantifies the importance of the m-th iteration, enabling the model to select the most effective features. To guide the network to focus on the target region, each PAM mines multi-scale spatial context along the channel dimension. This enhances multi-scale representations while effectively suppressing noise and redundancies that are irrelevant for tracking.

In the tracking stage, the proposed framework utilizes HipTrack [6] as the baseline, employing the enhanced images for tracking. This joint design permits gradient backpropagation from the tracking loss to the enhancer, facilitating end-to-end training. Such a synergy not only generates higher-quality images but also markedly improves tracking precision and robustness in low-light conditions (as empirically shown later through comprehensive experimental investigations).

3.2. Enhancement Stage

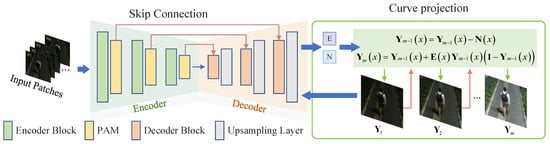

Figure 3 illustrates the structure of the enhancement sub-network. It utilizes a U-Net backbone [33] and incorporates Pyramid Attention Modules (PAMs), to endow the model with stronger global context awareness. Specifically, for a given low-light image , its hierarchical feature representations are extracted sequentially through K encoders. The constitution of each encoder includes an encoding block, a downsampling layer, and a PAM. Except for the first encoder, which outputs a default number (32) of channels, each subsequent encoder doubles the number of channels while halving the spatial resolution, i.e., , .

Figure 3.

Main structure of the enhancement sub-network. By estimating the illumination curve and noise curve and then performing nonlinear projection, input images are enhanced to meet the requirements of feature extraction for UAV tracking.

To realize global context aggregation, the downsampled feature maps are fed into each PAM. This module is designed to learn rich cross-scale representations over multiple spatial scales, thereby effectively mitigating the propagation of redundant features generated by the enhancement stage. During the decoding phase, the spatial resolution is progressively restored through upsampling. A Tanh activation function [34] is applied after the final two convolutional layers to produce the estimated illumination curve and the noise curve , respectively. Finally, based on the Retinex theory [19], the resulting low-light image is mapped into a set of enhanced images . Adaptive weights are then computed from these enhanced images to achieve an optimal match between the enhancement results and the tracking objective, improving night-time tracking performance. The technical details of this enhancement stage are presented below.

3.2.1. Pyramid Attention Module

UAV images typically possess high spatial resolution, whereas existing low-light image enhancement methodologies are primarily designed for smaller images. Consequently, the direct application of such enhancement networks to high-resolution UAV images presents two principal limitations. Firstly, the network’s receptive field is constrained, impeding the effective modeling of contextual information between different regions. Secondly, directly scaling the image may lead to the degradation or loss of salient details. To mitigate these issues, the present work, inspired by [35], introduces channel attention-based pyramid pooling modules to improve the system’s capacity for global information modeling, strengthening its ability to capture inter-channel dependencies.

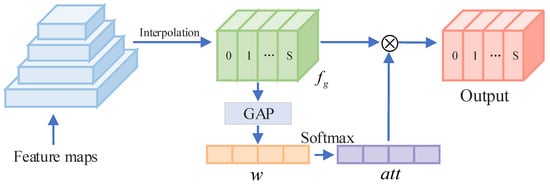

As illustrated in Figure 4, each PAM is composed of four parallel branches, which employ average pooling operations with kernel sizes of , , , and , respectively. This design allows for the extraction of hierarchical representations at multiple scales. These multi-scale features not only encompass rich contextual information but also preserve fine-grained details within the image. The feature maps from each scale are upsampled to the original spatial resolution via bilinear interpolation and are then concatenated along the channel dimension to obtain the global context feature . Algorithm 1 summarizes the main process of the proposed module.

Figure 4.

Structure of Pyramid Attention Module (PAM): A pyramid parsing module is applied first to capture feature representations at different scales, with resulting features concatenated after upsampling to obtain composite feature map containing multiscale contextual information; attention weight is then separately extracted for different scale feature maps, with weights recalibrated by Softmax [36] and multiplied by corresponding feature maps. Refined feature maps are returned with multi-scale channel information.
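The four-branch pooling, upsampling, and concatenation described above can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the kernel sizes `(1, 2, 4, 8)` are placeholders rather than the values used by the authors, nearest-neighbour repetition is swapped in for bilinear interpolation to keep the sketch dependency-free, and the function names are hypothetical.

```python
import numpy as np

def avg_pool(feat, k):
    """Non-overlapping k x k average pooling on a (C, H, W) feature map."""
    C, H, W = feat.shape
    return feat.reshape(C, H // k, k, W // k, k).mean(axis=(2, 4))

def upsample_nearest(feat, k):
    """Restore the spatial size by repeating each value k times per axis.
    (The text uses bilinear interpolation; nearest-neighbour is a stand-in.)"""
    return feat.repeat(k, axis=1).repeat(k, axis=2)

def pyramid_context(feat, kernels=(1, 2, 4, 8)):
    """Pool at several scales, upsample back, and concatenate along channels.
    The kernel sizes are assumed values, chosen only for illustration."""
    branches = [upsample_nearest(avg_pool(feat, k), k) for k in kernels]
    return np.concatenate(branches, axis=0)

feat = np.random.default_rng(0).standard_normal((8, 16, 16))
ctx = pyramid_context(feat)   # four branches of 8 channels each
```

Each branch keeps the input resolution after upsampling, so the concatenated context feature has four times as many channels as the input in this sketch.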

Inspired by the work presented in [37], a multi-scale channel attention mechanism is proposed to effectively extract channel attention weights and enhance the utilization of contextual information. The mechanism first uniformly partitions the global feature along the channel dimension into S sub-features, denoted as . Then, global average pooling is performed to generate the channel estimation:

| Algorithm 1 Pyramid Attention Module (PAM) algorithm |
| --- |
| Input: Feature map |
| Parameter: Set the pooling sizes: Define is the size of , S is the number of sub-features |
| Output: The feature map of the K-th encoder |

Subsequently, a non-linear mapping is constructed through two fully connected (FC) layers to represent the inter-channel dependencies. The attention weights are computed as follows:

where and represent the fully connected (FC) layers, and denote the ReLU and Sigmoid activation functions, respectively. The rationale for this design is that combining linear information between channels generally benefits modeling by facilitating information interaction across high-dimensional and low-dimensional spaces. To optimize the attention weights among different sub-features, the proposed method employs the Softmax function to normalize and recalibrate the initial attention weights:

where is the attention weight from , and the Softmax function is used to compute the recalibrated weight . This operation not only supports information interaction among the multi-scale channel attentions but also enhances the correlation between local and global features. Subsequently, the calibrated attention weights are multiplied with their corresponding sub-features to obtain the weighted features:

where ⊙ denotes the element-wise multiplication. In summary, the proposed novel PAMs can effectively fuse multi-scale channel attention information, improving the enhancer’s utilization of channel features from different regions and enhancing the discriminative power of key features. This makes the overall system more stable and robust in complex scenarios.
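The attention steps described above (channel split, global average pooling, two FC layers with ReLU and Sigmoid, Softmax recalibration, and element-wise reweighting) can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the function name `pam_channel_attention`, the random FC weights, the reduction ratio `r`, the sharing of FC weights across sub-features, and the choice to apply Softmax across the S sub-features are all assumptions made for the example.

```python
import numpy as np

def pam_channel_attention(feat, S, w1, w2):
    """feat: (C, H, W); w1: (C_s // r, C_s); w2: (C_s, C_s // r), with C_s = C // S."""
    C, H, W = feat.shape
    assert C % S == 0, "channels must split evenly into S sub-features"
    subs = feat.reshape(S, C // S, H, W)            # uniform split along channels
    g = subs.mean(axis=(2, 3))                      # global average pooling -> (S, C_s)
    hidden = np.maximum(g @ w1.T, 0.0)              # first FC layer + ReLU
    z = 1.0 / (1.0 + np.exp(-(hidden @ w2.T)))      # second FC layer + Sigmoid -> (S, C_s)
    # Softmax across the S sub-features for each channel index (assumed axis)
    e = np.exp(z - z.max(axis=0, keepdims=True))
    att = e / e.sum(axis=0, keepdims=True)
    out = subs * att[:, :, None, None]              # element-wise reweighting
    return out.reshape(C, H, W), att

rng = np.random.default_rng(0)
C, H, W, S, r = 16, 8, 8, 4, 2                      # hypothetical sizes
feat = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // S // r, C // S)) * 0.1
w2 = rng.standard_normal((C // S, C // S // r)) * 0.1
refined, att = pam_channel_attention(feat, S, w1, w2)
```

Because the recalibrated weights sum to one across sub-features, the sub-features compete for emphasis rather than being scaled independently.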

3.2.2. Curve Projection Based on Retinex Theory

In the Retinex model [19], an input image can be regarded as the pixel-wise product of an illumination component and a reflection component :

where represents the illumination intensity of the scene, is determined by the intrinsic properties of the objects themselves and is independent of the illumination, and the symbol ⊙ denotes element-wise multiplication.
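For reference, the classical decomposition described above is commonly written as below. The symbol names are assumed here for illustration only: $\mathbf{I}$ for the input image, $\mathbf{L}$ for the illumination component, and $\mathbf{R}$ for the reflection component.

```latex
\begin{equation*}
\mathbf{I} = \mathbf{L} \odot \mathbf{R}
\end{equation*}
```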

Since the reflection component is not affected by illumination, it is typically considered as the enhanced result. However, in low-light imaging, the original frame is often corrupted by significant noise. Direct application of the decomposition will lead to concurrent amplification of noise within the resultant reflection. To overcome this problem, the work of [22] has introduced a noise term into the classical Retinex model:

Once the illumination component and the noise component are estimated, the reflection component can be decomposed from the input image , such that

where ⊘ denotes pixel-by-pixel division. To avoid the case where the divisor is 0, let . Thus, the above formula is reformulated as follows:
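As a numerical illustration of the noise-aware decomposition, the sketch below builds a synthetic image from the model "image = illumination ⊙ reflection + noise" and recovers the reflection by subtracting the noise before the pixel-wise division. The variable names, the synthetic data, and the `eps` floor used to guard the divisor are assumptions for this example; the reparameterization adopted in the text to avoid a zero divisor may differ.

```python
import numpy as np

def retinex_reflectance(image, illumination, noise, eps=1e-6):
    """Recover reflection R from I = L * R + N (all operations element-wise).
    eps is an assumed safeguard against division by (near) zero illumination."""
    return (image - noise) / np.maximum(illumination, eps)

rng = np.random.default_rng(0)
R_true = rng.uniform(0.2, 0.9, size=(4, 4, 3))      # synthetic reflection
L = rng.uniform(0.05, 0.3, size=(4, 4, 1))          # dim illumination
N = rng.normal(0.0, 0.01, size=(4, 4, 3))           # sensor noise
I = L * R_true + N                                  # observed low-light image

R_naive = I / L                                     # ignores noise: error equals N / L
R_est = retinex_reflectance(I, L, N)                # noise removed before division
```

Since the illumination is well below 1 in dark regions, the naive division scales the noise up by 1 / L, which is the amplification effect the noise term is meant to counter.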

As with Zero-DCE [26], low-light image enhancement can be formulated as a pixel-wise high-order curve mapping problem. Given a low-light image , it adjusts the dynamic range of the input image pixel-wise across M iterations, by predicting a set of nonlinear mapping coefficients:

where represents the brightness response after the m-th iteration, and is a parameter matrix with the same dimensionality as the input in which each pixel independently reflects the mapping intensity learned.
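The curve mapping of Zero-DCE [26] that this formulation builds on can be sketched as follows. The quadratic update is the one published for Zero-DCE; the constant parameter maps, the image size, and `M = 4` are placeholder assumptions, and the noise-aware variant discussed next is not covered here.

```python
import numpy as np

def curve_enhance(image, alphas):
    """Apply Zero-DCE-style quadratic curves pixel-wise.
    image: values in [0, 1]; alphas: one parameter map in [-1, 1] per iteration.
    Returns the list of intermediate results, one per iteration."""
    x = image
    outputs = []
    for a in alphas:
        x = x + a * x * (1.0 - x)   # LE_m = LE_{m-1} + A_m * LE_{m-1} * (1 - LE_{m-1})
        outputs.append(x)
    return outputs

rng = np.random.default_rng(0)
dark = rng.uniform(0.0, 0.2, size=(4, 4, 3))           # synthetic low-light patch
M = 4                                                   # hypothetical iteration count
alphas = [np.full_like(dark, 0.8) for _ in range(M)]    # placeholder parameter maps
outs = curve_enhance(dark, alphas)
```

With parameters in [-1, 1] and inputs in [0, 1], each update stays within [0, 1], and positive parameters brighten the image monotonically across iterations.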

The curve projection model obtained so far does not explicitly account for noise. This may result in the amplification of image noise during the dynamic range stretching, severely reducing the robustness of the UAV tracker. To suppress noise concurrently during low-light enhancement, the proposed framework introduces a noise component into the curve mapping model. By estimating the noise distribution of the low-light image, a denoising pre-processing step is applied to the iterative output:

where is the iterative result of the m-th illumination estimation. Empirically, the number of iterations can be set to , that is, .

To consider worst case scenarios, the loss of the enhancement stage is herein defined as the summation of losses of from all iterative outputs:

where the factor is used to balance the enhancement loss against the subsequent tracking loss within the overall objective function (see Appendix A.1 for details). Under the guidance of this loss, the enhancement sub-network can adaptively estimate the optimal illumination and noise curves. This mechanism effectively prevents the amplification of noise in dark areas and helps ensure the stability of UAV tracking.

3.2.3. Loss Functions

Center exposure intensity loss. For UAV tracking, the low-light enhancer is typically used to restore the template patch and search patch. Since the tracked target is always located in the center of the template patch and generally appears in the center of the search patch, the proposed method places greater emphasis on the enhancement effect on the central area of an image. To ensure that the image brightness is controlled within a reasonable range, a spatial weight map is utilized to draw the enhancer’s attention to the central area. Specifically, in implementation, the enhanced image is first divided into T non-overlapping patches each with a size of , and the average intensity value of each patch is approximated as the well-illumination value (which is empirically set to 0.6). Let denote a matrix composed of the average intensity values of the patches arranged in corresponding positions. Then, the center-focused lightness loss is constructed as:

where i and j indicate the spatial position of the corresponding patches, and e is Euler’s number.
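A rough sketch of a center-weighted exposure loss in this spirit is given below. The patch averaging and the target level of 0.6 follow the description above, whereas the Gaussian-shaped weight map, its `sigma`, the patch size, and the function name are hypothetical choices made only to illustrate how central patches can be emphasized; the exact weighting used by NightTrack is not reproduced here.

```python
import numpy as np

def center_exposure_loss(enhanced, patch=4, target=0.6, sigma=0.5):
    """enhanced: (H, W, 3) in [0, 1], with H and W divisible by `patch`."""
    gray = enhanced.mean(axis=2)
    H, W = gray.shape
    # Average intensity of each non-overlapping patch -> (H / patch, W / patch)
    Y = gray.reshape(H // patch, patch, W // patch, patch).mean(axis=(1, 3))
    # Hypothetical center-focused weights: Gaussian of the normalized distance to the center
    ii, jj = np.meshgrid(np.linspace(-1.0, 1.0, Y.shape[0]),
                         np.linspace(-1.0, 1.0, Y.shape[1]), indexing="ij")
    w = np.exp(-(ii ** 2 + jj ** 2) / (2.0 * sigma ** 2))
    w = w / w.sum()                                  # weights sum to one
    return float((w * np.abs(Y - target)).sum())

well_lit = np.full((16, 16, 3), 0.6)
center_ok = np.full((16, 16, 3), 0.1)
center_ok[4:12, 4:12] = 0.6                          # only the center is well exposed
border_ok = np.full((16, 16, 3), 0.6)
border_ok[4:12, 4:12] = 0.1                          # only the border is well exposed
losses = [center_exposure_loss(x) for x in (well_lit, center_ok, border_ok)]
```

Under this weighting, an image whose center is well exposed is penalized less than one whose border is well exposed, which mirrors the stated emphasis on the target region.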

Illumination estimation loss. To maintain the monotonic relations between adjacent pixels, this work introduces an illumination regularization loss to constrain the smoothness of the fitted curve parameter mapping :

where ∇ denotes the first-order differential operator.
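A minimal sketch of such a smoothness regularizer is shown below, assuming forward differences between neighbouring pixels and a mean-of-squares reduction. The exact normalization is not stated in this section, and `illumination_smoothness_loss` is a hypothetical name.

```python
import numpy as np

def illumination_smoothness_loss(A):
    """A: (H, W, C) curve-parameter map.
    Penalizes first-order differences along the horizontal and vertical axes."""
    dx = np.diff(A, axis=1)          # horizontal neighbours
    dy = np.diff(A, axis=0)          # vertical neighbours
    return float((dx ** 2).mean() + (dy ** 2).mean())

rng = np.random.default_rng(0)
flat = np.full((8, 8, 3), 0.5)                                      # perfectly smooth map
ramp = np.tile(np.linspace(0.0, 1.0, 8)[None, :, None], (8, 1, 3))  # gentle gradient
noisy = rng.uniform(-1.0, 1.0, size=(8, 8, 3))                      # rapidly varying map
loss_flat, loss_ramp, loss_noisy = (
    illumination_smoothness_loss(m) for m in (flat, ramp, noisy)
)
```

A constant map incurs no penalty, a gentle ramp incurs a small one, and a rapidly varying map is penalized most.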

Color balance loss. For the sake of correcting any potential color deviation in enhanced images, color intensity loss is added to establish a connection between the three channels, limiting their intensities to a relatively consistent level. The color intensity loss is calculated as follows:

where is the value of the t-th channel of .
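As an illustration, the sketch below assumes the pairwise squared-difference form used by Zero-DCE [26], in which the mean intensities of the three color channels are compared two at a time. The function name and the test images are hypothetical, and the form used by NightTrack may differ in detail.

```python
import numpy as np

def color_balance_loss(enhanced):
    """enhanced: (H, W, 3) image. Sums squared differences between channel means."""
    r, g, b = enhanced.mean(axis=(0, 1))     # per-channel average intensity
    return float((r - g) ** 2 + (r - b) ** 2 + (g - b) ** 2)

gray = np.full((4, 4, 3), 0.5)               # balanced channels
tinted = gray.copy()
tinted[..., 2] = 0.7                         # blue channel is brighter than the others
loss_gray = color_balance_loss(gray)
loss_tinted = color_balance_loss(tinted)
```

A color cast in a single channel raises two of the three pairwise terms, so the loss grows with the deviation of that channel from the others.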

Noise estimation loss. The image sequence obtained in inadequate lighting situations often suffers from severe quality degradation, such as color distortion. Effective feature extraction is therefore vital in performing high-level tasks like object tracking, since a large amount of noise may badly mislead the tracker. For this reason, a noise estimation loss is employed to suppress the noise component :

Enhancement iteration loss. To summarize, the enhancement loss for each iteration can be expressed as:

where , , , and are coefficients set to adjust the contributions of each loss function (see Appendix A.2 for details).

3.3. Tracking Stage

In the proposed NightTrack, the tracker is built upon HipTrack [6], while the enhancer is embedded into the template and search branches, forming an end-to-end joint optimization framework. Specifically, the enhancer is employed as a preprocessing module, embedded before the backbone networks of both the template and search branches. This design ensures that the backbone networks consistently receive a high-quality input. For a given raw template and search patch, the enhancer first generates M sets of iteratively enhanced images. These M sets are then sequentially fed into the tracker’s backbone to extract multiple feature sets. During the training phase, backpropagation is performed with respect to a weighted loss, where the weighting scheme is directly computed from the enhancement loss of each iteration, as follows:

with representing the loss associated with the t-th iteration. A larger weight is thus assigned to an enhanced image, indicating its higher utility in enhancing the tracker’s performance.

The tracking loss adopts the standard loss function used in the original HipTrack [6], which is calculated as follows:

where , , and serve as pre-defined hyperparameters that weight the contribution of each individual loss component.

Concerning the joint training of the enhancement and tracking sub-networks, the enhancement loss is incorporated into the tracking loss function. Such a design allows the enhancer to learn sample-specific illumination transformations throughout the training process, consequently leading to the generation of more discriminative feature representations, elevating the tracking robustness. By means of this joint optimization, a synergistic interplay is established between the enhancement and tracking tasks. The total loss of the joint optimization is calculated as follows:

Note that is the weight corresponding to the original RGB image and is invariably equal to 1. Through the joint optimization, the enhancement network can learn a sample-specific transformation for improving tracking performance.

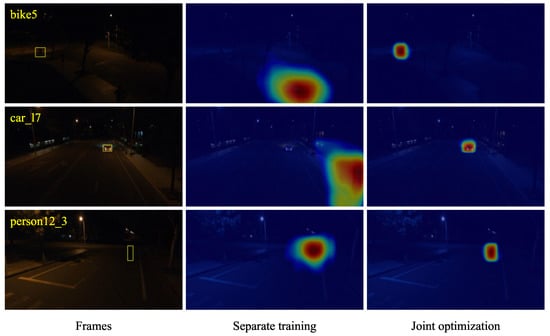

Specifically, the enhancement network adaptively modulates image brightness and contrast in accordance with the requirements of the tracker, while the tracker extracts more stable and highly discriminative target representations from the enhanced features. Thus, a significant improvement in tracking performance can be achieved within complex environments, such as those characterized by low illumination. As an example, a comparative illustration of the tracking confidence maps, generated under “separate training” and “joint optimization” paradigms, is presented in Figure 5. This indicates that the joint optimization paradigm facilitates a more pronounced salience and distinctiveness of the target. In essence, such findings validate that low-light image enhancement can furnish the UAV tracker with more discriminative features, enabling the maintenance of satisfactory performance under nocturnal conditions.

Figure 5.

Qualitative comparison of confidence maps under two training strategies. ‘Separate training’ indicates that the enhancer and the tracker are trained independently, whereas ‘Joint optimization’ refers to the proposed method. The colormap transitions from blue, indicating low confidence, to red, indicating high confidence. The joint optimization strategy yields target features with higher saliency and better distinguishability. For ease of visualization, the tracked object is delineated by a yellow bounding box in the first column, and the sequence name, sourced from the public UAVDark135 benchmark [16], is displayed in the top-left corner of the original frames.

4. Experimental Evaluation

A systematic evaluation of NightTrack is presented in this section. The performance of various trackers is benchmarked on three real-world night-time datasets: UAVDark135 [16], DarkTrack2021 [14], and NAT2021 [38]. The rich diversity of these datasets, which encompass various object categories, makes them a suitable testbed for evaluating the generalization capabilities of a generic tracker. The results help validate the superiority of the proposed NightTrack for night-time UAV object tracking.

4.1. Experimental Setup

All experiments are conducted on a server equipped with an NVIDIA GeForce RTX 4090 GPU, and the code is implemented in PyTorch 2.1.2. The base tracker is trained on four popular datasets in the literature: GOT-10k [4], LaSOT [5], TrackingNet [3], and COCO [39]. The model is trained for 100 epochs using the AdamW optimizer [40] with a batch size of 32. Each epoch involves 60,000 sample pairs. The template and search region sizes are set to and , respectively. Notably, following the training setup of DCPT [41], images labeled with “night” are selected from the BDD100K [42] and SHIFT [43] datasets, as well as ExDark [44], to serve as night-time data for training the jointly optimized framework.

4.2. Evaluation Metrics

The evaluation follows the One-Pass Evaluation (OPE) [45] protocol, as commonly adopted in the relevant literature, with a focus on three principal metrics: Success Rate, Precision, and Normalized Precision. The Success Rate is based on the IoU between the predicted bounding box and its ground truth. It quantifies the percentage of frames where the IoU exceeds a given threshold, visualized as a Success Plot (SP). The overall tracking performance is measured by the Area Under the Curve (AUC) of the Success Plot, where a higher AUC indicates superior performance. Precision is measured by the Center Location Error (CLE), defined as the Euclidean distance between the center of the predicted box and that of the ground truth. The Precision Plot (PP) illustrates the trackers’ robustness at different error thresholds, where the representative precision score is typically taken to be the percentage of frames with a CLE below 20 pixels. Furthermore, the Normalized Precision metric introduces a scale-invariant assessment by normalizing the precision with respect to the ground truth. The AUC of the Normalized Precision Plot is calculated over a range of , yielding a holistic performance measure that is invariant to object scale.
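To make these definitions concrete, the three metrics can be sketched in a few lines of Python. This is an illustrative sketch rather than the evaluation toolkit used in the experiments. It assumes boxes in `(x, y, w, h)` format with `(x, y)` the top-left corner, evenly spaced thresholds, and a normalized error obtained by dividing the center offsets by the ground-truth width and height. All function names and the numbers of thresholds are hypothetical, and the upper bound of the normalized threshold range is left as a required argument.

```python
import math


def iou(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(pred, gt, normalize=False):
    """Center Location Error; optionally normalized by the ground-truth size."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt
    dx = (px + pw / 2) - (gx + gw / 2)
    dy = (py + ph / 2) - (gy + gh / 2)
    if normalize:  # assumed normalization: per-axis division by ground-truth size
        dx, dy = dx / gw, dy / gh
    return math.hypot(dx, dy)


def success_auc(preds, gts, num_thresholds=21):
    """Mean success rate over IoU thresholds evenly spaced in [0, 1]."""
    ious = [iou(p, g) for p, g in zip(preds, gts)]
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    rates = [sum(v > t for v in ious) / len(ious) for t in thresholds]
    return sum(rates) / len(rates)


def precision_at(preds, gts, pixel_threshold=20.0):
    """Fraction of frames whose CLE is at or below the pixel threshold."""
    errors = [center_error(p, g) for p, g in zip(preds, gts)]
    return sum(e <= pixel_threshold for e in errors) / len(errors)


def normalized_precision_auc(preds, gts, max_threshold, num_thresholds=51):
    """Mean normalized precision over thresholds in [0, max_threshold]."""
    errors = [center_error(p, g, normalize=True) for p, g in zip(preds, gts)]
    thresholds = [max_threshold * i / (num_thresholds - 1)
                  for i in range(num_thresholds)]
    rates = [sum(e <= t for e in errors) / len(errors) for t in thresholds]
    return sum(rates) / len(rates)
```

Each function takes two equally long lists of per-frame boxes for one sequence; dataset-level scores are then typically obtained by averaging over sequences.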

4.3. Overall Performance

A comparative analysis is conducted between the proposed NightTrack and a suite of SOTA trackers on a range of challenging night-time datasets. The competing methods are: ProContEXT [7], HIPTrack [6], DropTrack [8], OSTrack [46], DCPT [41], DiMP50_SCT [14], SeqTrack [47], DiMP50 [48], UDAT-BAN [38], UDAT-CAR [38], and AVTrack [49]. To ensure a fair comparison, all models, including the proposed method and all baselines, were evaluated under identical conditions.

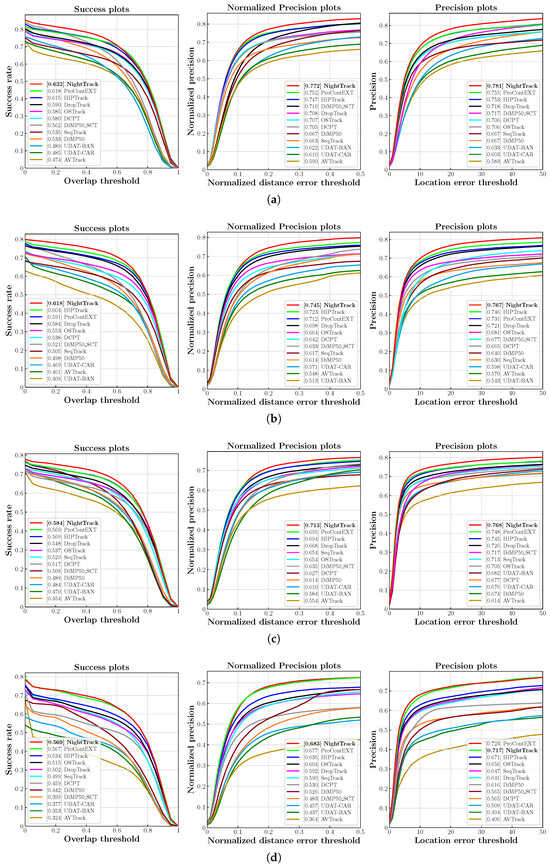

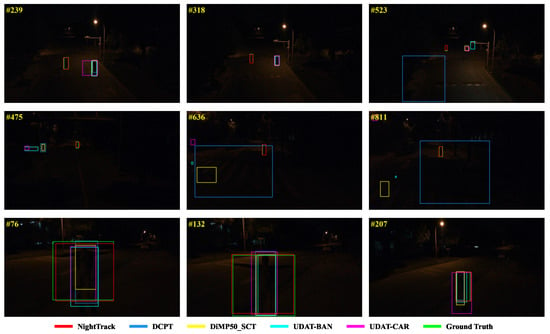

UAVDark135: UAVDark135 [16] is generally regarded as the first tracking benchmark dedicated to night-time UAV scenarios, comprising 135 videos captured by standard UAVs at night. Figure 6a illustrates the success rate, precision, and normalized precision plots on UAVDark135. The results indicate that NightTrack achieves the highest success rate (0.632) and precision (0.781) on this dataset, significantly outperforming existing mainstream trackers. Furthermore, Figure 7 provides a visual comparison of representative frames, demonstrating the proposed method’s capacity for stable and robust tracking even in challenging darkness.

Figure 6.

Performances of NightTrack and SOTA trackers compared on different night-time tracking benchmarks: (a) Results on UAVDark135 [16]. (b) Results on DarkTrack2021 [14]. (c) Results on NAT2021-test [38]. (d) Results on NAT2021-L-test [38].

Figure 7.

Qualitative comparison on the public benchmark UAVDark135. Tracking sequences from top to bottom are: bike6, girl6_1, and person13. The results of different trackers are shown in different colors.

DarkTrack2021: DarkTrack2021 [14] presents an even more formidable challenge for night-time tracking. Its 110 video sequences are predominantly characterized by complex illumination scenarios, including under-exposure and over-exposure, and depict urban environments captured from more elevated and distant perspectives. The quantitative curves in Figure 6b demonstrate that NightTrack performs exceptionally well against SOTA trackers. It achieves a normalized precision of 0.745 and a precision of 0.767, surpassing the previous best-performing tracker (namely, HIPTrack [6]) by over 2.1% on both metrics, with the success rate also raised by 1.4%.

NAT2021-test and NAT2021-L-test: NAT2021 [38] provides 1400 unlabeled night-time tracking videos, which typically serve as data for unsupervised training. Its test set comprises 180 sequences encompassing 12 challenging attributes, such as full occlusion and extremely low illumination, thereby substantially increasing the difficulty of UAV tracking. Nevertheless, NightTrack again exhibits the top performance. As shown in Figure 6c, it achieves a success rate of 0.584, a normalized precision of 0.713, and a precision of 0.768 on this dataset. Furthermore, to assess the robustness for long-term tracking, NAT2021-L-test (23 sequences with an average length >1400 frames) is also employed. As depicted in Figure 6d, NightTrack ranks first in both success rate and normalized precision, while trailing in precision by a mere 0.6%.

4.4. Illumination-Oriented Evaluation

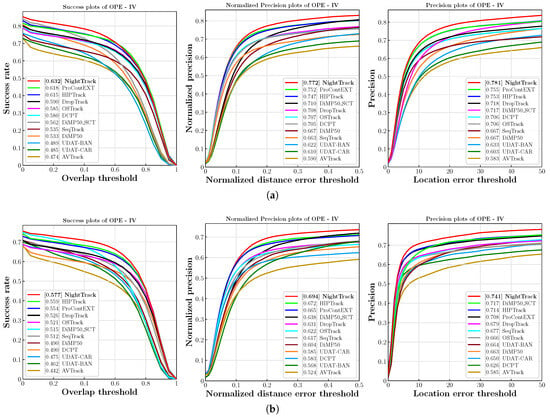

A primary challenge in night-time object tracking is the model’s robustness to drastic and unpredictable illumination changes, such as sudden flashes from vehicle headlights or transitions between brightly lit areas and deep shadows. To specifically evaluate the model’s capability in handling these scenarios, a dedicated analysis was conducted using the illumination variation attribute annotations provided in the UAVDark135 [16] and NAT2021-test [38] benchmarks. These annotations allow for the isolation and testing of performance exclusively on video sequences with significant lighting fluctuations.

The quantitative results on the UAVDark135 and NAT2021-test datasets are presented in Figure 8a and Figure 8b, respectively. As depicted, the proposed NightTrack consistently demonstrates superior performance across both benchmarks. On the UAVDark135 dataset, NightTrack achieves a precision of 0.781 and a success rate of 0.632, representing a significant improvement of 2.8% in precision and 1.7% in success rate over the baseline HIPTrack. Similarly, on the NAT2021-test dataset, NightTrack also leads the performance chart, outperforming HIPTrack by 2.7% in precision and 1.8% in success rate and surpassing other strong competitors such as ProContEXT [7] by a considerable margin on both metrics.

Figure 8.

Success Rate, Normalized Precision, and Precision plots on the Illumination Variation Attribute from the (a) UAVDark135 [16] and (b) NAT2021-test [38] benchmarks.

This enhanced robustness can be directly attributed to the end-to-end joint optimization framework. Unlike traditional two-stage approaches where an image enhancer is applied independently, the enhancer in this work is trained in tandem with the tracker. This co-training mechanism forces the enhancer to learn an illumination-invariant feature space that is specifically optimized for the tracking task. Consequently, when confronted with sudden changes in lighting, the model can adaptively normalize the input, preserving critical discriminative features for the tracker while suppressing distracting artifacts or noise. This ensures a more stable and reliable feature representation, leading to markedly improved tracking performance under challenging illumination conditions.

4.5. Ablation Study

A series of ablation studies is conducted to ascertain the contribution of individual components within the NightTrack framework. This study evaluates the performance of NightTrack, utilizing HIPTrack [6] as the baseline. All variants are trained under identical settings, and the tracking performance on the UAVDark135 [16], DarkTrack2021 [14], and NAT2021 [38] datasets is documented in Table 1. For clarity, ‘PAM’ denotes the Pyramid Attention Module; ‘Noise’ represents the estimated noise curve; ‘Enhancer’ refers to a version with a separately trained enhancer; and ‘Base’ is the original tracker.

Table 1.

Performance comparison of different methods on night-time tracking benchmarks.

Effectiveness of PAM: The efficacy of including PAMs within the system is shown in the second row of Table 1. The integration of PAMs yields consistent improvements in both success rate and precision across all evaluated datasets. This performance uplift can be attributed to the pyramid pooling architecture within each PAM, which is adept at aggregating multi-scale global contextual information.

Effectiveness of Noise Estimation: The contribution of the noise estimation component can be seen from the third row of Table 1, with a clear indication that the incorporation of noise estimation promotes night-time tracking performance. In essence, this component is of paramount importance for enhancing UAV tracking performance, as it counteracts the noise amplification that invariably accompanies the brightness enhancement process.

Effectiveness of the Joint Training Strategy: To corroborate the effectiveness of the joint optimization framework, a further experiment is conducted, wherein the enhancer and tracker are trained in isolation. The results show that while the independently trained components (row 4) may generally lead to marginal improvements, their success rate on the DarkTrack2021 dataset [14] is even lower than what is achievable by the baseline. This indicates that independent optimization can fail due to task mismatch. In contrast, the jointly optimized framework (row 5) results in consistent and superior performance, serving as strong confirmation of the advantages conferred by the end-to-end joint training paradigm.

5. Discussion

In the experimental section, comparative experiments were conducted against SOTA trackers on three widely used night-time UAV benchmarks. The results demonstrate that the proposed NightTrack achieves the best performance in night-time tracking. This success is attributed to two primary factors. Firstly, the joint optimization of the enhancer and tracker enables information complementarity between the two tasks, collectively enhancing the model’s generalization capability in night-time scenarios. Secondly, the Pyramid Attention Module and the curve projection model with illumination-noise components further accentuate the discriminative features of the target. Notably, on the NAT2021-L-test dataset, NightTrack achieved improvements of 3.5% and 4.6% over the baseline, respectively. This serves as strong evidence for the effectiveness of the proposed joint optimization framework.

The significance of these results is underscored by the unique and irreplaceable role of night-time vision in numerous practical applications where data collection during daylight is either impossible or insufficient. For example, many animal species are nocturnal; their natural behaviors and social interactions can only be observed under the cover of darkness. In law enforcement and security, illicit activities, including poaching and smuggling, often occur at night to avoid detection. Furthermore, infrastructure inspection of power lines or pipelines is frequently conducted at night to minimize disruption and capture thermal signatures of defects invisible during the day. These scenarios highlight that night-time data is not merely a variant of daytime data but a unique and critical source of information, making the development of advanced trackers like NightTrack essential.

Against this backdrop of critical need, the practical significance of NightTrack’s performance improvements becomes even more apparent. In many high-stakes applications, even a marginal increase in tracking precision can yield substantial consequences. In wildlife ecology, for example, the ability to maintain continuous, accurate observation of elusive nocturnal species is paramount. A minor improvement in tracking precision can be the difference between collecting robust, actionable data for conservation policy and gathering fragmented, inconclusive observations. This directly translates to more effective habitat management and a greater chance of survival for endangered species. Similarly, for autonomous systems, the demand for 24/7 all-weather operation is relentless. Consider UAVs deployed for nocturnal search-and-rescue missions, where the system’s ability to accurately perceive and track objects is fundamental to preventing catastrophic failures and ensuring public safety. Therefore, the performance gains of NightTrack are not merely incremental metrics but represent a tangible step towards more reliable and robust vision systems for critical missions.

Given the scarcity of low-light UAV tracking benchmarks and the high cost of annotation, future work will focus on exploring unsupervised/self-supervised paradigms. The objective is to learn illumination-robust features for objects directly from unannotated raw night-time videos to mitigate the dependency on labeled data.

6. Conclusions

This paper has presented an end-to-end network framework, called NightTrack, which is engineered for the joint optimization of low-light image enhancement and visual object tracking. The architecture of NightTrack constitutes an organic integration of an enhancement sub-network and a tracking sub-network. The former is designed to adaptively accentuate salient features within an image, while concurrently mitigating the adverse effects of illumination fluctuations and noise on the downstream task. This is realized through the incorporation of the novel Pyramid Attention Modules and a curve projection model that disentangles illumination and noise components. The latter sub-network, in turn, extracts features from the enhanced images and is co-trained with the former via a joint loss function that combines both tracking and enhancement objectives. Owing to these innovations, the proposed method is experimentally shown to offer superior performance to that attainable by the state-of-the-art mechanisms, in dealing with real-world night-time UAV tracking scenarios.

Despite its success, the current research has several limitations. Firstly, the joint optimization framework introduces additional computational complexity compared to standalone trackers, which may pose challenges for deployment on resource-constrained UAV platforms. Secondly, while effective on common benchmarks, the model’s robustness against extreme and atypical night-time conditions, such as heavy fog, dense smoke, or rapid and drastic lighting changes, requires further investigation. Lastly, the model’s performance is still dependent on supervised learning with annotated data. Its capability to learn under unsupervised or weakly supervised settings warrants further investigation.

This study underscores the potent role of synergistically integrating upstream and downstream tasks. By co-optimizing these traditionally disparate stages within a unified framework, this work demonstrates a paradigm that effectively overcomes the inherent performance bottlenecks of standalone models, particularly under adverse environmental conditions such as low illumination. Consequently, the joint optimization strategy presents a promising direction for solving tasks that are severely impacted by environmental degradation, such as low light, rain, or haze. Furthermore, this framework shows significant potential for generalization to other vision tasks. For example, by replacing the tracking loss with a detection-specific loss, the enhancer could be co-optimized to boost object detection performance, representing a key avenue for future work.

Future research will prioritize several key directions to improve the performance of night-time object tracking. A primary focus is the development of lightweight and efficient architectures, leveraging techniques such as neural architecture search (NAS) and model distillation to facilitate real-time deployment on embedded systems. Another promising avenue is exploring multi-modal fusion, such as combining RGB with thermal or infrared data, to significantly enhance tracking robustness in complete darkness or adverse weather. Finally, advancing the unsupervised and self-supervised learning paradigms, as mentioned in the discussion, is crucial for breaking the data annotation barrier. Future studies could improve the model by designing more sophisticated pretext tasks that are inherently tailored to the characteristics of night-time scenes, thereby learning more generalized and illumination-invariant features. By pursuing these avenues, the next generation of night-time tracking models can achieve even greater levels of performance, efficiency, and adaptability.

Author Contributions

Conceptualization, X.H.; methodology, X.H.; validation, X.H.; investigation, X.H. and Y.B.; resources, Y.L. and J.M.; writing—original draft preparation, X.H.; writing—review and editing, C.S. and Q.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (62271400).

Data Availability Statement

The original contributions presented in the study are included in the article.

Acknowledgments

The authors are grateful for the anonymous reviewers’ critical comments and constructive suggestions.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| PAMs | Pyramid Attention Modules |
| SOTA | State-of-the-art |
| OPE | One-Pass Evaluation |
| SP | Success Plot |
| AUC | Area Under the Curve |
| CLE | Center Location Error |
| PP | Precision Plot |
| NAS | Neural Architecture Search |

Appendix A. Hyperparameter Selection

The total loss for the enhancement stage is formulated as the sum of the losses from all iterative outputs, defined as:

where is the enhancement loss obtained at the m-th iteration. This loss is computed as a weighted combination of four sub-losses:

The selection of these weighting coefficients is critical for stable training and was determined through a hierarchical, empirical process.

Appendix A.1. Primary Balancing Coefficient (γ)

The coefficient γ is used to balance the overall influence of the enhancement module against the primary tracking task, with the objective of scaling the enhancement loss to a magnitude comparable to the tracking loss. Empirical analysis revealed that the model’s performance is robust when γ is in the range of 0.05 to 0.2. Values outside this range caused instability: a large γ (e.g., 1.0) led to gradient explosion, while a small value prevented the enhancer from converging. Consequently, γ was set to 0.1 for all experiments.

Appendix A.2. Internal Balancing Coefficients (λcen, λill, λcol, λnoi)

These four coefficients balance the relative importance of the enhancement sub-losses: center brightness (λcen), illumination (λill), color (λcol), and noise (λnoi). To ensure the enhancer addresses all aspects of degradation equally without neglecting any single component, experiments were conducted to tune these weights. The results indicated that assigning them equal importance yielded the best and most stable results. Therefore, all internal weights were set to .
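The two-level weighting described in this appendix can be illustrated with a short sketch. It assumes that each iteration's enhancement loss is a weighted sum of the four sub-losses, that the per-iteration losses are summed, and that γ multiplies the resulting sum. The placement of γ, the function names, and the dictionary keys are assumptions made for illustration. The shared value of the internal weights is not assumed and is supplied by the caller.

```python
SUB_LOSS_KEYS = ("cen", "ill", "col", "noi")  # center, illumination, color, noise


def iteration_enhancement_loss(sub_losses, weights):
    """Weighted combination of the four sub-losses for a single iteration.

    sub_losses, weights: dicts keyed by SUB_LOSS_KEYS (hypothetical layout).
    """
    return sum(weights[k] * sub_losses[k] for k in SUB_LOSS_KEYS)


def total_enhancement_loss(per_iteration_sub_losses, weights, gamma=0.1):
    """Sum the per-iteration losses, then scale by the primary coefficient.

    gamma defaults to 0.1, the value reported for all experiments; applying it
    as a multiplier on the summed loss is an assumption of this sketch.
    """
    summed = sum(iteration_enhancement_loss(s, weights)
                 for s in per_iteration_sub_losses)
    return gamma * summed
```

Keeping γ separate from the internal weights mirrors the hierarchical tuning described above: the internal weights fix the balance among sub-losses, and γ alone sets the magnitude of the enhancement term relative to the tracking term.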

Appendix B. Table of Notations

Table A1.

Summary of symbols used in this paper for easy reference.

| Symbol | Description | Location |
|---|---|---|
| C | The total number of channels in the global feature . | Section 3.2.1 |
| | The two fully connected layers used to compute attention weights. | Section 3.2.1 (Equation (1)) |
| | The height and width of the input image . | Section 3.2 |
| K | The number of encoders (and decoders) in the U-Net backbone. | Section 3.2 |
| M | The total number of iterations in the curve mapping process. | Section 3.2.2 |
| S | The number of sub-features the global feature is partitioned into. | Section 3.2.1 |
| T | The number of non-overlapping patches the image is divided into. | Section 3.2.3 (Equation (13)) |
| e | Euler’s number, used in the calculation of the weight map . | Section 3.2.3 (Equation (13)) |
| | The height and width of the feature map after K downsampling operations. | Section 3.2 |
| | The spatial coordinates (row and column) of a patch. | Section 3.2.3 (Equation (13)) |
| | Indices for color channels, used in the color balance loss. | Section 3.2.3 (Equation (15)) |
| | The empirically set target average illumination value for the patches (0.6). | Section 3.2.3 (Equation (13)) |
| | A matrix of ones with the same dimensions as the input image. | Section 3.2.2 (Equation (8)) |
| | The learned parameter matrix for the m-th iteration, controlling the mapping intensity. | Section 3.2.2 (Equation (9)) |
| | The final, recalibrated attention weight after Softmax normalization. | Section 3.2.1 |
| | A matrix composed of the average intensity values of each patch. | Section 3.2.3 (Equation (13)) |
| | The reciprocal of the illumination map, defined as . | Section 3.2.2 (Equation (8)) |
| | The input low-light image, with dimensions . | Section 3.2 |
| | The estimated illumination map, with dimensions . | Section 3.2 |
| | The estimated noise map, with dimensions . | Section 3.2 |
| | The final weighted output feature for the i-th sub-feature. | Section 3.2.1 (Equation (4)) |
| | The reflection component, representing the intrinsic properties of objects. | Section 3.2.2 |
| | A spatial weight map that emphasizes the central area of an image. | Section 3.2.3 (Equation (13)) |
| | The enhanced image at the m-th iteration. | Section 3.2 |
| | The brightness response of the image at pixel x after the m-th iteration. | Section 3.2.2 |
| | The p-th color channel (e.g., R, G, B) of the enhanced image . | Section 3.2.3 (Equation (15)) |
| | The global context feature aggregated by the Pyramid Attention Module (PAM). | Section 3.2.1 |
| | The i-th sub-feature, with dimensions . | Section 3.2.1 |
| | The initial attention weight for before normalization. | Section 3.2.1 |
| | The channel descriptor for after global average pooling. | Section 3.2.1 |
| | The denoised version of the output from the -th iteration. | Section 3.2.2 (Equation (10)) |
| | The ReLU activation function. | Section 3.2.1 (Equation (1)) |
| γ | The primary balancing coefficient for the enhancement loss. | Section 3.2.2 (Equation (12)) |
| λcen | Internal balancing coefficient for the center exposure intensity loss. | Section 3.2.3 (Equation (17)) |
| λcol | Internal balancing coefficient for the color balance loss. | Section 3.2.3 (Equation (17)) |
| | Pre-defined hyperparameters to balance the GIoU loss. | Section 3.3 (Equation (19)) |
| λill | Internal balancing coefficient for the illumination estimation loss. | Section 3.2.3 (Equation (17)) |
| | Pre-defined hyperparameters to balance the L1 loss. | Section 3.3 (Equation (19)) |
| | Pre-defined hyperparameters to balance the location loss. | Section 3.3 (Equation (19)) |
| λnoi | Internal balancing coefficient for the noise estimation loss. | Section 3.2.3 (Equation (17)) |
| ∇ | The first-order differential operator (gradient). | Section 3.2.3 (Equation (14)) |
| ⊙ | The element-wise (Hadamard) multiplication operator. | Section 3.2.1 |
| | The weight for the original (non-enhanced) image, which is fixed to 1. | Section 3.3 (Equation (18)) |
| | The weight assigned to the tracking loss of the m-th iteration, computed from its enhancement loss. | Section 3.3 (Equation (18)) |
| ⊘ | The pixel-wise (element-wise) division operator. | Section 3.2.2 (Equation (7)) |
| | The Sigmoid activation function. | Section 3.2.1 (Equation (1)) |
| | The total loss for the enhancement stage, defined as the sum of all iterative losses. | Section 3.2.2 |
| | The total tracking loss from the HIPTrack baseline, a weighted sum of its components. | Section 3.3 (Equation (19)) |
| | The Generalized Intersection over Union (GIoU) loss component. | Section 3.3 (Equation (19)) |
| | The L1 norm loss component. | Section 3.3 (Equation (19)) |
| | The location classification loss component. | Section 3.3 (Equation (19)) |
| | The total loss for the end-to-end joint optimization of the enhancer and tracker. | Section 3.3 (Equation (20)) |
| | The enhancement loss corresponding to the output of the m-th iteration. | Section 3.2.2 |
| | The center exposure intensity loss, focusing on the brightness of the central area. | Section 3.2.3 (Equation (13)) |
| | The color balance loss, minimizing intensity differences between color channels. | Section 3.2.3 (Equation (15)) |
| | The illumination estimation loss, enforcing the smoothness of the illumination map . | Section 3.2.3 (Equation (14)) |
| | The noise estimation loss, used to suppress the estimated noise component . | Section 3.2.3 (Equation (16)) |

All symbols are defined in the context of the proposed NightTrack.

References

- Aziz, N.N.A.; Mustafah, Y.M.; Azman, A.W.; Shafie, A.A.; Yusoff, M.I.; Zainuddin, N.A.; Rashidan, M.A. Features-based moving objects tracking for smart video surveillances: A review. Int. J. Artif. Intell. Tools 2018, 27, 1830001. [Google Scholar] [CrossRef]

- Chuang, H.M.; He, D.; Namiki, A. Autonomous target tracking of UAV using high-speed visual feedback. Appl. Sci. 2019, 9, 4552. [Google Scholar] [CrossRef]

- Muller, M.; Bibi, A.; Giancola, S.; Alsubaihi, S.; Ghanem, B. Trackingnet: A large-scale dataset and benchmark for object tracking in the wild. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 300–317. [Google Scholar]

- Huang, L.; Zhao, X.; Huang, K. Got-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577. [Google Scholar] [CrossRef] [PubMed]

- Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. Lasot: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 5374–5383. [Google Scholar]

- Cai, W.; Liu, Q.; Wang, Y. Hiptrack: Visual tracking with historical prompts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19258–19267. [Google Scholar]

- Lan, J.P.; Cheng, Z.Q.; He, J.Y.; Li, C.; Luo, B.; Bao, X.; Xiang, W.; Geng, Y.; Xie, X. Procontext: Exploring progressive context transformer for tracking. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5. [Google Scholar]

- Wu, Q.; Yang, T.; Liu, Z.; Wu, B.; Shan, Y.; Chan, A.B. Dropmae: Masked autoencoders with spatial-attention dropout for tracking tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14561–14571. [Google Scholar]

- Lin, B.; Zheng, J.; Xue, C.; Fu, L.; Li, Y.; Shen, Q. Motion-aware correlation filter-based object tracking in satellite videos. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13. [Google Scholar] [CrossRef]

- Chen, Q.; Wang, X.; Liu, F.; Zuo, Y.; Liu, C. Spatial-Temporal Contextual Aggregation Siamese Network for UAV Tracking. Drones 2024, 8, 433. [Google Scholar] [CrossRef]

- Ahmed, M.; Rasheed, B.; Salloum, H.; Hegazy, M.; Bahrami, M.R.; Chuchkalov, M. Seal pipeline: Enhancing dynamic object detection and tracking for autonomous unmanned surface vehicles in maritime environments. Drones 2024, 8, 561. [Google Scholar] [CrossRef]

- Li, B.; Fu, C.; Ding, F.; Ye, J.; Lin, F. ADTrack: Target-aware dual filter learning for real-time anti-dark UAV tracking. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: New York, NY, USA, 2021; pp. 496–502. [Google Scholar]

- Ye, J.; Fu, C.; Zheng, G.; Cao, Z.; Li, B. Darklighter: Light up the darkness for uav tracking. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: New York, NY, USA, 2021; pp. 3079–3085. [Google Scholar]

- Ye, J.; Fu, C.; Cao, Z.; An, S.; Zheng, G.; Li, B. Tracker meets night: A transformer enhancer for UAV tracking. IEEE Robot. Autom. Lett. 2022, 7, 3866–3873. [Google Scholar] [CrossRef]

- Huang, X.; Wu, Z.; Li, Y.; Shang, C.; Shen, Q. Pyramid Attention Enhancement Network for Nighttime UAV Tracking. In Proceedings of the ICASSP 2025—2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5. [Google Scholar]

- Li, B.; Fu, C.; Ding, F.; Ye, J.; Lin, F. All-day object tracking for unmanned aerial vehicle. IEEE Trans. Mob. Comput. 2022, 22, 4515–4529. [Google Scholar] [CrossRef]

- Ibrahim, H.; Kong, N.S.P. Brightness preserving dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 2007, 53, 1752–1758. [Google Scholar] [CrossRef]

- Abdullah-Al-Wadud, M.; Kabir, M.H.; Dewan, M.A.A.; Chae, O. A dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 2007, 53, 593–600. [Google Scholar] [CrossRef]

- Land, E.H. The retinex theory of color vision. Sci. Am. 1977, 237, 108–129. [Google Scholar] [CrossRef] [PubMed]

- Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2016, 26, 982–993. [Google Scholar] [CrossRef] [PubMed]

- Park, S.; Yu, S.; Moon, B.; Ko, S.; Paik, J. Low-light image enhancement using variational optimization-based retinex model. IEEE Trans. Consum. Electron. 2017, 63, 178–184. [Google Scholar] [CrossRef]

- Li, M.; Liu, J.; Yang, W.; Sun, X.; Guo, Z. Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans. Image Process. 2018, 27, 2828–2841. [Google Scholar] [CrossRef] [PubMed]

- Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit. 2017, 61, 650–662. [Google Scholar] [CrossRef]

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhang, J.; Guo, X. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1632–1640. [Google Scholar]

- Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1780–1789. [Google Scholar]

- Liu, W.; Ren, G.; Yu, R.; Guo, S.; Zhu, J.; Zhang, L. Image-adaptive YOLO for object detection in adverse weather conditions. Proc. AAAI Conf. Artif. Intell. 2022, 36, 1792–1800. [Google Scholar] [CrossRef]

- Liu, W.; Li, W.; Zhu, J.; Cui, M.; Xie, X.; Zhang, L. Improving nighttime driving-scene segmentation via dual image-adaptive learnable filters. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 5855–5867.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Guo, H.; Lu, T.; Wu, Y. Dynamic low-light image enhancement for object detection via end-to-end training. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: New York, NY, USA, 2021; pp. 5611–5618.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2015; Volume 28.

- Wu, X.; Wu, Z.; Guo, H.; Ju, L.; Wang, S. DANNet: A one-stage domain adaptation network for unsupervised nighttime semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15769–15778.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Bridle, J. Training stochastic model recognition algorithms as networks can lead to maximum mutual information estimation of parameters. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 1989; Volume 2.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- LeCun, Y.; Bottou, L.; Orr, G.B.; Müller, K.R. Efficient backprop. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2002; pp. 9–50.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Ye, J.; Fu, C.; Zheng, G.; Paudel, D.P.; Chen, G. Unsupervised domain adaptation for nighttime aerial tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8896–8905.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Computer Vision–ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Zhu, J.; Tang, H.; Cheng, Z.Q.; He, J.Y.; Luo, B.; Qiu, S.; Li, S.; Lu, H. DCPT: Darkness clue-prompted tracking in nighttime UAVs. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; IEEE: New York, NY, USA, 2024; pp. 7381–7388.

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2636–2645.

- Sun, T.; Segu, M.; Postels, J.; Wang, Y.; Van Gool, L.; Schiele, B.; Tombari, F.; Yu, F. SHIFT: A synthetic driving dataset for continuous multi-task domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Denver, CO, USA, 2–6 June 2022; pp. 21371–21382.

- Loh, Y.P.; Chan, C.S. Getting to know low-light images with the exclusively dark dataset. Comput. Vis. Image Underst. 2019, 178, 30–42.

- Wu, Y.; Lim, J.; Yang, M.H. Online object tracking: A benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

- Ye, B.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Joint feature learning and relation modeling for tracking: A one-stream framework. In Computer Vision–ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 341–357.

- Chen, X.; Peng, H.; Wang, D.; Lu, H.; Hu, H. SeqTrack: Sequence to sequence learning for visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14572–14581.

- Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6182–6191.

- Li, Y.; Liu, M.; Wu, Y.; Wang, X.; Yang, X.; Li, S. Learning Adaptive and View-Invariant Vision Transformer for Real-Time UAV Tracking. In Proceedings of the Forty-first International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).