Highlights

What are the main findings?

- A deep neural network (DNN) is employed as an efficient approximate solver to produce real-time binary offloading decisions in UAV-assisted edge computing, effectively addressing the 0–1 mixed-integer programming problem.

- An online learning mechanism enables automatic adaptation to variations in key parameters (e.g., computing resources and task sizes), eliminating the need for time-consuming policy recomputation.

What are the implications of the main findings?

- The proposed QO2 method resolves the fundamental trade-off between computational complexity and decision timeliness in traditional optimization, supporting decision-making with high timeliness.

- It overcomes the limitations of conventional static optimization strategies, which fail under environmental changes, thereby reducing operational overhead and providing a theoretical foundation for deploy-and-adapt edge-computing systems.

Abstract

Unmanned Aerial Vehicle-assisted Edge Computing (UAV-EC) leverages UAVs as aerial edge servers to provide computation resources to user equipment (UE) in dynamically changing environments. A critical challenge in UAV-EC lies in making real-time adaptive offloading decisions that determine whether and how UE should offload tasks to UAVs. This problem is typically formulated as a Mixed-Integer Nonlinear Programming (MINLP) problem. However, most existing offloading methods sacrifice strategy timeliness, leading to significant performance degradation in UAV-EC systems, especially under varying wireless channel quality and unpredictable UAV mobility. In this paper, we propose a novel framework that enhances offloading strategy timeliness in such dynamic settings. Specifically, we jointly optimize offloading decisions, the transmit power of UE, and computation resource allocation to maximize a system utility that encompasses both latency reduction and energy conservation. To tackle this combinatorial optimization problem and obtain a real-time strategy, we design a Quality of Experience (QoE)-aware Online Offloading (QO2) algorithm that can optimally adapt offloading decisions and resource allocations to time-varying wireless channel conditions. Instead of directly solving the MINLP problem via traditional methods, the QO2 algorithm utilizes a deep neural network to learn binary offloading decisions from experience, greatly improving strategy timeliness. This learning-based operation inherently enhances the robustness of the QO2 algorithm. To further strengthen robustness, we design a Priority-Based Proportional Sampling (PPS) strategy that leverages historical optimization patterns. Extensive simulation results demonstrate that QO2 outperforms state-of-the-art baselines in solution quality, consistently achieving near-optimal solutions. More importantly, it exhibits strong adaptability to dynamic network conditions. These characteristics make QO2 a promising solution for dynamic UAV-EC systems.

1. Introduction

Unmanned aerial vehicles (UAVs) are utilized for a wide range of applications, such as military reconnaissance and disaster relief, due to their high maneuverability and adaptive capabilities [1]. The role of UAVs is evolving beyond traditional sensing: they are increasingly leveraged as mobile computing units for edge computing [2]. This emerging role is particularly valuable given the limitations of traditional cloud computing, where reliance on remote servers often leads to high latency and limited responsiveness [3]. Compared with fixed terrestrial edge-computing infrastructures, UAV-assisted edge computing (UAV-EC) further offers unique advantages: rapid deployment in dynamic or infrastructure-scarce areas, extended coverage for remote or disaster-affected regions, and adaptive positioning to enhance communication and computation efficiency. Thus, UAV-EC has established itself as an attractive model, in which UAVs function as edge servers in the air to offer immediate and adaptable computing services for user equipment (UE).

The core challenge in UAV-EC systems lies in determining whether and how UE should offload their computation tasks to UAV-based edge servers. This offloading optimization problem inherently requires joint consideration of multiple time-varying factors, including wireless channel conditions, UAV trajectory and mobility patterns, heterogeneous task characteristics, and resource constraints. Designing efficient and adaptive offloading strategies that can promptly accommodate these dynamic variations remains a significant research challenge.

This challenge has led to extensive research in recent years, with a particular focus on the offloading problem under different deployment scenarios such as a single UAV [4], multiple UAVs [5], and cloud–edge–terminal collaborative architectures [6], with diverse objectives, including improving user experience [7] and increasing the revenue of Internet Service Providers (ISPs) [8]. These studies have examined a variety of task models, including continuous arrival tasks [9], indivisible tasks [10], Directed Acyclic Graph (DAG) tasks [11], and independent tasks [12], under various multiple-access schemes such as Time-Division Multiple Access (TDMA) [13], Frequency-Division Multiple Access (FDMA) [14], and Non-Orthogonal Multiple Access (NOMA) [15]. The significant benefits of edge computing further stimulate research into hybrid architectures, leading to innovations in device-to-device (D2D)-assisted MEC networks [16], Reconfigurable Intelligent Surface (RIS)-assisted MEC networks [17], and other emerging technological convergences.

While these works provide valuable insights, one aspect, namely offloading strategy timeliness, remains inadequately addressed despite its critical importance for practical deployment in dynamic environments. Specifically, when dealing with indivisible tasks, the offloading problem is commonly modeled as a Mixed-Integer Programming (MIP) problem due to the binary (0–1) nature of the offloading decision. Conventional approaches to MIP problems rely heavily on dynamic programming and branch-and-bound methodologies. However, these numerical optimization-based methods exhibit two fundamental limitations that compromise their practical applicability: (i) the solving speed limits the timeliness of the resulting strategy; and (ii) the lack of correlation between strategies under different settings leads to excessive and redundant calculations. More specifically,

- Computational Timeliness Deficiency: The solving time of these methods significantly impairs strategy timeliness and can even prevent the system from harvesting the benefits of MEC. Specifically, time-varying wireless channel conditions largely impact offloading performance, which requires optimal adaptation of offloading decisions and resource allocations. This demands solving the combinatorial optimization problem within the channel coherence time. However, the computational complexity is prohibitively high, particularly in large-scale networks. Methods like heuristic local search and convex relaxation often require numerous iterations to reach a satisfactory local solution, making them unsuitable for real-time offloading decisions in fast-changing channels.

- Environment Adaptability Limitations: Existing algorithms are predominantly designed for fixed network parameter sets, while failing to exploit correlations between strategies under different environmental conditions. This limitation prevents effective utilization of historical computational data to rapidly adjust offloading and resource-allocation decisions. Consequently, when network environments change, strategy recalculation is necessary. For UAV-EC systems with rapidly changing environments, this constant recalculation would lead to substantial computational overhead.

To this end, this paper introduces a novel approach to enhance the timeliness and robustness of offloading strategies in UAV-EC systems. We model a joint optimization problem that integrates task offloading decisions, transmission power control, and resource allocation to achieve holistic utility maximization. The primary challenge lies in achieving efficient real-time optimization under rapidly varying wireless channel conditions and dynamic resource availability. To tackle this challenge, we introduce the QoE-aware Online Offloading (QO2) algorithm. By incorporating deep neural network (DNN) inference, the QO2 algorithm significantly improves the timeliness of offloading decisions while maintaining high robustness. To further enhance its robustness, we also design a Priority-Based Proportional Sampling (PPS) strategy. Empirical results confirm the superior performance of QO2 over state-of-the-art baselines, as it consistently produces near-optimal solutions. Moreover, QO2 algorithm exhibits strong adaptability to dynamic network conditions such as available computation resources, task characteristics, and user priority distributions. The algorithm demonstrates rapid re-convergence capability to near-optimal solutions, with re-convergence time scaling proportionally to the magnitude of environmental changes. The main contributions are threefold:

- Joint Optimization Framework: We introduce a joint optimization framework for UAV-EC systems that simultaneously co-designs computation offloading decisions and resource allocation. This integrated approach is tailored for dynamic settings, explicitly accounting for time-varying wireless channels to deliver real-time solutions.

- Novel QO2 Algorithm with PPS Strategy: We design the QO2 algorithm, which leverages DNN-based learning to generate binary offloading decisions in real-time, eliminating the computational burden of traditional MINLP solvers. In addition, we design the PPS strategy, which leverages historical optimization patterns to enhance algorithmic robustness across varying environmental conditions.

- Comprehensive Performance Validation: Extensive simulations demonstrate that QO2 achieves superior solution quality compared to state-of-the-art baselines, consistently finding solutions closer to the global optimum. Our approach exhibits strong adaptability to dynamic network conditions and demonstrates rapid re-convergence capability under varying operational scenarios, with convergence time appropriately scaling with the magnitude of environmental changes.

2. Related Work

In this section, we review the literature on traditional methods for the offloading problem and discuss recent studies on intelligent approaches. Finally, we highlight the unique aspects of our research.

2.1. Computation Offloading

Computation offloading has emerged as a fundamental paradigm to enhance system performance in mobile edge computing (MEC) systems. The existing studies span various scenarios, including single edge servers [18], multiple edge servers [19], and cloud–edge–terminal collaborative architectures [20]. These studies pursue varying optimization objectives tailored to specific application contexts. Some studies prioritize reducing total system latency [21,22,23] or energy consumption [24,25,26,27], while others focus on maximizing user experience quality [28,29] or enhancing system security and computing capabilities [30,31]. Moreover, the significant advantages of edge-computing technology also motivate its integration with emerging technologies, leading to innovative paradigms such as UAV-assisted MEC networks [15,32], RIS-assisted MEC networks [30,33,34,35,36], and blockchain-assisted MEC networks [22,37,38]. Representative works in this domain include Zhang et al. [22], which pioneered a blockchain-secured UAV-EC architecture. Their approach formulates an optimization problem to minimize delay through the combined management of UAV trajectory, computation offloading, and resource allocation, leveraging a block coordinate descent method to find solutions. Similarly, Mao et al. [25] tackle channel uncertainty through the co-optimization of multi-antenna UAV trajectory, computational resources, and communication strategies. They develop an algorithm based on successive convex approximation (SCA) to resolve the associated non-convex energy minimization problem. Basharat et al. [39] propose a digital twin-assisted task offloading scheme for energy-harvesting UAV-MEC networks using branch-and-bound methods, aiming to minimize latency while maximizing associated IoT devices under various constraints.

Despite these significant contributions, traditional offloading strategies often rely on static models that struggle to adapt to dynamic environments and complex task characteristics. This fundamental limitation constrains the full potential of edge-computing systems, particularly in scenarios involving time-varying channel conditions and mobile UAV deployments. Consequently, many researchers have increasingly focused on developing adaptive approaches that can respond effectively to environmental dynamics.

2.2. Learning-Based Offloading Decision Making

As the demand for sophisticated and dynamic business scenarios increases, the intersection of edge computing and machine learning has emerged as a significant research area. Machine learning enables systems to learn from historical data and real-time environments to intelligently predict task loads and resource demands. This capability enables adaptive task scheduling and resource allocation, ultimately improving system performance, energy efficiency, and quality of service.

Compared to traditional algorithms, machine learning approaches perform better in handling nonlinear relationships, complex task offloading decisions, resource allocation, and traffic prediction in edge-computing domains. Deep Q-Networks (DQNs) have demonstrated considerable efficacy in edge computing, owing to their capacity to navigate high-dimensional states and facilitate optimal decision-making for task scheduling and resource distribution. This capability is crucial in dynamic settings with uncertain channels, fluctuating node loads, and evolving network topologies, making DQNs especially suitable for discrete-action-space problems. Ding et al. [40] employ a Double Deep Q-Network (DDQN) approach to tackle trajectory optimization and resource allocation in UAV-aided MEC systems. Their model jointly optimizes UAV flight path, time allocation, and offloading decisions to maximize the average secure computing capacity. To improve efficiency, they accelerate DDQN convergence through a reduction of the action space. Similarly, Liu et al. [41] propose a collaborative optimization method combining DDQN and convex optimization for joint 3D trajectory planning and task offloading in UAV-EC systems.

However, DQN-based approaches exhibit limitations in sample efficiency and training stability, potentially leading to performance degradation in non-stationary environments. To address continuous-action spaces, Deep Deterministic Policy Gradient (DDPG) algorithms have been employed for fine-grained resource allocation and task offloading control. Lin et al. [42] propose a PDDQNLP algorithm that integrates DDPG to handle mixed continuous-action spaces, addressing the mixed-integer nonlinear problem of jointly optimizing offloading decisions, UAV 3D trajectory, and flight time. In [43], Du et al. use DDPG to construct a dynamic programming model with a large continuous-action space, addressing the data relaying and bandwidth allocation problems in UAV-assisted Internet of Things (IoT) systems. While DDPG demonstrates effectiveness in continuous control, it suffers from sensitivity to hyperparameter settings and overfitting tendencies. Ale et al. [44] investigate multi-device MEC systems and propose an enhanced DDPG algorithm to address constrained hybrid action-space challenges in task partitioning and computation offloading, demonstrating efforts to improve DDPG’s robustness in complex scenarios.

Soft Actor–Critic (SAC) algorithms have emerged as an alternative, combining stochastic policy exploration with continuous-action-handling capabilities. SAC demonstrates superior stability and accelerated convergence when dealing with high-dimensional continuous-action spaces. It also maintains a more effective balance between exploration and exploitation, which facilitates more efficient and resilient resource management and task offloading in dynamic settings. Wang et al. [45] combine SAC with a two-stage matching association strategy to jointly optimize trajectories and user associations of multiple UAVs. Goudarzi et al. [46] leverage SAC’s stochastic exploration and continuous-action-handling capabilities to implement the optimal offloading mechanism, addressing the challenge of edge resource scarcity in both high-density urban areas and remote regions. Li et al. [47] proposed the Soft Actor–Critic for Covert Communication and Charging (SAC-CC) algorithm to enhance UAV endurance and adaptability by jointly optimizing trajectory and bandwidth allocation. Bai et al. [48] introduced a multimodal SAC method that combines convolutional neural networks (CNN) and multilayer perceptrons (MLP) for the real-time joint optimization of UAV status, position, and power in dynamic environments. Wang et al. [49] combined a continuous SAC algorithm to optimize the UAV trajectory with a discrete SAC algorithm enhanced by invalid action masking (IAM) to optimize task offloading decisions, achieving collaborative optimization in multi-channel parallel processing scenarios. However, it is important to note that SAC involves high implementation complexity and demands more computational resources.

Despite their effectiveness, these DQN, DDPG, and SAC approaches face common challenges including extensive training data requirements, meticulous hyperparameter tuning, potential convergence instability, and high implementation complexity. To address these limitations, recent research has explored simplified neural network architectures that prioritize system robustness and strategy timeliness, maintaining performance while reducing implementation complexity and adaptation requirements. Huang et al. [50] introduced a Deep Reinforcement learning-based Online Offloading (DROO) framework for wireless powered MEC. Their approach achieves real-time decision-making, demonstrating computational latency under 0.1 s in a network of 30 users. Similarly, our previous work [51] proposes the Intelligent Online Computation Offloading (IOCO) algorithm, which learns historical optimal strategies via a deep neural network to make intelligent offloading decisions in dynamic wireless environments. IOCO incorporates quantization and sampling techniques to improve execution speed and resilience to environment fluctuations. However, the IOCO algorithm overlooks the impact of power control on offloading.

The limitations observed in the existing literature serve as the primary motivation for our work. First, while numerous studies focus on optimization accuracy, the timeliness of offloading strategies receives insufficient attention, particularly in UAV-EC systems where environmental conditions change rapidly. Second, most existing approaches fail to jointly consider offloading decisions, power control, and computation resource allocation in a unified optimization framework. Third, the robustness of learning-based algorithms under highly dynamic conditions requires further enhancement to ensure consistent performance across diverse operational scenarios.

To address these limitations, this paper proposes a novel framework that enhances offloading strategy timeliness in UAV-EC systems. Unlike existing approaches that sacrifice strategy timeliness for optimization accuracy, our framework prioritizes real-time adaptation while maintaining near-optimal solution quality. Specifically, we design an adaptive online algorithm that jointly optimizes offloading decisions, the transmitting power of UE, and computation resource allocation. By incorporating power control into our unified decision-making framework, our approach achieves a more comprehensive optimization, resulting in improved overall system performance. Furthermore, through the design of advanced network architectures, quantization methods, and sampling strategies, we enhance the algorithm’s adaptability to dynamic environmental conditions.

3. System Model and Problem Formulation

This section presents the network model, followed by the problem formulation and its analysis. Key notations are summarized in Table 1.

Table 1.

Main notations.

3.1. Network Model

Here, we consider a quasi-static MEC system with a UAV functioning as the edge server (ES) and K fixed UE denoted as a set in its range. Without loss of generality, we assume a block-fading channel model where the channel coefficients remain constant during the uplink transmission period of each offloaded task, but may vary independently across different transmission slots. Since our problem formulation considers a single block, the block subscript t is omitted for brevity.

Each UE has a computing task characterized by to be processed, where and represent the data size and application-specific computational complexity, respectively. Hence, the number of CPU cycles needed for task k is [7]. Here, we consider atomic tasks that cannot be divided into subtasks. Since the UAV has larger computational capability than the UE, UE can offload their tasks to the UAV. We adopt a binary offloading mode, i.e., a task is either computed locally at the UE or offloaded to the UAV. Let be an indicator variable. Specifically, means that UE k offloads its task to the ES, and otherwise.

3.1.1. Local Computation Model

Computational power consumption is modeled as [52], where is a coefficient dependent on the chip architecture, and f denotes the computational speed in CPU cycles per second. When the computation task of UE k is processed locally, the execution time and corresponding energy consumption can be expressed as

respectively, where denotes computational speed of UE k.
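As a rough illustration of the local computation model, the sketch below assumes the widely used cubic power model p = kappa * f^3 and takes the CPU-cycle count as the product of data size and per-bit complexity. The symbol names (`kappa`, `f_local`, `cycles_per_bit`) are placeholders chosen for this example and may differ from the paper's notation.

```python
def local_cost(data_bits, cycles_per_bit, f_local, kappa):
    """Return (time_s, energy_j) for executing one task locally.

    Assumption: power follows p = kappa * f**3, so energy = p * time.
    """
    cycles = data_bits * cycles_per_bit        # total CPU cycles of the task
    time_s = cycles / f_local                  # execution time in seconds
    energy_j = kappa * f_local ** 2 * cycles   # (kappa * f^3) * (cycles / f)
    return time_s, energy_j


# Hypothetical numbers: a 1 Mbit task, 100 cycles/bit, 1 GHz CPU.
t_loc, e_loc = local_cost(1e6, 100, 1e9, 1e-27)
```

Under this assumed model, raising the local CPU speed shortens execution time linearly but increases energy quadratically, which is one reason offloading can pay off.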

3.1.2. Edge Computation Model

A typical edge computation process consists of three steps. First, each UE offloads its task to the ES through the allocated uplink channel. Then, the ES allocates computation resources and executes the offloaded tasks on behalf of the UE. Finally, the ES transmits the results back to the UE. Since the size of the results is much smaller than that of the offloaded data, and the downlink capacity is much larger than that of the uplink, we safely ignore the overhead of the results transmission [53]. Next, we give more details for the former two steps.

- Uplink Transmission: Each uplink channel is a block-fading Rayleigh channel with block length no less than the maximum execution time of offloaded tasks. The channel gain of UE k, denoted , accounts for the overall effects of path loss and small-scale fading. To emphasize again, we ignore subscripts t of to simplify labeling. Considering the white Gaussian noise power is and user bandwidth is W, the data rate of UE k could be given by where is the transmitting power of UE k and could be configured flexibly.

- Edge Execution: We assume that ES could serve K UE concurrently by allocating computational resource to UE k.

As aforementioned, we could obtain the total time for offloading task as

where refers to uplink channel cost, e.g., channel encoding. Correspondingly, the energy consumption is

with and denoting static power consumption and inverse of power amplifier efficiency, respectively.
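The edge computation model can be sketched numerically as follows. This is a minimal illustration under stated assumptions: the data rate follows the Shannon formula W * log2(1 + p*h / sigma^2), the uplink channel cost acts as a multiplicative overhead on the transmitted data, and the UE's energy is spent only during transmission. All function and parameter names are hypothetical.

```python
import math


def uplink_rate(bandwidth_hz, tx_power_w, channel_gain, noise_power_w):
    """Shannon rate in bit/s (assumed form of the data-rate expression)."""
    return bandwidth_hz * math.log2(1.0 + tx_power_w * channel_gain / noise_power_w)


def offload_cost(data_bits, cycles, f_edge, rate_bps, tx_power_w,
                 overhead=1.0, p_static=0.0, inv_pa_eff=1.0):
    """Return (time_s, energy_j) seen by a UE that offloads its task.

    overhead   : assumed multiplicative uplink channel cost (e.g., encoding)
    p_static   : static power consumption of the UE transmitter
    inv_pa_eff : inverse of the power amplifier efficiency
    """
    t_tx = overhead * data_bits / rate_bps           # uplink transmission time
    t_exec = cycles / f_edge                         # execution time at the ES
    energy_j = (p_static + inv_pa_eff * tx_power_w) * t_tx
    return t_tx + t_exec, energy_j


rate = uplink_rate(1e6, 3.0, 1.0, 1.0)  # SNR of 3 gives log2(4) = 2 bit/s/Hz
t_off, e_off = offload_cost(2e6, 1e9, 1e10, rate, 3.0, p_static=1.0, inv_pa_eff=2.0)
```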

3.2. Problem Formulation

3.2.1. QoE-Aware System Utility

In such a UAV-EC system, Quality of Experience (QoE) of UE k mainly concerns task completion time and energy consumption , which could be given by

respectively. To normalize these two metrics with different units, we define a utility function of UE k as

with denoting user preferences on task completion time and energy consumption. Since Equation (7) could be rewritten as , it could measure QoE improvements via computation offloading compared with local execution. Further, we define the system utility as

with reflecting the preferences of ES on UE, , , and . Equation (8) not only measures the overall utility of UE but also considers the interest of ES. Without confusion, we use to denote , and the same for the other two notations.
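To make the utility definition concrete, the following sketch assumes that the per-UE utility is a preference-weighted sum of the relative time saving and the relative energy saving with respect to local execution, and that the system utility is a weighted sum over UE. This form is an assumption consistent with the description above, not a verbatim transcription of Equations (7) and (8); the names `beta_time`, `beta_energy`, and `weights` are illustrative.

```python
def ue_utility(offload, t_loc, e_loc, t_off, e_off, beta_time, beta_energy):
    """Relative QoE improvement of one UE compared with local execution."""
    t = t_off if offload else t_loc   # task completion time under the decision
    e = e_off if offload else e_loc   # energy consumption under the decision
    return beta_time * (t_loc - t) / t_loc + beta_energy * (e_loc - e) / e_loc


def system_utility(utilities, weights):
    """Weighted sum of per-UE utilities; weights model the ES's preferences."""
    return sum(w * u for w, u in zip(weights, utilities))


u_local = ue_utility(False, 1.0, 2.0, 0.5, 1.0, 0.5, 0.5)   # no change, utility 0
u_edge = ue_utility(True, 1.0, 2.0, 0.5, 1.0, 0.5, 0.5)     # halves time and energy
total = system_utility([u_local, u_edge], [1.0, 1.0])
```

With this assumed form, local execution always yields zero utility, so a UE benefits from offloading only when its utility is positive.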

3.2.2. Utility Maximization Problem

The system utility maximization problem can be formulated as

In P1, C1 indicates that binary offloading model is adopted; C2 states that at most N UE are allowed to transmit simultaneously to guarantee orthogonality; C3 means that the transmission power should be non-negative and must not exceed the power budget; C4 reveals the non-negative feature of allocated computation resource; C5 means that the sum of obtained computation resources could not exceed the computation capability of ES.

3.2.3. Problem Analysis

We observe that P1 is a mixed-integer nonlinear programming problem. In more detail, it consists of two subproblems: the offloading decision and resource allocation. The main difficulties in solving P1 are summarized as follows:

- A tightly coupled relationship among subproblems: The offloading decision affects resource allocation and vice versa.

- Integer feature of offloading decision subproblem: Due to the integer feature of , one must search among possible actions to find the optimal solution. Clearly, a brute-force search is not suitable for exponentially large search spaces.

- Non-convexity of resource allocation subproblem: Even for a given offloading decision, it is still difficult to solve the resource-allocation problem due to its non-convexity.

Besides these three points, another challenge lies in guaranteeing timely decisions before the channel changes, since P1 needs to be re-solved once the channel varies. Next, we address these difficulties and propose a QoE-aware Online Offloading (QO2) algorithm that works in an online manner.
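To give a feel for the size of the search space, the short sketch below counts the binary offloading actions that satisfy the simultaneous-transmission limit in C2, assuming one binary variable per UE. The function name is illustrative.

```python
from math import comb


def count_offloading_actions(num_ue, max_simultaneous):
    """Number of 0-1 vectors of length num_ue with at most max_simultaneous ones."""
    limit = min(num_ue, max_simultaneous)
    return sum(comb(num_ue, n) for n in range(limit + 1))


# With 30 UE and no effective limit, exhaustive search faces 2**30 candidates.
unconstrained = count_offloading_actions(30, 30)
constrained = count_offloading_actions(30, 10)
```

Each candidate would additionally require solving a resource-allocation problem, which is why exhaustive enumeration does not fit within a channel coherence time for networks of this size.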

4. Proposed Algorithm

In this section, we first show an overview of the QO2 algorithm. Then, three main steps of the QO2 algorithm are introduced. Finally, several implementation issues are discussed.

4.1. Algorithm Overview

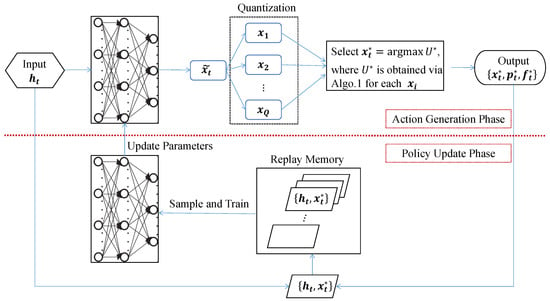

The structure of the QO2 algorithm, illustrated in Figure 1, consists of action generation and policy update. Specifically,

- Action Generation: First, a relaxed offloading decision is obtained using a DNN with parameters . Then, a quantization operation is performed to guarantee its integer feature and the uplink capacity constraint. Finally, the ES selects the optimal offloading action by solving the resource-allocation (RA) problem and comparing the utility of each quantized offloading decision; meanwhile, it determines the optimal resource-allocation strategy.

- Policy Update: To obtain more intelligent actions, the ES first adds experience data to the replay memory based on a given caching rule. Then, it samples a batch of cached data to retrain the DNN and accordingly update the network parameters, which are used to generate new actions in the next several blocks.

To sum up, in the action-generation phase, in addition to using a DNN-based method to obtain the offloading decision, a decoupling-based method is designed to solve the resource-allocation problem. In the policy update phase, an experience replay technique is utilized to update the DNN-based offloading policy. These three methods are detailed in the following subsections.

Figure 1.

Illustration of QO2 algorithm flow.

4.2. Action Generation: Offloading Decision

Essentially, we aim to obtain an offloading policy function which could quickly generate offloading decision for any channel realization , i.e.,

We decide to utilize a DNN with parameters to fit Equation (9) for two reasons: (i) the universal approximation theorem guarantees that a DNN with sufficiently many hidden neurons and a linear output layer is capable of representing any continuous mapping function if proper activation functions are applied at the neurons, and (ii) inference via a forward pass is fast enough to work in the online mode. Note that the normalization of input features is generally a critical preprocessing step for stable DNN training, particularly for scenarios with wide numerical ranges. Common techniques include min–max scaling and z-score standardization. Since it is not practical to construct a DNN with an unlimited number of hidden neurons, we approximate Equation (9) through the following two steps.

4.2.1. Relaxation Operation

We utilize a DNN with parameters to obtain a relaxed offloading decision. Specifically, we use the ELU and Sigmoid function as the activation function of hidden neurons and output layer, respectively. As such, the output of DNN, denoted , could satisfy .
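The relaxation step can be illustrated with a small forward pass written in plain Python. This is only a sketch: the layer sizes, the random initialization, and the helper names are assumptions for the example, and a practical implementation would use a deep learning framework and trained weights. What it does show is that ELU hidden activations followed by a sigmoid output yield one relaxed value between 0 and 1 per UE.

```python
import math
import random


def elu(z, alpha=1.0):
    """Exponential linear unit, used for the hidden neurons."""
    return z if z > 0 else alpha * (math.exp(z) - 1.0)


def sigmoid(z):
    """Numerically stable logistic function, used for the output layer."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b)."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def init_layer(n_in, n_out, rng):
    """Hypothetical uniform initialization; trained weights would replace it."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def relaxed_decision(channel_gains, layers):
    """Map (normalized) channel gains to a relaxed offloading decision."""
    h = channel_gains
    for weights, biases in layers[:-1]:
        h = dense(h, weights, biases, elu)
    weights, biases = layers[-1]
    return dense(h, weights, biases, sigmoid)


rng = random.Random(0)
layers = [init_layer(5, 8, rng), init_layer(8, 5, rng)]
x_relaxed = relaxed_decision([0.1, 0.5, 0.9, 0.3, 0.7], layers)
```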

4.2.2. Quantization and Select Operations

Overall, we first quantize to obtain Q binary offloading decisions , and then select the optimal based on the following rule:

We observed from Equation (10) that the larger Q, the better the algorithm performance, though the higher the complexity. To balance the performance and complexity, we design an order-preserving and -norm-limiting quantization method. Here, taking quantized action as an example, order-preserving means that for , should hold if for all . In addition, -norm-limiting rule guarantees an uplink capacity constraint, i.e., C2 should be satisfied during quantization. Under these two criteria, the value of Q could be altered from 1 to . Next, we present the details of this quantization method.

- First, we sort entries of in a descending order, e.g., where is the k-th order statistic of . Define Then, the first binary offloading decision could be achieved by for .

- To generate the remaining offloading actions, we first order entries of respective to their distances to 0.5, , where is the q-th order statistic of . Then, the binary offloading decision could be achieved by for .
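The two quantization steps can be sketched as below. The thresholding follows the order-preserving idea (first threshold at 0.5, then thresholds taken from the entries closest to 0.5), and the norm limit is enforced here by keeping at most `max_offload` of the largest entries. The exact tie handling, the truncation rule, and the removal of duplicate candidates are assumptions of this sketch rather than the paper's precise definitions.

```python
def quantize(relaxed, num_actions, max_offload):
    """Generate up to num_actions binary decisions from a relaxed decision.

    relaxed     : list of values in (0, 1), one per UE
    max_offload : maximum number of UE allowed to offload (constraint C2)
    """
    indices = range(len(relaxed))

    def threshold_action(threshold, inclusive):
        # Select entries above the threshold (optionally including ties).
        chosen = [k for k in indices
                  if relaxed[k] > threshold
                  or (inclusive and relaxed[k] == threshold)]
        # Norm limit: keep only the largest entries; ties are broken by index.
        chosen.sort(key=lambda k: relaxed[k], reverse=True)
        keep = set(chosen[:max_offload])
        return tuple(1 if k in keep else 0 for k in indices)

    actions = [threshold_action(0.5, inclusive=False)]
    # Remaining candidates use the entries closest to 0.5 as thresholds.
    for pivot in sorted(relaxed, key=lambda v: abs(v - 0.5)):
        if len(actions) >= num_actions:
            break
        candidate = threshold_action(pivot, inclusive=(pivot <= 0.5))
        if candidate not in actions:   # skip duplicates (an added convenience)
            actions.append(candidate)
    return actions


candidates = quantize([0.2, 0.4, 0.7, 0.9], num_actions=3, max_offload=3)
```

For the example input, the candidates are (0, 0, 1, 1), (0, 1, 1, 1), and (0, 0, 0, 1): entries near 0.5 are the ones whose decisions get flipped, which concentrates the search on the most uncertain UE.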

4.3. Action Generation: Resource Allocation

For a given quantized offloading action , P1 could be transformed to the following equivalent problem:

where , , and for simplicity. Observe that uplink power and computation resource allocation are decoupled from each other in both objective and constraints of P2. Hence, we could handle P2 by performing uplink power control and computation resource allocation independently. Details are as below.

4.3.1. Uplink Power Control

Once the offloading decision is fixed, UE are independent of each other in terms of uplink power control. Hence, each UE could assign its transmission power via solving the following problem (Obviously, it is also advisable to optimize uplink transmission power using the ES in a centralized manner.)

By analyzing the special structure of P3 [54], we achieve the optimal solution in closed forms in Theorem 1, based on which we propose a bisection-based uplink transmission power control policy.

Theorem 1.

The optimal solution of P3 could be given by

where is the unique solution of in , with

Proof.

See Appendix A. □

Based on Theorem 1, we propose a low-complexity bisection-based power control algorithm (BPC) to solve P3, the specific steps of which are presented in Algorithm 1.

Algorithm 1.

Bisection-based power control algorithm (BPC).
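A bisection-based power control step might look like the following sketch. It assumes that, for an offloading UE, the power-dependent part of the cost has the fractional form (a + b*p) / log2(1 + g*p), where `a` gathers the time preference and static power terms, `b` gathers the energy preference and amplifier inefficiency, and `g` is the channel gain divided by the noise power. These symbols and this form are assumptions made for illustration; the exact expressions are given by P3 and Theorem 1.

```python
import math


def phi(p, a, b, g):
    """Has the same sign as d/dp of (a + b*p) / log2(1 + g*p); increasing in p."""
    return (b * math.log2(1.0 + g * p)
            - g * (a + b * p) / ((1.0 + g * p) * math.log(2.0)))


def bisection_power_control(a, b, g, p_max, tol=1e-9):
    """Return the transmit power in (0, p_max] minimizing the assumed cost."""
    # If the cost is still decreasing at p_max, the power budget is optimal.
    if phi(p_max, a, b, g) <= 0.0:
        return p_max
    lo, hi = 0.0, p_max          # phi(lo) < 0 < phi(hi), so a unique root exists
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if phi(mid, a, b, g) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


p_star = bisection_power_control(a=1.0, b=1.0, g=10.0, p_max=1.0)
```

Because the search interval halves at every step, the number of iterations grows only logarithmically with the required precision, which keeps the per-UE cost of power control small.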

4.3.2. Computation-Resources Allocation

From P2, we formulate the computation-resources allocation problem as

Since both the objective function and feasible region are convex, P4 is a convex optimization problem. Detailed proof is provided in Appendix B. Here, we adopt the Lagrangian dual method to solve P4. Specifically, by denoting as the Lagrange multiplier for C5, we obtain Lagrangian dual problem of P4 as

where the Lagrangian dual function is given by

with the Lagrangian function expressed as

Using the Karush–Kuhn–Tucker (KKT) conditions, i.e., and , we can express the optimal solution of as

Finally, by substituting (14) and (18) into (8), we can obtain for any given . We can then select the optimal offloading decision , as stated in (10).

At this point, we have completed the action-generation phase, in which QO2 outputs the offloading action along with its corresponding optimal resource allocation for channel realization . In the next subsection, we describe how QO2 realizes self-evolution for better performance.
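To make the KKT step concrete, the following sketch works through a simplified instance. It assumes, purely for illustration, that each offloading UE k contributes a cost of the form a_k / f_k (a weighted processing delay) and that the only coupling constraint is a total budget F; the weights `a`, the budget `F`, and both function names are hypothetical and are not taken from P4. Under this assumption, stationarity gives f_k = sqrt(a_k / λ), and the budget constraint fixes λ, which leads to a square-root-proportional split.

```python
import numpy as np

def allocate_cpu(a, F):
    """Closed-form minimizer of sum_k a_k / f_k s.t. sum_k f_k <= F, f_k >= 0.

    ASSUMPTION: the cost form a_k / f_k is illustrative only.
    Stationarity: -a_k / f_k**2 + lam = 0  ->  f_k = sqrt(a_k / lam).
    The budget is tight at the optimum, so sqrt(1 / lam) = F / sum_j sqrt(a_j).
    """
    root = np.sqrt(np.asarray(a, dtype=float))
    return F * root / root.sum()

def total_cost(a, f):
    """Objective value for a given allocation (terms with a_k = 0 are skipped)."""
    a = np.asarray(a, dtype=float)
    f = np.asarray(f, dtype=float)
    mask = a > 0
    return float(np.sum(a[mask] / f[mask]))
```

With `a = [1, 4, 9]` and `F = 6`, the sketch allocates `[1, 2, 3]` and attains a cost of 6, whereas the equal split `[2, 2, 2]` gives a cost of 7.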

4.4. Policy Update

The main idea of the policy update is to use the offloading decision generated in the first phase to improve the DNN. Put simply, the newly generated is added to a maintained memory at each iteration. When the memory is full, the oldest data are deleted. Additionally, we use an experience replay technique and periodically sample a batch of data from the memory to update the DNN. Here, we take one iteration as an example to illustrate the key points of the update process.

- Sample Strategy: We sample a batch of data , marked by a set of time indices to train the DNN. (The reward signal is implicitly encoded in through the utility-maximization process: is selected as the candidate achieving the maximum utility value among quantized alternatives, and thus represents the reward-maximizing action under the given channel state .) Intuitively, the latest data come from a smarter DNN and thus contribute more to the policy update. However, relying solely on the latest data to train the DNN would lead to poor stability. To achieve a better balance, we propose a PPS strategy. Specifically, we record the sample frequency of data i as , based on which we set its sample priority as . Here, is a coefficient that balances update efficiency and stability. Then, data i is sampled with probability . As shown in Section 5, the PPS method outperforms the Random Sampling (RS) method [50] and the Priority Sampling (PS) method in terms of convergence rate and stability, respectively.

- Loss Function and Optimizer: Mathematically, training the DNN with weights can be regarded as a logistic regression problem. Hence, we choose cross-entropy as the loss function, defined as with “·” denoting the dot product of two vectors. Additionally, the Adam algorithm is used as the optimizer to reduce the loss defined in (19). The details of the Adam algorithm are omitted for brevity.

A sketch of the overall algorithm is presented in Algorithm 2.
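The two ingredients above can be illustrated with a small NumPy sketch: a first-in-first-out replay memory with frequency-based proportional sampling, and an averaged cross-entropy loss. The class and function names are hypothetical. In particular, the priority form `(1 + n_i) ** (-alpha)` is only an assumed example of a priority that decreases with the sample frequency n_i; the actual PPS priority is the one defined in the text.

```python
from collections import deque
import numpy as np

class ReplayMemory:
    """FIFO memory with sampling probability proportional to a priority."""

    def __init__(self, capacity, alpha=1.0, seed=0):
        self.data = deque(maxlen=capacity)    # oldest entries drop out first
        self.counts = deque(maxlen=capacity)  # how often each entry was sampled
        self.alpha = alpha
        self.rng = np.random.default_rng(seed)

    def add(self, h, x):
        self.data.append((np.asarray(h, float), np.asarray(x, float)))
        self.counts.append(0)

    def sample(self, batch_size):
        counts = np.array(self.counts, dtype=float)
        priority = (1.0 + counts) ** (-self.alpha)  # ASSUMED priority form
        prob = priority / priority.sum()            # proportional sampling
        n = min(batch_size, len(self.data))
        idx = self.rng.choice(len(self.data), size=n, replace=False, p=prob)
        for i in idx:
            self.counts[int(i)] += 1
        h = np.stack([self.data[int(i)][0] for i in idx])
        x = np.stack([self.data[int(i)][1] for i in idx])
        return h, x

def cross_entropy(x_true, x_pred, eps=1e-12):
    """Averaged binary cross-entropy between labels and DNN outputs."""
    x_pred = np.clip(x_pred, eps, 1.0 - eps)
    per_sample = -(np.sum(x_true * np.log(x_pred), axis=1)
                   + np.sum((1.0 - x_true) * np.log(1.0 - x_pred), axis=1))
    return float(per_sample.mean())
```

Setting `alpha = 0` in this sketch reduces it to uniform random sampling, while larger values favor entries that have been replayed less often.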

| Algorithm 2 QoE-aware Online Offloading (QO2) Algorithm |

|
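The control flow of the two phases can be summarized by the skeleton below. It is a structural sketch only: the callables `policy`, `quantize`, `utility`, `memory_add`, and `train` are hypothetical placeholders standing in for the DNN forward pass, the quantization step, the resource-allocation and utility evaluation, the memory update, and the DNN training step, respectively.

```python
def qo2_loop(channels, policy, quantize, utility, memory_add, train,
             train_interval=5):
    """Run the action-generation / policy-update loop over channel realizations."""
    decisions = []
    for t, h in enumerate(channels, start=1):
        x_relaxed = policy(h)              # relaxation: DNN output
        candidates = quantize(x_relaxed)   # quantization: binary candidates
        # Selection: evaluate each candidate (with its resource allocation)
        # and keep the one with the highest utility.
        best = max(candidates, key=lambda x: utility(h, x))
        memory_add(h, best)                # store the new experience
        if t % train_interval == 0:
            train()                        # periodic DNN update
        decisions.append(best)
    return decisions
```

Because the utility of every candidate is computed exactly, the quality of the selected action is limited only by whether a good candidate is present in the quantized set.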

4.5. Computational Complexity Analysis and Deployment Issues

At each time slot t, the relaxation operation (Line 4 in Algorithm 2) passes through the DNN , where both the input and output layers have dimension K. This operation incurs a time complexity of . The quantization and selection operations (Line 5) involve sorting the vector and generating Q binary offloading decisions, where , resulting in a time complexity of . Consequently, the overall time complexity for the offloading decision is . In the resource-allocation phase (Line 6), a computation resource allocation scheme, along with utility calculation and selection, exhibit time complexities of , , and per binary offloading decision, respectively. Here, denotes the stopping tolerance of bisection method. For all Q decisions, the total time complexity for resource allocation is . Thus, the aggregate time complexity per decision window is .

Additionally, every iterations (Line 10), the framework performs DNN training to update parameters from to . The PPS method (Line 11) samples a mini-batch of size B from the replay memory of size M, which incurs a time complexity of . Subsequently, the DNN training step (Line 12) incurs a time complexity of . Since training occurs once every iterations, the amortized training complexity per iteration is . In summary, the total time complexity per iteration of QO2 is .

Remark 2.

Although QO2 is designed for UAV-EC systems with time-varying channels, it can also handle other time-varying parameters, e.g., the available computation resources of the ES. The only required modification is to set the number of neurons in the input layer to match the number of varying parameters. Further, QO2 can handle other complex problems with little modification, e.g., intelligent access control problems.

5. Numerical Results

In this section, we first demonstrate the effectiveness and convergence of our proposed QO2 algorithm. Further, the effect of different algorithm parameters on effectiveness and convergence is given. Then, we present performance comparison with several benchmarks from the perspective of sampling strategy. Finally, the QO2 algorithm is applied to an extended scenario with time-varying computation resource and task workloads to show its adaptability and robustness.

5.1. Simulation Setup

We conduct simulations in a Python 3.10.16 environment on a MacBook Air with an Apple M1 chip. Unless otherwise specified, the following default simulation parameters are adopted:

- Communication parameters: We consider that UEs are randomly distributed within a area and a UAV is positioned at the center of the area at an altitude of . Here, the free-space path loss model, i.e., , is adopted to characterize the path loss between UE k and the ES, where , MHz, and denote the antenna gain, carrier frequency, and path loss exponent, respectively. In addition, the small-scale channel fading follows Rayleigh fading, i.e., , and the noise power density is −174 dBm/Hz [55]. Moreover, we consider that there are uplink subchannels with equal bandwidth MHz. Finally, we set the maximum transmit power W, static transmit power W, inverse of power amplifier efficiency , and uplink channel cost .

- Computation parameters: Computational speed of UE and power coefficient are set as cycles/s and . Moreover, we set computation capacity of ES as cycles/s [55].

- Application parameters: We set the input data size and its computation intensive degree as 10 Mb and 100 cycles/bit, respectively.

- Preference parameters: We set . Additionally, we set if k is an odd number and otherwise.

- Algorithm parameters: We use a fully connected DNN consisting of one input layer, two hidden layers with 120 and 80 neurons, respectively, and one output layer. This architecture was selected through a systematic hyperparameter search over configurations with 1–3 hidden layers and various neuron combinations, and it demonstrated the best convergence and robustness across different parameter settings. Based on experiments, we set the memory size, batch size, training interval , and learning rate of the Adam optimizer to 1024, 128, 5, and 0.01, respectively. Additionally, we set the sample parameter to 1 to balance policy efficiency and stability.
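The channel-related part of such a setup can be generated along the following lines. This is a sketch under stated assumptions: the path loss expression is the free-space-type form commonly used in related simulations (antenna gain times a wavelength-over-distance term raised to the path loss exponent), Rayleigh fading is modeled through a unit-mean exponential power gain, and all function names and the numerical values in the usage note are illustrative rather than the settings of this paper.

```python
import numpy as np

def ue_uav_distance(ue_xy, uav_xy, altitude):
    """3D distance between ground UEs and a UAV hovering at a given altitude."""
    ue_xy = np.asarray(ue_xy, dtype=float)
    horizontal = np.linalg.norm(ue_xy - np.asarray(uav_xy, dtype=float), axis=1)
    return np.sqrt(horizontal ** 2 + altitude ** 2)

def mean_channel_gain(distance, antenna_gain, carrier_hz, exponent):
    """ASSUMED form: A_d * (c / (4 * pi * f_c * d)) ** d_e."""
    return antenna_gain * (3e8 / (4 * np.pi * carrier_hz * distance)) ** exponent

def rayleigh_gains(mean_gain, rng):
    """Power gains under Rayleigh fading: mean gain times an Exp(1) variable."""
    return mean_gain * rng.exponential(1.0, size=np.shape(mean_gain))

def noise_power_watts(density_dbm_hz, bandwidth_hz):
    """Convert a noise power density in dBm/Hz to total noise power in watts."""
    return 10 ** ((density_dbm_hz - 30) / 10) * bandwidth_hz
```

As a usage example with illustrative numbers, `noise_power_watts(-174.0, 1e6)` evaluates to roughly 3.98e-15 W, i.e., the thermal noise collected over a 1 MHz subchannel.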

5.2. Convergence and Effectiveness Performance of QO2

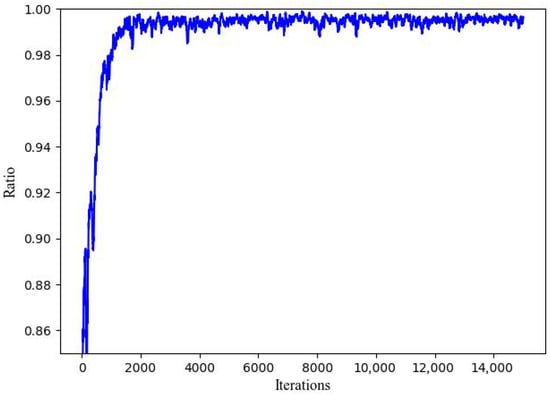

We define a utility ratio to reveal the effectiveness of QO2 algorithm. Specifically,

where is obtained via QO2, while the optimal solution in the denominator is achieved by enumerating all the offloading actions. It is easy to see that . The closer is to 1, the better QO2 performs.
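The ratio can be computed as in the sketch below, which obtains the denominator by brute-force enumeration of all binary offloading actions. The callable `utility` is a hypothetical stand-in for the system utility evaluated with optimal resource allocation, and the sketch assumes that utility values are positive. Since the enumeration visits 2^K actions, it is practical only for small K, which is why it serves as a benchmark rather than an online method.

```python
from itertools import product

def utility_ratio(utility, x_candidate, K):
    """Utility of a candidate action divided by the best enumerated utility."""
    # Exhaustive search over all 2**K binary offloading actions.
    best = max(utility(x) for x in product((0, 1), repeat=K))
    return utility(tuple(x_candidate)) / best
```

For instance, with the toy utility `1 + 2*x[0] - x[1] + 3*x[2]`, the best action is `(1, 0, 1)` with utility 6, so the candidate `(1, 1, 1)` obtains a ratio of 5/6.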

As Figure 2 shows, QO2 could converge to the state with ratio close to 1 within 5000 iterations, demonstrating its good performance in both convergence speed and effectiveness. Initially, since QO2 needs to interact with the environment to accumulate experience, the ratio starts at a relatively low level. However, with the number of iterations increasing, the ratio steadily improves and rapidly approaches 1. Notably, after about 2000 iterations, the ratio stabilizes around 1 with little fluctuation, which indicates that the offloading and resource-allocation decisions generated by QO2 are near-optimal.

Figure 2.

Convergence performance of QO2 algorithm.

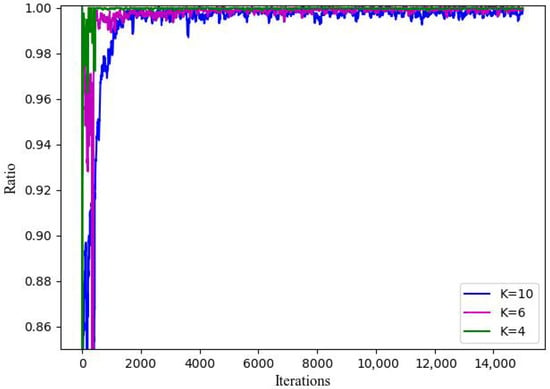

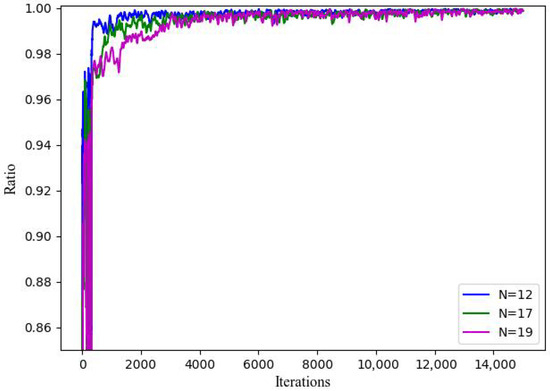

Figure 3 and Figure 4 illustrate the impact of the UE number K and the subchannel number N on the convergence rate of QO2, respectively. We observe from Figure 3 that the larger K is, the slower QO2 converges. The reason lies in the order-preserving and -norm-limiting quantization method proposed in Section 4.2.2. Specifically, in each iteration, alternative offloading decisions are selected from all possible offloading decisions, and then the optimal offloading decision is determined based on Equation (10). Hence, as K increases, the probability that the optimal decision lies within this candidate set decreases, thereby requiring more iterations to approach the optimum. Figure 4 indicates that the smaller N is, the faster QO2 converges. This is because a smaller N means that fewer UEs can offload simultaneously, effectively reducing the decision space. With fewer available options, the algorithm requires fewer iterations to reach the optimal state.

Figure 3.

Ratio versus UE number.

Figure 4.

Ratio versus subchannel number.

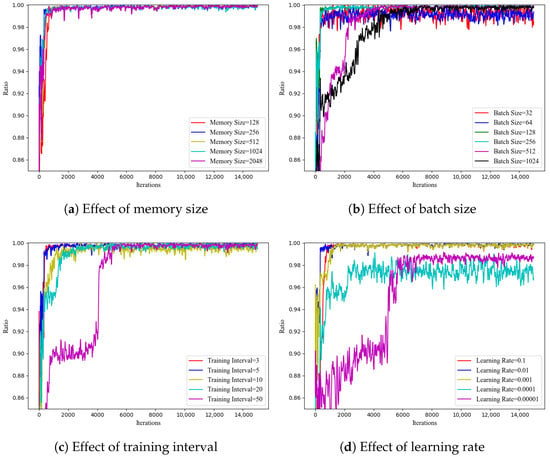

In Figure 5, we investigate how key hyperparameters influence QO2’s performance. Figure 5a shows that the convergence and effectiveness of QO2 are relatively insensitive to the memory size. This is mainly attributed to the proposed PPS, which consistently picks the most useful data to update the policy. Considering both convergence speed and effectiveness, we adopt a memory size of 1024 in the following simulations. Figure 5b shows the effect of the batch size. Overly large batches (=1024) tend to include redundant data, while very small ones (=32) fail to fully utilize the stored samples, both leading to slower convergence. Additionally, large batches increase the computational cost. To strike a balance between training efficiency and convergence speed, the batch size is set to 128. In Figure 5c, the effect of the training interval is examined. In theory, the smaller the value, the more frequently QO2 is updated. However, our findings suggest that overly frequent DNN training provides limited benefit. Thus, we set to 5. Finally, Figure 5d presents the influence of the learning rate of the Adam optimizer. An excessively small learning rate (=0.00001) causes QO2 to converge to a suboptimal solution and thus yields a lower ratio. In the following simulations, we set the learning rate to 0.01. The sensitivity analysis reveals that the performance of QO2 remains relatively stable under moderate variations in most hyperparameters (e.g., memory size 256–1024, learning rate 0.001–0.01, batch size 64–256), while the training interval and extremely small learning rates (<0.001) show higher sensitivity. To sum up, the final settings for memory size, batch size, , and learning rate are 1024, 128, 5, and 0.01, respectively.

Figure 5.

Ratio under different parameters: (a) memory size; (b) batch size; (c) training interval; (d) learning rate.

5.3. Adaptability Performance of QO2

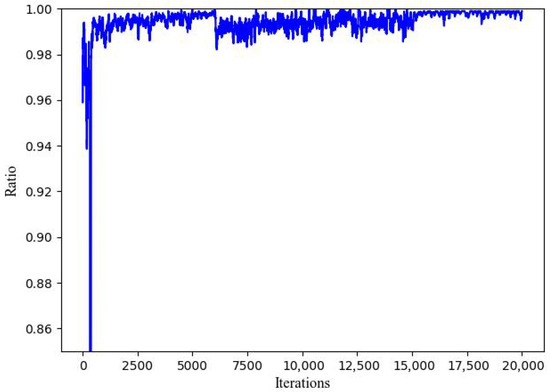

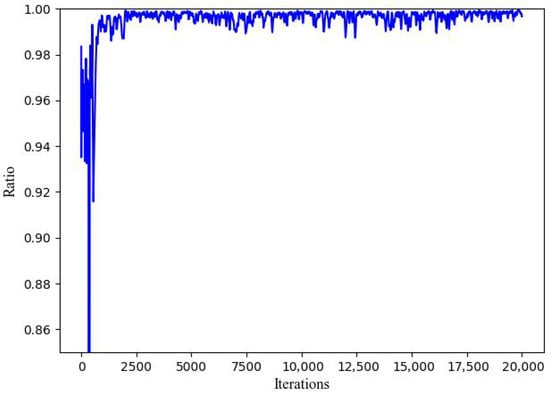

Figure 6 and Figure 7 present the performance of the QO2 algorithm as the total computational speed of the edge server changes. Two scenarios are simulated: an abrupt change in the computing rate and periodic fluctuations. In the first scenario, the initial total computing rate is set to . At iteration 6000, the computing rate is reduced to , and at iteration 15,000, it is restored to its original value. As shown in Figure 6, the benefit ratio drops significantly following the reduction at iteration 6000 but quickly recovers and converges. A similar pattern is observed at iteration 15,000. In the second scenario, the initial computing rate remains , but it fluctuates within a 10% range, cycling through values of , , and . As depicted in Figure 7, despite these periodic changes, the impact on QO2’s convergence is minimal, with the benefit ratio exhibiting only slight fluctuations.

Figure 6.

Performance of QO2 under abrupt changes in .

Figure 7.

Performance of QO2 under periodic fluctuations in .

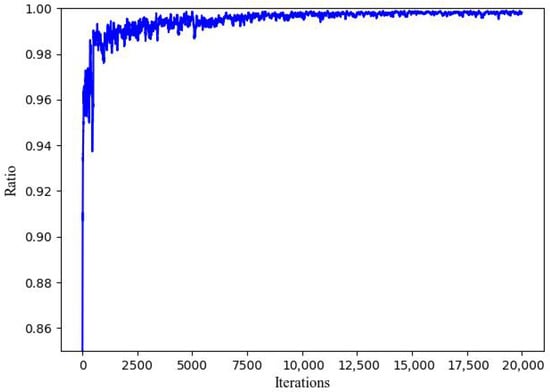

Figure 8 further shows the performance of the QO2 algorithm when the task data size varies periodically. The initial task data size is set to , and it fluctuates within a 10% range, cycling between , , and . As illustrated in Figure 8, QO2 consistently converges to a benefit ratio near 1 with only minor fluctuations, even under varying task sizes.

Figure 8.

Adaptability performance of QO2 regarding data size .

Overall, these results indicate that the QO2 algorithm is robust to environmental variations: the more drastic the change, the longer it takes to re-converge, whereas mild changes require only a few iterations. This aligns with practical intuition and demonstrates QO2’s capability to sustain near-optimal offloading decisions under dynamic conditions. Note that the adaptability to dynamic environments constitutes a core contribution of QO2. Unlike static heuristics that prioritize computational simplicity at the cost of environmental responsiveness, QO2 is designed to provide adaptive decision-making capability while maintaining reasonable computational overhead. This design trade-off is crucial for time-varying edge-computing scenarios.

5.4. Performance of Sampling Method

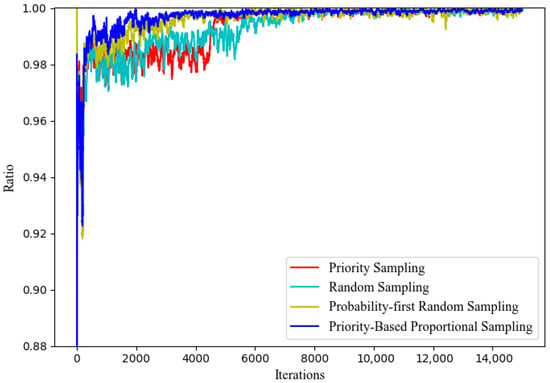

Figure 9 analyzes the impact of the sampling method used during DNN training on the convergence and performance of QO2. Here, we compare the PPS strategy with three alternative methods:

- Priority Sampling (PS): Selects the most recent experiences equal to the batch size from memory.

- Random Sampling (RS): Randomly selects experiences equal to the batch size from all stored experiences.

- Probability-first Random Sampling (PRS): Sets the sampling probability for each experience based on its sampling frequency, then chooses a batch of experiences according to these probabilities.
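The two simplest baselines in the list above can be written in a few lines. The sketch assumes that the memory is indexed from oldest (index 0) to newest, and the function names are hypothetical; it returns the indices of the selected experiences rather than the experiences themselves.

```python
import numpy as np

def priority_sampling(memory_len, batch_size):
    """PS: indices of the most recent batch_size experiences."""
    start = max(0, memory_len - batch_size)
    return np.arange(start, memory_len)

def random_sampling(memory_len, batch_size, rng):
    """RS: batch_size distinct indices drawn uniformly from the whole memory."""
    size = min(batch_size, memory_len)
    return rng.choice(memory_len, size=size, replace=False)
```

The contrast is visible directly in the index sets: PS always returns one contiguous block at the end of the memory, which limits diversity, whereas RS spreads its picks over old and new experiences alike.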

As shown in Figure 9, the benefit ratio curves for all four sampling methods eventually converge to 1. However, the convergence performance of the PS and RS methods is relatively poor. The PS method suffers from low diversity of experiences, while the RS method, despite its high diversity, often includes outdated or less relevant experiences, thus requiring more training data to reach optimal convergence. In contrast, the PRS and PPS methods demonstrate better convergence performance.

Figure 9.

Convergence performance of different sampling methods.

5.5. Performance Comparison

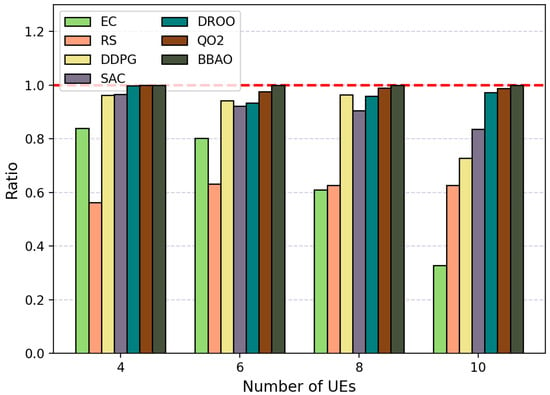

Here, we compare our QO2 algorithm with representative benchmarks as follows:

- Edge computing (EC): All tasks are offloaded.

- Random scheduling (RS): UEs are randomly selected for offloading to the UAV.

- Branch-and-bound-aided offloading (BBAO) [39]: The branch-and-bound approach is used to obtain the offloading decisions. Specifically, BBAO uses the same system parameters as the other algorithms. In each time slot, it exhaustively enumerates all feasible resource allocations via depth-first search (DFS), computes the system rate for each allocation using the bisection method, and selects the allocation with the maximum utility as the solution. This process provides a near-optimal benchmark for performance comparison.

- Deep Reinforcement learning-based Online Offloading (DROO) [50]: DROO algorithm utilizes DRL technique to obtain offloading decision, where random sampling strategy is adopted in the policy update phase.

- Soft actor–critic (SAC) [46] scheme: SAC takes the wireless channel gains between the UEs and the edge server as input, outputs binary offloading decisions, and uses the resulting optimal system utility as the reward to train its policy.

- Deep deterministic policy gradient (DDPG) [44] scheme: Similar to the SAC scheme, DDPG takes the wireless channel gains as input, outputs binary offloading decisions, and uses the resulting optimal system utility to train its policy. To ensure a relatively fair comparison, SAC and DDPG are integrated into the QO2 framework by replacing the DNN component, rather than being standalone implementations of the original algorithms.

As shown in Figure 10, we compare the convergence performance of the algorithms for different numbers of UEs. Notably, the QO2 algorithm exhibits performance comparable to BBAO, while outperforming the other five algorithms. This is primarily due to QO2’s consideration of additional dimensions, which significantly enhances its performance over EC and RS. Furthermore, unlike actor–critic algorithms such as DDPG and SAC, the QO2 algorithm directly employs a reward function, rather than relying on a critic network, to evaluate the actor. This design enables the objective function to accurately assess the value of the current action based on the true outcomes observed in the environment, thereby delivering superior performance compared to SAC and DDPG. Compared with DROO, QO2 further benefits from its efficient sampling method during experience replay, which allows it to better leverage historical interactions and produce more effective offloading decisions.

Figure 10.

Comparison of ratio versus UE number.

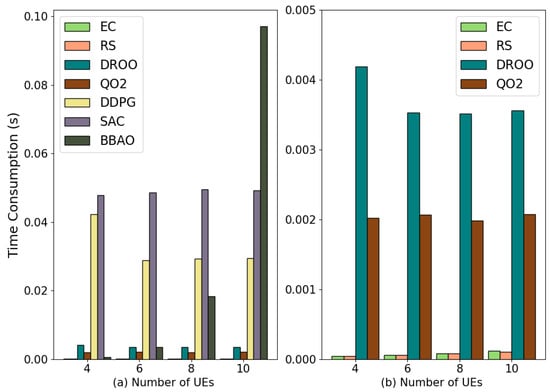

Figure 11 illustrates the time consumption of each algorithm under varying numbers of UEs, presented in two sub-graphs for clarity. Figure 11b is a locally enlarged version of Figure 11a. In Figure 11a, we find that as the number of UEs increases, the time consumption of BBAO escalates dramatically, while SAC and DDPG exhibit more moderate growth but still require substantially more time than the other algorithms. Figure 11b indicates that although QO2’s time consumption is slightly higher than that of EC and RS, it remains within a controllable range. Moreover, the time consumption of QO2 is lower than that of DROO, demonstrating its superior computational efficiency.

Figure 11.

Comparison of system computation time consumption versus UE number.

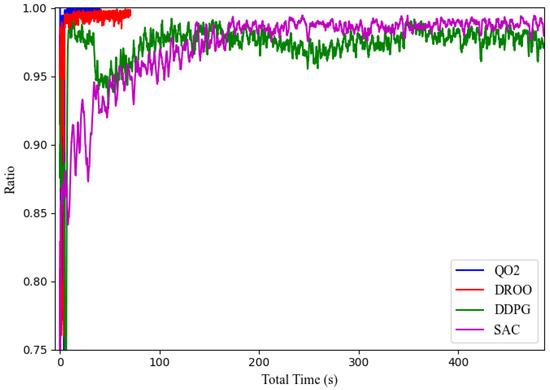

To further demonstrate the computational efficiency advantage of QO2, Figure 12 compares the convergence performance of four algorithms (QO2, DROO, DDPG, and SAC) versus total computation time. For fair comparison, the 10,000 iteration cycles are converted into their corresponding wall-clock time consumption. The results in Figure 12 reveal that QO2 exhibits remarkably rapid convergence, achieving a ratio of approximately 0.99 within merely 10 s. In contrast, DDPG and SAC require approximately 100 and 200 s, respectively, representing 10× and 20× longer computation times to attain comparable performance. Although DROO demonstrates faster initial convergence, its overall time consumption remains similar to that of QO2. These results confirm that QO2 not only achieves near-optimal performance but also demonstrates superior timeliness, making it particularly well-suited for time-sensitive edge-computing scenarios where rapid decision-making is critical.

Figure 12.

Comparison of ratio versus total time consumption.

As shown in Figure 10, Figure 11 and Figure 12, the proposed QO2 algorithm demonstrates computational efficiency suitable for quasi-static to moderately dynamic channel conditions, achieving high-quality approximate solutions through adaptive early stopping. Deployment feasibility in highly dynamic scenarios depends on hardware capabilities and application-specific latency requirements.

6. Conclusions and Future Work

This paper addressed the offloading timeliness in UAV-EC systems. By leveraging deep neural networks, we proposed a novel QO2 algorithm, optimizing offloading decisions, transmit power, and resource allocation to improve system performance. Furthermore, we designed a PPS strategy to enhance the robustness of QO2. Finally, simulation results validated that QO2 could achieve fast convergence and reduce re-convergence time.

While QO2 demonstrates outstanding performance, several limitations remain. (1) The DNN architecture is designed for a fixed number of UEs K. When K changes, the network requires retraining, which limits its deployment flexibility. Promising solutions include permutation-invariant architectures, meta-learning techniques, and transfer learning. (2) Performance validation relies solely on simulation-based analysis rather than empirical measurements under real channel dynamics. Future work should incorporate hardware acceleration and real-world testbeds to validate real-time performance under practical channel coherence time constraints and diverse mobility scenarios. (3) This work focuses on single-UAV deployments to establish algorithmic fundamentals. While the core QO2 framework could potentially extend to multi-UAV scenarios, such extensions introduce additional algorithmic challenges, including user association, inter-UAV coordination, and distributed decision-making architectures.

Author Contributions

Conceptualization, Y.W.; Data curation, Z.Q.; Formal analysis, Y.W.; Funding acquisition, Y.W.; Investigation, Z.Q.; Methodology, Y.W.; Project administration, Y.W.; Resources, Y.W.; Software, Z.Q.; Supervision, Y.W.; Validation, Z.Q.; Visualization, Z.Q.; Writing—original draft, Y.W. and Y.Z. (Yuhang Zhang); Writing—review and editing, Y.Z. (Yuhang Zhang), Y.Z. (Yubo Zhao) and H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the National Natural Science Foundation of China under Grant 62002293, in part by Natural Science Basic Research Program of Shaanxi (Program No. 2025JC-YBQN-794), in part by the China Postdoctoral Science Foundation under Grant BX20200280.

Data Availability Statement

The data from this study are presented in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Han Zhang was employed by the company China Unicom Research Institute. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| UAV-EC | Unmanned Aerial Vehicle-assisted Edge Computing |
| UE | User Equipment |
| MINLP | Mixed-Integer Nonlinear Programming |
| QoE | Quality of Experience |
| QO2 | QoE-aware Online Offloading |
| ISP | Internet Service Provider |
| DAG | Directed Acyclic Graph |
| TDMA | Time Division Multiple Access |
| FDMA | Frequency Division Multiple Access |
| NOMA | Non-Orthogonal Multiple Access |
| D2D | Device-to-Device |
| MEC | Mobile Edge Computing |
| RIS | Reconfigurable Intelligent Surface |
| MIP | Mixed-Integer Programming |
| DNN | Deep Neural Network |
| SCA | Successive Convex Approximation |
| DQN | Deep Q-Network |
| DDQN | Double Deep Q-Network |
| DDPG | Deep Deterministic Policy Gradient |
| SAC | Soft Actor–Critic |
| DROO | Deep Reinforcement learning-based Online Offloading |
| IOCO | Intelligent Online Computation Offloading |
| ES | Edge Server |
| BPC | Bisection-based Power Control |
| BBAO | Branch-and-Bound-Aided Offloading |
| PPS | Priority-Based Proportional Sampling |
| PRS | Probability-first Random Sampling |
| PS | Priority Sampling |
| RS | Random Sampling |

Appendix A

Proof.

The first-order derivative of is:

The denominator is always positive. Thus, the sign of is determined by the numerator, which is defined as in Equation (15). The first-order derivative of is:

Since all parameters are positive, throughout , establishing that is strictly monotonically increasing. In addition, the left boundary satisfies . Since is strictly increasing from a negative value, we consider two exhaustive cases:

Case 1: If , then for all and . At Step 1, throughout, meaning f is strictly decreasing on . The minimum is attained at .

Case 2: If , then by the intermediate value theorem applied to the continuous, strictly increasing function , there exists a unique such that , equivalently . For : ; for : (f is strictly increasing); thus, is the unique global minimizer of f on . □

Appendix B

Proof.

To establish that P4 is a convex optimization problem, we prove that (1) the objective function is convex and (2) the feasible region is a convex set.

- Convexity of objective function: Consider the objective , where and . For each term , the second derivative is , which proves that is convex. For the multivariate function , the Hessian matrix is: Since all diagonal entries are non-negative, , which proves that is convex.

- Convexity of feasible region: The feasible region is defined by constraints C4 and C5. For C4: , each constraint defines a half-space, which is a convex set. The intersection of all such half-spaces, , is convex. For C5: , this is a linear inequality constraint of the form , where . Linear functions are both convex and concave, and the sublevel set is a half-space, which is convex. The feasible region is the intersection of convex sets (the non-negative orthant and a half-space). Since the intersection of convex sets is convex, is a convex set.

Since the objective function is strictly convex over the domain , and the feasible region is a convex set, P4 is a convex optimization problem. □

References

- Mu, J.; Zhang, R.; Cui, Y.; Li, J.; Wang, J.; Shi, Y. UAV Meets Integrated Sensing and Communication: Challenges and Future Directions. IEEE Commun. Mag. 2023, 61, 62–67.

- Tang, J.; Nie, J.; Zhao, J.; Zhou, Y.; Xiong, Z.; Guizani, M. Slicing-Based Software-Defined Mobile Edge Computing in the Air. IEEE Wirel. Commun. 2022, 29, 119–125.

- Alamouti, S.M.; Arjomandi, F.; Burger, M. Hybrid Edge Cloud: A Pragmatic Approach for Decentralized Cloud Computing. IEEE Commun. Mag. 2022, 60, 16–29.

- Liu, B.; Wan, Y.; Zhou, F.; Wu, Q.; Hu, R.Q. Resource Allocation and Trajectory Design for MISO UAV-Assisted MEC Networks. IEEE Trans. Veh. Technol. 2022, 71, 4933–4948.

- Sun, G.; Wang, Y.; Sun, Z.; Wu, Q.; Kang, J.; Niyato, D.; Leung, V.C.M. Multi-Objective Optimization for Multi-UAV-Assisted Mobile Edge Computing. IEEE Trans. Mob. Comput. 2024, 23, 14803–14820.

- Bai, Z.; Lin, Y.; Cao, Y.; Wang, W. Delay-Aware Cooperative Task Offloading for Multi-UAV Enabled Edge-Cloud Computing. IEEE Trans. Mob. Comput. 2022, 23, 1034–1049.

- Shen, L. User Experience Oriented Task Computation for UAV-Assisted MEC System. In Proceedings of the IEEE INFOCOM 2022 - IEEE Conference on Computer Communications, London, UK, 2–5 May 2022; pp. 1549–1558.

- Sun, Z.; Sun, G.; He, L.; Mei, F.; Liang, S.; Liu, Y. A Two Time-Scale Joint Optimization Approach for UAV-Assisted MEC. In Proceedings of the IEEE INFOCOM 2024 - IEEE Conference on Computer Communications, Vancouver, BC, Canada, 20–23 May 2024; pp. 91–100.

- Sun, G.; He, L.; Sun, Z.; Wu, Q.; Liang, S.; Li, J.; Niyato, D.; Leung, V.C.M. Joint Task Offloading and Resource Allocation in Aerial-Terrestrial UAV Networks with Edge and Fog Computing for Post-Disaster Rescue. IEEE Trans. Mob. Comput. 2024, 23, 8582–8600.

- Tian, J.; Wang, D.; Zhang, H.; Wu, D. Service Satisfaction-Oriented Task Offloading and UAV Scheduling in UAV-Enabled MEC Networks. IEEE Trans. Wirel. Commun. 2023, 22, 8949–8964.

- Chai, F.; Zhang, Q.; Yao, H.; Xin, X.; Gao, R.; Guizani, M. Joint Multi-Task Offloading and Resource Allocation for Mobile Edge Computing Systems in Satellite IoT. IEEE Trans. Veh. Technol. 2023, 72, 7783–7795.

- Chen, Y.; Liu, M.; Ai, B.; Wang, Y.; Sun, S. Adaptive Bitrate Video Caching in UAV-Assisted MEC Networks Based on Distributionally Robust Optimization. IEEE Trans. Mob. Comput. 2023, 23, 5245–5259.

- Wang, H.; Jiang, B.; Zhao, H.; Zhang, J.; Zhou, L.; Ma, D.; Wei, J.; Leung, V.C.M. Joint Resource Allocation on Slot, Space and Power Towards Concurrent Transmissions in UAV Ad Hoc Networks. IEEE Trans. Wirel. Commun. 2022, 21, 8698–8712.

- Dai, M.; Wu, Y.; Qian, L.; Su, Z.; Lin, B.; Chen, N. UAV-Assisted Multi-Access Computation Offloading via Hybrid NOMA and FDMA in Marine Networks. IEEE Trans. Netw. Sci. Eng. 2022, 10, 113–127.

- Lu, W.; Ding, Y.; Gao, Y.; Chen, Y.; Zhao, N.; Ding, Z.; Nallanathan, A. Secure NOMA-Based UAV-MEC Network Towards a Flying Eavesdropper. IEEE Trans. Commun. 2022, 70, 3364–3376.

- Yang, D.; Zhan, C.; Yang, Y.; Yan, H.; Liu, W. Integrating UAVs and D2D Communication for MEC Network: A Collaborative Approach to Caching and Computation. IEEE Trans. Veh. Technol. 2025, 74, 10041–10046.

- Yang, Z.; Xia, L.; Cui, J.; Dong, Z.; Ding, Z. Delay and Energy Minimization for Cooperative NOMA-MEC Networks with SWIPT Aided by RIS. IEEE Trans. Veh. Technol. 2023, 73, 5321–5334.

- Wang, K.; Fang, F.; da Costa, D.B.; Ding, Z. Sub-Channel Scheduling, Task Assignment, and Power Allocation for OMA-Based and NOMA-Based MEC Systems. IEEE Trans. Commun. 2021, 69, 2692–2708.

- Wang, K.; Ding, Z.; So, D.K.C.; Karagiannidis, G.K. Stackelberg Game of Energy Consumption and Latency in MEC Systems With NOMA. IEEE Trans. Commun. 2021, 69, 2191–2206.

- Zhang, Y.; Di, B.; Zheng, Z.; Lin, J.; Song, L. Distributed Multi-Cloud Multi-Access Edge Computing by Multi-Agent Reinforcement Learning. IEEE Trans. Wirel. Commun. 2021, 20, 2565–2578.

- Guo, K.; Gao, R.; Xia, W.; Quek, T.Q.S. Online Learning Based Computation Offloading in MEC Systems With Communication and Computation Dynamics. IEEE Trans. Commun. 2021, 69, 1147–1162.

- Wang, C.; Zhai, D.; Zhang, R.; Li, H.; Yu, F.R. Latency Minimization for UAV-Assisted MEC Networks With Blockchain. IEEE Trans. Commun. 2024, 72, 6854–6866.

- Sun, Y.; Xu, J.; Cui, S. User Association and Resource Allocation for MEC-Enabled IoT Networks. IEEE Trans. Wirel. Commun. 2022, 21, 8051–8062.

- Maraqa, O.; Al-Ahmadi, S.; Rajasekaran, A.S.; Sokun, H.U.; Yanikomeroglu, H.; Sait, S.M. Energy-Efficient Optimization of Multi-User NOMA-Assisted Cooperative THz-SIMO MEC Systems. IEEE Trans. Commun. 2023, 71, 3763–3779.

- Mao, W.; Xiong, K.; Lu, Y.; Fan, P.; Ding, Z. Energy Consumption Minimization in Secure Multi-Antenna UAV-Assisted MEC Networks With Channel Uncertainty. IEEE Trans. Wirel. Commun. 2023, 22, 7185–7200.

- Ernest, T.Z.H.; Madhukumar, A.S. Computation Offloading in MEC-Enabled IoV Networks: Average Energy Efficiency Analysis and Learning-Based Maximization. IEEE Trans. Mob. Comput. 2024, 23, 6074–6087.

- Wang, R.; Huang, Y.; Lu, Y.; Xie, P.; Wu, Q. Robust Task Offloading and Trajectory Optimization for UAV-Mounted Mobile Edge Computing. Drones 2024, 8, 757.

- Liu, X.; Deng, Y. Learning-Based Prediction, Rendering and Association Optimization for MEC-Enabled Wireless Virtual Reality (VR) Networks. IEEE Trans. Wirel. Commun. 2021, 20, 6356–6370.

- Chu, W.; Jia, X.; Yu, Z.; Lui, J.C.S.; Lin, Y. Joint Service Caching, Resource Allocation and Task Offloading for MEC-Based Networks: A Multi-Layer Optimization Approach. IEEE Trans. Mob. Comput. 2024, 23, 2958–2975.

- Michailidis, E.T.; Volakaki, M.G.; Miridakis, N.I.; Vouyioukas, D. Optimization of Secure Computation Efficiency in UAV-Enabled RIS-Assisted MEC-IoT Networks with Aerial and Ground Eavesdroppers. IEEE Trans. Commun. 2024, 72, 3994–4009.

- Zhang, Y.; Kuang, Z.; Feng, Y.; Hou, F. Task Offloading and Trajectory Optimization for Secure Communications in Dynamic User Multi-UAV MEC Systems. IEEE Trans. Mob. Comput. 2024, 23, 14427–14440.

- Lu, F.; Liu, G.; Lu, W.; Gao, Y.; Cao, J.; Zhao, N. Resource and Trajectory Optimization for UAV-Relay-Assisted Secure Maritime MEC. IEEE Trans. Commun. 2024, 72, 1641–1652.

- Chen, Z.; Tang, J.; Wen, M.; Li, Z.; Yang, J.; Zhang, X. Reconfigurable Intelligent Surface Assisted MEC Offloading in NOMA-Enabled IoT Networks. IEEE Trans. Commun. 2023, 71, 4896–4908.

- Fang, K.; Ouyang, Y.; Zheng, B.; Huang, L.; Wang, G.; Chen, Z. Security Enhancement for RIS-Aided MEC Systems with Deep Reinforcement Learning. IEEE Trans. Commun. 2025, 73, 2466–2479.

- Wu, Z.; Zhang, H.; Liu, X.; Li, L.; Li, H. IRS Empowered MEC System With Computation Offloading, Reflecting Design, and Beamforming Optimization. IEEE Trans. Commun. 2024, 72, 3051–3063.

- Hu, X.; Zhao, H.; Zhang, W.; He, D. Online Resource Allocation and Trajectory Optimization of STAR–RIS–Assisted UAV–MEC System. Drones 2025, 9, 207.

- Hao, X.; Yeoh, P.L.; She, C.; Vucetic, B.; Li, Y. Secure Deep Reinforcement Learning for Dynamic Resource Allocation in Wireless MEC Networks. IEEE Trans. Commun. 2024, 72, 1414–1427.

- Xu, Y.; Zhang, H.; Ji, H.; Yang, L.; Li, X.; Leung, V.C.M. Transaction Throughput Optimization for Integrated Blockchain and MEC System in IoT. IEEE Trans. Wirel. Commun. 2022, 21, 1022–1036.

- Basharat, M.; Naeem, M.; Khattak, A.M.; Anpalagan, A. Digital Twin-Assisted Task Offloading in UAV-MEC Networks With Energy Harvesting for IoT Devices. IEEE Internet Things J. 2024, 11, 37550–37561.

- Ding, Y.; Han, H.; Lu, W.; Wang, Y.; Zhao, N.; Wang, X.; Yang, X. DDQN-Based Trajectory and Resource Optimization for UAV-Aided MEC Secure Communications. IEEE Trans. Veh. Technol. 2023, 73, 6006–6011.

- Liu, C.; Zhong, Y.; Wu, R.; Ren, S.; Du, S.; Guo, B. Deep Reinforcement Learning Based 3D-Trajectory Design and Task Offloading in UAV-Enabled MEC System. IEEE Trans. Veh. Technol. 2025, 74, 3185–3195.

- Lin, N.; Tang, H.; Zhao, L.; Wan, S.; Hawbani, A.; Guizani, M. A PDDQNLP Algorithm for Energy Efficient Computation Offloading in UAV-Assisted MEC. IEEE Trans. Wirel. Commun. 2023, 22, 8876–8890. [Google Scholar] [CrossRef]

- Du, T.; Gui, X.; Teng, X.; Zhang, K.; Ren, D. Dynamic Trajectory Design and Bandwidth Adjustment for Energy-Efficient UAV-Assisted Relaying with Deep Reinforcement Learning in MEC IoT System. IEEE Internet Things J. 2024, 11, 37463–37479. [Google Scholar] [CrossRef]

- Ale, L.; King, S.A.; Zhang, N.; Sattar, A.R.; Skandaraniyam, J. D3PG: Dirichlet DDPG for Task Partitioning and Offloading with Constrained Hybrid Action Space in Mobile-Edge Computing. IEEE Internet Things J. 2022, 9, 19260–19272. [Google Scholar] [CrossRef]

- Wang, B.; Kang, H.; Li, J.; Sun, G.; Sun, Z.; Wang, J.; Niyato, D. UAV-Assisted Joint Mobile Edge Computing and Data Collection via Matching-Enabled Deep Reinforcement Learning. IEEE Internet Things J. 2025, 12, 19782–19800. [Google Scholar] [CrossRef]

- Goudarzi, S.; Soleymani, S.A.; Anisi, M.H.; Jindal, A.; Xiao, P. Optimizing UAV-Assisted Vehicular Edge Computing with Age of Information: A SAC-Based Solution. IEEE Internet Things J. 2025, 12, 4555–4569. [Google Scholar] [CrossRef]

- Li, D.; Du, B.; Bai, Z. Deep Reinforcement Learning-Enabled Trajectory and Bandwidth Allocation Optimization for UAV-Assisted Integrated Sensing and Covert Communication. Drones 2025, 9, 160. [Google Scholar] [CrossRef]

- Bai, Y.; Xie, B.; Liu, Y.; Chang, Z.; Jäntti, R. Dynamic UAV Deployment in Multi-UAV Wireless Networks: A Multi-Modal Feature-Based Deep Reinforcement Learning Approach. IEEE Internet Things J. 2025, 12, 18765–18778. [Google Scholar] [CrossRef]

- Wang, T.; Na, X.; Nie, Y.; Liu, J.; Wang, W.; Meng, Z. Parallel Task Offloading and Trajectory Optimization for UAV-Assisted Mobile Edge Computing via Hierarchical Reinforcement Learning. Drones 2025, 9, 358. [Google Scholar] [CrossRef]

- Huang, L.; Bi, S.; Zhang, Y.-J.A. Deep Reinforcement Learning for Online Computation Offloading in Wireless Powered Mobile-Edge Computing Networks. IEEE Trans. Mobile Comput. 2019, 19, 2581–2593. [Google Scholar] [CrossRef]

- Wang, Y.; Qian, Z.; He, L.; Yin, R.; Wu, C. Intelligent Online Computation Offloading for Wireless-Powered Mobile-Edge Computing. IEEE Internet Things J. 2024, 11, 28960–28974. [Google Scholar] [CrossRef]

- Wang, Y.; Sheng, M.; Wang, X.; Wang, L.; Li, J. Mobile-Edge Computing: Partial Computation Offloading Using Dynamic Voltage Scaling. IEEE Trans. Commun. 2016, 64, 4268–4282. [Google Scholar] [CrossRef]

- Sheng, M.; Wang, Y.; Wang, X.; Li, J. Energy-Efficient Multiuser Partial Computation Offloading with Collaboration of Terminals, Radio Access Network, and Edge Server. IEEE Trans. Commun. 2020, 68, 1524–1537. [Google Scholar] [CrossRef]

- Boyd, S.P.; Vandenberghe, L. Convex Optimization, 1st ed.; Cambridge University Press: Cambridge, UK, 2004; pp. 136–146. [Google Scholar]

- Liu, J.; Zhang, X.; Zhou, H.; Xia, L.; Li, H.; Wang, X. Lyapunov-Based Deep Deterministic Policy Gradient for Energy-Efficient Task Offloading in UAV-Assisted MEC. Drones 2025, 9, 653. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).