Designing a User-Centered Interaction Interface for Human–Swarm Teaming

Abstract

1. Introduction

- In a user study with 100 participants, we evaluate the effect of different visualization techniques on the usability of a human–swarm interaction interface and report the results;

- The preferred visualization method is then used to build an interaction interface. This method reduces the number of visualizations that an operator has to use to control and monitor the swarm;

- We propose a human-in-the-loop collective decision-making method that governs the decisions of the human–swarm team. Our model is task-generic for human–swarm teaming (i.e., the operator is treated as an agent as well) and relies on proximal interactions that allow for state estimation and control;

- Through simulation, we demonstrate the effectiveness of the swarm in tracking an unfolding event (a fire spreading) through minimal interactions by a single operator.
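To make the idea of an operator who is "treated as an agent as well" more concrete, the following is a minimal sketch based on the classic voter model, not the decision-making model proposed in this paper (its details are not given in this excerpt). All names and parameters (`step`, `run`, `radius`, the opinions `"A"` and `"B"`, the operator position) are hypothetical. Each UAV copies the opinion of a randomly chosen neighbour within `radius`, and the operator is simply one more neighbour whose opinion never changes.

```python
import random


def step(opinions, positions, operator_pos, operator_opinion, radius, rng):
    """One asynchronous voter-model update; the operator is an extra, fixed-opinion agent."""
    i = rng.randrange(len(opinions))
    x, y = positions[i]
    # Proximal interaction: only UAVs within `radius` of agent i can be copied.
    neighbours = [
        opinions[j]
        for j, (px, py) in enumerate(positions)
        if j != i and (px - x) ** 2 + (py - y) ** 2 <= radius ** 2
    ]
    # The operator counts as a neighbour only when it is close enough.
    ox, oy = operator_pos
    if (ox - x) ** 2 + (oy - y) ** 2 <= radius ** 2:
        neighbours.append(operator_opinion)
    if neighbours:
        opinions[i] = rng.choice(neighbours)


def run(n_agents=10, steps=20000, radius=2.0, seed=0):
    """Simulate the toy model in a unit square and return the final opinions."""
    rng = random.Random(seed)
    positions = [(rng.random(), rng.random()) for _ in range(n_agents)]
    opinions = [rng.choice("AB") for _ in range(n_agents)]
    for _ in range(steps):
        # Hypothetical operator: placed at the centre, always holding opinion "A".
        step(opinions, positions, (0.5, 0.5), "A", radius, rng)
    return opinions


if __name__ == "__main__":
    print(run())
```

With the default `radius` every agent can reach every other agent and the operator, so the only absorbing state of this toy model is consensus on the operator's opinion. A smaller `radius` limits the operator's influence to nearby UAVs, which is the sense in which the interaction is proximal here.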

2. User Evaluation of Human–Swarm Visualization Methods

2.1. Design and Procedure

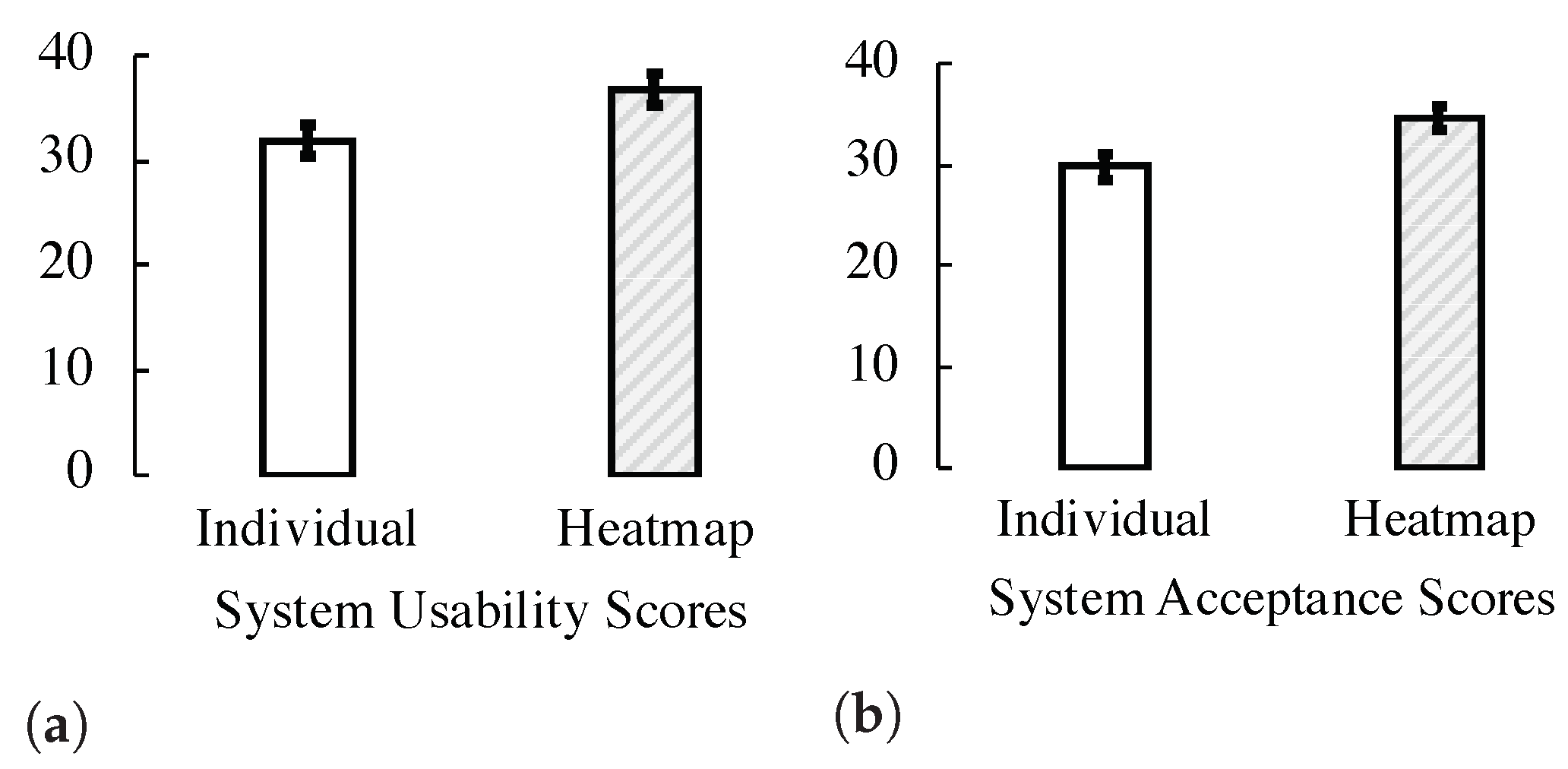

2.2. Results

2.3. Conclusions

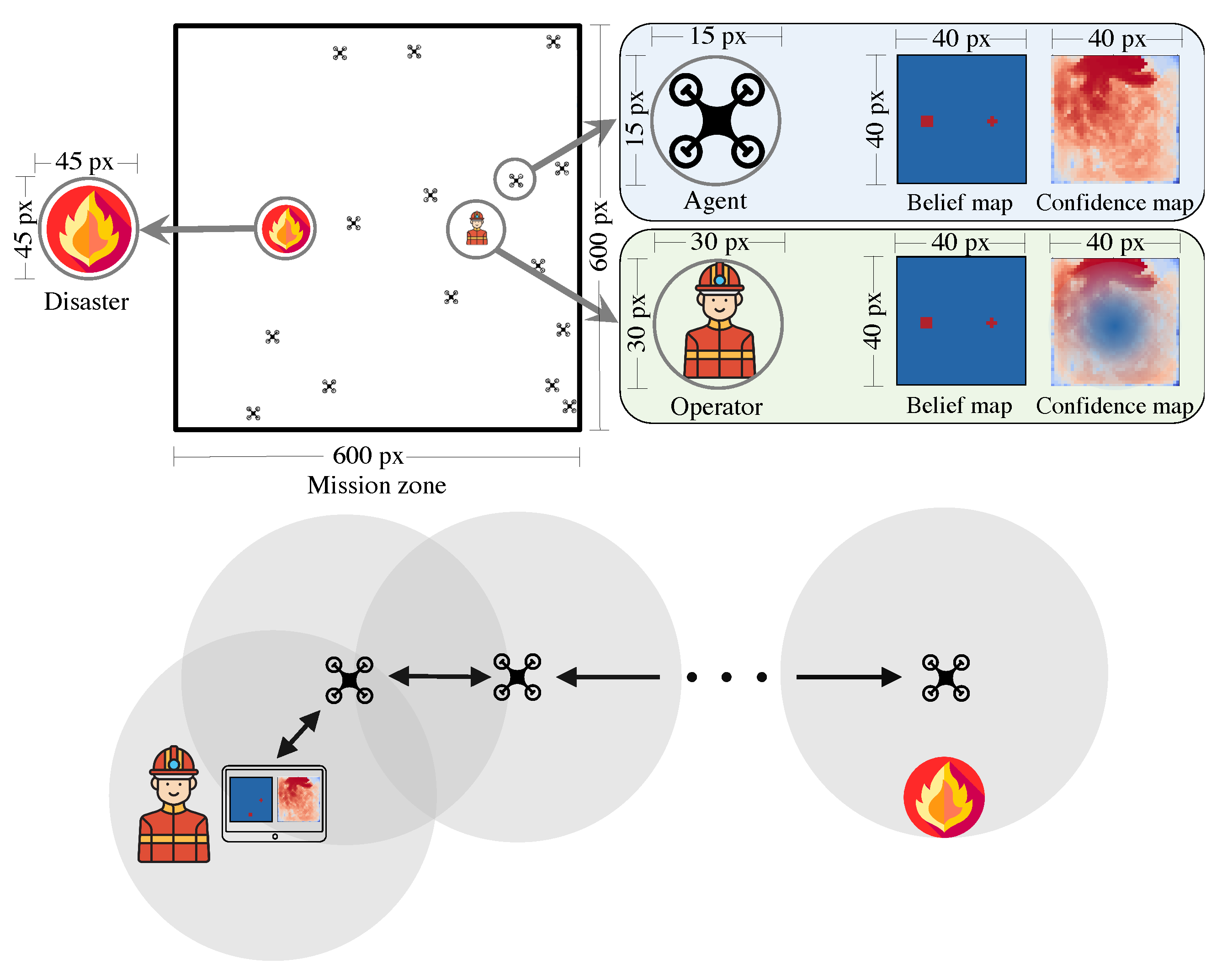

3. Human–Swarm Teaming Model

3.1. UAV Model

3.2. Operator and Swarm Interactions

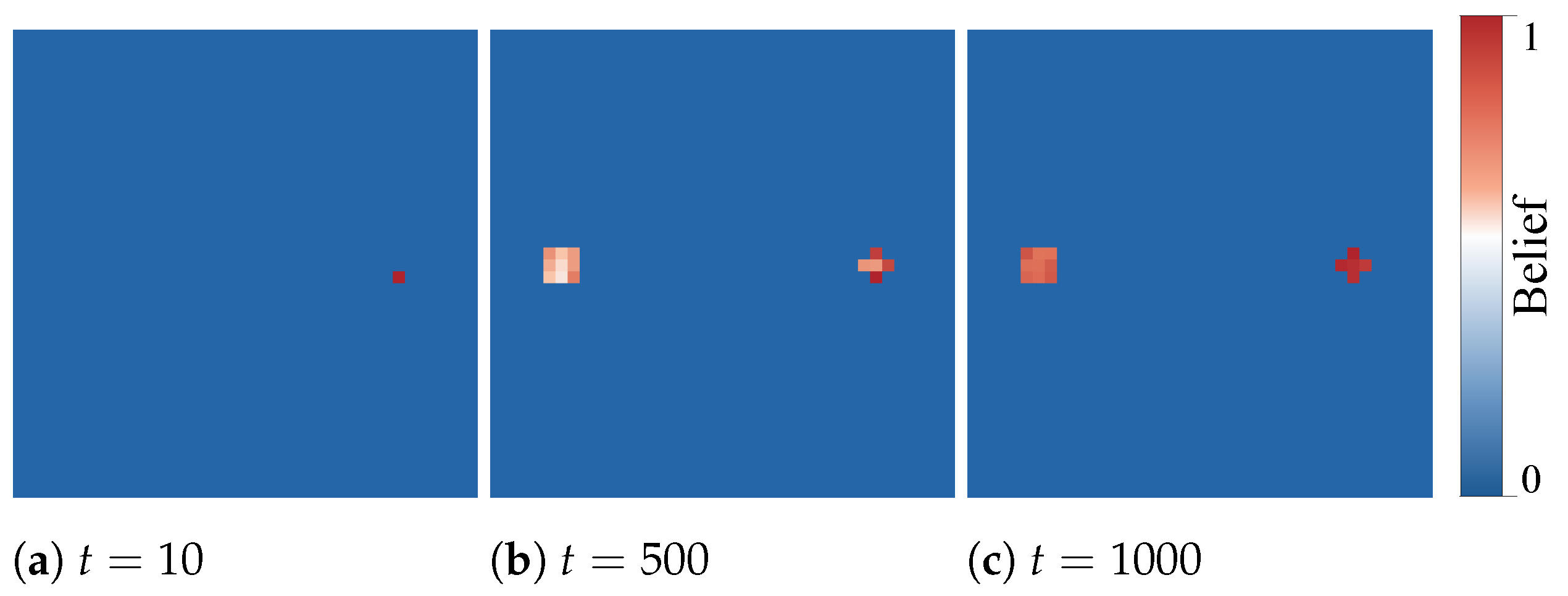

3.2.1. The Belief Map

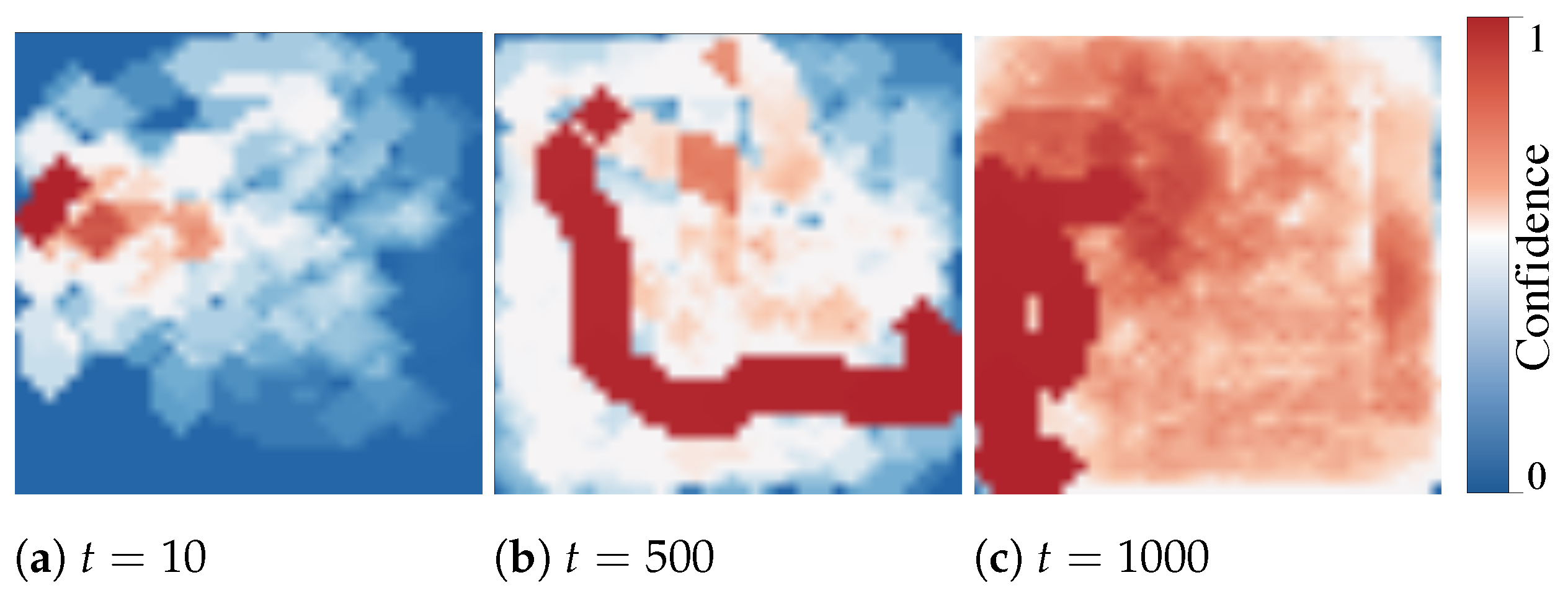

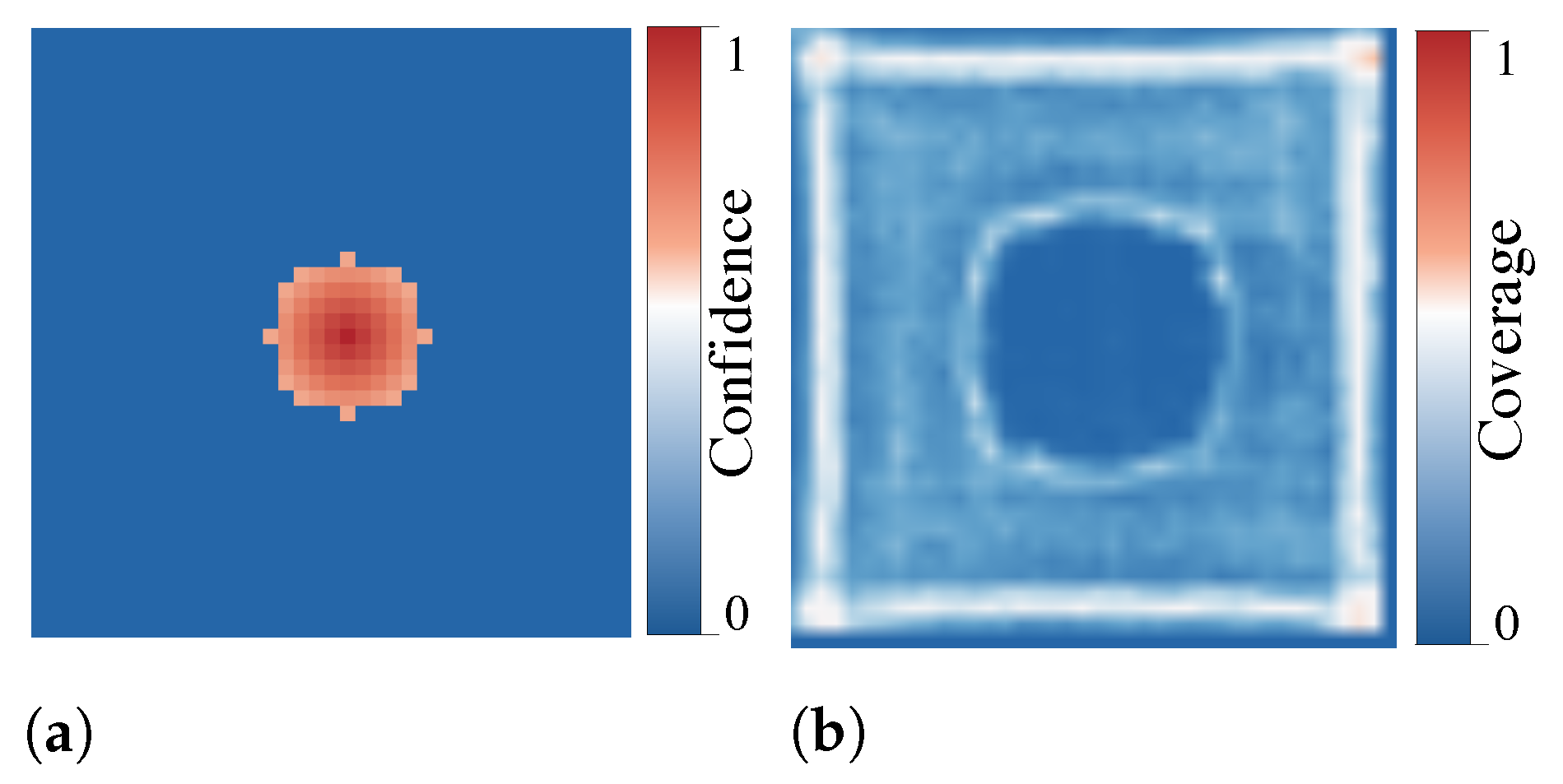

3.2.2. The Confidence Map

3.3. Human–Swarm Collective Decision-Making Model

3.4. Swarm’s Path Planning

4. Simulation Platform

Belief and Confidence Maps Setup

5. Empirical Evaluation

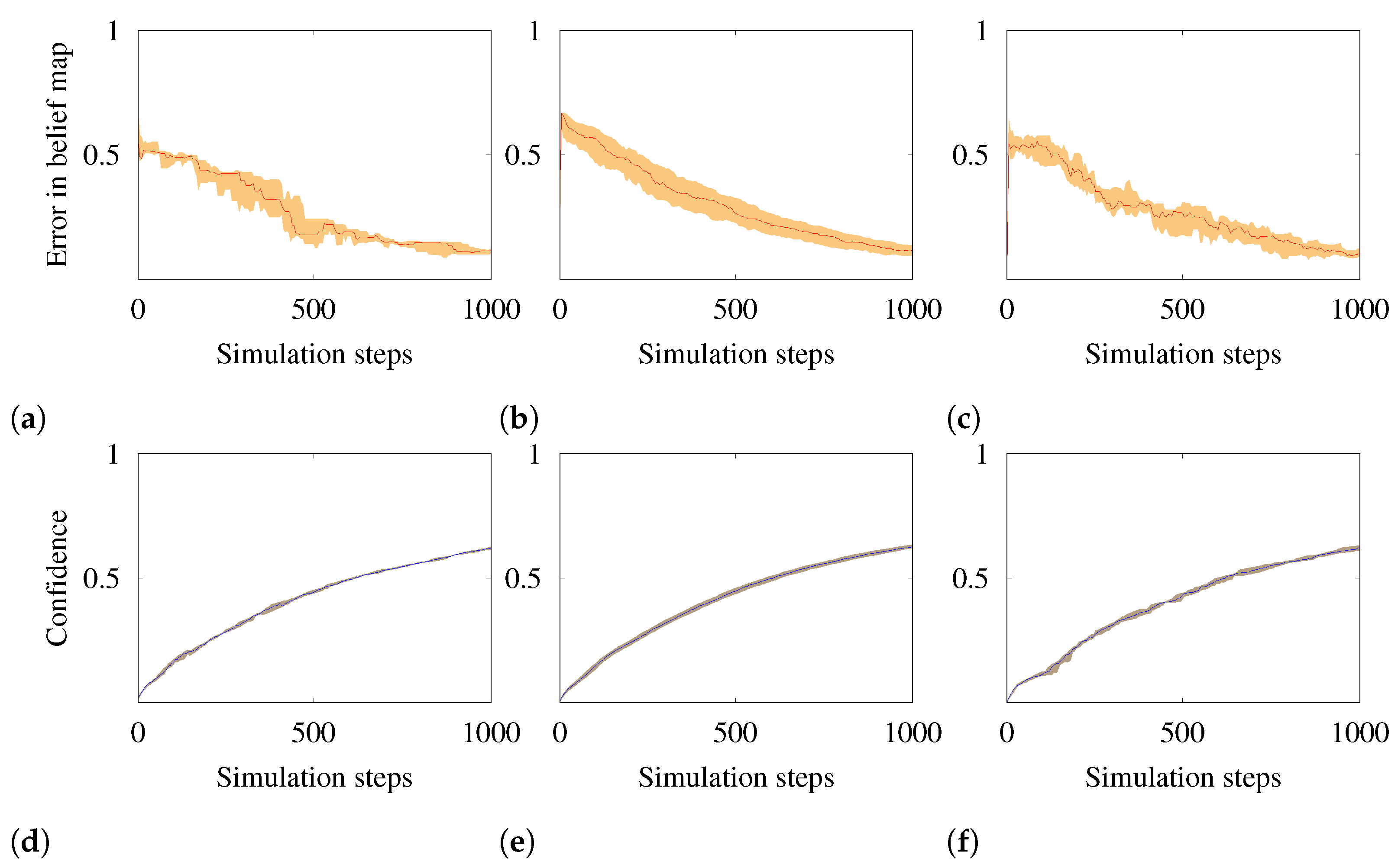

5.1. Experiment I: Autonomous Exploration

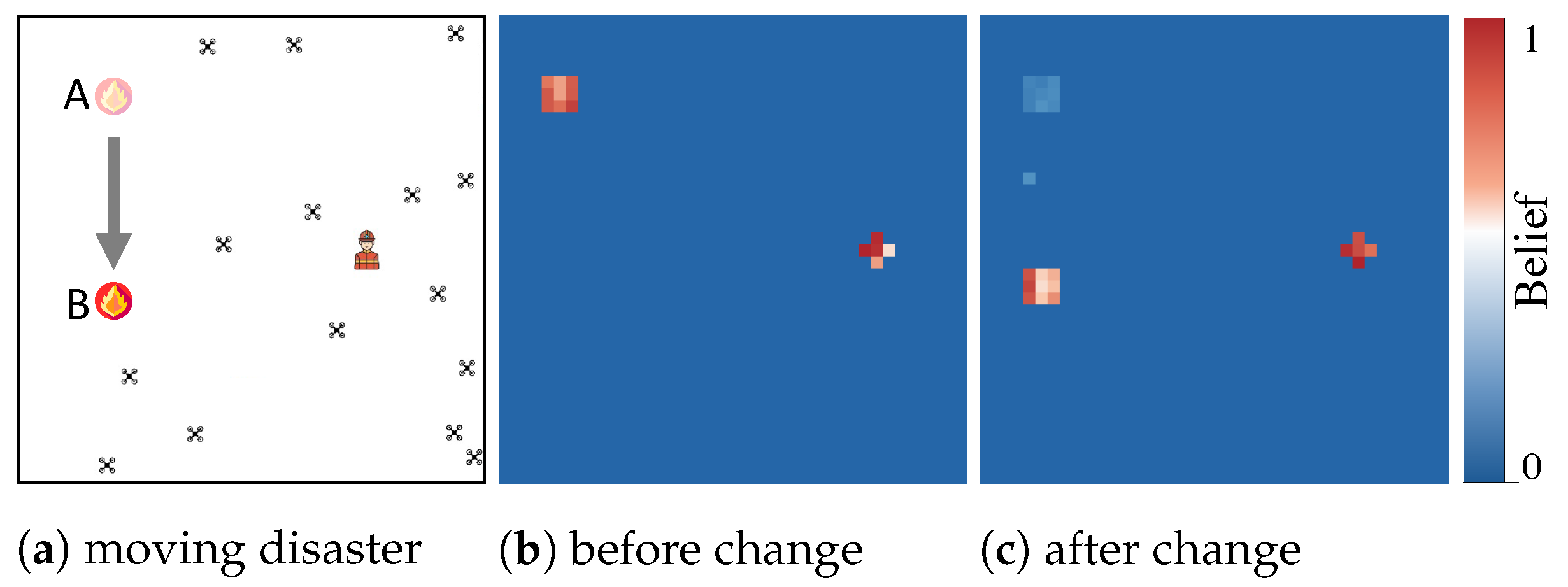

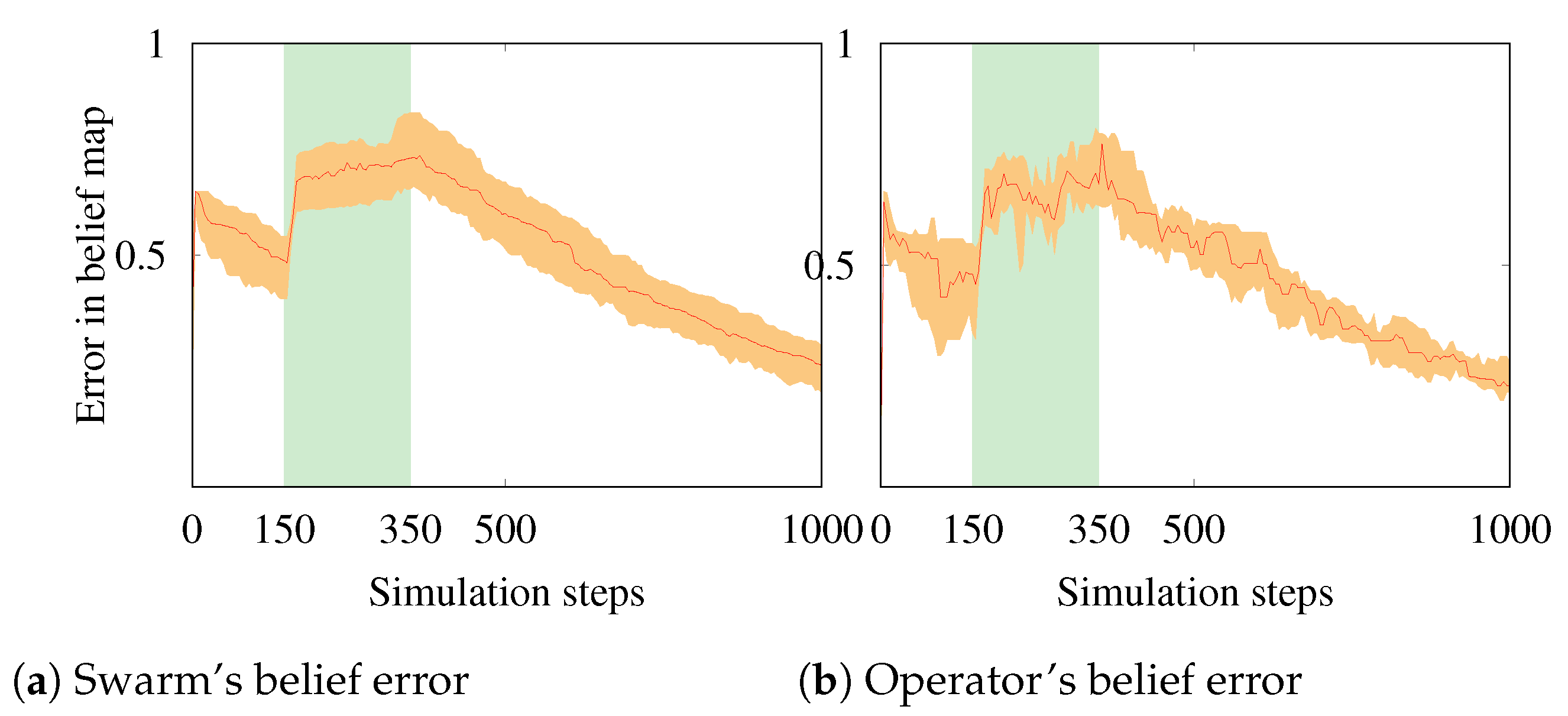

5.2. Experiment II: Response to Evolving Disaster

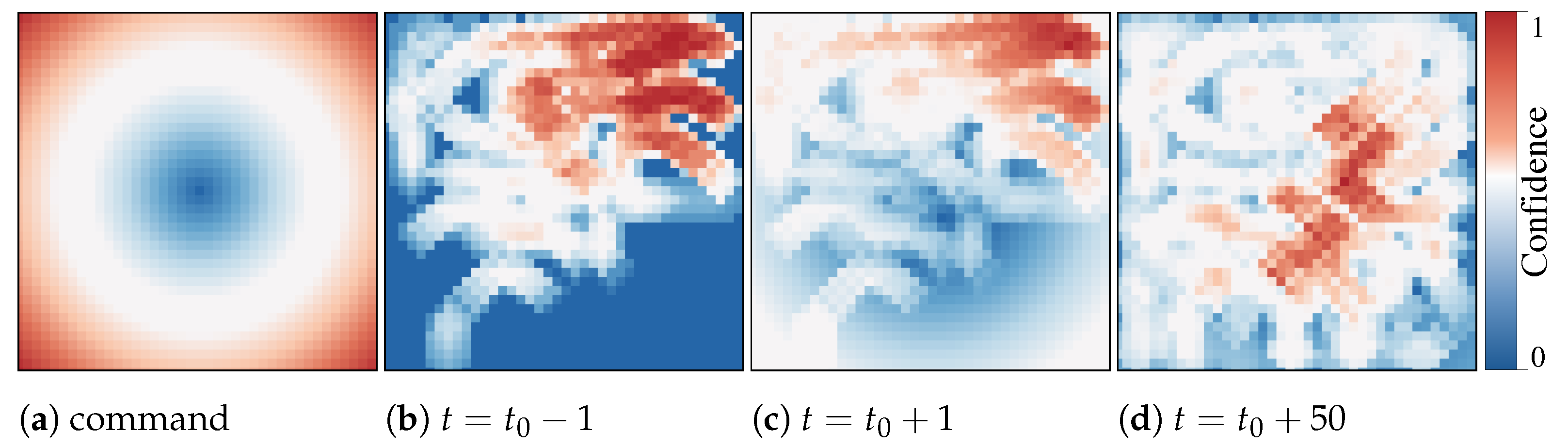

5.3. Experiment III: Human Interaction

5.4. Experiment IV: Human–Swarm Interaction User Study

6. Discussion and Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Contextual Factor | Individual | Heat Map | p |
|---|---|---|---|
| Larger swarm size | 21 | 79 | * |
| Communication constrained | 43 | 57 | |
| Displaying motion and coverage | 31 | 69 | * |
| Time critical | 28 | 72 | * |
| Time non-critical | 43 | 57 | |
| Detecting errors | 74 | 26 | * |
| Transparency | 44 | 56 | |
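
The asterisks in the p column can be illustrated with a short calculation. The sketch below assumes that each row holds preference counts from the same 100 participants and tests them against an equal 50/50 split with a chi-square goodness-of-fit test (one degree of freedom) at an uncorrected threshold of 0.05. The test actually used in the study, and any correction for multiple comparisons, are not stated in this excerpt, so the function names and the threshold are illustrative assumptions.

```python
import math

# Preference counts copied from the table: (Individual, Heat Map).
# Assumption: each row holds counts from the same 100 participants.
PREFERENCES = {
    "Larger swarm size": (21, 79),
    "Communication constrained": (43, 57),
    "Displaying motion and coverage": (31, 69),
    "Time critical": (28, 72),
    "Time non-critical": (43, 57),
    "Detecting errors": (74, 26),
    "Transparency": (44, 56),
}


def chi_square_equal_split(a, b):
    """Goodness-of-fit statistic and p-value against a 50/50 split (1 d.o.f.)."""
    expected = (a + b) / 2
    stat = ((a - expected) ** 2 + (b - expected) ** 2) / expected
    # With one degree of freedom the chi-square survival function
    # reduces to erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


def significant_factors(alpha=0.05):
    """Names of the rows whose p-value falls below alpha (an assumed threshold)."""
    return [
        name
        for name, (a, b) in PREFERENCES.items()
        if chi_square_equal_split(a, b)[1] < alpha
    ]


if __name__ == "__main__":
    for name, (a, b) in PREFERENCES.items():
        stat, p = chi_square_equal_split(a, b)
        print(f"{name:32s} chi2 = {stat:6.2f}  p = {p:.4f}")
```

Under these assumptions, the rows that fall below 0.05 are the same four that carry an asterisk in the table. This agreement is consistent with, but does not establish, the procedure used in the study.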


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Divband Soorati, M.; Clark, J.; Ghofrani, J.; Tarapore, D.; Ramchurn, S.D. Designing a User-Centered Interaction Interface for Human–Swarm Teaming. Drones 2021, 5, 131. https://doi.org/10.3390/drones5040131
