Abstract

Digital skills should be incorporated into the curriculum because they are essential attributes that graduates should acquire before joining the workforce. Understanding the digital skills framework and learners’ abilities may help educators create suitable learning outcomes, activities, and assessments that enable learners to acquire these skills. This study shares expert judgements on a digital skills instrument (DSI), using a content validation framework based on sufficiency, clarity, coherence, and relevance. A pre-test involving 218 students from various disciplines was conducted, and Cronbach’s Alpha was used to assess the internal consistency reliability of the DSI. The overall result indicated strong reliability. Clarity was the characteristic with the highest value, followed by coherence, relevance, and sufficiency. Following the experts’ feedback, the DSI was revised and 28 items encompassing five components were proposed: information literacy, computer and technology literacy, digital communication/collaboration skills, digital identity and well-being, and digital ethics. Based on the pre-test, all the components indicated high reliability, ranging from 0.847 to 0.958, and all of the items were retained to measure digital skills. Overall, the experts’ evaluations and the pre-test suggested that the DSI and its five components, as the digital skills framework, can be used for measuring digital skills.

1. Introduction

1.1. Digital Skills Proficiency: Influencing Factors

Proficient digital skills are important in the modern era, as technology has become a necessity for keeping the world functioning smoothly. Consequently, there is concern about whether upcoming generations will be adequately equipped with digital knowledge. Digital literacy refers to the ability to locate, evaluate, and produce clear information across a range of media platforms [1]. Many factors influence one’s digital skills proficiency. Technical skills, critical understanding, and communicative abilities are among the factors listed [1], with critical understanding being the most important. Individuals who have strong critical understanding are able to undertake a more in-depth analysis and evaluation of the information that they find in the media [1]. Technical skills are defined by Dewi et al. [1] as “the ability to access and operate media”. As the definition implies, individuals possessing these skills are proficient in computer and internet use, the use of media, and even advanced use of the internet. Communicative ability is a further factor: individuals who have mastered it are able to interact with others and participate online, while also being able to produce media content. Students’ level of education also has an impact on the development of their digital skills [2]. Another factor, discussed by Van Deursen et al. [3], is age: a person’s age may have an impact on their digital competency. Beyond these individual factors, digital skills have facilitated the growth of big data analysis. Because decision-making based on big data analysis is now widespread, there is a compelling need to improve digital skills [4].

1.2. Digital Skills as Graduate Attributes in the Curriculum Framework

Digital skills are among the future skill sets required of all graduates in order to embrace challenges and stay relevant [5]. They provide the younger generation with the skills required in the 21st century, helping them to cope in an unfamiliar environment. Those who have these skills are therefore more employable, productive, and creative, and they are better placed to remain safe and secure in the new digital economy landscape. In other words, digital skills are needed as they encompass the ability to acquire and manage information through digital devices, communication applications, and networks [6]. The new skill set and the change in the education landscape also prompted the Malaysian Qualifications Agency (MQA) to revise the 2010 qualifications framework [7] so that it would emphasize the digital attribute. This was done to ensure that students at higher education institutions would be taught and trained in these skills, in addition to other skills such as numeracy, communication, interpersonal, and entrepreneurial skills. This new trajectory is aligned with the global agenda of preparing graduates to thrive in a volatile, uncertain, complex, and ambiguous (VUCA) environment. For this reason, since April 2019, the MQA has required all universities in Malaysia to comply with this new framework for their new curricula.

To meet this requirement, educators must not only expose their students to digital skills but also determine their students’ current levels of digital skills, so that they can design materials that equip the students with these skills. This is important, as it can help educators to develop appropriate assessments and approaches to achieve the intended learning outcomes for different disciplines or courses. Most importantly, the practice also helps them to understand their students better and to design a curriculum that meets their needs.

The Malaysian Qualifications Framework (MQF) has established a general definition and attributes as the minimal criteria for higher education providers to develop their curricula. The MQF defines digital skills as the ability to use information/digital technology to support work and studies. The skills include gathering and storing information, processing data, using applications for problem solving and communication, and using digital skills ethically or responsibly. Four components were extracted from the general definition: information literacy, computer and technology literacy, visual literacy, and digital communication/collaboration skills.

The focus of this paper is to seek validation from experts of the digital skills instrument that contains the four components, and to establish the reliability of the final items based on the pilot study. The aim is for the research outcome to guide curriculum developers and course designers in designing appropriate content knowledge, delivery activities, and assessments that equip students with skills aligned with both the MQA description and the aspiration of the Malaysian Government, which wishes to produce graduates with 21st-century skills.

2. Materials and Methods

This is a cross-sectional study that uses a quantitative method to construct a digital skills framework. It involves the development of questionnaire items, validation of the questionnaire, and testing of the questionnaire’s reliability. The validation of the questionnaire involves expert content validation. To determine the reliability of the questionnaire, internal consistency was analyzed using Cronbach’s Alpha.
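For reference, the standard textbook definition of Cronbach’s Alpha for a component with k items is sketched below. The symbols are assumptions introduced here for illustration and do not appear in the study: k is the number of items, σ²ᵢ is the sample variance of the scores on item i, and σ²ₓ is the sample variance of the respondents’ total scores.

```latex
\alpha \;=\; \frac{k}{k-1}\left(1 \;-\; \frac{\sum_{i=1}^{k}\sigma_{i}^{2}}{\sigma_{X}^{2}}\right)
```

When the items vary together, the variance of the total score is large relative to the sum of the item variances, and the value approaches 1.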

2.1. Digital Skills Instrument (DSI)

The initial digital skills instrument (DSI) contains 22 items divided among four components, namely, information literacy, computer and technology literacy, visual literacy, and digital communication/collaboration skills. Table 1 shows a breakdown of the number of items for each of the components.

Table 1.

Initial component of digital skills instrument (DSI).

2.2. Expert Judgment Content Validation

Evaluation through expert judgement involves asking several individuals to judge an instrument or to express their opinions on a particular aspect (refer to Table 2 for the list of experts). The experts play a crucial role in explaining, adding, and/or modifying the required components. Initially, two experts in measurement and evaluation, from a comprehensive university and a research university, were selected to evaluate the digital skills instrument. Then, feedback from eight experts was also taken into account in the refinement of the DSI. Overall, two digital content experts, three senior lecturers from science and technology, and three experts from social sciences were involved to ensure the validity of the DSI before its final version was administered to the actual sample.

Table 2.

Judges and field of expertise.

The volunteer experts came from a variety of disciplines, including science, technology, and social sciences (non-science and technology). They were selected on the basis of experience in curriculum development, holding an academic post, or having more than 10 years of research or teaching experience. According to the standard and the MQF, digital skills must be measured across disciplines and at all qualification levels. Therefore, experts from different fields are well placed to describe appropriate digital attributes for different curricula or academic programs.

All of the items were rated against four categories: sufficiency, clarity, coherence, and relevance (refer to Table 3 for the scales and indicators of the categories). The experts were required to rate each item on a scale ranging from 4 to 1. They were also encouraged to give comments in the open space provided in the instrument.

Table 3.

Categories, indicators, and scale.

2.3. Pilot Study

After the questionnaire was assessed by the experts, the resulting instrument was pilot tested with potential respondents to determine the reliability of each component and item in the final instrument. An online survey was distributed randomly to students from various faculties and fields.

3. Results

3.1. Content Validation by Expert Judgment

Cronbach’s Alpha was used to check data reliability and internal consistency. Values typically range from 0 to 1, with greater values indicating higher internal consistency in the respondents’ opinions and perceptions; values higher than 0.6 were considered acceptable. The reliability of the instrument was analyzed using Cronbach’s Alpha on the experts’ evaluations of the four categories, namely sufficiency, clarity, coherence, and relevance. The overall result indicated strong reliability (0.915 ≤ Cronbach’s α ≤ 0.95) for the 22 items. Table 4 presents the descriptive statistics (means and standard deviations) of the categories of the perceptions of digital skills. The means ranged from 3.40 to 3.56.

Table 4.

Cronbach’s Alpha and descriptive statistics for each of the categories.
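The reliability check described above can be illustrated with a minimal sketch. It assumes the ratings are arranged as one row per rater and one column per item; the function name `cronbach_alpha`, the constant `ACCEPTABLE_ALPHA`, and the example ratings are all hypothetical and are not taken from the study’s data or software.

```python
from statistics import variance

# Acceptability threshold used in this study: values above 0.6 are acceptable.
ACCEPTABLE_ALPHA = 0.6


def cronbach_alpha(rows):
    """Cronbach's Alpha for a list of rows (one row per rater, one column per item)."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two raters")
    # Sample variance of each item (column) across raters.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of each rater's total score.
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings on the 4-to-1 scale: 5 raters x 3 items (not study data).
example_ratings = [
    [4, 4, 3],
    [3, 3, 3],
    [4, 3, 4],
    [2, 2, 1],
    [3, 4, 3],
]

alpha = cronbach_alpha(example_ratings)
print(f"alpha = {alpha:.3f}, acceptable = {alpha > ACCEPTABLE_ALPHA}")
# prints: alpha = 0.871, acceptable = True
```

The sketch uses sample variances (dividing by n − 1), which is the usual convention; it will raise a division error if every rater has the same total score, since the total variance is then zero.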

Table 5 indicates the Cronbach’s Alpha values for each component and characteristic based on the judgments by the experts.

Table 5.

Cronbach’s Alpha values for each of the components and characteristics of digital skills.

The Cronbach’s Alpha values for information literacy ranged from 0.924 to 0.961 and those for computer and technology literacy ranged from 0.860 to 0.966, indicating stronger internal consistency than the other two components: visual literacy, whose values ranged from 0.732 to 0.902, and digital communication/collaboration skills, whose values ranged from 0.650 to 0.837. It can be concluded that the experts’ ratings were consistent for information literacy and for computer and technology literacy. By comparison, the experts’ ratings of visual literacy and digital communication/collaboration skills showed a moderate level of consistency.

The questionnaire was further refined by considering the input given by the experts. Specifically, the order of the items and the sentence structure were revised, two components were added, and the visual literacy component was removed. Some of the items in that component were merged with the other components. Consequently, 28 items representing five components in the DSI were developed, namely information literacy, computer and technology literacy, digital communication/collaboration skills, digital identity and well-being, and digital ethics.

3.2. Pilot Study

Distribution of Respondents

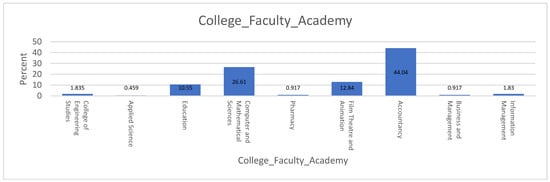

Figure 1 shows the distribution of respondents by college and faculty. Overall, 218 students from various academic programs participated in the online survey. Specifically, the highest percentage of the respondents came from the Faculty of Accountancy (44.04%), followed by students from the Faculty of Computer and Mathematical Sciences (26.61%) and from the Faculty of Film, Theatre and Animation (12.84%).

The results of the pilot study were analyzed using Cronbach’s Alpha. Table 6 shows the proposed number of items by component, as well as the item-total statistics, which include the scale mean if an item is deleted, the scale variance if an item is deleted, the corrected item-total correlation, and Cronbach’s Alpha if an item is deleted. As eliminating items from each component reduces the respective Cronbach’s Alpha, the items were retained. Moreover, the overall Cronbach’s Alpha analysis revealed strong internal consistency among respondents for each component. Both the overall values and the values of Cronbach’s Alpha if an item was deleted were very high, at greater than 0.8.

Figure 1.

Distribution of respondents according to college and faculties.

Table 6.

Item-total statistics: item and values in five components of perceptions on digital skills.
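The four item-total statistics reported in Table 6 can be sketched as follows. This is an illustration under stated assumptions, not the authors’ analysis: the function names (`cronbach_alpha`, `pearson`, `item_total_statistics`), the dictionary keys, and the example responses are hypothetical, and each component is assumed to have at least three items so that Alpha can still be computed after one item is removed.

```python
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's Alpha for rows of scores (one row per respondent)."""
    k = len(rows[0])
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if either list is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def item_total_statistics(rows):
    """For each item, compute the statistics obtained when that item is left out."""
    k = len(rows[0])
    results = []
    for j in range(k):
        # Scores on the remaining items, and their per-respondent totals.
        rest = [[v for i, v in enumerate(row) if i != j] for row in rows]
        rest_totals = [sum(r) for r in rest]
        item_scores = [row[j] for row in rows]
        results.append({
            "scale_mean_if_deleted": mean(rest_totals),
            "scale_variance_if_deleted": variance(rest_totals),
            # "Corrected" means the item is excluded from the total it is correlated with.
            "corrected_item_total_corr": pearson(item_scores, rest_totals),
            "alpha_if_deleted": cronbach_alpha(rest),
        })
    return results


# Hypothetical responses: 5 respondents x 3 items (not study data).
responses = [[4, 4, 3], [3, 3, 3], [4, 3, 4], [2, 2, 1], [3, 4, 3]]
overall = cronbach_alpha(responses)
for index, stats in enumerate(item_total_statistics(responses), start=1):
    keep = stats["alpha_if_deleted"] < overall  # deleting the item lowers Alpha
    print(index, round(stats["alpha_if_deleted"], 3), "retain" if keep else "review")
```

The retention rule in the last loop mirrors the reasoning in the text: an item is kept when removing it would reduce the component’s Cronbach’s Alpha.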

4. Discussion

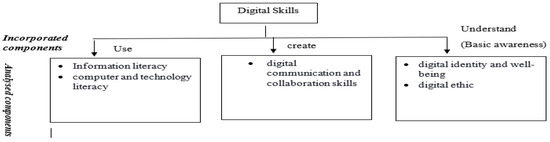

The findings of this study led to the development of a digital skills framework, whose construction was subjected to an iterative validation process with two stages. In the first stage, two experts provided extensive feedback on the content for four constructs (information literacy, computer and technology literacy, visual literacy, and digital communication/collaboration skills). The result of the first-stage validation shows that two components, “visual literacy” and “digital communication/collaboration skills”, had a moderate level of consistency. Only “visual literacy” was removed from the list of components. “Digital communication/collaboration skills” remained as a component because these skills are currently needed. In the digital world, all learners or users must be able to collaborate with others in order to realize the purpose and advantages of using digital technology. These qualities, notably communication and collaboration skills, are seen as crucial in the 21st century [9]. The second stage of validation involved eight experts. In this stage, two important components were added based on the relevant items and comments from the experts. Their input recognized the need for practicing integrity in the digital world. Many recent studies have also focused on the influence of digital technology use on well-being, particularly among adolescents [10]. Hence, the framework’s five components are information literacy, computer and technology literacy, digital communication and collaboration skills, digital identity and well-being, and digital ethics. The importance of these primary components in the development of digital skills has been emphasized.

Generally, these components incorporate basic awareness (such as managing information, full participation in the digital society, and showing responsibility and appreciation), use (such as technical knowledge and the utilization of knowledge resources), and the creation of content with an effort towards adaptation to the digital society [11]. Figure 2 illustrates the analyzed components alongside the incorporated components highlighted by Belshaw [11].

Figure 2.

Comparison between the analyzed components (this study) and incorporated components [11].

As shown in Figure 2, anyone who wants to develop their digital skills must first learn how to obtain information and use computers or other digital devices, as reflected in the incorporated component of “use”. A healthy society emphasizes not only the application of digital technology and knowledge (which leads to information literacy, computer literacy, and technology literacy), but also the development of new knowledge through communication and collaboration. Additionally, people are drawn closer together via engagement and communication, as depicted in the incorporated component of “create”. However, when several parties or individuals are involved, communication becomes more complex. Thus, for ethical and well-being considerations, the use of knowledge exploration and social interaction in communication should be monitored. Hence, “understand” entails a socialization process, i.e., the individuals’ responsibilities in interaction and communication. A guide on how to use digital tools wisely, covering aspects such as social presence and emotional presence in social networks, is required for the development of digital skills. Additionally, a proper guide can assist individuals in developing their digital identities [12]. Ultimately, the five components of the digital skills framework are well elucidated by the three aspects of “use”, “create”, and “understand” with basic awareness, as shown in Figure 2.

5. Conclusions

The aim of this study was to establish the validity and reliability of the digital skills framework through expert judgements and a pilot study. Consequently, information literacy, computer and technology literacy, digital communication and collaboration skills, digital identity and well-being, and digital ethics are the five agreed-upon components of digital skills. Educators need to understand these components before they can design their lessons, learning activities, and assessments during curriculum development. Another critical aspect is that educators must identify their students’ digital literacy or ability in order to create significant and meaningful digital learning experiences in the classroom. The digital skills instrument (DSI) can be used as a tool to gain better insights into digital literacy among students. Finally, this tool can also serve as a digital needs analysis tool to investigate students’ strengths and weaknesses and to support continuous quality improvement in curriculum development.

Author Contributions

Conceptualization, S.R.S.A.; methodology, S.R.S.A. and S.H.T.; software, S.M.D.; validation, S.R.S.A., S.H.T., and S.M.D.; formal analysis, S.R.S.A., S.H.T., and S.M.D.; investigation, S.R.S.A., S.H.T., and S.M.D.; resources, S.R.S.A.; data curation, S.R.S.A., S.H.T., and S.M.D.; writing—original draft preparation, S.R.S.A., and S.M.D.; writing—review and editing, F.A.N., and S.F.D.; visualization, S.R.S.A.; supervision, S.R.S.A.; project administration, S.R.S.A.; funding acquisition, S.R.S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by a special research grant from Universiti Teknologi MARA (600-RMC/GPK 5/3 (007/2020)).

Institutional Review Board Statement

Ethical review and approval for this study were obtained from the UiTM Research Ethics Committee (REC/08/202) MR/713.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. The data are not publicly available due to privacy restrictions.

Acknowledgments

The researchers would like to express their appreciation to all the experts who dedicated their time and expertise during the preparation of the survey in the study.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Dewi, R.S.; Hasanah, U.; Zuhri, M. Analysis Study of Factors Affecting Students’ Digital Literacy Competency. Ilkogr. Online 2021, 20, 424–431.

2. Vodă, A.I.; Cautisanu, C.; Grădinaru, C.; Tănăsescu, C.; de Moraes, G.H.S.M. Exploring Digital Literacy Skills in Social Sciences and Humanities Students. Sustainability 2022, 14, 2483.

3. Van Deursen, A.J.A.M.; Helsper, E.J.; Eynon, R. Measuring Digital Skills. From Digital Skills to Tangible Outcomes Project Report. 2014. Available online: https://www.oii.ox.ac.uk/research/projects/?id=112 (accessed on 20 January 2022).

4. Sivarajah, U.; Kamal, M.M.; Irani, Z.; Weerakkody, V. Critical analysis of Big Data challenges and analytical methods. J. Bus. Res. 2017, 70, 263–286.

5. World Economic Forum. Platform for Shaping the Future of the New Economy and Society. Schools of the Future: Defining New Models of Education for the Fourth Industrial Revolution; World Economic Forum: Geneva, Switzerland, 2020.

6. UNESCO. A Global Framework of Reference on Digital Literacy Skills for Indicators 4.4.2; Information Paper No. 51; UNESCO Institute for Statistics: Montreal, QC, Canada, 2018; Available online: http://uis.unesco.org/sites/default/files/documents/ip51-global-framework-reference-digital-literacy-skills-2018-en.pdf (accessed on 20 January 2022).

7. Malaysian Qualifications Agency. Malaysian Qualification Framework (MQF), 2nd ed.; Malaysian Qualification Agency: Kuala Lumpur, Malaysia, 2017.

8. Escobar-Pérez, J.; Cuervo-Martínez, Á. Content Validity and expert judgement: An approach to its use. Av. Med. 2008, 6, 27–36.

9. Van Laar, E.; Van Deursen, A.J.; Van Dijk, J.A.; De Haan, J. The relation between 21st-century skills and digital skills: A systematic literature review. Comput. Hum. Behav. 2017, 72, 577–588.

10. Dienlin, T.; Johannes, N. The impact of digital technology use on adolescent well-being. Dialogues Clin. Neurosci. 2022, 22, 135–142.

11. Belshaw, D.A. What Is ‘Digital Literacy’? A Pragmatic Investigation. Ph.D. Thesis, Durham University, Durham, UK, 2012.

12. Bozkurt, A.; Tu, C.H. Digital identity formation: Socially being real and present on digital networks. Educ. Media Int. 2016, 53, 153–167.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).