Can Transfer Learning Overcome the Challenge of Identifying Lemming Species in Images Taken in the near Infrared Spectrum? †

Abstract

1. Introduction

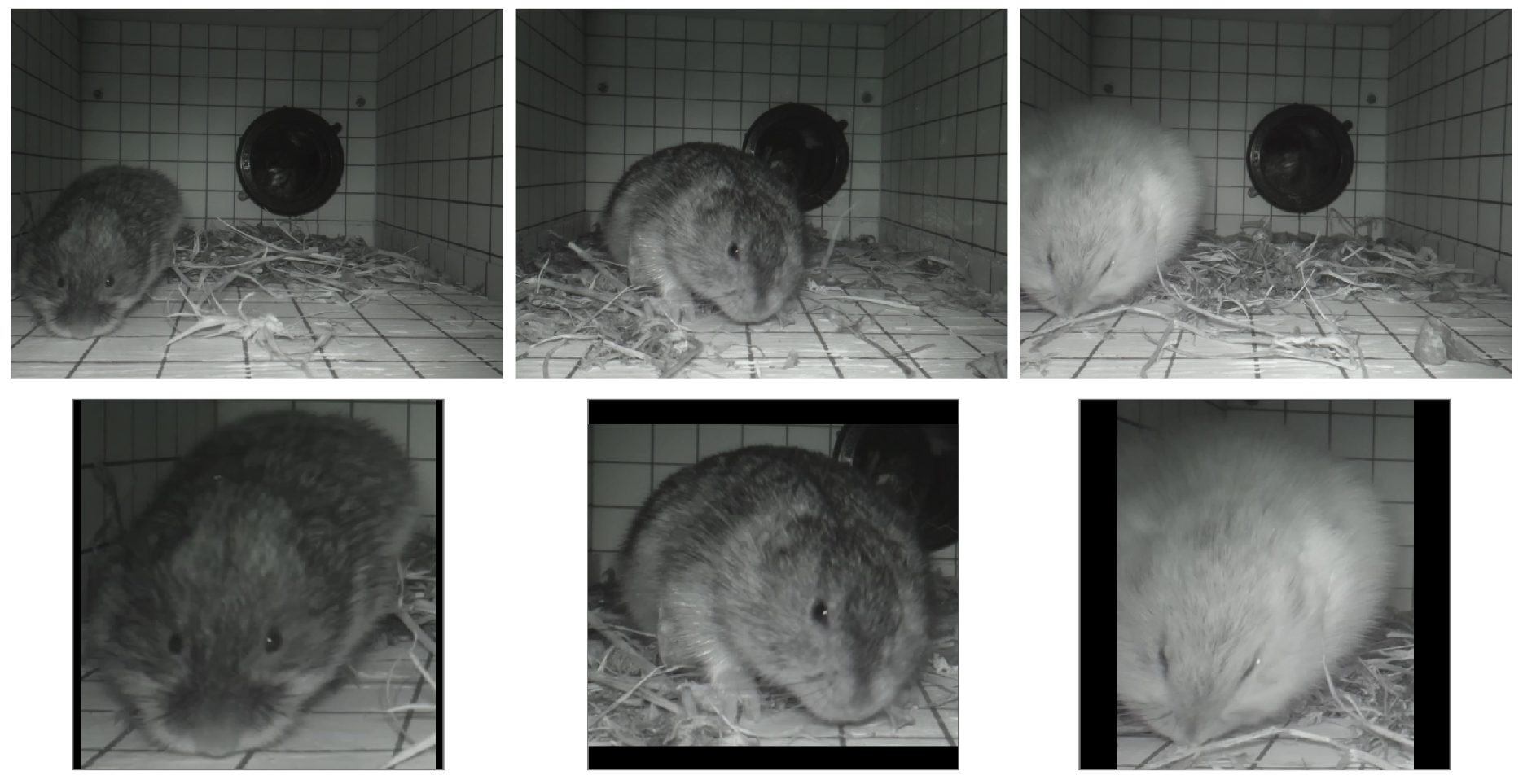

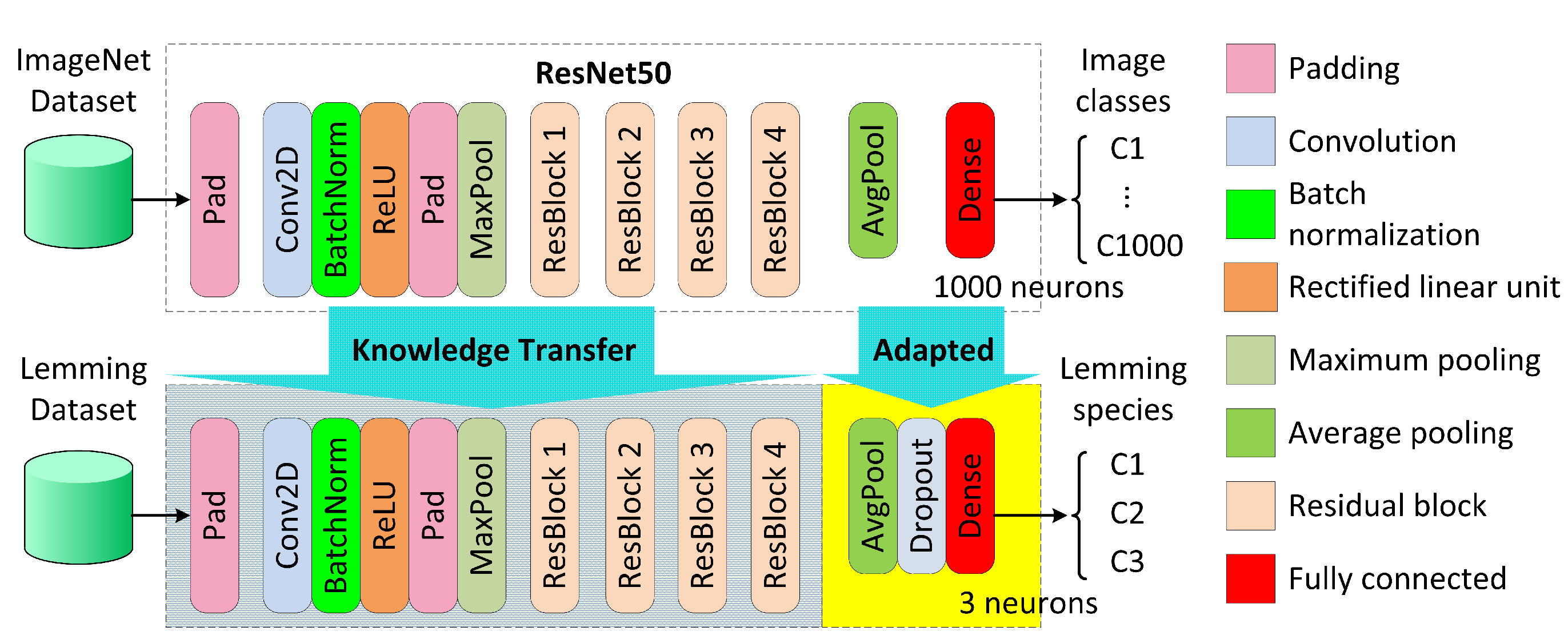

2. Data and Method

3. Results

4. Discussion and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | True class | Predicted C1 | Predicted C2 | Predicted C3 |
|---|---|---|---|---|
| M1 (DS1 & SKFCV) | C1 | 0.998 | 0.001 | 0.001 |
| M1 (DS1 & SKFCV) | C2 | 0.016 | 0.983 | 0.001 |
| M1 (DS1 & SKFCV) | C3 | 0 | 0 | 1 |
| M2 (DS2 & GKFCV) | C1 | 0.991 | 0.007 | 0.002 |
| M2 (DS2 & GKFCV) | C2 | 0.009 | 0.988 | 0.003 |
| M2 (DS2 & GKFCV) | C3 | 0 | 0 | 1 |
| M3 (DS1 & SKFCV) | C1 | 0.851 | 0.147 | 0.002 |
| M3 (DS1 & SKFCV) | C2 | 0.179 | 0.816 | 0.005 |
| M3 (DS1 & SKFCV) | C3 | 0.007 | 0.003 | 0.990 |
| M4 (DS2 & GKFCV) | C1 | 0.952 | 0.045 | 0.003 |
| M4 (DS2 & GKFCV) | C2 | 0.047 | 0.948 | 0.005 |
| M4 (DS2 & GKFCV) | C3 | 0 | 0.008 | 0.992 |
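Each row of the confusion-matrix table sums to 1, which suggests the entries are row-normalized rates. Under that reading, the diagonal entry of a row is the recall for that true class, and the off-diagonal entries show where its images were misassigned. The short Python sketch below is an illustration, not the authors' evaluation code. It re-reads the published values and condenses each model into two numbers: its balanced accuracy (mean per-class recall) and its largest off-diagonal confusion. The row-normalization reading is an inference from the row sums, and the helper names (`is_row_normalized`, `per_class_recall`, `balanced_accuracy`, `most_confused_pair`) are hypothetical.

```python
"""Summarize the row-normalized confusion matrices reported for models M1-M4.

Assumption (inferred, not stated in the source): each row (true class) sums
to 1, so entry [i][j] is the fraction of true-class-i images predicted as j.
"""

CLASSES = ["C1", "C2", "C3"]

# Values transcribed from the confusion-matrix table; rows = true class,
# columns = predicted class, both in the order C1, C2, C3.
CONFUSION = {
    "M1 (DS1 & SKFCV)": [[0.998, 0.001, 0.001], [0.016, 0.983, 0.001], [0.0, 0.0, 1.0]],
    "M2 (DS2 & GKFCV)": [[0.991, 0.007, 0.002], [0.009, 0.988, 0.003], [0.0, 0.0, 1.0]],
    "M3 (DS1 & SKFCV)": [[0.851, 0.147, 0.002], [0.179, 0.816, 0.005], [0.007, 0.003, 0.990]],
    "M4 (DS2 & GKFCV)": [[0.952, 0.045, 0.003], [0.047, 0.948, 0.005], [0.0, 0.008, 0.992]],
}


def is_row_normalized(matrix, tol=1e-3):
    """Return True if every row sums to 1 within the rounding tolerance."""
    return all(abs(sum(row) - 1.0) <= tol for row in matrix)


def per_class_recall(matrix):
    """Diagonal of a row-normalized matrix: the recall of each true class."""
    return [matrix[i][i] for i in range(len(matrix))]


def balanced_accuracy(matrix):
    """Mean per-class recall; every class weighs equally regardless of its size."""
    recalls = per_class_recall(matrix)
    return sum(recalls) / len(recalls)


def most_confused_pair(matrix):
    """Return (true class, predicted class, rate) for the largest off-diagonal entry."""
    best = (None, None, -1.0)
    for i, row in enumerate(matrix):
        for j, rate in enumerate(row):
            if i != j and rate > best[2]:
                best = (CLASSES[i], CLASSES[j], rate)
    return best


if __name__ == "__main__":
    for name, matrix in CONFUSION.items():
        if not is_row_normalized(matrix):
            raise ValueError(f"{name}: rows do not sum to 1")
        true_cls, pred_cls, rate = most_confused_pair(matrix)
        print(
            f"{name}: balanced accuracy {balanced_accuracy(matrix):.3f}, "
            f"largest confusion {true_cls} -> {pred_cls} ({rate:.3f})"
        )
```

Run as a script, it prints one line per model. On these values, every model's largest confusion is true C2 predicted as C1. M3 has the lowest balanced accuracy (about 0.886, against 0.964 to 0.994 for the other three models).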


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kalhor, D.; Poirier, M.; Maldague, X.; Gauthier, G. Can Transfer Learning Overcome the Challenge of Identifying Lemming Species in Images Taken in the near Infrared Spectrum? Proceedings 2025, 129, 65. https://doi.org/10.3390/proceedings2025129065
