Abstract

In this manuscript, we use approximations of conformable derivatives to design iterative methods for solving nonlinear algebraic or transcendental equations. We adapt the approximation of conformable derivatives in order to design conformable derivative-free iterative schemes to solve nonlinear equations: Steffensen and Secant-type methods. To our knowledge, these are the first conformable derivative-free schemes in the literature, where the Steffensen conformable method is also optimal; moreover, the Secant conformable scheme is also a procedure with memory. A convergence analysis is carried out, showing that the order of the classical cases is preserved, and the numerical performance is studied in order to confirm the theoretical results. It is shown that these methods can present some numerical advantages over their classical partners, with wide sets of converging initial estimations.

1. Introduction

Many theoretical and applied problems require us to find the solution of the nonlinear equation $f(x)=0$, where $f: I \subseteq \mathbb{R} \to \mathbb{R}$. Since most nonlinear problems have no analytical solution, many authors have proposed numerical methods based on fixed-point schemes to approximate the solution of $f(x)=0$. Most of these procedures require the evaluation of an integer-order derivative, or of its approximation.

In the recent literature, some authors have introduced several numerical methods with noninteger-order derivatives (fractal derivative, fractional derivative, and conformable derivative). These derivatives of order $\alpha$ generalize the classical derivative, which is recovered as the particular case $\alpha = 1$. Noninteger derivatives can be used to model many applied problems because their tools offer a higher degree of freedom than those of classical calculus [1,2,3,4].

In Ref. [5], the first Newton’s methods with fractal derivatives are presented, whose order of convergence is quadratic. With regard to iterative schemes with fractional derivatives, the authors in Ref. [6] designed Newton-type methods, of order $2\alpha$, with Caputo and Riemann–Liouville fractional derivatives. In Ref. [7], two Newton’s methods with Caputo and Riemann–Liouville fractional derivatives, of order $\alpha+1$, allow the design of two Traub’s methods with Caputo and Riemann–Liouville fractional derivatives, of order $2\alpha+1$ each; these are the first multipoint fractional methods in the literature. The authors in Ref. [8] perform a dynamical analysis of Newton-type methods whose derivatives are replaced by Caputo and Riemann–Liouville fractional derivatives. In Ref. [9], Newton’s schemes with Caputo and Riemann–Liouville fractional derivatives of order $\alpha$ are proposed, obtaining a quadratic order of convergence in both cases. Also, the authors in Ref. [10] study the dynamics of a family of procedures with Caputo and conformable derivatives of order three.

Iterative methods with fractional derivatives do not retain the order of convergence of their classical versions: they need higher-order fractional derivatives to increase the order of convergence, which makes it impossible to obtain optimal-order procedures in the sense of the Kung and Traub conjecture [11]. Schemes with conformable derivatives do not share this drawback. So, another approach is the conformable calculus [12,13], whose low computational cost constitutes an advantage over fractional calculus, because special functions such as the Gamma or Mittag–Leffler functions are not evaluated [3]. In that sense, several conformable iterative schemes have been designed: Refs. [14,15] propose the scalar and vectorial versions of a Newton-type method with conformable derivative/Jacobian, respectively, and a general technique is designed in Ref. [16] in order to obtain the conformable version of any scalar classical procedure. Also, the authors in Ref. [17] proposed the first multipoint conformable method for solving nonlinear systems (a Traub-type method). Finally, some derivative-free schemes were designed with an approximation of conformable derivatives: Steffensen-type and Secant-type procedures in Ref. [18], and a Traub–Steffensen-type method in Ref. [19] (in scalar and vectorial versions). The theoretical convergence order of these methods is preserved in practice. Indeed, these methods show good qualitative behavior, even improving on their respective classical cases in some numerical aspects.

Most of the fractal, fractional, and conformable schemes mentioned above need the evaluation of fractal, fractional, or conformable derivatives, respectively. Since conformable procedures have presented many advantages over fractional ones, in this manuscript we focus on the approximation of conformable derivatives in order to design, to our knowledge, the first conformable derivative-free iterative methods to solve nonlinear equations: a Steffensen-type method and a Secant-type method (based on Ref. [18]); we also compare them with their classical partners.

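Let us recall some basic definitions from conformable calculus: Given a function $f:[a,\infty)\to\mathbb{R}$, its left conformable derivative, starting from $a$, of order $\alpha$, where $\alpha\in(0,1]$, being $a,\alpha,x\in\mathbb{R}$, $a<x$, can be defined as shown next [12,13]

$$(T_\alpha^a f)(x)=\lim_{\varepsilon\to 0}\frac{f\left(x+\varepsilon(x-a)^{1-\alpha}\right)-f(x)}{\varepsilon}. \qquad (1)$$

If this limit does exist, then f is $\alpha$-differentiable. Let us suppose that f is differentiable, then $(T_\alpha^a f)(x)=(x-a)^{1-\alpha}f'(x)$. Given $b\in\mathbb{R}$ such that f is $\alpha$-differentiable in $(a,b)$, then $(T_\alpha^a f)(a)=\lim_{x\to a^+}(T_\alpha^a f)(x)$.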
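As a quick numerical illustration of this definition, the following sketch compares a finite-$\varepsilon$ version of the limit with the closed form for differentiable functions. It assumes the usual definition from [12,13], namely the quotient $\left(f(x+\varepsilon(x-a)^{1-\alpha})-f(x)\right)/\varepsilon$ and the identity $(T_\alpha^a f)(x)=(x-a)^{1-\alpha}f'(x)$; the function names, the test function, and the value of `eps` are illustrative choices, not taken from the manuscript.

```python
import math

def conformable_quotient(f, x, a, alpha, eps=1e-7):
    """Finite-eps version of the limit defining the left conformable derivative."""
    return (f(x + eps * (x - a) ** (1.0 - alpha)) - f(x)) / eps

def conformable_closed_form(df, x, a, alpha):
    """(x - a)^(1 - alpha) * f'(x), valid when f is differentiable and x > a."""
    return (x - a) ** (1.0 - alpha) * df(x)

# Illustrative data (assumptions): f = sin, x = 2, a = 0, alpha = 0.5
x, a, alpha = 2.0, 0.0, 0.5
approx = conformable_quotient(math.sin, x, a, alpha)
exact = conformable_closed_form(math.cos, x, a, alpha)
print(approx, exact)

# The conformable derivative of a constant vanishes
constant_case = conformable_quotient(lambda t: 3.0, x, a, alpha)
print(constant_case)
```

No Gamma or Mittag–Leffler evaluation appears anywhere in the sketch, which is the low-cost feature emphasized above.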

This derivative preserves a property of classical (integer-order) derivatives: $T_\alpha^a K=0$, where K is a constant. As mentioned before, this kind of derivative does not require the evaluation of any special function.

In Ref. [20], an appropriate conformable Taylor series is provided, as shown in the following result.

Theorem 1

(Theorem 4.1, [20]). Let be an infinitely α-differentiable function, , about , where the conformable derivatives start at a. Then, the conformable Taylor series of can be given by

being , , , , ….

We can easily prove that , , and so on. So, (2) is expressed as

Since the Secant-type method we propose includes memory, we need to introduce a generalization of the order of convergence (the R-order [21,22]); but first, let us see the concept of R-factor:

Definition 1

([21,22]). Let ϕ be a converging iterative method to some limit β, and let be an arbitrary sequence in converging to β. Then, the R-factor of the sequence is

We can define now the R-order:

Definition 2

([21,22]). The R-order of convergence of an iterative method ϕ at the point β is

The following result states a relation between the roots of a characteristic polynomial and the R-order of an iterative procedure with memory:

Theorem 2

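([21,22]). Let ϕ be an iterative method with memory generating the sequence $\{x_k\}$ of approximations of the root $\bar{x}$, and let us suppose that the sequence converges to $\bar{x}$. If there exist a nonzero constant η and nonnegative numbers $t_i$, $i=0,1,\ldots,m$, such that

$$|e_{k+1}|\le\eta\prod_{i=0}^{m}|e_{k-i}|^{t_i}, \qquad e_k=x_k-\bar{x},$$

is fulfilled, then the R-order of iterative scheme ϕ satisfies

$$O_R(\phi,\bar{x})\ge s^{*},$$

$s^{*}$ being the only positive root of the polynomial

$$s^{m+1}-\sum_{i=0}^{m}t_i\,s^{m-i}.$$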

Finally, if we take into account that, given an iteration function of order p, its asymptotical error constant C is defined as [23]

then the next result permits the calculation of the error constant of an iterative scheme with p-order of convergence, knowing that of other iterative method with the same order.

Theorem 3

([23], Theorems 2–8). Let us consider iteration functions and with order p and fixed point with multiplicity m. If we define

and and are the asymptotical error constants of and , respectively. Therefore,

Later, we support Theorem 3 in the conformable schemes proposed in this work, and we use for our purposes.

2. Deduction of the Methods

As in Ref. [18], we consider the approximation of (1) with the following conformable finite divided difference of linear order:

In Refs. [14,15,16], we can see that the conformable schemes preserve the theoretical order of their classical versions (when $\alpha=1$), regardless of whether these procedures are scalar or vectorial, one-point or multipoint. Now, we wonder whether the conformable versions of derivative-free methods (with or without memory) also retain the order of convergence of their classical versions. For this aim, we use the general technique proposed in [16], which is useful for finding the conformable partner of any known procedure, and show that these procedures preserve the order of convergence of their classical versions.

The general technique given in Ref. [16] states that the classical method

has the conformable version

If in (11) includes classical derivatives of , then in (12) includes conformable derivatives of . So, given a classical scheme, we need to identify the analytical expression of to obtain its conformable version.

In the case of Steffensen’s procedure [22,23,24]:

where is an approximation of the classical derivative. So,

Regarding (10),

being in (10). Hence, the conformable version of Steffensen’s method is

and we denote it by SeCO; note that when $\alpha=1$ the classical Steffensen’s scheme is obtained.
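A minimal sketch of a conformable Steffensen-type iteration is given next. Since the displayed formulas are not reproduced in this text, the update used here is an assumption: we take $x_{k+1}=a+\left((x_k-a)^{\alpha}-\alpha\,\frac{f(x_k)^2}{f\left(x_k+f(x_k)(x_k-a)^{1-\alpha}\right)-f(x_k)}\right)^{1/\alpha}$, which is our reading of combining the general technique of Ref. [16] with the divided difference using $\varepsilon=f(x_k)$, and which reduces to the classical Steffensen step when $\alpha=1$. The function name, the stopping rule on $|f(x_k)|$, and the test equation are hypothetical.

```python
import math

def conformable_steffensen(f, x0, alpha=1.0, a=0.0, tol=1e-10, max_iter=500):
    """Assumed SeCO-type update; returns (estimate, iterations, converged).

    Complex arithmetic is used so that fractional powers of negative
    quantities remain defined.
    """
    x = complex(x0)
    for k in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:                              # residual small enough: stop
            return x, k, True
        shifted = x + fx * (x - a) ** (1.0 - alpha)    # x + eps*(x-a)^(1-alpha), eps = f(x)
        denom = f(shifted) - fx
        if denom == 0:                                 # divided difference degenerates
            return x, k, False
        mu = fx * fx / denom                           # f(x) over the approximated derivative
        x = a + ((x - a) ** alpha - alpha * mu) ** (1.0 / alpha)
    return x, max_iter, False

def g(x):
    return x * x - 2.0

# alpha = 1 reproduces the classical Steffensen step; alpha = 0.9 is the conformable case
root_classical, it_classical, ok_classical = conformable_steffensen(g, 1.5, alpha=1.0)
root_conformable, it_conformable, ok_conformable = conformable_steffensen(g, 1.5, alpha=0.9)
print(root_classical, it_classical)
print(root_conformable, it_conformable)
```

Each step costs two evaluations of f and no derivative, in line with the derivative-free design discussed above.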

In the case of the Secant procedure [22,23]:

where is an approximation of the classical derivative. Then,

3. Convergence Analysis

The next result establishes the conditions for the quadratic order of convergence of SeCO. We use the notation and .

Theorem 4.

Let us consider a sufficiently differentiable function in the open interval I, that holds a zero of . Assume that the initial approximation is close enough to . Therefore, the order of convergence (local) of conformable Steffensen’s scheme (SeCO) defined by

is at least 2, being , and its error equation is

where , , such that , .

Proof.

Knowing that , the Taylor expansion of and about are

and

respectively, being , for .

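The generalized binomial theorem is [25]

$$(x+y)^{r}=\sum_{k=0}^{\infty}\binom{r}{k}x^{r-k}y^{k},$$

where (see [26])

$$\binom{r}{k}=\frac{\Gamma(r+1)}{k!\,\Gamma(r-k+1)},$$

and $\Gamma(\cdot)$ is the Gamma function. So, using this result,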

Then,

and

The quotient results

Again,

hence,

By generalized binomial result,

Finally,

and the proof is finished. □

Remark 1.

Classical Steffensen’s method has the error equation

This confirms the relation between the asymptotic error constants stated in Theorem 3.

Remark 2.

SeCO is, to our knowledge, the first optimal conformable derivative-free scheme according to Kung–Traub’s conjecture (see [11]).

The following result states the conditions for the superlinear order of convergence of EeCO. We use the notation , , and .

Theorem 5.

Let us consider a sufficiently differentiable function in the open interval I, holding a zero of . If the initial approximations and are close enough to , then the conformable Secant procedure (EeCO)

for , has local order of convergence at least 1.618, being , and the error equation is

where , for , such that , , .

Proof.

Knowing that , the Taylor expansion of about is calculated as

being , for .

Knowing that , then . So, using the generalized binomial theorem,

Thus, the expansion of is

and

Therefore, knowing that ,

Hence,

and

Finally,

Using Theorem 2, the characteristic polynomial obtained is $s^{2}-s-1$, whose only positive root is $s^{*}=\frac{1+\sqrt{5}}{2}\approx 1.618$. So, the convergence of the conformable Secant method is superlinear. □

Remark 3.

Since the error equation of the classical Secant scheme is

the relation between the asymptotic error constants stated in Theorem 3 is verified.

Remark 4.

EeCO is the first conformable iterative procedure with memory.

The theoretical order of convergence of derivative-free classical schemes (with memory or not) is also preserved in the conformable version of these methods. In the next section, some numerical tests are made under several nonlinear functions, and the stability of such methods is studied.
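For reference, the classical Secant iteration (the $\alpha=1$ baseline against which EeCO is compared) can be sketched as follows. This is the textbook scheme, not the conformable variant, whose formula is not reproduced in this text; the function name, tolerance, and test equation are illustrative assumptions. The sketch also evaluates the positive root of $s^{2}-s-1$, which is the value behind the bound 1.618.

```python
import math

def secant(f, x0, x1, tol=1e-12, max_iter=500):
    """Classical Secant method; the two latest iterates are kept (memory)."""
    f0, f1 = f(x0), f(x1)
    for k in range(1, max_iter + 1):
        if f1 == f0:                       # flat secant line: cannot continue
            return x1, k - 1, False
        x2 = x1 - f1 * (x1 - x0) / (f1 - f0)
        x0, f0 = x1, f1                    # shift the memory window
        x1, f1 = x2, f(x2)
        if abs(f1) < tol:
            return x1, k, True
    return x1, max_iter, False

# Positive root of s^2 - s - 1 (golden ratio)
golden = (1.0 + math.sqrt(5.0)) / 2.0

root, iterations, converged = secant(lambda x: x * x - 2.0, 1.0, 2.0)
print(root, iterations, converged, golden)
```

Only one new evaluation of f is needed per step, because the previous value is reused from memory.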

4. Numerical Results

To obtain the results shown in this section, we have used Matlab R2020a with double precision arithmetic, or as stopping criteria, and a maximum of 500 iterates. The approximate computational order of convergence (ACOC)

$$ACOC=\frac{\ln\left(|x_{k+1}-x_{k}|/|x_{k}-x_{k-1}|\right)}{\ln\left(|x_{k}-x_{k-1}|/|x_{k-1}-x_{k-2}|\right)},$$

defined in [27], is used to confirm that the theoretical order of convergence is also conserved in practice.
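The ACOC can be estimated from the last four iterates of any run. The sketch below assumes the usual definition from [27], $\ln\left(|x_{k+1}-x_k|/|x_k-x_{k-1}|\right)/\ln\left(|x_k-x_{k-1}|/|x_{k-1}-x_{k-2}|\right)$; the function name is hypothetical, and the sample iterates are the first Newton iterates for $x^2-2=0$ starting from $3/2$, used here only because they form a known quadratically convergent sequence.

```python
import math

def acoc(iterates):
    """Approximate computational order of convergence from the last four iterates."""
    if len(iterates) < 4:
        raise ValueError("at least four iterates (three steps) are required")
    d1 = abs(iterates[-1] - iterates[-2])
    d2 = abs(iterates[-2] - iterates[-3])
    d3 = abs(iterates[-3] - iterates[-4])
    return math.log(d1 / d2) / math.log(d2 / d3)

# Illustrative, quadratically convergent sequence towards sqrt(2)
sample = [3 / 2, 17 / 12, 577 / 408, 665857 / 470832]
order = acoc(sample)
print(order)
```

Because three consecutive differences are needed, no ACOC can be reported for a run that stops after fewer than three iterations.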

Now, we test six nonlinear functions with the methods that we have designed in the previous sections; in this sense, we compare each scheme with its classical version (when $\alpha=1$). For EeCO, we choose to perform the first iteration, we fix for each method, and .

In each table, we show the results obtained for each test function using the two schemes designed in the previous section (SeCO and EeCO), where coincides in both procedures.

The first test function is , with real and complex roots , , , , , and .

In Table 1, we can see that SeCO can require the same number of iterations as the classical Steffensen’s method (when $\alpha=1$), and the ACOC can be slightly higher than 2 when . Note that SeCO needs the initial estimate to be very close to to converge with any $\alpha$. We observe that EeCO requires, in some cases, fewer iterations than the Secant scheme for most values of $\alpha$, and the ACOC can be slightly higher than 1.618.

Table 1.

Results for , with initial estimates for SeCO, and and for EeCO.

Our second test function is , with real roots and .

In Table 2, we note that SeCO can converge in fewer iterations than its classical partner, and a different root can be found; case is not shown because this method converges to some point which is not a root of , since one of the stopping criteria remains much greater than zero. Also, no results are shown when more than 500 iterations are required. We can see that EeCO can require the same number of iterations as its classical partner, and the ACOC can be slightly higher than 1.618.

Table 2.

Results for , with initial estimates for SeCO, and and for EeCO.

Next test function is , with double roots , , and .

In Table 3, we observe that SeCO often requires a lower number of iterations than Steffensen’s procedure, and the ACOC is linear for all $\alpha$, because the multiplicity of all roots is 2. We note that the number of iterations increases when EeCO is used, a distinct root can be found when a different value of $\alpha$ is chosen, and, again, the ACOC is linear for any $\alpha$, because the multiplicity of these roots is 2; we show no results for as this scheme converges to some point which is not a root of , since one of the stopping criteria remains much greater than zero.

Table 3.

Results for , with initial estimates for SeCO, and and for EeCO.

The fourth nonlinear function is , with roots and .

In Table 4, we see that SeCO needs fewer iterations than its classical partner in many cases; the ACOC is not provided for because at least three iterations are necessary to compute it, and we do not show results for as this procedure does not converge to a root of . We can observe that the classical Secant method has failed, whereas the conformable version can find a solution for some values of $\alpha$, and the ACOC can be slightly higher than 1.618; again, no results are shown for because this procedure does not converge to a root of .

Table 4.

Results for , with initial estimates for SeCO, and and for EeCO.

The fifth test function is , with real root . Also, the complex root can be obtained.

In Table 5, we note that SeCO can require fewer iterations than its classical version in some cases, a different root can be found when choosing a distinct value of $\alpha$, a complex root can be obtained starting from a real initial estimate, and the ACOC can be slightly higher than 2. We can see that EeCO can converge in a lower number of iterations than its classical version, and the ACOC can be slightly higher than 1.618. No results are shown for either method when more than 500 iterations are required.

Table 5.

Results for , with initial estimates for SeCO, and and for EeCO.

Finally, our sixth test function is , with real roots , , , , , , , , , , , , and .

In Table 6, we observe that SeCO and EeCO need a lower number of iterations than their respective classical partners for some values of $\alpha$, and that the ACOC is similar to the classical one in each case; no results are shown for because this scheme converges to some point which is not a root of , since one of the stopping criteria remains much greater than zero. Neither are results shown for EeCO when , since it requires more than 500 iterations to converge. We note that, with each procedure, different roots are obtained by modifying the value of $\alpha$.

Table 6.

Results for , with initial estimates for SeCO, and and for EeCO.

Qualitative Performance

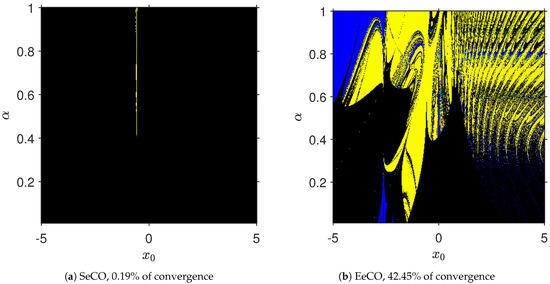

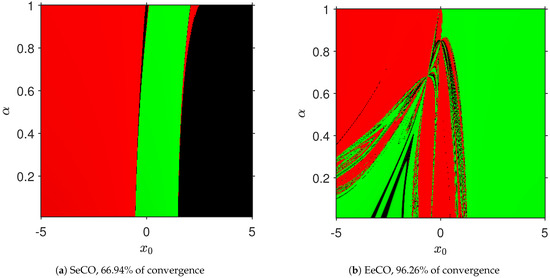

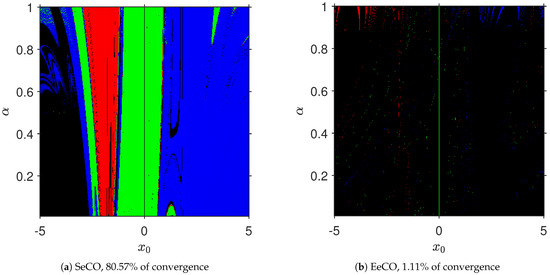

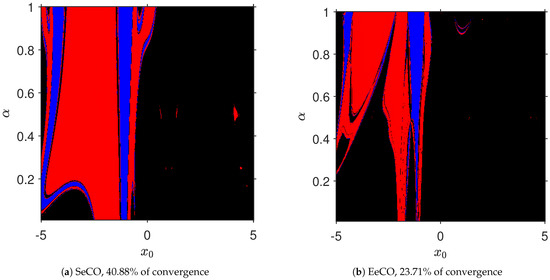

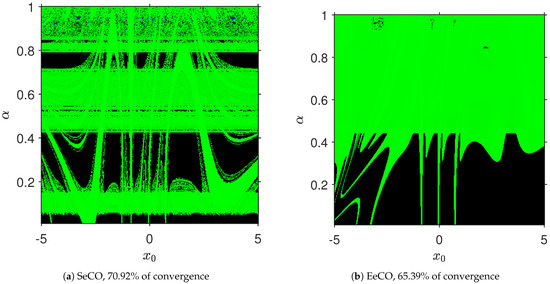

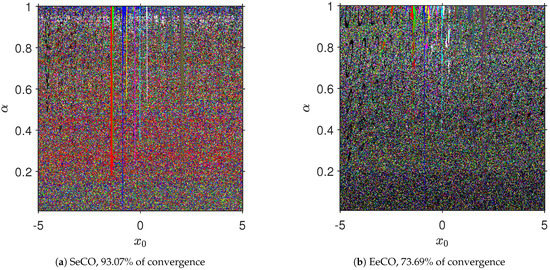

Now, we analyze the dependence of the schemes designed in this manuscript on the initial estimations; for this, the convergence planes defined in [28] are used. In them, the abscissa axis corresponds to the initial estimate $x_0$ (for both schemes), and the ordinate axis corresponds to the order $\alpha$ of the derivative. We define a mesh of points that can be represented in different colors. Those not represented in black correspond to the pairs $(x_0,\alpha)$ converging to one of the roots, with a tolerance of . Different colors are associated with different roots. Therefore, a point represented in black means that the iterative process does not converge to any root in a maximum of 500 iterations.

For all convergence planes, we calculate the percentage of convergent pairs $(x_0,\alpha)$, in order to compare the efficiency of these procedures. For each method we set in each plane, , and . In the case of EeCO we select to perform the first iteration, just as we did in the numerical tests in Section 4.
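The percentage of convergent pairs can be computed by looping over the mesh and counting the pairs whose final iterate lies close to one of the known roots. The sketch below is only an illustration under stated assumptions: `seco_sketch` is a hypothetical conformable Steffensen-type solver (with the same assumed update as a conformable version of Steffensen's step built from the technique of Ref. [16]), and the mesh, the value $a=0$, the tolerances, and the test equation are illustrative choices, not the settings used for the figures.

```python
import math

def seco_sketch(f, x0, alpha, a, tol=1e-8, max_iter=100):
    """Hypothetical conformable Steffensen-type solver; returns an estimate or None."""
    x = complex(x0)
    try:
        for _ in range(max_iter):
            fx = f(x)
            if abs(fx) < tol:
                return x
            denom = f(x + fx * (x - a) ** (1.0 - alpha)) - fx
            if denom == 0:
                return None
            x = a + ((x - a) ** alpha - alpha * fx * fx / denom) ** (1.0 / alpha)
    except (OverflowError, ZeroDivisionError, ValueError):
        return None                        # numerical breakdown counts as a black point
    return None                            # iteration budget exhausted: black point

def convergence_percentage(f, roots, x0_values, alpha_values, a, root_tol=1e-6):
    """Percentage of mesh pairs (x0, alpha) whose iteration ends near a known root."""
    hits = 0
    for alpha in alpha_values:
        for x0 in x0_values:
            x = seco_sketch(f, x0, alpha, a)
            if x is not None and any(abs(x - r) < root_tol for r in roots):
                hits += 1
    return 100.0 * hits / (len(alpha_values) * len(x0_values))

# Tiny illustrative mesh (assumption): 5 initial estimates x 3 derivative orders
known_roots = (math.sqrt(2.0), -math.sqrt(2.0))
pct = convergence_percentage(lambda x: x * x - 2.0, known_roots,
                             [1.0, 1.25, 1.5, 1.75, 2.0], [0.8, 0.9, 1.0], a=0.0)
print(pct)
```

Recording the index of the matched root instead of a simple count would give the color of each point in the plane.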

In Figure 1, we can observe that SeCO achieves approximately only 0.2% of convergence, and only one root is obtained, whereas EeCO reaches approximately 43% of convergence, where all the roots are reached.

Figure 1.

Convergence planes for .

In Figure 2, SeCO attains around 67% of convergence, and EeCO attains around 97% of convergence. Both roots are obtained on each plane.

Figure 2.

Convergence planes for .

In Figure 3, we see that SeCO achieves about 81% of convergence, but EeCO reaches only about 1% of convergence. The three roots are found.

Figure 3.

Convergence planes for .

In Figure 4, we observe that SeCO attains approximately 41% of convergence, whereas EeCO attains approximately 24% of convergence. Both roots are obtained on each plane.

Figure 4.

Convergence planes for .

In Figure 5, we note that SeCO achieves around 71% of convergence, and EeCO reaches only around 66% of convergence. Both roots (the real and the complex) are found on each plane for different values of $\alpha$. These results improve those obtained for and .

Figure 5.

Convergence planes for .

In Figure 6, we can see that SeCO attains about 93% of convergence, but EeCO attains about 74% of convergence. Let us remark that each method obtains all the roots and the convergence rates are higher in this case than those obtained on the previous functions.

Figure 6.

Convergence planes for .

5. Concluding Remarks

In this work, the first derivative-free conformable iterative methods in the literature have been designed (SeCO and EeCO), where SeCO is the first optimal derivative-free conformable method, and EeCO is also the first conformable scheme with memory. The convergence of these procedures was analyzed, and these methods preserve the order of convergence of their classical versions (when $\alpha=1$). This fact provides the proposed schemes with more degrees of freedom since, with the same initial estimation and changing the values of $\alpha$, we can obtain all the roots of the equations or improve the convergence results, in some sense. In order to check these advantages, the numerical performance of these schemes has been studied. Derivative-free conformable procedures can converge in cases where the classical versions fail, roots can be found in fewer iterations, a different solution can be obtained by selecting different values of $\alpha$, complex roots can be reached with real seeds, and the approximate computational order of convergence can be slightly higher. Also, we visualized the dependence on initial estimates by using convergence planes, and these methods showed good stability, as indicated by the percentage of converging points $(x_0,\alpha)$; furthermore, all roots are obtained in most planes. Therefore, the set of converging initial estimations is wider in the case of conformable derivative-free methods.

Author Contributions

Conceptualization, G.C.; methodology, M.P.V.; software, A.C.; formal analysis, J.R.T.; investigation, A.C.; writing—original draft preparation, G.C. and M.P.V.; writing—review and editing, A.C. and J.R.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data sharing not applicable.

Acknowledgments

The authors would like to thank the anonymous reviewers whose comments and suggestions have improved the final version of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, W.; Liang, Y.; Cai, W. Hausdorff Calculus: Applications to Fractal Systems, 1st ed.; de Gruyter: Berlin, Germany; Boston, MA, USA, 2019.

- Miller, K.S. An Introduction to Fractional Calculus and Fractional Differential Equations, 1st ed.; J. Wiley and Sons: Hoboken, NJ, USA, 1993.

- Podlubny, I. Fractional Differential Equations, 1st ed.; Academic Press: Cambridge, MA, USA, 1999.

- Mathai, A.M.; Haubold, H.J. Fractional and Multivariable Calculus, Model Building and Optimization Problems, 1st ed.; Springer Optimization and Its Applications: Berlin/Heidelberg, Germany, 2017.

- Akgül, A.; Grow, D. Fractal Newton Methods. Mathematics 2023, 11, 2277.

- Akgül, A.; Cordero, A.; Torregrosa, J.R. A fractional Newton method with 2αth-order of convergence and its stability. Appl. Math. Lett. 2019, 98, 344–351.

- Candelario, G.; Cordero, A.; Torregrosa, J.R. Multipoint Fractional Iterative Methods with (2α + 1)th-Order of Convergence for Solving Nonlinear Problems. Mathematics 2020, 8, 452.

- Gdawiec, K.; Kotarski, W.; Lisowska, A. Newton’s method with fractional derivatives and various iteration processes via visual analysis. Numer. Algorithms 2021, 86, 953–1010.

- Bayrak, M.A.; Demir, A.; Ozbilge, E. On Fractional Newton-Type Method for Nonlinear Problems. J. Math. 2022, 2022, 7070253.

- Nayak, S.K.; Parida, P.K. The dynamical analysis of a low computational cost family of higher-order fractional iterative method. Int. J. Comput. Math. 2023, 100, 1395–1417.

- Kung, H.T.; Traub, J.F. Optimal Order of One-Point and Multipoint Iteration. J. Assoc. Comput. Mach. 1974, 21, 643–651.

- Khalil, R.; Al Horani, M.; Yousef, A.; Sababheh, M. A new definition of fractional derivative. J. Comput. Appl. Math. 2014, 264, 65–70.

- Abdeljawad, T. On conformable fractional calculus. J. Comput. Appl. Math. 2014, 279, 57–66.

- Candelario, G.; Cordero, A.; Torregrosa, J.R.; Vassileva, M.P. An optimal and low computational cost fractional Newton-type method for solving nonlinear equations. Appl. Math. Lett. 2022, 124, 107650.

- Candelario, G.; Cordero, A.; Torregrosa, J.R.; Vassileva, M.P. Generalized conformable fractional Newton-type method for solving nonlinear systems. Numer. Algorithms 2023, 93, 1171–1208.

- Candelario, G.; Cordero, A.; Torregrosa, J.R.; Vassileva, M.P. Solving Nonlinear Transcendental Equations by Iterative Methods with Conformable Derivatives: A General Approach. Mathematics 2023, 11, 2568.

- Wang, X.; Xu, J. Conformable fractional Traub’s method for solving nonlinear systems. Numer. Algorithms 2023.

- Candelario, G. Métodos Iterativos Fraccionarios para la Resolución de Ecuaciones y Sistemas no Lineales: Diseño, Análisis y Estabilidad. Doctoral Thesis, Universitat Politècnica de València, Valencia, Spain, 2023. Available online: http://hdl.handle.net/10251/194270 (accessed on 16 May 2023).

- Singh, H.; Sharma, J.R. A fractional Traub-Steffensen-type method for solving nonlinear equations. Numer. Algorithms 2023.

- Toprakseven, S. Numerical Solutions of Conformable Fractional Differential Equations by Taylor and Finite Difference Methods. J. Nat. Appl. Sci. 2019, 23, 850–863.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables, 1st ed.; Academic Press: Boston, MA, USA, 1970.

- Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint Methods for Solving Nonlinear Equations, 1st ed.; Elsevier: St. Francisco, CO, USA, 2013.

- Traub, J.F. Iterative Methods for the Solution of Equations, 1st ed.; Prentice-Hall: Des Moines, IA, USA, 1964.

- Steffensen, J.F. Remarks on iteration. Scand. Actuar. J. 1933, 1, 64–72.

- Graham, R.L.; Knuth, D.E.; Patashnik, O. Concrete Mathematics, 1st ed.; Addison-Wesley Longman Publishing: Boston, MA, USA, 1994.

- Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions, 1st ed.; Dover Publications: Mineola, NY, USA, 1970.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

- Magreñán, Á.A. A new tool to study real dynamics: The convergence plane. Appl. Math. Comput. 2014, 248, 215–224.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).