Abstract

Multiplicative noise removal is a challenging problem in image denoising. In recent years, hyper-Laplacian priors have been successfully introduced into image denoising and have achieved significant denoising effects. In this paper, we propose a new hybrid regularizer model for removing multiplicative noise. The proposed model combines a non-convex higher-order total variation regularizer with an overlapping group sparsity regularizer on a hyper-Laplacian prior. It inherits the advantages of non-convex regularization and hybrid regularization, and may therefore preserve fine edge information while reducing the staircase effect. We develop an effective alternating minimization method for the proposed non-convex model within the alternating direction method of multipliers framework, where the majorization–minimization algorithm and the iteratively reweighted algorithm are adopted to solve the corresponding subproblems. Numerical experiments show that the proposed model outperforms the compared state-of-the-art models in terms of visual quality and certain image quality measurements.

1. Introduction

Image noise removal is an important pre-processing step in image processing. Multiplicative noise frequently appears in synthetic aperture radar, ultrasound imaging, and laser images, where it degrades image quality. Removing multiplicative noise is therefore an important problem. Mathematically, the degraded observation model with multiplicative noise is represented as

$$f = u \cdot \eta, \tag{1}$$

where $f$ denotes the degraded image, $u$ denotes the original image, and the multiplicative noise $\eta$ follows a Gamma distribution with the probability density function (PDF)

$$p(\eta) = \frac{L^{L}}{\Gamma(L)}\,\eta^{L-1} e^{-L\eta}\,\mathbf{1}_{\{\eta \ge 0\}}, \tag{2}$$

where $\Gamma(\cdot)$ is the Gamma function and $L$ is an integer that represents the noise level. The smaller the value of $L$, the more severe the noise. The mean of $\eta$ is 1 and its variance is $1/L$.
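As a minimal sketch of the degradation model described above, the following Python snippet simulates multiplicative Gamma noise. It assumes the noise is drawn from a Gamma distribution with shape $L$ and scale $1/L$, which gives mean 1 and variance $1/L$. The function name `add_gamma_noise` and the constant test image are illustrative and not taken from the paper.

```python
import numpy as np

def add_gamma_noise(u, L, seed=0):
    """Return (f, eta) with f = u * eta and eta ~ Gamma(shape=L, scale=1/L)."""
    rng = np.random.default_rng(seed)
    eta = rng.gamma(shape=L, scale=1.0 / L, size=u.shape)
    return u * eta, eta

# Illustrative constant image; a real experiment would load a test image.
u = np.full((256, 256), 100.0)
f, eta = add_gamma_noise(u, L=10)

# The sample mean should be close to 1 and the sample variance close to 1/L.
print(eta.mean(), eta.var())
```

A smaller `L` produces a larger noise variance, which matches the statement that smaller values of $L$ correspond to more severe degradation.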

Variational methods are effective for removing multiplicative noise. Among them, total variation (TV)-based methods are the best known and most widely used, since they preserve image edges while suppressing noise [1,2]. In [3], Rudin et al. designed the first TV-based multiplicative noise removal model (the RLO model), which has two equality constraints and can be solved by a gradient projection algorithm. Unfortunately, the RLO model can only deal with noise that follows a Gaussian distribution and does not yield satisfactory recovery results for Gamma-distributed noise. To remove multiplicative Gamma noise, Aubert and Aujol [4] proposed the following variational model (the AA model) based on total variation regularization:

Here, the regularization parameter is positive. Problem (3) is a non-convex optimization problem, so it is difficult to obtain a globally optimal solution. To avoid the difficulties caused by non-convex objective functions, many researchers have proposed convex models over the past decade. In [5,6,7], the authors used a logarithmic transformation to derive the corresponding strictly convex model:

where $z = \log u$. In addition, Steidl and Teuber [8] used the I-divergence as the data fidelity term of the objective function and proposed the following convex optimization model:

The advantage of the above model is that a nonlinear log transformation is not required. By combining an overlapping group sparsity total variation regularization term with the I-divergence data fidelity term, Liu et al. [9] proposed a convex variational model (“OGSTVD” for short) for removing multiplicative noise:

where the regularization term is the OGSTV function. They also demonstrated the effectiveness of their model in image restoration with numerical results.
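To make the convexity remarks above concrete, the following short derivation compares two pointwise data terms. It assumes that the AA-type fidelity takes the commonly used form $\log u + f/u$ and that the log-transformed fidelity takes the form $z + f e^{-z}$ with $z = \log u$ and $f > 0$. The symbols $g$ and $h$ are introduced here only for illustration.

$$
\begin{aligned}
g(u) &= \log u + \frac{f}{u}, & g''(u) &= \frac{2f - u}{u^{3}} \quad (< 0 \text{ for } u > 2f), \\
h(z) &= z + f e^{-z}, & h''(z) &= f e^{-z} > 0 \quad \text{for all } z .
\end{aligned}
$$

Under these assumptions, $g$ is convex only for $u \le 2f$, whereas $h$ is strictly convex with the unique minimizer $z = \log f$, obtained from $h'(z) = 1 - f e^{-z} = 0$.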

All of the above models use TV-based convex regularizers. Although many studies have shown that TV-based models perform well in keeping sharp edges, they tend to produce undesired staircase effects. To compensate for this shortcoming, many higher-order TV regularization terms have been proposed. By combining first-order and second-order TV, Liu [10] proposed a hybrid regularization model for multiplicative noise removal. Shama et al. [11] proposed a model based on second-order total generalized variation (TGV) regularization to remove multiplicative noise. Influenced by model (4), Lv [12] proposed a TGV-based model (M-TGV for short) for multiplicative noise removal with multilook M. To obtain high-quality recovered images, non-convex regularizers have also been introduced into variational models. A non-convex regularizer can effectively smooth the homogeneous regions of an image while preserving its edge details. Chartrand [13] considered a non-convex optimization problem whose objective function is the $\ell_p$ norm with $0 < p < 1$ and showed that sparse signals can be exactly reconstructed under a suitable theoretical condition. Models with non-convex regularizers can also be found in [14,15,16,17]. Extensive studies show that models with non-convex regularizers outperform models with convex regularizers in preserving image edges.

Natural image gradients obey a heavy-tailed distribution, and the hyper-Laplacian (HL) prior approximates this heavy-tailed distribution better than the Gaussian or Laplacian prior. Krishnan et al. [18] proposed a fast non-blind image deconvolution method based on a hyper-Laplacian prior. Many scholars have studied multispectral image denoising [19,20,21,22,23] based on the global spectral structure of the HL prior regularization term. Shi et al. [24] combined the OGSTV and HL prior regularizers (OGSHL) to remove Gaussian noise. They showed that their model can effectively use more texture information and strike a satisfying balance between suppressing staircase effects and recovering structural information.

Inspired by the above strategy, we develop a novel hybrid regularization model to remove multiplicative noise. The model combines the advantages of non-convex regularization and OGSHL regularization. More specifically, the model can be expressed as follows:

where the group-sparse regularization term is the OGSHL function. To the best of our knowledge, there has been no attempt to combine overlapping group sparsity on a hyper-Laplacian (OGSHL) prior with higher-order non-convex total variation regularizers to remove multiplicative noise. The main contributions of this paper are summarized as follows: (1) We propose a new hybrid regularization denoising model that combines a non-convex higher-order total variation with overlapping group sparsity total variation on a hyper-Laplacian prior. It can suppress the staircase effect while retaining more image detail. (2) To make the optimization model easy to handle, we propose an efficient alternating minimization method. (3) The experimental results verify that the proposed method outperforms the compared state-of-the-art methods.

The remainder of this article is organized as follows. Section 2 introduces the definitions of the higher-order TV and the OGSHL regularizer. In Section 3, based on the alternating direction method of multipliers, we develop an efficient algorithm to solve the corresponding multiplicative noise removal problem. In Section 4, numerical results show the effectiveness of the proposed method. Section 5 summarizes the paper.

2. Preliminaries

We present some relevant background knowledge in this section.

2.1. Second-Order TV

For any image $z$, $z_{i,j}$ denotes the intensity value of $z$ at pixel $(i,j)$. The first-order forward and backward difference operators are defined as follows:

By introducing the operators above, the second-order differential operator is expressed as
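The construction of second-order differences from first-order ones can be sketched in Python as follows. This is a minimal illustration under stated assumptions: periodic boundary conditions (chosen here because they are compatible with FFT-based solvers), axis 0 playing the role of $x$, and a particular ordering of the four second-order components. The function names are hypothetical, and the paper's exact boundary handling and component ordering may differ.

```python
import numpy as np

def dxf(z):
    """Forward difference along axis 0 (periodic boundary, an assumption)."""
    return np.roll(z, -1, axis=0) - z

def dxb(z):
    """Backward difference along axis 0 (periodic boundary, an assumption)."""
    return z - np.roll(z, 1, axis=0)

def dyf(z):
    """Forward difference along axis 1."""
    return np.roll(z, -1, axis=1) - z

def dyb(z):
    """Backward difference along axis 1."""
    return z - np.roll(z, 1, axis=1)

def second_order(z):
    """Stack the four second-order differences (xx, xy, yx, yy)."""
    return np.stack([dxb(dxf(z)), dyf(dxf(z)), dxf(dyf(z)), dyb(dyf(z))])

# Test image z[i, j] = i^2: the interior second difference along axis 0 is 2.
i = np.arange(8, dtype=float)
z = np.tile((i ** 2)[:, None], (1, 8))
h = second_order(z)
print(h[0][1:-1, 0])
```

Composing a backward difference with a forward difference gives the centered stencil $z_{i+1,j} - 2z_{i,j} + z_{i-1,j}$, which is why the quadratic test image produces a constant value away from the periodic wrap-around.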

2.2. Overlapping Group Sparsity on Hyper-Laplacian Prior

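For a two-dimensional image matrix $z$, a $K \times K$-point group is defined in [25] as

$$\tilde{z}_{i,j,K} = \begin{bmatrix} z_{i-m_1,\,j-m_1} & z_{i-m_1,\,j-m_1+1} & \cdots & z_{i-m_1,\,j+m_2} \\ z_{i-m_1+1,\,j-m_1} & z_{i-m_1+1,\,j-m_1+1} & \cdots & z_{i-m_1+1,\,j+m_2} \\ \vdots & \vdots & \ddots & \vdots \\ z_{i+m_2,\,j-m_1} & z_{i+m_2,\,j-m_1+1} & \cdots & z_{i+m_2,\,j+m_2} \end{bmatrix} \in \mathbb{R}^{K \times K},$$

where $m_1 = \lfloor (K-1)/2 \rfloor$ and $m_2 = \lfloor K/2 \rfloor$, and $\lfloor t \rfloor$ denotes the largest integer that is not greater than $t$. It is clear that $\tilde{z}_{i,j,K}$ can be seen as a square block of $K \times K$ contiguous samples of $z$. Stacking the columns of $\tilde{z}_{i,j,K}$ in lexicographic order, Liu et al. [25] arranged the group as a column vector $z_{i,j,K}$, i.e., $z_{i,j,K} = \tilde{z}_{i,j,K}(:)$. Then, the two-dimensional overlapping group sparsity regularizer is defined as

$$\varphi(z) = \sum_{i,j} \left\| z_{i,j,K} \right\|_2 .$$

From the above definition, the overlapping group sparsity total variation can be expressed as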
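The overlapping group sparsity function of [25] can be evaluated with a sliding window, as in the following minimal sketch. It assumes the group at pixel $(i,j)$ spans rows $i - m_1$ to $i + m_2$ and columns $j - m_1$ to $j + m_2$ with $m_1 = \lfloor (K-1)/2 \rfloor$ and $m_2 = \lfloor K/2 \rfloor$, and that entries outside the image are treated as zero (an assumption of this sketch). The function name `ogs` is illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def ogs(v, K):
    """Sum over all pixels (i, j) of the l2 norm of the K x K group at (i, j)."""
    m1, m2 = (K - 1) // 2, K // 2
    # Zero padding so that every pixel has a full K x K group (assumption).
    padded = np.pad(v, ((m1, m2), (m1, m2)))
    groups = sliding_window_view(padded, (K, K))  # shape (rows, cols, K, K)
    return np.sqrt((groups ** 2).sum(axis=(-2, -1))).sum()

# A single nonzero pixel belongs to K*K overlapping groups.
v = np.zeros((5, 5))
v[2, 2] = 3.0
print(ogs(v, 1), ogs(v, 3))
```

With $K = 1$ the function reduces to the sum of absolute values, while for $K > 1$ each pixel contributes to several overlapping groups, which is what couples neighbouring gradients together. An OGSTV-type value would then be obtained by applying `ogs` to the horizontal and vertical differences of the image.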

The hyper-Laplacian prior has recently attracted much attention since it offers a good approximation to the heavy-tailed distribution of natural image gradients. Jon et al. [26] define the OGS-HL regularizer by

with

where is a vector whose elements are the r-th power of the absolute value of the corresponding element, r is the scale parameter of the hyper-Laplacian distribution, , see [26]. If , .

3. Proposed Method

Within the framework of the alternating direction method of multipliers (ADMM), the variable splitting technique is used to solve the proposed model. We first introduce the new auxiliary variables $w$, $v$, and $q$. Then, the original unconstrained optimization problem (7) can be converted into the following equivalent constrained minimization problem:

We define the corresponding augmented Lagrangian function of (8) as follows:

where the penalty parameters are positive and the dual variables are the Lagrange multipliers. The optimal solution of (8) can be obtained by finding a saddle point of the augmented Lagrangian function within the ADMM framework. We alternately solve the following subproblems:

and the Lagrange multiplier parameters are updated as follows:

First, with the other variables and the Lagrange multipliers fixed, the w-subproblem is the following optimization problem:

Using the Euler–Lagrange equation of (9), we can obtain the optimal solution of the w subproblem:

Then, the solution of w is
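A pointwise nonlinear equation of this kind can be solved with Newton's method, as in the following minimal sketch. It assumes the w-subproblem has the form $\min_w \langle w + f e^{-w}, 1 \rangle + \frac{\rho}{2}\|w - c\|_2^2$, where `c` is a hypothetical array collecting the current image variable and the scaled multiplier, so that the optimality condition reads $1 - f e^{-w} + \rho (w - c) = 0$ elementwise. Newton's method is used here as one standard choice and is not claimed to be the authors' exact update.

```python
import numpy as np

def solve_w(f, c, rho, iters=20):
    """Newton iterations for 1 - f*exp(-w) + rho*(w - c) = 0, elementwise."""
    w = np.log(f)  # start from the minimizer of the fidelity term alone
    for _ in range(iters):
        g = 1.0 - f * np.exp(-w) + rho * (w - c)
        dg = f * np.exp(-w) + rho  # derivative of g, strictly positive
        w = w - g / dg
    return w

# Illustrative data: positive observations f and a perturbed target c.
rng = np.random.default_rng(0)
f = rng.uniform(1.0, 255.0, size=(4, 4))
c = np.log(f) + rng.normal(0.0, 0.5, size=f.shape)
w = solve_w(f, c, rho=2.0)

# Residual of the optimality condition at the computed solution.
residual = 1.0 - f * np.exp(-w) + 2.0 * (w - c)
print(np.abs(residual).max())
```

Because the left-hand side is strictly increasing and concave in $w$, the iterates approach the root monotonically after the first step, so a small fixed number of iterations is usually sufficient in this setting.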

Secondly, the q-subproblem is equivalent to the following nonconvex optimization problem:

The q-subproblem (11) is rewritten as

In this paper, we adopt the iteratively reweighted $\ell_1$ algorithm (IRL1) [27] to solve the non-convex regularized problem (12). As in [27], we approximate the non-convex minimization problem (12) by the following weighted problem:

Here, the weight vector is defined componentwise, and its definition involves a number close to zero. The minimization problem (13) has an optimal solution, which is obtained by the one-dimensional shrinkage operator:
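The reweighting and shrinkage steps can be sketched as follows. This is a minimal illustration assuming the q-subproblem has the form $\min_q \alpha \|q\|_p^p + \frac{\rho}{2}\|q - y\|_2^2$ with $0 < p < 1$, and that the weights follow the usual reweighted $\ell_1$ rule $p / (|q^k| + \varepsilon)^{1-p}$. The names `shrink`, `irl1_step`, `alpha`, `rho`, and `eps` are hypothetical.

```python
import numpy as np

def shrink(y, tau):
    """One-dimensional soft-thresholding: sign(y) * max(|y| - tau, 0)."""
    return np.sign(y) * np.maximum(np.abs(y) - tau, 0.0)

def irl1_step(y, q_prev, alpha, rho, p, eps=1e-6):
    """One reweighted-l1 update for alpha*||q||_p^p + rho/2*||q - y||^2."""
    # Weights computed from the previous iterate; eps avoids division by zero.
    weights = p / (np.abs(q_prev) + eps) ** (1.0 - p)
    return shrink(y, alpha * weights / rho)

y = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
print(shrink(y, 1.0))
q = irl1_step(y, q_prev=y, alpha=1.0, rho=1.0, p=0.5)
print(q)
```

Large entries of the previous iterate receive small weights and are shrunk only slightly, while small entries receive large weights and are driven to zero. This is the mechanism by which the reweighted problem mimics the non-convex penalty.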

Third, the v-subproblem can be expressed as

Using the majorization–minimization (MM) method [26], problem (15) can be solved iteratively as

where the weighting matrix is a diagonal matrix whose diagonal elements are

with , for and , ⊙ denotes element-wise multiplication, . Therefore, the explicit optimal solution to the v subproblem is given as follows:

where I denotes an identity matrix, and .
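The structure of such an MM iteration can be illustrated on a simpler problem. The sketch below applies MM to the plain overlapping group sparsity denoising problem $\min_v \frac{1}{2}\|v - v_0\|_2^2 + \gamma \varphi(v)$ from [25], not to the OGS-HL subproblem of this paper, whose weights additionally depend on the hyper-Laplacian exponent. It assumes an odd group size $K$ and zero padding, and all function and parameter names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_sum(a, K):
    """Sum of a over the K x K window centred at each pixel (K odd, zero padding)."""
    h = K // 2
    return sliding_window_view(np.pad(a, h), (K, K)).sum(axis=(-2, -1))

def objective(v, v0, gamma, K):
    """0.5*||v - v0||^2 + gamma * (sum of group l2 norms)."""
    return 0.5 * np.sum((v - v0) ** 2) + gamma * np.sqrt(box_sum(v ** 2, K)).sum()

def ogs_mm(v0, gamma, K=3, iters=10, eps=1e-12):
    """MM iterations for the plain OGS denoising problem."""
    v = v0.copy()
    for _ in range(iters):
        r = np.sqrt(box_sum(v ** 2, K))               # l2 norm of each group
        lam2 = box_sum(1.0 / np.maximum(r, eps), K)   # diagonal of Lambda^2
        v = v0 / (1.0 + gamma * lam2)                 # minimizer of the majorizer
    return v

rng = np.random.default_rng(0)
v0 = rng.normal(size=(16, 16))
v = ogs_mm(v0, gamma=0.5)
print(objective(v0, v0, 0.5, 3), objective(v, v0, 0.5, 3))
```

Each iteration replaces the non-smooth group norms by a quadratic majorizer built at the current iterate, so the update reduces to an elementwise division by a diagonal matrix, and the objective value does not increase from one iteration to the next.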

Finally, the z subproblem can be simplified as

By differentiating the minimization problem (18) directly, its optimal solution can be obtained from the following Euler–Lagrange equation:

By using the fast Fourier transform, the optimal solution of (19) can be given as
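The role of the fast Fourier transform in solving such a linear system can be sketched as follows. The example assumes periodic boundary conditions and a simplified system $(\mu I + \rho \nabla^{T}\nabla) z = b$ that contains only first-order difference terms. The actual system in the paper may also include second-order operators, and `mu`, `rho`, and `b` are hypothetical names.

```python
import numpy as np

def laplacian_eigs(n, m):
    """Eigenvalues of Dx^T Dx + Dy^T Dy for periodic forward differences."""
    ex = 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(n) / n)
    ey = 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(m) / m)
    return ex[:, None] + ey[None, :]

def fft_solve(b, mu, rho):
    """Solve (mu*I + rho*(Dx^T Dx + Dy^T Dy)) z = b with 2-D FFTs."""
    denom = mu + rho * laplacian_eigs(*b.shape)
    return np.real(np.fft.ifft2(np.fft.fft2(b) / denom))

def apply_operator(z, mu, rho):
    """Apply the same operator in the spatial domain, for verification."""
    lap = (4.0 * z - np.roll(z, 1, 0) - np.roll(z, -1, 0)
           - np.roll(z, 1, 1) - np.roll(z, -1, 1))
    return mu * z + rho * lap

rng = np.random.default_rng(0)
b = rng.normal(size=(8, 12))
z = fft_solve(b, mu=1.0, rho=3.0)
print(np.abs(apply_operator(z, 1.0, 3.0) - b).max())
```

Under periodic boundary conditions the difference operators are circular convolutions, so the system matrix is diagonalized by the 2-D discrete Fourier transform and the solve costs only a pair of FFTs and an elementwise division.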

We give a detailed description of the proposed method (named Algorithm 1: NHOGSHL) for removing multiplicative noise as follows.

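| Algorithm 1: NHOGSHL for image restoration under multiplicative noise |
| --- |
| Input: the observed image $f$ and the model parameters. |
| Initialize: the variables and the Lagrange multipliers. |
| While the stopping criterion is not satisfied, do |
| (1) Update $w$ by solving (10); |
| (2) Update $q$ by solving (14); |
| (3) Update $v$ by solving (17); |
| (4) Update $z$ by solving (20); |
| (5) Update the Lagrange multipliers. |
| Output: the restored image. |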

4. Numerical Experiments

In this section, we illustrate the effectiveness of the NHOGSHL model (7) by comparing it with the following three models: the CONVEX model in [28], the OGSTVD model in [9], and the M-TGV model in [12]. The six gray-scale images shown in Figure 1, which all have the same size, were used in the experiments. All experimental results in this article were obtained with MATLAB R2014a on a PC equipped with 4.00 GB RAM and an Intel(R) Core(TM) i5-6500U CPU (3.20 GHz). In addition to the peak signal-to-noise ratio (PSNR, unit: dB), which serves as a quantitative index of image quality, the structural similarity index measurement (SSIM) [29] was also calculated to assess the visual quality. They are defined as follows:

where denotes the original image and z denotes the recovered clean image.

where the means, standard deviations, and covariance are those of the original image and $z$, and the two constants are small positive numbers that keep the denominators away from zero. The higher the PSNR value and the closer the SSIM value is to 1, the better the image recovery. In all experiments, the stopping criterion is set to

Figure 1.

Test images: (a) Camera, (b) Peppers, (c) Boats, (d) Tulips, (e) Lin, (f) Man.
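The quality measures and a stopping test of the kind described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the PSNR uses a peak value of 255, the SSIM is computed from global image statistics rather than averaged over local windows as in [29], the constants follow the common defaults $(0.01 \cdot 255)^2$ and $(0.03 \cdot 255)^2$, and the stopping test is taken to be a relative change between successive iterates. The function names are hypothetical.

```python
import numpy as np

def psnr(z0, z, peak=255.0):
    """Peak signal-to-noise ratio in dB (peak value 255 is an assumption)."""
    mse = np.mean((z0 - z) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def global_ssim(z0, z, c1=(0.01 * 255) ** 2, c2=(0.03 * 255) ** 2):
    """SSIM from global statistics (a simplification of the windowed SSIM)."""
    mu0, mu1 = z0.mean(), z.mean()
    var0, var1 = z0.var(), z.var()
    cov = np.mean((z0 - mu0) * (z - mu1))
    num = (2 * mu0 * mu1 + c1) * (2 * cov + c2)
    den = (mu0 ** 2 + mu1 ** 2 + c1) * (var0 + var1 + c2)
    return num / den

def rel_change(z_new, z_old):
    """Relative change between successive iterates (assumed stopping measure)."""
    return np.linalg.norm(z_new - z_old) / np.linalg.norm(z_old)

rng = np.random.default_rng(0)
z0 = rng.uniform(0.0, 255.0, size=(32, 32))  # stand-in for the original image
z = z0 + 1.0                                  # stand-in for a restored image
print(psnr(z0, z), global_ssim(z0, z), rel_change(z, z0))
```

In an iterative solver, the loop would typically terminate once `rel_change` falls below a small tolerance. The tolerance value itself is a tuning choice and is not specified in this sketch.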

4.1. Parameters Setting

In this section, we describe how the values of the different model parameters were selected. We take the three images “Boats”, “Camera”, and “Lin” as examples to illustrate the process of parameter selection. Multiplicative noise at a fixed noise level was added to these three images.

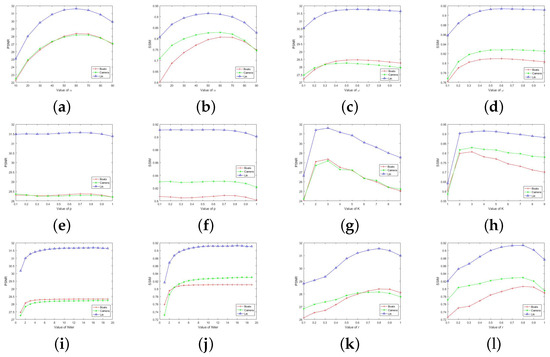

First, we assume that the other parameters are known and let change between 10 and 90, so as to determine the optimal value of parameter . The variation in PSNR and SSIM with the value of parameter is plotted in Figure 2. We can see that when the value is around 60, the proposed method has the best effect. Similarly, the optimal value of can be obtained. When the parameter is around 0.7, both PSNR and SSIM reach the maximum. Therefore, the parameter was set to in the following experiments.

Figure 2.

(a–l) The PSNR and SSIM values with respect to the parameters , , p, K, , and r.

Next, Figure 2 plots the PSNR and SSIM and the relationship between the parameters p and r. From Figure 2, we can see that the optimal results can be obtained when and . Therefore, we set and .

Finally, Figure 2 shows that the optimal value of the group size . The experimental results tend to converge to the optimum when the number of iterations is . Therefore, we set in our experiments.

4.2. Experimental Results

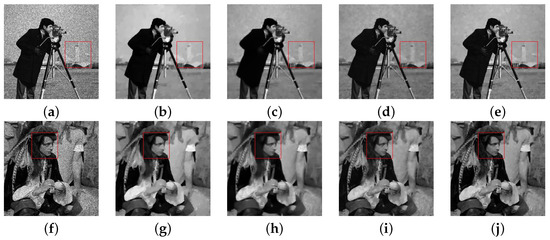

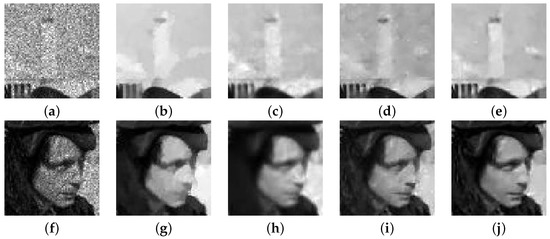

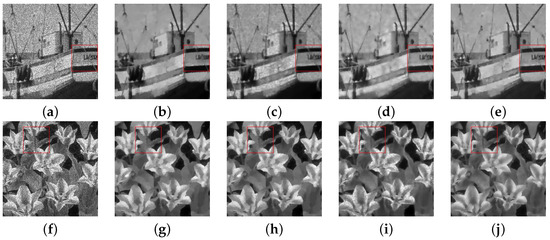

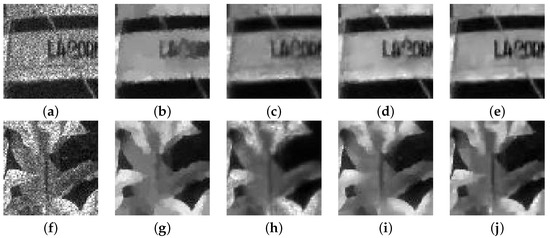

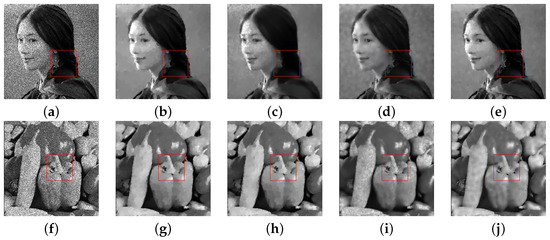

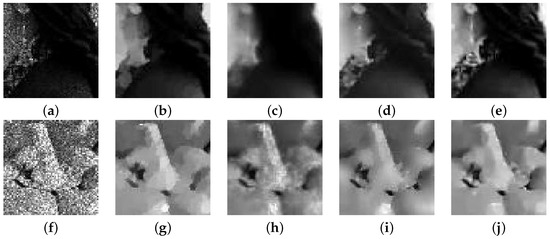

In this section, we conduct experiments to verify the performance of the proposed algorithm. First, Figure 3 shows the denoising results of the different methods at the first tested noise level; our method is competitive in both visual effect and quantitative analysis. We enlarged a region of each image (the red box in Figure 3) to better observe the recovered image. As seen in Figure 3 and Figure 4, our method better recovers the structure of the restored image. Next, Figure 5 and Figure 6 show the recovered images of “Boats” and “Tulips” for the four methods at the second tested noise level. The other compared methods produce staircase artifacts and lose a lot of detail, while our method overcomes these shortcomings. Finally, Figure 7 and Figure 8 show the images of “Lin” and “Peppers” recovered by the different methods at the third tested noise level. As can be seen in the figures, our method has a clear advantage in restoring sharp edges, such as the earrings of “Lin” and the stems of “Peppers”. Compared with the CONVEX and OGSTVD models, M-TGV and our method perform better in restoring detail information and texture structure. Compared with the M-TGV method, our method restores more details and textures.

Figure 3.

Recovery results of four algorithms on different images with the noise at : (a) Degraded. (b) CONVEX. (c) OGSTVD. (d) M-TGV. (e) NHOGSHL. (f) Degraded. (g) CONVEX. (h) OGSTVD. (i) M-TGV. (j) NHOGSHL.

Figure 4.

Zoomed-in region of Figure 3: (a) Degraded. (b) CONVEX. (c) OGSTVD. (d) M-TGV. (e) NHOGSHL. (f) Degraded. (g) CONVEX. (h) OGSTVD. (i) M-TGV. (j) NHOGSHL.

Figure 5.

Recovery results of four algorithms on different images with the noise at : (a) Degraded. (b) CONVEX. (c) OGSTVD. (d) M-TGV. (e) NHOGSHL. (f) Degraded. (g) CONVEX. (h) OGSTVD. (i) M-TGV. (j) NHOGSHL.

Figure 6.

Zoomed-in region of Figure 5: (a) Degraded. (b) CONVEX. (c) OGSTVD. (d) M-TGV. (e) NHOGSHL. (f) Degraded. (g) CONVEX. (h) OGSTVD. (i) M-TGV. (j) NHOGSHL.

Figure 7.

Recovery results of four algorithms on different images with the noise at : (a) Degraded. (b) CONVEX. (c) OGSTVD. (d) M-TGV. (e) NHOGSHL. (f) Degraded. (g) CONVEX. (h) OGSTVD. (i) M-TGV. (j) NHOGSHL.

Figure 8.

Zoomed-in region of Figure 7: (a) Degraded. (b) CONVEX. (c) OGSTVD. (d) M-TGV. (e) NHOGSHL. (f) Degraded. (g) CONVEX. (h) OGSTVD. (i) M-TGV. (j) NHOGSHL.

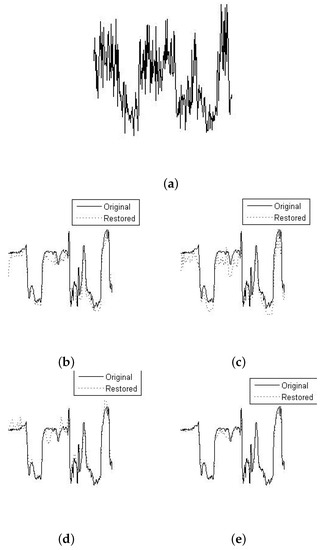

To clearly highlight the competitiveness of our method, Figure 9 plots the fifth column of the zoomed “Boats” image under the noise level . From the fitting results shown in Figure 9, our method can obtain much better fitting results than the other three methods.

Figure 9.

Slice of Boats (the 5th column) and their corresponding denoising results under the noise level : (a) Noise image. (b) CONVEX. (c) OGSTVD. (d) M-TGV. (e) NHOGSHL.

Table 1, Table 2 and Table 3 summarize the PSNR and SSIM values of the different methods under the different noise levels. The tables show that our method achieves higher PSNR and SSIM values, which also demonstrates its effectiveness in terms of visual effect and quantitative analysis.

Table 1.

Summary of the results of PSNR values and SSIM values restored by different algorithms under the noise level of .

Table 2.

Summary of the results of PSNR values and SSIM values restored by different algorithms under the noise level of .

Table 3.

Summary of the results of PSNR values and SSIM values restored by different algorithms under the noise level of .

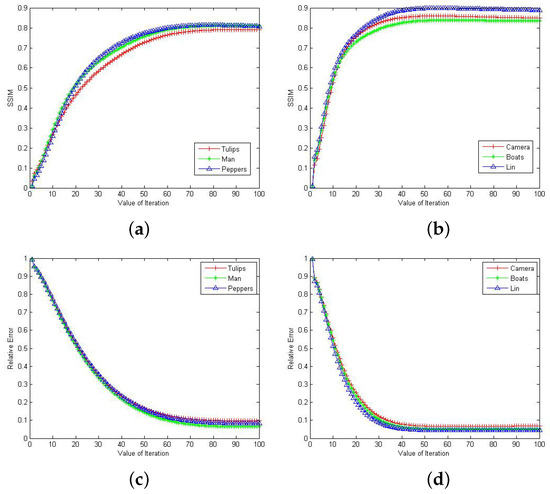

4.3. Convergence Analysis

In this section, we verify the convergence of our method. Figure 10 plots the SSIM and RelErr values of the restored images against the number of iterations under different noise levels. As seen in Figure 10, the relative error curves become stable as the number of iterations increases.

Figure 10.

The SSIM and the RelErr values versus iteration in our model for different images with different noise levels: (a) . (b) . (c) . (d) .

5. Conclusions

This paper presents a new non-convex regularization model for multiplicative noise removal. The new model employs non-convex norm regularization and OGSHL regularization as a hybrid regularizer. An efficient alternating minimization method based on the MM algorithm and the iteratively reweighted algorithm is proposed to solve the NHOGSHL model under the ADMM framework. Numerical experiments demonstrate that the NHOGSHL model is competitive with the compared methods. In future work, we hope to extend this method to the removal of mixed noise.

Author Contributions

J.Z. proposed the algorithm and designed the experiments; Y.W. and J.W. performed the experiments; J.Z. and B.H. wrote the paper; All authors read and approved the final manuscript.

Funding

This work was supported by a Project of Shandong Province Higher Educational Science and Technology Program (J17KA166) and by the Joint Funds of the National Natural Science Foundation of China (U22B2049).

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Li, F.; Ng, M.K.; Shen, C.M. Multiplicative noise removal with spatially varying regularization parameters. SIAM J. Imaging Sci. 2010, 3, 1–20.

2. Shi, B.L.; Huang, L.H.; Pang, Z.F. Fast algorithm for multiplicative noise removal. J. Vis. Commun. Image Represent. 2012, 23, 126–133.

3. Rudin, L.; Lions, P.L.; Osher, S. Multiplicative denoising and deblurring: Theory and algorithms. In Geometric Level Set Methods in Imaging, Vision, and Graphics; Springer: Berlin/Heidelberg, Germany, 2003; pp. 103–119.

4. Aubert, G.; Aujol, J.F. A variational approach to removing multiplicative noise. SIAM J. Appl. Math. 2008, 68, 925–946.

5. Bioucas-Dias, J.M.; Figueiredo, M.A.T. Multiplicative noise removal using variable splitting and constrained optimization. IEEE Trans. Image Process. 2010, 19, 1720–1730.

6. Huang, Y.M.; Ng, M.K.; Wen, Y.W. A new total variation method for multiplicative noise removal. SIAM J. Imaging Sci. 2009, 2, 20–40.

7. Shi, J.; Osher, S. A nonlinear inverse scale space method for a convex multiplicative noise model. SIAM J. Imaging Sci. 2008, 1, 294–321.

8. Steidl, G.; Teuber, T. Removing multiplicative noise by Douglas-Rachford splitting method. J. Math. Imaging Vis. 2010, 36, 168–184.

9. Liu, J.; Huang, T.Z.; Liu, G.; Wang, S.; Lv, X.G. Total variation with overlapping group sparsity for speckle noise reduction. Neurocomputing 2016, 216, 502–513.

10. Liu, P. Hybrid higher-order total variation model for multiplicative noise removal. IET Image Process. 2020, 14, 862–873.

11. Shama, M.G.; Huang, T.Z.; Liu, J.; Wang, S. A convex total generalized variation regularized model for multiplicative noise and blur removal. Appl. Math. Comput. 2016, 276, 109–121.

12. Lv, Y.H. Total generalized variation denoising of speckled images using a primal-dual algorithm. J. Appl. Math. Comput. 2020, 62, 489–509.

13. Chartrand, R. Exact reconstruction of sparse signals via nonconvex minimization. IEEE Signal Process. Lett. 2007, 14, 707–710.

14. Han, Y.; Feng, X.C.; Baciu, G.; Wang, W.W. Nonconvex sparse regularizer based speckle noise removal. Pattern Recognit. 2013, 46, 989–1001.

15. Chen, X.J.; Zhou, W.J. Smoothing nonlinear conjugate gradient method for image restoration using nonsmooth nonconvex minimization. SIAM J. Imaging Sci. 2010, 3, 765–790.

16. Nikolova, M. Analysis of the recovery of edges in images and signals by minimizing nonconvex regularized least-squares. Multiscale Model. Simul. 2005, 4, 960–991.

17. Nikolova, M.; Ng, M.K.; Zhang, S.; Ching, W.K. Efficient reconstruction of piecewise constant images using nonsmooth nonconvex minimization. SIAM J. Imaging Sci. 2008, 1, 2–25.

18. Krishnan, D.; Fergus, R. Fast image deconvolution using hyper-Laplacian priors. In Proceedings of the Advances in Neural Information Processing Systems 22: 23rd Annual Conference on Neural Information Processing Systems 2009, Vancouver, BC, Canada, 7–10 December 2009; pp. 1033–1041.

19. Levin, A.; Weiss, Y.; Durand, F.; Freeman, W.T. Understanding and evaluating blind deconvolution algorithms. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2009, Miami, FL, USA, 20–25 June 2009; pp. 1964–1971.

20. Fergus, R.; Singh, B.; Hertzmann, A.; Roweis, S.T.; Freeman, W.T. Removing camera shake from a single photograph. ACM Trans. Graphics 2006, 25, 787–794.

21. Chang, Y.; Yan, L.; Zhong, S. Hyper-Laplacian regularized unidirectional low-rank tensor recovery for multispectral image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 5901–5909.

22. Kong, J.; Lu, K.; Jiang, M. A new blind deblurring method via hyper-Laplacian prior. Procedia Comput. Sci. 2017, 107, 789–795.

23. Zuo, W.M.; Meng, D.Y.; Zhang, L.; Feng, X.C.; Zhang, D. A generalized iterated shrinkage algorithm for non-convex sparse coding. In Proceedings of the IEEE International Conference on Computer Vision 2013, Sydney, Australia, 3–6 December 2013; pp. 217–224.

24. Shi, M.Z.; Han, T.T.; Liu, S.Q. Total variation image restoration using hyper-Laplacian prior with overlapping group sparsity. Signal Process. 2016, 126, 65–76.

25. Liu, J.; Huang, T.Z.; Selesnick, I.W.; Lv, X.G.; Chen, P.Y. Image restoration using total variation with overlapping group sparsity. Inf. Sci. 2015, 295, 232–246.

26. Jon, K.; Sun, Y.; Li, Q.X.; Liu, J.; Wang, X.F.; Zhu, W.S. Image restoration using overlapping group sparsity on hyper-Laplacian prior of image gradient. Neurocomputing 2021, 420, 57–69.

27. Candès, E.J.; Wakin, M.B.; Boyd, S.P. Enhancing sparsity by reweighted ℓ1 minimization. J. Fourier Anal. Appl. 2008, 14, 877–905.

28. Zhao, X.L.; Wang, F.; Ng, M.K. A new convex optimization model for multiplicative noise and blur removal. SIAM J. Imaging Sci. 2014, 7, 456–475.

29. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).