Abstract

Artificial intelligence (AI)-enabled diagnosis has emerged as a promising avenue for medical image analysis, such as X-ray analysis, across a wide range of healthcare disciplines, including dentistry, where it offers swift, efficient, and accurate identification of various dental conditions. In this study, we investigated the application of the YOLOv9 model, a recent object detection algorithm, to automate the diagnosis of dental diseases from X-ray images. The proposed methodology encompasses a comprehensive analysis of dental datasets, as well as preprocessing and model training. The proposed model achieved an accuracy, precision, recall, mAP@50, and F1-score of 84.89%, 89.2%, 86.9%, 89.2%, and 88%, respectively, with an inference time of 7.9 ms. These results correspond to improvements over the baseline model of 17.9, 15.8, 18.5, and 16.81 percentage points in precision, recall, mAP@50, and F1-score, respectively, demonstrating the effectiveness of the proposed approach in identifying dental conditions. Additionally, we discuss the challenges in automated diagnosis of dental diseases and outline future research directions to address knowledge gaps in this domain. This study contributes to the growing body of literature on AI in dentistry, providing insights for researchers and practitioners.

1. Introduction

Advances in digital dentistry have revolutionized diagnostic procedures, offering more efficient and precise methods for identifying various oral diseases. The use of artificial intelligence (AI) in diagnostic processes has enormous potential for improving diagnosis accuracy and speed, particularly when evaluating X-ray images [1].

AI-empowered diagnostics are becoming increasingly accurate in identifying dental problems, including caries and other dental diseases. Likewise, predictive modeling is another area of research in which AI models are investigated to predict treatment outcomes, support dental decision-making, and enhance patient satisfaction. Additionally, personalized patient care planning and remote consultation, including telemedicine, can significantly improve the overall landscape of modern dentistry. Thus, AI technologies, including machine learning and deep learning models, have been contributing significantly toward effective dental health practices [2,3].

In the realm of dental diagnostics, as research endeavors increasingly integrate AI, our analysis has identified significant knowledge gaps that demand attention. Foremost among these is the insufficiency of both quality and quantity in contemporary datasets, which undermines the reliability of AI-driven dental diagnostic tools. Furthermore, the validation of AI instruments by dental practitioners in real-world clinical settings often falls short, resulting in uncertainties surrounding their practical implementation. Additionally, there is a lack of clarity and transparency regarding AI methodologies within the dental field, which may hinder the adoption of these advancements by professionals [4]. Moreover, research on AI-enabled dental diagnostics remains limited to a narrow range of dental conditions, thereby constraining its potential impact and breadth of application. To address these challenges, future research should prioritize improving the quality and size of datasets to enhance the accuracy and reliability of AI in dental diagnostics. Collaborative efforts among dental professionals are crucial for rigorously validating and refining AI tools in clinical settings. Efforts should also be directed towards demystifying AI technologies for dental practitioners, emphasizing the ethical and legal considerations inherent in their use. Lastly, expanding the scope of dental conditions studied using AI will provide a more comprehensive understanding of its capabilities and limitations in dental diagnostics. By addressing these research gaps, the path will be paved for the development and adoption of more effective, efficient, and widely accepted AI applications in dentistry [5]. Further, the current study aims to contribute toward Saudi Arabia’s Vision 2030 goals for improving the healthcare sector through digital transformation. Currently, there are limited studies in this domain considering the local datasets.

In summary, the research gaps are as follows:

- Dataset: Insufficiency of both quality and quantity in contemporary datasets, which undermines the reliability of AI-driven dental diagnostic tools.

- Multi-label classification: Research on AI-enabled dental diagnostics remains limited to a narrow range of dental conditions, thereby constraining its potential impact and breadth of application.

- Clinical validation: The validation of AI instruments by dental practitioners in real-world clinical settings often falls short. This creates uncertainties about their practical implementation.

To address the research gaps mentioned, the objectives and contributions of the study are as follows:

- Comprehensive Data Collection and Rigorous Processing: We compile an extensive dataset of dental X-ray images representing a broad spectrum of dental conditions, including rare and common pathologies. It is followed by meticulous processing and augmentation techniques to enhance the dataset.

- Model development and validation: We aim to design and refine deep learning models tailored explicitly to multi-label classification in dental image analysis. Subsequently, the models are evaluated and validated.

- Collaborative Integration with Dental Clinical Practices: To ensure the practical applicability of our models, we will collaborate closely with dental specialists and practitioners. This includes pilot testing in clinical environments to gather feedback and further refine the model.

The remaining part of this paper is organized as follows: Section 2 provides an overview of Related Work, discussing previous research and advancements in the field. Section 3 offers an overview of the Methodology employed in this study, outlining the materials and methods utilized. Section 4 presents the performance and results obtained from our study, detailing the outcomes of our research efforts. Section 5 initiates the Discussion, in which we delve into the implications of our findings and compare them with the existing literature. Finally, Section 6 summarizes our findings and concludes the paper by highlighting avenues for future research.

2. Related Work

According to Samaranayake et al. [2], AI has played a significant role in disease detection, including dental diseases. It plays a transformative role in dentistry and helps dental health professionals gain confidence [3,4]. Lira and Giraldi introduced an automated method for analyzing panoramic dental radiographs (PDR) or X-ray images. Their method focused on tooth segmentation and feature extraction using mathematical morphology, quadtree decomposition, thresholding, snake models, and PCA. While successful in tooth segmentation, it faced challenges with overlapping teeth and lacked clear information about the dataset used. Nonetheless, their technique offers a novel approach with potential applications in the field [6].

In another study, Xie et al. [7] explored the use of artificial neural networks (ANNs) in orthodontic decision-making for children aged 11 to 15. They used a back propagation (BP) ANN model and analyzed a dataset of 200 individuals with various indices. The ANN model predicted the need for extractions with an 80% success rate. While the study’s methodology and focus on a specific age group were detailed, its applicability to other age ranges or orthodontic conditions may be limited [7].

Albahbah et al. [8] introduced a dental caries identification method using dental X-ray images in 2016. They used artificial neural networks (ANNs) and Histograms of Oriented Gradients (HOG) for feature extraction. However, the study lacked important dataset details, raising concerns about its reliability and applicability [8]. Subsequently, Naam et al. aimed to improve the detection of proximal caries in panoramic dental X-ray images. They utilized image processing techniques but lacked comparative effectiveness data and dataset details. The study highlighted technology’s potential to enhance caries detection accuracy and advance panoramic dental X-ray image analysis [9]. Tran et al. developed a consultancy system for dentistry diagnostics using X-ray images. They used fuzzy rule-based systems, including the Fuzzy C-Means (FCM) clustering algorithm and Fuzzy Inference System (FIS), to aid dentists in decision-making. The system showed efficacy in identifying dental issues, outperforming the Fuzzy K-Nearest Neighbor (FKNN) method. However, limitations included issues with dataset quality and quantity, which could affect nuanced diagnostic outcomes [10]. Furthermore, Lee et al. used deep CNN algorithms to detect dental caries on periapical radiographs. They achieved high diagnostic accuracies using a pre-trained GoogLeNet Inception v3 CNN. The study’s strengths included robust evaluation metrics, but its limitations included a focus on permanent teeth, limited image resolution, and the absence of clinical parameters [11].

Jader et al. developed a deep learning system using the Mask R-CNN architecture to segment teeth in panoramic X-ray images. The system showed promising results but had limitations due to a small dataset and ambiguous data preprocessing techniques [12]. Tuzoff et al. achieved a sensitivity of 0.9941 and an accuracy of 0.9945 for tooth identification, along with a sensitivity of 0.9800 and specificity of 0.9994 for tooth numbering using the Faster R-CNN architecture. Their study included a dataset of 1574 panoramic radiographs [13]. In the same year, Chen et al. achieved over 90% accuracy and recall rates in teeth detection using the Faster R-CNN model with a dataset of 1250 digitized dental films. The method showed promise in automating teeth detection tasks, although further optimization and customization may be needed for different dental imaging scenarios [14].

Geetha and Aprameya used machine learning to diagnose dental caries in radiographs. Their method, employing KNN, achieved high accuracy (98.5%) and precision, with a low False Positive Rate (4.7%). This study highlights the potential of automated dental caries diagnosis systems, emphasizing the need for improved dataset quality and quantity [15]. Vinayahalingam et al. used deep learning to automate the detection and segmentation of M3 and IAN on panoramic dental radiographs. Their study achieved promising results with mean Dice-coefficients of 0.947 for M3s and 0.847 for IAN. Challenges included feature extraction specifications and contrast issues on the radiographs [16].

In 2020, Wang et al. introduced an automated dental X-ray detection method using adaptive histogram equalization and a hybrid multi-CNN, achieving over 90% test accuracy and an AUC of 0.97 [17]. In the same year, a study by You et al. developed a deep learning-based AI model for identifying dental plaque on primary teeth, showing promising results in plaque detection. However, limitations included a lack of CNN architecture details, small sample size, and scope constraints [18]. Chung et al. proposed a novel approach for automating the detection and identification of individual teeth in panoramic X-ray images in 2020. Their method achieved precise tooth detection and identification, offering potential application in dental clinics without additional identification algorithms [19].

Additionally, in 2020, Bianchi et al. explored AI’s potential in dental and maxillofacial radiology (DMFR), finding that CNNs performed comparably to expert dentists in dental imaging tasks. They remain optimistic about AI’s transformative role in DMFR for diagnosis and treatment planning, while acknowledging challenges such as algorithm complexity and dataset limitations [20]. Likewise, Muresan et al. proposed an automated method for teeth recognition and categorizing dental issues in panoramic X-ray images. Their approach accurately segmented teeth and identified abnormalities, showing superiority over alternative methods, although further refinement is needed to enhance accuracy and memory usage [21].

In 2021, Sonavane et al. employed a CNN-based approach to detect cavities using an open source dataset from Kaggle comprising 74 teeth images, with 60 for training and 14 for testing. They achieved a maximum accuracy of 71.43%. However, they suggest increasing the dataset size to enhance accuracy further [22]. Similarly in the same year, Huang and Lee introduced a caries detection study using optical coherence tomography (OCT) images. They achieved a success rate of 95.21% with the ResNet-152 architecture using 748 single tooth pictures from 63 OCT tooth images [23]. López-Janeiro et al. aimed to enhance the diagnostic accuracy of malignant salivary gland tumors using a machine learning algorithm. Despite limitations such as small sample size, the study highlights the potential of machine learning in improving tumor identification [24]. In the same year, Rodrigues et al. explored the possibilities of AI in dentistry, emphasizing the importance of collaboration between dental and technical disciplines [25].

Another study in 2021 evaluated machine learning methods for diagnosing temporomandibular disorder (TMD) using infrared thermography (IT). The study discusses the effectiveness of different techniques and highlights limitations such as a small sample size and lack of generalizability [26]. Similarly, another study in the same year examined the role of AI in dentistry, exploring its potential applications and reviewing techniques in the field. While discussing recent publications and comparing methodologies, the study also addressed research challenges and future directions. However, it lacked specific examples of AI use in dentistry and provided limited details about methodology and findings [27].

Subbotin proposed improving panoramic dental photograph display for dentists using computer technology and cloud platforms in 2021. However, the study lacked crucial details about the machine learning system’s components and performance metrics [28]. Lastly, in 2021, Muramatsu et al. developed a CNN-based system to automate tooth detection and classification in dental panoramic radiographs. Their approach aims to create structured dental charts automatically and facilitate computerized dental analysis. Using a dataset of 1000 images from Asahi University Hospital, they achieved high accuracy in tooth detection (96.4%) and classification for tooth types and conditions (93.2% and 98.0%, respectively). However, limitations include a small dataset and the exclusion of molars [29].

In 2022, Imak et al. proposed a novel method for automatically detecting dental caries using periapical images. They utilized a multi-input deep CNN ensemble (MI-DCNNE) model and achieved a remarkable accuracy of 99.13% [30]. In the same year, Kühnisch et al. conducted a study on dental diagnostics using artificial intelligence. They employed convolutional neural networks (CNNs) to identify and characterize tooth decay from intraoral pictures, achieving an accuracy rate of 92.5% in detecting cavities [31]. Almalki et al. developed a deep learning model to identify and categorize common dental issues. They created a dataset of 1200 dental X-ray images and utilized the YOLOv3 model, achieving a high accuracy rate of 99.33%. However, the limited sample size and restricted hospital data pose limitations to the study [32].

Similarly, in 2022, AL-Malaise et al. developed a method to accurately classify dental X-ray images into three categories: cavities, fillings, and implants. Using a dataset of 116 panoramic dental X-ray images, they trained a convolutional neural network called NASNet, which achieved an impressive accuracy rate of 96.51% and outperformed other models [33]. Another study proposed a CNN algorithm for detecting and segmenting mucosal thickening and mucosal retention cysts in the maxillary sinus using CBCT scans. The algorithm achieved high accuracy regardless of the imaging protocol, although limitations included manual segmentation, a small sample size, and lack of external validation [34].

Zhou et al. proposed a context-aware CNN for dental caries detection, considering neighboring teeth information to improve accuracy. Their method outperformed a conventional CNN baseline and showed improved diagnostic accuracy [35]. Likewise, another study developed a CNN model based on NASNet to accurately classify dental X-ray images into categories such as cavity, filling, and implant. The model achieved over 96% accuracy, and the study discussed transfer learning, multiclass classification, and potential applications while also noting limitations and areas for improvement [36]. An AI-based system was developed using deep learning architectures to detect periodontal bone loss in dental images. Among various classifiers, linear SVM achieved the highest accuracy. However, specific details and evaluation methods were not provided, limiting the understanding of the study’s findings [37].

In 2023, Anil et al. explored the transformative potential of AI in dental caries detection, discussing classifiers like SVMs, Random Forests, and CNNs. Although lacking specific dataset details and direct AI model findings, the study provided valuable insights into the impact of AI on dental caries diagnosis [38]. In the same year, Zhu et al. developed an AI framework using CNNs to enhance the accuracy and efficiency of diagnosing dental ailments on panoramic radiographs. The framework demonstrated high specificity and diagnostic performance for various dental diseases, highlighting the promising potential of AI, particularly CNNs, in revolutionizing dental diagnostics using PRs [39].

Based on the brief literature review, it is apparent that AI has been a promising candidate in dental diagnostics, and there is still room for improvement in terms of dataset diversity, investigation of the latest deep learning approaches to further fine-tune accuracy, and mitigation of false positives [40,41]. Moreover, research on AI-enabled dental diagnostics remains limited to a narrow range of dental conditions, thereby constraining its potential impact and breadth of application. To address these challenges, future research endeavors should prioritize enhancing the quality and size of datasets to bolster the accuracy and dependability of AI in dental diagnostics. Collaborative efforts involving dental professionals are crucial for the rigorous validation and refinement of AI tools in clinical contexts [1].

Furthermore, accuracy, recall, precision, F1-score, mAP50, mAP50-95, and AUC were among the most widely used figures of merit. Several deep learning models have been investigated in the literature; however, the YOLO family was among the most widely used for dental X-ray images. Most of the datasets were obtained from local and community hospitals, with sizes ranging from a minimum of 49 to a maximum of 3000 images per dataset and an average of around 500 images. Nonetheless, no noteworthy study has focused on Middle Eastern datasets of dental X-ray images.

Efforts should also be directed towards demystifying AI technologies for dental practitioners, emphasizing the ethical and legal considerations inherent in their use. Lastly, expanding the scope of dental conditions studied using AI will provide a more comprehensive understanding of its capabilities and limitations in the realm of dental diagnostics. By addressing these gaps, the path will be paved for the development and adoption of more effective, efficient, and widely accepted AI applications in dentistry. This is where the proposed study plays its role.

Table 1 presents a summary of the literature review.

Table 1.

Summary of literature review.

3. Methodology

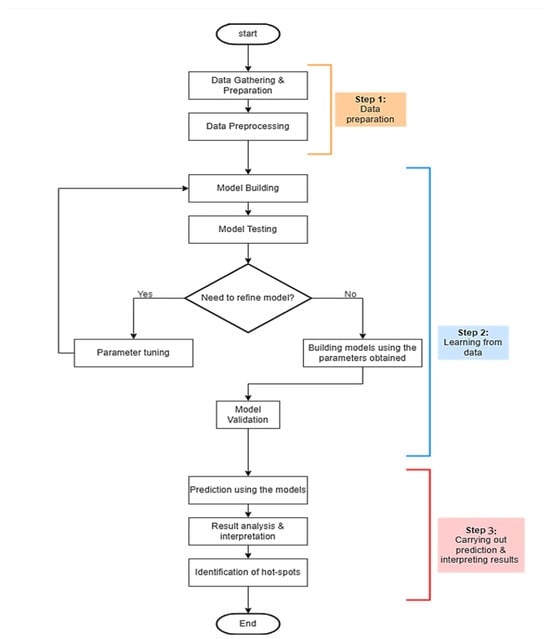

This section describes the proposed methodology for AI-based dental image analysis for diagnosis. The proposed research employs standard computational intelligence methodologies, with a primary focus on one technique: YOLOv9. The outcomes derived from this technique are compared with prior study results, primarily based on accuracy and error rate metrics. The following discussion covers the general steps involved in constructing a computational intelligence-based system. These standard procedures are visually represented in Figure 1, which comprises three major phases (milestones): data preparation, data-driven learning, and prediction and result interpretation.

Figure 1.

Methodological steps.

Figure 1 provides a structured breakdown of the essential phases and processes involved in developing the proposed models. Each primary phase is outlined briefly.

Step 1: Data Preparation. At this foundational stage, the focus is on two critical operations: “Data Gathering and Preparation” and “Data Preprocessing.” Initially, data is collected and prepared. Subsequently, preprocessing is performed to ensure the data is suitable for training, after which the data proceeds to the subsequent stage.

Step 2: Learning from Data. This phase encompasses a range of activities, including “Model Building,” “Model Testing,” “Parameter Tuning,” “Rebuilding Models with the Tuned Parameters,” and “Model Validation.” Here, the specific learning technique YOLO v9 has been employed. The primary objective is to identify the most accurate model. The process begins with model creation and subsequent testing. The evaluation outcome determines whether the model is ready for the next phase or requires recalibration. If adjustments are needed, parameters are fine-tuned, and the activities in Step 2 are revisited. Upon successful validation, the model is reconstructed using the refined parameters and validated again. Once validation is successful, the model advances to the final phase.

Step 3: Prediction and Results Interpretation. The concluding phase encompasses activities such as “Model-Based Predictions,” “Result Analysis and Interpretation,” and “Hotspot Identification.” The model, refined in Step 2, is exposed to a new dataset to generate predictions. Following the prediction, the results undergo comprehensive analysis, leading to interpretations that identify the most effective model. Additionally, ‘hotspots’ or areas for potential improvement in subsequent projects are identified.

3.1. Data Preprocessing

The dataset was obtained from the Roboflow open repository, where it was uploaded and investigated by Fay (2024) [42]. The dataset was already annotated. The authors also reported preliminary results of 70.7% mAP@50, 71.3% precision, and 71.1% recall using their Dentix Computer Vision Model [42]. The proposed study is the second study in this regard; hence, [42] serves as the baseline study in our case.

Following data preprocessing, our dataset was organized into two primary folders, each containing subfolders for “images” and “labels,” in line with the standard data structure used across all iterations of the YOLO framework. The dataset consisted of 589 images with four classes, namely ‘crown-bridge’, ‘filling’, ‘implant’, and ‘Root Canal Obturation’, which were then partitioned into distinct training, testing, and validation sets, comprising 70% (412 images), 20% (118 images), and 10% (59 images) of the total data, respectively. This allocation ensures that the proposed model is trained on a diverse range of data while also enabling us to identify, through validation, any classes that may be inaccurately detected.
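The 70/20/10 partition described above can be illustrated with a short sketch. It is not the authors' splitting procedure: the file names, the random seed, and the `split_dataset` helper are hypothetical, and only the image count and the split fractions come from the text.

```python
import random

def split_dataset(items, train_frac=0.7, test_frac=0.2, seed=0):
    """Shuffle items reproducibly and split them into train/test/validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seed is an arbitrary assumption
    n_train = round(train_frac * len(shuffled))
    n_test = round(test_frac * len(shuffled))
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    val = shuffled[n_train + n_test:]  # remainder, roughly 10%
    return train, test, val

# Hypothetical file names standing in for the 589 dataset images.
image_names = [f"img_{i:04d}.jpg" for i in range(589)]
train, test, val = split_dataset(image_names)
print(len(train), len(test), len(val))  # 412 118 59
```

Assigning the remainder to the validation set guarantees that the three subsets always add up to the full dataset, regardless of rounding.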

For data preprocessing, the following operations were performed. Initially, the images were converted to grayscale, and a smoothing operation was applied. Subsequently, histogram equalization was performed using the “Contrast Limited Adaptive Histogram Equalization” function. Further, auto-orientation was applied to the images, and they were uniformly stretched to 500 × 500 pixels.
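The preprocessing chain above can be sketched in plain Python to make the order of operations concrete. This is a simplified illustration under several assumptions: the 3 × 3 mean filter is an assumed smoothing kernel, global histogram equalization is used as a stand-in for CLAHE (which additionally works on local tiles and clips the histogram), nearest-neighbour sampling is an assumed resizing method, and auto-orientation is omitted. In practice an image library would be used; OpenCV, for instance, provides CLAHE through `cv2.createCLAHE`.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to rows of 0-255 luminance values."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in rgb_image]

def box_blur(gray):
    """Smooth with a 3x3 mean filter, averaging only in-bounds neighbours."""
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [gray[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(round(sum(window) / len(window)))
        out.append(row)
    return out

def equalize_histogram(gray):
    """Global histogram equalization (simplified stand-in for CLAHE)."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in gray]
    lut = [max(0, round((c - cdf_min) * 255 / (total - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in gray]

def resize_nearest(gray, size=500):
    """Stretch to size x size pixels using nearest-neighbour sampling."""
    h, w = len(gray), len(gray[0])
    return [[gray[y * h // size][x * w // size] for x in range(size)]
            for y in range(size)]

def preprocess(rgb_image, size=500):
    """Grayscale -> smoothing -> equalization -> uniform stretch."""
    gray = to_grayscale(rgb_image)
    return resize_nearest(equalize_histogram(box_blur(gray)), size)

# Tiny synthetic gradient image used only to exercise the pipeline.
sample = [[(x * 40, x * 40, x * 40) for x in range(6)] for _ in range(4)]
processed = preprocess(sample)
print(len(processed), len(processed[0]))  # 500 500
```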

It is worth noting that the dataset was imbalanced: crown-bridge, filling, implant, and root-canal-obturation had 397, 491, 45, and 210 instances, respectively. Nonetheless, no balancing operation was performed; rather, a ‘let the data speak’ approach was employed. Moreover, one image may belong to more than one class; for instance, filling and implant may be represented by the same image. An image may belong to at least one and up to all four classes, depending on the dental conditions present.
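To quantify the imbalance noted above, the following sketch computes each class's share of all annotated instances and the ratio between the largest and smallest class. The instance counts are those reported in the text; the variable names are illustrative.

```python
# Instance counts per class as reported in the text.
instances = {
    "crown-bridge": 397,
    "filling": 491,
    "implant": 45,
    "root-canal-obturation": 210,
}

total = sum(instances.values())  # 1143 annotated instances in total
shares = {name: count / total for name, count in instances.items()}
imbalance_ratio = max(instances.values()) / min(instances.values())

for name, share in shares.items():
    print(f"{name}: {share:.1%}")
print(f"largest-to-smallest class ratio: {imbalance_ratio:.1f}")
```

With these counts, the filling class is roughly eleven times larger than the implant class.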

Additionally, data augmentation techniques have been employed to enhance the robustness of the proposed model. Each image underwent all operations and produced multiple images. The types of augmentation methods are given in Table 2.

Table 2.

Data augmentation methods.

3.2. The Proposed Individual Computational Intelligence

YOLOv9 is a state-of-the-art, optimized, and stable version of the YOLO family of object detection algorithms, employed as a computational intelligence methodology in the proposed research [43]. Renowned for its rapid and accurate object identification in images while maintaining high speed, YOLOv9 further enhances these capabilities through refined model architecture, advanced training methods, and optimized strategies. Its one-stage architecture enables real-time image processing by swiftly predicting class probabilities and bounding box positions for objects of interest, diverging from traditional two-stage techniques to reduce complexity and inference times. Leveraging a deep convolutional neural network backbone, YOLOv9 efficiently extracts image features and employs a multi-scale approach to handle objects of varying sizes and orientations within a single image. Overall, YOLOv9 represents a state-of-the-art solution for object identification, combining speed, precision, and adaptability. Its integration underscores our commitment to leveraging advanced technology to address challenges in dental diagnostics, with the goal of enhancing patient care and treatment outcomes.

Specific to key issues in dental radiography, YOLOv9 provides improved feature extraction for low-contrast dental images, dynamic anchor box refinement for irregularly shaped teeth and lesions, an attention mechanism for spatial and channel attention in dental images, and multi-scale detection for multi-scale feature fusion [43].

3.3. Performance Evaluation of the Proposed Models

We utilize a range of performance indicators to evaluate the effectiveness of our proposed model in identifying dental conditions in X-ray images [40]. These metrics offer crucial insights into the model’s ability to accurately detect various dental problems across different dimensions.

The formulae for accuracy, precision, and recall use four counts: true positives (TP), positive cases predicted as positive; true negatives (TN), negative cases predicted as negative; false positives (FP), negative cases predicted as positive; and false negatives (FN), positive cases predicted as negative.

- Accuracy. This metric measures the proportion of correctly identified cases relative to the total number of instances in the dataset. While accuracy is important, it should be considered alongside other metrics for a comprehensive evaluation of the model’s efficacy. It is represented as $\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$.

- Recall. Also known as sensitivity, recall indicates the proportion of actual positive cases that the model correctly identifies. It is calculated by dividing the number of true positives by the sum of true positives and false negatives. A higher recall value indicates that the model can effectively detect most positive cases, minimizing false negatives. Mathematically, it can be represented as $\text{Recall} = \frac{TP}{TP + FN}$.

- Precision. Precision assesses how well the model can identify positive cases among all instances predicted as positive. It is computed as the ratio of true positives to all positive predictions, $\text{Precision} = \frac{TP}{TP + FP}$, reflecting the accuracy of positive predictions. A higher precision value signifies fewer false positives, resulting in more reliable positive identifications.

- F1-score: It is the harmonic mean of recall and precision and thus provides a balance between the two. It measures how well the model avoids false positives while still detecting the true objects. Mathematically, it can be expressed as $F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$.

- mAP (Mean Average Precision): mAP is a widely used metric in object detection tasks, including YOLOv9-based models. It calculates the average precision across all classes, offering an aggregated measure of the model’s performance in detecting objects of interest. A higher mAP score indicates superior overall performance in object localization and classification.

- mAP@50: This metric demonstrates a model’s accuracy in detecting and localizing objects at a 50% Intersection over Union (IoU) threshold. It estimates how well the model performs when the predicted box overlaps with the actual object box by at least 50%.

- mAP@50-95: This metric evaluates the model’s performance across a range of IoU thresholds from 0.5 to 0.95, providing a more comprehensive view of the model’s accuracy. It rewards models that not only detect the presence of objects but also locate them at high precision.

- Confusion Matrix: This matrix provides a comprehensive overview of the model’s classification performance by comparing the expected classes with the actual classes. True positives, true negatives, false positives, and false negatives constitute their primary components. The confusion matrix enables a detailed examination of the model’s performance across various classes, helping to identify areas for improvement.
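The count-based metrics in the list above can be sketched as small helper functions. The functions follow the standard definitions; the TP/TN/FP/FN counts in the example are hypothetical and are not taken from the study.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all cases that were classified correctly."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0

def precision(tp, fp):
    """Share of positive predictions that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Share of actual positives that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def f1_score(p, r):
    """Harmonic mean of precision p and recall r."""
    return 2 * p * r / (p + r) if (p + r) else 0.0

# Hypothetical counts, used only to exercise the functions.
tp, tn, fp, fn = 80, 90, 10, 20
p, r = precision(tp, fp), recall(tp, fn)
print(f"accuracy={accuracy(tp, tn, fp, fn):.3f} "
      f"precision={p:.3f} recall={r:.3f} f1={f1_score(p, r):.3f}")
```

Returning 0.0 for an empty denominator is a design choice of this sketch; some toolkits report such cases as undefined instead.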

By evaluating the proposed models using these performance metrics, we aim to gauge their effectiveness in accurately identifying dental conditions in X-ray images and offer valuable insights for further optimization and refinement.
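The IoU criterion behind mAP@50 and mAP@50-95 can be made concrete with a short sketch. The corner-based box format `(x_min, y_min, x_max, y_max)` and the helper names are assumptions for illustration; the 0.05 step between thresholds follows the common COCO-style convention and is not stated in the text.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_match(pred_box, true_box, threshold=0.5):
    """A prediction counts as correctly localized if IoU >= threshold."""
    return iou(pred_box, true_box) >= threshold

# IoU thresholds 0.50, 0.55, ..., 0.95 (assumed COCO-style step of 0.05).
MAP50_95_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

pred, truth = (5, 0, 15, 10), (0, 0, 10, 10)  # hypothetical boxes
print(f"IoU = {iou(pred, truth):.3f}, match at 0.5: {is_match(pred, truth)}")
```

In this example the boxes overlap by half of each box's area, yet the IoU is only one third, so the prediction would not count as a match at the 50% threshold.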

4. Experimental Results

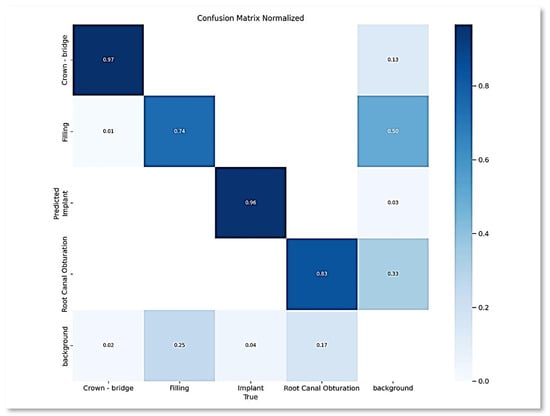

Following the deployment of the YOLOv9 model, a comprehensive analysis of the experimental outcomes was conducted. The results of this analysis are presented here, beginning with the normalized confusion matrix in Figure 2, which offers a detailed representation of the model’s performance across different classes.

Figure 2.

Normalized confusion matrix.

Based on the color coding in Figure 2, the crown-bridge class outperformed all the other classes, as indicated by the darkest blue, followed by the implant, root canal, and filling classes, in that order. The same trend can be observed in the subsequent analyses, including various metrics and graphs. This is mainly attributed to the imbalanced dataset, which has varying numbers of instances per class.

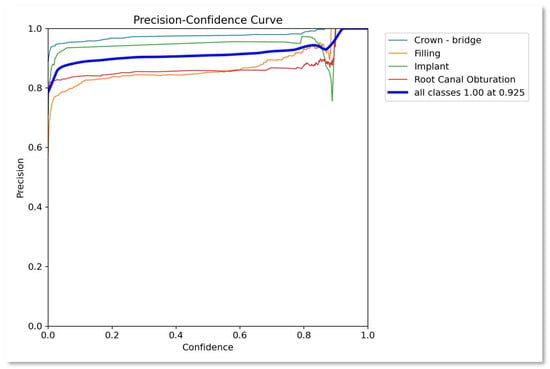

Additionally, Figures 3–7 further elucidate the findings, portraying various curves and metrics that provide deeper insights into the model’s behavior and effectiveness. Figure 3 presents the precision–confidence curve for the proposed approach. The overall performance is promising for each class, and, averaged over all classes, the precision reaches 1.0 at a confidence of 0.925, as read from the trend in the figure.

Figure 3.

Precision–confidence curve.

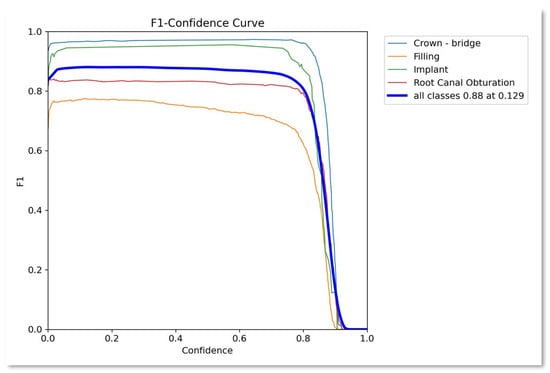

Figure 4.

F1–confidence curve.

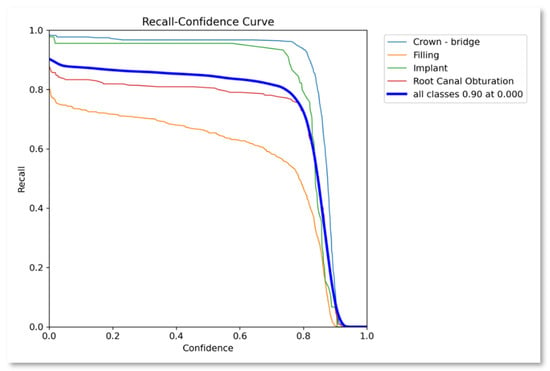

Figure 5.

Recall–confidence curve.

Figure 6.

Precision–recall curve.

Figure 7.

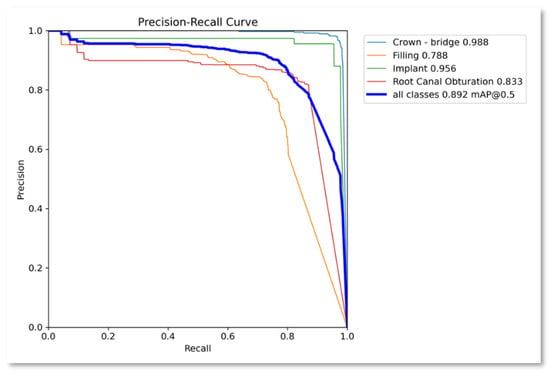

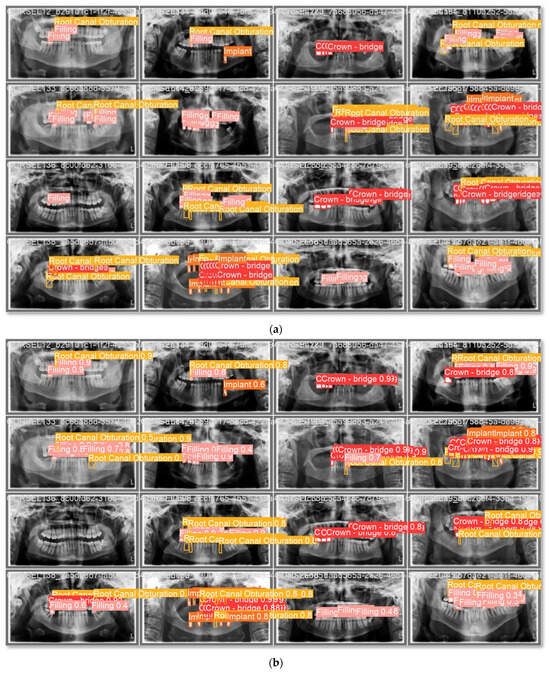

Batches prediction, (a) Batch 2 labels; (b) Batch 2 prediction.

Similarly, Figure 4 presents the F1–confidence curve, showing an average F1-score of 0.88 over all classes. Figure 5 presents the recall–confidence curve, with an average recall of 0.9 over all classes.

Figure 6 presents the precision–recall curve for the proposed approach. A precision–recall curve plots precision against recall at different thresholds and summarizes the trade-off between the positive predictive value (precision) and the true positive rate (recall) of a predictive model. A large area under the curve indicates both high recall and high precision, where high precision corresponds to few false positives and high recall corresponds to few false negatives. The average value over all classes is 0.892; the highest value is achieved by the ‘Crown’ class at 0.988, followed by the ‘Implant’ class at 0.956. The ‘Root canal obturation’ class obtained 0.8333, while the ‘Filling’ class performed relatively poorly at 0.7880. These results are consistent with the normalized confusion matrix in Figure 2.
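As an illustration of how an area under a precision–recall curve can be computed, the sketch below uses the monotone precision envelope (all-point interpolation). This is one common convention and is an assumption here; the source does not state which interpolation its tooling uses. The curve points are hypothetical. The last lines average the four per-class values reported above, treating them as per-class average precision, which is also an assumption.

```python
def average_precision(recalls, precisions):
    """Area under a precision-recall curve using the monotone precision envelope.

    `recalls` must be sorted in ascending order.
    """
    # Sentinel points at recall 0 and recall 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing when read from left to right.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


# Hypothetical curve points (NOT from the paper).
ap_example = average_precision([0.2, 0.4, 0.6, 0.8], [1.0, 0.9, 0.8, 0.6])

# Per-class values as reported in the text, assumed here to be per-class AP.
reported = {
    "Crown": 0.988,
    "Implant": 0.956,
    "Root canal obturation": 0.8333,
    "Filling": 0.7880,
}
mean_ap = sum(reported.values()) / len(reported)
print(f"example AP = {ap_example:.2f}, mean of reported per-class values = {mean_ap:.4f}")
```

The unweighted mean of the four reported per-class values is about 0.8913, close to the reported overall value of 0.892.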

These experimental results are central to gauging the efficacy and robustness of the implemented model and provide feedback for refinement and optimization in future iterations. The proposed model exhibits an inference time of 7.9 ms. As visual evidence of the model’s performance, a few sample images are shown in Figure 7. As Figure 7a,b shows, the model made good predictions for the classes in batch 2. Figure 7a shows the actual class labels in each image, while Figure 7b shows the labels predicted by the proposed model, with the confidence score for each label.

Overall, the proposed model achieved an average accuracy of 0.8489, a precision of 0.892, a recall of 0.869, an F1-score of 0.880, and an mAP@50 of 0.892, as shown in Table 3. Per-class performance was highest for crown-bridge, followed by implant, root canal, and filling.

Table 3.

Summary of the results.
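As a quick consistency check on the summary metrics, the F1-score can be recomputed from the reported precision and recall as their harmonic mean. The sketch below is only an arithmetic illustration; the function name is ours.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the text.
f1 = f1_score(0.892, 0.869)
print(f"F1 = {f1:.3f}")
```

The result rounds to 0.880, which agrees with the reported F1-score.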

Table 4 lists the hyperparameters used for the proposed model with their respective optimal values. The hyperparameters were optimized using a grid search.

Table 4.

Hyperparameter values.
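The grid search mentioned above can be sketched as an exhaustive loop over every combination of candidate values. The search space, the parameter names, and the scoring function below are hypothetical stand-ins: in practice, the scoring function would train the detector with the given settings and return a validation metric such as mAP@50.

```python
from itertools import product


def grid_search(space, evaluate):
    """Evaluate every combination in `space` and return the best one."""
    names = list(space)
    best_params, best_score = None, float("-inf")
    for values in product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical search space (NOT the values from Table 4).
space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [8, 16, 32],
}


def toy_evaluate(params):
    """Stand-in for 'train the model and return a validation score'."""
    return -abs(params["learning_rate"] - 0.01) - abs(params["batch_size"] - 16) / 100


best_params, best_score = grid_search(space, toy_evaluate)
print(best_params)
```

The number of runs grows multiplicatively with each added hyperparameter (here 3 × 3 = 9), which is the main practical cost of this approach.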

Comparison with State-of-the-Art

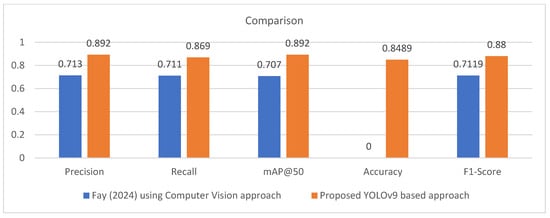

Among state-of-the-art studies, the work by Fay (2024) [42] is the closest to the proposed approach, because both studies use the same dataset and deep learning methods; we therefore adopted it as the baseline. Table 5 and Figure 8 present the comparison with the baseline study. The proposed study significantly outperformed the baseline in precision, recall, mAP@50, and F1-score, by 17.9%, 15.8%, 18.5%, and 16.81%, respectively. The main reasons for this improvement are better preprocessing, including augmentation, and the updated YOLOv9 model. Accuracy was not reported for the baseline model, so it could not be compared.

Table 5.

Comparison with the baseline.

Figure 8.

Comparison with the baseline model [42].

5. Discussion

The achieved model performance, with an accuracy of 0.8489, a precision of 0.892, a recall of 0.869, and an F1-score of 0.88 (Table 3), represents a notable advance in dental diagnostics using computational intelligence. These metrics, fundamental to evaluating the model’s efficacy, reflect its ability to distinguish between positive and negative cases while minimizing both false positives and false negatives. Such performance underscores the model’s potential utility in real-world clinical scenarios, where accurate and reliable diagnostic tools are paramount for ensuring optimal patient care and treatment outcomes.

Further insights into the model’s performance are gleaned from the Precision–Confidence Curve (Figure 3), F1–Confidence Curve (Figure 4), Recall–Confidence Curve (Figure 5), and Precision–Recall Curve (Figure 6). These visualizations provide a nuanced understanding of how the model’s precision, recall, and F1 scores vary across different confidence thresholds. The Precision–Recall Curve, in particular, illuminates the delicate balance between precision and recall, offering valuable insights into the model’s performance trade-offs at varying decision thresholds. Moreover, the Precision–Confidence Curve and F1–Confidence Curve offer additional granularity by showcasing precision and F1 scores at different confidence levels. Such detailed analyses enable a more comprehensive interpretation of the model’s results and aid in determining optimal decision thresholds for clinical deployment. The proposed model exhibits an inference time of 7.9 ms.
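One simple way to pick a decision threshold from such curves is to choose the confidence value that maximizes F1. The sketch below assumes precision and recall have already been measured at a handful of thresholds; all numbers are hypothetical and do not come from Figures 3–5.

```python
def best_f1_threshold(thresholds, precisions, recalls):
    """Return (threshold, f1) for the threshold with the highest F1-score."""
    best = None
    for t, p, r in zip(thresholds, precisions, recalls):
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        if best is None or f1 > best[1]:
            best = (t, f1)
    return best


# Hypothetical measurements (NOT from the paper): precision tends to rise and
# recall tends to fall as the confidence threshold increases.
thresholds = [0.1, 0.3, 0.5, 0.7, 0.9]
precisions = [0.60, 0.80, 0.90, 0.95, 1.00]
recalls = [0.95, 0.92, 0.85, 0.60, 0.20]

threshold, f1 = best_f1_threshold(thresholds, precisions, recalls)
print(f"best threshold = {threshold}, F1 = {f1:.3f}")
```

Maximizing F1 weights false positives and false negatives equally. A clinical deployment might instead prefer a lower threshold, accepting more false positives in order to miss fewer findings.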

Additionally, the study outperformed the baseline model by a significant margin across all evaluation metrics. Collectively, these findings underscore the significant strides made in leveraging computational intelligence, particularly the YOLOv9 model, for enhancing dental diagnostics. The model’s exceptional blend of speed, precision, and adaptability, as demonstrated by its performance metrics and visualizations, holds promise to revolutionize dental healthcare by improving diagnostic accuracy, streamlining treatment workflows, and ultimately enhancing patient outcomes.

The study has several limitations. First, it relies on a single dataset. Second, the dataset is highly imbalanced, and no data-balancing techniques were employed. Third, although YOLOv9 is still recent, newer models are worth investigating. In future studies, data fusion and balancing can be investigated with newer variants of the YOLO family. Additionally, vision transformers, large visual models (LVMs), and ensemble deep learning models may be applied to a more diverse dataset to detect multiple diseases in dental panoramic radiographs [44,45,46,47,48].
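As one illustration of what a data-balancing step could look like, the sketch below computes inverse-frequency class weights, a common heuristic in which rarer classes receive larger weights. It was not used in this study, and the instance counts are hypothetical.

```python
def inverse_frequency_weights(counts):
    """Weight each class by total / (number_of_classes * class_count)."""
    total = sum(counts.values())
    num_classes = len(counts)
    return {name: total / (num_classes * n) for name, n in counts.items()}


# Hypothetical instance counts per class (NOT from the dataset used here).
counts = {"filling": 400, "crown-bridge": 100, "implant": 50, "root canal": 250}

weights = inverse_frequency_weights(counts)
for name, weight in weights.items():
    print(f"{name}: {weight:.2f}")
```

Such weights could be applied in the loss function or used to guide oversampling; which option suits an object detector best would need to be established experimentally.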

6. Conclusions

In conclusion, this study presents a comprehensive exploration of AI-enabled diagnosis using the YOLOv9 model for X-ray image analysis in dentistry. Through rigorous experimentation and analysis, we have demonstrated the efficacy of our approach in accurately identifying various dental conditions, achieving a notable accuracy of 0.8489, a precision of 0.892, a recall of 0.869, and an F1-score of 0.88, with significant improvement over the baseline model. The use of advanced computational intelligence methodologies, particularly the YOLOv9 model, holds significant promise for revolutionizing dental diagnostics by providing efficient, accurate solutions. The study’s findings highlight the importance of robust datasets, preprocessing techniques, and model training processes in developing reliable AI-driven diagnostic tools for dentistry. Moreover, the discussion of challenges and knowledge gaps in this domain underscores the need for ongoing research to address these limitations and further enhance the applicability of AI in dental healthcare. Moving forward, future research endeavors should focus on expanding the scope of dental conditions studied, improving dataset quality and quantity, and fostering collaboration between AI researchers and dental practitioners. By addressing these challenges and leveraging cutting-edge technologies, we can unlock the full potential of AI in dentistry, ultimately improving patient care and treatment outcomes. In summary, this study contributes to the growing body of literature on AI and machine learning in dentistry, providing valuable insights and paving the way for the development of more effective, efficient, and widely accepted AI applications in dental diagnostics.

Author Contributions

Supervision, D.M., M.I.A. and A.R.; Conceptualization, D.M., M.I.A. and A.R.; Software, H.A., F.B., G.A., N.A. and J.A.; Data curation, G.A., N.A. and J.A.; Writing the draft, H.A., F.B. and G.A.; Reviewing and editing, D.M., A.R. and F.A.; Validation, F.A. and M.I.A.; Visualization, N.A. and J.A.; Project Administration, A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data is available in a public repository and can be found at https://universe.roboflow.com/fay-regu8/dentix (accessed on 30 April 2025).

Conflicts of Interest

The authors have no conflict of interest to report for the current study.

References

- Musleh, D.; Almossaeed, H.; Balhareth, F.; Alqahtani, G.; Alobaidan, N.; Altalag, J.; Aldossary, M.I. Advancing Dental Diagnostics: A Review of Artificial Intelligence Applications and Challenges in Dentistry. Big Data Cogn. Comput. 2024, 8, 66.

- Samaranayake, L.; Tuygunov, N.; Schwendicke, F.; Osathanon, T.; Khurshid, Z.; Boymuradov, S.A.; Cahyanto, A. The Transformative Role of Artificial Intelligence in Dentistry: A Comprehensive Overview. Part 1: Fundamentals of AI, and its Contemporary Applications in Dentistry. Int. Dent. J. 2025, 75, 383–396.

- Sarhan, S.; Badran, A.; Ghalwash, D.; Gamal Almalahy, H.; Abou-Bakr, A. Perception, usage, and concerns of artificial intelligence applications among postgraduate dental students: Cross-sectional study. BMC Med. Educ. 2025, 25, 856.

- Naik, S.; Vellappally, S.; Alateek, M.; Alrayyes, Y.F.; Al Kheraif, A.A.A.; Alnassar, T.M.; Alsultan, Z.M.; Thomas, N.G.; Chopra, A. Patients’ Acceptance and Intentions on Using Artificial Intelligence in Dental Diagnosis: Insights from Unified Theory of Acceptance and Use of Technology 2 Model. Int. Dent. J. 2025, 75, 103893.

- Shan, T.; Tay, F.R.; Gu, L. Application of Artificial Intelligence in Dentistry. J. Dent. Res. 2021, 100, 232–244.

- Lira, P.; Giraldi, G.; Neves, L.A. Segmentation and Feature Extraction of Panoramic Dental X-Ray Images. Int. J. Nat. Comput. Res. 2010, 1, 1–15.

- Xie, X.; Wang, L.; Wang, A. Artificial neural network modelling for deciding if extractions are necessary prior to orthodontic treatment. Angle Orthod. 2010, 80, 262–266.

- ALbahbah, A.A.; El-Bakry, H.M.; Abd-Elgahany, S. Detection of Caries in Panoramic Dental X-ray Images. Int. J. Electron. Commun. Comput. Eng. 2016, 7, 250–256.

- Na’Am, J.; Harlan, J.; Madenda, S.; Wibowo, E.P. Image Processing of Panoramic Dental X-Ray for Identifying Proximal Caries. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2017, 15, 702–708.

- Tuan, T.M.; Duc, N.T.; Van Hai, P.; Son, L.H. Dental Diagnosis from X-Ray Images using Fuzzy Rule-Based Systems. Int. J. Fuzzy Syst. Appl. 2017, 6, 1–16.

- Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111.

- Jader, G.; Fontineli, J.; Ruiz, M.; Abdalla, K.; Pithon, M.; Oliveira, L. Deep Instance Segmentation of Teeth in Panoramic X-Ray Images. In Proceedings of the 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018; pp. 400–407.

- Tuzoff, D.; Tuzova, L.; Bornstein, M.; Krasnov, A.; Kharchenko, M.; Nikolenko, S.; Sveshnikov, M.; Bednenko, G. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019, 48, 20180051.

- Chen, H.; Zhang, K.; Lyu, P.; Li, H.; Zhang, L.; Wu, J.; Lee, C.H. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci. Rep. 2019, 9, 3840.

- Geetha, V.; Aprameya, K.S. Dental Caries Diagnosis in X-ray Images using KNN Classifier. Indian J. Sci. Technol. 2019, 12, 5.

- Vinayahalingam, S.; Xi, T.; Berge, S.; Maal, T.; De Jong, G. Automated detection of third molars and mandibular nerve by deep learning. Sci. Rep. 2019, 9, 9007.

- Wang, Y.; Sun, L.; Zhang, Y.; Lv, D.; Li, Z.; Qi, W. An Adaptive Enhancement Based Hybrid CNN Model for Digital Dental X-ray Positions Classification. arXiv 2020, arXiv:2005.01509.

- You, W.; Hao, A.; Li, S.; Wang, Y.; Xia, B. Deep learning-based dental plaque detection on primary teeth: A comparison with clinical assessments. BMC Oral Health 2020, 20, 141.

- Chung, M.; Lee, J.; Park, S.; Lee, M.; Lee, C.E.; Lee, J.; Shin, Y.-G. Individual tooth detection and identification from dental panoramic x-ray images via point-wise localization and distance regularization. Artif. Intell. Med. 2021, 111, 101996.

- Leite, A.F.; Vasconcelos, K.F.; Willems, H.; Jacobs, R. Radiomics and machine learning in oral healthcare. Proteom.-Clin. Appl. 2023, 14, 1900040.

- Muresan, M.P.; Barbura, A.R.; Nedevschi, S. Teeth detection and dental problem classification in panoramic X-ray images using deep learning and image processing techniques. In Proceedings of the IEEE 16th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 3–5 September 2020; pp. 457–463.

- Sonavane, A.; Yadav, R.; Khamparia, A. Dental cavity classification of using convolutional neural network. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1022, 012116.

- Huang, Y.P.; Lee, Y.S. Deep Learning for Caries Detection Using Optical Coherence Tomography. medRxiv 2021.

- López-Janeiro, Á.; Cabañuz, C.; Blasco-Santana, L.; Ruiz-Bravo, E. A tree-based machine learning model to approach morphologic assessment of malignant salivary gland tumors. Ann. Diagn. Pathol. 2021, 56, 151869.

- Rodrigues, J.A.; Krois, J.; Schwendicke, F. Demystifying artificial intelligence and deep learning in dentistry. Braz. Oral Res. 2021, 35, e094.

- Diniz de Lima, E.; Souza Paulino, J.A.; Lira de Farias Freitas, A.P.; Viana Ferreira, J.E.; Silva Barbosa, J.S.; Bezerra Silva, D.F.; Meira Bento, P.; Araújo Maia Amorim, A.M.; Pita Melo, D. Artificial intelligence and infrared thermography as auxiliary tools in the diagnosis of temporomandibular disorder. Dentomaxillofac. Radiol. 2021, 51, 20210318.

- Babu, A.; Onesimu, J.A.; Sagayam, K.M. Artificial Intelligence in dentistry: Concepts, Applications and Research Challenges. E3S Web Conf. 2021, 297, 01074.

- Subbotin, A. Applying Machine Learning in Fog Computing Environments for Panoramic Teeth Imaging. In Proceedings of the 2021 XXIV International Conference on Soft Computing and Measurements (SCM), St. Petersburg, Russia, 26–28 May 2021; pp. 237–239.

- Muramatsu, C.; Morishita, T.; Takahashi, R.; Hayashi, T.; Nishiyama, W.; Ariji, Y.; Zhou, X.; Hara, T.; Katsumata, A.; Ariji, E.; et al. Tooth Detection and Classification on Panoramic Radiographs for Automatic Dental Chart Filing: Improved Classification by Multi-Sized Input Data. Oral Radiol. 2021, 37, 13–19.

- Imak, A.; Celebi, A.; Siddique, K.; Turkoglu, M.; Sengur, A.; Salam, I. Dental Caries Detection Using Score-Based Multi-Input Deep Convolutional Neural Network. IEEE Access 2022, 10, 18320–18329.

- Kühnisch, J.; Meyer, O.; Hesenius, M.; Hickel, R.; Gruhn, V. Caries Detection on Intraoral Images Using Artificial Intelligence. J. Dent. Res. 2022, 101, 158–165.

- Almalki, Y.E.; Din, A.I.; Ramzan, M.; Irfan, M.; Aamir, K.M.; Almalki, A.; Alotaibi, S.; Alaglan, G.; Alshamrani, H.A.; Rahman, S. Deep Learning Models for Classification of Dental Diseases Using Orthopantomography X-ray OPG Images. Sensors 2022, 22, 7370.

- AL-Ghamdi, A.; Ragab, M.; AlGhamdi, S.; Asseri, A.; Mansour, R.; Koundal, D. Detection of Dental Diseases through X-Ray Images Using Neural Search Architecture Network. Comput. Intell. Neurosci. 2022, 2022, 3500552.

- Hung, K.F.; Ai, Q.Y.H.; King, A.D.; Bornstein, M.M.; Wong, L.M.; Leung, Y.Y. Automatic Detection and Segmentation of Morphological Changes of the Maxillary Sinus Mucosa on Cone-Beam Computed Tomography Images Using a Three-Dimensional Convolutional Neural Network. Clin. Oral Investig. 2022, 26, 3987–3998.

- Zhou, X.; Yu, G.; Yin, Q.; Liu, Y.; Zhang, Z.; Sun, J. Context Aware Convolutional Neural Network for Children Caries Diagnosis on Dental Panoramic Radiographs. Comput. Math. Methods Med. 2022, 2022, 6029245.

- Bhat, S.; Birajdar, G.K.; Patil, M.D. A comprehensive survey of deep learning algorithms and applications in dental radiograph analysis. Healthc. Analytics 2023, 4, 100282.

- Sunnetci, K.M.; Ulukaya, S.; Alkan, A. Periodontal Bone Loss Detection Based on Hybrid Deep Learning and Machine Learning Models with a User-Friendly Application. Biomed. Signal Process. Control 2022, 77, 103844.

- Anil, S.; Porwal, P.; Porwal, A. Transforming Dental Caries Diagnosis through Artificial Intelligence-Based Techniques. Cureus 2023, 15, e41694.

- Zhu, J.; Chen, Z.; Zhao, J.; Yu, Y.; Li, X.; Shi, K.; Zhang, F.; Yu, F.; Shi, K.; Sun, Z.; et al. Artificial Intelligence in the Diagnosis of Dental Diseases on Panoramic Radiographs: A Preliminary Study. BMC Oral Health 2023, 23, 358.

- Alharbi, S.S.; Alhasson, H.F. Exploring the Applications of Artificial Intelligence in Dental Image Detection: A Systematic Review. Diagnostics 2024, 14, 2442.

- Schwendicke, F.; Tichy, A. The expanding role of AI in dentistry: Beyond image analysis. Br. Dent. J. 2025, 238, 800–801.

- Fay, R. Dentix Dataset. Roboflow Universe. April 2024. Available online: https://universe.roboflow.com/fay-regu8/dentix (accessed on 15 December 2024).

- Wang, C.; Yeh, I.; Mark Liao, H. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024.

- Ahmed, M.S.; Rahman, A.; AlGhamdi, F.; AlDakheel, S.; Hakami, H.; AlJumah, A.; AlIbrahim, Z.; Youldash, M.; Alam Khan, M.A.; Basheer Ahmed, M.I. Joint Diagnosis of Pneumonia, COVID-19, and Tuberculosis from Chest X-ray Images: A Deep Learning Approach. Diagnostics 2023, 13, 2562.

- Surdu, A.; Budala, D.G.; Luchian, I.; Foia, L.G.; Botnariu, G.E.; Scutariu, M.M. Using AI in Optimizing Oral and Dental Diagnoses-A Narrative Review. Diagnostics 2024, 14, 2804.

- Masoumeh, F.N.; Mohsen, A.; Elyas, I. Transforming dental diagnostics with artificial intelligence: Advanced integration of ChatGPT and large language models for patient care. Front. Dent. Med. 2025, 5, 1456208.

- Najeeb, M.; Islam, S. Artificial intelligence (AI) in restorative dentistry: Current trends and future prospects. BMC Oral Health 2025, 25, 592.

- Gollapalli, M.; Rahman, A.; Kudos, S.A.; Foula, M.S.; Alkhalifa, A.M.; Albisher, H.M.; Al-Hariri, M.T.; Mohammad, N. Appendicitis Diagnosis: Ensemble Machine Learning and Explainable Artificial Intelligence-Based Comprehensive Approach. Big Data Cogn. Comput. 2024, 8, 108.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.