AI-Enabled Diagnosis Using YOLOv9: Leveraging X-Ray Image Analysis in Dentistry

Abstract

1. Introduction

- Dataset: Contemporary datasets are insufficient in both quality and quantity, which undermines the reliability of AI-driven dental diagnostic tools.

- Multi-label classification: Research on AI-enabled dental diagnostics remains limited to a narrow range of dental conditions, thereby constraining its potential impact and breadth of application.

- Clinical validation: The validation of AI instruments by dental practitioners in real-world clinical settings often falls short. This creates uncertainties about their practical implementation.

To address these research gaps, the objectives and contributions of this study are as follows:

- Comprehensive Data Collection and Rigorous Processing: We compile an extensive dataset of dental X-ray images representing a broad spectrum of dental conditions, including rare and common pathologies. The images are then carefully processed and augmented to enhance the dataset.

- Model development and validation: We design and refine deep learning models tailored explicitly to dental image analysis for multi-label classification. The models are subsequently evaluated and validated.

- Collaborative Integration with Dental Clinical Practices: To ensure the practical applicability of our models, we will collaborate closely with dental specialists and practitioners. This includes pilot testing in clinical environments to gather feedback and further refine the model.

2. Related Work

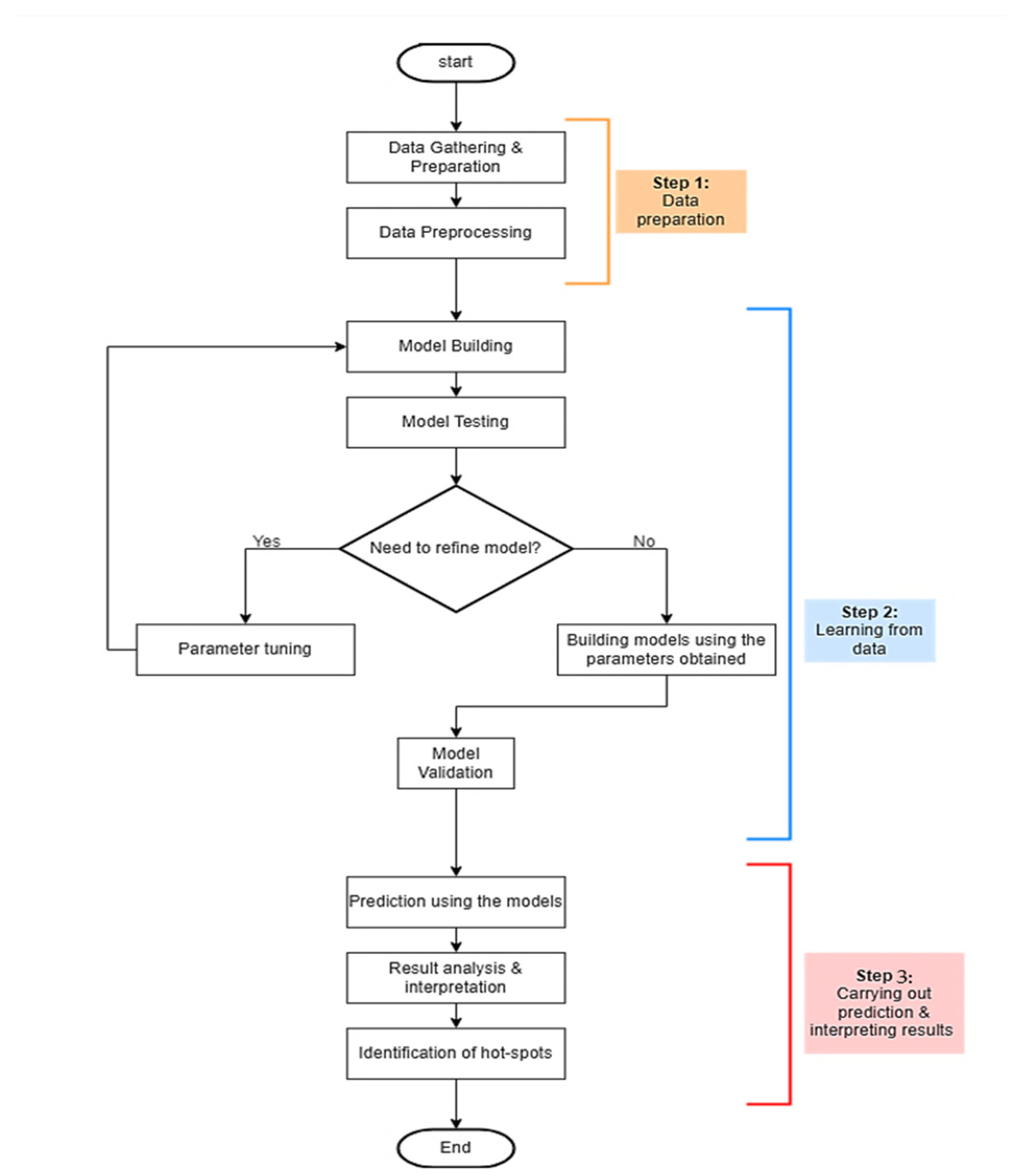

3. Methodology

3.1. Data Preprocessing

3.2. The Proposed Individual Computational Intelligence

3.3. Performance Evaluation of the Proposed Models

- Accuracy. This metric measures the proportion of correctly identified cases relative to the total number of instances in the dataset. While accuracy is important, it should be considered alongside other metrics for a comprehensive evaluation of the model’s efficacy. It is represented as

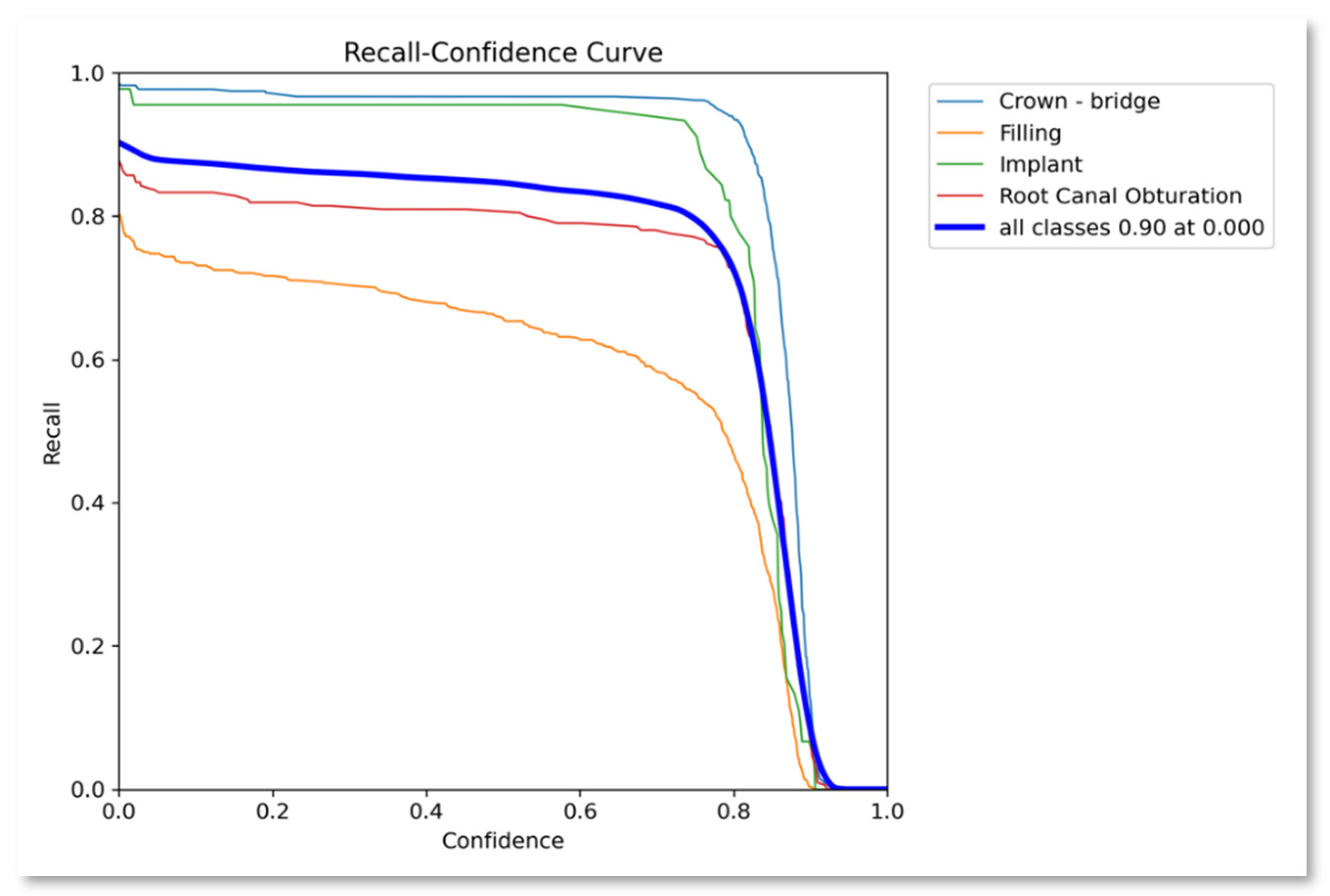

- Recall. Also known as sensitivity, recall indicates the proportion of actual positive cases that the model correctly identifies. It is calculated by dividing the number of true positives (TP) by the sum of true positives and false negatives (TP + FN). A higher recall value indicates that the model detects most positive cases and produces few false negatives. Mathematically, it can be represented as

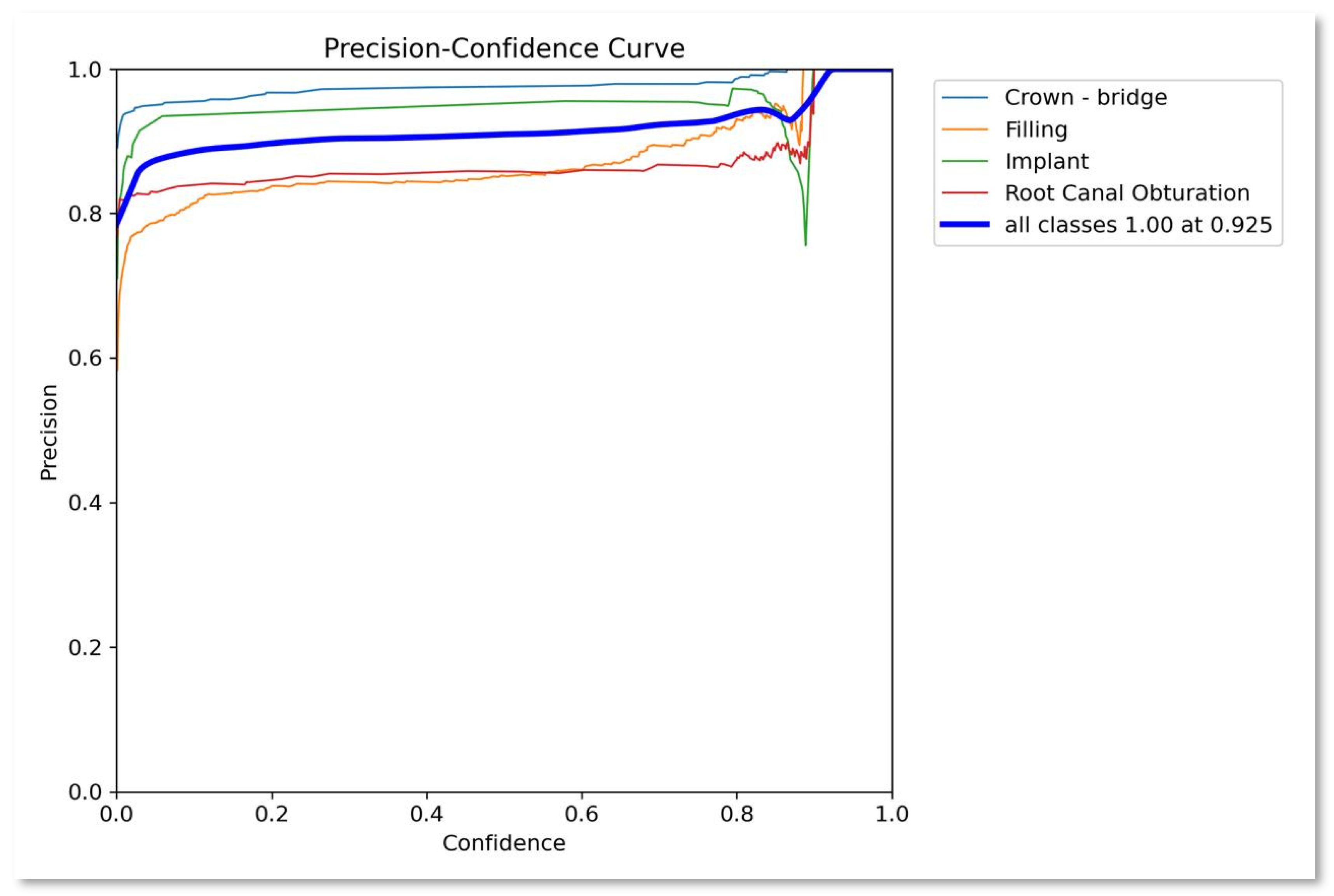

- Precision. Precision assesses how many of the instances predicted as positive are truly positive. It is computed by dividing the number of true positives (TP) by the sum of true positives and false positives (TP + FP), reflecting the accuracy of positive predictions. A higher precision value signifies fewer false positives, resulting in more reliable positive identifications.

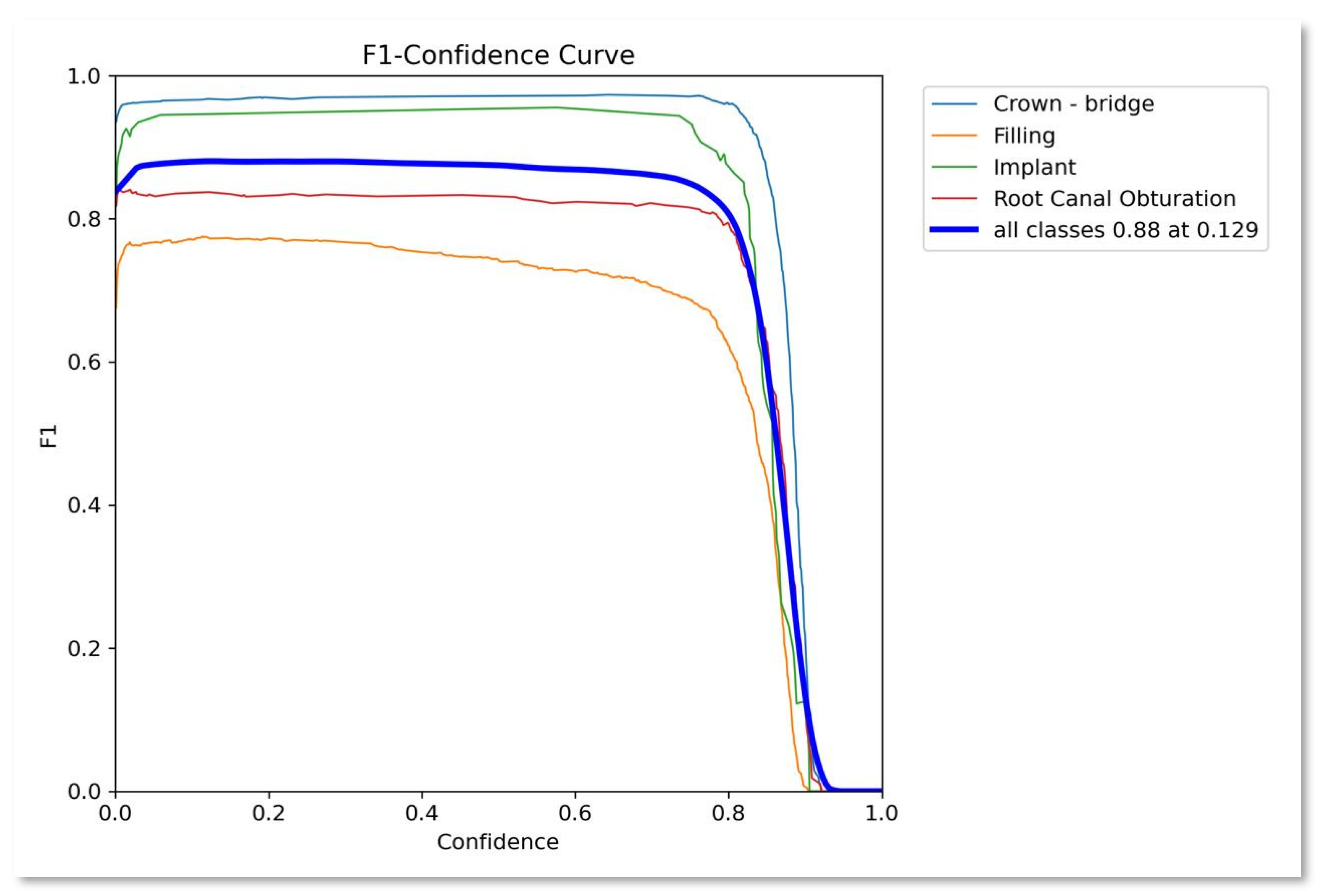

- F1-score: The F1-score is the harmonic mean of recall and precision and therefore balances the two. It measures how well the model avoids false positives (FP) while still detecting the true objects. Mathematically, it can be expressed as $F1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$.

- mAP (Mean Average Precision): mAP is a widely used metric in object detection tasks, including YOLOv9-based models. It averages the per-class average precision (AP) over all classes, offering an aggregated measure of the model’s performance in detecting objects of interest. A higher mAP score indicates superior overall performance in object localization and classification.

- mAP@50: This metric demonstrates a model’s accuracy in detecting and localizing objects at a 50% Intersection over Union (IoU) threshold. It estimates how well the model performs when the predicted box overlaps with the actual object box by at least 50%.

- mAP@50-95: This metric evaluates the model’s performance across a range of IoU thresholds from 0.5 to 0.95, providing a more comprehensive view of the model’s accuracy. It rewards models that not only detect the presence of objects but also localize them precisely.

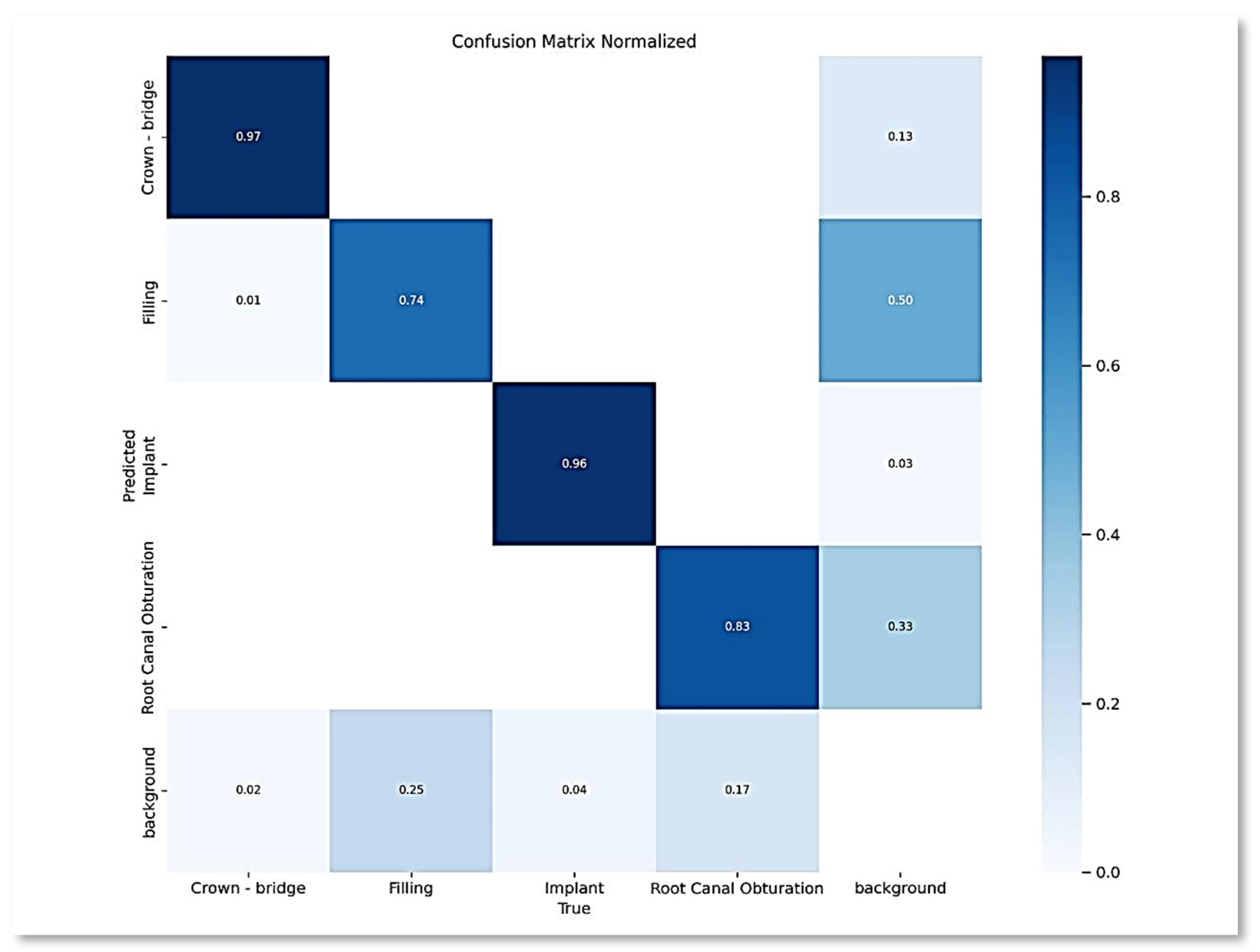

- Confusion Matrix: This matrix provides a comprehensive overview of the model’s classification performance by comparing the predicted classes with the actual classes. True positives, true negatives, false positives, and false negatives constitute its primary components. The confusion matrix enables a detailed examination of the model’s performance across the individual classes, helping to identify areas for improvement.
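The metrics listed above can all be derived from the entries of a confusion matrix. The following is a minimal sketch, not the authors' evaluation code: the function name `per_class_metrics` and the toy 2 × 2 matrix are hypothetical, and the one-vs-rest treatment of true negatives is an assumption (in object detection, true negatives are often not well defined).

```python
from typing import Dict, List


def per_class_metrics(cm: List[List[int]]) -> List[Dict[str, float]]:
    """Derive one-vs-rest metrics for each class.

    cm[i][j] is the number of samples whose actual class is i
    and whose predicted class is j (hypothetical layout).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for k in range(n):
        tp = cm[k][k]                                # actual k, predicted k
        fn = sum(cm[k]) - tp                         # actual k, predicted other
        fp = sum(cm[i][k] for i in range(n)) - tp    # actual other, predicted k
        tn = total - tp - fn - fp                    # everything else
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if (precision + recall) else 0.0)
        accuracy = (tp + tn) / total if total else 0.0
        results.append({"accuracy": accuracy, "precision": precision,
                        "recall": recall, "f1": f1})
    return results


# Toy example with made-up counts (not data from the study).
cm = [[8, 2],
      [1, 9]]
metrics = per_class_metrics(cm)
for k, m in enumerate(metrics):
    print(k, {name: round(value, 4) for name, value in m.items()})
```

Guarding each division against a zero denominator keeps the sketch usable for classes that never appear or are never predicted.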

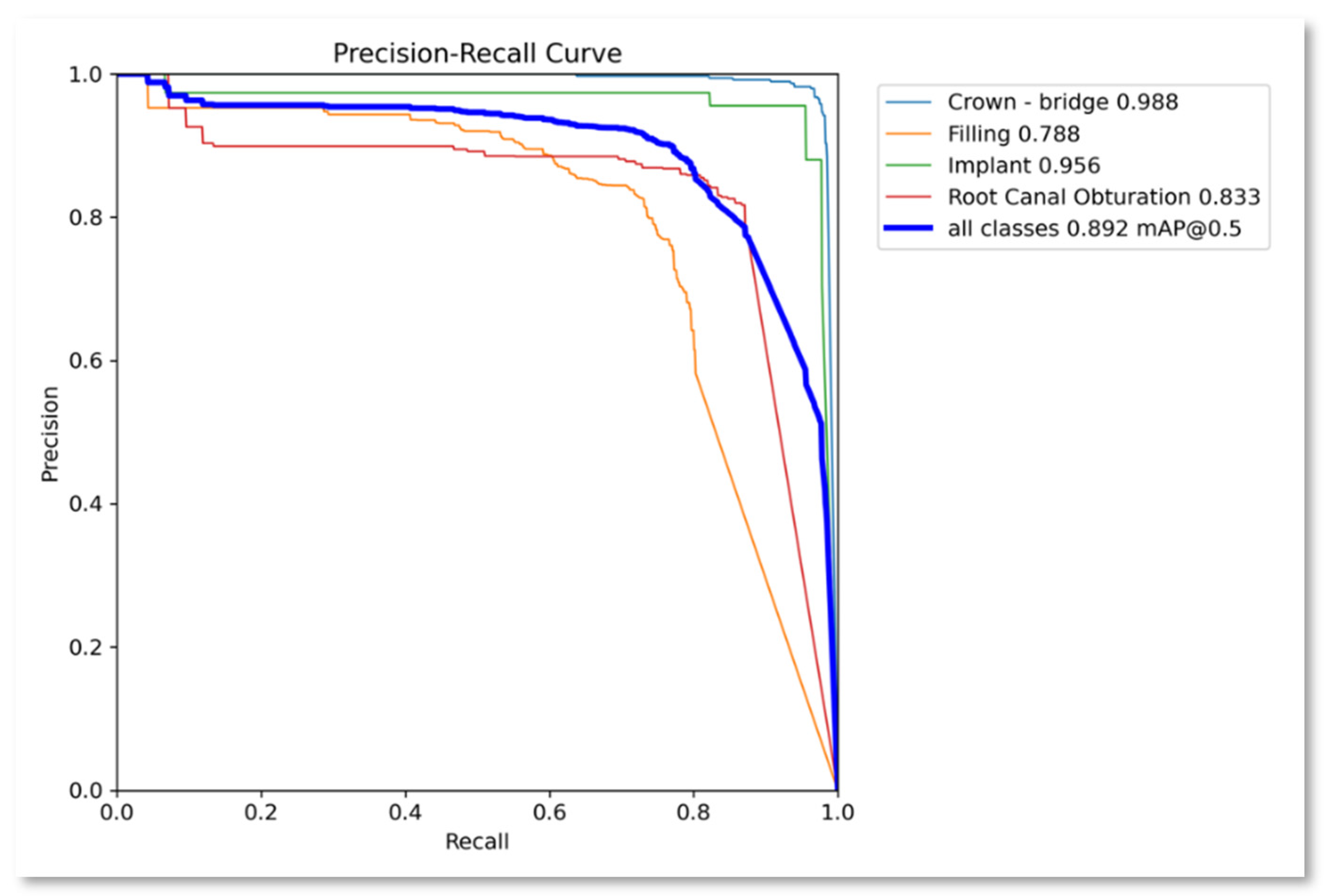

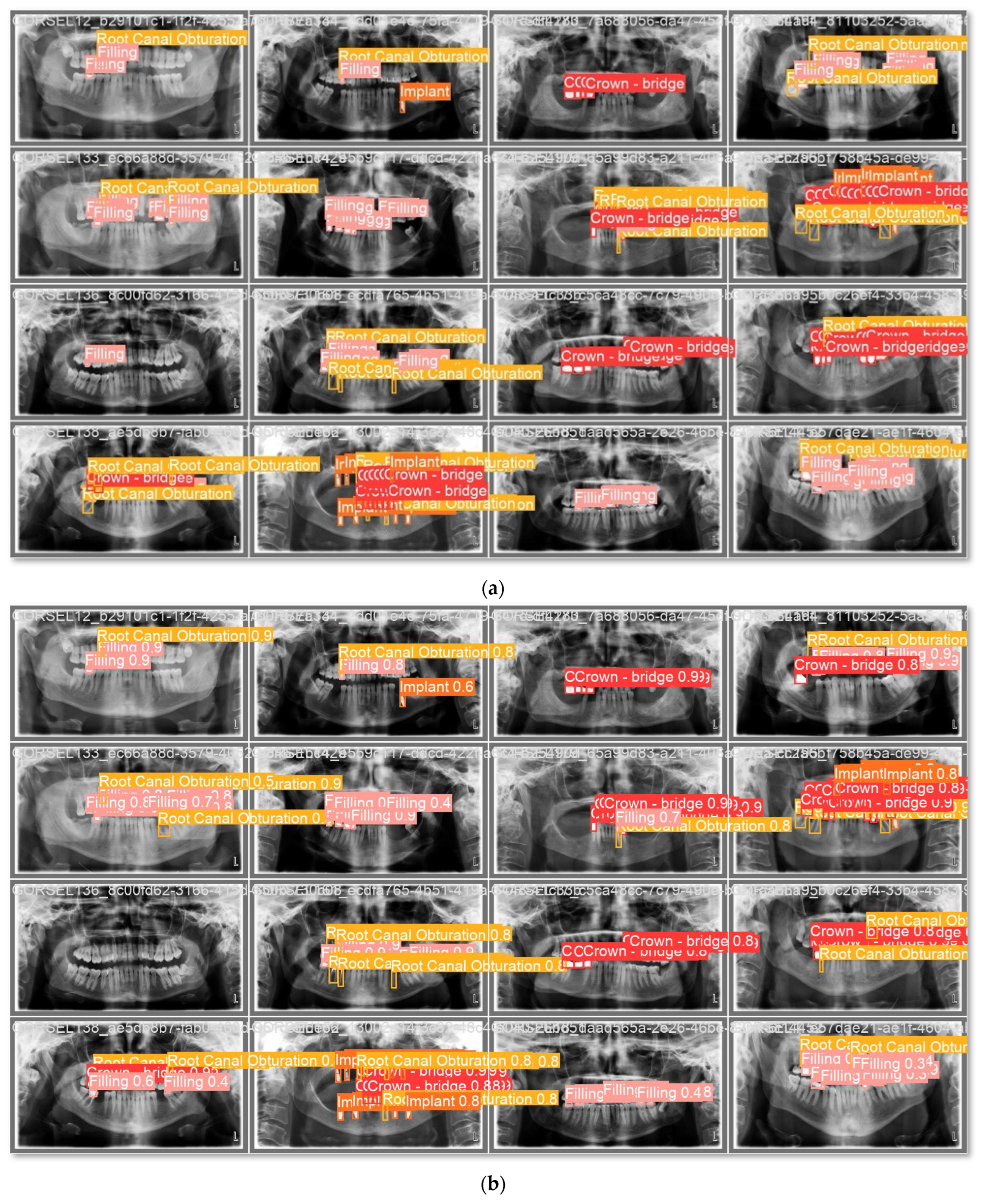
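The IoU thresholds behind mAP@50 and mAP@50-95 can be illustrated with a short sketch. It assumes axis-aligned boxes given as `(x1, y1, x2, y2)` and the common COCO-style convention of ten thresholds from 0.50 to 0.95 in steps of 0.05. The step size, the helper names, and the example boxes are assumptions for illustration and are not taken from the study.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed layout


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Ten thresholds 0.50, 0.55, ..., 0.95 (assumed COCO-style step of 0.05).
IOU_THRESHOLDS: List[float] = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred: Box, gt: Box) -> List[float]:
    """Thresholds at which this prediction would count as a match."""
    overlap = iou(pred, gt)
    return [t for t in IOU_THRESHOLDS if overlap >= t]


# Made-up example: a prediction shifted by 10 pixels from the ground truth.
gt_box = (10.0, 10.0, 110.0, 110.0)
pred_box = (20.0, 20.0, 120.0, 120.0)
print(f"IoU = {iou(pred_box, gt_box):.3f}")
print("counts as a match at:", thresholds_passed(pred_box, gt_box))
```

In this example the prediction is a true positive for mAP@50 but stops matching at the stricter thresholds, which is why mAP@50-95 is typically lower than mAP@50.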

4. Experimental Results

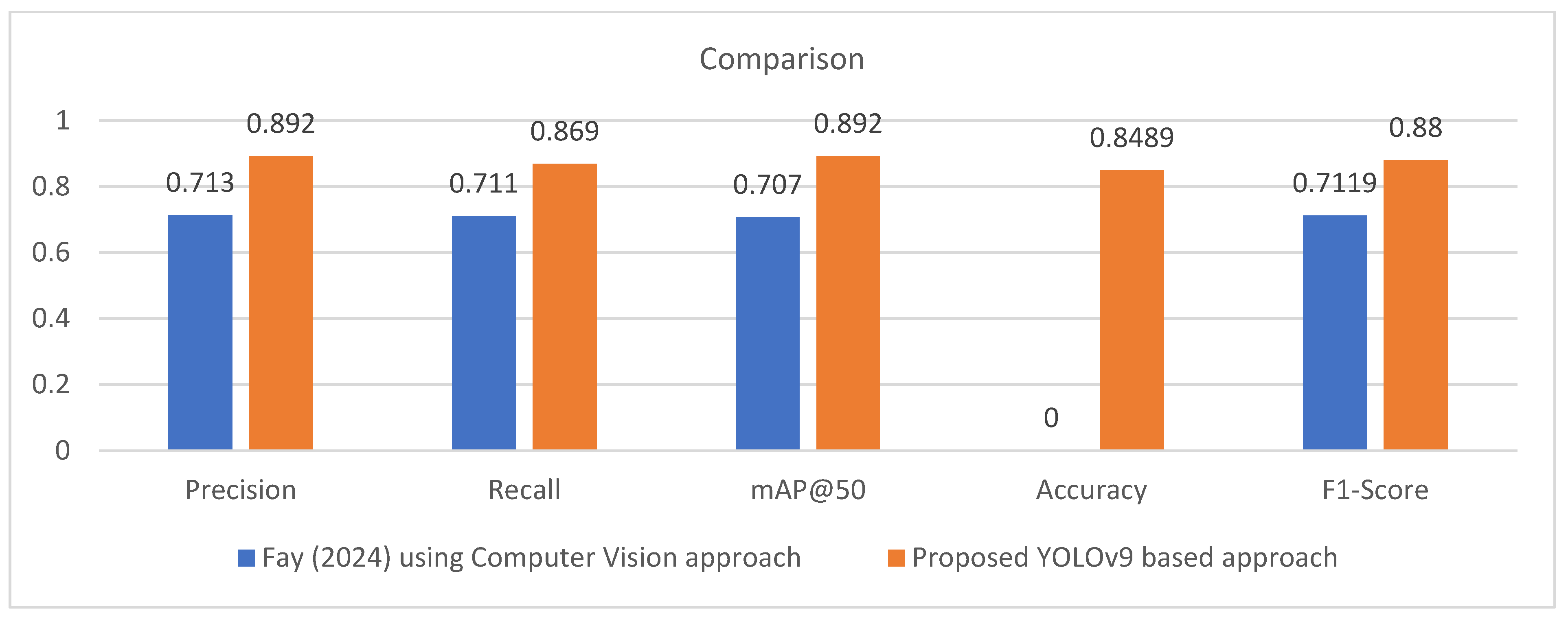

Comparison with State-of-the-Art

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Musleh, D.; Almossaeed, H.; Balhareth, F.; Alqahtani, G.; Alobaidan, N.; Altalag, J.; Aldossary, M.I. Advancing Dental Diagnostics: A Review of Artificial Intelligence Applications and Challenges in Dentistry. Big Data Cogn. Comput. 2024, 8, 66.

- Samaranayake, L.; Tuygunov, N.; Schwendicke, F.; Osathanon, T.; Khurshid, Z.; Boymuradov, S.A.; Cahyanto, A. The Transformative Role of Artificial Intelligence in Dentistry: A Comprehensive Overview. Part 1: Fundamentals of AI, and its Contemporary Applications in Dentistry. Int. Dent. J. 2025, 75, 383–396.

- Sarhan, S.; Badran, A.; Ghalwash, D.; Gamal Almalahy, H.; Abou-Bakr, A. Perception, usage, and concerns of artificial intelligence applications among postgraduate dental students: Cross-sectional study. BMC Med. Educ. 2025, 25, 856.

- Naik, S.; Vellappally, S.; Alateek, M.; Alrayyes, Y.F.; Al Kheraif, A.A.A.; Alnassar, T.M.; Alsultan, Z.M.; Thomas, N.G.; Chopra, A. Patients’ Acceptance and Intentions on Using Artificial Intelligence in Dental Diagnosis: Insights from Unified Theory of Acceptance and Use of Technology 2 Model. Int. Dent. J. 2025, 75, 103893.

- Shan, T.; Tay, F.R.; Gu, L. Application of Artificial Intelligence in Dentistry. J. Dent. Res. 2021, 100, 232–244.

- Lira, P.; Giraldi, G.; Neves, L.A. Segmentation and Feature Extraction of Panoramic Dental X-Ray Images. Int. J. Nat. Comput. Res. 2010, 1, 1–15.

- Xie, X.; Wang, L.; Wang, A. Artificial neural network modelling for deciding if extractions are necessary prior to orthodontic treatment. Angle Orthod. 2010, 80, 262–266.

- ALbahbah, A.A.; El-Bakry, H.M.; Abd-Elgahany, S. Detection of Caries in Panoramic Dental X-ray Images. Int. J. Electron. Commun. Comput. Eng. 2016, 7, 250–256.

- Na’Am, J.; Harlan, J.; Madenda, S.; Wibowo, E.P. Image Processing of Panoramic Dental X-Ray for Identifying Proximal Caries. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2017, 15, 702–708.

- Tuan, T.M.; Duc, N.T.; Van Hai, P.; Son, L.H. Dental Diagnosis from X-Ray Images using Fuzzy Rule-Based Systems. Int. J. Fuzzy Syst. Appl. 2017, 6, 1–16.

- Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111.

- Jader, G.; Fontineli, J.; Ruiz, M.; Abdalla, K.; Pithon, M.; Oliveira, L. Deep Instance Segmentation of Teeth in Panoramic X-Ray Images. In Proceedings of the 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018; pp. 400–407.

- Tuzoff, D.; Tuzova, L.; Bornstein, M.; Krasnov, A.; Kharchenko, M.; Nikolenko, S.; Sveshnikov, M.; Bednenko, G. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019, 48, 20180051.

- Chen, H.; Zhang, K.; Lyu, P.; Li, H.; Zhang, L.; Wu, J.; Lee, C.H. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci. Rep. 2019, 9, 3840.

- Geetha, V.; Aprameya, K.S. Dental Caries Diagnosis in X-ray Images using KNN Classifier. Indian J. Sci. Technol. 2019, 12, 5.

- Vinayahalingam, S.; Xi, T.; Berge, S.; Maal, T.; De Jong, G. Automated detection of third molars and mandibular nerve by deep learning. Sci. Rep. 2019, 9, 9007.

- Wang, Y.; Sun, L.; Zhang, Y.; Lv, D.; Li, Z.; Qi, W. An Adaptive Enhancement Based Hybrid CNN Model for Digital Dental X-ray Positions Classification. arXiv 2020, arXiv:2005.01509.

- You, W.; Hao, A.; Li, S.; Wang, Y.; Xia, B. Deep learning-based dental plaque detection on primary teeth: A comparison with clinical assessments. BMC Oral Health 2020, 20, 141.

- Chung, M.; Lee, J.; Park, S.; Lee, M.; Lee, C.E.; Lee, J.; Shin, Y.-G. Individual tooth detection and identification from dental panoramic x-ray images via point-wise localization and distance regularization. Artif. Intell. Med. 2021, 111, 101996.

- Leite, A.F.; Vasconcelos, K.F.; Willems, H.; Jacobs, R. Radiomics and machine learning in oral healthcare. Proteom.-Clin. Appl. 2023, 14, 1900040.

- Muresan, M.P.; Barbura, A.R.; Nedevschi, S. Teeth detection and dental problem classification in panoramic X-ray images using deep learning and image processing techniques. In Proceedings of the IEEE 16th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 3–5 September 2020; pp. 457–463.

- Sonavane, A.; Yadav, R.; Khamparia, A. Dental cavity classification of using convolutional neural network. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1022, 012116.

- Huang, Y.P.; Lee, Y.S. Deep Learning for Caries Detection Using Optical Coherence Tomography. medRxiv 2021.

- López-Janeiro, Á.; Cabañuz, C.; Blasco-Santana, L.; Ruiz-Bravo, E. A tree-based machine learning model to approach morphologic assessment of malignant salivary gland tumors. Ann. Diagn. Pathol. 2021, 56, 151869.

- Rodrigues, J.A.; Krois, J.; Schwendicke, F. Demystifying artificial intelligence and deep learning in dentistry. Braz. Oral Res. 2021, 35, e094.

- Diniz de Lima, E.; Souza Paulino, J.A.; Lira de Farias Freitas, A.P.; Viana Ferreira, J.E.; Silva Barbosa, J.S.; Bezerra Silva, D.F.; Meira Bento, P.; Araújo Maia Amorim, A.M.; Pita Melo, D. Artificial intelligence and infrared thermography as auxiliary tools in the diagnosis of temporomandibular disorder. Dentomaxillofac. Radiol. 2021, 51, 20210318.

- Babu, A.; Onesimu, J.A.; Sagayam, K.M. Artificial Intelligence in dentistry: Concepts, Applications and Research Challenges. E3S Web Conf. 2021, 297, 01074.

- Subbotin, A. Applying Machine Learning in Fog Computing Environments for Panoramic Teeth Imaging. In Proceedings of the 2021 XXIV International Conference on Soft Computing and Measurements (SCM), St. Petersburg, Russia, 26–28 May 2021; pp. 237–239.

- Muramatsu, C.; Morishita, T.; Takahashi, R.; Hayashi, T.; Nishiyama, W.; Ariji, Y.; Zhou, X.; Hara, T.; Katsumata, A.; Ariji, E.; et al. Tooth Detection and Classification on Panoramic Radiographs for Automatic Dental Chart Filing: Improved Classification by Multi-Sized Input Data. Oral Radiol. 2021, 37, 13–19.

- Imak, A.; Celebi, A.; Siddique, K.; Turkoglu, M.; Sengur, A.; Salam, I. Dental Caries Detection Using Score-Based Multi-Input Deep Convolutional Neural Network. IEEE Access 2022, 10, 18320–18329.

- Kühnisch, J.; Meyer, O.; Hesenius, M.; Hickel, R.; Gruhn, V. Caries Detection on Intraoral Images Using Artificial Intelligence. J. Dent. Res. 2022, 101, 158–165.

- Almalki, Y.E.; Din, A.I.; Ramzan, M.; Irfan, M.; Aamir, K.M.; Almalki, A.; Alotaibi, S.; Alaglan, G.; Alshamrani, H.A.; Rahman, S. Deep Learning Models for Classification of Dental Diseases Using Orthopantomography X-ray OPG Images. Sensors 2022, 22, 7370.

- AL-Ghamdi, A.; Ragab, M.; AlGhamdi, S.; Asseri, A.; Mansour, R.; Koundal, D. Detection of Dental Diseases through X-Ray Images Using Neural Search Architecture Network. Comput. Intell. Neurosci. 2022, 2022, 3500552.

- Hung, K.F.; Ai, Q.Y.H.; King, A.D.; Bornstein, M.M.; Wong, L.M.; Leung, Y.Y. Automatic Detection and Segmentation of Morphological Changes of the Maxillary Sinus Mucosa on Cone-Beam Computed Tomography Images Using a Three-Dimensional Convolutional Neural Network. Clin. Oral Investig. 2022, 26, 3987–3998.

- Zhou, X.; Yu, G.; Yin, Q.; Liu, Y.; Zhang, Z.; Sun, J. Context Aware Convolutional Neural Network for Children Caries Diagnosis on Dental Panoramic Radiographs. Comput. Math. Methods Med. 2022, 2022, 6029245.

- Bhat, S.; Birajdar, G.K.; Patil, M.D. A comprehensive survey of deep learning algorithms and applications in dental radiograph analysis. Healthc. Analytics 2023, 4, 100282.

- Sunnetci, K.M.; Ulukaya, S.; Alkan, A. Periodontal Bone Loss Detection Based on Hybrid Deep Learning and Machine Learning Models with a User-Friendly Application. Biomed. Signal Process. Control 2022, 77, 103844.

- Anil, S.; Porwal, P.; Porwal, A. Transforming Dental Caries Diagnosis through Artificial Intelligence-Based Techniques. Cureus 2023, 15, e41694.

- Zhu, J.; Chen, Z.; Zhao, J.; Yu, Y.; Li, X.; Shi, K.; Zhang, F.; Yu, F.; Shi, K.; Sun, Z.; et al. Artificial Intelligence in the Diagnosis of Dental Diseases on Panoramic Radiographs: A Preliminary Study. BMC Oral Health 2023, 23, 358.

- Alharbi, S.S.; Alhasson, H.F. Exploring the Applications of Artificial Intelligence in Dental Image Detection: A Systematic Review. Diagnostics 2024, 14, 2442.

- Schwendicke, F.; Tichy, A. The expanding role of AI in dentistry: Beyond image analysis. Br. Dent. J. 2025, 238, 800–801.

- Fay, R. Dentix Dataset. Roboflow Universe. April 2024. Available online: https://universe.roboflow.com/fay-regu8/dentix (accessed on 15 December 2024).

- Wang, C.; Yeh, I.; Mark Liao, H. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024.

- Ahmed, M.S.; Rahman, A.; AlGhamdi, F.; AlDakheel, S.; Hakami, H.; AlJumah, A.; AlIbrahim, Z.; Youldash, M.; Alam Khan, M.A.; Basheer Ahmed, M.I. Joint Diagnosis of Pneumonia, COVID-19, and Tuberculosis from Chest X-ray Images: A Deep Learning Approach. Diagnostics 2023, 13, 2562.

- Surdu, A.; Budala, D.G.; Luchian, I.; Foia, L.G.; Botnariu, G.E.; Scutariu, M.M. Using AI in Optimizing Oral and Dental Diagnoses-A Narrative Review. Diagnostics 2024, 14, 2804.

- Masoumeh, F.N.; Mohsen, A.; Elyas, I. Transforming dental diagnostics with artificial intelligence: Advanced integration of ChatGPT and large language models for patient care. Front. Dent. Med. 2025, 5, 1456208.

- Najeeb, M.; Islam, S. Artificial intelligence (AI) in restorative dentistry: Current trends and future prospects. BMC Oral Health 2025, 25, 592.

- Gollapalli, M.; Rahman, A.; Kudos, S.A.; Foula, M.S.; Alkhalifa, A.M.; Albisher, H.M.; Al-Hariri, M.T.; Mohammad, N. Appendicitis Diagnosis: Ensemble Machine Learning and Explainable Artificial Intelligence-Based Comprehensive Approach. Big Data Cogn. Comput. 2024, 8, 108.

| Study | Aim | Method | Dataset | Preprocessing | Feature Extraction | Result |
|---|---|---|---|---|---|---|
| [26] (2021) | Identifying cavities. | CNN-based method. | Kaggle dataset. 74 images | Python image processing libraries | N/A | Maximum accuracy of 71.43%. |
| [23] (2022) | Detecting caries. | CNN | From National Yang-Ming University. 100 images | Self-developed OCT, periapical films, and Micro-CT for dental assessment. | Convolutions with small kernels extract local features such as edges, impulses, and noise in images. | Accuracy 95.21%. Sensitivity 98.85%. Specificity 89.83%. PPV and NPV were 93.48% and 98.15%, respectively. |
| [29] (2021) | Detecting and classifying teeth in PDR. | CNN-based method. | Asahi University Hospital. 100 images | Segmentation of the lower mandible contour to identify the approximate location of teeth. | Features were extracted from each input image using convolution and residual layers before merging them. | Detection sensitivity 96.4%, with 0.5% false positives. The classification accuracy for tooth types and conditions was 93.2% and 98.0%, respectively. |
| [30] (2022) | Detection of dental caries. | MI-DCNNE | From private oral and dental clinics. 340 images | Raw images enhanced with a sharpening filter and intensity adjustment to increase contrast and highlight problematic regions. | CNN | Accuracy 99.13%. |
| [31] (2022) | Identifying caries from intraoral pictures. | CNNs | Anonymized photographs of permanent teeth. 2417 images | Image augmentation, transfer learning, and normalization to compensate for under- and overexposure. | MobileNetV2 architecture, which uses inverted residual blocks. | Across all test photos, the CNN correctly identified cavities in 92.5% of cases. |
| [32] (2022) | Automated dental problem detection. | YOLOv3. | OPGs acquired with a DSLR camera and from clinics. 1200 images | Data augmentation: rotation range, zoom range, shear range, horizontal flip. | CNN | Accuracy of 99.33%. |
| [33] (2022) | Classifying PDR images into cavities, fillings, and implants. | NASNet. | Kaggle PDR dataset. 245 images | Data augmentation using several operations, including Gaussian blur and noise. | NASNet, AlexNet, CNN. | Accuracy of 96.51% with data augmentation and 93.36% without augmentation. |
| [34] (2022) | Enhancing tooth decay diagnosis in PDR using CNN, especially in children. | ResNet-based CNN. | Three Chinese hospitals. 210 images | Standard image preprocessing | CNN model. | The context-aware CNN model performs better than the typical CNN baseline in terms of accuracy, precision, recall, F1-score, and AUC. |
| [38] (2023) | Diagnosing various dental illnesses using PDR. | CNNs, specifically two models: BDU-Net and nnU-Net. | Stomatology Hospital of Zhejiang Chinese Medical University. 1996 images | Image resampling, image normalization, image spacing, patch size setting. | CNNs: BDU-Net and nnU-Net | Sensitivity, specificity, Youden's index (sensitivity + specificity − 1), and AUC, respectively: impacted teeth 0.964, 0.996, 0.960, 0.980; full crowns 0.953, 0.998, 0.951, 0.975; residual roots 0.871, 0.999, 0.870, 0.935; missing teeth 0.885, 0.994, 0.879, 0.939; caries 0.554, 0.990, 0.544, 0.772. |

| Operation | Value/Parameter |
|---|---|
| Mosaic | 1.0 |
| Flip (horizontal) | 0.5 |
| Auto augment | randaugment |
| Erasing | 0.4 |

| Class | Instances | Accuracy | Precision | Recall | F1-score | mAP@50 | mAP@50-95 |
|---|---|---|---|---|---|---|---|
| All | 1143 | 0.8489 | 0.892 | 0.869 | 0.880 | 0.892 | 0.488 |
| Crown-bridge | 397 | 0.907 | 0.959 | 0.975 | 0.9669 | 0.988 | 0.571 |
| Filling | 491 | 0.795 | 0.829 | 0.721 | 0.7712 | 0.788 | 0.371 |
| Implant | 45 | 0.892 | 0.939 | 0.956 | 0.9474 | 0.956 | 0.459 |
| Root Canal Obturation | 210 | 0.8016 | 0.84 | 0.827 | 0.8334 | 0.827 | 0.39 |
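As a quick consistency check on the per-class results, the F1-scores can be recomputed from the reported precision and recall using the harmonic-mean definition given in Section 3.3. The sketch below uses only the values from the table. The variable and function names are hypothetical, and the tolerance of 0.001 is an assumption that allows for rounding in the reported figures.

```python
# (instances, precision, recall, reported F1) copied from the results table.
rows = {
    "All": (1143, 0.892, 0.869, 0.880),
    "Crown-bridge": (397, 0.959, 0.975, 0.9669),
    "Filling": (491, 0.829, 0.721, 0.7712),
    "Implant": (45, 0.939, 0.956, 0.9474),
    "Root Canal Obturation": (210, 0.84, 0.827, 0.8334),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Largest gap between recomputed and reported F1 over all rows.
max_gap = 0.0
for name, (instances, p, r, reported) in rows.items():
    recomputed = f1_score(p, r)
    max_gap = max(max_gap, abs(recomputed - reported))
    print(f"{name:22s} recomputed F1 = {recomputed:.4f} (reported {reported})")

# The per-class instance counts should add up to the "All" row.
class_total = sum(n for name, (n, _, _, _) in rows.items() if name != "All")
print("sum of class instances:", class_total)
print("consistent within 0.001:", max_gap < 1e-3)
```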

| Parameter | Value |
|---|---|
| Epochs | 100 |
| Batch size | 16 |
| Image size | 640 × 640 |
| Optimizer and learning rate | AdamW with lr = 0.00125 |
| Momentum | 0.9 |
| Weight decay | 0.0005 |
| Dropout | 0.0 |
| Warmup epochs | 3.0 |
| Box loss gain | 7.5 |
| Class loss gain | 0.5 |
| DFL loss gain | 1.5 |
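For readers who want to reproduce a comparable setup, the augmentation and hyperparameter tables can be collected into a single training configuration. The sketch below assumes the Ultralytics Python package, which the source does not name as the authors' toolchain. The argument names follow Ultralytics' training settings, while the `yolov9c.pt` checkpoint, the `train` helper, and the dataset YAML path are hypothetical placeholders. In Ultralytics' documented defaults, `auto_augment` and `erasing` are described as classification-training options, so they may have no effect on a detection run.

```python
from typing import Any, Dict

# Values copied from the augmentation and hyperparameter tables;
# the keys are Ultralytics training argument names (an assumed mapping).
TRAIN_ARGS: Dict[str, Any] = {
    "epochs": 100,
    "batch": 16,
    "imgsz": 640,
    "optimizer": "AdamW",
    "lr0": 0.00125,
    "momentum": 0.9,
    "weight_decay": 0.0005,
    "dropout": 0.0,
    "warmup_epochs": 3.0,
    "box": 7.5,   # box loss gain
    "cls": 0.5,   # class loss gain
    "dfl": 1.5,   # distribution focal loss gain
    "mosaic": 1.0,
    "fliplr": 0.5,
    "auto_augment": "randaugment",
    "erasing": 0.4,
}


def train(data_yaml: str, weights: str = "yolov9c.pt"):
    """Start a training run; the model variant is an assumption."""
    # Imported lazily so the configuration can be inspected
    # without the ultralytics package installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_ARGS)


if __name__ == "__main__":
    for key, value in TRAIN_ARGS.items():
        print(f"{key:>14s} = {value}")
    # train("dentix.yaml")  # hypothetical dataset config; uncomment to run
```

Keeping the settings in a plain dictionary makes it easy to log them alongside the results or to compare them against another run.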

| Study | Precision | Recall | mAP@50 | Accuracy | F1-Score |
|---|---|---|---|---|---|
| Fay (2024) [42] using Computer Vision approach | 0.713 | 0.711 | 0.707 | Not reported | 0.7119 |
| Proposed YOLOv9-based approach | 0.892 | 0.869 | 0.892 | 0.8489 | 0.880 |


© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.


Musleh, D.; Rahman, A.; Almossaeed, H.; Balhareth, F.; Alqahtani, G.; Alobaidan, N.; Altalag, J.; Aldossary, M.I.; Alhaidari, F. AI-Enabled Diagnosis Using YOLOv9: Leveraging X-Ray Image Analysis in Dentistry. Big Data Cogn. Comput. 2026, 10, 16. https://doi.org/10.3390/bdcc10010016
