Abstract

This paper presents a device that converts sound frequencies into colors to improve accessibility and communication for people with hearing impairments. The device combines a well-defined mathematical model with carefully selected hardware to convert sound to color accurately, supported by dedicated automatic processing software suitable for standardization. Experimental evaluation shows high accuracy for frequencies below 1000 Hz, whereas errors increase at higher frequencies, which calls for further work on noise filtering and hardware optimization. The device shows promise for applications in education, art, and therapy. The study acknowledges its limitations and proposes future research to generalize the frequency-to-color models and to improve usability across a broader range of hearing impairments. Feedback from the hearing-impaired community will play a critical role in developing the device for practical use. Overall, this sound-to-color device represents a significant step toward better accessibility and communication for people with hearing impairments, and continued research could extend its benefits to other areas and ultimately improve their quality of life.

1. Introduction

Visualizing sound through RGB colors is beneficial for people with hearing impairments or conditions that affect auditory perception. By translating sound into a visual format, such as color patterns or lighting effects, sound information can be perceived and interpreted in a different way, improving the overall experience and understanding of auditory content.

In the rapidly evolving field of music technology, recent advances have introduced interfaces tailored to the individual needs of each musician, which musicians can design, customize, and integrate into their creative processes [1]. Despite this progress, a significant portion of the population still encounters barriers that prevent them from actively making and listening to music [2].

Rushton et al. [3] examine the importance of music for children with profound and multiple learning disabilities, focusing on music education, music play, and the role of music in the home. They discuss the difference between music education and music therapy, propose a new model of music education that emphasizes intrinsic musical value, and present parents’ perspectives on how music helps regulate mood and adds structure to children’s lives. The findings highlight the importance of music in the lives of children with learning disabilities and provide valuable information for further research and practice.

Ox [4] examines the ecologically organized nature of color, the role of image schemas, and the potential of color systems as semiotic tools for scientific visualization. Treating color as a semiotic system, that is, as a communication tool with symbolic meaning, makes it a valuable resource for visually conveying complex information. However, further research and empirical evidence are needed to support and extend these concepts.

According to James et al. [5], the use of color-based representations for music offers advantages such as a multisensory experience, improved understanding of musical features, accessibility through a web-based version, applications in sound design, and potential for genre classification. However, there are limitations, including subjectivity in color perception, the complexity of designing a mapping system, the need for a large-scale database, challenges in genre classification, and a limited scope of application outside of music.

In his review, Vickery [6] points out that the move to full-color representation of music and notes offers advantages such as expanded capabilities, improved efficiency in rendering sound events, and nuanced sound representation. Cross-modal comparisons, technological advances, and the potential for a digital Super-Score format contribute to the evolution of practices for representing music through notes and, from there, through color. Challenges in this approach include learning, interpretation, competition for space, subjectivity, idiosyncrasy, technical implementation, and the need for continuous adaptation to modern hardware and software techniques.

Azar et al. [7] investigated sound visualization techniques that give hearing-impaired users enhanced awareness of their surroundings. Their software package combines various visualization options and provides comprehensive information derived from sound. The program is being evaluated through a user study, and future work includes hardware implementation and improvements in speech recognition and communication options. Limitations include reliance on a simulation environment only, the need for hardware integration, potential technical complexity, specific user interface requirements, and a lack of detailed study results.

Di Pasquale [8] proposes a scale of 12 colors, each corresponding to a sound of a specific frequency. The color number is calculated in base 7 or base 10. The proposed scale uses a well-defined mathematical apparatus to standardize the correspondence between sound frequency and color.

Benitez et al. [9] proposed a mobile application called S.A.R.A. (Synthetic Augmented Reality Application), which turns mobile devices into a wearable music interface. The app captures the environment through the device’s camera and translates it into synaesthesia-inspired sounds. Originally developed as a stand-alone application, it has evolved into a performance tool integrated into the choreography of a dance troupe. The app allows performers to generate their own sounds and visuals from their movements, offering a unique and customizable artistic experience. While it has advantages such as an innovative interface and an open-source nature, its limitations include portability considerations, technical requirements, and potential barriers to accessibility.

The commercial integrated circuit LP3950 (Texas Instruments Inc., Dallas, TX, USA) converts sound into three analog RGB channels for driving LEDs. It can be configured to respond to either the amplitude or the frequency of the sound and supports a mono or stereo microphone input. While the LP3950 offers sound-to-light conversion and operational flexibility, it is limited in advanced audio processing, noise reduction, and integration with digital systems, and it depends on external components. These factors must be considered when evaluating its suitability for specific applications.

Fried et al. [10] investigated the participation of preverbal children with profound and multiple disabilities in the design of a personalized multisensory music experience. The study used participatory design methods together with a variety of qualitative and quantitative methodologies. The authors highlight the diversity of the children’s interaction strategies, the accessibility limitations of their design, and the importance of a multifaceted approach for drawing informed conclusions, and they present recommendations for developing inclusive multisensory experiences for this user group. A disadvantage of this approach is that it requires a dedicated room that stimulates multiple senses (color, touch, and vibration) while music is played, which greatly limits access for people with disabilities in other cities or countries.

In recent years, the field has seen a surge in studies on beamforming techniques and their real-world applications [11]. However, the proliferation of algorithms from different authors and research groups calls for a comprehensive literature review to improve awareness and prevent confusion among scientists and technicians in the field.

Chen et al. [12] investigated color–shape associations in neurotypical Japanese individuals, focusing on the role of phonological information in constructing these associations. The research employs two experiments, a direct questionnaire survey and an Implicit Association Test (IAT), to compare color–shape associations between deaf and hearing participants. The questionnaire results indicate similar patterns of color–shape associations (red circle, yellow triangle, and blue square) in both groups. However, the IAT results show that deaf participants did not exhibit the facilitated processing of congruent pairs observed in hearing participants. These findings suggest that phonological information likely contributes to the construction of implicit color–shape associations, which would explain why this effect was absent in deaf individuals. One limitation is the sample size in Experiment 2, which included 19 deaf and 24 hearing participants. Despite the use of bootstrap analysis, the small sample may have reduced statistical power, so the IAT performance of the deaf participants may not have been adequately captured. Future studies with larger samples could provide more robust insights into the IAT performance of deaf individuals and allow more accurate comparisons with hearing participants.

Bottari et al. [13] contributed to the understanding of faster response times to visual stimuli in profoundly deaf individuals. By comparing deaf and hearing participants in a simple detection task and a shape discrimination task, the research investigates whether enhanced reactivity in the deaf can be solely attributed to faster orienting of visual attention. The findings indicate that the advantage of faster response times in the deaf is not limited to peripheral targets, suggesting a more complex underlying mechanism. The limitation of this work is that the study does not identify the specific reorganized sensory processing that may account for the observed advantages in the deaf participants. Further research is needed to explore the underlying neural mechanisms and potential differences in attentional gradients between deaf and hearing individuals.

Carbone et al. [14] propose a multidisciplinary approach that leverages advances in artificial cognition, advanced sensing, data fusion, and machine learning to enhance the emotional experience of music. Using an adaptive neural framework together with brain and multisensory performance characteristics, the research aims to provide individualized visual and tactile listening experiences for both hearing-impaired and normal-hearing individuals. The proposed algorithmic mapping of fine-grained emotions and sensing characteristics to brain sensing performance could lead to new sensory-centric applications and to improved music emotion perception, enjoyment, learning, and performance. However, implementing such an approach may require significant computational resources and expertise, which makes wide adoption challenging. The effectiveness of the mapping and its ability to capture the full complexity of human emotions and sensory experiences still require validation and refinement through real-world testing and user studies, and seamless integration and synchronization between sensory modalities may be difficult to achieve.

Palmer et al. [15] provide robust experimental evidence for cross-modal matches between music and colors, as well as between emotionally expressive faces and colors, supporting the existence of music-to-color associations mediated by emotion. The research demonstrates consistent and highly reliable associations between high-level dimensions of classical orchestral music and appearance dimensions of color. Emotional ratings for music correlate strongly with the corresponding emotional ratings for colors and emotionally expressive faces, further supporting the emotional mediation hypothesis. The consistency of the results across participant samples from the United States and Mexico suggests that these associations may be universal. However, because only these two samples were studied, it remains unclear whether similar results would appear in cultures that use non-Western musical scales and structures, which limits the generalizability of the findings. The study also does not explore the neural mechanisms underlying the observed cross-modal matches, and its focus on classical orchestral music may not capture associations between other music genres and colors.

Brittney [16] focuses on the importance of acoustics in assessing the speech production of young children with hearing loss who use hearing aids and cochlear implants. The Acoustic Monitoring Protocol (AMP) provides frequency-specific information on speech production skills, offering insight into a child’s access to acoustic information. The research examines speech production trends and observable patterns using the AMP, providing critical information for progress monitoring and communication. It identifies positive correlations between speech production and the time enrolled in a specialized preschool for children with hearing loss, with potential implications for intervention and support. The research focuses on children who use hearing aids, bone-anchored hearing aids, or cochlear implants and communicate through listening and spoken language, potentially excluding other communication modalities. The AMP’s analysis of three error patterns may not fully capture the complexity of speech production, and the study does not explore neural mechanisms or speech therapy approaches, leaving room for further research.

Lerousseau et al. [17] explore musical training as a therapeutic approach to support auditory remediation and to improve language perception and production in cochlear implant recipients. Music training engages cognitive abilities such as temporal prediction, hierarchical processing, and auditory–motor interaction, which are highly relevant to language and communication. Because music making requires precise temporal coordination and interpersonal synchrony, it fosters cognitive-motor skills and social behaviors, and music training has shown effects on speech and language processing, suggesting its potential for improving communication in hearing-impaired individuals. The authors acknowledge that studies on the effect of music training on interpersonal verbal coordination and social skills in individuals with hearing loss are limited and that more robust evidence is needed. The specific mechanisms and long-term effects of music, especially ensemble playing, on the social aspects of language require further investigation, and the study does not address the challenges of implementing such interventions in clinical settings or educational programs. Moreover, the impact of music-based therapies on language outcomes may vary considerably among cochlear implant recipients due to large inter-individual variability in language development.

The study by de Camargo et al. [18] examined the relationship between word recognition performance and the degree of hearing loss in children with bilateral sensorineural hearing loss (SNHL). The authors used Speech Intelligibility Index (SII) values as indicators and compared the repetition of real words with the repetition of nonsense words, providing insight into how children with hearing loss perform in different speech perception scenarios. The SII values allow an objective assessment of how hearing ability relates to speech perception performance. The research focuses solely on children whose main mode of communication is oral language, potentially excluding children with other communication modalities, and it does not examine the cognitive or language processing mechanisms that may influence word recognition. The results show no consistent relationship between hearing ability and speech perception performance, suggesting the need for further research into contributing factors and individual variation.

From the review of the available literature, it can be summarized that the advantages of color-based representation of music include multisensory experience, improved understanding of musical characteristics, and accessibility. Limitations include subjectivity in color perception, complexity in designing visualization systems, and limited application outside of music.

The advantages of representing sound by color include expanded possibilities and nuanced sound representation. Challenges include learning and interpretation, competition for vertical space, subjectivity and idiosyncrasy, technical implementation, and adaptation to modern hardware and software.

Sound visualization for the hearing impaired provides an enhanced sense of the surrounding environment. Limitations include dependence on simulation environments, hardware integration, technical complexities, specific user interface requirements, and limited study results.

For mapping sound frequency to color, no standardized correspondence has been widely adopted, and further research is needed in this area.

Personalized systems for multisensory musical experiences focus on preverbal children with disabilities. Challenges include accessibility limitations and the need for specific physical space for their implementation.

The goal of the present work is to create an affordable device that converts sound waves into color.

The proposed device is intended to offer a seamless user experience and adaptability. Designed with clarity and simplicity in mind, it accommodates users with varying technical expertise. To foster collaboration and inclusivity, all hardware specifications, schematics, and design files are openly accessible for study, modification, and upgrades.

This device seamlessly integrates into existing setups and systems, with support for standard interfaces and connection protocols. Equipped with a microphone or audio input module, it efficiently captures sound signals and processes them in real-time. The device offers dynamic control over colored light output, utilizing RGB LEDs to visualize sound aspects with a wide range of colors and intensities.

Customizability is a core feature, allowing users to personalize the mapping between sound features and color visuals to suit their preferences. The hardware is scalable, enabling expansion for multiple audio channels and for synchronized color output. Complex audio sources are handled with efficiency, ensuring effective processing and visualization.

Energy efficiency is prioritized, with considerations for low power consumption and battery operability, providing longer run times and enhanced portability. By utilizing readily available, cost-effective components, the device becomes accessible to diverse user groups and finds applications across various domains.

This work is organized as follows. The Materials and Methods section defines the calculation and design methods of the device, presents a methodology for reference measurements, describes the selected measurement, control, and visualization hardware and the methods for analyzing its output, and outlines the statistical methods used. The Results section presents a circuit diagram, a block diagram of the software, and a standard operating procedure for the proposed device, together with a comparative analysis of the device against an online calculator and against data from the literature. The Discussion compares the results with the available literature. The Conclusion summarizes the results and suggests directions for future research.

The following contributions can be summarized: Software tools have been developed for the implementation of the proposed procedures for the analysis of sound frequencies, with the aim of visualizing them as colors. A model of an automated system was created that allows the conversion of sound frequencies to color. The achieved accuracy was experimentally evaluated. It was found that the accuracy of the conversion depends on the frequency of the sound. Error rates have been shown to be greater at high sound wave frequencies (above 1000 Hz).

2. Materials and Methods

2.1. Conversion from Sound Frequency to Color Values

The methodology and mathematical formulation of di Pasquale [8] were used. According to this methodology, the sound frequency F, Hz, is multiplied by 2^40 (i.e., transposed up by 40 octaves), which moves it into the range of visible light, expressed here in GHz. The wavelength λ, nm, is then calculated using the following formula:

$$\lambda = \frac{V_L}{F \cdot 2^{40}}$$

where VL, nm/s, is the speed of light in vacuum (VL = 299,792,458,000,000,000 nm/s) and F, Hz, is the frequency of the sound.

For example, at F = 699 Hz, multiplying by 2^40 gives 768,558,627,815,424 Hz, or 768,558.627815424 GHz. Dividing the speed of light by this frequency (299,792,458,000,000,000/768,558,627,815,424) gives λ ≈ 390.071 nm, which lies at the short-wavelength (violet-blue) end of the visible spectrum.
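The arithmetic of this worked example can be reproduced with a few lines of portable C++ (the language of the device firmware). The sketch below is illustrative only and is not the authors’ code; the names `transposedFrequencyHz`, `wavelengthNm`, `kSpeedOfLightNmPerS`, and `kOctaveShift` are hypothetical. It uses `std::ldexp` so that the multiplication by 2^40 is exact in floating point.

```cpp
#include <cassert>
#include <cmath>

// Speed of light in vacuum, in nm/s (VL in the text).
constexpr double kSpeedOfLightNmPerS = 299792458.0e9;

// Number of octaves by which the audio frequency is transposed upward.
constexpr int kOctaveShift = 40;

// Hypothetical helper: audio frequency (Hz) multiplied by 2^40.
double transposedFrequencyHz(double audioHz) {
    assert(audioHz > 0.0);
    return std::ldexp(audioHz, kOctaveShift);  // audioHz * 2^40, exact scaling
}

// Hypothetical helper: wavelength in nm, lambda = VL / (F * 2^40).
double wavelengthNm(double audioHz) {
    return kSpeedOfLightNmPerS / transposedFrequencyHz(audioHz);
}
```

For F = 699 Hz, `transposedFrequencyHz(699.0)` yields 768,558,627,815,424 Hz and `wavelengthNm(699.0)` returns approximately 390.071 nm, matching the worked example.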

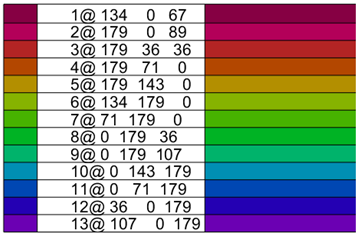

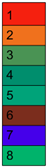

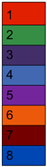

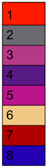

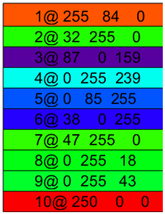

Table 1 presents the sound frequencies and their corresponding colors in the RGB model. The color preview is in reverse order.

Table 1.

Frequencies and RGB values of colors.

2.2. Selection of Measuring, Control and Visualization Technical Means

The following hardware components have been selected to build a technical tool for visualizing sound through color:

- Nano-compatible single-board microcomputer with an ATmega328P microcontroller and a CH340 USB-to-serial converter (Kuongshun Electronic Ltd., Shenzhen, China).

- Digital RGB LED with WS2811 driver (Pololu Corp., Las Vegas, NV, USA).

- Electret microphone with MAX9814 amplifier (Adafruit Industries, New York City, NY, USA).

- USB AC/DC Charger Adapter 5 V DC, 2 A (Hama GmbH & Co. KG, Monheim, Bavaria, Germany).

2.3. Design of Decorative Box of the Device

Although encasing the device in a box with a decorative design is optional, it can improve the overall appeal, user experience, and sturdiness of the device, making it more desirable and engaging for users.

Decorative elements from Vector Stock (https://www.vectorstock.com, accessed on 3 June 2023) were used.

The raster decorative elements were vectorized in Inkscape ver. 1.2.2 (https://inkscape.org, accessed on 4 June 2023), scaled to 10 × 10 cm, and saved in *.SVG format.

The two-dimensional vector images were imported into the AUTODESK TinkerCAD online application (Autodesk Inc., San Francisco, CA, USA) and converted into three-dimensional objects 5 mm thick.

Fabrication was prepared in UltiMaker Cura ver. 5.3.1 (Ultimaker B.V., Utrecht, The Netherlands). PLA filament was used, with a wall thickness of 0.2 mm in two layers. The print speed was 70 mm/s, the infill used a grid pattern, the extruder temperature was 210 °C, and the build plate temperature was 40 °C.

The generated G-code files were analyzed with the online application NC Viewer ver. 1.1.3 (https://ncviewer.com, accessed on 21 June 2023).

A 3D printer, Ender-3 Neo (Creality 3D Technology Co., Ltd., Shenzhen, China), was used to make the decorative elements.

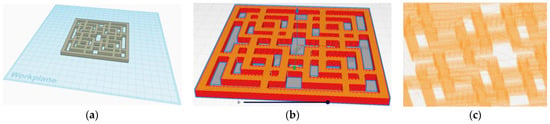

Figure 1 shows the stages of preparing a decorative element. The 2D element was imported into the TinkerCAD online application, given a 3D shape, and saved as an *.STL file. It was then loaded into UltiMaker Cura and configured for 3D printing, and the generated G-code file was analyzed in the online NC Viewer application.

Figure 1.

Stages of preparation of 3D decorative element. (a) TinkerCAD; (b) UltiMaker Cura; (c) NC Viewer.

2.4. Assessment of the Potential of a Proposed Device through Comparative Analysis

Using benchmarking, this study evaluated the proposed device against existing solutions in the subject area. This technique allows one to assess the extent to which the obtained results improve the current state of the subject area, providing valuable information to increase its competitiveness and potential market value. By establishing a solid foundation for future research, development, and engineering, benchmarking facilitates informed decision-making and enhances the overall evaluation of the proposed device’s performance.

The evaluation criterion was the agreement between the colors produced by the device and the colors generated by an online calculator or an existing method for the same sound frequencies. The online calculator used is “The Color of Sound” (https://www.flutopedia.com/sound_color.htm, accessed on 14 July 2023).

RGB values are converted to the CIELAB (L*a*b*) color space. The color difference ΔE is determined by the following formula:

$$\Delta E = \sqrt{(L_c - L_a)^2 + (a_c - a_a)^2 + (b_c - b_a)^2}$$

where Lc, ac, bc are the color components determined with the proposed device, and La, aa, ba are the color components calculated by the online calculator.

ΔE is 0 for identical colors and increases as the colors diverge: the closer it is to 0, the closer the proposed device’s colors are to those of the online calculator, and larger values indicate increasingly different colors.
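To make this comparison reproducible, the sketch below converts 8-bit RGB values to CIELAB and computes the Euclidean (CIE76) difference between a device color and a reference color. The paper does not state which RGB space or white point was used; this sketch assumes sRGB with a D65 reference white, and all names (`Lab`, `rgbToLab`, `deltaE76`, and the helpers) are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Lab { double L, a, b; };

// sRGB gamma expansion: 8-bit channel -> linear value in [0, 1].
double srgbToLinear(int channel) {
    assert(channel >= 0 && channel <= 255);
    double c = channel / 255.0;
    return c <= 0.04045 ? c / 12.92 : std::pow((c + 0.055) / 1.055, 2.4);
}

// CIELAB companding function f(t).
double labF(double t) {
    constexpr double delta = 6.0 / 29.0;
    return t > delta * delta * delta ? std::cbrt(t)
                                     : t / (3.0 * delta * delta) + 4.0 / 29.0;
}

// Converts an 8-bit sRGB triple to CIELAB (assumed D65 reference white).
Lab rgbToLab(int r8, int g8, int b8) {
    double r = srgbToLinear(r8), g = srgbToLinear(g8), b = srgbToLinear(b8);
    // Linear sRGB -> CIE XYZ (D65).
    double x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b;
    double y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b;
    double z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b;
    // Normalize by the D65 white point, then apply f(t).
    double fx = labF(x / 0.95047), fy = labF(y / 1.0), fz = labF(z / 1.08883);
    return {116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)};
}

// CIE76 color difference: Euclidean distance in CIELAB.
double deltaE76(const Lab& device, const Lab& reference) {
    double dL = device.L - reference.L;
    double da = device.a - reference.a;
    double db = device.b - reference.b;
    return std::sqrt(dL * dL + da * da + db * db);
}
```

Under these assumptions, identical colors give ΔE = 0, and white versus black gives ΔE ≈ 100. Perceptually weighted formulas such as CIEDE2000 are a common alternative to this plain Euclidean distance.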

A comparative analysis was performed on data from Benitez et al. [9], who present a three-decade sound-to-color conversion scale. Data for the period 1911–2004 were used.

2.5. Process of Collecting Data for the Experimental Evaluation of the Device’s Accuracy

Collecting data for the experimental evaluation of a device’s accuracy requires careful planning and execution to ensure reliable and meaningful results, and the protocol depends on the nature of the device and its intended application. The data collection process for the proposed device follows a commonly used methodology [19]. Table 2 presents the stages of the process of collecting data for the experimental evaluation of the device.

Table 2.

Stages of the process of collecting data for the experimental evaluation of a device’s accuracy.

2.6. Determining Uncertainty and Quality Assurance

Defining uncertainty and implementing quality assurance measures are important steps in obtaining reliable and credible measurement results. They provide a framework for evaluating the accuracy and consistency of measurements, enabling informed decision making, and maintaining sufficiently high standards in the field of sound-to-color conversion.

Table 3 presents the steps involved in uncertainty definition and quality assurance. Each stage is described along with its purpose and the commonly used methods or practices associated with it [20].

Table 3.

Stages of determining uncertainty and quality assurance of the proposed device.

3. Results and Discussion

3.1. Results

3.1.1. Construction and Setup of an Acoustic Device

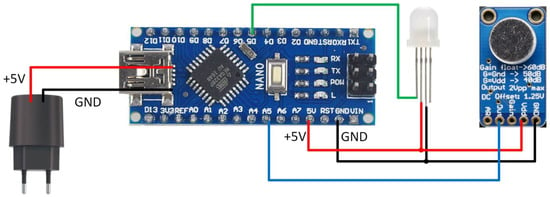

Figure 2 presents a circuit diagram of the proposed device. The circuit can be powered either by an adapter or via the USB port of a personal computer. Because the digital RGB LED and the microphone module draw little current (<100 mA), they are powered directly from the single-board microcomputer. The RGB LED is controlled via digital pin D5, and the microphone output is connected to analog input A5.

Figure 2.

Electrical schematic of the proposed device—general view.

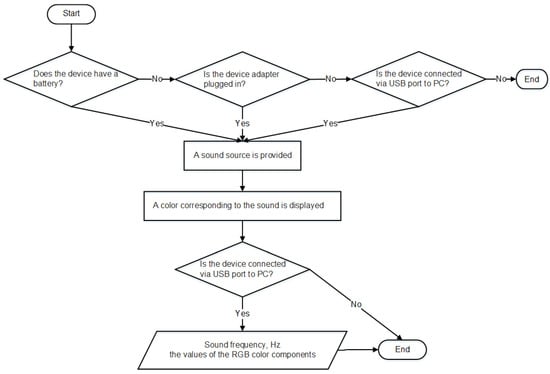

A battery (power bank) can be added to the device. When powered, the device responds to each sound source by displaying the corresponding color; when connected to a computer, it also reports the sound frequency in hertz and the values of the RGB color components. Figure 3 shows a flowchart of the standard operating procedure for handling the device.

Figure 3.

Flowchart of the standard operating procedure.

3.1.2. Standard Operating Procedure of the Proposed Device

The standard operating procedure begins by checking for power from a battery or from a computer’s USB port. If a sound source is present, the device determines the sound frequency and converts it to a color. When connected via USB, the device also transmits the sound frequency and color data to the PC.

3.1.3. Control Program for the Proposed Device

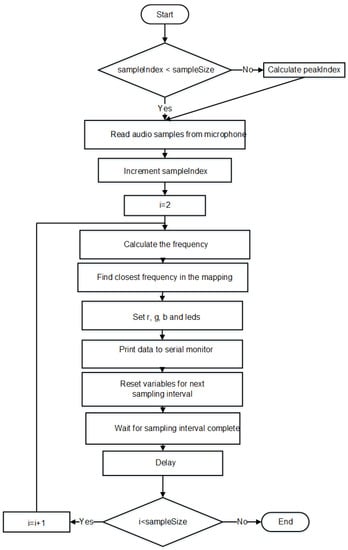

The control program for the proposed device is written in Arduino-compatible C++ and uses the FastLED library to control digital RGB LEDs. The complete program listing is presented in Appendix A.

This program runs in the following order:

- The FastLED library is included, and constants, variables, and arrays are defined for the RGB LED and color components. Two constant arrays are initialized for the frequencies and the color components.

- The necessary configurations are set up in the setup() function, such as initializing the serial communication, setting the analog pin for the microphone as input, and adding the RGB LED configuration.

- The main logic of the program is found in the loop() function. Audio samples are read from the microphone, and the frequency of the input signal is calculated.

- The closest matching frequency in a predefined map is searched, and the corresponding RGB values for that frequency are extracted.

- The extracted RGB values are assigned to the LED.

- The calculated frequency and RGB values are displayed on the serial monitor.

- After each sampling interval, the program resets the necessary variables and waits until the start of the next sampling interval.

- The delay(16) call introduces a 16 ms pause to avoid excessive processing.
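The source does not state how the frequency of the input signal is calculated in loop(). One simple option that fits an 8-bit microcontroller is to count rising zero crossings around the mean of a block of samples. The portable C++ sketch below shows that approach; it is an assumption, not the published firmware, and `estimateFrequencyHz` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: estimates the dominant frequency (Hz) of a block of
// ADC samples by counting rising crossings of the block mean.
double estimateFrequencyHz(const std::vector<int>& samples, double sampleRateHz) {
    assert(sampleRateHz > 0.0);
    if (samples.size() < 2) return 0.0;

    // Remove the DC bias of the microphone amplifier output.
    double mean = 0.0;
    for (int s : samples) mean += s;
    mean /= static_cast<double>(samples.size());

    // Count transitions from below the mean to at-or-above the mean.
    std::size_t risingCrossings = 0;
    for (std::size_t i = 1; i < samples.size(); ++i) {
        if (samples[i - 1] < mean && samples[i] >= mean) ++risingCrossings;
    }

    // Roughly one rising crossing per period; the block spans N - 1 intervals.
    double durationS = static_cast<double>(samples.size() - 1) / sampleRateHz;
    return static_cast<double>(risingCrossings) / durationS;
}
```

Zero-crossing counting is cheap on an ATmega328P but is sensitive to noise and harmonics; autocorrelation or FFT-based estimators are more robust alternatives at a higher computational cost.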

Figure 4 shows a flowchart of the loop() function of the proposed program. The program visualizes sound by mapping the measured frequency to the corresponding RGB values and displaying them on the RGB LED. It continuously reads audio samples, calculates the frequency, and updates the LED color accordingly, while the serial monitor provides real-time feedback on the frequency and RGB values.
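The nearest-frequency lookup in the predefined map can be sketched as a linear scan over a table of (frequency, RGB) entries. The table values below are placeholders for illustration only and are not the values of Table 1; `ColorEntry`, `kColorMap`, and `nearestColor` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

struct ColorEntry {
    double frequencyHz;
    std::uint8_t r, g, b;
};

// PLACEHOLDER entries for illustration only; the real values are in Table 1.
constexpr std::array<ColorEntry, 4> kColorMap{{
    {100.0, 255, 0, 0},
    {200.0, 0, 255, 0},
    {400.0, 0, 0, 255},
    {800.0, 255, 255, 255},
}};

// Hypothetical helper: returns the entry whose frequency is closest to the
// measured one, using a linear scan suited to a small table on an 8-bit MCU.
template <std::size_t N>
const ColorEntry& nearestColor(const std::array<ColorEntry, N>& map, double measuredHz) {
    static_assert(N > 0, "color map must not be empty");
    assert(measuredHz >= 0.0);
    std::size_t best = 0;
    for (std::size_t i = 1; i < N; ++i) {
        if (std::fabs(map[i].frequencyHz - measuredHz) <
            std::fabs(map[best].frequencyHz - measuredHz)) {
            best = i;
        }
    }
    return map[best];
}
```

Because the comparison is strict, a frequency exactly halfway between two entries resolves to the lower one; frequencies outside the table map to its first or last entry.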

Figure 4.

Flowchart of the loop function of the proposed program.

3.1.4. Description of the Developed Device and Its Key Components

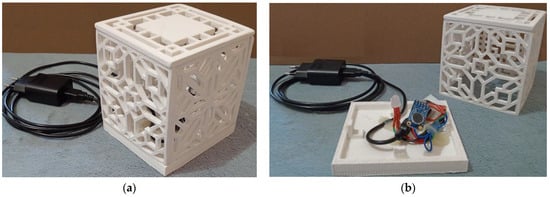

Figure 5 shows a general view of the developed device, whose enclosure has decorative sides. At its core is a single-board microcomputer that coordinates the functions of and interactions between the individual components. The microphone is positioned to capture sound data with sufficient precision, and the RGB LED performs the visualization: the microcomputer processes the audio data received from the microphone and translates it into colors displayed by the RGB LED.

Figure 5.

Developed device—general view. (a) Outside view; (b) internal components.

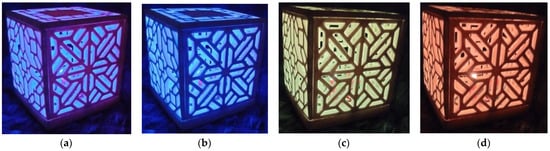

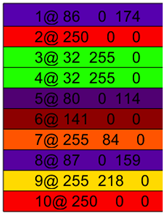

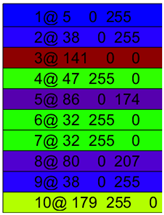

3.1.5. Color Visualization of Sound Frequencies from the Proposed Device

Figure 6 shows the color visualization of sound by the device proposed in this work.

Figure 6.

Visualization of sound by color. (a) F = 215 Hz, RGB = [134 0 67], pink color; (b) F = 710 Hz, RGB = [36 0 179], blue color; (c) F = 457 Hz, RGB = [179 143 0], yellow color; (d) F = 436 Hz, RGB = [179 71 0], orange color.

The frequency of 215 Hz lies below the first standard frequency (370 Hz), so the nearest entry in the frequency table is the first standard color, and the resulting color is pink.

The frequency of 710 Hz falls between the twelfth (699 Hz) and thirteenth (740 Hz) standard frequencies. Since it is closer to the twelfth, the resulting color is blue.

The frequency of 457 Hz falls between the fourth (440 Hz) and fifth (466 Hz) standard frequencies. Since it is closer to the fifth, the resulting color is yellow.

The frequency of 436 Hz falls between the third (415 Hz) and fourth (440 Hz) standard frequencies. Since it is closer to the fourth, the resulting color is orange. In each case, the displayed RGB values are exactly those of the nearest standard color in Listing A1.

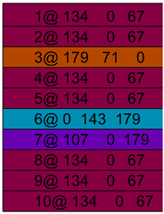

3.1.6. Comparative Analysis of Color Representation at Different Sound Frequencies

The colors produced by the proposed device were compared with those of an online tool. The measurement data are presented in Appendix B and cover typical urban sound sources such as music, the movement of people and cars, and conversations.

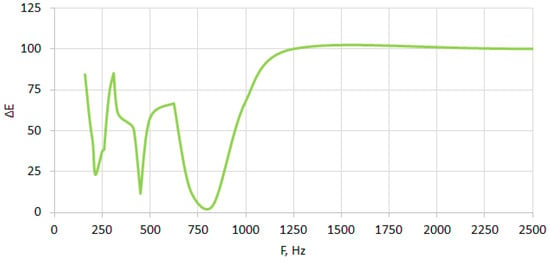

Figure 7 shows a plot of the color difference ΔE at different frequencies between the proposed device and an online calculator.

Figure 7.

Color difference between proposed device and online calculator.
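The section does not state which ΔE formula underlies Figure 7. As one possible choice, assumed here purely for illustration, the sketch below computes the CIE76 difference: both RGB triples are treated as 8-bit sRGB, converted to CIELAB with the D65 reference white, and compared by Euclidean distance. The names `rgbToLab` and `deltaE76` are hypothetical, and other formulas (for example CIEDE2000) would give different values.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Lab = std::array<double, 3>;  // L*, a*, b*

// sRGB transfer function: 8-bit channel value to linear light.
double srgbToLinear(int c) {
  const double v = c / 255.0;
  return v <= 0.04045 ? v / 12.92 : std::pow((v + 0.055) / 1.055, 2.4);
}

// CIELAB companding function f(t).
double labF(double t) {
  const double d = 6.0 / 29.0;
  return t > d * d * d ? std::cbrt(t) : t / (3.0 * d * d) + 4.0 / 29.0;
}

// sRGB -> XYZ (D65) -> CIELAB.
Lab rgbToLab(int r, int g, int b) {
  const double R = srgbToLinear(r), G = srgbToLinear(g), B = srgbToLinear(b);
  const double X = 0.4124564 * R + 0.3575761 * G + 0.1804375 * B;
  const double Y = 0.2126729 * R + 0.7151522 * G + 0.0721750 * B;
  const double Z = 0.0193339 * R + 0.1191920 * G + 0.9503041 * B;
  const double fx = labF(X / 0.95047), fy = labF(Y / 1.0), fz = labF(Z / 1.08883);
  return {116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)};
}

// CIE76 color difference: Euclidean distance between the two Lab colors.
double deltaE76(const std::array<int, 3>& rgb1, const std::array<int, 3>& rgb2) {
  const Lab p = rgbToLab(rgb1[0], rgb1[1], rgb1[2]);
  const Lab q = rgbToLab(rgb2[0], rgb2[1], rgb2[2]);
  const double dL = p[0] - q[0], da = p[1] - q[1], db = p[2] - q[2];
  return std::sqrt(dL * dL + da * da + db * db);
}
```

With this definition, black and white are about 100 units apart and identical colors give 0, so values around 100, as reported at some frequencies, correspond to very different colors.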

Large color differences between the proposed device and the online tool (ΔE of around 100 or more) are observed at 160 Hz, 185 Hz, 275 Hz, 290 Hz, 380 Hz, 415 Hz, 500 Hz, 625 Hz, 1000 Hz, 1250 Hz, 2500 Hz, 5000 Hz, and 5100 Hz, a set that includes every tested frequency of 1000 Hz and above. In these cases, the device’s colors differ significantly from the online tool’s calculations.

Small color differences (ΔE around or close to 0) are observed at 205 Hz, 215 Hz, 260 Hz, 310 Hz, 330 Hz, 450 Hz, 625 Hz, and 830 Hz, all of which are below 1000 Hz. Here, the device’s colors closely match those rendered by the online tool.

The observed results can be attributed to differences between the proposed device and the online calculator in the algorithms and methods used to convert sound frequency to color.

At high frequencies (above 1000 Hz), the significant color differences (around 100 or more) may arise from differences in the signal processing and mathematical models used by the two systems. The algorithms in the proposed device may be sensitive to certain frequency ranges, which would cause its color representation to deviate from the online tool’s calculations.
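One possible contributing factor, offered here as a hypothesis rather than a finding of the study, is the nominal sampling configuration in Listing A1. A sampling interval of 1 ms gives a sampling frequency of 1000 Hz, so signal components above the Nyquist limit of 500 Hz cannot be represented without aliasing, and the estimate `samplingFrequency / peakIndex`, with `peakIndex` between 1 and 31, can only take the discrete values 1000/k Hz. The sketch below computes these quantities from the listing’s constants; the function names `nyquistHz` and `possibleEstimatesHz` are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Nominal sampling parameters as declared in Listing A1.
constexpr int kSampleSize = 32;
constexpr int kSamplingIntervalMs = 1;
constexpr double kSamplingFrequencyHz = 1000.0 / kSamplingIntervalMs;

// Highest frequency representable without aliasing (Nyquist limit).
constexpr double nyquistHz() { return kSamplingFrequencyHz / 2.0; }

// Every value the estimate samplingFrequency / peakIndex can take,
// with peakIndex running from 1 to kSampleSize - 1 as in the listing.
std::vector<double> possibleEstimatesHz() {
  std::vector<double> estimates;
  for (int k = 1; k < kSampleSize; ++k) {
    estimates.push_back(kSamplingFrequencyHz / k);
  }
  return estimates;
}
```

Under these nominal parameters, no estimate falls strictly between 500 Hz and 1000 Hz, and the next value below 500 Hz is about 333 Hz.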

On the other hand, at low frequencies, the relatively small differences (around or close to 0) can be attributed to the algorithms being more accurate and consistent within this frequency range. The mathematical computations and signal processing methods employed in both systems may align more closely at lower frequencies, resulting in similar color outputs.

Different devices and software tools might utilize various mathematical models, color spaces, and calibration techniques, leading to discrepancies in the color representation of sound frequencies. Addressing these technical differences can be crucial to refining the proposed device and achieving better alignment with existing tools or standards.

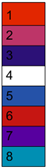

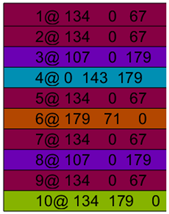

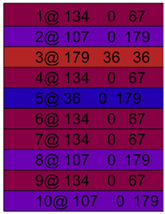

Table 4 compares the colors obtained at different sound frequencies using the proposed device, an online calculator, and the S2 variant. The RGB component values for all compared sources are listed in Appendix C. The proposed device produces distinct colors for the given frequencies, which differ noticeably from those of the S2 variant and the online calculator. By contrast, most of the other scales render closely spaced frequencies as shades of the same color; S6 is an example. Despite the differences in the actual RGB values, the proposed device, the online calculator, and the S2 variant follow similar trends in color change across the frequency range, which suggests a consistent pattern among them. Some frequencies may not be reproduced accurately by some scales, as S4 shows.

Table 4.

Comparison of colors between proposed device and literature sources.

Table 5 presents the uncertainty and quality assurance data for the device proposed in this work.

Table 5.

Determining uncertainty and quality assurance of the proposed device.

3.1.7. Determining Uncertainty and Quality Assurance for the Proposed Device

Determining the uncertainty of the color assigned to a given sound frequency involves measuring multiple samples and calculating the variability of the results. Relative to the online calculator, the variability is 21 at low frequencies and increases to 39 at high frequencies. Likewise, the average color difference (ΔE) from the online calculator is 52 at low frequencies and 79 at high frequencies. These values indicate the level of uncertainty and the potential errors in the device’s color reproduction.
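The section reports variability and mean ΔE values but does not define the variability statistic. The sketch below assumes it is the sample standard deviation of repeated ΔE measurements; the `Summary` struct and `summarize` function are hypothetical, and the values in the usage note are illustrative, not the study’s data.

```cpp
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

// Mean and sample standard deviation of a set of ΔE measurements.
struct Summary {
  double mean;
  double stdDev;  // uses the n - 1 denominator
};

// Hypothetical helper: summarizes repeated ΔE measurements.
Summary summarize(const std::vector<double>& values) {
  assert(values.size() >= 2 && "need at least two measurements");
  const double n = static_cast<double>(values.size());
  const double mean = std::accumulate(values.begin(), values.end(), 0.0) / n;
  double sumSq = 0.0;
  for (double v : values) {
    sumSq += (v - mean) * (v - mean);
  }
  return {mean, std::sqrt(sumSq / (n - 1.0))};
}
```

For example, `summarize({1, 2, 3, 4, 5})` returns a mean of 3 and a standard deviation of about 1.58. A population standard deviation (denominator n) would give a slightly smaller value.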

Measuring and monitoring quality assurance aspects ensures that standard operating procedures are followed, quality control measures are in place, traceability is maintained, and user feedback is incorporated for continuous improvement and effective support.

3.2. Discussion

The results obtained in the present work complement and refine those reported in the literature. The proposed device is distinguished by its precision, automation, strong performance at low frequencies, range of applications, user-centric design, and clearly identified directions for future research. These advantages make it a promising innovation with significant potential to improve accessibility and communication for individuals with hearing difficulties.

The device developed in this work meets the requirements for implementing sound visualization through color proposed by Vickery [6] and by Rushton and Kossyvaki [3].

The present work addresses the limitations identified by James et al. [5]: subjectivity in color perception, the complexity of designing a mapping system, the need for a large-scale database, challenges in genre classification, and a limited scope of application outside music. Because the proposed device uses a fixed number of colors, its color scheme is convenient and easy to learn for people with auditory perception problems.

Azar et al. [7], Di Pasquale [8], and Ox [4] address sound visualization through color at a theoretical level. An advantage of the present work is that it provides a software and hardware implementation of these principles.

The implemented device is portable and affordable. It addresses the limitations of the system developed by Frid et al. [10], which required a specially adapted room for visualizing sound through color, in terms of wearability, technical requirements, and accessibility barriers. The proposed device therefore has the potential to give people with disabilities in different cities or countries easier access to technical means of visualizing sound through color.

Because the device is portable and affordable, it avoids the limitations associated with dedicated rooms and high-cost equipment. For example, in Bulgaria, the hardware components used in the proposed device (excluding the decorative case) cost a total of EUR 40 as of March 2023. This increases accessibility and allows the device to be used in a variety of environments, regardless of the users’ location or financial constraints.

In conventional systems, the colors corresponding to sound frequencies span the entire visible spectrum, producing many shades of the same color. This abundance of hues is a significant obstacle for people with hearing impairments, because it makes it difficult to associate colors reliably with specific changes in sound frequency. Instead of covering the entire visible spectrum, the device developed in this work uses a carefully selected set of colors tailored to the needs of people with hearing difficulties. When sound is detected, the device’s algorithm determines its frequency and selects the color assigned to the nearest reference frequency. Because the set of colors is limited, users can easily learn and remember the associations between colors and sound frequencies. This simplifies learning and allows people with hearing impairments to interpret and respond to auditory information accurately in real time.

Portability and potential accessibility barriers are critical considerations. Designing the device with user comfort, ease of use, and accessibility in mind makes it more inclusive and user-friendly. This includes attention to factors such as device size, weight, user interface, and compatibility with assistive technology.

4. Conclusions

This paper proposes and tests a device that converts the frequency of sound waves to color accurately and reliably.

A performance test was carried out, and the measurement and visualization accuracy of the device was evaluated against existing sound-to-color conversion methods. The device was found to provide sufficiently accurate and reliable conversion compared with conventional methods of visualizing sound through color.

Dedicated software tools were developed to facilitate the analysis and visualization of sound frequencies as color. These tools implement the procedures proposed in this study and convert sound frequencies into the corresponding colors.

A device was constructed to automate the process of converting sound frequencies into color. The accuracy of this system was rigorously evaluated through experimental testing. The evaluation aimed to assess the precision and reliability of the frequency-to-color conversion achieved by the system.

The average color difference (ΔE) between the proposed device and the online calculator ranged from 52 to 79, depending on the sound frequency. The largest differences from other sound-to-color conversion methods occur at high frequencies (above 1000 Hz).

Because the proposed device converts sound to color using a precisely defined mathematical apparatus, the process can be standardized. The algorithms, procedures, and hardware proposed in this work can therefore be used to support people with hearing difficulties.

This work should continue with research aimed at generalizing the models for the conversion of sound frequencies to color.

Author Contributions

Conceptualization, Z.Z. and D.O.; methodology, Z.Z.; software, Z.Z. and G.S.; validation, J.I., N.A. and Z.Z.; formal analysis, D.O.; investigation, Z.Z. and G.S.; resources, J.I.; data curation, Z.Z. and N.A.; writing (original draft preparation), Z.Z.; writing (review and editing), Z.Z. and D.O.; visualization, Z.Z. and J.I.; supervision, Z.Z. and D.O.; project administration, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All of the data is contained within the article.

Acknowledgments

This work was administratively and technically supported by the Bulgarian national program “Development of scientific research and innovation at Trakia University in the service of health and sustainable well-being” (BG-RRP-2.004-006-C02).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| AMP | Acoustic Monitoring Protocol |
| IAT | Implicit Association Test |
| LED | Light-emitting diode |
| OC | Online calculator |
| PC | Personal computer |
| PD | Proposed device |
| RGB | Red, green, and blue (RGB color model) |
| S.A.R.A. | Synesthetic Augmented Reality Application |
| SII | Speech Intelligibility Index |
| SNHL | Sensorineural hearing loss |
| SOP | Standard operating procedure |
| USB | Universal serial bus |

Appendix A

Listing A1. Program for converting sound frequency to RGB values.

```cpp
#include <FastLED.h>

#define LED_PIN 5
#define NUM_LEDS 3

int r, g, b;
CRGB leds[NUM_LEDS];

const int microphonePin = A5;
const int sampleSize = 32;
const int samplingInterval = 1;                              // ms
const int samplingDuration = sampleSize * samplingInterval;  // ms
const int samplingFrequency = 1000 / samplingInterval;       // Hz

unsigned long startTime = 0;
int sampleIndex = 0;
int samples[sampleSize];

// Frequency to RGB mapping
const int numFrequencies = 13;
const int frequencies[numFrequencies] = {370, 392, 415, 440, 466, 493, 523, 554, 587, 622, 659, 699, 740};
const int colors[numFrequencies][3] = {
  {134, 0, 67}, {179, 0, 89}, {179, 36, 36}, {179, 71, 0}, {179, 143, 0},
  {134, 179, 0}, {71, 179, 0}, {0, 179, 36}, {0, 179, 107}, {0, 143, 179},
  {0, 71, 179}, {36, 0, 179}, {107, 0, 179}
};

void setup() {
  Serial.begin(9600);
  pinMode(microphonePin, INPUT);
  FastLED.addLeds<WS2812, LED_PIN, GRB>(leds, NUM_LEDS);
}

void loop() {
  if (sampleIndex < sampleSize) {
    // Read audio samples from the microphone
    samples[sampleIndex] = analogRead(microphonePin);
    sampleIndex++;
  } else {
    // Calculate the peakIndex: position of the largest sample (index 0 is skipped)
    int peakIndex = 1;
    int peakValue = samples[peakIndex];
    for (int i = 2; i < sampleSize; i++) {
      if (samples[i] > peakValue) {
        peakIndex = i;
        peakValue = samples[i];
      }
    }
    // Floating-point division avoids truncating the result
    double frequency = (double)samplingFrequency / peakIndex;

    // Find the closest frequency in the mapping
    int closestIndex = 0;
    double smallestDifference = fabs(frequency - frequencies[0]);
    for (int i = 1; i < numFrequencies; i++) {
      double difference = fabs(frequency - frequencies[i]);
      if (difference < smallestDifference) {
        closestIndex = i;
        smallestDifference = difference;
      }
    }

    r = colors[closestIndex][0];
    g = colors[closestIndex][1];
    b = colors[closestIndex][2];
    leds[0] = CRGB(g, r, b);  // channels passed in (g, r, b) order
    FastLED.show();

    // Print the frequency and RGB values to the serial monitor
    Serial.print("Frequency: ");
    Serial.print(frequency);
    Serial.print(" Hz\tRGB: ");
    Serial.print(r);
    Serial.print(", ");
    Serial.print(g);
    Serial.print(", ");
    Serial.println(b);

    // Reset variables for the next sampling interval
    sampleIndex = 0;
    startTime = millis();
  }

  // Wait until the sampling interval is complete
  while (millis() - startTime < samplingInterval) {
    // Do nothing
  }
  delay(16);
}
```

Appendix B

Colors generated from device and online calculator from different sound sources.

Table A1.

Colors generated from device and online calculator for music sounds.


| № | F, Hz | Device R | Device G | Device B | Online tool R | Online tool G | Online tool B |
|---|---|---|---|---|---|---|---|
| 1 | 205 | 134 | 0 | 67 | 86 | 0 | 174 |
| 2 | 250 | 134 | 0 | 67 | 250 | 0 | 0 |
| 3 | 1250 | 107 | 0 | 179 | 32 | 255 | 0 |
| 4 | 625 | 0 | 143 | 179 | 32 | 255 | 0 |
| 5 | 215 | 134 | 0 | 67 | 80 | 0 | 114 |
| 6 | 450 | 179 | 71 | 0 | 141 | 0 | 0 |
| 7 | 260 | 134 | 0 | 67 | 255 | 84 | 0 |
| 8 | 830 | 107 | 0 | 179 | 87 | 0 | 159 |
| 9 | 275 | 134 | 0 | 67 | 255 | 218 | 0 |
| 10 | 500 | 134 | 179 | 0 | 250 | 0 | 0 |

Table A2.

Colors generated from device and online calculator for urban sounds.


| № | F, Hz | Device R | Device G | Device B | Online tool R | Online tool G | Online tool B |
|---|---|---|---|---|---|---|---|
| 1 | 185 | 134 | 0 | 67 | 5 | 0 | 255 |
| 2 | 380 | 134 | 0 | 67 | 38 | 0 | 255 |
| 3 | 450 | 179 | 71 | 0 | 141 | 0 | 0 |
| 4 | 310 | 134 | 0 | 67 | 47 | 255 | 0 |
| 5 | 205 | 134 | 0 | 67 | 86 | 0 | 174 |
| 6 | 625 | 0 | 143 | 179 | 32 | 255 | 0 |
| 7 | 5000 | 107 | 0 | 179 | 32 | 255 | 0 |
| 8 | 200 | 134 | 0 | 67 | 80 | 0 | 207 |
| 9 | 380 | 134 | 0 | 67 | 38 | 0 | 255 |
| 10 | 290 | 134 | 0 | 67 | 179 | 255 | 0 |

Table A3.

Colors generated from device and online calculator for sounds from conversations.


| № | F, Hz | Device R | Device G | Device B | Online tool R | Online tool G | Online tool B |
|---|---|---|---|---|---|---|---|
| 1 | 260 | 134 | 0 | 67 | 255 | 84 | 0 |
| 2 | 2500 | 107 | 0 | 179 | 32 | 255 | 0 |
| 3 | 415 | 179 | 36 | 36 | 87 | 0 | 159 |
| 4 | 330 | 134 | 0 | 67 | 0 | 255 | 239 |
| 5 | 710 | 36 | 0 | 179 | 0 | 85 | 255 |
| 6 | 380 | 134 | 0 | 67 | 38 | 0 | 255 |
| 7 | 310 | 134 | 0 | 67 | 47 | 255 | 0 |
| 8 | 5100 | 107 | 0 | 179 | 0 | 255 | 18 |
| 9 | 160 | 134 | 0 | 67 | 0 | 255 | 43 |
| 10 | 1000 | 107 | 0 | 179 | 250 | 0 | 0 |

Appendix C

Table A4.

Color values at different frequencies. Comparison with literature sources.


| F, Hz | Component | S1, 1910 | S2, 1911 | S3, 1930 | S4, 1940 | S5, 1944 | S6, 2004 | OC | PD |
|---|---|---|---|---|---|---|---|---|---|
| 262 | R | 255 | 255 | 207 | 236 | 255 | 217 | 255 | 179 |
| | G | 255 | 84 | 21 | 16 | 11 | 199 | 103 | 0 |
| | B | 42 | 0 | 0 | 0 | 0 | 27 | 0 | 89 |
| 277 | R | 0 | 162 | 247 | 254 | 255 | 35 | 255 | 179 |
| | G | 159 | 43 | 6 | 232 | 99 | 122 | 234 | 36 |
| | B | 46 | 130 | 29 | 237 | 54 | 24 | 0 | 36 |
| 294 | R | 13 | 200 | 255 | 248 | 255 | 51 | 151 | 179 |
| | G | 150 | 248 | 125 | 98 | 120 | 149 | 255 | 71 |
| | B | 147 | 45 | 4 | 0 | 0 | 109 | 0 | 0 |
| 311 | R | 25 | 79 | 211 | 255 | 255 | 60 | 41 | 179 |
| | G | 113 | 103 | 100 | 212 | 223 | 59 | 255 | 143 |
| | B | 174 | 138 | 19 | 206 | 38 | 106 | 0 | 0 |
| 330 | R | 129 | 13 | 173 | 255 | 255 | 137 | 0 | 134 |
| | G | 41 | 60 | 194 | 254 | 255 | 64 | 255 | 179 |
| | B | 135 | 174 | 67 | 22 | 10 | 108 | 239 | 0 |
| 349 | R | 225 | 170 | 189 | 255 | 223 | 221 | 0 | 71 |
| | G | 41 | 29 | 168 | 255 | 255 | 73 | 125 | 179 |
| | B | 146 | 42 | 1 | 216 | 66 | 113 | 255 | 0 |
| 370 | R | 174 | 69 | 27 | 39 | 29 | 108 | 5 | 0 |
| | G | 39 | 19 | 112 | 168 | 172 | 48 | 0 | 179 |
| | B | 108 | 131 | 64 | 68 | 44 | 78 | 255 | 36 |
| 392 | R | 245 | 240 | 74 | 0 | 0 | 123 | 69 | 0 |
| | G | 32 | 113 | 147 | 142 | 163 | 45 | 0 | 179 |
| | B | 15 | 29 | 84 | 109 | 121 | 29 | 234 | 107 |
| 415 | R | 226 | 189 | 44 | 200 | 37 | 198 | 87 | 0 |
| | G | 38 | 53 | 17 | 219 | 82 | 22 | 0 | 143 |
| | B | 0 | 104 | 119 | 231 | 168 | 18 | 159 | 179 |
| 440 | R | 224 | 54 | 66 | 66 | 115 | 236 | 116 | 0 |
| | G | 32 | 142 | 47 | 104 | 37 | 89 | 0 | 71 |
| | B | 0 | 69 | 106 | 178 | 155 | 11 | 0 | 179 |
| 466 | R | 255 | 107 | 181 | 88 | 186 | 240 | 178 | 36 |
| | G | 27 | 106 | 57 | 26 | 20 | 200 | 0 | 0 |
| | B | 0 | 113 | 134 | 131 | 139 | 131 | 0 | 179 |
| 494 | R | 255 | 66 | 117 | 134 | 165 | 237 | 238 | 107 |
| | G | 119 | 121 | 42 | 22 | 5 | 217 | 0 | 0 |
| | B | 0 | 173 | 108 | 116 | 87 | 40 | 0 | 179 |

References

1. Scheibler, R.; Ono, N. Blinkies: Open Source Sound-to-Light Conversion Sensors for Large-Scale Acoustic Sensing and Applications. IEEE Access 2020, 8, 67603–67616.

2. Rushton, R.; Kossyvaki, L. The Role of Music within the Home-Lives of Young People with Profound and Multiple Learning Disabilities: Parental Perspectives. Br. J. Learn. Disabil. 2022, 50, 29–40.

3. Rushton, R.; Kossyvaki, L. Using Musical Play with Children with Profound and Multiple Learning Disabilities at School. Br. J. Spec. Educ. 2020, 47, 489–509.

4. Ox, J. Color Systems are Categories that Carry Meaning in Visualizations: A Conceptual Metaphor Theory Approach. Electron. Imaging 2016, 16, 1–9.

5. James, Z.; Robins, M.; Shaher, E. Music to Color Barcode. Rochester Projects 2019. Available online: https://hajim.rochester.edu/ece/sites/zduan/teaching/ece472/projects/2019/MusicToColorBarcode.pdf (accessed on 21 May 2023).

6. Vickery, L. Some approaches to representing sound with colour and shape. In Proceedings of the 4th International Conference on Technologies for Music Notation and Representation, Montreal, QC, Canada, 24–26 May 2018; pp. 165–173.

7. Azar, J.; Saleh, H.; Al-Alaoui, M. Sound Visualization for the Hearing Impaired. iJET Int. J. Emerg. Technol. Learn. 2007, 2, 1–7.

8. Di Pasquale, P. CHROMOSCALE Unique Language for Sounds Colors and Numbers. Chromoscale and Chromotones, Sound & Color and Base 7. 2013. Available online: https://www.academia.edu/2401099/CHROMOSCALE_Unique_Language_for_Sounds_Colors_and_Numbers (accessed on 3 June 2023).

9. Benitez, M.; Vogl, M. S.A.R.A.: Synesthetic augmented reality application. In Proceedings of the Shapeshifting Conference, Auckland, New Zealand, 14–16 April 2014; pp. 1–20.

10. Frid, E.; Panariello, C.; Núñez-Pacheco, C. Customizing and Evaluating Accessible Multisensory Music Experiences with Pre-Verbal Children–A Case Study on the Perception of Musical Haptics Using Participatory Design with Proxies. Multimodal Technol. Interact. 2022, 6, 55.

11. Licitra, G.; Artuso, F.; Bernardini, M.; Moro, A.; Fidecaro, F.; Fredianelli, L. Acoustic Beamforming Algorithms and Their Applications in Environmental Noise. Curr. Pollut. Rep. 2023, 9, 327.

12. Chen, N.; Tanaka, K.; Namatame, M.; Watanabe, K. Color-Shape Associations in Deaf and Hearing People. Front. Psychol. 2016, 7, 355.

13. Bottari, D.; Nava, E.; Ley, P.; Pavani, F. Enhanced reactivity to visual stimuli in deaf individuals. Restor. Neurol. Neurosci. 2010, 28, 167–179.

14. Carbone, R.; Carbone, J. The Color of Music: Towards Hearing Impaired Enrichment with Cognitive AI/ML and Multi-Sensory Emotion Fusion. In Proceedings of the 23rd International Conference on Artificial Intelligence, Las Vegas, NV, USA, 26–29 July 2021.

15. Palmer, S.; Schloss, K.; Xu, Z.; Prado-León, L. Music–color associations are mediated by emotion. J. Psy. Cogn. Sci. 2012, 110, 8836–8841.

16. Brittney, L. Speech Production Tool for Children with Hearing Loss. All Grad. Plan B Other Rep. 2013, 279, 1–53.

17. Lerousseau, J.; Hidalgo, C.; Schön, D. Musical Training for Auditory Rehabilitation in Hearing Loss. J. Clin. Med. 2020, 9, 1058.

18. de Camargo, N.; Mendes, B.; de Albuquerque, B.; Novaes, C. Relationship between hearing capacity and performance on tasks of speech perception in children with hearing loss. CoDAS 2020, 32, e20180139.

19. Taherdoost, H. Data Collection Methods and Tools for Research; A Step-by-Step Guide to Choose Data Collection Technique for Academic and Business Research Projects. Int. J. Acad. Res. Manag. (IJARM) 2021, 10, 10–38.

20. Possolo, A. Simple Guide for Evaluating and Expressing the Uncertainty of NIST Measurement Results. In NIST Technical Note 1900; U.S. Department of Commerce, National Institute of Standards and Technology: Gaithersburg, MD, USA, 2015.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).