Face Validation of Database Forensic Investigation Metamodel

Abstract

1. Introduction

2. Background and Related Works

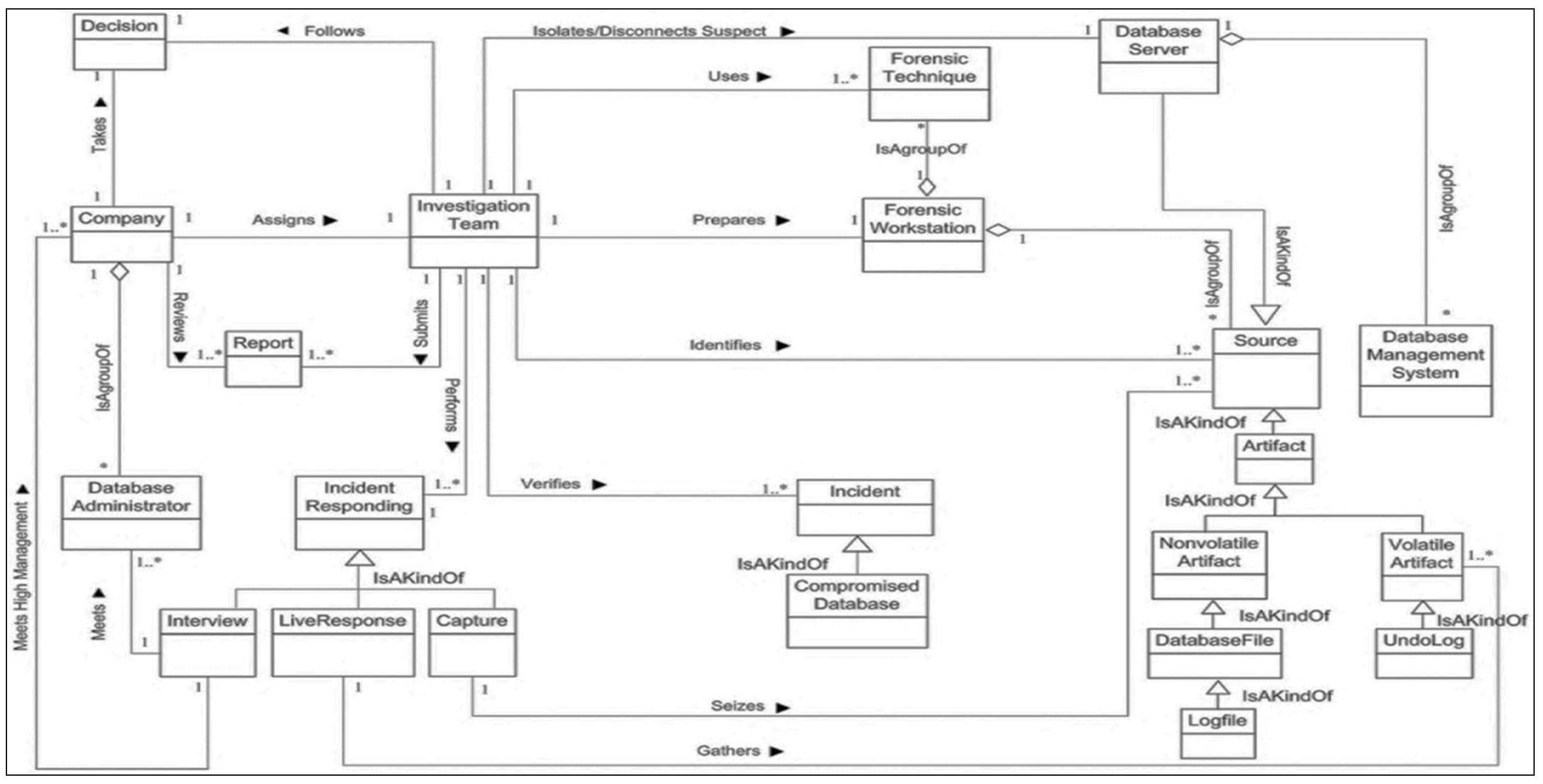

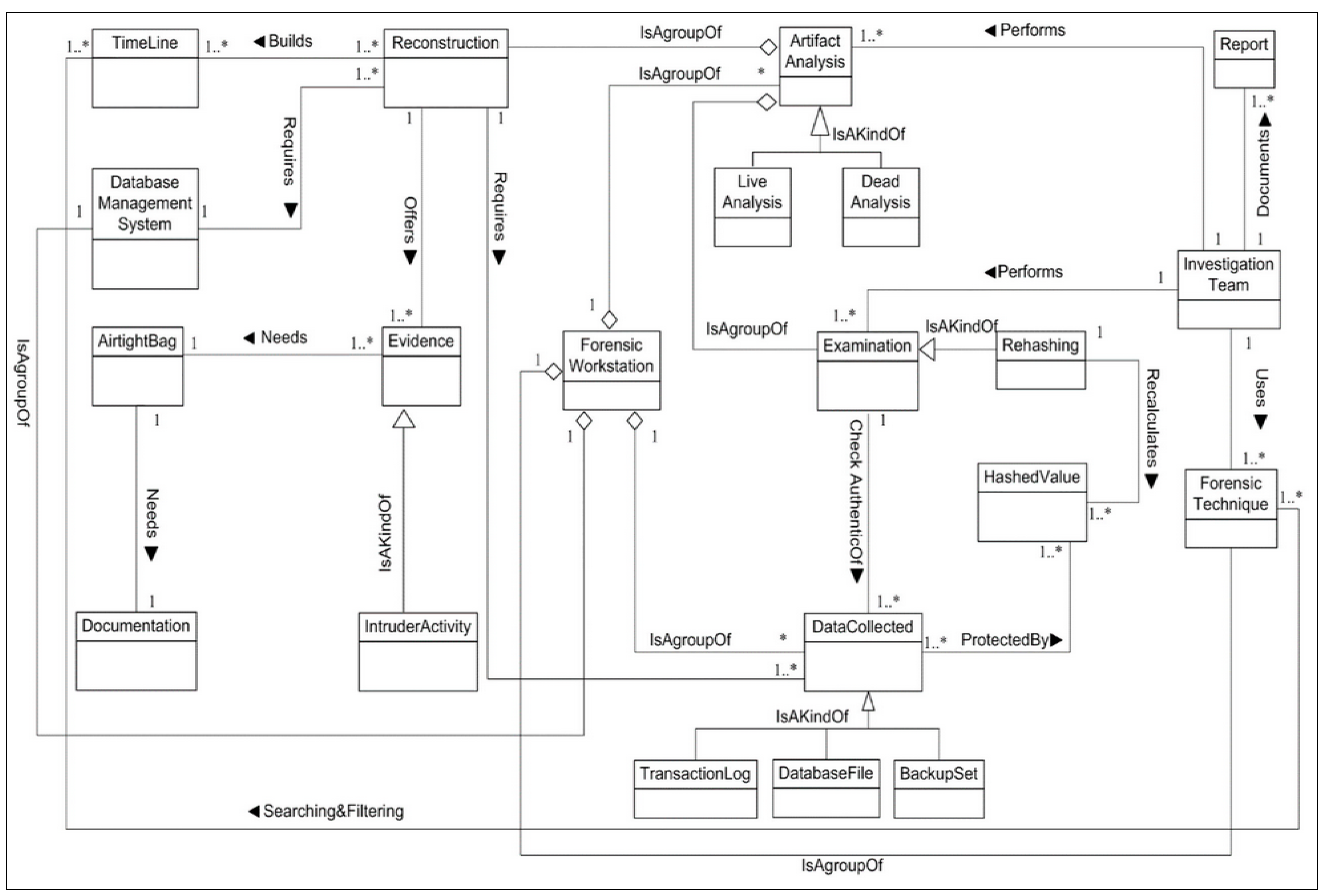

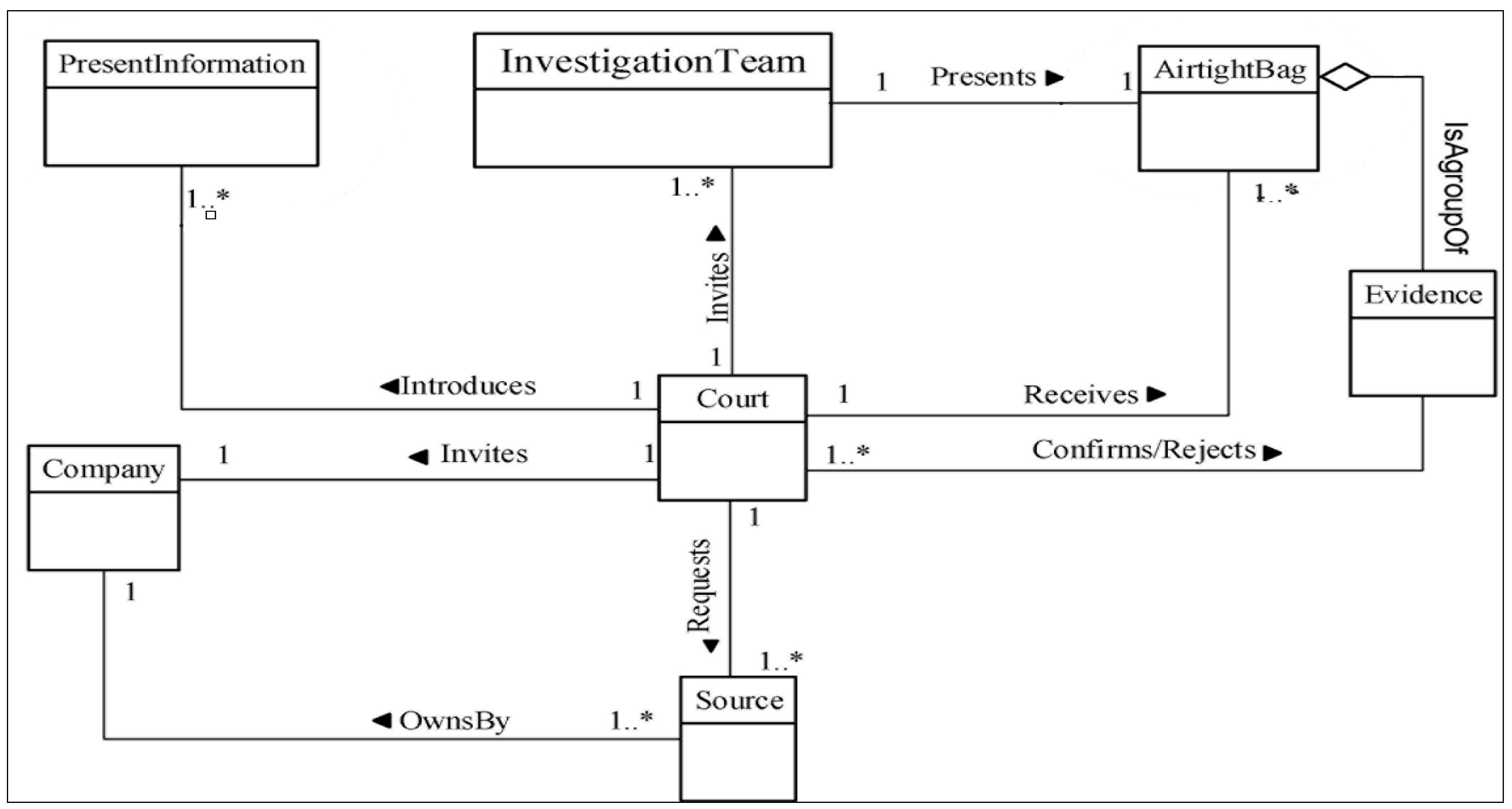

3. Database Forensic Investigation Metamodel

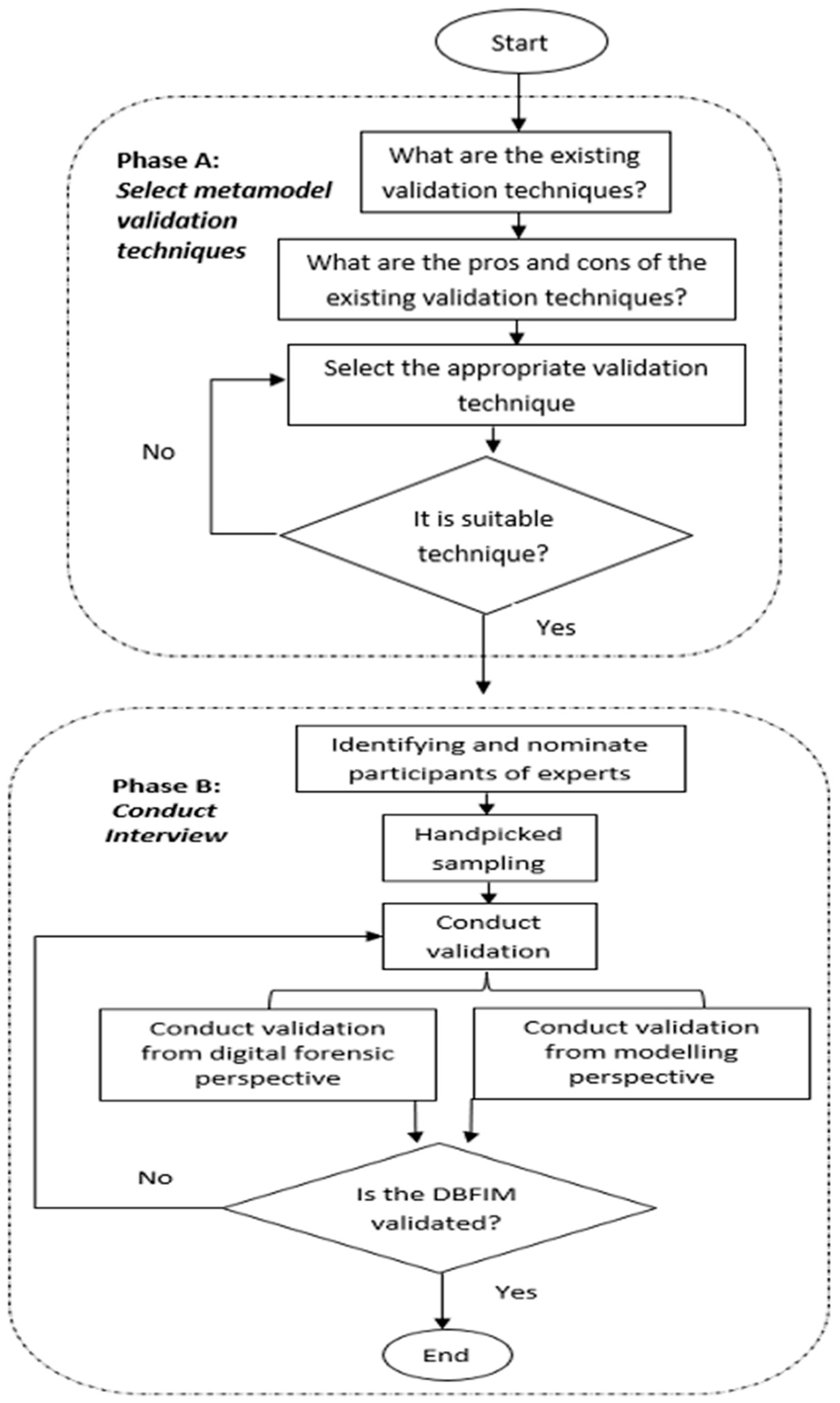

4. Methodology

4.1. Phase A (Figure 5a): Select Metamodel Validation Techniques

This phase aims to find the proper technique to validate the proposed DBFIM. To find the appropriate validation technique, this study must answer two questions:

- What are the existing metamodel validation techniques?

- What are the pros and cons of the existing metamodel validation techniques?

4.2. Phase B (Figure 5b): Conduct Interviews

This phase conducts interviews to validate the DBFIM. It consists of two steps: identification and selection of expert participants, and conduct of the validation.

4.2.1. Identification and Selection of Expert Participants

4.2.2. Conduct Validation

“This research is a very good one, and helpful for the lab to understand better about process involves in the DBFI domain. It includes major concepts of DBFI domain in a single model; hence facilitates fast understanding. Definitely, it’s useful for a digital forensic lab. On the other methodology per se, it is interesting in finding such a flow can help interpret the forensic investigation process in a much more precise understanding. This model is useful for Cyber Security Malaysia to explain concepts of DBFI to newly hired staff, as well as to the investigation team in Malaysia”.

“The proposed metamodel is able to facilitate the DBFI practitioners on creating the model based on their specific requirements. This could help in reducing the complexity of managing the process forensic investigation especially on acquiring and examining the evidence. By having this metamodel, it could reduce the misconception of the terms used during the investigation amongst DBFI users and practitioners in which they’re used to the same process of investigation. Also, DBFIM is important to assist forensic examiners to identify and investigate database related incidents in a systematic way that will be acceptable in the court of law. It could help on having a standard term and providing consistency definition on terms used in the investigation. Based on the proposed metamodel, it’s comprehensive enough for handling the DBFI”.

“As long as you follow adopted, adjusted and structured methods to establish metamodel from relevant or credible citations. Then it should be ok. The best solution to evaluate the DBFIM correctness, the student must be using application-based and proven syntactic and semantic model by successful prototyping”.

4.2.3. Conduct Validation of Developed DBFIM from a Modelling Perspective

- i. Correctness: evaluates how DBFIM is built and structured.

- ii. Relevance: evaluates whether the concepts and terminologies in DBFIM are relevant. Concepts without relevance may be eliminated or changed.

- iii. Economic efficiency: evaluates the efficiency of creating DBFIM (use and re-use of existing knowledge and solutions).

- iv. Clarity: evaluates how clear DBFIM is.
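The four criteria above can be made operational as a simple checklist. The following Python sketch is illustrative only: the names `Criterion`, `ExpertReview`, `summarize`, and `criteria_needing_revision` are hypothetical, and the study itself recorded expert feedback qualitatively rather than with any such tool. It assumes each expert gives a yes/no verdict per criterion and flags any criterion that at least one expert did not accept.

```python
from dataclasses import dataclass, field
from enum import Enum


class Criterion(Enum):
    """Modelling-perspective criteria used to validate DBFIM."""
    CORRECTNESS = "correctness"
    RELEVANCE = "relevance"
    ECONOMIC_EFFICIENCY = "economic efficiency"
    CLARITY = "clarity"


@dataclass
class ExpertReview:
    """One expert's accept/reject verdict (and optional comment) per criterion."""
    expert: str
    verdicts: dict[Criterion, bool] = field(default_factory=dict)
    comments: dict[Criterion, str] = field(default_factory=dict)


def summarize(reviews: list[ExpertReview]) -> dict[Criterion, tuple[int, int]]:
    """Map each criterion to (accepted, rated); an expert who did not rate it is skipped."""
    summary = {}
    for criterion in Criterion:
        rated = [r.verdicts[criterion] for r in reviews if criterion in r.verdicts]
        summary[criterion] = (sum(rated), len(rated))
    return summary


def criteria_needing_revision(reviews: list[ExpertReview]) -> list[Criterion]:
    """Criteria that at least one expert did not accept."""
    return [c for c, (accepted, rated) in summarize(reviews).items() if accepted < rated]


# HYPOTHETICAL example verdicts for illustration; these are not the study's data.
example_reviews = [
    ExpertReview("Expert A", {Criterion.CORRECTNESS: True, Criterion.CLARITY: True}),
    ExpertReview(
        "Expert B",
        {Criterion.CORRECTNESS: True, Criterion.CLARITY: False},
        {Criterion.CLARITY: "Relation names are ambiguous."},
    ),
]
```

With the hypothetical verdicts above, `criteria_needing_revision(example_reviews)` returns `[Criterion.CLARITY]`, pointing the modeller at the criterion whose comments should drive the next revision.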

5. Results of Validation

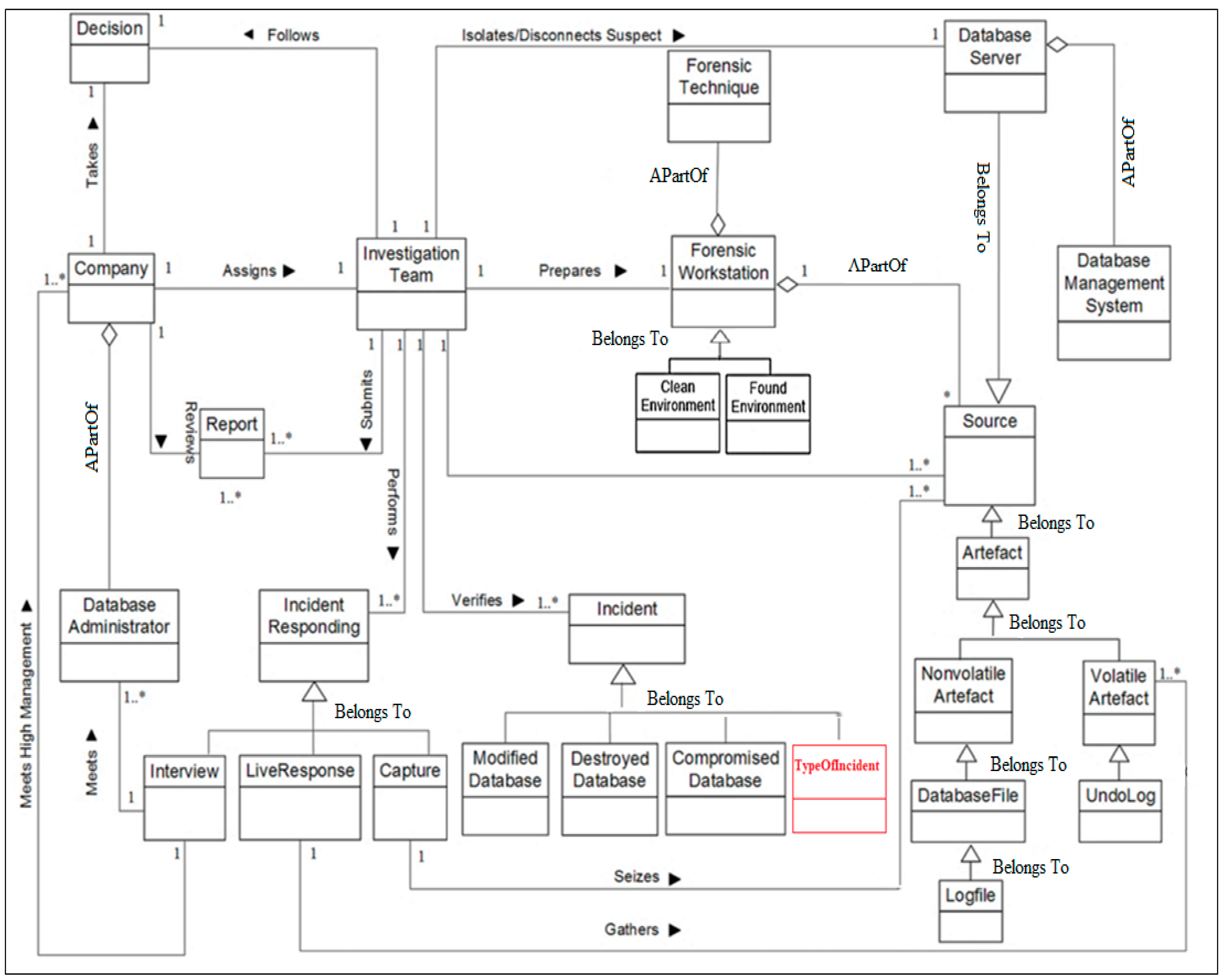

5.1. DBFIM Process Class 1: Identification DBFIM

5.2. DBFIM Process Class 2: Artifact Collection

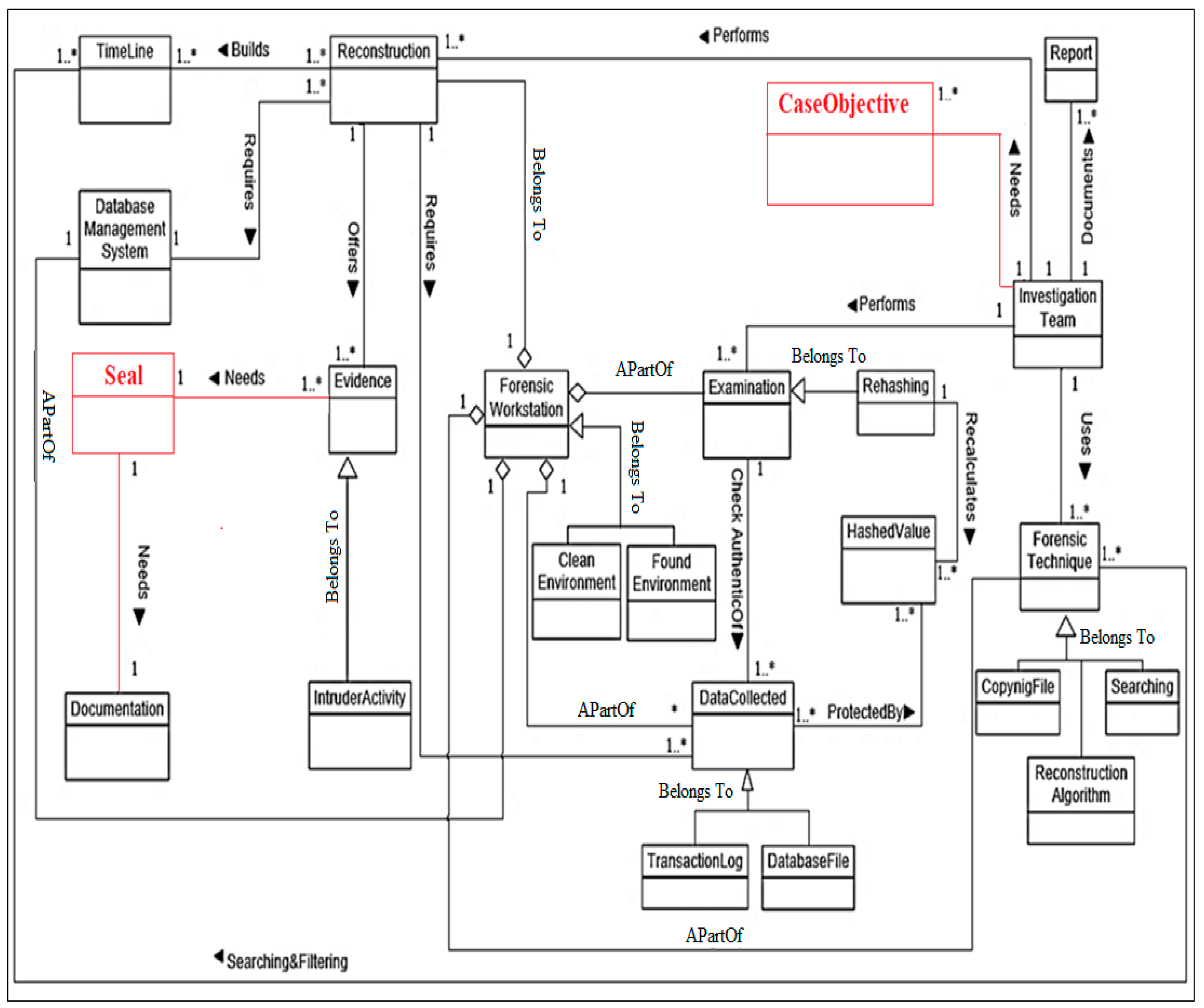

5.3. DBFIM Process Class 3: Artifact Analysis

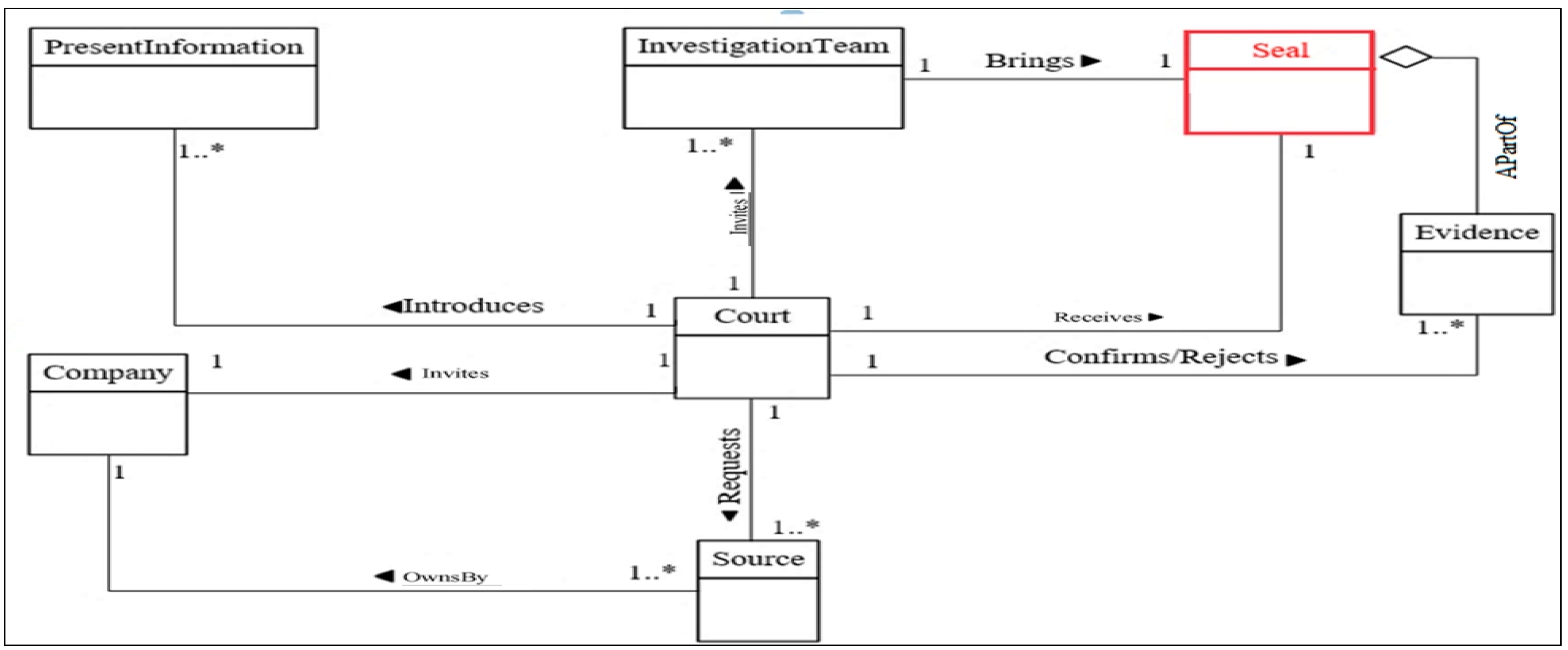

5.4. DBFIM Process Class 4: Documentation and Presentation

6. Discussion and Analysis

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Al-Dhaqm, A.M.R.; Othman, S.H.; Razak, S.A.; Ngadi, A. Towards adapting metamodelling technique for database forensics investigation domain. In Proceedings of the 2014 International Symposium on Biometrics and Security Technologies (ISBAST), Kuala Lumpur, Malaysia, 26–27 August 2014; pp. 322–327.

- Fasan, O.M.; Olivier, M.S. On Dimensions of Reconstruction in Database Forensics. In Proceedings of the Workshop on Digital Forensics & Incident Analysis (WDFIA 2012), Crete, Greece, 6–8 June 2012; pp. 97–106.

- Al-dhaqm, A.; Razak, S.; Othman, S.H.; Ngadi, A.; Ahmed, M.N.; Mohammed, A.A. Development and validation of a Database Forensic Metamodel (DBFM). PLoS ONE 2017, 12, e0170793.

- Sargent, R.G. Verification and validation of simulation models. In Proceedings of the 37th Conference on Winter Simulation, Baltimore, MD, USA, 5–8 December 2010; pp. 130–143.

- Goerger, S.R. Validating Computational Human Behavior Models: Consistency and Accuracy Issues; Naval Postgraduate School: Monterey, CA, USA, 2004.

- Olivier, M.S. On metadata context in database forensics. Digit. Investig. 2009, 5, 115–123.

- Wong, D.; Edwards, K. System and Method for Investigating a Data Operation Performed on a Database. U.S. Patent Application 10/879,466, 29 December 2005.

- Wright, P.M. Oracle Database Forensics Using Logminer; Global Information Assurance Certification Paper; SANS Institute: Bethesda, MD, USA, 2005.

- Litchfield, D. Oracle Forensics Part 1: Dissecting the Redo Logs; NGSSoftware Insight Security Research (NISR), Next Generation Security Software Ltd.: Sutton, UK, 2007.

- Litchfield, D. Oracle Forensics Part 2: Locating Dropped Objects; NGSSoftware Insight Security Research (NISR), Next Generation Security Software Ltd.: Sutton, UK, 2007.

- Litchfield, D. Oracle Forensics: Part 3: Isolating Evidence of Attacks Against the Authentication Mechanism; Next Generation Security Software Ltd.: Sutton, UK, 2007.

- Litchfield, D. Oracle Forensics Part 4: Live Response; Next Generation Security Software Ltd.: Sutton, UK, 2007.

- Litchfield, D. Oracle Forensics Part 5: Finding Evidence of Data Theft in the Absence of Auditing; Next Generation Security Software Ltd.: Sutton, UK, 2007.

- Litchfield, D. Oracle Forensics Part 6: Examining Undo Segments, Flashback and the Oracle Recycle Bin; Next Generation Security Software Ltd.: Sutton, UK, 2007.

- Litchfield, D. Oracle Forensics Part 7: Using the Oracle System Change Number in Forensic Investigations; NGSSoftware Insight Security Research (NISR), Next Generation Security Software Ltd.: Sutton, UK, 2008.

- Wagner, J.; Rasin, A.; Grier, J. Database forensic analysis through internal structure carving. Digit. Investig. 2015, 14, S106–S115.

- Ogutu, J.O. A Methodology to Test the Richness of Forensic Evidence of Database Storage Engine: Analysis of MySQL Update Operation in InnoDB and MyISAM Storage Engines. Ph.D. Thesis, University of Nairobi, Nairobi, Kenya, 2016.

- Wagner, J.; Rasin, A.; Malik, T.; Hart, K.; Jehle, H.; Grier, J. Database Forensic Analysis with DBCarver. In Proceedings of the CIDR 2017, 8th Biennial Conference on Innovative Data Systems Research, Chaminade, CA, USA, 8–11 January 2017.

- Al-Dhaqm, A.; Razak, S.; Othman, S.H.; Choo, K.-K.R.; Glisson, W.B.; Ali, A.; Abrar, M. CDBFIP: Common Database Forensic Investigation Processes for Internet of Things. IEEE Access 2017, 5, 24401–24416.

- Al-Dhaqm, A.; Razak, S.; Othman, S.H. Model Derivation System to Manage Database Forensic Investigation Domain Knowledge. In Proceedings of the 2018 IEEE Conference on Application, Information and Network Security (AINS), Langkawi, Malaysia, 21–22 November 2018.

- Hungwe, T.; Venter, H.S.; Kebande, V.R. Scenario-Based Digital Forensic Investigation of Compromised MySQL Database. In Proceedings of the 2019 IST-Africa Week Conference (IST-Africa), Nairobi, Kenya, 8–10 May 2019.

- Khanuja, H.K.; Adane, D. To Monitor and Detect Suspicious Transactions in a Financial Transaction System Through Database Forensic Audit and Rule-Based Outlier Detection Model. In Organizational Auditing and Assurance in the Digital Age; IGI Global: Hershey, PA, USA, 2019; pp. 224–255.

- Al-Dhaqm, A.; Abd Razak, S.; Dampier, D.A.; Choo, K.-K.R.; Siddique, K.; Ikuesan, R.A.; Alqarni, A.; Kebande, V.R. Categorization and organization of database forensic investigation processes. IEEE Access 2020, 8, 112846–112858.

- Al-Dhaqm, A.; Abd Razak, S.; Siddique, K.; Ikuesan, R.A.; Kebande, V.R. Towards the Development of an Integrated Incident Response Model for Database Forensic Investigation Field. IEEE Access 2020, 8, 145018–145032.

- Al-Dhaqm, A.; Abd Razak, S.; Othman, S.H.; Ali, A.; Ghaleb, F.A.; Rosman, A.S.; Marni, N. Database Forensic Investigation Process Models: A Review. IEEE Access 2020, 8, 48477–48490.

- Zhang, Y.; Li, B.; Sun, Y. Android Encryption Database Forensic Analysis Based on Static Analysis. In Proceedings of the 4th International Conference on Computer Science and Application Engineering, New York, NY, USA, 20–22 October 2020; pp. 1–9.

- Hu, P.; Ning, H.; Qiu, T.; Song, H.; Wang, Y.; Yao, X. Security and privacy preservation scheme of face identification and resolution framework using fog computing in internet of things. IEEE Internet Things J. 2017, 4, 1143–1155.

- Sargent, R.G. Verification and validation of simulation models. In Proceedings of the 2010 Winter Simulation Conference, Baltimore, MD, USA, 5–8 December 2010; pp. 166–183.

- De Kok, D. Feature selection for fluency ranking. In Proceedings of the 6th International Natural Language Generation Conference, Dublin, Ireland, 7–9 July 2010; pp. 155–163.

- Bermell-Garcia, P. A Metamodel to Annotate Knowledge Based Engineering Codes as Enterprise Knowledge Resources. Ph.D. Thesis, Cranfield University, Cranfield, UK, 2007.

- Shirvani, F. Selection and Application of MBSE Methodology and Tools to Understand and Bring Greater Transparency to the Contracting of Large Infrastructure Projects. Ph.D. Thesis, University of Wollongong, Keiraville, Australia, 2016.

- Kleijnen, J.P.; Deflandre, D. Validation of regression metamodels in simulation: Bootstrap approach. Eur. J. Oper. Res. 2006, 170, 120–131.

- Kleijnen, J.P. Kriging metamodeling in simulation: A review. Eur. J. Oper. Res. 2009, 192, 707–716.

- Oates, B. Researching Information Systems and Computing; SAGE: Thousand Oaks, CA, USA, 2006.

- Hancock, M.; Herbert, R.D.; Maher, C.G. A guide to interpretation of studies investigating subgroups of responders to physical therapy interventions. Phys. Ther. 2009, 89, 698–704.

- Robson, C. Real World Research: A Resource for Social Scientists and Practitioner-Researchers; Blackwell Oxford: Oxford, UK, 2002; Volume 2.

- Hancock, B.; Ockleford, E.; Windridge, K. An Introduction to Qualitative Research: Trent Focus Group Nottingham; Trent Focus: Nottingham, UK, 1998.

- Banerjee, A.; Chitnis, U.; Jadhav, S.; Bhawalkar, J.; Chaudhury, S. Hypothesis testing, type I and type II errors. Ind. Psychiatry J. 2009, 18, 127.

- Grant, J.S.; Davis, L.L. Selection and use of content experts for instrument development. Res. Nurs. Health 1997, 20, 269–274.

- Hauksson, H.; Johannesson, P. Metamodeling for Business Model Design: Facilitating development and communication of Business Model Canvas (BMC) models with an OMG standards-based metamodel. Master’s Thesis, KTH Royal Institute, Stockholm, Sweden, 2013.

- O’Leary, Z. The Essential Guide to doing Research; Sage: Thousand Oaks, CA, USA, 2004.

- Othman, S.H. Development of Metamodel for Information Security Risk Management. Ph.D. Thesis, Universiti Teknologi Malaysia, Johor, Malaysia, 2013.

- Bandara, W.; Indulska, M.; Chong, S.; Sadiq, S. Major issues in business process management: An expert perspective. In Proceedings of the 15th European Conference on Information Systems, St Gallen, Switzerland, 7–9 June 2007.

- Becker, J.; Rosemann, M.; Von Uthmann, C. Guidelines of business process modeling. In Business Process Management; Springer: Berlin/Heidelberg, Germany, 2000; pp. 30–49.

- Frühwirt, P.; Kieseberg, P.; Krombholz, K.; Weippl, E. Towards a forensic-aware database solution: Using a secured database replication protocol and transaction management for digital investigations. Digit. Investig. 2014, 11, 336–348.

- Fowler, K. SQL Server Forensic Analysis; Pearson Education: London, UK, 2008.

- Fowler, K.; Gold, G.; MCSD, M. A real world scenario of a SQL Server 2005 database forensics investigation. In Information Security Reading Room Paper; SANS Institute: Bethesda, MD, USA, 2007.

- Choi, J.; Choi, K.; Lee, S. Evidence Investigation Methodologies for Detecting Financial Fraud Based on Forensic Accounting. In Proceedings of the CSA’09, 2nd International Conference on Computer Science and its Applications, Jeju Island, Korea, 10–12 December 2009; pp. 1–6.

- Son, N.; Lee, K.-G.; Jeon, S.; Chung, H.; Lee, S.; Lee, C. The method of database server detection and investigation in the enterprise environment. In Proceedings of the FTRA International Conference on Secure and Trust Computing, Data Management, and Application, Crete, Greece, 28–30 June 2011; pp. 164–171.

- Susaimanickam, R. A Workflow to Support Forensic Database Analysis. Ph.D. Thesis, Murdoch University, Murdoch, Australia, 2012.

- Lee, D.; Choi, J.; Lee, S. Database forensic investigation based on table relationship analysis techniques. In Proceedings of the 2009 2nd International Conference on Computer Science and Its Applications, CSA 2009, Jeju Island, Korea, 10–12 December 2009.

- Khanuja, H.K.; Adane, D. A framework for database forensic analysis. Comput. Sci. Eng. Int. J. (CSEIJ) 2012, 2, 27–41.

- Adedayo, O.M.; Olivier, M.S. Ideal Log Setting for Database Forensics Reconstruction. Digit. Investig. 2015, 12, 27–40.

- Pavlou, K.E.; Snodgrass, R.T. Forensic analysis of database tampering. ACM Trans. Database Syst. (TODS) 2008, 33, 30.

- Tripathi, S.; Meshram, B.B. Digital Evidence for Database Tamper Detection. J. Inf. Secur. 2012, 3, 9.

| ID | Validation Technique | Definition | Critique |
|---|---|---|---|
| 1. | Machine-Aided [41] | Metamodel specifications are expressed using a formal language. One possibility is a metamodeling language based on Boolean predicate calculus. Such a calculus can take the form of a supplementary set of constraint expressions written in an appropriate Boolean expression language. Care must be taken to ensure that the names of object types and relationships appear correctly in the Boolean expressions. | This technique is used for specific multi-graph machines. |
| 2. | Leave-One-Out Cross-Validation [42] | A method for metamodel verification when additional validation points cannot be afforded. It is a special case of cross-validation. Each sample point used to fit the model is removed one at a time, the model is rebuilt without that sample point, and the difference between the rebuilt model's prediction at that point and the actual value there is computed for all sample points. | It is used to validate lost sensitive data and was developed for mathematical metamodeling purposes. |
| 3. | Multistage Validation [43] | Combines three historical methods, rationalism, empiricism, and positive economics, into a multistage validation procedure. | This technique was developed for simulation purposes. |
| 4. | Tracing/Traceability [4] | The behavior of particular entities in the model is traced (followed) through the model to determine whether the model's logic is correct and whether the necessary accuracy is obtained. | This technique was introduced to evaluate the logical consistency of metamodels against domain models. |
| 5. | Face Validity [4] | Domain experts are asked whether the model and/or its behavior is reasonable, for example whether sensible inputs produce sensible outputs. | This technique was developed to validate the completeness, logicalness, and usefulness of metamodels. |
| 6. | Cross-Validation [44] | A strategy for choosing a “successful” candidate for actual simulation by evaluating the differences between a predicted output and the outputs for every other input. | A model evaluation technique that can assess the accuracy of a model without requiring any additional sample points. It was developed for mathematical metamodeling purposes. |
| 7. | Comparison Against Other Models [4] | Derived concepts of a created metamodel are validated, and the validated model is compared with the concepts of other valid, comparable existing domain models or metamodels. | It is used to validate the completeness of the metamodel against domain models. |
| 8. | Bootstrap Approach [32] | A method to test the adequacy of regression metamodels, in which the bootstrap estimates the distribution of any validation statistic in random simulation with replicated runs. | Bootstrapping is a computer-intensive resampling technique for simulations. It was developed for simulation modeling purposes. |
| 9. | Formal Ontology [45] | A part of the discipline of ontology in philosophy. It develops general theories that account for aspects of reality that are not specific to any field of science, be it physics or conceptual modeling. | It is used with ontological domains and focuses on theories. |
| 10. | Subjective Validation [46] | A strategy used when metamodel data and simulation data do not satisfy the statistical assumptions required for objective validation. | It is used to validate analog circuit metamodels. |
| 11. | Case Study [47] | A technique that integrates an existing formal ontology for information objects with an upper ontology for messages and activities, based on speech act theory and represented consistently using UML profiles. | This technique was developed to evaluate the derivation process of metamodels. |
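Of the techniques listed, leave-one-out cross-validation (row 2) is the most algorithmic, and its refit-and-compare loop is easier to see in code. The Python sketch below is an illustration under stated assumptions: it presumes a one-variable linear regression metamodel fitted by ordinary least squares, and the function names `fit_line`, `leave_one_out_errors`, and `loocv_rmse` are hypothetical. It is not part of the DBFIM validation; it also shows why the technique presupposes a numeric metamodel with sample points, which a conceptual metamodel such as DBFIM does not have.

```python
import math


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = a + b * x; returns (a, b).

    Assumes at least two distinct x values.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def leave_one_out_errors(xs: list[float], ys: list[float]) -> list[float]:
    """Remove each sample point in turn, refit the metamodel without it, and
    return the residual (actual minus predicted) at the removed point."""
    errors = []
    for i in range(len(xs)):
        a, b = fit_line(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        errors.append(ys[i] - (a + b * xs[i]))
    return errors


def loocv_rmse(xs: list[float], ys: list[float]) -> float:
    """Root-mean-square of the leave-one-out residuals (lower is better)."""
    errors = leave_one_out_errors(xs, ys)
    return math.sqrt(sum(e * e for e in errors) / len(errors))


if __name__ == "__main__":
    print(leave_one_out_errors([0.0, 1.0, 2.0], [0.0, 1.0, 0.0]))  # [-2.0, 1.0, -2.0]
```

No extra validation points are needed: every point serves once as the held-out check, at the cost of refitting the metamodel once per point.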

Digital Forensics Experts

| Expert | Designation | University/Institute | Department | Communication Type |
|---|---|---|---|---|
| Expert 1 | Quality manager and digital forensic expert | Cyber Security Malaysia | Digital Forensics Department | Face-to-face |
| Expert 2 | Visiting professor at the NTU School of Science and Technology | Nottingham Trent University | School of Science and Technology | Remote communication (LinkedIn and email) |
| Expert 3 | Senior lecturer | University Technology Malaysia Melaka | Department of System and Computer Communication | Face-to-face |
| Expert 4 | Managing director at I SEC Academy (M) | I SEC Academy (M) Sdn Bhd | Department of Digital Forensic | Face-to-face |
| Expert 5 | Senior lecturer | UTM | Information System | Face-to-face |
| Expert 6 | Cyber forensics and security researcher | Edith Cowan University, Australia | ECU Security Research Institute | Remote communication (via Zoom) |

| DBFIM Process | Concept 1 | Concept 2 | Modification | Relation Name |
|---|---|---|---|---|
| Identification | Types of incident | Incident | Add (Specialization) | Belongs to |
| Artefact Collection | Hashing | Forensic techniques | Add (Specialization) | Belongs to |
| Artefact Analysis | Case objective | Investigation team | Add (Association) | Needs |
| | Seal | Evidence | Add (Association) | Needs |
| | Seal | Documentation | Add (Association) | Needs |
| Documentation and Presentation | Seal | Investigation team | Add (Association) | Brings |
| | Seal | Evidence | Add (Specialization) | A part of |
| | Seal | Court | Add (Association) | Receives |
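The modifications in the table can be expressed as typed relations between concepts, which also permits a simple name-consistency check in the spirit of the machine-aided technique (every relation must refer to declared concepts). The following Python sketch is a hypothetical illustration: the `Relation` type, the helper functions, and above all the `HYPOTHETICAL_BASELINE` set of concepts assumed to exist before the modifications are assumptions, not the authors' tooling or the actual DBFIM concept list.

```python
from typing import NamedTuple

# Relation kinds that appear in the modifications table.
ALLOWED_KINDS = {"Specialization", "Association"}


class Relation(NamedTuple):
    """One relation added to DBFIM, read as Concept 1 -> Concept 2."""
    process: str
    source: str
    target: str
    kind: str
    name: str


# Rows transcribed from the modifications table.
MODIFICATIONS = [
    Relation("Identification", "Types of incident", "Incident", "Specialization", "Belongs to"),
    Relation("Artefact Collection", "Hashing", "Forensic techniques", "Specialization", "Belongs to"),
    Relation("Artefact Analysis", "Case objective", "Investigation team", "Association", "Needs"),
    Relation("Artefact Analysis", "Seal", "Evidence", "Association", "Needs"),
    Relation("Artefact Analysis", "Seal", "Documentation", "Association", "Needs"),
    Relation("Documentation and Presentation", "Seal", "Investigation team", "Association", "Brings"),
    Relation("Documentation and Presentation", "Seal", "Evidence", "Specialization", "A part of"),
    Relation("Documentation and Presentation", "Seal", "Court", "Association", "Receives"),
]

# HYPOTHETICAL: concepts assumed to exist in DBFIM before the experts' changes.
HYPOTHETICAL_BASELINE = {
    "Incident", "Forensic techniques", "Investigation team",
    "Evidence", "Documentation", "Court",
}


def undeclared_concepts(concepts: set[str], relations: list[Relation]) -> list[str]:
    """Sorted names used by relations but not declared as concepts."""
    used = {r.source for r in relations} | {r.target for r in relations}
    return sorted(used - concepts)


def apply_additions(
    concepts: set[str], relations: list[Relation], additions: list[Relation]
) -> tuple[set[str], list[Relation]]:
    """Return copies of the concept set and relation list with the additions applied.

    Unknown relation kinds are rejected; endpoints not yet declared become new
    concepts; a relation that is already present is not added twice.
    """
    new_concepts = set(concepts)
    new_relations = list(relations)
    for rel in additions:
        if rel.kind not in ALLOWED_KINDS:
            raise ValueError(f"unknown relation kind: {rel.kind!r}")
        new_concepts.update((rel.source, rel.target))
        if rel not in new_relations:
            new_relations.append(rel)
    return new_concepts, new_relations
```

Against the assumed baseline, `undeclared_concepts(HYPOTHETICAL_BASELINE, MODIFICATIONS)` returns `['Case objective', 'Hashing', 'Seal', 'Types of incident']`, that is, the concepts the modifications would introduce; after `apply_additions`, the same check returns an empty list.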

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Al-Dhaqm, A.; Razak, S.; Ikuesan, R.A.; R. Kebande, V.; Hajar Othman, S. Face Validation of Database Forensic Investigation Metamodel. Infrastructures 2021, 6, 13. https://doi.org/10.3390/infrastructures6020013
