Abstract

Crab aquaculture is an important component of the freshwater aquaculture industry in China, encompassing an expansive farming area of over 6000 km2 nationwide. Currently, crab farmers rely on manually monitored feeding platforms to count the number and assess the distribution of crabs in the pond. However, this method is inefficient and lacks automation. To address the problem of efficient and rapid detection of crabs via automated systems based on machine vision in low-brightness underwater environments, a two-step color correction and improved dark channel prior underwater image processing approach for crab detection is proposed in this paper. Firstly, the parameters of the dark channel prior are optimized with guided filtering and quadtrees to solve the problems of blurred underwater images and artificial lighting. Then, the gray world assumption, the perfect reflection assumption, and a strong channel to compensate for the weak channel are applied to improve the pixels of red and blue channels, correct the color of the defogged image, optimize the visual effect of the image, and enrich the image information. Finally, ShuffleNetV2 is applied to optimize the target detection model to improve the model detection speed and real-time performance. The experimental results show that the proposed method has a detection rate of 90.78% and an average confidence level of 0.75. Compared with the improved YOLOv5s detection results of the original image, the detection rate of the proposed method is increased by 21.41%, and the average confidence level is increased by 47.06%, which meets a good standard. This approach could effectively build an underwater crab distribution map and provide scientific guidance for crab farming.

Keywords:

underwater crab image processing; dark channel prior; color correction; channel compensation; target detection

Key Contribution:

The article proposes a novel underwater crab image processing algorithm and applies an improved object detection model to detect crabs, which enables the acquisition of a crab distribution map. This method effectively enhances the crab detection rate, contributes to precise feeding, and improves the economic benefits of crab farming.

1. Introduction

With a farming area of more than 6000 km2, an annual production of more than 800,000 tons, and a total production value of more than RMB 80 billion, crab farming is developing rapidly, and crab has become the largest freshwater aquaculture product in China [1,2,3]. The breeding cycle of crabs is about 7 months [4]. Crab growth information is mainly obtained through traditional manual sampling: crab farmers rely on manually monitored feeding platforms to count the number and assess the distribution of crabs in a pond. This method requires a significant amount of labor and resources, is time-consuming, and lacks automation. Therefore, a comprehensive and accurate automated system is needed to count the number and assess the distribution of crabs during crab aquaculture. With the development of science and technology, image processing, target recognition, and related technologies are being widely applied in crab farming.

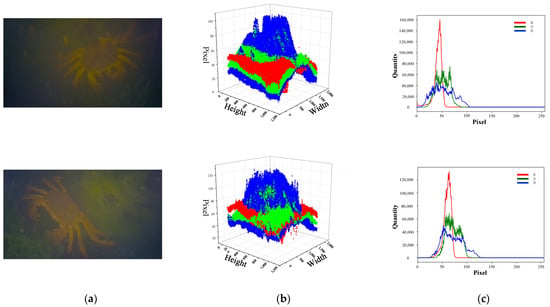

To obtain the number and distribution of crabs in crab ponds, target recognition algorithms are applied to detect the crabs. However, the special characteristics of the underwater environment degrade the images of crab ponds captured using underwater cameras. On the one hand, the depth of crab ponds varies from 0.5 m to 2 m [5,6]. Consequently, the images of crab ponds at different depths have low contrast and low brightness and are foggy, showing a bluish-green color. The term “foggy underwater images” refers to underwater images that appear blurry, making it difficult to clearly identify the species or boundaries of objects. On the other hand, underwater crab images taken with the assistance of an artificial light source have bright spots in the center and uneven colors. Therefore, directly detecting crabs in the original images with deep learning algorithms misses a large number of crabs and leads to large data errors. Figure 1 shows that the pixel values of the three color channels are low and concentrated in a narrow range, most markedly in the red channel. The pixels in the center of the green and blue channels are better rendered, while the surrounding area has lower pixel values. Therefore, it is necessary to develop crab image processing algorithms to improve the quality and color of underwater crab detection images.

Figure 1.

An example of underwater images of crabs in a pond. (a) Original image of underwater crab, (b) 3D color distribution map; (c) 3-channel pixel histogram. The red, green, and blue colors in (b) and (c) represent the pixels of the red, green, and blue channels of the image, respectively.

With the development of neural network technology, many researchers have used underwater animal recognition based on image preprocessing to count numbers of underwater animal species and observe their growth. Both Li et al. [7] and Zhai et al. [8] employed a combination of traditional image processing and the YOLO series algorithms to recognize and count organisms such as underwater fish and sea cucumbers. Zhao et al. [9] applied the appropriate techniques to detect abnormal behavior and monitor the growth of fish. Currently, there are relatively few approaches to investigate the growth and population distribution of crabs. Sun et al. [10] constructed a crab growth model and predicted the total amount of bait to be fed using neural networks, which saved bait and improved farming efficiency. Siripattanadilok and Siriborvornratanakul [11] proposed a deep learning method to identify and count harvestable mud shell crabs. Chen et al. [12] constructed a crab gender detection model with more than 96% accuracy to classify crab gender non-destructively and efficiently. The general model is divided into two parts: image processing and target recognition. Image processing lays the foundation for target recognition. The target recognition provides concretization support for the subsequent industrial development.

Nowadays, underwater image processing is mainly categorized into two types: traditional image processing algorithms and neural network-based image processing algorithms. Traditional image processing algorithms directly modify the pixel values of the image or construct models for processing. The commonly employed algorithms are HE (histogram equalization), DCP (dark channel prior), and Retinex. Zhu et al. [13] employed homomorphic filtering, MSRCR, and CLAHE (contrast limited adaptive histogram equalization) for linear fusion to remove fog, which resulted in a shallow-water underwater image with clear edges and balanced colors. Li et al. [14] proposed a marine underwater image enhancement approach combining Retinex and a multiscale fusion framework to obtain clear and fog-free images. Zhou et al. [15] incorporated mean square error and color restoration factor into the Retinex algorithm to improve the color of underwater images, and then applied simulated annealing optimization algorithm to enhance the details of underwater images. However, these approaches fail to consider the effect of artificial light sources, which leads to over-enhancement. Zhou et al. [16] applied a perfect reflection enhanced red channel and an improved dark channel to optimize the blue-green transmittance map to eliminate color deviation and retain more details. Combining traditional image processing algorithms is considered one of the current mainstream underwater image processing methods.

Neural network-based machine vision approaches play an important role in different applications and provide new methods for image processing. Perez et al. [17] first applied a CNN model to process foggy underwater images and achieved effective results. Li et al. [18] constructed an unsupervised generative adversarial network, called WaterGAN, for color correction of underwater images, which effectively addressed the problem that neural networks require a large amount of data while underwater data are difficult to obtain. However, there are two problems with neural network-based image processing in practical applications. Firstly, the acquired underwater images of crab ponds are foggy and blurred, with uneven colors, so clear reference images cannot be obtained in advance to train the neural network model. Secondly, the underwater environment and turbidity differ between areas, resulting in different parameters of the trained network. The generalization ability of the neural network is limited, so a network trained on one pond cannot handle images of different crab ponds.

Crab identification technology is an important foundation for constructing underwater crab distribution models and optimizing and improving baiting methods. With the rapid development of graphics processor unit (GPU) performance, deep neural networks play a significant role in target detection. The YOLO series of algorithms with excellent performance and convenient deployment are widely applied in various target detection scenarios. Zhang et al. [19] improved the YOLOv4 model by utilizing MobileNetV2 and the attention mechanism, aiming to improve the real-time and fast detection of small targets in complex marine environments. Wen et al. [20] embedded the channel attention (CA) and squeeze-and-excitation (SE) attention modules in YOLOv5s to improve the detection accuracy of underwater targets. Zhao et al. [21] optimized YOLOv4-tiny using the symmetric extended convolution and attention module FPN-Attention to effectively capture valuable features of underwater images. However, the model has limitations, such as applying a single underwater dataset and slow inference speed. Similarly, Mao et al. [22], Liu et al. [23], and Zhang et al. [24] have improved modules to reduce model size and improve detection speed. Unfortunately, little research has been conducted to effectively develop a lightweight YOLO-based target detection algorithm for underwater crabs.

To address the problem of blurring and low brightness of underwater images of crab ponds, a two-step color correction and improved underwater image processing method of crab ponds is proposed. Then, the improved crab identification algorithm is employed to identify and count the number of crabs in the image-processed underwater image dataset of crab ponds. The main contributions of this paper are as follows.

(1) Guided filtering and quadtrees are fused to optimize transmittance and atmospheric light values for the dark channel prior, removing fog and preserving image detail.

(2) A new color correction approach for underwater images is proposed to address the problem of color imbalance in underwater images. Firstly, the approach combines the gray world and perfect reflections to construct a color correction equation to correct the red channel. Secondly, the strong channel compensates for the weak channel to compensate for the red and blue channels.

(3) A K-means++ clustering algorithm, ShuffleNetV2 lightweight model, and distance intersection over union (DIoU) prediction box are proposed to optimize the YOLOv5 model. The improved YOLOv5 model effectively improves the model training convergence speed, enhances the model speed and real-time performance, and provides a technical foundation for underwater crab distribution construction.

2. Materials and Methods

2.1. Database Acquisition

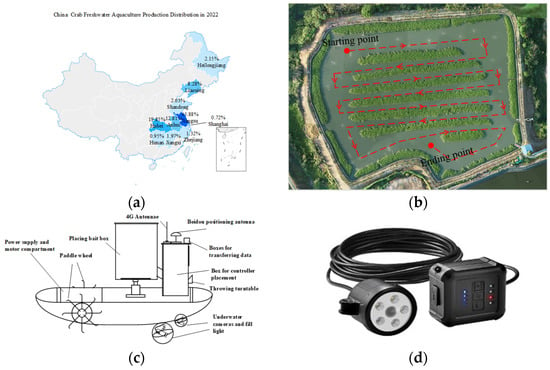

Since crabs live at fixed water depths, constructing a crab-centric target dataset provides an image basis for crab recognition and for building a crab distribution map. Figure 2a shows the top six provinces in China by freshwater crab aquaculture production in 2022. River crab aquaculture is mainly concentrated in the middle and lower reaches of the Yangtze River, and the northeast is also a major production area. Jiangsu Province accounts for 45.88% of the national total, ranking first in the country, and is an important part of crab aquaculture. The dataset of underwater crab images used in the experiments was collected at a crab farming demonstration base in Changzhou City, Jiangsu Province, from May to August 2022. The crab breeding area of the base is about 0.73 km2, and the water depth of the crab ponds is about 0.5–2 m. Since crab feeding is concentrated at night and in the early morning, underwater cameras and low-light auxiliary lighting equipment are employed for image acquisition. Figure 2b shows the planned trajectory of the automatic baiting boat. Figure 2c shows the automatic baiting boat, with two underwater cameras deployed at the rear of the boat for crab image acquisition. Figure 2d shows the manual acquisition equipment used to cover areas missed by the automatic baiting boat. The captured crab images have a resolution of 1920 × 1080 pixels, and each image keeps its original size during enhancement. The input image size of YOLOv5s is set to 640 × 640. We constructed a dataset of 3000 underwater images of crabs. The algorithm was run on a device with an AMD R9-6900HX processor, 16 GB of RAM, an RTX 3070 Ti graphics card, and the Windows 10 operating system.

Figure 2.

Underwater crab video acquisition methods. (a) China crab freshwater aquaculture production distribution in 2022, (b) automatic baiting boat operation trajectory, (c) automatic baiting boat and its video collection equipment deployment, (d) manual collection equipment.

2.2. Overview of the Methodology

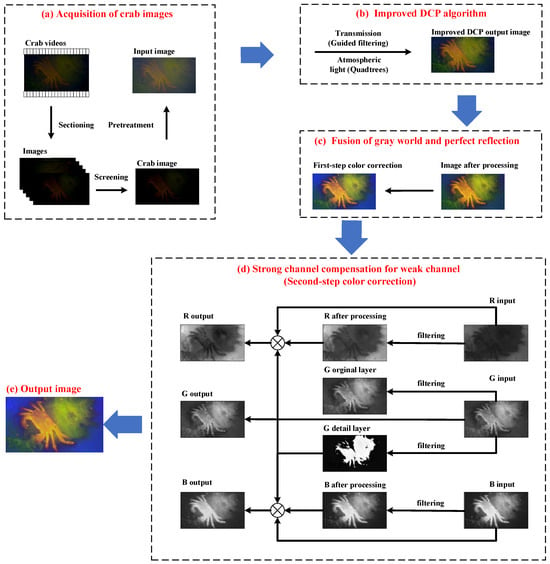

To address the problem of blurring and low brightness of underwater images of crab ponds, a two-step color correction and improved underwater image processing method of crab ponds is proposed in this paper to improve the quality of underwater image dataset of crab ponds. Then, the improved crab identification algorithm is employed to identify and count the number of crabs in the image-processed underwater image dataset of crab ponds. An overview of the proposed method is shown in Figure 3. Our underwater enhancement method consists of four main steps: (1) After slicing and filtering the captured video, we obtain images that meet the experimental conditions and then perform pre-processing. (2) The improved DCP algorithm is employed to defog the underwater images of the crabs in the pond to obtain clear and high-quality fog-free images. (3) In the first step of color correction, the gray world assumption and perfect reflection assumption are combined to enhance the red channel. (4) In the second step of color correction, strong channels compensating for weak channels are employed to enhance the red and blue channels, ultimately resulting in color-balanced, information-rich crab images.

Figure 3.

Systematic overview of the proposed approach.

2.3. Improved Dark Channel Prior Approach

Image processing algorithms based on the dark channel prior (DCP) pioneered a new approach to image defogging. DCP is simple to implement and is effective in removing the sense of fog from foggy underwater images. It assumes that there are certain regions in the image in which at least one channel has pixel values tending to zero. The underwater crab images acquired using the underwater camera also fit this property. However, due to the influence of artificial light sources, underwater crab images have uneven colors and inadequate edge information, and the original DCP algorithm fails in this application environment. In this paper, we apply guided filtering and a quadtree to optimize the transmittance and the atmospheric light, respectively, to enhance edge information extraction and weaken the effect of uneven illumination. He et al. statistically summarized the relation between a clear image and its foggy counterpart. Since the underwater image and the foggy image possess the same properties, Equation (1) is employed to obtain the clear image $J(x)$. To solve for the fog-free underwater crab image, the atmospheric light value $A$ and the transmittance $t(x)$ need to be calculated. Transmittance is the ratio of the light leaving the far side of the water to the light entering it. The atmospheric light value is the ambient light at infinite distance.

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \tag{1}$$

where $I(x)$ denotes the foggy image, $J(x)$ denotes the clear image, $A$ denotes the atmospheric light value, and $t(x)$ denotes the transmittance.

When estimating the atmospheric light in DCP, the mean value of the candidate region with the largest pixel values is adopted to approximate the real atmospheric light. However, due to the influence of the artificial light source, the center of the underwater crab image is brighter, which leads to an excessively high estimate of the atmospheric light and distorts the recovered image. The quadtree algorithm [25,26] is therefore employed to optimize the candidate region for the atmospheric light of the underwater crab image, which lowers the estimated atmospheric light value and ensures the effectiveness of the image processing. The algorithm uses the quartiles of the standard deviation as the evaluation function, and a suitable quartile threshold is selected experimentally. At each subdivision, the standard deviations of the four regions are calculated, compared with each other, and checked against the interquartile threshold. The subdivision of the candidate region is repeated until the final candidate region satisfies the evaluation threshold.
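The quadtree search can be illustrated with a minimal NumPy sketch. The scoring rule used here (quadrant mean minus quadrant standard deviation, a common choice for quadtree-based atmospheric light estimation) and the stopping size `min_size` are assumptions made for illustration; they stand in for the quartile-based evaluation function described above and are not the exact criterion of this paper.

```python
import numpy as np

def quadtree_atmospheric_light(img, min_size=16):
    """Estimate the atmospheric light (one value per channel) by quadtree search.

    img: float array of shape (H, W, 3) with values in [0, 1].
    Assumed score: quadrant mean minus quadrant standard deviation, so a
    bright AND uniform region wins over a small saturated light spot.
    """
    gray = img.mean(axis=2)
    y0, x0 = 0, 0
    h, w = gray.shape
    while h > min_size and w > min_size:
        hh, hw = h // 2, w // 2
        best_score, best_origin = -np.inf, (y0, x0)
        # Score the four quadrants of the current candidate region.
        for dy, dx in ((0, 0), (0, hw), (hh, 0), (hh, hw)):
            block = gray[y0 + dy:y0 + dy + hh, x0 + dx:x0 + dx + hw]
            score = block.mean() - block.std()
            if score > best_score:
                best_score, best_origin = score, (y0 + dy, x0 + dx)
        (y0, x0), h, w = best_origin, hh, hw
    # Average the final candidate region channel by channel.
    return img[y0:y0 + h, x0:x0 + w].reshape(-1, 3).mean(axis=0)
```

Because the score penalizes non-uniform regions, a small saturated spot produced by the auxiliary light inside an otherwise dark quadrant does not dominate the estimate.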

The transmittance within a local region is assumed to be constant and is denoted by $\tilde{t}(x)$. Since impurities in the crab pond interfere with imaging and the images acquired by the underwater camera retain a sense of fog, a correction parameter $\omega$ for the transmittance is introduced, and $\omega$ is taken as 0.95. We obtain $\tilde{t}(x)$ as follows:

$$\tilde{t}(x) = 1 - \omega \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^{c}(y)}{A^{c}} \right) \tag{2}$$

where $\Omega(x)$ denotes the local window centered at $x$, and $c \in \{R, G, B\}$ denotes the color channel. $t(x)$ is applied to indicate the transmittance. A low transmittance can lead to overexposure in certain areas of the image. To prevent this situation, a minimum threshold of the transmittance is set, expressed as $t_0$ = 0.1. Guided filtering is applied to further optimize the transmittance [27,28,29]. The formula for obtaining the final clear image is as follows:

$$J(x) = \frac{I(x) - A}{\max\bigl(t(x),\, t_0\bigr)} + A \tag{3}$$
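The transmittance estimate and the recovery of the clear image described in this subsection can be sketched in NumPy as follows. This is a minimal illustration of the standard dark channel prior with the correction parameter 0.95 and the minimum transmittance 0.1 given in the text; the window size of 15 is an assumed value, and the guided filtering refinement is omitted for brevity.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def min_filter(channel, size):
    """Local minimum over a size x size window (size must be odd)."""
    pad = size // 2
    padded = np.pad(channel, pad, mode="edge")
    return sliding_window_view(padded, (size, size)).min(axis=(2, 3))

def dehaze_dcp(img, A, omega=0.95, t0=0.1, window=15):
    """img: (H, W, 3) float image in [0, 1]; A: per-channel atmospheric light."""
    A = np.maximum(np.asarray(A, dtype=float), 1e-6)
    # Dark channel of the image normalized by the atmospheric light.
    dark = min_filter((img / A).min(axis=2), window)
    # Transmittance estimate, bounded below by t0 to avoid overexposure.
    t = np.maximum(1.0 - omega * dark, t0)
    # Invert the fog model channel by channel.
    J = (img - A) / t[..., None] + A
    return np.clip(J, 0.0, 1.0), t
```

In practice the atmospheric light passed as `A` would come from the quadtree search, and `t` would be refined with a guided filter before the final division.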

2.4. First-Step Color Correction

DCP effectively addresses the fogging problem of underwater crab images. The gray world assumption [30] and the perfect reflection assumption [31] are combined in color correction [32]. In the underwater crab image, a linear color correction equation is constructed to compensate for the color balance of the red channels.

where the symbols denote, in order, the average of the R channel pixels, the two channel correction factors, and the pixel value of the channel in the image.

The gray world assumption holds that the average value of the R, G, and B components converge to the same grayscale value in a color-rich image. The perfect reflection assumption supposes that the brightest point in the image is the white point and sets the value of that point to the maximum value as the sum of the R, G, and B channel values. To solve for the correction factor of the linear expression, a joint equation based on two assumptions is described as follows:

where the two size parameters are the width and height of the image. According to Equation (5), the gray world assumption and the perfect reflection assumption are combined to solve for the correction factors, and the resulting correction effectively enhances the red channel pixel values in the underwater crab images.
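To make the idea of combining the two assumptions concrete, the following sketch shows one possible reading. The paper's Equations (4) and (5) are not reproduced in this text, so the linear form `a * R + b` and both constraints are assumptions: the gray world assumption is taken to fix the corrected red mean at the overall image mean, and the perfect reflection assumption is taken to map the brightest red value to the brightest value in the image. The function name `correct_red_linear` is hypothetical.

```python
import numpy as np

def correct_red_linear(img):
    """Assumed linear red-channel correction R' = a * R + b.

    img: (H, W, 3) float RGB image in [0, 1].
    Returns the corrected image and the two correction factors (a, b).
    """
    r = img[..., 0]
    gray_target = img.mean()    # gray world: shared mean of R, G, and B
    white_target = img.max()    # perfect reflection: brightest value is white
    spread = r.max() - r.mean()
    if spread < 1e-8:           # flat red channel: nothing to solve for
        return img.copy(), 1.0, 0.0
    # Two constraints, two unknowns:
    #   a * mean(R) + b = gray_target
    #   a * max(R)  + b = white_target
    a = (white_target - gray_target) / spread
    b = gray_target - a * r.mean()
    out = img.copy()
    out[..., 0] = np.clip(a * r + b, 0.0, 1.0)
    return out, a, b
```

Solving two constraints for two factors is what makes a joint equation necessary: either assumption alone would determine only a single gain.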

2.5. Second-Step Color Correction

First-step color correction compensates for some deficiencies of the red channel, but the enhancement is insufficient. Because the green channel suffers the lowest loss during light scattering, a compensation equation is constructed so that the green channel compensates for the red and blue channels. Each single-channel image is decomposed at two scales using large-window mean filtering, and the strong channel then compensates for the weak channels. The dual-scale decomposition divides the image into structure and texture. The filter size is chosen experimentally.

The implementation steps are introduced as follows:

(1) After first-step color correction, the underwater crab image is separated into its R, G, and B channels so that each channel can be processed individually. The R channel original layer is obtained by dual-scale decomposition, followed by median filtering to obtain the final R channel original layer. The same operation is applied to the B and G channels to obtain their original layers. The G channel original layer is subtracted from the original G channel to obtain the G channel detail layer.

(2) Equation (6) indicates how the G channel detail layer is superimposed onto the R channel original layer and the B channel original layer to construct the compensation equation. This compensation approach can solve the detail degradation problem of the red and blue channels and prevent overcompensation. However, applying average filtering in the R channel reduces pixel information, and thus a compensation equation is adopted to achieve color balance.

where the two coefficients of the compensation equation are set to 0.85 and 0.55, respectively.

(3) The image after second-step color correction is obtained by merging the compensated R and B channels with the unprocessed G channel. In the RGB histogram distribution, the R and B channels of the result are significantly enhanced, achieving color balance.
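The three steps above can be sketched as follows. Since Equation (6) is not reproduced in this text, the additive form (original layer plus a weighted G detail layer), the pairing of 0.85 with the R channel and 0.55 with the B channel, and the filter sizes are all assumptions made for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _windows(channel, size):
    """All size x size neighborhoods of a channel (size must be odd)."""
    pad = size // 2
    return sliding_window_view(np.pad(channel, pad, mode="edge"), (size, size))

def original_layer(channel, mean_size=15, median_size=3):
    """Mean filtering for the structure layer, then median filtering."""
    smooth = _windows(channel, mean_size).mean(axis=(2, 3))
    return np.median(_windows(smooth, median_size), axis=(2, 3))

def compensate(img, alpha=0.85, beta=0.55):
    """img: (H, W, 3) float RGB image in [0, 1] after first-step correction."""
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    g_detail = g - original_layer(g)                 # G detail (texture) layer
    r_new = original_layer(r) + alpha * g_detail     # assumed pairing: alpha -> R
    b_new = original_layer(b) + beta * g_detail      # assumed pairing: beta -> B
    # Merge the compensated R and B with the unprocessed G channel.
    return np.clip(np.stack([r_new, g, b_new], axis=2), 0.0, 1.0)
```

The windowed views are convenient for a short sketch but memory-hungry on 1920 × 1080 frames; an optimized box filter would be preferable in a real pipeline.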

2.6. Crab Identification Algorithm Based on Improved YOLOv5s

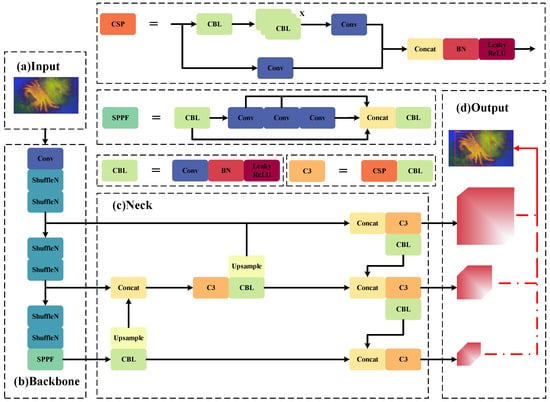

After image processing, the crab images are clear and color-balanced, which provides the basis for the algorithm to identify and count river crabs [33,34]. The YOLO series [35,36,37,38] is a classical family of one-stage target detection algorithms that is widely used in various environments. However, the YOLOv5s model is large, and therefore its detection speed in crab recognition cannot satisfy the need for rapid, real-time performance. In engineering applications, it is therefore necessary to reduce the model size and improve the detection speed while maintaining detection precision. In this paper, the K-means++ clustering algorithm, the ShuffleNetV2 lightweight model, and the DIoU prediction box loss are employed to optimize the YOLOv5s model. To improve the convergence of the pre-selected boxes toward the ground truth boxes and accelerate network training, the K-means++ algorithm is employed to cluster the crab labels in the self-built underwater crab dataset. This results in nine pre-selected boxes that are tailored to this dataset. To decrease the size of the crab detection model and increase detection speed and real-time performance, the lightweight ShuffleNetV2 model is employed to replace the backbone of the original network, maintaining crab detection accuracy while reducing the number of model parameters. Finally, the DIoU loss function is adopted to solve the problem that GIoU cannot distinguish relative positions when the target box encloses the prediction box. Figure 4 shows the YOLOv5s model improved with ShuffleNetV2.

Figure 4.

Structure diagram of the improved YOLOv5s model. The model includes four parts: input, backbone, neck, and output. ShuffleNetV2 acts as the backbone to make the network more lightweight. The color selection does not carry any specific meaning.

The YOLOv5s model applies the K-means algorithm [39] to cluster the boxes of the publicly available COCO dataset. K-means is essentially a loop that iterates over the centroids of the pre-selected box types, calculating the distance from each pre-selected box to each centroid and reassigning every box to the closest centroid. The loop stops when the intra-class distance is minimized and the inter-class distance is maximized. However, the K-means algorithm is sensitive to the initial centroids and is prone to empty clusters, which results in inaccurate clustering and unrepresentative pre-selected boxes. In addition, the anchor boxes generated from the COCO dataset cannot be adapted to the crab dataset because the sizes of these pre-selected anchor boxes vary widely. To address these two problems, the K-means++ algorithm [40] was employed to optimize the selection of the initial centroids and to perform anchor box clustering on the crab dataset. To increase the convergence speed of the model, the YOLOv5s algorithm presets nine pre-selected boxes and divides them into three different scales. Therefore, the number of cluster centers is set to nine. The labels of the dataset were clustered, and the clustering results are shown in Table 1.
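A minimal sketch of K-means++ anchor clustering is given below. The distance measure (1 − IoU between width and height pairs, a common choice for anchor clustering) is an assumption, since the text does not state which distance is used; the function names are hypothetical, and the input is expected to contain at least `k` distinct boxes.

```python
import numpy as np

def iou_wh(boxes, centers):
    """IoU between (w, h) pairs, treating all boxes as sharing one corner."""
    inter = (np.minimum(boxes[:, None, 0], centers[None, :, 0])
             * np.minimum(boxes[:, None, 1], centers[None, :, 1]))
    area_boxes = (boxes[:, 0] * boxes[:, 1])[:, None]
    area_centers = (centers[:, 0] * centers[:, 1])[None, :]
    return inter / (area_boxes + area_centers - inter)

def kmeanspp_anchors(boxes, k=9, iters=100, seed=0):
    """Cluster label sizes (N, 2) into k anchors, sorted by area."""
    rng = np.random.default_rng(seed)
    # K-means++ seeding: the first center is uniform at random, and each
    # later center is drawn with probability proportional to the squared
    # distance to its nearest existing center.
    centers = boxes[rng.integers(len(boxes))][None, :]
    while len(centers) < k:
        d2 = (1.0 - iou_wh(boxes, centers)).min(axis=1) ** 2
        pick = rng.choice(len(boxes), p=d2 / d2.sum())
        centers = np.vstack([centers, boxes[pick]])
    # Standard K-means refinement; an empty cluster keeps its old center.
    for _ in range(iters):
        assign = iou_wh(boxes, centers).argmax(axis=1)
        new = np.array([boxes[assign == j].mean(axis=0) if np.any(assign == j)
                        else centers[j] for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return centers[np.argsort(centers.prod(axis=1))]
```

Sorting by area makes it straightforward to assign the nine anchors to the three detection scales, three per scale from smallest to largest.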

Table 1.

Clustering results of pre-selected anchor boxes.

To decrease the size of the crab detection model and increase the detection speed and real-time performance, the lightweight model ShuffleNetV2 was adopted. ShuffleNetV2 examined the operation of ShuffleNetV1 and MobileNetV2 and combined theory and experiment to obtain four practical guidelines. First, equal channel width minimizes the memory access cost (MAC): a pointwise (1 × 1) convolution runs fastest when its input and output channel counts are equal. Second, excessive group convolution increases the MAC; group convolution reduces complexity but decreases model capacity. Third, network fragmentation reduces the degree of parallelism and slows down the model. Fourth, element-wise operations are non-negligible, so some modules of the residual unit are replaced to improve speed. Compared with lightweight networks of the same type, ShuffleNetV2 trains and detects faster on the GPU. It is also more compatible and can be combined with modules such as squeeze-and-excitation to improve model performance. Based on these four guidelines, ShuffleNetV2 introduces a new operation, channel split, which divides the channel dimension of the input feature map into two branches, denoted A and B. Branch A is an identity mapping, so its input size, output size, and number of channels are unchanged. Branch B first goes through a 1 × 1 convolution and then a 3 × 3 depthwise separable convolution, which has a lower computational cost than a standard convolution on feature maps of the same size. To perform channel fusion, concat is employed to merge branches A and B.
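The data flow of channel split and concatenation can be illustrated with a short NumPy sketch. The convolutions of branch B are abstracted into a caller-supplied function `branch_fn` (a hypothetical parameter), and the final channel shuffle, which in the ShuffleNetV2 design follows the concatenation so that the two branches exchange information, is included for completeness.

```python
import numpy as np

def channel_shuffle(x, groups=2):
    """Interleave channels across groups; x has shape (C, H, W)."""
    c, h, w = x.shape
    return (x.reshape(groups, c // groups, h, w)
             .transpose(1, 0, 2, 3)
             .reshape(c, h, w))

def shuffle_unit(x, branch_fn):
    """Channel split -> identity branch A / processed branch B -> concat -> shuffle."""
    half = x.shape[0] // 2
    a, b = x[:half], x[half:]                  # channel split
    out = np.concatenate([a, branch_fn(b)], axis=0)
    return channel_shuffle(out, groups=2)
```

For example, with four single-pixel channels holding the values 0 to 3 and a branch function that multiplies by 10, the unit outputs the channel order 0, 20, 1, 30: the untouched and the processed channels end up interleaved.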

IoU stands for intersection over union, and it reflects how well the predicted box overlaps the ground truth box. It is a commonly used metric in object detection. GIoU [41] is adopted in the YOLOv5s model as the bounding-box regression loss. When there is a certain angle deviation between the ground truth box and the pre-selected box, GIoU performs worse. We therefore adopt DIoU [42,43] for optimization. DIoU provides a direction of change for the predicted box even when the ground truth box and the predicted box do not overlap, and it avoids divergence of the training process.
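The difference between the three measures can be checked with a small pure-Python sketch based on their standard definitions: GIoU subtracts the fraction of the smallest enclosing box not covered by the union, and DIoU subtracts the squared center distance divided by the squared diagonal of the enclosing box. The corresponding losses are 1 − GIoU and 1 − DIoU. The function name is hypothetical.

```python
def iou_giou_diou(box_a, box_b):
    """Boxes are (x1, y1, x2, y2) with x1 < x2 and y1 < y2."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union
    # Smallest box enclosing both inputs.
    cx1, cy1 = min(ax1, bx1), min(ay1, by1)
    cx2, cy2 = max(ax2, bx2), max(ay2, by2)
    enclose_area = (cx2 - cx1) * (cy2 - cy1)
    giou = iou - (enclose_area - union) / enclose_area
    # Squared distance between box centers over squared enclosing diagonal.
    center_dist2 = (((ax1 + ax2) - (bx1 + bx2)) / 2) ** 2 \
                 + (((ay1 + ay2) - (by1 + by2)) / 2) ** 2
    diag2 = (cx2 - cx1) ** 2 + (cy2 - cy1) ** 2
    diou = iou - center_dist2 / diag2
    return iou, giou, diou
```

When a 4 × 4 box fully encloses a 2 × 2 box, IoU and GIoU are both 0.25 regardless of where the small box sits, whereas DIoU is 0.25 only when the centers coincide and drops to 0.1875 when the small box sits in a corner. This is the enclosed-box case in which GIoU cannot distinguish relative positions.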

3. Results and Discussion

3.1. Subjective Estimation

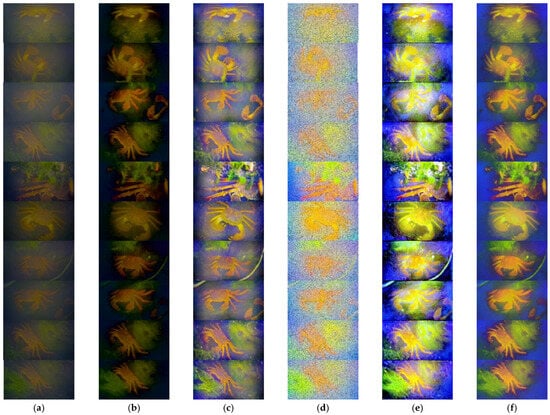

Figure 5 shows the comparison plots of 10 sets of randomly selected original images with the results of applying dark channel prior (DCP), adaptive histogram equalization (AHE), multi-scale Retinex with chromaticity preservation (MSRCP) [44], gated context aggregation network (GCANet) [45], and our proposed method.

Figure 5.

Comparison of processing results among algorithms: (a) original image, (b) DCP, (c) AHE, (d) MSRCP, (e) GCANet, (f) proposed method.

In Figure 5, the original images of the underwater crab exhibit low contrast, weak lighting conditions, and fuzziness. However, the crabs in the original images were recognizable by eye. The crab images processed using the DCP algorithm have low brightness and dark colors. The edges and details of the crabs could not be seen clearly, which resulted in the target recognition algorithm failing to accurately identify the crabs. The crab image processed via the AHE algorithm presents a favorable visual effect. The outline of the crab is clearly visible, which facilitates subsequent processing. However, the high pixel count in the blue channel of the image processed via the AHE algorithm causes the image to appear blue and deviate from the color of the real image. The crab images processed using the MSRCP algorithm have serious noise, fuzzy contours, and poor image quality, preventing clear identification of crab details. This indicates that the MSRCP algorithm is poorly scalable and cannot be directly transferred from the above-water environment to the underwater environment. The GCANet algorithm works with the weights given in the paper, and it was trained with the water environment dataset. The crab images processed with the GCANet algorithm have average processing effects and are susceptible to artificial lighting sources. The crab images processed using the proposed method not only have good image-defogging effects but the details of the crab are also highlighted, and the color is balanced. The auxiliary light source has less influence, and the overall vision is better, providing high-quality images for subsequent processing.

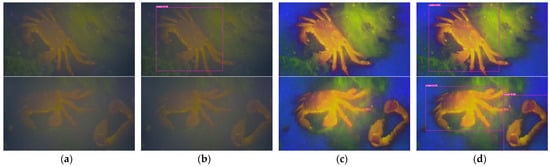

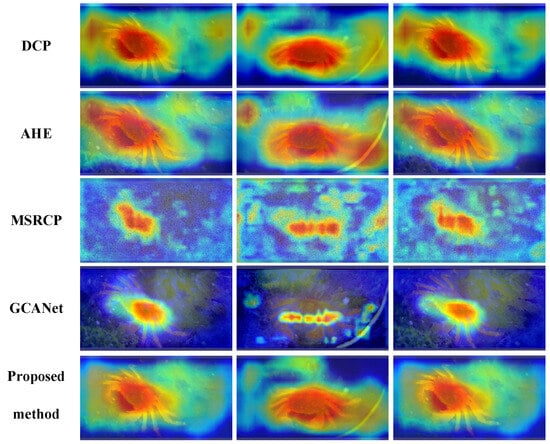

Figure 6 represents the recognition results of improved YOLOv5s before and after enhancement. In the original image, a single crab is easy to recognize, but multiple crabs or crabs with incomplete morphological information cannot be identified. The images processed with the various algorithms were input into the Grad-CAM model for visual analysis of the heat map, and the results are shown in Figure 7. The crab images processed by the proposed method show a heat map with concentrated and correct heat areas on the crab target. This indicates that the proposed method provides the basis for target detection. The heat areas of other compared algorithms present as scattered or non-unique, which affects the target detection results.

Figure 6.

Comparison of recognition results of improved YOLOv5s model before and after enhancement: (a) original image, (b) recognition results of the original image, (c) enhanced image, (d) recognition results of the enhanced image.

Figure 7.

Heat map visualization results after multiple image processing algorithms.

3.2. Objective Estimation

To guarantee the detection of underwater crabs, image quality and structural similarity are important criteria for measuring the quality of image processing algorithms. Structural similarity (SSIM) [46], peak signal-to-noise ratio (PSNR) [47], underwater color image quality evaluation (UCIQE) [48], information entropy (IE) [49], and natural image quality evaluator (NIQE) [50] were selected as image quality evaluation indicators. PSNR compares image quality before and after processing, with higher values indicating better image quality. SSIM measures the similarity of an image before and after processing and is used to check that the image processing algorithm does not corrupt the original image information.
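Two of these indicators have simple closed forms and can be sketched directly from their standard definitions: PSNR is 10·log10(MAX² / MSE), and information entropy is the Shannon entropy of the gray-level histogram. The sketch assumes 8-bit images; SSIM, UCIQE, and NIQE are more involved and are not shown.

```python
import numpy as np

def psnr(reference, processed, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    diff = reference.astype(float) - processed.astype(float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")      # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

def information_entropy(gray):
    """Shannon entropy (bits) of an 8-bit grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())
```

Casting to float before subtracting avoids wrap-around in unsigned 8-bit arithmetic, which would otherwise silently corrupt the mean squared error.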

In the underwater crab image dataset, five groups of original images and algorithm-processed images were randomly selected for statistical analysis of image parameter detection.

Table 2 shows the objective estimation results for the various image processing algorithms. As shown in Table 2, the proposed method achieves the best SSIM and PSNR among the compared algorithms. In particular, its SSIM ranks first in all five groups of images, with a mean value of 0.864. The SSIM values of the crab images processed with the other algorithms are in the range of 0.037–0.709, lower than those of the proposed method. This indicates that the compared algorithms corrupt the original images to different degrees, which affects the rendering of the images. The PSNR of the crab images processed by the proposed method is within 14.662–16.467, also ranking first in all five groups of images. In UCIQE, IE, and NIQE, all five algorithms maintain a high level of quality. In summary, the proposed method ranks first in PSNR and SSIM in the objective estimation. The crab images processed using the proposed method are well enhanced and retain a structure similar to the original image, which provides a good foundation for the subsequent target recognition.

Table 2.

Quantitative analysis results of underwater crab images. Five quantitative evaluation metrics, SSIM (structural similarity), PSNR (peak signal-to-noise ratio), UCIQE (underwater color image quality evaluation), IE (information entropy), and NIQE (natural image quality evaluator), are used to evaluate the images processed with the dark channel prior (DCP), adaptive histogram equalization (AHE), multi-scale Retinex with chromaticity preservation (MSRCP) [47], gated context aggregation network (GCANet), and the proposed method.

3.3. Engineering Application Assessment

In engineering applications, underwater crab image processing is the practical foundation for identifying crabs, counting the number of crabs in the pond, and analyzing the current growth of crabs. Due to the complex underwater environment of crab ponds, there are numerous water plants and plankton interfering with crab detection. In engineering application assessment, the crab detection rate and average confidence level in the processed images are important reference indicators for evaluating image processing algorithms.

3.3.1. Comparison of Target Detection Algorithms

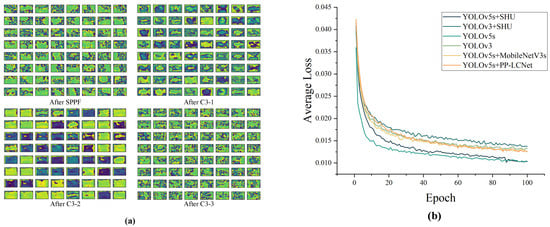

To objectively analyze the performance of the target detection algorithms, the number of parameters, giga floating-point operations (GFLOPs), precision, recall, mean average precision (mAP), and frames per second (FPS) are used as evaluation metrics. To test the practicality of the improved YOLOv5s algorithm for detecting underwater crab targets in ponds, YOLOv3, YOLOv3 + Shuffle, YOLOv5s, YOLOv5s + Shuffle, YOLOv5s + MobileNetV3, and YOLOv5s + PP-LCNet were trained and compared. Each model was trained for 100 epochs with a batch size of 8 and an input image size of 640 × 640. Figure 8a shows the feature maps output by the SPPF module of the backbone and by the C3 module in front of the detection head. Figure 8b shows the average training loss of each model; YOLOv5 and the improved YOLOv5 converge faster and reach a smaller average loss.

Figure 8.

The process of the improved YOLOv5 algorithm: (a) feature maps after SPPF and C3 processing; (b) the average loss during training for each model.

Table 3 shows the reference metrics of the different target detection algorithms. The improved YOLOv5s model adopting ShuffleNetV2 shows improvements in parameters, GFLOPs, and FPS. The YOLOv5s model with ShuffleNetV2 has 3.20 million parameters, with GFLOPs of 5.9 and FPS of 50. Compared with the original YOLOv5s model, the YOLOv5s model with ShuffleNetV2 reduced the number of parameters by 54.58%, reduced GFLOPs by 63.13%, and increased FPS by 21.95%. The precision, recall, and mAP of the improved model decrease slightly, but this does not affect the engineering application of the model. The YOLOv3 model optimized with ShuffleNetV2 shows the same trend. This indicates that the lightweight ShuffleNetV2 module effectively reduces the number of parameters and GFLOPs of the model and improves the FPS. Model training and image detection are both faster, which is promising for time-critical and complex engineering projects.
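The relative changes quoted above can be checked with simple arithmetic. The sketch below inverts the relation new = baseline × (1 + change/100) to recover the baseline values implied by the reported figures. The baselines it prints are derived here for illustration only and are not quoted from Table 3; the function and variable names are hypothetical.

```python
def baseline_from_relative_change(new_value, percent_change):
    """Invert new = baseline * (1 + percent_change / 100)."""
    return new_value / (1.0 + percent_change / 100.0)


def relative_change(baseline, new_value):
    """Relative change of new_value with respect to baseline, in percent."""
    return (new_value - baseline) / baseline * 100.0


# Values reported in the text for YOLOv5s + ShuffleNetV2:
# (metric value, change relative to the original YOLOv5s in percent).
reported = {
    "parameters (millions)": (3.20, -54.58),
    "GFLOPs": (5.9, -63.13),
    "FPS": (50.0, 21.95),
}

# Implied baselines are an inference from the percentages, not reported data.
implied = {
    name: baseline_from_relative_change(value, change)
    for name, (value, change) in reported.items()
}
for name, baseline in implied.items():
    print(f"{name}: implied original YOLOv5s value is about {baseline:.2f}")
```

Because the reported percentages are rounded to two decimals, the recovered baselines are approximate.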

Table 3.

The performance parameters of the six network models. The number of parameters, giga floating-point operations (GFLOPs), precision, recall, mean average precision (mAP), and frames per second (FPS) are reported for the YOLOv3, YOLOv3 + SHU, YOLOv5s, YOLOv5s + SHU, YOLOv5s + MobileNetV3s, and YOLOv5s + PP-LCNet algorithms.

Among the ShuffleNetV2, MobileNetV3s, and PP-LCNet lightweight models, ShuffleNetV2 not only has a smaller number of model parameters but also achieves a higher FPS and better real-time performance. PP-LCNet is a complex model with twice as many parameters as the original model and cannot be adapted to real-time systems. Compared with the original model, the FPS of the MobileNetV3s model is reduced by 12.20%. In summary, among the lightweight networks, the ShuffleNetV2 model is smaller and has better real-time detection capability, so it is better suited to the engineering application of crab detection.
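For readers unfamiliar with ShuffleNetV2, its characteristic operation is the channel shuffle, which interleaves channels from different branches so that information can mix between them at almost no computational cost. The sketch below shows the operation on a plain list of channel indices. It illustrates the general technique only and is not the backbone code of the improved YOLOv5s; the function name is hypothetical.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups: reshape to (groups, k), transpose, flatten."""
    n = len(channels)
    if groups <= 0 or n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    # Position i of every group is emitted before position i + 1 of any group.
    return [
        channels[g * per_group + i]
        for i in range(per_group)
        for g in range(groups)
    ]


# Six channels from two concatenated branches: [0, 1, 2] and [3, 4, 5].
print(channel_shuffle(list(range(6)), groups=2))  # [0, 3, 1, 4, 2, 5]
```

In a real network the same permutation is applied to the channel axis of a feature tensor rather than to a Python list.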

3.3.2. Engineering Application Evaluation

An underwater crab image dataset was constructed with 2000 images. In the engineering application evaluation, the dataset was fed into the improved YOLOv5s model for crab identification with the aim of measuring the quality of the underwater crab image processing algorithm proposed in this paper. The dataset was divided into a training set and a testing set in the ratio of 7:3. Then the whole underwater crab image dataset was fed into the improved YOLOv5s model with trained parameters to verify the performance of the network. A total of 1800 crabs in the dataset were identified manually. The detection rate and average confidence in the engineering detection results were taken as reference indicators for the quality of the underwater image processing algorithm. For engineering applications, the model metrics are classified against two standards, qualifying and good, which were determined through statistical experiments. The qualifying standard for detection rate is 60%, and the good standard is 85%. For the average confidence, the qualifying standard is 0.5 and the good standard is 0.7.
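The split ratio and the two-level standard described above can be expressed in a few lines. The sketch below is illustrative only: the function names are hypothetical, treating the thresholds as inclusive is an assumption, and the example result passed to `grade_result` is made up rather than taken from the experiments.

```python
# Thresholds stated in the text: (qualifying, good).
DETECTION_RATE_STANDARD = (60.0, 85.0)  # percent
CONFIDENCE_STANDARD = (0.5, 0.7)


def split_sizes(total, train_parts=7, test_parts=3):
    """Number of training and testing images for a train:test ratio."""
    train = total * train_parts // (train_parts + test_parts)
    return train, total - train


def grade(value, qualifying, good):
    """Map a metric to a standard; inclusive thresholds are an assumption."""
    if value >= good:
        return "good"
    if value >= qualifying:
        return "qualifying"
    return "below qualifying"


def grade_result(detection_rate_pct, average_confidence):
    """Grade both engineering indicators separately."""
    return {
        "detection_rate": grade(detection_rate_pct, *DETECTION_RATE_STANDARD),
        "average_confidence": grade(average_confidence, *CONFIDENCE_STANDARD),
    }


print(split_sizes(2000))         # 2000 images split 7:3
print(grade_result(72.0, 0.45))  # hypothetical result, not from the paper
```

Grading the two indicators separately makes it visible when an algorithm meets the standard for one indicator but not the other.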

In this paper, the improved YOLOv5s algorithm is used to perform crab detection on the results of the five image processing algorithms: DCP, AHE, MSRCP, GCANet, and the proposed method. According to manual counting, the underwater crab image dataset contains 1800 crabs. In some images, crabs are completely obscured by water plants and cannot be seen clearly. As shown in Table 4, a total of 1345 crabs were detected in the original images, giving a detection rate of 74.72% and an average confidence level of 0.51. Among the five image processing algorithms, the proposed method ranks first in the number of detections, with a total of 1634 crabs detected in its processed images. It has a detection rate of 90.78% and an average confidence level of 0.75. Compared with the detection results of the original image, the detection rate of the proposed method increased by 21.41%, and the average confidence level increased by 47.06%. Both the detection rate and the average confidence level reach the “good” standard, so the method is well suited to engineering applications and can provide technology upgrades for the industry. Among the comparison algorithms, the crab images processed using the DCP algorithm show a slight improvement in detection rate and average confidence, which only reaches the “qualifying” standard. The results of AHE, MSRCP, and GCANet do not meet the qualifying standard for some of the indicators. Therefore, the underwater crab image dataset processed using the proposed method performs well in engineering applications and can satisfy the application requirements of crab farming.
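The detection rates above follow directly from the reported counts, as the short sketch below shows. The function names are hypothetical. Note that a relative gain recomputed from the rounded counts may differ slightly from the published percentage; for the detection rate it comes out at roughly 21.5% rather than the reported 21.41%, and the figures available in this section do not explain the gap.

```python
TOTAL_CRABS = 1800  # manual count reported in the text


def detection_rate(detected, total=TOTAL_CRABS):
    """Share of manually counted crabs that the detector found, in percent."""
    return detected / total * 100.0


def relative_gain(before, after):
    """Relative increase of after over before, in percent."""
    return (after - before) / before * 100.0


original_rate = detection_rate(1345)  # original images
proposed_rate = detection_rate(1634)  # images processed with the proposed method
confidence_gain = relative_gain(0.51, 0.75)
rate_gain = relative_gain(1345, 1634)  # recomputed from counts, see note above

print(f"original: {original_rate:.2f}%  proposed: {proposed_rate:.2f}%")
print(f"average confidence gain: {confidence_gain:.2f}%")
print(f"detection gain recomputed from counts: {rate_gain:.2f}%")
```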

Table 4.

Detection results of improved YOLOv5s.

The subjective estimation, objective estimation, and engineering application assessment of the five image processing algorithms are summarized as follows. The crab images processed using the DCP algorithm have low brightness, invisible details, and average objective estimation indicators, and show only a slight improvement in the detection rate and average confidence level. The crab images processed with the AHE algorithm deviate from the colors of the real image, and the engineering detection rate is reduced by 7.13% compared with the detection results of the original image. The crab images processed with the MSRCP algorithm have severe noise, fuzzy outlines, and poor image quality, which seriously interferes with the target detection algorithm and makes them unsuitable for engineering applications. The crab images processed using the GCANet algorithm perform better in UCIQE, but their engineering application results are only average. The crab images processed with the proposed method exhibit a clear defogging effect, distinct details, and balanced colors, presenting high-quality visual effects. Their SSIM and PSNR are the best among the compared algorithms, with an average SSIM of 0.864 and a PSNR between 14.662 and 16.467. The proposed method detected a total of 1634 crabs in the dataset, giving a detection rate of 90.78% and an average confidence level of 0.75, both of which meet the “good” standard. Compared with the detection results of the original image, the detection rate of the proposed method increased by 21.41%, and the average confidence level increased by 47.06%. The proposed method thus achieved better results in subjective estimation, objective estimation, and engineering application assessment. The approach effectively captures the density distribution of crabs and constructs an underwater crab distribution map, providing scientific and technical support for precision farming.

4. Conclusions

A two-step color correction and improved dark channel prior image processing approach is proposed for underwater crab images with low contrast, weak light conditions, color imbalance, and interference from auxiliary light sources. The dark channel prior is improved with guided filtering and a quadtree to overcome the problems of blurred underwater images and artificial light sources. A color correction approach combining the gray world assumption, the perfect reflection assumption, and strong channels to compensate for weak channels is employed to optimize the underwater image color. To satisfy the speed and real-time requirements of detection in engineering applications, the YOLOv5 model is improved by adopting the ShuffleNetV2 network. The experimental results show that images processed with the proposed method achieve better results in subjective evaluation, objective evaluation, and engineering application assessment, which effectively addresses the problems existing in the underwater images and meets the engineering requirements. The proposed image processing method and improved YOLOv5s algorithm will be deployed on an embedded board and applied to an automated baiting boat. This method can effectively improve the real-time detection accuracy of crabs in ponds under various environments and conditions, including nighttime and daytime. The real-time crab population detection device can accurately obtain the density distribution of crabs in a crab pond and construct an underwater crab distribution map. Combined with information such as water quality parameters, pond area, and the current growth stage of crabs, the appropriate amount of bait can be scientifically determined.

The approach in this paper also has certain limitations. To suit the high-density crab farming environment of the project, the algorithm was developed on a self-built crab dataset, so it has not been widely applied to target identification in other underwater environments. It is also influenced by the two artificial light sources, which form bright spots in the central area of the image and affect the processing quality. As a result, the processed image presents overly vivid color blocks, which degrades its visual quality and can be further optimized in subsequent work. In the future, we will focus on the following two issues. Firstly, we will expand the dataset to prevent problems such as misidentification or failure to recognize crabs due to poor shooting angles and to improve the recognition of crabs with different morphologies. Additionally, based on the research conducted in this article, we will build a deep learning algorithm to monitor crab growth. This will contribute to the intelligent and refined development of the aquaculture industry.

Author Contributions

Conceptualization, Y.S.; methodology, Z.L.; software, B.Y.; validation, D.Z.; formal analysis, B.Y.; investigation, Z.L.; data curation, Z.L.; resources, Y.L.; writing—original draft preparation, Y.S.; writing—review and editing, Y.L.; visualization, B.Y.; supervision, D.Z.; project administration, D.Z.; funding acquisition, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 62173162); Jiangsu Provincial Modern Agricultural Machinery Equipment and Technology Demonstration and Promotion Project in 2022 (Grant No. NJ2022-28); the Priority Academic Program Development of Jiangsu Higher Education Institutions of China (Grant No. PAPD-2018-87).

Institutional Review Board Statement

The crabs were observed and photographed in their natural environment without any interference or harm, so ethical approval is not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Cao, S.; Chen, Z.; Sun, Y.; Zhao, D.; Hong, J.; Ruan, C. Research on Automatic Bait Casting System for Crab Farming. In Proceedings of the 2020 5th International Conference on Electromechanical Control Technology and Transportation, Nanchang, China, 15–17 May 2020; pp. 403–408.

- Cao, S.; Zhao, D.; Sun, Y.; Ruan, C. Learning-based low-illumination image enhancer for underwater live crab detection. ICES J. Mar. Sci. 2021, 78, 979–993.

- Zhang, X.; Zhang, J.; Shi, D.; Xu, F.; Wu, H. Study on Methods of Comprehensive Climatic Regionalization of the Crab in Jiangsu Province. Chin. J. Agric. Resour. Reg. Plan. 2021, 42, 130–135.

- Gu, J.; Zhang, Y.; Liu, X.; Du, Y. Preliminary Study on Mathematical Model of Growth for Young Chinese Mitten Crab. J. Tianjin Agric. Coll. 1997, 4, 23–30.

- Cao, S.; Zhao, D.; Liu, X.; Sun, Y. Real-time robust detector for underwater live crabs based on deep learning. Comput. Electron. Agric. 2020, 172, 105339.

- Cao, S.; Zhao, D.; Sun, Y.; Liu, X.; Ruan, C. Automatic coarse-to-fine joint detection and segmentation of underwater non-structural live crabs for precise feeding. Comput. Electron. Agric. 2021, 180, 105905.

- Li, J.; Xu, W.; Deng, L.; Xiao, Y.; Han, Z.; Zheng, H. Deep learning for visual recognition and detection of aquatic animals: A review. Rev. Aquac. 2022, 15, 409–433.

- Zhai, X.; Wei, H.; He, Y.; Shang, Y.; Liu, C. Underwater Sea Cucumber Identification Based on Improved YOLOv5. Appl. Sci. 2022, 12, 9105.

- Zhao, S.; Zhang, S.; Lu, J.; Wang, H.; Feng, Y.; Shi, C.; Li, D.; Zhao, R. A lightweight dead fish detection method based on deformable convolution and YOLOV4. Comput. Electron. Agric. 2022, 198, 107098.

- Sun, Y.; Chen, Z.; Zhao, D.; Zhan, T.; Zhou, W.; Ruan, C. Design and Experiment of Precise Feeding System for Pond Crab Culture. Trans. Chin. Soc. Agric. Mach. 2022, 53, 291–301.

- Siripattanadilok, W.; Siriborvornratanakul, T. Recognition of partially occluded soft-shell mud crabs using Faster R-CNN and Grad-CAM. Aquac. Int. 2023, 1–21.

- Chen, X.; Zhang, Y.; Li, D.; Duan, Q. Chinese mitten crab detection and gender classification method based on GMNet-YOLOv4. Comput. Electron. Agric. 2023, 214, 108318.

- Zhu, D. Underwater Image Enhancement Based on the Improved Algorithm of Dark Channel. Mathematics 2023, 11, 1382.

- Li, T.; Zhou, T. Multi-scale fusion framework via retinex and transmittance optimization for underwater image enhancement. PLoS ONE 2022, 17, e0275107.

- Zhou, J.; Yao, J.; Zhang, W.; Zhang, D. Multi-scale retinex-based adaptive gray-scale transformation method for underwater image enhancement. Multimed. Tools Appl. 2021, 81, 1811–1831.

- Zhou, J.; Liu, D.; Xie, X.; Zhang, W. Underwater image restoration by red channel compensation and underwater median dark channel prior. Appl. Opt. 2022, 61, 2915–2922.

- Perez, J.; Attanasio, A.C.; Nechyporenko, N.; Sanz, P.J. A Deep Learning Approach for Underwater Image Enhancement. In Proceedings of the 6th International Work-Conference on the Interplay between Natural and Artificial Computation, Elche, Spain, 1–5 June 2017; pp. 183–192.

- Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised Generative Network to Enable Real-Time Color Correction of Monocular Underwater Images. IEEE Robot. Autom. Lett. 2017, 3, 387–394.

- Zhang, M.; Xu, S.; Song, W.; He, Q.; Wei, Q. Lightweight Underwater Object Detection Based on YOLO v4 and Multi-Scale Attentional Feature Fusion. Remote Sens. 2021, 13, 4706.

- Wen, G.; Li, S.; Liu, F.; Luo, X.; Er, M.-J.; Mahmud, M.; Wu, T. YOLOv5s-CA: A Modified YOLOv5s Network with Coordinate Attention for Underwater Target Detection. Sensors 2023, 23, 3367.

- Zhao, S.; Zheng, J.; Sun, S.; Zhang, L. An Improved YOLO Algorithm for Fast and Accurate Underwater Object Detection. Symmetry 2022, 14, 1669.

- Mao, G.; Weng, W.; Zhu, J.; Zhang, Y.; Wu, F.; Mao, Y. Model for marine organism detection in shallow sea using the improved YOLO-V4 network. Trans. Chin. Soc. Agric. Eng. 2021, 37, 152–158.

- Liu, K.; Peng, L.; Tang, S. Underwater Object Detection Using TC-YOLO with Attention Mechanisms. Sensors 2023, 23, 2567.

- Zhang, J.; Wang, Y.; Xu, X.; Liu, Y.; Lu, L.; Wu, Q. YoloXT: A object detection algorithm for marine benthos. Ecol. Inform. 2022, 72, 101923.

- Wang, Y.; Cao, J.; Tang, M.; Li, G.; Hao, Q.; Fang, Y. Underwater image restoration based on improved dark channel prior. Symp. Nov. Photoelectron. Detect. Technol. Appl. 2021, 11763, 998–1003.

- Yu, J.; Huang, S.; Zhou, S.; Chen, L.; Li, H. The improved dehazing method fusion-based. In Proceedings of the 2019 Chinese Automation Congress, Hangzhou, China, 22–24 November 2019; pp. 4370–4374.

- Zou, Y.; Ma, Y.; Zhuang, L.; Wang, G. Image Haze Removal Algorithm Using a Logarithmic Guide Filtering and Multi-Channel Prior. IEEE Access 2021, 9, 11416–11426.

- Suresh, S.; Rajan, M.R.; Pushparaj, J.; Asha, C.S.; Lal, S.; Reddy, C.S. Dehazing of Satellite Images using Adaptive Black Widow Optimization-based framework. Int. J. Remote Sens. 2021, 42, 5072–5090.

- Sun, S.; Zhao, S.; Zheng, J. Intelligent Site Detection Based on Improved YOLO Algorithm. In Proceedings of the International Conference on Big Data Engineering and Education (BDEE), Guiyang, China, 12–14 August 2021; pp. 165–169.

- Gasparini, F.; Schettini, R. Color correction for digital photographs. In Proceedings of the 12th International Conference on Image Analysis and Processing, Mantova, Italy, 17–19 September 2003; pp. 646–651.

- Gasparini, F.; Schettini, R. Color balancing of digital photos using simple image statistics. Pattern Recognit. 2004, 37, 1201–1217.

- Xu, X.; Cai, Y.; Liu, C.; Jia, K.; Sun, L. Color Cast Detection and Color Correction Methods Based on Image Analysis. Meas. Control Technol. 2008, 27, 10–12+21.

- Zhao, D.; Liu, X.; Sun, Y.; Wu, R.; Hong, J.; Ruan, C. Detection of Underwater Crabs Based on Machine Vision. Trans. Chin. Soc. Agric. Mach. 2019, 50, 151–158.

- Zhao, D.; Cao, S.; Sun, Y.; Qi, H.; Ruan, C. Small-sized Efficient Detector for Underwater Freely Live Crabs Based on Compound Scaling Neural Network. Trans. Chin. Soc. Agric. Mach. 2020, 51, 163–174.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- MacQueen, J. Some Methods for Classification and Analysis of Multivariate Observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 21 June–18 July 1967; pp. 281–297.

- Arthur, D.; Vassilvitskii, S. k-Means++: The Advantages of Careful Seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the 34th AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2019; pp. 12993–13000.

- Zhou, Q.; Qin, J.; Xiang, X.; Tan, Y.; Xiong, N. Algorithm of Helmet Wearing Detection Based on AT-YOLO Deep Mode. Comput. Mater. Contin. 2021, 69, 159–174.

- Bhateja, V.; Yadav, A.; Singh, D.; Chauhan, B. Multi-scale Retinex with Chromacity Preservation (MSRCP)-Based Contrast Enhancement of Microscopy Images. Smart Intell. Comput. Appl. 2022, 2, 313–321.

- Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated Context Aggregation Network for Image Dehazing and Deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision, Waikoloa Village, HI, USA, 7–11 January 2018; pp. 1375–1383.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A Statistical Evaluation of Recent Full Reference Image Quality Assessment Algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

- Yang, M.; Sowmya, A. An Underwater Color Image Quality Evaluation Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Tsai, D.; Lee, Y.; Matsuyama, E. Information Entropy Measure for Evaluation of Image Quality. J. Digit. Imaging 2008, 21, 338–347.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).