IDP-Head: An Interactive Dual-Perception Architecture for Organoid Detection in Mouse Microscopic Images

Abstract

1. Introduction

2. Related Work

2.1. Application of Object Detection in Medical Image Analysis

2.2. Advanced Visual Perception Mechanisms and the Research Gap in Detection Head Design

3. Materials and Methods

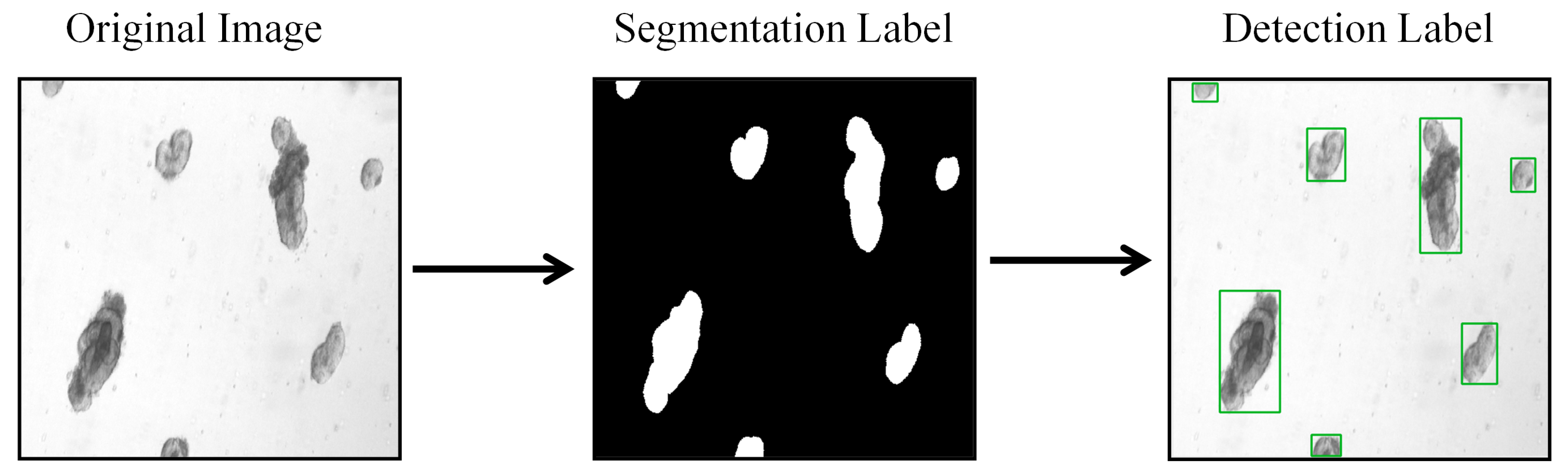

3.1. Datasets

3.2. Description of Compared Models

3.3. Evaluation Metrics

3.4. Implementation Details

3.5. Overview of Methods

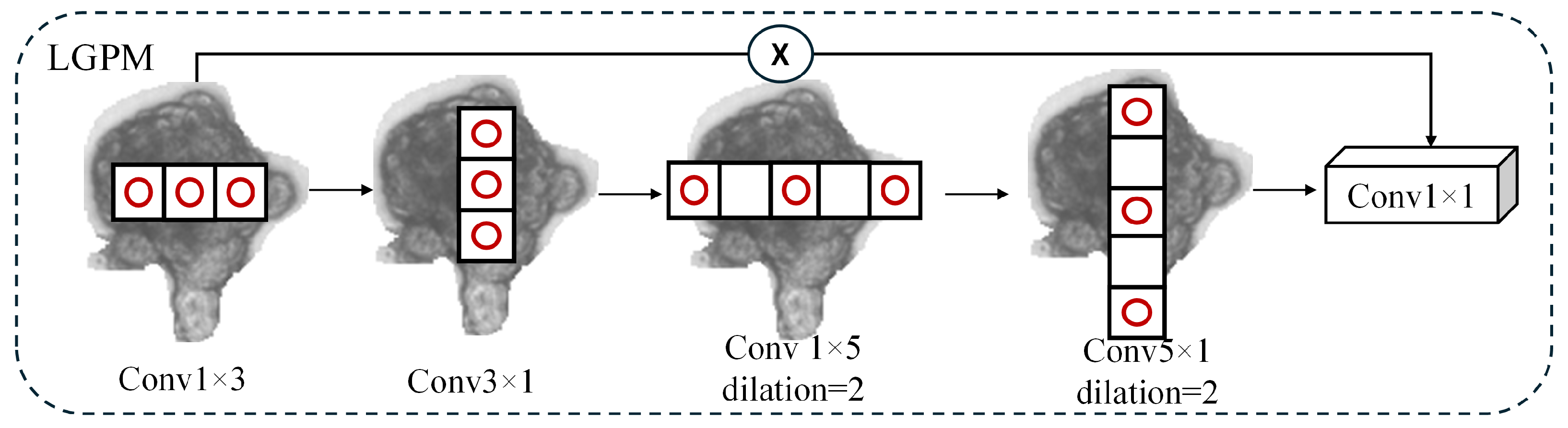

3.6. Interactive Dual-Perception Head

4. Experimental Results

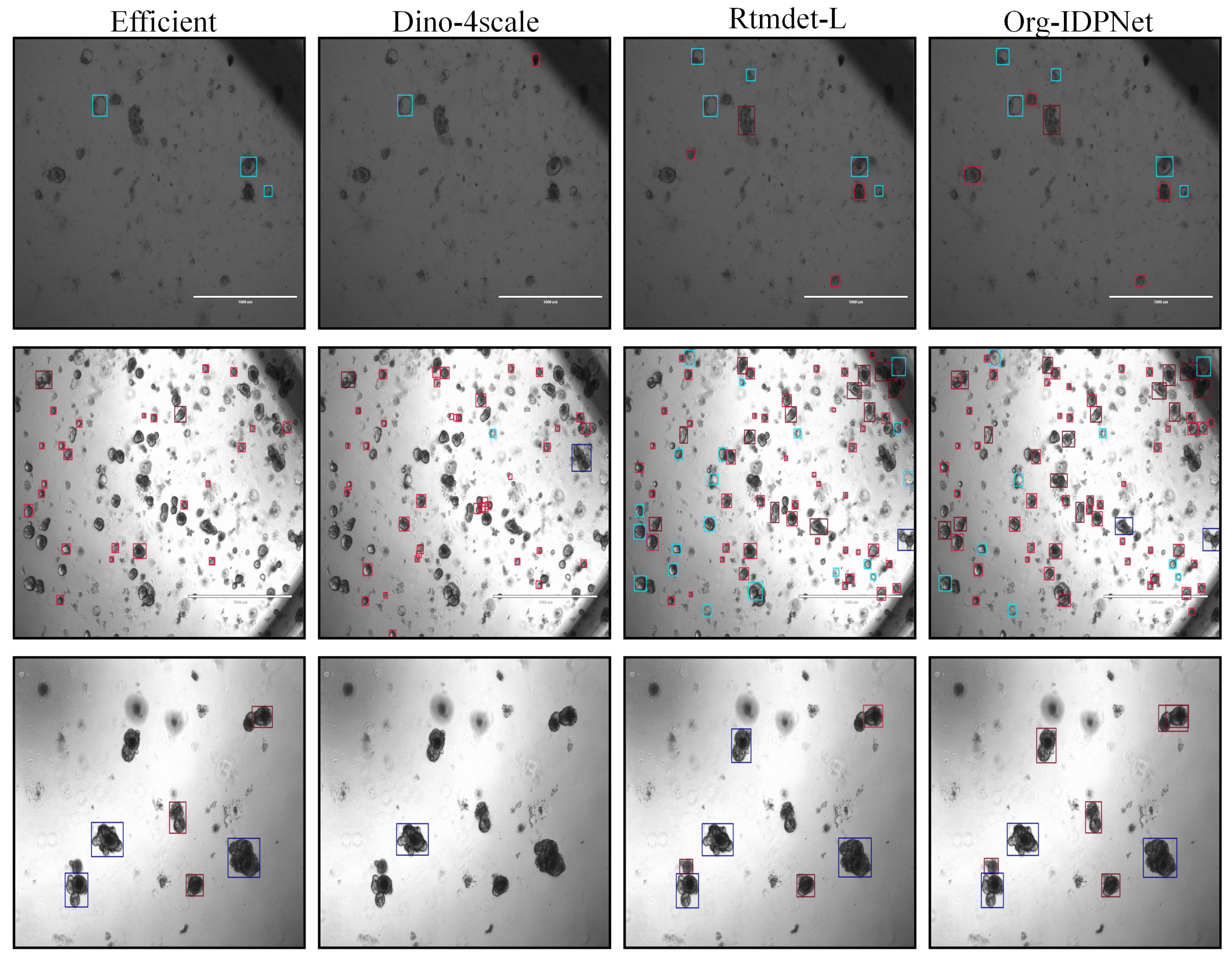

4.1. Comparative Experiments on MouseOrg

4.2. Comparative Experiments on TelluOrg

4.3. Ablation Study

5. Discussion

5.1. Confusion Matrix Analysis

5.2. Precision Analysis

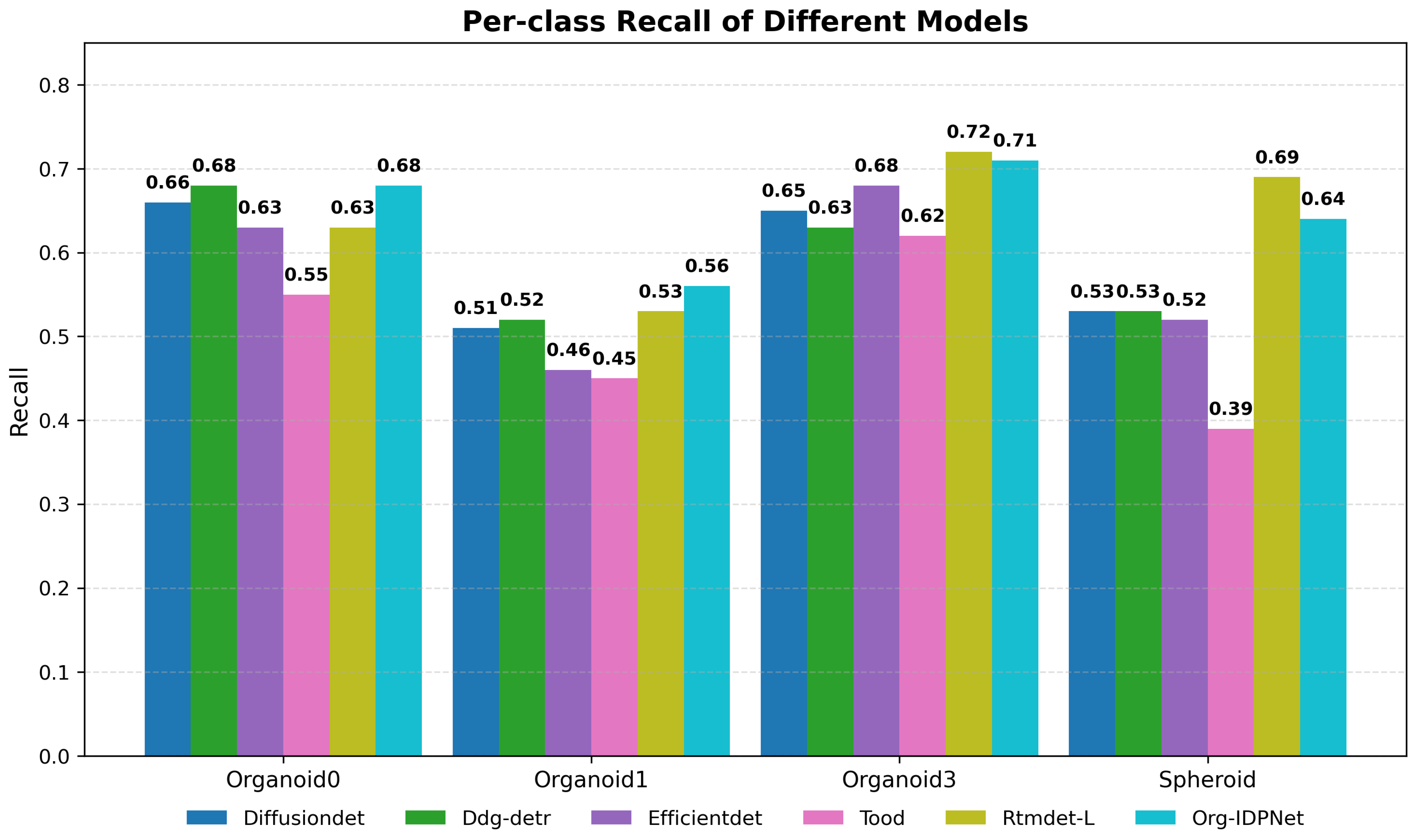

5.3. Recall Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lee, M.J.; Lee, J.; Ha, J.; Kim, G.; Kim, H.-J.; Lee, S.; Koo, B.-K.; Park, Y.K. Long-term three-dimensional high-resolution imaging of live unlabeled small intestinal organoids via low-coherence holotomography. Exp. Mol. Med. 2024, 56, 2162–2170.

- Ewoldt, J.K.; DePalma, S.J.; Jewett, M.E.; Karakan, M.Ç.; Lin, Y.-M.; Mir Hashemian, P.; Gao, X.; Lou, L.; McLellan, M.A.; Tabares, J.; et al. Induced pluripotent stem cell-derived cardiomyocyte in vitro models: Benchmarking progress and ongoing challenges. Nat. Methods 2025, 22, 24–40.

- Shoji, J.-Y.; Davis, R.P.; Mummery, C.L.; Krauss, S. Global literature analysis of organoid and organ-on-chip research. Adv. Healthc. Mater. 2024, 13, 2301067.

- Rachmian, N.; Medina, S.; Cherqui, U.; Akiva, H.; Deitch, D.; Edilbi, D.; Croese, T.; Salame, T.M.; Ramos, J.M.P.; Cahalon, L.; et al. Identification of senescent, TREM2-expressing microglia in aging and Alzheimer’s disease model mouse brain. Nat. Neurosci. 2024, 27, 1116–1124.

- Merrill, N.M.; Kaffenberger, S.D.; Bao, L.; Vandecan, N.; Goo, L.; Apfel, A.; Cheng, X.; Qin, Z.; Liu, C.-J.; Bankhead, A.; et al. Integrative drug screening and multiomic characterization of patient-derived bladder cancer organoids reveal novel molecular correlates of gemcitabine response. Eur. Urol. 2024, 86, 434–444.

- Jain, A.; Gut, G.; Sanchis-Calleja, F.; Tschannen, R.; He, Z.; Luginbühl, N.; Zenk, F.; Chrisnandy, A.; Streib, S.; Harmel, C.; et al. Morphodynamics of human early brain organoid development. Nature 2025, 644, 1010–1019.

- Papargyriou, A.; Najajreh, M.; Cook, D.P.; Maurer, C.H.; Bärthel, S.; Messal, H.A.; Ravichandran, S.K.; Richter, T.; Knolle, M.; Metzler, T.; et al. Heterogeneity-driven phenotypic plasticity and treatment response in branched-organoid models of pancreatic ductal adenocarcinoma. Nat. Biomed. Eng. 2024, 9, 836–864.

- Saad, M.M.; O’Reilly, R.; Rehmani, M.H. A survey on training challenges in generative adversarial networks for biomedical image analysis. Artif. Intell. Rev. 2024, 57, 19.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Alif, M.A.R.; Hussain, M. YOLOv1 to YOLOv10: A comprehensive review of YOLO variants and their application in the agricultural domain. arXiv 2024, arXiv:2406.10139.

- Wang, Y.; Deng, Y.; Zheng, Y.; Chattopadhyay, P.; Wang, L. Vision transformers for image classification: A comparative survey. Technologies 2025, 13, 32.

- Quan, Y.; Zhang, D.; Zhang, L.; Tang, J. Centralized feature pyramid for object detection. IEEE Trans. Image Process. 2023, 32, 4341–4354.

- Ji, S.; Zhang, H.; Zhang, J.; Fei, C.; Wang, X.; Liu, J.; Zhang, P. A three-stage model for infrared small target detection with spatial and semantic feature fusion. Expert Syst. Appl. 2026, 295, 128776.

- Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. Rtmdet: An empirical study of designing real-time object detectors. arXiv 2022, arXiv:2212.07784.

- Xiao, T.; Yang, L.; Zhang, D.; Cui, T.; Zhang, X.; Deng, Y.; Li, H.; Wang, H. Early detection of nicosulfuron toxicity and physiological prediction in maize using multi-branch deep learning models and hyperspectral imaging. J. Hazard. Mater. 2024, 474, 134723.

- Xu, C.; Zhang, T.; Zhang, D.; Zhang, D.; Han, J. Deep generative adversarial reinforcement learning for semi-supervised segmentation of low-contrast and small objects in medical images. IEEE Trans. Med. Imaging 2024, 43, 3072–3084.

- Archana, R.; Jeevaraj, P.S.E. Deep learning models for digital image processing: A review. Artif. Intell. Rev. 2024, 57, 11.

- Peng, L.; Lu, Z.; Lei, T.; Jiang, P. Dual-structure elements morphological filtering and local z-score normalization for infrared small target detection against heavy clouds. Remote Sens. 2024, 16, 2343.

- Sun, Y.; Sun, Z.; Chen, W. The evolution of object detection methods. Eng. Appl. Artif. Intell. 2024, 133, 108458.

- Wang, H.; Zhang, D.; Feng, J.; Cascone, L.; Nappi, M.; Wan, S. A multi-objective segmentation method for chest X-rays based on collaborative learning from multiple partially annotated datasets. Inf. Fusion 2024, 102, 102016.

- Moglia, A.; Cavicchioli, M.; Mainardi, L.; Cerveri, P. Deep learning for pancreas segmentation on computed tomography: A systematic review. Artif. Intell. Rev. 2025, 58, 220.

- Xue, F.; Pang, L.; Huang, X.; Ma, R. A lightweight convolutional network based on RepLKnet and Ghost convolution. In Proceedings of the 2024 4th International Conference on Electronic Information Engineering and Computer Communication (EIECC), Wuhan, China, 27–29 December 2024; pp. 1571–1576.

- Fu, C.; Du, B.; Zhang, L. Resc-net: Hyperspectral image classification based on attention-enhanced residual module and spatial-channel attention. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Crist, W. Debunking the diffusion of senet. Board Game Stud. J. 2021, 15, 13–27.

- Song, X.; Fang, X.; Meng, X.; Fang, X.; Lv, M.; Zhuo, Y. Real-time semantic segmentation network with an enhanced backbone based on Atrous spatial pyramid pooling module. Eng. Appl. Artif. Intell. 2024, 133, 107988.

- Zhao, Z.; Chen, X.; Dowbaj, A.M.; Sljukic, A.; Bratlie, K.; Lin, L.; Fong, E.L.S.; Balachander, G.M.; Chen, Z.; Soragni, A.; et al. Organoids. Nat. Rev. Methods Prim. 2022, 2, 94.

- Domènech-Moreno, E.; Brandt, A.; Lemmetyinen, T.T.; Wartiovaara, L.; Mäkelä, T.P.; Ollila, S. Tellu–an object-detector algorithm for automatic classification of intestinal organoids. Dis. Model. Mech. 2023, 16, dmm049756.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open MMLab detection toolbox and benchmark. arXiv 2019, arXiv:1906.07155.

- Chen, Q.; Wang, Y.; Yang, T.; Zhang, X.; Cheng, J.; Sun, J. You only look one-level feature. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13039–13048.

- Ahmed, A.; Tangri, P.; Panda, A.; Ramani, D.; Karmakar, S. Vfnet: A convolutional architecture for accent classification. In Proceedings of the 2019 IEEE 16th India Council International Conference (INDICON), Rajkot, India, 13–15 December 2019; pp. 1–4.

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. Tood: Task-aligned one-stage object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021; pp. 3490–3499.

- Sun, P.; Zhang, R.; Jiang, Y.; Kong, T.; Xu, C.; Zhan, W.; Tomizuka, M.; Li, L.; Yuan, Z.; Wang, C.; et al. Sparse R-CNN: End-to-end object detection with learnable proposals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 14454–14463.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.-Y. Dino: Detr with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

- Chen, S.; Sun, P.; Song, Y.; Luo, P. DiffusionDet: Diffusion model for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 19830–19843.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Zhang, S.; Wang, X.; Wang, J.; Pang, J.; Lyu, C.; Zhang, W.; Luo, P.; Chen, K. Dense distinct query for end-to-end object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7329–7338.

- Chen, Z.; Yang, C.; Chang, J.; Zhao, F.; Zha, Z.-J.; Wu, F. DDOD: Dive deeper into the disentanglement of object detector. IEEE Trans. Multimed. 2023, 26, 284–298.

- Zong, Z.; Song, G.; Liu, Y. DETRs with collaborative hybrid assignments training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 6748–6758.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578.

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Wang, J.; Li, F.; He, L. A unified framework for adversarial patch attacks against visual 3D object detection in autonomous driving. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4949–4962.

- Zhou, H.; Chen, H.; Yu, B.; Pang, S.; Cong, X.; Cong, L. An end-to-end weakly supervised learning framework for cancer subtype classification using histopathological slides. Expert Syst. Appl. 2024, 237, 121379.

| Methods | mAP | mAP50 | mAP75 | mAP-s | mAP-m | mAP-l | Parameters | FLOPs |
|---|---|---|---|---|---|---|---|---|
| YOLOF [29] | 0.285 | 0.634 | 0.209 | 0.128 | 0.405 | 0.403 | 42.339 M | 61.222 G |
| VFNet [30] | 0.366 | 0.669 | 0.368 | 0.359 | 0.376 | 0.410 | 32.709 M | 118 G |
| TOOD [31] | 0.538 | 0.861 | 0.607 | 0.494 | 0.585 | 0.525 | 32.018 M | 123 G |
| Sparse-RCNN [32] | 0.134 | 0.302 | 0.106 | 0.117 | 0.174 | 0.089 | 106 M | 97.63 G |
| Faster-RCNN | 0.179 | 0.432 | 0.104 | 0.103 | 0.267 | 0.129 | 41.348 M | 134 G |
| EfficientDet [33] | 0.607 | 0.862 | 0.671 | 0.454 | 0.717 | 0.688 | 3.828 M | 2.303 G |
| DINO-4scale [34] | 0.487 | 0.723 | 0.609 | 0.455 | 0.586 | 0.590 | 47.54 M | 179 G |
| DiffusionDet [35] | 0.704 | 0.887 | 0.784 | 0.601 | 0.800 | 0.743 | – | – |
| Deformable-DETR [36] | 0.425 | 0.762 | 0.437 | 0.317 | 0.519 | 0.470 | 40.099 M | 123 G |
| DDQ-DETR-4scale [37] | 0.412 | 0.724 | 0.457 | 0.367 | 0.498 | 0.426 | – | – |
| DDOD [38] | 0.473 | 0.846 | 0.504 | 0.462 | 0.498 | 0.421 | 32.196 M | 111 G |
| Co-DETR-5scale [39] | 0.745 | 0.917 | 0.857 | 0.641 | 0.853 | 0.810 | 64.455 M | – |
| CenterNet [40] | 0.391 | 0.745 | 0.367 | 0.420 | 0.384 | 0.324 | 32.111 M | 123 G |
| YOLO V11 [41] | 0.743 | 0.934 | 0.851 | 0.618 | 0.838 | 0.796 | 2.58 M | 6.3 G |
| RTMDet-L | 0.670 | 0.894 | 0.785 | 0.557 | 0.767 | 0.765 | 52.255 M | 79.951 G |
| Org-IDPNet (Ours) | 0.767 | 0.941 | 0.874 | 0.638 | 0.855 | 0.849 | 5.548 M | 8.112 G |
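
One way to read the accuracy and model-size columns together is a Pareto comparison. The sketch below is only illustrative and is not part of the authors' evaluation: it copies the mAP and Parameters values from the comparison table above (whose Org-IDPNet row matches the MouseOrg column of the ablation table) and keeps the models that no other model beats on both higher mAP and fewer parameters. The helper name `pareto_front` is hypothetical, and models without a reported parameter count are left out.

```python
# (mAP, parameters in millions), copied from the comparison table above.
# DiffusionDet and DDQ-DETR-4scale are omitted: no parameter count is reported.
models = {
    "YOLOF": (0.285, 42.339),
    "VFNet": (0.366, 32.709),
    "TOOD": (0.538, 32.018),
    "Sparse-RCNN": (0.134, 106.0),
    "Faster-RCNN": (0.179, 41.348),
    "EfficientDet": (0.607, 3.828),
    "DINO-4scale": (0.487, 47.54),
    "Deformable-DETR": (0.425, 40.099),
    "DDOD": (0.473, 32.196),
    "Co-DETR-5scale": (0.745, 64.455),
    "CenterNet": (0.391, 32.111),
    "YOLO V11": (0.743, 2.58),
    "RTMDet-L": (0.670, 52.255),
    "Org-IDPNet (Ours)": (0.767, 5.548),
}


def pareto_front(entries):
    """Return model names not dominated on (higher mAP, fewer parameters)."""
    front = []
    for name, (m, p) in entries.items():
        dominated = any(
            (m2 >= m and p2 <= p) and (m2 > m or p2 < p)
            for other, (m2, p2) in entries.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    # Sort the survivors from smallest to largest model.
    return sorted(front, key=lambda n: entries[n][1])


front = pareto_front(models)
print(front)
```

Under these table values only YOLO V11 and Org-IDPNet remain on the front: every other listed model has both more parameters and lower mAP than at least one of the two.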

| Methods | mAP | mAP50 | mAP75 | mAP-s | mAP-m | mAP-l | Parameters | FLOPs |
|---|---|---|---|---|---|---|---|---|
| YOLOF | 0.124 | 0.267 | 0.099 | 0.009 | 0.087 | 0.151 | 42.409 M | 83.32 G |
| VFNet | 0.105 | 0.214 | 0.091 | 0.028 | 0.087 | 0.083 | 32.716 M | 161 G |
| TOOD | 0.394 | 0.605 | 0.441 | 0.153 | 0.405 | 0.346 | 32.025 M | 168 G |
| Sparse-RCNN | 0.106 | 0.220 | 0.091 | 0.034 | 0.090 | 0.090 | 106 M | 130 G |
| Faster-RCNN | 0.109 | 0.256 | 0.074 | 0.039 | 0.095 | 0.137 | 41.364 M | 178 G |
| EfficientDet | 0.479 | 0.703 | 0.534 | 0.131 | 0.471 | 0.674 | 3.83 M | 2.313 G |
| DINO-4scale | 0.367 | 0.530 | 0.425 | 0.183 | 0.374 | 0.435 | 47.546 M | 235 G |
| DiffusionDet | 0.489 | 0.699 | 0.553 | 0.184 | 0.501 | 0.494 | – | – |
| Deformable-DETR | 0.322 | 0.566 | 0.325 | 0.110 | 0.312 | 0.449 | 40.099 M | 165 G |
| DDQ-DETR-4scale | 0.519 | 0.718 | 0.599 | 0.196 | 0.530 | 0.590 | – | – |
| DDOD | 0.255 | 0.432 | 0.280 | 0.099 | 0.232 | 0.229 | 32.203 M | 151 G |
| Co-DINO-5scale | 0.606 | 0.788 | 0.704 | 0.258 | 0.615 | 0.666 | 64.483 M | – |
| CenterNet | 0.202 | 0.376 | 0.197 | 0.077 | 0.206 | 0.170 | 32.118 M | 167 G |
| YOLO V11 | 0.595 | 0.785 | 0.689 | 0.224 | 0.610 | 0.592 | 2.583 M | 6.3 G |
| RTMDet-L | 0.637 | 0.833 | 0.737 | 0.253 | 0.645 | 0.659 | 52.257 M | 79.958 G |
| Org-IDPNet (Ours) | 0.667 | 0.860 | 0.775 | 0.255 | 0.684 | 0.736 | 5.549 M | 8.115 G |
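
To see where the proposed model leads and where it does not, the margin over the strongest baseline can be computed per metric. This is a minimal sketch using only the numbers in the table above (whose Org-IDPNet row matches the TelluOrg column of the ablation table); the function name `margins` is hypothetical and the calculation is not taken from the paper.

```python
METRICS = ["mAP", "mAP50", "mAP75", "mAP-s", "mAP-m", "mAP-l"]

# Baseline rows copied from the table above, in METRICS order.
baselines = {
    "YOLOF": [0.124, 0.267, 0.099, 0.009, 0.087, 0.151],
    "VFNet": [0.105, 0.214, 0.091, 0.028, 0.087, 0.083],
    "TOOD": [0.394, 0.605, 0.441, 0.153, 0.405, 0.346],
    "Sparse-RCNN": [0.106, 0.220, 0.091, 0.034, 0.090, 0.090],
    "Faster-RCNN": [0.109, 0.256, 0.074, 0.039, 0.095, 0.137],
    "EfficientDet": [0.479, 0.703, 0.534, 0.131, 0.471, 0.674],
    "DINO-4scale": [0.367, 0.530, 0.425, 0.183, 0.374, 0.435],
    "DiffusionDet": [0.489, 0.699, 0.553, 0.184, 0.501, 0.494],
    "Deformable-DETR": [0.322, 0.566, 0.325, 0.110, 0.312, 0.449],
    "DDQ-DETR-4scale": [0.519, 0.718, 0.599, 0.196, 0.530, 0.590],
    "DDOD": [0.255, 0.432, 0.280, 0.099, 0.232, 0.229],
    "Co-DINO-5scale": [0.606, 0.788, 0.704, 0.258, 0.615, 0.666],
    "CenterNet": [0.202, 0.376, 0.197, 0.077, 0.206, 0.170],
    "YOLO V11": [0.595, 0.785, 0.689, 0.224, 0.610, 0.592],
    "RTMDet-L": [0.637, 0.833, 0.737, 0.253, 0.645, 0.659],
}
ours = [0.667, 0.860, 0.775, 0.255, 0.684, 0.736]  # Org-IDPNet row


def margins(baseline_rows, our_row):
    """Map each metric to (best baseline, our score minus that baseline's score)."""
    out = {}
    for i, metric in enumerate(METRICS):
        best = max(baseline_rows, key=lambda name: baseline_rows[name][i])
        out[metric] = (best, round(our_row[i] - baseline_rows[best][i], 3))
    return out


result = margins(baselines, ours)
for metric, (name, delta) in result.items():
    print(f"{metric:6s} best baseline: {name:16s} margin: {delta:+.3f}")
```

With these values Org-IDPNet is ahead on every metric except mAP-s, where Co-DINO-5scale is 0.003 higher; the largest margin is on mAP-l, over EfficientDet.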

| Method | Category | mAP | mAP50 | mAP75 |
|---|---|---|---|---|
| EfficientDet | Organoid0 | 0.360 | 0.632 | 0.358 |
| EfficientDet | Organoid1 | 0.405 | 0.612 | 0.451 |
| EfficientDet | Organoid3 | 0.601 | 0.847 | 0.705 |
| EfficientDet | Spheroid | 0.549 | 0.722 | 0.624 |
| DINO-4scale | Organoid0 | 0.394 | 0.589 | 0.476 |
| DINO-4scale | Organoid1 | 0.307 | 0.446 | 0.355 |
| DINO-4scale | Organoid3 | 0.405 | 0.598 | 0.428 |
| DINO-4scale | Spheroid | 0.370 | 0.486 | 0.438 |
| RTMDet-L | Organoid0 | 0.566 | 0.811 | 0.655 |
| RTMDet-L | Organoid1 | 0.627 | 0.827 | 0.725 |
| RTMDet-L | Organoid3 | 0.702 | 0.880 | 0.806 |
| RTMDet-L | Spheroid | 0.651 | 0.815 | 0.762 |
| Org-IDPNet (Ours) | Organoid0 | 0.612 | 0.847 | 0.716 |
| Org-IDPNet (Ours) | Organoid1 | 0.644 | 0.847 | 0.744 |
| Org-IDPNet (Ours) | Organoid3 | 0.714 | 0.895 | 0.833 |
| Org-IDPNet (Ours) | Spheroid | 0.700 | 0.852 | 0.806 |
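
The per-category table does not name its dataset in the surviving text. A quick consistency check, sketched below, averages the four per-class mAP values for each method and compares the result with the overall mAP that the same method has in the comparison table whose Org-IDPNet row reads 0.667 (the TelluOrg value in the ablation table). Treating overall mAP as the unweighted class mean is an assumption (it is how COCO-style mAP is usually defined), and the variable names are illustrative.

```python
# Per-class mAP in the order Organoid0, Organoid1, Organoid3, Spheroid.
per_class = {
    "EfficientDet": [0.360, 0.405, 0.601, 0.549],
    "DINO-4scale": [0.394, 0.307, 0.405, 0.370],
    "RTMDet-L": [0.566, 0.627, 0.702, 0.651],
    "Org-IDPNet (Ours)": [0.612, 0.644, 0.714, 0.700],
}

# Overall mAP reported for the same methods in the 0.667 comparison table.
overall = {
    "EfficientDet": 0.479,
    "DINO-4scale": 0.367,
    "RTMDet-L": 0.637,
    "Org-IDPNet (Ours)": 0.667,
}


def class_mean(values):
    """Unweighted mean over classes (assumed definition of overall mAP)."""
    return sum(values) / len(values)


# Difference between the class mean and the reported overall mAP.
gaps = {name: class_mean(vals) - overall[name] for name, vals in per_class.items()}

for name, gap in gaps.items():
    print(f"{name:18s} class mean {class_mean(per_class[name]):.4f}  "
          f"reported {overall[name]:.3f}  gap {gap:+.4f}")
```

All four gaps stay within about 0.002, which is compatible with rounding, so the per-category results appear to belong to the TelluOrg evaluation; this is an inference from the numbers, not a statement from the source.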

| Method | MouseOrg mAP | MouseOrg mAP50 | MouseOrg mAP75 | TelluOrg mAP | TelluOrg mAP50 | TelluOrg mAP75 |
|---|---|---|---|---|---|---|
| base | 0.734 | 0.920 | 0.847 | 0.642 | 0.836 | 0.744 |
| base + LGPM | 0.753 | 0.933 | 0.865 | 0.655 | 0.850 | 0.758 |
| base + PCSM | 0.756 | 0.931 | 0.862 | 0.650 | 0.846 | 0.762 |
| base + LGPM + PCSM | 0.767 | 0.941 | 0.874 | 0.667 | 0.860 | 0.775 |
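
The ablation rows can be turned into absolute mAP gains over the base configuration, which makes the contribution of each module easier to compare. The sketch below uses only the mAP columns of the ablation table; `gains_over_base` is a hypothetical helper, not code from the paper.

```python
# Overall mAP per configuration, copied from the ablation table above.
ablation = {
    "base": {"MouseOrg": 0.734, "TelluOrg": 0.642},
    "base + LGPM": {"MouseOrg": 0.753, "TelluOrg": 0.655},
    "base + PCSM": {"MouseOrg": 0.756, "TelluOrg": 0.650},
    "base + LGPM + PCSM": {"MouseOrg": 0.767, "TelluOrg": 0.667},
}


def gains_over_base(table):
    """Absolute mAP gain of every non-base configuration, per dataset."""
    base = table["base"]
    return {
        method: {ds: round(score - base[ds], 3) for ds, score in row.items()}
        for method, row in table.items()
        if method != "base"
    }


gains = gains_over_base(ablation)
for method, row in gains.items():
    print(f"{method:20s} MouseOrg {row['MouseOrg']:+.3f}  TelluOrg {row['TelluOrg']:+.3f}")
```

On these numbers the combined head gains 0.033 mAP on MouseOrg and 0.025 on TelluOrg. The two single-module gains sum to 0.041 on MouseOrg (more than the combined gain) and to 0.021 on TelluOrg (less than the combined gain), so the modules do not combine additively in a consistent direction across the two datasets.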

| Method | Organoid0 | Organoid1 | Organoid3 | Spheroid |
|---|---|---|---|---|
| TOOD | 0.505 | 0.400 | 0.483 | 0.593 |
| RTMDet-L | 0.484 | 0.458 | 0.456 | 0.351 |
| Org-IDPNet | 0.598 | 0.507 | 0.551 | 0.549 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, Y.; Fan, C.; Zhou, X.; Wei, P. IDP-Head: An Interactive Dual-Perception Architecture for Organoid Detection in Mouse Microscopic Images. Biomimetics 2025, 10, 614. https://doi.org/10.3390/biomimetics10090614