Abstract

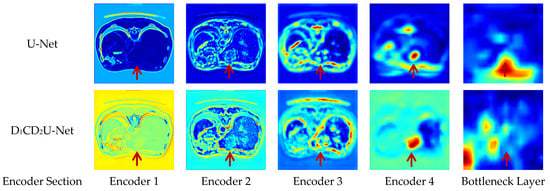

The highly heterogeneous and irregular morphology of liver tumors presents considerable challenges for automated segmentation. To better capture complex tumor structures, this study proposes a liver tumor segmentation framework based on multi-scale deformable feature fusion and global context modeling. The method incorporates three key innovations: (1) a Deformable Large Kernel Attention (D-LKA) mechanism in the encoder to enhance adaptability to irregular tumor features, combining a large receptive field with deformable sensitivity to precisely extract tumor boundaries; (2) a Context Extraction (CE) module in the bottleneck layer to strengthen global semantic modeling and compensate for limited capacity in capturing contextual dependencies; and (3) a Dual Cross Attention (DCA) mechanism to replace traditional skip connections, enabling deep cross-scale and cross-semantic feature fusion, thereby improving feature consistency and expressiveness during decoding. The proposed framework was trained and validated on a combined LiTS and MSD Task08 dataset and further evaluated on the independent 3D-IRCADb01 dataset. Experimental results show that it surpasses several state-of-the-art segmentation models in Intersection over Union (IoU) and other metrics, achieving superior segmentation accuracy and generalization performance. Feature visualizations at both encoding and decoding stages provide intuitive insights into the model’s internal processing of tumor recognition and boundary delineation, enhancing interpretability and clinical reliability. Overall, this approach presents a novel and practical solution for robust liver tumor segmentation, demonstrating strong potential for clinical application and real-world deployment.

1. Introduction

Liver tumors are common hepatic lesions and pose significant clinical challenges. Based on their biological behavior, they are classified as either benign or malignant, with the latter referred to as liver cancer. Liver cancer is characterized by high malignancy, rapid proliferation, and a strong propensity for distant metastasis. In recent years, the incidence of liver cancer has continued to rise, which makes it one of the most pressing global public health challenges [1,2]. However, the early clinical symptoms of liver cancer are often nonspecific, and the small size of early lesions makes them difficult to detect accurately using computed tomography (CT). As a result, most patients are diagnosed at intermediate or advanced stages, significantly increasing treatment complexity and leading to uncertain prognoses [3,4].

Against this backdrop, there is an urgent need to develop more efficient and accurate diagnostic and therapeutic strategies to improve treatment outcomes and patient survival. Surgical resection remains the primary clinical approach for treating liver cancer, particularly demonstrating favorable outcomes for early-stage lesions. However, in cases where tumors are located in anatomically complex regions or exhibit poorly defined boundaries, precise intraoperative localization of the lesion becomes more challenging, increasing the technical complexity of surgery and the risk of postoperative complications [5]. To address these challenges, medical image analysis techniques have played an increasingly critical role in the auxiliary diagnosis and therapeutic planning of liver tumors. Among these, liver tumor segmentation serves as a central component of intelligent image processing, aiming to accurately delineate tumor boundaries and their spatial distribution from CT images, which is essential for enabling personalized and precise treatment. Accurate lesion segmentation via automated or semi-automated approaches not only facilitates the formulation of appropriate preoperative resection plans but also provides real-time intraoperative guidance, thereby enhancing the safety and completeness of surgical procedures [6,7].

Although significant progress has been made in liver tumor segmentation based on CT images in recent years, numerous challenges persist in clinical practice. A major challenge stems from the significant morphological heterogeneity of liver tumors, which exhibit considerable variations in size, shape, and spatial location, along with notable inter-patient variability. These morphological variations often result in irregular tumor contours and indistinct boundaries, with limited contrast between tumors and surrounding healthy tissues, thereby significantly complicating accurate segmentation [8,9]. Furthermore, liver tumors undergo spatiotemporal progression during disease development, such as continuous growth or morphological changes. These dynamic patterns not only increase the complexity of the segmentation task but also place greater demands on model stability and generalization across different pathological stages [10]. Moreover, CT image acquisition is often affected by noise, patient motion artifacts, and complex anatomical structures, which degrade image quality, blur boundaries, and reduce tissue contrast, thereby exacerbating the difficulty of distinguishing tumors from adjacent tissues [11]. In addition, accurate tumor annotation critically relies on clinical expertise. However, the highly variable tumor morphology introduces subjective variability in interpretation, and the annotation process itself is time-consuming and labor-intensive, which hinders the construction of large-scale, high-quality labeled datasets and limits further improvement of segmentation model performance [12].

In the early stages, liver tumor image segmentation primarily relied on manual delineation by clinicians, a time-consuming process that was prone to operator-dependent variability, resulting in a heightened risk of misdiagnosis or missed lesions and overall low efficiency [13]. With the rapid advancement of medical imaging technologies, liver tumor segmentation methods have undergone a significant transition from traditional techniques to automated approaches. Traditional segmentation methods [14,15,16,17,18,19,20], such as active contour models, clustering analysis, edge detection, and level set methods, have shown some success under specific conditions. However, these approaches heavily depend on manually defined parameters and handcrafted features, making them inadequate for handling the complex and heterogeneous morphology of liver tumors. Moreover, liver tumor segmentation often requires the integration of multiple traditional techniques and relies on intricate preprocessing and postprocessing steps, resulting in cumbersome workflows that fall short of clinical demands for efficiency and robustness.

With the rapid development of deep learning, convolutional neural network (CNN)-based segmentation methods have become mainstream in research, significantly advancing automated liver tumor segmentation. Inspired by the hierarchical information processing mechanisms of the biological visual system, the receptive field design and local connectivity of convolutional neural networks mimic the way simple cells in the visual cortex extract local features, thereby providing a biomimetic foundation for image segmentation tasks. Although CNNs have achieved remarkable progress in liver tumor segmentation, the downsampling operations involved often lead to the loss of important features, thereby compromising segmentation accuracy. To address the issue of feature loss due to downsampling, Shelhamer E et al. [21] proposed the Fully Convolutional Network (FCN), which mitigates this loss through feature summation and performs pixel-wise classification to enable end-to-end segmentation. Building on this framework, Sun C et al. [22] employed the FCN architecture for liver tumor segmentation. Christ P F et al. [23] introduced a cascaded FCN architecture, where the first FCN extracts the liver region, which is then used as input to a second FCN for tumor segmentation. Zheng S et al. [24] further enhanced the FCN by integrating deformable modeling based on Non-negative Matrix Factorization (NMF), which was used to refine tumor boundary contours. However, due to FCN’s limitations in feature fusion, it struggles to preserve spatial details adequately, prompting further exploration of more advanced network architectures to improve segmentation performance.

To address the limitations of FCNs in preserving spatial information, Ronneberger O et al. [25] proposed the U-shaped network, U-Net, which employs a symmetric encoder–decoder architecture and skip connections to integrate local details with global contextual information. U-Net has since been widely adopted in medical image segmentation. This architectural design draws inspiration from the cooperative functioning of feedforward and feedback pathways in the biological nervous system. The symmetric encoder–decoder structure emulates the hierarchical processing of the visual pathway, while the skip connections mimic the shortcut connections in the brain, effectively preserving multi-scale spatial features. For liver tumor segmentation, various modifications have been made to the original U-Net to enhance its capability in representing complex lesion regions. Sahli H et al. [26] applied U-Net to liver tumor segmentation and compared its performance with SegNet, demonstrating U-Net’s superior ability to handle tumor regions. Ayalew Y A et al. [27] optimized the U-Net architecture by modifying the number of filters and network depth to improve segmentation performance in liver tumor regions. With the introduction of residual networks (ResNet) and densely connected networks (DenseNet), researchers began integrating these architectures with U-Net to enhance feature extraction and gradient propagation, thereby improving segmentation accuracy in medical imaging. Seo H et al. [28] proposed an improved U-Net with residual paths for liver tumor segmentation. This architecture incorporates residual paths with deconvolution and activation modules into skip connections and adds convolutional layers in the decoder to enhance fine-grained feature fusion. Sabir M W et al. [29] introduced the ResU-Net, which embeds residual blocks into the U-Net encoder to improve the modeling capacity for tumor-specific features. Li X et al. [30] developed a hybrid densely connected U-Net that combines 2D and 3D DenseUNet architectures to extract intra-slice and inter-slice tumor features, which are then integrated through a feature fusion module to produce the final segmentation output. Despite the strong performance of U-Net and its variants in improving segmentation accuracy, challenges remain in the flexible fusion of multi-scale features and the effective integration of deep semantic information.

To further address the limitations of the traditional U-Net in deep feature integration and cross-layer information flow, Zhou Z et al. [31] proposed the U-Net++ architecture. This architecture redesigns the skip connections to more flexibly fuse encoder features while alleviating training difficulties associated with increased network depth. This improvement is inspired by the cross-level interconnections of biological neural networks, akin to the dense lateral connections among different cortical regions that enable information integration and complementation, thereby enhancing the model’s capacity for multi-scale feature fusion. Based on this improvement, Wang J et al. [32] applied U-Net++ to liver tumor segmentation using a two-stage strategy: first segmenting the liver region and then reusing the resulting liver mask as input for precise tumor segmentation. Peng Q et al. [33] proposed FLAS-UNet++ for liver tumor segmentation, which builds upon U-Net++ by introducing Atrous Spatial Pyramid Pooling (ASPP) in the fourth and fifth encoding layers to enhance boundary learning. Additionally, Focal Loss was incorporated to focus the model on tumor edges and address class imbalance. Although U-Net++ improves feature fusion, it still faces challenges in capturing complex tumor characteristics, highlighting the need for more refined mechanisms to enhance the network’s sensitivity to critical regions.

To overcome the limitations in feature representation of existing networks, researchers have explored the integration of attention mechanisms into U-Net and its variants. By embedding attention modules into the encoder, decoder, and skip connections, these models aim to enhance responsiveness to tumor regions. This development aligns with the selective attention mechanisms of biological cognitive systems, emulating the neural regulation of the human visual system that enhances the processing of relevant information while suppressing irrelevant signals. Li H et al. [34] proposed a modified convolutional attention mechanism by incorporating attention modules into the skip connections, thereby improving sensitivity to liver tumor regions. Li J et al. [35] developed an Efficient Channel Attention-based Res-Unet++ for liver tumor segmentation, where the ECA-res module replaces conventional convolution layers in the encoder to enhance feature extraction and alleviate network degradation in deep architectures. Kushnure D T et al. [36] introduced HFRU-Net for liver tumor segmentation. This model reconfigures skip connections using local feature fusion mechanisms to enhance contextual information extraction. It incorporates a squeeze-and-excitation module within the skip connections and applies an Atrous Spatial Pyramid Pooling (ASPP) block at the bottleneck to strengthen deep spatial feature representation. Zang L et al. [37] applied a PCNN-based preprocessing step for image enhancement prior to liver tumor segmentation and integrated residual blocks with SE attention modules into both the encoder and decoder of U-Net to improve tumor-focused feature learning. Li W et al. [38] proposed SPA-Unet for liver tumor segmentation, embedding a channel attention-based spatial pyramid structure in the encoder to extract multi-scale features and capture contextual information. 
Additionally, a Residual Attention Block (RABlock) was incorporated into the decoder to accelerate convergence and emphasize key regions. Liu L et al. [39] proposed the SEU2-Net architecture, embedding the SE attention mechanism into the nested U-shaped structure of U2-Net to enhance the representation of liver tumor features. Although the aforementioned methods have demonstrated effectiveness in enhancing local feature representation, their reliance on convolutional operations limits their receptive fields, resulting in insufficient global context modeling. This highlights the need for architectures capable of capturing long-range dependencies.

To overcome the limitations of convolutional operations, researchers have begun exploring architectures with stronger global modeling capabilities. In this context, the Transformer architecture, with its self-attention mechanism, offers a natural advantage by directly capturing dependencies between arbitrary positions within an image, making it particularly effective for long-range context modeling. This has led to the emergence of hybrid architectures that integrate CNNs with Transformers. The design of such hybrid architectures is inspired by the brain’s multimodal information processing mechanisms. The CNN component emulates the visual cortex’s ability to extract local features, while the transformer’s self-attention mechanism mimics the establishment of global working memory and long-range dependencies between the prefrontal cortex and other brain regions, thereby enabling holistic understanding of complex visual scenes. Wang X et al. [40] proposed TransFusionNet for liver tumor segmentation. This model leverages a Transformer encoder to extract semantic features, while CNN and squeeze-and-excitation (SE) attention modules are used to capture local spatial and edge features. A multi-scale feature fusion module is employed to integrate these features and pass them to the decoder, thereby enhancing the network’s feature representation capacity. Li X et al. [41] proposed TransU2-Net, a liver tumor segmentation model based on a deeply nested U-shaped structure. Transformers were incorporated into the skip connections of U2-Net to enhance global context modeling, and a novel multi-scale fusion strategy was introduced to optimize the integration of features across different dimensions. Zhang C et al. [42] proposed SAA-Net for liver tumor segmentation, introducing a Scale-Axis Attention (SAA) mechanism as its core component. 
The SAA module enhances the sensitivity and expressiveness of multi-scale features through dynamic scale attention, while the axis attention module improves the efficiency of self-attention computation and strengthens convolutional attention, enabling the effective capture of long-range spatial dependencies.

With the continuous advancement of deep learning, the design paradigm of medical image segmentation networks has evolved from convolutional neural networks (CNNs) to Transformers, and more recently to Mamba-based architectures. Transformers, relying on the self-attention mechanism, demonstrate notable strengths in modeling global dependencies, but they suffer from high computational complexity. By contrast, Mamba, as an emerging state space model (SSM), achieves higher computational efficiency while more effectively modeling long-range sequence dependencies, showing greater adaptability in spatiotemporal feature representation. From a biomimetic perspective, the Mamba architecture draws inspiration from the brain’s dynamic modeling of continuous states during information processing, enabling efficient capture and integration of complex contextual information and providing new directions and technical support for medical image segmentation. Consequently, researchers have begun to explore hybrid CNN–Mamba frameworks to leverage their complementary strengths in local feature extraction and global dependency modeling. Jiang Y et al. [43] proposed MLLA-UNet for liver tumor segmentation, which incorporates a Mamba-inspired linear attention mechanism into the encoder to enhance feature extraction and employs a symmetric sampling structure to facilitate multi-scale feature fusion. Qamar S et al. [44] developed SAMA-UNet, which integrates adaptive Mamba-like aggregated attention into the encoder to strengthen feature representation, while employing a causal resonance learning mechanism to improve multi-scale feature integration. Zhu M et al. [45] introduced BPP-Net for liver tumor segmentation, inspired by the complex parallel and serial structures of the biological visual system. 
In this network, three Mamba modules of different scales are embedded in each encoding layer to extract multi-scale features, while a dual-channel fusion decoder progressively refines the segmentation results. These developments indicate that hybrid architectures have become a prevailing trend in medical image segmentation research, including CNN–Transformer [46], CNN–attention [47], and CNN–Mamba frameworks [48,49], offering important insights and technical references for liver tumor semantic segmentation.

U-Net has been widely adopted and recognized as a baseline model in liver tumor semantic segmentation tasks. To further improve segmentation performance, researchers have continued to explore U-Net-based enhancements, primarily focusing on optimizing the encoder and skip connections to strengthen feature extraction and fusion capabilities. However, existing methods still suffer from several limitations: (1) Due to the highly heterogeneous and irregular morphology of liver tumors, the standard U-Net and its derivatives exhibit limited feature extraction capacity. Traditional network architectures struggle to capture the high variability of tumor boundaries and the morphological diversity of lesions, resulting in inadequate representation of complex tumor structures. (2) Most current improvements concentrate on localized refinements of skip connections, while overlooking the complementary relationships among multi-scale features within the encoder. This oversight leads to significant semantic inconsistency between the encoder and decoder, which severely hinders effective feature transmission. (3) U-Net and its variants are mostly based on CNN architectures, where the inherent locality of convolution operations limits the network’s global receptive field. This local constraint significantly hampers the model’s ability to perceive the overall tumor structure, thus impeding accurate semantic segmentation.

To address the limitations in liver tumor semantic segmentation, this study proposes enhancements to the U-Net architecture from three perspectives: feature extraction, feature fusion, and global perception. The specific improvements are as follows: (1) A Deformable Large Kernel Attention (D-LKA) mechanism is introduced in the encoder stage, which dynamically adjusts the geometric deformation and receptive field distribution of the convolutional kernels to enable adaptive focusing on tumor regions. This design enhances the model’s ability to extract features from tumors with ambiguous boundaries and improves its sensitivity to small-scale lesions. (2) A Dual Cross Attention mechanism (DCA) is employed to replace traditional skip connections, facilitating the modeling of long-range dependencies and strengthening global contextual representation. This design enables deep integration across encoder feature hierarchies and promotes the fusion of high-level semantic information with low-level spatial details, thereby alleviating semantic gaps during feature transmission. (3) A multi-scale Context Extraction (CE) module is innovatively integrated into the bottleneck layer. Building upon the global modeling capacity provided by the Dual Cross Attention mechanism, this design further enhances the model’s ability to capture multi-scale contextual information and improves its overall perception of global features.

It is important to note that although the D-LKA, CE, and DCA mechanisms have each been proven effective in their original contexts, the innovation of this study lies in their tailored integration, which achieves a synergistic effect. Within the encoder, D-LKA enables dynamic adaptation to tumor morphological heterogeneity, focusing on critical regions and mitigating boundary ambiguity. The CE module enhances tumor-related channel semantics during deep feature extraction while suppressing noise. DCA restructures the information flow between the encoder and decoder, not only replacing conventional skip connections but also establishing bidirectional long-range dependencies across levels, thereby enabling deep integration of locally adaptive features, channel-level semantic enhancement, and multi-scale global interactions. This synergistic design substantially enhances the model’s overall ability to represent complex liver tumor structures, surpassing the performance achievable by individual modules or simple stacking, and serves as the key driver of the proposed method’s performance improvement.

2. Methods

2.1. Baseline Network: U-Net

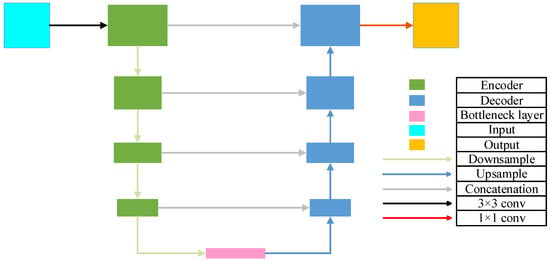

U-Net [25] is a widely used convolutional neural network architecture for image segmentation, characterized by its symmetric U-shaped design. This structure integrates an encoder (downsampling path), a bottleneck, and a decoder (upsampling path), and connects feature maps from corresponding encoder and decoder layers via skip connections, as illustrated in Figure 1.

Figure 1. The architecture of U-Net.

The encoder consists of multiple convolutional blocks, each typically comprising a convolutional layer, an activation function, and a pooling layer. Let $E_i$ denote the output feature map of the $i$-th block in the encoder. The encoder output is expressed as shown in Equation (1):

$$E_i = P\big(\sigma(\mathrm{Conv}(E_{i-1}))\big), \qquad E_0 = X \tag{1}$$

where $\mathrm{Conv}$ denotes the convolution operation, $\sigma$ is the activation function, $P$ represents the pooling operation, and $X$ is the input image.

The decoder also consists of multiple convolutional blocks, each typically comprising an upsampling layer (a transposed convolution followed by a convolution), a convolutional layer, an activation function, and a skip connection from the corresponding encoder layer. Let $D_i$ denote the output feature map of the $i$-th block in the decoder. The decoder output is defined as shown in Equation (2):

$$D_i = \sigma\Big(\mathrm{Conv}\big(U(D_{i-1}) \mathbin{\text{©}} \mathrm{Crop}(E_{N-i})\big)\Big) \tag{2}$$

where $U$ denotes the upsampling operation, © represents feature map concatenation, and $\mathrm{Crop}$ refers to the necessary cropping operation to ensure spatial dimension alignment. $N$ is the number of blocks in the encoder, and $D_0$ is the initial decoder feature map, typically obtained by upsampling from $E_N$, the output of the final encoder block, i.e., the bottleneck layer.

The final block of the decoder is typically followed by one or more convolutional layers to generate the final segmentation map. Let $S$ denote the output segmentation map; the final segmentation result is expressed as shown in Equation (3):

$$S = \mathrm{Softmax}\big(\mathrm{Conv}(D_N)\big) \tag{3}$$

where $\mathrm{Softmax}$ converts the output into a probability map in which the probabilities across all classes sum to 1 for each pixel.
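The cropping, concatenation, and softmax steps described above can be illustrated with a minimal NumPy sketch. The function names (`center_crop`, `upsample2`, `skip_concat`, `pixel_softmax`) and the tensor sizes are illustrative assumptions, and nearest-neighbour upsampling stands in for the learned transposed convolution; this is not the authors' implementation.

```python
import numpy as np

def center_crop(x, h, w):
    """Crop a (C, H, W) feature map to (C, h, w) around its centre."""
    _, full_h, full_w = x.shape
    top, left = (full_h - h) // 2, (full_w - w) // 2
    return x[:, top:top + h, left:left + w]

def upsample2(x):
    """Nearest-neighbour 2x upsampling (stand-in for a transposed convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def skip_concat(decoder_feat, encoder_feat):
    """Upsample the decoder map, crop the encoder map to match, concatenate on channels."""
    up = upsample2(decoder_feat)
    cropped = center_crop(encoder_feat, up.shape[1], up.shape[2])
    return np.concatenate([up, cropped], axis=0)

def pixel_softmax(logits):
    """Softmax over the class axis of a (num_classes, H, W) map."""
    e = np.exp(logits - logits.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

rng = np.random.default_rng(0)
dec = rng.standard_normal((16, 28, 28))   # decoder feature map
enc = rng.standard_normal((8, 64, 64))    # larger encoder map, as with unpadded convolutions
fused = skip_concat(dec, enc)             # shape (24, 56, 56)
probs = pixel_softmax(rng.standard_normal((2, 56, 56)))
print(fused.shape, probs.sum(axis=0).min(), probs.sum(axis=0).max())
```

Cropping is only needed when the encoder map is larger than the upsampled decoder map; with padded convolutions the two already match and the crop is a no-op.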

2.2. Overall Network Architecture with Multi-Scale Deformable Feature Fusion and Global Perception

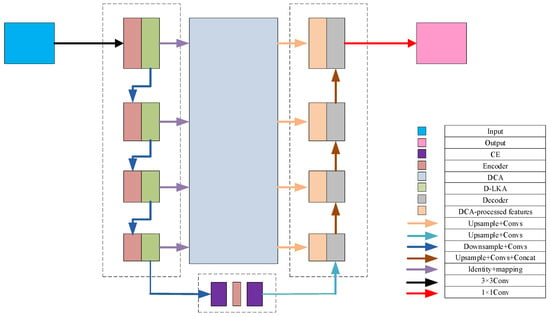

Based on the U-Net architecture, this study proposes an enhanced segmentation network, D1CD2U-Net, by integrating a Deformable Large Kernel Attention mechanism (D1), a Context Extraction module (C), and a Dual Cross Attention mechanism (D2). The composite structure of D1CD2U-Net provides strong feature modeling capabilities. To facilitate an intuitive understanding of the proposed feature processing flow, the overall architecture of the improved network is illustrated in Figure 2.

Figure 2. Overall network architecture with multi-scale deformable feature fusion and global perception.

The framework integrates multi-scale deformable features with global perception capabilities. Given the complex and heterogeneous morphology of liver tumors, the encoder incorporates a Deformable Large Kernel Attention mechanism, which adaptively adjusts the receptive field and dynamically focuses on key tumor regions. This improves the model’s adaptability and robustness to heterogeneous tumor shapes and provides more discriminative features for subsequent segmentation.

Next, a Context Extraction module is introduced at the bottleneck layer of the encoder to enhance the capture of global contextual information. This enables the model to acquire a more comprehensive and in-depth understanding of both the liver and its tumor environment, laying a solid foundation for subsequent feature fusion and image reconstruction.

To further optimize the feature transmission path, a Dual Cross Attention mechanism is employed to replace traditional skip connections. This mechanism operates on two levels: (1) Channel Self-Attention, which emphasizes interactions among different feature channels, enhancing salient features while suppressing irrelevant noise; and (2) Spatial Self-Attention, which captures interdependencies across different spatial locations to facilitate effective spatial information exchange. By passing encoder features through this Dual Cross Attention mechanism, the network achieves deep integration and fusion across spatial and channel dimensions, significantly enriching feature representation.
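The two attention levels can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions: each encoder stage is assumed to have been reduced to the same number of tokens, learned projections and normalization are omitted, and the function names and the choice of which tensors act as queries, keys, and values are hypothetical rather than taken from the paper.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def channel_cross_attention(q_stage, k_all, v_all):
    """Attention across channels.
    q_stage: (P, C_i) tokens of one stage; k_all, v_all: (P, C_all) tokens of all stages."""
    attn = softmax(q_stage.T @ k_all / np.sqrt(k_all.shape[1]), axis=-1)  # (C_i, C_all)
    return (attn @ v_all.T).T                                             # (P, C_i)

def spatial_cross_attention(q_all, k_all, v_stage):
    """Attention across spatial positions.
    q_all, k_all: (P, C_all) tokens of all stages; v_stage: (P, C_i) tokens of one stage."""
    attn = softmax(q_all @ k_all.T / np.sqrt(q_all.shape[1]), axis=-1)    # (P, P)
    return attn @ v_stage                                                 # (P, C_i)

rng = np.random.default_rng(0)
num_tokens = 16
stage_channels = (8, 16, 32, 64)            # assumed channel widths of four encoder stages
tokens = [rng.standard_normal((num_tokens, c)) for c in stage_channels]
all_tokens = np.concatenate(tokens, axis=1)  # (16, 120): stages joined along channels

fused = []
for t in tokens:
    t = channel_cross_attention(t, all_tokens, all_tokens)  # mix information across channels
    t = spatial_cross_attention(all_tokens, all_tokens, t)  # mix information across positions
    fused.append(t)
print([f.shape for f in fused])
```

Each stage keeps its own shape, so the refined tokens can be reshaped back to feature maps and passed to the decoder where a plain skip connection would otherwise be used.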

Finally, the features refined by the Dual Cross Attention mechanism are fused with the global information extracted at the bottleneck layer, yielding a more comprehensive and semantically rich feature representation. These features are then fed into the decoder, where the tumor’s fine structures are gradually restored through layer-wise upsampling and feature fusion to produce the final segmentation. The feature transmission process of the model is formulated as shown in Equation (4):

where denotes the encoder and bottleneck layers: for n = 1 to 4, it refers to the encoder layers, and for n = 5, it represents the bottleneck layer. denotes the decoder layers; represents the Context Extraction module; stands for the Deformable Large Kernel Attention mechanism; and denotes the Dual Cross Attention mechanism.
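The order in which the modules are applied can be sketched as a shape-level walk-through. Every function below is a hypothetical placeholder: `mix` replaces all learned layers with random 1x1 channel mixing, and `d_lka_stage`, `context_extraction`, and `dual_cross_attention` only mark where the corresponding modules would sit. The channel widths and input size are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def mix(x, out_ch, activate=True):
    """Placeholder for learned layers: random 1x1 channel mixing on a (C, H, W) map."""
    w = rng.standard_normal((out_ch, x.shape[0])) / np.sqrt(x.shape[0])
    y = np.einsum("oc,chw->ohw", w, x)
    return np.maximum(y, 0.0) if activate else y

def pool(x):
    """2x2 max pooling with stride 2 (H and W must be even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def up(x):
    """Nearest-neighbour 2x upsampling."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

# Hypothetical stand-ins for the three modules; they only reproduce the expected shapes.
def d_lka_stage(x, out_ch):
    return mix(x, out_ch)

def context_extraction(x):
    return mix(x, x.shape[0])

def dual_cross_attention(feats):
    return [f.copy() for f in feats]

def forward(image, channels=(8, 16, 32, 64), num_classes=2):
    x, encoder_feats = image, []
    for ch in channels:                               # encoder layers, n = 1..4
        x = d_lka_stage(x, ch)
        encoder_feats.append(x)
        x = pool(x)
    x = context_extraction(mix(x, 2 * channels[-1]))  # bottleneck layer, n = 5
    skips = dual_cross_attention(encoder_feats)       # used in place of plain skip connections
    for ch, skip in zip(reversed(channels), reversed(skips)):
        x = mix(np.concatenate([up(x), skip], axis=0), ch)  # decoder layers
    return mix(x, num_classes, activate=False)        # per-pixel class logits

logits = forward(rng.standard_normal((1, 64, 64)))
print(logits.shape)
```

The sketch processes all four encoder outputs jointly before any decoder layer runs, which is the main structural difference from a plain U-Net, where each skip connection is an independent copy.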

The three core modules proposed in this study are inspired by biological mechanisms, reflecting a biomimetic design philosophy. (1) The D-LKA module, designed for the encoding stage, is inspired by the selective attention mechanism of the visual nervous system. It emulates the ability of cortical neurons to dynamically adjust the size and shape of receptive fields in response to stimuli. By introducing geometric deformations to convolutional kernels, it achieves adaptive receptive field distribution, analogous to the visual system’s enhanced focus on specific targets. This substantially improves the model’s ability to capture features of heterogeneous tumor structures and blurred boundaries. (2) The DCA module replaces conventional skip connections and is inspired by long-range connections and global working memory mechanisms in neural networks of the brain. By leveraging self-attention, DCA dynamically establishes long-range dependencies between features, simulating the prefrontal cortex’s self-organizing process of global integration and selection. Through bidirectional pathways between the encoder and decoder, it effectively mitigates semantic inconsistencies and promotes deep fusion of multi-level features, resembling the active coordination and modulation of perceptual information in advanced cognitive systems. (3) The CE module, integrated into the bottleneck layer, draws inspiration from the multi-scale parallel processing strategy of the biological visual system. It mimics the visual system’s ability to simultaneously process high-resolution information from the fovea and broad contextual cues from peripheral vision. By fusing multi-scale features, it enhances the model’s capacity to capture semantic dependencies across different ranges, thereby improving its overall perception and discrimination of complex lesion regions.

The core challenges of liver tumor CT segmentation can be summarized as follows: (1) high morphological heterogeneity and blurred lesion boundaries; (2) the dynamic spatiotemporal evolution of lesions; (3) the susceptibility of CT image quality to interference; and (4) the scarcity and subjectivity of high-quality annotated data. To address these challenges, this study proposes an improved framework: the D-LKA module adaptively responds to morphological variations in lesions, enabling precise focus on blurred boundaries. A multi-scale CE mechanism captures semantic information across different scales, thereby enhancing the model’s adaptability and robustness to lesion evolution. DCA strengthens global context modeling, mitigating the adverse effects of image quality variations.

2.3. Feature Extraction Architecture Design

Liver tumors often exhibit pronounced deformable characteristics, imposing stringent demands on the accuracy and robustness of segmentation models. The U-Net architecture, a classic model in medical image segmentation, demonstrates strong feature extraction capabilities due to its distinctive encoder–decoder framework. However, in the challenging task of liver tumor segmentation, the fixed receptive field convolution strategy employed by the traditional U-Net often fails to adequately capture subtle variations along tumor boundaries and the complex interactions between tumors and surrounding tissues, thereby limiting further improvements in segmentation performance.

To overcome this bottleneck, recent research trends have focused on integrating attention mechanisms into the U-Net architecture [35,38,40,42]. Inspired by the human visual system’s information processing, attention mechanisms dynamically adjust the weighting of input data, enabling the model to intelligently focus on the most critical regions within an image. Common attention mechanisms include channel attention [50], spatial attention [51], and self-attention [52], each enhancing the model’s representation capability from different perspectives. Channel attention improves performance by optimizing the contribution of each feature map channel; spatial attention emphasizes the saliency of each spatial location within the feature map; and self-attention captures global information by computing correlations between any two points in the feature map. However, given the irregular shapes and blurred boundaries of liver tumors, these conventional attention mechanisms still have certain limitations.

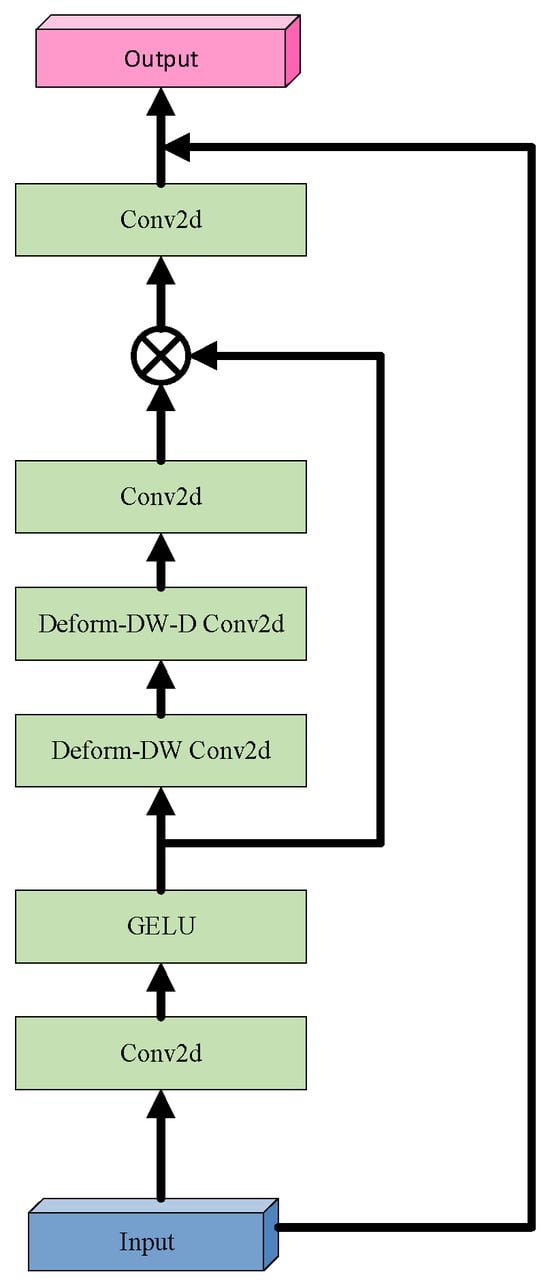

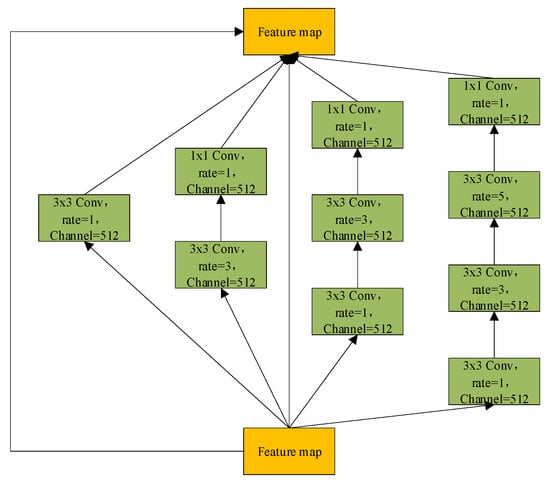

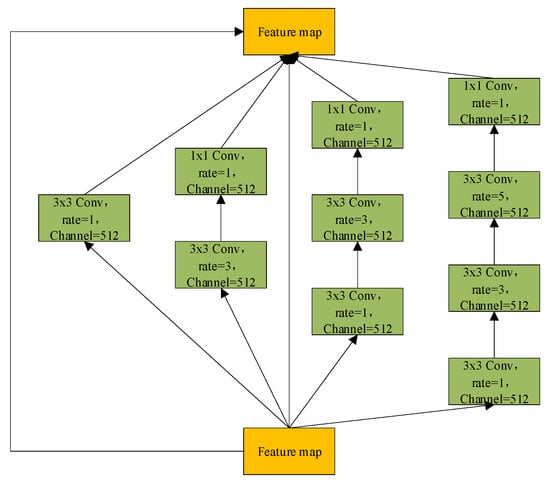

In response, this study introduces the Deformable Large Kernel Attention (D-LKA) mechanism, integrated into the encoder layers of U-Net. The core strength of D-LKA lies in its unique architectural design and powerful information aggregation capabilities [53]. By incorporating deformable convolution techniques, D-LKA flexibly adjusts the shape and size of convolution kernels to more precisely capture subtle variations in tumor regions and their surrounding environment. Additionally, its large kernel design ensures the model captures richer contextual information, which is critical for distinguishing tumors from normal tissue. Moreover, D-LKA preserves fine local feature details, helping maintain clear and continuous segmentation boundaries, thereby further enhancing segmentation quality. The structural design of D-LKA is illustrated in Figure 3.

Figure 3.

Architecture of the Deformable Large Kernel Attention (D-LKA) module.

Before delving into D-LKA, it is essential to revisit the Large Kernel Attention (LKA) mechanism. LKA approximates the receptive field of self-attention by decomposing a large convolutional kernel and incorporates an attention mechanism to enhance the focus on target features. Specifically, a convolution with kernel size $K \times K$ is decomposed into a combination of three convolutions: a depthwise convolution with kernel size $(2d-1) \times (2d-1)$, a depthwise dilated convolution with kernel size $\lceil K/d \rceil \times \lceil K/d \rceil$ and dilation rate $d$, and a channel-wise convolution (1 × 1 convolution). First, LKA employs a small depthwise convolution to capture local image features. Second, it applies a depthwise dilated convolution to expand the receptive field, enabling the capture of broader contextual information without increasing the number of parameters. Finally, a 1 × 1 convolution is used to integrate the features and produce the final attention map, facilitating cross-channel information fusion and feature aggregation.
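The kernel arithmetic of this decomposition can be checked with a few lines of Python. The sketch below assumes the decomposition rule of the original LKA formulation (depthwise kernel $2d-1$, dilated depthwise kernel $\lceil K/d \rceil$) and "same" padding for odd kernels; the function name and the choice $K = 21$ are illustrative assumptions, not values stated in this study.

```python
import math


def lka_decomposition(K: int, d: int) -> dict:
    """Kernel sizes and 'same' paddings for decomposing a K x K convolution.

    Assumes the LKA rule: depthwise kernel 2d - 1, dilated depthwise
    kernel ceil(K / d) with dilation d, followed by a 1 x 1 convolution.
    """
    dw_kernel = 2 * d - 1
    dwd_kernel = math.ceil(K / d)
    return {
        "dw_kernel": dw_kernel,
        # 'same' padding for an odd kernel k with dilation r is r * (k - 1) // 2
        "dw_padding": (dw_kernel - 1) // 2,
        "dwd_kernel": dwd_kernel,
        "dwd_dilation": d,
        "dwd_padding": d * (dwd_kernel - 1) // 2,
        "pointwise_kernel": 1,
    }


# K = 21 is an assumed target kernel size, used only for illustration.
cfg = lka_decomposition(K=21, d=3)
print(cfg)
```

Under these assumptions, a dilation rate of 3 yields a 5 × 5 depthwise kernel and a 7 × 7 dilated kernel whose "same" padding is 9.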

D-LKA innovatively integrates the core concept of deformable convolution into the LKA framework by introducing a dynamic sampling grid, enabling flexible deformation of the convolutional kernel. Specifically, an auxiliary convolutional layer learns spatial offset fields from the input features autonomously, generating geometrically adaptive kernels. This feature-driven approach achieves two key properties: (1) sampling point locations undergo continuous spatial transformations based on the target morphology; (2) the kernel shape dynamically adapts to local structural features. Technically, the offset prediction network shares the same kernel size and dilation rate as its corresponding convolutional layer, ensuring scale consistency between geometric deformation and semantic features. For sampling points with non-integer coordinates, bilinear interpolation is employed for sub-pixel feature extraction, ensuring continuity and differentiability during deformation. This innovative design enables D-LKA to simultaneously achieve three critical functions: precise local detail perception, effective global context integration, and adaptive modeling of target shapes, thereby significantly enhancing the model’s capability to represent complex liver tumor boundaries. The mathematical formulation of D-LKA is presented in Equation (5):

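$$
\begin{aligned}
F' &= \mathrm{GELU}(\mathrm{Conv}(F)), \\
\mathrm{Attention} &= \mathrm{Conv}_{1 \times 1}\big(\mathrm{DDW\text{-}D\text{-}Conv}(\mathrm{DDW\text{-}Conv}(F'))\big), \\
\mathrm{Output} &= \mathrm{Conv}_{1 \times 1}(\mathrm{Attention} \otimes F') + F,
\end{aligned}
\tag{5}
$$

where $F$ denotes the input feature; GELU(x) represents the GELU activation function; $\mathrm{Conv}$ refers to a 2D convolution; $\mathrm{DDW\text{-}Conv}$ indicates a deformable depthwise convolution; $\mathrm{DDW\text{-}D\text{-}Conv}$ denotes a deformable depthwise dilated convolution; and $\mathrm{Attention}$ corresponds to the attention map, where each value reflects the relative importance of the associated feature. The operator $\otimes$ represents element-wise multiplication.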
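The sub-pixel sampling step of a deformable convolution can be illustrated without any deep learning framework. The following is a minimal sketch, assuming zero padding outside the feature map and offsets supplied by hand (in the actual module they would be predicted by the auxiliary convolution); the function names are hypothetical.

```python
import math


def bilinear_sample(feature, y, x):
    """Sample a 2D feature map (list of rows) at fractional (y, x).

    Positions outside the map contribute zero (assumed zero padding).
    """
    h, w = len(feature), len(feature[0])
    y0, x0 = math.floor(y), math.floor(x)
    wy, wx = y - y0, x - x0

    def at(r, c):
        return feature[r][c] if 0 <= r < h and 0 <= c < w else 0.0

    top = (1 - wx) * at(y0, x0) + wx * at(y0, x0 + 1)
    bottom = (1 - wx) * at(y0 + 1, x0) + wx * at(y0 + 1, x0 + 1)
    return (1 - wy) * top + wy * bottom


def deformable_response(feature, center, weights, offsets, dilation=1):
    """One output value of a deformable k x k depthwise convolution."""
    k = len(weights)
    half = k // 2
    cy, cx = center
    total = 0.0
    for i in range(k):
        for j in range(k):
            dy, dx = offsets[i][j]
            # Regular (dilated) grid position plus the learned offset
            y = cy + (i - half) * dilation + dy
            x = cx + (j - half) * dilation + dx
            total += weights[i][j] * bilinear_sample(feature, y, x)
    return total


feature = [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]]
ones = [[1.0] * 3 for _ in range(3)]
zero_offsets = [[(0.0, 0.0)] * 3 for _ in range(3)]
regular = deformable_response(feature, (1, 1), ones, zero_offsets)

# Shift only the central tap half a pixel to the right
shifted_offsets = [row[:] for row in zero_offsets]
shifted_offsets[1][1] = (0.0, 0.5)
shifted = deformable_response(feature, (1, 1), ones, shifted_offsets)
print(regular, shifted)
```

With zero offsets the response reduces to an ordinary convolution; a fractional offset moves a single tap between pixels, and the interpolation keeps the result differentiable with respect to that offset.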

2.4. Design of the Feature Fusion Architecture

In the U-Net architecture, skip connections play a crucial role in facilitating information flow between the encoder and decoder, enabling effective fusion of features across different levels. Existing improvements primarily focus on integrating various types of attention mechanisms or multi-scale representations within the skip connections. However, these approaches still fall short in fully bridging the semantic gap between encoder and decoder features [54,55]. This semantic gap often results in over-segmentation or under-segmentation in liver tumor delineation, leading to false positives or false negatives. Moreover, the convolutional architecture of U-Net is inherently limited to local feature extraction, which constrains its ability to model global context—an essential aspect for fine-grained segmentation tasks. Addressing these issues requires the design of more sophisticated and effective feature fusion mechanisms to enhance the model’s ability to capture broad contextual information, thereby mitigating the semantic gap and improving global modeling capabilities.

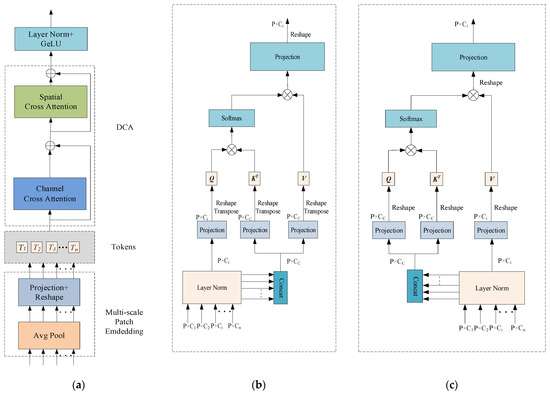

The Dual Cross Attention (DCA) mechanism is an attention module designed to integrate multi-scale encoder features by capturing global dependencies across both channel and spatial dimensions [56]. Specifically, the Channel Cross Attention (CCA) module first performs cross-channel attention operations among channel tokens to capture global channel-wise dependencies. Then, the Spatial Cross Attention (SCA) module captures spatial dependencies via cross attention among spatial tokens, enhancing the model’s sensitivity to fine-grained spatial structures. These refined encoder features are subsequently upsampled and fused with the corresponding decoder features, enabling effective multi-level feature integration. By establishing cross-scale and cross-channel associations, this mechanism effectively integrates spatial and channel information, alleviating the feature mismatch caused by the semantic gap in traditional skip connections and improving segmentation performance. Based on these advantages, this study replaces traditional skip connections with the DCA mechanism to enhance the model’s perception of global structures. The detailed structure is illustrated in Figure 4.

Figure 4.

The Dual Cross Attention (DCA) mechanism; (a) overall architecture of the Dual Cross Attention (DCA) mechanism; (b) Channel Cross Attention (CCA) module; (c) Spatial Cross Attention (SCA) mechanism.

The structure of the Dual Cross Attention (DCA) mechanism follows the number of encoder stages. In other words, given n + 1 multi-scale encoder stages, the DCA module takes the multi-scale features from the first n stages as input to enhance feature representation and connects them to the corresponding n decoder stages. As illustrated in Figure 4, the DCA mechanism consists of two main stages. The first stage comprises a multi-scale embedding module that extracts encoder tokens from the input features. In the second stage, Channel Cross Attention and Spatial Cross Attention mechanisms are applied to these encoder tokens to capture long-range dependencies. Finally, the tokens pass through layer normalization and GELU activation, are upsampled, and are then fused with the corresponding decoder stages to enable effective cross-level feature integration.

2.4.1. Patch Embedding Based on a Multi-Scale Encoder

Patches are extracted from n multi-scale encoder stages. Given n encoder stages at different scales, the input of the i-th stage is $E_i \in \mathbb{R}^{C_i \times \frac{H}{2^{i-1}} \times \frac{W}{2^{i-1}}}$, and the patch size is $P_i^s = \frac{P^s}{2^{i-1}}$, where $i = 1, 2, \ldots, n$ corresponds to the scale levels. Average pooling with a kernel size and stride of $P_i^s$ is applied to extract patches. Subsequently, depthwise separable convolution is employed on the flattened 2D patches to perform feature mapping. The resulting feature representation of the multi-scale embedding module is formulated as Equation (6):

$$
T_i = \mathrm{DConv1D}_{E_i}\big(\mathrm{Reshape}(\mathrm{AvgPool2D}_{E_i}(E_i))\big) \tag{6}
$$

where $T_i \in \mathbb{R}^{P \times C_i}$ denotes the flattened patches from the i-th encoder stage. Note that $P$ represents the number of patches, which is the same across each $T_i$. This uniformity allows the application of Cross Attention mechanisms among these tokens.
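The reason every stage ends up with the same number of tokens can be verified numerically. The sketch below assumes that both the feature resolution and the pooling patch size halve at each stage, as in the original DCA formulation; the base patch size of 16 and the function name are hypothetical choices for illustration.

```python
def multiscale_patch_grid(input_size, base_patch, n_stages):
    """Per-stage feature size, pooling patch size, and resulting token count."""
    stages = []
    for i in range(1, n_stages + 1):
        scale = 2 ** (i - 1)
        feature_size = input_size // scale   # resolution halves per stage
        patch_size = base_patch // scale     # patch size halves per stage
        tokens = (feature_size // patch_size) ** 2
        stages.append(
            {
                "stage": i,
                "feature_size": feature_size,
                "patch_size": patch_size,
                "num_tokens": tokens,
            }
        )
    return stages


# 512 x 512 input with four encoder stages; base_patch=16 is an assumption.
grid = multiscale_patch_grid(input_size=512, base_patch=16, n_stages=4)
for stage in grid:
    print(stage)
```

Because the two quantities shrink at the same rate, their ratio, and therefore the token count, stays constant, which is what allows tokens from different stages to attend to one another.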

2.4.2. Channel Cross Attention

Each token $T_i$ is processed using Channel Cross Attention (CCA). Specifically, each $T_i$ is first normalized via Layer Normalization (LN), then concatenated along the channel dimension to form $T_c$, which is used to generate the key and value, while $T_i$ itself serves as the query. Depthwise separable convolution is integrated into the self-attention mechanism to better capture local context while reducing computational complexity. To achieve this, all linear projections are replaced with depthwise convolutional projections. The expressions for the queries, keys, and values in the Channel Cross Attention module are defined in Equation (7):

$$
Q_i = \mathrm{DConv1D}_{Q_i}(T_i), \quad K = \mathrm{DConv1D}_{K}(T_c), \quad V = \mathrm{DConv1D}_{V}(T_c) \tag{7}
$$

where $Q_i \in \mathbb{R}^{P \times C_i}$, $K \in \mathbb{R}^{P \times C_c}$, and $V \in \mathbb{R}^{P \times C_c}$ represent the mapped queries, keys, and values, respectively. Accordingly, the formulation of the Channel Cross Attention is provided in Equation (8):

$$
\mathrm{CCA}(Q_i, K, V) = \mathrm{Softmax}\left(\frac{Q_i^{\top} K}{\sqrt{C_c}}\right) V^{\top} \tag{8}
$$

where $1/\sqrt{C_c}$ is a scaling factor designed to control the variance of the dot-product results, thereby mitigating the risk of vanishing gradients. The output of the Cross Attention mechanism is a weighted sum of the values, where the weights are determined by the similarity between the queries and the keys. Finally, the output of the Cross Attention is refined using depthwise separable convolution and then fed into the Spatial Cross Attention module.
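The shape bookkeeping of channel-wise cross attention is easy to get wrong, so a small pure-Python sketch may help. It assumes the attention form softmax(QᵀK / √C_c)·Vᵀ from the original DCA formulation and, for brevity, omits layer normalization and replaces the depthwise projections with the identity; `t_i` stands for the tokens of one encoder stage and `t_c` for the channel-wise concatenation of all stages. All names and values are illustrative.

```python
import math


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def softmax_rows(m):
    out = []
    for row in m:
        mx = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(v - mx) for v in row]
        s = sum(exps)
        out.append([v / s for v in exps])
    return out


def channel_cross_attention(t_i, t_c):
    """t_i: P x C_i query tokens; t_c: P x C_c concatenated key/value tokens."""
    c_c = len(t_c[0])
    scores = matmul(transpose(t_i), t_c)          # (C_i x P)(P x C_c) = C_i x C_c
    scaled = [[v / math.sqrt(c_c) for v in row] for row in scores]
    weights = softmax_rows(scaled)                # each query channel attends over C_c channels
    out = matmul(weights, transpose(t_c))         # (C_i x C_c)(C_c x P) = C_i x P
    return weights, transpose(out)                # back to P x C_i


# Toy example: P = 3 tokens, stage 1 has 2 channels, stage 2 has 1 channel.
t_1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
t_2 = [[0.5], [0.2], [0.1]]
t_c = [a + b for a, b in zip(t_1, t_2)]           # P x (2 + 1)
weights, refined_t_1 = channel_cross_attention(t_1, t_c)
print(len(weights), len(weights[0]), len(refined_t_1), len(refined_t_1[0]))
```

The attention matrix here has one row per query channel rather than per spatial position, which is what distinguishes channel cross attention from the usual token-to-token self-attention.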

2.4.3. Spatial Cross Attention

Given the output $\bar{T}_i$ from the CCA module, layer normalization is applied followed by concatenation along the channel dimension. Unlike the CCA module, the concatenated token $\bar{T}_c$ is used as both the query and key, while each $\bar{T}_i$ serves as the value. A 1 × 1 depthwise separable convolution is applied to project the queries, keys, and values. The expressions for the queries, keys, and values in the Spatial Cross Attention (SCA) module are defined in Equation (9):

$$
Q = \mathrm{DConv1D}_{Q}(\bar{T}_c), \quad K = \mathrm{DConv1D}_{K}(\bar{T}_c), \quad V_i = \mathrm{DConv1D}_{V_i}(\bar{T}_i) \tag{9}
$$

where $Q \in \mathbb{R}^{P \times C_c}$, $K \in \mathbb{R}^{P \times C_c}$, and $V_i \in \mathbb{R}^{P \times C_i}$ represent the mapped queries, keys, and values, respectively. The formulation of the Spatial Cross Attention is presented in Equation (10):

$$
\mathrm{SCA}(Q, K, V_i) = \mathrm{Softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V_i \tag{10}
$$

where $1/\sqrt{d_k}$ is a scaling factor. In the multi-head setting, $d_k = C_c / h_c$, where $h_c$ denotes the number of heads. The output of the SCA is refined using depthwise separable convolution to produce the final feature representation of the DCA mechanism. After layer normalization and GELU activation, the n output tokens of the module are upsampled and connected to the corresponding layers in the decoder.

This study introduces a dual attention mechanism that differs from the traditional self-attention approach. Instead of generating attention maps separately at each stage, the DCA mechanism integrates multi-scale features from different encoder stages. This enables the model to capture long-range dependencies across various encoder levels, thereby narrowing the semantic gap between features extracted at different encoder layers, and ultimately mitigating the semantic inconsistency between the encoder and decoder.

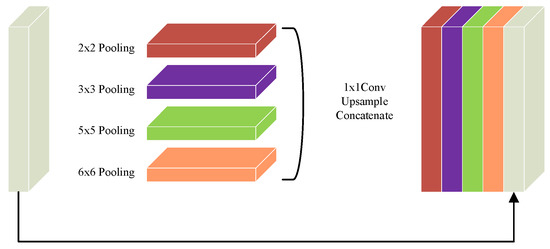

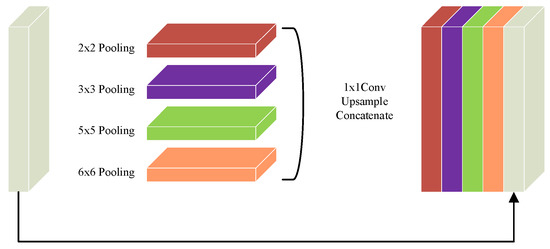

2.5. Design of the Bottleneck Structure

Previous studies have primarily focused on optimizing the encoder, decoder, and skip connections to improve network performance [36,37,38], while the bottleneck layer—serving as a critical bridge between them—has often been overlooked. As a vital component of the U-Net architecture, the bottleneck layer not only encapsulates the most semantically rich features but also plays a pivotal role in effectively transmitting these features from the encoder to the decoder, which is crucial for ensuring segmentation accuracy. However, the original bottleneck design relies on conventional convolutions, which are inherently limited by a narrow receptive field. This restricts the network’s capacity to capture global contextual information, potentially compromising the comprehensiveness and precision of the segmentation results. Therefore, enhancing the bottleneck structure to better capture global context has become an important direction for improving the segmentation performance of U-Net. In this study, a Context Extraction (CE) mechanism is introduced into the bottleneck layer. This mechanism comprises two core modules: a Dense Atrous Convolution (DAC) module and a Residual Multi-kernel Pooling (RMP) module [57]. This design enables the efficient extraction of high-level semantic information, thereby enhancing the model’s contextual understanding.

The DAC (Dense Atrous Convolution) module combines atrous convolution, the Inception architecture, and the residual learning mechanism from ResNet. It is designed to enhance the model’s ability to capture multi-scale information while preserving feature integrity. The structure of the DAC module is illustrated in Figure 5. Specifically, the DAC module consists of four parallel convolutional branches, each employing a different dilation rate (rate = 1, 3, 1 + 3, and 1 + 3 + 5) to simulate multi-scale receptive fields ranging from local detail to global context. This design is inspired by the multi-branch parallelism of the Inception architecture, aiming to improve adaptability to complex structural variations through architectural diversity. In each branch, a preceding standard convolution is applied to reduce the computational burden of directly using large receptive-field atrous convolutions, while also enhancing feature diversity through linear projection. The multi-scale features extracted by each branch are then fused with the input feature map via element-wise addition across channels. This process draws on the residual connection mechanism from ResNet, which facilitates gradient propagation and mitigates information degradation in deep networks. In liver tumor segmentation tasks, where tumors often exhibit highly complex and irregular morphologies, the DAC module provides a robust feature foundation by leveraging large-receptive-field branches to capture contextual semantics and small-receptive-field branches to retain sensitivity to fine details. The mathematical formulation of the DAC module is presented in Equation (11):

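$$
\begin{aligned}
F_{\mathrm{DAC}} = X &+ \mathrm{Conv}_{3}^{r=1}(X) + \mathrm{Conv}_{1}\big(\mathrm{Conv}_{3}^{r=3}(X)\big) + \mathrm{Conv}_{1}\big(\mathrm{Conv}_{3}^{r=3}(\mathrm{Conv}_{3}^{r=1}(X))\big) \\
&+ \mathrm{Conv}_{1}\big(\mathrm{Conv}_{3}^{r=5}(\mathrm{Conv}_{3}^{r=3}(\mathrm{Conv}_{3}^{r=1}(X)))\big)
\end{aligned}
\tag{11}
$$

where $X$ is the input feature map, $\mathrm{Conv}_{3}$ denotes a 3 × 3 convolution, $\mathrm{Conv}_{1}$ represents a 1 × 1 convolution, and $r$ indicates the dilation rates used in different branches.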
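The effective receptive field of each DAC branch follows from a simple rule: with stride 1, every 3 × 3 convolution with dilation rate r widens the field by 2r pixels. The sketch below applies that rule to the four rate combinations listed above, assuming stride 1 throughout and ignoring the 1 × 1 convolutions, which do not enlarge the field; the function name is hypothetical.

```python
def receptive_field(dilations, kernel=3):
    """Receptive field of stacked stride-1 convolutions with the given dilation rates."""
    rf = 1
    for r in dilations:
        rf += (kernel - 1) * r
    return rf


branches = {
    "rate 1": [1],
    "rate 3": [3],
    "rate 1 + 3": [1, 3],
    "rate 1 + 3 + 5": [1, 3, 5],
}
fields = {name: receptive_field(rates) for name, rates in branches.items()}
print(fields)
```

Under these assumptions the four branches cover fields of 3, 7, 9, and 19 pixels, which spans the range from local detail to wide context described in the text.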

To address the high computational cost associated with the enhanced feature representation in the DAC module, this study introduces a Residual Multi-kernel Pooling (RMP) module as an optimization strategy. The structure of the RMP module is illustrated in Figure 6. The RMP module employs four different pooling kernels with sizes ranging from 2 × 2 to 6 × 6 to capture multi-scale receptive fields. This design enhances the model’s feature extraction capacity while reducing redundant computations and the number of parameters. Subsequently, a 1 × 1 convolution is applied to perform dimensionality reduction and feature refinement. An upsampling operation then aligns all feature maps to a unified spatial resolution to ensure spatial consistency. During the fusion stage, the multi-scale features are concatenated with the original feature map, enabling efficient integration of cross-scale information and preservation of critical semantic features. This structure facilitates the construction of compact yet rich feature representations, significantly reduces computational overhead, and improves the model’s processing efficiency and representational capacity in complex scenarios. The mathematical formulation of the RMP module is presented in Equation (12):

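$$
F_{\mathrm{RMP}} = \mathrm{Concat}\big(X, U_2, U_3, U_5, U_6\big), \quad U_k = \mathrm{Up}\big(\mathrm{Conv}_{1 \times 1}(\mathrm{MaxPool}_{k}(X))\big), \quad k \in \{2, 3, 5, 6\} \tag{12}
$$

where $X$ is the input feature map, $\mathrm{MaxPool}_{k}$ denotes max pooling operations with different kernel sizes, $\mathrm{Up}$ represents the upsampling operation, and $\mathrm{Concat}$ indicates the feature concatenation operation.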
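A quick shape calculation shows why the RMP module is cheap. The sketch below makes three assumptions that are not spelled out in the text: each pooling branch uses a stride equal to its kernel size with no padding, the 1 × 1 convolution reduces each branch to a single channel, and the pooling sizes are 2, 3, 5, and 6. The function name is hypothetical.

```python
def rmp_output_shape(channels, height, width, pool_sizes=(2, 3, 5, 6)):
    """Pooled map sizes per branch and the concatenated output shape."""
    pooled = []
    for k in pool_sizes:
        # Max pooling with stride k and no padding (floor mode)
        pooled.append(((height - k) // k + 1, (width - k) // k + 1))
    # Each branch contributes one channel after the 1 x 1 convolution
    out_channels = channels + len(pool_sizes)
    return pooled, (out_channels, height, width)


pooled_sizes, out_shape = rmp_output_shape(512, 32, 32)
print(pooled_sizes, out_shape)
```

For a 512 × 32 × 32 bottleneck feature, the four pooled maps are at most 16 × 16, and concatenation adds only four channels to the original 512.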

2.6. Rationale for Model Design

The D-LKA module combines deformable convolution with large kernel attention to enhance the modeling of long-range dependencies and spatial context. In liver tumor segmentation, where tumors often exhibit irregular shapes and low contrast with the liver parenchyma, the choice of kernel size largely determines the scope and granularity of feature integration. Small kernels are more effective in capturing local details and boundary information, but they lack sufficient receptive fields to establish global connections for large or diffuse tumors. Conversely, large kernels expand the receptive field and enrich contextual information, but they may also introduce irrelevant background noise that weakens discriminative capability.

Figure 5.

Dense Atrous Convolution (DAC) module.

Figure 6.

Residual Multi-kernel Pooling (RMP) module.

The CE module typically employs convolution kernels of varying sizes to construct multi-scale feature extraction pathways. An appropriate combination of kernel sizes is crucial for accurately segmenting tumors of different scales while delineating their boundaries. Smaller kernels are better suited for capturing fine structural details at tumor edges, whereas larger kernels facilitate the modeling of the overall spatial distribution of tumors and their relationship with the surrounding liver parenchyma, thereby balancing local and global information.

The DCA mechanism establishes dynamic non-local connections across feature map positions via attention, while its deformable property enables the model to adaptively focus on context regions most relevant to tumor pixels, overcoming the limitations of fixed geometric structures. In liver tumor segmentation, the number of attention heads determines the model’s ability to capture diverse feature relationships in parallel. Increasing the number of heads allows the model to learn multiple representation patterns, such as intra-tumor texture heterogeneity, proximity to vessels, and overall boundary constraints, thereby improving segmentation accuracy for complex tumors. However, more heads do not necessarily yield better performance: too few may lead to insufficient context modeling, whereas too many increase the risk of overfitting and may interfere with deformable offset prediction. Thus, the number of heads must be carefully balanced between feature diversity and model generalization.

The proposed model integrates three key modules for attention and feature enhancement. The D-LKA (Deformable Large Kernel Attention) employs two deformable convolution layers with kernel sizes of 5 × 5 and 7 × 7, where the 7 × 7 kernel is combined with dilation (dilation = 3) and corresponding padding (padding = 9) to enlarge the receptive field without compromising resolution, ensuring that the output feature map size matches the input. The DAC (Dense Atrous Convolution) module utilizes parallel 3 × 3 convolutions with dilation rates of 1, 3, and 5 to capture multi-scale contextual information, further combined with the RMP (Residual Multi-kernel Pooling) module, which extracts global features through pooling at four scales (2 × 2, 3 × 3, 5 × 5, and 6 × 6). After upsampling and concatenation, the bottleneck channel dimension expands from 512 to 516 (512 + 4), yielding an output of 516 × 32 × 32 while only slightly increasing the parameter count. The DCA (Dual Cross Attention) mechanism is configured with one channel attention head (channel_head = 1) and four spatial attention heads (spatial_head = 4) to facilitate interaction and enhancement of encoder features. The spatial resolution remains unchanged before and after processing, ensuring effective fusion with decoder skip connections.

The input image (3 × 512 × 512) is progressively processed by the encoder. Initially, convolution and D-LKA are applied to extract 64 × 512 × 512 feature maps, followed by max-pooling that downsamples them to 64 × 256 × 256. This process is repeated across successive layers, with the number of channels doubling and the spatial resolution halving at each stage, generating feature maps of 128 × 256 × 256, 256 × 128 × 128, and 512 × 64 × 64, and finally downsampling to 512 × 32 × 32. At the bottleneck, DAC combined with RMP expands the channel dimension to 516 × 32 × 32, thereby enriching contextual information.

The DCA module enhances features across encoder layers while preserving their original dimensions (ranging from 64 × 512 × 512 to 512 × 64 × 64). The decoder then progressively restores spatial resolution through upsampling. Starting from 516 × 32 × 32, the feature maps are upsampled to 512 × 64 × 64 and fused with corresponding encoder features via concatenation. This process reconstructs feature maps of 256 × 128 × 128, 128 × 256 × 256, and 64 × 512 × 512, before the final convolution produces a 2 × 512 × 512 segmentation output, fully recovering the input resolution.
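The tensor shapes described above can be traced programmatically as a sanity check. This is only a bookkeeping sketch under the dimensions stated in the text (four encoder stages, four extra bottleneck channels, two output classes); it does not build any network layers, and the function name is hypothetical.

```python
def trace_shapes(size=512, channels=(64, 128, 256, 512), ce_extra=4, n_classes=2):
    """Return (C, H, W) shapes for encoder stages, bottleneck, decoder, and output."""
    encoder = [(c, size >> i, size >> i) for i, c in enumerate(channels)]
    bottleneck_size = size >> len(channels)
    bottleneck_in = (channels[-1], bottleneck_size, bottleneck_size)
    bottleneck_out = (channels[-1] + ce_extra, bottleneck_size, bottleneck_size)
    decoder = list(reversed(encoder))  # decoder mirrors the encoder stages
    output = (n_classes, size, size)
    return {
        "encoder": encoder,
        "bottleneck_in": bottleneck_in,
        "bottleneck_out": bottleneck_out,
        "decoder": decoder,
        "output": output,
    }


shapes = trace_shapes()
for name, value in shapes.items():
    print(name, value)
```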

3. Evaluation Metrics for Segmentation Performance

To comprehensively evaluate the model’s performance in liver tumor semantic segmentation, seven quantitative metrics were employed: Precision, Recall, Dice Similarity Coefficient (Dice), Intersection over Union (IoU), mean Intersection over Union (MIoU), Accuracy, and Mean Pixel Accuracy (MPA). True Positive (TP) refers to the number of pixels correctly predicted as a liver tumor by the model. This indicates the model’s ability to accurately identify tumor regions that are truly present. False Positive (FP) denotes the number of non-tumor pixels incorrectly classified as tumor by the model. This reflects false alarms where the model erroneously labels healthy tissue as tumor. False Negative (FN) indicates the number of tumor pixels mistakenly predicted as non-tumor. This reveals instances where the model fails to detect actual tumor regions. True Negative (TN) represents the number of non-tumor pixels correctly identified as such by the model. This demonstrates the model’s capacity to correctly exclude regions that do not contain tumors.

Precision refers to the proportion of correctly identified liver tumor pixels among all pixels predicted by the model as liver tumor. It reflects the reliability of the model’s positive predictions. The formulation is presented in Equation (13):

$$
\mathrm{Precision} = \frac{TP}{TP + FP} \tag{13}
$$

Recall refers to the proportion of correctly detected liver tumor pixels among all actual tumor pixels. It measures the model’s ability to identify positive instances. The formulation is provided in Equation (14):

$$
\mathrm{Recall} = \frac{TP}{TP + FN} \tag{14}
$$

The Dice Similarity Coefficient (Dice) is used to evaluate the overlap between the predicted liver tumor region and the ground truth annotation. It is defined as twice the area of the intersection divided by the sum of the areas of the predicted and ground truth regions. The formulation is presented in Equation (15):

$$
\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN} \tag{15}
$$

The Intersection over Union (IoU) is another commonly used overlap metric, defined as the ratio of the intersection area to the union area between the predicted liver tumor region and the ground truth. Compared with the Dice coefficient, IoU adopts a more stringent formulation, and thus typically yields lower values under the same conditions. The formulation is presented in Equation (16):

$$
\mathrm{IoU} = \frac{TP}{TP + FP + FN} \tag{16}
$$

The mean Intersection over Union (MIoU) is defined as the average IoU across all classes and is used to comprehensively evaluate the model’s segmentation performance for each class. For liver tumor semantic segmentation, which is a binary classification task, the formulation is given in Equation (17):

$$
\mathrm{MIoU} = \frac{1}{2}\left(\frac{TP}{TP + FP + FN} + \frac{TN}{TN + FN + FP}\right) \tag{17}
$$

Accuracy represents the proportion of correctly predicted pixels to the total number of pixels. The formulation is provided in Equation (18):

$$
\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{18}
$$

Mean Pixel Accuracy (MPA) refers to the average accuracy with which the model correctly classifies pixels across all classes. The formulation is given in Equation (19):

$$
\mathrm{MPA} = \frac{1}{k}\sum_{i=1}^{k}\frac{p_{ii}}{\sum_{j=1}^{k} p_{ij}} \tag{19}
$$

where $k$ denotes the number of classes; $p_{ii}$ represents the number of pixels that actually belong to class i and are correctly predicted as class i, while $p_{ij}$ denotes the number of pixels that belong to class i but are predicted as class j.
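As a worked example, the seven metrics can be computed directly from the four confusion-matrix counts. The sketch below uses the standard binary definitions (tumor as the positive class, background as the negative class); the counts are made-up illustrative numbers, not results from this study, and no guard against empty classes is included.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Binary segmentation metrics from pixel-level confusion counts."""
    iou_tumor = tp / (tp + fp + fn)
    iou_background = tn / (tn + fn + fp)
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "dice": 2 * tp / (2 * tp + fp + fn),
        "iou": iou_tumor,
        "miou": (iou_tumor + iou_background) / 2,
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        # Mean of per-class pixel accuracy (tumor recall and background recall)
        "mpa": (tp / (tp + fn) + tn / (tn + fp)) / 2,
    }


# Illustrative counts only: 1000 pixels, 100 of which are tumor.
m = segmentation_metrics(tp=80, fp=20, fn=20, tn=880)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

The example also shows why IoU is the stricter overlap measure: for the same counts, Dice and IoU are related by Dice = 2·IoU / (1 + IoU), so Dice is never smaller than IoU.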

4. Datasets and Experimental Design

Currently, three publicly available liver tumor imaging datasets are widely used: the LiTS dataset from the Liver Tumor Segmentation Challenge 2017 Database, the 3D-IRCADb01 dataset from the 3D Image Reconstruction for Comparison of Algorithm Database, and the MSD Task08 dataset from the Medical Segmentation Decathlon Task08 Database.

The LiTS dataset is a CT imaging dataset specifically designed for liver tumor segmentation. It comprises 3D CT scans collected from multiple medical centers, including 131 cases for training and 70 for testing. The 3D-IRCADb01 dataset consists of 3D CT scans from 20 patients (10 female and 10 male), the majority of whom have liver tumors. It contains 20 folders, each corresponding to an individual patient. Among the various annotated structures, the ground truth for liver tumors is selected for analysis. The MSD Task08 dataset includes 443 3D CT volumes, divided into 303 training cases and 140 testing cases.

To ensure annotation accuracy, all datasets were reverified and corrected by experienced radiologists.

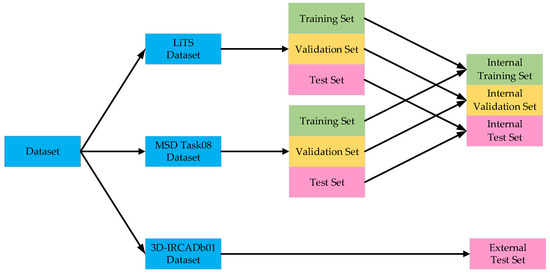

In the aforementioned liver tumor datasets, the test sets do not provide ground truth annotations for liver tumors; therefore, they were excluded from the experimental samples. Specifically, the LiTS dataset contains 118 annotated tumor cases, the 3D-IRCADb01 dataset includes 15 annotated cases, and the MSD Task08 dataset provides 303 annotated cases. To avoid evaluation bias caused by data leakage, the training, validation, and test sets were constructed based on patient-level partitioning. The LiTS and MSD Task08 datasets were each divided into training, validation, and test sets using an 8:1:1 ratio. The training sets from both datasets were merged, and the same strategy was applied to the validation and test sets. Furthermore, given the significantly lower number of tumor cases compared to normal cases in the datasets, non-tumor cases were excluded from the training, validation, and test sets to mitigate the impact of class imbalance and enhance the model’s ability to learn liver tumor features. To evaluate the generalization capability of the proposed model, the 3D-IRCADb01 dataset was used as an independent external test set. The dataset partitioning scheme and detailed results are illustrated in Figure 7 and Table 1.

Figure 7.

Dataset partitioning results.

Table 1.

Detailed dataset partitioning results.
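Patient-level partitioning can be sketched as follows. This is a minimal illustration, assuming each case is identified by a patient ID string and that rounding remainders go to the test set; the ID format, the seed, and the rounding rule are hypothetical, so the resulting counts are not claimed to match Table 1.

```python
import random


def split_patients(patient_ids, ratios=(8, 1, 1), seed=0):
    """Split unique patient IDs into train/val/test so no patient spans two sets."""
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)
    total = sum(ratios)
    n_train = len(ids) * ratios[0] // total
    n_val = len(ids) * ratios[1] // total
    return {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],  # remainder goes to the test set
    }


# Hypothetical IDs for the 118 annotated LiTS tumor cases
lits_ids = [f"lits_{i:03d}" for i in range(118)]
lits_split = split_patients(lits_ids)
print({name: len(ids) for name, ids in lits_split.items()})
```

Splitting by patient rather than by slice is what prevents slices from one scan appearing in both training and evaluation data; slices are assigned to a set only after their patient has been assigned.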

To improve the model’s generalizability across multi-source datasets and harmonize spatial scale differences among LiTS, MSD Task08, and 3D-IRCADb01—which exhibit substantial variability in scanning parameters such as slice thickness and voxel spacing—all images were resampled to a uniform voxel resolution. This standardization ensures consistent spatial resolution of the liver region across all images, thereby minimizing distribution discrepancies caused by differences in scanning equipment and centers.

To enhance the effectiveness and compatibility of image representation, and considering that liver tissue in CT images typically falls within approximately 40 to 70 Hounsfield Units (HU), this study truncates the CT image HU values to the interval [−400, 400], which comfortably covers the liver and its surrounding soft tissue. The images are first clipped to this range, followed by linear normalization to map the pixel values to the [0, 255] scale. Subsequently, the data are converted to uint8 format to meet the input data type and value range requirements of the neural network. Finally, the processed images are saved in JPG format, with corresponding annotations stored as PNG files.
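The intensity preprocessing can be expressed in a few lines. The sketch below assumes rounding to the nearest integer when converting to the 8-bit range (the text does not say whether values are rounded or truncated); the function name is hypothetical.

```python
def window_hu_to_uint8(hu_values, lower=-400.0, upper=400.0):
    """Clip HU values to [lower, upper] and map them linearly to integers in [0, 255]."""
    out = []
    for hu in hu_values:
        clipped = min(max(hu, lower), upper)
        scaled = (clipped - lower) / (upper - lower) * 255.0
        out.append(int(round(scaled)))  # rounding is an assumption
    return out


# Air, window edges, water, typical liver tissue, and bone-like values
samples = [-1000.0, -400.0, 0.0, 55.0, 400.0, 1000.0]
pixels = window_hu_to_uint8(samples)
print(pixels)
```

Everything below −400 HU (air, lung) collapses to 0 and everything above 400 HU (dense bone) collapses to 255, so the available 256 gray levels are spent on soft tissue.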

To improve model robustness and mitigate potential overfitting caused by the limited number of training samples, various data augmentation strategies were employed during training. These included random rotations (±15°) and random scaling (with a scale factor ranging from 0.9 to 1.1), which effectively increased sample diversity without significantly compromising the structural integrity of the original images. It is important to note that data augmentation was applied only to the training set, while the validation and test sets were kept unchanged to ensure the objectivity and comparability of model evaluation results.
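A minimal sketch of how the augmentation parameters might be sampled is given below. It assumes uniform sampling within the stated ranges and builds the 2 × 2 rotation-scaling matrix only; applying it to pixels (with the same transform for image and mask, and nearest-neighbor interpolation for the mask so that labels stay discrete) is left to an image library. The function name and seed are hypothetical.

```python
import math
import random


def sample_affine(rng, max_angle_deg=15.0, scale_range=(0.9, 1.1)):
    """Sample a rotation angle and scale factor; return them with the 2 x 2 matrix."""
    angle = rng.uniform(-max_angle_deg, max_angle_deg)
    scale = rng.uniform(scale_range[0], scale_range[1])
    theta = math.radians(angle)
    matrix = [
        [scale * math.cos(theta), -scale * math.sin(theta)],
        [scale * math.sin(theta), scale * math.cos(theta)],
    ]
    return angle, scale, matrix


rng = random.Random(42)  # fixed seed so the example is repeatable
angle, scale, matrix = sample_affine(rng)
determinant = matrix[0][0] * matrix[1][1] - matrix[0][1] * matrix[1][0]
print(angle, scale, determinant)
```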

To ensure the reproducibility and reliability of the liver tumor semantic segmentation experiments, all experiments were conducted on a fixed hardware and software platform. The hardware environment consisted of a workstation running Ubuntu 22.04, equipped with an Intel Core i7-9700K processor (3.80 GHz), an NVIDIA GeForce RTX 2080 Ti GPU, and 32 GB of RAM, which collectively met the computational and memory demands of deep learning model training and inference. The software environment was based on the PyTorch 1.12.1 deep learning framework and Python 3.7.13 for model development and training. CUDA 11.6 and cuDNN 8.5.x libraries were integrated to fully leverage GPU parallelism and significantly accelerate the training process.

Regarding hyperparameter settings, the model was trained for 50 epochs with a batch size of 4. A cosine annealing learning rate schedule was employed, starting from $1 \times 10^{-4}$ and gradually decreasing to $1 \times 10^{-7}$. This strategy enables rapid convergence in the early training stages while helping to prevent overfitting in later epochs. The Adam optimizer, known for its efficient adaptive learning rate mechanism, was adopted to facilitate fast and stable model convergence. Details of the experimental environment and hyperparameter configurations are summarized in Table 2.

Table 2.

Experimental environment and hyperparameter settings.
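The learning rate trajectory implied by these settings can be written in closed form. The sketch below assumes a single cosine cycle spanning all 50 epochs with per-epoch updates and no warm-up or restarts, none of which is stated explicitly in the text; the function name is hypothetical.

```python
import math


def cosine_annealing_lr(epoch, total_epochs=50, lr_max=1e-4, lr_min=1e-7):
    """Learning rate at a given epoch for a single cosine annealing cycle."""
    cosine = math.cos(math.pi * epoch / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + cosine)


schedule = [cosine_annealing_lr(e) for e in range(51)]
print(schedule[0], schedule[25], schedule[50])
```

The rate stays close to its initial value during the first epochs, falls fastest around the midpoint, and flattens out near the minimum, which matches the intended behavior of fast early progress followed by fine adjustment.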

5. Loss Function Design

In the semantic segmentation task of liver tumors, Focal Loss and Dice Loss were adopted as the primary loss functions to guide network optimization during training.

Focal Loss is a loss function specifically designed to address the issue of class imbalance. In liver tumor segmentation tasks, the tumor regions typically occupy only a small portion of the entire image, resulting in a severely imbalanced class distribution that negatively impacts model training. To mitigate this issue, Focal Loss was introduced as the primary supervised loss function. By introducing a modulating factor, Focal Loss effectively down-weights easy-to-classify examples, encouraging the model to focus more on hard-to-classify samples. This mechanism alleviates class imbalance and enhances the model’s attention to informative examples. Moreover, Focal Loss emphasizes low-confidence and difficult samples, which helps the model better attend to boundary regions and hard-to-segment areas, thereby improving its ability to learn and represent task-critical regions. The formulation of Focal Loss is given in Equation (20):

$$
L_{\mathrm{Focal}} = -(1 - p_t)^{\gamma} \log(p_t) \tag{20}
$$

where $p_t$ denotes the predicted probability for the true class. In the context of liver tumor segmentation, if the current pixel belongs to the tumor class, then $p_t$ represents the confidence score for the tumor; otherwise, $p_t$ indicates the confidence for the background. $\gamma$ is the modulating factor. Setting $\gamma$ to 1 effectively enhances the model’s sensitivity in identifying tumor regions, particularly for lesions with indistinct boundaries or small volumes.

Dice Loss is a widely used loss function in image segmentation tasks that evaluates model performance by measuring the similarity between the predicted and ground truth regions. In liver tumor segmentation, Dice Loss quantifies the spatial overlap between the model’s segmentation output and the true tumor region. Compared to loss functions such as cross-entropy, Dice Loss is more robust to class imbalance, as it focuses on the overlap of regions rather than individual sample classes. The formulation of Dice Loss is presented in Equation (21):

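$$L_{\mathrm{Dice}} = 1 - \frac{2\sum_{i} p_i g_i + \varepsilon}{\sum_{i} p_i + \sum_{i} g_i + \varepsilon} \tag{21}$$

where $p_i$ represents the model’s prediction for liver tumor pixels, $g_i$ denotes the ground truth labels of the tumor pixels, and $\varepsilon$ is a smoothing term, typically set to a small positive constant to prevent numerical instability caused by division by zero.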
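A minimal sketch of a Dice loss over flattened pixel lists is given below. It assumes the common soft-Dice form, one minus twice the overlap divided by the sum of prediction and label mass, with the smoothing term added to numerator and denominator. The function name, the default `smooth` value, and the example masks are illustrative assumptions.

```python
def dice_loss(pred, target, smooth=1e-6):
    """Soft Dice loss over flattened pixels (assumed common form).

    pred   -- predicted tumor probabilities in [0, 1], one per pixel
    target -- ground-truth labels (1 = tumor, 0 = background), one per pixel
    smooth -- small positive constant that avoids division by zero
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same number of pixels")
    intersection = sum(p * g for p, g in zip(pred, target))
    total_mass = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + smooth) / (total_mass + smooth)


# Hypothetical 1-D "images" with a small tumor region.
labels = [0, 0, 1, 1, 0, 0, 0, 0]
perfect = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
missed = [1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

print("perfect overlap:", dice_loss(perfect, labels))
print("no overlap:", dice_loss(missed, labels))
print("empty prediction and label:", dice_loss([0.0] * 4, [0] * 4))
```

Because the loss depends only on the overlap and the sizes of the predicted and true regions, the large number of correctly classified background pixels does not dilute it, which is the robustness to class imbalance described above.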

In this study, Focal Loss and Dice Loss were used jointly. This combination addresses class imbalance while emphasizing accurate alignment of segmentation boundaries. The two losses are summed to form a composite objective function, as expressed in Equation (22):

$$L_{\mathrm{Total}} = L_{\mathrm{Focal}} + \lambda L_{\mathrm{Dice}} \tag{22}$$

where $\lambda$ is set to 1, which balances class imbalance handling and region matching accuracy without introducing additional tuning complexity.

6. Experimental Results and Performance Analysis

6.1. Ablation Experiments Analysis

To validate the effectiveness of the proposed liver tumor semantic segmentation method, an ablation study was conducted in this section. Specifically, D1 denotes the Deformable Large Kernel Attention module, C represents the Context Extraction mechanism, D2 stands for the Dual Cross Attention mechanism, and D1CD2U-Net refers to the proposed segmentation network. Modules were progressively ablated to analyze their individual contributions to liver tumor segmentation performance. Furthermore, seven segmentation metrics—Precision, Recall, Dice, IoU, MIoU, Accuracy, and MPA—were employed to evaluate the algorithm’s performance. The detailed ablation results are presented in Table 3.

Table 3.

Ablation study results.

To evaluate the impact of the Deformable Large Kernel Attention module on liver tumor segmentation performance, an ablation study was conducted by removing this module and observing changes in segmentation metrics. The results indicate that, compared to the full D1CD2U-Net, the CD2U-Net without the Deformable Large Kernel Attention module exhibits decreases in Precision, Recall, and IoU, reflecting a notable decline in the model’s ability to extract liver tumor features. This demonstrates that the Deformable Large Kernel Attention mechanism plays a critical role in the model and significantly contributes to liver tumor segmentation performance.

To assess the effect of the Dual Cross Attention mechanism on liver tumor segmentation, this module was removed and the changes in performance metrics were observed. Relative to D1CD2U-Net, D1CU-Net, which lacks the Dual Cross Attention module, shows reductions in Precision, Recall, and IoU, indicating limitations in feature fusion. This suggests that the Dual Cross Attention mechanism effectively enhances the integration of deep and shallow features within the model.

To evaluate the impact of the Context Extraction mechanism, the same procedure was applied to this module. Compared with D1CD2U-Net, the D1D2U-Net variant exhibits decreased Precision, Recall, and IoU, reflecting a reduced capability to capture global information. These findings indicate that the Context Extraction mechanism is beneficial for this task and contributes to improved model performance in liver tumor segmentation.

Overall, the ablation study validates the contribution of the Deformable Large Kernel Attention module, the Context Extraction mechanism, and the Dual Cross Attention mechanism to liver tumor segmentation performance. D1CD2U-Net achieves the best performance across all evaluation metrics, confirming the roles of these modules in feature representation and information integration, as well as their synergistic effects. Specifically, Deformable Large Kernel Attention enhances feature extraction, capturing fine-grained structural details of the liver and tumor regions; the Context Extraction module focuses on global semantic modeling, providing contextual information that allows the model to identify tumor regions accurately even in complex backgrounds; and the Dual Cross Attention module strengthens local feature representation through cross-channel feature fusion, improving sensitivity to edge details and small lesions. Context Extraction and Dual Cross Attention are functionally complementary, with limited overlap: Context Extraction, for instance, can also provide additional information enhancement in multi-scale feature interactions. The two modules are therefore not entirely independent but act in synergy: global semantic guidance from Context Extraction enables Dual Cross Attention to perform more precise local feature fusion, jointly improving the model’s segmentation accuracy, stability, and adaptability to complex tumor morphologies.

6.2. Performance Comparison with State-of-the-Art Methods

6.2.1. Performance Comparison on the Internal Test Set

To demonstrate the performance advantages of the proposed liver tumor semantic segmentation method, this study conducted a comprehensive comparison between D1CD2U-Net and nine state-of-the-art network architectures, including U-Net, FCN, LinkNet, PSPNet, DeeplabV3+, Unext, AG-Net, MISSFormer, and TransCeption. Consistent with the ablation study, seven segmentation metrics—Precision, Recall, Dice, IoU, MIoU, Accuracy, and MPA—were utilized to evaluate the algorithm’s performance. The detailed comparison results are presented in Table 4.

Table 4.

Comparison results on the internal test set.

In the field of liver tumor segmentation, numerous classical network architectures (such as U-Net, FCN, LinkNet, PSPNet, DeeplabV3+, Unext, AG-Net, MISSFormer, and TransCeption) have been proposed, each incorporating distinctive feature extraction and fusion strategies aimed at improving segmentation accuracy. U-Net achieves deep multi-scale feature fusion through skip connections and feature concatenation. FCN and LinkNet employ feature weighting strategies to optimize feature integration. PSPNet introduces a pyramid pooling module to enhance the integration of global contextual information. DeeplabV3+ leverages atrous spatial pyramid pooling to expand the receptive field for contextual awareness. Unext improves sensitivity to local features through multilayer perceptron-based designs. AG-Net enhances the response to critical features by combining attention mechanisms with structure-preserving techniques. MISSFormer and TransCeption adopt Transformer-based architectures, which significantly enhance the model’s global modeling capability.

Although current mainstream models have achieved notable progress in liver tumor segmentation, the evaluation metrics presented in Table 4 reveal limitations in both feature fusion and feature extraction across these architectures. The feature fusion mechanisms in U-Net, FCN, LinkNet, and AG-Net struggle to effectively bridge the semantic gap between shallow and deep features. The feature extraction strategies employed by all the comparative models remain insufficient for capturing the complex and fine-grained characteristics of tumor regions, thereby limiting their ability to achieve highly precise segmentation. While PSPNet, DeeplabV3+, and Unext demonstrate effective deep feature extraction, they are limited in their ability to capture broader global contextual information. MISSFormer and TransCeption focus primarily on global context modeling but tend to neglect the extraction of local details. In response to these challenges, this study proposes the D1CD2U-Net model, which incorporates a series of carefully designed enhancements to address the aforementioned limitations.

In the encoder stage of the model, a Deformable Large Kernel Attention mechanism is effectively integrated. This module is designed to enhance the network’s sensitivity to tumor regions while mitigating the loss of critical features during the downsampling process, thereby enabling more accurate and efficient capture of tumor-related features. Furthermore, a Context Extraction mechanism is introduced at the bottleneck layer. By expanding the receptive field and integrating multi-scale information, this module significantly improves the network’s ability to capture global contextual features, providing richer and more comprehensive support for subsequent segmentation tasks. In addition, the conventional skip connection strategy is replaced with a Dual Cross Attention mechanism. This mechanism not only facilitates effective communication and fusion between features at different scales—thereby alleviating the semantic gap—but also greatly enhances the network’s global modeling capability. As a result, the model is able to make more accurate and robust segmentation decisions when dealing with complex and heterogeneous liver tumor images.

According to the evaluation metrics, the proposed D1CD2U-Net consistently outperforms competing models in terms of Precision, Recall, Dice, IoU, MIoU, Accuracy, and MPA. A high Precision score indicates the model’s strong accuracy in identifying tumor regions, while a high Recall reflects its high sensitivity in detecting tumor instances. The high Dice coefficient further validates the model’s superior performance in segmenting tumor regions. As the harmonic mean of Precision and Recall, Dice reflects both the sensitivity and consistency of the model in identifying overlapping tumor regions, highlighting its effectiveness in capturing local structural accuracy. The elevated values of IoU and MIoU indicate the model’s spatial coverage accuracy in both binary and multi-class segmentation tasks. Specifically, IoU quantifies the ratio between the intersection and union of predicted and ground truth regions, emphasizing the model’s exactness in covering target areas. As the average IoU across all classes, MIoU reflects the model’s consistency and generalization capability across various categories. The high values of Accuracy and MPA further demonstrate the model’s classification performance at both global and pixel levels. The combined performance across all metrics confirms the efficiency and accuracy of the D1CD2U-Net in liver tumor segmentation tasks. In particular, the jointly high values of Dice and IoU demonstrate that the model can not only accurately localize tumor regions but also maintain strong agreement with manual annotations—an essential attribute for supporting precision diagnosis and clinical decision-making.
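The seven metrics discussed above can all be derived from the pixel-level confusion counts of a binary tumor mask. The sketch below assumes the usual definitions, with MIoU taken as the mean of tumor and background IoU and MPA as the mean of per-class pixel accuracy. The function names and the example masks are hypothetical, and the definitions used in this study may differ in detail.

```python
def confusion_counts(pred, target):
    """Count TP, FP, FN, TN for flattened binary masks (1 = tumor)."""
    tp = fp = fn = tn = 0
    for p, g in zip(pred, target):
        if p and g:
            tp += 1
        elif p and not g:
            fp += 1
        elif g:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def _ratio(num, den):
    # Return 0.0 for an empty denominator instead of raising.
    return num / den if den else 0.0


def segmentation_metrics(pred, target):
    """Assumed standard definitions of the seven reported metrics."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    recall = _ratio(tp, tp + fn)            # pixel accuracy of the tumor class
    background_recall = _ratio(tn, tn + fp)  # pixel accuracy of the background
    iou_tumor = _ratio(tp, tp + fp + fn)
    iou_background = _ratio(tn, tn + fp + fn)
    return {
        "Precision": _ratio(tp, tp + fp),
        "Recall": recall,
        "Dice": _ratio(2 * tp, 2 * tp + fp + fn),
        "IoU": iou_tumor,
        "MIoU": (iou_tumor + iou_background) / 2,
        "Accuracy": _ratio(tp + tn, tp + fp + fn + tn),
        "MPA": (recall + background_recall) / 2,
    }


# Hypothetical flattened masks: 2 true positives, 1 false positive,
# 1 false negative, 4 true negatives.
example_pred = [1, 1, 0, 0, 1, 0, 0, 0]
example_target = [1, 0, 0, 0, 1, 1, 0, 0]
example_metrics = segmentation_metrics(example_pred, example_target)
for name, value in example_metrics.items():
    print(f"{name}: {value:.4f}")
```

In this toy example Accuracy (0.75) exceeds IoU (0.50) because true-negative background pixels count toward Accuracy but not toward tumor IoU, which is why overlap-based metrics are the more demanding indicators when tumors are small.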

6.2.2. Performance Comparison on the External Test Set

To rigorously evaluate the generalization capability of the D1CD2U-Net model, the 3D-IRCADb01 dataset was selected as an independent test benchmark. This choice provides an assessment of the model’s real-world effectiveness on previously unseen data and avoids potential biases associated with the original test set. The detailed comparison results are presented in Table 5.

Table 5.

Comparison results on the external test set.

D1CD2U-Net performs competitively across the key evaluation metrics. Specifically, it achieved a Precision of 0.9607, significantly outperforming all comparative models and indicating high consistency between predicted tumor regions and ground truth. The model also attained a Recall of 0.7421, reflecting its ability to identify most actual liver tumor regions. Although this score leaves room for improvement, D1CD2U-Net surpasses the other models in reducing false negatives.

Notably, its Dice score reached 0.8376. As a metric evaluating overlap between predicted and annotated regions, this highlights the model’s sensitivity to tumor boundaries and morphological details. Additionally, the model achieved an IoU of 0.7203, directly quantifying segmentation accuracy. The MIoU of 0.8587 further confirms its high accuracy and consistency for all classes.

D1CD2U-Net also attained an Accuracy of 0.9972, demonstrating exceptional classification performance, and an MPA of 0.8709, indicating high pixel-level classification precision. These results reflect the model’s robustness in liver tumor segmentation and its capability to handle complex medical imaging data. In summary, D1CD2U-Net exhibits superior segmentation performance and generalization ability for liver tumor segmentation tasks.
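As a quick consistency check on the external-test-set values quoted above, Dice can be recomputed as the harmonic mean of Precision and Recall, and IoU can be recomputed from Dice. The sketch below assumes these identities, which hold exactly only when all metrics come from the same pooled pixel counts; if the reported values are averaged per case or per run, small deviations are expected. The function names are illustrative.

```python
def dice_from_precision_recall(precision, recall):
    """Dice as the harmonic mean of Precision and Recall."""
    return 2 * precision * recall / (precision + recall)


def iou_from_dice(dice):
    """IoU implied by a Dice score computed on the same pixel counts."""
    return dice / (2 - dice)


# Values quoted in the text for D1CD2U-Net on the external test set.
reported_precision = 0.9607
reported_recall = 0.7421
reported_dice = 0.8376
reported_iou = 0.7203

implied_dice = dice_from_precision_recall(reported_precision, reported_recall)
implied_iou = iou_from_dice(reported_dice)

print(f"implied Dice: {implied_dice:.4f} (reported {reported_dice})")
print(f"implied IoU:  {implied_iou:.4f} (reported {reported_iou})")
```

Both implied values agree with the reported ones to within about 0.0003, so the four figures are mutually consistent.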

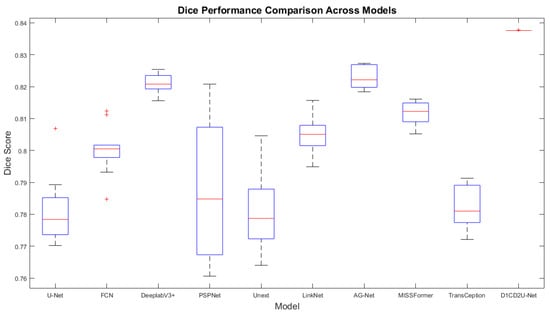

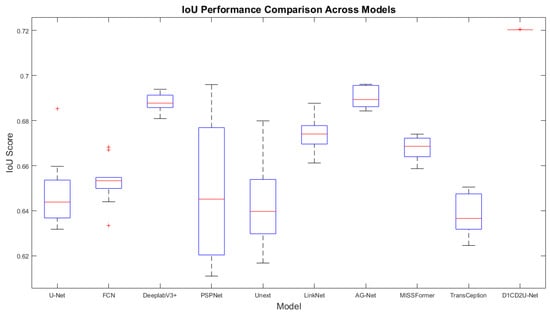

6.3. Significance Analysis of the Model

Averaged evaluation metrics alone do not provide statistical verification of a method’s improvement. To quantify the performance gains of the proposed approach relative to other models, this section employs the paired t-test to assess the significance of differences using p-values, accompanied by boxplots for visual illustration, thereby enhancing the reliability and interpretability of the results. The red markers in the boxplots represent outliers. The experimental results are presented in Table 6 and Table 7, as well as Figure 8, Figure 9, Figure 10 and Figure 11. All model performance metrics are reported as the mean ± standard deviation across 10 independent experiments. A two-tailed paired t-test was used to compare each model against the improved model (D1CD2U-Net). An asterisk (*) indicates that the performance difference relative to the improved model is statistically significant (p < 0.05); this convention applies to both Table 6 and Table 7.
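The paired comparison described above can be sketched in pure Python. Instead of computing a p-value, this minimal version compares the paired t statistic with the two-tailed critical value for 9 degrees of freedom (10 paired runs) at the 0.05 level, which is an equivalent decision rule. The score lists are placeholders invented for illustration and are not results from this study; the function and variable names are likewise hypothetical.

```python
import math
import statistics

# Two-tailed critical value of Student's t for alpha = 0.05 and 9 degrees of
# freedom, taken from a standard t-table.
T_CRIT_TWO_TAILED_05_DF9 = 2.262


def paired_t_statistic(scores_a, scores_b):
    """Paired t statistic: mean difference divided by its standard error."""
    if len(scores_a) != len(scores_b):
        raise ValueError("paired samples must have equal length")
    if len(scores_a) < 2:
        raise ValueError("at least two paired observations are required")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd == 0.0:
        raise ValueError("all paired differences are identical; t is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


# Placeholder Dice scores from 10 hypothetical runs (NOT the paper's data).
hypothetical_proposed = [0.84, 0.85, 0.83, 0.86, 0.84, 0.85, 0.84, 0.83, 0.85, 0.84]
hypothetical_baseline = [0.80, 0.82, 0.80, 0.81, 0.81, 0.80, 0.82, 0.79, 0.81, 0.80]

t_stat = paired_t_statistic(hypothetical_proposed, hypothetical_baseline)
is_significant = abs(t_stat) > T_CRIT_TWO_TAILED_05_DF9

print(f"t = {t_stat:.3f}, significant at p < 0.05: {is_significant}")
```

Pairing matters because each run of the baseline is compared with the corresponding run of the proposed model, so variation shared between the two runs cancels in the differences. When SciPy is available, `scipy.stats.ttest_rel` returns the same statistic together with the p-value.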

Table 6.

Dice coefficient and IoU on internal test set.

Table 7.

Dice coefficient and IoU on the external test set.

Figure 8.

Boxplot of Dice scores on the internal test set.

Figure 9.

Boxplot of IoU scores on the internal test set.

Figure 10.

Boxplot of Dice scores on the external test set.

Figure 11.

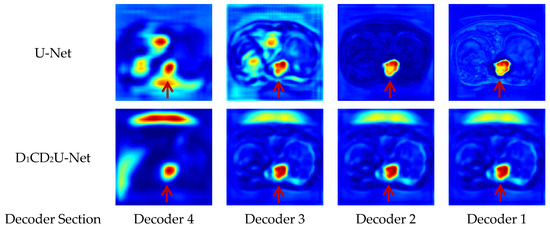

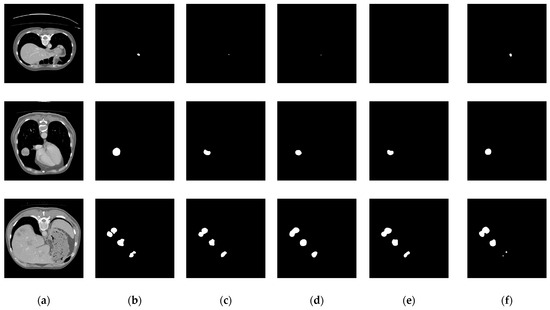

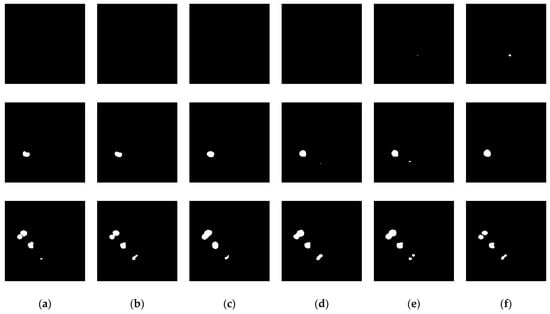

Boxplot of IoU scores on the external test set.