Abstract

Accurate detection of Alzheimer’s disease (AD) is critical yet challenging for early medical intervention. Deep learning methods, especially convolutional neural networks (CNNs), have shown promising potential for improving diagnostic accuracy using magnetic resonance imaging (MRI). This study aims to identify the most informative combination of MRI slice orientation and anatomical location for AD classification. We propose an automated framework that first selects the most relevant slices using a feature entropy-based method applied to activation maps from a pretrained CNN model. For classification, we employ a lightweight CNN architecture based on depthwise separable convolutions to efficiently analyze the selected 2D MRI slices extracted from preprocessed 3D brain scans. To further interpret model behavior, an attention mechanism is integrated to analyze which feature level contributes the most to the classification process. The model is evaluated on three binary tasks: AD vs. mild cognitive impairment (MCI), AD vs. cognitively normal (CN), and MCI vs. CN. The experimental results show the highest accuracy (97.4%) in distinguishing AD from CN when utilizing the selected slices from the ninth axial segment, followed by the tenth segment of coronal and sagittal orientations. These findings demonstrate the significance of slice location and orientation in MRI-based AD diagnosis and highlight the potential of lightweight CNNs for clinical use.

1. Introduction

Alzheimer’s disease is an age-related disease that causes damage to the brain nerve cells (neurons) responsible for primary human activities such as remembering, speaking, and thinking. Once symptoms appear and the clinical diagnosis is confirmed, the disease becomes irreversible, making a diagnosis at its early stages an urgent requirement for medical intervention to slow down its progression []. AD progresses in three stages: preclinical AD with brain biomarkers, MCI, and dementia due to AD [,]. Although MCI is commonly associated with AD, not all patients develop the disease. While AD can be confirmed after death by histopathological examination, it can also be assessed during an individual’s lifetime using clinical examination and neuroimaging techniques [,,].

Magnetic resonance imaging is the most commonly used neuroimaging technique in AD research because its high-resolution visualization reveals relevant details such as hippocampal atrophy and cortical thinning [,,]. Additionally, MRI is non-invasive and safe even when repeated many times, making it ideal for long-term monitoring of disease progression [,]. However, processing 3D MRI images is computationally expensive, as each image contains millions of voxels, which has led researchers to explore methods that reduce the computational overhead [,].

Deep learning techniques, particularly CNNs, have been investigated intensively in AD research because they can automatically extract relevant features rather than depending on handcrafted ones. Combining CNNs with 3D and 2D MRI has shown relatively good results in distinguishing AD from MCI and CN states [,,,,].

MRI scans are typically visualized in three standard volumetric orientations: axial, coronal, and sagittal. Each orientation offers a different perspective on the brain’s structure, and a particular orientation of a specific brain location may provide more critical information for identifying AD-related changes. Investigating the influence of these factors is therefore crucial for maximizing the performance of neuroimaging-based models. Although MRI slice orientation and selection significantly affect classification performance, they have not been studied extensively, and existing studies leave several gaps: they investigate a single orientation or a specific brain segment, or they rely on complicated machine learning techniques for the feature extraction phase. We summarize these limitations as follows:

- Limited comparisons across various orientations of the brain;

- Lack of clear definitions for guided selection of brain slices;

- Reliance on complicated models in the existing literature.

Therefore, in this study, we present a lightweight deep learning model to investigate the impact of brain slice orientation and anatomical segmentation on AD classification. The model employs depthwise separable convolutions, significantly reducing training time without sacrificing performance. To enhance the diagnostic relevance of the input data, we introduce an automated slice selection mechanism based on feature entropy computed from a pretrained CNN (MobileNetV2) activation map. This method systematically selects the most informative slice from each 3D MRI segment across axial, coronal, and sagittal views. The key contributions of this research are summarized as follows:

- A comprehensive analysis of the influence of MRI slice orientation on AD classification;

- Introduction of a feature entropy-based slice selection method that identifies the most informative slice per brain segment using CNN activation maps;

- Development of a simple yet effective lightweight CNN model based on depthwise separable convolutions for efficient classification;

- Brain slice analysis using a consistent partitioning strategy to evaluate region-wise diagnostic importance;

- Introduction of an attention mechanism for feature-level interpretation.

This study provides a foundation for further investigation into MRI-based AD diagnosis by identifying optimal combinations of slice orientation and location for enhanced classification accuracy.

2. Related Work

Many studies have investigated AD detection using deep learning techniques, focusing on neuroimaging, in which MRI and positron emission tomography (PET) scans have received the highest interest. In this study, we focused on MRI scans. It is essential to effectively use the available 3D MRIs for Alzheimer’s disease diagnosis in a way that facilitates future research through identifying the most relevant 2D slices and orientations to be extracted from these 3D scans. Most previous studies in this domain have relied heavily on random slice selection or focused on a specific brain orientation, while others have employed complex and extensive machine learning techniques to specify relevant slices. We also noticed that some achieved relatively low accuracy when compared to other state-of-the-art methods.

Kim et al. [] proposed a slice-selective method based on a generative adversarial network for PET scans. The brain area considered in their research ranges from the amygdala to the end of the posterior cingulate cortex (PCC), chosen because AD causes anatomical and pathological changes in this region. Their binary AD vs. CN classifier was trained on the selected coronal slices, without considering the axial and sagittal orientations, and achieved 92% and 94% accuracy using single and double slices, respectively.

Similarly focusing on slice orientation, Ramalho et al. [] highlighted the importance of MRI orientation for AD diagnosis by studying how different orientations affect the classification of MCI versus CN subjects with a CNN model. Although all three orientations were investigated, the study did not specify which slices or brain regions were analyzed within each orientation, and the classification addressed only MCI vs. CN, without considering the AD stage.

From a different perspective, Puente-Castro et al. [] focused on the sagittal plane, which is less commonly used than the other two orientations. Using transfer learning, they showed that the sagittal plane is as effective as the other planes. However, the authors did not specify which brain region was used in their research.

In contrast, De Souza et al. [] introduced a hybrid model that combined machine learning and transfer learning for AD prediction from MRI scans. They employed a genetic algorithm for slice selection; the selected slices were then passed to a transfer learning model based on EfficientNetV2S for feature extraction and classification. The experiments were applied to male and female MRIs separately and combined, with AD, CN, and MCI as the three classes. A multiclass classification was performed using a one-vs.-all scheme (AD vs. all, MCI vs. all, and CN vs. all). All three brain planes were considered, and the slice selection pipeline identified the relevant slices from every orientation, choosing adjacent slices only.

Inan et al. [] proposed a similar hybrid pipeline combining machine learning and deep learning for slice selection from 3D MRI images. Random forest and gradient boosting were used to select the 16 most relevant slices, and a transfer learning model based on EfficientNetV2S extracted features and performed binary classification of CN vs. AD, CN vs. MCI, and AD vs. MCI, achieving accuracies of 83.64%, 82.69%, and 71.40%, respectively.

Ghosh et al. [] proposed a deep learning model trained in a federated manner to detect Alzheimer’s disease from MRI images, addressing privacy, consistency, and robustness. MobileNet was initialized with weights pretrained on the ImageNet database. The coronal, sagittal, and axial planes were explored to choose the best orientation for AD detection. However, the authors did not specify which coronal slice was selected for model training, leaving room for further exploration of the most informative regions within the coronal plane.

Beyond structural MRI, Khatri et al. [] integrated MRI and functional MRI (fMRI) to identify AD biomarkers. A support vector machine (SVM) and a random forest (RF) were used to classify the data; they worked with a specific frequency range and considered 48 axial slices of the fMRI. Finally, Bi et al. [] employed unsupervised techniques: PCANet, an unsupervised CNN, for feature extraction, followed by k-means clustering for AD diagnosis. Table 1 summarizes the most relevant articles investigating the influence of different orientations and slice selection methodologies on AD classification, comparing the datasets, methods, orientations, and data types used.

Table 1.

Summary of the related work.

3. Materials and Methods

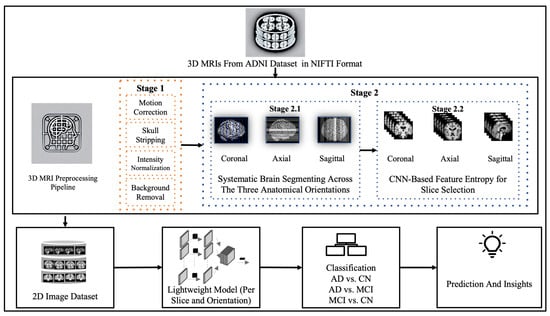

This section describes the dataset and methodologies used. Figure 1 illustrates the overall framework of this study, beginning with data acquisition and preprocessing, followed by the selection of 2D slices for the three primary brain orientations. Next, selected slices from each partition and orientation were fed into the lightweight CNN to gain insights about the best combination for Alzheimer’s disease detection.

Figure 1.

Overview of the research workflow.

3.1. The Alzheimer’s Disease Neuroimaging Initiative (ADNI) Dataset

ADNI is a large multicenter research project launched primarily to study biomarkers for early AD detection. It has contributed significantly to machine and deep learning studies in this area by offering large, high-resolution neuroimaging datasets, and it is recognized internationally as a trusted source of experimental data on Alzheimer’s disease. Access to the ADNI dataset requires permission: we applied through the Image and Data Archive (IDA) and received approval on 21 June 2023. From the ADNI1 collection, we downloaded 3495 3D MRI scans, divided into AD, MCI, and CN as shown in Table 2.

Table 2.

Details of ADNI subjects.

3.2. MRI Preprocessing

Preparing medical images for analysis is a critical step in developing effective deep learning models, and several preprocessing procedures are needed before images can be fed to the proposed architecture. To ensure accurate classification results, we prepared the 3D MRI images obtained from the ADNI dataset for further processing and analysis. We used FreeSurfer, a well-known open-source tool designed mainly for processing structural MRI scans. Its autorecon1 pipeline was applied to perform essential procedures, including (1) motion correction, (2) skull stripping, and (3) intensity normalization, and we added a fourth, non-FreeSurfer step: (4) background removal. These four processes are explained as follows:

- Motion correction compensates for minor head movements that may occur during scanning, ensuring that all slices in one scan are aligned.

- Skull stripping removes all non-brain tissues from MRI scans to isolate the brain, which is a crucial step for AD classification.

- Intensity normalization adjusts the intensities of MRI voxels to a standard range to enhance the contrast and consistency in the images.

- Background removal crops away the background to eliminate most of the remaining non-brain elements.

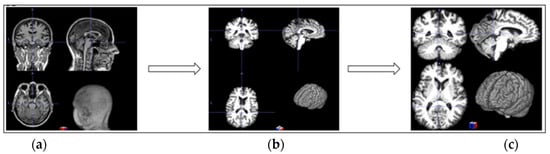

Although FreeSurfer is widely used for processing medical images, it is time-consuming: on our machine, autorecon1 took approximately 30 min per image. Given that our dataset contained over 3450 images, serial processing would have required more than 1700 h (over 70 days). To address this problem, we parallelized the preprocessing across eight cores, which substantially reduced the total time and made the pipeline practical for large-scale analysis. Figure 2 shows the preprocessing stage.
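The parallel preprocessing step and the timing estimate above can be sketched in Python. This is a minimal illustration, not the authors’ script: it assumes FreeSurfer is installed with `recon-all` on the `PATH` and that each raw scan is a single NIfTI file, and it uses a hypothetical naming rule (`subject_id_from_path`) to derive FreeSurfer subject IDs. With the default `dry_run=True`, it only builds the commands.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

MINUTES_PER_SCAN = 30  # approximate autorecon1 runtime per scan reported above
N_WORKERS = 8          # number of cores used in parallel


def estimate_hours(n_scans, workers=1, minutes_per_scan=MINUTES_PER_SCAN):
    """Idealized wall-clock estimate: independent scans spread evenly over workers."""
    waves = -(-n_scans // workers)  # ceiling division: rounds of concurrent jobs
    return waves * minutes_per_scan / 60


def subject_id_from_path(path):
    """Hypothetical naming rule: the file name without its .nii/.nii.gz extension."""
    name = Path(path).name
    for ext in (".nii.gz", ".nii"):
        if name.endswith(ext):
            return name[: -len(ext)]
    return name


def autorecon1_command(scan_path, subjects_dir):
    """Build a recon-all call that runs only the autorecon1 stage for one scan."""
    return [
        "recon-all",
        "-s", subject_id_from_path(scan_path),
        "-i", str(scan_path),
        "-sd", str(subjects_dir),
        "-autorecon1",
    ]


def run_all(scan_paths, subjects_dir, workers=N_WORKERS, dry_run=True):
    """Run autorecon1 for every scan with `workers` concurrent recon-all processes."""
    commands = [autorecon1_command(p, subjects_dir) for p in scan_paths]
    if dry_run:
        return commands  # inspect the commands without invoking FreeSurfer
    # Threads are sufficient: each worker only waits on an external process.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda cmd: subprocess.run(cmd, check=False), commands))
    return [r.returncode for r in results]
```

For example, `estimate_hours(3450)` gives 1725.0 h for serial processing, while `estimate_hours(3450, workers=8)` gives 216.0 h (9 days) under the idealized assumption that each of the eight concurrent jobs keeps the 30 min runtime; contention for memory and disk usually makes the real speed-up smaller.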

Figure 2.

Three-dimensional MRI preprocessing: (a) original image; (b) preprocessed with FreeSurfer autorecon1; (c) background removal.

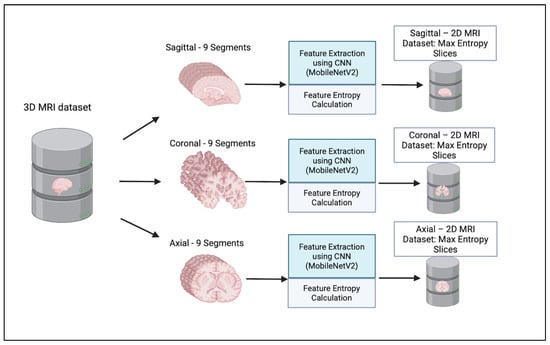

3.3. Automated 2D Slice Selection

To enable consistent analysis of the influence of 2D MRI slice orientation and location across subjects in AD diagnosis, we developed an automated slice selection framework based on entropy computed from CNN feature maps. This method identifies the most informative slices from 3D MRI volumes while preserving anatomically and diagnostically relevant structures.

Each 3D image was partitioned into fifteen equally sized segments along each of the three standard orientations: axial, coronal, and sagittal. Let $V \in \mathbb{R}^{H \times W}$ denote a normalized 2D MRI slice extracted from a segment $S$, and let $FE$ denote the feature extractor of the pretrained CNN. Each slice $V$ is passed through the CNN to obtain a 3D activation map, as in (1):

$$A = FE(V) \in \mathbb{R}^{H' \times W' \times C} \tag{1}$$

where $C$ is the number of feature channels and $H' \times W'$ is the spatial size of the activation map. For each channel $A_c$, we compute the Shannon entropy as shown in (2):

$$H(A_c) = -\sum_{i=1}^{B} p_i \log p_i \tag{2}$$

where $p_i$ is the estimated probability of the $i$-th bin of the normalized histogram of pixel intensities in $A_c$, and $B$ is the number of histogram bins. Equation (3) defines the overall entropy of slice $V$ as the average entropy across all channels:

$$H(V) = \frac{1}{C} \sum_{c=1}^{C} H(A_c) \tag{3}$$

The slice with the highest mean entropy is selected as the most informative representative of that segment:

$$V^{*} = \arg\max_{V \in S} H(V) \tag{4}$$
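The entropy criterion of Equations (2) and (3) and the arg-max selection rule can be illustrated with a short NumPy sketch. It rests on stated assumptions: `feature_extractor` is any callable returning an (H′, W′, C) activation map (in the study this role is played by the pretrained MobileNetV2, for example as loaded by `tf.keras.applications.MobileNetV2(include_top=False, weights="imagenet")`, although the exact layer used is not specified here), the histogram uses a hypothetical default of 256 bins, and entropy is measured in bits.

```python
import numpy as np


def channel_entropy(channel, bins=256):
    """Shannon entropy (bits) of one activation channel, as in Eq. (2).

    p_i comes from a normalized histogram of the channel's values; the bin
    count is an assumption, since the text does not specify it.
    """
    counts, _ = np.histogram(channel, bins=bins)
    p = counts / counts.sum()
    p = p[p > 0]  # empty bins contribute nothing (0 * log 0 is taken as 0)
    return float(-np.sum(p * np.log2(p)))


def slice_entropy(activation):
    """Mean channel entropy H(V) of an (H', W', C) activation map, as in Eq. (3)."""
    return float(np.mean([channel_entropy(activation[..., c])
                          for c in range(activation.shape[-1])]))


def select_most_informative(segment_slices, feature_extractor):
    """Index of the slice with maximal H(V) within one segment, plus all scores."""
    scores = [slice_entropy(feature_extractor(v)) for v in segment_slices]
    return int(np.argmax(scores)), scores
```

A slice of constant intensity yields zero entropy in every channel, so any textured slice from the same segment scores higher; this is the behavior the selection rule relies on to skip uninformative slices.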

In the sagittal plane, the brain was divided from left to right into vertical slices parallel to the midline. In the axial plane, the brain was segmented from top to bottom into horizontal cross-sections. In the coronal plane, the division proceeded from the back to the front of the brain, producing vertical slices that move from the back of the skull toward the forehead. Restricting the search to each defined anatomical segment lets the selection method identify the most informative slice from each region independently. This reduces the risk of overlooking critical features that a global search over the whole volume might miss, and it enhances the interpretability and relevance of the selected slices.

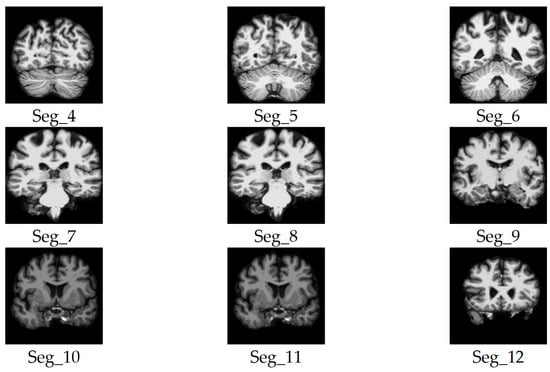

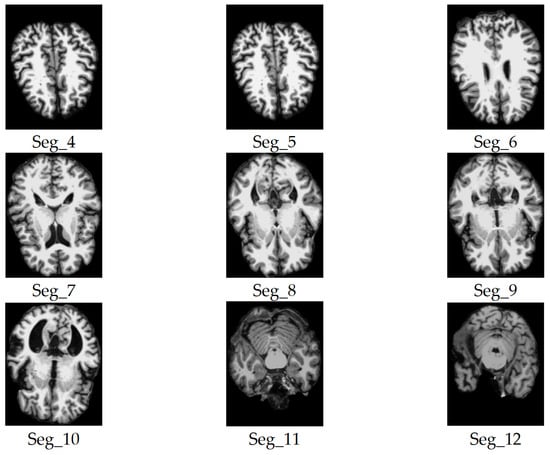

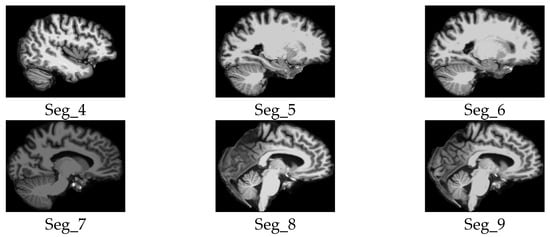

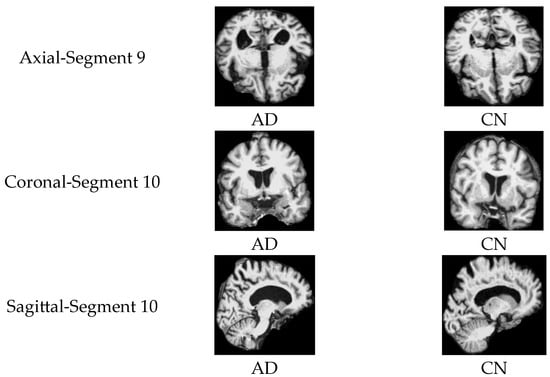

We considered only the nine central segments (segments 4 to 12), discarding the three outermost segments at each end of the brain, because they contain partial rather than complete brain structures, which limits their diagnostic value. Figure 3 illustrates the 2D slice selection process. Figure 4, Figure 5, and Figure 6 show samples of the selected 2D images.
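The partitioning into fifteen equally sized segments and the restriction to the nine central ones can be sketched as follows. The axis-to-plane mapping is an assumption that holds for FreeSurfer’s conformed (LIA) output; volumes in another orientation must be reoriented first, and the slice order along an axis may need flipping to match the left-to-right, top-to-bottom, and back-to-front ordering described above. `np.array_split` is used so that segment sizes differ by at most one slice when the slice count is not divisible by 15.

```python
import numpy as np

N_SEGMENTS = 15
N_EDGE = 3  # outermost segments dropped at each end, leaving segments 4..12

# Assumed axis layout for a FreeSurfer-conformed (LIA) volume; check the
# orientation of other volumes, e.g. with nibabel.aff2axcodes(img.affine).
PLANE_AXIS = {"sagittal": 0, "axial": 1, "coronal": 2}


def central_segments(volume, plane, n_segments=N_SEGMENTS, n_edge=N_EDGE):
    """Split `volume` along the axis of `plane` into equal segments.

    Returns {segment_number (1-based): stack of 2D slices, shape (n_slices, ., .)}
    for the central segments only.
    """
    axis = PLANE_AXIS[plane]
    index_groups = np.array_split(np.arange(volume.shape[axis]), n_segments)
    segments = {}
    for k in range(n_edge, n_segments - n_edge):
        block = np.take(volume, index_groups[k], axis=axis)
        segments[k + 1] = np.moveaxis(block, axis, 0)  # slices along the first axis
    return segments
```

Each returned stack can then be scored slice by slice with the entropy criterion to pick one representative per segment.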

Figure 3.

Two-dimensional MRI slice selection process.

Figure 4.

Samples of the selected slices of the coronal plane segments.

Figure 5.

Samples of the selected slices of the axial plane segments.

Figure 6.

Samples of the selected slices of the sagittal plane segments.

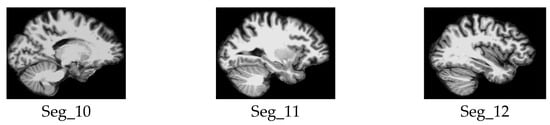

3.4. Proposed Lightweight CNN with Attention-Based Fusion

Our primary goal was to create a lightweight and efficient model for three binary classification tasks: AD vs. CN, AD vs. MCI, and MCI vs. CN. These comparisons were chosen to answer our research question by identifying the orientations and brain segments that contribute most to precise diagnostic outcomes. Additionally, attention-based feature fusion makes the model interpretable by highlighting which level of features influenced each decision, thereby enhancing clinical relevance.

The proposed architecture is a multi-layered network built on a depth-wise separable CNN, which serves as the model backbone. The choice of a lightweight design was motivated not only by the need to reduce computational overhead but also by a commitment to the principles of sustainable artificial intelligence (Green AI). By significantly reducing the number of floating-point operations (FLOPs), the model minimizes energy consumption, which in turn helps lower carbon emissions.

We propose a lightweight convolutional neural network architecture enhanced with hierarchical attention fusion to improve Alzheimer’s disease classification from 2D MRI slices. The model combines depthwise separable convolutions, which reduce computational cost, with a custom attention mechanism that maintains the relevance of spatial features. A complete overview of the architecture is illustrated in Figure 7.

Figure 7.

Complete overview of the proposed model.

Instead of relying only on features from the last layer, the model uses hierarchical feature extraction: it collects and integrates features from multiple levels of abstraction, capturing both fine-grained structural details and broader anatomical patterns.

The proposed architecture takes an input image of size 150 × 150 × 3. It consists of three sequential convolutional blocks, each comprising depthwise separable convolutions with 3 × 3 kernels followed by a max-pooling layer with a 2 × 2 kernel. Each block captures a distinct level of abstraction:

- Block 1 focuses on low-level visual features such as edges, textures, and intensity gradients;

- Block 2 captures mid-level representations, including shapes and localized anatomical patterns;

- Block 3 encodes high-level semantic information, such as complex structures or regions.

This multiscale feature extraction enables the model to analyze brain MRI slices by learning attention weights across these blocks and emphasizing the most relevant level of abstraction for each input. The output of each block is flattened and projected into a shared 32-dimensional latent space using fully connected layers. These feature vectors are normalized to ensure consistent statistical properties across blocks.

To determine the relative importance of each block’s features, an attention mechanism is introduced. The three normalized vectors are stacked along a new block axis, forming a 3 × 32 feature matrix. This matrix is processed by shared dense layers, which learn higher-level representations of each block’s features and produce one attention logit per block. A softmax across the three blocks converts these logits into attention weights that sum to one, so that the network learns a relevance-weighted fusion of features for the classification task. Each weight then scales its corresponding feature vector through element-wise multiplication, allowing the model to emphasize the most informative feature level.

The attention-weighted vectors are aggregated via summation to produce a fused feature representation, which is then passed through a final dense layer with sigmoid activation to generate the binary classification output.
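To make the fusion step concrete, the following NumPy sketch implements the forward pass for a single input: normalization of the three 32-dimensional block vectors, a shared dense layer producing one logit per block, a softmax over blocks, the weighted sum, and the sigmoid output. The normalization (per-vector layer normalization), the hidden size of 16, the `tanh` activation, and the random weights from the hypothetical `init_params` are illustrative assumptions; in the actual model these weights are learned during training.

```python
import numpy as np


def layer_norm(x, eps=1e-6):
    """Normalize each block vector to zero mean and unit variance (assumed scheme)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def softmax(z):
    """Softmax over the last axis; subtracting the max keeps exp() stable."""
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def init_params(dim=32, hidden=16, seed=0):
    """Random weights standing in for trained ones (hypothetical sizes)."""
    rng = np.random.default_rng(seed)
    return {
        "w_hidden": rng.normal(0.0, 0.1, (dim, hidden)),
        "b_hidden": np.zeros(hidden),
        "w_logit": rng.normal(0.0, 0.1, (hidden, 1)),
        "b_logit": np.zeros(1),
        "w_out": rng.normal(0.0, 0.1, dim),
        "b_out": 0.0,
    }


def attention_fusion(block_features, params):
    """Fuse the projected features of Blocks 1-3 for one input.

    block_features: array of shape (3, dim), one row per block.
    Returns (probability of the positive class, attention weights of shape (3,)).
    """
    x = layer_norm(np.asarray(block_features, dtype=float))          # (3, dim)
    hidden = np.tanh(x @ params["w_hidden"] + params["b_hidden"])    # shared dense layer
    logits = (hidden @ params["w_logit"] + params["b_logit"])[:, 0]  # one logit per block
    alpha = softmax(logits)                                          # weights sum to 1
    fused = (alpha[:, None] * x).sum(axis=0)                         # weighted sum, (dim,)
    z = fused @ params["w_out"] + params["b_out"]
    return float(1.0 / (1.0 + np.exp(-z))), alpha                    # sigmoid output
```

Averaging the returned `alpha` vectors over the test images of a segment gives the kind of per-level contribution (low, mid, high) that is later reported for each orientation and task.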

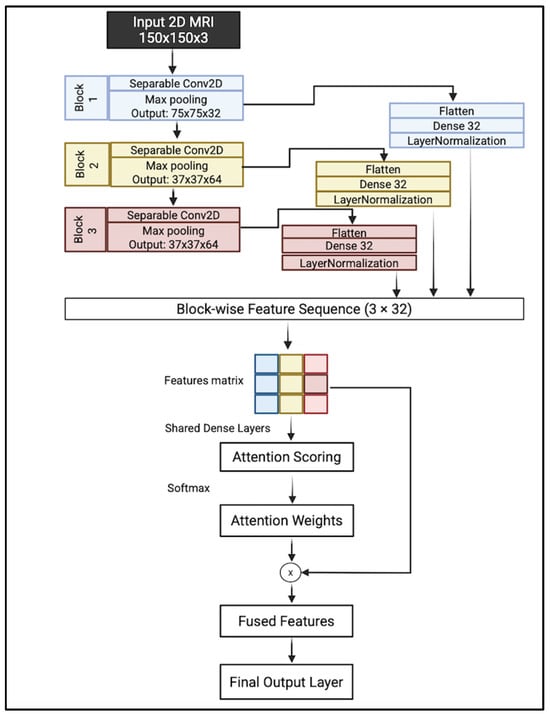

Depthwise Separable Convolution (DSC)

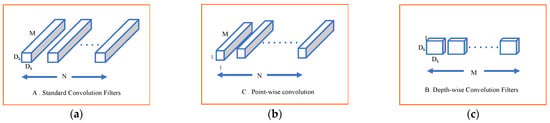

Our model employs depthwise separable convolution (DSC), a type of convolution designed to reduce the computational complexity and number of parameters of conventional convolutional layers. DSC factorizes a standard convolution into a depthwise convolution followed by a pointwise convolution. A depthwise convolution applies a single convolutional filter to each input channel separately, instead of combining all input channels as in standard convolution. The pointwise convolution, a 1 × 1 convolution, then combines the outputs of the depthwise convolution across channels. Figure 8 illustrates the depthwise and pointwise convolutions. By applying this separation, DSC dramatically reduces the computational cost, as shown in (5) for standard convolution and (6) for DSC [].

Figure 8.

(a) Standard CNN filters; (b) depth-wise convolution; (c) point-wise convolution.

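$$\text{Cost}_{\text{standard}} = D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F \tag{5}$$

$$\text{Cost}_{\text{DSC}} = D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F \tag{6}$$

In these expressions, $D_K$ is the kernel size, $M$ is the number of input channels, $N$ is the number of output channels, and $D_F$ is the spatial width and height of the (square) feature map.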
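Equations (5) and (6) can be checked numerically. Dividing (6) by (5) gives the relative cost $\frac{1}{N} + \frac{1}{D_K^2}$, so with 3 × 3 kernels the saving approaches a 9-fold reduction as $N$ grows. The layer sizes in the example below ($M = 32$, $N = 64$, $D_F = 75$) are hypothetical and not taken from the proposed model; the functions count multiply-accumulate operations, and FLOP figures are sometimes reported as twice this number.

```python
def standard_conv_cost(d_k, m, n, d_f):
    """Multiply-accumulate count of a standard convolution, Eq. (5)."""
    return d_k * d_k * m * n * d_f * d_f


def dsc_cost(d_k, m, n, d_f):
    """Depthwise cost plus pointwise (1 x 1) cost, Eq. (6)."""
    depthwise = d_k * d_k * m * d_f * d_f  # one d_k x d_k filter per input channel
    pointwise = m * n * d_f * d_f          # 1 x 1 convolution mixing the channels
    return depthwise + pointwise


def relative_cost(d_k, m, n, d_f):
    """Ratio Eq. (6) / Eq. (5), which simplifies to 1/N + 1/D_K^2."""
    return dsc_cost(d_k, m, n, d_f) / standard_conv_cost(d_k, m, n, d_f)
```

With these sizes, the standard layer needs 103,680,000 multiply-accumulates and the DSC layer 13,140,000, a ratio of about 0.127 (that is, 1/64 + 1/9).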

4. Experimental Results

4.1. Experimental Environment and Platform

All experiments were conducted using Python 3.11.7 in Jupyter Notebook (via the Anaconda distribution, notebook server version 6.5.7) on a MacBook Pro with an Apple M1 chip (10 cores), 16 GB of memory, and macOS Sonoma. The model was trained with the Adam optimizer (learning rate 0.001) and binary cross-entropy loss for 20 epochs with a batch size of 32. Stratified 5-fold cross-validation was applied, with 10% of the data held out for testing in each fold.
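The evaluation protocol admits more than one reading; the scikit-learn sketch below shows one of them, assumed here for illustration: a stratified 10% test split is held out, and stratified 5-fold cross-validation is run on the remaining 90%. The `seed` value is arbitrary. The training settings listed above (Adam, learning rate 0.001, binary cross-entropy, 20 epochs, batch size 32) would then be applied within each fold.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, train_test_split


def make_splits(labels, n_folds=5, test_fraction=0.10, seed=42):
    """Hold out a stratified test set, then build stratified CV folds on the rest.

    Returns (test_idx, folds), where folds is a list of (train_idx, val_idx)
    arrays of indices into the original data.
    """
    labels = np.asarray(labels)
    all_idx = np.arange(len(labels))
    dev_idx, test_idx = train_test_split(
        all_idx, test_size=test_fraction, stratify=labels, random_state=seed)
    skf = StratifiedKFold(n_splits=n_folds, shuffle=True, random_state=seed)
    dev_labels = labels[dev_idx]
    folds = [
        (dev_idx[tr], dev_idx[va])  # map fold positions back to original indices
        for tr, va in skf.split(np.zeros(len(dev_idx)), dev_labels)
    ]
    return test_idx, folds
```

Keeping the test indices outside every fold ensures that no test image influences model selection in any fold.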

4.2. Evaluation Metrics

Before discussing the performance metrics, it is essential to define four fundamental terms: true positive (TP), true negative (TN), false positive (FP), and false negative (FN). These terms describe the outcomes of the model’s predictions on MRI scans from the ADNI dataset, which were classified in three binary tasks built from the three classes AD, MCI, and CN. In each task, the first-named class is the positive class:

- AD vs. MCI
    - TP: The patient is correctly predicted as having AD when they have Alzheimer’s disease.
    - TN: The patient is correctly predicted as having MCI when they have mild cognitive impairment.
    - FP: The model incorrectly predicts AD when the patient has mild cognitive impairment.
    - FN: The model incorrectly predicts MCI when the patient has Alzheimer’s disease.
- AD vs. CN
    - TP: The patient is correctly predicted as having AD when they have Alzheimer’s disease.
    - TN: The patient is correctly predicted as CN when they are cognitively normal.
    - FP: The model incorrectly predicts AD when the patient is cognitively normal.
    - FN: The model incorrectly predicts CN when the patient has Alzheimer’s disease.
- MCI vs. CN
    - TP: The patient is correctly predicted as having MCI when they have mild cognitive impairment.
    - TN: The patient is correctly predicted as CN when they are cognitively normal.
    - FP: The model incorrectly predicts MCI when the patient is cognitively normal.
    - FN: The model incorrectly predicts CN when the patient has mild cognitive impairment.

Several performance metrics were used to assess the importance of different orientations and slice locations for AD diagnosis. First, the computational load was assessed by calculating the number of FLOPs. In addition, an attention-based feature importance analysis was conducted to determine which blocks the model relies on most in the classification process. Finally, each classification experiment was evaluated using standard performance metrics: accuracy, precision, sensitivity (recall), specificity, F1 score, and the area under the receiver operating characteristic curve (AUC).

1. Accuracy is the proportion of correctly classified MRIs (TP and TN) among all predictions, as shown in Equation (7):

   $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{7}$$

2. Precision is the proportion of correctly predicted positive MRIs (TP) among all positive predictions (TP and FP), as shown in Equation (8):

   $$\text{Precision} = \frac{TP}{TP + FP} \tag{8}$$

3. Sensitivity measures the model’s ability to detect positive cases (patients with AD or MCI, depending on the task). High sensitivity is especially important in the medical field [] because it reflects how well the model identifies actual disease cases, reducing the FN rate. It is calculated as in Equation (9):

   $$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{9}$$

4. Specificity measures the model’s ability to correctly identify negative cases (the second-named class in each task). It is essential for minimizing FPs in clinical diagnosis and is calculated as in Equation (10):

   $$\text{Specificity} = \frac{TN}{TN + FP} \tag{10}$$

5. The F1 score is the harmonic mean of precision and sensitivity. We include it because, in a sensitive medical setting [], both missed cases and false alarms matter. It is calculated using Equation (11):

   $$F1 = \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}} \tag{11}$$

6. The AUC is the area under the receiver operating characteristic (ROC) curve, which plots the TP rate (TPR) against the FP rate (FPR) across classification thresholds.
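Equations (7) to (11) and the AUC can be computed from predicted probabilities with the NumPy sketch below, taking label 1 as the positive class of each task (AD in AD vs. MCI and AD vs. CN; MCI in MCI vs. CN). The 0.5 decision threshold matches a sigmoid output but is an assumption, as are the zero-division fallbacks to 0.0. The AUC is computed through its rank interpretation: the probability that a randomly chosen positive scores above a randomly chosen negative, with ties counted as one half.

```python
import numpy as np


def confusion_counts(y_true, y_score, threshold=0.5):
    """TP, TN, FP, FN with label 1 as the positive class."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_score) >= threshold
    tp = int(np.sum(y_pred & y_true))
    tn = int(np.sum(~y_pred & ~y_true))
    fp = int(np.sum(y_pred & ~y_true))
    fn = int(np.sum(~y_pred & y_true))
    return tp, tn, fp, fn


def auc_score(y_true, y_score):
    """ROC AUC via pairwise comparison of positive and negative scores."""
    y_true = np.asarray(y_true).astype(bool)
    y_score = np.asarray(y_score, dtype=float)
    pos, neg = y_score[y_true], y_score[~y_true]
    diff = pos[:, None] - neg[None, :]  # every positive-negative pair
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


def binary_metrics(y_true, y_score, threshold=0.5):
    """Accuracy, precision, sensitivity, specificity, F1 (Eqs. 7-11) and AUC."""
    tp, tn, fp, fn = confusion_counts(y_true, y_score, threshold)
    precision = tp / (tp + fp) if tp + fp else 0.0
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        "f1": (2 * precision * sensitivity / (precision + sensitivity)
               if precision + sensitivity else 0.0),
        "auc": auc_score(y_true, y_score),
    }
```

For instance, with labels `[1, 1, 0, 0]` and scores `[0.9, 0.4, 0.6, 0.1]`, each threshold-based metric equals 0.5, while the AUC is 0.75 because three of the four positive-negative pairs are ranked correctly.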

4.3. Results and Discussion

To benchmark our approach, we compared the computational cost of our model, measured in FLOPs, with that of three well-known lightweight architectures: MobileNetV1, MobileNetV2, and EfficientNetB0 (Table 3). The proposed model has the lowest cost, requiring approximately 78.1 million FLOPs. This is a substantial reduction in computational complexity: about 74% less than MobileNetV2 and over 80% less than MobileNetV1 and EfficientNetB0.

Table 3.

FLOPs Comparison of Proposed and Baseline Lightweight Models.

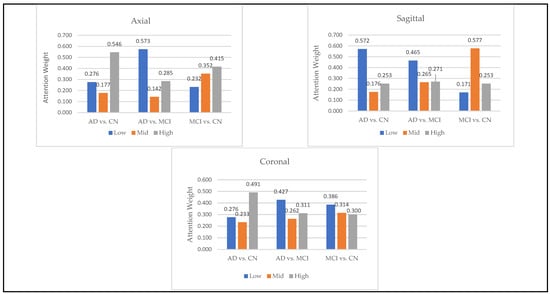

To analyze the role of hierarchical features, we calculated the average attention weights assigned to low-, mid-, and high-level features across segments, as shown in Figure 9. These correspond to features extracted from Block 1 (low-level), Block 2 (mid-level), and Block 3 (high-level) of the convolutional architecture. The results revealed that high-level features were most impactful for AD vs. CN classification, especially in the axial and coronal orientations, whereas mid-level features played a larger role in differentiating MCI from CN in sagittal views. In the AD vs. MCI task, the model relied more on low-level features, suggesting that fine spatial details (such as edges and textures) may help capture the subtle differences between these two conditions.

Figure 9.

Contribution of Low, Mid, and High Feature Levels in Alzheimer’s Classification Tasks.

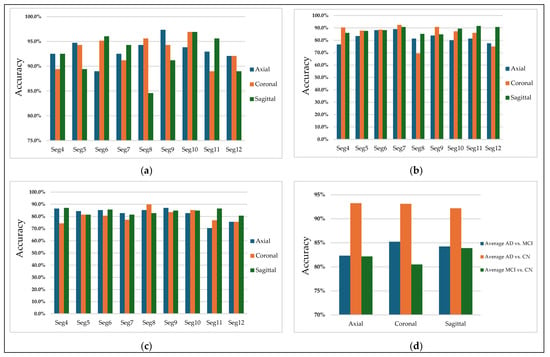

Figure 10 shows the classification accuracy for different slices and illustrates the effect of orientation on the model’s performance. The first three subplots, (a), (b), and (c), correspond to the binary classification tasks AD vs. MCI, AD vs. CN, and MCI vs. CN, respectively. Each subplot plots the accuracy obtained with the selected slice from each of the nine segments (Seg-4 to Seg-12) for the sagittal, coronal, and axial orientations. The fourth subplot, (d), shows the average accuracy of the three orientations across all slices.

Figure 10.

Classification accuracy for (a) AD vs. CN, (b) AD vs. MCI, (c) MCI vs. CN, and (d) Average accuracy across all slices.

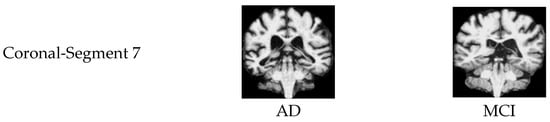

Subplot (a), corresponding to AD vs. MCI, shows that the selected slices from segment 7 of the coronal plane carry the most discriminative features for distinguishing Alzheimer’s disease from mild cognitive impairment, achieving an accuracy of 92%. Figure 11 illustrates sample images that achieved the highest accuracies in classifying AD vs. MCI.

Figure 11.

Samples of 2D MRI slices achieving the highest classification accuracies for AD vs. MCI.

Subplot (b), which corresponds to the AD vs. CN classification task, shows that the highest accuracies, reaching 97%, were achieved with three orientation–segment combinations: the axial plane with segment 9, and the coronal and sagittal planes with segment 10. Figure 12 shows samples of these images. These results suggest that these combinations carry more discriminative features for distinguishing Alzheimer’s disease from cognitively normal subjects than the others, as evidenced by their higher classification accuracy. The graph further shows that the central segments of the brain play the most significant role in classification on the axial and coronal planes, whereas on the sagittal plane the edge segments have a greater effect on the prediction.

Figure 12.

Samples of 2D MRI slices achieving the highest classification accuracies for AD vs. CN.

Notably, the ninth axial segment yielded the highest classification accuracy in distinguishing AD from CN. This strong performance can be attributed to its anatomical coverage of the medial temporal lobe, including the hippocampus and surrounding structures, regions commonly affected in early Alzheimer’s pathology. It is further supported by the attention weights, which show that high-level features contributed most to the classification (49.1%), suggesting that the model relied on disease-relevant atrophic patterns present in this slice.

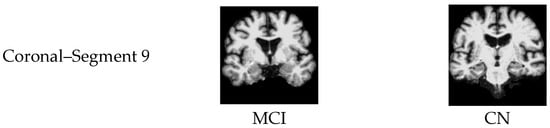

In general, the MCI vs. CN task, shown in subplot (c), yielded the lowest accuracies of the three classification tasks. The coronal plane played the most significant role in identifying MCI patients. Figure 13 illustrates samples of 2D MRI slices that achieved the highest accuracies in classifying MCI vs. CN.

Figure 13.

Samples of 2D MRI slices achieving the highest classification accuracies for MCI vs. CN.

Finally, subplot (d) illustrates a comparative analysis of the average accuracy of all slices for the three orientations. AD vs. CN shows the highest average accuracy among all selected slices from all segments, suggesting that distinguishing Alzheimer’s disease from cognitively normal individuals is more straightforward using CNN-based features.

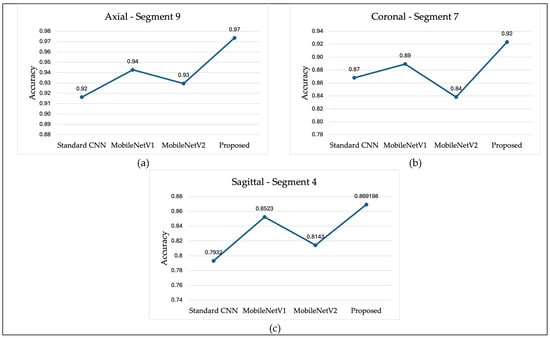

Figure 14 compares the classification accuracy of the proposed method with that of several baseline models: MobileNetV1, MobileNetV2, and a conventional three-layer CNN. Subfigure (a) shows the accuracy for the AD vs. CN task using axial plane slices from Segment 9, subfigure (b) the AD vs. MCI task using coronal plane slices from Segment 7, and subfigure (c) the MCI vs. CN task using sagittal plane slices from Segment 4. In all three comparisons, the proposed model achieved higher classification accuracy than the standard CNN and both versions of MobileNet. Notably, MobileNetV1 generally performed better than MobileNetV2 and the standard CNN.

Figure 14.

Classification Accuracy Comparison (a) AD vs. CN, (b) AD vs. MCI, (c) MCI vs. CN.

Table 4, Table 5, and Table 6 present detailed results for the accuracy, precision, sensitivity, specificity, F1 score, and AUC for the comparisons of AD vs. CN, AD vs. MCI, and MCI vs. CN, respectively. Table 4 illustrates that, as previously mentioned, Segment 9 in the axial orientation obtained the highest accuracy. Axial and coronal slices showed comparable average accuracies (93.2% and 93.1%, respectively), slightly outperforming the sagittal view (92.2%).

Table 4.

AD vs. CN.

Table 5.

AD vs. MCI.

Table 6.

MCI vs. CN.

Table 5 shows that among the three orientations, coronal slices achieved the highest average accuracy (85.2%), with Segment 7 performing best, followed closely by Segment 9. The sagittal plane also showed strong performance in Segments 10 and 11. In contrast, axial slices demonstrated comparatively lower average accuracy, with Segment 7 being the most discriminative.

Table 6 shows that, on average, sagittal slices achieved the highest accuracy, particularly in Segments 4, 6, and 11. The axial orientation yielded comparable results, with an average accuracy of 82.2% and its best performance in Segment 9. Coronal slices had the lowest average accuracy, although Segment 8 reached 89.9%. These results suggest that mid-to-edge segments in the sagittal and axial planes are more informative for distinguishing MCI from CN.
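As Tables 5 and 6 illustrate, the best single segment of an orientation and that orientation's average accuracy can point in different directions. For example, coronal Segment 8 is strong for MCI vs. CN even though the coronal average is the lowest. The Python sketch below reports both quantities for one task. The input layout, the function names, and the toy values are hypothetical and are not taken from the tables.

```python
from statistics import mean


def summarize_orientations(seg_acc):
    """Per orientation: best segment, its accuracy, and the mean over segments.

    seg_acc[orientation][segment] -> accuracy (%) for one classification task.
    """
    summary = {}
    for orientation, per_segment in seg_acc.items():
        best = max(per_segment, key=per_segment.get)  # first segment wins ties
        summary[orientation] = {
            "best_segment": best,
            "best_accuracy": per_segment[best],
            "mean_accuracy": mean(per_segment.values()),
        }
    return summary


def rank_orientations(summary):
    """Orientations sorted by mean accuracy, highest first."""
    return sorted(summary, key=lambda o: summary[o]["mean_accuracy"], reverse=True)


# Toy values for illustration only (NOT the study's results).
toy = {
    "axial": {7: 80.0, 9: 86.0},
    "coronal": {8: 89.0, 9: 75.0},
    "sagittal": {4: 88.0, 6: 87.0},
}
summary = summarize_orientations(toy)
ranking = rank_orientations(summary)
```

In the toy input, coronal has the single best segment but ranks last on average, which is the pattern the text describes for MCI vs. CN.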

5. Conclusions

In this study, we investigated how the orientation of 2D MRI slices (axial, coronal, and sagittal), together with the segment location of the selected slices, affects the performance of a lightweight CNN model for AD diagnosis. The images were derived from 3D MRI scans in the ADNI dataset and extracted along all three orientations. The model was evaluated using several performance metrics. The results showed a clear influence of both orientation and slice position. The model performed best in distinguishing AD from CN subjects. Among all configurations, axial slices yielded the most consistent performance across multiple segments, and axial Segment 9 achieved the highest overall accuracy of 97.4%. Coronal slices also showed strong results in Segments 8 through 11, whereas the sagittal orientation performed best in Segment 10, with an accuracy of 96.9%.

In conclusion, this work shows that the combination of slice orientation and slice location has a significant impact on classification performance, and that a careful choice of these parameters can improve lightweight CNN-based Alzheimer’s disease classification. Future research may extend these findings by combining the most informative segments to explore cross-orientation fusion strategies, or by validating the results on larger multi-site datasets to assess their generalizability.

Author Contributions

Conceptualization, N.A.M. and M.H.A.; methodology, N.A.M.; software, N.A.M.; validation, N.A.M. and M.H.A.; formal analysis, N.A.M. and M.H.A.; investigation, N.A.M.; resources, N.A.M. and M.H.A.; data curation, N.A.M.; writing—original draft preparation, N.A.M.; writing—review and editing, M.H.A.; visualization, N.A.M.; supervision, M.H.A.; project administration, N.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available at https://adni.loni.usc.edu/ (accessed on 11 July 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ewers, M.; Sperling, R.A.; Klunk, W.E.; Weiner, M.W.; Hampel, H. Neuroimaging Markers for the Prediction and Early Diagnosis of Alzheimer’s Disease Dementia. Trends Neurosci. 2011, 34, 430–442.

- Better, M.A. Alzheimer’s disease facts and figures. Alzheimers Dement. 2023, 19, 1598–1695.

- Zhou, Q.; Wang, J.; Yu, X.; Wang, S.; Zhang, Y. A Survey of Deep Learning for Alzheimer’s Disease. Mach. Learn. Knowl. Extr. 2023, 5, 611–668.

- Ramalho, B.A.C.; Bortolato, L.R.; Gomes, N.D.; Wichert-Ana, L.; Padovan-Neto, F.E.; da Silva, M.A.A.; de Lacerda, K.J.C.C. The Impact of the Orientation of MRI Slices on the Accuracy of Alzheimer’s Disease Classification Using Convolutional Neural Networks (CNNs). J. Med. Artif. Intell. 2024, 7, 35.

- Yang, H.; Xu, H.; Li, Q.; Jin, Y.; Jiang, W.; Wang, J.; Wu, Y.; Li, W.; Yang, C.; Li, X.; et al. Study of Brain Morphology Change in Alzheimer’s Disease and Amnestic Mild Cognitive Impairment Compared with Normal Controls. Gen. Psychiatr. 2019, 32, e100005.

- Bi, X.; Li, S.; Xiao, B.; Li, Y.; Wang, G.; Ma, X. Computer Aided Alzheimer’s Disease Diagnosis by an Unsupervised Deep Learning Technology. Neurocomputing 2020, 392, 296–304.

- Frisoni, G.; Fox, N.; Jack, C. The clinical use of structural MRI in Alzheimer disease. Nat. Rev. Neurol. 2010, 6, 67–77.

- Oliveira, M.J.; Ribeiro, P.; Rodrigues, P.M. Machine Learning-Driven GLCM Analysis of Structural MRI for Alzheimer’s Disease Diagnosis. Bioengineering 2024, 11, 1153.

- Qiang, Y.-R.; Zhang, S.-W.; Li, J.-N.; Li, Y.; Zhou, Q.-Y. Diagnosis of Alzheimer’s Disease by Joining Dual Attention CNN and MLP Based on Structural MRIs, Clinical and Genetic Data. Artif. Intell. Med. 2023, 145, 102678.

- Mohi ud din dar, G.; Bhagat, A.; Ansarullah, S.I.; Othman, M.T.B.; Hamid, Y.; Alkahtani, H.K.; Ullah, I.; Hamam, H. A Novel Framework for Classification of Different Alzheimer’s Disease Stages Using CNN Model. Electronics 2023, 12, 469.

- Illakiya, T.; Ramamurthy, K.; Siddharth, M.V.; Mishra, R.; Udainiya, A. AHANet: Adaptive Hybrid Attention Network for Alzheimer’s Disease Classification Using Brain Magnetic Resonance Imaging. Bioengineering 2023, 10, 714.

- Hassan, N.; Musa Miah, A.S.; Shin, J. Residual-Based Multi-Stage Deep Learning Framework for Computer-Aided Alzheimer’s Disease Detection. J. Imaging 2024, 10, 141.

- Jin, Z.; Gong, J.; Deng, M.; Zheng, P.; Li, G. Deep Learning-Based Diagnosis Algorithm for Alzheimer’s Disease. J. Imaging 2024, 10, 333.

- Kim, H.W.; Lee, H.E.; Lee, S.; Oh, K.T.; Yun, M.; Yoo, S.K. Slice-Selective Learning for Alzheimer’s Disease Classification Using a Generative Adversarial Network: A Feasibility Study of External Validation. Eur. J. Nucl. Med. Mol. Imaging 2020, 47, 2197–2206.

- Puente-Castro, A.; Fernandez-Blanco, E.; Pazos, A.; Munteanu, C.R. Automatic Assessment of Alzheimer’s Disease Diagnosis Based on Deep Learning Techniques. Comput. Biol. Med. 2020, 120, 103764.

- De Souza, R.G.; Dos Santos Lucas E Silva, G.; Dos Santos, W.P.; De Lima, M.E.; Alzheimer’s Disease Neuroimaging Initiative. Computer-Aided Diagnosis of Alzheimer’s Disease by MRI Analysis and Evolutionary Computing. Res. Biomed. Eng. 2021, 37, 455–483.

- Inan, M.S.K.; Sworna, N.S.; Islam, A.K.M.M.; Islam, S.; Alom, Z.; Azim, M.A.; Shatabda, S. A Slice Selection Guided Deep Integrated Pipeline for Alzheimer’s Prediction from Structural Brain MRI. Biomed. Signal Process. Control 2024, 89, 105773.

- Ghosh, T.; Palash, M.I.A.; Yousuf, M.A.; Hamid, M.A.; Monowar, M.M.; Alassafi, M.O. A Robust Distributed Deep Learning Approach to Detect Alzheimer’s Disease from MRI Images. Mathematics 2023, 11, 2633.

- Khatri, U.; Kwon, G.-R. Alzheimer’s Disease Diagnosis and Biomarker Analysis Using Resting-State Functional MRI Functional Brain Network with Multi-Measures Features and Hippocampal Subfield and Amygdala Volume of Structural MRI. Front. Aging Neurosci. 2022, 14, 818871.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017.

- Nogales, A.; García-Tejedor, Á.J.; Monge, D.; Vara, J.S.; Antón, C. A Survey of Deep Learning Models in Medical Therapeutic Areas. Artif. Intell. Med. 2021, 112, 102020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).