Abstract

Accurate sky–obstacle segmentation in hemispherical fisheye imagery is essential for solar irradiance forecasting, photovoltaic system design, and environmental monitoring. However, existing methods often rely on expensive all-sky imagers and region-specific training data, produce coarse sky–obstacle boundaries, and ignore the optical properties of fisheye lenses. We propose a low-cost segmentation framework designed for fisheye imagery that combines synthetic data generation, lens-aware augmentation, and a hybrid deep-learning pipeline. Synthetic fisheye training images are created from publicly available street-view panoramas to cover diverse environments without dedicated hardware, and lens-aware augmentations model fisheye projection and photometric effects to improve robustness across devices. On this dataset, we train a convolutional neural network (CNN) and refine its output with gradient-boosted decision trees (GBDT) to sharpen sky–obstacle boundaries. The method is evaluated on real fisheye images captured with smartphones and low-cost clip-on lenses across multiple sites, achieving an Intersection over Union (IoU) of 96.63% and an F1 score of 98.29%, along with high boundary accuracy. An additional evaluation on an external panoramic baseline dataset confirms strong cross-dataset generalization. Together, these results show that the proposed framework enables accurate, low-cost, and widely deployable hemispherical sky segmentation for practical solar and environmental imaging applications.

1. Introduction

1.1. Context and Motivation

Long-term resource assessment is a critical step in photovoltaic system design, since developers need reliable estimates of how much solar energy will be available at a site, often in complex environments where surrounding obstacles such as trees, poles, or nearby buildings create shading. Traditional approaches often rely on digital surface models [1], but their ability to represent these local features is limited by data resolution and coverage.

Sky imaging provides an interesting alternative by using fisheye cameras to capture the entire sky hemisphere in a single frame. These images record both the visible sky and the surrounding obstacles, providing the basis to study how obstructions affect solar access. Fisheye lenses are available in both high-end, professional models and inexpensive consumer versions; the latter include low-cost clip-on smartphone adapters that are commercially available for less than 30 USD, which make fisheye imagery easy to acquire in practice.

These fisheye images not only capture the visible sky but also provide the information needed for quantitative solar assessment. In solar engineering, global irradiance is commonly described as the sum of direct beam, diffuse sky, and ground-reflected components [2]. Accurate sky–obstacle segmentation from fisheye imagery enables the estimation of direct and diffuse irradiance components by computing shading factors that describe the fraction of the sky dome obstructed by surrounding objects. Specifically, one shading factor corresponds to the direct component, obtained by tracing the sun’s path and identifying obstructions, and another corresponds to the diffuse component, derived from the proportion of the sky covered by obstacles. These coefficients quantify the irradiance loss due to shading and thus allow estimation of effective irradiance from fisheye images [3].

To support such analyses, a fisheye-specific segmentation framework is introduced that is low cost, accurate, and generalizable across diverse environments. The framework is further integrated into a shading estimation tool designed to remain simple and practical for end users.

Before reviewing prior work, two specific issues are highlighted as major obstacles for fisheye-based sky segmentation: the unique distortions introduced by fisheye optics, and the inadequacy of existing public datasets.

1.2. Challenges of Fisheye Imagery

Compared to conventional pinhole or rectilinear lenses, fisheye optics use a highly non-linear projection to map an extremely wide field of view onto the image plane. This mapping introduces strong radial distortion that increases toward the image periphery. Rather than preserving straight lines and geometric proportions, fisheye lenses deliberately trade spatial fidelity for maximum scene coverage.

The fisheye imaging process is typically modeled as a two-step projection onto a virtual unit sphere. First, 3D scene points are projected linearly onto the unit sphere. Then, the spherical points are mapped onto the image plane through a nonlinear projection function. Four projection models are commonly used to describe this transformation: the equidistant, equisolid-angle, orthographic, and stereographic projections [4].
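The four projection functions named above have standard closed forms relating the incident angle to the radial image distance. The following minimal sketch lists them side by side; the function name `fisheye_radius` and the choice of a unit focal length in the example are illustrative assumptions, not part of the original pipeline.

```python
import math


def fisheye_radius(theta: float, f: float, model: str) -> float:
    """Radial image distance r for an incident angle theta (radians)."""
    if model == "equidistant":
        return f * theta
    if model == "equisolid":
        return 2.0 * f * math.sin(theta / 2.0)
    if model == "orthographic":
        return f * math.sin(theta)
    if model == "stereographic":
        return 2.0 * f * math.tan(theta / 2.0)
    raise ValueError(f"unknown projection model: {model}")


MODELS = ("equidistant", "equisolid", "orthographic", "stereographic")

# Radius reached by a ray at the horizon (theta = 90 degrees) with f = 1.
horizon = {m: fisheye_radius(math.pi / 2, 1.0, m) for m in MODELS}
```

With a unit focal length, the horizon ray lands at different radii under each model, which is why a network trained on one projection may not transfer directly to another.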

These structural properties pose considerable challenges for conventional image processing methods. First, the distortion alters object shapes, making it difficult to apply models pretrained on undistorted datasets. Second, the image borders often exhibit degraded visual quality due to optical effects such as astigmatism, blur, and chromatic aberration [5], all of which can impair the predictions of segmentation models not specifically adapted to these distortions.

1.3. Limitations of Public Datasets for Fisheye Sky Segmentation

Despite the growing availability of public datasets for semantic segmentation, existing resources are largely inadequate for our target task. Most publicly available datasets such as Cityscapes [6], ADE20K [7], and ApolloScape [8] are designed for autonomous driving or general scene parsing and typically employ rectilinear camera systems. Consequently, fisheye imagery in these datasets is either absent or captured in a manner that does not represent a true hemispherical view of the sky. Moreover, these datasets often focus on object-centric or urban scene segmentation rather than sky–obstacle discrimination.

Furthermore, when sky annotations are available in these datasets, they tend to be coarse, with imprecise boundaries that are inadequate for applications requiring fine-grained pixel-level segmentation of sky–obstacle boundaries. Occlusions such as tree branches, cables, or architectural elements near the skyline are often simplified or excluded from annotation masks. Additionally, most public datasets are geographically biased toward urban environments in Europe, North America, or China, limiting their representativeness in rural, mountainous, or climatically diverse locations.

1.4. Contributions

These observations highlight the need for approaches that are both robust across diverse imaging conditions and capable of precise, pixel-accurate sky–obstacle delineation required for shading-factor estimation. To address these gaps, our work makes three main contributions:

- A publicly released, globally diverse dataset comprising 182 fisheye images: 80 for CNN training and 60 for meta-model refinement, all generated from Google Street View panoramas, and 21 real smartphone images each for validation and testing. For the synthetic images, only the annotations are publicly provided, along with a script that allows users to retrieve the original panoramas through the official Google Street View API. The real captured images used for validation and testing are released in full to ensure reproducibility and systematic benchmarking.

- Lens-aware augmentation strategies that simulate chromatic aberration and projection variability, improving robustness to the optical distortions of real fisheye systems. They contribute an IoU increase of +0.14% and significantly enhance generalization across heterogeneous lenses and imaging conditions.

- A hybrid segmentation framework that integrates a convolutional neural network with a gradient-boosted decision tree meta-model for pixel-level refinement. The meta-model yields consistent region-level gains (up to +0.89% IoU) and markedly larger improvements on boundary-focused metrics (up to +3.38% Boundary IoU and +5.14% Boundary F1) over the CNN-only baseline, showing that GBDT post-processing is an effective way to sharpen sky–obstacle contours.

1.5. Paper Organization

The remainder of this article is structured as follows. Section 2 reviews related work in sky segmentation and fisheye image analysis, providing context within existing research. Building on this background, Section 3 details the proposed fisheye sky segmentation pipeline, including dataset generation, augmentation strategies, and the hybrid CNN-GBDT framework. Section 4 presents quantitative benchmarks and segmentation metrics for comparison across models, together with qualitative visualizations on real fisheye test images. This section further examines the impact of individual contributions through ablation studies, evaluates performance on an external panoramic dataset, and analyzes runtime efficiency to assess practical applicability. Section 5 discusses the implications of the findings, highlights current limitations, and outlines possible directions for future work. Finally, Section 6 summarizes the contributions and main results of the study.

2. Related Work

2.1. Sky Imaging for Irradiance Forecasting

Recent advances in machine learning have applied sky imaging primarily to short-term irradiance forecasting (nowcasting), where cloud detection and motion estimation are used to predict solar variability over minutes to hours [9,10,11,12,13]. For example, deep networks have been especially effective for cloud analysis, covering cloud classification and sector clustering [14] and diurnal/nocturnal cloud masks via enhanced Fully Convolutional Networks (FCNs) [15], and multi-location deep learning has shown predictive value for irradiance estimation [16]. These studies highlight the potential of combining all-sky imagery with deep learning, but their focus remains on dynamic cloud modeling and short-term prediction rather than on static obstructions and long-term resource assessment.

2.2. Early Approaches to Sky Segmentation

Since the focus is on long-term solar resource assessment, the key challenge is to account for static obstructions across the entire sky hemisphere, as they can attenuate direct solar radiation and reduce diffuse irradiance from the sky dome. This requires accurate sky–obstacle segmentation, which has been approached in different ways over time. Early approaches to fisheye sky segmentation relied on handcrafted cues. Classical pipelines used edge or region-based descriptors, including region classification on fisheye views [17], Hellinger-kernel distances over local descriptors [18], and edge-centric methods for solar exposure prediction [19]. While computationally efficient, these methods are sensitive to illumination conditions, exposure settings, and lens artifacts. As a result, they often yield coarse skylines and struggle with thin obstacles such as branches or cables. This sensitivity is particularly critical in applications such as hemispherical canopy photography, where contrast variations strongly affect threshold-based segmentation [20,21].

2.3. Learning-Based Sky Segmentation and Distortion-Aware Models

The limitations of handcrafted pipelines, particularly their limited ability to generalize across diverse imaging conditions, motivated the exploration of learning-based models that can automatically extract more robust representations from data. In rectilinear imagery, learning-based sky segmentation has been addressed with supervised classifiers trained on color and texture-based features in hazy outdoor scenes [22] and for monocular obstacle avoidance, where sky/non-sky masks provide horizon and obstacle cues for navigation [23]. More recent approaches rely on convolutional models, either to adaptively select among classical sky-segmentation algorithms [24] or to predict pixel-wise sky-ground masks for navigation imagery [25], thereby reducing manual feature design and annotation effort. However, these methods all operate on rectilinear images and do not use fisheye imagery. More recently, distortion-aware architectures have emerged that explicitly address the geometry of wide-angle optics. Methods such as deformable convolutions [26,27] and radial transformer designs [28] adapt segmentation networks to fisheye projection models, marking an important step toward high-fidelity analysis of hemispherical views. However, these models are generally developed for urban driving scenes, where the sky boundary is not the primary target and segmentation accuracy is evaluated at the object level. Our framework instead focuses on precise, pixel-wise sky segmentation across globally distributed fisheye imagery, emphasizing robust skyline fidelity rather than multi-class urban semantics. While the present method does not incorporate deformation modules, these techniques are conceptually relevant to handling fisheye distortion, and we consider their integration as a potential direction for improving sky segmentation accuracy under diverse projection geometries.

2.4. Cross-Domain Applications of Sky Segmentation

The relevance of sky segmentation extends well beyond solar forecasting and has become essential in fields such as navigation, positioning, and remote sensing. For example, skyline-based approaches have been used for visual navigation and localization in urban canyons [29]. In navigation and Global Navigation Satellite Systems (GNSS) remote sensing of such environments, fisheye sky segmentation has been exploited in several complementary ways to mitigate signal degradation, including FCN-based sky masks in tightly coupled GNSS/Inertial Navigation System (INS)/Vision systems [30], skymask matching between sky-pointing fisheye images and 3D city models for positioning and heading estimation [31], GNSS satellite visibility analysis and Line-Of-Sight (LOS)/Non-Line-Of-Sight (NLOS) discrimination based on fisheye sky masks [32], and patch-based GNSS satellite state characterization from fisheye imagery [33]. Recent work has also demonstrated the utility of fisheye sky segmentation in urban climate studies. In particular, the Sky View Factor, a key variable for modeling radiative exchange and thermal comfort, can be estimated from hemispherical sky masks synthesized from Google Street View panoramas [34,35], enabling large-scale, low-cost assessments of urban morphology and sky openness. In parallel, forest ecology has leveraged fisheye sky segmentation to model light transmission under tree canopies. Hemispherical photographs have been used to estimate subcanopy shortwave radiation by analyzing canopy density and structure [36]. Despite the diversity of use cases, many public systems supporting these applications are geographically localized (often urban-only), and their masks can exhibit coarse sky–obstacle boundaries; such imperfections are inadequate for tasks that rely on precise skyline delineation. Our work targets this gap by emphasizing pixel-wise sky–obstacle delineation in hemispherical views and by preserving thin occluders (e.g., branches, cables) needed for accurate sky openness, canopy light modeling, and visibility assessment.

These examples illustrate the central role of sky segmentation in modeling solar radiation exposure and sky openness in both natural and urban environments, and show that improving segmentation fidelity can provide tangible benefits across real-world applications. Building on this context, the next section describes the proposed fisheye-specific segmentation pipeline and its underlying methodology.

3. Materials and Methods

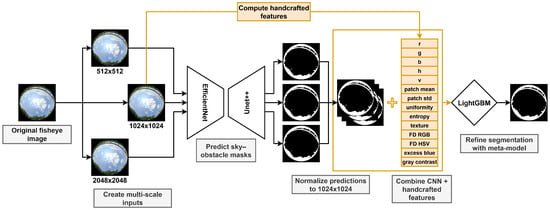

Figure 1 illustrates the main stages of the fisheye sky segmentation pipeline, from image preprocessing to model inference. Each input image is resized to three resolutions (512, 1024, and 2048 pixels), and a CNN is applied independently at each scale to produce probability maps. These predictions are then rescaled to a common 1024 × 1024 resolution and flattened for feature extraction. Together with handcrafted color, texture, and contrast descriptors, they serve as inputs to a Light Gradient Boosting Machine (LightGBM) [37], a GBDT meta-model that refines the segmentation and improves boundary accuracy across diverse scenes. The following subsections describe each stage of this pipeline in detail.

Figure 1. Overview of the proposed fisheye sky segmentation pipeline. Fisheye images are processed at multiple scales with a CNN, and refined by a LightGBM meta-model.
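To make the data flow of the refinement stage concrete, the sketch below shows one way the per-scale probability maps could be brought to a common resolution and stacked with extra descriptors into one feature vector per pixel. It is only an illustration under stated assumptions: the helper names `resize_nearest` and `build_pixel_features`, the nearest-neighbor rescaling, and the toy inputs are hypothetical, and the actual descriptors and LightGBM training call are not shown.

```python
def resize_nearest(grid, size):
    """Nearest-neighbor resize of a square 2D list to size x size."""
    n = len(grid)
    return [[grid[(i * n) // size][(j * n) // size] for j in range(size)]
            for i in range(size)]


def build_pixel_features(prob_maps, descriptors, size):
    """Stack per-scale CNN probabilities and extra descriptors per pixel.

    prob_maps: list of square 2D probability maps, one per input scale.
    descriptors: list of size x size 2D maps (e.g. color or texture cues).
    Returns a flat, row-major list with one feature vector per pixel.
    """
    resized = [resize_nearest(p, size) for p in prob_maps]
    layers = resized + list(descriptors)
    return [[layer[i][j] for layer in layers]
            for i in range(size) for j in range(size)]


# Toy example: three scales (2, 4, 8 pixels) rescaled to a 4 x 4 grid.
p_small = [[0.1, 0.9], [0.2, 0.8]]
p_mid = [[0.5] * 4 for _ in range(4)]
p_large = [[0.7] * 8 for _ in range(8)]
blue = [[0.3] * 4 for _ in range(4)]  # hypothetical color descriptor
features = build_pixel_features([p_small, p_mid, p_large], [blue], size=4)
```

Each row of `features` would then be one training sample for a gradient-boosted classifier that predicts the sky or obstacle label of that pixel.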

3.1. Dataset

3.1.1. Data Generation Strategy

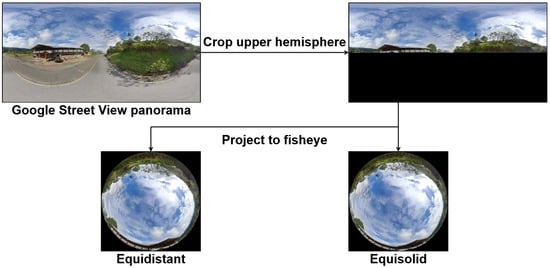

Given the absence of a suitable public benchmark that meets our criteria, we constructed a synthetic data generation pipeline based on Google Street View panoramas, illustrated in Figure 2. This pipeline, combined with annotation strategies, allows us to produce high-fidelity segmentation labels tailored specifically for global-scale sky analysis in fisheye imagery.

Figure 2. Conversion pipeline from a Google Street View panorama to synthetic fisheye views.

The panoramas provided by Google Street View are encoded in an equirectangular projection, where each pixel corresponds to geographic coordinates. The horizontal axis encodes the longitude $\lambda \in [-\pi, \pi]$, and the vertical axis encodes the latitude $\psi \in [-\pi/2, \pi/2]$. To simulate a camera oriented toward the sky, we crop the top half of each panorama, corresponding to the upper hemisphere. The latitude range is therefore limited to $[0, \pi/2]$.

To simulate a fisheye image, we generate a normalized 2D grid of image coordinates $(x, y) \in [-1, 1]^2$, representing a square sensor of size $N \times N$. At each point in the grid, we compute the radial distance from the center:

$$r = \sqrt{x^2 + y^2}$$

We focus on the equidistant and equisolid-angle projections because they correspond to the most common models used in low-cost fisheye lenses [38].

Equidistant Projection

In the equidistant fisheye model, the incident angle $\theta$ between the optical axis and an incoming ray is linearly proportional to the radial distance r on the image plane, with f denoting the focal length of the projection [4]:

$$r = f\,\theta$$

To map the upper hemisphere ($\theta \in [0, \pi/2]$) to the unit disk ($r \in [0, 1]$), we set $r = 1$ when $\theta = \pi/2$, which implies:

$$f = \frac{2}{\pi}$$

Thus, the angle becomes

$$\theta = \frac{r}{f} = \frac{\pi}{2}\,r$$

We compute spherical coordinates from each normalized fisheye pixel position $(x, y)$. The zenith angle corresponds to the incident angle defined by $\theta = \frac{\pi}{2}\,r$, while the azimuthal angle is computed as

$$\phi = \operatorname{atan2}(y, x)$$

To sample RGB values from the source panorama, we project each computed spherical direction to coordinates in the cropped equirectangular image, which has width W and height H. These coordinates are computed as

$$u = \frac{\phi + \pi}{2\pi}\,(W - 1), \qquad v = \frac{\theta}{\pi/2}\,(H - 1)$$

Here, $\phi$ and $\theta$ are normalized to the range $[0, 1]$ before being scaled to pixel indices. $(u, v)$ then denote the corresponding pixel locations in the panorama. RGB values are sampled using nearest-neighbor interpolation. A circular mask is applied to exclude pixels where $r > 1$, corresponding to rays outside the field of view.

Equisolid-Angle Projection

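In the equisolid-angle projection model, the radial distance r from the image center is related to the incident angle $\theta$ [4]:

$$r = 2f \sin\left(\frac{\theta}{2}\right)$$

The inverse mapping recovers $\theta$ from r:

$$\theta = 2 \arcsin\left(\frac{r}{2f}\right)$$

We normalize the projection such that the entire upper hemisphere ($\theta \in [0, \pi/2]$) maps to the unit disk ($r \in [0, 1]$), yielding

$$f = \frac{1}{2 \sin(\pi/4)} = \frac{1}{\sqrt{2}}$$

Substituting this into the inverse projection gives the final form

$$\theta = 2 \arcsin\left(\frac{r}{\sqrt{2}}\right)$$

We then compute spherical coordinates as

$$\phi = \operatorname{atan2}(y, x), \qquad \theta = 2 \arcsin\left(\frac{r}{\sqrt{2}}\right)$$

where $\phi$ is the azimuthal angle and $\theta$ the zenith angle. The panorama indices are then computed using the same formulas as in the equidistant case. Nearest-neighbor interpolation and circular masking are applied identically.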

This procedure enables the efficient synthesis of large volumes of realistic fisheye images using publicly available equirectangular panoramas. For our application, we prioritize rural and semi-urban scenes with diverse occlusions (e.g., trees, cables), while excluding night-time scenes and unoccluded landscapes. The resulting dataset covers a broad range of environmental conditions, including snow, desert, and mountainous terrain. Although nearest-neighbor interpolation is sufficient for segmentation purposes, bilinear interpolation could alternatively be used to produce smoother and more photorealistic fisheye renderings.
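The per-pixel mapping described in this subsection can be sketched in a few lines. The version below is a minimal illustration, not the released implementation: the function names are hypothetical, and the scaling by `width - 1` and `height - 1`, the rounding to the nearest index, and the convention that row 0 of the cropped panorama is the zenith are assumptions.

```python
import math


def incident_angle(r: float, model: str) -> float:
    """Zenith angle theta (radians) for a normalized radius r in [0, 1]."""
    if model == "equidistant":
        return 0.5 * math.pi * r
    if model == "equisolid":
        return 2.0 * math.asin(r / math.sqrt(2.0))
    raise ValueError(f"unsupported model: {model}")


def fisheye_to_panorama(x, y, width, height, model="equidistant"):
    """Map a normalized fisheye position (x, y) to a panorama pixel (u, v).

    Returns None when the point lies outside the unit disk (masked pixel).
    Assumes row 0 of the cropped panorama is the zenith and the last row
    is the horizon.
    """
    r = math.hypot(x, y)
    if r > 1.0:
        return None
    theta = incident_angle(r, model)
    phi = math.atan2(y, x)
    # Normalize both angles to [0, 1], then scale to pixel indices.
    u = round((phi + math.pi) / (2.0 * math.pi) * (width - 1))
    v = round(theta / (0.5 * math.pi) * (height - 1))
    return u, v
```

Looping this function over every grid position and copying the RGB value at `(u, v)` yields the synthetic fisheye image; both models send the disk center to the zenith row and the disk edge to the horizon row, and differ only in how intermediate radii are distributed.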

3.1.2. Dataset Partitioning

Our dataset consists of both synthetic and real fisheye images. The synthetic images were generated from Google Street View panoramas using two distinct fisheye projection models. The CNN was trained on 80 synthetic images, including 63 generated with the equidistant projection and 17 with the equisolid-angle projection. The LightGBM meta-model was trained on an additional 60 synthetic images, consisting of 50 equidistant and 10 equisolid-angle samples.

To evaluate the performance of our models under real-world conditions, we additionally collected a set of 42 real fisheye images captured using two different smartphones: the Poco X4 Pro 5G (Poco/Xiaomi, Beijing, China) and the Samsung Galaxy A52s 5G (Samsung Electronics, Suwon, Republic of Korea). The smartphones were fitted with two Pixter-branded clip-on fisheye lenses (Pixter, Paris, France) offering 180° and 235° fields of view, respectively. The images were acquired across three different sites: the CNRS station in Moulis (16 images), the LAAS-CNRS campus (16 images), and the University of Toulouse (10 images), thus covering a wide variety of rural, semi-urban, and urban settings. This diversity is critical for a robust evaluation of model generalization.

In total, the dataset comprises 182 fisheye images, which we partitioned into 80 training images for CNN learning, 60 images for meta-model refinement, 21 validation images, and 21 test images. The training and meta-model sets consist entirely of synthetic data, while the validation and test sets include only real fisheye images, ensuring that model evaluation is performed on authentic, unseen sensor data. The overall dataset composition, including projection type, quantity, and purpose, is summarized in Table 1.

Table 1. Summary of dataset composition by projection model, quantity, and purpose.

3.1.3. Annotation Strategy

Initial manual annotation. Coarse masks were first derived using simple image processing: Canny edge detection and intensity thresholding on the blue channel. The union of these masks provided an initial sky estimate, which was manually refined in GIMP [39]. This process produced 79 high-quality annotations (63 for training, 8 for validation, 8 for testing), each requiring approximately 40 min.

Semi-automated annotation. To accelerate dataset expansion, a preliminary segmentation model trained on the 63 manual annotations was used to pre-label additional fisheye images. Predictions were then corrected manually to achieve pixel-level accuracy. This strategy enabled efficient scaling to 103 more annotated images (60 for meta-model training, 17 for CNN training, 13 for validation, and 13 for testing).

Given the significant time required for pixel-level refinement, the dataset was not further expanded once additional annotations no longer yielded noticeable performance gains. With the final set of 182 annotated images, the segmentation framework achieves stable and competitive results.

3.2. Sky Segmentation Pipeline

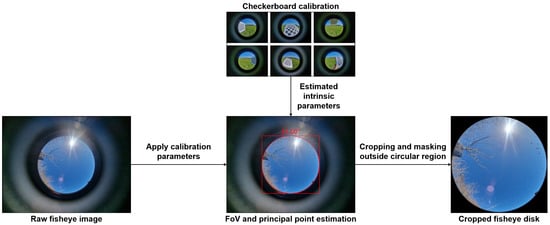

3.2.1. Fisheye Calibration and Disk Extraction

To obtain spatially accurate fisheye disk images, the camera is first calibrated using an enhanced omnidirectional calibration pipeline based on the method of Scaramuzza et al. [40], implemented via the py-omnicalib library [41]. Calibration is performed by detecting checkerboard corners across a diverse image set, refining subpixel correspondences, and optimizing the projection polynomial that maps 3D incident angles to radial image distances. From this calibration, the camera’s principal point and effective field of view (FoV) are estimated by minimizing the reprojection error in angular space. For consistency with hemispherical sky analysis, the estimated FoV is limited to 180° (corresponding to a zenith angle range of 0–90°). Consequently, even when the original fisheye lens exceeds this range, the image is cropped to retain only the upper hemisphere, ensuring a consistent representation of the sky dome. These intrinsic parameters allow computation of the maximum usable radius of the fisheye disk relative to the principal point. A square crop centered at the principal point is then applied, and all pixels outside the calculated circular region are masked, producing a clean, circularly bounded fisheye disk suitable for downstream pixel-wise processing, as illustrated in Figure 3. This calibration and disk-extraction procedure is applied to all real fisheye images used for validation and testing in our experiments.

Figure 3. Fisheye calibration and disk extraction pipeline.
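The final crop-and-mask step can be illustrated with a short sketch. It assumes that the principal point and the maximum usable radius are already known from calibration and are given as integer pixel values; the function name `extract_fisheye_disk` and the zero fill value are hypothetical choices, and the calibration itself is not reproduced here.

```python
def extract_fisheye_disk(image, cx, cy, radius, fill=0):
    """Square-crop around (cx, cy) and mask pixels outside the disk.

    image: 2D list of pixel values (rows of equal length).
    cx, cy: principal point in pixel coordinates (column, row).
    radius: maximum usable radius in pixels.
    """
    size = 2 * radius + 1
    disk = []
    for i in range(size):
        row = []
        for j in range(size):
            src_r, src_c = cy - radius + i, cx - radius + j
            dy, dx = i - radius, j - radius
            inside_disk = dx * dx + dy * dy <= radius * radius
            inside_image = (0 <= src_r < len(image)
                            and 0 <= src_c < len(image[0]))
            keep = inside_disk and inside_image
            row.append(image[src_r][src_c] if keep else fill)
        disk.append(row)
    return disk


# Toy example: a 7 x 9 image of ones, disk of radius 2 centered at (4, 3).
toy = [[1] * 9 for _ in range(7)]
disk = extract_fisheye_disk(toy, cx=4, cy=3, radius=2)
```

The output is always a square of side `2 * radius + 1`, so images from lenses with different fields of view end up in the same circular layout before segmentation.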

3.2.2. Data Augmentation Strategies

As discussed in Section 1.2, fisheye imagery introduces geometric and chromatic distortions that challenge conventional computer vision models. To improve robustness, we designed a data augmentation pipeline tailored to the optical characteristics of fisheye lenses (Figure 4). This pipeline combines two fisheye-specific augmentations with a set of standard photometric and structural transforms:

1. Chromatic Aberration: a common artifact in wide-angle optics, particularly in lower-cost fisheye lenses, where refractive dispersion causes different wavelengths of light to focus at varying depths. This leads to visible color fringing, often at object boundaries in peripheral image regions [5]. To simulate this effect (Figure 4, left), we implement a radial chromatic shift: the red and blue channels are displaced outward and inward, respectively, while the green channel remains fixed. The displacement scales with distance from the optical center, mimicking wavelength-dependent dispersion and training the network to handle color misalignments without overreliance on chromatic boundaries.

2. Fisheye Lens Distortion: to simulate the geometric variability of real-world fisheye optics, we apply synthetic radial distortion using OpenCV’s cv2.fisheye model [42]. We apply a parametric distortion defined by a sampled set of radial coefficients $(k_1, k_2, k_3, k_4)$, alongside a camera matrix derived from image dimensions. This transformation (Figure 4, right) introduces nonlinear warping consistent with lenses of varying focal lengths and manufacturing tolerances, exposing the model to a broad distribution of optical behaviors and improving generalization across domains.

3. Photometric and Structural Augmentations: to address variability in lighting and imaging conditions, we apply standard augmentations: random gamma, brightness, contrast, saturation, hue, and spatial filters such as Gaussian blur and sharpening to simulate optical softness or lens imperfections. We also include random horizontal and vertical flips, which preserve the circular symmetry of fisheye images while increasing orientation diversity in training. Additionally, we employ CutMix [43] to blend patches from different training images, increasing structural diversity and reducing overfitting to scene-specific layout.

Figure 4. Examples of fisheye-specific augmentations. (Left) chromatic aberration simulation. (Right) synthetic radial distortion applied within the red square.

In practice, the augmentation parameters were empirically tuned to improve generalization and limit overfitting. Photometric augmentations were applied with randomly sampled values reflecting weak augmentations, as these produced more stable results than strong ones. Fisheye-related augmentations were implemented as strong augmentations to expose the model to a wider range of distortions, and all augmentations were applied stochastically so that each training batch presented a different combination of transformations. Altogether, these augmentations addressed the core degradations found in fisheye imagery and, despite the relatively small dataset, enabled the training of a robust and efficient model capable of generalizing across diverse environments and lens geometries.
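The radial chromatic shift used for the chromatic aberration augmentation can be sketched as a per-channel rescaling about the image center. The code below is a minimal illustration under stated assumptions: the function name `radial_chromatic_shift`, the default `strength`, the nearest-neighbor sampling, and the zero padding outside the image are all hypothetical choices rather than the settings used in the experiments.

```python
def radial_chromatic_shift(image, strength=0.02):
    """Shift red outward and blue inward, proportionally to the radius.

    image: 2D list of (r, g, b) tuples. The green channel is untouched.
    strength: relative radial scaling (hypothetical default).
    """
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0

    def sample(channel, i, j, scale):
        # Inverse mapping: the output pixel at offset d reads from d / scale.
        src_i = round(cy + (i - cy) / scale)
        src_j = round(cx + (j - cx) / scale)
        if 0 <= src_i < h and 0 <= src_j < w:
            return image[src_i][src_j][channel]
        return 0  # zero padding outside the source image

    return [[(sample(0, i, j, 1.0 + strength),  # red magnified: moves outward
              image[i][j][1],                   # green stays fixed
              sample(2, i, j, 1.0 - strength))  # blue shrunk: moves inward
             for j in range(w)] for i in range(h)]


# Toy example: one white pixel to the right of the center of a 7 x 7 image.
black, white = (0, 0, 0), (255, 255, 255)
toy = [[black] * 7 for _ in range(7)]
toy[3][5] = white
shifted = radial_chromatic_shift(toy, strength=0.5)
```

In the toy example the white pixel splits into a red fringe one pixel farther from the center, a green core at the original position, and a blue fringe one pixel closer to the center, which is the fringing pattern the augmentation is meant to imitate.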

3.2.3. Training Procedure

Model training was carried out on an NVIDIA H100 GPU with 80 GB VRAM using high-resolution input images of size , to achieve fine-grained pixel-level segmentation accuracy. The implementation was developed in Python 3.11.14 using PyTorch 2.6.0 with CUDA 12.6. The optimization process employed a combined loss function designed to balance pixel-wise classification and region overlap:

We used the AdamW optimizer with an initial learning rate of , weight decay of , and a cosine annealing learning rate schedule for gradual decay. To mitigate memory constraints while maintaining numerical stability, training was performed in mixed precision mode using the bfloat16 format. Due to the large input resolution and limited dataset size, the batch size was set to 4. To ensure reproducibility, a fixed seed was applied across all modules.
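A common way to balance pixel-wise classification and region overlap is to sum a binary cross-entropy term and a soft Dice term. The sketch below assumes that pairing with equal weights, which may differ from the loss actually used in this work; the function name `bce_dice_loss`, the `dice_weight` parameter, and the smoothing constant are hypothetical. It is written in plain Python over flat pixel lists for readability rather than as a PyTorch module.

```python
import math


def bce_dice_loss(probs, targets, dice_weight=1.0, eps=1e-7):
    """Binary cross-entropy plus soft Dice loss over flat pixel lists.

    probs: predicted sky probabilities in [0, 1].
    targets: ground-truth labels (1 = sky, 0 = obstacle).
    """
    n = len(probs)
    # Pixel-wise term: mean binary cross-entropy with clamped logarithms.
    bce = -sum(t * math.log(max(p, eps))
               + (1 - t) * math.log(max(1 - p, eps))
               for p, t in zip(probs, targets)) / n
    # Region term: one minus the soft Dice coefficient.
    intersection = sum(p * t for p, t in zip(probs, targets))
    dice = (2.0 * intersection + eps) / (sum(probs) + sum(targets) + eps)
    return bce + dice_weight * (1.0 - dice)


good = bce_dice_loss([0.9, 0.1, 0.8, 0.2], [1, 0, 1, 0])
bad = bce_dice_loss([0.1, 0.9, 0.2, 0.8], [1, 0, 1, 0])
```

The cross-entropy term penalizes each pixel independently, while the Dice term depends on the overlap of the whole predicted region, so the sum remains informative even when sky and obstacle pixels are strongly imbalanced.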

During training, we monitored the model using the training loss and the validation loss, the latter computed on a separate validation set. An early stopping criterion halted training after 4 consecutive epochs without improvement in the validation loss, and the corresponding loss curves were inspected to detect overfitting or training instability. After training, the final model selected according to the validation loss was evaluated on the independent test set using standard metrics, including Accuracy, Precision, Recall, F1 Score, and IoU. To ensure consistent evaluation across the valid image region, all metrics were computed exclusively within a circular binary mask corresponding to the fisheye projection area.
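Restricting the metrics to the fisheye disk amounts to skipping every pixel that lies outside the circular mask before counting true positives, false positives, and false negatives. The sketch below illustrates this for the sky class; the function name `masked_metrics`, the flat 0/1 list inputs, and the convention of returning 1.0 for empty denominators are assumptions made for the example.

```python
def masked_metrics(pred, truth, valid):
    """IoU, precision, recall and F1 for the sky class inside a valid mask.

    pred, truth, valid: flat lists of 0/1 values of equal length, where
    valid marks pixels inside the circular fisheye region.
    """
    tp = fp = fn = 0
    for p, t, m in zip(pred, truth, valid):
        if not m:
            continue  # ignore pixels outside the fisheye disk
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif t:
            fn += 1
    errors = fp + fn
    return {
        "iou": tp / (tp + errors) if tp + errors else 1.0,
        "precision": tp / (tp + fp) if tp + fp else 1.0,
        "recall": tp / (tp + fn) if tp + fn else 1.0,
        "f1": 2 * tp / (2 * tp + errors) if tp + errors else 1.0,
    }


# Toy example: the last two pixels fall outside the disk and are ignored.
scores = masked_metrics(pred=[1, 1, 0, 0, 1, 1],
                        truth=[1, 0, 1, 0, 1, 0],
                        valid=[1, 1, 1, 1, 0, 0])
```

Without the mask, the constant-valued corners of the square image would be counted as trivially correct pixels and inflate every score.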

3.2.4. Architecture Selection

To address the trade-offs between segmentation accuracy, computational cost, and generalization, we adopt an encoder–decoder framework for binary sky–obstacle segmentation. In this structure, the encoder extracts hierarchical feature representations from the fisheye input, while the decoder reconstructs the segmentation map at the original resolution. This design provides a well-established foundation for systematically benchmarking encoder backbones and decoder variants under controlled conditions.

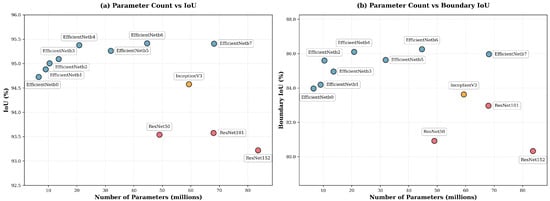

We systematically benchmarked a wide range of pretrained encoder backbones commonly used in semantic segmentation, including ResNet-50, ResNet-101, ResNet-152 [44], InceptionV4 [45], and the EfficientNet [46] family from b0 to b7. All models were initialized using the AdvProp [47] pretraining strategy. Encoder comparisons were performed using a fixed decoder (U-Net++), and each configuration was trained and evaluated over 10 independent runs. Table 2 reports mean and standard deviation for region-level metrics (F1, IoU) and boundary-focused metrics (Boundary F1, Boundary IoU), which emphasize contour accuracy. The formal definitions of these metrics follow in Section 4.1.

Table 2. Performance comparison of different encoders using a U-Net++ decoder. Metrics are reported in %. Bold values indicate the best result within each column.

Overall, these results highlight a clear hierarchy among encoder backbones. EfficientNet variants consistently outperform ResNet and InceptionV4 models across both region-level and boundary-focused metrics, while also using substantially fewer parameters. This trend is consistent with the design of EfficientNet: its compound scaling strategy increases depth, width, and input resolution together, which provides strong feature representations at several spatial scales. This is useful in our setting, where large, smooth sky regions coexist with thin, high-frequency sky–obstacle boundaries. The squeeze-and-excitation modules further adjust channel responses and may help distinguish small visual differences near the sky boundary. InceptionV4 uses parallel convolution branches and therefore tends to outperform ResNet, but it remains heavier and does not reach the boundary accuracy of mid-scale EfficientNet variants. ResNet, by contrast, mainly relies on depth scaling with less balanced changes in width and resolution, which leads to a less favorable trade-off between model size and accuracy and matches its lower boundary performance in our experiments.

Beyond the comparison across architecture families, we observe that increasing encoder capacity generally improves performance up to the mid-to-large EfficientNet variants. Among EfficientNet encoders, b4 through b7 form a plateau of top-performing models: they obtain the highest mean IoU and Boundary IoU, and their mean ± standard deviation ranges largely overlap. Although EfficientNet-b5 exhibits a slightly lower mean IoU than EfficientNet-b4 in our benchmark, this difference is of the same order as the reported standard deviations and is therefore compatible with stochastic variability induced by random initialization, mini-batch sampling, and optimization dynamics. Because the observed differences between EfficientNet-b4, b5, b6, and b7 are small relative to this run-to-run variability, we treat these four variants as competitive candidates and subsequently evaluate all of them within our full segmentation + LGBM pipeline to assess how their final, post-processed performance differs.

In parallel, we evaluated several state-of-the-art decoder architectures: U-Net [48], U-Net++ [49], MA-Net [50], LinkNet [51], FPN [52], PSPNet [53], PAN [54], UPerNet [55], and SegFormer [56], while keeping the encoder fixed to EfficientNet-b7 for a strong and stable backbone. Decoder comparisons are summarized in Table 3.

Table 3.

Performance comparison of different decoders using an EfficientNet-b7 encoder. Metrics are reported in %. Bold values indicate the best result within each column.

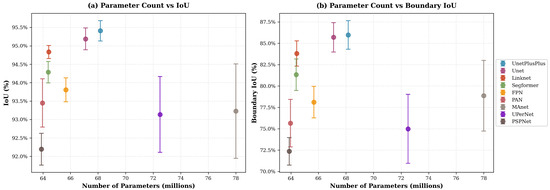

The decoder comparison also reveals a consistent performance hierarchy. U-Net and U-Net++ achieve the strongest region-level IoU, with U-Net++ performing best overall. This trend is consistent with the design of U-Net++, whose nested dense skip connections and deep supervision promote repeated fusion of encoder features across multiple semantic depths. Such multi-scale aggregation is known to enhance gradient propagation and refine high-resolution details during reconstruction, which is particularly relevant for our task where skyline contours can be thin. LinkNet and SegFormer remain competitive in terms of region overlap but show weaker boundary fidelity, which may reflect the lighter skip-based fusion in LinkNet and the stronger global-context bias in SegFormer, both of which can be less favorable in contour-critical segmentation. Pyramid-style decoders (FPN, PSPNet, UPerNet), by contrast, lag behind in both IoU and boundary metrics; their emphasis on coarse contextual pooling is effective for broad scene parsing but is likely to attenuate fine-grained boundary recovery in this binary sky–obstacle setting.

The two plots in Figure 5 and Figure 6 complement the results in Table 2 and Table 3 by illustrating the effect of model scaling. For encoders, EfficientNets form the Pareto frontier, achieving higher IoU and Boundary IoU at substantially lower parameter counts than ResNet or Inception backbones. For decoders, U-Net++ appears as the best compromise between complexity and accuracy, marginally heavier than U-Net but clearly superior in both region and boundary metrics. These visual trends align with our quantitative findings and motivate our final selection of EfficientNet-b4 to b7 paired with U-Net++.

Figure 5.

Encoder parameter count (a) vs. IoU and (b) Boundary IoU (decoder fixed to U-Net++).

Figure 6.

Decoder parameter count (a) vs. IoU and (b) Boundary IoU (encoder fixed to EfficientNet-b7).

3.2.5. Post-Processing Meta-Model

To refine the pixel-level accuracy of our segmentation outputs, we introduce a supervised post-processing meta-model based on gradient-boosting decision trees, implemented with LightGBM.

From a modelling perspective, we adopt LightGBM for three reasons. First, it naturally operates at the pixel level and can jointly exploit heterogeneous feature types (CNN probabilities, color components, textural statistics) in a single model. Second, its non-linear decision structure is well suited to capturing interactions between multi-scale predictions and handcrafted cues, enabling it to refine ambiguous pixels that lie near the skyline or around thin occluders. Third, it offers an effective way to combine the complementary predictions obtained at 512 × 512, 1024 × 1024, and 2048 × 2048 input resolutions. Rather than simply averaging or voting across scales, the LightGBM model learns, from the joint multi-scale probabilities and the handcrafted features, when a pixel behaves like a large homogeneous region (where lower-resolution predictions are more reliable) or like a fine-detail boundary (where higher-resolution predictions are preferred), and weighs the scale-specific probabilities accordingly, as qualitatively illustrated in Section 4.3.

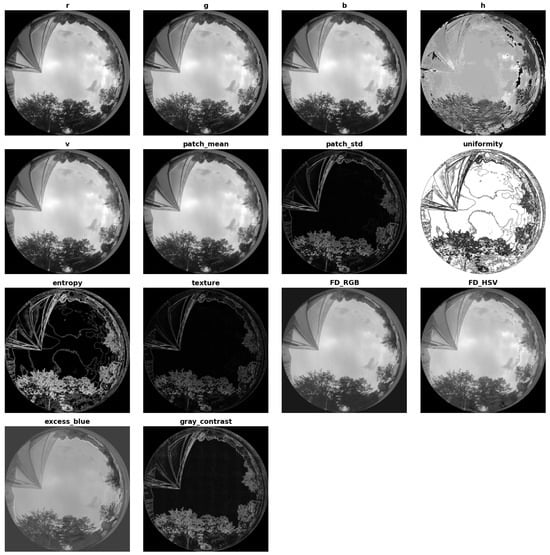

Specifically, LightGBM is trained on features extracted from a manually annotated dataset of 60 fisheye images, labeled using the semi-automated procedure described in Section 3.1.3. For each image, 50,000 pixels are randomly sampled within the circular fisheye region, resulting in 3,000,000 training instances. Each sampled pixel is represented by a comprehensive set of descriptors summarised in Table 4, with representative feature maps illustrated in Figure 7.
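The sampling step can be illustrated with a minimal sketch. It assumes that the fisheye disk is the circle inscribed in the image and that pixels are drawn without replacement; neither detail is stated above, and the function name `sample_disk_pixels` is hypothetical rather than taken from the released code.

```python
import random


def sample_disk_pixels(width, height, n_samples, seed=0):
    """Draw n_samples distinct (row, col) pixels inside the inscribed circle.

    Assumption: the fisheye disk is centered and inscribed in the image.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    radius = min(width, height) / 2.0
    # Enumerate every pixel whose center lies inside the disk.
    inside = [
        (row, col)
        for row in range(height)
        for col in range(width)
        if (col - cx) ** 2 + (row - cy) ** 2 <= radius ** 2
    ]
    rng = random.Random(seed)
    # random.sample draws without replacement, so no pixel is repeated.
    return rng.sample(inside, n_samples)


# Toy example on a small image; the paper samples 50,000 pixels per image.
pixels = sample_disk_pixels(width=64, height=64, n_samples=500)
```

Each sampled coordinate would then be used to read the feature vector and the ground-truth label at that location.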

Table 4.

Summary of all pixel-wise features extracted for LightGBM training. Features shown in bold were retained in the final meta-model after feature selection.

Figure 7.

Representative feature maps corresponding to the descriptors used to train the LightGBM meta-model.

These descriptors combine multi-scale CNN probabilities with complementary color-based and statistical-structural features established in vision-based sky segmentation [22,23,35,57]. Multi-space color components (RGB, HSV, YCbCr) and chromatic indices such as dark channel, hue disparity, color saturation, and scene depth provide robust cues against radiometric and illumination variations [22,23]. Local statistical measures, including patch mean, standard deviation, third moment, smoothness, uniformity, entropy, texture, gradient magnitude, and contrast energy, capture both low-frequency homogeneity typical of sky regions and high-frequency heterogeneity characteristic of cluttered non-sky areas [23]. Empirical indices such as the Fisher Discriminant coefficients and the Excess Blue index further enhance discrimination of blue-dominant sky regions from diverse non-sky elements [35,57].
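To make the local statistical measures concrete, the sketch below computes four of them (mean, standard deviation, uniformity, entropy) for a grayscale patch, using the usual histogram-based textbook definitions. Whether the paper uses exactly these definitions, patch sizes, or quantization is an assumption, and `patch_statistics` is a hypothetical helper.

```python
import math
from collections import Counter


def patch_statistics(patch):
    """Histogram-based statistics of a grayscale patch (rows of 0-255 values)."""
    values = [v for row in patch for v in row]
    n = len(values)
    # Normalized histogram: probability of each gray level present in the patch.
    probs = [count / n for count in Counter(values).values()]
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    return {
        "mean": mean,
        "std": math.sqrt(variance),
        # Uniformity is 1 for a constant patch and decreases with clutter.
        "uniformity": sum(p * p for p in probs),
        # Entropy (bits) is 0 for a constant patch and grows with clutter.
        "entropy": -sum(p * math.log2(p) for p in probs),
    }


sky_like = [[200, 200, 200], [200, 200, 200], [200, 200, 200]]
cluttered = [[10, 240, 35], [180, 90, 220], [60, 130, 5]]
sky_stats = patch_statistics(sky_like)
clutter_stats = patch_statistics(cluttered)
```

On these toy patches, the homogeneous patch has maximal uniformity and zero entropy, while the cluttered patch shows the opposite pattern, which is the contrast the descriptors are meant to capture.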

To reduce redundancy and model complexity, a two-stage feature selection process is applied. First, features with a Pearson correlation coefficient below 0.5 with respect to the ground-truth labels are discarded. Then, highly collinear variables are removed while retaining the CNN-derived multi-scale predictions due to their strong predictive value. The final selected set therefore includes the CNN probability maps at 512, 1024, and 2048 input resolutions, together with selected color and textural descriptors (e.g., r, g, b, h, v, uniformity, entropy, texture, Fisher Discriminant features), the excess blue index, and the grayscale contrast energy.
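The two-stage selection can be sketched as follows. Several details are assumptions made only for illustration: the absolute value of the correlation is used in stage 1, the collinearity cut-off (`max_pair_corr`) is a hypothetical value, stage 2 is implemented greedily from most to least relevant, and protected features bypass both stages. The toy feature names other than the `p_sky_*` maps are invented.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if degenerate)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def select_features(features, labels, protected=(),
                    min_label_corr=0.5, max_pair_corr=0.98):
    # Stage 1: drop features weakly correlated with the labels.
    relevance = {k: abs(pearson(v, labels)) for k, v in features.items()}
    kept = [k for k in features
            if k in protected or relevance[k] >= min_label_corr]
    # Stage 2: visit protected features first, then by decreasing relevance,
    # and skip any unprotected feature collinear with one already selected.
    ordered = sorted(kept, key=lambda k: (k not in protected, -relevance[k]))
    selected = []
    for name in ordered:
        collinear = any(
            abs(pearson(features[name], features[s])) > max_pair_corr
            for s in selected
        )
        if name in protected or not collinear:
            selected.append(name)
    return selected


labels = [0, 0, 0, 0, 1, 1, 1, 1]  # 0 = sky, 1 = obstacle
features = {
    "p_sky_512": [0.1, 0.2, 0.1, 0.3, 0.9, 0.8, 0.7, 0.9],
    "p_sky_1024": [0.1, 0.1, 0.2, 0.2, 0.9, 0.9, 0.8, 0.8],
    "blue": [0.9, 0.8, 0.7, 0.9, 0.2, 0.3, 0.1, 0.4],
    "blue_scaled": [1.8, 1.6, 1.4, 1.8, 0.4, 0.6, 0.2, 0.8],
    "noise": [0.5, 0.1, 0.9, 0.3, 0.4, 0.2, 0.8, 0.6],
}
selected = select_features(features, labels,
                           protected=("p_sky_512", "p_sky_1024"))
```

In this toy case the uninformative `noise` feature is removed in stage 1, and only one of the two perfectly collinear blue features survives stage 2.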

In practice, this meta-model acts as a pixel-wise refinement layer, particularly effective in sparse or occluded scenes where the sky is partially obstructed by small objects and where a single-resolution CNN model tends to underperform.

4. Results

4.1. Model Evaluation

To evaluate the final segmentation performance, we trained U-Net++ models with EfficientNet-b4, b5, b6, and b7 encoders, corresponding to the top-performing backbones identified in the architecture benchmark. Model performance was assessed using the F1 score and IoU, computed as weighted averages by class support. In addition, we report two boundary-focused metrics: Boundary IoU [58] and Boundary F1 [59]. Boundary IoU evaluates overlap only within narrow bands around the predicted and ground-truth contours, yielding a boundary-sensitive analogue of IoU that is symmetric and less biased by region interiors; we follow the reference implementation and use a dilation ratio of . Boundary F1 (also called the boundary F-measure) is the harmonic mean of boundary precision and recall under a small localization tolerance; throughout this work we use a tolerance of pixels. These complementary measures directly target skyline fidelity and contour alignment. Baseline CNN-only results are summarized in Table 5.
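For clarity, the support-weighted region metrics can be sketched as below, assuming flattened binary label lists with 0 = sky and 1 = obstacle. The function name `weighted_iou_f1` is hypothetical; the boundary metrics are not reproduced here because their dilation ratio and tolerance settings are specific to the reference implementations.

```python
def weighted_iou_f1(y_true, y_pred, classes=(0, 1)):
    """Per-class IoU and F1, averaged with weights equal to class support."""
    n = len(y_true)
    iou_sum = f1_sum = 0.0
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        support = tp + fn  # number of ground-truth pixels of class c
        union = tp + fp + fn
        iou = tp / union if union else 0.0
        f1 = 2 * tp / (2 * tp + fp + fn) if union else 0.0
        iou_sum += support * iou
        f1_sum += support * f1
    return iou_sum / n, f1_sum / n


y_true = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 1, 0, 0, 0, 0, 1]
iou, f1 = weighted_iou_f1(y_true, y_pred)
```

In this toy example the obstacle class (support 6) has IoU 5/7 and the sky class (support 4) has IoU 3/5, so the weighted IoU is about 0.669 and the weighted F1 is 0.8.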

Table 5.

Performance of baseline U-Net++ models with EfficientNet encoders (without LGBM).

For each encoder, we further trained a dedicated post-processing meta-model based on LightGBM, as described in Section 3.2.5. Hyperparameter tuning was conducted using Optuna [60]. During training, we employed early stopping on the validation metric to automatically select the effective number of boosting iterations (trees). In practice, early stopping consistently halted training after fewer than 100 trees across all configurations, resulting in compact boosted tree models and keeping the post-processing stage lightweight. To convert the probabilistic output of the LGBM model into a binary mask, we evaluated several thresholding strategies: simple fixed thresholding, Otsu’s method [61], and Conditional Random Fields (CRF) [62]. Empirically, a single fixed decision threshold tuned on the validation set yielded the best overall results for all encoders. The results obtained with LGBM post-processing are summarized in Table 6.
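The fixed-threshold strategy can be illustrated with a small grid search on validation data. The sketch assumes that the threshold is chosen to maximize obstacle-class IoU and that candidates lie on a 0.1 grid; the actual selection criterion and grid are not specified above, and the probabilities shown are invented.

```python
def iou_positive(y_true, y_pred):
    """IoU of the positive (obstacle = 1) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    union = tp + fp + fn
    return tp / union if union else 0.0


def tune_threshold(probs, labels, candidates):
    """Return the candidate threshold with the highest validation IoU."""
    best_t, best_score = None, -1.0
    for t in candidates:
        preds = [1 if p >= t else 0 for p in probs]
        score = iou_positive(labels, preds)
        if score > best_score:  # keep the first maximum on ties
            best_t, best_score = t, score
    return best_t, best_score


# Hypothetical validation probabilities of the obstacle class.
val_probs = [0.05, 0.10, 0.35, 0.45, 0.55, 0.62, 0.80, 0.95]
val_labels = [0, 0, 0, 1, 0, 1, 1, 1]
best_t, best_iou = tune_threshold(
    val_probs, val_labels, candidates=[k / 10 for k in range(1, 10)]
)
```

The selected threshold is then applied unchanged to the test images, which keeps the binarization step free of per-image tuning.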

Table 6.

Performance of LGBM-enhanced segmentation models.

The LGBM post-processing module yields consistent improvements over the CNN-only baselines for all EfficientNet variants, enhancing both region-level and boundary-focused metrics. Across encoders, IoU increases by roughly –, while Boundary IoU and Boundary F1 improve by up to about and , respectively. Although these absolute gains may appear modest, on 1024 × 1024 images they correspond to correcting misclassified labels over thousands of pixels per frame, predominantly along object boundaries, which is perceptually significant for skyline delineation. Among the refined models, EfficientNet-b7 attains the highest region-level F1, IoU, and Boundary F1, whereas EfficientNet-b6 slightly outperforms it on Boundary IoU. EfficientNet-b4 closely matches b5 in region metrics but remains noticeably weaker on boundary scores, reinforcing the importance of explicitly evaluating boundary-sensitive criteria. In practice, this results in a clear accuracy–efficiency trade-off: EfficientNet-b7 maximizes segmentation quality, while smaller variants (b4–b6) sacrifice only a small amount of performance in exchange for substantially fewer parameters and more lightweight inference. Overall, combining a deep segmentation backbone with a supervised refinement layer yields precise sky–obstacle delineation with improved region overlap and markedly enhanced skyline fidelity across a range of model capacities.

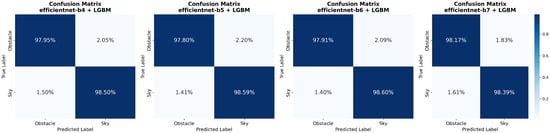

In addition to the region-level and boundary-focused metrics, we also examine confusion matrices for the LGBM-refined models, shown in Figure 8. These matrices provide a class-wise view of prediction behaviour by separating errors into false-sky (obstacle pixels predicted as sky) and false-obstacle (sky pixels predicted as obstacles). For solar irradiance forecasting, a pessimistic model is preferable: it should be more inclined to label ambiguous pixels as obstacles rather than sky, so that irradiance is slightly underestimated rather than overestimated. Among the tested variants, the LGBM + EfficientNet-b7 model best matches this behaviour, achieving the lowest false-sky rate while accepting a slightly higher false-obstacle rate compared to the other architectures.
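The two error types can be computed as in the sketch below. It assumes row-normalized rates (each error count divided by the number of ground-truth pixels of the corresponding class) and the convention 0 = sky, 1 = obstacle; the normalization used in Figure 8 may differ, and `confusion_rates` is a hypothetical helper.

```python
def confusion_rates(y_true, y_pred):
    """False-sky and false-obstacle rates for labels 0 = sky, 1 = obstacle."""
    pairs = list(zip(y_true, y_pred))
    sky_total = sum(1 for t, _ in pairs if t == 0)
    obstacle_total = sum(1 for t, _ in pairs if t == 1)
    # Obstacle pixels predicted as sky: irradiance would be overestimated.
    false_sky = sum(1 for t, p in pairs if t == 1 and p == 0)
    # Sky pixels predicted as obstacle: irradiance would be underestimated.
    false_obstacle = sum(1 for t, p in pairs if t == 0 and p == 1)
    return {
        "false_sky_rate": false_sky / obstacle_total if obstacle_total else 0.0,
        "false_obstacle_rate": false_obstacle / sky_total if sky_total else 0.0,
    }


y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 1, 1, 1, 1, 1, 0]
rates = confusion_rates(y_true, y_pred)
```

A pessimistic model in the sense described above is one whose `false_sky_rate` is low, even at the cost of a somewhat higher `false_obstacle_rate`.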

Figure 8.

Confusion matrices for the LGBM-refined models on the test set, showing class-wise sky/obstacle prediction rates. From left to right: EfficientNet-b4, EfficientNet-b5, EfficientNet-b6, and EfficientNet-b7 encoders.

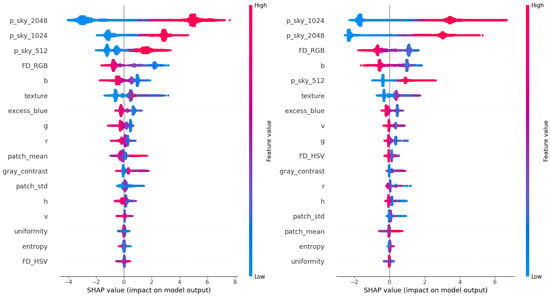

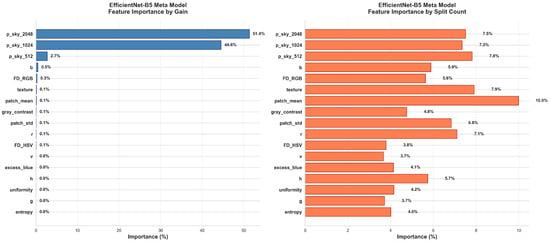

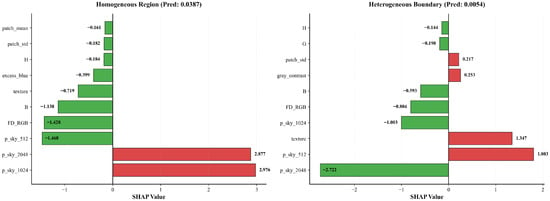

To better understand which features drive the improved segmentation accuracy, we analyzed representative LightGBM meta-models using SHAP [63]. We focus here on the EfficientNet-b5 and EfficientNet-b7 backbones, which span mid to high-capacity encoders. Figure 9 shows that the most impactful features across both configurations include multi-scale CNN predictions (p_sky_2048, p_sky_1024, p_sky_512), along with handcrafted descriptors such as Fisher Discriminant in RGB space, blue channel intensity, and texture. This highlights the value of combining deep and handcrafted features for reliable sky–obstacle boundary refinement, especially across varying spatial resolutions.

Figure 9.

SHAP summary plots for the LightGBM models based on EfficientNet-b5 predictions (left) and EfficientNet-b7 predictions (right). Each dot corresponds to a pixel sample, colored by feature value; greater horizontal spread indicates higher predictive influence.

To complement the SHAP-based interpretation, the built-in LightGBM feature importances were also examined using the gain and split count criteria (Figure 10) for the same two representative configurations. The gain metric measures how much each feature contributes to the reduction of the loss function, whereas the split count reflects how frequently a feature is selected across all decision trees.

Figure 10.

Built-in LightGBM feature importances for EfficientNet-b5 (top) and EfficientNet-b7 (bottom) meta-models, evaluated using gain (left) and split count (right).

In both EfficientNet-b5 and b7 meta-models, the multi-scale CNN predictions dominate the gain ranking, confirming that the base segmentation probabilities are the most informative predictors for sky–obstacle discrimination. However, the split count analysis reveals a broader distribution, with local statistical, textural, and color-based features appearing more frequently in splits. These features provide complementary thresholds that support fine-grained boundary refinement, even if their overall contribution remains smaller.

Minor differences between gain and split rankings mainly arise from correlations among color and luminance-related descriptors, reflecting mild multicollinearity effects also observed in the SHAP analysis. Overall, both analyses confirm that the LightGBM meta-model primarily relies on multi-scale CNN-derived probabilities while leveraging handcrafted features to adjust decision boundaries in visually complex regions.

4.2. Impact of the New Augmentations on Model Performance

To evaluate the contribution of chromatic aberration and fisheye lens distortion augmentations, we conducted an ablation study where both were omitted. EfficientNet-b5 and EfficientNet-b7 encoders with a U-Net++ decoder were trained following the same protocol described in Section 3.2.4, and each configuration was repeated across 10 independent runs. We report the mean IoU and standard deviation to account for variability.

When trained without the augmentations, EfficientNet-b5 achieved a mean IoU of , compared to with augmentations. For EfficientNet-b7, the mean IoU dropped from (with augmentations) to (without augmentations).

Although the numerical differences are modest, these results indicate that the proposed augmentations provide measurable benefits. More importantly, they enhance robustness by exposing models to optical variability, thereby supporting improved generalization across diverse lenses and imaging conditions. This is particularly valuable for real-world deployment, where heterogeneous hardware and optical characteristics are common.

4.3. Qualitative Analysis of Model Predictions

Figure 11 illustrates the advantage of using a LightGBM meta-model to enhance segmentation predictions. At resolution, a segmentation error is visible in the red-highlighted area, where a section of the sky is occluded by an overhead obstacle. Interestingly, this error is absent in the prediction, which better captures large structural context but suffers from coarser boundary precision, as seen in the blue-highlighted region. In contrast, the prediction preserves fine details but can be less stable in large homogeneous regions. The LightGBM model effectively fuses the strengths of predictions at different resolutions, producing a refined output that minimizes both types of error.

Figure 11.

Visualization of prediction complementarity on a challenging validation image. From left to right: input RGB fisheye image, EfficientNet-b5 sky–obstacle probability maps for input resolutions of 512 × 512, 1024 × 1024, and 2048 × 2048 pixels, and final binary mask produced by the LightGBM meta-model. The scene includes a complex skyline, with an obstacle dividing the sky into separate regions (red area) and dense vegetation in the lower hemisphere (blue area). The original image resolution is 1024 × 1024 pixels.

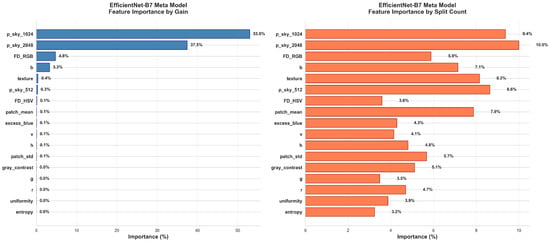

To better understand how the meta-model exploits these complementary behaviours, Figure 12 reports local SHAP waterfall plots for two representative sky pixels taken from the red and blue areas in Figure 11. In both cases, the desired output is a probability close to zero, corresponding to the sky class. For the pixel located in a homogeneous sky region (red area, left panel), the prediction values at and strongly push the model towards the obstacle class, but this tendency is counteracted by the prediction at together with several handcrafted descriptors (blue-channel intensity, Fisher Discriminant, texture, Excess Blue, and local statistics), which contribute in the opposite direction and keep the final probability close to zero. For the pixel on a heterogeneous boundary (blue area, right panel), the situation is reversed: the coarse prediction at and the local texture favour the obstacle class, while the finer-scale prediction at and , along with Fisher Discriminant and other colour features, pulls the score back towards the sky class.

Figure 12.

Local SHAP waterfall plots showing the top 10 features (with highest SHAP contribution) for two ground-truth sky pixels from Figure 11. (Left plot) sky pixel in a homogeneous region. (Right plot) sky pixel on a heterogeneous sky–obstacle boundary.

Formally, the LightGBM model outputs a logit value $z$ for each pixel, which is decomposed by SHAP as

$$z = \phi_0 + \sum_{i} \phi_i,$$

where $\phi_0$ is the global base value (the expected logit over the training data) and $\phi_i$ is the contribution of feature $i$ for that pixel. The corresponding obstacle probability is obtained by applying the sigmoid function

$$p_{\mathrm{obstacle}} = \sigma(z) = \frac{1}{1 + e^{-z}}.$$

For the homogeneous-sky pixel, the base value is and the sum of all SHAP contributions is . The raw logit is therefore

and the obstacle probability becomes

For the boundary sky pixel, the same base value combines with a SHAP sum of , giving

and thus

These examples illustrate how the meta-model adaptively reweights the multi-scale prediction values and handcrafted features so that, even when one scale misclassifies a pixel, the final decision remains consistent with the sky label.
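The additive structure of this explanation (raw logit equal to the base value plus the sum of per-feature SHAP contributions, followed by a sigmoid) can be reproduced with a few lines of code. All numerical values below are hypothetical and chosen only to mimic the homogeneous-sky case qualitatively; they are not the values reported for Figure 12.

```python
import math


def obstacle_probability(base_value, contributions):
    """Combine a base logit with SHAP contributions and apply the sigmoid."""
    logit = base_value + sum(contributions.values())
    return logit, 1.0 / (1.0 + math.exp(-logit))


# Hypothetical contributions: two scales push towards "obstacle" (positive),
# while the third scale and colour features push back towards "sky".
example = {
    "p_sky_2048": 1.2,
    "p_sky_1024": 0.8,
    "p_sky_512": -2.5,
    "blue": -1.0,
    "fisher_rgb": -0.9,
}
logit, prob = obstacle_probability(base_value=-0.4, contributions=example)
```

With these illustrative numbers the contributions sum to -2.4, the logit is -2.8, and the obstacle probability is roughly 0.06, so the pixel is still assigned to the sky class despite two scales voting for an obstacle.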

This multi-scale fusion strategy proves especially useful in heterogeneous environments: low-resolution predictions (e.g., 512 × 512) improve structural coherence in urban settings with frequent occlusions, while high-resolution predictions (e.g., 2048 × 2048) preserve fine details important in rural or vegetated landscapes.

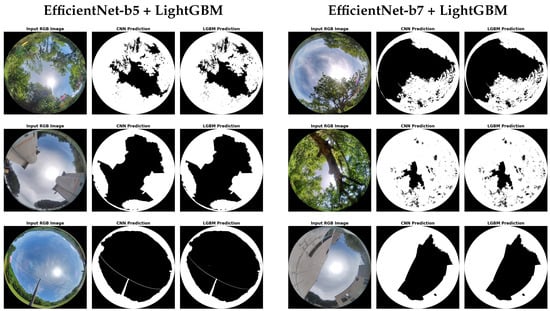

Figure 13 presents additional qualitative results for the EfficientNet-b5 and EfficientNet-b7 models across a variety of urban and rural scenes. Both models demonstrate strong generalization across environments, with the LGBM post-processing consistently correcting local errors and improving edge coherence. These visualizations support the quantitative performance gains reported in Section 4.1, highlighting the practical robustness of the combined segmentation pipeline in real-world scenarios.

Figure 13.

Qualitative sky–obstacle segmentation on unseen fisheye validation and test images. The left panel shows results from EfficientNet-b5 and the right panel from EfficientNet-b7. Each panel shows, from left to right, the input RGB image, the CNN prediction, and the LightGBM-refined mask.

4.4. Comparison with an External Baseline

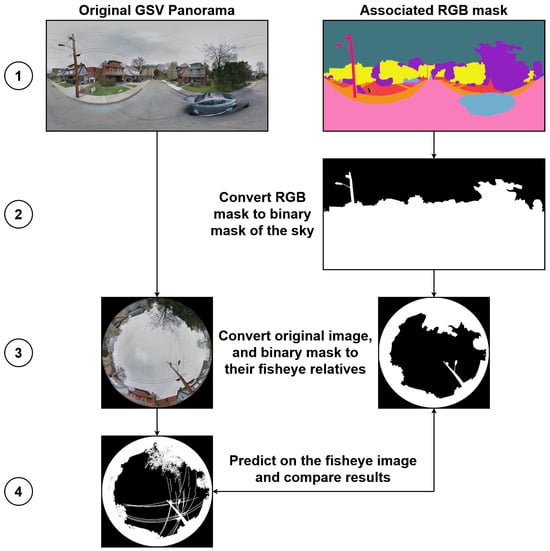

As highlighted in Section 1.3, direct comparison with existing segmentation benchmarks is hindered by the scarcity of high-quality fisheye datasets with accurate sky annotations. However, we identified a recent work [64] that employs a similar methodology using equirectangular panoramas from Google Street View. This study introduces the CVRG-Pano dataset and proposes two U-Net-based architectures for semantic segmentation of panoramic imagery.

To enable a fair comparison, we employed our fisheye transformation pipeline to convert the CVRG-Pano test images and their associated multi-class masks into the fisheye domain. The evaluation procedure is summarized in Figure 14. In step 1, each panoramic image is paired with its ground-truth semantic mask. In step 2, the RGB mask is converted to a binary sky mask. Step 3 involves projecting both the RGB image and the binary sky mask into the fisheye domain using the equidistant projection model, which is representative of low-cost commercial fisheye lenses. Finally, in step 4, we perform sky segmentation using our trained U-Net++ models with EfficientNet-b4, b5, b6, and b7 backbones, each evaluated with and without LightGBM-based post-processing refinement.
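Step 3 can be sketched with the equidistant model, in which the radial distance from the image center is proportional to the zenith angle. The sketch assumes an upward-looking camera with a 180° field of view, a panorama whose top row corresponds to the zenith, nearest-neighbor lookup, and an arbitrary azimuth origin; the function names are hypothetical and the released pipeline may differ in these conventions.

```python
import math


def fisheye_to_equirect(u, v, size, pano_w, pano_h):
    """Map a fisheye pixel to a panorama (row, col); None outside the disk."""
    center = (size - 1) / 2.0
    radius = size / 2.0
    dx, dy = u - center, v - center
    r = math.hypot(dx, dy)
    if r > radius:
        return None
    # Equidistant model: zenith angle grows linearly with radial distance.
    theta = (r / radius) * (math.pi / 2.0)
    phi = math.atan2(dy, dx) % (2.0 * math.pi)  # azimuth in [0, 2*pi)
    col = min(int(phi / (2.0 * math.pi) * pano_w), pano_w - 1)
    row = min(int(theta / math.pi * pano_h), pano_h - 1)
    return row, col


def render_fisheye(pano, size):
    """Resample a panorama (list of rows) into a size x size fisheye view."""
    pano_h, pano_w = len(pano), len(pano[0])
    out = []
    for v in range(size):
        row_out = []
        for u in range(size):
            src = fisheye_to_equirect(u, v, size, pano_w, pano_h)
            row_out.append(None if src is None else pano[src[0]][src[1]])
        out.append(row_out)
    return out


# Toy binary sky mask: upper half of the panorama is sky (True).
pano_mask = [[True] * 16 for _ in range(4)] + [[False] * 16 for _ in range(4)]
fisheye_mask = render_fisheye(pano_mask, size=21)
```

Because the same mapping is applied to the RGB image and to the binary mask, image and label remain aligned after projection. In the toy example, every pixel inside the disk falls in the upper hemisphere and is therefore labeled as sky.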

Figure 14.

Evaluation pipeline showing the four steps used to assess model generalization on the CVRG-Pano dataset. A detailed description of the four steps is provided in the main text.

We report IoU scores in Table 7. Despite annotation inconsistencies in CVRG-Pano, such as coarse boundaries and the omission of fine-grained obstacles like cables and thin branches, our models maintain strong generalization and achieve high performance, even on data not tailored for precise sky–obstacle segmentation.

Table 7.

IoU comparison of our models evaluated on fisheye projections of the CVRG-Pano dataset.

The IoU values of the base models on CVRG-Pano closely match those obtained on our internal fisheye test set, indicating good cross-dataset generalization. In contrast to our own dataset, LightGBM post-processing does not systematically improve IoU here: three backbones (b4–b6) exhibit a slight decrease, while EfficientNet-b7 shows only a modest gain. This behaviour is consistent with the nature of the CVRG-Pano annotations, whose coarse boundaries and missing small obstacles penalize boundary-focused refinements that produce sharper, more detailed skylines than those represented in the ground truth. In other words, the meta-model tends to correct visually plausible fine structures (e.g., tree crowns, cables) that are not labeled, which can reduce IoU with respect to the provided masks.

Nevertheless, all configurations achieve IoUs around 95–96% on this external dataset, underscoring the robustness of the proposed pipeline. From a practical perspective, the LightGBM-refined predictions may be particularly valuable not for maximizing IoU on CVRG-Pano, but for upgrading the quality of its sky annotations by supplying more accurate, high-resolution sky–obstacle boundaries.

4.5. End-to-End Runtime Performance on CPU and GPU

We evaluated the runtime of the main components of the segmentation pipeline for EfficientNet-b4, b5, b6, and b7 backbones. For each model, we measured the total time required for all CNN forward passes across the three input resolutions used in our pipeline (512 × 512, 1024 × 1024, and 2048 × 2048), together with the time spent on handcrafted feature extraction and subsequent LightGBM inference. All timings are averaged over 42 fisheye images of size 1024 × 1024. CPU experiments were performed on an Intel Core i5-1335U (1.3 GHz). For GPU measurements, CNN inference was executed on an NVIDIA H100 (80 GB VRAM) hosted in a server with an AMD EPYC 9124 (3 GHz), while feature extraction and LightGBM inference remained CPU-bound. The full set of results is reported in Table 8.

Table 8.

Average per-image runtime (mean ± std, in seconds) for CNN prediction across three input resolutions, handcrafted feature extraction, and LightGBM inference across EfficientNet-b4–b7 backbones, computed over 42 images of size 1024 × 1024.

On the CPU-only configuration, the end-to-end latency ranges from 16.8 s (EfficientNet-b4) to 30.7 s (EfficientNet-b7). The CNN stage accounts for more than 90% of this cost and is itself dominated by the 2048 × 2048 forward pass, which represents roughly 70–80% of the total CNN runtime. For comparison, a single 1024 × 1024 CNN forward pass requires only 2.8–5.6 s on CPU, so the complete multi-scale + LightGBM pipeline is approximately 5–6× slower than a single-resolution prediction. This highlights the computational price of the improved accuracy obtained with multi-scale inference and post-processing.
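The slowdown factor follows directly from the figures above, under the assumption that the lower and upper ends of each reported range correspond to the same backbones (EfficientNet-b4 and EfficientNet-b7, respectively):

$$\frac{16.8\ \text{s}}{2.8\ \text{s}} = 6.0, \qquad \frac{30.7\ \text{s}}{5.6\ \text{s}} \approx 5.5.$$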

On the GPU configuration, multi-scale CNN inference becomes inexpensive, with total forward times between 0.33 s and 0.50 s depending on the backbone. A single 1024 × 1024 CNN pass on the H100 requires only 0.06–0.12 s, illustrating the lower bound achievable on high-end accelerators. However, the overall runtime (2.4–2.8 s) is dominated by CPU-side feature extraction and LightGBM inference, whose performance depends primarily on the host CPU rather than on the GPU. As a result, differences between backbones shrink to only a few hundred milliseconds, making the most accurate model (EfficientNet-b7) essentially cost-neutral on GPU. In contrast, on CPU-only systems, smaller backbones such as b4 or b5 offer a more favorable accuracy–latency trade-off while retaining strong segmentation performance.

5. Discussion

This study demonstrates that sky–obstacle segmentation in hemispherical fisheye imagery can be effectively achieved through a hybrid framework combining deep learning with structured post-processing. Synthetic fisheye views were generated from Google Street View panoramas and enriched with lens and lighting-aware augmentations to produce a dataset that captures global variability without the cost of large-scale field acquisition. Experimental results confirm that convolutional neural networks trained on this dataset achieve high accuracy on real fisheye images, and that the LightGBM refinement step further improves segmentation precision along challenging boundaries, particularly when evaluated with boundary-focused metrics.

Two aspects are particularly noteworthy. First, the proposed augmentations not only yield gains in IoU but also improve robustness. This is especially valuable given the relatively limited dataset size: augmentations compensate for data scarcity by exposing the model to a wider range of optical variability. As a result, the framework generalizes well to real-world fisheye captures and to an independent public benchmark (CVRG-Pano), underscoring its reliability beyond the training distribution. Second, the integration of the post-processing meta-model enables a true pixel-wise analysis. Leveraging CNN-derived multi-scale predictions alongside handcrafted descriptors, the meta-model refines fine occlusions such as vegetation or cables. This precise pixel-wise fidelity is particularly important for long-term solar resource assessment, where shading factor estimation depends on accurate delineation of skyline masks.

Beyond these quantitative gains, the behaviour of the hybrid CNN-LightGBM pipeline is qualitatively different from more classical graph-based post-processing strategies, such as dense CRFs. These models typically enforce local smoothness with contrast-sensitive pairwise terms, which is effective at removing small isolated errors but tends to oversmooth thin occluders and assumes that boundaries align well with colour or intensity edges. In our fisheye setting, thin structures (branches, cables) and chromatic aberrations frequently violate these assumptions. By contrast, the LightGBM meta-model operates directly on heterogeneous, pixel-wise features (multi-scale CNN probabilities, multi-space colour components, local statistics, and texture descriptors) and learns non-linear combinations that distinguish large homogeneous sky regions from cluttered boundaries. This design explains why the hybrid model yields not only modest improvements in region-level IoU over the pure CNN baseline, but also markedly larger gains on boundary-sensitive metrics (Boundary IoU and Boundary F1).

An additional advantage of the LightGBM layer is its compatibility with post-hoc interpretability methods: gain and split-based feature importances, together with SHAP values, show that the meta-model primarily relies on multi-scale CNN probabilities while using colour and textural descriptors to refine decisions in visually complex regions. Together with these feature-attribution results, the observed performance gains suggest that most of the useful spatial regularisation is already captured by the learned meta-model through its access to multi-scale CNN predictions and local contextual descriptors, making the hybrid CNN-LightGBM approach a data-driven alternative to manually designed CRF and graph-based post-processing in fisheye sky imagery.

5.1. Limitations

While the proposed framework is effective, several limitations should be acknowledged. Our pipeline includes a calibration-based procedure for fisheye disk extraction (Section 3.2.1), which requires checkerboard calibration images. Alternative approaches using image processing to automatically crop the fisheye disk without calibration do exist, but were not implemented here, since our calibration-driven method was sufficient in the present context.

In addition, the full multi-scale pipeline is computationally demanding, especially on low-power CPUs. Most of the runtime is spent in the repeated CNN forward passes at high resolutions (in particular 2048 × 2048), with feature extraction and LightGBM inference adding a smaller but non-negligible overhead. As a result, the current design is not specifically tailored to ultra-constrained embedded platforms. In scenarios where efficiency is paramount, practitioners can trade accuracy for speed in several ways: by adopting lighter encoders (e.g., EfficientNet-b0/b1 with U-Net or U-Net++), by reducing the number or the maximum scale of input resolutions (for instance, dropping the 2048 × 2048 pass or relying on a single 1024 × 1024 prediction), and by limiting the set of handcrafted features used by the meta-model. These variants provide straightforward paths to substantially lower latency while retaining a large fraction of the segmentation quality.

Finally, the dataset size remains modest relative to large-scale vision benchmarks. Although augmentation strategies mitigate this limitation, broader geographic and temporal diversity would further strengthen robustness. In particular, the dataset was originally collected for solar irradiance prediction, and therefore contains very few low-illumination conditions (e.g., night-time scenes). The distribution of test images could also be improved: while it already spans urban, semi-urban, and rural areas, it lacks a wider range of environmental conditions such as snow, fog, or heavy rain, which are important for fully stress-testing sky–obstacle segmentation models.

5.2. Future Work

Potential extensions concern both modeling and data. On the methodological side, a promising direction is the design of distortion-aware architectures tailored to fisheye sky segmentation. Integrating operators such as deformable convolutions or radial/polar transformers could better account for the non-uniform spatial sampling of fisheye lenses; to the best of our knowledge, no dedicated distortion-aware framework has yet been proposed specifically for this task.

At the data generation stage, our current fisheye rendering pipeline relies on nearest-neighbor interpolation when mapping equirectangular panoramas to the fisheye domain. While this choice is sufficient for segmentation and preserves label alignment, it can introduce blocky artefacts in the synthesized images. Future work could investigate higher-order resampling schemes, such as bilinear or bicubic interpolation, or learnable warping modules to produce smoother, more photorealistic fisheye renderings, which may further improve generalization to real-world imagery.
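The difference between the two resampling schemes can be illustrated on a tiny single-channel image. This is a generic sketch of nearest-neighbor and bilinear lookup at a fractional source coordinate, not the rendering code of the pipeline; both function names are hypothetical.

```python
import math


def sample_nearest(img, x, y):
    """Value of the pixel closest to the fractional coordinate (x, y)."""
    h, w = len(img), len(img[0])
    col = min(max(int(round(x)), 0), w - 1)
    row = min(max(int(round(y)), 0), h - 1)
    return img[row][col]


def sample_bilinear(img, x, y):
    """Distance-weighted average of the four pixels surrounding (x, y)."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


img = [[0.0, 100.0], [100.0, 200.0]]
nearest_value = sample_nearest(img, 0.4, 0.4)
bilinear_value = sample_bilinear(img, 0.5, 0.5)
```

Nearest-neighbor lookup returns one of the original pixel values, which is why it suits label masks, whereas bilinear lookup returns a blend of neighbors and yields smoother RGB renderings. A smoother scheme would therefore most naturally be applied to the images only, with masks still resampled by nearest neighbor.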

From a data perspective, applications that go beyond solar irradiance prediction may benefit from broader coverage than offered by the present dataset. In particular, additional fisheye data could target low-illumination scenarios and a wider range of weather and environmental conditions, as well as more diverse geographic and seasonal contexts.

6. Conclusions

A scalable and affordable framework for hemispherical sky–obstacle segmentation has been presented, combining synthetic fisheye data generation from Google Street View panoramas, lens-aware augmentation, and a hybrid CNN-LightGBM architecture. To ensure transparency and reproducibility, the complete source code and the dataset annotations are publicly released, together with a script that enables retrieval of the original panoramas via the official Google Street View API. The real fisheye images captured with smartphone lens attachments, used for validation and testing, are also shared in full.

On real fisheye images acquired with low-cost smartphone systems, the proposed framework achieves up to 96.63% IoU, 98.29% F1, 92.25% Boundary F1, and 91.43% Boundary IoU, demonstrating both high region-level accuracy and precise sky–obstacle boundary delineation across devices and acquisition sites. Additional experiments on an external panoramic benchmark (CVRG-Pano) confirm that these models generalize well beyond the training distribution, even when evaluated on data not specifically tailored for fine-grained skyline annotations.
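For reference, the two region-level metrics can be computed from binary masks as in the sketch below. It uses the standard pixel-count definitions; the function name and the convention of returning 1.0 for two empty masks are assumptions, and the aggregation over images used in the evaluation is not reproduced here.

```python
import numpy as np


def region_metrics(pred, target):
    """Return (IoU, F1) of the positive (sky) class for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.count_nonzero(pred & target)    # sky predicted as sky
    fp = np.count_nonzero(pred & ~target)   # obstacle predicted as sky
    fn = np.count_nonzero(~pred & target)   # sky predicted as obstacle
    union = tp + fp + fn
    # Assumed convention: two empty masks count as a perfect match.
    iou = tp / union if union else 1.0
    f1 = 2 * tp / (2 * tp + fp + fn) if union else 1.0
    return iou, f1


if __name__ == "__main__":
    pred = np.array([[1, 1], [0, 0]])
    target = np.array([[1, 0], [0, 0]])
    print(region_metrics(pred, target))
```

When both metrics are computed from the same pixel counts, they are linked by F1 = 2·IoU / (1 + IoU). For example, an IoU of 0.9663 gives an F1 of about 0.9829.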

Beyond its quantitative performance, the framework establishes a practical basis for long-term solar resource assessment, while remaining relevant for environmental monitoring and navigation tasks. The complete segmentation pipeline is integrated into a shading estimation tool that computes shading factors from fisheye imagery, enabling reliable quantification of obstacle-induced solar losses along the sun’s path.
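A simplified version of such a shading computation is sketched below; it is not the tool described above. It assumes a zenith-pointing fisheye mask under an equidistant projection with a 180° field of view, north at the top of the image and east to the right, and sun positions supplied by the caller as zenith and azimuth angles in degrees. The function names, the orientation convention, and the optional per-sample weights (for instance beam irradiance) are all hypothetical.

```python
import numpy as np


def sun_to_pixel(zenith_deg, azimuth_deg, size, fov_deg=180.0):
    """Map sun angles to (row, col) in a square, zenith-pointing fisheye mask.

    Assumed conventions: equidistant projection, north up, east right,
    azimuth measured clockwise from north.
    """
    c = (size - 1) / 2.0
    r = np.asarray(zenith_deg, dtype=float) / (fov_deg / 2.0) * c
    az = np.radians(np.asarray(azimuth_deg, dtype=float))
    row = np.rint(c - r * np.cos(az)).astype(int)
    col = np.rint(c + r * np.sin(az)).astype(int)
    return row, col


def shading_factor(sky_mask, zenith_deg, azimuth_deg, weights=None):
    """Weighted fraction of above-horizon sun positions hidden by obstacles.

    `sky_mask` is a square array with 1 for sky and 0 for obstacle.
    """
    zenith = np.asarray(zenith_deg, dtype=float)
    azimuth = np.asarray(azimuth_deg, dtype=float)
    w = np.ones_like(zenith) if weights is None else np.asarray(weights, dtype=float)
    above = zenith < 90.0                     # ignore night-time samples
    if not above.any() or w[above].sum() == 0:
        raise ValueError("no weighted sun positions above the horizon")
    row, col = sun_to_pixel(zenith[above], azimuth[above], sky_mask.shape[0])
    visible = sky_mask[row, col].astype(bool)
    return 1.0 - float(np.sum(w[above] * visible) / np.sum(w[above]))


if __name__ == "__main__":
    mask = np.zeros((101, 101), dtype=np.uint8)
    mask[:50] = 1                             # toy scene: sky to the north only
    # One sun position to the north, one to the south, one below the horizon.
    print(shading_factor(mask, [45, 45, 95], [0, 180, 0]))
```

In practice the sun positions would come from a solar-position algorithm evaluated over the period of interest, and the mask orientation would have to be aligned with true north before any lookup.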

Future extensions may further improve the approach through distortion-aware architectures tailored to fisheye optics, lightweight variants suitable for constrained hardware, and expanded datasets that cover a broader range of geographic contexts, illumination regimes, and weather conditions to strengthen generalization.

Author Contributions

Conceptualization, N.B.; methodology, N.B.; software, N.B.; validation, N.B.; formal analysis, N.B.; investigation, N.B.; resources, N.B.; data curation, N.B.; writing—original draft preparation, N.B.; writing—review and editing, N.B. and V.B.; visualization, N.B.; supervision, V.B.; project administration, V.B.; funding acquisition, V.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in Fisheye sky segmentation at https://gitlab.laas.fr/nbouillon/fisheye-sky-segmentation (accessed on 1 December 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Polo, J.; García, R.J. Solar Potential Uncertainty in Building Rooftops as a Function of Digital Surface Model Accuracy. Remote Sens. 2023, 15, 567.

- Duffie, J.A.; Beckman, W.A. Solar Engineering of Thermal Processes, 4th ed.; Wiley: Hoboken, NJ, USA, 2013.

- Cao, K.B.K. Photovoltaic Applications in Demanding Situations: Estimation and Optimisation of Solar Ressources for Autonomous Power Supplies. Ph.D. Thesis, INSA de Toulouse, Toulouse, France, 2023.

- Kannala, J.; Brandt, S. A generic camera model and calibration method for conventional, wide-angle, and fish-eye lenses. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1335–1340.

- Jakab, D.; Deegan, B.M.; Sharma, S.; Grua, E.M.; Horgan, J.; Ward, E.; van de Ven, P.; Scanlan, A.; Eising, C. Surround-View Fisheye Optics in Computer Vision and Simulation: Survey and Challenges. IEEE Trans. Intell. Transp. Syst. 2024, 25, 10542–10563.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Zhou, B.; Zhao, H.; Puig, X.; Xiao, T.; Fidler, S.; Barriuso, A.; Torralba, A. Semantic understanding of scenes through the ADE20K dataset. Int. J. Comput. Vis. 2019, 127, 302–321.

- Huang, X.; Wang, P.; Cheng, X.; Zhou, D.; Geng, Q.; Yang, R. The ApolloScape Open Dataset for Autonomous Driving and Its Application. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2702–2719.

- Dev, S.; Savoy, F.M.; Lee, Y.H.; Winkler, S. Estimating solar irradiance using sky imagers. Atmos. Meas. Tech. 2019, 12, 5417–5429.

- Dev, S.; Savoy, F.M.; Lee, Y.H.; Winkler, S. Estimation of solar irradiance using ground-based whole sky imagers. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 7236–7239.

- Rajagukguk, R.A.; Kamil, R.; Lee, H.J. A Deep Learning Model to Forecast Solar Irradiance Using a Sky Camera. Appl. Sci. 2021, 11, 5049.

- Manzoor, A.; Mohandas, R.; Scanlan, A.; Grua, E.M.; Collins, F.; Sistu, G.; Eising, C. A Comparison of Spherical Neural Networks for Surround-View Fisheye Image Semantic Segmentation. IEEE Open J. Veh. Technol. 2025, 6, 717–740.

- Kumar, A.; Kashyap, Y.; Sharma, K.M.; Vittal, K.P.; Shubhanga, K.N. MSSEAG-UNet: A Novel Deep Learning Architecture for Cloud Segmentation in Fisheye Sky Images and Solar Energy Forecast. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–13.

- Luo, J.; Pan, Y.; Su, D.; Zhong, J.; Wu, L.; Zhao, W.; Hu, X.; Qi, Z.; Lu, D.; Wang, Y. Innovative cloud quantification: Deep learning classification and finite-sector clustering for ground-based all-sky imaging. Atmos. Meas. Tech. 2024, 17, 3765–3781.

- Shi, C.; Zhou, Y.; Qiu, B.; He, J.; Ding, M.; Wei, S. Diurnal and nocturnal cloud segmentation of all-sky imager (ASI) images using enhancement fully convolutional networks. Atmos. Meas. Tech. 2019, 12, 4713–4724.

- Nie, Y.; Paletta, Q.; Scott, A.; Pomares, L.M.; Arbod, G.; Sgouridis, S.; Lasenby, J.; Brandt, A. Sky image-based solar forecasting using deep learning with heterogeneous multi-location data: Dataset fusion versus transfer learning. Appl. Energy 2024, 369, 123467.

- Bour, H.; El Merabet, Y.; Ruichek, Y.; Messoussi, R.; Benmiloud, I. An Efficient Sky Detection Algorithm From Fisheye Image Based on region classification and segment analysis. Trans. Mach. Learn. Artif. Intell. 2017, 5, 714–724.

- Merabet, Y.E.; Ruichek, Y.; Ghaffarian, S.; Samir, Z.; Boujiha, T.; Touahni, R.; Messoussi, R.; Sbihi, A. Hellinger Kernel-based Distance and Local Image Region Descriptors for Sky Region Detection from Fisheye Images. In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP 2017), Porto, Portugal, 27 February–1 March 2017; Scitepress: Setúbal, Portugal, 2017; Volume 4, pp. 419–427.

- Laungrungthip, N.; McKinnon, A.; Churcher, C.; Unsworth, K. Edge-based detection of sky regions in images for solar exposure prediction. In Proceedings of the 2008 23rd International Conference Image and Vision Computing, Christchurch, New Zealand, 26–28 November 2008; pp. 1–6.

- Rich, P. Characterizing Plant Canopies with Hemispherical Photographs. Remote Sens. Rev. 1990, 5, 13–29.

- Jonckheere, I.; Nackaerts, K.; Muys, B.; Coppin, P. Assessment of automatic gap fraction estimation of forests from digital hemispherical photography. Agric. For. Meteorol. 2005, 132, 96–114.

- Song, Y.; Luo, H.; Ma, J.; Hui, B.; Chang, Z. Sky Detection in Hazy Image. Sensors 2018, 18, 1060.

- de Croon, G.; De Wagter, C.; Remes, B.; Ruijsink, R. Sky Segmentation Approach to obstacle avoidance. In Proceedings of the 2011 Aerospace Conference, Big Sky, MT, USA, 5–12 March 2011; pp. 1–16.

- Nice, K.A.; Wijnands, J.S.; Middel, A.; Wang, J.; Qiu, Y.; Zhao, N.; Thompson, J.; Aschwanden, G.D.P.A.; Zhao, H.; Stevenson, M. Sky Pixel Detection in Outdoor Imagery Using an Adaptive Algorithm and Machine Learning. Urban Clim. 2020, 31, 100572.

- Kuang, B.; Rana, Z.A.; Zhao, Y. Sky and Ground Segmentation in the Navigation Visions of the Planetary Rovers. Sensors 2021, 21, 6996.

- Manzoor, A.; Singh, A.; Sistu, G.; Mohandas, R.; Grua, E.; Scanlan, A.; Eising, C. Deformable Convolution Based Road Scene Semantic Segmentation of Fisheye Images in Autonomous Driving. arXiv 2024, arXiv:2407.16647.

- Playout, C.; Ahmad, O.; Lecue, F.; Cheriet, F. Adaptable Deformable Convolutions for Semantic Segmentation of Fisheye Images in Autonomous Driving Systems. arXiv 2021, arXiv:2102.10191.

- Athwale, A.; Afrasiyabi, A.; Lagüe, J.; Shili, I.; Ahmad, O.; Lalonde, J.F. DarSwin: Distortion Aware Radial Swin Transformer. arXiv 2024, arXiv:2304.09691.

- Ramalingam, S.; Bouaziz, S.; Sturm, P.; Brand, M. SKYLINE2GPS: Localization in urban canyons using omni-skylines. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 3816–3823.

- Wang, J.; Xu, B.; Liu, J.; Gao, K.; Zhang, S. Sky-GVIO: Enhanced GNSS/INS/Vision Navigation with FCN-Based Sky Segmentation in Urban Canyon. Remote Sens. 2024, 16, 3785.

- Lee, M.J.L.; Lee, S.; Ng, H.-F.; Hsu, L.-T. Skymask Matching Aided Positioning Using Sky-Pointing Fisheye Camera and 3D City Models in Urban Canyons. Sensors 2020, 20, 4728.

- Kallio, V. Sky Segmentation of Fisheye Images for Identifying Non-Line-of-Sight Satellites. Master’s Thesis, Tampere University, Tampere, Finland, 2023.

- Menier, C.; Meurie, C.; Ruichek, Y.; Marais, J. A Patch Based Fisheye Image Segmentation for Satellite State Characterization. In Proceedings of the 2025 IEEE/ION Position, Location and Navigation Symposium (PLANS), Salt Lake City, UT, USA, 28 April–1 May 2025; pp. 1479–1487.

- Middel, A.; Lukasczyk, J.; Maciejewski, R.; Demuzere, M.; Roth, M. Sky View Factor footprints for urban climate modeling. Urban Clim. 2018, 25, 120–134.

- Zeng, L.; Lu, J.; Li, W.; Li, Y. A fast approach for large-scale Sky View Factor estimation using street view images. Build. Environ. 2018, 135, 74–84.

- Webster, C.; Mazzotti, G.; Essery, R.; Jonas, T. Enhancing airborne LiDAR data for improved forest structure representation in shortwave transmission models. Remote Sens. Environ. 2020, 249, 112017.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Schneider, D.; Schwalbe, E.; Maas, H.G. Validation of geometric models for fisheye lenses. ISPRS J. Photogramm. Remote Sens. 2009, 64, 259–266.

- The GIMP Development Team. GNU Image Manipulation Program (GIMP), Version 3.0.4; Community, Free Software (license GPLv3). 2025. Available online: https://www.gimp.org/ (accessed on 1 December 2025).

- Scaramuzza, D.; Martinelli, A.; Siegwart, R. A Toolbox for Easily Calibrating Omnidirectional Cameras. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 5695–5701.

- Pönitz, T. Python Omnidirectional Camera Calibration. Available online: https://github.com/tasptz/py-omnicalib (accessed on 12 August 2025).

- Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 25, 120–123.

- Yun, S.; Han, D.; Chun, S.; Oh, S.J.; Yoo, Y.; Choe, J. CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6022–6031.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17), San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 6105–6114.

- Xie, C.; Tan, M.; Gong, B.; Wang, J.; Yuille, A.L.; Le, Q.V. Adversarial Examples Improve Image Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 816–825.