Abstract

Hyperspectral remote sensing images (HSIs) provide invaluable information for environmental and agricultural monitoring, yet they are often degraded by atmospheric haze, which distorts spatial and spectral content and hinders downstream analysis. Progress in hyperspectral dehazing has been limited by the absence of paired real-haze benchmarks; most prior studies rely on synthetic haze or unpaired data, restricting fair evaluation and generalization. We present HyperHazeOff, the first comprehensive benchmark for hyperspectral dehazing that unifies data, tasks, and evaluation protocols. It comprises (i) RRealHyperPDID, 110 scenes with paired real-haze and haze-free HSIs (plus RGB images), and (ii) RSyntHyperPDID, 2616 paired samples generated using a physically grounded haze formation model. The benchmark also provides agricultural field delineation and land classification annotations for downstream task quality assessment, standardized train/validation/test splits, preprocessing pipelines, baseline implementations, pretrained weights, and evaluation tools. Across six state-of-the-art methods (three RGB-based and three HSI-specific), we find that hyperspectral models trained on the widely used HyperDehazing dataset fail to generalize to real haze, while training on RSyntHyperPDID enables significant real-haze restoration by AACNet. HyperHazeOff establishes reproducible baselines and is openly available to advance research in hyperspectral dehazing.

1. Introduction

Haze complicates image analysis and adversely affects the performance of machine vision systems [,]. The haze effect results from the partial absorption and scattering of light reflected from the scene as it travels through the turbid medium between the scene and the sensor. The goal of dehazing is to reconstruct the scene as it would appear under haze-free conditions, given a hazy observation. This study addresses the problem of remote sensing image dehazing (RSID). Following the formulation in [], RSID methods can be categorized along two principal axes. The first distinguishes between mono-temporal approaches, which rely on a single hazy observation of the scene, and multi-temporal approaches [], which exploit time-series data to recover haze-free information. The second axis differentiates mono-modal methods, which operate solely on optical imagery, from multi-modal methods that incorporate additional data sources such as Synthetic Aperture Radar (SAR) [,]. In this work, we focus on the mono-modal, mono-temporal setting.

Classical mono-temporal dehazing techniques are based on physical models of haze formation combined with simplifying assumptions, such as the dark channel prior (DCP), which assumes the existence of near-zero intensity values in local haze-free image patches [], as well as other model-based approaches [,,,]. In recent years, deep learning methods have been introduced for dehazing, ranging from early convolutional neural networks (CNNs) such as FFANet [], RSDehazeNet [], and CANet [] to transformer-based architectures like DehazeFormer [], AIDTransformer [], and RSDformer []. The latest Mamba-based models, such as RSDehamba [] and HDMba [], are reported to have improved capability to capture spatially varying haze distribution. Beyond model architectures, several works propose specialized training strategies to improve generalization, including multi-negative contrastive auxiliary learning [].

Developing and evaluating dehazing algorithms requires datasets containing paired hazy and haze-free images of the same scene. However, haze is a difficult-to-reproduce and poorly controlled imaging condition. Open-source datasets with haze-free references and real haze generated by haze machines are available [] for ground-based image dehazing, but this approach is infeasible for RSID. Only unpaired datasets with real haze had previously been available for RSID, and there was a lack of corresponding clean reference images []. To compensate for this, haze augmentation, where haze is synthetically applied to clear remote sensing images using physical or empirical models, became a common practice. However, the quality of the augmentation model critically affects the realism of the generated data. For example, [] demonstrated that imperfections in the haze synthesis model can cause noticeable brightness loss in augmented images. Synthetic augmentation thus can only serve as a complementary tool and cannot fully replace real paired data []. The first dataset with hazy and haze-free remote sensing images containing RGB image pairs derived from multispectral (MS) satellite data, Real-World Remote Sensing Hazy Image Dataset (RRSHID), was introduced in 2025 [].

Dehazing hyperspectral images (HSIs) presents unique challenges. Compared to RGB imagery, HSIs capture rich spectral information, offering considerable potential for downstream remote sensing tasks. While several specialized HSI dehazing methods have been proposed [,,,], evaluating their real-world performance remains difficult due to the absence of paired hyperspectral datasets with real haze. Unlike multispectral imagery, hyperspectral remote sensing data are expensive and technically complex to acquire. No dataset comparable to RRSHID currently exists for the hyperspectral domain. Moreover, there are no standardized haze-augmented benchmarks with defined train–validation–test splits or publicly available pre-trained models. The only available dataset, HyperDehazing [], lacks standardized splits, while its accompanying repository [] provides no trained weights. As a result, the capability of existing HSI dehazing models to generalize to real-world data remains largely unexplored.

To address this gap, we introduce the Remote Sensing Hyperspectral Dehazing Benchmark along with the Remote Sensing Real-World Hyperspectral Paired Dehazing Image Dataset (RRealHyperPDID)—the first hyperspectral dataset containing paired real-world hazy and haze-free remote sensing images.

The key contributions of this work are as follows:

- RRealHyperPDID, the first hyperspectral dataset containing paired real-world hazy and haze-free remote sensing images, providing the first opportunity to rigorously evaluate the real-world performance of hyperspectral dehazing algorithms.

- Agricultural field delineation and land classification annotations, enabling quantitative assessment of how dehazing influences downstream tasks relevant to precision agriculture and supporting downstream task quality assessment (DTQA) beyond traditional image-level metrics.

- Comprehensive evaluation of six dehazing algorithms, including both RGB-based and hyperspectral-specific methods, which reveals that existing state-of-the-art HSI models trained on previously available synthetic datasets (e.g., HyperDehazing) fail to generalize to real-haze data.

- RSyntHyperPDID, a large-scale synthetic hyperspectral dehazing dataset generated using a physically based haze formation model. Models trained on RSyntHyperPDID demonstrate effective generalization to real hazy data, establishing a new baseline.

- A fully open-source benchmark (HyperHazeOff), including standardized data splits (for both RSyntHyperPDID and HyperDehazing), preprocessing pipelines for hyperspectral and RGB data, baseline implementations, pretrained model weights, and evaluation scripts for image quality assessment (IQA) and DTQA—ensuring transparency and reproducibility for future research.

2. Related Work

2.1. Dehazing Datasets

The primary sources of HSIs used in RSID datasets are the Chinese Gaofen-5 (GF-5) satellite and the American AVIRIS airborne sensor. GF-5 data have a spatial resolution of 30 m per pixel, whereas the resolution of AVIRIS data depends on flight altitude and typically ranges from 2 to 20 m per pixel. Both sensors cover a similar spectral range (400–2500 nm), but the GF-5 provides higher spectral resolution—330 spectral channels compared to 224 channels for AVIRIS.

2.1.1. Datasets with Synthetic Haze

Synthetic haze augmentation is commonly used to generate paired data for training and evaluating dehazing algorithms. In this approach, haze is artificially added to clear HS remote sensing images using physical models of atmospheric scattering, which simulate the wavelength-dependent attenuation and diffusion of light.

Commonly, one of two models is employed for synthetic haze augmentation. The atmospheric scattering (AS) model is physically based and accounts for both the additional illumination caused by light scattering from haze particles and the partial absorption of light reflected from the scene:

where is the hazy image, is the haze-free image, represents atmospheric light, is the haze transmission map (HTM), is the scattering coefficient (dependent on atmospheric particle properties, including size), and is the scene depth or, in remote sensing, the depth of the haze at x.

A simplified alternative model is expressed as

where is the haze abundance coefficient, and the HTM is assumed to be wavelength()-independent.

Model (2) has been employed in constructing the HyperDehazing [] and HDD [] datasets. The HDD dataset includes a single AVIRIS HS image with artificially added haze, and the corresponding augmentation procedure is described only briefly []. In contrast, the HyperDehazing dataset provides a detailed description of its haze synthesis process, known as Hazy HSI Synthesis (HHS) []. HyperDehazing contains 2000 pairs of GF-5 HS images with augmented haze. To generate the HTM, donor images containing real haze were used, and their transmission maps () were extracted using a DCP-based method. A total of 20 distinct HTMs were derived. The haze abundance coefficient was estimated for five representative land-cover types—vegetation, buildings, water, bare soil, and mountains—based on images with real haze.

The AS model has primarily been applied to MS imagery [,,] through Channel Correlation-Based Haze Synthesis (CCHS). In CCHS, the HTM for each spectral channel is derived from a guided channel using the wavelength ratio:

The guided-channel HTM is typically extracted from real hazy images. In [], the scattering coefficient is considered spatially uniform. However, as noted in [], remote sensing images can cover large geographical areas (up to tens of kilometers), leading to spatial variations in haze density and properties. Accordingly, the scattering coefficient is modeled as spatially dependent through a parameter , i.e., . is estimated from . The atmospheric light is estimated as the mean intensity of the top 0.01% brightest pixels in each spectral channel.

2.1.2. Datasets with Real Haze

Both HyperDehazing and HDD also include subsets of HS images with real haze but without corresponding haze-free references. HyperDehazing contains 70 GF-5 images, while HDD includes 20 hazy GF-5 satellite images and 20 AVIRIS airborne images.

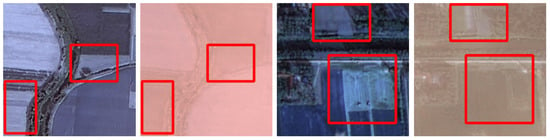

Paired hyperspectral datasets with real haze had not been available until recently. The first such dataset was proposed for RGB imagery—the RRSHID dataset []—which contains paired RGB images of the same region under hazy and haze-free conditions. The source multispectral data for RRSHID were obtained from the China High-Resolution Earth Observation System (CHEOS), and these multispectral images were then converted to RGB by the authors [] to construct the RRSHID dataset. A key limitation of RRSHID is the presence of temporal scene changes between the paired images. Moreover, the dataset provides no metadata describing the nature or extent of these temporal changes, which complicates its use for objective evaluation. As described in [], RRSHID image pairs were selected automatically based on acquisition time proximity (1–3 months apart). We can observe that this leads to notable differences in land surface appearance, especially in agricultural areas (see Figure 1).

Figure 1.

Examples from RRSHID [] showing scenes with significant temporal changes (highlighted with red boxes) between paired hazy and haze-free images.

2.2. Dehazing Quality Assessment

Evaluation of RSID methods can be performed using two main approaches. The first is image quality assessment (IQA), which evaluates the visual or spectral fidelity of the resulting dehazed images. The second is downstream task quality assessment (DTQA), which assesses how dehazing influences the performance of subsequent remote sensing tasks.

The IQA approach can be further categorized into Full-Reference IQA (FRIQA) and No-Reference IQA (NRIQA). FRIQA involves comparing a dehazed image with a corresponding clean reference image using universal image quality metrics. These include classical measures such as Root Mean Square Error (RMSE), Peak Signal-To-Noise Ratio (PSNR), Structural Similarity Index (SSIM) [], and Spectral Angle Mapper (SAM) [], as well as learned perceptual metrics such as Learned Perceptual Image Patch Similarity (LPIPS) []. In contrast, NRIQA is applied when reference images are not available (e.g., for unpaired datasets such as the real-haze subsets of the HyperDehazing and HDD datasets). In this case, the quality of a hazy or dehazed image is evaluated independently using neural network-based metrics designed specifically for dehazing assessment, such as FADE [], BIQME [], and VDA-DQA [].

While DTQA is less frequently used for RSID evaluation, it provides valuable insight into the practical impact of dehazing on remote sensing applications. For example, Guo et al. [] demonstrated through qualitative analysis of Normalized Difference Vegetation Index (NDVI) maps derived from MS imagery that haze significantly distorts vegetation information and that their proposed dehazing method partially restores these distortions. Li et al. [] similarly used a haze synthesis model to analyze how varying haze densities affect classification accuracy. The most recent study by Zhu et al. [] emphasizes the importance of constructing dehazing benchmark datasets with specific downstream task annotations as a direction for future work.

3. Proposed Remote Sensing Hyperspectral Datasets

This section introduces two datasets designed for evaluating RSID methods: RRealHyperPDID (Remote Sensing Real-World Hyperspectral Paired Dehazing Image Dataset) and RSyntHyperPDID (Remote Sensing Synthetic Hyperspectral Paired Dehazing Image Dataset). Both datasets are derived from the AVIRIS project database, which provides hyperspectral and RGB imagery acquired from aircraft. The images are approximately 1000 pixels wide, with lengths varying up to 30,000 pixels. To avoid confusion between the original images and the smaller cropped fragments used for analysis, these source images are hereafter referred to as flight-line images.

3.1. RRealHyperPDID: Remote Sensing Real-World Hyperspectral Paired Dehazing Image Dataset

During the operation of the AVIRIS project, thousands of flight-line images have been collected and made publicly available, some of which capture the same surface areas at different times. In several cases, one image of the same area contains haze, while another remains clear. This observation enabled the creation of a dataset consisting of real-world hyperspectral image pairs—captured under hazy and haze-free conditions.

To construct the dataset, the following procedure was used to identify suitable flight-line image pairs:

- Flight-line images annotated with haze in the AVIRIS Data Table were initially selected.

- RGB visualizations of these HSIs were downloaded and visually inspected to confirm the presence of haze.

- Using the interactive map provided by the AVIRIS Data Portal, hazy images with corresponding clear counterparts were identified and selected.

As a result, 17 distinct zones containing multiple flight-line images were identified, each including one hazy image. An example of such image pairs from the same zone is shown in Figure 2.

Figure 2.

A set of flight-line images consisting of one hazy and one clear image, along with three pairs of manually annotated rectangular areas affected by haze on the hazy flight-line image (highlighted with red boxes).

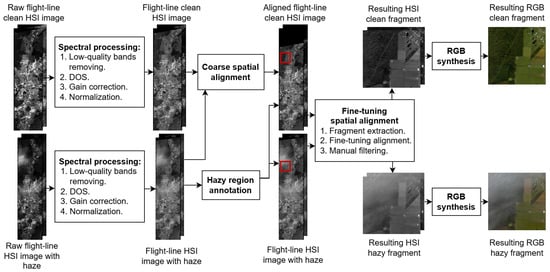

The complete processing pipeline used to construct the RRealHyperPDID dataset is shown in Figure 3.

Figure 3.

Pipeline for deriving the RRealHyperPDID dataset from raw AVIRIS flight-line images.

3.1.1. Spectral Processing

Each selected flight-line image underwent the following spectral processing steps:

- Removal of low-quality bands: bands 104–116, 152–170, and 214–224 were excluded, following standard preprocessing [].

- Dark Object Subtraction (DOS):: .

- Gain correction:, where .

- Normalization: pixel values were normalized with the global maximum across all bands, .

DOS is a standard atmospheric correction method that alters inter-band ratios by removing distortions induced by atmospheric transmission. Gain correction is another standard procedure that also modifies digital number values differently across bands; however, its objective is to restore radiometric values, modified to store images with the int16 format. Finally, normalization preserves inter-band ratios while mapping pixel values to a consistent range, which is necessary for subsequent processing (e.g., dehazing neural networks typically require inputs scaled to ).

3.1.2. Spatial Alignment

The AVIRIS data are georeferenced; however, the positional accuracy of the selected flight-line images was insufficient for precise alignment of fragments within our dataset, with errors reaching up to 10 pixels. In addition, several scenes exhibited geometric distortions that could not be corrected using standard alignment techniques (see Figure 4).

Figure 4.

An example of geometric distortions in AVIRIS data.

To ensure accurate co-registration, the following alignment procedure was applied:

- Automatic coarse alignment of flight-line images using the SIFT (Scale-Invariant Feature Transform) [], followed by estimation of projective transformation parameters via RANSAC (Random Sample Consensus) []. Feature points were detected on the full flight-line images.

- Hazy region annotation: In the selected flight-line images, haze is typically localized rather than uniformly distributed. To capture affected areas, rectangular patches pixels in size containing visible haze were manually annotated in each hazy flight-line image. The corresponding patches were then extracted from the matching haze-free images using the same spatial coordinates (see Figure 2).

- Alignment fine-tuning using feature points detected only inside processed fragments.

- Final expert verification and exclusion of pairs where distortions could not be adequately compensated (as in Figure 4).

3.1.3. RGB Synthesis

In addition to HSI data, our dataset includes an RGB version of each image generated through color synthesis from the HSIs. Two color synthesis methods were applied: The first is Color Synthesis Narrow Channels (CSNC) based on the AVIRIS quicklook generation method but without final JPEG compression. Three narrow spectral channels were extracted: R nm, G nm, B nm. The second is Color Synthesis Standard Observer (CSSO) [] based on the CIE Standard Observer color matching functions. Weighted spectral integration of the HS signal was performed at each pixel.

3.1.4. Subset of Pairs with Closest Scene Conditions

An additional criterion was introduced to identify the most suitable image pairs for evaluation. All other imaging conditions should remain as constant as possible [] to enable full-reference metrics to accurately assess the degree of haze removal. The primary sources of variation are illumination changes and alterations in the Earth’s surface objects (see Figure 5).

Figure 5.

Examples of RRealHyperPDID images showing the same area that has changed significantly between acquisitions taken just 19 days apart (18 April 2014 and 7 May 2014).

Selecting images based solely on temporal proximity is insufficient to guarantee scene similarity. As discussed in Section 2.1, the spectral reflectance of certain land-cover types, such as agricultural fields, may change significantly over short time intervals (see Figure 1). Expert evaluation was therefore conducted to identify a subset of image pairs where hazy and haze-free scenes were most similar in all aspects other than the presence of haze. To ensure the reproducibility of the results, we have published the outcome of the expert-based selection of the most similar scenes as a separate list of images in the benchmark repository.

3.1.5. Dataset Characteristics

The proposed dataset contains 110 hazy fragments pixels in size. Each hazy fragment is associated with at least one haze-free reference, with some having multiple references, resulting in 161 paired samples in total. Each hyperspectral image is accompanied by two RGB versions—one generated using the CSNC method and the other using CSSO.

The acquisition dates of the selected images range from 15 August 2011 to 2 April 2021, covering diverse terrain types, including mountainous, coastal, urban, and suburban regions. The spatial resolution varies from 8 to 18 m per pixel, depending on flight altitude. After removing low-quality bands, each image contains 182 spectral bands, spanning 365–2396 nm.

A subset of 21 image pairs (hazy vs. clear) was identified as being the most visually consistent across all parameters except for the presence of haze. Of these, 14 pairs were acquired within less than a month of each other, while 7 pairs had acquisition gaps ranging from 11 months to 1 year.

3.2. RSyntHyperPDID: Remote Sensing Synthetic Hyperspectral Paired Dehazing Image Dataset

We created a synthetic dataset, RSyntHyperPDID, based on the AVIRIS database. The dataset is sufficiently large to serve as a training set for dehazing models. Each RSyntHyperPDID image has a size of pixels, identical to that of RRealHyperPDID. We used a modified version of the CCHS method described in Section 2.1.1 for haze augmentation of the HSI data. Given the small fragment size and the high spatial resolution of AVIRIS, we employed a version of the method with a spatially independent parameter [].

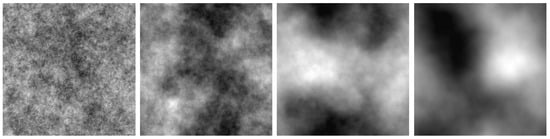

The proposed modification to the CCHS method concerns the generation of the HTM . Accurately extracting haze from real hazy images requires careful manual preparation: not every image contains haze, and even among those that do, extracted haze may also contain contrasting surface elements. Consequently, very few HTMs are typically used in data generation; for instance, Ref. [] reports using only 20 distinct HTMs for the 2000 dataset images. To overcome this limitation, instead of extracting haze from real images, we randomly generated as a two-dimensional random field parameterized by , that allows control over the spatial uniformity of (see Figure 6). We used in our experiments. We used 218 initial haze-free fragments, each of which was processed with two unique randomly generated HTMs. Combining this with six gamma variations, [], resulted in 12 distinct hazed versions per fragment.

Figure 6.

Examples of randomly generated maps with parameters .

A comparison of the characteristics of the proposed datasets with other known RSID datasets is provided in Table 1.

Table 1.

Comparison of the proposed datasets, RRealHyperPDID and RSyntHyperPDID, with existing remote sensing dehazing datasets.

3.3. Downstream Task Quality Assessment

To extend the applicability of the HyperHazeOff benchmark beyond image quality assessment (IQA), we provide downstream task quality assessment (DTQA).

Delineation-Based Dehazing Quality Assessment

First, we evaluated the task of agricultural field delineation, which is related to segmentation but focuses on accurately detecting cultivated field boundaries relative to uncultivated land []. This task is essential for maintaining up-to-date cadastral maps and monitoring agricultural activities.

From the 110 scenes in RRealHyperPDID, 34 scenes containing agricultural fields were selected, corresponding to 86 clear and hazy fragments (in some cases, multiple clear fragments corresponded to a single hazy scene). In total, 3623 fields were manually delineated by experts using CSNC RGB images.

The Delineate Anything (DA) method []—a state-of-the-art neural network approach—was employed for automatic delineation. The method’s pretrained model weights are publicly available, and its resolution-agnostic design allows for direct application to our RGB data without additional tuning. An example of DA’s performance on clear and hazy images is shown in Figure 7: the presence of haze notably reduces delineation quality.

Figure 7.

Results of applying the DA agricultural field delineation method to clean and hazy RGB images from the RRealHyperPDID dataset generated using the CSNC method. The blue delineation mask represents manual annotations, while the red delineation mask corresponds to the algorithm’s output.

To quantify performance, we used both the coefficient and the object-based metric []. While the pixel-based metric is standard for segmentation evaluation, it is relatively insensitive to over- and under-segmentation errors, while provides a more robust assessment of delineation accuracy. is defined as

where is the number of uniquely matched fields, is the number of fields detected by the evaluated method, and is the number of reference fields. Two fields and are considered uniquely matched if their Jaccard index satisfies , and no other fields and exist such that or , respectively.

A comparison of DA method performance on clear and hazy fragments is presented in Table 2. As expected, delineation accuracy on hazy images is consistently lower than on haze-free ones.

Table 2.

Comparison of the DA agricultural field delineation method results for clean and hazy RGB images.

3.4. Land Classification Quality Assessment

The previous section presented DTQA for RGB dehazing. Here, we extend DTQA to MS data by evaluating the impact of haze on land use and land cover (LULC) classification []. LULC classification is performed at the image (or image-fragment) level rather than per pixel, making it less sensitive to local distortions and label noise. To this end, we selected the neural network-based method proposed by Chong [] for inclusion in the benchmark. This method is open source, provides a complete training pipeline, and achieves performance comparable to other neural network approaches (e.g., []).

We reproduced the training procedure using the EuroSAT dataset [], which contains multispectral Sentinel-2 image patches labeled into ten classes: SeaLake, Forest, HerbaceousVegetation, PermanentCrop, Industrial, AnnualCrop, River, Pasture, Highway, and Residential. Following [], we used 5000 fragments for pretraining. We additionally constructed a training set based on our benchmark HSI data. MS synthesis was performed using the Sentinel spectral sensitivity functions. Expert annotation was conducted for our MS fragments. The training set contained 852 clean MS fragments derived from haze-free images in RSyntHyperPDID. The test set comprised 666 clean and 421 hazy MS fragments extracted from RRealHyperPDID.

After initial pretraining on EuroSAT using transfer learning, the model was fine-tuned on our dataset using progressive unfreezing and differential learning rates. This approach enables adaptation to our data while retaining generalizable features learned from the larger dataset. The classification accuracy was 74.56% for clean fragments and 56.29% for hazy fragments, demonstrating that haze significantly degrades LULC performance.

4. Dehazing Evaluation

This section presents three sets of experiments comparing state-of-the-art dehazing methods: FRIQA-based evaluation of RGB dehazing methods using RGB images from the RRealHyperPDID dataset; and FRIQA-based evaluation of hyperspectral dehazing methods; DTQA-based evaluation, assessing the effect of RGB and HS dehazing on agricultural field delineation and land classification performance. The RRealHyperPDID dataset incorporates cases where a single hazy image corresponds to multiple clean reference images that differ in their illumination conditions. As a result, there is no unique ground-truth representation of the haze-free scene. We therefore consider three strategies for handling multiple reference images per hazy observation:

- Best-value selection: The score is computed against all available reference images for each dehazed image and each metric, and the best score is retained. This produces a single representative metric value per scene, although the selected reference may differ across metrics.

- Fixed-reference evaluation: One reference image is selected for each scene and used consistently across all evaluated methods and metrics. Metrics are computed only for the fixed hazy/dehazed–reference pairs and then averaged across all scenes.

- Reference-averaged evaluation: For each scene, metrics are computed for all hazy/dehazed–reference combinations, averaged over references, and then averaged across scenes.

From the perspective of dehazing, the haze-free images with varying illumination are all equally valid results, and the evaluation should not penalize one in favor of another. Although correcting for illumination to restore the reflectance coefficients could help mitigate this issue, HSI illumination correction is a complex problem that lies beyond the scope of this work. Selecting a single reference introduces bias toward that particular acquisition, whereas averaging across references may obscure meaningful differences between methods. The best-value selection strategy offers a more practical assessment: the goal of dehazing is not to reproduce a specific reference image but to recover a plausible haze-free scene. While we consider the best-value selection strategy to be the most appropriate for remote sensing dehazing and therefore report metrics based on it in this paper, all three evaluation strategies are included in the benchmark for completeness and reproducibility. Notably, the relative ranking of the evaluated state-of-the-art dehazing methods remains consistent across all experiments regardless of the reference-handling strategy used.

4.1. RGB Dehazing Image Quality Assessment Results

We evaluated three RGB dehazing methods: DCP, the classical Dark Channel Prior method []; Color Attenuation Dark Channel Prior (CADCP), a recent approach [] that effectively compensates for color haze, simultaneously estimating haze density from the DCP and ambient illumination from the Color Attenuation Prior (CAP), where the luminance–saturation of each pixel is linearly dependent on scene depth or haze concentration []; and DehazeFormer, a modern neural network-based method [] with publicly available pretrained weights. We employed three classical metrics—PSNR, SSIM, and SAM—along with two perceptual neural metrics—LPIPS and DISTS []. To assess chromaticity difference, we used the specialized metric [].

The results of the RGB dehazing methods applied to the CSNC and CSSO outputs are presented in Table 3 and Table 4 and illustrated in Figure 8. In the case of RGB images synthesized with the CSNC method, all dehazing methods generally improve the visual quality of hazy images, with the exception of the SAM metric, according to which only DehazeFormer achieves improvement. Since the mean values of several methods were close and exhibited substantial overlap in their confidence intervals, a paired t-test was performed. The test revealed that, for the SSIM and LPIPS metrics, the apparent superiority of CADCP over DehazeFormer is not statistically significant at the level. Additionally, no significant difference was observed between DCP and DehazeFormer for the DISTS metric. Overall, CADCP and DehazeFormer demonstrate the best performance among the evaluated approaches. However, while CADCP effectively removes haze, it introduces noticeable chromatic distortions, as reflected by its higher values.

Table 3.

Results of various RGB dehazing methods applied to RRealHyperPDID images (CSNC RGB synthesis method). Entries show the mean values with 95% bootstrap confidence intervals (bias-corrected and accelerated, BCa). Bold indicates the best result, and underlined indicates the second-best.

Table 4.

Results of various RGB dehazing methods applied to RRealHyperPDID images (CSSO RGB synthesis method). Entries show the mean values with 95% bootstrap confidence intervals (bias-corrected and accelerated, BCa). Bold indicates the best result, and underlined indicates the second-best.

Figure 8.

Examples of RGB dehazing results obtained using the CSNC and CSSO RGB synthesis methods.

Regarding RGB images generated with the CSSO method, only DehazeFormer achieves statistically significant improvement over hazy inputs for PSNR, DISTS and LPIPS. In contrast, SAM and exhibit the best values for the unprocessed (hazy) images—an inconsistency with qualitative observations. As shown in Figure 8, CADCP and DCP visibly reduce haze and, in some cases, even produce visually superior results to DehazeFormer.

To resolve discrepancies between quantitative metrics and perceptual quality, we conducted an expert evaluation involving four specialists from the Faculty of Space Research (Moscow State University) and the Vision Systems Laboratory (Institute for Information Transmission Problems). The experts performed pairwise comparisons among DCP, CADCP, DehazeFormer, and the original hazy images, selecting in each case the image with the least perceived haze or indicating “cannot choose”. The results of the pairwise comparisons were aggregated into a global score using the Bradley–Terry model []. The resulting expert-based scores are presented in Table 5. According to the expert evaluation, the methods rank in the following order of dehazing effectiveness for both RGB visualization types: CADCP; DCP; DehazeFormer; Hazed.

Table 5.

Expert-based scores of RGB dehazing methods on the RRealHyperPDID dataset.

In the case of RGB images generated with the CSNC method, this expert-derived ranking is fully consistent with the PSNR metric, while for CSSO-RGB, none of the evaluated metrics show a reliable correlation with experts’ perception.

Evaluation on the Subset with the Closest Scenes

The discrepancy between the expert rankings and the metric-based rankings, as well as the resulting inadequacy of certain metrics, may be explained by the variation in scene content and imaging conditions, as discussed in Section 3.1.4. To clarify this issue, we compared the metric rankings separately for the subset of closely matched scenes and for the remaining scenes from the RRealHyperPDID dataset. Table 6 presents the Kendall correlation coefficients between the metric-based rankings of the dehazing algorithms and the reference expert rankings. For the results on the subsets, an additional paired statistical test was conducted. A dash in the table indicates that the metric did not produce a statistically significant ranking.

Table 6.

Kendall correlation coefficients between metric-based and expert rankings of dehazing algorithms, shown separately for close and other scenes of RRealHyperPDID.

Regarding RGB images synthesized with the CSNC method, the metric rankings were consistent with the expert ranking for the subset of closest scenes, with the exception being SAM and LPIPS metrics. The expert rankings aligned with the PSNR metrics for the remaining scenes. In the case of RGB images synthesized with the CSSO method, the rankings for both subsets showed no significant correspondence with the expert evaluation.

4.2. HS Dehazing Image Quality Assessment Results

The hyperspectral dehazing methods selected for comparison were AACNet [], HDMba [], and AIDTransformer []. Two experiments were conducted. In the first experiment, the selected neural network models were trained on the HyperDehazing dataset following the procedures described in the corresponding publications and subsequently tested on the RRealHyperPDID dataset, which was spectrally harmonized to match the spectral resolution of HyperDehazing. In the second experiment, the same models were trained on the proposed RSyntHyperPDID dataset and also evaluated on RRealHyperPDID.

The standard image quality metrics (PSNR, SSIM, and SAM) were used for evaluation. It should be noted, however, that the SAM metric showed weak correlation with expert rankings for RGB data, even within the subset of closely matched scenes. In addition, the evaluation of HS results is inherently challenging: the data cannot be assessed visually (even by experts), and the lack of field delineation methods for hyperspectral imagery makes delineation-based DTQA evaluation infeasible.

4.2.1. Experiment 1

Since pre-trained models from HyperDehazing were not publicly available, we reproduced the authors’ training procedure. As reported in [], 1800 images were used for training and 200 for testing; however, no exact split was provided. All models were trained for 10,000 iterations with their original hyperparameter configurations. AACNet was trained using the ADAM optimizer (, , ) with a batch size of 32, an initial learning rate of , and a Mean Squared Error (MSE) loss function. AIDTransformer was trained using the ADAM optimizer with an initial learning rate of , a cosine annealing learning rate schedule, and an loss function. HDMba was trained using the ADAM optimizer (, , ) with a batch size of 4, an initial learning rate of , a cosine annealing schedule, and a combined loss function with and . All models were implemented in PyTorch (version 1.10.2) framework and trained on NVIDIA GPUs: GeForce RTX 2080 Ti for AACNet and AIDTransformer, and Quadro GV100 for HDMba. Training required 8.5 GPU h for AACNet (9 GB memory usage), 8.7 GPU hours for AIDTransformer (11 GB memory usage), and 8.2 GPU hours for HDMba (30 GB memory usage).

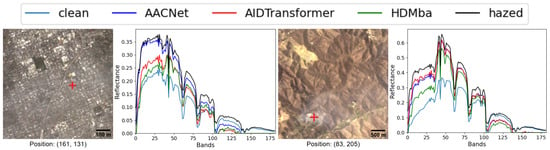

The test results on the HyperDehazing dataset achieved quality metrics comparable to those reported in [] (Table 7). Figure 9 shows that all methods effectively removed synthetic haze, while Figure 10 presents the spectral reconstruction at a representative pixel, demonstrating that the methods approximate the clean image spectra.

Table 7.

Reproduction of neural network HSI dehazing results on the HyperDehazing test set. Results for hazy images were not provided in [].

Figure 9.

RGB visualization comparison of HS dehazing methods trained on the HyperDehazing synthetic training set and evaluated on the test set.

Figure 10.

Spectral reconstruction performance for various HS dehazing methods on the HyperDehazing test set. The red plus sign indicates the pixel location for which the spectral reconstruction is shown.

The number of channels and the spectral ranges differ between HyperDehazing and RRealHyperPDID. The problem in which the response of one sensor is used to predict the response of another sensor with the known spectral sensitivity to the same radiation is called spectral harmonization []. Sensor-response-based harmonization requires a set of aligned image pairs, where the same area is captured by both sensors involved, for parameter tuning. Since such a dataset was unavailable in our case, we adopted linear interpolation to the RealHyperPDID images to match HyperDehazing spectral configuration.

The metric-based results are presented in Table 8. None of the HS dehazing methods produced a clear improvement over the original hazed images. This conclusion is also supported by qualitative analysis: Figure 11 shows RGB visualizations of the dehazed results, and Figure 12 presents spectral profiles at selected points.

Table 8.

Results of HS dehazing methods on the RRealHyperPDID dataset. Models were trained on the HyperDehazing training set. Entries show the mean values with 95% bootstrap confidence intervals (bias-corrected and accelerated, BCa). Bold indicates the best result, and underlined indicates the second-best.

Figure 11.

RGB visualization comparison of HS dehazing methods trained on the HyperDehazing synthetic dataset and tested on the RRealHyperPDID real-world dataset.

Figure 12.

Spectral reconstruction performance of HS dehazing methods on the RRealHyperPDID dataset. The red plus sign indicates the pixel location for which the spectral reconstruction is shown.

4.2.2. Experiment 2

The RSyntHyperPDID dataset was divided into training and validation subsets containing 2354 and 262 fragments, respectively. The training hyperparameters were identical to those used for the HyperDehazing dataset (see the previous section).

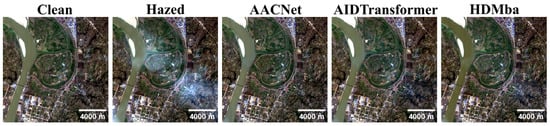

The qualitative analysis (see Figure 13) demonstrate that models trained on the RSyntHyperPDID effectively remove haze from real hazy images. This is corroborated by the spectral decomposition results (Figure 14). The quantitative results for the RSyntHyperPDID test set and the real-haze RRealHyperPDID dataset are presented in Table 9. According to the PSNR metric, all dehazing methods outperformed the “No dehazing” baseline, and paired statistical tests confirmed that these improvements are significant. In contrast, none of the methods exceeded the “No dehazing” baseline in terms of SSIM. For the SAM metric, only AACNet achieved a statistically significant improvement relative to the “No dehazing” baseline.

Figure 13.

RGB visualization comparison of HS dehazing methods trained on the RSyntHyperPDID synthetic dataset and tested on the RRealHyperPDID real-world dataset.

Figure 14.

Spectral reconstruction performance of HS dehazing methods trained on the RSyntHyperPDID dataset. The red plus sign indicates the pixel location for which the spectral reconstruction is shown.

Table 9.

Results of neural network-based HS dehazing methods on the validation subset of the synthetic-haze RSyntHyperPDID and real-haze RRealHyperPDID. Models were trained on the RSyntHyperPDID training set. Entries show the mean values with 95% bootstrap confidence intervals (bias-corrected and accelerated, BCa). Bold indicates the best result, and underlined indicates the second-best.

4.3. Downstream Task Quality Assessment Results

This section presents a DTQA comparison of dehazing methods based on their impact as preprocessing steps on the results of solving two downstream tasks: field delineation and land classification.

4.3.1. Delineation-Based RGB Dehazing Quality Assessment

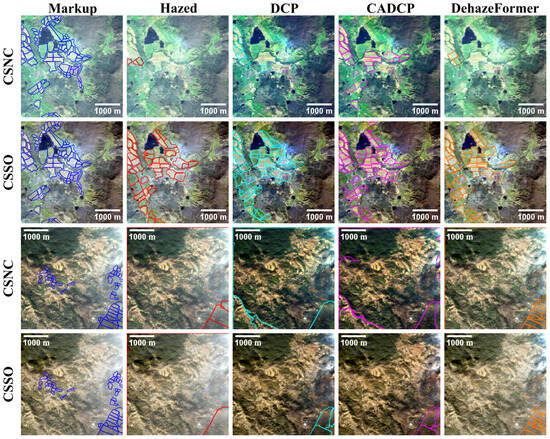

Agricultural field delineation is performed using the DA method []. The results are summarized in Table 10 and illustrated in Figure 15. All tested dehazing methods improved delineation quality, with the DehazeFormer method demonstrating the largest performance gain.

Table 10.

Comparison of DA results for hazy and dehazed images processed with DCP, CADCP, and DehazeFormer algorithms.

Figure 15.

Results of applying the DA method to hazy and dehazed images generated using the CSNC and CSSO RGB synthesis methods. The colors of delineation masks indicate DA results applied to images processed by different dehazing methods.

4.3.2. Classification-Based HS Dehazing Quality Assessment

In this experiment, dehazing was first applied to the HS images, after which MS images were synthesized from the dehazed HSI outputs. The LULC classification algorithm was then evaluated on the resulting MS images. The classification accuracy is reported in Table 11. Examples of classification results are shown in Figure 16, visualized using CSNC RGB synthesis method.

Table 11.

LULC classification results on MS images synthesized from the RRealHyperPDID dataset.

Figure 16.

LULC classification results for hazy and dehazed images. The first row shows the ground truth class for each scene. The label below each image patch indicates the class predicted by the classification algorithm.

The results in Table 11 indicate that dehazing generally improves LULC classification performance. Among the evaluated dehazing methods, AACNet achieved the highest accuracy, while HDMba showed no improvement over the hazy baseline, demonstrating the weakest performance.

5. Discussion

The limitations of the proposed RRealHyperPDID dataset include spatial and spectral inconsistencies between paired hazy and clean images. Such inconsistencies can compromise the reliability of FRQA metrics. Spatial misalignments arise from residual non-projective distortions, which remain even after co-registration procedures. A promising direction for future work is to develop more advanced alignment techniques to eliminate these residual distortions. Spectral discrepancies arise from the temporal gap between the acquisition of the clean and hazy images. These differences manifest as variations in illumination and surface properties. While closest scenes subset annotation and a nearest-clean-reference strategy for FRQA partially mitigates this issue, it cannot fully compensate for temporal spectral changes. Future studies should therefore focus on designing dedicated FRQA methods that evaluate dehazing performance using only the most stable surface elements. Aleatoric uncertainty quantification, as proposed in [], could be employed to identify such stable elements.

Although the proposed benchmark datasets encompass diverse surface types—including mountains, urban areas, and agricultural regions—they are limited in climatic diversity. The absence of scenes representing other climatic zones is a notable constraint and an avenue for future expansion.

The proposed benchmark integrates the CCHS haze augmentation method, previously applied only to satellite MS imagery. In this work, we adapted CCHS for generating synthetic haze in airborne hyperspectral images. Using the proposed RRealHyperPDID, we show that CCHS produces physically realistic haze as models trained on synthetic data successfully generalize to real-world hazy conditions. This result validates both the universality of the CCHS method and the robustness of its underlying atmospheric scattering model, demonstrating its applicability to imagery captured at different altitudes, through varying atmospheric layers, and by sensors with distinct spectral responses.

In cases involving dense or fully opaque haze, HSIs alone may be insufficient for effective restoration, necessitating the use of auxiliary data. Such auxiliary information could include co-registered RGB images, HSIs, or SAR images of the same area. When auxiliary data are obtained at different times, a multi-temporal dehazing approach becomes essential. Notably, several RRealHyperPDID scenes contain multiple reference images, which can serve as auxiliary inputs for developing and evaluating multi-temporal dehazing methods. While SAR-assisted dehazing has been explored for MS imagery [], HS dehazing with SAR guidance remains unexplored and represents a promising avenue for future research. A key prerequisite for this direction is robust HSI-SAR image alignment, a problem recently addressed in [].

6. Conclusions

This work presents a comprehensive benchmark for hyperspectral remote sensing image dehazing (RSID) that integrates real and synthetic data, standardized evaluation protocols, and baseline implementations of state-of-the-art algorithms. The proposed benchmark includes data splits from the well-known HyperDehazing dataset, the proposed real-world test set (RRealHyperPDID) with paired hazy and haze-free hyperspectral images, and the proposed synthetic training set (RSyntHyperPDID) generated using the CCHS method. In addition, we provide an open-source evaluation suite that combines image quality and delineation-based metrics, establishing a unified framework for the assessment of dehazing performance in hyperspectral remote sensing.

The conducted experiments reveal several important insights. RGB-based RSID methods are capable of effectively compensating for real haze in remote sensing imagery. The neural network-based DehazeFormer, trained on synthetic data, demonstrates competitive performance, although classical model-driven approaches such as DCP and CADCP may still achieve superior results. The reliability of image quality metrics was found to depend strongly on the acquisition setup: in the case of CSSO imagery, common universal metrics such as the PSNR, SSIM, SAM, DISTS, and LPIPS showed poor correlation with expert evaluations, whereas for CSNC data, the PSNR, SSIM, and DISTS aligned well with expert judgment. Notably, PSNR remained consistent even in complex scenes where haze was not the sole source of change, establishing them as the recommended metric for evaluation. The development of metrics suitable for analyzing RGB images synthesized from HSIs using the CSSO method is an important direction for future research.

However, experiments on hyperspectral imagery revealed a clear domain gap: models trained exclusively on the synthetic HyperDehazing dataset failed to generalize to real haze conditions, even after applying spectral harmonization designed to mitigate channel mismatches. By contrast, HS dehazing models trained on the proposed RSyntHyperPDID dataset successfully remove real haze, indicating that RSyntHyperPDID provides a more representative and transferable basis for model training. Among the evaluated architectures, AIDTransformer achieved the highest PSNR, while AACNet demonstrated statistically significant improvements over “No dehazing” baseline in terms of both PSNR and SAM metrics.

The results also demonstrate the practical significance of dehazing as a preprocessing step. When RGB dehazing is applied prior to agricultural field delineation, DehazeFormer improved delineation accuracy across all types of RGB imagery, with little dependence on the specific imaging configuration. For land use and land cover classification, applying hyperspectral dehazing increases accuracy with AACNet, whereas HDMba provided no improvement compared to using hazy data.

Overall, the proposed benchmark provides a robust and reproducible foundation for advancing research in hyperspectral dehazing.

Author Contributions

Conceptualization, A.N. and D.N.; methodology, D.S. and D.N.; formal analysis N.O.; software, N.O., V.V. and E.D.; validation, A.N., D.S. and D.N.; investigation, A.N., D.S., N.O., A.S., E.D. and M.Z.; resources, N.O. data curation, N.O., E.D. and M.Z.; writing—original draft preparation, D.S.; writing—review and editing, A.S.; visualization, N.O., V.V., E.D. and M.Z.; supervision, A.N. and D.N.; project administration, D.S.; funding acquisition, A.N. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Economic Development of the Russian Federation (agreement identifier 000000C313925P3U0002, grant No. 139-15-2025-003 dated 16.04.2025).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The proposed datasets are publicly available at https://huggingface.co/datasets/nikos74/RRealHyperPDID (accessed on 19 November 2025) for RRealHyperPDID and https://huggingface.co/datasets/nikos74/RSyntHyperPDID (accessed on 19 November 2025) for RSyntHyperPDID. Additional datasets analyzed in this study at https://github.com/RsAI-lab/HyperDehazing (accessed on 10 October 2025) for the HyperDehazing dataset and https://huggingface.co/datasets/lwCVer/RRSHID (accessed on 10 October 2025) for the RRSHID dataset. All source code, including neural network architectures, preprocessing scripts, training routines, and evaluation tools, is openly available at https://github.com/iitpvisionlab/hyperhazeoff (accessed on 19 November 2025).

Acknowledgments

The authors thank Viacheslav Volosyankin for his valuable assistance in conducting the expert evaluations.

Conflicts of Interest

Author Dmitry Nikolaev was employed by Smart Engines Service LLC. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| RRealHyperPDID | Remote Sensing Real-World Hyperspectral Paired Dehazing Image Dataset |

| RSyntHyperPDID | Remote Sensing Synthetic Hyperspectral Paired Dehazing Image Dataset |

| HSI | Hyperspectral image |

| RSID | Remote sensing image dehazing |

| DCP | Dark Channel Prior |

| CNN | Convolutional neural network |

| MS | Multispectral |

| RRSHID | Real-World Remote Sensing Hazy Image Dataset |

| DTQA | Downstream task quality assessment |

| IQA | Image quality assessment |

| AS | Atmospheric scattering |

| HTM | Haze transmission map |

| HHS | Hazy HSI Synthesis |

| CCHS | Channel Correlation-Based Haze Synthesis |

| FRIQA | Full-Reference IQA |

| NRIQA | No-Reference IQA |

| RMSE | Root Mean Square Error |

| PSNR | Peak Signal-To-Noise Ratio |

| SSIM | Structural Similarity Index |

| SAM | Spectral Angle Mapper |

| LPIPS | Learned Perceptual Image Patch Similarity |

| NDVI | Normalized Difference Vegetation Index |

| DOS | Dark Object Subtraction |

| SIFT | Scale-Invariant Feature Transform |

| RANSAC | Random Sample Consensus |

| CSNC | Color Synthesis Narrow Channels |

| CSSO | Color Synthesis Standard Observer |

| DA | Delineate Anything |

| LULC | Land Use and Land Cover |

| CADCP | Color Attenuation Dark Channel Prior |

| CAP | Color Attenuation Prior |

| MSE | Mean Squared Error |

References

- Li, C.; Li, Z.; Liu, X.; Li, S. The influence of image degradation on hyperspectral image classification. Remote Sens. 2022, 14, 5199. [Google Scholar] [CrossRef]

- Kokhan, V.L.; Konyushenko, I.D.; Bocharov, D.A.; Seleznev, I.O.; Nikolaev, I.P.; Nikolaev, D.P. TSQ-2024: A Categorized Dataset of 2D LiDAR Images of Moving Dump Trucks in Various Environment Conditions. In Proceedings of the Seventeenth International Conference on Machine Vision (ICMV 2024), Edinburgh, UK, 10–13 October 2024; Osten, W., Nikolaev, D., Debayle, J., Eds.; SPIE: Bellingham, WA, USA, 2025; Volume 13517, pp. 1351709-1–1351709-6. [Google Scholar] [CrossRef]

- Ebel, P.; Garnot, V.S.F.; Schmitt, M.; Wegner, J.D.; Zhu, X.X. UnCRtainTS: Uncertainty quantification for cloud removal in optical satellite time series. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2086–2096. [Google Scholar]

- Sarukkai, V.; Jain, A.; Uzkent, B.; Ermon, S. Cloud removal from satellite images using spatiotemporal generator networks. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1796–1805. [Google Scholar]

- Zhao, X.; Jia, K. Cloud removal in remote sensing using sequential-based diffusion models. Remote Sens. 2023, 15, 2861. [Google Scholar] [CrossRef]

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353. [Google Scholar] [CrossRef]

- Long, J.; Shi, Z.; Tang, W.; Zhang, C. Single remote sensing image dehazing. IEEE Geosci. Remote Sens. Lett. 2013, 11, 59–63. [Google Scholar] [CrossRef]

- Ju, M.; Ding, C.; Guo, Y.J.; Zhang, D. Remote sensing image haze removal using gamma-correction-based dehazing model. IEEE Access 2018, 7, 5250–5261. [Google Scholar] [CrossRef]

- Zhu, Z.; Luo, Y.; Wei, H.; Li, Y.; Qi, G.; Mazur, N.; Li, Y.; Li, P. Atmospheric light estimation based remote sensing image dehazing. Remote Sens. 2021, 13, 2432. [Google Scholar] [CrossRef]

- Sidorchuk, D.S.; Pavlova, M.A.; Kushchev, D.O.; Selyugin, M.A.; Nikolaev, I.P.; Bocharov, D.A. CADCP: A method for chromatic haze compensation on remotely sensed images. In Proceedings of the ICMV 2023, Yerevan, Armenia, 15–18 November 2023; Osten, W., Nikolaev, D., Debayle, J., Eds.; SPIE: Bellingham, WA, USA, 2024; Volume 13072, pp. 1307216-1–1307216-10. [Google Scholar] [CrossRef]

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915. [Google Scholar]

- Guo, J.; Yang, J.; Yue, H.; Tan, H.; Hou, C.; Li, K. RSDehazeNet: Dehazing network with channel refinement for multispectral remote sensing images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 2535–2549. [Google Scholar] [CrossRef]

- Wen, X.; Pan, Z.; Hu, Y.; Liu, J. An effective network integrating residual learning and channel attention mechanism for thin cloud removal. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

- Song, Y.; He, Z.; Qian, H.; Du, X. Vision transformers for single image dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941. [Google Scholar] [CrossRef]

- Kulkarni, A.; Murala, S. Aerial image dehazing with attentive deformable transformers. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 February 2023; pp. 6305–6314. [Google Scholar]

- Song, T.; Fan, S.; Li, P.; Jin, J.; Jin, G.; Fan, L. Learning an effective transformer for remote sensing satellite image dehazing. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5. [Google Scholar] [CrossRef]

- Zhou, H.; Wu, X.; Chen, H.; Chen, X.; He, X. Rsdehamba: Lightweight vision mamba for remote sensing satellite image dehazing. arXiv 2024, arXiv:2405.10030. [Google Scholar] [CrossRef]

- Fu, H.; Sun, G.; Li, Y.; Ren, J.; Zhang, A.; Jing, C.; Ghamisi, P. HDMba: Hyperspectral remote sensing imagery dehazing with state space model. arXiv 2024, arXiv:2406.05700. [Google Scholar] [CrossRef]

- Hou, Q.; Wang, S.; Liu, J. Semi-supervised dehazing method based on image enhancement and multi-negative contrastive auxiliary learning. Int. J. Mach. Learn. Cybern. 2025, 16, 9095–9108. [Google Scholar] [CrossRef]

- Ancuti, C.O.; Ancuti, C.; Timofte, R.; De Vleeschouwer, C. O-haze: A dehazing benchmark with real hazy and haze-free outdoor images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 754–762. [Google Scholar]

- Feng, Y.; Su, Z.; Ma, L.; Li, X.; Liu, R.; Zhou, F. Bridging the Gap Between Haze Scenarios: A Unified Image Dehazing Model. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 11070–11085. [Google Scholar] [CrossRef]

- Zhu, Z.H.; Lu, W.; Chen, S.B.; Ding, C.H.; Tang, J.; Luo, B. Real-World Remote Sensing Image Dehazing: Benchmark and Baseline. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4705014. [Google Scholar] [CrossRef]

- Xu, M.; Peng, Y.; Zhang, Y.; Jia, X.; Jia, S. AACNet: Asymmetric attention convolution network for hyperspectral image dehazing. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5526714. [Google Scholar] [CrossRef]

- Fu, H.; Ling, Z.; Sun, G.; Ren, J.; Zhang, A.; Zhang, L.; Jia, X. HyperDehazing: A hyperspectral image dehazing benchmark dataset and a deep learning model for haze removal. ISPRS J. Photogramm. Remote Sens. 2024, 218, 663–677. [Google Scholar] [CrossRef]

- Kang, X.; Fei, Z.; Duan, P.; Li, S. Fog model-based hyperspectral image defogging. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5512512. [Google Scholar] [CrossRef]

- Qin, M.; Xie, F.; Li, W.; Shi, Z.; Zhang, H. Dehazing for multispectral remote sensing images based on a convolutional neural network with the residual architecture. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1645–1655. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

- Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.; Barloon, P.; Goetz, A.F. The spectral image processing system (SIPS)—interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163. [Google Scholar] [CrossRef]

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595. [Google Scholar]

- Choi, L.K.; You, J.; Bovik, A.C. Referenceless prediction of perceptual fog density and perceptual image defogging. IEEE Trans. Image Process. 2015, 24, 3888–3901. [Google Scholar] [CrossRef]

- Gu, K.; Tao, D.; Qiao, J.F.; Lin, W. Learning a no-reference quality assessment model of enhanced images with big data. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 1301–1313. [Google Scholar] [CrossRef]

- Guan, T.; Li, C.; Gu, K.; Liu, H.; Zheng, Y.; Wu, X.j. Visibility and distortion measurement for no-reference dehazed image quality assessment via complex contourlet transform. IEEE Trans. Multimed. 2022, 25, 3934–3949. [Google Scholar] [CrossRef]

- Tang, P.W.; Lin, C.H. Hyperspectral Dehazing Using Admm-Adam Theory. In Proceedings of the 2022 12th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Rome, Italy, 13–16 September 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 21–22 September 1999; IEEE: New York, NY, USA, 1999; Volume 2, pp. 1150–1157. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Magnusson, M.; Sigurdsson, J.; Armansson, S.E.; Ulfarsson, M.O.; Deborah, H.; Sveinsson, J.R. Creating RGB images from hyperspectral images using a color matching function. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, online, 26 September–2 October 2020; IEEE: New York, NY, USA, 2020; pp. 2045–2048. [Google Scholar]

- Pavlova, M.A.; Sidorchuk, D.S.; Kushchev, D.O.; Bocharov, D.A.; Nikolaev, D.P. Equalization of Shooting Conditions Based on Spectral Models for the Needs of Precision Agriculture Using UAVs. JCTE 2022, 67 (Suppl. 2), S283–S289. [Google Scholar] [CrossRef]

- Pavlova, M.A.; Timofeev, V.A.; Bocharov, D.A.; Sidorchuk, D.S.; Nurmukhametov, A.L.; Nikonorov, A.V.; Yarykina, M.S.; Kunina, I.A.; Smagina, A.A.; Zagarev, M.A. Low-parameter method for delineation of agricultural fields in satellite images based on multi-temporal MSAVI2 data. Comput. Opt. 2023, 47, 451–463. [Google Scholar] [CrossRef]

- Lavreniuk, M.; Kussul, N.; Shelestov, A.; Yailymov, B.; Salii, Y.; Kuzin, V.; Szantoi, Z. Delineate Anything: Resolution-Agnostic Field Boundary Delineation on Satellite Imagery. arXiv 2025, arXiv:2504.02534. [Google Scholar] [CrossRef]

- Helber, P.; Bischke, B.; Dengel, A.; Borth, D. Eurosat: A novel dataset and deep learning benchmark for land use and land cover classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2217–2226. [Google Scholar] [CrossRef]

- Chong, E. EuroSAT Land Use and Land Cover Classification Using Deep Learning. 2020. Available online: https://github.com/e-chong/Remote-Sensing (accessed on 19 November 2025).

- Naushad, R.; Kaur, T.; Ghaderpour, E. Deep transfer learning for land use and land cover classification: A comparative study. Sensors 2021, 21, 8083. [Google Scholar] [CrossRef]

- Ding, K.; Ma, K.; Wang, S.; Simoncelli, E.P. Image quality assessment: Unifying structure and texture similarity. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2567–2581. [Google Scholar] [CrossRef] [PubMed]

- Sidorchuk, D.S.; Nurmukhametov, A.L.; Maximov, P.V.; Bozhkova, V.P.; Sarycheva, A.P.; Pavlova, M.A.; Kazakova, A.A.; Gracheva, M.A.; Nikolaev, D.P. Leveraging Achromatic Component for Trichromat-Friendly Daltonization. J. Imaging 2025, 11, 225. [Google Scholar] [CrossRef]

- Li, S.; Ma, H.; Hu, X. Neural image beauty predictor based on bradley-terry model. arXiv 2021, arXiv:2111.10127. [Google Scholar] [CrossRef]

- Nurmukhametov, A.; Sidorchuk, D.; Skidanov, R. Harmonization of hyperspectral and multispectral data for calculation of vegetation index. J. Commun. Technol. Electron. 2024, 69, 38–45. [Google Scholar] [CrossRef]

- Anandakrishnan, J.; Sundaram, V.M.; Paneer, P. CERMF-Net: A SAR-Optical feature fusion for cloud elimination from Sentinel-2 imagery using residual multiscale dilated network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11741–11749. [Google Scholar] [CrossRef]

- Liu, Q.; Huang, T.; Dong, Y.; Xiang, W. Enhancing oil spill detection with controlled random sampling: A multimodal fusion approach using SAR and HSI imagery. Remote Sens. Appl. Soc. Environ. 2025, 38, 101601. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).